1. Introduction

The Internet of Things (IoT) generates massive volumes of heterogeneous data, often sensitive and continuously produced, that require rapid and localized processing. In this context, the centralized architecture of cloud computing quickly reveals its limitations: high latency, network congestion, and increased risks to data confidentiality [1,2]. To address these shortcomings, the Fog and Edge computing paradigms have been proposed as extensions of the Cloud. Fog computing provides intermediate processing between Edge nodes and the Cloud, while Edge computing brings computational capabilities closer to connected objects [3]. Together, these paradigms form a distributed processing continuum capable of meeting the demands of critical IoT applications, particularly in terms of responsiveness, mobility, energy efficiency, and security [4,5]. In certain contexts, such as healthcare, industrial, or smart urban environments, the dynamic placement of resources and the management of migrations become essential to ensure quality of service [6]. Evaluating these distributed architectures on physical infrastructure remains challenging because of their complexity, the cost of the infrastructure, and the difficulty of reproducing experiments. Simulation tools offer a valuable alternative for exploring different processing, placement, or migration strategies without requiring physical deployment, and several frameworks have been developed for this purpose. However, they vary significantly in their ability to represent interactions across the Edge, Fog, and Cloud layers, as well as in their maintainability and functional richness. While many studies focus on technical classifications or functional comparisons [7,8,9,10,11], few combine in-depth theoretical analysis with empirical validation on complex hybrid scenarios [12]. This imbalance makes it harder to guide researchers toward tools that meet the requirements of modern distributed architectures.

This study aims to bridge that gap. It introduces a dual analysis, both functional and experimental, of simulators capable of modeling hybrid IoT architectures in which the Edge, Fog, and Cloud paradigms are explicitly integrated. Following a review of existing tools, two frameworks were selected for the testing phase: iFogSim2 and FogNetSim++. Between them, these simulators cover advanced capabilities in migration, mobility, modularity, and energy management, and both offer sufficiently open structures to enable reproducible experimentation.

To the best of our knowledge, this is the first study to perform a unified and reproducible comparison of iFogSim2 and FogNetSim++ under an identical hybrid Edge–Fog–Cloud architecture. This design ensures a fair and direct performance comparison within a harmonized simulation environment.

The main contributions of this article are as follows:

A selection methodology based on functional, technical, and practical criteria, applied to open-source simulators capable of modeling the Edge, Fog, and Cloud layers, and leading to the first unified experimental framework comparing two simulators under identical architectural conditions;

A comparative analysis of thirteen open-source simulators, based on key dimensions such as migration support, mobility management, energy modeling, and software modularity;

A reasoned selection of two representative simulators—iFogSim2 and FogNetSim++—chosen for their ability to support the full range of distributed paradigms and their potential to simulate complex scenarios with flexibility and granularity;

An experimental cross-evaluation on a shared hybrid IoT architecture, comparing the selected simulators across eight performance metrics: execution time, application loop delay, CPU processing time by tuple type, energy consumption, cloud execution cost, network usage, scalability, and robustness;

Guidelines, derived from the comparative results, to inform the selection and use of simulators for distributed architectures and for large-scale or degraded operating conditions.

This article is structured as follows.

Section 2 presents a structured state of the art, defining the Edge, Fog, and Cloud paradigms, explaining the role of IoT simulators in scientific research, providing an overview of existing simulation tools, and offering a critical synthesis of prior comparative studies.

Section 3 details the simulator selection methodology based on clearly defined inclusion and exclusion criteria.

Section 4 describes the experimental testbed, including simulation environments, modeled architecture, and resource allocation.

Section 5 presents the comparative evaluation of the selected simulators using eight performance metrics, including execution time, application loop delay, CPU processing time, energy consumption, cloud cost, network usage, scalability, and robustness.

Section 6 provides practical recommendations to guide simulator selection and usage in distributed IoT architectures.

Finally, Section 7 concludes the article and outlines directions for future work.

2. State of the Art

2.1. Edge, Fog, and Cloud Computing Paradigms

Given the increasing heterogeneity of IoT applications and the diversity of constraints they entail, centralized processing is no longer sufficient to ensure real-time responsiveness. The growing adoption of distributed approaches has led to the structuring of IoT architectures around three distinct but interconnected paradigms: Cloud computing, Fog computing, and Edge computing.

2.1.1. Cloud Computing

Cloud computing is defined in the academic literature as a service-oriented model that gives users access to configurable computing resources, including data storage, processing units, and application-level services, through the Internet or a private network. According to the widely accepted definition of the National Institute of Standards and Technology (NIST), these resources can be rapidly provisioned and accessed on demand, from any location, with minimal management effort [13].

This model relies on infrastructure pooling, resource elasticity, and large-scale virtualization. It provides computing and storage capabilities that meet the growing demands of distributed applications. However, the physical distance of data centers introduces transmission delays that limit its efficiency for latency-sensitive applications, thereby motivating the emergence of complementary paradigms such as Fog and Edge computing.

2.1.2. Fog Computing

Fog computing, originally introduced by Cisco, extends the cloud model to address key limitations of IoT systems by decentralizing processing and storage tasks [13,14]. It adds an intermediate layer between the Cloud and the Edge, bringing computation and storage closer to data sources. Fog nodes typically consist of intermediary hardware such as gateways, routers, or micro-servers that handle processing, storage, and transmission functions. In contrast to centralized Cloud infrastructures located in remote data centers, Fog nodes operate within local network environments [14].

This proximity reduces communication delays and optimizes bandwidth usage by transmitting only relevant or partially processed data to the Cloud [13,14]. As a result, Fog computing proves effective for IoT applications with strict latency requirements or high sensitivity to network congestion [13].

2.1.3. Edge Computing

Edge computing is a decentralized model in which data are processed, stored, and analyzed close to their point of origin. Unlike Cloud data centers and Fog nodes, Edge devices sit at the outer boundary of the network, interfacing directly with physical systems through sensors or connected equipment [15].

These devices may take the form of embedded systems, local gateways, or lightweight servers, each capable of performing time-sensitive processing, enforcing localized control logic, or pre-filtering data prior to transmission to upstream layers [13].

The model seeks to minimize latency, alleviate network traffic, and strengthen autonomous local operations. It is particularly relevant in contexts that demand real-time responsiveness or continuous on-site functionality, including industrial automation, self-driving technologies, and mission-critical healthcare systems [15,16].

2.2. The Role of IoT Simulators in the Scientific Process

Validating IoT systems under real-world conditions remains a significant challenge. Physical experimentation demands considerable resources, dedicated infrastructure, and complex configuration management. In this context, simulators have emerged as indispensable tools in scientific research. They enable researchers to test their methods without the burden of physical deployment, while also improving experimental control, lowering associated costs, and fostering reproducibility.

Fahimullah et al. [12] point out that platforms like iFogSim, LEAF, and YAFS offer suitable environments for modeling distributed applications in domains such as healthcare, monitoring, and interactive entertainment. These simulators facilitate the evaluation of placement policies, resource usage, and latency, all within a reproducible experimental setting. Along similar lines, Mechalikh et al. [7] emphasize that simulation provides a critical link between conceptual development and implementation, allowing researchers to test hypotheses using quantifiable indicators.

Bajaj et al. [8], in their comparative study, underline the utility of simulators for validating IoT communication protocols, analyzing mobility patterns, and supporting resource planning. They argue that platforms should accurately reflect the characteristics of Edge and Fog environments. Patel et al. [9], in an earlier contribution, stress that such tools are central to structured scientific evaluation: their findings show that simulators provide a coherent framework for comparing models, repeating experiments, and analyzing performance under controlled conditions.

Khattak et al. [11] focus on the role of simulators in intelligent transportation systems, especially in large-scale use cases like traffic management and vehicle scheduling. Beilharz et al. [17], meanwhile, advocate for a hybrid testbed approach combining simulation, emulation, and hardware elements to approximate real-world operational contexts of distributed applications.

Almutairi et al. [10] offer a comprehensive review of how simulators contribute to experimental research. They highlight their relevance in addressing key challenges such as security, interoperability, energy efficiency, and system resilience. Their analysis shows that these tools support rigorous testing, architecture comparison, and performance evaluation in simulated environments.

Taken together, the literature clearly establishes the central role of IoT simulators in contemporary research. These tools not only support the design and validation of distributed architectures but also structure experimental workflows. Nevertheless, limitations persist, particularly concerning model accuracy and tool interoperability, as noted by Almutairi et al. [10] and Beilharz et al. [17]. These shortcomings highlight the ongoing need for simulation platforms that align more closely with the evolving complexity of modern IoT systems.

2.3. Overview of Existing Simulation Tools

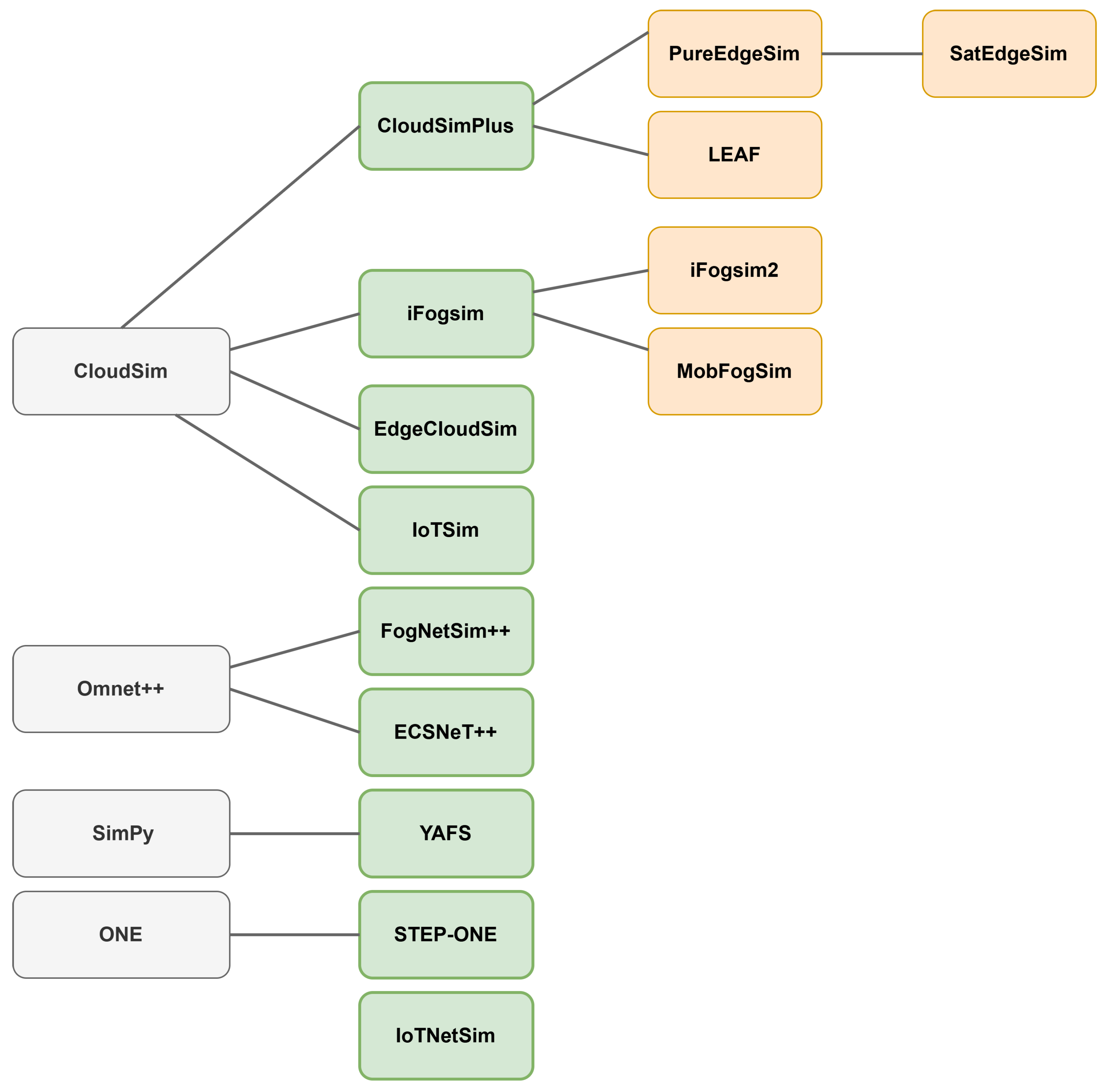

This section provides an overview of the most widely used open-source simulators for studying distributed IoT architectures. They are categorized based on their software foundation: tools derived from CloudSim, those built on OMNeT++, and others based on independent simulation engines. Each category is illustrated with representative simulators, highlighting their main features and limitations.

Figure 1 presents the lineage relationships between the various simulators, illustrating how newer tools extend earlier platforms. For instance, extensions such as iFogSim2 or LEAF build upon older simulators and add specific capabilities for distributed processing and hierarchical resource management across the Edge, Fog, and Cloud layers.

2.3.1. Simulators Based on CloudSim

CloudSim, developed by the CLOUDS Lab at the University of Melbourne, is a simulation framework designed to study virtualization, scheduling, and resource management in cloud data centers [12]. This general-purpose simulator has served as the basis for several IoT-oriented extensions that incorporate functionalities for the Fog and Edge layers, mobility support, energy modeling, and decentralized orchestration.

iFogSim [18] explicitly models Fog and Edge entities such as sensors, actuators, intermediate nodes, and data centers. Applications are modeled as graphs of processing modules that exchange tuples, enabling the evaluation of placement strategies based on metrics such as latency or energy consumption. However, iFogSim lacks support for device mobility and software modularity.

iFogSim2 [19] extends iFogSim by introducing mobility management, Fog cluster formation, service migration, and microservice orchestration. Nevertheless, its lack of advanced energy modeling limits its use in sustainability-focused studies.

MobFogSim [6], built on iFogSim, simulates the mobility of connected devices (e.g., vehicles, users) and triggers application module migration based on predefined rules. However, it is limited to the Fog layer and does not model interactions with the Cloud.

EdgeCloudSim [20] adapts CloudSim to Edge–Cloud environments. It includes modules for user mobility, specific network models (WLAN, WAN), a configurable load generator, and an Edge orchestrator. By default, it does not support energy modeling.

IoTSim [21], focused on large-scale data processing in the Cloud, models MapReduce-like components (JobTracker, Mapper, etc.). It does not include support for mobility or for the Edge and Fog layers.

CloudSim Plus [22], a modular object-oriented rewrite of CloudSim, serves as the foundation for several extensions, such as PureEdgeSim [23]. The latter models Fog/Edge environments with separate components for mobility, energy management, and communication over heterogeneous networks. Its 2022 release includes energy estimation over WAN, LAN, and MAN networks, along with a visualization interface.

SatEdgeSim [24], an extension of PureEdgeSim, targets satellite-enabled Edge infrastructures. It simulates low-Earth-orbit constellations and intermittent satellite–terrestrial links under various task allocation policies.

LEAF [25], based on CloudSim Plus, focuses on energy modeling. It represents communication graphs (latency, losses, bandwidth) and supports node mobility and task dependencies. It is not designed for complex bidirectional applications.

2.3.2. Simulators Based on OMNeT++

FogNetSim++ [26] is a C++ event-driven simulator built on OMNeT++. It accounts for packet loss and unstable links, and allows the configuration of Edge, Fog, and Cloud nodes. It supports IoT protocols (MQTT, CoAP, AMQP), various mobility models, and custom behavior definitions. It includes an energy model based on device states (idle, active, transmit) but does not consider WAN link energy consumption or service migration mechanisms.
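A state-based energy model of this kind can be illustrated with a minimal sketch: the energy of a device is the sum, over its states, of the power drawn in each state multiplied by the time spent in it. The power values and the helper name `state_energy_j` are hypothetical and are not FogNetSim++ parameters; the sketch only shows the general form of such a model, which differs from the MIPS-based formula used for the comparative metrics in Section 5.

```python
# Hypothetical power draw per device state, in watts (illustrative values,
# not FogNetSim++ parameters).
POWER_W = {"idle": 0.5, "active": 2.0, "transmit": 3.0}


def state_energy_j(time_in_state_s: dict) -> float:
    """Energy in joules: sum of power(state) * seconds spent in that state."""
    unknown = set(time_in_state_s) - set(POWER_W)
    if unknown:
        raise KeyError(f"no power value for states: {sorted(unknown)}")
    return sum(POWER_W[state] * seconds for state, seconds in time_in_state_s.items())


# 10 s idle, 2 s active, 0.5 s transmitting: 5.0 + 4.0 + 1.5 = 10.5 J
print(state_energy_j({"idle": 10.0, "active": 2.0, "transmit": 0.5}))
```

Because such a model depends on how long a device stays in each state rather than on its MIPS rating, idle time contributes to the total even when no tuple is being processed.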

ECSNeT++ [27], also based on OMNeT++, focuses on Edge–Cloud architectures with containerized microservices. It includes an orchestrator, a task scheduler, and a monitoring module. It models inter-layer communication using configurable links (latency, bandwidth, reliability) and supports priority management and mobility. It lacks an energy model and support for dynamic Fog cluster formation.

2.3.3. Simulators Independent of CloudSim and OMNeT++

Some simulators rely on alternative engines such as SimPy or ONE. They enable the modeling of Edge–Fog–Cloud environments without depending on CloudSim or OMNeT++. This category includes YAFS, STEP-ONE, and IoTNetSim.

YAFS [28], developed in Python with SimPy, targets IoT applications in Fog environments. It supports dynamic entity creation, network topology definition, failure simulation, mobility, and custom policies. It lacks both an energy model and computational capacity management.

STEP-ONE [29], an extension of ONE, introduces a BPMN 2.0-compatible engine to represent business processes distributed across the Edge, Fog, and Cloud layers. It models mobility, link disruptions, and resource availability. However, it does not support microservices or decentralized orchestration.

IoTNetSim [30], a discrete-event platform, models IoT interactions in Edge–Fog–Cloud architectures. It accounts for 3D mobility, energy profiles, congestion, and network failures. It does not support dynamic cluster formation or mobile service management.

2.4. Existing Comparative Studies on IoT Simulators and Their Limitations

Several studies have proposed comparative reviews of IoT simulators. These contributions describe the available functionalities, application domains, or technical characteristics of existing tools. They provide an overview of the evolution of simulation platforms, particularly within distributed environments structured around the Edge, Fog, and Cloud paradigms. Below, we summarize the main studies addressing this topic.

The analysis conducted by Mechalikh et al. [7] focuses on the software quality of twenty-four Edge simulators. The authors examine GitHub repositories, code structure, and technical documentation using automated analysis tools. Although methodologically rigorous, this study does not include any evaluation scenarios applied to the simulators.

Bajaj et al. [8] take a complementary approach, classifying several Cloud and Fog simulators according to internal criteria such as programming language, development base, or open-source status. Their comparative study remains descriptive and does not incorporate use cases or performance metrics.

In a broader context, the authors of [9] examine sixteen simulators, four emulators, and six testbeds. Their classification considers the targeted IoT layer, programming languages, API integration level, and aspects such as security and resilience. However, their work does not result in any experimental implementation of the reviewed tools.

Almutairi et al. [10], for their part, focus on the functional diversity of simulators. Their study identifies several shortcomings, including the lack of realistic mobility modeling, limited support for complex distributed architectures, and the absence of advanced energy mechanisms. Once again, the tools are not evaluated against practical scenarios.

The review by Khattak et al. [11] centers on intelligent transportation systems. It lists several commonly used simulators, such as iFogSim, EdgeCloudSim, and CloudSim, and proposes a typology based on use cases (networking, big data, full software simulation). However, no comparative experimentation is provided.

Other studies are limited to brief descriptions of available tools. This is the case for Mamdouh et al. [31], who mention a few simulators in the context of Internet of Health Things (IoHT) authentication without comparing them. Similarly, Darabkh et al. [32] review several simulation platforms in a more general survey on IoT, without analyzing their performance or application cases. Rashid et al. [33], meanwhile, propose a functional grid of nine simulators, but their evaluation is limited to tables without experimental validation.

The study by Fahimullah et al. [12] stands out by including an experimental validation, which is absent from prior work. The authors evaluate iFogSim, LEAF, and YAFS using application scenarios, based on both technical criteria (support for migration, microservices, energy models) and non-technical ones (documentation, update frequency). They also incorporate metrics such as execution time and CPU consumption. Despite this contribution, their analysis remains focused on Fog and Cloud environments. It does not consider Edge computing as a specific layer, nor hybrid scenarios that integrate all three paradigms. These omissions limit the relevance of their findings for modern IoT architectures, which are often heterogeneous and distributed.

These gaps highlight the need for a targeted study that combines functional analysis with experimental evaluation in distributed environments where Edge, Fog, and Cloud layers are explicitly represented, in line with current IoT architectures.

The present work follows this perspective. It combines a theoretical comparative study of simulators capable of explicitly modeling the Edge, Fog, and Cloud paradigms with an experimental evaluation of two tools—iFogSim2 and FogNetSim++—applied to a hybrid IoT architecture composed of all three layers.

3. Framework Selection Methodology

This section outlines the approach used to identify, analyze, and select simulators suitable for conducting an experimental comparison. The aim was to assess the ability of these tools to accurately model an IoT architecture explicitly integrating Edge, Fog, and Cloud layers, consistent with the expected characteristics of heterogeneous distributed environments.

Initially, simulators documented in the literature underwent preliminary screening. The criteria guiding this initial selection included IoT-specific orientation, open-source availability, recent development activity, and comprehensive functional coverage of Edge–Fog–Cloud modeling capabilities. The identified tools were compared based on their declared functionalities, as summarized in Table 1.

Subsequently, only simulators explicitly capable of modeling all three layers—Edge, Fog, and Cloud—were selected for the experimental phase. This decision ensured that the selected tools closely matched the functional requirements for simulating distributed IoT architectures. Following this rigorous selection, only iFogSim2 and FogNetSim++ were identified as fully meeting all the criteria.

3.1. Inclusion and Exclusion Criteria

The selection of simulation frameworks followed a structured analytical grid combining functional, technical, and methodological criteria. This ensured alignment between the evaluated tools and the specific demands of simulating distributed IoT architectures based on Edge, Fog, and Cloud computing paradigms.

3.1.1. IoT Specialization

Only simulators explicitly developed for IoT contexts were considered. General-purpose tools such as OMNeT++, NS-3, or MATLAB, despite adaptations described in certain studies, do not inherently provide necessary abstractions such as hierarchical entity management, context-aware traffic generation, or explicit resource constraints. Consequently, these general tools were excluded from further consideration.

3.1.2. Open-Source Licensing and Accessibility

Experiment reproducibility is fundamental in academic research. Therefore, only open-source simulators accompanied by publicly accessible documentation and actively maintained repositories were retained. Simulators lacking transparency, having closed-source code, or without available downloads were explicitly excluded.

3.1.3. Explicit Support for Edge, Fog, and Cloud Computing Paradigms

Included simulators had to explicitly support modeling at least one of the Edge, Fog, or Cloud computing paradigms. This criterion aimed to identify tools specifically suited for distributed IoT architectures, ensuring diverse functional coverage in the comparative analysis.

3.1.4. Recentness and Maintainability

Simulators released before 2017 that lack recent updates were excluded, as they no longer reflect current advances in distributed IoT architectures.
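The two-stage screening can be summarized as a pair of predicates: the criteria of Section 3.1 act as a first filter, and the experimental phase additionally requires all three layers. The sketch below is illustrative only: the `Candidate` record and the function names are hypothetical, the layer coverage of each tool follows the descriptions in Section 2.3, and the stage-1 flags are simply assumed to be satisfied.

```python
from dataclasses import dataclass

ALL_LAYERS = frozenset({"edge", "fog", "cloud"})


@dataclass(frozen=True)
class Candidate:
    name: str
    iot_specific: bool   # criterion 3.1.1
    open_source: bool    # criterion 3.1.2
    layers: frozenset    # criterion 3.1.3: subset of ALL_LAYERS
    recent: bool         # criterion 3.1.4


def passes_inclusion(c: Candidate) -> bool:
    """Stage 1: the Section 3.1 criteria (at least one paradigm supported)."""
    return c.iot_specific and c.open_source and c.recent and bool(c.layers & ALL_LAYERS)


def eligible_for_experiments(c: Candidate) -> bool:
    """Stage 2: additionally require explicit modeling of all three layers."""
    return passes_inclusion(c) and ALL_LAYERS <= c.layers


# Illustrative records: layer coverage follows Section 2.3; stage-1 flags are assumed.
candidates = [
    Candidate("iFogSim2", True, True, ALL_LAYERS, True),
    Candidate("FogNetSim++", True, True, ALL_LAYERS, True),
    Candidate("EdgeCloudSim", True, True, frozenset({"edge", "cloud"}), True),
    Candidate("IoTSim", True, True, frozenset({"cloud"}), True),
]
print([c.name for c in candidates if eligible_for_experiments(c)])
```

The layer check is necessary but not sufficient: as Section 3.2 explains, tools such as IoTNetSim were set aside for reasons a boolean filter does not capture, such as the lack of a clear modular structure for Edge, Fog, and Cloud entities.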

3.2. Selected Simulation Frameworks

Among the identified simulators, only iFogSim2 and FogNetSim++ satisfy both the inclusion criteria defined in Section 3.1 and the additional requirement, applied to the experimental phase, of explicitly modeling all three layers. Their selection stems from a detailed comparative analysis, summarized in Table 1, which highlights their ability to explicitly represent the Edge, Fog, and Cloud layers within distributed hybrid architectures.

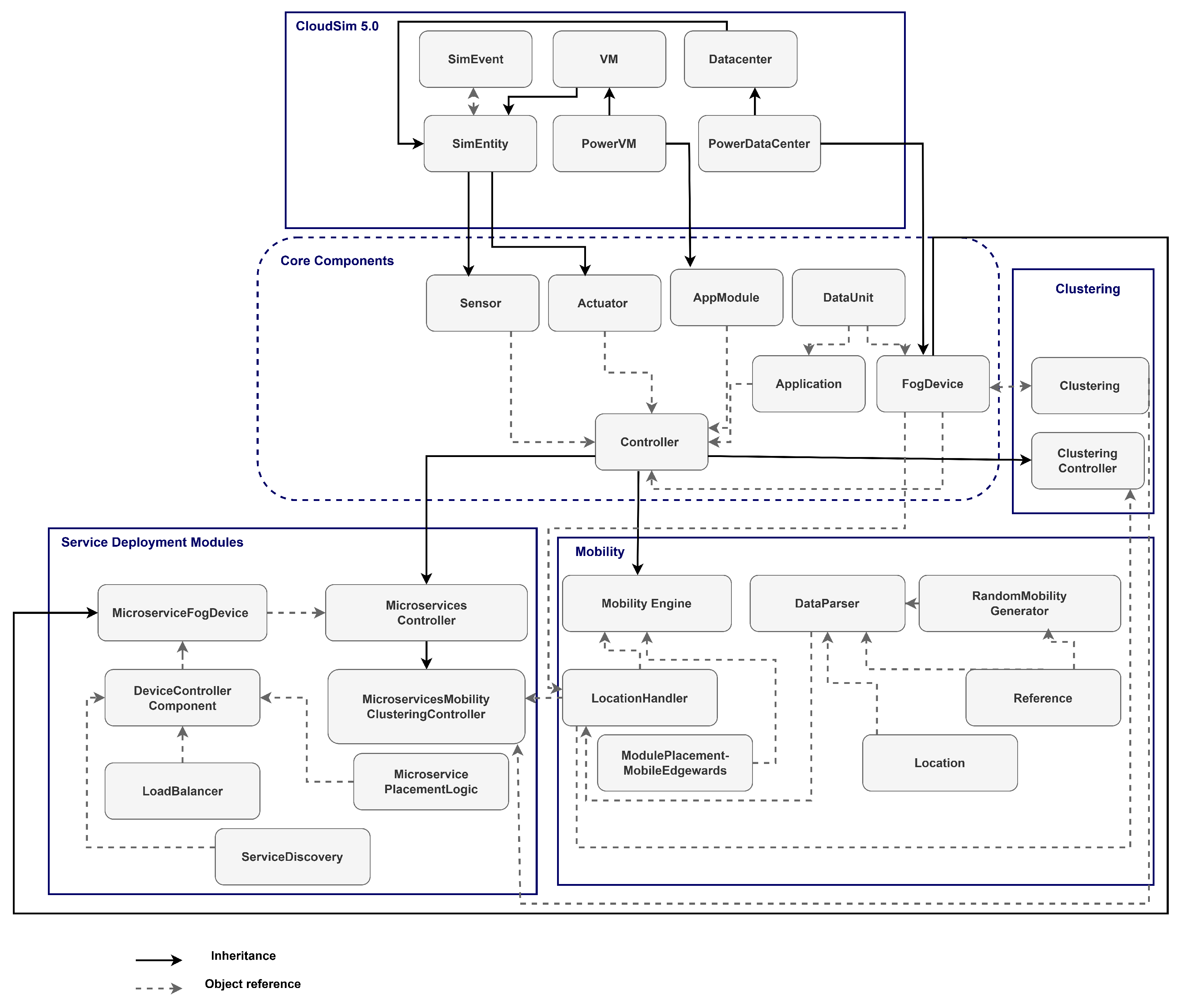

Beyond their functional coverage, these two tools are built upon complementary simulation paradigms: modular abstraction based on CloudSim in iFogSim2, and fine-grained event-driven modeling via OMNeT++ in FogNetSim++. This methodological duality enables a balanced cross-evaluation within a unified experimental environment.

The software architecture of iFogSim2, illustrated in Figure 2, consists of three functional blocks: components derived from CloudSim, modules for mobility and migration management, and microservice-oriented extensions, as presented in [19].

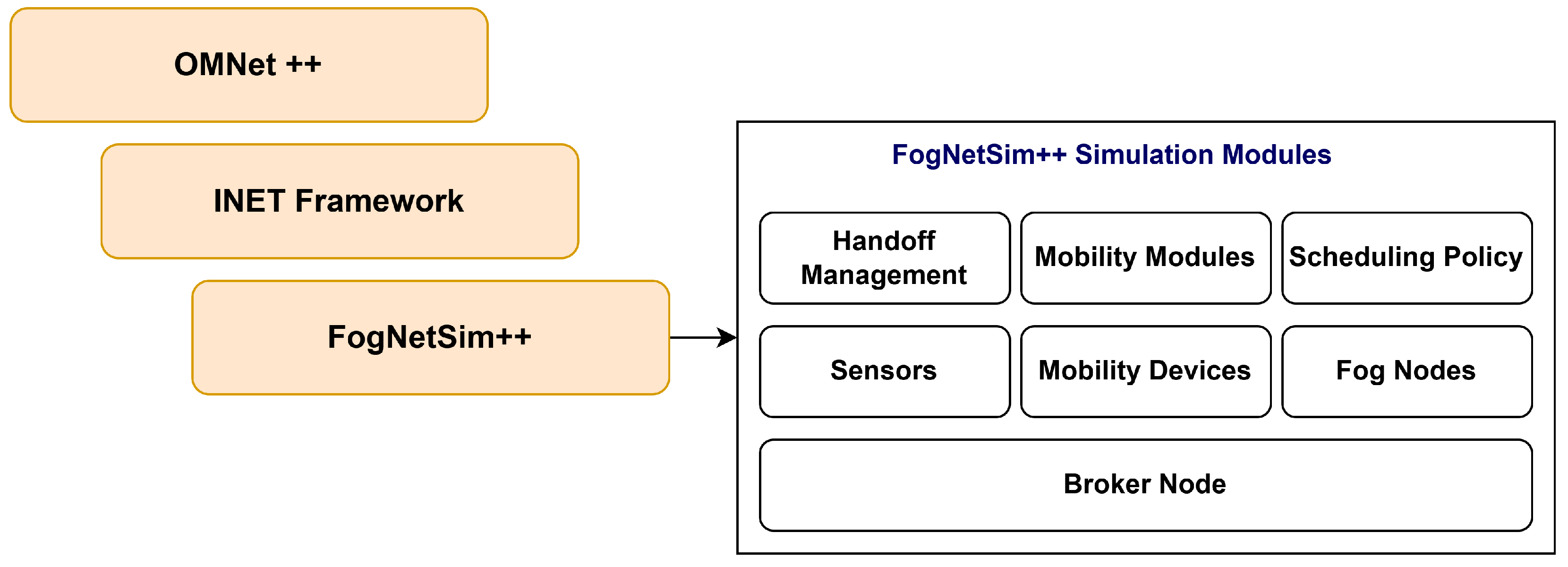

FogNetSim++, in contrast, adopts a modular architecture built on OMNeT++ and the INET framework. As shown in Figure 3, this design enables detailed control over transmissions, mobility, migration policies, and processing delays in dynamic and heterogeneous environments [12].

The other simulators analyzed did not fully meet the required criteria. For example, LEAF offers advanced features, but its hybrid Python/Java architecture limits fine-grained control over multi-layer interactions. YAFS focuses primarily on placement, without explicit management of application loops or migrations. IoTNetSim, while well suited for network interactions, does not provide a clear modular structure for Edge, Fog, and Cloud entities. Other simulators, such as CloudSim, EdgeCloudSim, or IoTSim, remain limited to one or two layers and lack support for modeling the full continuum.

4. Testbed Design

4.1. Simulator Execution Environments

The simulations were performed using two simulators: FogNetSim++ and iFogSim2. Table 2 summarizes the hardware and software configuration associated with each simulator.

FogNetSim++ was executed on an Ubuntu 16.04.7 (64-bit) virtual machine hosted on a Windows 11 system. It was run from the OMNeT++ IDE on OMNeT++ version 4.6, together with the INET 3.3.0 framework for network modeling. The virtual machine was configured with 8 GB of RAM, 3 CPU cores, and 25 GB of disk space, which ensured stable execution of the simulated scenarios.

iFogSim2 was deployed directly onto the host environment running on Windows 11. It operates within the Eclipse IDE using JDK 8 and leverages the CloudSim 5.0 library.

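Both simulators ran on the same physical machine, equipped with an Intel Core Ultra 7 155U processor (1.70 GHz) and 32 GB of RAM. Using the same hardware kept experimental conditions consistent, although FogNetSim++ ran inside the virtual machine with the reduced resources described above, whereas iFogSim2 ran directly on the host.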

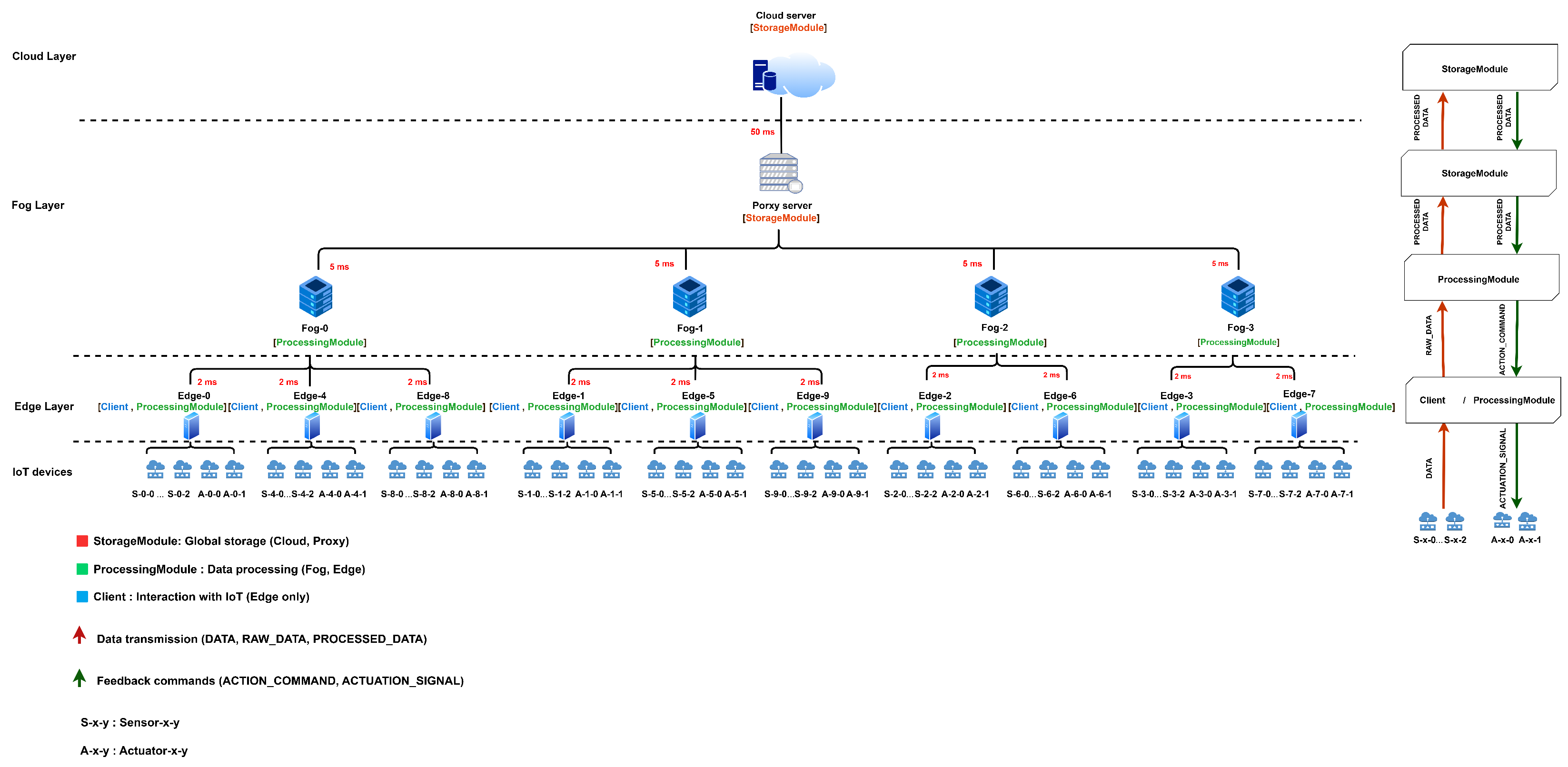

4.2. Context of the Simulated Architecture

The architecture depicted in Figure 4 adopts a four-layer hierarchy: IoT devices, Edge layer, Fog layer, and Cloud layer. It models a distributed environment where processing, storage, and physical interactions are assigned across various levels according to their proximity to the data sources.

The IoT layer consists of sensors and actuators linked to Edge devices. Each Edge device is connected to three sensors and two actuators. The sensors generate raw data, referred to as DATA tuples, subsequently transmitted to the corresponding Edge device. Actuators receive final instructions in the form of ACTUATION_SIGNAL tuples.

Each Edge device hosts two software modules. The CLIENT module receives data from sensors, encapsulates them as RAW_DATA tuples, and forwards these tuples to the processing module. It also issues downstream commands to actuators. The processingModule performs initial local data processing. Communication between Edge devices and their respective Fog gateways has a latency of 2 ms.

The Fog layer includes four Fog nodes, each connected to multiple Edge devices. These Fog nodes host a processingModule responsible for the primary data processing tasks. At this level, RAW_DATA tuples are transformed into PROCESSED_DATA tuples, which are either forwarded to higher layers for storage or converted into ACTION_COMMAND tuples to send commands back downstream.

A proxy server occupies an intermediate position between the Fog and Cloud layers. It hosts a storage module, storageModule, identical to that in the Cloud. Its purpose is temporary aggregation of processed data, reducing load toward the Cloud, and partial result consolidation. The proxy server connects to Fog nodes with 5 ms latency and to the Cloud with 50 ms latency. Due to its intermediate role and functional closeness to distributed processing nodes, the proxy server is treated as an upper sublayer within the Fog layer.

The Cloud layer consists of a central node dedicated to data archiving, running the storageModule. This node primarily stores PROCESSED_DATA tuples, enabling long-term retention or deferred analysis.

Data exchanges among software modules are structured via multiple tuple types. Sensors generate DATA tuples. These are converted by the CLIENT module into RAW_DATA tuples, subsequently processed by the processingModule either into PROCESSED_DATA tuples for storage or ACTION_COMMAND tuples for downstream actuation commands. Finally, the CLIENT module generates ACTUATION_SIGNAL tuples sent directly to actuators.
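The tuple transformations just described form a small routing table: each (module, input tuple type) pair produces zero or more output tuple types. The following sketch traces one sensor reading through that table. It abstracts away where each module instance runs (the processingModule exists on both Edge and Fog devices), and the dictionary and function names are hypothetical; it only loosely mirrors the module-to-tuple mappings declared in iFogSim-style applications.

```python
# (consuming module, input tuple type) -> possible output tuple types (Section 4.2)
TUPLE_MAPPINGS = {
    ("CLIENT", "DATA"): ["RAW_DATA"],
    ("processingModule", "RAW_DATA"): ["PROCESSED_DATA", "ACTION_COMMAND"],
    ("storageModule", "PROCESSED_DATA"): [],  # stored on the proxy server or the Cloud
    ("CLIENT", "ACTION_COMMAND"): ["ACTUATION_SIGNAL"],
    ("actuator", "ACTUATION_SIGNAL"): [],     # end of the control loop
}

# Module that consumes each tuple type
CONSUMER = {
    "DATA": "CLIENT",
    "RAW_DATA": "processingModule",
    "PROCESSED_DATA": "storageModule",
    "ACTION_COMMAND": "CLIENT",
    "ACTUATION_SIGNAL": "actuator",
}


def trace(tuple_type: str, branch: str = "ACTION_COMMAND") -> list:
    """Follow one tuple downstream; `branch` selects the processingModule output."""
    path = [tuple_type]
    while True:
        module = CONSUMER[tuple_type]
        outputs = TUPLE_MAPPINGS[(module, tuple_type)]
        if not outputs:
            return path
        if len(outputs) > 1:
            if branch not in outputs:
                raise ValueError(f"{branch!r} is not an output of {module}")
            tuple_type = branch
        else:
            tuple_type = outputs[0]
        path.append(tuple_type)


print(trace("DATA"))                    # control loop
print(trace("DATA", "PROCESSED_DATA"))  # storage path
```

The first call follows the actuation path (DATA, RAW_DATA, ACTION_COMMAND, ACTUATION_SIGNAL); the second stops at PROCESSED_DATA once the storageModule has consumed it.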

Data flows are represented in Figure 4 by directional arrows that distinguish upward and downward communication. The latency values specified for each link illustrate the hierarchical and distributed nature of the simulated system.
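The link latencies stated in this section make it possible to compute lower bounds on network delay along a path. The sketch below sums one-way link latencies. It deliberately ignores the sensor and actuator links (whose latency is not given here), processing time, queuing, and bandwidth effects, so actual loop delays will be larger. The function name is hypothetical.

```python
# One-way link latencies from Section 4.2, in milliseconds (links are symmetric).
LINK_LATENCY_MS = {
    frozenset({"edge", "fog"}): 2.0,
    frozenset({"fog", "proxy"}): 5.0,
    frozenset({"proxy", "cloud"}): 50.0,
}


def path_latency_ms(path: list) -> float:
    """Sum the latencies of consecutive links along a node path."""
    return sum(LINK_LATENCY_MS[frozenset({a, b})] for a, b in zip(path, path[1:]))


print(path_latency_ms(["edge", "fog", "proxy", "cloud"]))  # Edge up to the Cloud
print(path_latency_ms(["edge", "fog", "edge"]))            # Edge-Fog round trip
```

The Edge-to-Cloud path accumulates 57 ms one way, so a Cloud round trip costs at least 114 ms of link latency, against 4 ms for an Edge–Fog round trip. This gap is consistent with generating ACTION_COMMAND tuples at the Fog layer rather than in the Cloud.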

4.3. Resources Allocated to Devices

Hardware resources allocated to the various devices were calibrated according to their hierarchical position and functional role within the architecture. Table 3 summarizes the computing capacity (MIPS), RAM, and uplink/downlink bandwidth for each device tier.

The Cloud node is provisioned with the highest capacity, featuring 44,800 MIPS and 40 GB of RAM. This high capacity supports extensive data storage and deferred processing requirements. Positioned between the Cloud and Fog layers, the proxy server serves as a high-throughput central relay. It is allocated 5600 MIPS, 8 GB of RAM, and a symmetrical bandwidth of 20 Gbps, efficiently handling traffic from multiple Fog nodes without congestion.

Fog nodes, each with 6000 MIPS and 6 GB of RAM, provide intermediate processing of data originating from Edge nodes. Their downstream bandwidth of 5 Gbps allows simultaneous handling of data from several peripheral devices. Edge nodes, allocated 4000 MIPS, 4 GB RAM, and a downstream bandwidth of 2 Gbps, primarily collect sensor data and execute lightweight processing tasks close to data sources.
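A first-order view of what these capacities mean is the ideal time needed to execute one tuple, obtained by dividing its CPU length (in millions of instructions, MI) by the host's MIPS. The sketch assumes that a module receives the full capacity of its host, with no sharing or queuing, and uses a hypothetical tuple length of 2,800 MI. Neither assumption comes from the experiments.

```python
# Per-tier computing capacity from Table 3 (MIPS).
TIER_MIPS = {"cloud": 44_800, "proxy": 5_600, "fog": 6_000, "edge": 4_000}


def service_time_s(tuple_length_mi: float, tier: str) -> float:
    """Ideal processing time (s) of one tuple if its module has the whole tier to itself."""
    return tuple_length_mi / TIER_MIPS[tier]


HYPOTHETICAL_TUPLE_MI = 2_800  # illustrative CPU length, not a value from the paper
for tier in TIER_MIPS:
    print(f"{tier:>5}: {service_time_s(HYPOTHETICAL_TUPLE_MI, tier):.4f} s")
```

Under these assumptions, the Cloud node processes the tuple 11.2 times faster than an Edge node (44,800 / 4,000 MIPS). That advantage has to be weighed against the extra 55 ms of one-way link latency between the Fog layer and the Cloud via the proxy server.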

5. Comparative Study

This section presents a comparative analysis between iFogSim2 and FogNetSim++ based on an equivalent Edge–Fog–Cloud architecture. All key parameters—including sensor emission frequency, topology, tuple size, network latencies, MIPS, energy cost per MIPS, and the application’s functional logic—have been rigorously harmonized to ensure a fair evaluation.

Each simulation scenario was executed five times, and the reported values for each metric correspond to the average across runs. This approach reinforces result consistency and accounts for minor execution variations that may arise from internal event handling or queue scheduling in the simulation engines.
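The averaging protocol can be expressed in a few lines. The sketch below computes the mean over five runs and, as a common complement, the sample standard deviation, which shows how much the runs differ from one another. The run values and the function name are hypothetical.

```python
from statistics import mean, stdev


def summarize_runs(values: list) -> tuple:
    """Return (mean, sample standard deviation) of one metric across repeated runs."""
    if len(values) < 2:
        raise ValueError("at least two runs are needed for a standard deviation")
    return mean(values), stdev(values)


# Hypothetical application loop delays (s) from five runs of one scenario.
loop_delays = [0.118, 0.121, 0.119, 0.124, 0.118]
avg, spread = summarize_runs(loop_delays)
print(f"mean = {avg:.4f} s, std = {spread:.4f} s")
```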

5.1. Evaluation Metrics

The evaluation is based on a set of metrics applied consistently to the same Edge–Fog–Cloud architecture simulated in both iFogSim2 and FogNetSim++ environments. These indicators compare the simulators’ results in terms of time performance, energy efficiency, processing load, and network traffic, as well as their ability to scale with architectural complexity and remain resilient under abnormal conditions.

Execution time (s) represents the total duration required to complete the simulation scenario. It reflects the efficiency of the simulation engine and its ability to manage events.
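Because execution time is measured in wall-clock seconds rather than simulated time, it has to be captured outside the simulation clock. A minimal sketch, assuming each run can be launched from a Python callable (for example a wrapper around a subprocess call); `run_scenario` below is a hypothetical stand-in for one simulation run.

```python
import time
from typing import Callable


def wall_clock_durations(run: Callable[[], object], repetitions: int = 5) -> list:
    """Wall-clock duration (s) of each call to `run`, measured with a high-resolution clock."""
    durations = []
    for _ in range(repetitions):
        start = time.perf_counter()
        run()
        durations.append(time.perf_counter() - start)
    return durations


def run_scenario():
    # Hypothetical placeholder for launching one simulation run.
    sum(range(100_000))


durations = wall_clock_durations(run_scenario)
print(f"mean execution time: {sum(durations) / len(durations):.6f} s")
```

When a simulator runs inside a virtual machine, as FogNetSim++ does here, durations measured this way may also reflect virtualization overhead.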

Application loop delay (s) measures the average time elapsed from tuple generation by a sensor to the corresponding action execution by an actuator. It assesses the overall system latency within a distributed processing context.

CPU processing delay (s) indicates the time the deployed software modules require to process each message type. This metric identifies the actual load sustained by the different devices throughout the simulation.

Energy consumption (J) is calculated based on processing capacity (expressed in MIPS), tuple processing duration, and a defined energy factor specific to each device type:

$$E = \text{MIPS} \times \text{processingDelay} \times \text{costPerMips}$$

where

MIPS is the computational power of the module, measured in millions of instructions per second;

processingDelay is the duration required to process the message (in seconds);

costPerMips is a constant representing the energy consumed per MIPS per second (J/MIPS·s), determined according to the device’s power characteristics.

This metric assesses the energy impact associated with processing tasks executed across the Edge, Fog, and Cloud layers.

Cloud execution cost is derived from the volume of processing performed by Cloud nodes, the associated execution time, and a unit cost defined per MIPS:

$$C_{\text{Cloud}} = \text{MIPS} \times \text{execTime} \times \text{costPerMips}$$

where

MIPS is the computational power of the Cloud module (in MIPS);

execTime is the total execution time of messages (in seconds);

costPerMips is a constant representing the cost per MIPS per second (U/MIPS·s), defined in abstract units to allow normalized comparison between simulators.

For both metrics, the constant costPerMips is adapted to reflect either physical energy consumption or abstract computational cost, depending on the metric’s objective. In the context of energy consumption, it models the energy used per MIPS per second (J/MIPS·s), while in the context of cloud execution cost, it represents a normalized unit cost (U/MIPS·s) used for comparative evaluation across simulators.
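Both formulas share the same product structure and differ only in how costPerMips is interpreted. A minimal Python sketch of the two computations, plus a per-layer aggregation of energy, is given below. The event-log format, the function names, and the energy factors in the example are hypothetical and do not reproduce the values or data structures of either simulator.

```python
from collections import defaultdict


def energy_joules(mips, processing_delay_s, cost_per_mips):
    # E = MIPS x processingDelay x costPerMips, with costPerMips in J/(MIPS*s)
    return mips * processing_delay_s * cost_per_mips


def cloud_cost(mips, exec_time_s, cost_per_mips):
    # C = MIPS x execTime x costPerMips, with costPerMips in abstract U/(MIPS*s)
    return mips * exec_time_s * cost_per_mips


def energy_by_layer(events, cost_per_mips_by_layer):
    """Sum per-message energy for each layer.

    events: iterable of (layer, mips, processing_delay_s), one entry per
    processed message (a hypothetical log format used only for illustration).
    """
    totals = defaultdict(float)
    for layer, mips, delay in events:
        totals[layer] += energy_joules(mips, delay, cost_per_mips_by_layer[layer])
    return dict(totals)


# Hypothetical energy factors (J per MIPS per second), for illustration only.
FACTORS = {"edge": 0.001, "fog": 0.002}
log = [("edge", 4000, 3.5), ("edge", 4000, 3.5), ("fog", 6000, 3.5)]
print(energy_by_layer(log, FACTORS))
```

Because the two metrics reuse the same product, any difference between them in the results comes from the duration term (processing delay versus total execution time) and from the meaning assigned to costPerMips.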

Total network usage (bytes) corresponds to the cumulative volume of data exchanged between simulated entities. It quantifies the intensity of traffic generated by the application across the infrastructure, from sensors to the Cloud.

Scalability evaluation. This metric aims to assess the impact of a progressive increase in the number of Edge and Fog nodes on the overall performance of the simulators. To this end, four scenarios were defined:

Scenario 1 (S1): Five Edge devices and two Fog nodes;

Scenario 2 (S2): Ten Edge devices and four Fog nodes;

Scenario 3 (S3): Fifteen Edge devices and six Fog nodes;

Scenario 4 (S4): Twenty Edge devices and eight Fog nodes.

Each Edge device is connected to three sensors and three actuators. The architecture also includes a Cloud node and a proxy server, both present in all scenarios.
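The four scenarios can be enumerated programmatically, which makes the growth in simulated entities explicit. The Python sketch below is an illustration only; SCENARIOS and topology_size are hypothetical names, and it assumes, as stated above, that the Cloud node and the proxy server are the only nodes shared by all scenarios.

```python
# Edge/Fog counts per scenario, transcribed from the list above.
SCENARIOS = {"S1": (5, 2), "S2": (10, 4), "S3": (15, 6), "S4": (20, 8)}
SENSORS_PER_EDGE = 3
ACTUATORS_PER_EDGE = 3
SHARED_NODES = 2  # one Cloud node + one proxy server, present in every scenario


def topology_size(edges: int, fogs: int) -> dict:
    """Count the entities instantiated for one scenario."""
    return {
        "edge": edges,
        "fog": fogs,
        "sensors": edges * SENSORS_PER_EDGE,
        "actuators": edges * ACTUATORS_PER_EDGE,
        "compute_nodes": edges + fogs + SHARED_NODES,
    }


for name, (edges, fogs) in SCENARIOS.items():
    print(name, topology_size(edges, fogs), "edge/fog ratio:", edges / fogs)
```

Every scenario keeps 2.5 Edge devices per Fog node, so both tiers grow proportionally; S4, for instance, instantiates 60 sensors, 60 actuators, and 30 compute nodes (20 Edge, 8 Fog, the Cloud node, and the proxy).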

All system parameters are kept constant across the scenarios, including sensor transmission frequency, the structure of application modules, fixed processing delays at each level (Edge, Fog, Cloud), and the end-to-end data processing logic.

Scalability is evaluated empirically, based on the variation of six key metrics as the number of nodes increases: execution time, application loop delay, CPU processing delay by tuple type, energy consumption by layer, Cloud execution cost, and total network usage. This approach helps determine whether performance remains stable or degrades significantly as the architecture scales. It also provides insight into each simulator’s ability to effectively model large-scale IoT environments.

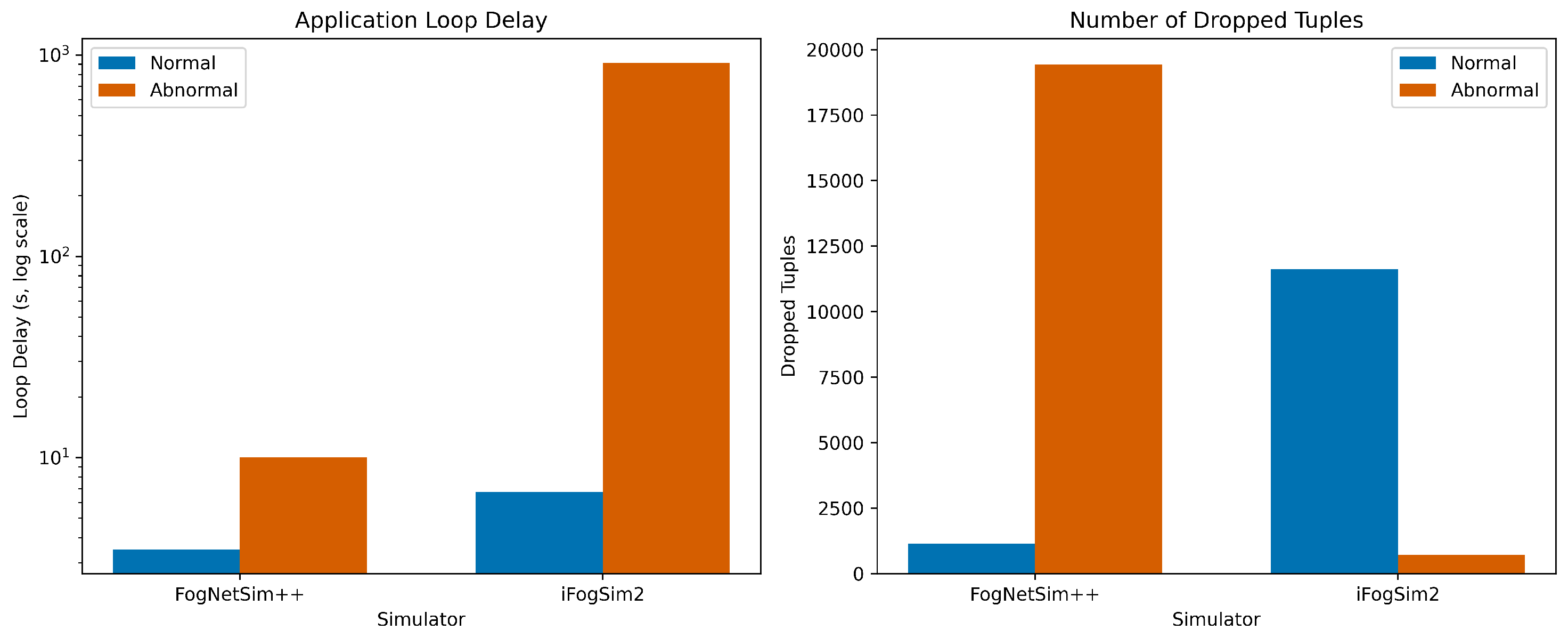

Robustness evaluation. Robustness is assessed by observing system behavior under abnormal conditions, particularly when processing delays are significantly increased. Two simulation scenarios are defined:

A normal scenario, with a fixed processing delay of 3.5 s at each level (Edge and Fog);

An abnormal scenario, with a high processing delay of 10 s.

Two indicators are used to evaluate robustness:

The application loop delay (in seconds) under stress conditions, which indicates whether the simulator maintains acceptable end-to-end latency despite the extended processing time;

The number of dropped tuples, which accounts for messages ignored or discarded during the simulation due to congestion, buffer overflows, or scheduling conflicts.

This evaluation measures each simulator’s ability to maintain performance and ensure data reliability under degraded conditions. It also provides insight into their tolerance to overload, resource contention, and event synchronization efficiency.

The selection of these metrics is based on their relevance in characterizing distributed system behavior within an IoT context. They identify potential disparities between the simulators and ensure a coherent and reproducible basis for comparison.

5.2. Analysis of Comparative Results

5.2.1. Execution Time

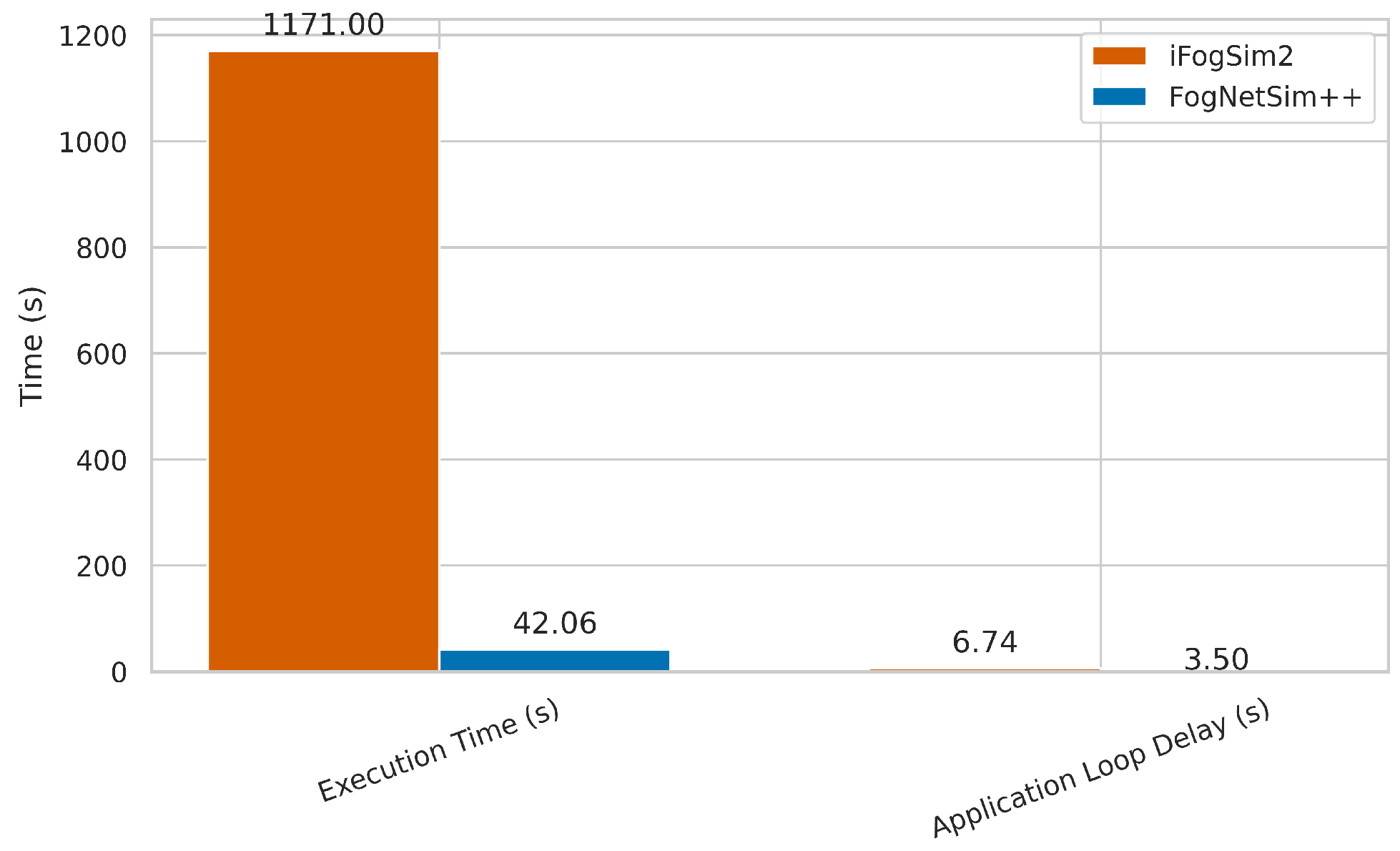

The simulation using iFogSim2 required 1171 s of system time, compared to only 42.06 s with FogNetSim++, as shown in Table 4 and Figure 5. These values reflect the actual runtime needed to process all events, not the simulated duration.

The simulated structure is identical in both environments. This consistency ensures that the observed differences arise from the internal behavior of the simulators rather than from disparities in simulation conditions. The processing delay was fixed at 3.5 s per level based on preliminary tests. This value was chosen as a compromise: long enough to reveal queueing and scheduling effects, yet short enough to avoid excessive congestion or unrealistic system overload. It thus ensures both rigorous experimental control and fairness in comparing the simulators.

This substantial discrepancy in execution time most likely stems from fundamental architectural differences between the two platforms. iFogSim2 runs within the Java Virtual Machine (JVM) and is based on CloudSim, which introduces abstraction layers and overhead related to memory management and less efficient event scheduling. In contrast, FogNetSim++, compiled in C++ and based on OMNeT++, benefits from native memory allocation and a highly optimized event engine. These differences plausibly account for the nearly 28-fold performance gap observed despite an equivalent simulation configuration.
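As a quick arithmetic check, the speedup factor follows directly from the two averaged runtimes reported in Table 4:

$$\frac{T_{\text{iFogSim2}}}{T_{\text{FogNetSim++}}} = \frac{1171\ \text{s}}{42.06\ \text{s}} \approx 27.8$$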

5.2.2. Application Loop Delay

Application loop latency is a key indicator for evaluating a simulator’s efficiency in handling chained events. The values in Table 5 and Figure 5 show a significant difference between the two simulators. iFogSim2 records an average delay of 6.7355 s between the sensor and actuator, whereas FogNetSim++ achieves 3.5024 s. This reduction of approximately 48% reflects FogNetSim++’s capability to streamline tuple flow throughout the application cycle.
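The relative reduction in loop delay can be verified from the two averages:

$$\frac{6.7355 - 3.5024}{6.7355} = \frac{3.2331}{6.7355} \approx 0.480$$

that is, FogNetSim++ shortens the average sensor-to-actuator loop by roughly 48%.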

This performance can be attributed to OMNeT++’s precise, native event engine, which handles concurrent events more efficiently, and to FogNetSim++’s manual transmission control, which allows fine-grained tuning of delays and of congestion on outgoing links. In contrast, iFogSim2 abstracts and automates queue management, which increases latency under high message contention.

This difference is critical in high-demand scenarios where rapid loop execution determines system responsiveness.

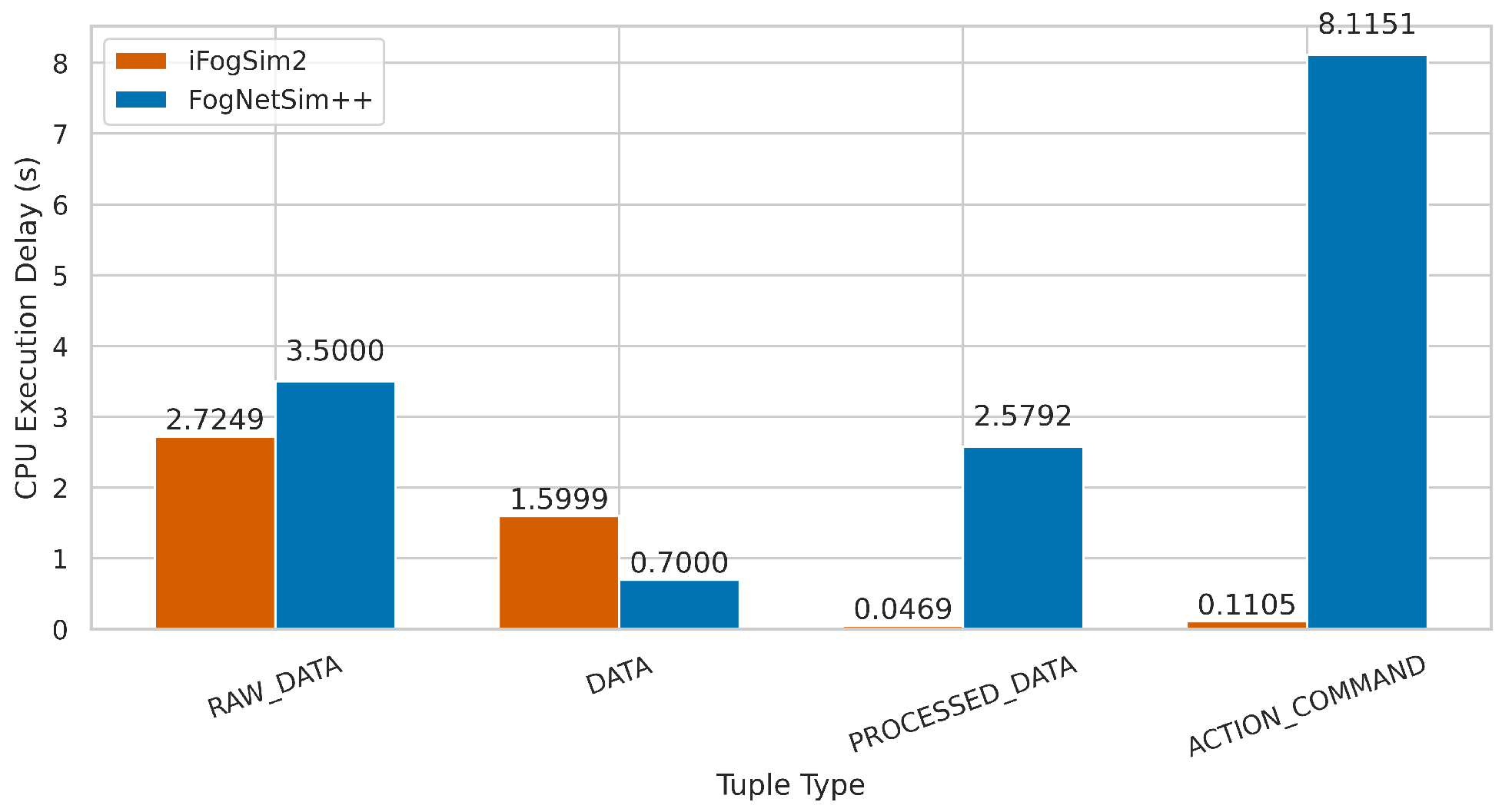

5.2.3. CPU Processing Delay by Tuple Type

Processing of PROCESSED_DATA and ACTION_COMMAND tuples in FogNetSim++ is significantly slower than in iFogSim2, as shown in Table 6 and Figure 6. This is due to FogNetSim++’s lack of implicit tuple transformation, which requires each output message to be duplicated manually. This increases the number of transmitted messages and requires explicit channel control using scheduleAt to avoid send conflicts. These constraints increase processing time in the processingModule. Conversely, the DATA tuple is processed faster in FogNetSim++, suggesting more direct and lightweight execution in the client module.

The slightly higher delay for RAW_DATA is attributed to OMNeT++’s manual queue management, which is handled automatically in iFogSim2.

5.2.4. Energy Consumption (in Joules)

Energy consumption distribution shows marked differences between iFogSim2 and FogNetSim++.

Table 7 and Figure 7 indicate that FogNetSim++ consumes 7.9 million joules at the Cloud level, nearly three times as much as iFogSim2. This is due to the explicit generation of PROCESSED_DATA tuples that are systematically sent to the Cloud’s storageModule. Unlike iFogSim2, which applies implicit transformation rules, FogNetSim++ requires explicit tuple emission, increasing centralized processing load and energy use.

At the Edge, FogNetSim++ consumes far less energy (50,400 J vs. 956,174.8 J in iFogSim2), reflecting less local processing and faster offloading to higher levels. The same recentralization appears in Fog consumption (82,320 J vs. 786,666.66 J), indicating a reduced load on Fog nodes as well.

The proxy level shows a similar trend, with higher consumption in FogNetSim++ (52,948 J vs. 33,731.99 J), consistent with its increased processing role.

Overall, FogNetSim++ reflects an explicit execution model where the developer has full control over data flows and processing. This offers greater flexibility but places more demand on central nodes, particularly in the absence of local processing or aggregation strategies.

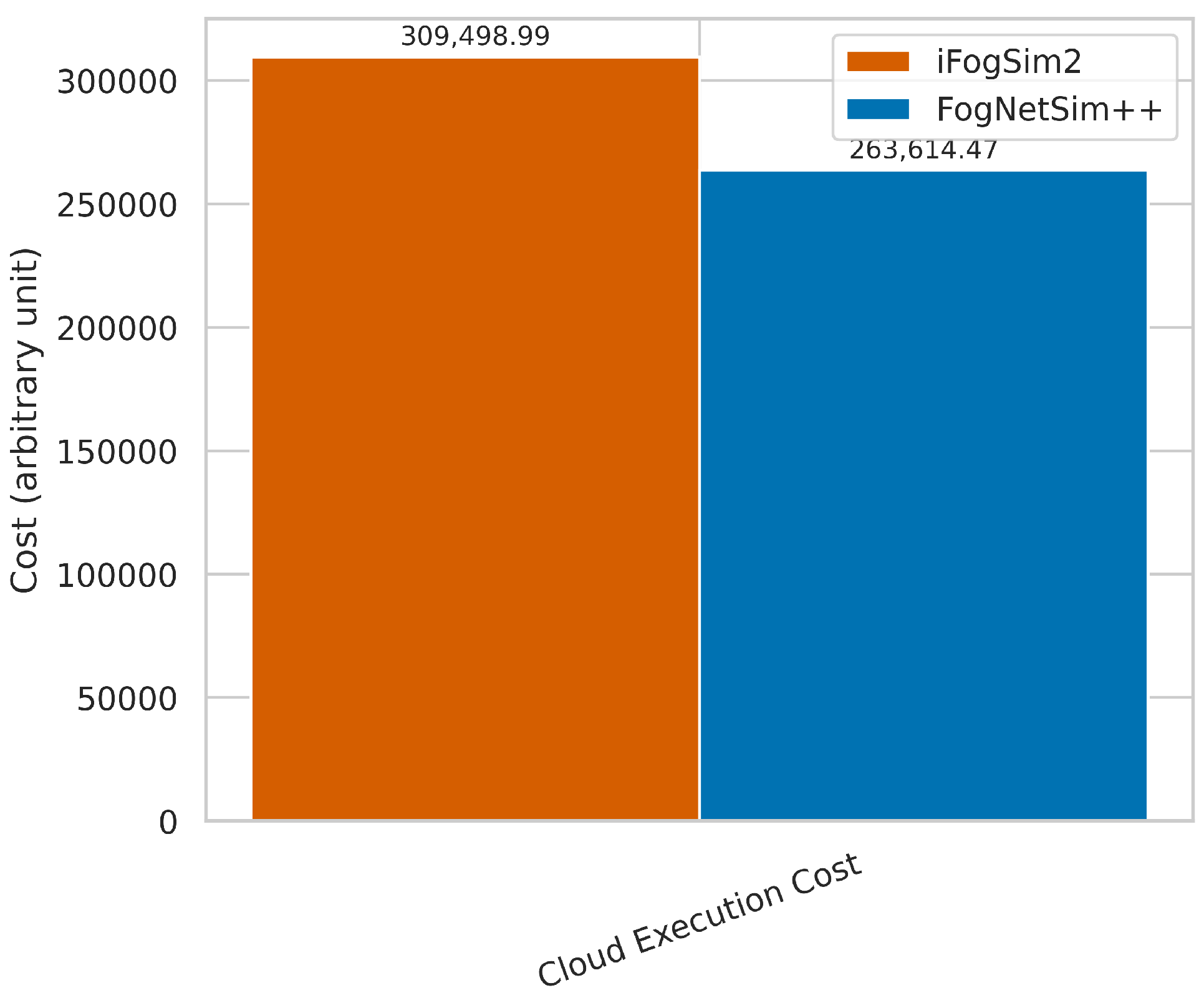

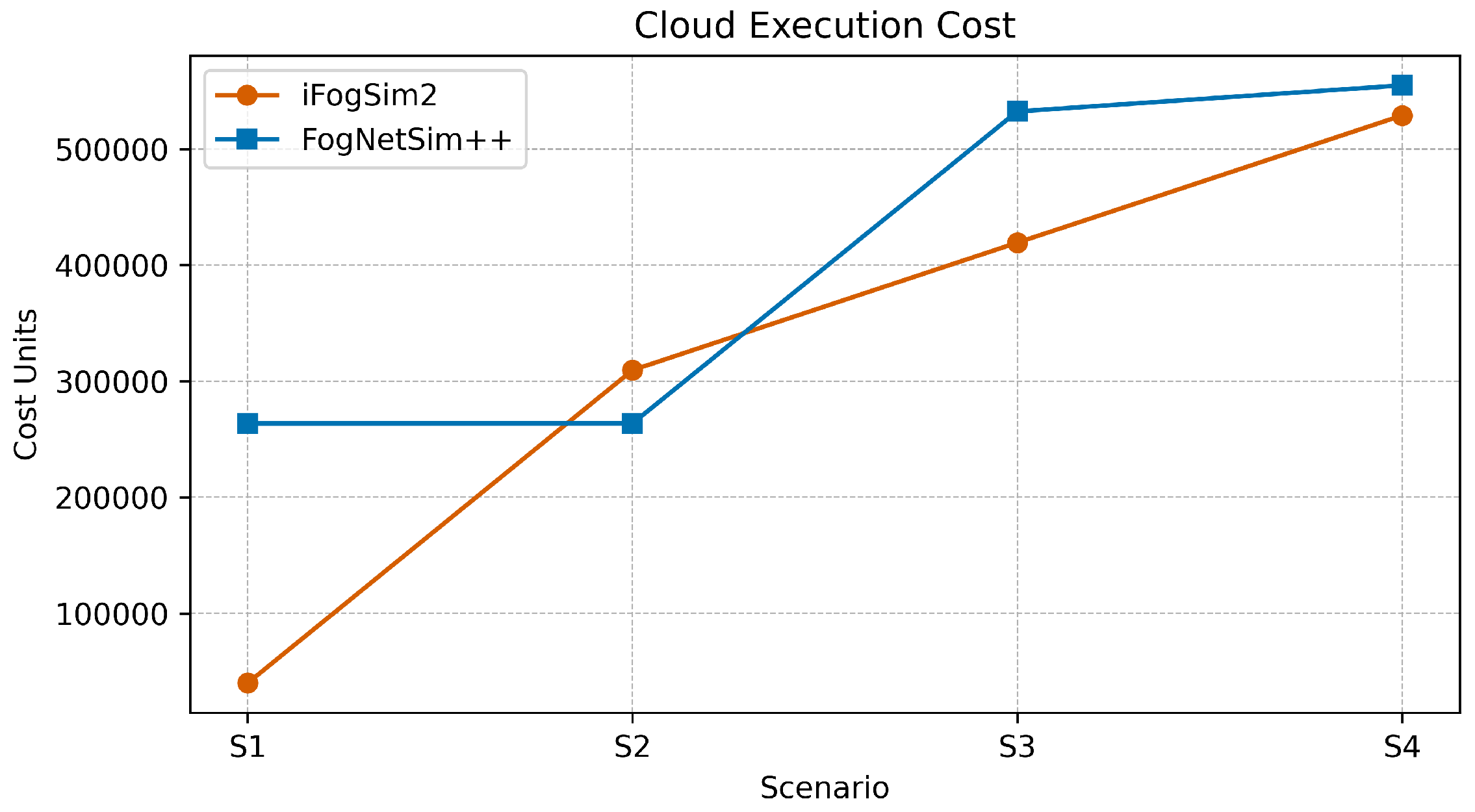

5.2.5. Cloud Execution Cost

FogNetSim++ shows a lower Cloud execution cost (263,614.47 units) than iFogSim2 (309,498.99 units), despite higher energy use on the Cloud side, as seen in Table 8 and Figure 8. This difference results from faster and more synchronized execution in FogNetSim++, which reduces resource occupation time. iFogSim2, while consuming less energy, requires a longer overall execution time, which increases a cost that is based on resource usage duration.

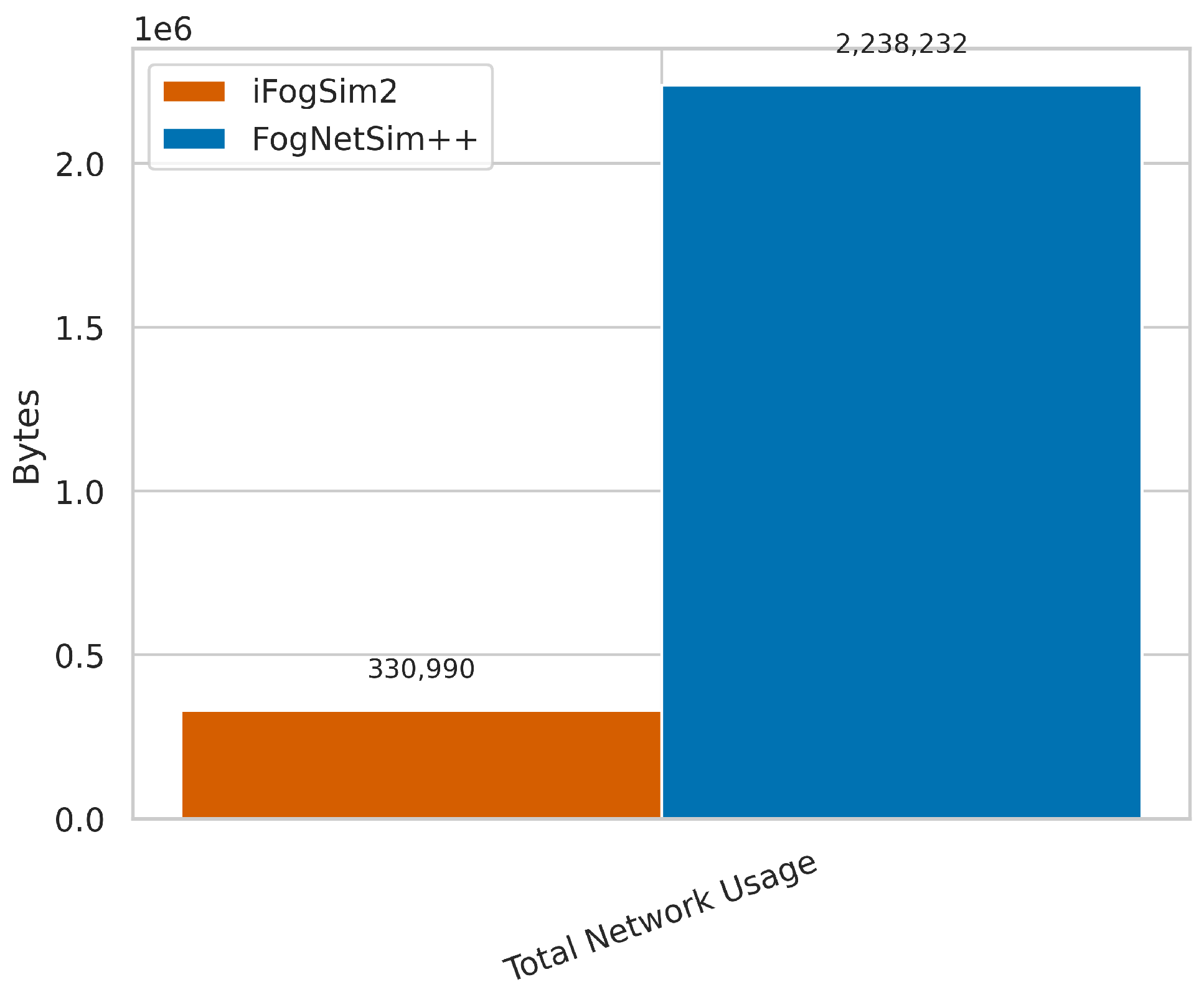

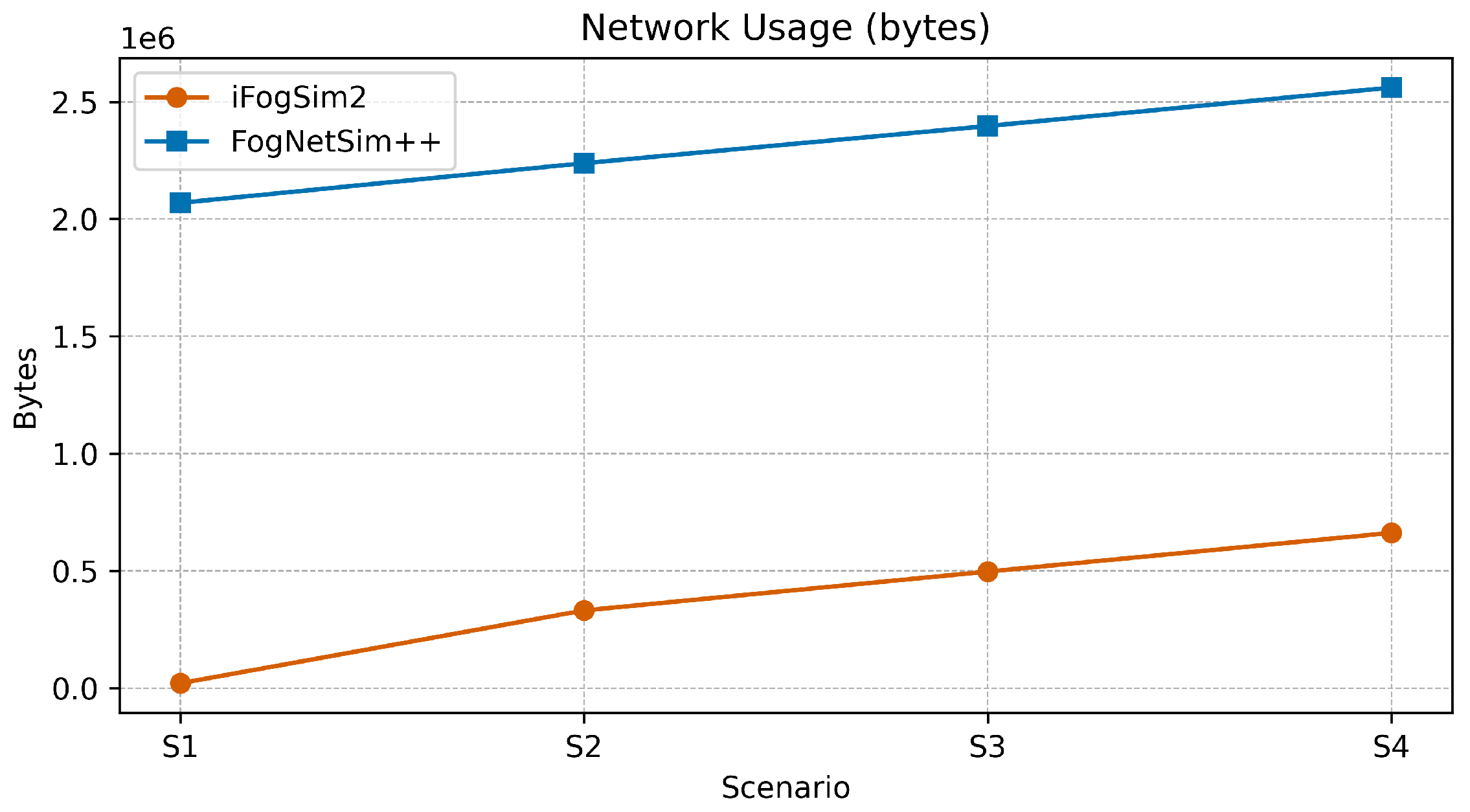

5.2.6. Network Usage

FogNetSim++ generates significantly more network traffic than iFogSim2. As shown in Table 9 and Figure 9, FogNetSim++ produces over 2.2 million bytes of traffic compared to only 331,000 bytes in iFogSim2. This is primarily due to the manual duplication of messages in processing modules. Each RAW_DATA tuple is manually replicated as PROCESSED_DATA and ACTION_COMMAND, whereas iFogSim2 handles these transformations implicitly through selectivity rules. Moreover, OMNeT++ provides no abstraction for flow management; every emission and redirection must be explicitly declared and simulated. This granularity allows fine tracking of message exchanges but increases the volume of transmitted bytes. The result is nearly seven times more network load in FogNetSim++ without degrading loop latency or overall simulation performance.
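The link between explicit duplication and traffic volume can be made concrete with a toy byte count: traffic grows with the number of emitted outputs per input, times the links each output crosses, times the tuple size. The Python sketch below is a hypothetical illustration; the function network_bytes, the hop counts, the tuple size, and the 0.5 selectivity are made up and are not the configuration used in either experiment, nor a model of iFogSim2’s actual selectivity classes.

```python
def network_bytes(n_raw, tuple_bytes, emissions):
    """Total bytes sent when RAW_DATA tuples trigger the listed emissions.

    emissions: list of (output_type, fraction, hops). 'fraction' plays the
    role of a selectivity: 1.0 means every input tuple yields one output tuple,
    which is then transmitted over 'hops' links.
    """
    total = 0.0
    for _output_type, fraction, hops in emissions:
        total += n_raw * fraction * hops * tuple_bytes
    return total


# Explicit model: every RAW_DATA tuple is duplicated into both outputs.
explicit = [("PROCESSED_DATA", 1.0, 2), ("ACTION_COMMAND", 1.0, 1)]
# Selective model (hypothetical): only half of the inputs yield PROCESSED_DATA.
selective = [("PROCESSED_DATA", 0.5, 2), ("ACTION_COMMAND", 1.0, 1)]

print(network_bytes(100, 500, explicit), network_bytes(100, 500, selective))
```

Under these made-up parameters, the explicit model sends 150,000 bytes against 100,000 for the selective one; the point is the proportionality, not the specific ratio.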

5.2.7. Scalability Evaluation

The results presented in Table 10 and Table 11, supported by Figures 10–15, provide a detailed analysis of the scalability of both simulators in response to the progressive increase in architectural complexity (scenarios S1 to S4). To ensure a multidimensional evaluation, six representative metrics were selected: execution time, application loop delay, CPU delay for PROCESSED_DATA tuples, Cloud energy consumption, Cloud execution cost, and network traffic. These metrics respectively reflect temporal efficiency, application responsiveness, processing load, energy consumption, operational cost, and impact on the network infrastructure.

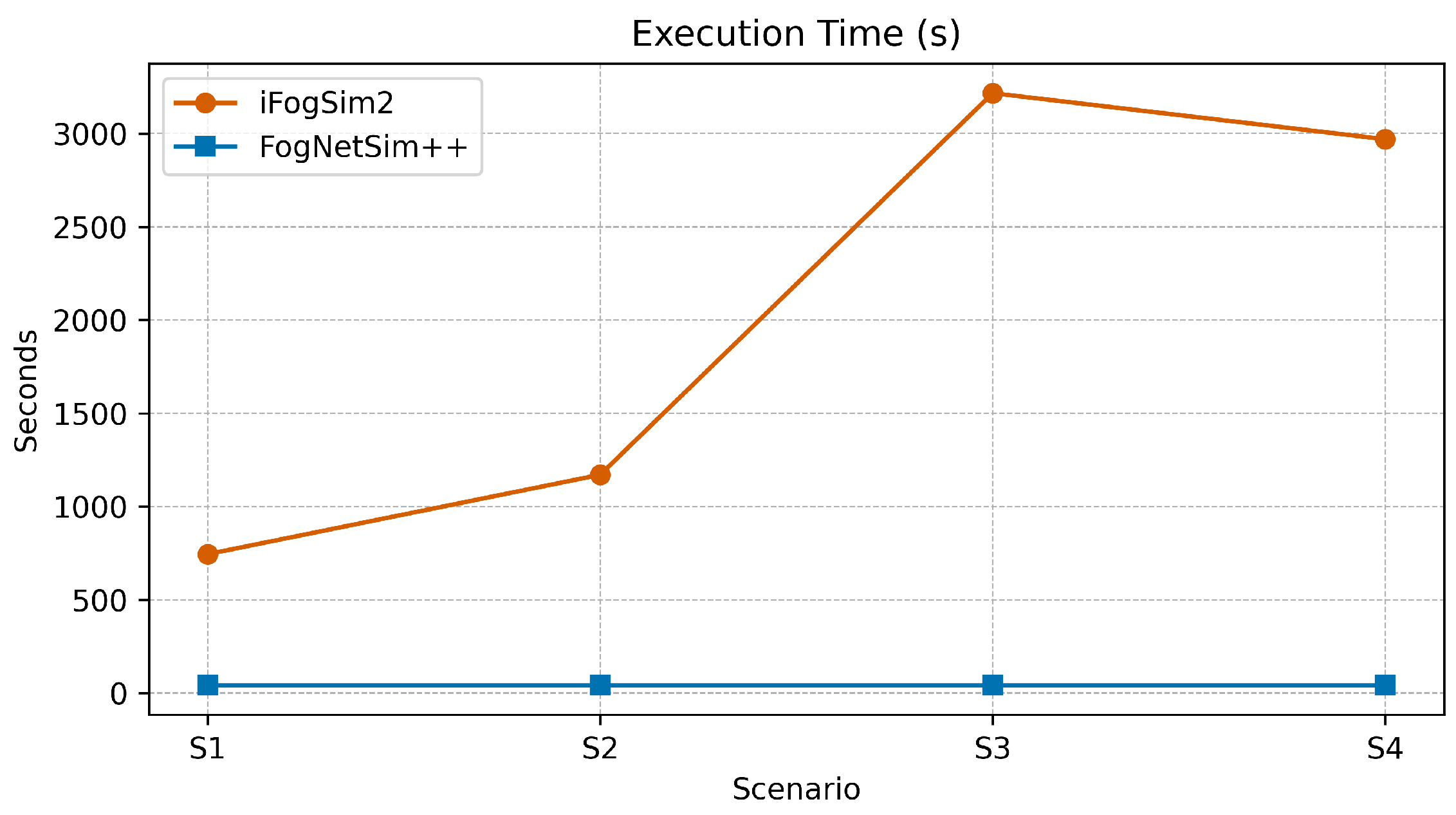

As the number of Edge and Fog nodes increases, iFogSim2 exhibits a significant rise in execution time, from 745 s in S1 to 3217 s in S3, followed by a slight decrease to 2970 s in S4, likely due to queue saturation. In contrast, FogNetSim++ maintains a constant execution time of approximately 42 s across all scenarios (Figure 10), indicating excellent runtime stability even under increasing system load.

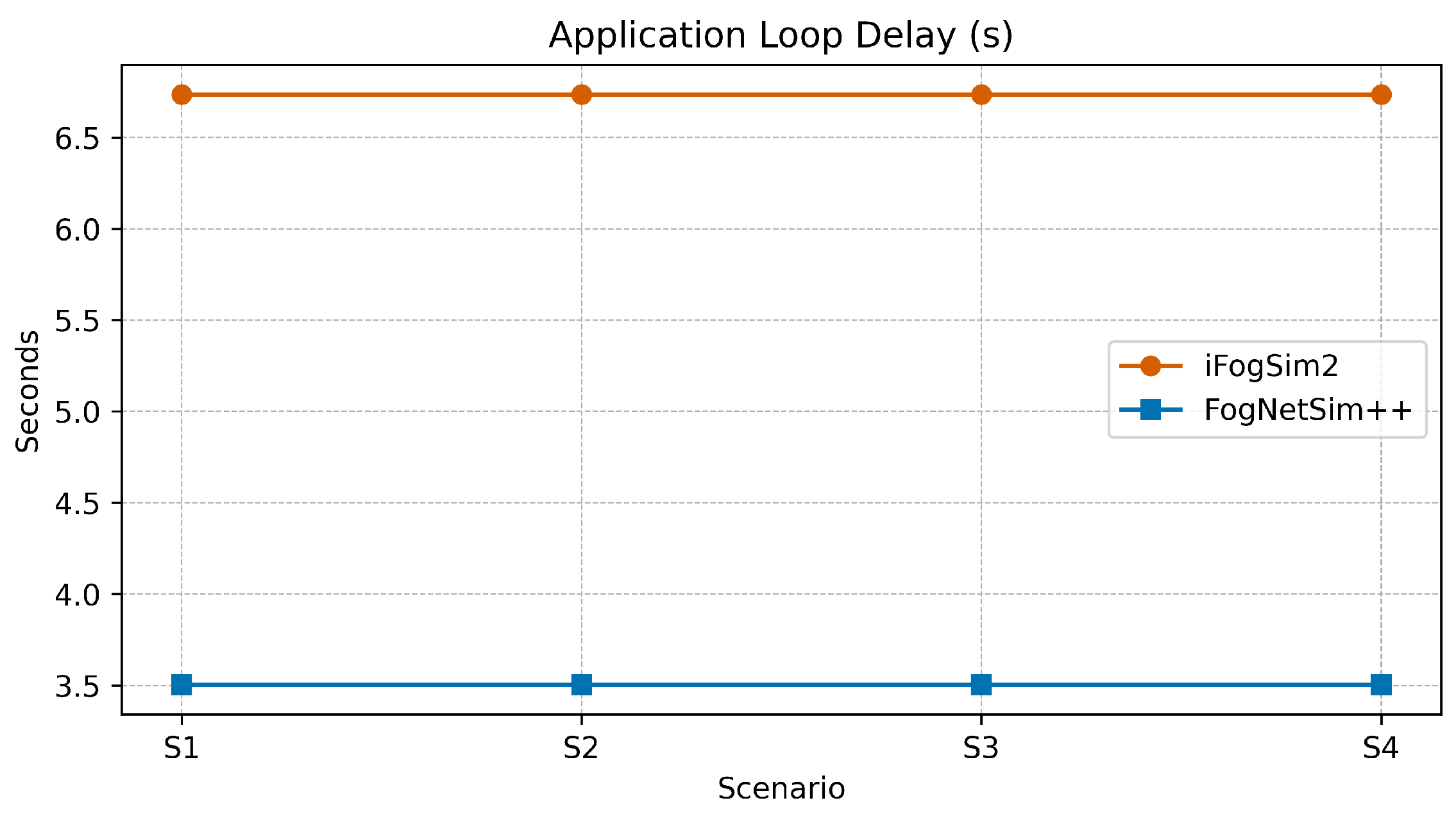

In terms of responsiveness, the application loop delay remains stable within each simulator but differs significantly in absolute value: 6.7355 s for iFogSim2 versus 3.5024 s for FogNetSim++ (Figure 11). This consistency highlights FogNetSim++’s ability to maintain real-time behavior at scale, which is particularly advantageous for modeling latency-sensitive services.

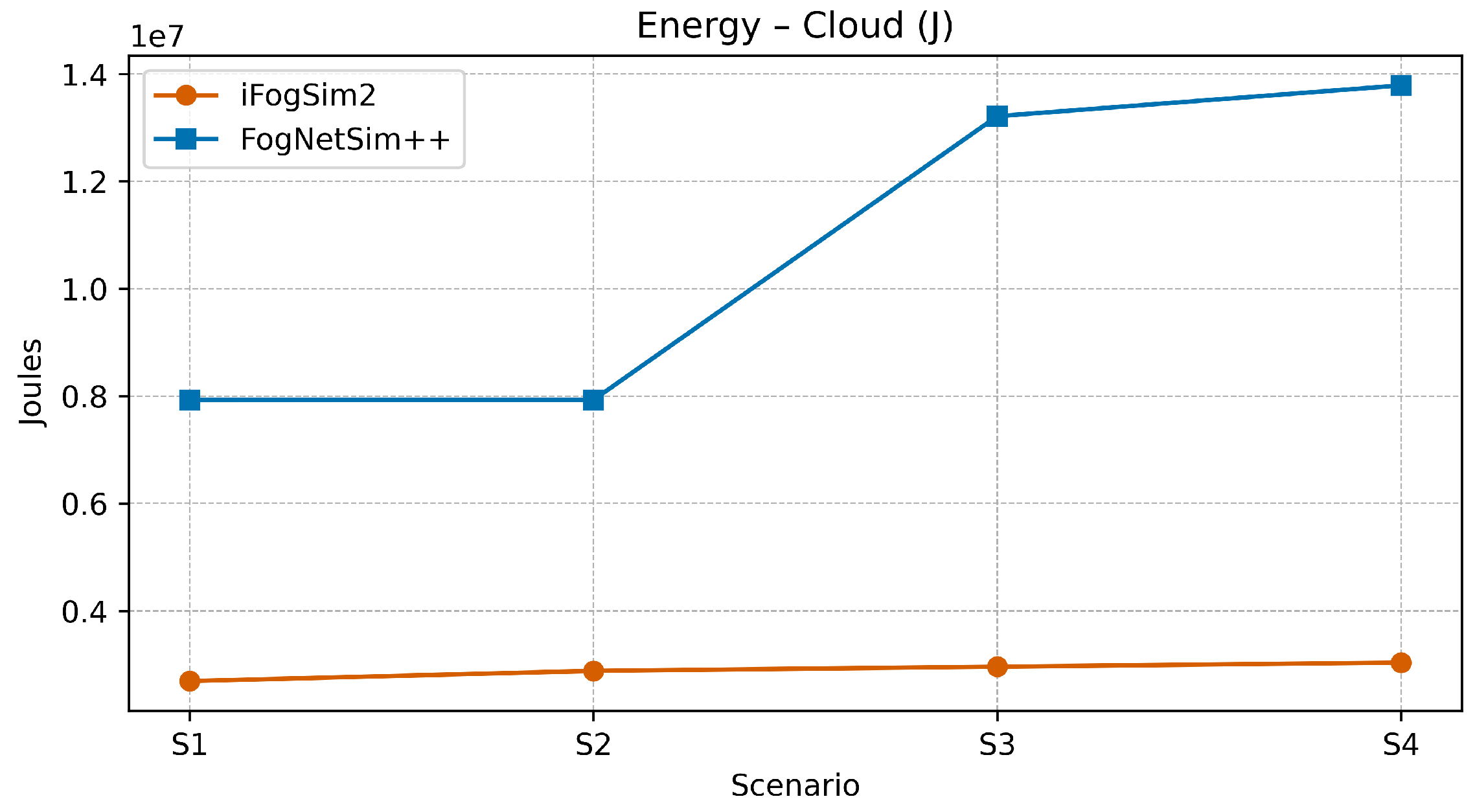

This performance, however, comes with a higher resource cost. In FogNetSim++, Cloud energy consumption rises by roughly 73% between scenarios S2 and S4, from 7.9 to 13.7 million joules (Figure 13), while execution cost more than doubles, from 263k to 554k units (Figure 14). In comparison, iFogSim2 shows a more gradual and contained progression across these two metrics, reflecting more efficient resource usage enabled by its abstract modeling.

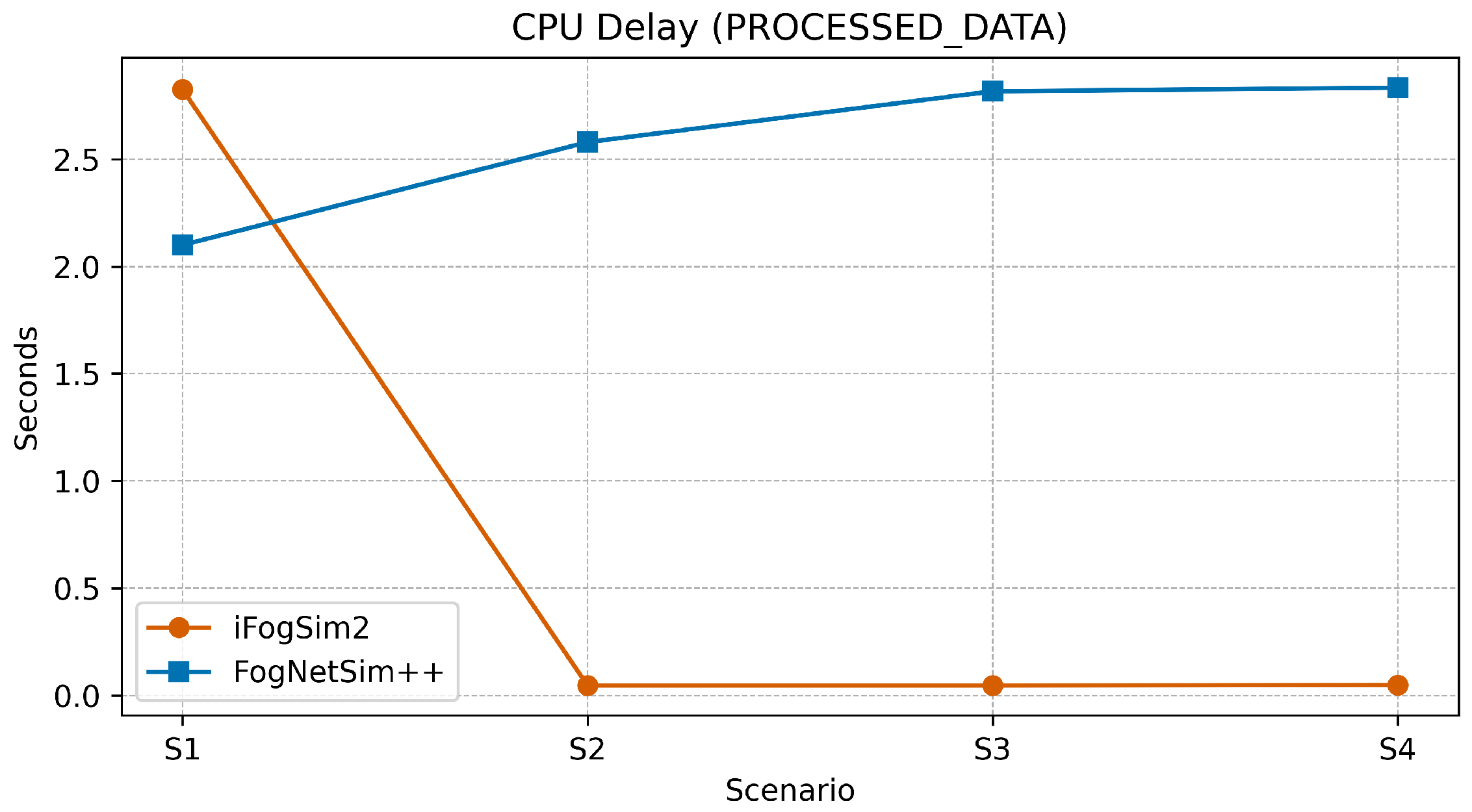

CPU processing of PROCESSED_DATA tuples reveals contrasting behaviors: in iFogSim2, this delay becomes negligible from scenario S2 onward due to implicit transformations via selectivity rules. In contrast, FogNetSim++ exhibits a progressive increase in delay, reaching 2.83 s in S4 (Figure 12), a direct result of the explicit and detailed handling of processing operations.

Finally, the volume of generated network traffic underscores the differences in modeling strategy. FogNetSim++ simulates each transmission independently, causing traffic to increase from 2.06 to 2.56 million bytes between S1 and S4 (Figure 15), whereas iFogSim2 limits data exchanges through internal abstraction mechanisms, with only 661,680 bytes in the most complex scenario.
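The growth factors implied by the values reported in this subsection can be summarized as simple ratios, computed here from the rounded figures given in the text:

$$
\begin{aligned}
\text{iFogSim2 execution time, S1} \to \text{S3:}\quad & 3217 / 745 \approx 4.32\\
\text{FogNetSim++ Cloud energy, S2} \to \text{S4:}\quad & 13.7 / 7.9 \approx 1.73\\
\text{FogNetSim++ Cloud execution cost, S2} \to \text{S4:}\quad & 554 / 263 \approx 2.11\\
\text{FogNetSim++ network traffic, S1} \to \text{S4:}\quad & 2.56 / 2.06 \approx 1.24
\end{aligned}
$$

By contrast, FogNetSim++’s execution time stays at about 42 s in all four scenarios, a growth factor of roughly 1.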

In summary, iFogSim2 demonstrates moderate scalability with limited resource consumption, making it suitable for mid-scale topologies and lightweight simulation needs. FogNetSim++ provides superior scalability in terms of execution and latency while offering more precise control over network behavior, at the expense of higher energy and computational costs. These results highlight a fundamental trade-off between execution efficiency and modeling granularity, underscoring the complementary nature of both tools depending on the intended simulation objectives.

5.2.8. Robustness Evaluation

The results presented in Table 12, supported by Figure 16, provide insight into the behavior of both simulators when subjected to a deliberate increase in processing time from 3.5 to 10 s. The evaluation relies on two key indicators: loop delay, which reflects system responsiveness, and the number of dropped tuples, which indicates the simulator’s tolerance to congestion.

In the case of FogNetSim++, the loop delay follows the injected delay, reaching 10 s. This trend illustrates a deterministic execution model with strict compliance with the injected delays. However, this precision comes at the cost of a significant number of dropped messages. The number of dropped tuples rises from 1140 under normal conditions to 19,440 in the overload scenario. This sharp increase suggests a lack of internal adaptation mechanisms and a rigid queue management strategy.

Conversely, iFogSim2 adopts a more flexible approach. Under normal conditions, the loop delay already reaches 6.73 s. When the processing delay increases, the loop delay grows substantially, exceeding 910 s. Despite this degradation, the number of dropped tuples falls sharply, from 11,610 to only 720. This behavior suggests that messages are retained in the queue even when processing times become excessive.

These results highlight two fundamentally different strategies. FogNetSim++ maintains temporal stability at the cost of a high data loss rate under overload conditions, while iFogSim2 delays execution considerably to preserve data flow continuity.
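The two strategies can be illustrated with a generic single-server FIFO queue in which the only difference is whether the buffer is bounded. This is a minimal Python sketch under stated assumptions, not a model of either simulator’s internals: the function name simulate, the 5 s inter-arrival time, and the buffer capacity of one tuple are hypothetical, and the service times simply reuse the 3.5 s and 10 s processing delays of the two scenarios.

```python
def simulate(n_tuples, interarrival_s, service_s, capacity=None):
    """Toy single-server FIFO queue with periodic arrivals.

    capacity: maximum number of tuples in the system (waiting + in service);
    None means an unbounded buffer, so nothing is ever dropped.
    Returns (mean end-to-end delay of served tuples, number of dropped tuples).
    """
    busy_until = 0.0   # time at which the server finishes its current backlog
    departures = []    # departure times of tuples still in the system
    delays = []
    dropped = 0
    for i in range(n_tuples):
        arrival = i * interarrival_s
        # Forget tuples that have already left the system by this arrival.
        departures = [d for d in departures if d > arrival]
        if capacity is not None and len(departures) >= capacity:
            dropped += 1  # buffer full: the tuple is discarded
            continue
        start = max(arrival, busy_until)  # wait for earlier tuples (FIFO)
        busy_until = start + service_s
        departures.append(busy_until)
        delays.append(busy_until - arrival)
    mean_delay = sum(delays) / len(delays) if delays else 0.0
    return mean_delay, dropped


# Same arrival pattern, nominal (3.5 s) vs. stressed (10 s) processing.
for service in (3.5, 10.0):
    print(service, simulate(10, 5.0, service, capacity=1), simulate(10, 5.0, service))
```

With 3.5 s service, both variants serve every tuple with a 3.5 s delay. With 10 s service, the bounded variant keeps each served tuple’s delay at exactly 10 s but discards half of the arrivals, while the unbounded variant serves everything as the average delay climbs with the backlog (32.5 s over ten tuples), qualitatively mirroring the FogNetSim++ and iFogSim2 profiles respectively.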

This evaluation complements the findings from the scalability analysis. It demonstrates that robustness does not solely depend on the stability of temporal metrics, but also on the simulator’s ability to absorb delays and handle events under constrained conditions. These results help better characterize the internal logic governing event and queue management in each simulator, which is essential for interpreting performance measurements.

6. Recommendations and Guidelines

In light of the results obtained, several guidelines can be drawn to assist researchers in selecting and using the iFogSim2 and FogNetSim++ simulators within hierarchical IoT architectures.

iFogSim2 promotes abstraction and rapid prototyping through its selectivity mechanism, which automates tuple transformation and simplifies flow management. This approach reduces modeling overhead but partially obscures the details related to concurrent transmissions, queue handling, or channel contention.

Conversely, FogNetSim++ is based on the explicit modeling of each message, each duplication, and each transmission delay. This configuration gives the user direct control over network behavior and distributed processing, at the cost of more complex development and more detailed code.

This methodological contrast is reflected throughout the observed results. FogNetSim++ demonstrates strong performance in terms of latency and execution speed, partly due to its efficient event engine and more realistic traffic management. However, this precision comes at the expense of higher energy consumption and increased network traffic, due to the lack of implicit transformation mechanisms. iFogSim2, on the other hand, offers greater logical readability and more balanced energy usage, making it particularly suitable for mid-scale simulations or scenarios where temporal granularity is of secondary importance.

The analysis of scalability scenarios confirms this orientation. FogNetSim++ maintains stable loop delay and execution time even as the number of devices increases, demonstrating excellent runtime stability at scale. In contrast, iFogSim2 shows an increase in execution time and resource usage, which reflects its abstract modeling approach but may limit its applicability in very large-scale deployments.

The robustness evaluation also highlights the trade-offs between the two tools. Under stress conditions, iFogSim2 demonstrates strong resilience by retaining messages and ensuring the continuity of data flows, albeit at the cost of increased latency. FogNetSim++, on the other hand, maintains strict timing accuracy but tends to drop a larger number of tuples under overload, due to its deterministic event processing and more rigid queue management.

The choice between iFogSim2 and FogNetSim++ closely depends on the simulation’s priorities. iFogSim2 is particularly well-suited for exploratory experiments or for evaluating placement and resource allocation strategies, especially when ease of development and rapid deployment are sought. In contrast, FogNetSim++ is better suited for studies that require high temporal precision, fine-grained traceability of network exchanges, and enhanced behavioral fidelity. Its ability to accurately reproduce temporal interactions, simulate congestion effects, and handle large-scale topologies with low latency makes it a valuable tool for research focused on responsiveness, robustness, and infrastructure scalability in constrained operational environments.

7. Conclusions and Future Directions

This work presents a structured method for evaluating IoT simulators capable of modeling the Edge–Fog–Cloud continuum. Thirteen open-source tools were examined based on functional, technical, and practical criteria. Among them, iFogSim2 and FogNetSim++ were selected for the experimental phase due to their ability to explicitly represent the three layers of the IoT architecture and support key features such as module migration, mobility, software modularity, and energy modeling.

The comparative results highlight that the two simulators follow distinct modeling paradigms. iFogSim2 favors abstraction to facilitate functional exploration and accelerate prototyping, while FogNetSim++ provides a more detailed representation of network interactions and distributed execution. This contrast was further confirmed through additional evaluations incorporating scalability and robustness metrics. FogNetSim++ demonstrated high execution stability in the face of increasing architectural complexity, along with strong performance in maintaining low latency. In contrast, iFogSim2 exhibited greater resilience under stress conditions by preserving data flow continuity despite significant execution delays. These complementary profiles underscore the importance of aligning simulator selection with the specific constraints and objectives of the targeted IoT environment.

Based on the results obtained, several research directions emerge as priorities. The simulation of IoT architectures incorporating decentralized security mechanisms, such as those based on blockchain, remains a necessary avenue. Such investigations would enable the evaluation of the impact of consensus protocols and access control systems on energy consumption and network performance. The modeling of critical medical applications also represents a significant challenge. These applications impose strict requirements in terms of real-time responsiveness, reliability, and traceability, which call for advanced simulation capabilities. Finally, the development of standardized, modular, and reusable experimental testbeds would promote cross-tool comparisons and strengthen research reproducibility across the scientific community.