Federated Learning for Anomaly Detection: A Systematic Review on Scalability, Adaptability, and Benchmarking Framework

Abstract

1. Introduction

- Examine the impact of communication overhead on scalability and real-time performance, and propose possible solutions.

- Analyse the adaptation of FL frameworks to dynamic server clusters.

- Identify key components for a standardised benchmarking framework to evaluate FL in anomaly detection.

2. Overview of FL

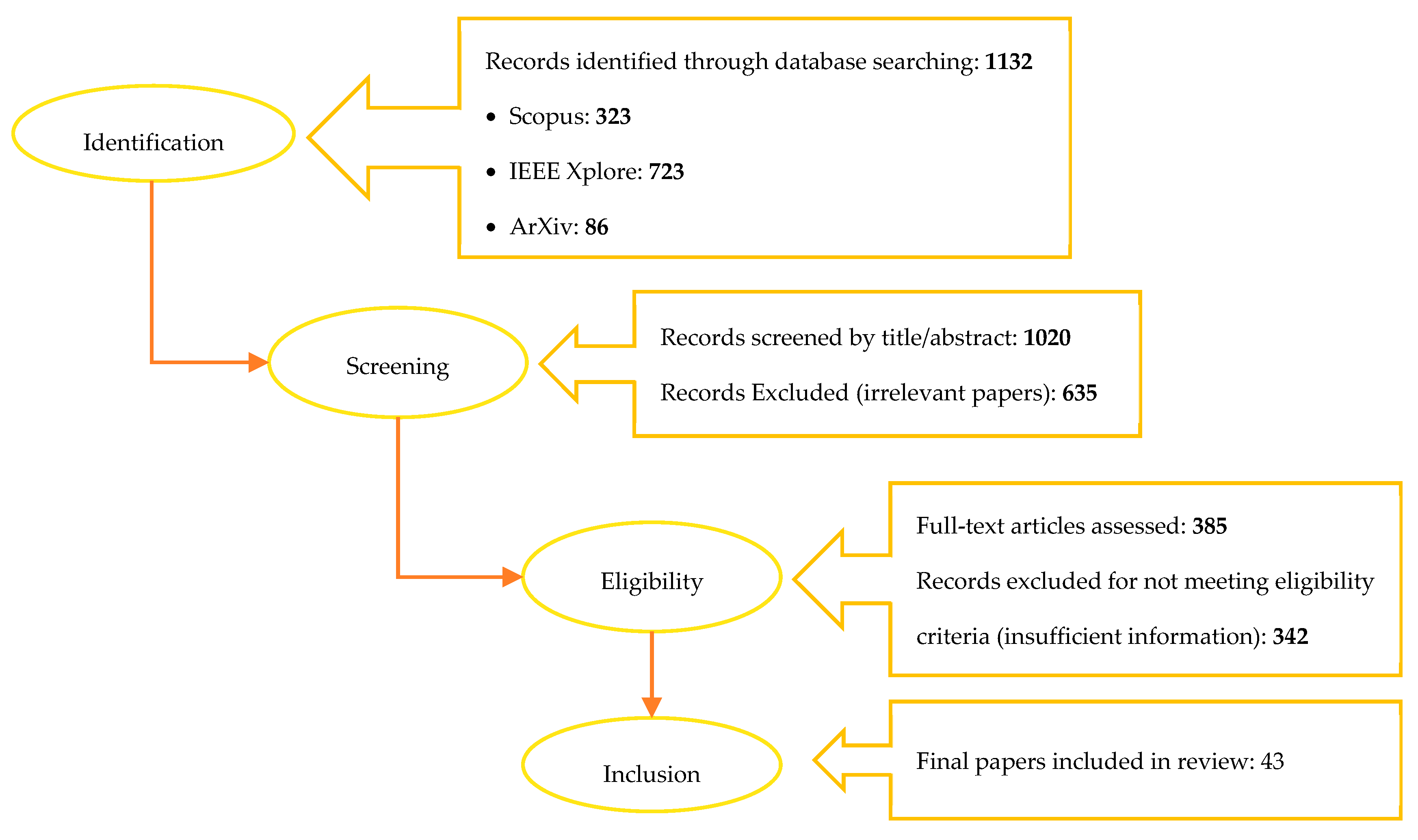

3. Materials and Methods

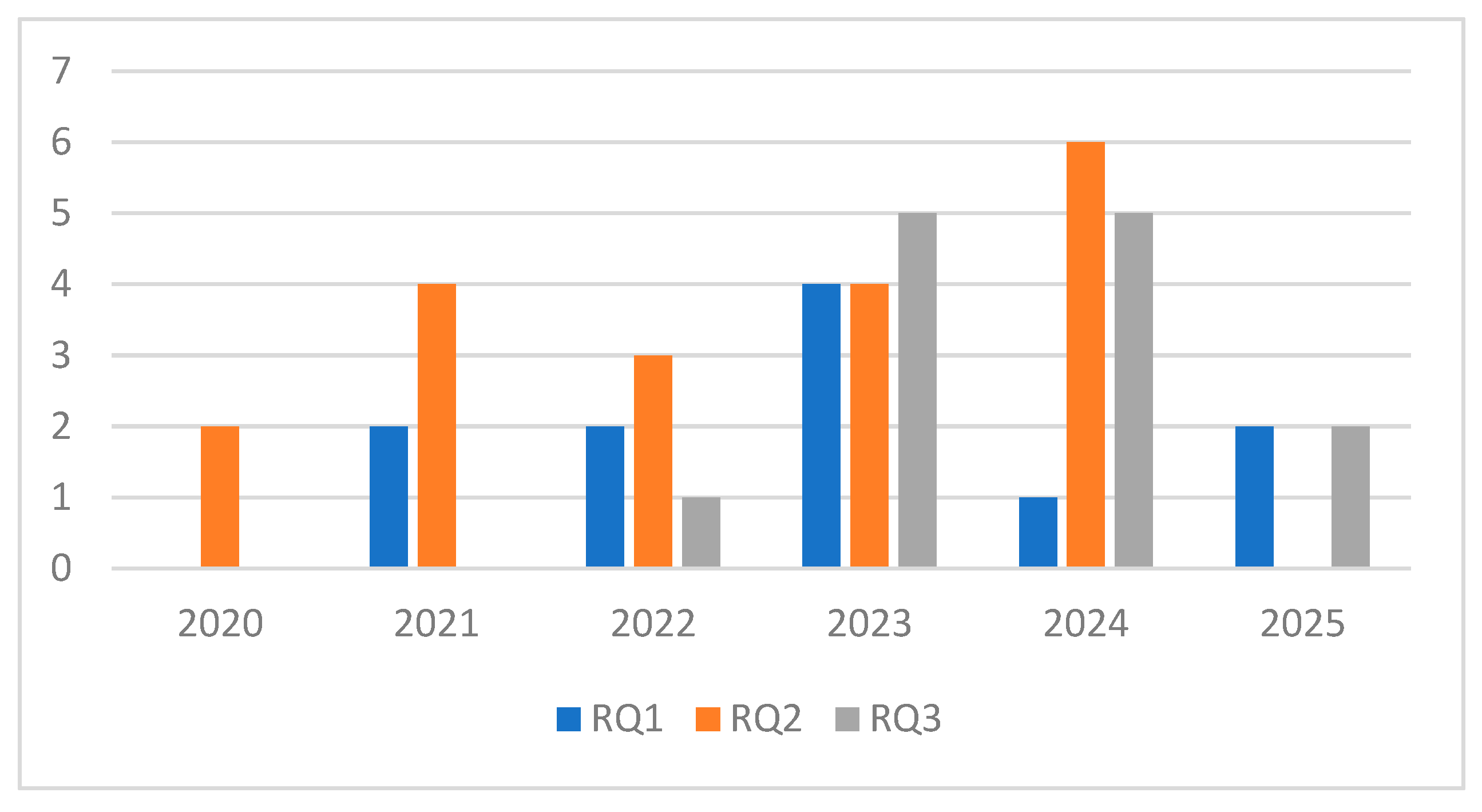

3.1. Research Questions

- RQ 1—How does communication overhead impact the scalability and real-time performance of Federated Learning for anomaly detection in distributed server environments?

- RQ 2—How can a Federated Learning framework adapt to dynamic server clusters?

- RQ3—What key components are required to develop a standardised benchmarking framework for evaluating the performance of Federated Learning anomaly detection?

3.2. Data Search Strategies

3.3. Paper Selection

3.4. Data Extraction and Synthesis

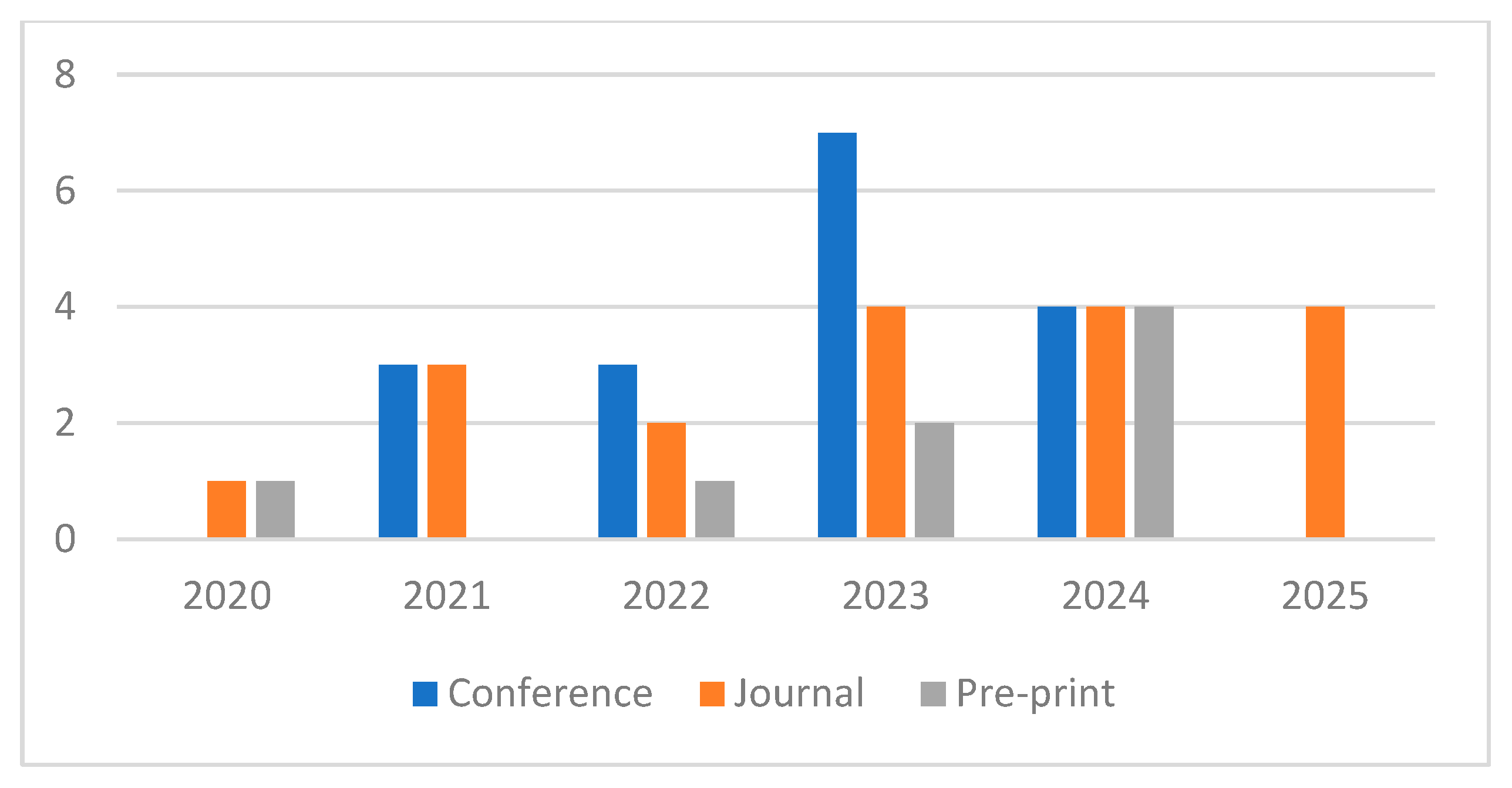

4. Results and Discussion

| No. | Databases Searched | Title | Publication Type | Publication Year | RQ | Ref. |

|---|---|---|---|---|---|---|

| 1 | Scopus | Anomaly Detection from Distributed Data Sources via Federated Learning | Conference | 2022 | RQ1 | [19] |

| 2 | Scopus | FedMSE: Semi-supervised federated learning approach for IoT network intrusion detection | Journal | 2025 | RQ1 | [24] |

| 3 | Scopus | FedTADBench: Federated Time-series Anomaly Detection Benchmark | Conference | 2022 | RQ1 | [28] |

| 4 | ArXiv | Federated Semi-Supervised and Semi-Asynchronous Learning for Anomaly Detection in IoT Networks | Journal | 2023 | RQ1 | [29] |

| 5 | Scopus | Communication-Efficient Federated Learning for Network Traffic Anomaly Detection | Conference | 2023 | RQ1 | [30] |

| 6 | Scopus | Adaptive Hierarchical GHSOM with Federated Learning for Context-Aware Anomaly Detection in IoT Networks | Conference | 2024 | RQ1 | [31] |

| 7 | IEEE | Asynchronous Real-Time Federated Learning for Anomaly Detection in Microservice Cloud Applications | Journal | 2025 | RQ1 | [32] |

| 8 | ArXiv | TemporalFED: Detecting Cyberattacks in Industrial Time-Series Data Using Decentralized Federated Learning | Journal | 2023 | RQ1 | [33] |

| 9 | IEEE | Quantized Distributed Federated Learning for Industrial Internet of Things | Journal | 2021 | RQ1 | [34] |

| 10 | Scopus | Decentralized Federated Learning for Industrial IoT With Deep Echo State Networks | Journal | 2023 | RQ1 | [35] |

| 11 | Scopus | FedSA: A Semi-Asynchronous Federated Learning Mechanism in Heterogeneous Edge Computing | Journal | 2021 | RQ1 | [36] |

| 12 | IEEE | Dynamic Clustering in Federated Learning | Conference | 2021 | RQ2 | [37] |

| 13 | Scopus | Federated Learning in Dynamic and Heterogeneous Environments: Advantages, Performances, and Privacy Problems | Journal | 2024 | RQ2 | [38] |

| 14 | Scopus | DCFL: Dynamic Clustered Federated Learning under Differential Privacy Settings | Conference | 2023 | RQ2 | [39] |

| 15 | Scopus | Fed-RAC: Resource-Aware Clustering for Tackling Heterogeneity of Participants in Federated Learning | Journal | 2024 | RQ2 | [40] |

| 16 | Scopus | Highlight Every Step: Knowledge Distillation via Collaborative Teaching | Journal | 2022 | RQ2 | [41] |

| 17 | Scopus | A dynamic adaptive iterative clustered federated learning scheme | Journal | 2023 | RQ2 | [42] |

| 18 | Scopus | Clustered Federated Learning: Model-Agnostic Distributed Multitask Optimization Under Privacy Constraints | Journal | 2021 | RQ2 | [43] |

| 19 | ArXiv | FedAC: An Adaptive Clustered Federated Learning Framework for Heterogeneous Data | Journal | 2024 | RQ2 | [44] |

| 20 | Scopus | FedGroup: Efficient Federated Learning via Decomposed Similarity-Based Clustering | Conference | 2021 | RQ2 | [45] |

| 21 | Scopus | Multi-center federated learning: clients clustering for better personalization | Journal | 2023 | RQ2 | [46] |

| 22 | ArXiv | Towards Client-Driven Federated Learning | Journal | 2024 | RQ2 | [47] |

| 23 | ArXiv | Three Approaches for Personalization with Applications to Federated Learning | Journal | 2020 | RQ2 | [48] |

| 24 | Scopus | FedSoft: Soft Clustered Federated Learning with Proximal Local Updating | Conference | 2022 | RQ2 | [49] |

| 25 | ArXiv | IP-FL: Incentivized and Personalized Federated Learning | Journal | 2024 | RQ2 | [50] |

| 26 | Scopus | An Efficient Framework for Clustered Federated Learning | Journal | 2022 | RQ2 | [51] |

| 27 | Scopus | Temporal Adaptive Clustering for Heterogeneous Clients in Federated Learning | Conference | 2024 | RQ2 | [52] |

| 28 | Scopus | Efficient Cluster Selection for Personalized Federated Learning: A Multi-Armed Bandit Approach | Conference | 2023 | RQ2 | [53] |

| 29 | Scopus | Automated Collaborator Selection for Federated Learning with Multi-armed Bandit Agents | Conference | 2021 | RQ2 | [54] |

| 30 | Scopus | Multi-Armed Bandit-Based Client Scheduling for Federated Learning | Journal | 2020 | RQ2 | [55] |

| 31 | Scopus | FedAD-Bench: A Unified Benchmark for Federated Unsupervised Anomaly Detection in Tabular Data | Conference | 2024 | RQ3 | [56] |

| 32 | ArXiv | Anomaly Detection in Large-Scale Cloud Systems: An Industry Case and Dataset | Journal | 2024 | RQ3 | [57] |

| 33 | Scopus | Federated Learning for Network Anomaly Detection in a Distributed Industrial Environment | Conference | 2023 | RQ3 | [58] |

| 34 | Scopus | Performance Evaluation of Federated Learning for Anomaly Network Detection | Conference | 2023 | RQ3 | [59] |

| 35 | Scopus | Anomaly Detection via Federated Learning | Conference | 2023 | RQ3 | [60] |

| 36 | Scopus | Privacy-Preserving Federated Learning-Based Intrusion Detection Technique for Cyber-Physical Systems | Journal | 2024 | RQ3 | [61] |

| 37 | Scopus | Intrusion Detection Approach for Industrial Internet of Things Traffic Using Deep Recurrent Reinforcement Learning Assisted Federated Learning | Journal | 2025 | RQ3 | [62] |

| 38 | Scopus | A Federated Learning Approach for Efficient Anomaly Detection in Electric Power Steering Systems | Journal | 2024 | RQ3 | [63] |

| 39 | Scopus | CAFNet: Compressed Autoencoder-based Federated Network for Anomaly Detection | Conference | 2023 | RQ3 | [64] |

| 40 | Scopus | Federated Learning for Cloud and Edge Security: A Systematic Review of Challenges and AI Opportunities | Journal | 2025 | RQ3 | [65] |

| 41 | ArXiv | UniFed: All-in-One Federated Learning Platform to Unify Open-Source Frameworks | Journal | 2022 | RQ3 | [66] |

| 42 | Scopus | Trust-Based Anomaly Detection in Federated Edge Learning | Conference | 2024 | RQ3 | [67] |

| 43 | Scopus | Fed-ANIDS: Federated learning for anomaly-based network intrusion detection systems | Journal | 2023 | RQ3 | [68] |

4.1. Research Question 1—How Does Communication Overhead Impact the Scalability and Real-Time Performance of Federated Learning for Anomaly Detection in Distributed Server Environments?

| Method | Core Technique | Strengths | Suitable Use Case | Source |

|---|---|---|---|---|

| Model Compression | | | | [29,30] |

| Selective Update | | | | [31] |

| Weighted Aggregation | | | | [30] |

| Asynchronous Aggregation | | | | [32] |

| Decentralised FL (DFL) | | | | [33,34] |

| Semi-Decentralised FL (SDFL) | | | | [33] |

| Client Clustering/Selection | | | | [30] |

| Difference Transmission | | | | [29] |

| Adaptive Learning Rate | | | | [29,36] |
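
The difference-transmission and model-compression rows above can be illustrated with a minimal sketch. It assumes a client sends only the `k` largest-magnitude entries of the difference between its local weights and the current global weights, and the server adds that sparse update back. The function names, the top-k rule, and the toy values are illustrative assumptions, not the specific methods of [29] or [30].

```python
from typing import Dict, List


def sparse_delta(local: List[float], global_: List[float], k: int) -> Dict[int, float]:
    """Return the k largest-magnitude entries of (local - global) as {index: value}."""
    delta = [l - g for l, g in zip(local, global_)]
    # Rank coordinates by absolute change and keep the top k (hypothetical rule).
    top = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)[:k]
    return {i: delta[i] for i in sorted(top)}


def apply_delta(global_: List[float], update: Dict[int, float]) -> List[float]:
    """Apply a sparse update to a copy of the global weights."""
    out = list(global_)
    for i, v in update.items():
        out[i] += v
    return out


# Toy example: 4 parameters, only 2 coordinates are transmitted.
global_weights = [0.0, 1.0, 2.0, 3.0]
local_weights = [0.5, 1.0, 1.0, 3.25]
update = sparse_delta(local_weights, global_weights, k=2)
restored = apply_delta(global_weights, update)
print(update)    # sparse payload sent by the client
print(restored)  # server-side weights after applying the payload
```

In this sketch the payload shrinks from four values to two index-value pairs, at the cost of dropping the smallest change; whether that trade-off is acceptable depends on the model and the anomaly-detection task.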

4.2. Research Question 2—How Can a Federated Learning Framework Adapt to Dynamic Server Clusters?

| Method/Paper | Key Mechanism/Method | Adaptation Mechanism | Advantages |

|---|---|---|---|

| Category: Server-Driven | | | |

| DCFL [39] | | Client data distribution changes dynamic cluster restructuring | |

| Fed-RAC [40,41] | | High-resource clients act as leaders; inefficient clusters are compressed | |

| AICFL [42] | | Clients join/leave clusters based on loss evaluation | |

| Improved CFL [43] | | Gradient-based dynamic client reassignment | |

| FedAC [44], EDC [45] | | Real-time clustering based on model/gradient drift | |

| [46] | | Parameter distance-based dynamic reassignment | |

| Category: Client-Driven | | | |

| CDFL [47] | | Clients initiate updates when local performance shifts | |

| FedAMP [48], FedPCL [49] | | Clients adjust participation and aggregation weights | |

| [50] | | Clients withdraw when performance drops | |

| [51] | | Self-evaluation to find best-fitting cluster | |

| Category: Adaptive and Learning-based | | | |

| GAN + HypCluster [37] | | Multi-stage adaptation to data and resource variation | |

| Temporal K-Means [52] | | Periodic re-clustering based on evolving data patterns | |

| MAB + dUCB [53] | | Rewards-based client-cluster assignment | |

| MAB Extensions [54,55] | | Selects high-contribution or low-latency clients | |
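
The loss-based adaptation mechanisms in the table above (clients joining or leaving clusters based on loss evaluation, or self-evaluating to find the best-fitting cluster) can be sketched as follows. This is a toy illustration under strong assumptions: each cluster "model" is a single scalar, the loss is mean squared error, and the names `mse` and `assign_clusters` are hypothetical. It is not the algorithm of any cited paper.

```python
from typing import Dict, List


def mse(data: List[float], centre: float) -> float:
    """Mean squared error of a client's data against a scalar cluster model."""
    return sum((x - centre) ** 2 for x in data) / len(data)


def assign_clusters(client_data: Dict[str, List[float]],
                    cluster_models: List[float]) -> Dict[str, int]:
    """Assign each client to the cluster whose model gives the lowest local loss."""
    assignment = {}
    for cid, data in client_data.items():
        losses = [mse(data, m) for m in cluster_models]
        assignment[cid] = losses.index(min(losses))
    return assignment


clients = {"a": [0.9, 1.1], "b": [4.8, 5.2], "c": [1.0, 1.2]}
models = [1.0, 5.0]  # two hypothetical cluster models

first = assign_clusters(clients, models)

# Simulate drift: client "c" starts producing data that resembles cluster 1.
clients["c"] = [5.1, 4.9]
second = assign_clusters(clients, models)

print(first)
print(second)
```

Re-running the assignment after the drift moves client `c` to the other cluster, which is the basic behaviour a dynamic clustering scheme needs; real schemes also have to decide how often to re-evaluate and how to update the cluster models themselves.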

4.3. Research Question 3—What Key Components Are Required to Develop a Standardised Benchmarking Framework for Evaluating the Performance of Federated Learning Anomaly Detection?

4.4. Dataset

| Dataset Name and Size | Domain | Availability | Temporal (Time Series) | Multivariate/High-Dimensional | Non-IID Partition Feasible | Labelled Anomalies | Real-World (vs Simulated) | Scalable to FL Setting |

|---|---|---|---|---|---|---|---|---|

| IBM Cloud [57] (39,365 records, 117,448 features) | Cloud Telemetry | Public | No, Tabular Snapshot | Very High-Dimensional | Limited (needs engineering) | Not Explicitly | Real-world | Limited due to size |

| NAB [57] (57 time series × ~6303 rows each) | Streaming/Cloud Ops | Public | Yes | Limited (univariate/mixed) | Time-based partitions | Yes | Mixed (real + synthetic) | Yes |

| Microsoft Cloud Monitoring [57] (67 time series × ~3757 rows each) | Cloud Systems Monitoring | Public | Yes | Moderate (67 metrics) | Can simulate clients | Yes | Real-world | Yes |

| Westermo ICS [58] (1.8 million packets → 48,657 flow records, 53 features) | Industrial Networks/ICS | Public | Yes | Multivariate and High-Dimensional | Per device/attack type | Yes | Simulated testbed | Yes |

| CICIDS2017 [59] (~2.8 million network flow records) | Network Security/IDS | Public | Yes (Flow-based) | Multivariate | Per attack/client | Yes | Realistic traffic | Yes |

| KDDCUP99 [56] (494,021 samples, 41 features); NSL-KDD [56] (148,517 samples, 41 features) | General Network Intrusion | Public | No, Static | Low to Moderate | Easily partitioned | Yes | Synthetic or outdated | Yes |

| Arrhythmia [56] (452 samples, 274 features); Thyroid [56] (3772 samples, 6 features) | Medical/Bioinformatics | Public | No, Tabular | Moderate | Class-based partitions | Yes | Out-of-domain | Yes |
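
The "Non-IID Partition Feasible" column above refers to splitting a dataset so that clients see different label or device distributions. A minimal sketch of a label-skew partition is shown below, assuming each record carries a class label and that a hand-written mapping decides which client holds which labels. The record identifiers, label names, and client names are made up for illustration and do not come from any listed dataset.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (record id, label)


def partition_by_label(records: List[Record],
                       label_to_client: Dict[str, str]) -> Dict[str, List[Record]]:
    """Group records into client shards according to their label (label skew)."""
    shards: Dict[str, List[Record]] = defaultdict(list)
    for rec in records:
        shards[label_to_client[rec[1]]].append(rec)
    return dict(shards)


# Hypothetical flow records with hypothetical attack labels.
records = [
    ("r1", "benign"),
    ("r2", "ddos"),
    ("r3", "benign"),
    ("r4", "portscan"),
    ("r5", "ddos"),
]
mapping = {"benign": "client_0", "ddos": "client_1", "portscan": "client_1"}

shards = partition_by_label(records, mapping)
for client, shard in shards.items():
    print(client, [rid for rid, _ in shard])
```

A per-device or time-based split, as listed for some datasets in the table, would follow the same pattern with a different grouping key.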

4.5. Experiment Setup

| Paper | Dataset | Data Type | Model Used | FL Method | FL Aggregation Algorithm |

|---|---|---|---|---|---|

| [60] | CIC-IDS2017, CIC-IDS2018, NCC-DC, MAWI-Lab | Non-IID, Multivariate | Autoencoder + Classifier | Supervised | FedSam (novel min–max + sampling) |

| [61] | TON_IoT | Heterogeneous IoT sensor data (Non-IID) | DNN, LSTM, GRU, FCN, LeNet | Supervised | Federated Averaging (FedAvg) |

| [62] | TON_IoT, Edge_IIoT, X-IIoTID | Non-IID, Multivariate Time Series | GRU (Gated Recurrent Units) + DRL | Supervised | FedAvg with DRL-assisted selection |

| [63] | EPS test jig dataset | Multivariate Time Series | Unsupervised Anomaly Detection (USAD) | Unsupervised | Federated Averaging (FedAvg) |

| [64] | CICDDoS2019, Bot-IoT, UNSW-NB15 | Network Traffic (IID) | Autoencoder | Unsupervised | Federated Averaging (FedAvg) |
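
Most setups in the table above aggregate with Federated Averaging (FedAvg). As a reminder of what that step computes, the sketch below averages client weight vectors in proportion to each client's number of training samples. It treats a model as a flat list of floats and uses toy values; the function name `fed_avg` is an illustrative choice, not an API from any FL library.

```python
from typing import List


def fed_avg(client_weights: List[List[float]], client_sizes: List[int]) -> List[float]:
    """Sample-size-weighted average of client weight vectors (FedAvg aggregation step)."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


# Two hypothetical clients: the second holds three times as much data.
weights = [[1.0, 2.0], [3.0, 4.0]]
sizes = [1, 3]
global_weights = fed_avg(weights, sizes)
print(global_weights)
```

Variants such as the DRL-assisted selection in [62] change which clients contribute or how they are weighted, but the weighted-average core is usually the same.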

4.6. Performance Metrics
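
Several of the reviewed studies report precision, recall, and F-scores. As a worked example, the F2 values given for [19] in the summary table can be reproduced from the reported precision and recall using the standard F-beta definition, F_beta = (1 + beta^2) * P * R / (beta^2 * P + R). The sketch below is an illustration of that formula; the function name and the zero-division guard are choices made here.

```python
def f_beta(precision: float, recall: float, beta: float = 2.0) -> float:
    """Standard F-beta score; beta = 2 weights recall more heavily than precision."""
    if precision == 0.0 and recall == 0.0:
        return 0.0  # guard for cases where a model detects nothing
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Precision and recall reported for the MLP model in [19].
centralised_mlp = f_beta(0.875, 0.857)
fl15_mlp = f_beta(0.854, 0.714)

print(round(centralised_mlp * 100, 1))  # about 86.1, matching the reported F2
print(round(fl15_mlp * 100, 1))         # about 73.8, matching the reported F2
```

Because anomalies are rare, a missed anomaly is often costlier than a false alarm, which is one reason a recall-weighted score such as F2 is used alongside precision and recall.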

5. Discussion

6. Limitations of the Study

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ivanovic, M. Influence of Federated Learning on Contemporary Research and Applications. In Proceedings of the 2024 International Conference on INnovations in Intelligent SysTems and Applications (INISTA), Craiova, Romania, 4–6 September 2024; pp. 1–6.

2. Li, Y.; Yan, Y.; Liu, Z.; Yin, C.; Zhang, J.; Zhang, Z. A federated learning method based on blockchain and cluster training. Electronics 2023, 12, 4014.

3. Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. 2009, 41, 1–58.

4. Hosseinzadeh, M.; Rahmani, A.M.; Vo, B.; Bidaki, M.; Masdari, M.; Zangakani, M. Improving security using SVM-based anomaly detection: Issues and challenges. Soft Comput. 2021, 25, 3195–3223.

5. Lin, T.-H.; Jiang, J.-R. Anomaly Detection with Autoencoder and Random Forest. In Proceedings of the 2020 International Computer Symposium (ICS), Tainan, Taiwan, 17–19 December 2020; pp. 96–99.

6. Lee, T.-W.; Ong, L.-Y.; Leow, M.-C. Experimental Study using Unsupervised Anomaly Detection on Server Resources Monitoring. In Proceedings of the 2023 11th International Conference on Information and Communication Technology (ICoICT), Melaka, Malaysia, 23–24 August 2023; pp. 517–522.

7. Wang, N.; Yang, W.; Wang, X.; Wu, L.; Guan, Z.; Du, X.; Guizani, M. A blockchain based privacy-preserving federated learning scheme for Internet of Vehicles. Digit. Commun. Netw. 2024, 10, 126–134.

8. Ganguly, B.; Aggarwal, V. Online Federated Learning via Non-Stationary Detection and Adaptation Amidst Concept Drift. IEEE/ACM Trans. Netw. 2024, 32, 643–653.

9. Marfo, W.; Tosh, D.K.; Moore, S.V. Adaptive Client Selection in Federated Learning: A Network Anomaly Detection Use Case. In Proceedings of the 2025 International Conference on Computing, Networking and Communications (ICNC), Honolulu, HI, USA, 17–20 February 2025; pp. 601–605.

10. Drainakis, G.; Pantazopoulos, P.; Katsaros, K.V.; Sourlas, V.; Amditis, A.; Kaklamani, D.I. From centralized to Federated Learning: Exploring performance and end-to-end resource consumption. Comput. Netw. 2023, 225, 109657.

11. Zhang, H.; Ye, J.; Huang, W.; Liu, X.; Gu, J. Survey of federated learning in intrusion detection. J. Parallel Distrib. Comput. 2024, 195, 104976.

12. Fedorchenko, E.; Novikova, E.; Shulepov, A. Comparative review of the intrusion detection systems based on federated learning: Advantages and open challenges. Algorithms 2022, 15, 247.

13. Agrawal, S.; Sarkar, S.; Aouedi, O.; Yenduri, G.; Piamrat, K.; Alazab, M.; Bhattacharya, S.; Maddikunta, P.K.R.; Gadekallu, T.R. Federated learning for intrusion detection system: Concepts, challenges and future directions. Comput. Commun. 2022, 195, 346–361.

14. Siddiqi, S.; Qureshi, F.; Lindstaedt, S.; Kern, R. Detecting Outliers in Non-IID Data: A Systematic Literature Review. IEEE Access 2023, 11, 70333–70352.

15. Berkani, M.R.A.; Chouchane, A.; Himeur, Y.; Ouamane, A.; Miniaoui, S.; Atalla, S.; Mansoor, W.; Al-Ahmad, H. Advances in Federated Learning: Applications and Challenges in Smart Building Environments and Beyond. Computers 2025, 14, 124.

16. Wang, Y.; Zobiri, F.; Mustafa, M.A.; Nightingale, J.; Deconinck, G. Consumption prediction with privacy concern: Application and evaluation of Federated Learning. Sustain. Energy Grids Netw. 2024, 38, 101248.

17. Reddy, D.T.; Nandigam, H.; Indla, S.C.; Raja, S.P. Federated Learning in Data Privacy and Security. Adv. Distrib. Comput. Artif. Intell. J. 2024, 13, 21.

18. Korkmaz, A.; Rao, P. A Selective Homomorphic Encryption Approach for Faster Privacy-Preserving Federated Learning. arXiv 2025, arXiv:2501.12911.

19. Cavallin, F.; Mayer, R. Anomaly detection from distributed data sources via federated learning. In International Conference on Advanced Information Networking and Applications; Springer International Publishing: Cham, Switzerland, 2022; pp. 317–328.

20. Olanrewaju-George, B.; Pranggono, B. Federated learning-based intrusion detection system for the internet of things using unsupervised and supervised deep learning models. Cyber Secur. Appl. 2025, 3, 100068.

21. Nardi, M.; Valerio, L.; Passarella, A. Anomaly Detection Through Unsupervised Federated Learning. In Proceedings of the 2022 18th International Conference on Mobility, Sensing and Networking (MSN), Guangzhou, China, 14–16 December 2022; pp. 495–501.

22. Li, Y.; Li, Y. Semi-supervised federated learning for collaborative security threat detection in control system for distributed power generation. Eng. Appl. Artif. Intell. 2025, 148, 110374.

23. Quyen, N.H.; Duy, P.T.; Nguyen, N.T.; Khoa, N.H.; Pham, V.H. FedKD-IDS: A robust intrusion detection system using knowledge distillation-based semi-supervised federated learning and anti-poisoning attack mechanism. Inf. Fusion 2025, 117, 102807.

24. Nguyen, V.T.; Beuran, R. FedMSE: Semi-Supervised Federated Learning Approach for IoT Network Intrusion Detection. Comput. Secur. 2025, 151, 104337.

25. Tham, C.-K.; Yang, L.; Khanna, A.; Gera, B. Federated Learning for Anomaly Detection in Vehicular Networks. In Proceedings of the 2023 IEEE 97th Vehicular Technology Conference (VTC2023-Spring), Florence, Italy, 20–23 June 2023; pp. 1–6.

26. Hao, J.; Chen, P.; Chen, J.; Li, X. Effectively detecting and diagnosing distributed multivariate time series anomalies via Unsupervised Federated Hypernetwork. Inf. Process. Manag. 2025, 62, 104107.

27. Shrestha, R.; Mohammadi, M.; Sinaei, S.; Salcines, A.; Pampliega, D.; Clemente, R.; Lindgren, A. Anomaly detection based on LSTM and autoencoders using federated learning in smart electric grid. J. Parallel Distrib. Comput. 2024, 193, 104951.

28. Liu, F.; Zeng, C.; Zhang, L.; Zhou, Y.; Mu, Q.; Zhang, Y.; Zhang, L.; Zhu, C. FedTADBench: Federated Time-series Anomaly Detection Benchmark. In Proceedings of the 2022 IEEE 24th Int Conf on High Performance Computing & Communications; 8th Int Conf on Data Science & Systems; 20th Int Conf on Smart City; 8th Int Conf on Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys), Hainan, China, 18–20 December 2022; pp. 303–310.

29. Zhai, W.; Wang, F.; Liu, L.; Ding, Y.; Lu, W. Federated Semi-Supervised and Semi-Asynchronous Learning for Anomaly Detection in IoT Networks. arXiv 2023, arXiv:2308.11981.

30. Cui, X.; Han, X.; Liu, G.; Zuo, W.; Wang, Z. Communication-Efficient Federated Learning for Network Traffic Anomaly Detection. In Proceedings of the 2023 19th International Conference on Mobility, Sensing and Networking (MSN), Nanjing, China, 14–16 December 2023; pp. 398–405.

31. Alkulaib, L. Adaptive Hierarchical GHSOM with Federated Learning for Context-Aware Anomaly Detection in IoT Networks. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 5917–5925.

32. Raeiszadeh, M.; Ebrahimzadeh, A.; Glitho, R.H.; Eker, J.; Mini, R.A.F. Asynchronous Real-Time Federated Learning for Anomaly Detection in Microservice Cloud Applications. IEEE Trans. Mach. Learn. Commun. Netw. 2025, 3, 176–194.

33. Gómez, Á.L.P.; Beltrán, E.T.M.; Sánchez, P.M.S.; Celdrán, A.H. TemporalFED: Detecting cyberattacks in industrial time-series data using decentralized federated learning. arXiv 2023, arXiv:2308.03554.

34. Ma, T.; Wang, H.; Li, C. Quantized Distributed Federated Learning for Industrial Internet of Things. IEEE Internet Things J. 2023, 10, 3027–3036.

35. Qiu, W.; Ai, W.; Chen, H.; Feng, Q.; Tang, G. Decentralized Federated Learning for Industrial IoT With Deep Echo State Networks. IEEE Trans. Ind. Inform. 2023, 19, 5849–5857.

36. Ma, Q.; Xu, Y.; Xu, H.; Jiang, Z.; Huang, L.; Huang, H. FedSA: A Semi-Asynchronous Federated Learning Mechanism in Heterogeneous Edge Computing. IEEE J. Sel. Areas Commun. 2021, 39, 3654–3672.

37. Kim, Y.; Hakim, E.A.; Haraldson, J.; Eriksson, H.; da Silva, J.M.B.; Fischione, C. Dynamic Clustering in Federated Learning. In Proceedings of the ICC 2021 - IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

38. Liberti, F.; Berardi, D.; Martini, B. Federated Learning in Dynamic and Heterogeneous Environments: Advantages, Performances, and Privacy Problems. Appl. Sci. 2024, 14, 8490.

39. Augello, A.; Falzone, G.; Re, G.L. DCFL: Dynamic Clustered Federated Learning under Differential Privacy Settings. In Proceedings of the 2023 IEEE International Conference on Pervasive Computing and Communications Workshops and Other Affiliated Events (PerCom Workshops), Atlanta, GA, USA, 13–17 March 2023; pp. 614–619.

40. Mishra, R.; Gupta, H.P.; Banga, G.; Das, S.K. Fed-RAC: Resource-Aware Clustering for Tackling Heterogeneity of Participants in Federated Learning. IEEE Trans. Parallel Distrib. Syst. 2024, 35, 1207–1220.

41. Zhao, H.; Sun, X.; Dong, J.; Chen, C.; Dong, Z. Highlight Every Step: Knowledge Distillation via Collaborative Teaching. IEEE Trans. Cybern. 2022, 52, 2070–2081.

42. Du, R.; Xu, S.; Zhang, R.; Xu, L.; Xia, H. A dynamic adaptive iterative clustered federated learning scheme. Knowl.-Based Syst. 2023, 276, 110741.

43. Sattler, F.; Müller, K.-R.; Samek, W. Clustered Federated Learning: Model-Agnostic Distributed Multitask Optimization Under Privacy Constraints. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3710–3722.

44. Zhang, Y.; Chen, H.; Lin, Z.; Chen, Z.; Zhao, J. FedAC: An Adaptive Clustered Federated Learning Framework for Heterogeneous Data. arXiv 2024, arXiv:2403.16460.

45. Duan, M.; Liu, D.; Ji, X.; Liu, R.; Liang, L.; Chen, X.; Tan, Y. FedGroup: Efficient Federated Learning via Decomposed Similarity-Based Clustering. In Proceedings of the 2021 IEEE Intl Conf on Parallel & Distributed Processing with Applications, Big Data & Cloud Computing, Sustainable Computing & Communications, Social Computing & Networking (ISPA/BDCloud/SocialCom/SustainCom), New York City, NY, USA, 30 September–3 October 2021; pp. 228–237.

46. Long, G.; Xie, M.; Shen, T.; Zhou, T.; Wang, X.; Jiang, J. Multi-center federated learning: Clients clustering for better personalization. World Wide Web 2023, 26, 481–500.

47. Li, S.; Zhu, C. Towards client driven federated learning. arXiv 2024, arXiv:2405.15407.

48. Mansour, Y.; Mohri, M.; Ro, J.; Suresh, A.T. Three approaches for personalization with applications to federated learning. arXiv 2020, arXiv:2002.10619.

49. Ruan, Y.; Joe-Wong, C. FedSoft: Soft clustered federated learning with proximal local updating. Proc. AAAI Conf. Artif. Intell. 2022, 36, 8124–8131.

50. Khan, A.F.; Wang, X.; Le, Q.; Khan, A.A.; Ali, H.; Jin, M.; Ding, J.; Butt, A.R.; Anwar, A. IP-FL: Incentivized and Personalized Federated Learning. arXiv 2023, arXiv:2304.07514.

51. Ghosh, A.; Chung, J.; Yin, D.; Ramchandran, K. An Efficient Framework for Clustered Federated Learning. IEEE Trans. Inf. Theory 2022, 68, 8076–8091.

52. Ali, S.S.; Kumar, A.; Ali, M.; Singh, A.K.; Choi, B.J. Temporal Adaptive Clustering for Heterogeneous Clients in Federated Learning. In Proceedings of the 2024 International Conference on Information Networking (ICOIN), Ho Chi Minh City, Vietnam, 17–19 January 2024; pp. 11–16.

53. Ni, Z.; Hashemi, M. Efficient Cluster Selection for Personalized Federated Learning: A Multi-Armed Bandit Approach. In Proceedings of the 2023 IEEE Virtual Conference on Communications (VCC), NY, USA, 28–30 November 2023; pp. 115–120.

54. Larsson, H.; Riaz, H.; Ickin, S. Automated collaborator selection for federated learning with multi-armed bandit agents. In Proceedings of the 4th FlexNets Workshop on Flexible Networks Artificial Intelligence Supported Network Flexibility and Agility, Virtual Event, USA, 23 August 2021; pp. 44–49.

55. Xia, W.; Quek, T.Q.S.; Guo, K.; Wen, W.; Yang, H.H.; Zhu, H. Multi-Armed Bandit-Based Client Scheduling for Federated Learning. IEEE Trans. Wirel. Commun. 2020, 19, 7108–7123.

56. Anwar, A.; Moser, B.; Herurkar, D.; Raue, F.; Hegiste, V.; Legler, T.; Dengel, A. FedAD-Bench: A Unified Benchmark for Federated Unsupervised Anomaly Detection in Tabular Data. In Proceedings of the 2024 2nd International Conference on Federated Learning Technologies and Applications (FLTA), Valencia, Spain, 17–20 September 2024; pp. 115–122.

57. Islam, M.S.; Rakha, M.S.; Pourmajidi; Sivaloganathan, J.; Steinbacher, J.; Miranskyy, A. Anomaly Detection in Large-Scale Cloud Systems: An Industry Case and Dataset. arXiv 2024, arXiv:2411.09047.

58. Dehlaghi-Ghadim, A.; Markovic, T.; Leon, M.; Söderman, D.; Strandberg, P.E. Federated Learning for Network Anomaly Detection in a Distributed Industrial Environment. In Proceedings of the 2023 International Conference on Machine Learning and Applications (ICMLA), Jacksonville, FL, USA, 15–17 December 2023; pp. 218–225.

59. Alhammadi, R.; Gawanmeh, A.; Atalla, S.; Alkhatib, M.Q.; Mansoor, W. Performance Evaluation of Federated Learning for Anomaly Network Detection. In Proceedings of the 2023 Congress in Computer Science, Computer Engineering, & Applied Computing (CSCE), Las Vegas, NV, USA, 24–27 July 2023; pp. 116–122.

60. Vucovich, M.; Tarcar, A.; Rebelo, P.; Rahman, A.; Nandakumar, D.; Redino, C.; Choi, K.; Schiller, R.; Bhattacharya, S.; Veeramani, B.; et al. Anomaly Detection via Federated Learning. In Proceedings of the 2023 33rd International Telecommunication Networks and Applications Conference, Melbourne, Australia, 29 November–1 December 2023; pp. 259–266.

61. Mahmud, S.A.; Islam, N.; Islam, Z.; Rahman, Z.; Mehedi, S.T. Privacy-Preserving Federated Learning-Based Intrusion Detection Technique for Cyber-Physical Systems. Mathematics 2024, 12, 3194.

62. Kaur, A. Intrusion Detection Approach for Industrial Internet of Things Traffic Using Deep Recurrent Reinforcement Learning Assisted Federated Learning. IEEE Trans. Artif. Intell. 2025, 6, 37–50.

63. Kea, K.; Han, Y.; Min, Y.-J. A Federated Learning Approach for Efficient Anomaly Detection in Electric Power Steering Systems. IEEE Access 2024, 12, 67525–67536.

64. Tayeen, A.S.M.; Misra, S.; Cao, H.; Harikumar, J. CAFNet: Compressed Autoencoder-based Federated Network for Anomaly Detection. In Proceedings of the MILCOM 2023 - 2023 IEEE Military Communications Conference (MILCOM), Boston, MA, USA, 30 October–3 November 2023; pp. 325–330.

65. Albshaier, L.; Almarri, S.; Albuali, A. Federated Learning for Cloud and Edge Security: A Systematic Review of Challenges and AI Opportunities. Electronics 2025, 14, 1019.

66. Liu, X.; Shi, T.; Xie, C.; Li, Q.; Hu, K.; Kim, H.; Xu, X.; Vu-Le, T.A.; Huang, Z.; Nourian, A.; et al. UniFed: All-in-One Federated Learning Platform to Unify Open-Source Frameworks. arXiv 2022, arXiv:2207.10308.

67. Zatsarenko, R.; Chuprov, S.; Korobeinikov, D.; Reznik, L. Trust-Based Anomaly Detection in Federated Edge Learning. In Proceedings of the 2024 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 29–31 May 2024; pp. 273–279.

68. Idrissi, M.J.; Alami, H.; El Mahdaouy, A.; El Mekki, A.; Oualil, S.; Yartaoui, Z.; Berrada, I. Fed-ANIDS: Federated learning for anomaly-based network intrusion detection systems. Expert Syst. Appl. 2023, 234, 121000.

69. Xie, C.; Koyejo, S.; Gupta, I. Asynchronous federated optimization. arXiv 2019, arXiv:1903.03934.

70. Sattler, F.; Wiedemann, S.; Müller, K.-R.; Samek, W. Robust and Communication-Efficient Federated Learning From Non-i.i.d. Data. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 3400–3413.

71. Wang, Q.; Yang, Q.; He, S.; Shi, Z.; Chen, J. AsyncFedED: Asynchronous federated learning with Euclidean distance based adaptive weight aggregation. arXiv 2022, arXiv:2205.13797.

72. Shi, C.; Zhao, H.; Zhang, B.; Zhou, M.; Guo, D.; Chang, Y. FedAWA: Adaptive Optimization of Aggregation Weights in Federated Learning Using Client Vectors. arXiv 2025, arXiv:2503.15842.

73. He, C.; Li, S.; So, J.; Zeng, X.; Zhang, M.; Wang, H.; Avestimehr, S. FedML: A research library and benchmark for federated machine learning. arXiv 2023, arXiv:2007.13518.

| Paper Title | Problem Addressed | Detection Method | Key Findings | Performance |

|---|---|---|---|---|

| Anomaly Detection from Distributed Data Sources via Federated Learning [19] | FL-based anomaly detection across decentralised data sources while preserving data privacy. | Unsupervised [Isolation Forest (IF)]; Semi-supervised [Gaussian Mixture Models (GMM)]; Supervised [MLP] | The supervised model (MLP) achieves the best performance in every scenario (centralised, FL with 15 clients, FL with 30 clients), while the unsupervised model (IF) performs worst. MLP performs best in the centralised setting, drops slightly in FL with 15 clients, and declines significantly in FL with 30 clients. This indicates that adding clients can help only up to a point, beyond which FL performance degrades, so the scalability of FL remains a challenge. IF fails to detect anomalies in all scenarios, especially in FL with 30 clients. The semi-supervised model (GMM) shows high variability and unpredictable performance, although it is more stable than IF. | Credit-Card Fraud Dataset. Centralised: MLP (Precision 87.5%, Recall 85.7%, F2 86.1%); GMM (Precision 36.6%, Recall 39.8%, F2 39.1%); IF (Precision 13.3%, Recall 4.1%, F2 4.7%). FL-15 Clients: MLP (Precision 85.4%, Recall 71.4%, F2 73.8%); GMM (Precision 40.7%, Recall 52.4%, F2 48.6%); IF (Precision 0.9%, Recall 16.3%, F2 3.8%). FL-30 Clients: MLP (Precision 78.6%, Recall 44.9%, F2 49.1%); GMM (Precision 78.6%, Recall 44.9%, F2 49.1%); IF (Precision 0.0%, Recall 0.0%, F2 0.0%). |

| Federated Learning for Anomaly Detection in Vehicular Networks [25] | Intrusion and anomaly detection in Internet of Vehicles (IoV) using FL. | Supervised [LSTM]; Supervised [CNN-LSTM] | This study applies a supervised FL method to anomaly detection in vehicular networks. The FL system is built on the Federated Averaging (FedAvg) aggregation method, and FedAvg with the Adam optimiser outperforms the FedProx algorithm. The study compares LSTM and hybrid CNN-LSTM models under IID (Random) and non-IID (Quad) conditions. In the IID (Random) setting, where each client has the same data type and data distribution, CNN-LSTM performs better overall than LSTM. In the non-IID (Quad) setting, where data type and data distribution vary across clients, LSTM performs better than CNN-LSTM. Both models perform better in the IID setting than in the non-IID setting. | FedAvg-Adam (Random): LSTM (Accuracy 95.54%, Precision 92.79%, Recall 99.55%, F1 96.05%); CNN-LSTM (Accuracy 95.60%, Precision 92.96%, Recall 99.44%, F1 96.09%). FedAvg-Adam (Quad): LSTM (Accuracy 95.10%, Precision 92.51%, Recall 99.03%, F1 95.66%); CNN-LSTM (Accuracy 94.38%, Precision 92.17%, Recall 98.02%, F1 95.00%). |

| Federated learning-based intrusion detection system for the internet of things using unsupervised and supervised deep learning models [20] | Scalable intrusion detection in heterogeneous IoT networks using FL. | Unsupervised [Autoencoder (AE)] Supervised [Deep Neural Network (DNN)] | This study compares supervised and unsupervised FL methods for anomaly detection in IoT devices. The supervised model is a DNN, while the unsupervised model is an autoencoder (AE). The experiment uses accuracy, precision, recall, F1-score, true-positive rate (TPR) and false-positive rate (FPR) as performance metrics. The results show that the FL DNN achieves the same performance as the non-FL DNN across all metrics, except that the non-FL DNN has a higher FPR. A similar trend appears for the AE model, where the FL AE outperforms the non-FL AE in FPR. Comparing the two federated models, the unsupervised FL AE outperforms the supervised FL DNN, achieving a lower FPR. | |

| Anomaly detection based on LSTM and autoencoders using federated learning in smart electric grid [27] | Federated deep learning for smart grid anomaly detection. | Unsupervised [LSTM + Autoencoder (AE)] | This study applies an unsupervised LSTM-Autoencoder model for anomaly detection in a smart electric grid system. The experiment compares two anomaly detection standards: Mean Standard Deviation (MSD) and Median Absolute Deviation (MAD). Homomorphic encryption (HE) is applied to prevent sensitive data exposure. The models are tested with different threshold values (K). All models perform best at K = 5 with a 128-bit HE key. Overall, MSD outperforms MAD across all performance metrics. | Mean Standard Deviation (MSD), K = 5, HE = 128 bits: Accuracy: 98% Precision: 97% Recall: 98% F1: 97%. Median Absolute Deviation (MAD), K = 5, HE = 128 bits: Accuracy: 79% Precision: 85% Recall: 79% F1: 78%. |

| Anomaly Detection through Unsupervised Federated Learning [21] | Unsupervised anomaly detection on decentralised, non-IID edge data via FL. | Unsupervised [Autoencoder (AE)] | This experiment proposes an FL method that uses community detection to improve the accuracy of anomaly detection. First, an OC-SVM model is used to group the clients based on their data characteristics. Once the clients are grouped into distinct communities, the members of each community collaborate to train an autoencoder model under federated settings for anomaly detection. The study demonstrates the feasibility of community-based FL, in which clients are grouped into clusters or communities based on their characteristics. | |

| Effectively detecting and diagnosing distributed multivariate time series anomalies via Unsupervised Federated Hypernetwork [26] | Federated anomaly detection and localisation for distributed multivariate time series. | Unsupervised [self-proposed uFedHy-DisMTSADD model] | The proposed Unsupervised Federated Hypernetwork Method for Distributed Multivariate Time-Series Anomaly Detection and Diagnosis (uFedHy-DisMTSADD) allows collaborative model training while preserving the data privacy of each client. The core component of this model is the Federated Hypernetwork Architecture, which addresses data heterogeneity and fluctuations in distributed environments. The model integrates the Series-Conversion Normalisation Transformer (SC Nor-Transformer), which improves the accuracy of anomaly detection by enhancing the temporal dependence of subsequences. The SC Nor-Transformer can also handle timing biases that arise during model aggregation, which helps to improve the robustness of the FL system. | Compared with baseline models, the proposed model achieves an average F1-score increase of 9.19% and an average AUROC increase of 2.41%. |

| Semi-supervised federated learning for collaborative security threat detection in control system for distributed power generation [22] | Semi-supervised intrusion detection in distributed power systems under privacy constraints. | Semi-supervised [Federated Uncertainty-aware Pseudo-label Selection (FedUPS)] | The proposed Federated Uncertainty-aware Pseudo-label Selection (FedUPS) framework combines semi-supervised learning with Federated Learning for anomaly detection in security systems. Convolutional Neural Networks (CNNs) are used in this framework to identify security threats in distributed power-generation systems. The integrated Uncertainty-aware Pseudo-label Selection (UPS) module lets the model handle unlabelled data effectively while ensuring the credibility of pseudo-labels. The framework both enhances the accuracy of the anomaly detection system and preserves data privacy. However, its communication and computational overhead rises as the number of clients increases, which may limit the scalability of the model in real-world applications. | FedUPS Accuracy: 86.47% Precision: 91.12% Recall: 89.86% F1: 86.57% |

| FedKD-IDS: A robust intrusion detection system using knowledge distillation-based semi-supervised federated learning and anti-poisoning attack mechanism [23] | Robust FL-based IoT intrusion detection tackling Non-IID data, limited labels, and collaborative poisoning threats. | Semi-supervised [FedKD-IDS] | The proposed FedKD-IDS framework combines semi-supervised Federated Learning (SSFL) with knowledge distillation (KD) for intrusion detection systems (IDS). The method can handle both labelled and unlabelled data, so it can learn from diverse datasets without extensive manual labelling. The KD mechanism allows the FL-based models to share logits instead of model weights, which can significantly reduce the communication overhead and enhance data privacy. | Malicious Collaborator Rate: 50%. FedKD-IDS Accuracy: 79.09% Precision: 79.09% Recall: 73.14% F1: 75.55%; SSFL Accuracy: 19.86% Precision: 19.51% Recall: 15.70% F1: 17.40% |

| FedMSE: Semi-supervised federated learning approach for IoT network intrusion detection [24] | Secure IoT intrusion detection under heterogeneity via SAE-CEN and MSE-aware aggregation. | Semi-supervised | The proposed FedMSE framework uses a hybrid model that combines a Shrink Autoencoder (SAE) and a centroid one-class classifier (CEN) for IoT network intrusion detection. The SAE handles feature representation by compressing high-dimensional network data into a lower-dimensional latent space. The CEN identifies anomalies by measuring the distance of data points from the centroid of normal data in the latent space. The framework applies semi-supervised learning: the model is trained on normal data, and data that deviates from the normal data is categorised as anomalous. The MSEAvg aggregation method is used for the global model update. MSEAvg assigns weights to local models based on their reconstruction error (MSE), so that models with lower MSE are given higher priority in global model aggregation. This can improve global model accuracy because the global model is less influenced by poorly performing clients. The experimental results show that the proposed FedMSE algorithm performs better than the same model aggregated with FedAvg or FedProx. However, a limitation of the proposed solution is the computational overhead of MSEAvg: the server must calculate the MSE for every client in each global aggregation round, which increases the server-side computational load. | SAE-CEN (accuracy) FedAvg: 96.93 ± 0.70 FedProx: 97.28 ± 0.84 FedMSE: 97.3 ± 0.49 |
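
The MSE-aware aggregation idea summarised for FedMSE [24] can be illustrated with a minimal Python sketch. The table only states that local models with lower reconstruction error receive higher priority; the inverse-MSE weighting used here, the function names (`mse_weighted_average`, `uniform_average`) and the toy parameter vectors are assumptions made for illustration, not the formula or code from the original study.

```python
from typing import List, Sequence


def mse_weighted_average(
    client_params: Sequence[Sequence[float]],
    client_mse: Sequence[float],
    eps: float = 1e-12,
) -> List[float]:
    """Aggregate flat client parameter vectors, favouring low-MSE clients.

    Assumption: each client's coefficient is proportional to 1 / MSE.
    """
    if not client_params or len(client_params) != len(client_mse):
        raise ValueError("need exactly one MSE value per client model")
    inverse = [1.0 / (mse + eps) for mse in client_mse]
    total = sum(inverse)
    coeffs = [value / total for value in inverse]  # coefficients sum to 1
    dim = len(client_params[0])
    return [
        sum(c * params[i] for c, params in zip(coeffs, client_params))
        for i in range(dim)
    ]


def uniform_average(client_params: Sequence[Sequence[float]]) -> List[float]:
    """Equal-weight baseline (FedAvg with identically sized client datasets)."""
    n = len(client_params)
    return [sum(column) / n for column in zip(*client_params)]


# Hypothetical toy data: two well-fitted clients and one poorly fitted client.
clients = [[1.0, 1.0], [1.2, 0.8], [5.0, -3.0]]
mse = [0.01, 0.02, 0.50]

global_weighted = mse_weighted_average(clients, mse)
global_uniform = uniform_average(clients)
```

In this toy case the poorly fitted third client pulls the equal-weight average far from the other two, while the MSE-weighted result stays close to them. The sketch also makes the stated limitation visible: the server needs an MSE value for every client in every round before it can aggregate.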

| Keyword | Boolean Search String |

|---|---|

| Federated Learning | “Federated Learning” OR “FL” OR “Decentralized Learning” OR “Distributed Federated Learning” |

| Anomaly Detection | “Anomaly Detection” OR “Intrusion Detection” OR “Outlier Detection” OR “Fault Detection” |

| Scalability | “Scalability” OR “Elasticity” OR “Load Adaptability” |

| Real-time Performance | “Real-time performance” OR “Low Latency” OR “Response Time” |

| Dynamic Server Clusters | “Dynamic Server Clusters” OR “Adaptive Server Network” OR “Scalable Nodes” OR “Dynamic Infrastructure” |

| Benchmarking Framework | “Benchmarking framework” OR “Performance Evaluation” OR “Evaluation Metrics” OR “Standardized Assessment” OR “FL Benchmark” OR “Anomaly Detection Metrics” |
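
As a rough illustration of how the keyword groups above could be turned into a database query, the sketch below joins the synonyms in each group with OR and then joins the selected groups with AND. Combining groups with AND, the choice of groups for RQ1, and the helper names (`or_group`, `build_query`) are assumptions; the review does not state the exact final query strings.

```python
from typing import Dict, List

# Keyword groups copied from the table above (a subset, for brevity).
KEYWORD_GROUPS: Dict[str, List[str]] = {
    "Federated Learning": [
        "Federated Learning", "FL", "Decentralized Learning",
        "Distributed Federated Learning",
    ],
    "Anomaly Detection": [
        "Anomaly Detection", "Intrusion Detection", "Outlier Detection",
        "Fault Detection",
    ],
    "Scalability": ["Scalability", "Elasticity", "Load Adaptability"],
    "Real-time Performance": [
        "Real-time performance", "Low Latency", "Response Time",
    ],
}


def or_group(terms: List[str]) -> str:
    """Quote each term and join the synonyms of one concept with OR."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(concepts: List[str]) -> str:
    """Join the OR-groups of the chosen concepts with AND (an assumption)."""
    return " AND ".join(or_group(KEYWORD_GROUPS[name]) for name in concepts)


# Hypothetical query for RQ1 (communication overhead, scalability, real time).
rq1_query = build_query(
    ["Federated Learning", "Anomaly Detection", "Scalability",
     "Real-time Performance"]
)
print(rq1_query)
```

Individual databases differ in field syntax and wildcard support, so a string built this way would normally need adapting before use.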

| Inclusion | Criteria | Exclusion |

|---|---|---|

| Journal and conference | Publication Type | White paper, thesis, dissertation |

| 2020–2025 | Publication Year | Before 2020 |

| English | Publication Language | Non-English |

| Related | Related to RQs | Non-Related |

| Database | Round 1: All | Round 2: RQ1 | Round 2: RQ2 | Round 2: RQ3 | Round 3: RQ1 | Round 3: RQ2 | Round 3: RQ3 |

|---|---|---|---|---|---|---|---|

| Scopus | 323 | 57 | 86 | 50 | 6 | 14 | 11 |

| IEEE | 723 | 29 | 86 | 47 | 3 | 1 | 0 |

| ArXiv | 86 | 9 | 12 | 9 | 2 | 4 | 2 |

| Total per RQ | 1132 | 95 | 184 | 106 | 11 | 19 | 13 |

| Total per round | 1132 | 385 | | | 43 | | |
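
The totals in the selection table can be cross-checked with a short Python sketch. The per-database counts are copied from the table; the dictionary layout and variable names are illustrative assumptions.

```python
# Paper counts per database: Round 1 (all), Round 2 and Round 3 per RQ1..RQ3.
counts = {
    "Scopus": {"round1": 323, "round2": [57, 86, 50], "round3": [6, 14, 11]},
    "IEEE": {"round1": 723, "round2": [29, 86, 47], "round3": [3, 1, 0]},
    "ArXiv": {"round1": 86, "round2": [9, 12, 9], "round3": [2, 4, 2]},
}

# Round 1 total across databases.
round1_total = sum(row["round1"] for row in counts.values())

# Column-wise totals per research question for Rounds 2 and 3.
round2_per_rq = [sum(col) for col in zip(*(r["round2"] for r in counts.values()))]
round3_per_rq = [sum(col) for col in zip(*(r["round3"] for r in counts.values()))]

round2_total = sum(round2_per_rq)
round3_total = sum(round3_per_rq)

print(round1_total, round2_per_rq, round2_total, round3_per_rq, round3_total)
```

The computed sums agree with the totals reported in the table: 1132 papers in Round 1, 385 in Round 2 and 43 in Round 3.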

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lim, L.-H.; Ong, L.-Y.; Leow, M.-C. Federated Learning for Anomaly Detection: A Systematic Review on Scalability, Adaptability, and Benchmarking Framework. Future Internet 2025, 17, 375. https://doi.org/10.3390/fi17080375
