Patient-Oriented Smart Applications to Support the Diagnosis, Rehabilitation, and Care of Patients with Parkinson’s: An Umbrella Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Objective and Research Questions

- RQ1: What measurement and interaction technologies are being used to develop patient-oriented smart applications to support healthcare provision for PwP?

- RQ2: What types of patient-oriented smart applications are being proposed?

- RQ3: What is the effectiveness of the proposed smart applications?

- RQ4: What limitations and open issues of current research were identified by the existing reviews?

2.2. Literature Search

- Parkinson’s disease: Parkinson, neurodegenerative disorder, neurological disorder.

- Smart applications: smart, remote, computerized, ehealth, digital, mobile, sensor, biosensor, wearable, artificial intelligence, machine learning, deep learning, information technologies, and communication technologies.

- Review: review, secondary and systematic mapping.

2.3. Inclusion and Exclusion Criteria

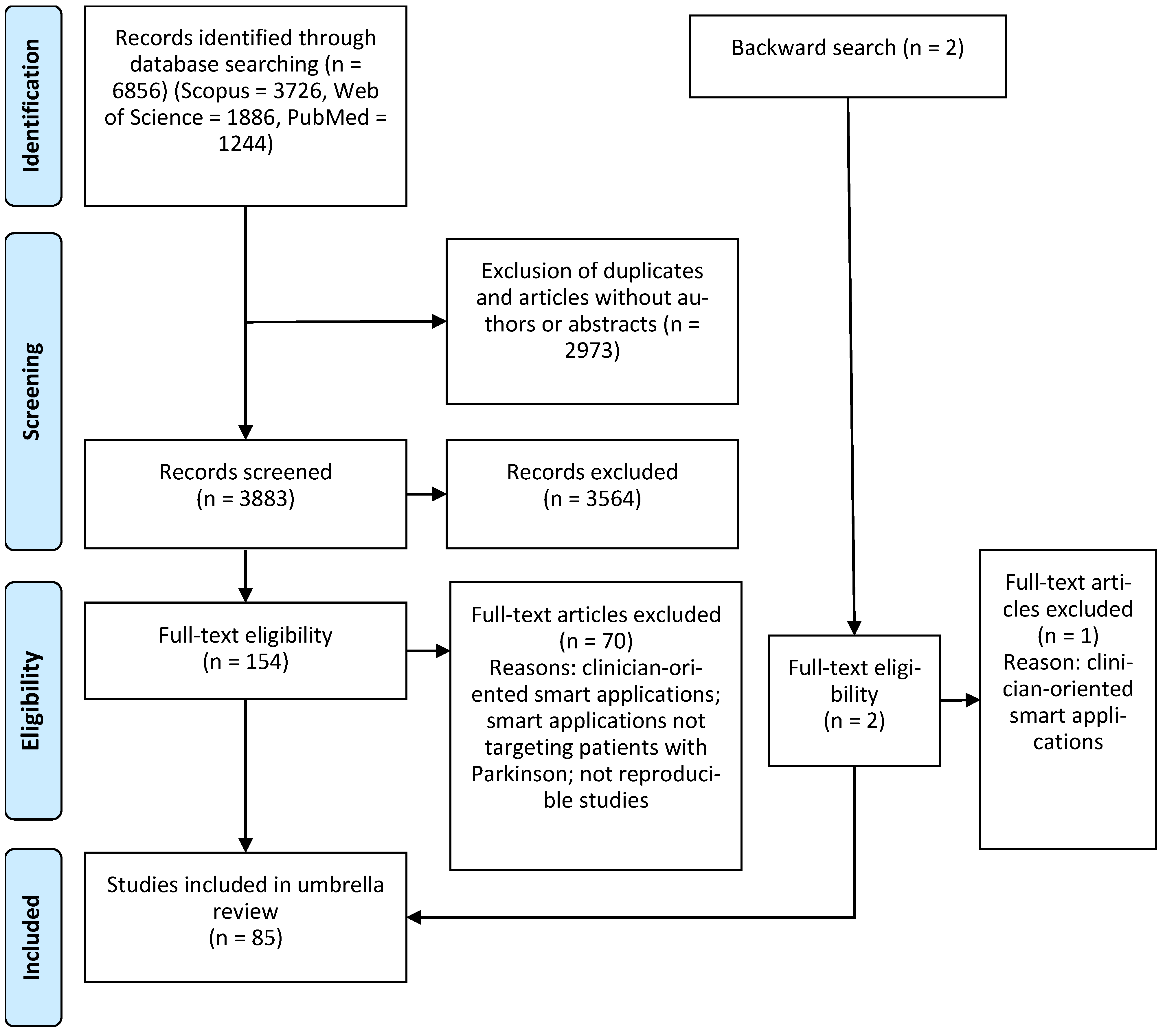

2.4. Identification Process

2.5. Quality Assessment

2.6. Data Extraction

2.7. Data Synthesis

3. Results

3.1. Identification of Studies

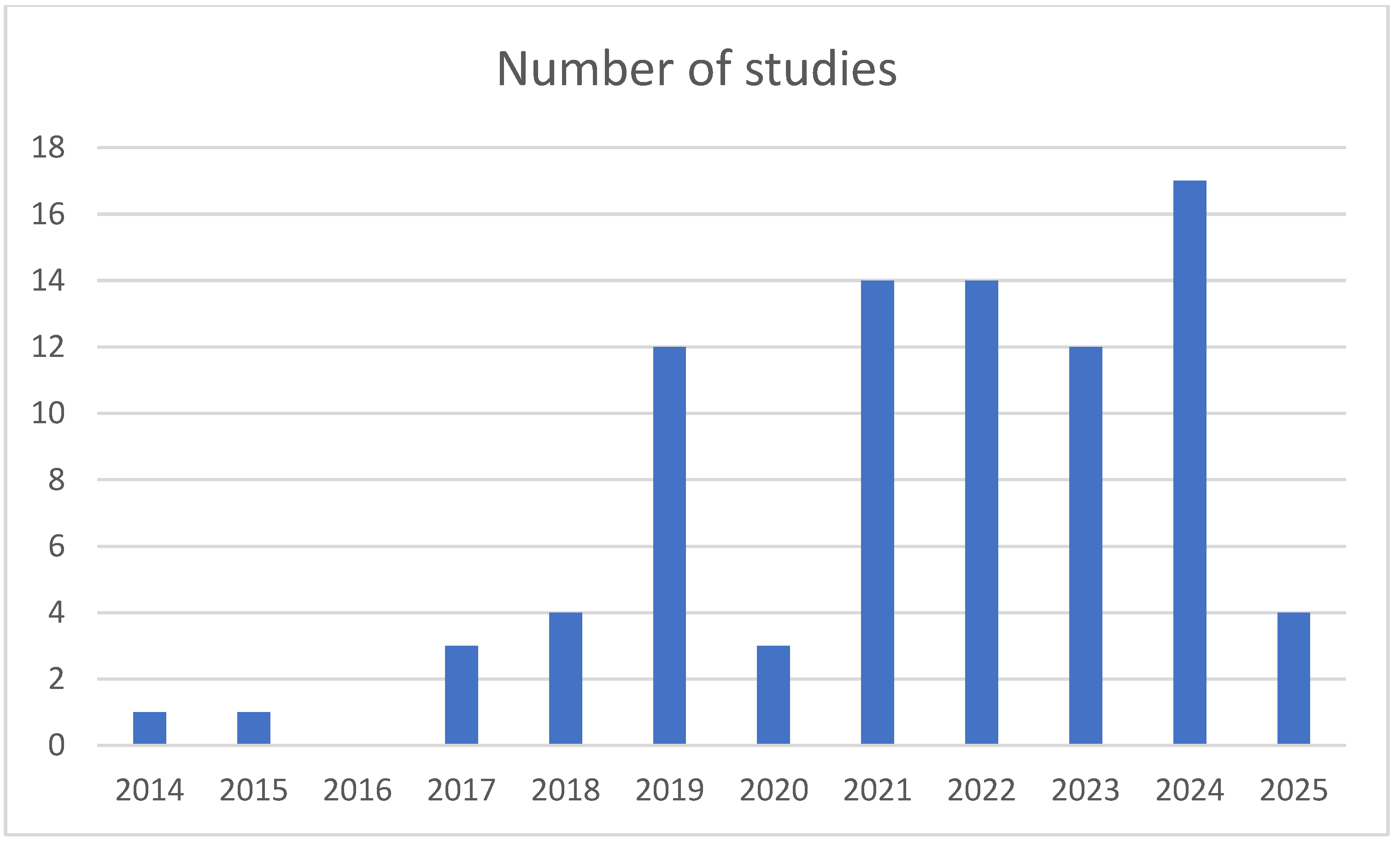

3.2. Characteristics of Included Studies

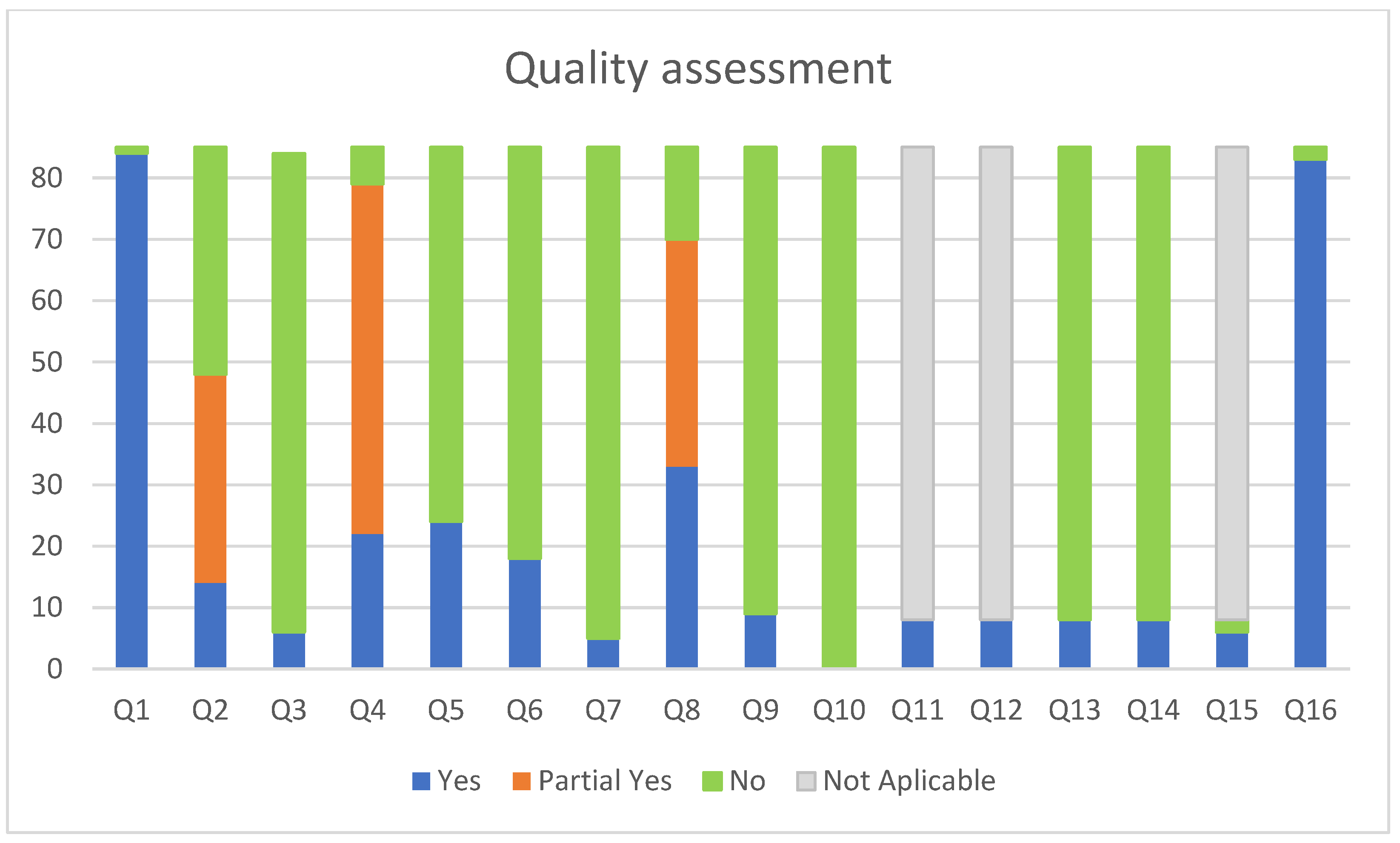

3.3. Quality Assessment

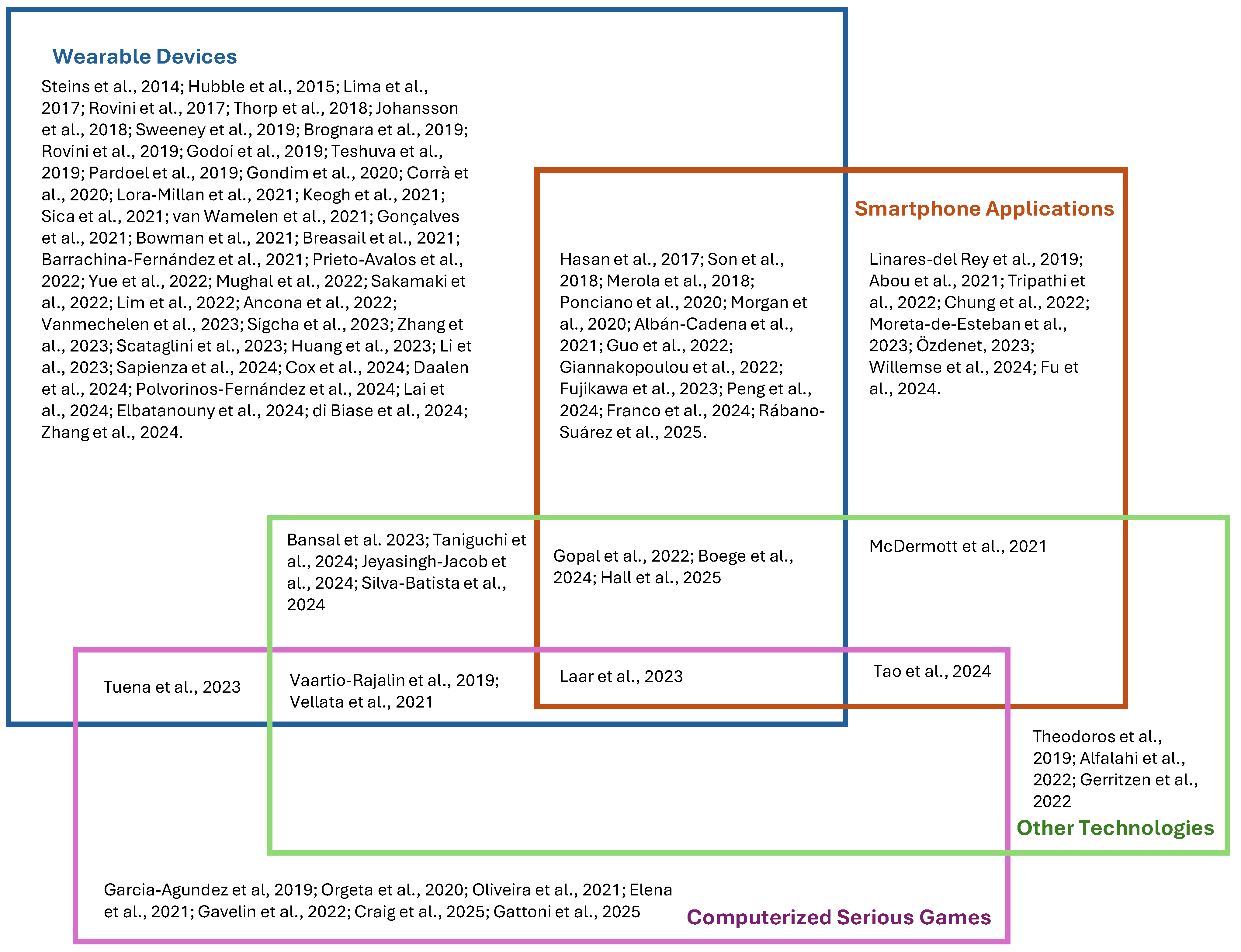

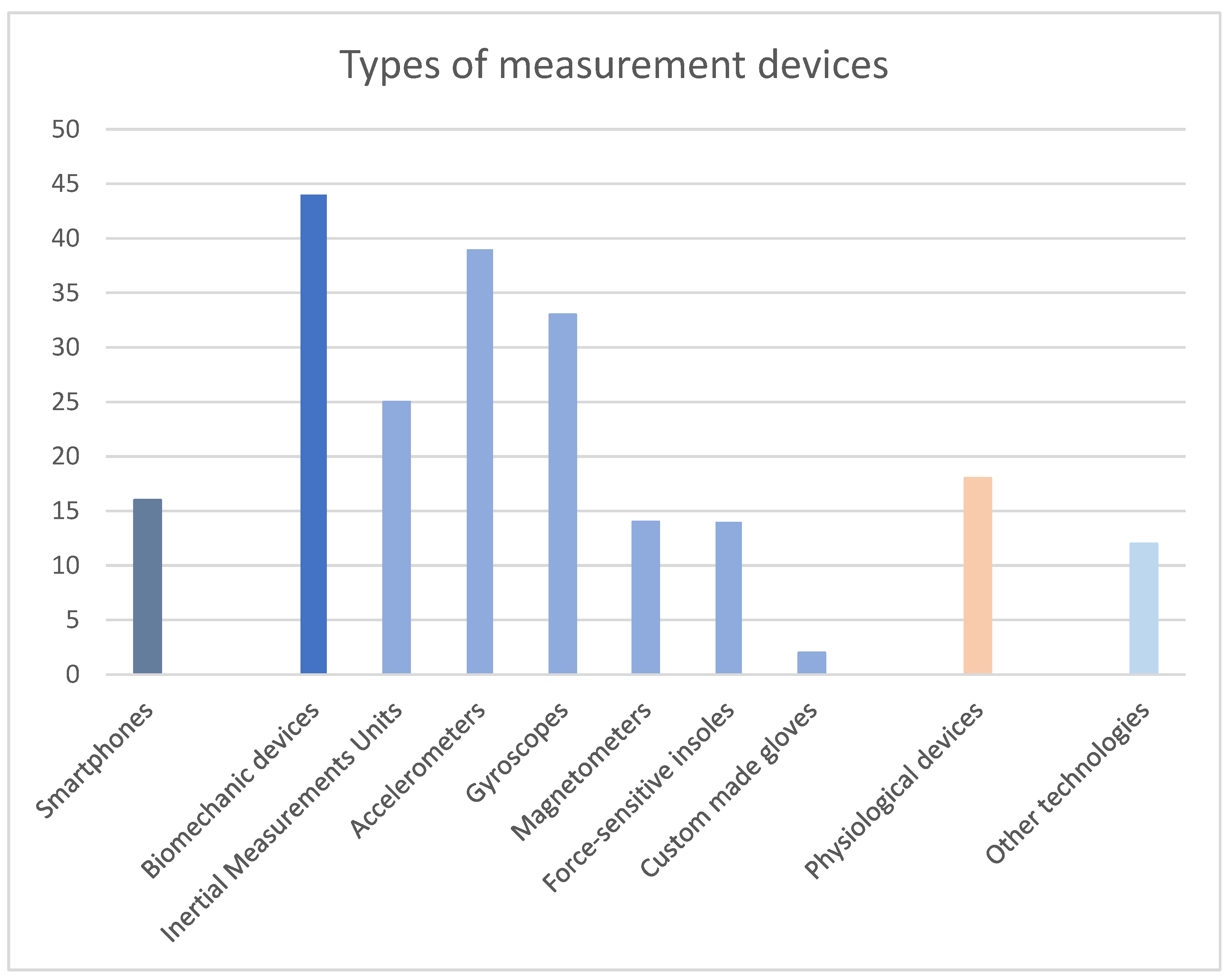

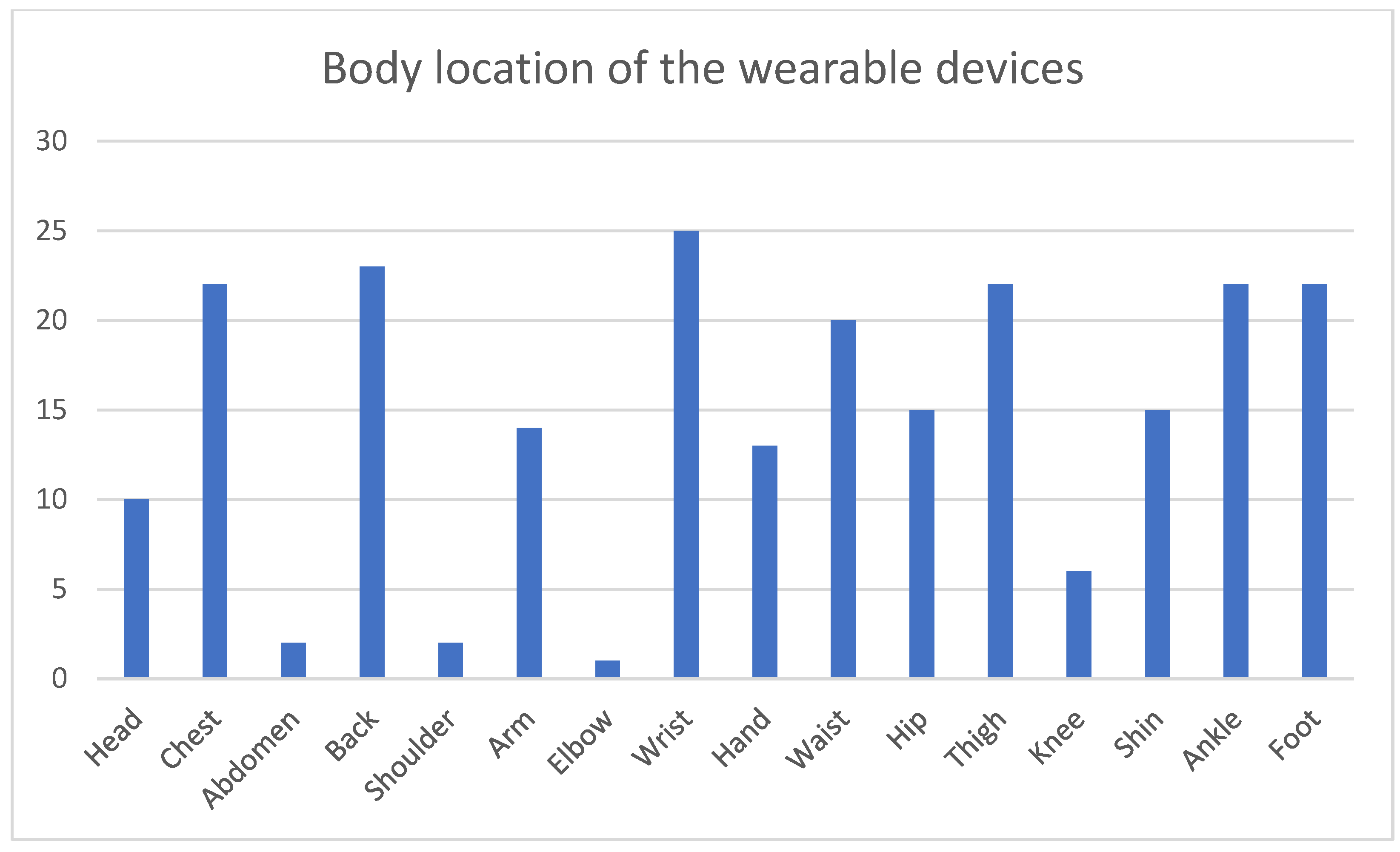

3.4. Measurement and Interaction Technologies

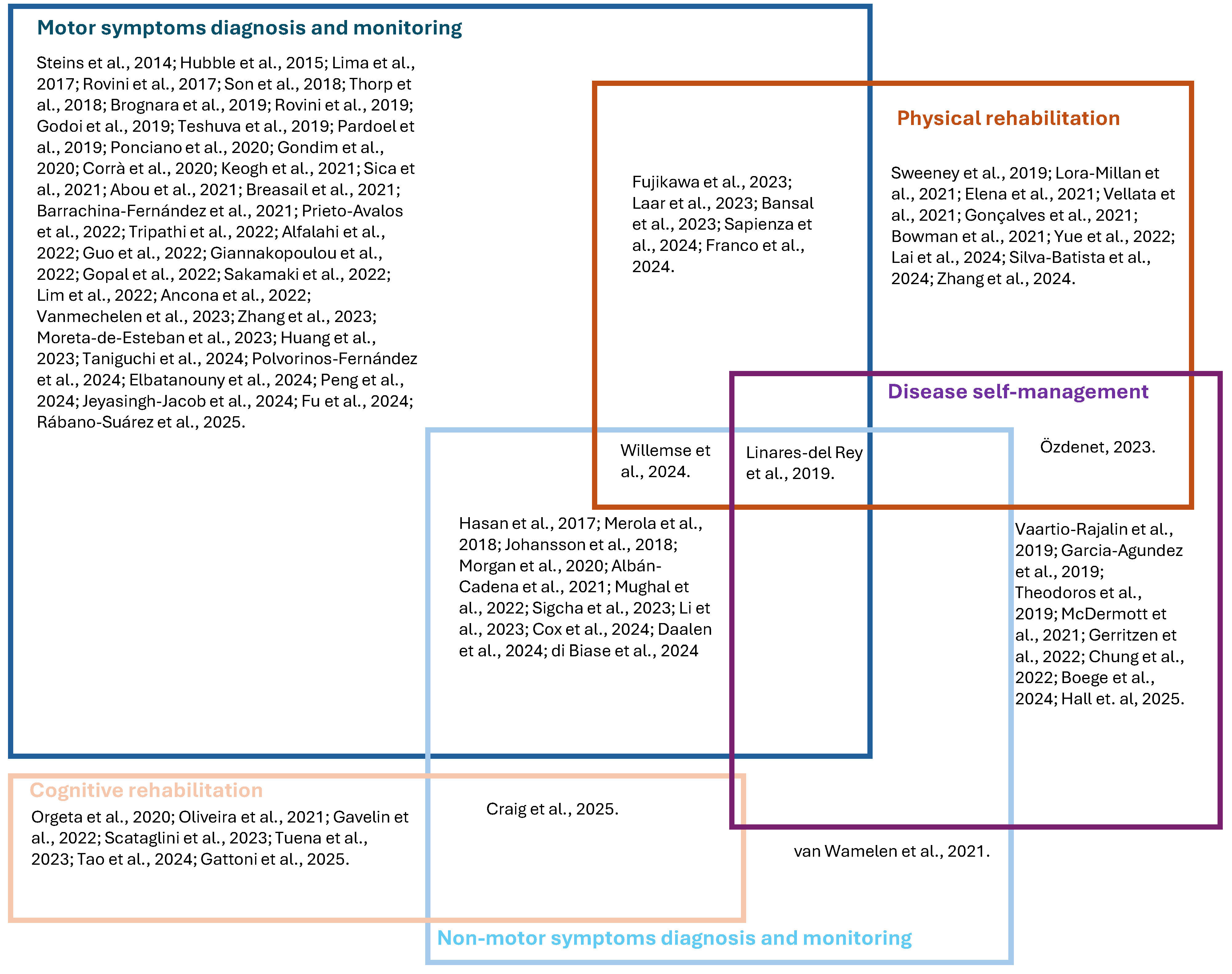

3.5. Application Areas

3.5.1. Patients’ Diagnosis and Monitoring Based on Motor Clinical Variables

3.5.2. Patients’ Diagnosis and Monitoring Based on Non-Motor Clinical Variables

3.5.3. Physical Rehabilitation

3.5.4. Cognitive Rehabilitation

3.5.5. Disease Management

3.6. Challenges and Open Issues

4. Discussion

4.1. Measurement and Interaction Technologies

4.2. Types of Smart Applications

4.3. Effectiveness of the Proposed Smart Applications

4.4. Limitations and Open Issues in Current Research

4.4.1. Experimental Design

4.4.2. Clinical Viability

4.4.3. Acceptability

4.4.4. Regulatory Conformity

4.5. Limitations of the Umbrella Review

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Dorsey, E.R.; Sherer, T.; Okun, M.S.; Bloem, B.R. The emerging evidence of the Parkinson pandemic. J. Park. Dis. 2018, 8, S3–S8. [Google Scholar] [CrossRef]

- Chaudhuri, K.R.; Azulay, J.P.; Odin, P.; Lindvall, S.; Domingos, J.; Alobaidi, A.; Kandukuri, P.L.; Chaudhari, V.S.; Parra, J.C.; Yamazaki, T.; et al. Economic burden of Parkinson’s disease: A multinational, real-world, cost-of-illness study. Drugs Real World Outcomes 2024, 11, 1–11. [Google Scholar] [CrossRef]

- Dorsey, E.; Bloem, B.R. The Parkinson pandemic—A call to action. JAMA Neurol. 2018, 75, 9–10. [Google Scholar] [CrossRef]

- Stocchi, F.; Bravi, D.; Emmi, A.; Antonini, A. Parkinson disease therapy: Current strategies and future research priorities. Nat. Rev. Neurol. 2024, 20, 695–707. [Google Scholar] [CrossRef]

- Antonini, A.; Moro, E.; Godeiro, C.; Reichmann, H. Medical and surgical management of advanced Parkinson’s disease. Mov. Disord. 2018, 33, 900–908. [Google Scholar] [CrossRef] [PubMed]

- Samson, E.; Noseworthy, M.D. A review of diagnostic imaging approaches to assessing Parkinson’s disease. Brain Disord. 2022, 6, 100037. [Google Scholar] [CrossRef]

- Wang, J.; Xue, L.; Jiang, J.; Liu, F.; Wu, P.; Lu, J.; Zhang, H.; Bao, W.; Xu, Q.; Ju, Z.; et al. Diagnostic performance of artificial intelligence-assisted PET imaging for Parkinson’s disease: A systematic review and meta-analysis. NPJ Digit. Med. 2024, 7, 17. [Google Scholar] [CrossRef]

- Grigas, O.; Maskeliunas, R.; Damaševičius, R. Early detection of dementia using artificial intelligence and multimodal features with a focus on neuroimaging: A systematic literature review. Health Technol. 2024, 14, 201–237. [Google Scholar] [CrossRef]

- Ghaderi, S.; Mohammadi, M.; Sayehmiri, F.; Mohammadi, S.; Tavasol, A.; Rezaei, M.; Ghalyanchi-Langeroudi, A. Machine learning approaches to identify affected brain regions in movement disorders using MRI data: A systematic review and diagnostic meta-analysis. J. Magn. Reson. Imaging 2024, 60, 2518–2546. [Google Scholar] [CrossRef]

- Bacon, E.J.; He, D.; Achi, N.; Wang, L.; Li, H.; Yao-Digba, P.D.; Monkam, P.; Qi, S. Neuroimage analysis using artificial intelligence approaches: A systematic review. Med. Biol. Eng. Comput. 2024, 62, 2599–2627. [Google Scholar] [CrossRef]

- Aggarwal, N.; Saini, B.S.; Gupta, S. Role of artificial intelligence techniques and neuroimaging modalities in detection of Parkinson’s disease: A systematic review. Cogn. Comput. 2024, 16, 2078–2115. [Google Scholar] [CrossRef]

- Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.J.H.W. Cochrane Handbook for Systematic Reviews of Interventions, 2nd ed.; Wiley: Hoboken, NJ, USA, 2019. [Google Scholar]

- Aromataris, E.; Fernandez, R.; Godfrey, C.M.; Holly, C.; Khalil, H.; Tungpunkom, P. Summarizing systematic reviews: Methodological development, conduct and reporting of an umbrella review approach. JBI Evid. Implement. 2015, 13, 132–140. [Google Scholar] [CrossRef] [PubMed]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; Prisma Group. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Int. J. Surg. 2010, 8, 336–341. [Google Scholar] [CrossRef]

- Shea, B.J.; Bouter, L.M.; Peterson, J.; Boers, M.; Andersson, N.; Ortiz, Z.; Ramsay, T.; Bai, A.; Shukla, V.K.; Grimshaw, J.M. External validation of a measurement tool to assess systematic reviews (AMSTAR). PLoS ONE 2007, 2, e1350. [Google Scholar] [CrossRef]

- Shea, B.J.; Reeves, B.C.; Wells, G.; Thuku, M.; Hamel, C.; Moran, J.; Moher, D.; Tugwell, P.; Welch, V.; Kristjansson, E.; et al. AMSTAR 2: A critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017, 358, j4008. [Google Scholar] [CrossRef]

- Aljarallah, N.A.; Dutta, A.K.; Sait, A.R.W. A systematic review of genetics-and molecular-pathway-based machine learning models for neurological disorder diagnosis. Int. J. Mol. Sci. 2024, 25, 6422. [Google Scholar] [CrossRef]

- Kaushal, A. A central role of stimulator of interferon genes’ adaptor protein in defensive immune response. Immunol. Res. 2025, 73, 39. [Google Scholar] [CrossRef]

- Kaur, A.; Mittal, M.; Bhatti, J.S.; Thareja, S.; Singh, S. A systematic literature review on the significance of deep learning and machine learning in predicting Alzheimer’s disease. Artif. Intell. Med. 2024, 154, 102928. [Google Scholar] [CrossRef]

- Mahavar, A.; Patel, A.; Patel, A. A Comprehensive Review on Deep Learning Techniques in Alzheimer’s Disease Diagnosis. Curr. Top. Med. Chem. 2025, 25, 335–349. [Google Scholar] [CrossRef]

- Dennis, A.G.P.; Strafella, A.P. The identification of cognitive impairment in Parkinson’s disease using biofluids, neuroimaging, and artificial intelligence. Front. Neurosci. 2024, 18, 1446878. [Google Scholar] [CrossRef]

- Dzialas, V.; Doering, E.; Eich, H.; Strafella, A.P.; Vaillancourt, D.E.; Simonyan, K.; van Eimeren, T.; International Parkinson Movement Disorders Society-Neuroimaging Study Group. Houston, we have AI problem! quality issues with neuroimaging-based artificial intelligence in Parkinson’s disease: A systematic review. Mov. Disord. 2024, 39, 2130–2143. [Google Scholar] [CrossRef]

- Lamba, R.; Gulati, T.; Alharbi, H.F.; Jain, A. A hybrid system for Parkinson’s disease diagnosis using machine learning techniques. Int. J. Speech Technol. 2022, 25, 583–593. [Google Scholar] [CrossRef]

- Bruno, M.K.; Dhall, R.; Duquette, A.; Haq, I.U.; Honig, L.S.; Lamotte, G.; Mari, Z.; McFarland, N.R.; Montaser-Kouhsari, L.; Rodriguez-Porcel, F.; et al. A general neurologist’s practical diagnostic algorithm for atypical parkinsonian disorders: A consensus statement. Neurol. Clin. Pract. 2024, 14, e200345. [Google Scholar] [CrossRef]

- Pratihar, R.; Sankar, R. Advancements in Parkinson’s Disease Diagnosis: A Comprehensive Survey on Biomarker Integration and Machine Learning. Computers 2024, 13, 293. [Google Scholar] [CrossRef]

- Sankineni, S.; Saraswat, A.; Suchetha, M.; Aakur, S.N.; Sehastrajit, S.; Dhas, D.E. An insight on recent advancements and future perspectives in detection techniques of Parkinson’s disease. Evol. Intell. 2024, 17, 1715–1731. [Google Scholar] [CrossRef]

- Bawa, A.; Banitsas, K.; Abbod, M. A review on the use of Microsoft Kinect for gait abnormality and postural disorder assessment. J. Healthc. Eng. 2021, 2021, 4360122. [Google Scholar] [CrossRef] [PubMed]

- Anikwe, C.V.; Nweke, H.F.; Ikegwu, A.C.; Egwuonwu, C.A.; Onu, F.U.; Alo, U.R.; Teh, Y.W. Mobile and wearable sensors for data-driven health monitoring system: State-of-the-art and future prospect. Expert Syst. Appl. 2022, 202, 117362. [Google Scholar] [CrossRef]

- Yen, J.M.; Lim, J.H. A clinical perspective on bespoke sensing mechanisms for remote monitoring and rehabilitation of neurological diseases: Scoping review. Sensors 2023, 23, 536. [Google Scholar] [CrossRef]

- Ngo, Q.C.; Motin, M.A.; Pah, N.D.; Drotár, P.; Kempster, P.; Kumar, D. Computerized analysis of speech and voice for Parkinson’s disease: A systematic review. Computer Methods Programs Biomed. 2022, 226, 107133. [Google Scholar] [CrossRef]

- Lam, W.W.; Tang, Y.M.; Fong, K.N. A systematic review of the applications of markerless motion capture (MMC) technology for clinical measurement in rehabilitation. J. Neuroeng. Rehabil. 2023, 20, 57. [Google Scholar] [CrossRef]

- Islam, M.A.; Majumder, M.Z.H.; Hussein, M.A.; Hossain, K.M.; Miah, M.S. A review of machine learning and deep learning algorithms for Parkinson’s disease detection using handwriting and voice datasets. Heliyon 2024, 10, e25469. [Google Scholar] [CrossRef]

- Park, K.W.; Mirian, M.S.; McKeown, M.J. Artificial intelligence-based video monitoring of movement disorders in the elderly: A review on current and future landscapes. Singap. Med. J. 2024, 65, 141–149. [Google Scholar] [CrossRef] [PubMed]

- Pereira, C.R.; Pereira, D.R.; Weber, S.A.; Hook, C.; De Albuquerque, V.H.C.; Papa, J.P. A survey on computer-assisted Parkinson’s disease diagnosis. Artif. Intell. Med. 2019, 95, 48–63. [Google Scholar] [CrossRef] [PubMed]

- Chudzik, A.; Śledzianowski, A.; Przybyszewski, A.W. Machine learning and digital biomarkers can detect early stages of neurodegenerative diseases. Sensors 2024, 24, 1572. [Google Scholar] [CrossRef] [PubMed]

- Sánchez-Fernández, L.P. Biomechanics of Parkinson’s Disease with Systems Based on Expert Knowledge and Machine Learning: A Scoping Review. Computation 2024, 12, 230. [Google Scholar] [CrossRef]

- Steins, D.; Dawes, H.; Esser, P.; Collett, J. Wearable accelerometry-based technology capable of assessing functional activities in neurological populations in community settings: A systematic review. J. Neuroeng. Rehabil. 2014, 11, 36. [Google Scholar] [CrossRef]

- Hubble, R.P.; Naughton, G.A.; Silburn, P.A.; Cole, M.H. Wearable sensor use for assessing standing balance and walking stability in people with Parkinson’s disease: A systematic review. PLoS ONE 2015, 10, e0123705. [Google Scholar] [CrossRef]

- Silva de Lima, A.L.; Evers, L.J.; Hahn, T.; Bataille, L.; Hamilton, J.L.; Little, M.A.; Okuma, Y.; Bloem, B.; Faber, M.J. Freezing of gait and fall detection in Parkinson’s disease using wearable sensors: A systematic review. J. Neurol. 2017, 264, 1642–1654. [Google Scholar] [CrossRef]

- Rovini, E.; Maremmani, C.; Cavallo, F. How wearable sensors can support Parkinson’s disease diagnosis and treatment: A systematic review. Front. Neurosci. 2017, 11, 555. [Google Scholar] [CrossRef]

- Hasan, H.; Athauda, D.S.; Foltynie, T.; Noyce, A.J. Technologies assessing limb bradykinesia in Parkinson’s disease. J. Park. Dis. 2017, 7, 65–77. [Google Scholar] [CrossRef]

- Son, H.; Park, W.S.; Kim, H. Mobility monitoring using smart technologies for Parkinson’s disease in free-living environment. Collegian 2018, 25, 549–560. [Google Scholar] [CrossRef]

- Thorp, J.E.; Adamczyk, P.G.; Ploeg, H.L.; Pickett, K.A. Monitoring motor symptoms during activities of daily living in individuals with Parkinson’s disease. Front. Neurol. 2018, 9, 1036. [Google Scholar] [CrossRef] [PubMed]

- Merola, A.; Sturchio, A.; Hacker, S.; Serna, S.; Vizcarra, J.A.; Marsili, L.; Fasano, A.; Espay, A.J. Technology-based assessment of motor and nonmotor phenomena in Parkinson disease. Expert Rev. Neurother. 2018, 18, 825–845. [Google Scholar] [CrossRef] [PubMed]

- Johansson, D.; Malmgren, K.; Murphy, M.A. Wearable sensors for clinical applications in epilepsy, Parkinson’s disease, and stroke: A mixed-methods systematic review. J. Neurol. 2018, 265, 1740–1752. [Google Scholar] [CrossRef]

- Sweeney, D.; Quinlan, L.R.; Browne, P.; Richardson, M.; Meskell, P.; ÓLaighin, G. A technological review of wearable cueing devices addressing freezing of gait in Parkinson’s disease. Sensors 2019, 19, 1277. [Google Scholar] [CrossRef]

- Brognara, L.; Palumbo, P.; Grimm, B.; Palmerini, L. Assessing gait in Parkinson’s disease using wearable motion sensors: A systematic review. Diseases 2019, 7, 18. [Google Scholar] [CrossRef]

- Rovini, E.; Maremmani, C.; Cavallo, F. Automated systems based on wearable sensors for the management of Parkinson’s disease at home: A systematic review. Telemed. E-Health 2019, 25, 167–183. [Google Scholar] [CrossRef]

- Linares-Del Rey, M.; Vela-Desojo, L.; Cano-de La Cuerda, R. Mobile phone applications in Parkinson’s disease: A systematic review. Neurología 2019, 34, 38–54. [Google Scholar] [CrossRef]

- Godoi, B.B.; Amorim, G.D.; Quiroga, D.G.; Holanda, V.M.; Júlio, T.; Tournier, M.B. Parkinson’s disease and wearable devices, new perspectives for a public health issue: An integrative literature review. Rev. Da Assoc. Médica Bras. 2019, 65, 1413–1420. [Google Scholar] [CrossRef]

- Vaartio-Rajalin, H.; Rauhala, A.; Fagerström, L. Person-centered home-based rehabilitation for persons with Parkinson’s disease: A scoping review. Int. J. Nurs. Stud. 2019, 99, 103395. [Google Scholar] [CrossRef]

- Garcia-Agundez, A.; Folkerts, A.K.; Konrad, R.; Caserman, P.; Tregel, T.; Goosses, M.; Göbel, S.; Kalbe, E. Recent advances in rehabilitation for Parkinson’s Disease with Exergames: A Systematic Review. J. Neuroeng. Rehabil. 2019, 16, 17. [Google Scholar] [CrossRef]

- Theodoros, D.; Aldridge, D.; Hill, A.J.; Russell, T. Technology-enabled management of communication and swallowing disorders in Parkinson’s disease: A systematic scoping review. Int. J. Lang. Commun. Disord. 2019, 54, 170–188. [Google Scholar] [CrossRef]

- Teshuva, I.; Hillel, I.; Gazit, E.; Giladi, N.; Mirelman, A.; Hausdorff, J.M. Using wearables to assess bradykinesia and rigidity in patients with Parkinson’s disease: A focused, narrative review of the literature. J. Neural Transm. 2019, 126, 699–710. [Google Scholar] [CrossRef]

- Pardoel, S.; Kofman, J.; Nantel, J.; Lemaire, E.D. Wearable-sensor-based detection and prediction of freezing of gait in Parkinson’s disease: A review. Sensors 2019, 19, 5141. [Google Scholar] [CrossRef]

- Orgeta, V.; McDonald, K.R.; Poliakoff, E.; Hindle, J.V.; Clare, L.; Leroi, I. Cognitive training interventions for dementia and mild cognitive impairment in Parkinson’s disease. Cochrane Database Syst. Rev. 2020, 2, CD011961. [Google Scholar] [CrossRef] [PubMed]

- Ponciano, V.; Pires, I.M.; Ribeiro, F.R.; Marques, G.; Villasana, M.V.; Garcia, N.M.; Zdravevski, E.; Spinsante, S. Identification of diseases based on the use of inertial sensors: A systematic review. Electronics 2020, 9, 778. [Google Scholar] [CrossRef]

- de Oliveira Gondim, I.T.G.; de Souza, C.D.C.B.; Rodrigues, M.A.B.; Azevedo, I.M.; de Sales, M.D.G.W.; Lins, O.G. Portable accelerometers for the evaluation of spatio-temporal gait parameters in people with Parkinson’s disease: An integrative review. Arch. Gerontol. Geriatr. 2020, 90, 104097. [Google Scholar] [CrossRef]

- Morgan, C.; Rolinski, M.; McNaney, R.; Jones, B.; Rochester, L.; Maetzler, W.; Craddock, I.; Whone, A.L. Systematic review looking at the use of technology to measure free-living symptom and activity outcomes in Parkinson’s disease in the home or a home-like environment. J. Park. Dis. 2020, 10, 429–454. [Google Scholar] [CrossRef]

- Corrà, M.F.; Warmerdam, E.; Vila-Chã, N.; Maetzler, W.; Maia, L. Wearable health technology to quantify the functional impact of peripheral neuropathy on mobility in Parkinson’s disease: A systematic review. Sensors 2020, 20, 6627. [Google Scholar] [CrossRef]

- Lora-Millan, J.S.; Delgado-Oleas, G.; Benito-León, J.; Rocon, E. A review on wearable technologies for tremor suppression. Front. Neurol. 2021, 12, 700600. [Google Scholar] [CrossRef]

- de Oliveira, L.C.; Mendes, L.C.; de Lopes, R.A.; Carneiro, J.A.; Cardoso, A.; Júnior, E.A.; de Oliveira Andrade, A. A systematic review of serious games used for rehabilitation of individuals with Parkinson’s disease. Res. Biomed. Eng. 2021, 37, 849–865. [Google Scholar] [CrossRef]

- Keogh, A.; Argent, R.; Anderson, A.; Caulfield, B.; Johnston, W. Assessing the usability of wearable devices to measure gait and physical activity in chronic conditions: A systematic review. J. Neuroeng. Rehabil. 2021, 18, 138. [Google Scholar] [CrossRef] [PubMed]

- Sica, M.; Tedesco, S.; Crowe, C.; Kenny, L.; Moore, K.; Timmons, S.; Barton, J.; O’Flynn, B.; Komaris, D.S. Continuous home monitoring of Parkinson’s disease using inertial sensors: A systematic review. PLoS ONE 2021, 16, e0246528. [Google Scholar] [CrossRef] [PubMed]

- Elena, P.; Demetris, S.; Christina, M.; Marios, P. Differences between exergaming rehabilitation and conventional physiotherapy on quality of life in Parkinson’s disease: A systematic review and meta-analysis. Front. Neurol. 2021, 12, 683385. [Google Scholar] [CrossRef]

- van Wamelen, D.J.; Sringean, J.; Trivedi, D.; Carroll, C.B.; Schrag, A.E.; Odin, P.; Antonini, A.; Bloem, B.R.; Bhidayasiri, R.; Chaudhuri, K.R.; et al. Digital health technology for non-motor symptoms in people with Parkinson’s disease: Futile or future? Park. Relat. Disord. 2021, 89, 186–194. [Google Scholar] [CrossRef] [PubMed]

- Vellata, C.; Belli, S.; Balsamo, F.; Giordano, A.; Colombo, R.; Maggioni, G. Effectiveness of telerehabilitation on motor impairments, non-motor symptoms and compliance in patients with Parkinson’s disease: A systematic review. Front. Neurol. 2021, 12, 627999. [Google Scholar] [CrossRef]

- Abou, L.; Peters, J.; Wong, E.; Akers, R.; Dossou, M.S.; Sosnoff, J.J.; Rice, L.A. Gait and balance assessments using smartphone applications in Parkinson’s disease: A systematic review. J. Med. Syst. 2021, 45, 87. [Google Scholar] [CrossRef]

- McDermott, A.; Haberlin, C.; Moran, J. The use of ehealth to promote physical activity in people living with Parkinson’s disease: A systematic review. Physiother. Pract. Res. 2021, 42, 79–92. [Google Scholar] [CrossRef]

- Gonçalves, H.R.; Rodrigues, A.M.; Santos, C.P. Vibrotactile biofeedback devices in Parkinson’s disease: A narrative review. Med. Biol. Eng. Comput. 2021, 59, 1185–1199. [Google Scholar] [CrossRef]

- Bowman, T.; Gervasoni, E.; Arienti, C.; Lazzarini, S.G.; Negrini, S.; Crea, S.; Cattaneo, D.; Carrozza, M.C. Wearable devices for biofeedback rehabilitation: A systematic review and meta-analysis to design application rules and estimate the effectiveness on balance and gait outcomes in neurological diseases. Sensors 2021, 21, 3444. [Google Scholar] [CrossRef]

- Ó Breasail, M.; Biswas, B.; Smith, M.D.; Mazhar, M.K.A.; Tenison, E.; Cullen, A.; Lithander, F.; Roudaut, A.; Henderson, E.J. Wearable GPS and accelerometer technologies for monitoring mobility and physical activity in neurodegenerative disorders: A systematic review. Sensors 2021, 21, 8261. [Google Scholar] [CrossRef]

- Albán-Cadena, A.C.; Villalba-Meneses, F.; Pila-Varela, K.O.; Moreno-Calvo, A.; Villalba-Meneses, C.P.; Almeida-Galárraga, D.A. Wearable sensors in the diagnosis and study of Parkinson’s disease symptoms: A systematic review. J. Med. Eng. Technol. 2021, 45, 532–545. [Google Scholar] [CrossRef] [PubMed]

- Barrachina-Fernández, M.; Maitín, A.M.; Sánchez-Ávila, C.; Romero, J.P. Wearable technology to detect motor fluctuations in Parkinson’s disease patients: Current state and challenges. Sensors 2021, 21, 4188. [Google Scholar] [CrossRef] [PubMed]

- Prieto-Avalos, G.; Sánchez-Morales, L.N.; Alor-Hernández, G.; Sánchez-Cervantes, J.L. A review of commercial and non-commercial wearables devices for monitoring motor impairments caused by neurodegenerative diseases. Biosensors 2022, 13, 72. [Google Scholar] [CrossRef]

- Tripathi, S.; Malhotra, A.; Qazi, M.; Chou, J.; Wang, F.; Barkan, S.; Hellmers, N.; Henchcliffe, C.; Sarva, H. Clinical review of smartphone applications in Parkinson’s disease. Neurologist 2022, 27, 183–193. [Google Scholar] [CrossRef]

- Gavelin, H.M.; Domellöf, M.E.; Leung, I.; Neely, A.S.; Launder, N.H.; Nategh, L.; Finke, C.; Lampit, A. Computerized cognitive training in Parkinson’s disease: A systematic review and meta-analysis. Ageing Res. Rev. 2022, 80, 101671. [Google Scholar] [CrossRef]

- Alfalahi, H.; Khandoker, A.H.; Chowdhury, N.; Iakovakis, D.; Dias, S.B.; Chaudhuri, K.R.; Hadjileontiadis, L.J. Diagnostic accuracy of keystroke dynamics as digital biomarkers for fine motor decline in neuropsychiatric disorders: A systematic review and meta-analysis. Sci. Rep. 2022, 12, 7690. [Google Scholar] [CrossRef]

- Guo, C.C.; Chiesa, P.A.; de Moor, C.; Fazeli, M.S.; Schofield, T.; Hofer, K.; Belachew, S.; Scotland, A. Digital devices for assessing motor functions in mobility-impaired and healthy populations: Systematic literature review. J. Med. Internet Res. 2022, 24, e37683. [Google Scholar] [CrossRef]

- Li, X.; Chen, Z.; Yue, Y.; Zhou, X.; Gu, S.; Tao, J.; Guo, H.; Zhu, M.; Du, Q. Effect of wearable sensor-based exercise on musculoskeletal disorders in individuals with neurodegenerative diseases: A systematic review and meta-analysis. Front. Aging Neurosci. 2022, 14, 934844. [Google Scholar] [CrossRef]

- Giannakopoulou, K.M.; Roussaki, I.; Demestichas, K. Internet of things technologies and machine learning methods for Parkinson’s disease diagnosis, monitoring and management: A systematic review. Sensors 2022, 22, 1799. [Google Scholar] [CrossRef]

- Gerritzen, E.V.; Lee, A.R.; McDermott, O.; Coulson, N.; Orrell, M. Online peer support for people with Parkinson disease: Narrative synthesis systematic review. JMIR Aging 2022, 5, e35425. [Google Scholar] [CrossRef]

- Mughal, H.; Javed, A.R.; Rizwan, M.; Almadhor, A.S.; Kryvinska, N. Parkinson’s disease management via wearable sensors: A systematic review. IEEE Access 2022, 10, 35219–35237. [Google Scholar] [CrossRef]

- Gopal, A.; Hsu, W.Y.; Allen, D.D.; Bove, R. Remote assessments of hand function in neurological disorders: Systematic review. JMIR Rehabil. Assist. Technol. 2022, 9, e33157. [Google Scholar] [CrossRef]

- Sakamaki, T.; Furusawa, Y.; Hayashi, A.; Otsuka, M.; Fernandez, J. Remote patient monitoring for neuropsychiatric disorders: A scoping review of current trends and future perspectives from recent publications and upcoming clinical trials. Telemed. E-Health 2022, 28, 1235–1250. [Google Scholar] [CrossRef]

- Lim, A.C.Y.; Natarajan, P.; Fonseka, R.D.; Maharaj, M.; Mobbs, R.J. The application of artificial intelligence and custom algorithms with inertial wearable devices for gait analysis and detection of gait-altering pathologies in adults: A scoping review of literature. Digit. Health 2022, 8, 20552076221074128. [Google Scholar] [CrossRef]

- Lee, J.; Yeom, I.; Chung, M.L.; Kim, Y.; Yoo, S.; Kim, E. Use of mobile apps for self-care in people with Parkinson disease: Systematic review. JMIR Mhealth Uhealth 2022, 10, e33944. [Google Scholar] [CrossRef] [PubMed]

- Ancona, S.; Faraci, F.D.; Khatab, E.; Fiorillo, L.; Gnarra, O.; Nef, T.; Bassetti, C.L.A.; Bargiotas, P. Wearables in the home-based assessment of abnormal movements in Parkinson’s disease: A systematic review of the literature. J. Neurol. 2022, 269, 100–110. [Google Scholar] [CrossRef] [PubMed]

- Vanmechelen, I.; Haberfehlner, H.; De Vleeschhauwer, J.; Van Wonterghem, E.; Feys, H.; Desloovere, K.; Aerts, J.M.; Monbaliu, E. Assessment of movement disorders using wearable sensors during upper limb tasks: A scoping review. Front. Robot. AI 2023, 9, 1068413. [Google Scholar] [CrossRef] [PubMed]

- Sigcha, L.; Borzì, L.; Amato, F.; Rechichi, I.; Ramos-Romero, C.; Cárdenas, A.; Gascó, L.; Olmo, G. Deep learning and wearable sensors for the diagnosis and monitoring of Parkinson’s disease: A systematic review. Expert Syst. Appl. 2023, 229, 120541. [Google Scholar] [CrossRef]

- Zhang, W.; Sun, H.; Huang, D.; Zhang, Z.; Li, J.; Wu, C.; Sun, Y.; Gong, M.; Wang, Z.; Sun, C.; et al. Detection and prediction of freezing of gait with wearable sensors in Parkinson’s disease. Neurol. Sci. 2023, 45, 431–453. [Google Scholar] [CrossRef]

- Fujikawa, J.; Morigaki, R.; Yamamoto, N.; Nakanishi, H.; Oda, T.; Izumi, Y.; Takagi, Y. Diagnosis and treatment of tremor in Parkinson’s disease using mechanical devices. Life 2023, 13, 78. [Google Scholar] [CrossRef] [PubMed]

- Scataglini, S.; Van Dyck, Z.; Declercq, V.; Van Cleemput, G.; Struyf, N.; Truijen, S. Effect of music based therapy rhythmic auditory stimulation (ras) using wearable device in rehabilitation of neurological patients: A systematic review. Sensors 2023, 23, 5933. [Google Scholar] [CrossRef] [PubMed]

- Moreta-de-Esteban, P.; Martin-Casas, P.; Ortiz-Gutierrez, R.M.; Straudi, S.; Cano-de-la-Cuerda, R. Mobile applications for resting tremor assessment in parkinson’s disease: A systematic review. J. Clin. Med. 2023, 12, 2334. [Google Scholar] [CrossRef] [PubMed]

- Huang, T.; Li, M.; Huang, J. Recent trends in wearable device used to detect freezing of gait and falls in people with Parkinson’s disease: A systematic review. Front. Aging Neurosci. 2023, 15, 1119956. [Google Scholar] [CrossRef]

- Laar, A.; de Lima, A.L.S.; Maas, B.R.; Bloem, B.R.; de Vries, N.M. Successful implementation of technology in the management of Parkinson’s disease: Barriers and facilitators. Clin. Park. Relat. Disord. 2023, 8, 100188. [Google Scholar] [CrossRef]

- Bansal, S.K.; Basumatary, B.; Bansal, R.; Sahani, A.K. Techniques for the detection and management of freezing of gait in Parkinson’s disease–A systematic review and future perspectives. MethodsX 2023, 10, 102106. [Google Scholar] [CrossRef]

- Tuena, C.; Borghesi, F.; Bruni, F.; Cavedoni, S.; Maestri, S.; Riva, G.; Tettamanti, M.; Liperoti, R.; Rossi, L.; Ferrarin, M.; et al. Technology-assisted cognitive motor dual-task rehabilitation in chronic age-related conditions: Systematic review. J. Med. Internet Res. 2023, 25, e44484. [Google Scholar] [CrossRef]

- Özden, F. The effect of mobile application-based rehabilitation in patients with Parkinson’s disease: A systematic review and meta-analysis. Clin. Neurol. Neurosurg. 2023, 225, 107579. [Google Scholar] [CrossRef]

- Li, P.; van Wezel, R.; He, F.; Zhao, Y.; Wang, Y. The role of wrist-worn technology in the management of Parkinson’s disease in daily life: A narrative review. Front. Neuroinformatics 2023, 17, 1135300. [Google Scholar] [CrossRef]

- Taniguchi, S.; Yamamoto, A.; D’cruz, N. Assessing impaired bed mobility in patients with Parkinson’s disease: A scoping review. Physiotherapy 2024, 124, 29–39. [Google Scholar] [CrossRef]

- Sapienza, S.; Tsurkalenko, O.; Giraitis, M.; Mejia, A.C.; Zelimkhanov, G.; Schwaninger, I.; Klucken, J. Assessing the clinical utility of inertial sensors for home monitoring in Parkinson’s disease: A comprehensive review. NPJ Park. Dis. 2024, 10, 161. [Google Scholar] [CrossRef]

- Cox, E.; Wade, R.; Hodgson, R.; Fulbright, H.; Phung, T.H.; Meader, N.; Walker, S.; Rothery, C.; Simmonds, M. Devices for remote continuous monitoring of people with Parkinson’s disease: A systematic review and cost-effectiveness analysis. Health Technol. Assess. 2024, 28, 1. [Google Scholar] [CrossRef]

- Janssen Daalen, J.M.; van den Bergh, R.; Prins, E.M.; Moghadam, M.S.C.; van den Heuvel, R.; Veen, J.; Mathur, S.; Meijerink, H.; Mirelman, A.; Darweesh, S.K.L.; et al. Digital biomarkers for non-motor symptoms in Parkinson’s disease: The state of the art. NPJ Digit. Med. 2024, 7, 186. [Google Scholar] [CrossRef] [PubMed]

- Polvorinos-Fernández, C.; Sigcha, L.; Borzì, L.; Olmo, G.; Asensio, C.; López, J.M.; Arcas, G.; Pavón, I. Evaluating Motor Symptoms in Parkinson’s Disease Through Wearable Sensors: A Systematic Review of Digital Biomarkers. Appl. Sci. 2024, 14, 10189. [Google Scholar] [CrossRef]

- Lai, P.; Zhang, J.; Lai, Q.; Li, J.; Liang, Z. Impact of Wearable Device-Based Walking Programs on Gait Speed in Older Adults: A Systematic Review and Meta-Analysis. Geriatr. Orthop. Surg. Rehabil. 2024, 15, 21514593241284473. [Google Scholar] [CrossRef] [PubMed]

- Elbatanouny, H.; Kleanthous, N.; Dahrouj, H.; Alusi, S.; Almajali, E.; Mahmoud, S.; Hussain, A. Insights into Parkinson’s Disease-Related Freezing of Gait Detection and Prediction Approaches: A Meta Analysis. Sensors 2024, 24, 3959. [Google Scholar] [CrossRef]

- Peng, Y.; Ma, C.; Li, M.; Liu, Y.; Yu, J.; Pan, L.; Zhang, Z. Intelligent devices for assessing essential tremor: A comprehensive review. J. Neurol. 2024, 271, 4733–4750. [Google Scholar] [CrossRef]

- di Biase, L.; Pecoraro, P.M.; Pecoraro, G.; Shah, S.A.; Di Lazzaro, V. Machine learning and wearable sensors for automated Parkinson’s disease diagnosis aid: A systematic review. J. Neurol. 2024, 271, 6452–6470. [Google Scholar] [CrossRef]

- Jeyasingh-Jacob, J.; Crook-Rumsey, M.; Shah, H.; Joseph, T.; Abulikemu, S.; Daniels, S.; Sharp, D.; Haar, S. Markerless motion capture to quantify functional performance in neurodegeneration: Systematic review. JMIR Aging 2024, 7, e52582. [Google Scholar] [CrossRef]

- Boege, S.; Milne-Ives, M.; Ananthakrishnan, A.; Carroll, C.; Meinert, E. Self-Management Systems for Patients and Clinicians in Parkinson’s Disease Care: A Scoping Review. J. Park. Dis. 2024, 14, 1387–1404. [Google Scholar] [CrossRef]

- Willemse, I.H.; Schootemeijer, S.; van den Bergh, R.; Dawes, H.; Nonnekes, J.H.; van de Warrenburg, B.P. Smartphone applications for Movement Disorders: Towards collaboration and re-use. Park. Relat. Disord. 2024, 120, 105988. [Google Scholar] [CrossRef]

- Fu, Y.; Zhang, Y.; Ye, B.; Babineau, J.; Zhao, Y.; Gao, Z.; Mihailidis, A. Smartphone-Based Hand Function Assessment: Systematic Review. J. Med. Internet Res. 2024, 26, e51564. [Google Scholar] [CrossRef] [PubMed]

- Silva-Batista, C.; de Almeida, F.O.; Wilhelm, J.L.; Horak, F.B.; Mancini, M.; King, L.A. Telerehabilitation by Videoconferencing for Balance and Gait in People with Parkinson’s Disease: A Scoping Review. Geriatrics 2024, 9, 66. [Google Scholar] [CrossRef] [PubMed]

- Zhang, T.; Meng, D.T.; Lyu, D.Y.; Fang, B.Y. The Efficacy of Wearable Cueing Devices on Gait and Motor Function in Parkinson Disease: A Systematic Review and Meta-analysis of Randomized Controlled Trials. Arch. Phys. Med. Rehabil. 2024, 105, 369–380. [Google Scholar] [CrossRef] [PubMed]

- Franco, A.; Russo, M.; Amboni, M.; Ponsiglione, A.M.; Di Filippo, F.; Romano, M.; Amato, F.; Ricciardi, C. The Role of Deep Learning and Gait Analysis in Parkinson’s Disease: A Systematic Review. Sensors 2024, 24, 5957. [Google Scholar] [CrossRef]

- Tao, D.; Awan-Scully, R.; Ash, G.I.; Cole, A.; Zhong, P.; Gao, Y.; Sun, Y.; Shao, S.; Wiltshire, H.; Baker, J.S. The Role of Technology-based Dance Intervention for Enhancing Wellness-A Systematic Scoping Review and Meta-synthesis. Ageing Res. Rev. 2024, 100, 102462. [Google Scholar] [CrossRef]

- Craig, S.N.; Dempster, M.; Curran, D.; Cuddihy, A.M.; Lyttle, N. A systematic review of the effectiveness of digital cognitive assessments of cognitive impairment in Parkinson’s disease. Appl. Neuropsychol. Adult 2025, 1–13. [Google Scholar] [CrossRef]

- Hall, A.M.; Allgar, V.; Carroll, C.B.; Meinert, E. Digital health technologies and self-efficacy in Parkinson’s: A scoping review. BMJ Open 2025, 15, e088616. [Google Scholar] [CrossRef]

- Rábano-Suárez, P.; Del Campo, N.; Benatru, I.; Moreau, C.; Desjardins, C.; Sánchez-Ferro, Á.; Fabbri, M. Digital Outcomes as Biomarkers of Disease Progression in Early Parkinson’s Disease: A Systematic Review. Mov. Disord. 2025, 40, 184–203. [Google Scholar] [CrossRef]

- Gattoni, M.F.; Gobbo, S.; Feroldi, S.; Salvatore, A.; Navarro, J.; Sorbi, S.; Saibene, F.L. Identification of Cognitive Training for Individuals with Parkinson’s Disease: A Systematic Review. Brain Sci. 2025, 15, 61. [Google Scholar] [CrossRef]

- Tripoliti, E.E.; Tzallas, A.T.; Tsipouras, M.G.; Rigas, G.; Bougia, P.; Leontiou, M.; Konitsiotis, S.; Chondrogiorgi, M.; Tsouli, S.; Fotiadis, D.I. Automatic detection of freezing of gait events in patients with Parkinson’s disease. Comput. Methods Programs Biomed. 2013, 110, 12–26. [Google Scholar] [CrossRef]

- Tzallas, A.T.; Tsipouras, M.G.; Rigas, G.; Tsalikakis, D.G.; Karvounis, E.C.; Chondrogiorgi, M.; Psomadellis, F.; Cancela, J.; Pastorino, M.; Arredondo, M.T.; et al. PERFORM: A system for monitoring, assessment and management of patients with Parkinson’s disease. Sensors 2014, 14, 21329–21357. [Google Scholar] [CrossRef]

- Arora, S.; Venkataraman, V.; Zhan, A.; Donohue, S.; Biglan, K.M.; Dorsey, E.R.; Little, M.A. Detecting and monitoring the symptoms of Parkinson’s disease using smartphones: A pilot study. Park. Relat. Disord. 2015, 21, 650–653. [Google Scholar] [CrossRef] [PubMed]

- Ferreira, J.J.; Godinho, C.; Santos, A.T.; Domingos, J.; Abreu, D.; Lobo, R.; Gonçalves, N.; Barra, M.; Larsen, F.; Fagerbakke, Ø.; et al. Quantitative home-based assessment of Parkinson’s symptoms: The SENSE-PARK feasibility and usability study. BMC Neurol. 2015, 15, 89. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.; Lee, H.J.; Lee, W.; Kwon, S.; Kim, S.K.; Jeon, H.S.; Park, H.; Shin, C.W.; Yi, W.J.; Jeon, B.S.; et al. Unconstrained detection of freezing of Gait in Parkinson’s disease patients using smartphone. In Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milano, Italy, 25–29 August 2015. [Google Scholar] [CrossRef]

- Pan, D.; Dhall, R.; Lieberman, A.; Petitti, D.B. A mobile cloud-based Parkinson’s disease assessment system for home-based monitoring. JMIR Mhealth Uhealth 2015, 3, e3956. [Google Scholar] [CrossRef]

- Rezvanian, S.; Lockhart, T.E. Towards real-time detection of freezing of gait using wavelet transform on wireless accelerometer data. Sensors 2016, 16, 475. [Google Scholar] [CrossRef]

- Capecci, M.; Pepa, L.; Verdini, F.; Ceravolo, M.G. A smartphone-based architecture to detect and quantify freezing of gait in Parkinson’s disease. Gait Posture 2016, 50, 28–33. [Google Scholar] [CrossRef]

- Ginis, P.; Nieuwboer, A.; Dorfman, M.; Ferrari, A.; Gazit, E.; Canning, C.G.; Rocchi, L.; Chiari, L.; Hausdorff, J.M.; Mirelman, A. Feasibility and effects of home-based smartphone-delivered automated feedback training for gait in people with Parkinson’s disease: A pilot randomized controlled trial. Park. Relat. Disord. 2016, 22, 28–34. [Google Scholar] [CrossRef]

- Kassavetis, P.; Saifee, T.A.; Roussos, G.; Drougkas, L.; Kojovic, M.; Rothwell, J.C.; Edwards, M.; Bhatia, K.P. Developing a tool for remote digital assessment of Parkinson’s disease. Mov. Disord. Clin. Pract. 2016, 3, 59–64. [Google Scholar] [CrossRef]

- Lee, C.Y.; Kang, S.J.; Kim, Y.E.; Lee, U.; Ma, H.I.; Kim, Y.J. A validation study of a smartphone-based finger tapping application for quantitative assessment of bradykinesia in Parkinson’s disease: 553. Mov. Disord. 2016, 31, S180. [Google Scholar] [CrossRef]

- Camps, J.; Samà, A.; Martín, M.; Rodríguez-Martín, D.; Pérez-López, C.; Alcaine, S.; Mestre, B.; Prats, A.; Crespo, M.C.; Cabestany, J.; et al. Deep learning for detecting freezing of gait episodes in Parkinson’s disease based on accelerometers. In Proceedings of the 14th International Work-Conference on Artificial Neural Networks, IWANN 2017, Cadiz, Spain, 14–16 June 2017. [Google Scholar] [CrossRef]

- Rodríguez-Martín, D.; Samà, A.; Pérez-López, C.; Català, A.; Moreno Arostegui, J.M.; Cabestany, J.; Bayés, À.; Alcaine, S.; Mestre, B.; Prats, A.; et al. Home detection of freezing of gait using support vector machines through a single waist-worn triaxial accelerometer. PLoS ONE 2017, 12, e0171764. [Google Scholar] [CrossRef]

- Lipsmeier, F.; Taylor, K.I.; Kilchenmann, T.; Wolf, D.; Scotland, A.; Schjodt-Eriksen, J.; Cheng, W.Y.; Fernandez-Garcia, I.; Siebourg-Polster, J.; Jin, L.; et al. Evaluation of smartphone-based testing to generate exploratory outcome measures in a phase 1 Parkinson’s disease clinical trial. Mov. Disord. 2018, 33, 1287–1297. [Google Scholar] [CrossRef] [PubMed]

- Queirós, A.; Dias, A.; Silva, A.G.; Rocha, N.P. Ambient assisted living and health-related outcomes—A systematic literature review. Informatics 2017, 4, 19. [Google Scholar] [CrossRef]

- Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536. [Google Scholar] [CrossRef]

- Boyle, L.D.; Giriteka, L.; Marty, B.; Sandgathe, L.; Haugarvoll, K.; Steihaug, O.M.; Husebo, B.; Patrascu, M. Activity and Behavioral Recognition Using Sensing Technology in Persons with Parkinson’s Disease or Dementia: An Umbrella Review of the Literature. Sensors 2025, 25, 668. [Google Scholar] [CrossRef]

- Wartenberg, C.; Elden, H.; Frerichs, M.; Jivegård, L.L.; Magnusson, K.; Mourtzinis, G.; Nyström, O.; Quitz, K.; Sjöland, H.; Svanberg, T.; et al. Clinical benefits and risks of remote patient monitoring: An overview and assessment of methodological rigour of systematic reviews for selected patient groups. BMC Health Serv. Res. 2025, 25, 133. [Google Scholar] [CrossRef]

- Ahmed, S.K. How to choose a sampling technique and determine sample size for research: A simplified guide for researchers. Oral Oncol. Rep. 2024, 12, 100662. [Google Scholar] [CrossRef]

- Hopewell, S.; Chan, A.W.; Collins, G.S.; Hróbjartsson, A.; Moher, D.; Schulz, K.F.; Tunn, R.; Aggarwal, R.; Berkwits, M.; Boutron, I. CONSORT 2025 statement: Updated guideline for reporting randomised trials. Lancet 2025, 405, 1633–1640. [Google Scholar] [CrossRef]

- Martins, A.I.; Queirós, A.; Silva, A.G.; Rocha, N.P. Usability evaluation methods: A systematic review. In Human Factors in Software Development and Design; Daeed, I., Bajwa, I.S., Mahmood, Z., Eds.; IGI Global: Hershey, PA, USA, 2014; pp. 250–273. [Google Scholar] [CrossRef]

- Silva, A.G.; Caravau, H.; Martins, A.; Almeida, A.M.P.; Silva, T.; Ribeiro, Ó.; Santinha, G.; Rocha, N.P. Procedures of user-centered usability assessment for digital solutions: Scoping review of reviews reporting on digital solutions relevant for older adults. JMIR Hum. Factors 2021, 8, e22774. [Google Scholar] [CrossRef]

- O’herrin, J.K.; Fost, N.; Kudsk, K.A. Health Insurance Portability Accountability Act (HIPAA) regulations: Effect on medical record research. Ann. Surg. 2004, 239, 772–778. [Google Scholar] [CrossRef]

- Shuren, J.; Patel, B.; Gottlieb, S. FDA regulation of mobile medical apps. JAMA 2018, 320, 337–338. [Google Scholar] [CrossRef]

- Keutzer, L.; Simonsson, U.S. Medical device apps: An introduction to regulatory affairs for developers. JMIR Mhealth Uhealth 2020, 8, e17567. [Google Scholar] [CrossRef]

- van Vroonhoven, J. Risk Management for Medical Devices and the New BS EN ISO 14971; BSI Standards Ltd.: London, UK, 2020. [Google Scholar]

| Database | Query |

|---|---|

| Scopus | TITLE-ABS ((Parkinson OR neurodegenerative OR neurological) AND (smart OR remote OR computerized OR ehealth OR digital OR mobile OR sensor OR biosensor OR wearable OR “artificial intelligence” OR “machine learning” OR “deep learning” OR ((technology OR technologies) AND (information OR communication))) AND (review OR secondary OR “systematic mapping”)) |

| Web of Science | TS = (Parkinson OR neurodegenerative OR neurological) AND (smart OR remote OR computerized OR ehealth OR digital OR Mobile OR sensor OR biosensor OR wearable OR “artificial intelligence” OR “machine learning” OR “deep learning” OR ((technology OR technologies) AND (information OR communication))) AND TS = (review OR secondary OR “systematic mapping”) |

| PubMed | (Parkinson [Title/Abstract] OR neurodegenerative [Title/Abstract] OR neurological [Title/Abstact]) AND (smart [Title/Abstract] OR remote [Title/Abstract] OR computerized [Title/Abstract] OR ehealth [Title/Abstract] OR digital [Title/Abstract] OR mobile [Title/Abstract] OR sensor [Title/Abstract] OR biosensor [Title/Abstract] OR wearable [Title/Abstract] OR “artificial intelligence” [Title/Abstract] OR “machine learning” [Title/Abstract] OR “deep learning” [Title/Abstract] OR ((technology [Title/Abstract] OR technologies [Title/Abstract]) AND (information [Title/Abstract] OR communication [Title/Abstract])) AND (review [Title/Abstract] OR secondary [Title/Abstract] OR “systematic mapping” [Title/Abstract])) |

| Inclusion Criteria | Exclusion Criteria |

|---|---|

| IC1: Reviews related to patient-oriented smart applications to support the diagnosis, rehabilitation, and care of PwP published in peer-reviewed journals. IC2: Reviews with a well-documented literature search strategy, allowing for its replication. | EC1: References without authors or abstract. EC2: Reviews focusing on smart applications oriented towards formal and informal caregivers. EC3: Reviews published in conference proceedings or as book chapters. EC4: Reviews that, although including studies targeting PwP, targeted other health conditions in 70% of their primary studies. EC5: Reviews that, although considered patient-oriented smart applications, were focused on smart applications oriented towards formal and informal caregivers in more than 70% of their primary studies. EC6: Reviews published in languages other than English. EC7: Articles whose full text was not available. |

| Motor Clinical Variables | Wearable Devices | Smartphones | Other Technologies |

|---|---|---|---|

| Freezing of gait | [39,40,43,44,45,48,55,58,59,64,73,79,81,83,85,90,91,95,96,97,103,107,116,120] | [44,59,68,73,79,81,112,116,120] | [97,110] (a) |

| Other gait disturbances | [37,38,40,41,42,44,45,47,50,57,58,59,63,64,73,74,75,79,81,83,85,86,88,90,96,100,102,105,109,110,116,120] | [41,42,44,49,57,59,68,73,79,81,112,116,120] | [110] (a) |

| Tremor | [40,41,42,43,44,45,73,75,79,81,83,85,89,90,92,100,103,105,108,109] | [41,42,44,49,73,76,79,81,92,94,108,112] | |

| Balance | [38,40,42,44,45,58,73,75,79,81,85,88,105,109,120] | [42,44,68,73,76,79,81,112,120] | |

| Bradykinesia | [41,42,43,44,49,54,64,73,75,79,83,88,89,90,100,103] | [41,42,44] | |

| Dyskinesia | [40,41,42,43,45,48,73,75,79,85,88,89,100,103,109] | [41,42,73,79] | |

| Functional activities | [37,40,42,59,60,72,73] | [42,73,112,120] | |

| Physical activity | [42,45,59,63,64,72,73,96,100] | [42,73,112] | |

| Falls | [39,45,48,59,64,73,75,85,95] | [42,68,73] | |

| Fine motor impairments | [73,79,81,84,105,109] | [73,79,81,112,113] | [78,84] (b,c) |

| Rigidity | [40,41,44,54,83,109] | [41,44] | |

| Swallowing disorders | [104,109] | [104] | |

| Hypokinesia | [43,73] | [73] | |

| Impaired bed mobility | [101] | [101] (d) |

| Motor Clinical Variables | Assessment Results |

|---|---|

| Freezing of gait | Johansson et al. [45] showed good agreement between wearable and video-based ratings regarding the number of freezing episodes and the percentage of time with freezing of gait, while other studies [39,68,79,90,103] concluded that wearables and smartphone devices might be used to detect freezing of gait. However, inconsistencies in the assessment processes still hinder the use of these applications in clinical practice [91]. |

| Gait disturbances excluding freezing of gait | Some gait outcomes, such as jerk, harmonic stability or oscillation range, were able to differentiate PwP and healthy controls [38,40,90]. Additionally, good correlations were found between gait outcomes measured by wearable devices and clinical scales such as UPDRS, MDP-UPDRS or Hoehn and Yahr [81]. However, significant differences in gait spatiotemporal parameters were found between the primary studies, with high variability in terms of parameters and placement of the wearable devices [47]. This led to contradictory findings, which seem to indicate that some parameters are more consistent than others [38]. |

| Tremor | Some reviews [40,43,89,94] found good results for correlation with gold-standard clinical tremor measurements (e.g., UPDRS or MDS-UPDRS), while other studies [49,73,76,81,90,94,103,109] demonstrated good accuracy in identifying tremor presence and severity and in differentiating PwP with tremor from healthy individuals. |

| Balance | Considering diverse parameters (e.g., mean acceleration, jerk, or sway distance), Abou et al. [68] found significant correlations between smartphone balance assessments and balance clinical tests, Barrachina-Fernández et al. [73] demonstrated that wearable devices can detect fluctuations of the center of gravity, and Johansson et al. [38] and di Biase et al. [109] found smart applications with good accuracy when discriminating PwP from healthy controls. However, it seems that the measured parameters have different consistency levels [38]. |

| Bradykinesia | Some applications can accurately measure bradykinesia [41,54,73,88,89,90,103] since their outcomes present good correlations with clinical assessment scales [41,54,73,88,89,103] or the severity of bradykinesia assessed by experienced clinicians [90]. Moreover, Son et al. [42] and Thorp et al. [43] reported smart applications with good accuracy in detecting bradykinesia versus no bradykinesia. |

| Dyskinesia | Some measurements provided by the proposed smart applications might support discrimination between dyskinetic and non-dyskinetic events [43,45,89,103] compared to clinical ratings [43,45,89,103] such as UPDRS or an experienced observer [43]. However, considering the significant differences in accuracy between the proposed smart applications, Thorp et al. [43] suggested that the location of the wearable devices influences the precision of the measurements, making the detection of dyskinesia more accurate when the wearable devices are not attached to body parts involved in the tasks performed by patients. Moreover, Rovini et al. [40] and Thorp et al. [43] concluded that detecting dyskinesia during daily activities is particularly complex due to the difficulty in distinguishing voluntary movements from dyskinetic movements. |

| Functional activities | According to two studies [37,42], the proposed applications are not only capable of assessing the type, quantity and quality measures, but also of differentiating the Parkinson’s disease-specific mobility patterns of healthy controls and classifying disease severity and progression within PwP. |

| Physical activity | There is some evidence of good correlations between physical activity measurements and clinical instruments [42,59] and capacity to discriminate sedentary or upright and walking behavior [45]. |

| Detection of falls | Johansson et al. [45] reported that the quantification of missteps and risk of falls was shown to discriminate non-fallers and fallers, Abou et al. [68] suggested the discriminative ability of smartphone applications to predict future falls, and Sica et al. [64] reported the ability to predict future falls, even in patients without a prior history of falling. |

| Fine motor impairments | Keystroke dynamics presents good accuracy, sensitivity and specificity to discriminate and classify PwP [78]. Moreover, Tripathi et al. [76] and Gopal et al. [84] concluded that statistically significant correlations with traditional clinic metrics were most frequently reported, and Polvorinos-Fernández et al. [105] highlighted finger tapping as a particularly strong indicator for diagnosing and monitoring disease progression. |

| Rigidity | Teshuva et al. [54] suggested that some of the proposed applications have the capacity to discriminate between patients with rigidity and healthy controls but concluded that the accuracy of the applications measuring rigidity was not very high. |

| Other motor clinical variables (i.e., swallowing disorders, hypokinesia, and impaired bed mobility) | Due to the reduced number of primary studies addressing swallowing disorders, hypokinesia, and impaired bed mobility, it was not possible to systematize evidence about the effectiveness of the respective applications. |

| Non-Motor Clinical Variables | Wearable Devices | Smartphones | Serious Computerized Games |

|---|---|---|---|

| Affective state | [49] | ||

| Anxiety | [104] | ||

| Autonomic function | [44] | [44] | |

| Brain dysfunction | [90] | ||

| Cognition | [41,66,90,104] | [41,104,112] | [118] |

| Constipation | [66,83,104] | ||

| Depression | [66,83,104] | ||

| Emotional dysfunction | [90] | ||

| Fatigue | [104] | ||

| Hallucinations and delusions | [104] | ||

| Heart rate variability | [104] | ||

| Impulse control disorder | [66] | ||

| Skin impedance | [104] | ||

| Orthostatic hypotension | [104] | ||

| Pain | [104] | ||

| Sleep disturbances | [44,45,59,66,73,83,103,104] | [44,73] | |

| Voice and speech quality | [41,90,109] | [41] | |

| Urinary dysfunction | [66,104] |

| Category | Sub-Category |

|---|---|

| Experimental design | Heterogeneity of methods. Heterogeneity of outcomes. Heterogeneity of participants. Representativeness of the participants. Number of participants. Variability and specification of scripted tasks. Specification of the placement of the wearable devices. Correlations with clinical assessment instruments. Learning effects. Confounding variables. |

| Reproducibility. | |

| Clinical viability | Clinical utility. Limited research evidence on the efficiency and efficacy of smart applications with contradictory findings, namely when used in home environments. Translation to clinical practice. Long-term impact on health outcomes. Sociocultural factors affecting adherence to new clinical models. Cost effectiveness. Integration within clinical workflows. Efficient clinical management tools. Unscripted and unconstrained daily tasks and activities. Variability of metrics. Usefulness of some metrics. Non-motor clinical variables. Variability of the number and placement of the wearable devices. Transparency of the outcomes. Passive monitoring versus active monitoring. Closed-loop applications. Interoperability. |

| Acceptability | Adherence. User experience. Comfort. Invasiveness. Stakeholders’ perspectives. Technical and training assistance. |

| Regulatory conformaty | Conformance requirements. Security and data protection. Risk analysis. Standardized assessment criteria. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bastardo, R.; Pavão, J.; Martins, A.I.; Silva, A.G.; Rocha, N.P. Patient-Oriented Smart Applications to Support the Diagnosis, Rehabilitation, and Care of Patients with Parkinson’s: An Umbrella Review. Future Internet 2025, 17, 376. https://doi.org/10.3390/fi17080376

Bastardo R, Pavão J, Martins AI, Silva AG, Rocha NP. Patient-Oriented Smart Applications to Support the Diagnosis, Rehabilitation, and Care of Patients with Parkinson’s: An Umbrella Review. Future Internet. 2025; 17(8):376. https://doi.org/10.3390/fi17080376

Chicago/Turabian StyleBastardo, Rute, João Pavão, Ana Isabel Martins, Anabela G. Silva, and Nelson Pacheco Rocha. 2025. "Patient-Oriented Smart Applications to Support the Diagnosis, Rehabilitation, and Care of Patients with Parkinson’s: An Umbrella Review" Future Internet 17, no. 8: 376. https://doi.org/10.3390/fi17080376

APA StyleBastardo, R., Pavão, J., Martins, A. I., Silva, A. G., & Rocha, N. P. (2025). Patient-Oriented Smart Applications to Support the Diagnosis, Rehabilitation, and Care of Patients with Parkinson’s: An Umbrella Review. Future Internet, 17(8), 376. https://doi.org/10.3390/fi17080376