Rethinking Modbus-UDP for Real-Time IIoT Systems

Abstract

1. Introduction

2. Related Work

3. Protocol Specification

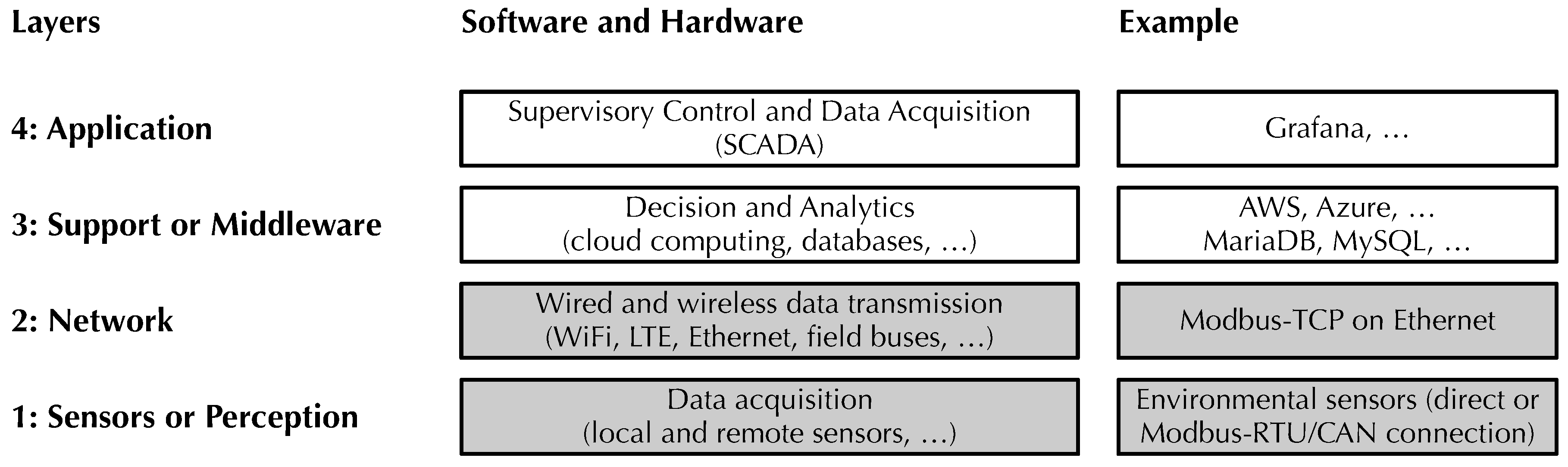

3.1. Overview

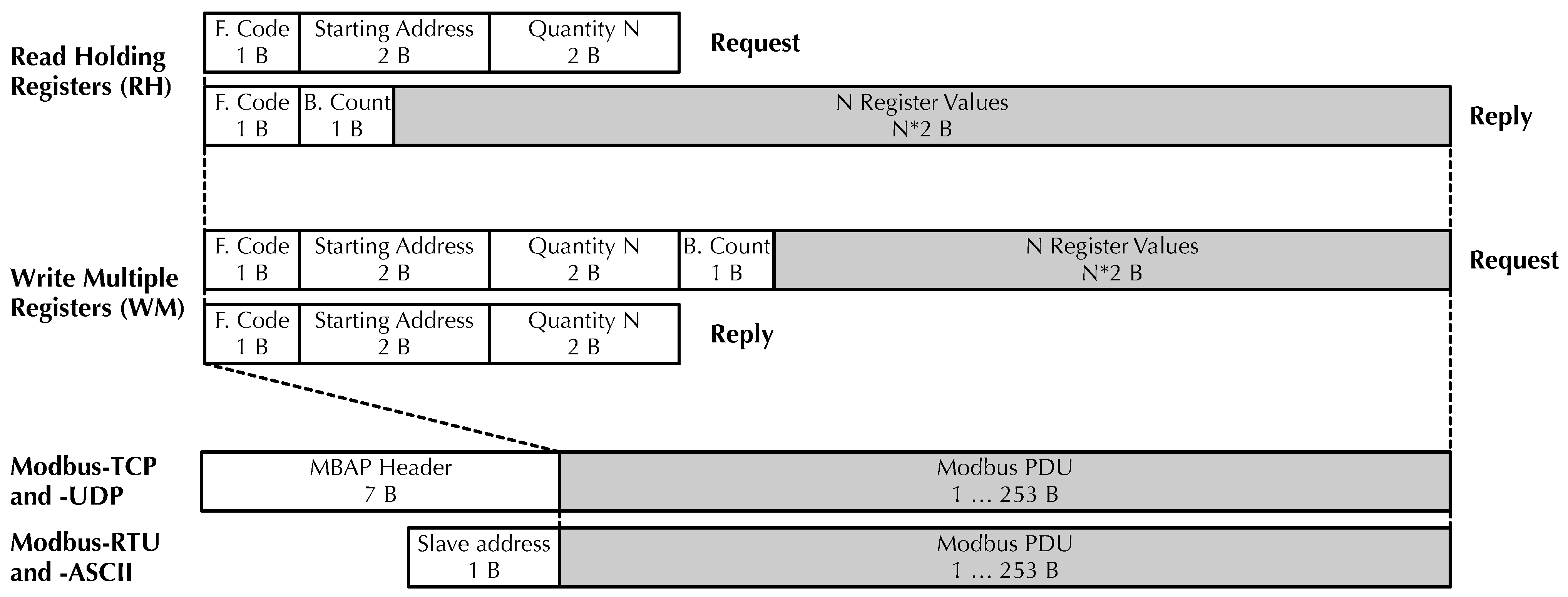

3.2. Frame Format, Addressing, and Frame Length Calculation

- Modbus PDUs are encapsulated in, and transported by, UDP datagrams. Moreover, L2 frames are tagged with an IEEE 802.1Q VLAN tag. Section 3.6 provides more information on the tag content and, more generally, on how Modbus-UDP messages are prioritized at L2 and L3.

- The transaction identifier (TID) remains backward-compatible but carries more semantics than in the original specification: besides pairing requests with replies, it also distinguishes unicast from broadcast Modbus transactions. Section 3.3 defines the new semantics.

- Modbus-UDP datagrams are sent either to the directed broadcast IP address of the network interface chosen for Modbus-UDP communication or to a suitable multicast address. In the second case, the normal multicast subscription process must be followed to ensure that any interposed gateway forwards multicast messages appropriately.

- As specified in Modbus-TCP, the unit identifier identifies remote slaves connected on the other side of a gateway; in Modbus-UDP, it is also used to identify directly connected slaves.
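The frame lengths that follow from this encapsulation can be checked with simple arithmetic. The Python sketch below is only an illustration under stated assumptions: IPv4 without options, one 802.1Q tag per frame, the 8-byte preamble and start-of-frame delimiter counted, the inter-frame gap not counted, and short frames padded to the 64-byte Ethernet minimum. It also assumes that RH stands for Read Holding Registers (function code 0x03) and WM for Write Multiple Registers (function code 0x10); all function names are hypothetical. Under these assumptions, it reproduces the request, response, and total lengths listed in the frame-length tables that follow the reference list for N = 1, 60, and 120 registers, both on the wire and as MBAP header plus PDU.

```python
# Sketch: Modbus-UDP frame lengths (bytes) for RH and WM transactions.
# Assumptions: IPv4 without options, one 802.1Q tag per frame,
# preamble + SFD counted, inter-frame gap not counted.

PREAMBLE_SFD = 8
ETH_HEADER = 14   # destination MAC, source MAC, EtherType
VLAN_TAG = 4      # IEEE 802.1Q tag
IPV4_HEADER = 20
UDP_HEADER = 8
FCS = 4
MIN_FRAME = 64    # Ethernet minimum, from destination MAC to FCS
MBAP_HEADER = 7   # TID (2) + protocol ID (2) + length (2) + unit ID (1)


def rh_request_pdu(_n: int) -> int:
    """Read Holding Registers request: function code, start address, quantity."""
    return 1 + 2 + 2


def rh_response_pdu(n: int) -> int:
    """Read Holding Registers response: function code, byte count, 2 bytes/register."""
    return 1 + 1 + 2 * n


def wm_request_pdu(n: int) -> int:
    """Write Multiple Registers request: function code, start, quantity, byte count, data."""
    return 1 + 2 + 2 + 1 + 2 * n


def wm_response_pdu(_n: int) -> int:
    """Write Multiple Registers response: function code, start address, quantity."""
    return 1 + 2 + 2


def udp_payload_len(pdu_len: int) -> int:
    """UDP payload length: MBAP header followed by the Modbus PDU."""
    return MBAP_HEADER + pdu_len


def wire_len(pdu_len: int) -> int:
    """Frame length on the wire, including preamble/SFD and minimum-size padding."""
    frame = ETH_HEADER + VLAN_TAG + IPV4_HEADER + UDP_HEADER + udp_payload_len(pdu_len) + FCS
    return PREAMBLE_SFD + max(frame, MIN_FRAME)


if __name__ == "__main__":
    for n in (1, 60, 120):
        rh = (wire_len(rh_request_pdu(n)), wire_len(rh_response_pdu(n)))
        wm = (wire_len(wm_request_pdu(n)), wire_len(wm_response_pdu(n)))
        print(f"N={n:3d}  RH {rh} total {sum(rh)}  WM {wm} total {sum(wm)}")
```

For N = 1, the RH request and response PDUs are so short that both frames are padded to the Ethernet minimum, which is why they have the same length (72 bytes) even though their PDUs differ.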

3.3. MBAP Header and TID

3.4. TID Generation and Check

3.5. Message Retransmission

3.6. Modbus-UDP Packet Priority and Coexistence with Other Traffic

- Differentiated Services Code Point (DSCP) AF42 in the IP Type of Service (ToS) field, which RFC 2474 [40] redefines as the Differentiated Services (DS) field; IEEE 802.1Q Priority Code Point (PCP) 5 [38], generally used for voice traffic, in the VLAN tag; and internal SO_PRIORITY 6. This priority assignment does not require the process to be privileged.

- IP ToS DSCP CS4, IEEE 802.1Q PCP 5 in the VLAN tag, and internal SO_PRIORITY 7. The process requesting this priority assignment must be privileged because, on Linux, setting an internal priority outside the range 0 to 6 requires the CAP_NET_ADMIN capability.
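On Linux, the two priority assignments above correspond to two socket options: IP_TOS, whose upper six bits carry the DSCP, and SO_PRIORITY, which sets the internal priority. The Python sketch below is a hedged illustration, not the implementation used in this work. It assumes Linux, uses the standard DSCP values AF42 = 36 and CS4 = 32, and falls back to the numeric value 12 for SO_PRIORITY (its value in the generic Linux headers) if the socket module does not export the constant; open_prioritized_socket is a hypothetical helper name.

```python
import socket

# Standard 6-bit DSCP code points.
DSCP_AF42 = 36  # Assured Forwarding class 4, drop precedence 2 (0b100100)
DSCP_CS4 = 32   # Class Selector 4 (0b100000)

# Assumption: fall back to the generic Linux value if the constant is missing.
SO_PRIORITY = getattr(socket, "SO_PRIORITY", 12)


def tos_byte(dscp: int) -> int:
    """Place a DSCP in the upper six bits of the former IPv4 ToS byte."""
    if not 0 <= dscp < 64:
        raise ValueError("DSCP must be a 6-bit value")
    return dscp << 2


def open_prioritized_socket(dscp: int, priority: int) -> socket.socket:
    """Hypothetical helper (Linux only): UDP socket with DSCP and internal priority.

    Priorities outside 0..6 require CAP_NET_ADMIN, so priority 7 raises
    PermissionError in an unprivileged process.
    """
    s = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        s.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, tos_byte(dscp))
        # Set SO_PRIORITY last: on Linux, setting IP_TOS may also overwrite
        # the socket priority with a value derived from the ToS byte.
        s.setsockopt(socket.SOL_SOCKET, SO_PRIORITY, priority)
    except OSError:
        s.close()
        raise
    return s
```

Under these assumptions, open_prioritized_socket(DSCP_AF42, 6) corresponds to the first assignment and works in an unprivileged process, whereas open_prioritized_socket(DSCP_CS4, 7) corresponds to the second one and needs a privileged process.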

```shell
$ ip link add link $IF name $VLAN_IF type vlan id $VLAN_ID
$ ip address add $ADDR brd $BRD dev $VLAN_IF
$ ip link set dev $VLAN_IF type vlan \
      egress-qos-map 7:5 ingress-qos-map 5:7
$ ip link set dev $VLAN_IF up
```

The command sequence above configures a virtual network interface called $VLAN_IF that uses physical interface $IF to send and receive VLAN-tagged packets with VLAN identifier $VLAN_ID. The virtual interface has IP address $ADDR and broadcast address $BRD. Moreover, the third command asks the protocol stack to map outgoing packets at SO_PRIORITY 7 into PCP 5 (VO, voice traffic with less than 10 ms latency and jitter) and, conversely, incoming packets at PCP 5 into SO_PRIORITY 7. All other outgoing traffic has PCP 0, and all other incoming traffic is given SO_PRIORITY 0, the lowest.

4. Experimental Results

4.1. Testbed

- Two slave programs, based on the FreeMODBUS [28] Modbus slave library. Both programs respond to the RH and WM requests described in Section 3.2. The internal processing time is minimized by discarding incoming data and generating dummy data upon request.

- Two master programs, based on the Modbus Master [29] Modbus master library. They issue n = 10,000 sequential RH and WM transactions to the corresponding slave and measure their round-trip time.
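The measurement loop performed by the master programs can be illustrated with a self-contained Python sketch. It is not the FreeMODBUS or Modbus Master code used in the testbed. Assuming that RH denotes Read Holding Registers (function code 0x03), a hypothetical dummy_slave thread answers RH requests with zero-filled registers, mirroring the testbed slaves that generate dummy data, while the hypothetical measure_rtt function issues sequential transactions, pairs each reply with its request through the TID, and records the round-trip time. Broadcast TID semantics, VLAN tagging, priorities, and retransmission are deliberately left out.

```python
import socket
import statistics
import struct
import threading
import time

FC_READ_HOLDING = 0x03
MAX_ADU = 260  # maximum Modbus TCP/UDP ADU size in bytes


def mbap(tid: int, unit: int, pdu: bytes) -> bytes:
    """Prefix a PDU with an MBAP header: TID, protocol ID 0, length, unit ID."""
    return struct.pack(">HHHB", tid, 0, len(pdu) + 1, unit) + pdu


def dummy_slave(sock: socket.socket, stop: threading.Event) -> None:
    """Hypothetical slave: answer RH requests with zero-filled registers."""
    sock.settimeout(0.1)
    while not stop.is_set():
        try:
            req, addr = sock.recvfrom(MAX_ADU)
        except socket.timeout:
            continue
        tid, _proto, _length, unit = struct.unpack(">HHHB", req[:7])
        _fc, _start, count = struct.unpack(">BHH", req[7:12])
        pdu = struct.pack(">BB", FC_READ_HOLDING, 2 * count) + bytes(2 * count)
        sock.sendto(mbap(tid, unit, pdu), addr)


def measure_rtt(addr, n: int, count: int, timeout: float = 1.0) -> list:
    """Issue n sequential RH transactions and return their RTTs in microseconds."""
    rtts = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        s.settimeout(timeout)  # no retransmission: a lost datagram raises an error
        for i in range(n):
            tid = i & 0xFFFF
            req = mbap(tid, 1, struct.pack(">BHH", FC_READ_HOLDING, 0, count))
            t0 = time.perf_counter_ns()
            s.sendto(req, addr)
            while True:
                rsp, _ = s.recvfrom(MAX_ADU)
                if struct.unpack(">H", rsp[:2])[0] == tid:
                    break  # ignore stale replies carrying another TID
            rtts.append((time.perf_counter_ns() - t0) / 1000.0)
    return rtts


if __name__ == "__main__":
    slave = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    slave.bind(("127.0.0.1", 0))
    stop = threading.Event()
    worker = threading.Thread(target=dummy_slave, args=(slave, stop), daemon=True)
    worker.start()
    rtts = measure_rtt(slave.getsockname(), n=1000, count=60)
    stop.set()
    worker.join()
    slave.close()
    print(f"mean {statistics.mean(rtts):.2f} us, std. dev. {statistics.stdev(rtts):.2f} us, "
          f"min {min(rtts):.0f} us, max {max(rtts):.0f} us")
```

Because this example runs over the loopback interface of a single host, its round-trip times are not comparable with those measured on the testbed; it only shows how sequential transactions, TID matching, and the summary statistics fit together.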

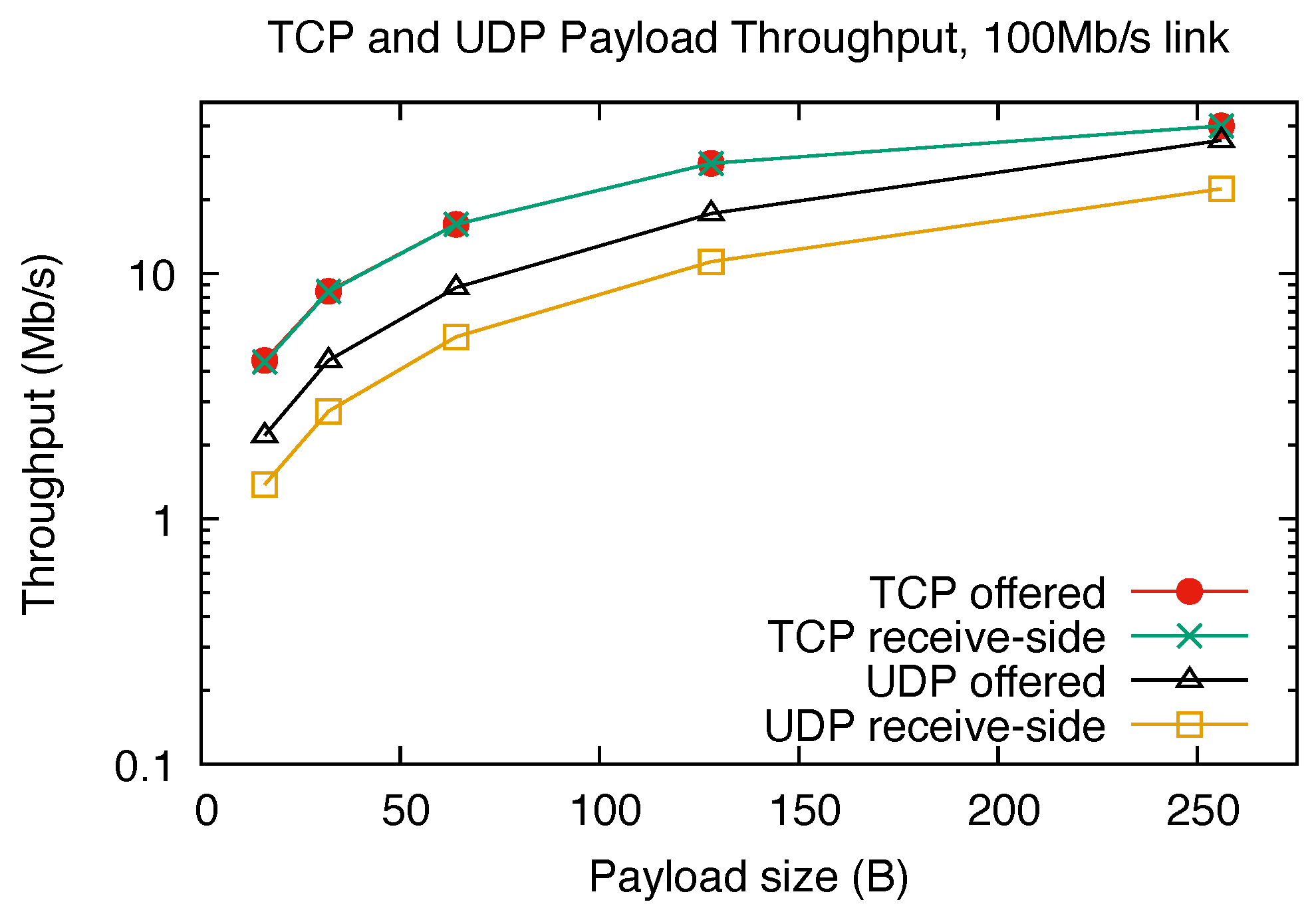

4.2. TCP and UDP Performance Assessment

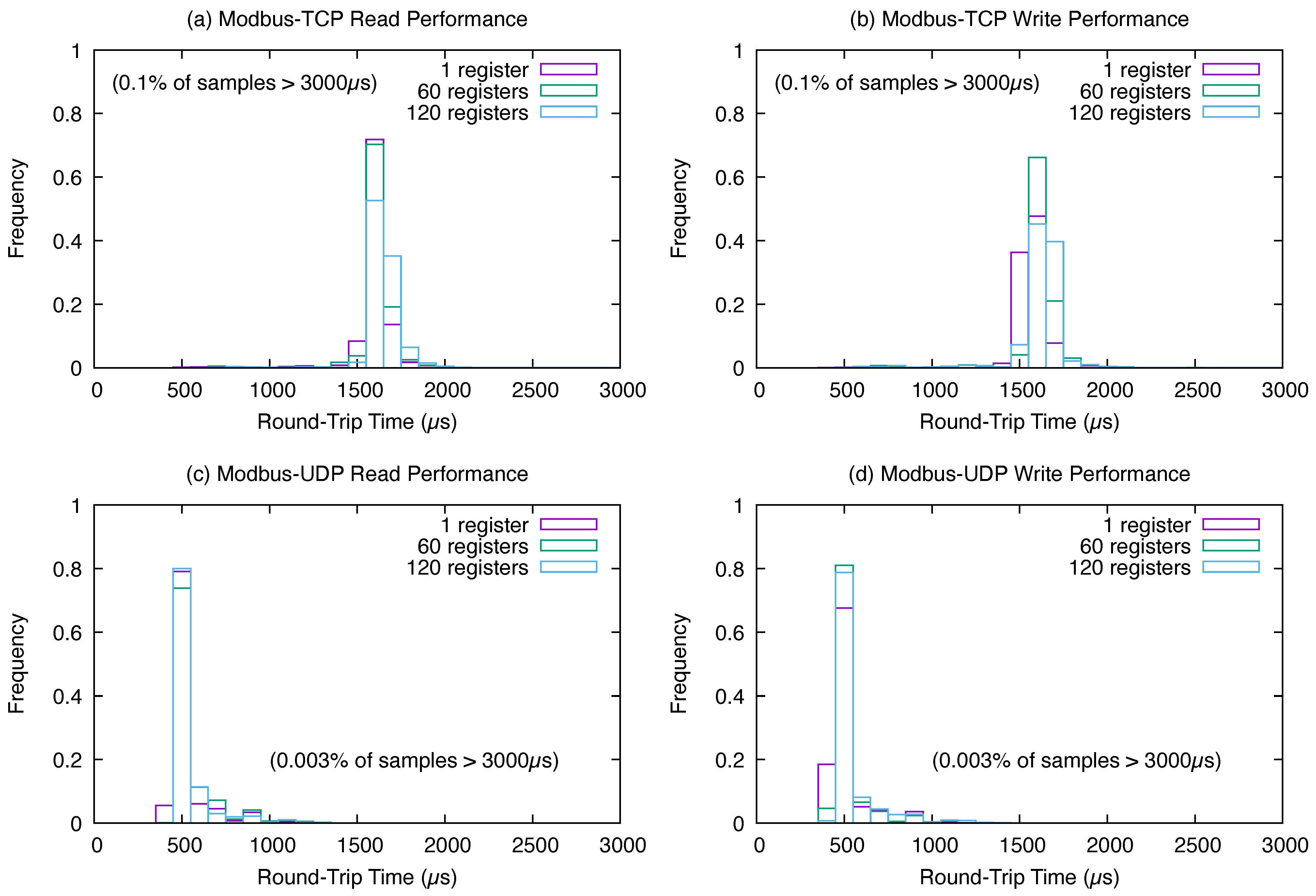

4.3. Round-Trip Time

4.4. Effect of Data Link Errors

```shell
$ iptables -A INPUT -i eth0 -m statistic --mode random \
      --probability 0.01 -j DROP
```

where eth0 is the name of the Ethernet interface used for the experiments.

4.5. Modbus-TCP and Modbus-UDP Throughput

- The Modbus-TCP throughput is significantly lower than the Modbus-UDP throughput, even though the baseline TCP throughput is significantly higher than the baseline UDP throughput. This discrepancy can be attributed to the fact that the TCP data aggregation mechanisms that enhanced throughput in the baseline tests cannot be exploited in request–response transactions whose messages are much shorter than the maximum TCP segment size.

- The Modbus-UDP throughput is still much lower than the baseline UDP throughput and never exceeded about 16% of it. This result has two implications. First, it highlights that UDP datagram queuing and pipelining are ineffective in request–response transactions: in this kind of transaction, any queues or pipelines that the protocol stack may put into effect are, by definition, completely drained at the end of each transaction. Second, it shows that the Modbus protocol stack overheads above L4, as well as application-level message processing on both the master and the slave side, are significant. In turn, this suggests that optimizing the protocol stack at this level is a possible subject of future work.
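A back-of-the-envelope model helps explain both observations. When a master issues strictly sequential request–response transactions, at most one transaction is outstanding at any time, so the useful data rate cannot exceed the register data carried per transaction divided by the mean round-trip time. The Python sketch below applies this bound to the mean values listed for RH transactions with N = 120 registers in the first round-trip time statistics table (1700.70 µs for Modbus-TCP and 591.87 µs for Modbus-UDP). It is an illustration only, not the throughput definition used in the experiments, and goodput_bps is a hypothetical helper name.

```python
# Upper bound on the useful data rate of strictly sequential transactions:
# one transaction in flight, so rate <= data per transaction / mean RTT.

REGISTER_BITS = 16  # each Modbus holding register carries 16 bits


def goodput_bps(n_registers: int, mean_rtt_us: float) -> float:
    """Register data rate in bit/s for back-to-back transactions."""
    return n_registers * REGISTER_BITS / (mean_rtt_us * 1e-6)


if __name__ == "__main__":
    tcp = goodput_bps(120, 1700.70)  # Modbus-TCP, RH, N = 120
    udp = goodput_bps(120, 591.87)   # Modbus-UDP, RH, N = 120
    print(f"TCP {tcp / 1e6:.2f} Mbit/s, UDP {udp / 1e6:.2f} Mbit/s, ratio {udp / tcp:.2f}")
```

The script prints about 1.13 Mbit/s for Modbus-TCP and 3.24 Mbit/s for Modbus-UDP, a ratio of 2.87. Because this bound depends only on the round-trip time, lowering per-transaction latency raises it, whereas TCP segment aggregation, which helps bulk transfers, has nothing to aggregate when a single short message is outstanding.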

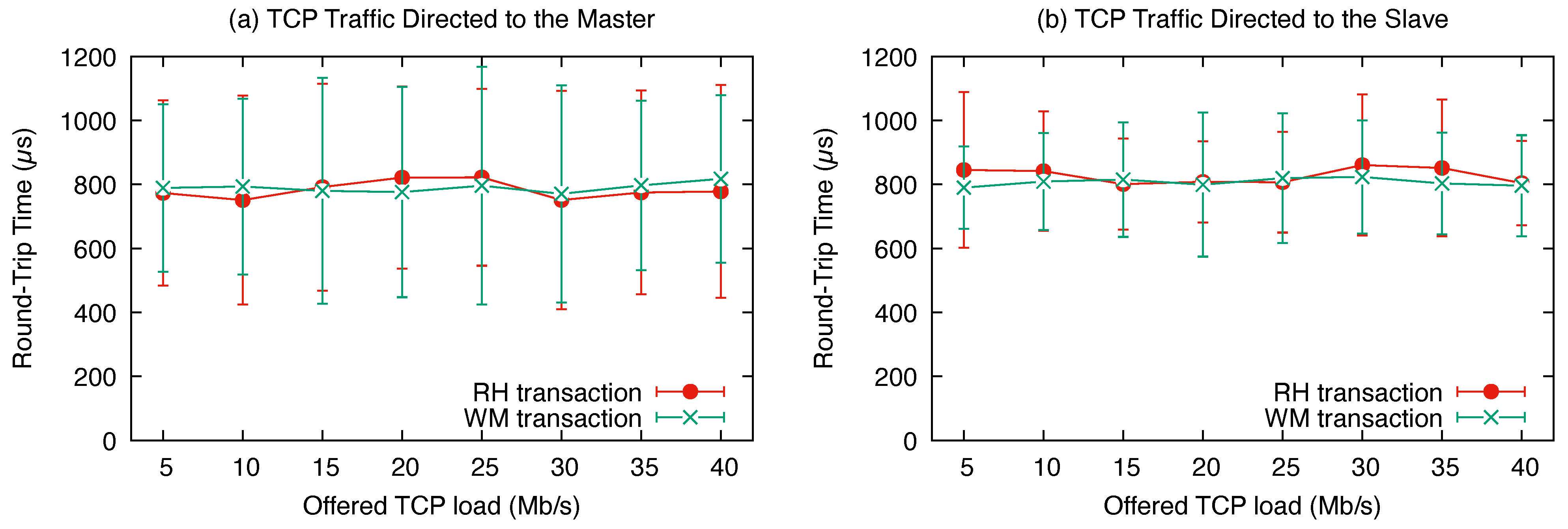

4.6. Sensitivity to Interfering Network Load

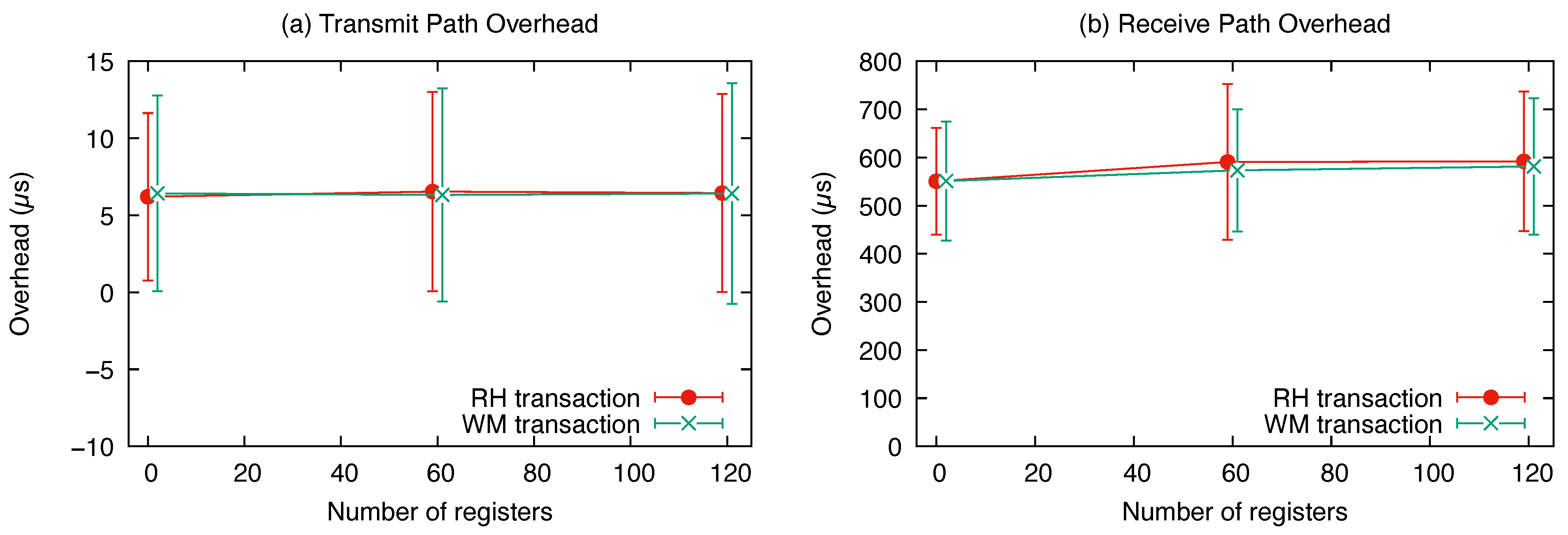

4.7. Transmit and Receive Path Overhead

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Modbus-IDA. MODBUS Application Protocol Specification V1.1b; Modbus-IDA: Westford, MA, USA, 2006; Available online: https://www.modbus.org/docs/Modbus_Application_Protocol_V1_1b.pdf (accessed on 29 July 2025).

- Modbus-IDA. MODBUS over Serial Line Specification and Implementation Guide V1.02; Modbus-IDA: Westford, MA, USA, 2006; Available online: https://www.modbus.org/docs/Modbus_over_serial_line_V1_02.pdf (accessed on 29 July 2025).

- TIA. Electrical Characteristics of Generators and Receivers for Use in Balanced Digital Multipoint Systems (ANSI/TIA/EIA-485-A-98) (R2003); Telecommunications Industry Association: Arlington, VA, USA, 1998.

- TIA. Interface Between Data Terminal Equipment and Data Circuit-Terminating Equipment Employing Serial Binary Data Interchange (ANSI/TIA-232-F-1997) (R2002); Telecommunications Industry Association: Arlington, VA, USA, 1997.

- Jayalaxmi, P.; Saha, R.; Kumar, G.; Kumar, N.; Kim, T.H. A Taxonomy of Security Issues in Industrial Internet-of-Things: Scoping Review for Existing Solutions, Future Implications, and Research Challenges. IEEE Access 2021, 9, 25344–25359.

- Calderón, D.; Folgado, F.J.; González, I.; Calderón, A.J. Implementation and Experimental Application of Industrial IoT Architecture Using Automation and IoT Hardware/Software. Sensors 2024, 24, 8074.

- Cena, G.; Cibrario Bertolotti, I.; Hu, T.; Valenzano, A. Design, verification, and performance of a MODBUS-CAN adaptation layer. In Proceedings of the 10th IEEE International Workshop on Factory Communication Systems (WFCS), Toulouse, France, 5–7 May 2014; pp. 1–10.

- Modbus-IDA. MODBUS Messaging on TCP/IP Implementation Guide V1.0b; Modbus-IDA: Westford, MA, USA, 2006; Available online: https://www.modbus.org/docs/Modbus_Messaging_Implementation_Guide_V1_0b.pdf (accessed on 29 July 2025).

- Johansson, R. Libmodbus, Open-Source Library for MODBUS TCP and UDP. Available online: https://libmodbus.org; https://github.com/rscada/libmodbus (accessed on 14 July 2025).

- Wimberger, D. Java Modbus Library. Available online: https://sourceforge.net/projects/jamod/ (accessed on 14 July 2025).

- ISO 11898-1:2024; Road Vehicles–Controller Area Network (CAN)—Part 1: Data Link Layer and Physical Signalling. International Organization for Standardization: Geneva, Switzerland, 2024.

- Postel, J. (Ed.) Transmission Control Protocol: DARPA Internet Program Protocol Specification, RFC 793; USC/Information Sciences Institute (ISI): Marina Del Rey, CA, USA, 1981.

- Cena, G.; Cibrario Bertolotti, I.; Hu, T. Formal Verification of a Distributed Master Election Protocol. In Proceedings of the 9th IEEE International Workshop on Factory Communication Systems (WFCS), Lemgo/Detmold, Germany, 21–24 May 2012; pp. 245–254.

- Liu, X.; Cheng, L.; Bhargava, B.; Zhao, Z. Experimental Study of TCP and UDP Protocols for Future Distributed Databases; Technical Report 95-046; Department of Computer Science, Purdue University: West Lafayette, IN, USA, 1995.

- Silva, C.R.M.; Silva, F.A.C.M. An IoT Gateway for Modbus and MQTT Integration. In Proceedings of the 2019 SBMO/IEEE MTT-S International Microwave and Optoelectronics Conference (IMOC), Aveiro, Portugal, 10–14 November 2019; pp. 1–3.

- John, T.; Vorbröcker, M. Enabling IoT connectivity for ModbusTCP sensors. In Proceedings of the 2020 25th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vienna, Austria, 8–11 September 2020; Volume 1, pp. 1339–1342.

- Elamanov, S.; Son, H.; Flynn, B.; Yoo, S.K.; Dilshad, N.; Song, J. Interworking between Modbus and internet of things platform for industrial services. Digit. Commun. Netw. 2024, 10, 461–471.

- He, Y.; Lv, X. The Application of Modbus TCP in Universal Testing Machine. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; Volume 5, pp. 1878–1881.

- Li, Y.; Ma, Y.; Chen, M.; Wang, C. A Power IoT Terminal Asset Identification Technology Suitable for Modbus Protocol. In Proceedings of the 2024 International Conference on Interactive Intelligent Systems and Techniques (IIST), Bhubaneswar, India, 4–5 March 2024; pp. 224–228.

- Künzel, G.; Corrêa Ribeiro, M.A.; Pereira, C.E. A tool for response time and schedulability analysis in Modbus serial communications. In Proceedings of the 2014 12th IEEE International Conference on Industrial Informatics (INDIN), Porto Alegre, RS, Brazil, 27–30 July 2014; pp. 446–451.

- Rodríguez-Pérez, N.; Domingo, J.M.; López, G.L.; Stojanovic, V. Scalability Evaluation of a Modbus TCP Control and Monitoring System for Distributed Energy Resources. In Proceedings of the 2022 IEEE PES Innovative Smart Grid Technologies Conference Europe (ISGT-Europe), Novi Sad, Serbia, 10–12 October 2022; pp. 1–6.

- Reid, S.; Marceau, M.; Filler, K.; Mecham, K.D.; Whitaker, B.M. Supervised Machine Learning for Modbus Communication Protocol Decoding. In Proceedings of the 2025 Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 9–10 May 2025; pp. 1–4.

- Zhao, S.; Zhang, Q.; Zhao, Q.; Zhang, X.; Guo, Y.; Lu, S.; Song, L.; Zhao, Z. Enhancing Bidirectional Modbus TCP ↔ RTU Gateway Performance: A UDP Mechanism and Markov Chain Approach. Sensors 2025, 25, 3861.

- Găitan, N.C.; Zagan, I.; Găitan, V.G. Proposed Modbus Extension Protocol and Real-Time Communication Timing Requirements for Distributed Embedded Systems. Technologies 2024, 12, 187.

- Găitan, V.G.; Zagan, I.; Găitan, N.C. Modbus RTU Protocol Timing Evaluation for Scattered Holding Register Read and ModbusE-Related Implementation. Processes 2025, 13, 367.

- Chakraborty, S.; Aithal, P.S. Industrial Automation Debug Message Display Over Modbus RTU Using C#. Int. J. Manag. Technol. Soc. Sci. (IJMTS) 2023, 8, 305–313.

- Krakora, J. Basic Functionality of the Modbus TCP and UDP Based Protocol Using PHP. Available online: https://github.com/krakorj/phpmodbus (accessed on 14 July 2025).

- Walter, C. FreeMODBUS—A Modbus ASCII/RTU and TCP Implementation. Available online: http://freemodbus.berlios.de/ (accessed on 16 June 2025).

- Embedded Solutions. Modbus Master. Available online: http://www.embedded-solutions.at/ (accessed on 19 May 2025).

- Iyengar, J.; Thomson, M. QUIC: A UDP-Based Multiplexed and Secure Transport; Internet Engineering Task Force (IETF): Fremont, CA, USA, 2021; Available online: https://datatracker.ietf.org/doc/rfc9000/ (accessed on 29 July 2025).

- Luxemburk, J.; Hynek, K.; Čejka, T.; Lukačovič, A.; Šiška, P. CESNET-QUIC22: A large one-month QUIC network traffic dataset from backbone lines. Data Brief 2023, 46, 108888.

- Simpson, A.; Alshaali, M.; Tu, W.; Asghar, M.R. Quick UDP Internet Connections and Transmission Control Protocol in unsafe networks: A comparative analysis. IET Smart Cities 2024, 6, 351–360.

- CS536-Modbus-QUIC. Available online: https://github.com/CS536-Modbus-QUIC (accessed on 30 July 2025).

- Schneider Electric. MODBUS/TCP Security Protocol Specification; Schneider Electric: Andover, MA, USA, 2018; Available online: https://modbus.org/docs/MB-TCP-Security-v21_2018-07-24.pdf (accessed on 29 July 2025).

- Rescorla, E.; Dierks, T. The Transport Layer Security (TLS) Protocol Version 1.2; Internet Engineering Task Force (IETF): Fremont, CA, USA, 2008; Available online: https://datatracker.ietf.org/doc/html/rfc5246 (accessed on 29 July 2025).

- Thomson, M.; Turner, S. Using TLS to Secure QUIC; Internet Engineering Task Force (IETF): Fremont, CA, USA, 2021; Available online: https://datatracker.ietf.org/doc/html/rfc9001 (accessed on 29 July 2025).

- Helmy, M.; Samy, M.T.; Azab, K.A. Advanced Security Solutions for CAN Bus Communications in AUTOSAR. In Proceedings of the 2024 International Conference on Computer and Applications (ICCA), Cairo, Egypt, 17–19 December 2024; pp. 1–6.

- IEEE. ISO/IEC/IEEE International Standard: Telecommunications and Exchange Between Information Technology Systems–Requirements for Local and Metropolitan Area Networks–Part 1Q: Bridges and Bridged Networks; IEEE: Piscataway, NJ, USA, 2024.

- Cena, G.; Cereia, M.; Cibrario Bertolotti, I.; Scanzio, S. A Modbus Extension for Inexpensive Distributed Embedded Systems. In Proceedings of the 8th IEEE International Workshop on Factory Communication Systems (WFCS), Nancy, France, 18–21 May 2010; pp. 251–260.

- Nichols, K.; Blake, S.; Baker, F.; Black, D. Definition of the Differentiated Services Field (DS Field) in the IPv4 and IPv6 Headers; Internet Engineering Task Force (IETF): Fremont, CA, USA, 1998; Available online: https://datatracker.ietf.org/doc/html/rfc2474 (accessed on 29 July 2025).

- BeagleBoard.org Foundation. BeagleBone® Black; BeagleBoard.org Foundation: Michigan, IN, USA. Available online: https://www.beagleboard.org/boards/beaglebone-black (accessed on 26 June 2025).

- Texas Instruments, Inc. AM335x and AMIC110 Sitara™ Processors Technical Reference Manual; Texas Instruments, Inc.: Dallas, TX, USA, 2019.

- ARM, Ltd. ARM® Architecture Reference Manual for A-Profile Architecture; ARM, Ltd.: Cambridge, UK, 2025.

- Neumann, P. Communication in industrial automation—What is going on? Control Eng. Pract. 2007, 15, 1332–1347.

| N | RH request (bytes) | RH response (bytes) | RH total (bytes) | WM request (bytes) | WM response (bytes) | WM total (bytes) |
|---|---|---|---|---|---|---|
| 1 | 72 | 72 | 144 | 73 | 72 | 145 |
| 60 | 72 | 187 | 259 | 191 | 72 | 263 |
| 120 | 72 | 307 | 379 | 311 | 72 | 383 |

| N | RH request (bytes) | RH response (bytes) | RH total (bytes) | WM request (bytes) | WM response (bytes) | WM total (bytes) |
|---|---|---|---|---|---|---|
| 1 | 12 | 11 | 23 | 15 | 12 | 27 |
| 60 | 12 | 129 | 141 | 133 | 12 | 145 |
| 120 | 12 | 249 | 261 | 253 | 12 | 265 |

| Protocol | R/W | N | Mean (µs) | Std. dev. (µs) | Min (µs) | Max (µs) |
|---|---|---|---|---|---|---|
| Modbus-TCP | R | 1 | 1649.08 | 209.63 | 513 | 12,317 |
| Modbus-TCP | R | 60 | 1667.15 | 167.32 | 605 | 11,287 |
| Modbus-TCP | R | 120 | 1700.70 | 188.89 | 734 | 10,363 |
| Modbus-TCP | W | 1 | 1615.28 | 193.38 | 490 | 12,503 |
| Modbus-TCP | W | 60 | 1662.86 | 225.05 | 561 | 12,949 |
| Modbus-TCP | W | 120 | 1676.69 | 217.37 | 538 | 11,924 |
| Modbus-UDP | R | 1 | 580.00 | 105.64 | 477 | 1353 |
| Modbus-UDP | R | 60 | 609.60 | 130.15 | 490 | 1518 |
| Modbus-UDP | R | 120 | 591.87 | 117.42 | 505 | 4962 |
| Modbus-UDP | W | 1 | 565.15 | 112.80 | 482 | 1336 |
| Modbus-UDP | W | 60 | 578.02 | 112.92 | 488 | 5142 |
| Modbus-UDP | W | 120 | 601.35 | 131.83 | 492 | 1852 |

| R/W | N | Conf. int. (µs) | Accept |
|---|---|---|---|
| R | 1 | | |
| R | 60 | | |
| R | 120 | | |
| W | 1 | | |
| W | 60 | | |
| W | 120 | | |

| Protocol | R/W | N | Mean (µs) | Std. dev. (µs) | Min (µs) | Max (µs) |
|---|---|---|---|---|---|---|
| Modbus-TCP | R | 1 | 1836.76 | 1253.06 | 526 | 487,738 |
| Modbus-TCP | R | 60 | 1839.95 | 1180.89 | 550 | 298,533 |
| Modbus-TCP | R | 120 | 1835.40 | 1172.27 | 557 | 443,298 |
| Modbus-TCP | W | 1 | 1819.59 | 1174.32 | 531 | 417,290 |
| Modbus-TCP | W | 60 | 1818.13 | 1147.78 | 612 | 416,851 |
| Modbus-TCP | W | 120 | 1855.41 | 1247.55 | 550 | 448,144 |
| Modbus-UDP | R | 1 | 723.15 | 547.65 | 427 | 8242 |
| Modbus-UDP | R | 60 | 731.58 | 543.42 | 460 | 8039 |
| Modbus-UDP | R | 120 | 759.47 | 569.08 | 468 | 8411 |
| Modbus-UDP | W | 1 | 724.23 | 561.19 | 441 | 7874 |
| Modbus-UDP | W | 60 | 748.72 | 552.16 | 456 | 8297 |
| Modbus-UDP | W | 120 | 745.42 | 539.76 | 468 | 8026 |

| R/W | N | Conf. int. | Accept |
|---|---|---|---|
| R | 1 | | |
| R | 60 | | |
| R | 120 | | |
| W | 1 | | |
| W | 60 | | |
| W | 120 | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cibrario Bertolotti, I. Rethinking Modbus-UDP for Real-Time IIoT Systems. Future Internet 2025, 17, 356. https://doi.org/10.3390/fi17080356
