1. Introduction

Edge intelligence within the Industrial Internet of Things (IIoT) is a paradigm that improves operational efficiency and fosters innovation [1]. By placing computational capabilities close to data sources, edge computing (EC) enables real-time insights and decision-making [2]. This localization is critical for optimizing resource utilization, a key factor in meeting the stringent demands of industrial ecosystems [3]. Integrating edge intelligence allows IIoT systems to overcome conventional limitations and become more adaptable and agile. The localized architecture of EC accelerates data processing, which reduces latency and improves overall system efficiency [4]. Proximity to data sources enables the rapid analysis that real-time responsiveness requires, while also improving resource utilization [5].

Efficient task allocation (TA) and controller migration (CM) are essential for optimizing the performance of edge-based IIoT systems [6]. These systems rely on distributed architectures in which tasks are allocated according to computational capacity and workload distribution [7]. CM keeps the system adaptable by dynamically reallocating tasks among nodes in response to variable workloads and network conditions, maximizing resource utilization. Coordinated TA and CM streamline operations and improve productivity in IIoT environments [8]. Together, they strengthen system resilience and responsiveness, traits that are vital in industrial contexts [9,10]. This synergy underpins efficient resource utilization, which is crucial for meeting industrial performance requirements [11].

Machine learning (ML) techniques further improve TA and performance optimization in edge-based IIoT systems [12]. ML algorithms use historical and real-time data to refine task allocation strategies and improve resource utilization and efficiency [13]. ML also enables the proactive identification of performance bottlenecks, supporting the continuous improvement of IIoT operations [14]. With ML, IIoT systems can anticipate and address performance challenges before they affect operations [15,16], and through continuous learning and adaptation they sustain performance and operational efficiency. The convergence of EC, TA, CM, and ML thus drives productivity and competitiveness in industrial settings [16,17]. The importance of adaptive control under uncertain and dynamic conditions has also been demonstrated in other domains, such as robotics, where impedance learning has been applied to manage human-guided interaction [18]. The key contributions of this paper are as follows:

- A cross-layer controller tasking scheme (CLCTS) that coordinates task grouping and controller re-tasking in IIoT systems.
- A dual-phase model combining task similarity grouping and graph-based controller migration.
- A learning mechanism that minimizes task acceptance error (TAE) and re-tasking delays.
- Experimental validation demonstrating improved performance over existing methods in completion ratio, processing time, and TAE.

2. Related Works

Existing approaches to IIoT task management can be grouped into four main categories: heuristic optimization, mobility-aware scheduling, market-based allocation, and learning-based or architecture-driven strategies.

Heuristic and optimization-based techniques are commonly used to manage task allocation in resource-constrained IIoT systems. Shahryari et al. [19] proposed a genetic algorithm-based task-offloading scheme to address high energy consumption in fog-enabled IoT networks. The scheme identifies computation-intensive tasks and provisions the required resources at fog nodes, improving energy efficiency and network performance. Wang et al. [20] introduced a time-expanded graph (TEG) model for task offloading in IoT-edge networks. Their approach employs a utility maximization algorithm that converts network dynamics into static weights for allocation. The algorithm matches terminals to edge resources for offloading and increases the utility obtained from those resources.

Mobility- and prediction-based scheduling models aim to reduce latency in dynamic edge environments. Qin et al. [21] designed a mobility-aware computation offloading and task migration (MCOTM) approach for edge computing systems, primarily to reduce task turnaround time. The model uses trajectory and resource prediction to address issues in mobility-based offloading. Although the approach effectively reduces latency in IIoT applications, it incurs high energy costs during offloading. Complementing such predictive methods, Darwish et al. [22] proposed a green real-time scheduling method for multitask allocation in 3D printing services within IIoT. Their architecture focuses on robust load balancing by customizing task attributes according to necessity and priority, improving scheduling efficiency and minimizing overload in service delivery.

Market-based models optimize task assignment through economic mechanisms. Ma et al. [23] introduced a truthful combinatorial double auction (TCDA) framework for MEC in IIoT. This mechanism assesses task priorities and provides feasible resources to edge nodes using a linear programming-based padding method, significantly improving the effectiveness ratio of IIoT networks. An improved version of this model was later proposed by Lin et al. [24] for non-orthogonal multiple access (NOMA) and MEC networks. This variant focuses on task scheduling and resource allocation: it identifies resource requests and applies a deep learning algorithm to determine task necessity and deliver optimal services.

Learning-based and architecture-driven strategies have also gained attention for dynamic scheduling and controller-level decisions. Tan et al. [25] introduced a task-offloading method based on learning automata for mobile edge networks. The model offloads requests at the edge layer and uses an auto-scaling strategy to estimate expected cloud requests, improving overall energy efficiency. Sun et al. [26] extended the work of Hou et al. [27] by proposing a Bayesian network-based evolutionary algorithm (BNEA) for task prioritization in MEC-enabled IIoT systems. The BNEA evaluates task relationships and reduces the complexity of allocation, thereby minimizing latency in resource provisioning. Dao et al. [28] developed a pattern-identified online task scheduling (PIOTS) scheme for multitier edge computing in IIoT services. By using a self-organizing map (SOM) to represent task features for scheduling, the model improves service capability and reduces scheduling errors. Zhang et al. [29] introduced a truth detection-based task assignment (TDTA) scheme for mobile crowdsourced IIoT environments. The TDTA model assesses worker reliability and assigns micro-tasks accordingly, improving the accuracy and effectiveness of recognition processes.

Several studies have focused on system-level and architectural solutions to support dynamic scheduling. Coito et al. [30] developed an integrated IT-based scheme for dynamic scheduling in IIoT architectures. This scheme identifies online events that trigger task execution and defines the conditions needed for latency reduction and improved production efficiency. Malik et al. [31] proposed an ambient intelligence (AmI)-enabled fog computing model for IIoT applications that combines contextual and environmental data to improve network performance and reduce task outages; compared to other methods, it increases the number of tasks successfully served at fog nodes. Yu et al. [32] introduced LayerChain, a hierarchical edge-cloud blockchain for large-scale IIoT applications. LayerChain accounts for differences between edge nodes, organizes blockchain data hierarchically, and improves the scalability and reliability of IIoT systems while reducing latency in resource allocation.

Despite the breadth of these contributions, most existing methods focus on isolated aspects of IIoT task management—such as offloading, energy use, or economic incentive—without jointly addressing real-time synchronization, cross-layer controller re-tasking, or graph-based learning for continuous adaptation. The proposed CLCTS fills this gap through a dual-phase design that integrates deep graph learning with temporal task grouping and controller migration. It provides synchronized task allocation, reduces task acceptance errors, and ensures consistent productivity across distributed IIoT layers.

3. Cross-Layer Controller Tasking Scheme

This section details the proposed algorithm, Cross-Layer Controller Tasking Scheme (CLCTS), designed to reduce task acceptance error (TAE) through task grouping, re-tasking, and cross-layer controller coordination in IIoT environments.

In smart industries, uniform (monotonous) job completion through automation with edge devices and remote assistance remains limited. Because task types are asynchronous, task offloading and prolonged executions are unavoidable. The works discussed above address this problem through task offloading, scheduling, prioritization, and multiple allocations. Tasks can first be differentiated by type, processing time, and resource utilization and then grouped, to a certain extent, to reduce task acceptance errors. The proposed scheme exploits this limited certainty to improve task completion by reducing task acceptance errors. For this purpose, neural learning is applied with the operation phases divided by time interval and edge node, enabling performance-centric decisions.

3.1. Data Collection

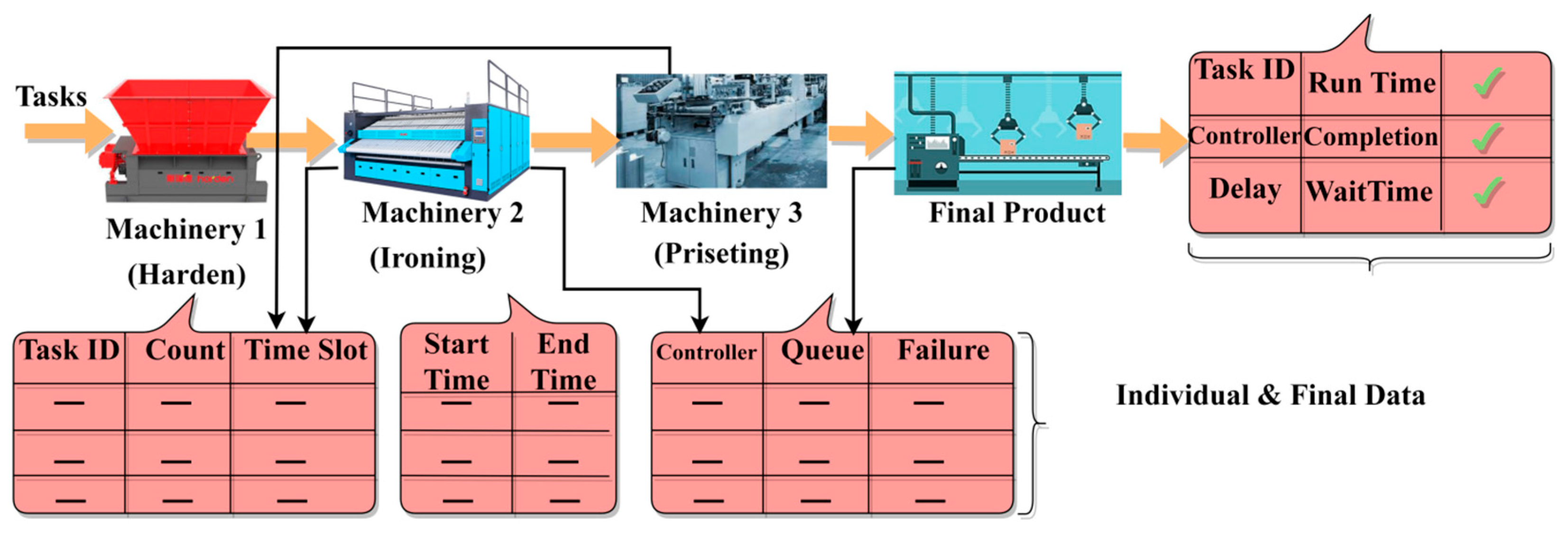

A scheduling optimization dataset (https://zenodo.org/records/4106746, accessed on 15 December 2024) is used to correlate the CLCTS scheme with the performance metrics and factors. The dataset contains the work schedule of a textile industry operation with three major layers, each with individual machines, running 16 h of tasks per day. The average time slot of a machine varies between 20 and 300, with a maximum of 60 tasks/min. The dataset is represented in Figure 1.

The machinery represented covers hardening, ironing, and printing, each with an independent controller. The edge devices are connected to six controllers: three for these processes and three for categorization, cutting, and stripping. The raw data is re-organized to suit the process flow of the proposed scheme. The dataset contains 256 tasks, with similarities and dissimilarities linking the three layers.

The design goal of CLCTS is to maximize the productivity rate of the smart industry by reducing task acceptance error (TAE) on the IIoT-enabled smart industrial platform. The smart industry layers gather data from multiple sources, and this data is processed through the IIoT from the different machinery and edge devices deployed to improve productivity. The proposed scheme aims to improve productivity and industrial management toward automation across all layers of the smart industry. In particular, EC intelligence and graph-combined learning over the IIoT are used to prevent TAE and thereby improve the performance of smart factories.

The function of CLCTS (Figure 2) is to provide reliable task grouping and cross-layer control that avoid time delays. Data gathered from the multiple source layers is stored and distributed to a centralized processing unit in the smart industry for analysis. The industrial environment and edge devices are connected through the IIoT, and the machinery and edge devices are regulated by the centralized controller. Task allocation and distribution are managed accurately to avoid mis-scheduling of edge device/machinery information. The IIoT improves production, maintenance, and outcomes by ensuring consistent task distribution among the smart industry's processing units. In the IIoT, task grouping and cross-layer control are used for task allocation, distribution, and similarity verification. Time delays and scheduling mismatches among devices are identified using edge computing and graph learning to prevent TAE. These processes are discussed in the following sections. The symbols used throughout the article are described in Table 1.

3.2. IIoT System Setup

The IIoT system is defined using two phases, namely the task grouping assignment and cross-layer control. The task grouping assignment is responsible for gathering similar tasks for completion, whereas cross-layer control administers dissimilar tasks, from which the re-tasking is scheduled inside and outside the group. The task grouping assignment communicates with a set of edge devices/machinery symbolized as

; these edge devices can allocate and process tasks from all industrial layers of the smart industry. The variable

indicates the number of tasks in different time intervals

. Assume that

shows the tasked devices that are used in the smart industry layers. Based on the above, the number of task allocations and processing per unit of time is

such that the task grouping

is given as

Such that

and

where

From Equations (1)–(4) above, the variables

and

represent the false task grouping and failed cross-layer controller in any industrial layer. In the above equation,

and

illustrate the mapping of all industrial layers

communicated with the edge devices in different time intervals

. In Algorithm 1, the task grouping process is presented.

| Algorithm 1. Task Grouping |


In the first phase, controller nodes with similar tasks are grouped by tasking time to ensure uniform (monotonous) task accomplishment. In the second phase, dissimilar tasks are grouped, and re-tasking is performed from these groups. The two phases are processed recurrently to improve the productivity rate of the smart industry. Here, the number of task groups equals the number of monotonic task sets. In the task grouping process, the sequential task allocation

and distribution

are the cumulative metrics for ensuring reliable task grouping across time intervals. Across the industrial layers, similar and dissimilar task groups are interconnected through graph structures to enable concurrent tasking and completion. The task grouping between different

and

is computed using edge computing based on the similarity of completion and resource utilization. In Equation (1), the condition

identifies dissimilar tasks in the edge devices. The time grouping for the routine cross-layer control based on

serves as the verification condition for classification and is formulated as

and

As per Equations (5) and (6), the similar and dissimilar tasks are independently grouped based on the timed task grouping and the routine cross-layer control instance. From the above equations, the reliable reputation of the edge devices is computed for each instance at the appropriate time interval . If denotes the maximum task grouping, the computation checks for all i whether and to define concurrent task execution and completion. The concurrent tasking is dependent on grouping and cross-layer control sequences and such that values are defined at all outputs of the intermediate layer . The linear output of the task groups is interconnected for maximizing . The and final output are difficult to define .
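The grouping rule of Equations (5) and (6) cannot be reproduced here because the equations are not available in this text, but the idea of separating similar tasks (phase 1) from dissimilar ones (phase 2) can be illustrated with a minimal Python sketch. It assumes a hypothetical task descriptor (type, processing time, resource share in [0, 1]), a hypothetical similarity threshold on normalized differences, and a greedy pass that compares each task with the first member of every existing group. `Task`, `similar`, `group_tasks`, and `threshold` are illustrative names, not the paper's notation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    # Hypothetical task descriptor: type label, processing time, resource share in [0, 1].
    task_id: int
    task_type: str
    proc_time: float
    resource: float


def similar(a: Task, b: Task, max_time: float, threshold: float) -> bool:
    """Tasks are 'similar' if they share a type and their normalized
    processing-time and resource differences both stay within the threshold."""
    if a.task_type != b.task_type:
        return False
    time_gap = abs(a.proc_time - b.proc_time) / max_time
    res_gap = abs(a.resource - b.resource)
    return time_gap <= threshold and res_gap <= threshold


def group_tasks(tasks, threshold=0.1):
    """Phase 1: greedily collect similar tasks into groups.
    Tasks left alone in a group are returned as dissimilar tasks for phase 2 (re-tasking)."""
    max_time = max(t.proc_time for t in tasks) or 1.0
    groups = []
    for task in tasks:
        for group in groups:
            # Compare with the group's first member, used as its representative.
            if similar(group[0], task, max_time, threshold):
                group.append(task)
                break
        else:
            groups.append([task])
    similar_groups = [g for g in groups if len(g) > 1]
    dissimilar = [g[0] for g in groups if len(g) == 1]
    return similar_groups, dissimilar


if __name__ == "__main__":
    tasks = [
        Task(1, "harden", 120, 0.40),
        Task(2, "harden", 125, 0.42),
        Task(3, "ironing", 60, 0.20),
        Task(4, "printing", 300, 0.90),
        Task(5, "ironing", 62, 0.21),
    ]
    groups, leftovers = group_tasks(tasks)
    print([[t.task_id for t in g] for g in groups])  # [[1, 2], [3, 5]]
    print([t.task_id for t in leftovers])            # [4]
```

Comparing against a single representative keeps each pass linear in the number of groups; a graph-based variant would instead link every pair of similar tasks and take connected components.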

3.3. Case Analysis

The case analysis presents a discussion of

, and

based on

(in h/day). From the data source, the maximum of 256 tasks over a 16 h operation is split into 16 tasks/hour. Taking this as the optimal (standard) outcome, the above factors are analyzed in Figure 3.

The outcome analysis presents the

and

for 256 tasks allocated in a day. The variations are the input and output to the standard (theoretical) value based on

response and

rate. This is due to the similar and dissimilar lengths of the tasks assigned to the “harden”, “ironing”, and “printing” layers of the manufacturing process. Therefore, the processing time of each

varies from one cycle to the next (Figure 3). End of Case Analysis.
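The standard used in this case analysis is simple arithmetic: 256 tasks over a 16 h operation gives 256 / 16 = 16 tasks per hour. The short Python sketch below computes that standard and the signed per-hour deviation of an observed allocation from it. The hourly counts in the example are made up for illustration and are not taken from the dataset; the function names are hypothetical.

```python
def standard_rate(total_tasks: int, hours: int) -> float:
    """Theoretical (standard) tasks per hour for an evenly split operation."""
    return total_tasks / hours


def hourly_deviation(observed, total_tasks=256, hours=16):
    """Signed deviation of each hour's task count from the standard rate."""
    if len(observed) != hours:
        raise ValueError("expected one count per hour")
    std = standard_rate(total_tasks, hours)
    return [count - std for count in observed]


if __name__ == "__main__":
    print(standard_rate(256, 16))  # 16.0
    # Illustrative counts only: some hours run below or above the standard of 16.
    observed = [14, 18, 16, 15, 17, 16, 16, 16, 13, 19, 16, 16, 16, 16, 16, 16]
    print(sum(observed))                   # 256
    print(hourly_deviation(observed)[:4])  # [-2.0, 2.0, 0.0, -1.0]
```

Because the illustrative counts sum to 256, the deviations cancel over the day; the case analysis concerns how far individual hours drift from 16.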

The inputs for the learning network are for both and that have different groupings. The learning network process is discussed below.

3.4. Learning Network Process

The learning network used for both the mappings differs based on the conditions

,

and

. An edge device is available in the task’s grouped time if it is

or else

. The output of the intermediate layer in the first task grouping

generates a linear output, whereas

extracts the output of

from

with

. In Equations (7) and (8), the

and

for

are grouped. The computations are performed for both the estimation of

and

for the conditional assessment of either

or

in

. Therefore, results are required from all resources allocated and utilized in time interval

. In the above task grouping process,

serves as an input after the detection of

in

grouping is represented as

In (7) and (8) above, the linear output is formulated as

, and if

, then

and

. Therefore, the network is recurrently trained for the inverse outcome of the first phase to prevent TAE, and the second phase trains the learning network based on task migration between the controllers as the optimal output and

. The linear deep learning network initialization is presented in Figure 4.

The linear learning representation for

classification and

estimation is illustrated in Figure 4. The change in

to

is validated throughout the hidden layer for

extraction. The satisfying condition is

such that

is maximum. However, the change in synchronous (based on

) completion varies this process, and therefore,

is required. This learning phase requires

and

changeovers to identify both phase controls. Therefore, the output from

to

restores the

for TAE reduction (see Figure 4). Hence, the reputation of such edge devices/machinery in smart industries is retained as

. The IIoT stores

for each

, and this graph structure determines the cross-layer controller for the edge devices. Instead,

for

and

is computed using Equations (9) and (10), respectively.
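How stored reputation values can steer controller selection over a graph is sketched below in Python. This does not reproduce Equations (9) and (10), which are not available in this text. It assumes a hypothetical graph in which each edge device links to the controllers it can reach, each controller holds a reputation score in [0, 1], a device is assigned to its reachable controller with the highest reputation (ties broken by controller id), and reputation is updated as an exponential moving average of completion outcomes. All names and the `alpha` parameter are illustrative.

```python
def select_controllers(links, reputation):
    """Map each edge device to its reachable controller with the highest reputation.

    links: dict device -> list of reachable controller ids (hypothetical graph edges).
    reputation: dict controller id -> reputation score in [0, 1] (hypothetical).
    """
    assignment = {}
    for device, controllers in links.items():
        if not controllers:
            assignment[device] = None  # no reachable controller in this interval
            continue
        # max() keeps the first maximum it sees, so sorting ids first breaks ties by id.
        assignment[device] = max(sorted(controllers), key=lambda c: reputation[c])
    return assignment


def update_reputation(reputation, controller, completed, alpha=0.5):
    """Exponential moving average of completion outcomes (1 = completed, 0 = failed)."""
    outcome = 1.0 if completed else 0.0
    reputation[controller] = (1 - alpha) * reputation[controller] + alpha * outcome
    return reputation[controller]


if __name__ == "__main__":
    reputation = {"C1": 0.8, "C2": 0.6, "C3": 0.8}
    links = {"E1": ["C2", "C1"], "E2": ["C3", "C1"], "E3": []}
    print(select_controllers(links, reputation))
    # C1 fails a task, its reputation drops to 0.4, and both devices migrate away from it.
    update_reputation(reputation, "C1", completed=False)
    print(select_controllers(links, reputation))
```

In this toy run, a single failure is enough to move E1 to C2 and E2 to C3, which mirrors the migration idea; a smaller `alpha` would make controller assignments more stable.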

From Equations (9) and (10), the intermediate layer and final outputs are obtained by validating the conditions

and

or

for each instance in a step-by-step manner. For instance, if

then

is the last output for graph learning from which the task acceptance error is prevented, and if

, then

. Hence, similar tasks are grouped under the tasking time. For this output,

is the final reputation value of the edge devices, and it is updated with all intermediate layer outputs and the final output as in Equations (9) and (10). This condition does not apply to the first phase (Equations (7) and (8)) because that phase relies on all similar tasks being grouped within the given time interval. Therefore, the reputation of all edge devices, together with their resource allocation and utilization, is observed through the IIoT and remains constant. The learning process for the two task phases is described in Algorithm 2.

| Algorithm 2. Learning process description for two phases |


In the sequential task grouping, in its previous time interval determines the working of acquiring edge device information. If this sequence is observed in any instance (later), then the task grouping from is halted to prevent TAE in the automation and productivity process toward smart industrial management. In this case, the IIoT sends a notification (signal) to industrial management so that appropriate action can be taken on the affected edge devices. This training is intended to prevent prolonged task times in task groups that are interconnected through edge nodes and that exchange tasking/migration/re-tasking information from the edge devices. Each node's participation in a given time interval depends on the access delegated to it. This process prevents TAE and the spread of false information by gathering accurate information while keeping the productivity rate high. The task acceptance rate is controlled to ensure delay-free task grouping and controller migration between the interconnected layers of the smart industry. However, the likelihood that tasking/migration/re-tasking information is modified in the IIoT environment is high. Task migration and re-tasking information is therefore distributed between IIoT layers within a synchronized completion time to sustain a high productivity rate.
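The halting rule described above can be pictured with a short Python sketch. It assumes, hypothetically, that each time interval yields the set of devices whose grouped tasks were rejected, and that a device that failed in the previous interval is excluded from grouping in the current one while a notification is queued for industrial management. The record format, message text, and function names are illustrative only.

```python
def filter_devices(devices, previous_failures):
    """Split devices into those eligible for grouping in the current interval
    and notification messages for devices that failed in the previous interval.

    devices: iterable of device ids (hypothetical).
    previous_failures: set of device ids whose grouped tasks were rejected last interval.
    """
    eligible, notifications = [], []
    for device in devices:
        if device in previous_failures:
            # Halt grouping from this device so its acceptance error is not propagated.
            notifications.append(f"{device}: grouping halted, inspection requested")
        else:
            eligible.append(device)
    return eligible, notifications


def run_intervals(devices, failures_per_interval):
    """Apply the halting rule interval by interval.

    failures_per_interval[k] holds the devices that failed in interval k;
    the rule applied in interval k + 1 uses those failures.
    """
    history = []
    previous = set()
    for failures in failures_per_interval:
        eligible, notes = filter_devices(devices, previous)
        history.append((eligible, notes))
        previous = set(failures)
    return history


if __name__ == "__main__":
    history = run_intervals(["E1", "E2", "E3"], [{"E2"}, set(), {"E1"}])
    for k, (eligible, notes) in enumerate(history):
        print(k, eligible, notes)
```

Here E2 fails in interval 0, so it is excluded (and reported) in interval 1 and then readmitted in interval 2 once no new failure is recorded for it.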

3.5. Case Analysis

This case analysis after learning demonstrates the

Re-tasking for the machines, TM, and

. Similar to the previous group count of 16, the variations below and beyond this standard value are analyzed.

Figure 5 compares tasking without grouping, with grouping, and with CLCTS-based re-tasking.

This case assessment for

and re-tasking with 300 TM and 16

is presented in

Figure 5 above. The learning process differentiates two initializations

and

, as presented in Algorithm 2. These differentiations are performed to handle both phases of operations under non-re-tasking and prolonged processing time. Therefore,

, present in any

, re-assigns tasks as per

and

to increase the

from the consecutive

. This ensures

converges toward the maximum

and achieves better

.
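A minimal Python sketch of re-tasking follows, under assumptions that the paper does not state: each controller has a hypothetical remaining capacity (in time units for the interval) and a reputation score, and each leftover (dissimilar) task is re-assigned, longest first, to the feasible controller with the most remaining capacity, with reputation as the tie-breaker. Tasks that fit nowhere stay pending for the next interval, and the completion ratio is the share of tasks placed. This greedy rule is a stand-in for illustration, not the learning-based procedure of Algorithm 2.

```python
def retask(tasks, capacity, reputation):
    """Greedy re-tasking of leftover tasks onto controllers.

    tasks: list of (task_id, duration) pairs awaiting re-tasking.
    capacity: dict controller -> remaining time units in this interval (hypothetical).
    reputation: dict controller -> reputation score, used as a tie-breaker.
    Returns (assignment, pending, completion_ratio).
    """
    remaining = dict(capacity)  # copy so the caller's dict is not mutated
    assignment, pending = {}, []
    # Longest tasks first, so large tasks are placed while capacity is plentiful.
    for task_id, duration in sorted(tasks, key=lambda t: -t[1]):
        feasible = [c for c, cap in remaining.items() if cap >= duration]
        if not feasible:
            pending.append(task_id)  # carried over to the next interval
            continue
        best = max(feasible, key=lambda c: (remaining[c], reputation[c]))
        remaining[best] -= duration
        assignment[task_id] = best
    ratio = len(assignment) / len(tasks) if tasks else 1.0
    return assignment, pending, ratio


if __name__ == "__main__":
    tasks = [("T1", 30), ("T2", 50), ("T3", 20), ("T4", 80)]
    capacity = {"C1": 60, "C2": 70}
    reputation = {"C1": 0.9, "C2": 0.7}
    print(retask(tasks, capacity, reputation))
```

In the example, T4 (80 units) exceeds every controller's capacity and stays pending, so three of four tasks are placed and the completion ratio is 0.75.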

3.6. TAE Reduction

In the task acceptance error reduction process, the learning network follows the recommendation of cross-layer controller migration for re-tasking. The cross-layer controller between interconnected layers relies on

,

, and

for sharing observed information. Although device-level productivity is managed based on the reputation of the edge devices, sharing the gathered information across a heterogeneous IIoT platform remains at risk. This risk concerns the boundaries (brims) of the heterogeneous network, which affect end-to-end productivity. These network boundaries are managed based on the controller migration recommendation and

concurrently for reducing TAE and thereby improving productivity. This is the second phase of task completion administered for productivity between the smart industry and the centralized processing unit. In this productivity improvement process, the groups are interconnected using edge nodes, and the tasking/migration and re-tasking information are shared between the cross-layer controllers and the centralized processing unit. The first phase requires an inverse outcome that is trained to reduce the TAE and is formulated as

where the variable

is the range of assigned and re-tasked tasks, from which the maximum completed task,

, is fetched for cross-layer controller migration. Equation (11) is used to define the validity of synchronized completion time for either

or

as classified by the previous learning network output. Now, the task prolonging time

is given as

This task prolonging time is calculated from the learning network training based on task migration between the cross-layer controllers for the

, after which

is replicated for

. Now, the task volume is formulated as

The above tasking/migration and re-tasking information is shared between the IIoT layers based on in the different time intervals. The shared information is valid only for the current edge nodes; successive edge nodes are isolated from the current instance to reduce overlap with the next sessions.

3.7. Case Analysis

This case analysis is performed for and under different and machines. This outcome does not fit the , as the processing tasks are similar and are evenly allocated. Therefore, the analysis is considered as the post-conclusive outcome of the learning process below.

In

Figure 6, the outcomes for

and

are represented by assimilating the data source and proposed concept. The

per interval increases if

increases and

is operative. This requires sequential validation using

and

assessments using the learning process provided. Based on the increasing

, the information (of the controller) sharing increases for cross-layer operations. Hence, in this case, the learning network operates concurrently for

and

reduction as inferred from

. This further recalls the

initialization such that the

in

selects controllers with

. The external edge nodes are responsible for ensuring the controller assignment is swapped between

for increasing tasks. End of Case Analysis.

On the IIoT platform, task acceptance errors are prevented to reduce complexity in production, maintenance, and outcomes, as well as prolonged time delays. In addition, the cross-layer controllers perform an information-sharing analysis, given in Equation (14), to improve productivity:

In Equation (14), the task grouping conditions of Equations (1)–(3) and (11) are consolidated to provide a linear output. Based on the intermediate outputs and the learning network, task migration and information sharing are performed. Hence, if the task prolonging time and the synchronized completion time vary, the above task volume is used. Concurrent tasking and completion are carried out, while only the cross-layer controllers are migrated in the IIoT, together with the reliable reputation of the edge devices. IIoT information is disseminated between the interconnected layers and the centralized processing units to improve the productivity rate. The synchronized completion time therefore allows additional network training and addresses high time delays at both the sender and receiver ends. Edge computing and graph learning are used to reduce TAE and time delays on the IIoT platform.

4. Performance Evaluation and Analysis

This section evaluates the performance of the proposed CLCTS using five metrics: task completion time, completion ratio, TAE, processing time, and re-tasking overhead. These metrics are adopted from existing works [19,20,27,28]. Of these, PIOTS [28], H-GA-PSO [19], and TEG-ST [20] are compared with the proposed scheme. The number of edge nodes varies between 1 and 16, operating over 20 to 300 time slots. These settings are taken from the considered dataset, with a maximum of 256 tasks split across the three layers of machines. Each machine is assigned two controllers that interface with the 16 edge nodes for information sharing. This information relies on the cloud for sharing, control, and remote handling of the assigned tasks.
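The metrics are named but not formally defined in this section. The Python sketch below shows one plausible way to compute them from per-task records. The definitions are assumptions, not the paper's formulas: completion ratio is completed tasks over assigned tasks, TAE is the share of tasks rejected by their assigned controller, re-tasking overhead is the share of tasks migrated, and `relative_reduction` gives the percentage reduction of a lower-is-better metric against a baseline method. `TaskRecord` and its fields are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRecord:
    # Hypothetical per-task log entry; field names are illustrative.
    completed: bool   # task finished within its interval
    accepted: bool    # False if the assigned controller rejected the task (an acceptance error)
    retasked: bool    # True if the task was migrated to another controller
    proc_time: float  # processing time in the dataset's time-slot units


def evaluate(records):
    """Aggregate metrics under the assumed definitions (ratios lie in [0, 1])."""
    n = len(records)
    return {
        "completion_ratio": sum(r.completed for r in records) / n,
        "tae": sum(not r.accepted for r in records) / n,
        "retasking_overhead": sum(r.retasked for r in records) / n,
        "avg_processing_time": mean(r.proc_time for r in records),
    }


def relative_reduction(baseline, proposed):
    """Percentage reduction of a lower-is-better metric relative to a baseline method."""
    return 100.0 * (baseline - proposed) / baseline


if __name__ == "__main__":
    records = [
        TaskRecord(True, True, False, 10.0),
        TaskRecord(True, False, True, 20.0),
        TaskRecord(False, False, False, 30.0),
        TaskRecord(True, True, True, 40.0),
    ]
    print(evaluate(records))
    print(relative_reduction(40.0, 30.0))  # 25.0
```

For a higher-is-better metric such as the completion ratio, the improvement would be computed with the sign reversed, that is, `100.0 * (proposed - baseline) / baseline`.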

The edge devices in the smart industry layers are used to improve productivity and automation through graph learning. The analysis output supports decisions on sequential task grouping and cross-layer control between the interconnected industrial layers across time intervals. The two phases of the proposed scheme ensure uniform task accomplishment and re-tasking inside and outside each group through edge computing. TAE caused by prolonged task timing is addressed through task migration between the controllers. To improve production, maintenance, and outcomes in smart industries, controller nodes with similar and dissimilar tasks are grouped with respect to tasking time, and the network is trained accordingly. The first phase prevents TAE, and the second phase continuously trains the learning network based on task migration between the controllers. The industrial layer information is thus used to classify tasks by similarity of completion and resource utilization, yielding a lower task completion time (Figure 7). The proposed scheme reduces task completion time by 9.38% and 10.68% when varying the number of edge devices and the number of time slots, respectively.

In the proposed cross-layer controller, tasking is based on sharing migration and re-tasking information between the IIoT layers, which yields a high completion ratio (Figure 8). The first and second phases improve automation and productivity in smart industries with fewer TAEs. Controller migration and re-tasking inside and outside each group are performed by training the learning network through EC intelligence and graph-combined learning. Recurrent training minimizes task prolonging time across the groups interconnected through edge nodes, and sharing re-tasking/migration information between the layers within the synchronized completion time leads to high productivity. Controller nodes with similar tasks are grouped to achieve uniform accomplishment across the IIoT layers, and task grouping with cross-layer control improves task allocation, distribution, and similarity verification, raising the completion ratio above that of the other methods. When varying the number of edge nodes and the number of time slots, the completion ratio is improved by 13.19% and 13.27%, respectively.

The learning network is trained to prevent task completion errors in edge-controlled IIoT. Based on the task grouping assignment and cross-layer controlling, is difficult to determine each interconnected layer and centralized processing unit in EC. The appropriate recommendation provided for operating the two phases through graph structures is to reduce TAE. The two phases are concurrently processed to achieve a high productivity rate in the smart industry. From the instance, the number of similar tasks grouped is the same as the monotonous accomplishments. The task grouping between interconnected layers is represented as

and

to inherit the edge computing based on task similarity verification. The proposed CLCTS addresses such TAEs through the mediate layer and final output. The controller re-tasking is performed by maximizing the network training for addressing high-time delay systems through the graph structure. This computation is pursued by identifying the conditions

and

for all

to determine the concurrent tasking and completion from the current instance. Consider the

and

groupings for maximizing the productivity rate with fewer TAEs (Figure 9). TAE is reduced by 17.41% and 12.24% when varying the number of edge devices and the number of time slots, respectively.

The proposed scheme achieves low processing time for EC applications in next-generation IIoT. It reduces task acceptance errors in task grouping, which leads to a high completion ratio (Figure 10). In this article, graph learning is used to define concurrent tasking and completion, to identify dissimilar tasks in edge devices, and thereby to minimize task prolonging time. Controller migration is performed on task groups interconnected through graph structures to reduce high time delays. Maximum resource utilization and similarity of completion are achieved for controller re-tasking inside and outside the group. TAE is identified while training the learning network with edge computing and graph learning, verifying similar tasks for better productivity with lower running time using the condition

and

. This condition does not apply to the first phase, because that phase relies on all similar tasks being grouped within the given time interval. The reputation of all edge devices, together with concurrent task allocation and utilization, is therefore observed through IIoT and remains constant. Based on this analysis, the inverse outcome of the first phase is used to reduce TAE, so the running time is lower than in the compared schemes. The task processing time is reduced by 7.36% and 7.39% for the varying number of edge devices and time slots, respectively.
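The section does not state how these percentage reductions are computed. Assuming they are relative reductions of a metric $M$ (TAE, processing time, or re-tasking overhead) with respect to a compared scheme, evaluated at a given number of edge devices or time slots, they would take the following form; the symbols $M_{\text{base}}$ and $M_{\text{CLCTS}}$ are introduced here for illustration only.

```latex
\Delta_{M} = \frac{M_{\text{base}} - M_{\text{CLCTS}}}{M_{\text{base}}} \times 100\%
```

For example, with hypothetical values $M_{\text{base}} = 0.25$ and $M_{\text{CLCTS}} = 0.20$, the relative reduction is $(0.25 - 0.20)/0.25 \times 100\% = 20\%$.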

Production, maintenance, and outcome are improved through the proposed scheme and learning network under high task volumes (Figure 11). In continuous task grouping,

in its current and previous task groupings is compared to determine how edge devices are acquired. If such groups are interconnected through graph learning, as observed using the condition

(later), then the task grouping from

is halted to prevent TAE and improve the automation and productivity rate of smart industries. The learning network is trained to prevent task prolonging time and TAE using the edge nodes and the tasking, migration, and re-tasking information from the edge devices. It is also used to identify the re-tasking overhead in cross-layer controllers. These heterogeneous edge networks are employed to achieve high productivity and automation. They are administered based on task grouping, while controller migration is analyzed concurrently to reduce TAE and thereby increase productivity. In this article, the graph structure is used to reduce the re-tasking overhead at any instance to maximize productivity. Consequently, controller re-tasking incurs less overhead than in the compared schemes. The CLCTS reduces the overhead by 8.67% and 12.84% for the varying number of edge nodes and time slots, respectively.
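One simple way to make the notion of re-tasking overhead concrete is to compare controller assignments across consecutive time slots. The sketch below assumes, as a hypothetical definition not given in the section, that overhead is the number of carried-over tasks whose controller changes between the previous and the current slot; newly arriving tasks are first assignments and are not counted. The function name and the dictionary format are illustrative.

```python
def retasking_overhead(prev_assign, curr_assign):
    """Count tasks re-tasked to a different controller between two slots.

    prev_assign, curr_assign: hypothetical dicts mapping task id -> controller id
    for the previous and current time slot. A task counts as re-tasked only if it
    was assigned in the previous slot and its controller differs now; tasks that
    appear only in the current slot are new assignments and are ignored.
    """
    return sum(
        1
        for task, ctrl in curr_assign.items()
        if task in prev_assign and prev_assign[task] != ctrl
    )


prev_slot = {"t1": "c1", "t2": "c1", "t3": "c2"}
curr_slot = {"t1": "c1", "t2": "c2", "t3": "c1", "t4": "c2"}
print(retasking_overhead(prev_slot, curr_slot))  # 2 (t2 and t3 moved; t4 is new)
```

Under this assumed definition, halting regrouping for interconnected groups (as described above) directly lowers the count, because tasks that keep their group also keep their controller.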

5. Conclusions

This study presents the development and implementation of a Cross-Layer Controller Tasking Scheme (CLCTS) aimed at enhancing task completion in IIoT environments supported by edge computing. The scheme addresses the challenges of prolonged processing time and persistent task acceptance errors arising from asynchronous operations. Task assignment, processing, and re-tasking are orchestrated by controllers embedded within each IIoT layer, functioning both independently and in coordination via edge nodes. These nodes facilitate the exchange of task-related and completion-specific data among layer controllers, thereby increasing the likelihood of controller migration. This migration is guided by a deep graph learning paradigm that underpins the scheme's dual-phase operational model. The learning framework is trained to promote the grouping of similar tasks in order to minimize processing delays and enable timely migration in cases of asynchronous tasking. Inverse training is employed to reduce the incidence of task offloading failures and acceptance errors. Consequently, consecutive time slots are defined with dynamic task groupings and pre-assigned controller readiness, informed by prior decisions derived from graph-based learning. The approach is iterated until optimal task completion is achieved, thereby enhancing overall IIoT productivity. This work presents an architectural design that integrates graph-based controller migration, temporal task grouping, and learning-based scheduling. The implementation is validated through performance comparisons with existing techniques, which show reductions of up to 17.41% in task acceptance error, 7.39% in task processing time, and 12.84% in re-tasking overhead.
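The per-slot orchestration summarized above (grouping tasks, then migrating a group when its layer controller cannot serve it) can be illustrated with a greedy sketch. This is not the deep-graph-learning policy of CLCTS: each group's "home" controller, the per-controller capacities, and the rule "migrate to the controller with the most spare capacity" are hypothetical simplifications introduced for illustration.

```python
def assign_groups(groups, home, capacity):
    """Greedily assign task groups to controllers for one time slot.

    groups:   list of task groups (lists of task ids).
    home:     hypothetical dict group index -> preferred same-layer controller id.
    capacity: hypothetical dict controller id -> number of tasks it can still accept.
    Returns (assignment, migrations): assignment maps group index -> controller id;
    migrations lists group indices moved away from their home controller.
    Groups that fit nowhere are left unassigned (deferred to the next slot).
    """
    remaining = dict(capacity)  # copy so the caller's capacities are not mutated
    assignment, migrations = {}, []
    for i, group in enumerate(groups):
        need = len(group)
        if need == 0:
            continue  # nothing to schedule for an empty group
        target = home[i]
        if remaining.get(target, 0) < need:
            # Home controller cannot take the whole group: migrate it to the
            # controller with the most spare capacity that can (assumed rule)
            candidates = [c for c, cap in remaining.items() if cap >= need]
            if not candidates:
                continue  # defer this group to the next time slot
            target = max(candidates, key=lambda c: remaining[c])
            migrations.append(i)
        remaining[target] -= need
        assignment[i] = target
    return assignment, migrations


groups = [["t1", "t2"], ["t3"], ["t4", "t5", "t6"], ["t7", "t8"]]
home = {0: "c1", 1: "c1", 2: "c1", 3: "c1"}
print(assign_groups(groups, home, {"c1": 3, "c2": 4}))
# ({0: 'c1', 1: 'c1', 2: 'c2'}, [2])  (group 3 is deferred)
```

Deferring groups that fit nowhere loosely mirrors the iteration over consecutive time slots described above; a learned policy would replace the max-spare-capacity rule.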

Although the intelligence of the edge network reliably assists IIoT in cross-layer control, the shuffled edge connectivity requires a pause time before device allocation. This pause time was found to increase with the number of edge devices. A large-scale IIoT has a high layer count and therefore requires a high density of edge nodes. To reduce the pause time in such scenarios, a completion-time-primitive allocation with precise training is planned, which requires extending the network operations to accommodate time-specific metrics for better productivity decisions.