Accessible IoT Dashboard Design with AI-Enhanced Descriptions for Visually Impaired Users

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Proposed Solution and Contributions

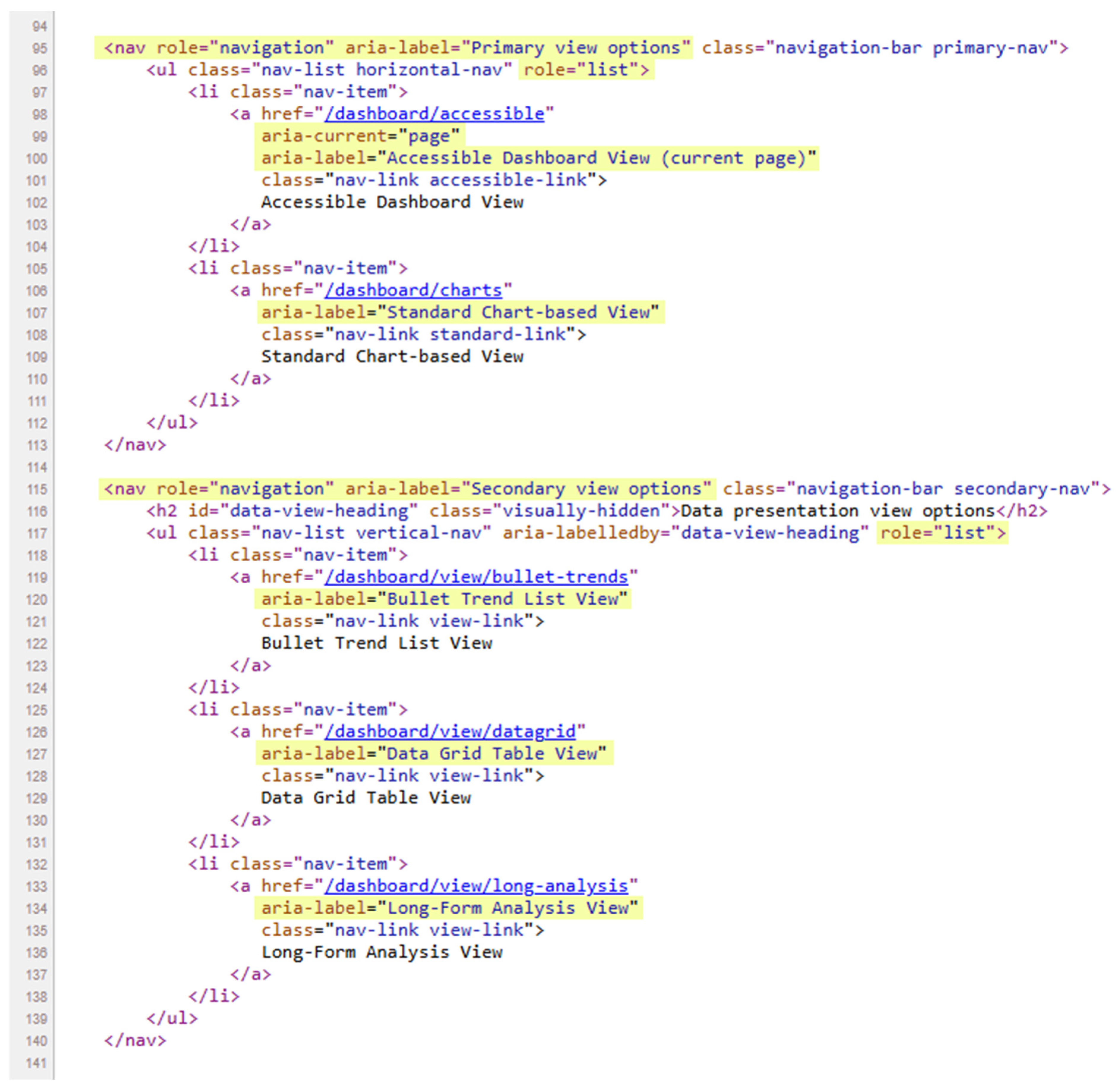

- Accessibility-First Semantic Design: AccessiDashboard uses semantic HTML5 markup and WAI-ARIA (Web Accessibility Initiative—Accessible Rich Internet Applications) [13] attributes to ensure that screen readers can interpret the page structure and content intuitively. The front-end interface is organized with landmarks (e.g., <header>, <main>, <section> regions), headings, and labels for real-time sensor elements. This structure provides a meaningful reading order and enables efficient keyboard navigation for users who cannot rely on visual cues.

- AI-Generated Long Descriptions: Moving beyond simple alt text, the system leverages an LLM to produce extended textual descriptions of charts and complex graphics. These long descriptions detail trends, anomalies, and key numerical insights that would otherwise be conveyed only visually. The AI-generated narratives serve as an alternative interface modality, catering to users who cannot interpret the visuals. Importantly, these descriptions are context-aware and can include relevant domain knowledge (e.g., noting that a temperature reading is within normal range for a given context).

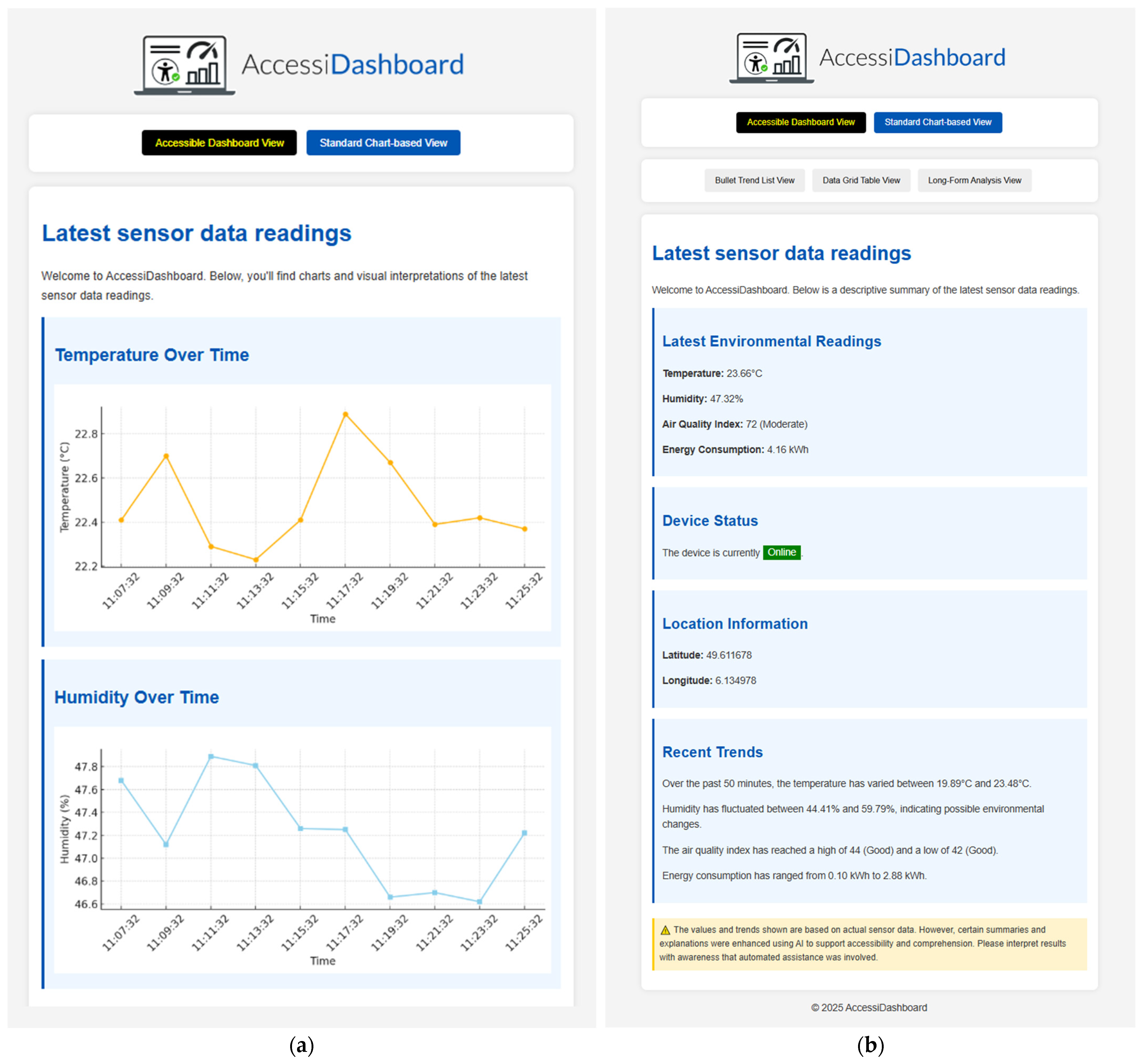

- Multi-Modal Data Representation: AccessiDashboard provides multiple synchronized representations of the sensor data. In addition to the conventional chart-based view, it offers a text-first interface comprising structured data tables, bullet-point summaries of sensor status, and AI-generated analytical paragraphs. Users can switch between these modes to suit their preferences or assistive technology needs. This multi-modal approach benefits not only blind users but also those who may prefer textual data (for example, users of screen magnifiers or braille displays, or situations where visual attention is limited).

- Scalable Architecture with Ethical AI Integration: The platform’s back-end is built with the Laravel PHP framework [14] following an MVC (Model-View-Controller) [15] design, which allows clean separation of data, logic, and presentation. The system integrates with external IoT data sources through APIs (Application Programming Interfaces) [16] for device data ingestion, and uses a database to store sensor readings and corresponding AI-generated descriptions. A privacy-by-design [17] paradigm is adopted for the AI components: user data is handled in compliance with GDPR guidelines [18], with controls to prevent sensitive information from being sent to external AI services and logs to audit the AI’s outputs. The design also considers scalability (able to handle many sensors and frequent updates) and security (secure transmission of data and user authentication).
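The landmark and labeling approach from the first contribution can be illustrated with a minimal sketch. It assumes a hypothetical helper, `render_sensor_region`, that emits one labeled `<section>` per sensor; the function name, the `id` scheme, and the use of `aria-live="polite"` for the reading are illustrative choices, not the paper's actual templates.

```python
from html import escape


def render_sensor_region(sensor_id, label, value, unit):
    """Return an HTML fragment for one sensor (hypothetical helper).

    The <section> is tied to its heading with aria-labelledby so that screen
    readers can announce it as a named region, and the reading sits in a
    polite live region so that updates are announced without stealing focus.
    """
    heading_id = escape(f"sensor-{sensor_id}-title")
    reading_text = escape(f"{value} {unit}")
    return (
        f'<section aria-labelledby="{heading_id}">\n'
        f'  <h2 id="{heading_id}">{escape(label)}</h2>\n'
        f'  <p aria-live="polite" aria-atomic="true">Current value: {reading_text}</p>\n'
        "</section>"
    )


fragment = render_sensor_region("t1", "Living room temperature", 22.4, "°C")
print(fragment)
```

Escaping every interpolated value keeps sensor labels that contain markup characters from breaking the page structure that assistive technologies rely on.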

2. Related Work

2.1. Accessibility in IoT Dashboards

2.2. AI in Accessibility and Multi-Modal Interfaces

2.3. Identified Research Gap

3. System Design and Architecture

3.1. System Overview

3.2. Core Components

3.2.1. Semantic HTML5 and WAI-ARIA Front-End

3.2.2. Laravel Back-End (MVC Architecture)

- Sensor: represents an IoT sensor or data source. A Sensor record holds metadata such as a unique ID, a human-readable name or label, and the type of measurement (e.g., temperature, humidity, or air quality index).

- SensorReading: represents a single data point from a sensor. Each reading has a timestamp, a value (or multiple values if the sensor outputs a complex object), and a foreign key linking it to a Sensor. This model might also include derived fields, such as whether the reading triggered an alert or a categorization of the value (e.g., “moderate” or “high”).

- LongDescription: represents an AI-generated descriptive text for a visual element or set of readings. It stores the text of the description, the time it was generated, the context (which sensor it corresponds to), and metadata such as which model generated it and, if an administrator edited it, who made the edit.
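The three models can be sketched as plain data classes. This is a language-neutral illustration in Python rather than the Laravel models themselves; all field names (`sensor_id`, `measurement_type`, `edited_by`, and so on) are assumptions chosen to mirror the descriptions above.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Sensor:
    sensor_id: str          # unique ID
    label: str              # human-readable name
    measurement_type: str   # e.g. "temperature", "humidity"


@dataclass
class SensorReading:
    sensor_id: str                   # foreign key to Sensor.sensor_id
    timestamp: datetime
    value: float
    category: Optional[str] = None   # derived field, e.g. "moderate" or "high"
    triggered_alert: bool = False    # derived field


@dataclass
class LongDescription:
    sensor_id: str                   # context: which sensor the text describes
    text: str
    generated_at: datetime
    model_name: str                  # which model generated the text
    edited_by: Optional[str] = None  # administrator who edited it, if any


sensor = Sensor("s-1", "Living room temperature", "temperature")
reading = SensorReading(
    sensor_id=sensor.sensor_id,
    timestamp=datetime(2025, 1, 1, 14, 0, tzinfo=timezone.utc),
    value=22.5,
    category="moderate",
)
description = LongDescription(
    sensor_id=sensor.sensor_id,
    text="Temperature is stable and within the normal range.",
    generated_at=datetime(2025, 1, 1, 14, 1, tzinfo=timezone.utc),
    model_name="example-model",  # placeholder, not a real model identifier
)
```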

3.2.3. AI Integration for Generating Long Descriptions

- Data and Context Gathering: When a new sensor visualization is created or updated, the system compiles the relevant data points. This includes recent readings (e.g., the last N data points or last T minutes of data), summary statistics (min, max, average, current value), and contextual metadata (sensor name, units, any threshold definitions such as what constitutes “high” or “low”). If a short caption or alt text exists, that might be included to guide the AI.

- Prompt Construction: The system constructs a prompt to send to the LLM. The prompt is written in natural language and instructs the model to act as an explainer. For example: “The following are recent readings from a sensor measuring temperature (in °C). Provide a detailed yet concise description of the trend and any significant changes. Data: 14:00 - 22.5 °C, 14:10 - 23.0 °C, 14:20 - 24.5 °C, 14:30 - 26.0 °C, 14:40 - 25.5 °C. The sensor is indoors; normal range is 20–25 °C.” This prompt encourages the AI to note the trend (a rising and then slightly falling temperature) and to relate the readings to the normal range.

- AI Generation: The LLM processes the prompt and returns a generated description. The output is expected to be a structured text, typically a few sentences or a short paragraph. We encourage the model to mention the overall trend (e.g., increasing, decreasing, stable), highlight any anomalies or out-of-range values, and, where the prompt supplies the necessary context, explain what those values mean (e.g., “this is within normal range” or “this is unusually high”).

- Post-processing and Storage: The returned text undergoes basic validation (e.g., checking that it is not empty and does not contain obviously wrong units). It is then stored in the LongDescription model associated with that sensor. If the AI output contains phrasing that might be misleading or needs adjustment (for instance, an uncommon term), an administrator interface allows the description to be edited before it is shown to end-users. In the current demonstrator, we log the AI’s raw output for auditing and, if no human edits are made, display it directly to the user.
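The data-gathering, prompt-construction, and post-processing steps above can be sketched as follows. The function names (`summarize`, `build_prompt`, `validate_description`) and the unit check are hypothetical; the call to the LLM itself is deliberately omitted because the text does not specify the client API.

```python
from statistics import mean


def summarize(values):
    """Summary statistics named in the text: min, max, average, current value."""
    return {
        "min": min(values),
        "max": max(values),
        "avg": round(mean(values), 2),
        "current": values[-1],
    }


def build_prompt(measurement, unit, readings, normal_range):
    """Assemble a natural-language prompt from (time label, value) pairs."""
    stats = summarize([value for _, value in readings])
    data = ", ".join(f"{label} - {value} {unit}" for label, value in readings)
    low, high = normal_range
    return (
        f"The following are recent readings from a sensor measuring "
        f"{measurement} (in {unit}). Provide a detailed yet concise description "
        f"of the trend and any significant changes. Data: {data}. "
        f"Summary: min {stats['min']}, max {stats['max']}, "
        f"average {stats['avg']}, current {stats['current']}. "
        f"Normal range is {low}-{high} {unit}."
    )


def validate_description(text, disallowed_units=("°F",)):
    """Basic checks only: reject empty text or text using an unexpected unit.

    The disallowed-unit list is an assumed, simplistic stand-in for the
    'obviously wrong units' check mentioned in the text.
    """
    stripped = text.strip()
    if not stripped:
        return False
    return not any(unit in stripped for unit in disallowed_units)


readings = [("14:00", 22.5), ("14:10", 23.0), ("14:20", 24.5),
            ("14:30", 26.0), ("14:40", 25.5)]
prompt = build_prompt("temperature", "°C", readings, (20, 25))
print(prompt)
```

A description that passes `validate_description` would then be stored alongside the sensor reference and the raw model output, so that later administrator edits remain auditable.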

3.2.4. Multi-Modal Data Representation

- Visual Chart Mode: The traditional dashboard view with charts, graphs, and minimal text. This mode is similar to conventional dashboards and is primarily intended for sighted users or those who prefer graphical representation. Each chart includes alt text and a caption, but the interface assumes the user can perceive the visual elements. This is the only mode that does not rely on AI generation.

- Bullet Trend List Mode: In this AI-generated textual mode, the latest sensor readings or summarized trends are presented as bullet points. For example, instead of a graph showing the last 10 readings, the dashboard might display a list of items such as: “Temperature: 22.4 °C—moderate level detected; Humidity: 48.1%—comfortable range; Air Quality Index: 94—moderate air quality.” Each bullet provides a concise, plain-language summary of a parameter. This format is accessible for screen reader users and presents clearly separated data points in a linear sequence, enabling efficient comprehension.

- Data Grid Table Mode: Also AI-generated, this mode presents data in a tabular format similar to a spreadsheet. It may list the last N readings for each sensor in a table with columns like Timestamp, Temperature, Humidity, etc. This structured layout enables users to navigate cell by cell and compare values across time or across sensors. It leverages semantic HTML table markup, ensuring relationships between headers and data remain accessible and clear to assistive technologies.

- Detailed Analysis Mode: This mode provides a narrative, paragraph-based summary where the AI generates long-form textual descriptions of data trends and contextual insights. Instead of displaying raw values, the user receives a coherent and context-rich interpretation of the data—similar to an automated report or commentary powered by a large language model.
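Two of the text-first modes can be illustrated with a small rendering sketch. The helpers `render_bullets` and `render_table` are hypothetical, and the markup is one plausible way to keep header and cell relationships explicit (`<caption>`, `scope="col"`, `scope="row"`); the paper's actual templates may differ.

```python
from html import escape


def render_bullets(latest):
    """Bullet Trend List: one <li> per (parameter, value, note) triple."""
    items = "\n".join(
        f"  <li>{escape(name)}: {escape(value)}, {escape(note)}</li>"
        for name, value, note in latest
    )
    return f"<ul>\n{items}\n</ul>"


def render_table(caption, columns, rows):
    """Data Grid Table: semantic table whose first column is a row header."""
    head = "".join(f'<th scope="col">{escape(col)}</th>' for col in columns)
    body_rows = []
    for first, *rest in rows:
        cells = f'<th scope="row">{escape(str(first))}</th>'
        cells += "".join(f"<td>{escape(str(cell))}</td>" for cell in rest)
        body_rows.append(f"    <tr>{cells}</tr>")
    body = "\n".join(body_rows)
    return (
        "<table>\n"
        f"  <caption>{escape(caption)}</caption>\n"
        f"  <thead>\n    <tr>{head}</tr>\n  </thead>\n"
        f"  <tbody>\n{body}\n  </tbody>\n"
        "</table>"
    )


bullets = render_bullets([
    ("Temperature", "22.4 °C", "moderate level detected"),
    ("Humidity", "48.1%", "comfortable range"),
])
table = render_table(
    "Last readings",
    ["Timestamp", "Temperature (°C)", "Humidity (%)"],
    [("14:00", 22.5, 48.1), ("14:10", 23.0, 47.9)],
)
print(bullets)
print(table)
```

Because both views are produced from the same readings, switching modes changes only the presentation, which is what keeps the representations synchronized.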

3.2.5. Security and Privacy Considerations

- Lawfulness, Fairness, and Consent: Before using sensor data, organizations agree to a data-processing policy based on legitimate interest. If personal metadata is included (e.g., names in alerts), explicit opt-in is required. Users are clearly informed about what data is shared and why, supporting transparency.

- Purpose Limitation and Data Minimization: Only essential fields, like timestamp, sensor ID, and readings, are used for AI processing. Any labels that could reveal identity or location are removed or generalized before being sent.

- Anonymization and Pseudonymization: Identifiers are securely hashed, data is encrypted at rest, and AI-generated outputs are linked to anonymized keys for added protection.

- Storage Limitation and Security: Sensor data is stored for up to 30 days, and AI outputs for up to 90 days before anonymization. All communications are encrypted, and access is logged to ensure accountability.

- User Rights and Data Control: A “My Data” section allows users to view, export, or delete their data at any time, with deletions applied to both original records and related AI outputs.

- Model Flexibility and Data Sovereignty: The system can switch between cloud-based and self-hosted AI models, allowing organizations to keep data processing within their jurisdiction.
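The minimization, pseudonymization, and retention rules above can be sketched in a few lines. The field whitelist follows the fields named in the text; the use of keyed HMAC-SHA256 for hashing identifiers, the function names, and the key handling are assumptions made for illustration, since the text only states that identifiers are securely hashed.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone

# Fields the text lists as essential for AI processing.
ALLOWED_FIELDS = ("timestamp", "sensor_id", "value")

# Retention periods stated in the text.
SENSOR_DATA_RETENTION_DAYS = 30
AI_OUTPUT_RETENTION_DAYS = 90


def pseudonymize(identifier, secret_key):
    """Keyed hash of an identifier (HMAC-SHA256 is an assumed choice)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record, secret_key):
    """Keep only whitelisted fields and replace the sensor ID with its hash."""
    slim = {key: record[key] for key in ALLOWED_FIELDS if key in record}
    if "sensor_id" in slim:
        slim["sensor_id"] = pseudonymize(str(slim["sensor_id"]), secret_key)
    return slim


def is_expired(stored_at, now, retention_days):
    """True once a record is older than its retention period."""
    return now - stored_at > timedelta(days=retention_days)


record = {
    "timestamp": "2025-01-01T14:00:00Z",
    "sensor_id": "kitchen-thermo",
    "value": 22.5,
    "label": "Anna's kitchen",  # identifying label, dropped before sending
}
payload = minimize(record, b"demo-key-not-for-production")
print(payload)
```

In a deployment the key would come from a secrets store rather than source code, and expired records would be anonymized or deleted by a scheduled job.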

4. Implementation Details

- Temperature: 22.41 °C—Moderate level detected.

- Humidity: 52.68%—Comfortable environment.

- Air Quality Index: 90—Moderate air quality.

- Energy Consumption: 1.18 kWh—Normal usage.

- Device Status: Idle.

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Alenizi, A.S.; Al-Karawi, K.A. Internet of Things (IoT) Adoption: Challenges and Barriers. In Proceedings of the Seventh International Congress on Information and Communication Technology; Yang, X.S., Sherratt, S., Dey, N., Joshi, A., Eds.; Springer: Singapore, 2023; Lecture Notes in Networks and Systems, 464; pp. 259–273.

- Zong, J.; Lee, C.; Lundgard, A.; Jang, J.; Hajas, D.; Satyanarayan, A. Rich Screen Reader Experiences for Accessible Data Visualization. Comput. Graph. Forum 2022, 41, 15–27.

- World Health Organization. Blindness and Vision Impairment. Available online: https://www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 8 June 2025).

- WebAIM. Screen Reader User Survey #10 Results. Available online: https://webaim.org/projects/screenreadersurvey10/ (accessed on 8 June 2025).

- Fan, D.; Siu, A.F.; Rao, H.; Kim, G.S.-H.; Vazquez, X.; Greco, L.; O’Modhrain, S.; Follmer, S. The accessibility of data visualizations on the web for screen reader users: Practices and experiences during COVID-19. ACM Trans. Access. Comput. 2023, 16, 4.

- U.S. General Services Administration. Section 508 of the Rehabilitation Act (29 U.S.C. §794d). Available online: https://www.section508.gov/ (accessed on 2 April 2025).

- EN 301 549 V3.2.1 (2021-03); European Telecommunications Standards Institute (ETSI). Accessibility Requirements for ICT Products and Services. ETSI Standard. ETSI: Sophia-Antipolis, France, 2021.

- Patil, R.; Gudivada, V. A Review of Current Trends, Techniques, and Challenges in Large Language Models (LLMs). Appl. Sci. 2024, 14, 2074.

- Othman, A.; Dhouib, A.; Al Jabor, A.N. Fostering Websites Accessibility: A Case Study on the Use of the Large Language Models ChatGPT for Automatic Remediation. In Proceedings of the 16th International Conference on Pervasive Technologies Related to Assistive Environments (PETRA ’23), Corfu, Greece, 5–7 July 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 707–713.

- Sin, J.; Franz, R.L.; Munteanu, C.; Barbosa Neves, B. Digital Design Marginalization: New Perspectives on Designing Inclusive Interfaces. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI ’21), Yokohama, Japan, 8–13 May 2021; ACM: New York, NY, USA, 2021. Article 380. pp. 1–11.

- Cheng, D. Research on HTML5 Responsive Web Front-End Development Based on Bootstrap Framework. In Proceedings of the 2024 7th International Conference on Computer Information Science and Application Technology (CISAT), Hangzhou, China, 15–17 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 711–718.

- World Wide Web Consortium (W3C). WAI-ARIA Authoring Practices 1.2 – Structural Roles. Available online: https://www.w3.org/WAI/ARIA/apg/practices/structural-roles/ (accessed on 5 April 2025).

- World Wide Web Consortium (W3C). Accessible Rich Internet Applications (WAI-ARIA) 1.2. W3C Recommendation, 21 December 2021. Available online: https://www.w3.org/TR/wai-aria-1.2/ (accessed on 10 April 2025).

- Laravel. Laravel PHP Framework–Home Page. Available online: https://laravel.com/ (accessed on 12 March 2025).

- Guamán, D.; Delgado, S.; Pérez, J. Classifying Model-View-Controller Software Applications Using Self-Organizing Maps. IEEE Access 2021, 9, 45201–45229.

- Raatikainen, M.; Kettunen, E.; Salonen, A.; Komssi, M.; Mikkonen, T.; Lehtonen, T. State of the Practice in Application Programming Interfaces (APIs): A Case Study. In Software Architecture (ECSA 2021); Biffl, S., Navarro, E., Löwe, W., Sirjani, M., Mirandola, R., Weyns, D., Eds.; Springer: Cham, Switzerland, 2021; Lecture Notes in Computer Science, 12857; pp. 225–241.

- Andrade, V.C.; Gomes, R.D.; Reinehr, S.; de Almendra Freitas, C.O.; Malucelli, A. Privacy by Design and Software Engineering: A Systematic Literature Review. In Proceedings of the XXI Brazilian Symposium on Software Quality (SBQS ’22), Maceió, Brazil, 22–24 August 2022; ACM: New York, NY, USA, 2023. Article 18. pp. 1–10.

- European Union. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 (General Data Protection Regulation). Off. J. Eur. Union 2016, L119, 1–88.

- AccessibleWeb. WCAG Conformance Rating: Level AA. Available online: https://accessibleweb.com/rating/aa/ (accessed on 23 February 2025).

- Svalina, A.; Pibernik, J.; Dolić, J.; Mandić, L. Data Visualizations for the Internet of Things Operational Dashboard. In Proceedings of the 2021 International Symposium ELMAR, Zadar, Croatia, 13–15 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 91–96.

- World Wide Web Consortium (W3C). Web Content Accessibility Guidelines (WCAG) 2.1. W3C Recommendation, 5 June 2018. Available online: https://www.w3.org/TR/WCAG21/ (accessed on 5 December 2024).

- Srinivasan, A.; Harshbarger, T.; Hilliker, D.; Mankoff, J. Azimuth: Designing Accessible Dashboards for Screen Reader Users. In Proceedings of the 25th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’23), New York, NY, USA, 22–25 October 2023; ACM: New York, NY, USA, 2023. Article 49. pp. 1–16.

- Schicchi, D.; Taibi, D. AI-Driven Inclusion: Exploring Automatic Text Simplification and Complexity Evaluation for Enhanced Educational Accessibility. In Higher Education Learning Methodologies and Technologies Online (HELMeTO 2023); Communications in Computer and Information Science, 2076; Springer: Cham, Switzerland, 2024; pp. 359–371.

- Murtaza, M.; Ahmed, Y.; Shamsi, J.A.; Sherwani, F.; Usman, M. AI-Based Personalized E-Learning Systems: Issues, Challenges, and Solutions. IEEE Access 2022, 10, 81323–81342.

- Pradhan, A.; Mehta, K.; Findlater, L. “Accessibility Came by Accident”: Use of Voice-Controlled Intelligent Personal Assistants by People with Disabilities. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18), Montreal, QC, Canada, 21–26 April 2018; ACM: New York, NY, USA, 2018. Paper 459. pp. 1–13.

- Jain, D.; Findlater, L.; Gilkeson, J.; Holland, B.; Duraiswami, R.; Zotkin, D.; Vogler, C.; Froehlich, J.E. Head-Mounted Display Visualizations to Support Sound Awareness for the Deaf and Hard of Hearing. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI ’15), Seoul, Republic of Korea, 18–23 April 2015; ACM: New York, NY, USA, 2015; pp. 241–250.

- Setlur, V.; Battersby, S.E.; Tory, M.; Gossweiler, R.; Chang, A.X. Eviza: A Natural Language Interface for Visual Analysis. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST ’16), Tokyo, Japan, 16–19 October 2016; ACM: New York, NY, USA, 2016; pp. 365–377.

- Thobhani, A.; Zou, B.; Kui, X.; Abdussalam, A.; Asim, M.; Shah, S.; ELAffendi, M. A Survey on Enhancing Image Captioning with Advanced Strategies and Techniques. CMES-Comput. Model. Eng. Sci. 2025, 142, 2247–2280.

- European Parliament and Council. Directive (EU) 2019/882 of 17 April 2019 on the Accessibility Requirements for Products and Services (European Accessibility Act). Off. J. Eur. Union 2019, L151, 70–115.

- JSON.org. Introducing JavaScript Object Notation (JSON). Available online: https://www.json.org/json-en.html (accessed on 17 January 2025).

- Ehsan, A.; Abuhaliqa, M.A.M.E.; Catal, C.; Mishra, D. RESTful API Testing Methodologies: Rationale, Challenges, and Solution Directions. Appl. Sci. 2022, 12, 4369.

- Django Software Foundation. Django Web Framework—Home Page. Available online: https://www.djangoproject.com/ (accessed on 15 November 2024).

- OpenAI. OpenAI Platform Documentation. Available online: https://platform.openai.com/ (accessed on 22 March 2025).

- NV Access. NVDA Screen Reader—Download Page. Available online: https://www.nvaccess.org/download/ (accessed on 1 May 2025).

- Apple Inc. VoiceOver for macOS—User Guide. Available online: https://support.apple.com/en-gb/guide/voiceover/welcome/mac (accessed on 27 April 2025).

- Redis. Redis In-Memory Data Store—Home Page. Available online: https://redis.io/ (accessed on 14 December 2024).

- Mozilla Developer Network (MDN). Document Object Model (DOM) API Reference. Available online: https://developer.mozilla.org/en-US/docs/Web/API/Document_Object_Model (accessed on 21 March 2025).

- Laravel. Blade Templating Engine—Documentation (v12.x). Available online: https://laravel.com/docs/12.x/blade (accessed on 13 April 2025).

- Hu, Q.; Asghar, M.R.; Brownlee, N. A Large-Scale Analysis of HTTPS Deployments: Challenges, Solutions, and Recommendations. J. Comput. Secur. 2020, 29, 25–50.

- Wu, C.H.; Tang, K.D.; Peng, K.L.; Huang, Y.M.; Liu, C.H. The Effects of Matching/Mismatching Cognitive Styles in E-Learning. Educ. Psychol. 2024, 44, 1048–1072.

- Kumar, M.; Kumar, A.; Verma, S.; Bhattacharya, P.; Ghimire, D.; Kim, S.-h.; Hosen, A.S.M.S. Healthcare Internet of Things (H-IoT): Current Trends, Future Prospects, Applications, Challenges, and Security Issues. Electronics 2023, 12, 2050.

- Choi, W.; Kim, J.; Lee, S.; Park, E. Smart Home and Internet of Things: A Bibliometric Study. J. Clean. Prod. 2021, 301, 126908.

- Khan, J.A. Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC). In Improving Security, Privacy, and Trust in Cloud Computing; Goel, P.K., Pandey, H.M., Singhal, A., Agarwal, S., Eds.; IGI Global: Hershey, PA, USA, 2024; pp. 113–126.

- Laravel. Cross-Site Request Forgery (CSRF) Protection—Documentation (v12.x). Available online: https://laravel.com/docs/12.x/csrf (accessed on 24 March 2025).

- Kumar, D.D.; Mukharzee, J.D.; Reddy, C.V.D.; Rajagopal, S.M. Safe and Secure Communication Using SSL/TLS. In Proceedings of the 2024 International Conference on Emerging Smart Computing and Informatics (ESCI), Pune, India, 5–7 January 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Kolevski, D.; Michael, K.; Abbas, R.; Freeman, M. Cloud Data Breach Disclosures: The Consumer and Their Personally Identifiable Information (PII)? In Proceedings of the IEEE Conference on Norbert Wiener in the 21st Century (21CW), Chennai, India, 22–25 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–9.

- Horn, S.A.; Dasgupta, P.K. The Air Quality Index (AQI) in Historical and Analytical Perspective: A Tutorial Review. Talanta 2024, 267, 125260.

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. arXiv 2023, arXiv:2311.05232.

- Verhoeff, T. Staying DRY with OO and FP. Olymp. Inform. 2024, 18, 113–128.

- Bootstrap. Bootstrap 5 Front-End Toolkit—Home Page. Available online: https://getbootstrap.com/ (accessed on 18 January 2025).

- WebAIM. WAVE Web Accessibility Evaluation Tool. Available online: https://wave.webaim.org/ (accessed on 1 May 2025).

- Grafana Labs. Grafana: The Open and Composable Observability Platform. Available online: https://grafana.com/ (accessed on 9 June 2025).

- Uptrace. Top 11 Grafana Alternatives [comparison 2025]. Available online: https://uptrace.dev/comparisons/grafana-alternatives (accessed on 10 June 2025).

- Grafana Labs. Accessibility. Available online: https://grafana.com/accessibility/ (accessed on 9 June 2025).

- Google LLC. Lighthouse–Automated Website Performance and Accessibility Testing. Available online: https://developer.chrome.com/docs/lighthouse (accessed on 6 February 2025).

- Skulmowski, A.; Xu, K.M. Understanding Cognitive Load in Digital and Online Learning: A New Perspective on Extraneous Cognitive Load. Educ. Psychol. Rev. 2022, 34, 171–196.

| Feature | AccessiDashboard (AI-Enhanced IoT Dashboard) | Azimuth (Accessible Dashboard Prototype) | Grafana (General Dashboard Platform) |
|---|---|---|---|
| Real-Time Accessible IoT Dashboards | Yes. Designed for real-time IoT sensor monitoring with accessibility in mind. It delivers live updates to screen reader users by generating dynamic textual summaries as data streams in, ensuring blind users get insights in real time. The system uses ARIA live regions so that changes (e.g., new sensor readings) are announced. | Yes (dynamic updates supported). Azimuth generates dashboards that can be actively explored and updated. This allows users to monitor changes or apply filters in a dashboard and be informed of updates without visual cues. However, Azimuth was demonstrated with user-driven data updates (like filters) rather than autonomous IoT sensor streams. | Partial. Grafana excels at real-time visualization of metrics (widely used for live IoT data dashboards [53]), but accessible real-time feedback is limited [54]. Screen readers do not automatically announce new data points on a chart. Grafana has plans to improve this, but as of now, any real-time accessible output (like a textual update) would require manual configuration. |
| AI-Enhanced Descriptions for Visually Impaired Users | Yes: LLM-generated descriptions. A core feature of AccessiDashboard is its use of AI to produce rich textual descriptions of data in real time. Each incoming sensor feed is connected to a Large Language Model, which generates context-aware narratives (trends, anomalies, comparisons) as alternative text for charts. These AI-driven descriptions go well beyond static alt text and are updated as data changes. | No (auto-generated text, but not AI/LLM). Azimuth provides automatically generated textual descriptions of dashboards, but it does not employ machine learning or LLMs for this. Instead, it uses rule-based templates and data analysis to create descriptions. These complementary descriptions are generated algorithmically (using the provided data JSON), so while they offer useful insights (like “Category X has the highest value Y”), they lack the more nuanced narrative that an AI might provide. | No. Grafana does not include any AI-driven description capability. It provides no native feature to automatically generate alt text or summaries for charts. Any descriptive text for a panel must be written and added manually by the dashboard creator. Essentially, visually impaired users only get whatever labels or captions a human has provided; Grafana itself does not produce explanatory narratives about the data. |
| WCAG Compliance (Accessibility Standards) | High: built to meet WCAG 2.1 AA. Accessibility is a foundational design goal for AccessiDashboard. It uses semantic HTML5, proper ARIA roles, and keyboard navigation. Headings are in logical order, regions/landmarks are properly labeled, every interactive element is keyboard-accessible, and color contrast is checked automatically. In short, it aims to exceed WCAG 2.1 AA guidelines, not just minimally comply. | High (best-practice adherence). While the Azimuth paper does not explicitly claim a WCAG conformance level, the system was designed with accessibility guidelines in mind. The dashboard generated by Azimuth is structured similarly to an accessible website: a consistent heading hierarchy and regions make navigation logical, and standard HTML controls are used for all interactive elements. | Moderate (partial compliance). Grafana is partially conformant with WCAG 2.1 Level AA as of 2023 [54]. This means some parts of Grafana meet the guidelines, but others do not. Keyboard accessibility is another known gap: some controls or panels cannot be fully used via keyboard alone, though work is underway to fix these (adding skip-to-content links, fixing focus traps, etc.). |
| Multimodal Support (Text, Tables, Charts, Narratives) | Yes: rich multimodal output. AccessiDashboard presents data through multiple synchronized modalities. Alongside the usual visual charts/graphs, it provides text-first alternatives: structured data tables, bullet-list summaries of sensor readings, and narrative descriptions generated by AI. This multimodal design means sighted users and visually impaired users have parallel access to the information: charts for those who can see them, and text/tables/narratives for those using screen readers. | Yes: visual and textual views. Azimuth dashboards are designed to be perceivable in multiple ways. Visually, it renders the original charts. For non-visual access, it generates a textual dashboard description which includes an overview, a list of “data facts”, and a layout summary. Azimuth supports charts, structured text, and underlying tables, covering multiple modalities. One thing it does not provide is AI narrative (the text is formulaic), but it still ensures that the information conveyed by visuals is also available in textual form. | Partial. Grafana supports multiple data presentation formats (it has panel types for graphs, tables, single-value stats, text markdown, etc.), but it does not automatically produce synchronized narrative descriptions. Dashboard designers can include a text panel to describe a chart or show a table alongside a graph, but this is a manual process. There is no built-in narrative summary of data or automatic text alternative for charts in Grafana. |
| Human-in-the-Loop Validation (for Accessibility) | Yes. AccessiDashboard includes a human oversight mechanism for its AI-generated content. Administrators can review the long descriptions produced by the AI and adjust or approve them before they reach end-users. This “human-in-the-loop” approach ensures that any errors or awkward phrasing from the AI can be corrected, and it provides accountability, which is important since the narratives are automatically generated. | No (fully automated). Azimuth’s model is to generate accessible dashboards automatically from a specification. There is no dedicated step for human validation of the generated textual descriptions. The descriptions are generated by deterministic templates and logic, and a “review” step was not built into the pipeline. If changes are needed, they would require modifying the code or template rather than a simple admin edit on the fly. | No. Grafana does not have any concept of human-in-the-loop accessibility verification built into the platform. Ensuring a Grafana dashboard is accessible is entirely up to the humans building it; for example, they must manually check color contrasts, add descriptive text, and test with screen readers. Grafana’s workflow does not include any AI generation that would need oversight, nor any specialized review interface for accessibility. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Stelea, G.A.; Sangeorzan, L.; Enache-David, N. Accessible IoT Dashboard Design with AI-Enhanced Descriptions for Visually Impaired Users. Future Internet 2025, 17, 274. https://doi.org/10.3390/fi17070274
