Abstract

The accurate identification of look-alike medical vials is essential for patient safety, particularly when similar vials contain different substances, volumes, or concentrations. Traditional methods, such as manual selection or barcode-based identification, are prone to human error or face reliability issues under varying lighting conditions. This study addresses these challenges by introducing a real-time deep learning-based vial identification system, leveraging a Lightweight YOLOv4 model optimized for edge devices. The system is integrated into a Mixed Reality (MR) environment, enabling the real-time detection and annotation of vials with immediate operator feedback. Compared to standard barcode-based methods and the baseline YOLOv4-Tiny model, the proposed approach improves identification accuracy while maintaining low computational overhead. The experimental evaluations demonstrate a mean average precision (mAP) of 98.76 percent, with an inference speed of 68 milliseconds per frame on HoloLens 2, achieving real-time performance. The results highlight the model’s robustness in diverse lighting conditions and its ability to mitigate misclassifications of visually similar vials. By combining deep learning with MR, this system offers a more reliable and efficient alternative for pharmaceutical and medical applications, paving the way for AI-driven MR-assisted workflows in critical healthcare environments.

1. Introduction

Medication errors persist as the predominant category of errors in emergency departments. Children face a higher risk of prescription errors due to the lack of standardized doses, necessitating individual calculations depending on their weight. The rates of drug errors in emergency departments (ED) vary from 4% to 14%, escalating to 39% in pediatric cases [1]. A recent investigation into prehospital pediatric medication dosing errors revealed the ongoing prevalence of errors despite the availability of state-wide dosing resources such as the MI-MEDIC card, with a notable proportion of these errors arising from medications necessitating intricate calculations (e.g., dextrose, glucagon, intranasal fentanyl, midazolam, and epinephrine) [2]. Globally, the World Health Organization (WHO) estimates that medication errors cost approximately $42 billion annually, contributing to severe patient harm and increased healthcare expenditures. These findings underscore the need for more resilient systems that guarantee precise dosage calculations and facilitate the proper identification of vials in real time, particularly when vials are visually similar. The identification of medical vials is an essential procedure in healthcare settings. Due to the range in vial sizes, labeling discrepancies, and the rapid speed of medical settings, there is a significant danger of human error in vial identification. This is especially crucial, as errors in vial identification could have significant health consequences.

In healthcare settings, accurate and efficient vial identification is crucial to prevent medication errors. Although barcode scanning is widely used, several other identification methods are also employed to enhance patient safety. These include color-coding, manual verification, and Radio Frequency Identification (RFID)-based solutions. However, each of these approaches presents challenges in terms of human error, efficiency, and real-time usability, particularly in high-pressure environments such as emergency departments and operating rooms. Color-coding, for example, is designed to facilitate the quick identification of medications by assigning different colors to different drug classes. However, its effectiveness is limited by the small number of distinctly recognizable colors, making it inadequate to differentiate a wide range of medications [3]. Furthermore, reliance solely on color-coding can lead to misinterpretation if healthcare providers bypass reading the label, assuming that the color alone is sufficient for identification [4]. The lack of a standardized color-coding system across different institutions further exacerbates this confusion, particularly for healthcare workers operating in multiple facilities [5]. Manual verification, which involves healthcare professionals cross-checking medication labels before administration, remains fundamental but is highly prone to human error due to fatigue, distractions, and high workloads [6]. Moreover, inconsistencies in diligence and experience among staff can lead to unreliable verification processes. RFID-based solutions, which use electronic tags attached to medication vials for automatic identification and tracking, offer improved inventory management and reduce dependency on manual checks. However, challenges such as the high implementation costs, interoperability issues, and security concerns, including the unauthorized interception of RFID data, limit their widespread adoption in healthcare settings [7]. 
These limitations highlight the necessity for more advanced and reliable vial identification systems that enhance patient safety and operational efficiency. In this context, integrating deep learning-based object detection with Mixed Reality (MR) presents a promising approach, offering real-time, automated, and highly accurate vial recognition.

Recent advances in Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) have significantly transformed medical applications, particularly in education, training, and clinical procedures. According to Yoo and Son [8], MR technology has emerged as a powerful tool in medical environments, providing real-time interaction and contextual visualization that surpasses traditional training and operational methods. Their study highlights the growing adoption of MR for medical training, improving procedural accuracy and decision making. However, while MR has been widely explored in pediatric healthcare and training, its application in real-time pharmaceutical environments, such as medical vial identification, remains underexplored. Recent research [9] highlights the use of extended reality (XR) frameworks like home-based mirror therapy for phantom limb pain, showing the growing relevance of AI-assisted XR interfaces across diverse user groups, including patients, nurses, and physicians.

Additionally, the integration of artificial intelligence (AI) and deep learning in healthcare has demonstrated remarkable advancements in areas such as diagnostic imaging, robotic surgery, and predictive analytics. Object detection algorithms, particularly YOLO-based models, have shown exceptional performance in real-time medical applications, enabling the rapid and accurate identification of objects in complex environments [10]. The application of deep learning to vial identification, combined with the use of MR for real-time visualization, presents a novel solution to improve accuracy and operational efficiency in pharmaceutical and medical settings.

In our previous research [11], we introduced a barcode-based medical vial detection system that utilizes mixed reality technology, specifically designed for HoloLens 2. However, a notable limitation of this system was HoloLens 2’s inability to accurately detect smaller barcodes, particularly those on amiodron and fentanyl citrate vials. To address this challenge, the current study proposes the integration of deep learning models for real-time vial detection using visual attributes for identification rather than relying solely on barcodes. This enhancement aims to significantly improve detection accuracy, especially in environments where barcodes can be obscured or difficult to scan, as well as to reduce the workload of medical professionals by automating the entire treatment process. The study highlights the potential of object detection algorithms to improve system performance and reliability in real-world medical settings.

2. Related Works

Object detection using deep learning and convolutional neural networks (CNNs) has been extensively researched in various domains, including healthcare, manufacturing, and augmented reality applications. In particular, real-time object detection systems have demonstrated their potential to enhance safety and efficiency in critical environments, such as medical settings, industrial automation, and assistive technologies. Given the high risk associated with medication errors, the identification of look-alike vials remains a challenging problem. Traditional methods, such as barcode scanning, are often hampered by obstructions and poor lighting and still require manual verification. These limitations have motivated research into deep learning models for vial recognition and classification.

2.1. Deep Learning for Object Detection in Industrial and Medical Applications

Deep learning models, particularly YOLO (You Only Look Once), Faster R-CNN, and SSD (Single Shot MultiBox Detector), have been widely adopted in real-time object detection tasks. These models have been successfully applied in manufacturing environments to automate processes and improve operational efficiency. Lai et al. [12] used Faster R-CNN to track assembly tools in real time, allowing for operators to quickly locate the required tools, reducing errors and improving workflow efficiency. Similarly, Zheng et al. [13] developed an AI-powered cable assembly system that uses deep learning to detect and position brackets accurately, thus streamlining industrial automation tasks. These studies showcase how real-time deep learning models can improve task efficiency by automating object detection and reducing manual intervention.

In medical applications, deep learning-based detection models have been deployed for diagnostic imaging, surgical tool tracking, and drug identification. Wang et al. [14] developed a YOLOv5-based model to identify medical instruments in surgical environments, highlighting the potential of CNNs to improve procedural precision. Furthermore, Gu et al. [15] implemented YOLOv4 for real-time traffic sign detection, demonstrating that optimized deep learning models can improve detection speed and accuracy, even in complex and dynamic environments.

However, in the identification of medical vials, deep learning-based solutions are still in their early stages. Traditional approaches, such as barcode-based scanning, remain the most commonly used method. However, barcode recognition suffers from limitations in real-world conditions, such as smudged labels, varying vial orientations, and obstructions, leading to higher rates of misidentification. This requires the integration of alternative computer vision-based techniques to improve the reliability of detection.

2.2. Augmented and Mixed Reality in Object Detection

Mixed Reality (MR) and Augmented Reality (AR) have gained traction in object recognition and real-time interactive systems, particularly in medical training and industrial automation. Bahri et al. [16] demonstrated how YOLOv3 can be integrated with HoloLens for object detection, achieving real-time classification with moderate performance limitations due to computational constraints. Similarly, Eckert et al. [17] explored the use of YOLOv2 in HoloLens to aid visually impaired users, allowing for real-time object recognition with audio feedback.

Despite these advances, the real-time detection of small objects remains challenging, particularly in low-contrast or cluttered environments. Łysakowski et al. [18] implemented YOLOv8 on HoloLens 2 for real-time detection tasks, demonstrating that deep learning models can be successfully deployed on AR headsets, although with latency and processing limitations.

A key study by Farasin et al. [19] examined the feasibility of deploying real-time object detection models on HoloLens, emphasizing the trade-off between model complexity and real-time performance. Their findings suggest that lightweight models are essential for efficient execution on AR headsets, aligning with our approach of developing a YOLOv4-Tiny-based model optimized for Mixed Reality applications.

2.3. YOLO-Based Small Object Detection in Mixed Reality

Recent studies have explored the potential of YOLO models in AR/MR environments for real-time tracking and classification. Tiwari et al. [20] proposed an enhanced YOLOv4-based object detection and tracking system in Mixed Reality environments, improving classification accuracy and localization. Their model achieved an mAP of 0.988, surpassing previous YOLO versions, making it highly effective for general object detection tasks. However, their study focused on large and medium-sized objects in collaborative workspaces rather than small medical vials, which present unique challenges in terms of feature extraction and real-time usability.

Additionally, Yoo and Son [8] conducted a comprehensive review of MR applications in healthcare, emphasizing the growing role of Mixed Reality in medical training and procedural guidance. However, real-time vial identification in MR-assisted pharmaceutical environments remains underexplored, highlighting the need for specialized deep learning architectures tailored to small-object detection in MR-based workflows.

2.4. Contributions of This Study

Building on these previous works, this paper presents a Lightweight YOLOv4-based model for real-time look-alike vial identification in Mixed Reality environments. Traditional barcode-based systems depend on the label being visible and add workload before the final decision regarding the medication can be made. Our method instead uses deep learning to detect and classify vials based on their appearance, so they can be identified even when conditions are not ideal. The model improves small-object detection by combining ResBlocks and Spatial Pyramid Pooling (SPP), which reduces the number of false positives and speeds up inference. In addition, the Mixed Reality integration renders 3D annotations in real time, providing operators with interactive feedback and lowering the chance of misidentifying a vial. By combining AI-driven object detection with MR-assisted visualization, this work provides a new way to identify vials in high-stakes medical settings.

3. Proposed Approach

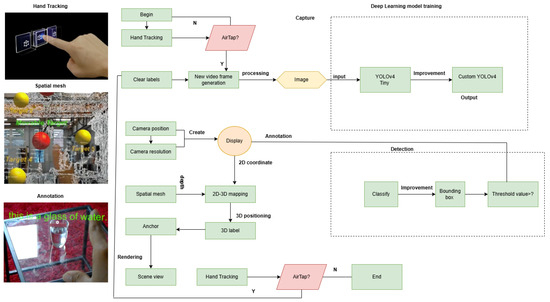

The proposed real-time look-alike vial identification system integrates a custom Lightweight YOLOv4 model with Mixed Reality (MR), enabling precise vial detection within an interactive MR environment. The workflow, illustrated in Figure 1, consists of several key stages: hand tracking, spatial mapping, image acquisition, deep learning-based detection, annotation, and MR visualization.

Figure 1.

Workflow of the proposed MR-based vial detection system. This figure illustrates the key steps in the proposed system: hand-tracking for user interaction, spatial mapping to create a 3D environment, and image acquisition through the HoloLens 2 camera, followed by preprocessing to optimize detection. The AirTap gesture (tapping the air with a finger) is used for selecting and interacting with objects in the MR environment, allowing the user to engage with the system effectively. The Lightweight YOLOv4 model is then used for vial detection, generating bounding boxes, and projecting them into the MR space for real-time visualization and interaction.

3.1. Hand-Tracking and Spatial Mapping

To facilitate seamless interaction with the MR system [21], hand-tracking is employed, allowing users to engage through natural gestures. The HoloLens 2 tracks the operator’s hand movements and gestures, triggering interactions with the MR interface. Simultaneously, a spatial mesh is generated to construct a 3D representation of the workspace. This mesh assists in environmental mapping and depth estimation, ensuring the accurate overlaying of virtual objects onto the physical environment.

3.2. Image Acquisition and Preprocessing

The MR headset camera continuously captures video frames of the surroundings, which undergo pre-processing before being fed into the detection model. Pre-processing includes resolution calibration to adjust image dimensions for optimal processing, contrast enhancement to improve image clarity for better feature extraction, and noise reduction to remove distortions, improving detection accuracy [22]. Once an image is captured, 2D coordinates of detected vials are annotated and projected into 3D space, ensuring accurate positioning within the MR interface.

3.3. Deep Learning-Based Vial Detection

The preprocessed images are input into the Lightweight YOLOv4 model, which detects vials and generates bounding boxes. To make the model better at finding small, visually similar vials, we added more convolutional layers to the feature extraction stage to improve small object recognition. We also used custom anchor boxes matched to medical vial sizes and tuned the bounding box adjustments to cut down on false positives and boost classification accuracy. Each detected vial is represented mathematically by a bounding box, B, as in Equation (1):

$$B = (x, y, w, h) \tag{1}$$

where $(x, y)$ represents the center of the bounding box, and $w$ and $h$ denote the width and height of the detected object. The classification confidence score for each detected object is calculated as C in Equation (2):

$$C = P(\text{object}) \times \max_{i} P(\text{class}_i \mid \text{object}) \tag{2}$$

where $P(\text{object})$ is the probability of an object being present in the given bounding box, and $\max_{i} P(\text{class}_i \mid \text{object})$ represents the highest probability among all object classes.

3.4. Object Classification and Thresholding

Once vials are detected, they are categorized based on their label and structural features. A thresholding function is applied to enhance detection accuracy by eliminating false positive detections. The final decision function is defined as in Equation (3):

$$D = \begin{cases} 1, & C \geq T \\ 0, & C < T \end{cases} \tag{3}$$

where T represents the confidence threshold. If the computed confidence score C is greater than or equal to T, the vial is considered correctly identified and classified; otherwise, the detection is discarded.
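The scoring and thresholding steps of Sections 3.3 and 3.4 can be sketched in a few lines of Python. This is a minimal illustration and not the deployed implementation: it assumes the usual YOLO convention in which the confidence is the objectness probability multiplied by the highest class probability. The function names, the example probabilities, and the threshold value `T = 0.5` are all hypothetical.

```python
from typing import Sequence


def confidence_score(p_object: float, class_probs: Sequence[float]) -> float:
    """Return C = P(object) * max_i P(class_i | object)."""
    return p_object * max(class_probs)


def accept_detection(score: float, threshold: float) -> bool:
    """Keep a detection only when its confidence reaches the threshold T."""
    return score >= threshold


# Hypothetical values for one candidate box (not taken from the paper).
T = 0.5  # assumed confidence threshold
score = confidence_score(p_object=0.8, class_probs=[0.05, 0.90, 0.05])
keep = accept_detection(score, T)
```

With these illustrative numbers the score is roughly 0.72, so the detection is kept. A higher threshold trades missed vials for fewer false positives.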

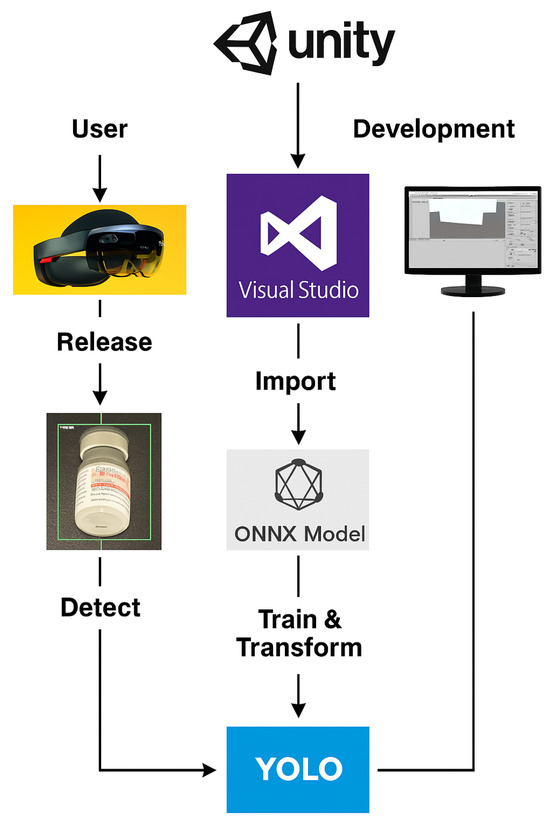

3.5. System Integration and Deployment

The detected vials are visually highlighted with bounding boxes and 3D labels in the MR environment. This allows users to interact in real time, improving decision-making in medical workflows. The entire system is integrated within Unity and Visual Studio, as depicted in Figure 2. The final implementation supports real-time vial identification, the fast and accurate recognition of look-alike vials, an optimized YOLO model for enhanced small-object detection with minimal latency, and Mixed Reality integration for interactive 3D visualization with spatial annotations. By leveraging deep learning and MR, the proposed approach provides a robust, efficient, and interactive solution for vial identification in medical and pharmaceutical environments.

Figure 2.

Deployment pipeline of the MR-assisted vial detection system. The YOLO-based deep learning model is first trained and converted to the ONNX format for optimized inference. The ONNX model is then imported into Unity and integrated into the MR environment using Visual Studio. The compiled application is deployed on HoloLens 2, allowing for real-time object detection and interaction within the Mixed Reality workspace.

4. Proposed Deep Learning Model

We propose a real-time look-alike vial identification model based on the YOLOv4-Tiny architecture, optimized to detect small, visually similar medical vials. Given the challenges associated with small object detection in dynamic environments, several architectural enhancements were implemented. These include backbone restructuring, activation function optimization, small object detection improvements, and a multi-scale feature extraction strategy using three YOLO heads. These modifications ensure robust detection in real-time Mixed Reality (MR) environments, making the system well-suited to pharmaceutical and medical applications.

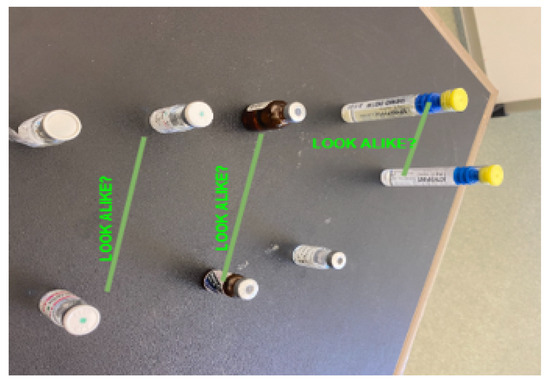

4.1. Dataset and Annotation

The dataset used for training consists of 1500 medical vial images, categorized into 11 classes, and collected under varied environmental conditions, such as different lighting conditions, different levels of background clutter, and varying angles. To ensure a well-balanced model, the dataset was divided into 70% training, 15% validation, and 15% testing. Each vial was manually annotated with bounding boxes and class labels.
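As a rough illustration of the 70/15/15 partition, the following Python sketch shuffles a list of image identifiers and slices it into three subsets. The file names, the fixed seed, and the purely random (non-stratified) strategy are assumptions made for the example. The source does not state how the split was drawn.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], train_frac: float = 0.70,
                  val_frac: float = 0.15, seed: int = 0
                  ) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle reproducibly, then slice into train/validation/test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test subset
    return train, val, test


# Hypothetical identifiers standing in for the 1500 annotated images.
image_ids = [f"vial_{i:04d}.jpg" for i in range(1500)]
train, val, test = split_dataset(image_ids)
```

For 1500 images this yields 1050 training, 225 validation, and 225 test images. With 11 classes, a per-class (stratified) split would keep the class proportions equal across subsets.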

To enhance generalization, data augmentation techniques were applied, including random rotation, brightness variation, horizontal flipping, contrast adjustment, and random cropping (up to 15% per image). These augmentations help the model detect vials under diverse real-world conditions. Figure 3 presents a sample of the dataset, illustrating the challenge posed by visually similar vials.

Figure 3.

Sample dataset images showing visually similar vials. This figure displays a set of images from the dataset, highlighting the look-alike vials commonly encountered in medical environments. The vials, which appear similar in size and shape, are annotated with labels to indicate potential confusion. Each vial is marked with the annotation “LOOK ALIKE?”, suggesting the challenge of visually distinguishing these vials. This image demonstrates the complexity of the task and the importance of accurate detection for preventing errors in vial identification, which is addressed by the proposed system.

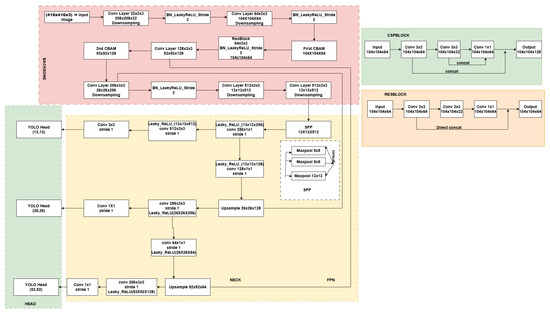

4.2. Backbone Enhancement

To improve feature extraction, the original CSPBlock in YOLOv4-Tiny was replaced with a ResBlock-based structure (Figure 4). While CSPBlocks are effective for general object detection, they introduce an information bottleneck that can degrade performance when identifying small objects. By contrast, ResBlocks preserve more detailed features while maintaining computational efficiency.

Figure 4.

Architecture of the proposed Lightweight YOLOv4 and comparison of the original CSPBlock and the modified ResBlock. This figure presents the architecture of the proposed Lightweight YOLOv4 model, showcasing the integration of CSPBlock and ResBlock. The architecture is divided into three primary sections: the backbone, the neck, and the head. The backbone consists of a series of convolutional layers, including a ResBlock, which is designed to extract robust features for detecting small objects like medical vials. The CSPBlock is used in the initial stages of the network to improve feature extraction efficiency, while the ResBlock (modified in this model) focuses on enhancing small object detection and improving model robustness by incorporating direct concatenation and skip connections. These modifications are key to optimizing performance in small object detection in real-time Mixed Reality (MR) applications. The SPP (Spatial Pyramid Pooling) module further improves the model’s ability to detect objects at multiple scales, ensuring more accurate vial identification. The architecture also includes YOLO heads at three different scales (13 × 13, 26 × 26, and 52 × 52), enabling the detection of objects of varying sizes. This proposed lightweight design ensures high accuracy and efficiency in detection, especially in real-time applications on devices with limited computational resources.

A ResBlock consists of two convolutional layers followed by a convolutional layer, with a direct residual connection, mathematically represented as y in Equation (4):

$$y = F(x, W) + x \tag{4}$$

where x represents the input, $F(x, W)$ is the transformation function applied to x using weight parameters W, and y is the output feature map. This structure allows for an improved gradient flow, preventing feature degradation and ensuring the effective learning of vial-specific features.

4.3. Activation Function Optimization

To further enhance learning efficiency, the standard ReLU activation function was replaced with Leaky ReLU. Traditional ReLU can lead to the “dying ReLU” problem, where neurons become inactive during training. Leaky ReLU mitigates this issue by allowing for small negative gradients, as in Equation (5):

$$f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \leq 0 \end{cases} \tag{5}$$

where $\alpha$ is a small positive slope that ensures that negative values are retained, preserving gradient flow. This modification improves convergence stability, particularly for deeper layers involved in the detection of small and complex objects, such as vials.
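The difference between the two activations is easy to see in code. The sketch below is illustrative only: the slope `alpha = 0.1` is an assumed value, since the slope used in this work is not stated here, and the function names are hypothetical.

```python
def relu(x: float) -> float:
    """Standard ReLU: negative inputs are clamped to zero."""
    return x if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.1) -> float:
    """Leaky ReLU: negative inputs are scaled by a small slope alpha."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.1) -> float:
    """Derivative of Leaky ReLU; it never drops to zero for negative inputs."""
    return 1.0 if x > 0 else alpha


# A negative pre-activation: ReLU discards it, Leaky ReLU keeps a scaled copy.
negative_input = -2.0
relu_out = relu(negative_input)
leaky_out = leaky_relu(negative_input)
```

Because the gradient for negative inputs equals `alpha` rather than zero, a unit that receives mostly negative pre-activations can still be updated during training.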

4.4. Enhancing Small Object Detection

Small object detection is critical in this application, as medical vials are often compact and visually similar. To improve the model’s ability to detect such objects, we integrated Spatial Pyramid Pooling (SPP) into the neck of the model. The SPP module enables the extraction of multi-scale features by applying pooling at different kernel sizes, as in Equation (6):

$$F_{\text{SPP}} = \text{Concat}\big(P_{k_1}(F),\, P_{k_2}(F),\, P_{k_3}(F)\big) \tag{6}$$

In Equation (6), $P_{k}(F)$ denotes pooling of the input feature map $F$ at kernel size k, and $F_{\text{SPP}}$ represents the aggregated feature map. In this work, we used three pooling kernel sizes, ensuring that objects of varying sizes and distances can be effectively recognized.
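The pooling-and-concatenation idea behind SPP can be sketched in pure Python on a toy feature map. This is a didactic sketch, not the network layer itself. The kernel sizes 5, 9, and 13 are an assumption borrowed from the standard YOLOv4 SPP block, because the values used in this work are not given here. The `include_input` flag is also hypothetical; the standard block additionally concatenates the unpooled input.

```python
from typing import List, Sequence

Channel = List[List[float]]


def max_pool_same(channel: Channel, k: int) -> Channel:
    """Stride-1 max pooling whose output has the same height and width.

    Windows are clipped at the borders, which is equivalent to padding
    the input with negative infinity.
    """
    h, w = len(channel), len(channel[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [channel[a][b]
                      for a in range(max(0, i - r), min(h, i + r + 1))
                      for b in range(max(0, j - r), min(w, j + r + 1))]
            row.append(max(window))
        out.append(row)
    return out


def spp(feature_map: Sequence[Channel], kernel_sizes=(5, 9, 13),
        include_input: bool = False) -> List[Channel]:
    """Concatenate pooled copies of the feature map along the channel axis."""
    out: List[Channel] = list(feature_map) if include_input else []
    for k in kernel_sizes:
        out.extend(max_pool_same(ch, k) for ch in feature_map)
    return out


# Toy input: 2 channels of size 4 x 4 (values 0..15 and 15..0).
ch0 = [[float(i * 4 + j) for j in range(4)] for i in range(4)]
ch1 = [[15.0 - v for v in row] for row in ch0]
pooled = spp([ch0, ch1])
```

Each kernel size contributes one pooled copy of every input channel, so two input channels and three kernel sizes give six output channels of unchanged spatial size. Larger kernels summarize wider neighborhoods, which is how the module mixes context at several scales.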

4.5. Multi-Head Architecture for Scale Adaptation

To further improve detection performance, we employed a multi-scale feature extraction strategy using three YOLO heads, each responsible for detecting objects of different sizes:

- Large Object Head (13 × 13) detects coarser features for larger objects.

- Medium Object Head (26 × 26) handles medium-sized vials.

- Small Object Head (52 × 52) specializes in recognizing small vials.

The final detection score was obtained, as shown in Equation (7):

This multi-scale approach significantly enhances the model’s ability to detect vials across different spatial scales, ensuring robustness in challenging scenarios.
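The three grid resolutions listed in the Figure 4 caption (13 × 13, 26 × 26, and 52 × 52) follow from dividing the input resolution by the stride of each head. The short sketch below reproduces that arithmetic. The 416 × 416 input size and the strides 32, 16, and 8 are assumptions chosen because they yield exactly those grids; the source does not state them.

```python
from typing import List, Sequence


def head_grid_sizes(input_size: int, strides: Sequence[int] = (32, 16, 8)) -> List[int]:
    """Return the side length of the prediction grid for each detection head."""
    return [input_size // s for s in strides]


# Assumed square input resolution (not stated in the source).
grids = head_grid_sizes(416)
cells_per_head = [g * g for g in grids]
```

The finest head has sixteen times as many cells as the coarsest one, which is why it is better suited to localizing small vials.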

4.6. Final Detection and Output Generation

After generating bounding boxes for detected vials, Non-Maximum Suppression (NMS) was applied to eliminate redundant detections. NMS operates by retaining the box with the highest confidence score while suppressing overlapping boxes based on the Intersection over Union (IoU) threshold, as shown in Equation (8):

$$\text{IoU}(A, B) = \frac{|A \cap B|}{|A \cup B|} \tag{8}$$

where A and B are two bounding boxes. In this study, an IoU threshold of 0.5 was applied, so that detections overlapping a higher-scoring box by more than 50% were suppressed and only the most pertinent vials were preserved.
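A greedy NMS pass with the 0.5 IoU threshold can be sketched as follows. Boxes use the center format (x, y, w, h) from Section 3.3. The function names and example detections are hypothetical, and the sketch is class-agnostic for brevity, whereas detectors commonly run NMS per class.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (center x, center y, width, height)
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two center-format boxes."""
    ax1, ay1, ax2, ay2 = a[0] - a[2] / 2, a[1] - a[3] / 2, a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1, bx2, by2 = b[0] - b[2] / 2, b[1] - b[3] / 2, b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def nms(detections: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Keep the highest-scoring box, drop boxes overlapping it too much, repeat."""
    remaining = sorted(detections, key=lambda d: d[1], reverse=True)
    kept: List[Detection] = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [d for d in remaining if iou(best[0], d[0]) <= iou_threshold]
    return kept


# Hypothetical detections: two near-duplicates and one separate vial.
dets = [((50.0, 50.0, 40.0, 40.0), 0.90),
        ((52.0, 50.0, 40.0, 40.0), 0.80),
        ((150.0, 150.0, 40.0, 40.0), 0.70)]
kept = nms(dets)
```

In this example the second box overlaps the first with an IoU of about 0.90, so it is suppressed, while the third box does not overlap and is kept.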

Once finalized, the detected vials were rendered in the Mixed Reality environment, where they were annotated with bounding boxes and class labels. This enables real-time interaction, allowing medical professionals to efficiently identify and differentiate between vials, reducing the likelihood of medication errors.

5. Evaluation and Discussion of the Proposed Model’s Results

We evaluated the performance of the proposed Lightweight YOLOv4 model and compared it with the baseline YOLOv4-Tiny model using a dataset of 1500 medical vial images. The evaluation focused on detection accuracy, inference speed, and real-world usability in a Mixed Reality (MR) environment. We employed YOLOv4-Tiny as the foundational model due to its better stability in terms of inference speed, accuracy, and ONNX export compatibility for deployment on mobile mixed reality devices, such as HoloLens 2.

5.1. Model Performance Evaluation

The proposed model improves on the baseline across the evaluation metrics shown in Table 1. In particular, it achieves higher mAP scores, a shorter inference time, and a higher frame rate (FPS) than the YOLOv4-Tiny model.

Table 1.

Performance comparison between the proposed model and YOLOv4-Tiny, showing overall evaluation metrics as well as class-wise accuracy for selected vials.

The proposed model outperforms YOLOv4-Tiny in both accuracy and real-time performance, achieving a higher mAP of 98.76% and a lower inference time of 20 ms. These enhancements ensure its smoother deployment in Mixed Reality applications while maintaining high detection precision.

5.2. Class-Wise Detection Accuracy

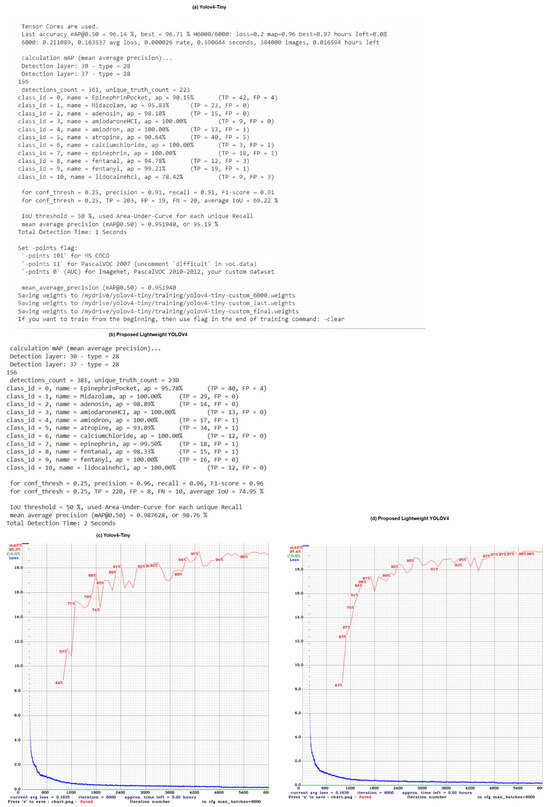

Figure 5 presents a comparative analysis between the YOLOv4-Tiny model and the proposed Lightweight YOLOv4 model in terms of detection accuracy, training convergence, and inference performance. The evaluation results in Figure 5a,b highlight key metrics such as mean average precision (mAP), precision, recall, and F1 score. The proposed model achieves a higher mAP of 98.76% compared to 95.19% for YOLOv4-Tiny, demonstrating an improved detection accuracy, especially for small and visually similar medical vials. In addition, the class-wise evaluation shows that the proposed model achieves 100% accuracy in multiple vial categories, while YOLOv4-Tiny exhibits misclassification errors due to overlap and occlusions.
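For reference, precision, recall, and F1 score are derived from counts of true positives, false positives, and false negatives. The sketch below uses the standard definitions. The counts are hypothetical and are not results from this study.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1


# Hypothetical counts for one vial class (illustration only).
precision, recall, f1 = precision_recall_f1(tp=95, fp=5, fn=3)
```

Precision penalizes false alarms, recall penalizes missed vials, and F1 is their harmonic mean. The mAP additionally averages precision over recall levels and over all classes.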

Figure 5c,d illustrates the training loss and mAP convergence over multiple iterations. The proposed model demonstrates a faster and smoother convergence, indicating improved learning stability and generalization. The mAP progression curve in Figure 5d shows that the proposed model achieves higher accuracy earlier in the training process, while YOLOv4-Tiny exhibits more fluctuations and slower improvement. Furthermore, the inference speed of the proposed model is 20 ms per frame, maintaining real-time processing capabilities, which is essential for Mixed Reality (MR)-assisted vial detection applications.

Figure 5.

Comparison of YOLOv4-Tiny and Proposed Lightweight YOLOv4 Model Performance. (a,b) show the numerical evaluation of both models, including precision, recall, F1-score, and mean average precision (mAP). The proposed model achieves an mAP of 98.76%, outperforming YOLOv4-Tiny’s 95.19%. (c,d) present the training loss and mAP progression across training iterations, demonstrating a faster convergence rate and higher final accuracy for the proposed model.

5.3. Impact of Architectural Enhancements

The key modifications to the baseline model (ResBlocks, SPP, and the multi-head YOLO architecture) contributed to the improved detection. The main enhancements and their effects are summarized in Table 2.

Table 2.

Effect of Model Enhancements on Performance.

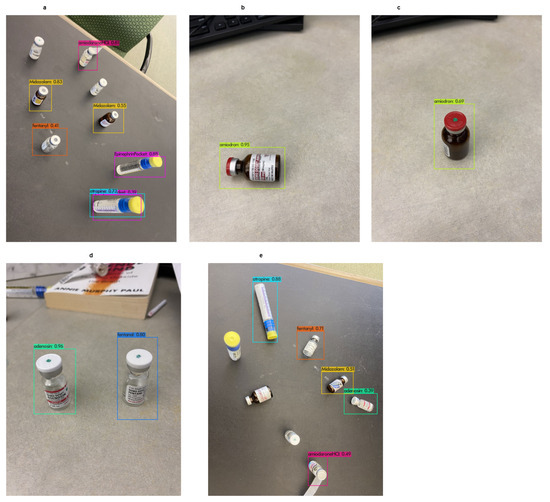

5.4. Visual Results

The proposed Lightweight YOLOv4 model demonstrates strong performance in real-world vial detection scenarios, particularly when identifying single vials in an image. As shown in Figure 6, the model effectively recognizes and labels individual medical vials with high confidence scores, even under varying lighting conditions and perspectives. When vials are presented individually, as in Figure 6b–d, the model consistently achieves accurate classification, with confidence levels above 0.95 for most vial types.

Figure 6.

Detection results of the proposed Lightweight YOLOv4 model. (a,e) demonstrate cases where multiple vials are present, leading to misclassification issues, particularly between visually similar vials such as Midazolam and Amiodron. The model struggles to differentiate these vials when they appear together in the same frame. (b–d) illustrate successful detections where a single vial is present, achieving high accuracy with confidence scores above 0.95.

However, when there is more than one vial in a frame (Figure 6a,e), the model has trouble classifying them correctly, especially when the vials look alike, such as Midazolam and Amiodron. In cases where multiple vials with a similar appearance are closely positioned, the model occasionally assigns incorrect labels, indicating a challenge in differentiating look-alike vials under occlusion and clustered arrangements. This suggests that the model relies heavily on label positioning and vial texture features, which may not be sufficient in dense pharmaceutical settings.

Overall, these results indicate that while the model performs exceptionally well for single-object detection, further improvements are needed to enhance multi-vial differentiation in complex scenarios.

6. Three-Dimensional Spatial Representation and Annotation in Mixed Reality

One of the most important aspects of Mixed Reality (MR)-assisted object detection is ensuring that identified objects are not only recognized but also correctly placed within the 3D environment. In this study, we go beyond simple 2D bounding box overlays by mapping detected vials into a three-dimensional space, aligning them with real-world coordinates. This enables users to view, interact with, and track vials in a way that feels natural, allowing for better decision-making in fast-paced pharmaceutical settings.

6.1. Spatial Mapping and Depth Estimation

For the system to properly place vial annotations in MR, it must understand the depth and spatial relationships of the objects in the user’s surroundings. HoloLens 2, which serves as the primary MR platform for this work, continuously generates a spatial mesh of the environment using its built-in depth sensors. This real-time mesh acts as a virtual reconstruction of the physical world, allowing for the precise alignment of the detected vials with the actual surfaces.

A key step in this process is to convert the detected vials from their original image coordinates, $(u, v)$, to real-world coordinates, $(X, Y, Z)$. This transformation is performed using the well-known pinhole camera model:

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = d \, K^{-1} \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} \tag{9}$$

where $d$ is the depth value retrieved from the spatial mapping system and $K$ is the intrinsic camera matrix:

$$K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} \tag{10}$$

Here, $f_x$ and $f_y$ denote the focal lengths of the camera in the x and y directions, while $(c_x, c_y)$ indicate the optical center of the image sensor. This calculation ensures that the detected vials are positioned accurately in the user’s field of view, even as they move through the environment.
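The back-projection step can be illustrated with a minimal Python sketch. It assumes a zero-skew pinhole camera, so that multiplying by the inverse intrinsic matrix reduces to subtracting the optical center and dividing by the focal length. The names `Intrinsics`, `backproject`, and `K_EXAMPLE`, as well as the numeric intrinsics, are hypothetical and chosen only for illustration; they are not HoloLens 2 calibration values.

```python
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    """Pinhole intrinsics in pixels (the non-trivial entries of K)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # optical center, x
    cy: float  # optical center, y


def backproject(u: float, v: float, depth: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Map pixel (u, v) with metric depth to a 3D point in the camera frame.

    Equivalent to depth * K^-1 * [u, v, 1]^T for a zero-skew intrinsic matrix K.
    """
    if depth <= 0:
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


# Hypothetical intrinsics for illustration only.
K_EXAMPLE = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)

# Center of a detected bounding box at pixel (420, 240), 0.5 m from the camera.
point = backproject(420.0, 240.0, 0.5, K_EXAMPLE)
print(point)  # (0.1, 0.0, 0.5)
```

A pixel at the optical center maps to a point on the optical axis, and the lateral offset grows linearly with depth, which is why an accurate depth value from the spatial mesh matters for label placement.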

6.2. Attaching Annotations to Real-World Objects

Once the 3D position of each vial is determined, the system needs to make sure that the virtual labels and bounding boxes remain correctly aligned with their corresponding physical objects. Unlike traditional AR overlays, which are simply drawn on the screen, our approach anchors each annotation to a fixed position in the real-world coordinate system.

To achieve this, we employ a transformation matrix that maps the detected position of a vial onto the MR world space:

$$P_{\text{world}} = T \cdot P_{\text{camera}} \tag{11}$$

In Equation (11), $P_{\text{camera}}$ is the position of the detected object in the camera frame, and $T$ is the transformation matrix that converts the local camera coordinates into global world coordinates. The matrix $T$ is derived using the camera calibration process, which combines intrinsic parameters (such as focal length, principal point, etc.) and extrinsic parameters (such as rotation and translation between the camera and the world coordinate system). This calibration is achieved using depth sensor data and the spatial mapping system available in the HoloLens 2. By obtaining a 3D mesh of the environment, the spatial mapping allows for the accurate positioning of objects in the real world, even as the user moves or adjusts their viewpoint. Once a vial is detected and labeled, its annotation remains fixed in space, even as the user moves around. This approach eliminates common issues such as floating or drifting labels, which can make MR applications difficult to use in real-world settings.
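As a rough illustration of the camera-to-world mapping, the sketch below applies a 4x4 homogeneous transform to a camera-frame point. The pose is assumed to be supplied by the headset's tracking; here it is built by a hypothetical helper, `make_pose`, from a rotation about the vertical axis and a translation. All names and numbers are illustrative assumptions rather than the system's actual code.

```python
import math
from typing import List, Sequence, Tuple

Matrix4 = List[List[float]]


def make_pose(yaw_rad: float, translation: Sequence[float]) -> Matrix4:
    """Build a 4x4 camera-to-world matrix from a rotation about the y axis and a translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def camera_to_world(t_cw: Matrix4, p_cam: Sequence[float]) -> Tuple[float, float, float]:
    """Apply P_world = T * P_camera using homogeneous coordinates."""
    p = [p_cam[0], p_cam[1], p_cam[2], 1.0]
    out = [sum(row[i] * p[i] for i in range(4)) for row in t_cw[:3]]
    return (out[0], out[1], out[2])


# Hypothetical headset pose at detection time: 1 m along x, 1.6 m above the floor, no rotation.
T_CW = make_pose(0.0, (1.0, 1.6, 0.0))

# The vial's camera-frame position is converted once and stored as a world-space anchor.
anchor_world = camera_to_world(T_CW, (0.1, 0.0, 0.5))
print([round(c, 3) for c in anchor_world])  # [1.1, 1.6, 0.5]
```

Because the anchor is stored in world coordinates, later changes in the headset pose affect only how the label is rendered, not where it is located, which is the property that keeps annotations from drifting.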

6.3. Real-Time Rendering and Interaction in MR

The final stage of the system involves displaying detected vials in the MR environment in a way that feels intuitive and interactive. The bounding boxes and class labels are projected into the user’s field of view using HoloLens’ rendering engine, ensuring that they adjust dynamically based on user movement.

The system also considers occlusions and environmental changes in real time. If a vial becomes partially or fully obscured, its annotation is automatically adjusted, either fading out or repositioning to maintain clarity. This behavior mimics how human perception works—focusing on visible objects while ignoring obstructions.
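The occlusion test itself is not specified here. One plausible approach, sketched below purely as an assumption, compares the distance to the anchored vial with the distance to the nearest reconstructed surface along the same view ray and fades the label as the gap grows. The function name, tolerance, and fade range are hypothetical.

```python
def annotation_opacity(anchor_depth_m: float, surface_depth_m: float,
                       tolerance_m: float = 0.02, fade_range_m: float = 0.10) -> float:
    """Return a label opacity in [0, 1] from a depth comparison along the view ray.

    anchor_depth_m: distance from the camera to the anchored vial position.
    surface_depth_m: distance to the nearest reconstructed surface on the same ray.
    """
    gap = anchor_depth_m - surface_depth_m  # > 0 means something sits in front of the vial
    if gap <= tolerance_m:
        return 1.0  # vial is visible: draw the label fully opaque
    if gap >= tolerance_m + fade_range_m:
        return 0.0  # vial is clearly behind another surface: hide the label
    # Linear fade between the two thresholds.
    return 1.0 - (gap - tolerance_m) / fade_range_m
```

The tolerance absorbs depth noise in the spatial mesh, so that a label does not flicker when the vial itself is the nearest surface.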

7. Implementation and Real-World Performance Analysis

Deploying the proposed real-time look-alike vial identification system into a Mixed Reality (MR) environment requires a combination of deep learning inference optimization, real-time rendering, and efficient MR interactions. This section discusses the end-to-end system implementation and model deployment, and provides an evaluation of its performance in real-world conditions.

7.1. Model Training and Optimization

The detection model was trained on a dataset of 1,500 medical vial images spanning 11 different classes, ensuring robustness in various real-world conditions, such as lighting variations, occlusions, and background clutter. The dataset was augmented with random rotations, brightness adjustments, and contrast variations to enhance the generalizability of the model.
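The augmentation types named above can be sketched in plain Python on a small grayscale image. This is an illustrative stand-in, not the training pipeline used in this work: the parameter ranges are assumptions, and rotation is limited to multiples of 90 degrees to keep the example dependency-free.

```python
import random
from typing import List

Image = List[List[int]]  # 8-bit grayscale, row-major


def clamp(value: float) -> int:
    """Round to the nearest integer and clip to the 8-bit range."""
    return max(0, min(255, int(round(value))))


def adjust_brightness(img: Image, delta: float) -> Image:
    """Add a constant offset to every pixel."""
    return [[clamp(p + delta) for p in row] for row in img]


def adjust_contrast(img: Image, factor: float) -> Image:
    """Scale pixel values around the image mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [[clamp(mean + factor * (p - mean)) for p in row] for row in img]


def rotate90(img: Image, turns: int) -> Image:
    """Rotate clockwise by turns * 90 degrees (stand-in for arbitrary-angle rotation)."""
    for _ in range(turns % 4):
        img = [list(row) for row in zip(*img[::-1])]
    return img


def augment(img: Image, rng: random.Random) -> Image:
    """Apply one random rotation, brightness shift, and contrast change (assumed ranges)."""
    img = rotate90(img, rng.randrange(4))
    img = adjust_brightness(img, rng.uniform(-30, 30))
    return adjust_contrast(img, rng.uniform(0.8, 1.2))


sample = [[10, 200], [60, 120]]
augmented = augment(sample, random.Random(0))
```

For object detection, any geometric augmentation such as rotation must also be applied to the bounding-box labels, whereas brightness and contrast changes leave the labels untouched.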

7.2. Model Deployment in Mixed Reality

Once the model achieved satisfactory accuracy, it was converted to the ONNX format for lightweight inference on the HoloLens 2 headset. This step is critical as real-time applications demand low-latency performance to ensure smooth user interactions.

The deployment process followed these steps:

1. The trained YOLOv4-Tiny model was exported to ONNX format.

2. ONNX Runtime was integrated into Unity to enable real-time inference on HoloLens.

3. The model was optimized for mobile GPU execution to maintain at least 15 FPS on the headset.

4. The MR environment was developed using Unity and MRTK (Mixed Reality Toolkit), allowing for the real-time visualization of detected vials.

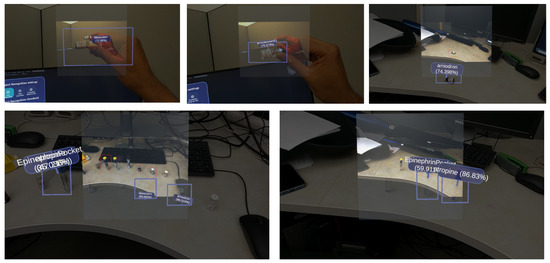

7.3. MR Implementation Results

The Mixed Reality (MR) implementation of the proposed vial identification system enables the real-time detection, classification, and spatial annotation of medical vials within an augmented environment. Figure 7 illustrates how the system overlays bounding boxes and labels onto detected vials, ensuring an interactive and intuitive identification process.

Figure 7. Mixed Reality (MR) implementation of the proposed real-time vial detection system. The top row shows the real-time detection of individual vials using spatially anchored bounding boxes. The bottom row illustrates multi-vial detection, highlighting cases where the system struggles with misclassification due to visually similar vials (e.g., Epinephrine Pocket and Atropine). The MR system provides an intuitive, interactive interface for medical professionals, enabling real-time decision-making and reducing the risk of vial identification errors.

In the top row of images, users interact with vials in real time using an MR headset. The system successfully recognizes and labels vials such as Midazolam and Amiodarone, displaying their respective confidence scores. The detected objects remain anchored in 3D space, allowing users to move around and still maintain correct visual alignment with the vials.

The bottom row of the images shows the multi-vial detection capability of the system, where multiple vials are identified simultaneously. However, the system experiences detection errors in clustered settings, particularly with visually similar vials such as Epinephrine Pocket and Atropine. These cases suggest that overlapping objects and varying lighting conditions can affect classification accuracy, indicating the need for further occlusion handling and feature refinement.

Despite these challenges, the MR implementation successfully integrates computer vision and spatial computing, allowing users to interact with medical vials in a way that improves efficiency and reduces human error compared to traditional barcode scanning methods.

7.4. Performance Metrics and Evaluation

The system was evaluated based on detection accuracy, processing speed, and real-time interaction efficiency in the MR environment. The proposed model achieved a mean average precision (mAP) of 98.76 percent for vial classification, demonstrating a significant improvement compared to the baseline YOLOv4-Tiny model. Detection accuracy varied depending on environmental conditions: the highest performance was observed under controlled lighting, where certain vial classes achieved 100 percent accuracy, while accuracy decreased to 89.4 percent against cluttered backgrounds. The model performed well when detecting single vials, but exhibited occasional misclassification in multi-vial scenarios, particularly for visually similar vials such as Midazolam and Amiodarone, due to overlapping labels and their high visual similarity.

The model achieved real-time inference with an average processing time of 68 milliseconds per frame, resulting in approximately 15 frames per second on the HoloLens 2. Compared to CPU-based inference, GPU-accelerated execution provided a 3.5 times speed improvement, ensuring smooth real-time detection in MR environments.

The MR implementation allowed users to interact with detected vials seamlessly, with bounding boxes and labels remaining stable as users moved around and no noticeable lag or misalignment. Hand-tracking interactions for vial selection were also evaluated, and the system demonstrated an average response time of 250 milliseconds, which falls within the acceptable usability limits for MR applications.

However, in scenarios where multiple vials were present, the system occasionally misclassified overlapping objects, suggesting that further improvements in occlusion handling and spatial feature extraction are necessary to enhance detection reliability in complex medical settings.
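The relationship between the reported per-frame latency and frame rate can be checked with a short calculation. The CPU figure below is not reported in this section; it is only what the 3.5 times speedup would imply if that factor refers to per-frame inference time, which is an assumption.

```python
def fps_from_latency(ms_per_frame: float) -> float:
    """Convert a per-frame processing time in milliseconds to frames per second."""
    return 1000.0 / ms_per_frame


gpu_ms = 68.0              # reported average inference time per frame
speedup = 3.5              # reported GPU-over-CPU speedup
cpu_ms = gpu_ms * speedup  # implied CPU latency (assumption, see above)

print(f"GPU: {fps_from_latency(gpu_ms):.1f} FPS")
# GPU: 14.7 FPS
print(f"CPU: {cpu_ms:.0f} ms/frame, {fps_from_latency(cpu_ms):.1f} FPS")
# CPU: 238 ms/frame, 4.2 FPS
```

At 68 ms per frame the system runs at about 14.7 FPS, which is consistent with the "approximately 15 frames per second" stated above.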

7.5. Challenges and Optimizations

Several challenges were encountered during the deployment, particularly in optimizing real-time performance on a mobile device. The major challenges and corresponding solutions are summarized below:

- High latency during initial inference: The first inference run experienced delays due to ONNX model loading overhead. This was mitigated by preloading the model at startup.

- Reduced detection accuracy in occlusions: When the vials were partially covered, the detection accuracy dropped. Future work may involve integrating depth-aware segmentation to improve the handling of occlusions.

- Stability of 3D annotations: Although most annotations remained stable, rapid head movements in MR caused a minor misalignment. This was improved by adjusting the spatial anchor update rates.

7.6. Summary of Real-World Performance

To provide a clearer view of the system performance in real-world conditions, Table 3 summarizes the key evaluation metrics.

Table 3. Real-world performance of the MR vial detection system.

The results confirm that the system is well-optimized for real-time MR applications, delivering high detection accuracy while maintaining an interactive frame rate suitable for Mixed Reality environments.

8. User Study and Real-World Validation

To evaluate the practical effectiveness of the proposed Mixed Reality (MR)-assisted vial identification system, a user study was conducted in a controlled environment replicating real-world medication workflows. The goal was to assess the usability, accuracy, and efficiency of the system compared to traditional vial identification methods.

8.1. Study Design and Participants

The study involved 12 participants, including medical professionals, pharmacists, nurses, students, and non-experts (researchers and engineers), to assess both expert and novice usability. Participants were asked to identify medical vials under three different conditions:

- Manual identification: participants visually identified vials without any technological assistance.

- Barcode-based identification: participants used barcode scanners to identify vials.

- MR-assisted identification (proposed system): participants used the MR system with real-time object detection.

Each participant completed 10 vial identification tasks across the three conditions, ensuring statistical robustness.

8.2. Evaluation Metrics

To compare the effectiveness of each identification method, the following key metrics were analyzed:

- Identification accuracy (%)—the percentage of correctly identified vials.

- Time to identify (seconds)—the average time taken per vial identification.

- Error rate (%)—the rate of misidentification, particularly for look-alike vials.

- User experience score (1–5)—a subjective usability rating, collected through a post-study questionnaire.
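As a sketch of how the first three metrics could be computed from per-trial records, the following Python example groups trials by method and reports accuracy, error rate, and mean time. The `Trial` record, the `summarize` helper, and the trial values are all hypothetical and are not the study data; the example also assumes the error rate is the complement of accuracy.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Trial:
    method: str     # "manual", "barcode", or "mr"
    correct: bool   # whether the vial was identified correctly
    seconds: float  # time taken for this identification


def summarize(trials: List[Trial]) -> Dict[str, Dict[str, float]]:
    """Compute accuracy (%), error rate (%), and mean time (s) per method."""
    by_method: Dict[str, List[Trial]] = {}
    for t in trials:
        by_method.setdefault(t.method, []).append(t)
    summary: Dict[str, Dict[str, float]] = {}
    for method, group in by_method.items():
        accuracy = 100.0 * sum(t.correct for t in group) / len(group)
        summary[method] = {
            "accuracy_pct": accuracy,
            "error_rate_pct": 100.0 - accuracy,  # assumed complement of accuracy
            "mean_time_s": mean(t.seconds for t in group),
        }
    return summary


# Made-up trials for illustration only; these are NOT results from the user study.
TRIALS = [
    Trial("manual", True, 10.0), Trial("manual", False, 12.0),
    Trial("manual", True, 8.0), Trial("manual", True, 10.0),
    Trial("mr", True, 4.0), Trial("mr", True, 4.0),
    Trial("mr", True, 4.0), Trial("mr", True, 4.0),
]

for method, stats in summarize(TRIALS).items():
    print(method, stats)
```

The user experience score would be aggregated separately, for example as the mean of the questionnaire ratings per method.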

8.3. Results and Findings

The study results, summarized in Table 4, indicate that the MR-assisted system significantly improved identification accuracy while reducing task completion time.

Table 4. Comparison of vial identification methods.

The results demonstrate that the MR-assisted system outperforms traditional methods in terms of both accuracy and efficiency. Participants completed tasks approximately 60 percent faster than with manual identification and 40 percent faster than with barcode-based methods. Additionally, the error rate was reduced by 57.3 percent compared to manual identification, ensuring safer and more reliable medication handling.
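The percentages above are most naturally read as relative reductions with respect to a baseline. The sketch below makes that reading explicit; it assumes that "X percent faster" means "X percent less time", and the helper name and normalized times are illustrative only, not values from Table 4.

```python
def relative_reduction_pct(baseline: float, new: float) -> float:
    """Percentage by which `new` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - new) / baseline


# Normalize the manual time to 1.0 and apply the reported reductions.
manual_time = 1.0
mr_time = manual_time * (1 - 0.60)   # 60 percent less time than manual
barcode_time = mr_time / (1 - 0.40)  # MR takes 40 percent less time than barcode

print(f"{relative_reduction_pct(manual_time, mr_time):.1f}")       # 60.0
print(f"{relative_reduction_pct(manual_time, barcode_time):.1f}")  # 33.3
```

Under this reading, the two reported figures together imply that barcode scanning takes roughly one third less time than manual identification.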

8.4. Observations and Limitations

While the MR-assisted system significantly improved identification accuracy and efficiency, several challenges were noted. In scenarios where the vials were partially obscured or positioned at extreme angles, the detection model occasionally misclassified the object, indicating limitations in occlusion handling. Some users reported mild discomfort when wearing the HoloLens 2 for periods longer than 20 minutes, suggesting the need for a more ergonomic device for long-term usage. Furthermore, performance degraded slightly under extreme lighting variations, and the system struggled in environments with excessive glare or dim lighting. These issues indicate the need for further model enhancements to improve robustness under variable conditions.

8.5. User Feedback and Future Improvements

The post-experiment surveys collected feedback from participants on usability and potential improvements. The most common suggestions included integrating a voice-assisted interaction system, allowing users to retrieve vial information through verbal commands rather than relying solely on hand gestures. Medical professionals also suggested incorporating multi-user collaboration features to enable simultaneous interactions within the same MR workspace, which could enhance teamwork efficiency in hospital and pharmacy environments. Another significant concern was the bulkiness of HoloLens 2, with some participants preferring an alternative lightweight MR device to improve comfort during extended use.

Future work will focus on developing occlusion-aware detection models to enhance recognition accuracy for partially hidden vials. In addition, we will explore lightweight augmented reality glasses as an alternative to the HoloLens 2 to provide a more ergonomic and practical solution for prolonged use in real-world medical settings.

9. Conclusions and Future Work

This study introduced a mixed reality-assisted real-time vial identification system using a lightweight YOLOv4-based deep learning model. The proposed system was designed to address the limitations of traditional barcode-based and manual identification methods by integrating real-time object detection with MR-based spatial annotations. Through a series of architectural enhancements, including ResBlocks, Spatial Pyramid Pooling, and a multiscale detection framework, the model achieved a mean average precision of 98.76 percent, outperforming baseline YOLOv4-Tiny implementations. The system demonstrated robust performance in real-world scenarios, particularly in controlled lighting conditions, where detection accuracy reached 100 percent for several vial classes. The integration of Mixed Reality enabled seamless user interaction, with spatially anchored bounding boxes ensuring that the vial labels remained correctly positioned in 3D space. The results of the user study confirmed that the MR-assisted system significantly improved efficiency, reducing task completion time by 60 percent compared to manual identification and 40 percent compared to barcode-based methods. Furthermore, the error rate was reduced by 57.3 percent, highlighting the potential of this approach in mitigating vial misidentification errors in pharmaceutical and healthcare settings.

Despite its advantages, the system exhibited challenges in detecting multiple visually similar vials within the same frame, occasionally misclassifying look-alike medications such as Midazolam and Amiodarone. Additionally, performance degradation was observed under extreme lighting conditions and during occlusion events, suggesting the need for further model optimization. Some users also reported mild discomfort when using HoloLens 2 for extended periods, underscoring the need for more ergonomic MR devices. A future study will investigate the incorporation of instance segmentation architectures, such as Mask R-CNN or YOLO-SegFormer, to rectify misclassification under multi-vial conditions. These methodologies facilitate pixel-level segmentation, which is essential for distinguishing closely clustered or partially obscured vials. Furthermore, attention-based modules originating from transformer designs may be used to enhance feature differentiation in visually complex environments. Additional efforts will explore lightweight augmented reality glasses such as Magic Leap 2 and Nreal Light, which may offer improved comfort and reduced fatigue and thereby better long-term usability. Expanding the dataset to include a wider range of vial types and training in various real-world conditions will further refine the robustness of the model. The findings of this study demonstrate the feasibility of MR-assisted deep learning models in medical applications, paving the way for safer and more efficient vial identification systems in high-risk environments.

Author Contributions

Conceptualization, B.U.M. and G.H.; methodology, B.U.M.; software, B.U.M. and V.R.L.; validation, B.U.M., G.H., N.B. and Z.D.A.; formal analysis, B.U.M.; investigation, B.U.M. and G.H.; resources, B.U.M. and G.H.; data curation, B.U.M., G.H. and V.R.L.; writing—original draft preparation, B.U.M.; writing—review and editing, All authors; visualization, B.U.M., V.R.L. and N.B.; supervision, G.H., Z.D.A. and N.B.; project administration, G.H.; funding acquisition, G.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Weant, K.A.; Bailey, A.M.; Baker, S.N. Strategies for reducing medication errors in the emergency department. Open Access Emerg. Med. 2014, 6, 45–55.

- Kazi, R.; Hoyle, J.D., Jr.; Huffman, C.; Ekblad, G.; Ruffing, R.; Dunwoody, S.; Hover, T.; Cody, S.; Fales, W. An Analysis of Prehospital Pediatric Medication Dosing Errors after Implementation of a State-Wide EMS Pediatric Drug Dosing Reference. Prehospital Emerg. Care 2023, 28, 43–49.

- Filiatrault, P.; Hyland, S. Does colour-coded labelling reduce the risk of medication errors? Can. J. Hosp. Pharm. 2009, 62, 154–156.

- Anesthesia Patient Safety Foundation. Anesthesia Patient Safety Foundation Pro/Con Debate: Color-Coded Medication Labels—CON: Anesthesia Drugs Should NOT Be Color-Coded. Available online: https://www.apsf.org/article/pro-con-debate-color-coded-medication-labels-con-anesthesia-drugs-should-not-be-color-coded/ (accessed on 13 May 2025).

- WHO. WHO Collaborating Centre for Patient Safety Solutions Patient Identification Patient Safety Solutions; WHO: Geneva, Switzerland, 2007.

- Ble, C.; Tsitsopoulos, P.P.; Anestis, D.M.; Hadjileontiadou, S.; Koletsa, T.; Papaioannou, M.; Tsonidis, C. Osteoporotic spinal burst fracture in a young adult as first presentation of systemic mastocytosis. J. Surg. Case Rep. 2016, 2016, rjw063.

- The Benefits and Barriers to RFID Technology in Healthcare. Available online: https://gkc.himss.org/resources/benefits-and-barriers-rfid-technology-healthcare (accessed on 13 May 2025).

- Yoo, S.; Son, M.H. Virtual, augmented, and mixed reality: Potential clinical and training applications in pediatrics. Clin. Exp. Pediatr. 2024, 67, 92–103.

- Marullo, G.; Innocente, C.; Ulrich, L.; Faro, A.L.; Porcelli, A.; Ruggieri, R.; Vecchio, B.; Vezzetti, E. Home-based mirror therapy in phantom limb pain treatment: The augmented humans framework. Multimed. Tools Appl. 2025, 1–33.

- Koyuncu, M. Use of Artificial Intelligence in the Healthcare Sector. Lokman Hekim Health Sci. 2024, 5, 195–202.

- Mahmud, B.U.; Hong, G.Y.; Sharmin, A.; Asher, Z.D.; Hoyle, J.D., Jr. Accurate Medical Vial Identification Through Mixed Reality: A HoloLens 2 Implementation. Electronics 2024, 13, 4420.

- Lai, Z.-H.; Tao, W.; Leu, M.C.; Yin, Z. Smart Augmented Reality Instructional System for Mechanical Assembly towards Worker-Centered Intelligent Manufacturing. J. Manuf. Syst. 2020, 55, 69–81.

- Zheng, L.; Liu, X.; An, Z.; Li, S.; Zhang, R. A Smart Assistance System for Cable Assembly by Combining Wearable Augmented Reality with Portable Visual Inspection. Virtual Real. Intell. Hardw. 2020, 2, 12–27.

- Jiang, K.; Pan, S.; Yang, L.; Yu, J.; Lin, Y.; Wang, H. Surgical Instrument Recognition Based on Improved YOLOv5. Appl. Sci. 2023, 13, 11709.

- Gu, Y.; Si, B. A Novel Lightweight Real-Time Traffic Sign Detection Integration Framework Based on YOLOv4. Entropy 2022, 24, 487.

- Bahri, H.; Krčmařík, D.; Kočí, J. Accurate Object Detection System on HoloLens Using YOLO Algorithm. In Proceedings of the 2019 International Conference on Control, Artificial Intelligence, Robotics & Optimization (ICCAIRO), Athens, Greece, 8–10 December 2019; pp. 219–224.

- Eckert, M.; Blex, M.; Friedrich, C.M. Object Detection Featuring 3D Audio Localization for Microsoft HoloLens—A Deep Learning Based Sensor Substitution Approach for the Blind. In Proceedings of the 11th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2018), Madeira, Portugal, 19–21 January 2018.

- Farasin, A.; Peciarolo, F.; Grangetto, M.; Gianaria, E.; Garza, P. Real-Time Object Detection and Tracking in Mixed Reality Using Microsoft HoloLens. In Proceedings of the 17th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Online, 6–8 February 2022; pp. 165–172.

- Lysakowski, M.; Zywanowski, K.; Banaszczyk, A.; Nowicki, M.R.; Skrzypczyński, P.; Tadeja, S.K. Real-Time Onboard Object Detection for Augmented Reality: Enhancing Head-Mounted Display with YOLOv8. In Proceedings of the 2023 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 2–8 July 2023; pp. 364–371.

- Tiwari, A.; Patel, N.; Kumar, S. Enhancement of Real-Time Object Detection and Tracking in Collaborative Environment using AI and Mixed Reality. Int. J. Recent Innov. Trends Comput. Commun. 2023, 11, 1262–1272.

- Lolambean MRTK2-Unity Developer Documentation—MRTK 2. Available online: https://learn.microsoft.com/en-us/windows/mixed-reality/mrtk-unity/mrtk2/?view=mrtkunity-2022-05 (accessed on 3 April 2025).

- Al-Hababi, M.A.M.; Liu, Y. Enhanced Pre-Processing for Robust Small Object Detection. Procedia Comput. Sci. 2024, 242, 256–263.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).