1. Introduction

The role of Unmanned Aerial Vehicles (UAVs) in the Internet of Things (IoT) ecosystem has evolved from simple information collectors to critical mobile sensing nodes. Modern UAVs are equipped with high-resolution sensors capable of generating massive visual data streams; however, transmitting these data is constrained by limited wireless bandwidth [1], particularly when multiple UAVs operate in the same airspace, resulting in high transmission latency and degraded quality of service [2].

To mitigate these communication constraints, the research paradigm is shifting from data-centric approaches, which transmit raw data back to ground stations for processing, toward UAV-assisted edge computing. In this vision, UAVs act as mobile edge computing units that process video data locally, extract semantic information (e.g., object categories and coordinates), and transmit only the analytical results. Sending analysis outcomes rather than raw video streams substantially reduces reliance on high-bandwidth data links and, combined with other algorithms, can enable rapid autonomous decision-making even in unstable network environments [3]. However, this paradigm shift requires detection algorithms to run onboard the UAV, which imposes stringent constraints on power consumption, memory, and computational capacity.

Although two-stage detectors such as Faster R-CNN [4] achieve high accuracy, their heavy computational cost and slow inference make them unsuitable for real-time processing on drones. Similarly, although Transformer-based models such as RT-DETR-R18 [5] have global receptive fields, they often perform poorly on small objects because important spatial details may be lost during downsampling. In contrast, single-stage detectors, especially the YOLO (You Only Look Once) series, are better suited to edge deployment because they balance speed and accuracy [6]. Among them, YOLOv10 [7] reduces inference latency by eliminating the non-maximum suppression (NMS) post-processing required by earlier YOLO versions, making it a strong candidate for resource-constrained environments. However, YOLOv10 is designed mainly for natural images (such as those in the COCO dataset [8]), where objects are typically clearly visible and located near the center of the image. Our empirical analysis shows that the model spends a large share of its computation on the deep pyramid layer (P5) to extract high-level semantic abstractions, which are largely redundant for the tiny, pixel-limited objects typical of drone perspectives.

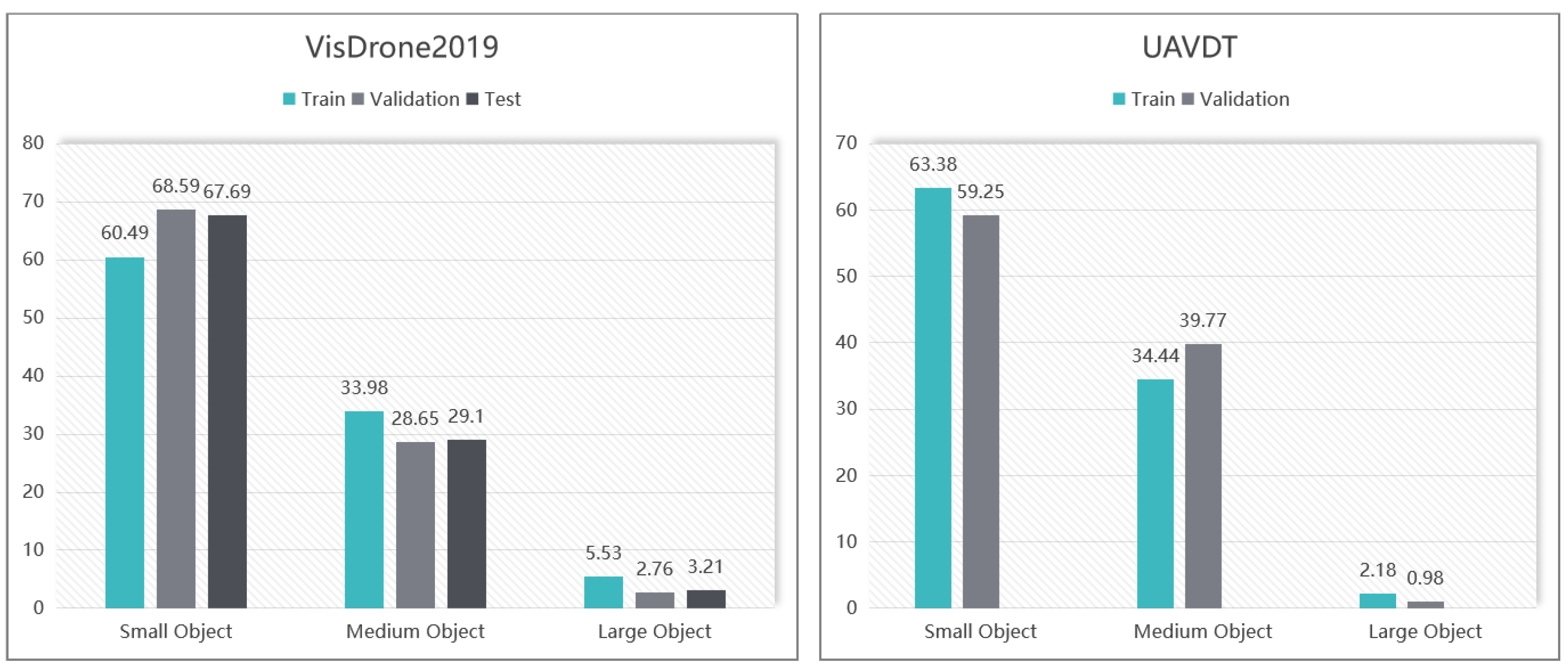

Our examination of the VisDrone2019 [9] and UAVDT [10] datasets reveals that the P5 layer accounts for merely 6.76% and 2.43% of total detections, respectively, despite its considerable computational overhead. We present a detailed analysis of how this affects detection performance in Section 3.1.

Based on this finding, we propose LSCNet (Lightweight Shallow Feature Cascade Network), a lightweight architecture for UAV-based small object detection built upon YOLOv10. Rather than relying on under-utilized deep pyramid layers, our approach concentrates on shallow-stage features, where small object information is better preserved. By reallocating computational resources from deep pyramid elaboration to shallow feature refinement, LSCNet achieves superior detection performance on both the VisDrone2019 and UAVDT datasets with a compact parameter count.

The design of LSCNet is motivated by the unique challenges of UAV-based object detection. As mentioned earlier, edge deployment on UAV platforms imposes strict constraints on model complexity, power consumption, and memory footprint, which calls for a lightweight architecture that can operate in resource-constrained environments. Beyond the limitations of the platform itself, dynamic high-altitude aerial photography is affected by adverse factors such as motion blur, environmental interference, and changing illumination. In addition, the high-altitude perspective introduces complex background clutter and more pronounced scale variation [11].

We conducted experimental validation on two demanding UAV object detection benchmarks, VisDrone and UAVDT. LSCNet improves mAP50 over the baseline YOLOv10 model by 10.1 and 5.06 percentage points on these datasets, respectively, and performs competitively against contemporary state-of-the-art lightweight detectors. Notably, these gains are achieved with fewer parameters than the baseline, confirming the computational efficiency of the LSCNet design. By combining substantially higher small object detection accuracy with a smaller model than YOLOv10, LSCNet offers a practical solution for real-time object detection on resource-limited edge platforms such as UAV systems.

Our primary contributions are threefold:

LoGStem Module [12]: When processing degraded aerial data with inherent blur and noise, conventional object detection backbones often lack adequate feature extraction capacity. To overcome this limitation at the network’s earliest stage, we adopt the LoGStem module from LEGNet, which was originally designed to improve feature representation in low-quality aerial imagery. LoGStem serves as a robust initial feature extractor that replaces the traditional P1 and P2 layers of the YOLO architecture.

SAOK Fusion Module: To effectively aggregate multi-scale information from the shallow feature cascade layers, where small object features are predominantly preserved, we propose the Small-target-Aware Omni-Kernel (SAOK) module. Drawing inspiration from the multi-branch architecture of the Omni-Kernel Network for image restoration [13], SAOK adapts the receptive field configuration and attention mechanism to the requirements of aerial small object detection.

DyHead [14]: To compensate for potential information loss resulting from the simplified two-layer pyramid architecture and to maximize detection capability within this streamlined framework, we adopt the Dynamic Head (DyHead) as our detection head. Traditional detection heads process features at different scales, spatial locations, and task objectives independently, leading to suboptimal feature utilization. DyHead addresses this limitation through a unified attention framework that coherently optimizes feature representations across three dimensions: scale, space, and task.

The remainder of this paper is organized as follows. Section 2 reviews related work on feature pyramid networks and aerial object detection. Section 3 details the architectural design of LSCNet, including the LoGStem and the proposed SAOK modules. Section 4 presents comprehensive experimental results and comparisons on the VisDrone2019 and UAVDT benchmarks. Section 5 compares our model with recent lightweight models. Finally, Section 6 concludes this paper and discusses future directions for lightweight onboard models in UAV systems.

2. Related Work

Object detection in UAV aerial imagery requires a balance between high precision on tiny targets and low computational cost for edge deployment. This is primarily due to the extreme scale variation, specific viewing angles, and complex backgrounds of aerial scenes, as well as the endurance and onboard hardware constraints of UAVs. In this section, we review related literature along three dimensions: multi-scale feature representations, small object detection in aerial imagery, and attention mechanisms.

Feature pyramid representations are the fundamental basis for handling scale variation in modern object detection. The early single-stage detector SSD [15] employs a bottom-up network structure, predicting objects from multi-scale feature maps computed during forward propagation. The Feature Pyramid Network (FPN) [16] introduced a top-down path with lateral connections. This design combines high-resolution shallow features with semantically rich deep features, thereby addressing the lack of semantic information in shallow layers. Building on this, PANet [17] introduced an additional bottom-up pathway, which effectively shortens the propagation path for lower-level features.

The evolution of the YOLO series has progressed alongside the development of feature pyramids, from YOLOv3 [18], which first adopted the FPN concept, to later YOLO versions [7,19,20,21] that integrated PANet’s bottom-up pathway. This trajectory shows how YOLO has continuously adapted pyramidal architectures, and the series has become a representative example of feature pyramid networks. Most notably, YOLOv10 [7] eliminates non-maximum suppression (NMS) and introduces efficient architectural designs to minimize latency. However, when these general-purpose models are applied to drone aerial scenarios, their deep structures often lose fine-grained features through repeated downsampling.

To overcome the resolution limitations of generic detectors, researchers have developed specialized strategies for high-altitude aerial imagery. Early approaches such as ION [22] used context-aware mechanisms, namely spatial recurrent neural networks, to compensate for insufficient feature resolution. Relation Networks [23] enhanced feature discriminability by modeling appearance and geometric relationships between objects. Another line of work incorporates super-resolution techniques that generate fine details of small objects at higher resolution, as demonstrated by Perceptual GAN [24] and MTGAN [25].

More recently, studies have increasingly turned to Transformer-based architectures, because purely CNN-based methods are limited in processing global contextual information. In this domain, DETR [26] and UAV-DETR [27] have demonstrated robust performance by effectively capturing global dependencies. Several hybrid approaches have been proposed to address the specific challenges of UAV imaging. For instance, RingMoLite [28] employs a dual-branch structure combining CNNs and Transformers, and Hyneter [29] uses a hybrid backbone with dual-switching modules to fuse local features with global dependencies. Similarly, AST [30] introduces a pyramid structure to jointly learn local details and global dependencies at various scales. Collectively, these studies demonstrate the potential of integrating attention mechanisms with feature pyramid structures. However, these methods bring substantial computational overhead: context modeling and GAN-based generation significantly increase inference latency, while Transformers typically demand extensive GPU memory and computing power.

Lightweight attention mechanisms offer a way to resolve the conflict between feature enrichment and computational constraints. Channel attention methods such as SENet [31] and Efficient Channel Attention (ECA) [32] adaptively recalibrate feature channels to emphasize informative representations. ECA is particularly efficient because it avoids dimensionality reduction and instead relies on local cross-channel interaction. Beyond channel-wise refinement, unified frameworks such as Dynamic Head (DyHead) [14] integrate scale-aware, spatial-aware, and task-aware mechanisms to coherently optimize representations across multiple dimensions. In this work, we integrate these efficient mechanisms to compensate for architectural simplification. Unlike prior works that apply attention to deep backbones, LSCNet embeds the Omni-Kernel strategy (SAOK) and DyHead specifically within the Shallow Feature Cascade Network. This design exploits the lightweight nature of attention to maximize the discriminative ability of shallow features, thereby balancing edge-device efficiency and small object detection accuracy.
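ECA’s local cross-channel interaction can be sketched in plain Python, with nested lists standing in for tensors: each channel is pooled to one value, a short 1D convolution mixes only neighbouring channels, and a sigmoid gate rescales the input. The adaptive kernel-size rule follows the original ECA paper; whether SAOK uses that rule or a fixed kernel size is not stated here, and the function names are illustrative.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size from the ECA paper: an odd value derived from
    |(log2(C) + b) / gamma|, rounded the same way as the reference code."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca(feature, weights):
    """Efficient Channel Attention on a C x H x W feature given as nested lists.

    weights: odd-length 1D kernel shared by all channels (learned in practice).
    Returns the recalibrated feature and the per-channel gates.
    """
    c, k = len(feature), len(weights)
    pad = k // 2
    # 1) Global average pooling: one descriptor per channel, no dimensionality reduction.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    # 2) Local cross-channel interaction: 1D convolution over neighbouring channels,
    #    zero-padded at both ends of the channel axis.
    mixed = []
    for i in range(c):
        s = 0.0
        for j, wj in enumerate(weights):
            idx = i + j - pad
            if 0 <= idx < c:
                s += wj * pooled[idx]
        mixed.append(s)
    # 3) Sigmoid gates rescale each channel of the input.
    gates = [1.0 / (1.0 + math.exp(-m)) for m in mixed]
    out = [[[v * g for v in row] for row in ch] for ch, g in zip(feature, gates)]
    return out, gates
```

For 64 channels the rule gives k = 3 and for 256 channels k = 5, so the attention costs only k weights regardless of width, in contrast to the two fully connected layers of an SE block.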

Current deep pyramid models are often computationally demanding for high-altitude small object detection on UAV platforms, while existing lightweight models typically suffer significant accuracy degradation. LSCNet addresses this problem by balancing performance and efficiency: by integrating the aforementioned lightweight attention mechanisms into our Shallow Feature Cascade, the model achieves high-precision detection with fewer parameters.

3. Method

In this section, we explain the detailed implementation of LSCNet, structured as follows. First, Section 3.1 presents the deep feature layer efficiency analysis that motivates our Shallow Feature Cascade architecture. Section 3.2 introduces the Small-target-Aware Omni-Kernel (SAOK) module designed for effective multi-scale feature fusion. Section 3.3 describes the LoGStem module used for robust initial feature extraction. Finally, Section 3.4 elaborates on the integration of the Dynamic Head (DyHead) mechanism to enhance detection capability through unified attention.

We introduce LSCNet, a novel architecture designed specifically for small object detection in UAV imagery. Our approach revisits the YOLO framework through three key innovations: improved feature extraction, dynamic detection capabilities, and architectural simplification. Our fundamental design goal is to optimize detection accuracy while maintaining model efficiency. Specifically, LSCNet employs a simplified architecture with only two detection heads, at 8× and 16× downsampling, to prevent the loss of small object information caused by deep feature propagation.

LSCNet’s architectural design, illustrated in Figure 1, addresses key challenges in UAV-based small object detection through specialized modules. The LoGStem component strengthens the initial feature extraction stage by outputting quarter-resolution representations while simultaneously filtering noise through Laplacian-of-Gaussian operations. To enable seamless multi-scale fusion, backbone P4 features are spatially downsampled via PixelUnshuffle, ensuring dimensional alignment with P3-level SPPF outputs after upsampling.
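PixelUnshuffle lowers spatial resolution without discarding any values: each r × r block of pixels is moved into r² separate channels. The sketch below reproduces that rearrangement on nested lists, assuming the channel ordering used by PyTorch’s `torch.nn.PixelUnshuffle`; the helper name is ours.

```python
def pixel_unshuffle(x, r):
    """Rearrange a C x (H*r) x (W*r) feature (nested lists) into (C*r*r) x H x W.

    Channel ordering follows torch.nn.PixelUnshuffle:
    out[c*r*r + i*r + j][y][x] = in[c][y*r + i][x*r + j].
    """
    c, hr, wr = len(x), len(x[0]), len(x[0][0])
    if hr % r or wr % r:
        raise ValueError("spatial size must be divisible by the downscale factor r")
    h, w = hr // r, wr // r
    out = []
    for ch in range(c):
        for i in range(r):          # row offset inside each r x r block
            for j in range(r):      # column offset inside each r x r block
                out.append([[x[ch][yy * r + i][xx * r + j] for xx in range(w)]
                            for yy in range(h)])
    return out
```

With r = 2, a C × 2H × 2W map becomes 4C × H × W, so a higher-resolution map can be brought to the next pyramid stride while keeping every value, unlike pooling.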

LSCNet pursues computational efficiency by eliminating resource-intensive deep detection heads. Combined with these architectural adaptations, our SAOK module shows that removing one detection head does not compromise small object detection accuracy. The module processes fused P3–P4 features using multi-scale kernels with ECA attention for enhanced feature refinement. Integrating DyHead compensates for the reduced number of heads, improving detection while keeping computational cost acceptable.

3.1. Shallow Feature Cascade

Standard detectors typically allocate substantial computational resources to deep pyramid levels (e.g., P5) under the assumption that larger receptive fields universally benefit detection. However, this paradigm, while effective for natural scene images, warrants re-examination in the context of UAV-based small object detection.

To investigate the actual contribution of each pyramid level in aerial small object detection, we conducted a quantitative analysis of the standard YOLOv10 architecture on two representative UAV datasets, VisDrone2019 and UAVDT. As detailed in Table 1 and Table 2, we evaluated the 548 validation images of VisDrone2019 and analyzed the distribution of detections across the three pyramid levels (P3, P4, and P5). On VisDrone2019, the P3 layer accounts for 75.92% of all effective detections, while the P4 layer contributes 17.31%. Remarkably, the deepest detection layer, P5, produces merely 6.76% of detections despite consuming substantial computational resources. This pattern is even stronger on UAVDT, where P5 contributes less than 3% of detections while P3 and P4 together account for over 97%. The confidence of the bounding boxes output by the P5 layer is the highest on VisDrone2019 but lower on UAVDT. Overall, the baseline’s small object detection results were unsatisfactory.
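One way to attribute detections to pyramid levels in a YOLO-style head is sketched below. It assumes a 640 × 640 input with strides 8, 16, and 32 and that the head concatenates its per-cell predictions level by level (P3, then P4, then P5) in row-major order, so the index of a kept prediction identifies its level. This is an illustration under those assumptions, not necessarily the exact procedure behind Tables 1 and 2, and the function names are hypothetical.

```python
def level_sizes(img_size=640, strides=(8, 16, 32)):
    """Number of grid cells (one prediction each) per level for a square input."""
    return [(img_size // s) ** 2 for s in strides]


def level_of(anchor_idx, sizes):
    """Map an index into the concatenated prediction list to its pyramid level."""
    start = 0
    for level, n in enumerate(sizes):
        if anchor_idx < start + n:
            return level
        start += n
    raise IndexError("anchor index exceeds the number of predictions")


def level_shares(anchor_indices, img_size=640, strides=(8, 16, 32),
                 names=("P3", "P4", "P5")):
    """Percentage of kept detections that originate from each level."""
    sizes = level_sizes(img_size, strides)
    counts = {name: 0 for name in names}
    for idx in anchor_indices:
        counts[names[level_of(idx, sizes)]] += 1
    total = sum(counts.values())
    if total == 0:
        return {name: 0.0 for name in names}
    return {name: 100.0 * n / total for name, n in counts.items()}
```

At 640 × 640 the three levels hold 6400, 1600, and 400 cells, so P5 supplies fewer than 5% of all candidate positions before any filtering.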

The fundamental cause of this phenomenon is that, although deep features have larger receptive fields and richer semantic information, the features of small targets are severely attenuated, or vanish entirely, as spatial resolution drops through repeated downsampling. For example, a 16 × 16-pixel object spans roughly a 2 × 2 block of cells on the stride-8 P3 map but covers only about a quarter of a single cell on the stride-32 P5 map. Shallow features, by contrast, retain more local and spatial detail.

Motivated by this finding, we propose the LSCNet architecture, which concentrates computational resources on the fusion and enhancement of shallow features rather than distributing them across the three detection heads of the conventional YOLO structure. In particular, LSCNet keeps only two detection layers. More importantly, this design allows us to use a more powerful initial backbone while substantially reducing the network’s computational complexity and parameter count.

Experimental validation on both benchmark datasets substantiates the efficacy of the proposed architecture. On VisDrone2019, LSCNet achieves an mAP50 of 44.6%, an improvement of 10.1 percentage points over the baseline YOLOv10n. Similarly, on UAVDT, LSCNet improves on YOLOv10n’s 31.04% mAP50 by 5.06 percentage points, while reducing the parameter count by 33%.

3.2. SAOK

We apply the Small-target-Aware Omni-Kernel (SAOK) module at the P3 and P4 feature fusion stage to improve multi-scale feature representation. Inspired by the Omni-Kernel Network [13] for image restoration, SAOK uses an adaptive aggregation of multi-scale convolution kernels to efficiently capture feature information at various scales and improve feature discriminability.

However, we make important adjustments to handle the particular difficulties of UAV aerial scenarios. As a key part of LSCNet, SAOK is designed specifically for the feature fusion problem at the crucial P3 layer, where small object information in UAV images is most prominent. Within the LSCNet architecture, SAOK links the shallow edge features extracted by LoGStem with deeper semantic representations. Placed after the P3 feature extraction stage (see Figure 2), SAOK combines pixel-unshuffle-processed shallow features with multi-scale contextual information from both P3 and upsampled P4 features. This placement lets SAOK operate at a relatively shallow feature resolution, preserving sufficient spatial detail while gaining rich semantic context for small objects.

Kernel Configuration for Small Objects: Drawing inspiration from the Omni-Kernel architecture of Cui et al. [13] for image restoration, we adapt the multi-scale convolution strategy to aerial small object detection. Unlike the original design, which employs ultra-large 63 × 63 kernels optimized for high-resolution image reconstruction, SAOK adopts a kernel configuration tailored to UAV imagery: 1 × 1 kernels capture local fine-grained details, 5 × 5 and 7 × 7 kernels extract nearby contextual information, and 15 × 15 kernels capture broader spatial relationships. This choice stems from a critical observation: small objects in UAV imagery (typically spanning 10–50 pixels) require moderate receptive fields that balance the preservation of fine-grained features against sufficient contextual awareness. In addition, strip-shaped kernels (1 × 15 and 15 × 1) strengthen the module’s ability to capture elongated structures, such as vehicle edges and road boundaries, that frequently appear in aerial views.
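To put the kernel change in numbers, the sketch counts depthwise weights for the six SAOK kernels and, for comparison, for spatial depthwise branches of 1 × 63, 63 × 1, 63 × 63, and 1 × 1. We assume these correspond to the original Omni-Kernel’s spatial branches and ignore its other branches; biases are omitted, and the 64-channel width used below is hypothetical.

```python
# Spatial depthwise kernels of SAOK as listed in the text, as (kh, kw).
SAOK_KERNELS = [(1, 15), (15, 1), (15, 15), (1, 1), (5, 5), (7, 7)]
# Assumed spatial depthwise branches of the original 63-pixel Omni-Kernel
# (its non-spatial branches are not counted here).
OMNI_KERNELS = [(1, 63), (63, 1), (63, 63), (1, 1)]


def depthwise_weights(kernels, channels):
    """Weights of a bank of depthwise convs: kh * kw per channel per branch (no bias).

    With stride 1 and 'same' padding this is also the number of
    multiply-accumulates per output spatial position for the whole bank.
    """
    return channels * sum(kh * kw for kh, kw in kernels)
```

For 64 channels, `depthwise_weights(SAOK_KERNELS, 64)` gives 21,120 weights against 262,144 for the 63-pixel configuration, roughly a 12× reduction in both weights and per-pixel multiply-accumulates.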

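ECA-enhanced Omni-Kernel (ECAOK): To effectively aggregate the multi-scale features extracted by the diverse kernel configurations, we design the ECA-enhanced Omni-Kernel (ECAOK) module as the core computational unit of SAOK. ECAOK integrates a channel attention mechanism with adaptive multi-scale feature fusion to enhance feature discriminability for small objects. Given an input feature $X \in \mathbb{R}^{C \times H \times W}$, the ECAOK module first applies a lightweight feature transformation:

$$X' = \mathrm{GELU}\big(\mathrm{Conv}_{1\times 1}(X)\big) \tag{1}$$

where GELU [33] is a nonlinear activation function based on the Gaussian distribution, defined as

$$\mathrm{GELU}(x) = x\,\Phi(x) = \frac{x}{2}\left[1 + \mathrm{erf}\!\left(\frac{x}{\sqrt{2}}\right)\right] \tag{2}$$

with $\Phi$ the cumulative distribution function of the standard normal distribution. GELU combines the non-saturating behavior of ReLU for positive inputs with the smoothness of sigmoid-type functions, so it can be viewed as a smooth relaxation of ReLU.

Subsequently, the transformed features are processed by two parallel branches: an Efficient Channel Attention (ECA) branch [32] and a multi-scale depthwise convolution branch. To highlight channels that are informative for small object representation, the ECA branch adaptively recalibrates channel-wise feature responses:

$$F_{\mathrm{eca}} = X' \odot \sigma\big(\mathrm{Conv1D}_{k}(\mathrm{GAP}(X'))\big) \tag{3}$$

where $\mathrm{GAP}$ denotes global average pooling, $\mathrm{Conv1D}_{k}$ is a one-dimensional convolution of kernel size $k$ along the channel dimension, $\sigma$ is the sigmoid function, and $\odot$ denotes channel-wise multiplication.

Simultaneously, the multi-scale branch aggregates spatial features across different receptive fields through weighted depthwise convolutions:

$$F_{\mathrm{ms}} = \sum_{i=1}^{6} w_i\, \mathrm{DWConv}_{k_i}(X'), \qquad w_i = \frac{\exp(\alpha_i)}{\sum_{j=1}^{6} \exp(\alpha_j)} \tag{4}$$

where $\mathrm{DWConv}_{k_i}$ represents the depthwise convolution with kernel size $k_i \in \{1 \times 15,\ 15 \times 1,\ 15 \times 15,\ 1 \times 1,\ 5 \times 5,\ 7 \times 7\}$ and $w_i$ denotes the learnable aggregation weight obtained by softmax normalization of the parameters $\alpha_i$. This adaptive weighting lets the network automatically adjust the contribution of each scale according to the characteristics of the input features.

Finally, the outputs of both branches are fused with the original input through a residual connection, followed by activation and projection:

$$Y = \mathrm{Conv}_{1\times 1}\big(\delta(X + F_{\mathrm{eca}} + F_{\mathrm{ms}})\big) \tag{5}$$

where $\delta(\cdot)$ denotes the nonlinear activation.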

This design enables the ECAOK module to not only process rich multi-scale spatial information but also effectively discriminate features across different channels. The residual connection further facilitates gradient flow and preserves original details to better serve the recognition of small object features.
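The softmax-weighted multi-scale depthwise aggregation described above can be illustrated on nested lists. The sketch assumes one learnable scalar per branch (softmax-normalised) and zero "same" padding; it omits the 1 × 1 transformation, the ECA branch, and the residual projection, and all names are hypothetical.

```python
import math


def conv2d_same(img, kernel):
    """Single-channel 2D cross-correlation with zero 'same' padding (odd kh, kw)."""
    h, w = len(img), len(img[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for i in range(kh):
                for j in range(kw):
                    yy, xx = y + i - ph, x + j - pw
                    if 0 <= yy < h and 0 <= xx < w:
                        s += kernel[i][j] * img[yy][xx]
            out[y][x] = s
    return out


def softmax(logits):
    """Numerically stable softmax over a list of scalars."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    z = sum(exps)
    return [e / z for e in exps]


def multiscale_branch(feature, branch_kernels, logits):
    """Softmax-weighted sum of depthwise convolutions.

    feature: list of C channels (H x W nested lists).
    branch_kernels[b][c]: kernel of branch b for channel c (one kernel per channel).
    logits: one learnable scalar per branch (hypothetical parameterisation).
    """
    weights = softmax(logits)
    out = []
    for c, ch in enumerate(feature):
        acc = [[0.0] * len(ch[0]) for _ in ch]
        for wb, kernels in zip(weights, branch_kernels):
            resp = conv2d_same(ch, kernels[c])  # depthwise: channel c uses only its own kernel
            for y, row in enumerate(resp):
                for x, v in enumerate(row):
                    acc[y][x] += wb * v
        out.append(acc)
    return out, weights
```

Because the weights pass through a softmax, they always sum to 1, so training can shift emphasis between, for example, the strip kernels and the 5 × 5 kernel without changing the overall scale of the branch output.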

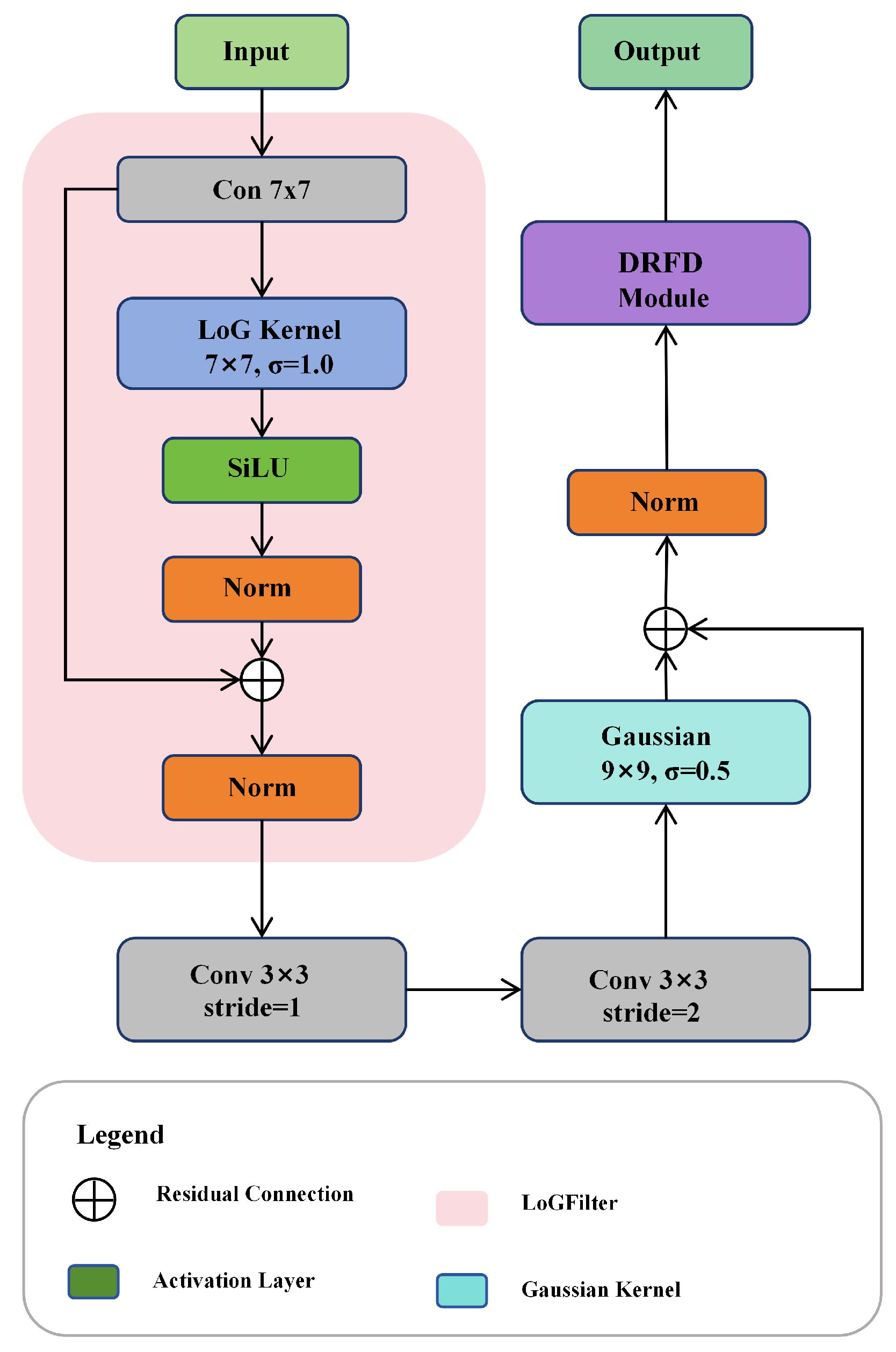

3.3. LoGStem

The feature extractors of traditional YOLO models are often inadequate for small targets or blurry images. The early stage of the network therefore needs a more reliable feature extractor suited to blurry small targets in complex scenes. We adopt the LoGStem of the LEGNet network [12]. LoGStem, the first feature extraction module of LEGNet, was designed specifically to handle noise and edge information loss in low-quality remote sensing images. It exploits the dual capability of LoG filters for edge detection and noise suppression, enhancing edge features early in the network. Moreover, its output is downsampled to 1/4 resolution, which matches the output of YOLOv10’s P1 and P2 layers. This module therefore serves as the first component of our enhanced backbone.

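LoG filters combine the benefits of the Laplacian operator and Gaussian smoothing. The LoG kernel is given by Equation (6), and the Gaussian kernel, with σ = 1.0 and a 7 × 7 kernel size, by Equation (7):

$$\mathrm{LoG}(x, y) = -\frac{1}{\pi\sigma^{4}}\left[1 - \frac{x^{2} + y^{2}}{2\sigma^{2}}\right]\exp\!\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right) \tag{6}$$

$$G(x, y) = \frac{1}{2\pi\sigma^{2}}\exp\!\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right) \tag{7}$$

where $(x, y)$ is the offset from the kernel center and $\sigma$ is the standard deviation.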

The LoGStem structure is shown in Figure 3.
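The LoG and Gaussian kernels described above can be sampled on a 7 × 7 grid with σ = 1.0 as follows. Renormalising the truncated Gaussian to unit sum and shifting the LoG kernel to zero sum are common practical choices that we assume here; LEGNet’s implementation may handle truncation differently.

```python
import math


def gaussian_kernel(size=7, sigma=1.0):
    """Discrete Gaussian G(x, y) sampled on a size x size grid centred at 0."""
    half = size // 2
    k = [[math.exp(-(x * x + y * y) / (2 * sigma ** 2)) / (2 * math.pi * sigma ** 2)
          for x in range(-half, half + 1)]
         for y in range(-half, half + 1)]
    total = sum(map(sum, k))
    # Renormalise so the truncated kernel sums to 1 (assumed practical choice).
    return [[v / total for v in row] for row in k]


def log_kernel(size=7, sigma=1.0):
    """Discrete Laplacian of Gaussian:
    -1/(pi*sigma^4) * (1 - r^2/(2*sigma^2)) * exp(-r^2/(2*sigma^2))."""
    half = size // 2
    k = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            q = (x * x + y * y) / (2 * sigma ** 2)
            row.append(-1.0 / (math.pi * sigma ** 4) * (1 - q) * math.exp(-q))
        k.append(row)
    mean = sum(map(sum, k)) / (size * size)
    # Shift to zero sum so flat regions give no response (assumed practical choice).
    return [[v - mean for v in row] for row in k]
```

The LoG kernel has a negative centre surrounded by a positive ring, so it responds strongly at blob-like structures and edges while the built-in Gaussian factor damps pixel-level noise.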

3.4. DyHead

To compensate for potential performance degradation from the simplified two-layer detection architecture, we integrate the Dynamic Head (DyHead) [14] to enhance the detection capability of LSCNet. DyHead provides a unified detection head framework based on attention, coherently combining three sequential self-attention mechanisms over the three fundamental dimensions of the feature tensor: level, space, and channel.

Scale-aware attention dynamically fuses features from different pyramid levels based on their semantic importance. Complementing our P3- and P4-focused architecture in LSCNet, this attention mechanism adaptively aggregates multi-resolution features to enhance the model’s perception capability for objects at varying scales, particularly small objects.

Spatial-aware attention models long-range spatial dependencies through deformable convolution. By concentrating on discriminative regions that consistently coexist at both spatial locations and feature levels, this method strengthens the spatial structure of objects and enhances localization performance in difficult situations like occlusion and motion blur.

Operating on the channel dimension, task-aware attention dynamically switches feature channels ON and OFF to favor distinct detection tasks (classification versus localization). For small objects in UAV imagery, which typically exhibit weak and ambiguous feature responses, this mechanism ensures that task-specific features, whether for object classification or bounding box regression, are appropriately emphasized during forward propagation. The ablation studies in Section 4.4 validate the effectiveness of this integrated dynamic head design.
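Two of DyHead’s three attentions reduce to simple per-level and per-channel operations, sketched below on nested lists. The scale-aware part follows the paper’s form σ(f(·))·F with a hard sigmoid, implementing f as spatial average pooling followed by a linear map to one scalar per level; the task-aware part uses the dynamic-ReLU form max(α¹x + β¹, α²x + β²). The coefficients passed in here are hypothetical constants, whereas DyHead predicts them from the features, and the spatial-aware (deformable convolution) part is not shown.

```python
def hard_sigmoid(x):
    """Hard sigmoid as written in the DyHead paper: max(0, min(1, (x + 1) / 2))."""
    return max(0.0, min(1.0, (x + 1.0) / 2.0))


def scale_aware(levels, w, b):
    """Scale-aware attention sketch.

    levels: list of pyramid levels, each a list of C channels (H x W nested lists).
    w, b: hypothetical parameters of a linear map from the C pooled values to one
    scalar, shared across levels (learned in practice).
    """
    out = []
    for feat in levels:
        # Average each channel over space, then reduce the C values to one score.
        pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feat]
        gate = hard_sigmoid(sum(wi * pi for wi, pi in zip(w, pooled)) + b)
        # Every value of this level is rescaled by the same importance weight.
        out.append([[[v * gate for v in row] for row in ch] for ch in feat])
    return out


def task_aware(values, alpha1, beta1, alpha2, beta2):
    """Dynamic-ReLU-style activation max(a1*x + b1, a2*x + b2), one set of
    coefficients per channel (hypothetical constants here)."""
    return [max(a1 * x + b1, a2 * x + b2)
            for x, a1, b1, a2, b2 in zip(values, alpha1, beta1, alpha2, beta2)]
```

With α¹ = 1, β¹ = 0 and α² = β² = 0 the task-aware activation reduces to an ordinary ReLU; other coefficient choices let each channel be amplified, suppressed, or switched off for a given task.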

4. Experiment

4.1. Implementation Details

All experimental procedures were conducted on a computational platform equipped with an Intel Xeon Platinum 8352V processor, 90 GB system memory, and an NVIDIA GeForce RTX 4090 graphics card, operating under Ubuntu 20.04.4 LTS. The software stack comprised Python 3.10.14, PyTorch 2.2.2 with CUDA 12.1 support, and the Ultralytics 8.2.50 framework. To ensure equitable comparison across all evaluated architectures, we adopted a consistent training protocol.

All models underwent training from random initialization without leveraging any pre-trained weights, thereby eliminating potential biases from transfer learning. The training regimen consisted of 300 epochs with input images resized to 640 × 640 pixels and a batch size of 8 samples per iteration. Additional hyperparameters, encompassing optimizer selection and learning rate scheduling mechanisms, adhered to YOLOv10’s default configuration to preserve experimental reproducibility and maintain consistency across comparative studies.

4.2. Evaluation Metrics

To evaluate the effectiveness of our proposed algorithm on UAV aerial imagery, we employ a combination of accuracy metrics and model complexity indicators. For detection accuracy, we use Precision (P), Recall (R), and mean Average Precision (mAP) as the primary evaluation metrics. We assess computational efficiency through the number of parameters, which directly reflects model size and deployment feasibility on resource-constrained UAV platforms, together with GFLOPs and FPS. The metrics mAP50 and mAP50:95 denote the mean AP across all classes at an Intersection over Union (IoU) threshold of 0.5 and averaged over IoU thresholds from 0.5 to 0.95, respectively. IoU represents the overlap between predicted and ground-truth bounding boxes, calculated as the ratio of their intersection to their union.

In the confusion matrix, True Positives (TP) are correct detections, i.e., predicted boxes that match a ground-truth object; False Negatives (FN) are actual objects missed by the detector; False Positives (FP) are erroneous detections of objects that do not exist; and True Negatives (TN) are correctly rejected background regions.

Based on these definitions, precision measures the proportion of correct detections among all detections made by the model. It reflects the model’s ability to avoid false detections and is calculated as

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{8}$$

Recall evaluates the proportion of actual objects that are successfully detected by the model. It indicates the model’s capability to find all existing targets and is defined as

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{9}$$

To balance false positives and false negatives, and therefore consider both Precision and Recall, the F1 score is the harmonic mean of Precision and Recall. This prevents a model from achieving a high score by sacrificing one of the two metrics. The formula is

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{10}$$
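Precision, recall, and F1 follow directly from the TP, FP, and FN counts defined above. The sketch returns 0.0 when a ratio is undefined, which is a convention we choose rather than one stated in the text.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from detection counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1
```

For 8 true positives, 2 false positives, and 8 false negatives, precision is 0.8 and recall 0.5, and F1 is 8/13 ≈ 0.615, below the arithmetic mean of 0.65, because the harmonic mean penalises the gap between the two.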

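IoU is calculated as the ratio of the intersection area to the union area between a predicted bounding box $B_{p}$ and its corresponding ground truth $B_{gt}$, where $|B_{p} \cap B_{gt}|$ denotes the intersection area and $|B_{p} \cup B_{gt}|$ denotes the union area of the two boxes. The formula is

$$\mathrm{IoU} = \frac{|B_{p} \cap B_{gt}|}{|B_{p} \cup B_{gt}|} \tag{11}$$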
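IoU for axis-aligned boxes can be computed as below, assuming the (x1, y1, x2, y2) corner format with x2 > x1 and y2 > y1.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # 0 if the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

The metric is unforgiving for small objects: `iou((0, 0, 16, 16), (4, 4, 20, 20))` is 144 / 368 ≈ 0.39, so a 4-pixel offset already pushes a 16 × 16 box below the 0.5 threshold.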

Average Precision (AP) is then computed as the area under the Precision–Recall (PR) curve for a specific object category at a given IoU threshold, where $P(R)$ denotes the precision as a function of recall $R$ and $dR$ represents the differential element of recall used to compute the integral area under the PR curve. The formula is

$$AP = \int_{0}^{1} P(R)\, dR \tag{12}$$
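In practice the PR curve is built by ranking detections by confidence, and the integral is approximated from the resulting points. The sketch uses all-point interpolation (PASCAL VOC style): precision is replaced by its running maximum from the right before rectangle areas are summed. Ultralytics’ validator instead samples this envelope at 101 recall points, so results can differ slightly; `is_tp` is assumed to come from IoU matching at a fixed threshold.

```python
def pr_curve(scores, is_tp, num_gt):
    """Cumulative recall/precision after each detection, ranked by confidence."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls, precisions):
    """All-point interpolated area under the PR curve."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangles; steps where recall does not change contribute zero.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))
```

For three detections with confidences 0.9, 0.8, 0.7, of which the first and third are correct, and two ground-truth objects, the interpolated AP is 0.5 × 1.0 + 0.5 × (2/3) = 5/6.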

Mean Average Precision (mAP) extends this concept by averaging AP values across all object categories:

$$\mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP(i) \tag{13}$$

where $N$ represents the number of object categories and $AP(i)$ denotes the average precision for category $i$. In our experiments, we report both mAP50 (mAP at an IoU threshold of 0.5) and mAP50:95 (mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05); the latter provides a more stringent evaluation of localization accuracy.
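Detectors validated with Ultralytics-style tooling report mAP50 alongside mAP50-95, which averages AP over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, following the COCO protocol. The sketch shows how both numbers follow from a per-class, per-threshold AP table; the table layout is an assumption.

```python
# Ten IoU thresholds used for mAP50:95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [0.50 + 0.05 * t for t in range(10)]


def map50_and_map50_95(ap_table):
    """ap_table[c][t] is the AP of class c at IOU_THRESHOLDS[t].

    mAP50 averages the first column over classes; mAP50:95 first averages each
    class over all ten thresholds, then averages over classes.
    """
    n = len(ap_table)
    map50 = sum(row[0] for row in ap_table) / n
    map50_95 = sum(sum(row) / len(row) for row in ap_table) / n
    return map50, map50_95
```

A class that is only ever localized loosely (AP 1.0 at IoU 0.5 but 0.0 at all stricter thresholds) contributes fully to mAP50 but only 0.1 to mAP50:95, which is why the stricter metric is more sensitive to localization quality on tiny objects.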

GFLOPs (giga floating-point operations) quantifies the theoretical computational complexity of the model. It represents the number of floating-point operations, in billions, required to perform a single forward pass on an input image. The formula is

$$\mathrm{GFLOPs} = \frac{\mathrm{FLOPs}}{10^{9}} \tag{14}$$

Lower GFLOPs indicate reduced computational demand. Although actual runtime may vary across hardware platforms due to implementation and optimization differences, GFLOPs serves as a general indicator of the computational resources required to run a model.

FPS (frames per second) measures inference speed, indicating the number of images the model can process in one second. It is calculated as

$$\mathrm{FPS} = \frac{N_{\mathrm{frames}}}{T_{\mathrm{total}}} \tag{15}$$

where $N_{\mathrm{frames}}$ denotes the total number of processed frames and $T_{\mathrm{total}}$ represents the total elapsed time in seconds. Higher FPS indicates faster model inference.

4.3. Datasets

We conducted experiments on two challenging UAV datasets, VisDrone2019 and UAVDT. Both datasets cover diverse scenarios, including different cities and weather conditions.

As shown in Figure 4, we conducted a statistical analysis of the object size distribution in the two datasets. Following the COCO standard [8], objects are categorized as small (area less than 32² pixels), medium (area between 32² and 96² pixels), and large (area greater than 96² pixels). The statistics reveal that both datasets are heavily dominated by small objects, which constitute over 60% of the samples in each. Large objects are extremely rare in both datasets, particularly in UAVDT, where they account for less than 3%.
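The size buckets above can be reproduced with a few lines of Python. This sketch uses box width × height as the area and treats each upper bound as exclusive; COCO’s official evaluation uses the annotation area field and its own boundary handling, so counts may differ marginally.

```python
from collections import Counter

SMALL_MAX = 32 ** 2   # 1024 px^2
MEDIUM_MAX = 96 ** 2  # 9216 px^2


def size_category(width, height):
    """COCO-style size bucket from a box's pixel area."""
    area = width * height
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def size_distribution(boxes):
    """Percentage of (width, height) boxes falling into each bucket."""
    counts = Counter(size_category(w, h) for w, h in boxes)
    total = sum(counts.values())
    return {k: 100.0 * counts[k] / total if total else 0.0
            for k in ("small", "medium", "large")}
```

Applied to the (width, height) pairs of every annotation in a split, `size_distribution` yields percentages of the kind summarized in the size statistics above.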

4.3.1. VisDrone

The VisDrone2019 dataset, created by the AISKYEYE team at the Machine Learning and Data Mining Laboratory of Tianjin University, includes 10,209 static images and 288 video clips with 261,908 frames captured in various cities and scenarios. Its object detection task comprises 6471 training images, 548 validation images, and 3190 test images, annotated with ten object categories such as trucks, cars, vans, and pedestrians. With a total of 2.6 million annotated boxes, it poses major challenges typical of real-world drone applications, including occlusion, dense tiny objects, and variable illumination and weather across many locations and settings. Because it covers a wide range of UAV aerial surveillance scenarios, this dataset is well suited for assessing model performance and demonstrating the efficacy of our approach. We also use it to compare LSCNet with several recently released lightweight models.

4.3.2. UAVDT

The UAVDT (UAV Detection and Tracking) benchmark was released by the Computer Vision Laboratory at Shenzhen University in 2019. This comprehensive dataset comprises over 10 h of raw videos with approximately 80,000 representative frames extracted from 50 video sequences. The sequences are captured under diverse real-world scenarios, including different weather conditions, varying camera views, different altitudes, and various urban and rural environments. The dataset provides detailed annotations with over 2.8 million bounding boxes across three primary categories: car, truck, and bus. Due to its focus on vehicle detection from UAV perspectives, UAVDT has been widely adopted in the computer vision community as an important benchmark for evaluating detection model performance under realistic UAV operational conditions.

UAVDT is uniquely structured as a dual-purpose benchmark for both object detection and tracking tasks. The motion blur introduced during UAV flight significantly increases detection difficulty, as objects may appear distorted across frames. However, this characteristic makes UAVDT more representative of real-world scenarios where UAVs encounter dynamic conditions such as platform instability and varying flight speeds.

4.4. Ablation Study

To validate the effectiveness of each component in LSCNet, we designed ablation experiments with single modules and module combinations. The experiments, shown in Table 3, use YOLOv10n as the baseline and are evaluated on the VisDrone2019-val dataset. All performance metrics, including mAP50, mAP50:95, parameter count, and GFLOPs, are obtained through YOLO’s official validation script using fused parameters. FPS values are calculated as the average of five independent test runs; each run is preceded by a 200-image GPU warm-up, followed by measuring the total inference time on 1000 images.
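The FPS protocol described above (warm-up, then timing a fixed number of images, averaged over several runs) can be sketched as follows. This is a minimal, framework-agnostic sketch, not the authors' benchmarking script: `infer` is a hypothetical callable wrapping one forward pass, and `sync` is an optional hook that should block until queued GPU work finishes (for a PyTorch model on CUDA, `torch.cuda.synchronize` would serve), since asynchronous GPU execution otherwise makes wall-clock timing unreliable.

```python
import statistics
import time
from typing import Any, Callable, List, Optional, Sequence, Tuple


def measure_fps(
    infer: Callable[[Any], Any],
    images: Sequence[Any],
    warmup: int = 200,
    n_timed: int = 1000,
    runs: int = 5,
    sync: Optional[Callable[[], None]] = None,
) -> Tuple[float, List[float]]:
    """Return (mean FPS, per-run FPS); every run has its own untimed warm-up."""
    if len(images) < max(warmup, n_timed):
        raise ValueError("not enough images for the requested warm-up/timed counts")
    per_run_fps: List[float] = []
    for _ in range(runs):
        # Warm-up: untimed inference so kernels, caches, and allocators settle.
        for img in images[:warmup]:
            infer(img)
        if sync is not None:
            sync()
        start = time.perf_counter()
        for img in images[:n_timed]:
            infer(img)
        if sync is not None:
            sync()  # make sure all timed work has actually completed
        elapsed = time.perf_counter() - start
        per_run_fps.append(n_timed / elapsed)  # images per second for this run
    return statistics.mean(per_run_fps), per_run_fps
```

Averaging per-run FPS (rather than reporting a single run) reduces the influence of transient system load, which matches the five-run averaging described in the text.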

The ablation study is conducted in two parts. First, we independently evaluate the contribution of each module. LoGStem improves mAP50 by 2.6 percentage points through enhanced shallow feature extraction; SAOK achieves a 2.1 percentage point improvement by optimizing the feature pyramid structure and integrating spatial-aware mechanisms, while reducing the parameter count to 0.84 M; and DyHead brings a 1.1 percentage point gain through its dynamic detection head. Notably, SAOK not only reduces parameters substantially but also increases FPS from 133.7 to 160.3, improving computational efficiency alongside accuracy.

Second, we evaluate the synergistic effects of different module combinations. The combination of LoGStem and SAOK achieves 40.7% mAP50, validating the complementarity between shallow feature enhancement and the optimized pyramid architecture. When all three modules are integrated, the model reaches its best performance: an mAP50 of 44.6%, an improvement of 10.1 percentage points over the baseline, and an mAP50:95 improvement of 7.3 percentage points. The complete model uses only 1.48 M parameters, a 32.7% reduction compared to the baseline.

4.5. Performance on VisDrone2019

LSCNet is designed specifically for small object detection. Compared with baseline models, our approach performs strongly on this benchmark while remaining extremely lightweight. We selected a number of recent high-performing models from related work for comparison, as summarized in Table 4.

Comparison with lightweight models: LSCNet demonstrates superior efficiency, achieving 44.6% mAP50 with only 1.48 M parameters and 27.3 GFLOPs. Among models with comparable parameter counts, LSCNet exhibits a significant performance advantage: it surpasses OSD-YOLOv10 (1.6 M parameters), the model closest in size, by 11.2 percentage points in mAP50 while using fewer parameters. Furthermore, LSCNet slightly exceeds the detection performance of LRDS-YOLO (by 1.0 percentage point) with 64.5% fewer parameters, reducing the model size from 4.17 M to 1.48 M parameters.

Comparison with specialized UAV detection models: LSCNet remains highly competitive. It achieves a 3.2 percentage point improvement in mAP50 over Drone-YOLO while using 72.3% fewer parameters than Drone-YOLO’s 5.35 M. Although TA-YOLO-S achieves slightly higher accuracy, with a 0.8 percentage point lead in mAP50, it requires 9.4 times as many parameters (13.9 M) as LSCNet, highlighting our model’s substantial advantage in parameter efficiency.
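The relative efficiency figures quoted in the two comparisons above follow from simple ratios of the reported parameter and GFLOPs counts. The short sketch below reproduces them; the helper names are illustrative, and the inputs are the values stated in the text.

```python
def percent_fewer(ours: float, theirs: float) -> float:
    """Relative reduction of `ours` with respect to `theirs`, in percent."""
    return (1.0 - ours / theirs) * 100.0


def times_larger(theirs: float, ours: float) -> float:
    """How many times larger `theirs` is than `ours`."""
    return theirs / ours


LSCNET_PARAMS_M = 1.48  # LSCNet parameters in millions, as reported in the text

print(f"vs. LRDS-YOLO (4.17 M): {percent_fewer(LSCNET_PARAMS_M, 4.17):.1f}% fewer parameters")
print(f"vs. Drone-YOLO (5.35 M): {percent_fewer(LSCNET_PARAMS_M, 5.35):.1f}% fewer parameters")
print(f"TA-YOLO-S (13.9 M) is {times_larger(13.9, LSCNET_PARAMS_M):.1f}x larger")
print(f"GFLOPs vs. TA-YOLO-S (27.3 vs. 43.3): {percent_fewer(27.3, 43.3):.0f}% fewer")
```

Note that these are relative (percent) reductions, whereas accuracy differences such as the 3.2-point mAP50 gain are absolute differences in percentage points.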

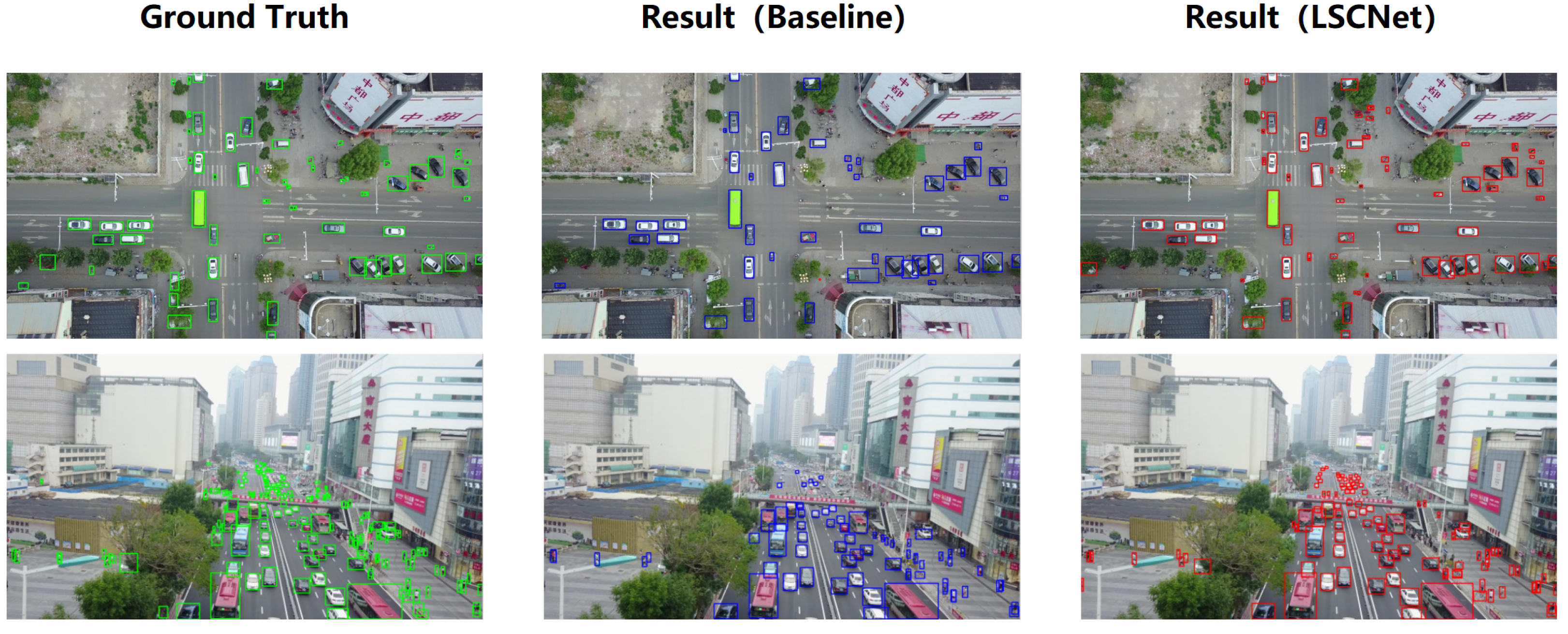

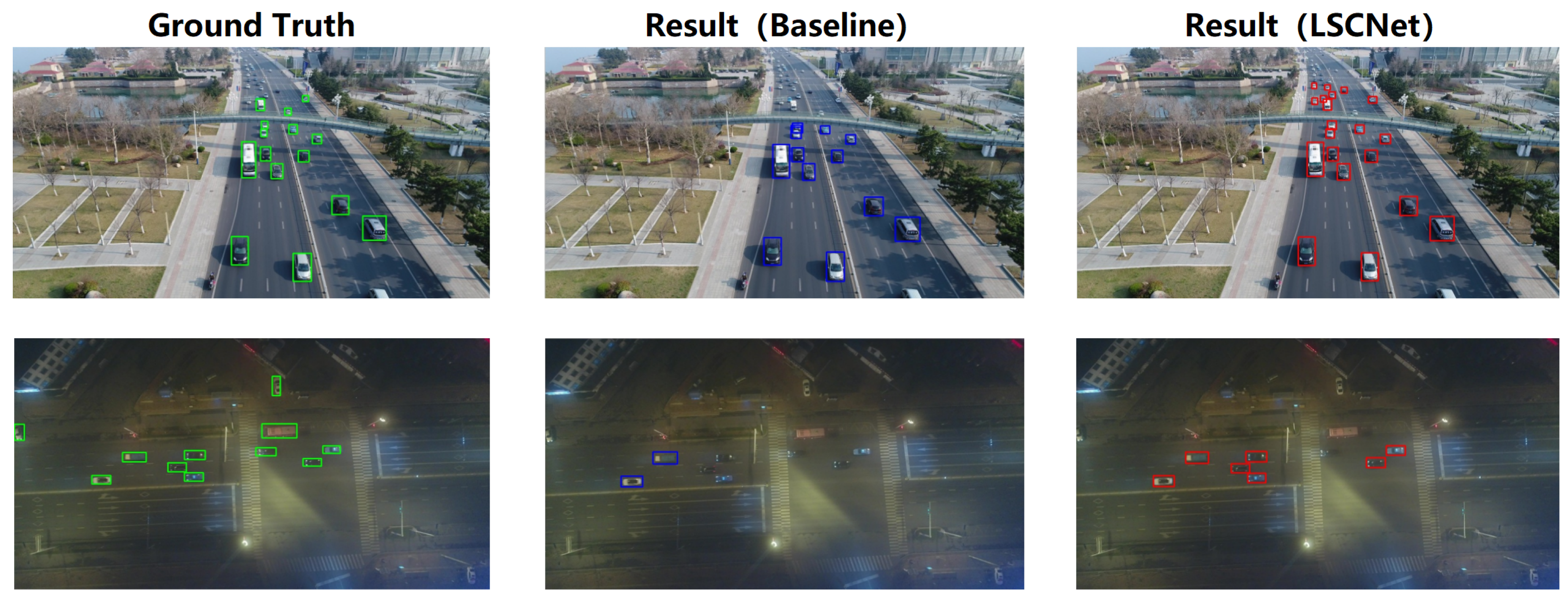

As illustrated in Figure 5, we present a three-way comparison of Ground Truth (GT) annotations, baseline model outputs, and LSCNet predictions. In the upper example showing an intersection scene, the GT labels 68 objects and the baseline captures 49, while LSCNet identifies 76 objects. The lower example presents a congested urban traffic environment where the GT marks 186 objects, the baseline detects only 72, and LSCNet achieves 113 detections.

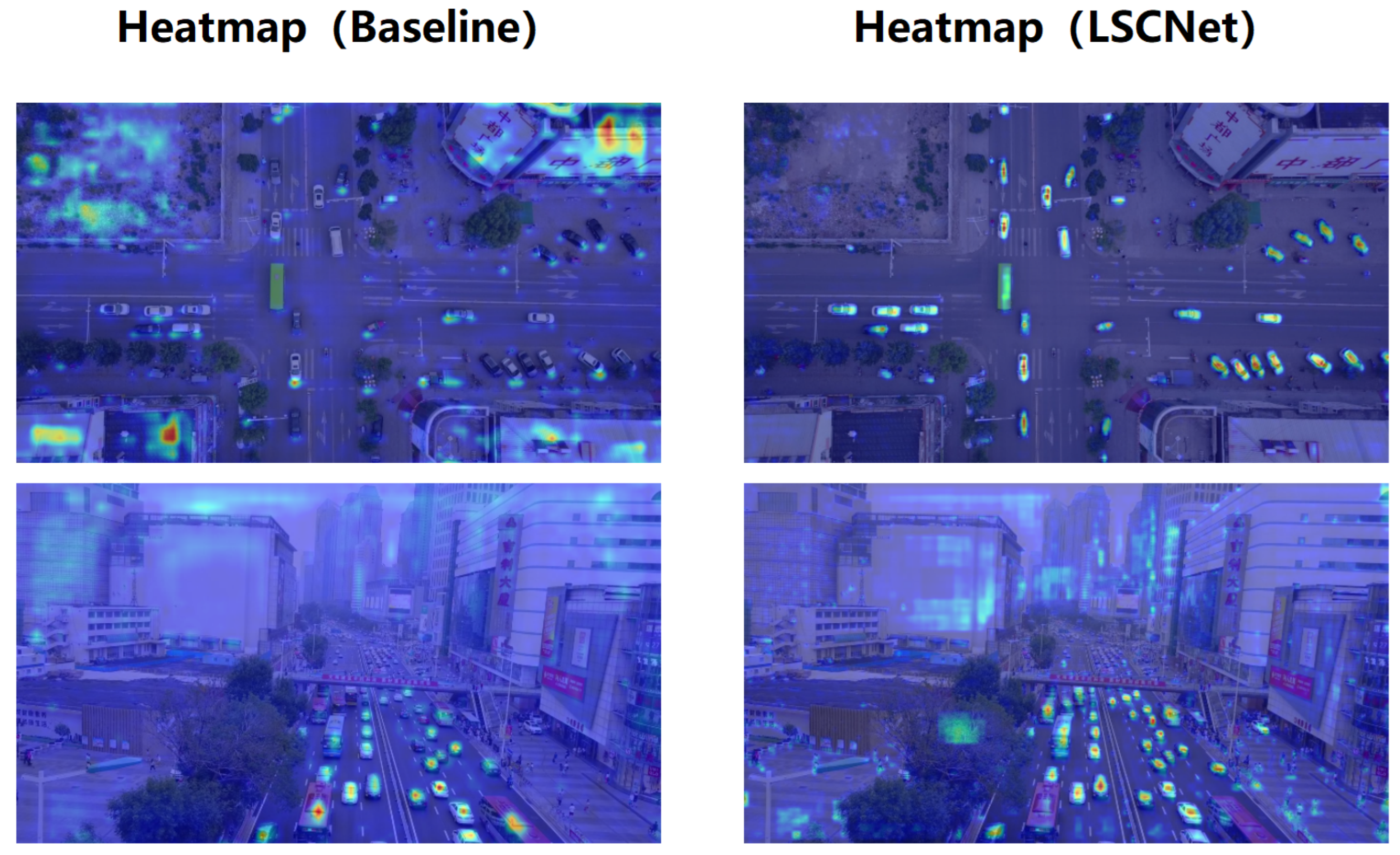

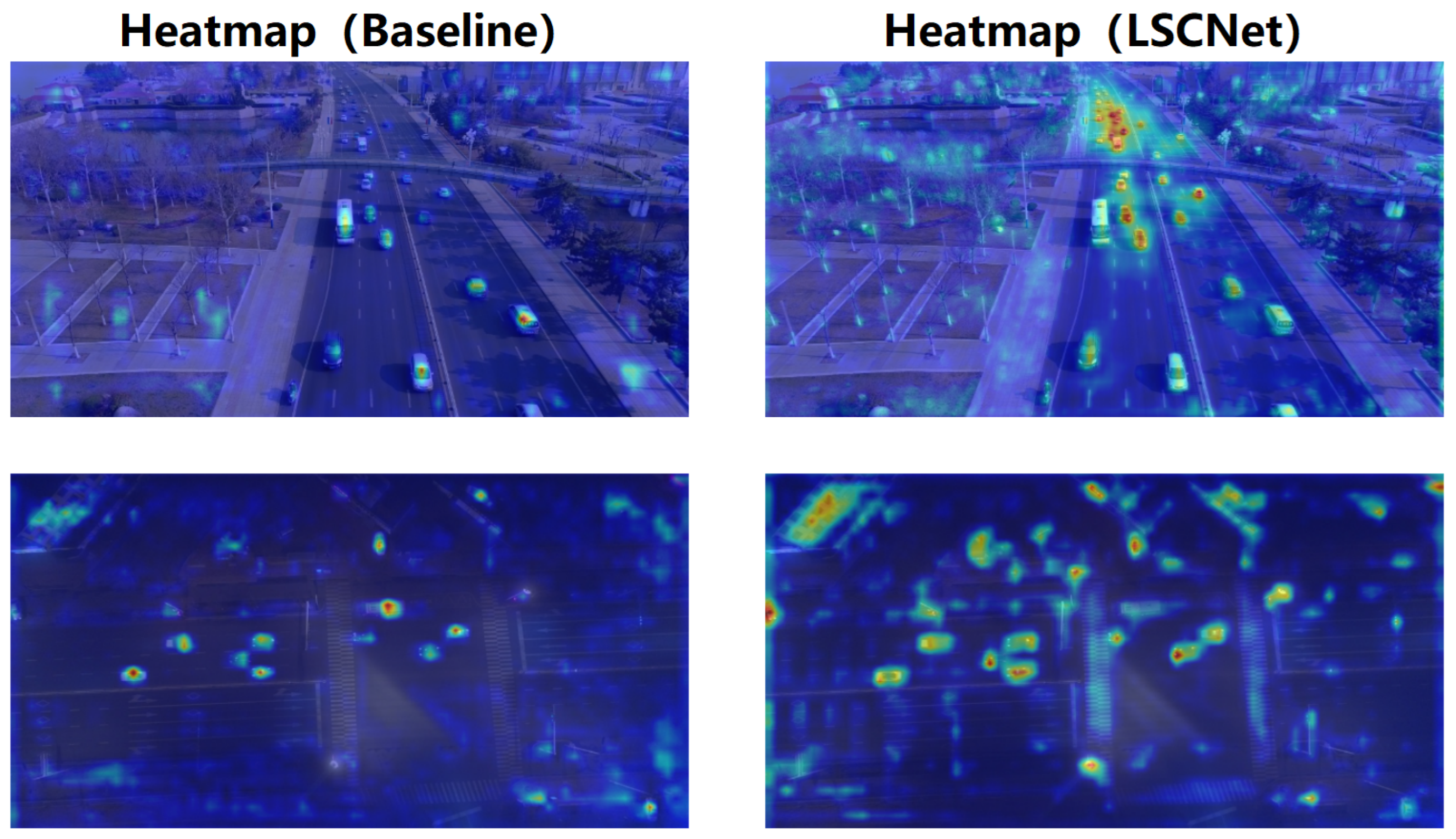

The attention mechanism analysis in Figure 6 provides additional insight: the baseline model (left panel) shows a scattered attention distribution with considerable background interference, especially in low-contrast areas, whereas LSCNet (right panel) exhibits focused attention on small objects, including roadside pedestrians and distant vehicles, while effectively suppressing irrelevant background activation.

Visual analysis of both the detection outputs and the attention maps shows that LSCNet detects small-scale targets such as pedestrians, bicycles, and distant vehicles substantially better than the baseline. These qualitative improvements support the effectiveness of the LSCNet framework.

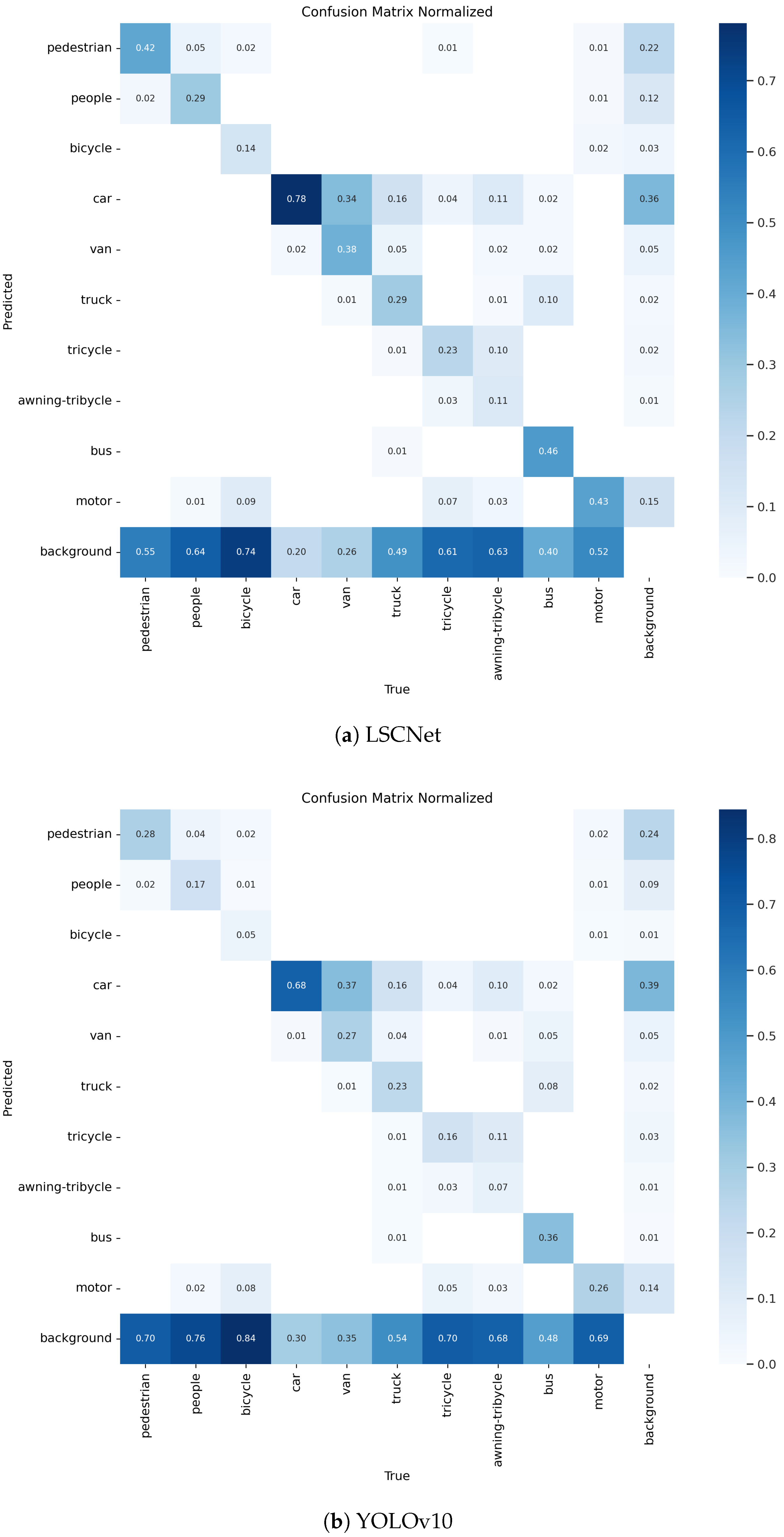

To further analyze the performance of LSCNet, we provide the normalized confusion matrix for the VisDrone2019 dataset in Figure 7. The horizontal axis represents the ground-truth labels, while the vertical axis shows the predicted labels.

In the matrix, the cells along the main diagonal correspond to true positives, i.e., instances where the model’s prediction matches the ground truth; high values in these cells indicate robust classification accuracy for the respective categories. Unlike the object categories, the “background” row and column represent the absence of a target object. Entries in the “background” column are false positives: the model incorrectly predicts background clutter as a specific object. Entries in the “background” row are false negatives: the model fails to detect an existing object, effectively classifying it as “background”.
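The row/column semantics described above can be illustrated with a minimal sketch that builds a column-normalized detection confusion matrix with an extra background index. It assumes predictions have already been matched to ground-truth boxes upstream (for example, with an IoU threshold), which is not shown; the function and argument names are hypothetical and do not correspond to the authors' code or to a specific library.

```python
from typing import Iterable, List, Tuple

Matrix = List[List[float]]


def detection_confusion_matrix(
    num_classes: int,
    matches: Iterable[Tuple[int, int]],
    missed_gt: Iterable[int],
    spurious_pred: Iterable[int],
    normalize: bool = True,
) -> Matrix:
    """Rows = predicted class, columns = ground-truth class; index num_classes = background.

    matches:       (gt_class, pred_class) pairs for predictions matched to a GT box
    missed_gt:     classes of GT boxes with no matching prediction (false negatives)
    spurious_pred: classes of predictions with no matching GT box (false positives)
    """
    bg = num_classes
    size = num_classes + 1
    m = [[0.0] * size for _ in range(size)]
    for gt_cls, pred_cls in matches:
        m[pred_cls][gt_cls] += 1  # diagonal if correct, off-diagonal if confused
    for gt_cls in missed_gt:
        m[bg][gt_cls] += 1  # background row: missed objects
    for pred_cls in spurious_pred:
        m[pred_cls][bg] += 1  # background column: clutter predicted as an object
    if normalize:
        # Column-normalize so each entry is a fraction of that ground-truth class.
        for col in range(size):
            total = sum(m[row][col] for row in range(size))
            if total > 0:
                for row in range(size):
                    m[row][col] /= total
    return m


# Toy classes: 0 = pedestrian, 1 = car, 2 = van; index 3 = background.
cm = detection_confusion_matrix(
    num_classes=3,
    matches=[(0, 0), (1, 1), (1, 1), (2, 1), (2, 2)],
    missed_gt=[0, 0],
    spurious_pred=[1],
)
print(cm[3][0])  # fraction of pedestrians missed (background row)
print(cm[1][2])  # fraction of vans predicted as cars
```

With column normalization, a value such as 0.70 in the (background, pedestrian) cell reads as the share of ground-truth pedestrians that ended up undetected.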

For instance, the high value of 0.70 in the cell (Background, Pedestrian) indicates a high rate of missed detections for small pedestrians. Notable inter-class confusion also exists between visually similar categories: for example, 37% of “vans” were misclassified as “cars”, and there is semantic confusion between “pedestrian” and “people”. This may be attributed to the low resolution of aerial images, which makes it difficult to discern subtle structural differences between vehicle types, as well as to semantic ambiguity in the dataset’s annotation standards. These are common challenges in high-altitude aerial imagery.

4.6. Performance on UAVDT

Evaluating models on multiple datasets is crucial for assessing their robustness and generalization ability, which determine their efficacy across a range of real-world applications. To further confirm the generality of the LSCNet architecture, we carried out thorough training and testing experiments on the UAVDT dataset. As indicated in Table 5, LSCNet exhibits better detection performance, with improvements in Precision and mAP50 of 14.5% and 5.06%, respectively. In particular, LSCNet outperforms YOLOv10n by 3.63% in mAP50:95, demonstrating consistent detection performance across various IoU thresholds.
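The comparison across IoU thresholds can be made concrete: a COCO-style mAP50:95 averages AP over ten IoU thresholds (0.50 to 0.95 in steps of 0.05), so a loosely localized box is rewarded at 0.50 but penalized at stricter thresholds. The minimal single-class, single-image sketch below uses greedy confidence-ordered matching and all-point interpolation; official evaluators (e.g., COCO's 101-point interpolation and averaging over images and classes) differ in details, and the function names are illustrative only.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box], thr: float) -> float:
    """All-point interpolated AP for one class in one image at IoU threshold `thr`."""
    if not gts:
        return 0.0
    matched = [False] * len(gts)
    precisions: List[float] = []
    recalls: List[float] = []
    tp = 0
    # Greedy matching: highest-confidence detections claim GT boxes first.
    for k, (_, box) in enumerate(sorted(dets, key=lambda d: d[0], reverse=True), start=1):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Precision envelope: make precision non-increasing from right to left.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p  # area under the interpolated PR curve
        prev_recall = r
    return ap


def ap_50_95(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box]) -> float:
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.50 + 0.05 * i for i in range(10)]
    return sum(average_precision(dets, gts, t) for t in thresholds) / len(thresholds)


gt = [(0.0, 0.0, 100.0, 100.0)]
loose = [(0.9, (0.0, 0.0, 100.0, 62.0))]  # IoU = 0.62 with the GT box
print(average_precision(loose, gt, 0.5), ap_50_95(loose, gt))
```

In this toy case the loose box counts as a true positive only at the 0.50, 0.55, and 0.60 thresholds, so its AP50 is perfect while its AP50:95 is much lower; a model whose gains persist in mAP50:95 is therefore localizing boxes more tightly.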

Examining per-category accuracy further shows that LSCNet is better at identifying small objects. Compared with YOLOv10n, the AP for the truck category increases by 6.58%, with a precision improvement of 20.35%; for the bus category, AP increases by 13.94%, precision improves by 21.6%, and recall improves by 13.05%. These findings suggest that LSCNet offers notable architectural benefits for small object detection, and that the synergistic effect of the LoGStem module, the SAOK attention mechanism, and the DyHead detection head enhances the model’s small object detection capability across different datasets.

Figure 8 compares detection results across three columns: Ground Truth (GT) annotations, baseline model predictions, and LSCNet predictions. The first row shows an open highway scene under good lighting conditions, where the GT contains 15 annotated objects, while the baseline model detects only 13 objects and LSCNet identifies 20. This indicates that, in open high-altitude scenes with good lighting, LSCNet detects vehicles considerably better than the baseline model. The corresponding attention heatmap shows that the model attends strongly to small vehicle objects, while also allocating considerable attention to vehicles parked on both sides of the distant roadway.

The second row presents detection results on blurred nighttime imagery. The GT contains 11 annotated objects, excluding vehicles in shadowed areas and those parked outside the roadway; the baseline model detects only 2 objects, whereas LSCNet identifies 7, correctly detecting the majority of annotated vehicles on the road and substantially improving on the baseline.

The attention heatmap visualization (Figure 9) shows that the model allocates attention to nearly all vehicles in the image. Notably, vehicles parked in the shadowed area at the top of the image, which are barely visible to the human eye, also receive considerable attention from the model, although they are not output as detections because their confidence scores fall below the threshold. These two examples illustrate the model’s adaptability to diverse, complex environments.

5. Discussion

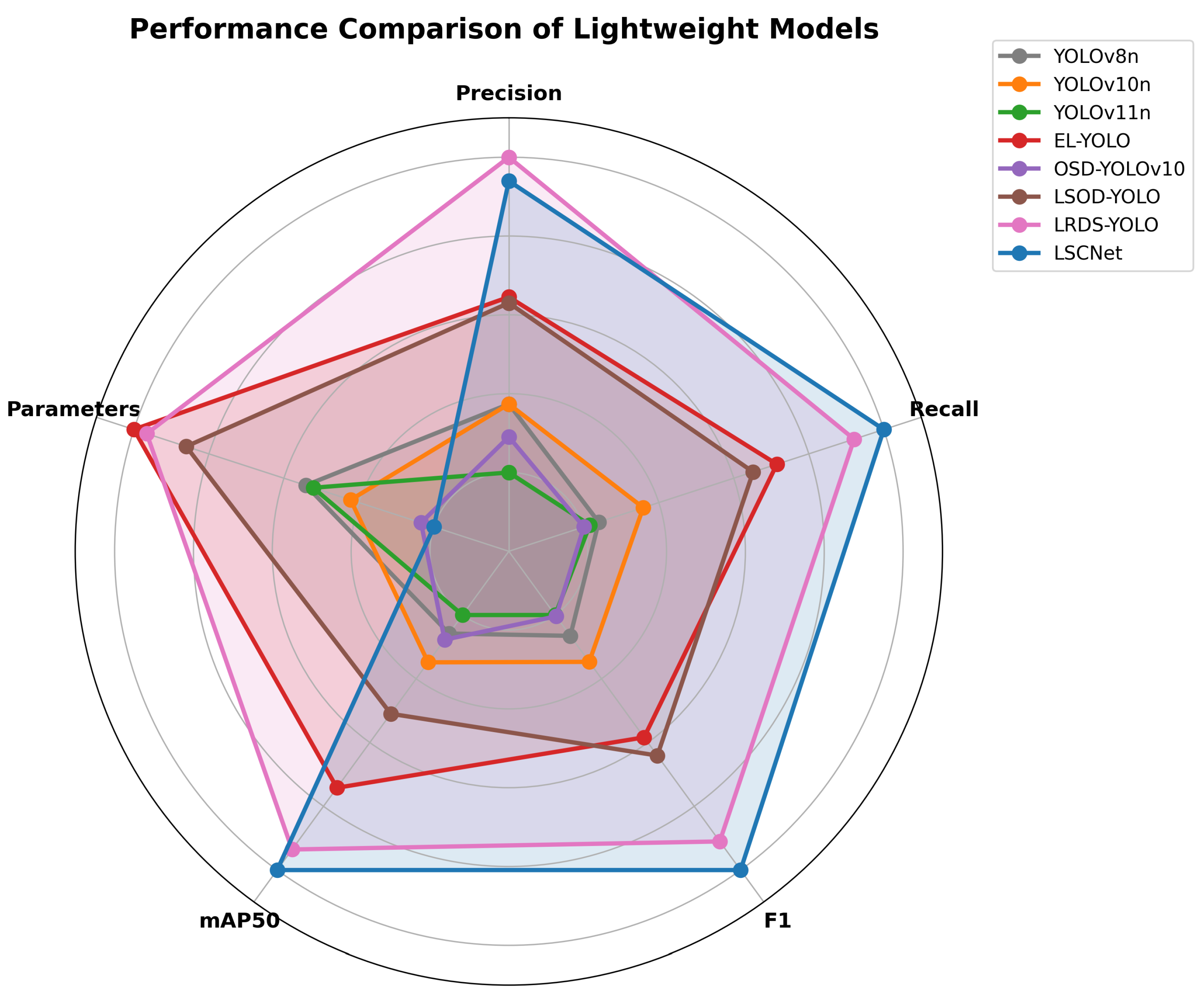

As shown in Table 4, we compare LSCNet with numerous recent lightweight models in this domain. The results show that our method achieves competitive or superior accuracy while using fewer parameters than other state-of-the-art models.

When compared with the YOLOv10 family, LSCNet (1.48 M params) outperforms not only the baseline YOLOv10n but also the significantly larger YOLOv10s (7.22 M params) and YOLOv10m (15.31 M params). Specifically, LSCNet achieves a higher mAP50 (44.6%) than YOLOv10m (44.2%) while using less than 10% of its parameters. Furthermore, LSCNet surpasses the Transformer-based RT-DETR-R18 [5] (+3.2% mAP50), suggesting that for edge-based small object detection, a lightweight CNN model is currently more efficient than heavier Transformer architectures.

Compared with recent specialized UAV models, LSCNet shows distinct advantages. Compared with OSD-YOLOv10 [37], which has a similar parameter count (1.6 M), LSCNet leads by a substantial margin of 11.2 percentage points in mAP50, indicating that our architectural innovations (LoGStem and SAOK) provide considerably stronger feature representation than standard lightweight modifications. Compared with the high-performing TA-YOLO-S [38], which reaches 45.4% mAP50, LSCNet achieves comparable accuracy (within 0.8 percentage points) with a drastic reduction in model complexity, using roughly one-ninth of the parameters (1.48 M vs. 13.9 M) and 37% fewer GFLOPs (27.3 vs. 43.3). Furthermore, LSCNet outperforms LRDS-YOLO [34] by 1.0 percentage point in mAP50 while reducing parameters by 64.5% at a similar computational cost. For a more intuitive comparison, Figure 10 visualizes the performance of the lightweight models from Table 4 as a radar chart.

Despite these promising results, our study has limitations. Although the parameter count is minimized, the increased GFLOPs resulting from high-resolution processing slightly reduce the inference speed (FPS) compared with the ultra-fast YOLOv10n. However, compared with YOLOv10m, which achieves similar accuracy, LSCNet substantially reduces GFLOPs (27.3 vs. 58.9), a 53.6% decrease in computational cost.

6. Conclusions and Future Work

This paper proposes the Lightweight Shallow Feature Cascade Network (LSCNet) to address the unique challenges of small object detection in UAV aerial imagery. We demonstrate that a Shallow Feature Cascade design focusing on high-resolution shallow-layer features (P2–P4) performs significantly better than deep pyramid architectures for aerial small object detection. We integrated the LoGStem feature extractor, SAOK multi-scale fusion, and the DyHead attention mechanism. LSCNet achieved mAP50 values of 44.6% and 36.1% on the VisDrone2019 and UAVDT benchmarks, respectively, with only 1.48 M parameters, balancing detection accuracy and model complexity while reducing the number of parameters by approximately 33% compared with the YOLOv10n baseline.

From an IoT perspective, LSCNet reduces the processing latency of real-time perception networks, which should improve the feasibility of deploying edge computing devices on aerial platforms. However, we also recognize that relying on high-resolution feature maps to preserve small-object details incurs additional computational cost. Future work will therefore focus on two directions. First, we will reduce LSCNet’s computational complexity through pruning and distillation so that it can be integrated into UAV platforms, using drones as semantic compression nodes that strengthen local processing for UAV-based detection. Second, we plan to extend our efficiency analysis of deep pyramid layers to pyramid networks in other scenarios.