Optimizing Cloud Service Composition with Cuckoo Optimization Algorithm for Enhanced Resource Allocation and Energy Efficiency

Abstract

1. Introduction

- Develop a COA-based cloud service composition model that provides effective and efficient resource allocation and power management.

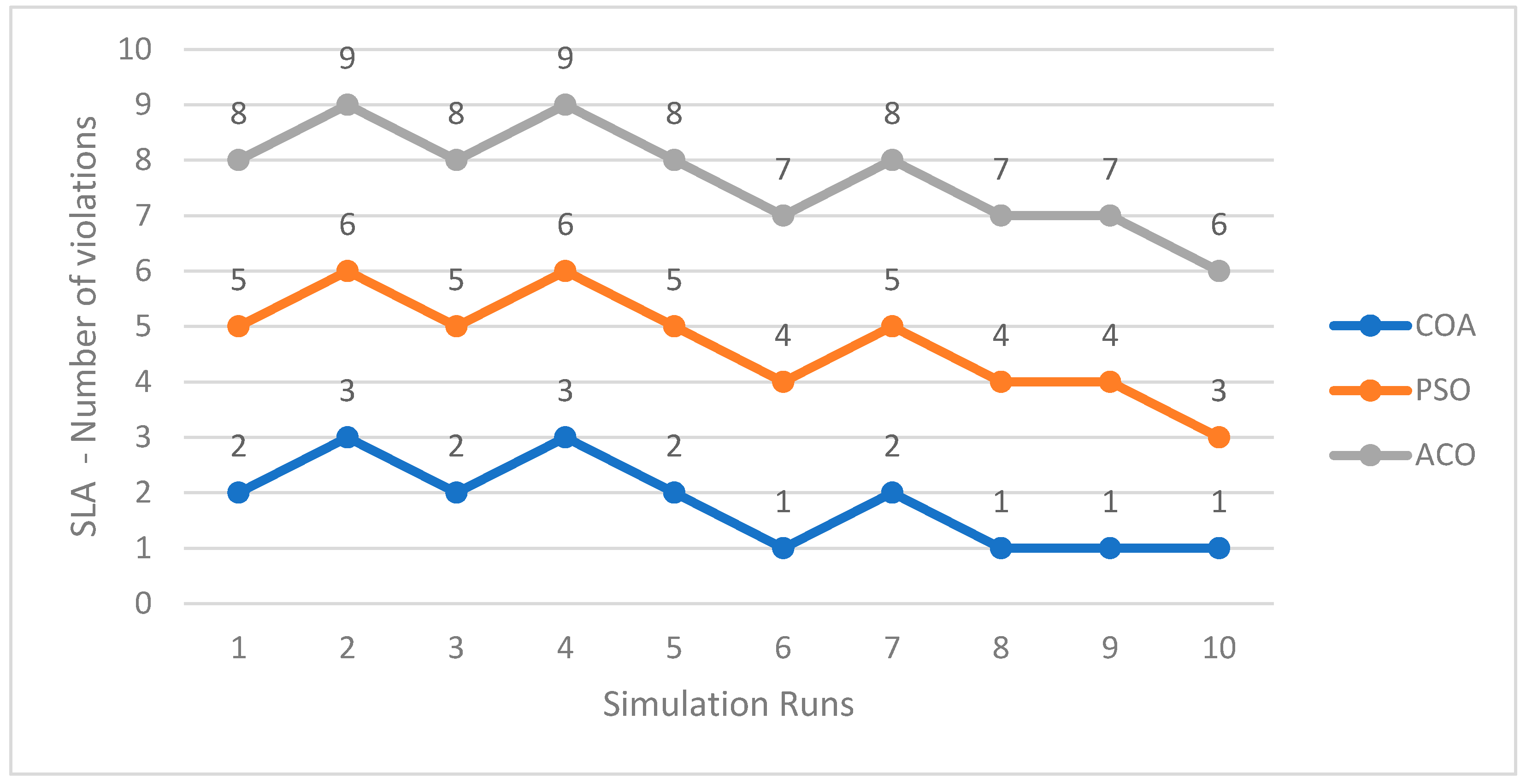

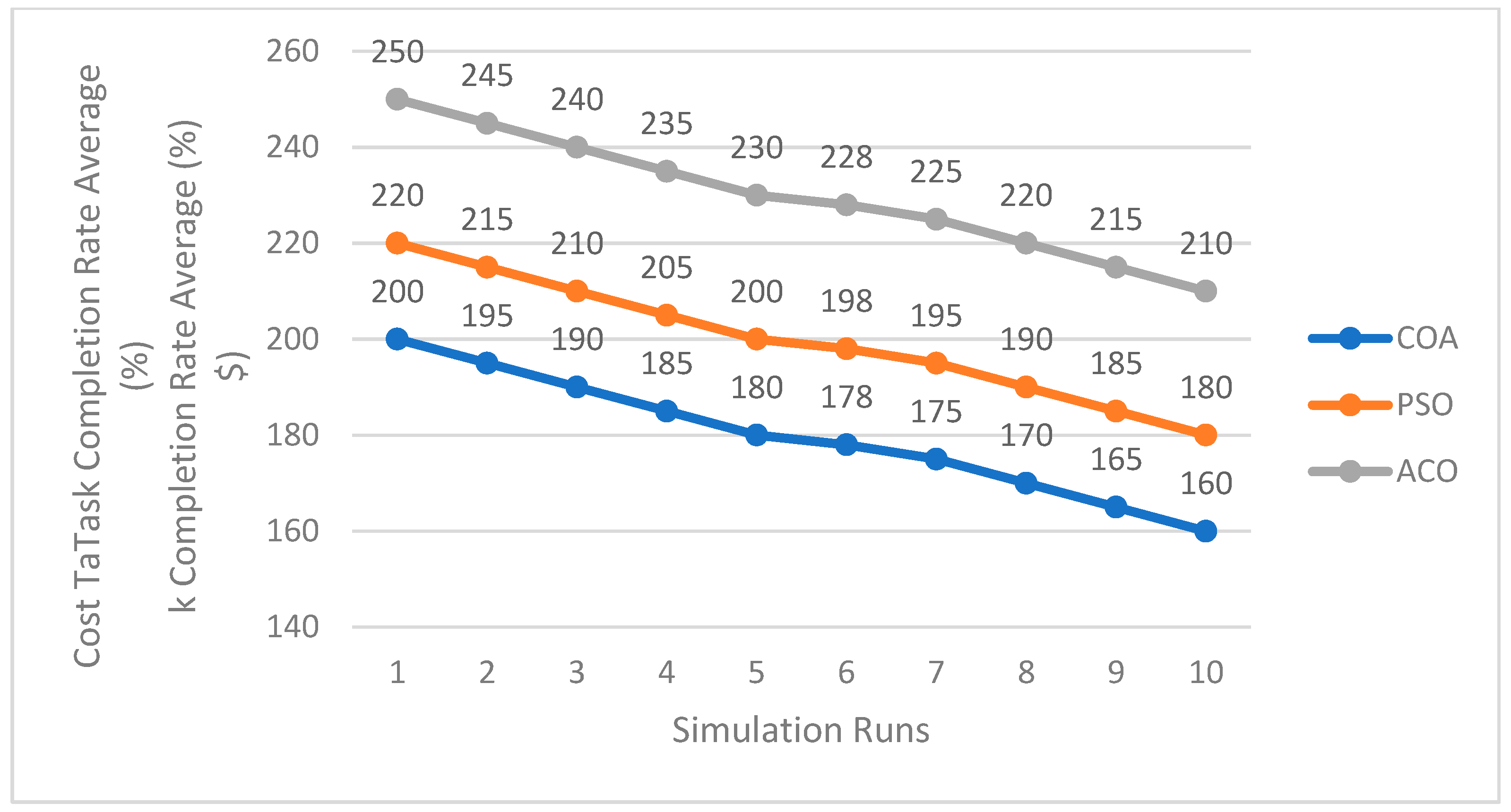

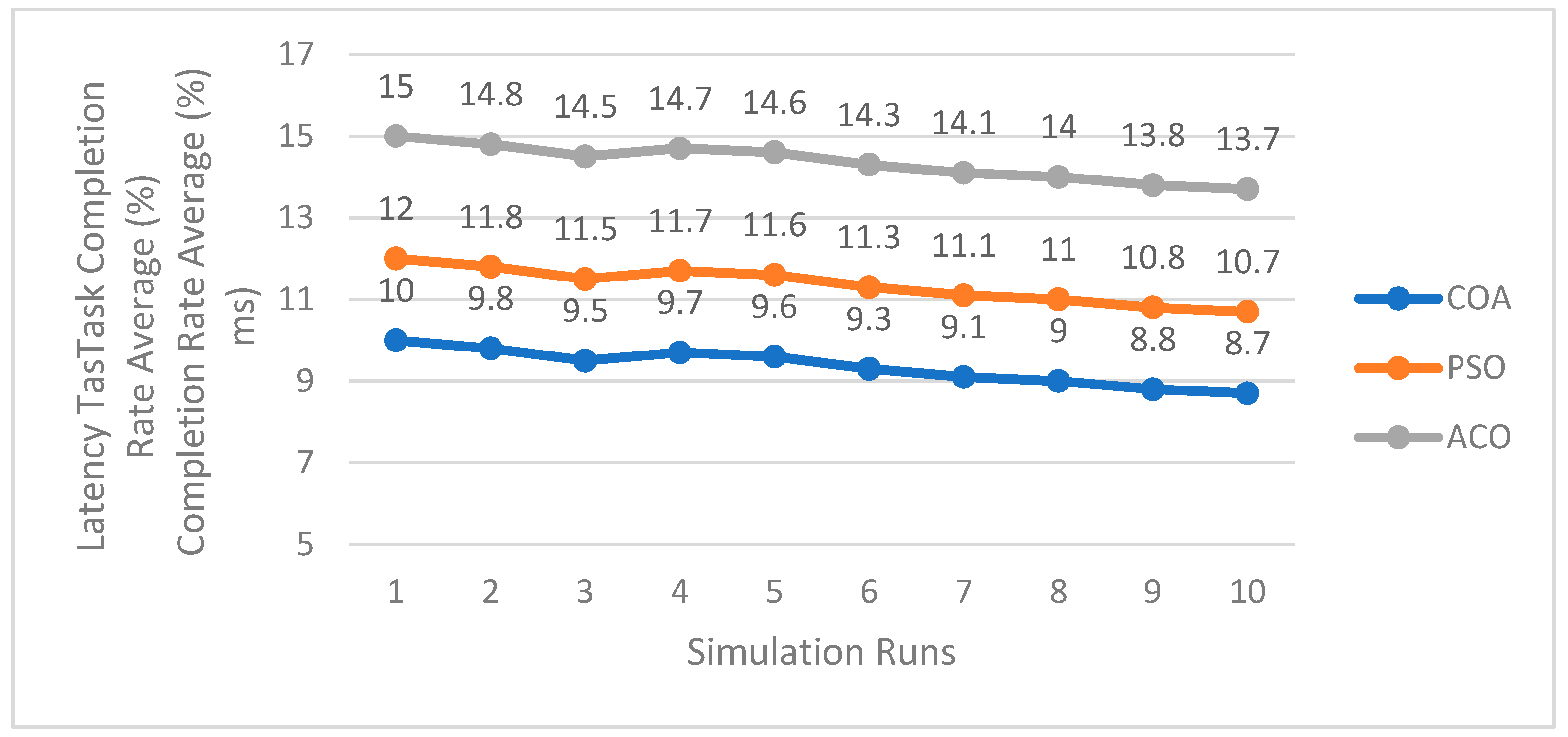

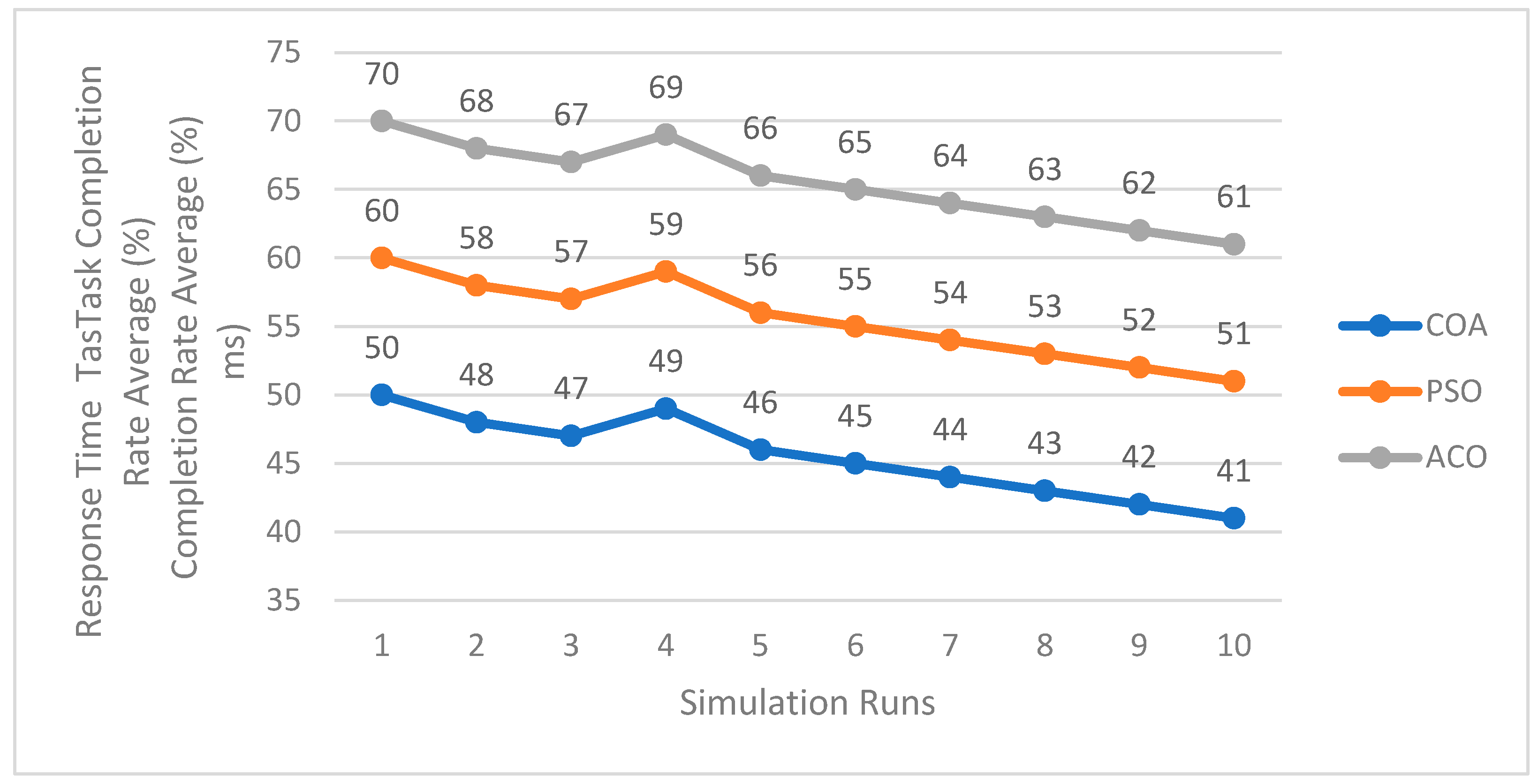

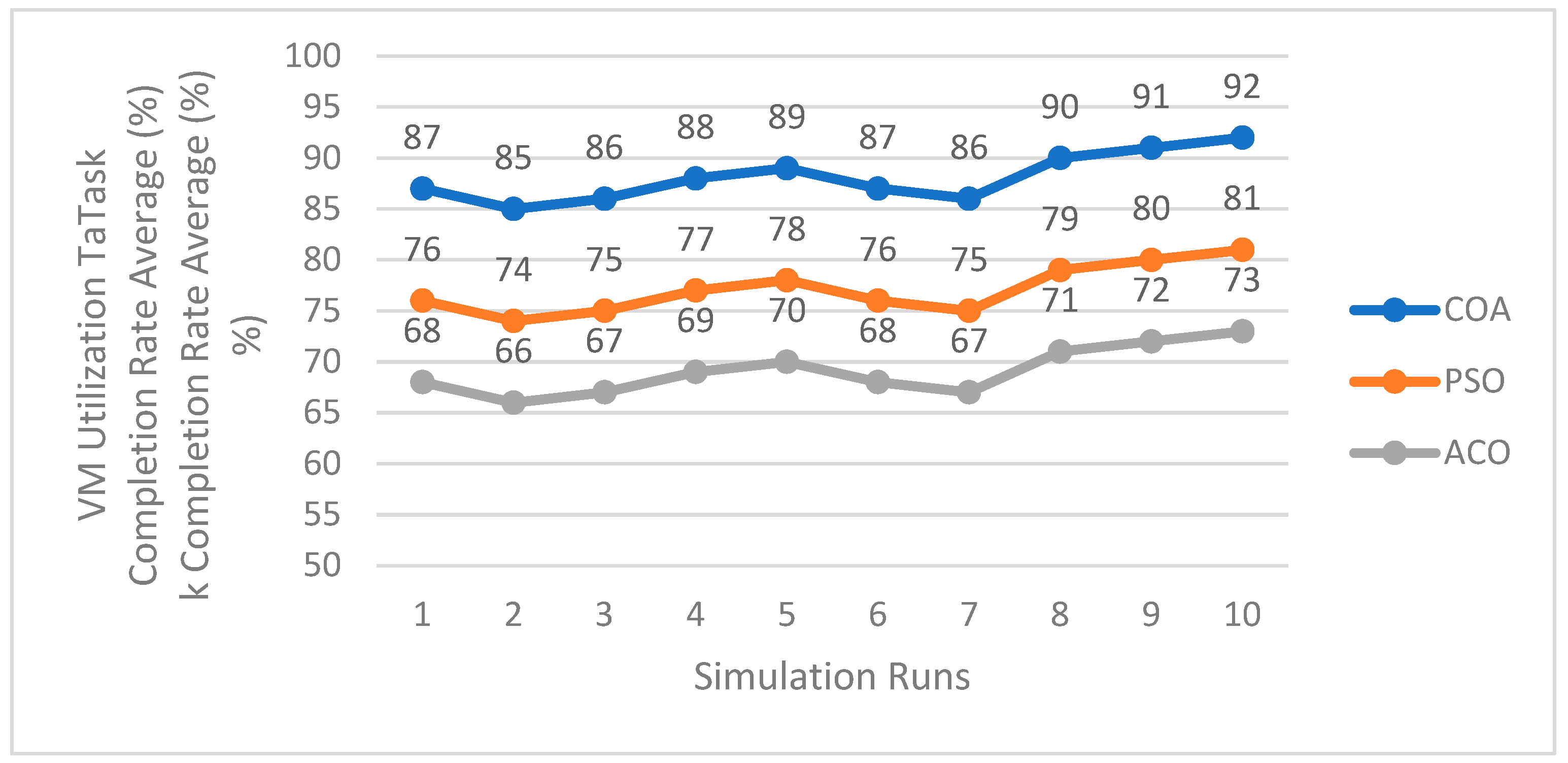

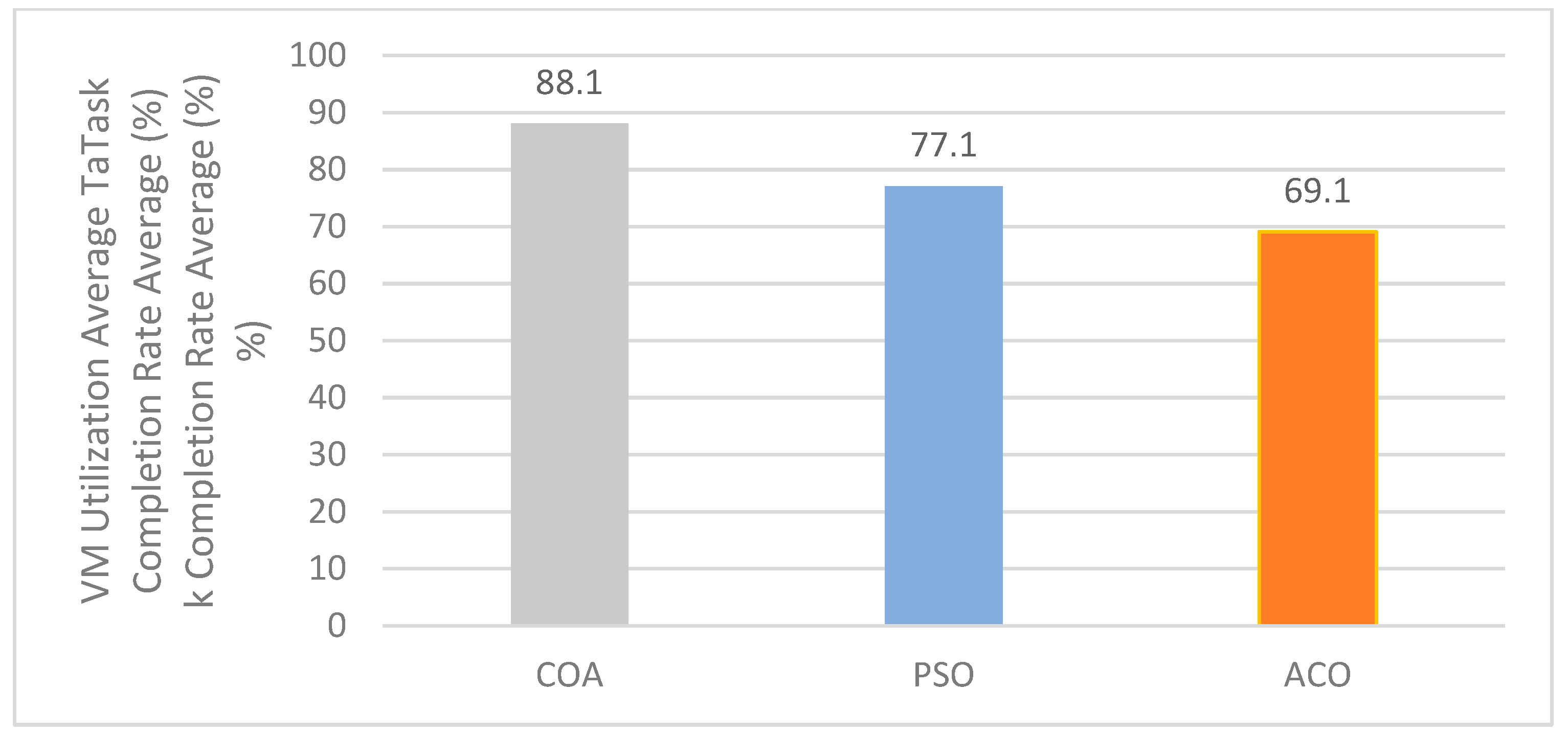

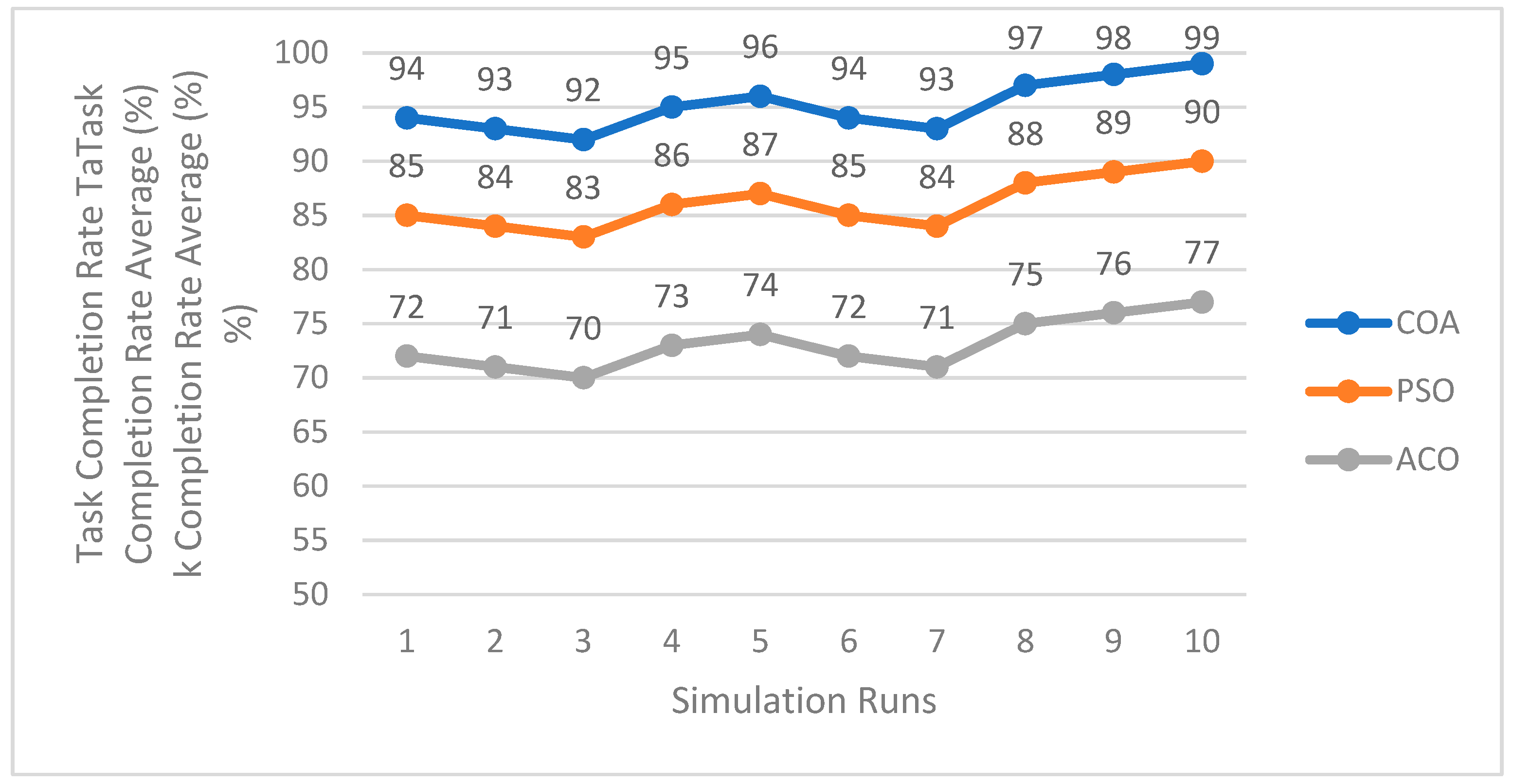

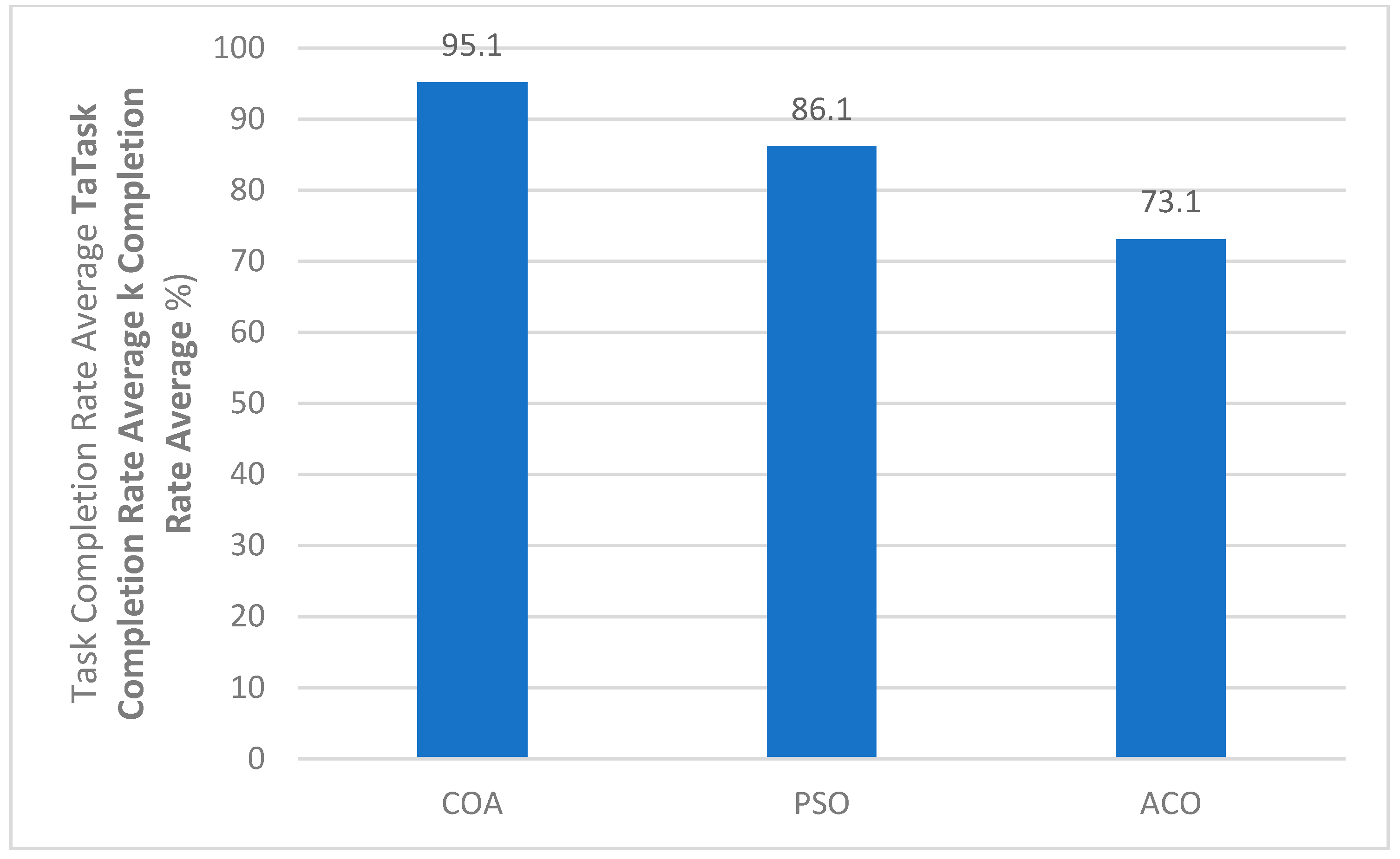

- Compare the performance of the COA against PSO and ACO, highlighting the effectiveness of swarm-intelligence-based methods in cloud optimization.

- Evaluate key cloud performance metrics, including execution time, power consumption, response time, cost, and SLA violations.

- Demonstrate the applicability of the COA to cloud services and offer insight into its suitability for real-world cloud service control and management.

2. Literature Review

3. Methodology

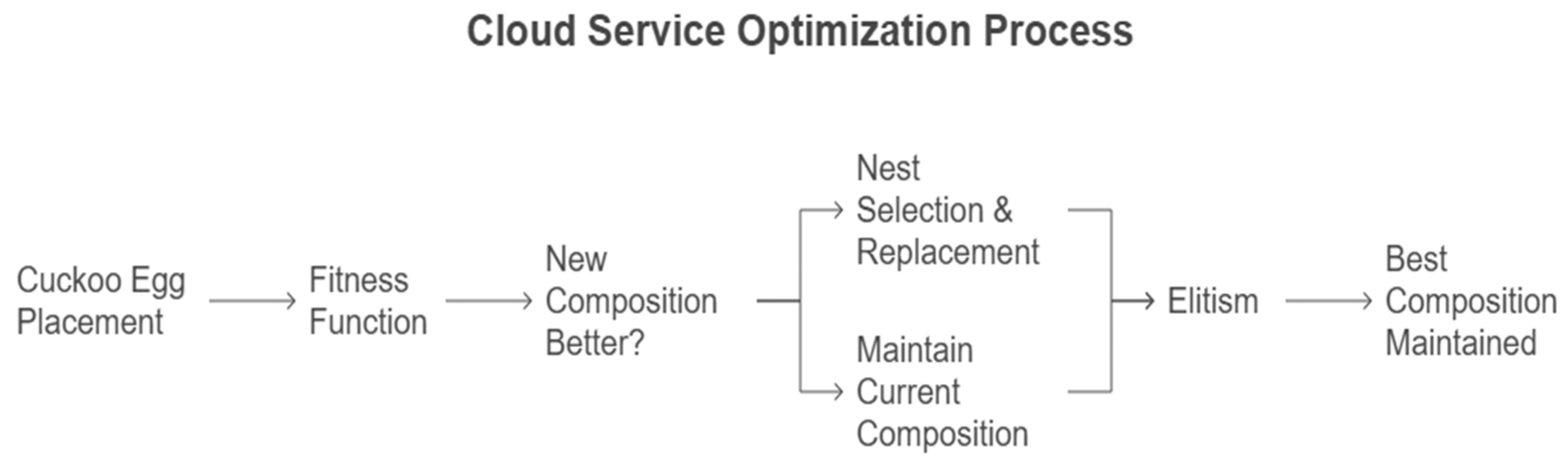

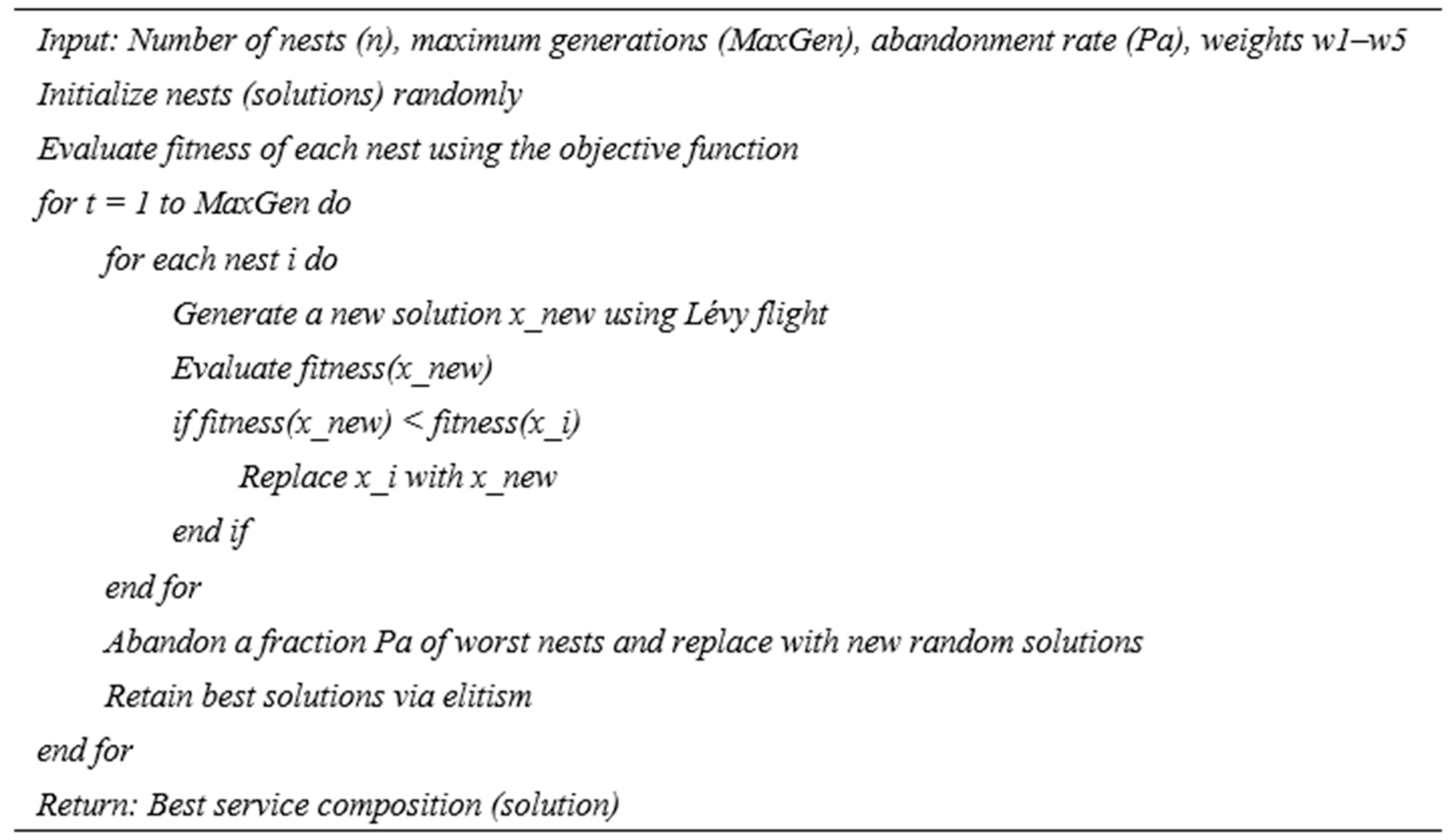

3.1. Implementation of the COA

3.1.1. Initialization of Parameters

- n: Number of host nests (VMs);

- m: Number of service requests (cuckoo eggs);

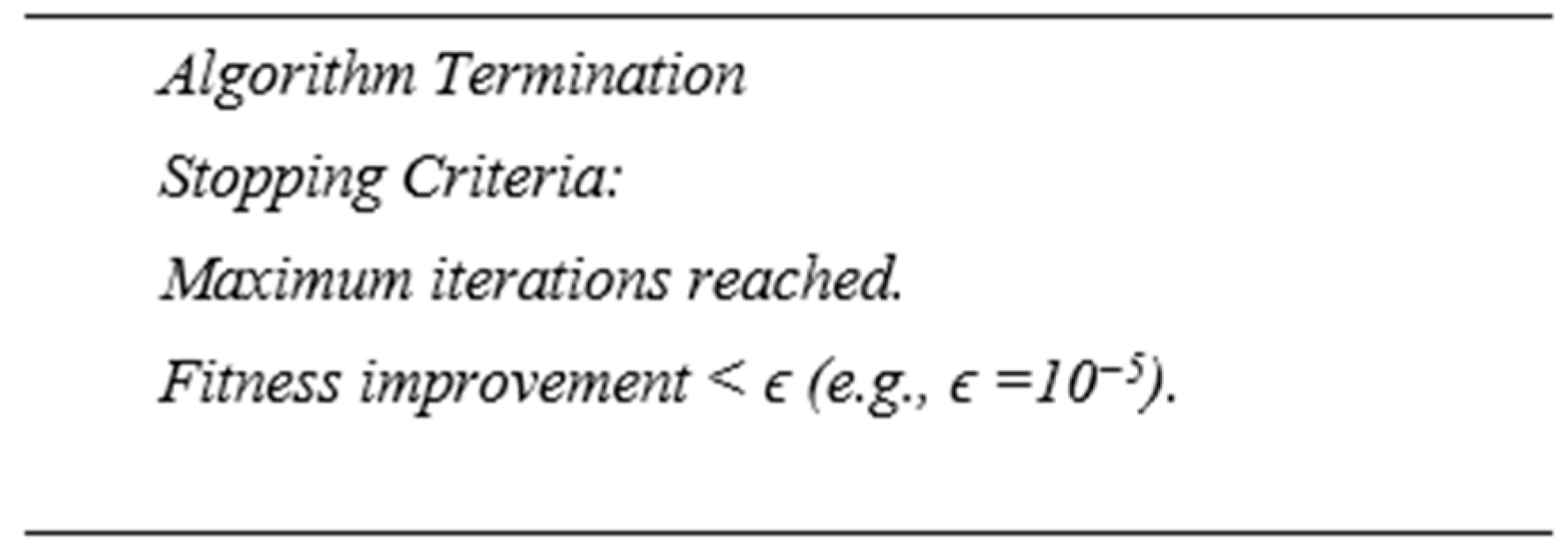

- MaxGen: Maximum number of generations (iterations);

- Pa: Probability of discovering alien eggs (abandonment rate);

- w1 to w5: Weight coefficients for multi-objective fitness;

- α: Step size scaling factor for Lévy flights;

- Nests (VMs): Each nest represents a possible service composition (VM allocation);

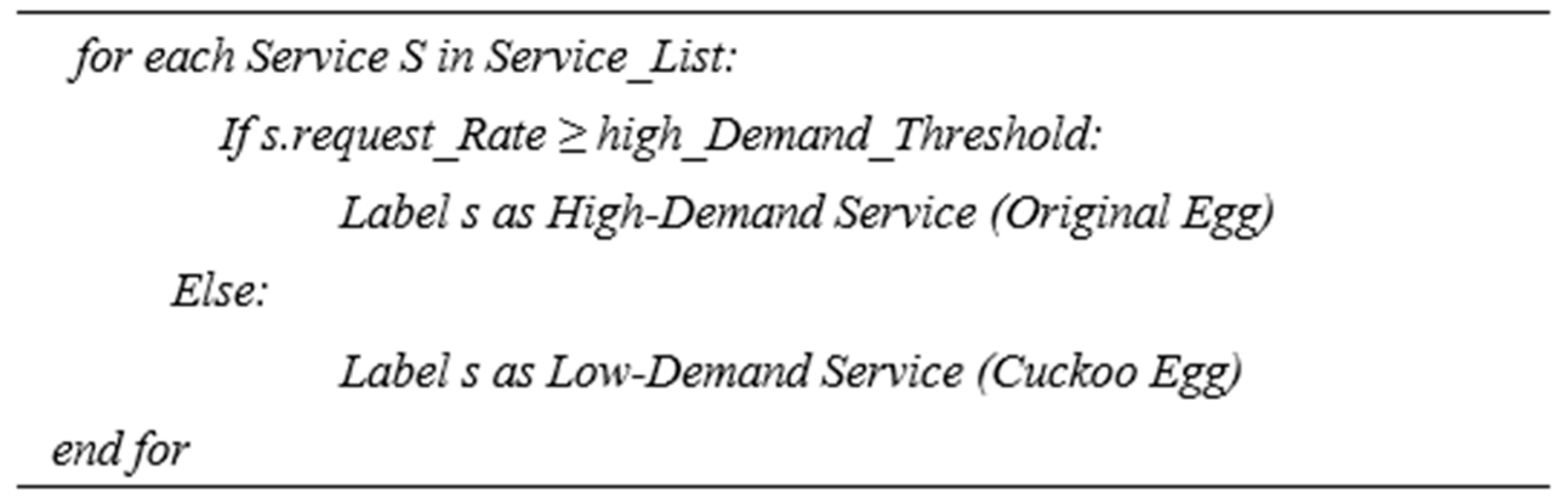

- Eggs (services):

  - Original eggs (high-demand services): Services with strict QoS requirements.

  - Cuckoo eggs (low-demand services): Services that can be dynamically replaced for better efficiency.

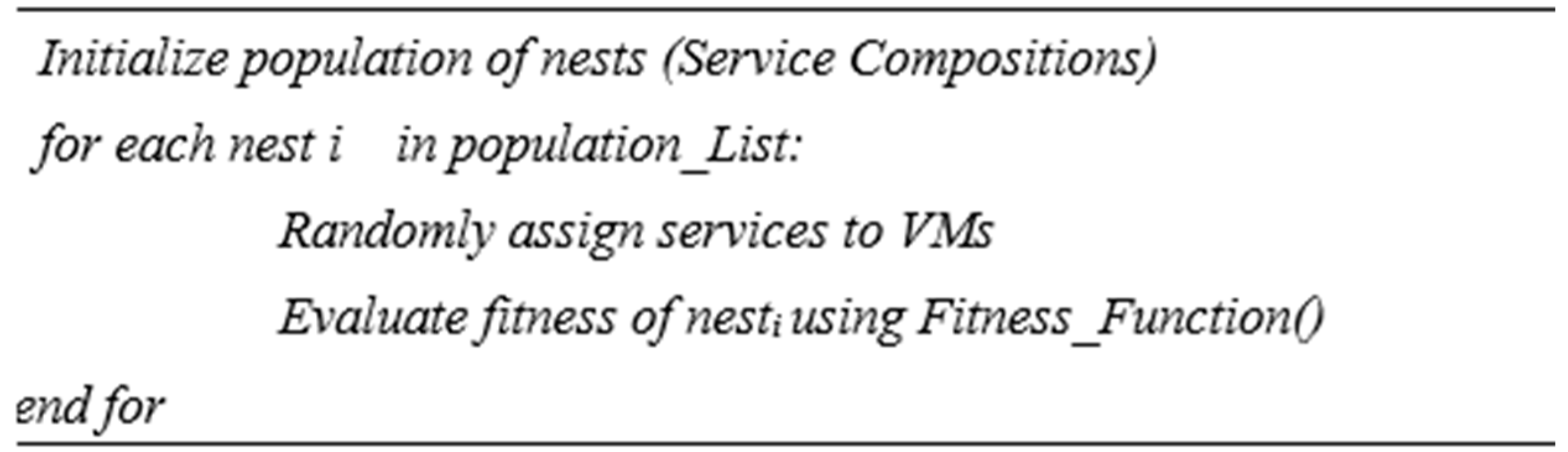
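The parameters listed above can be collected in a small configuration object. The following is a minimal sketch, not the authors' implementation: the class name `COAParams`, the validation rules, and every numeric value in the example are illustrative assumptions. Only the parameter meanings come from the list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class COAParams:
    """Hypothetical container for the COA parameters named in Section 3.1.1."""
    n: int          # number of host nests (VMs)
    m: int          # number of service requests (cuckoo eggs)
    max_gen: int    # MaxGen: maximum number of generations (iterations)
    pa: float       # Pa: probability of discovering alien eggs
    weights: tuple  # (w1, ..., w5): multi-objective weight coefficients
    alpha: float    # step size scaling factor for Lévy flights

    def __post_init__(self):
        # Basic sanity checks; these rules are assumptions, not from the paper.
        if self.n <= 0 or self.m <= 0 or self.max_gen <= 0:
            raise ValueError("n, m and max_gen must be positive")
        if not 0.0 <= self.pa <= 1.0:
            raise ValueError("pa is a probability and must lie in [0, 1]")
        if len(self.weights) != 5:
            raise ValueError("exactly five weights (w1..w5) are expected")
        if self.alpha <= 0:
            raise ValueError("alpha must be positive")


# Placeholder values chosen only to make the example runnable.
params = COAParams(n=20, m=200, max_gen=100, pa=0.25,
                   weights=(0.2, 0.2, 0.2, 0.2, 0.2), alpha=0.01)
```

Freezing the dataclass keeps the configuration immutable during a run, which makes repeated simulation runs easier to compare.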

3.1.2. Initial Population Generation

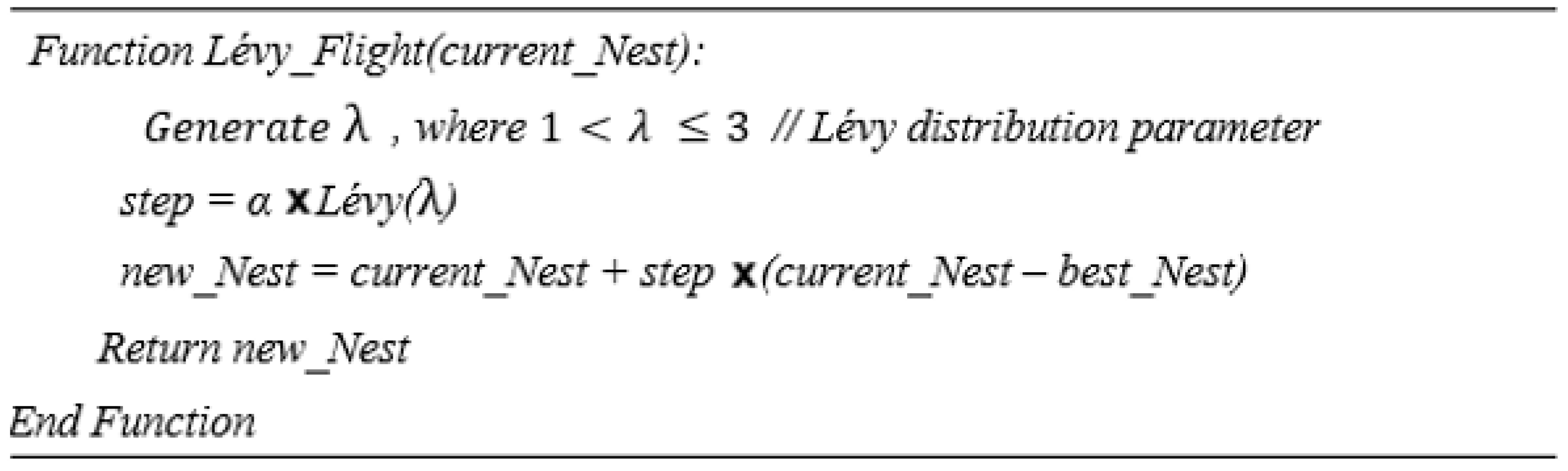

3.1.3. Generating New Solutions (Lévy-Flight-Based)
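A Lévy-flight move can be sketched as follows. This is a hedged illustration rather than the paper's update rule. It assumes a nest is encoded as a list of VM indices (one per service) and draws step lengths with Mantegna's algorithm, a common way to sample Lévy-like steps. The names `levy_step` and `levy_move`, the exponent `beta = 1.5`, and the round-and-clamp repair are all assumptions.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step length using Mantegna's algorithm."""
    sigma_u = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # avoid division by zero in the (very unlikely) exact-zero case
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_move(nest, n_vms, alpha=1.0, beta=1.5, rng=random):
    """Return a new allocation by perturbing each VM index with a Lévy step.

    The continuous result is rounded and clamped to a valid VM index
    (an assumed repair strategy for the discrete encoding).
    """
    moved = []
    for vm in nest:
        candidate = round(vm + alpha * levy_step(beta, rng))
        moved.append(min(max(candidate, 0), n_vms - 1))
    return moved


random.seed(1)
new_nest = levy_move([0, 3, 7, 9], n_vms=10)
```

Most steps are small, so the allocation changes little. The occasional long jump is what lets the search escape local optima.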

3.1.4. Fitness Function

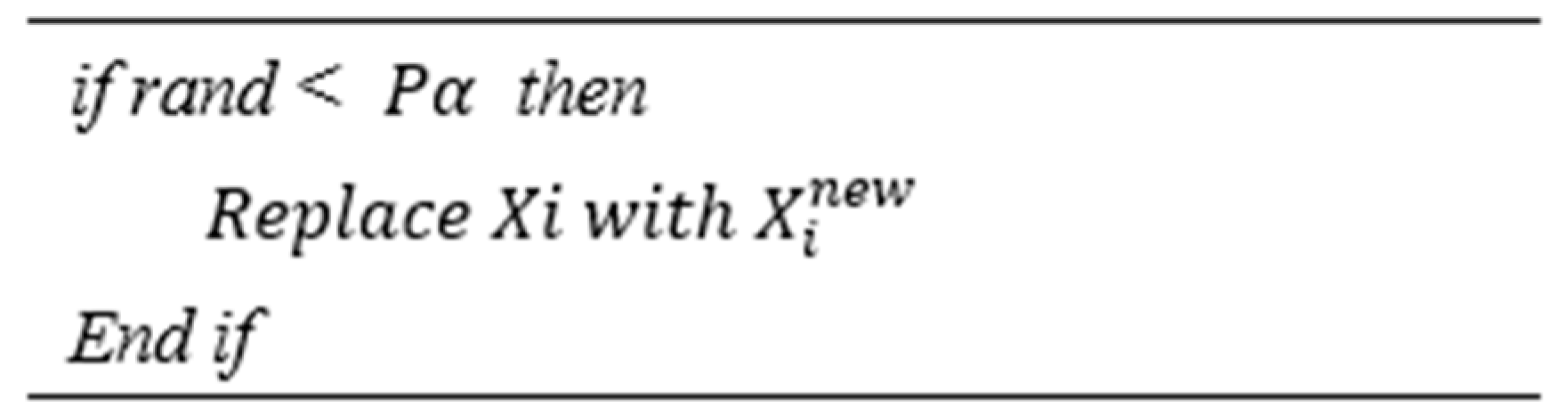
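One plausible reading of a multi-objective fitness with weights w1 to w5 is a weighted sum over the five metrics the study evaluates (execution time, power consumption, response time, cost, and SLA violations). The sketch below follows that reading. The pairing of weights to metrics, the min-max normalisation, the dictionary keys, and the function names are assumptions, so it should not be taken as the paper's exact formula.

```python
# Assumed metric order matching (w1, ..., w5); lower values are better.
METRIC_KEYS = ("exec_time", "energy", "response_time", "cost", "sla_violations")


def normalise(value, lo, hi):
    """Min-max scale a raw metric into [0, 1]; a degenerate range maps to 0."""
    if hi == lo:
        return 0.0
    return (value - lo) / (hi - lo)


def fitness(metrics, weights):
    """Weighted sum of five normalised cost-type metrics (to be minimised)."""
    if len(weights) != len(METRIC_KEYS):
        raise ValueError("expected one weight per metric (w1..w5)")
    return sum(w * metrics[key] for w, key in zip(weights, METRIC_KEYS))


# Illustrative values only: each metric is already normalised to 0.5.
example = {key: 0.5 for key in METRIC_KEYS}
score = fitness(example, (0.2, 0.2, 0.2, 0.2, 0.2))
```

Normalising before weighting keeps metrics with large raw ranges (for example, cost) from dominating those with small ranges (for example, SLA violation counts).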

3.1.5. Discovery and Replacement Strategy

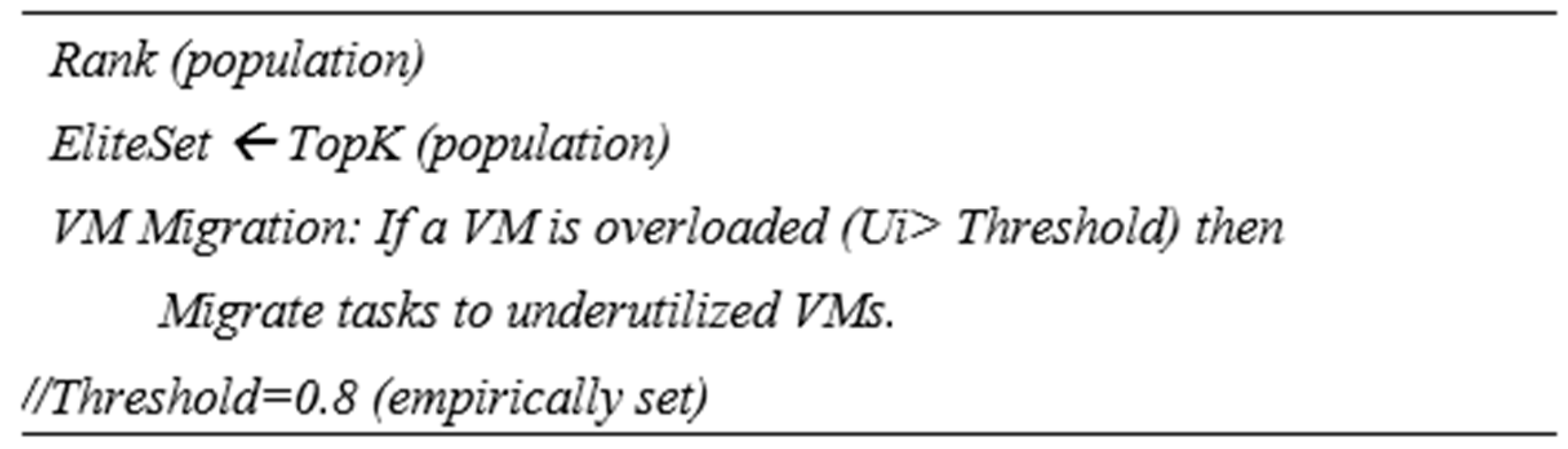
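The discovery step can be illustrated with a short sketch in which each nest other than the current best is abandoned with probability Pa and replaced by a fresh random allocation. This is a generic cuckoo-style replacement under stated assumptions, not necessarily the rule used in the study. The function name `abandon_and_replace`, the list-of-VM-indices encoding, and the convention that lower scores are better are all hypothetical.

```python
import random


def abandon_and_replace(nests, scores, pa, n_vms, rng=random):
    """Replace each non-best nest, with probability pa, by a random allocation.

    nests  : list of allocations, each a list of VM indices (assumed encoding)
    scores : fitness per nest, lower is better (assumed convention)
    """
    best_idx = min(range(len(nests)), key=scores.__getitem__)
    result = []
    for i, nest in enumerate(nests):
        if i != best_idx and rng.random() < pa:
            # Host "discovers" the alien egg: build a new nest from scratch.
            result.append([rng.randrange(n_vms) for _ in nest])
        else:
            # Keep the nest; copy so the caller's data is never mutated.
            result.append(list(nest))
    return result


random.seed(0)
population = [[0, 1], [2, 3], [4, 0]]
population_scores = [0.9, 0.1, 0.5]
next_population = abandon_and_replace(population, population_scores,
                                      pa=0.25, n_vms=5)
```

Protecting the best nest from abandonment is a simple form of elitism: the best allocation found so far can never be lost to a random restart.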

3.1.6. Nest Selection and Elitism

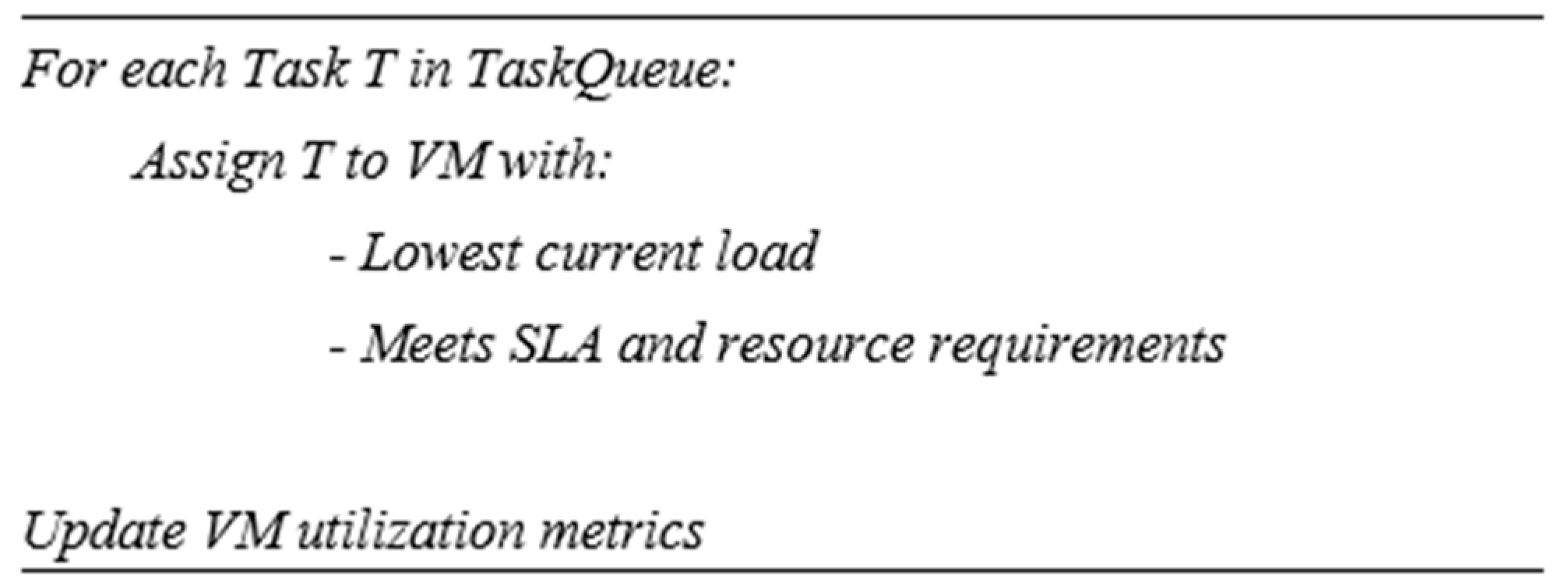

3.1.7. Task Scheduling Strategy

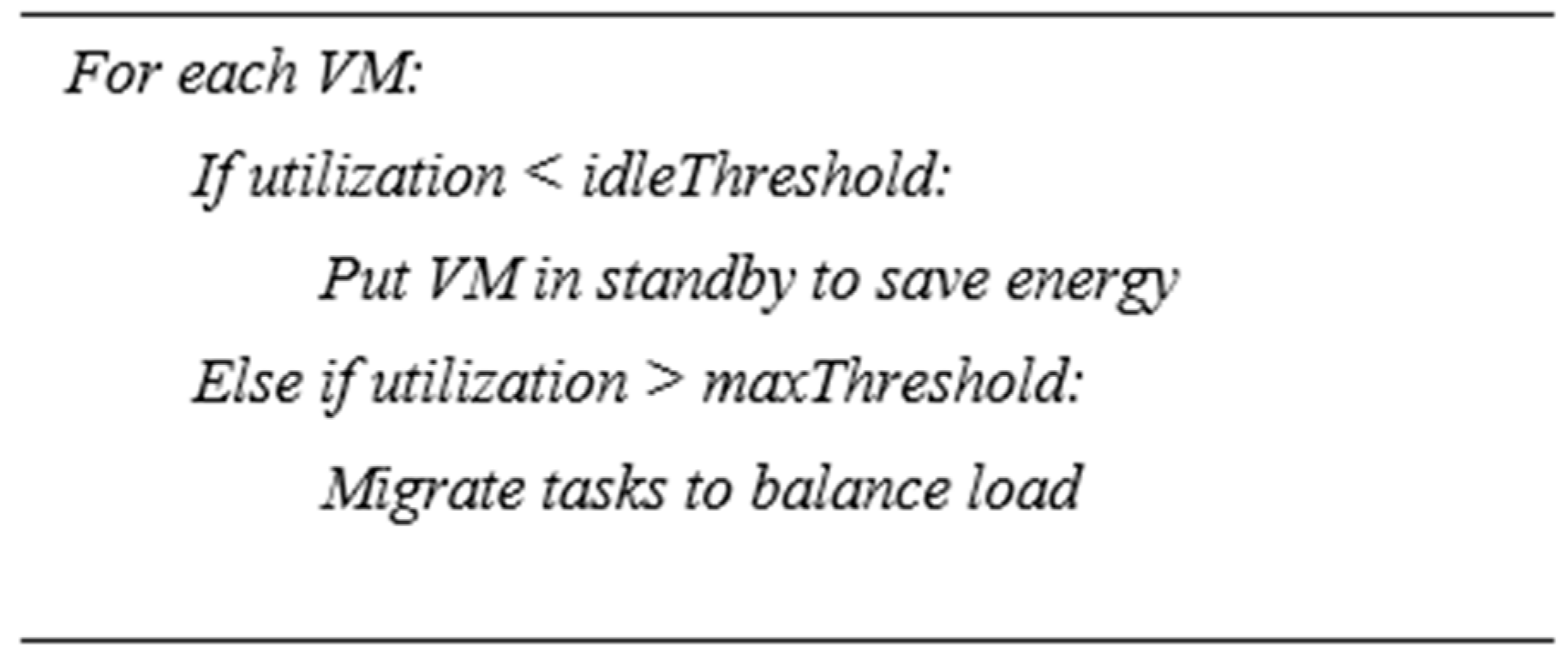

3.1.8. Energy-Aware VM Consolidation

3.1.9. Energy Management Model

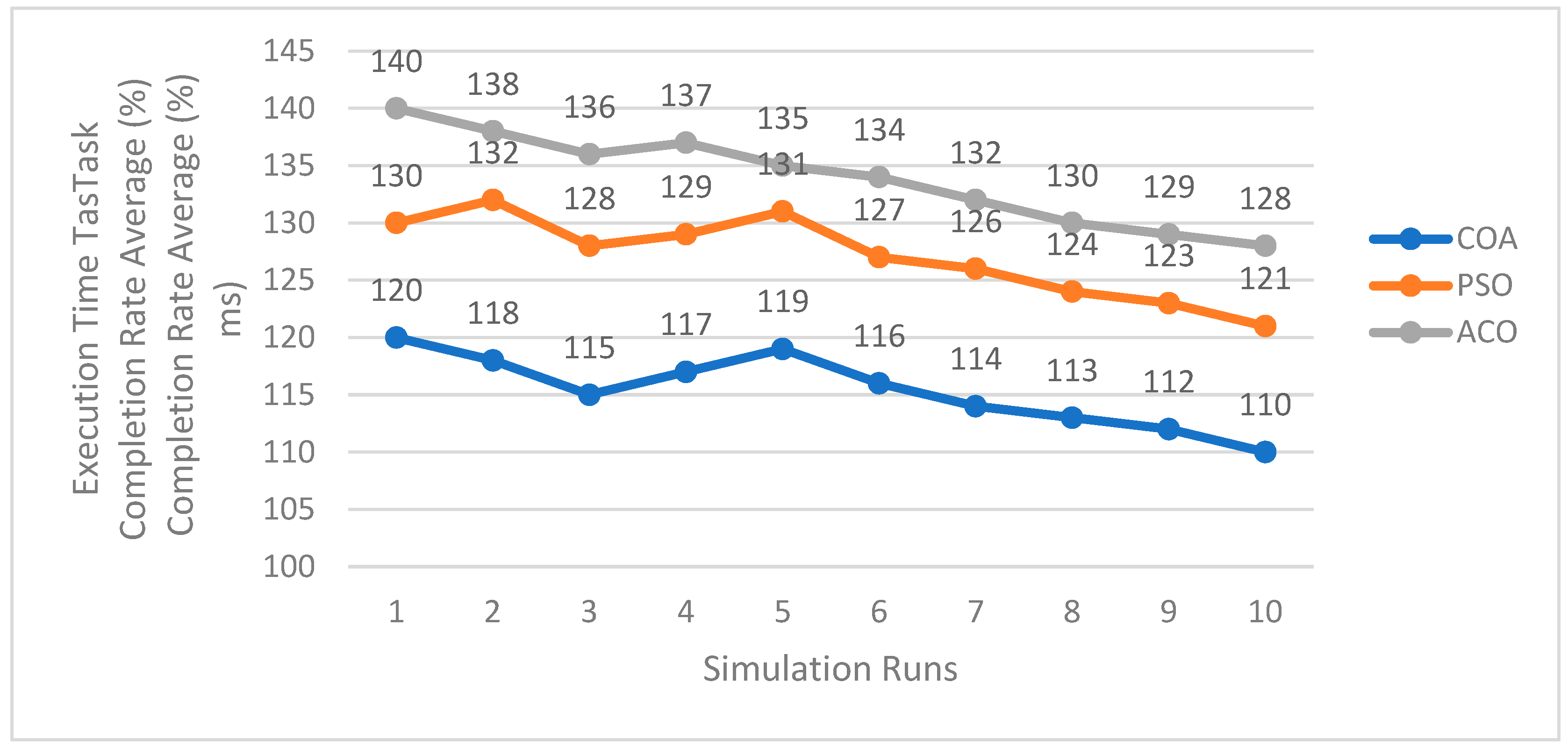

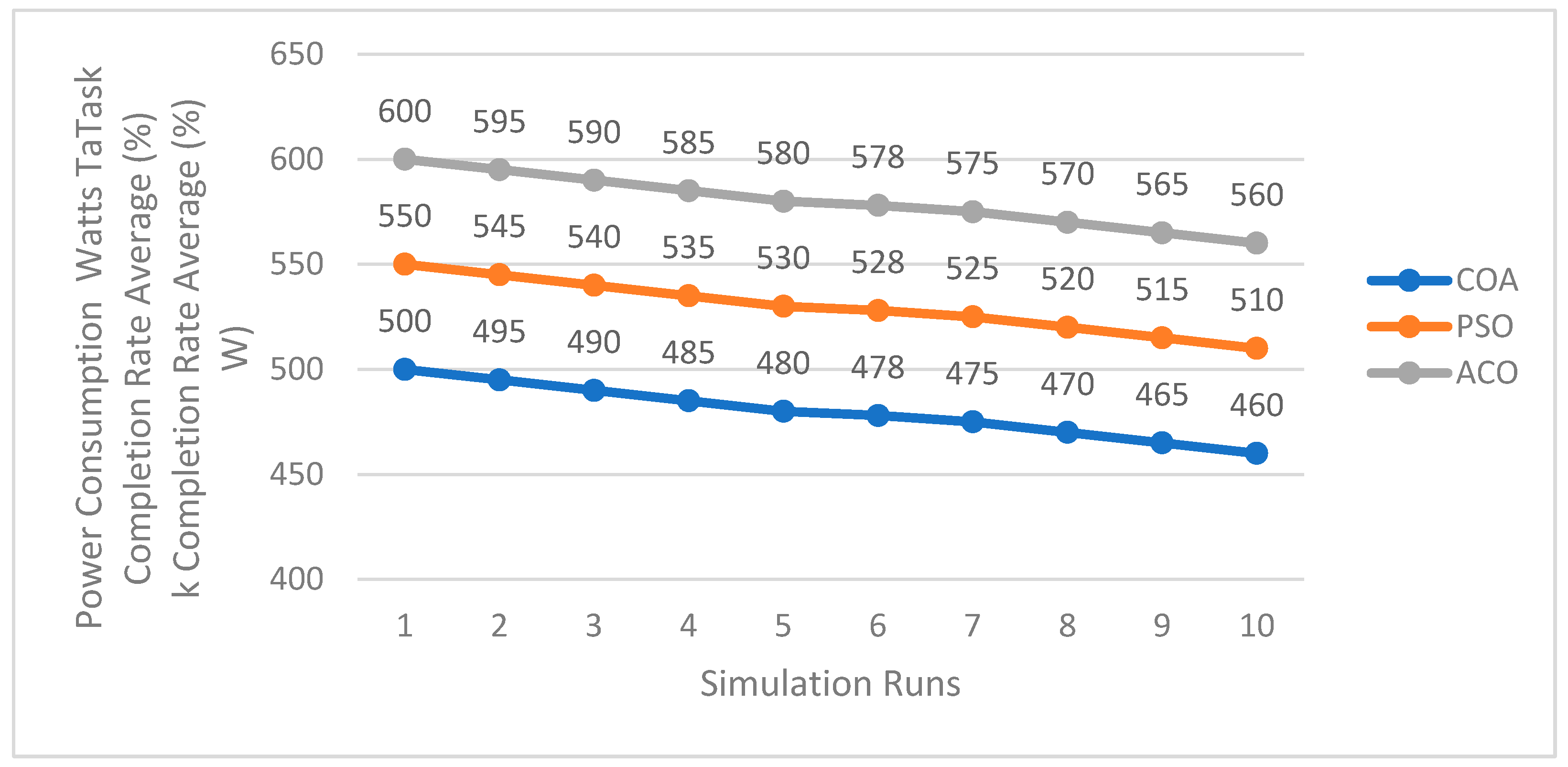

4. Simulation, Results and Discussion

4.1. Simulation

4.2. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SLA | Service Level Agreement |
| VM | Virtual Machine |
| COA | Cuckoo Optimization Algorithm |
| PSO | Particle Swarm Optimization |
| ACO | Ant Colony Optimization |
| SaaS | Software as a Service |
| PaaS | Platform as a Service |
| IaaS | Infrastructure as a Service |
| SOA | Service-Oriented Architecture |
| CPU | Central Processing Unit |
| MCE | Multi-Cloud Environment |
| GA | Genetic Algorithm |
| OEJSR | Optimized Efficient Job Scheduling Resource |
| LJFP | Longest Job to Fastest Processor |
| MCT | Minimum Completion Time |
| MSF | Multistage Forward Search |
| SMO | Spider Monkey Optimization |
| AWCO | Advanced Willow Catkin Optimization |
| GWO | Gray Wolf Optimization |
| AWS | Amazon Web Services |

References

1. Mahdizadeh, M.; Montazerolghaem, A.; Jamshidi, K. Task Scheduling and Load Balancing in SDN-Based Cloud Computing: A Review of Relevant Research. J. Eng. Res. 2024, S2307187724002773.

2. Choudhary, V.; Vithayathil, J. The Impact of Cloud Computing: Should the IT Department Be Organized as a Cost Center or a Profit Center? J. Manag. Inf. Syst. 2013, 30, 67–100.

3. Rallabandi, V.S.S.S.N.; Gottumukkala, P.; Singh, N.; Shah, S.K. Optimized Efficient Job Scheduling Resource (OEJSR) Approach Using Cuckoo and Grey Wolf Job Optimization to Enhance Resource Search in Cloud Environment. Cogent Eng. 2024, 11, 2335363.

4. Avram, M.G. Advantages and Challenges of Adopting Cloud Computing from an Enterprise Perspective. Procedia Technol. 2014, 12, 529–534.

5. Biswas, D.; Jahan, S.; Saha, S.; Samsuddoha, M. A Succinct State-of-the-Art Survey on Green Cloud Computing: Challenges, Strategies, and Future Directions. Sustain. Comput. Inform. Syst. 2024, 44, 101036.

6. Mumtaz, R.; Samawi, V.; Alhroob, A.; Alzyadat, W.; Almukahel, I. PDIS: A Service Layer for Privacy and Detecting Intrusions in Cloud Computing. Int. J. Adv. Soft Comput. Its Appl. 2022, 14, 15–35.

7. Idahosa, M.E.; Eireyi-Edewede, S.; Eruanga, C.E. Application of Cloud Computing Technology for Enhances E-Government Services in Edo State. In Advances in Electronic Government, Digital Divide, and Regional Development; Lytras, M.D., Alkhaldi, A.N., Ordóñez De Pablos, P., Eds.; IGI Global: Hershey, PA, USA, 2024; pp. 221–278. ISBN 979-8-3693-7678-2.

8. Tarawneh, H.; Alhadid, I.; Khwaldeh, S.; Afaneh, S. An Intelligent Cloud Service Composition Optimization Using Spider Monkey and Multistage Forward Search Algorithms. Symmetry 2022, 14, 82.

9. Ma, H.; Chen, Y.; Zhu, H.; Zhang, H.; Tang, W. Optimization of Cloud Service Composition for Data-Intensive Applications via E-CARGO. In Proceedings of the 2018 IEEE 22nd International Conference on Computer Supported Cooperative Work in Design (CSCWD), Nanjing, China, 9–11 May 2018; IEEE: Piscataway, NJ, USA; pp. 785–789.

10. Wajid, U.; Marin, C.A.; Karageorgos, A. Optimizing Energy Efficiency in the Cloud Using Service Composition and Runtime Adaptation Techniques. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics, Manchester, UK, 13–16 October 2013; IEEE: Piscataway, NJ, USA; pp. 115–120.

11. Khwaldeh, S.; Abu-taieh, E.; Alhadid, I.; Alkhawaldeh, R.S.; Masa’deh, R. DyOrch: Dynamic Orchestrator for Improving Web Services Composition. In Proceedings of the 33rd International Business Information Management Association Conference, IBIMA 2019, Granada, Spain, 10–11 April 2019; pp. 6030–6047.

12. Alhadid, I.; Tarawneh, H.; Kaabneh, K.; Masa’deh, R.; Hamadneh, N.N.; Tahir, M.; Khwaldeh, S. Optimizing Service Composition (SC) Using Smart Multistage Forward Search (SMFS). Intell. Autom. Soft Comput. 2021, 28, 321–336.

13. AlHadid, I.; Abu-Taieh, E. Web Services Composition Using Dynamic Classification and Simulated Annealing. MAS 2018, 12, 395.

14. Masdari, M.; Nozad Bonab, M.; Ozdemir, S. QoS-Driven Metaheuristic Service Composition Schemes: A Comprehensive Overview. Artif. Intell. Rev. 2021, 54, 3749–3816.

15. Nazif, H.; Nassr, M.; Al-Khafaji, H.M.R.; Jafari Navimipour, N.; Unal, M. A Cloud Service Composition Method Using a Fuzzy-Based Particle Swarm Optimization Algorithm. Multimed. Tools Appl. 2023, 83, 56275–56302.

16. Sreeramulu, M.D.; Mohammed, A.S.; Kalla, D.; Boddapati, N.; Natarajan, Y. AI-Driven Dynamic Workload Balancing for Real-Time Applications on Cloud Infrastructure. In Proceedings of the 2024 7th International Conference on Contemporary Computing and Informatics (IC3I), Greater Noida, India, 18 September 2024; IEEE: Piscataway, NJ, USA; pp. 1660–1665.

17. Fister, I.; Yang, X.-S.; Fister, D.; Fister, I. Cuckoo Search: A Brief Literature Review. In Cuckoo Search and Firefly Algorithm; Yang, X.-S., Ed.; Studies in Computational Intelligence; Springer International Publishing: Cham, Switzerland, 2014; Volume 516, pp. 49–62. ISBN 978-3-319-02140-9.

18. Rajabioun, R. Cuckoo Optimization Algorithm. Appl. Soft Comput. 2011, 11, 5508–5518.

19. Yang, X.-S.; Deb, S. Cuckoo Search: Recent Advances and Applications. Neural Comput. Appl. 2014, 24, 169–174.

20. Chiroma, H.; Herawan, T.; Fister, I.; Fister, I.; Abdulkareem, S.; Shuib, L.; Hamza, M.F.; Saadi, Y.; Abubakar, A. Bio-Inspired Computation: Recent Development on the Modifications of the Cuckoo Search Algorithm. Appl. Soft Comput. 2017, 61, 149–173.

21. Yang, X.-S. (Ed.) Cuckoo Search and Firefly Algorithm: Theory and Applications; Studies in Computational Intelligence; Springer International Publishing: Cham, Switzerland, 2014; Volume 516, ISBN 978-3-319-02140-9.

22. Kurdi, H.; Ezzat, F.; Altoaimy, L.; Ahmed, S.H.; Youcef-Toumi, K. MultiCuckoo: Multi-Cloud Service Composition Using a Cuckoo-Inspired Algorithm for the Internet of Things Applications. IEEE Access 2018, 6, 56737–56749.

23. Zavieh, H.; Javadpour, A.; Li, Y.; Ja’fari, F.; Nasseri, S.H.; Rostami, A.S. Task Processing Optimization Using Cuckoo Particle Swarm (CPS) Algorithm in Cloud Computing Infrastructure. Clust. Comput. 2023, 26, 745–769.

24. Liang, H.T.; Kang, F.H. Adaptive Mutation Particle Swarm Algorithm with Dynamic Nonlinear Changed Inertia Weight. Optik 2016, 127, 8036–8042.

25. Dordaie, N.; Navimipour, N.J. A Hybrid Particle Swarm Optimization and Hill Climbing Algorithm for Task Scheduling in the Cloud Environments. ICT Express 2018, 4, 199–202.

26. Guntsch, M.; Middendorf, M. A Population Based Approach for ACO. In Applications of Evolutionary Computing; Cagnoni, S., Gottlieb, J., Hart, E., Middendorf, M., Raidl, G.R., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2002; Volume 2279, pp. 72–81. ISBN 978-3-540-43432-0.

27. Stützle, T.; Dorigo, M. ACO Algorithms for the Traveling Salesman Problem. Evol. Algorithms Eng. Comput. Sci. 1999, 4, 163–183.

28. Yin, C.; Li, S.; Li, X. An Optimization Method of Cloud Manufacturing Service Composition Based on Matching-Collaboration Degree. Int. J. Adv. Manuf. Technol. 2024, 131, 343–353.

29. Bei, L.; Wenlin, L.; Xin, S.; Xibin, X. An Improved ACO Based Service Composition Algorithm in Multi-Cloud Networks. J. Cloud Comput. 2024, 13, 17.

30. Prity, F.S.; Uddin, K.M.A.; Nath, N. Exploring Swarm Intelligence Optimization Techniques for Task Scheduling in Cloud Computing: Algorithms, Performance Analysis, and Future Prospects. Iran J. Comput. Sci. 2024, 7, 337–358.

31. Gupta, S.; Tripathi, S. A Comprehensive Survey on Cloud Computing Scheduling Techniques. Multimed. Tools Appl. 2023, 83, 53581–53634.

32. Aslanpour, M.S.; Gill, S.S.; Toosi, A.N. Performance Evaluation Metrics for Cloud, Fog and Edge Computing: A Review, Taxonomy, Benchmarks and Standards for Future Research. Internet Things 2020, 12, 100273.

33. Kumar, M.; Sharma, S.C.; Goel, A.; Singh, S.P. A Comprehensive Survey for Scheduling Techniques in Cloud Computing. J. Netw. Comput. Appl. 2019, 143, 1–33.

34. Assudani, P.; Abimannan, S. Cost Efficient Resource Scheduling in Cloud Computing: A Survey. Int. J. Eng. Technol. 2018, 7, 38–43.

35. Katal, A.; Dahiya, S.; Choudhury, T. Energy Efficiency in Cloud Computing Data Centers: A Survey on Software Technologies. Clust. Comput. 2023, 26, 1845–1875.

36. Mao, M.; Humphrey, M. A Performance Study on the VM Startup Time in the Cloud. In Proceedings of the 2012 IEEE Fifth International Conference on Cloud Computing, Honolulu, HI, USA, 24–29 June 2012; IEEE: Piscataway, NJ, USA; pp. 423–430.

37. Yakubu, I.Z.; Musa, Z.A.; Muhammed, L.; Ja’afaru, B.; Shittu, F.; Matinja, Z.I. Service Level Agreement Violation Preventive Task Scheduling for Quality of Service Delivery in Cloud Computing Environment. Procedia Comput. Sci. 2020, 178, 375–385.

38. Ahmad, M.; Abawajy, J.H. Service Level Agreements for the Digital Library. Procedia - Soc. Behav. Sci. 2014, 147, 237–243.

39. Qazi, F.; Kwak, D.; Khan, F.G.; Ali, F.; Khan, S.U. Service Level Agreement in Cloud Computing: Taxonomy, Prospects, and Challenges. Internet Things 2024, 25, 101126.

40. Ghandour, O.; El Kafhali, S.; Hanini, M. Adaptive Workload Management in Cloud Computing for Service Level Agreements Compliance and Resource Optimization. Comput. Electr. Eng. 2024, 120, 109712.

41. Mondal, B. Load Balancing in Cloud Computing Using Cuckoo Search Algorithm. Int. J. Cloud Comput. 2024, 13, 267–284.

42. Ala’anzy, M.; Othman, M. Load Balancing and Server Consolidation in Cloud Computing Environments: A Meta-Study. IEEE Access 2019, 7, 141868–141887.

43. Devi, N.; Dalal, S.; Solanki, K.; Dalal, S.; Lilhore, U.K.; Simaiya, S.; Nuristani, N. A Systematic Literature Review for Load Balancing and Task Scheduling Techniques in Cloud Computing. Artif. Intell. Rev. 2024, 57, 276.

44. Mahmoud, H.; Thabet, M.; Khafagy, M.H.; Omara, F.A. An Efficient Load Balancing Technique for Task Scheduling in Heterogeneous Cloud Environment. Clust. Comput. 2021, 24, 3405–3419.

45. Shafiq, D.A.; Jhanjhi, N.Z.; Abdullah, A. Load Balancing Techniques in Cloud Computing Environment: A Review. J. King Saud Univ. - Comput. Inf. Sci. 2022, 34, 3910–3933.

46. Milani, A.S.; Navimipour, N.J. Load Balancing Mechanisms and Techniques in the Cloud Environments: Systematic Literature Review and Future Trends. J. Netw. Comput. Appl. 2016, 71, 86–98.

47. Jayaswal, C.J.; Bindulal, P. Edge Computing: Applications, Challenges and Opportunities. J. Comput. Technol. Appl. 2023, 9, 1–4.

48. Zhou, Z.; Li, F.; Zhu, H.; Xie, H.; Abawajy, J.H.; Chowdhury, M.U. An Improved Genetic Algorithm Using Greedy Strategy toward Task Scheduling Optimization in Cloud Environments. Neural Comput. Appl. 2020, 32, 1531–1541.

49. Kadarla, K.; Sharma, S.C.; Bhardwaj, T.; Chaudhary, A. A Simulation Study of Response Times in Cloud Environment for IoT-Based Healthcare Workloads. In Proceedings of the 2017 IEEE 14th International Conference on Mobile Ad Hoc and Sensor Systems (MASS), Orlando, FL, USA, 22–25 October 2017; IEEE: Piscataway, NJ, USA; pp. 678–683.

50. Ebadifard, F.; Babamir, S.M. PSO Based Task Scheduling Algorithm Improved Using a Load-Balancing Technique for the Cloud Computing Environment. Concurr. Comput. 2018, 30, e4368.

51. Abbasi, A.A.; Abbasi, A.; Shamshirband, S.; Chronopoulos, A.T.; Persico, V.; Pescape, A. Software-Defined Cloud Computing: A Systematic Review on Latest Trends and Developments. IEEE Access 2019, 7, 93294–93314.

52. Yin, H.; Huang, X.; Cao, E. A Cloud-Edge-Based Multi-Objective Task Scheduling Approach for Smart Manufacturing Lines. J. Grid Comput. 2024, 22, 9.

53. Behera, I.; Sobhanayak, S. Task Scheduling Optimization in Heterogeneous Cloud Computing Environments: A Hybrid GA-GWO Approach. J. Parallel Distrib. Comput. 2024, 183, 104766.

54. Sarrafzade, N.; Entezari-Maleki, R.; Sousa, L. A Genetic-Based Approach for Service Placement in Fog Computing. J. Supercomput. 2022, 78, 10854–10875.

55. Kruekaew, B.; Kimpan, W. Multi-Objective Task Scheduling Optimization for Load Balancing in Cloud Computing Environment Using Hybrid Artificial Bee Colony Algorithm With Reinforcement Learning. IEEE Access 2022, 10, 17803–17818.

56. Wang, D.; Wang, J.; Liu, X.; Yu, J.; Gu, H.; Wang, C.; Liu, J.; Zhang, Y. Towards Dynamic Virtual Machine Placement Based on Safety Parameters and Resource Utilization Fluctuation for Energy Savings and QoS Improvement in Cloud Computing. Future Gener. Comput. Syst. 2025, 171, 107853.

57. Banerjee, S.; Roy, S.; Khatua, S. Towards Energy and QoS Aware Dynamic VM Consolidation in a Multi-Resource Cloud. Future Gener. Comput. Syst. 2024, 157, 376–391.

58. Muhairat, M.; Abdallah, M.; Althunibat, A. Cloud Computing in Higher Educational Institutions. Compusoft 2019, 8, 3507–3513.

59. Du, T.; Xiao, G.; Chen, J.; Zhang, C.; Sun, H.; Li, W.; Geng, Y. A Combined Priority Scheduling Method for Distributed Machine Learning. J. Wireless Com Network 2023, 2023, 45.

60. Rawajbeh, M.A. Performance Evaluation of a Computer Network in a Cloud Computing Environment. ICIC Express Lett. 2019, 13, 719–727.

61. N Al-Dwairi, R.; Jditawi, W. The Role of Cloud Computing on the Governmental Units Performance and EParticipation (Empirical Study). Int. J. Adv. Soft Comput. Its Appl. 2022, 14, 79–93.

62. Alwasouf, A.A.; Kumar, D. Research Challenges of Web Service Composition. In Software Engineering; Hoda, M.N., Chauhan, N., Quadri, S.M.K., Srivastava, P.R., Eds.; Advances in Intelligent Systems and Computing; Springer: Singapore, 2019; Volume 731, pp. 681–689. ISBN 978-981-10-8847-6.

63. Houssein, E.H.; Gad, A.G.; Wazery, Y.M.; Suganthan, P.N. Task Scheduling in Cloud Computing Based on Meta-Heuristics: Review, Taxonomy, Open Challenges, and Future Trends. Swarm Evol. Comput. 2021, 62, 100841.

64. Shao, K.; Fu, H.; Wang, B. An Efficient Combination of Genetic Algorithm and Particle Swarm Optimization for Scheduling Data-Intensive Tasks in Heterogeneous Cloud Computing. Electronics 2023, 12, 3450.

65. Asghari, S.; Jafari Navimipour, N. The Role of an Ant Colony Optimisation Algorithm in Solving the Major Issues of the Cloud Computing. J. Exp. Theor. Artif. Intell. 2023, 35, 755–790.

66. Krishnadoss, P.; Chandrashekar, C.; Poornachary, V.K. RCOA Scheduler: Rider Cuckoo Optimization Algorithm for Task Scheduling in Cloud Computing. Int. J. Intell. Eng. Syst. 2022, 15, 505–514.

67. Azari, M.S.; Bouyer, A.; Zadeh, N.F. Service Composition with Knowledge of Quality in the Cloud Environment Using the Cuckoo Optimization and Artificial Bee Colony Algorithms. In Proceedings of the 2015 2nd International Conference on Knowledge-Based Engineering and Innovation (KBEI), Tehran, Iran, 5–6 November 2015; IEEE: Piscataway, NJ, USA; pp. 539–545.

68. Dahan, F.; Alwabel, A. Artificial Bee Colony with Cuckoo Search for Solving Service Composition. Intell. Autom. Soft Comput. 2023, 35, 3385–3402.

69. Subbulakshmi, S.; Ramar, K.; Saji, A.E.; Chandran, G. Optimized Web Service Composition Using Evolutionary Computation Techniques. In Intelligent Data Communication Technologies and Internet of Things; Hemanth, J., Bestak, R., Chen, J.I.-Z., Eds.; Lecture Notes on Data Engineering and Communications Technologies; Springer: Singapore, 2021; Volume 57, pp. 457–470. ISBN 978-981-15-9508-0.

70. Tawfeek, M.A.; El-Sisi, A.; Keshk, A.E.; Torkey, F.A. Cloud Task Scheduling Based on Ant Colony Optimization. In Proceedings of the 2013 8th International Conference on Computer Engineering & Systems (ICCES), Cairo, Egypt, 26–27 November 2013; IEEE: Piscataway, NJ, USA; pp. 64–69.

71. Nabi, S.; Ahmad, M.; Ibrahim, M.; Hamam, H. AdPSO: Adaptive PSO-Based Task Scheduling Approach for Cloud Computing. Sensors 2022, 22, 920.

72. Sharma, N.; Sonal; Garg, P. Ant Colony Based Optimization Model for QoS-Based Task Scheduling in Cloud Computing Environment. Meas. Sens. 2022, 24, 100531.

73. Dubey, K.; Sharma, S.C. A Novel Multi-Objective CR-PSO Task Scheduling Algorithm with Deadline Constraint in Cloud Computing. Sustain. Comput. Inform. Syst. 2021, 32, 100605.

74. Pradhan, A.; Bisoy, S.K. A Novel Load Balancing Technique for Cloud Computing Platform Based on PSO. J. King Saud Univ. - Comput. Inf. Sci. 2022, 34, 3988–3995.

75. Alsaidy, S.A.; Abbood, A.D.; Sahib, M.A. Heuristic Initialization of PSO Task Scheduling Algorithm in Cloud Computing. J. King Saud Univ. - Comput. Inf. Sci. 2022, 34, 2370–2382.

76. Dogani, J.; Khunjush, F. Cloud Service Composition Using Genetic Algorithm and Particle Swarm Optimization. In Proceedings of the 2021 11th International Conference on Computer Engineering and Knowledge (ICCKE), Mashhad, Iran, 28 October 2021; IEEE: Piscataway, NJ, USA; pp. 98–104.

77. Nezafat Tabalvandani, M.A.; Hosseini Shirvani, M.; Motameni, H. Reliability-Aware Web Service Composition with Cost Minimization Perspective: A Multi-Objective Particle Swarm Optimization Model in Multi-Cloud Scenarios. Soft Comput. 2024, 28, 5173–5196.

78. Jangu, N.; Raza, Z. Improved Jellyfish Algorithm-Based Multi-Aspect Task Scheduling Model for IoT Tasks over Fog Integrated Cloud Environment. J. Cloud Comput. 2022, 11, 98.

79. Li, Q.; Peng, Z.; Cui, D.; Lin, J.; Zhang, H. UDL: A Cloud Task Scheduling Framework Based on Multiple Deep Neural Networks. J. Cloud Comput. 2023, 12, 114.

80. Kadhim, Q.K.; Yusof, R.; Mahdi, H.S.; Ali Al-shami, S.S.; Selamat, S.R. A Review Study on Cloud Computing Issues. J. Phys. Conf. Ser. 2018, 1018, 012006.

81. Cheikh, S.; Walker, J.J. Solving Task Scheduling Problem in the Cloud Using a Hybrid Particle Swarm Optimization Approach. Int. J. Appl. Metaheuristic Comput. 2021, 13, 1–25.

82. Wei, X. Task Scheduling Optimization Strategy Using Improved Ant Colony Optimization Algorithm in Cloud Computing. J. Ambient. Intell. Hum. Comput. 2020.

83. Chahal, P.K.; Kumar, K.; Soodan, B.S. Grey Wolf Algorithm for Cost Optimization of Cloud Computing Repairable System with N-Policy, Discouragement and Two-Level Bernoulli Feedback. Math. Comput. Simul. 2024, 225, 545–569.

84. Yu, N.; Zhang, A.-N.; Chu, S.-C.; Pan, J.-S.; Yan, B.; Watada, J. Innovative Approaches to Task Scheduling in Cloud Computing Environments Using an Advanced Willow Catkin Optimization Algorithm. Comput. Mater. Contin. 2025, 82, 2495–2520.

85. Calheiros, R.N.; Ranjan, R.; Beloglazov, A.; De Rose, C.A.F.; Buyya, R. CloudSim: A Toolkit for Modeling and Simulation of Cloud Computing Environments and Evaluation of Resource Provisioning Algorithms. Softw. Pract. Exp. 2011, 41, 23–50.

| Performance Metrics Evaluated | Author(s)/Year | Optimization Technique/Algorithm |
|---|---|---|
| Response Time | Kurdi et al., 2018 [22] | Bio-inspired optimization algorithm based on cuckoo birds |
| Load Balance, VM Utilization | Tarawneh et al., 2022 [8] | Spider Monkey Optimization (SMO) algorithm |
| Latency and Response Time | Bei et al., 2024 [29] | ACO |
| Task Scheduling, SLA | Jangu and Raza, 2022 [78] | Improved Jellyfish Algorithm |
| Task Scheduling, Execution Time | Li et al., 2023 [79] | Multiple deep neural networks |
| Load Balancing, Execution Time, Cost | Kadhim et al., 2018 [80] | Limitations of Traditional Rule-Based Approaches |
| QoS Optimization, Avoid Local Optima | Bei et al., 2024 [29] | Enhanced ACO + Genetic-Algorithm-Inspired Mutation |
| Data-Intensive Task Scheduling | Shao et al., 2023 [64] | PGSAO (GA + PSO) hybrid |
| Task Scheduling Across VMs | Cheikh and Walker, 2021 [81] | Hybrid Particle Swarm Optimization |
| Task Scheduling, Load Balancing | Wei, 2022 [82] | Improved ACO with Satisfaction Function |
| Fault Tolerance, Cost Optimization | Chahal et al., 2024 [83] | Gray Wolf Algorithm (GWA) |
| Multi-objective Task Scheduling | Behera and Sobhanayak, 2024 [53] | Hybrid Genetic Algorithm + Gray Wolf Optimization (GA-GWO) |
| Task Scheduling, Load Balancing | Yu et al., 2025 [84] | Advanced Willow Catkin Optimization (AWCO) |
| Task Scheduling, Load Balancing | Pradhan and Bisoy, 2022 [74] | PSO |
| Task Scheduling | Sharma et al., 2022 [72] | ACO |
| Cost | Ahmad et al., 2023 [85] | Cost optimization in a cloud/fog environment based on Task Deadline |

| Parameter | Value |
|---|---|
| Number of Requests | 1000–5000 (varies) |
| Number of Cloudlets | 100–500 (varies) |
| Number of VMs | 10–50 |
| Number of Data Centers | 1–3 |
| Host Configuration | Quad-core, 16 GB RAM |
| VM Configuration | 2 vCPUs, 4 GB RAM, 1000 MIPS |
| Scheduling Policy | Time-Shared/Space-Shared |
| Load Balancing Algorithm | COA, PSO, ACO |
| Simulation Runs | 10 |
| Energy-Aware Mechanism | Enabled |
| Number of Services per Composition | 5–20 |
| Service Categories | Computation-intensive, storage-intensive, latency-sensitive, energy-efficient |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

AlHadid, I.; Abu-Taieh, E.; Al Rawajbeh, M.; Afaneh, S.; Daghbosheh, M.E.; Alkhawaldeh, R.S.; Khwaldeh, S.; Alrowwad, A. Optimizing Cloud Service Composition with Cuckoo Optimization Algorithm for Enhanced Resource Allocation and Energy Efficiency. Future Internet 2025, 17, 526. https://doi.org/10.3390/fi17110526
