1. Introduction

Quantum key distribution (QKD) has emerged as the only proven method to achieve information-theoretically secure key exchange between two legitimate parties, conventionally referred to as Alice and Bob [1,2]. Unlike classical cryptographic schemes, whose security relies on computational assumptions such as the hardness of integer factorization or discrete logarithms [3,4], QKD derives its security directly from the fundamental principles of quantum mechanics. The impossibility of perfectly cloning an unknown quantum state, guaranteed by the no-cloning theorem [5], together with the Heisenberg uncertainty principle [6], ensures that any attempt by an eavesdropper (Eve) to intercept the quantum channel inevitably introduces detectable disturbances. In principle, this enables QKD to provide unconditional security for key exchange, provided the system is implemented without imperfections [7].

In this context, an alternative promising paradigm is quantum secure direct communication (QSDC) [8,9,10], which enables the direct transmission of secret messages with information-theoretic security, thereby eliminating the need for prior key establishment and potentially enhancing efficiency in scenarios that require immediate secure data exchange. However, many practical QSDC implementations demand substantially different physical resources or operational models (such as hyperentangled states with complete Bell-state discrimination, two-way quantum operations, or variants employing forward error correction), and they exhibit distinct error and loss characteristics that complicate direct comparison with prepare-and-measure QKD schemes [10]. Moreover, QKD protocols currently benefit from greater experimental maturity, scalability, and integration with existing communication infrastructures, thereby motivating our focus on improving the practical security of QKD through realistic eavesdropper detection methods.

The strategic relevance of QKD extends well beyond academic interest. Its potential applications in the military and defense domains are particularly critical, as it promises to secure tactical and strategic communications, protect diplomatic and intelligence channels, and enable resilient satellite-based secure networks [11,12]. Beyond defense, QKD has potential applications in protecting critical infrastructure such as energy grids, transportation systems, and global financial networks [13,14,15]. This is of growing importance as advances in quantum computing threaten the long-term security of classical cryptographic systems. For military and governmental use, it is, therefore, imperative to develop quantum-resistant solutions that can ensure the confidentiality of classified information over extended time horizons. Nevertheless, despite rapid progress in recent years, significant obstacles remain to the widespread deployment of QKD, including hardware limitations, channel imperfections, and the need for scalable and cost-effective implementations [16].

Among the many QKD protocols, the Six-State protocol [17,18] represents a natural extension of the well-known BB84 scheme, offering increased robustness against noise by employing three mutually unbiased bases. Its design allows for enhanced detection of eavesdropping attempts, particularly in channels affected by collective-rotation noise [19]. This makes the Six-State protocol an attractive candidate for environments where transmission imperfections are unavoidable, though the presence of realistic noise sources and imperfections still poses challenges to its implementation.

In this work, we address one of the key challenges in deploying QKD: the reliable detection of an eavesdropper under realistic attack models [20]. Specifically, we investigate the Six-State protocol under a partial intercept-and-resend attack, in which Eve targets only a fraction of the transmitted qubits in order to reduce her chances of detection. Additionally, we incorporate system noise into our network model, as it can further complicate the assessment of both the protocol security and the overall system performance [21]. In practice, distinguishing with absolute certainty between quantum errors induced by an eavesdropping attack and those resulting from imperfections in the quantum channel remains a significant challenge. Consequently, as emphasized in [22], most studies on QKD protocols have concentrated on evaluating performance in terms of the achievable secret key rate rather than on detecting the presence of an eavesdropper. This work addresses this issue by proposing and evaluating two threshold-based detection methods designed to identify eavesdropping attempts while accounting for both channel noise and the statistical properties of the quantum bit error rate (QBER). Through extensive simulation on backends inspired by real IBM quantum devices, we demonstrate the effectiveness and accuracy of the proposed methods, thereby contributing to the advancement of practical, secure implementations of Six-State QKD.

Paper Contribution

The primary contributions are as follows:

The paper generalizes to the Six-State protocol the threshold-based eavesdropper detection techniques proposed for the BB84 scheme in [23,24]. We explicitly derive two optimal QBER detection thresholds designed to minimize both the false positive rate (FPR) and false negative rate (FNR), based, respectively, on Hoeffding’s upper bounds [25] and on a limiting probability density function approach, thereby broadening the applicability of threshold-based detection strategies.

The analysis explicitly incorporates the scenario of a partial intercept–resend attack (characterized by a constant interception density), while also accounting for channel noise. This scenario enables a nuanced evaluation of detection performance under variable attack intensities.

A comprehensive simulation model, developed on the QuantumSolver framework and implemented using the Qiskit library, is presented. This model facilitates both the testing of detection methods on real quantum systems and the isolation of different noise sources through controlled simulations. The simulation framework is used to systematically compare both methods in terms of detection accuracy and robustness. By doing so, we move beyond purely theoretical analysis and investigate the effects of practical system noise on detection performance.

2. Related Work

The standard security proof for Six-State QKD has been available since 2005 [26], and more recently a simpler proof has been proposed in [27]. The latter work entirely avoids the use of Rényi entropies, applying state smoothing directly in the Bell basis. Another class of studies investigates eavesdropping in the Six-State protocol when signal states are mixed with white noise [28,29]. In these works, the white noise is assumed to originate either from deliberate addition by Alice before the states leave her laboratory or from a realistic scenario in which Eve cannot replace the noisy quantum channel with a noiseless one. The authors analyze Eve’s optimal mutual information with Alice, for individual attacks, as a function of the qubit error rate. Their main finding is that added quantum noise reduces Eve’s mutual information more significantly than Bob’s. In contrast, our work focuses on the definition of a detection method for identifying Eve’s presence in intercept–resend attacks.

Garapo et al. [19] study the effect of collective-rotation noise on the security of the Six-State protocol under intercept–resend attacks. They first introduce a collective-rotation noise model for the Six-State protocol and provide a parametrization of the mutual information between Alice and Eve. The authors also derive the QBER for three intercept–resend scenarios, concluding that the Six-State protocol is robust against such attacks when the rotation angle remains within certain bounds. However, their work does not investigate detection methods for Eve’s presence.

Other studies have explored eavesdropping in combination with depolarizing errors in quantum channels [30]. In particular, the authors propose the use of a k-means clustering algorithm to analyze potential attacks in the BB84 protocol under depolarizing noise. Their results show that the shared keys remain secure even in the presence of both noise and eavesdropping, and they demonstrate that clustering is an efficient approach for detecting eavesdroppers, particularly when channel noise is relatively high. Unlike this approach, our work extends the detection techniques presented in [23,24] and develops a theoretical framework based on statistical analysis to define threshold-based detection methods for the Six-State protocol. These methods optimize FNR and FPR, while also allowing the user to adjust the balance between them depending on the application scenario.

Ding et al. [31] propose a machine-learning-based attack detection scheme (MADS) for continuous-variable QKD (CVQKD). Their method combines density-based spatial clustering of applications with noise (DBSCAN [32]) and multiclass support vector machines (MCSVMs [33]). MADS demonstrates high effectiveness in detecting quantum hacking attacks. However, it is specifically designed for CVQKD and requires the prior definition of attack-related features, which are used to construct feature vectors. These vectors serve as inputs for DBSCAN to identify and filter noise or outliers, after which trained MCSVMs are employed for classification and prediction. The output immediately determines whether a final secret key can be generated. Beyond its application to discrete-variable QKD, our approach distinguishes itself from these works by eliminating the need for a model training phase, relying instead on statistical analysis.

3. Background on Six-State Protocol

The Bechmann-Pasquinucci and Gisin protocol extends the BB84 scheme by using three mutually unbiased bases (MUBs) in a d-dimensional Hilbert space [17]. For the special case where $d = 2$ (qubit), these three bases correspond to the eigenbases of the Pauli X, Y, and Z operators. Explicitly, the three MUBs are defined as follows:

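The Computational basis (Z basis): $\{|0\rangle, |1\rangle\}$.

The Hadamard basis (X basis): $\{|+\rangle, |-\rangle\}$, with $|\pm\rangle = (|0\rangle \pm |1\rangle)/\sqrt{2}$.

The Circular basis (Y basis): $\{|{+i}\rangle, |{-i}\rangle\}$, with $|{\pm i}\rangle = (|0\rangle \pm i|1\rangle)/\sqrt{2}$.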
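As a quick numerical check of the three bases listed above, the following minimal Python sketch verifies mutual unbiasedness, i.e., that any state of one basis overlaps with any state of another basis with squared magnitude 1/2. The names `BASES`, `overlap_sq`, and `is_mutually_unbiased` are illustrative assumptions and do not come from the original work.

```python
import itertools
import math

SQRT_HALF = 1 / math.sqrt(2)

# Each state is written as (amplitude of |0>, amplitude of |1>).
BASES = {
    "Z": [(1, 0), (0, 1)],
    "X": [(SQRT_HALF, SQRT_HALF), (SQRT_HALF, -SQRT_HALF)],
    "Y": [(SQRT_HALF, 1j * SQRT_HALF), (SQRT_HALF, -1j * SQRT_HALF)],
}


def overlap_sq(a, b):
    """Return |<a|b>|^2 for two single-qubit state vectors."""
    inner = sum(complex(x).conjugate() * complex(y) for x, y in zip(a, b))
    return abs(inner) ** 2


def is_mutually_unbiased(bases, tol=1e-12):
    """Check that states from different bases always overlap with |<a|b>|^2 = 1/2."""
    for first, second in itertools.combinations(bases.values(), 2):
        for a in first:
            for b in second:
                if abs(overlap_sq(a, b) - 0.5) > tol:
                    return False
    return True


if __name__ == "__main__":
    print(is_mutually_unbiased(BASES))
```

The value 1/2 is what makes a measurement in the wrong basis behave like a fair coin flip, which is the property the protocol relies on.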

By introducing a third MUB, the protocol increases the uncertainty for an eavesdropper (Eve), thereby enhancing the security margin. In particular, having three MUBs implies six possible states, shown in Table 1. Having two more states than BB84 reduces the effectiveness of certain eavesdropping strategies, as Eve’s probability of correctly guessing the basis decreases.

The objective of the protocol is to enable Alice and Bob to establish a secure shared key. If an eavesdropper is detected during execution, the protocol must abort immediately. In its standard configuration, Alice utilizes a quantum source capable of generating states that encode randomly produced key bits. These qubits are subsequently transmitted to Bob via an insecure quantum channel. During transmission, an adversary (Eve) may interact with the system in an attempt to extract maximal information. Additionally, the protocol requires an authenticated classical channel through which Eve can observe all the exchanged messages but cannot alter them.

The protocol begins with the state preparation. Alice randomly selects one of the three MUBs and then randomly chooses one of the basis states within that basis to encode a key symbol. Each basis is chosen with probability $1/3$. After that, Alice generates the corresponding quantum state using, for example, the quantum coding scheme shown in Table 2, and sends it over the insecure quantum channel. During the quantum state transmission, Eve can execute an intercept–resend attack.

Upon receipt, Bob independently and randomly selects one of the three bases to measure the received state. The randomness in the choice of the measurement basis is fundamental: for the protocol to work correctly, Eve must not be able to predict the chosen basis. These two steps are repeated K times so that, at the end, both Alice and Bob have a list of K pairs (bit, basis). The bit transmitted by Alice is denoted as $b$, while the bit received by Bob is denoted as $b'$.

After transmission, Alice and Bob publicly announce, via the classical channel, the basis used for each state (but not the actual state). Only those states obtained when their choices of basis coincide are retained for key generation; the others are discarded. This procedure is denoted as key sifting. The a priori probability for Alice and Bob to choose the same basis is $1/3$. Hence, on average, $2/3$ of the exchanged bits are removed by key sifting.

Alice and Bob publicly and randomly disclose a subset of their remaining bits, whose size is denoted as the shared key length, n. The comparison of the shared bits is used to estimate the QBER, which is a critical metric for evaluating communication security and detecting potential eavesdropping. In an ideal noiseless scenario, the disclosed bits should exhibit perfect correlation. However, in practice, discrepancies may arise due to channel noise, measurement imperfections, or adversarial interference (e.g., by Eve). By analyzing a sufficiently large sample of shared bits, Alice and Bob can accurately estimate the QBER, which serves two key purposes: (1) to detect eavesdropping by checking whether the QBER is higher than expected, and (2) to quantify information leakage, given that the magnitude of the QBER provides an upper bound on the information Eve may have obtained. If the computed QBER exceeds a predefined security threshold, the protocol must be aborted, as the key exchange is no longer deemed secure. This threshold ensures that any excessive information leakage to an adversary results in immediate termination, preserving the system integrity. This step is essential in QKD, as it allows Alice and Bob to dynamically assess whether the key establishment process can proceed securely or must be halted.
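The sifting and QBER estimation steps described above can be sketched on purely classical data as follows. This is a minimal illustration, not the authors' implementation: the function names `sift` and `estimate_qber`, and the representation of bases as the strings "Z", "X", and "Y", are assumptions made for the example.

```python
import random


def sift(alice_bits, alice_bases, bob_bits, bob_bases):
    """Keep only the positions where Alice and Bob used the same basis."""
    kept = [
        (a, b)
        for a, b, basis_a, basis_b in zip(alice_bits, bob_bits, alice_bases, bob_bases)
        if basis_a == basis_b
    ]
    return [a for a, _ in kept], [b for _, b in kept]


def estimate_qber(alice_sifted, bob_sifted, n, rng):
    """Disclose n randomly chosen sifted positions and return the fraction that disagree."""
    if n > len(alice_sifted):
        raise ValueError("n cannot exceed the sifted key length")
    positions = rng.sample(range(len(alice_sifted)), n)
    errors = sum(alice_sifted[i] != bob_sifted[i] for i in positions)
    return errors / n


if __name__ == "__main__":
    a_bits, a_bases = [0, 1, 1, 0, 1, 0], list("ZXYZXY")
    b_bits, b_bases = [0, 0, 0, 1, 1, 1], list("ZYYXXZ")
    a_sift, b_sift = sift(a_bits, a_bases, b_bits, b_bases)
    print(a_sift, b_sift, estimate_qber(a_sift, b_sift, 3, random.Random(0)))
```

In a real run the disclosed positions would be removed from the key afterwards; that bookkeeping is omitted here for brevity.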

Alice and Bob execute a classical error correction protocol to reconcile discrepancies between their respective keys. Subsequently, they verify key equality by computing the bitwise XOR of their corrected keys and comparing the result. A successful verification (i.e., a null result) confirms key agreement, while any mismatch indicates residual errors requiring further correction or protocol abortion.

The protocol concludes with privacy amplification, a critical post-processing step designed to exponentially reduce any partial information that Eve may have obtained about the key [22]. In essence, this step compresses the reconciled key into a shorter, highly secure version that is statistically uncorrelated with Eve’s data. It is implemented through the application of a universal hash function, a class of mathematical functions ensuring that even minimal differences in input produce statistically independent outputs. Although this operation inevitably reduces the final key length, it provides an information-theoretic guarantee that Eve’s mutual information with the key becomes negligible. The compression ratio, which quantifies the degree of shortening during the hashing process, is carefully chosen by the legitimate parties based on the estimated QBER to ensure the required level of security while maximizing the usable key rate. It is important to note that the final two stages of the process (error correction and privacy amplification) are considered classical post-processing procedures rather than intrinsic components of the QKD protocol itself and are, therefore, not further analyzed in this work.

Upon completion of all the protocol steps, two mutually exclusive outcomes emerge. If the presence of Eve is detected by excessive QBER or verification failures, Alice and Bob immediately abort the protocol. In this case, all the exchanged information is securely discarded, and the key establishment process restarts from the initial phase. This action ensures no potentially compromised key material is retained or utilized. On the contrary, if all the security checks are passed, Alice and Bob obtain a shared secret key that is both identical and information-theoretically secure. The key is guaranteed to be free from eavesdropper interference, as any attempted interception would have been detected during earlier verification stages.

The protocol is designed such that it never produces a compromised key; either a secure key is successfully established, or the protocol terminates without generating any key material. This fail-safe property is fundamental to the protocol security guarantees, and ensures that Alice and Bob only proceed with communication when unconditional security can be assured.

4. Model with System Noise

In practical QKD applications, the accurate measurement of qubits is affected by various imperfections in the components of the system and by Eve’s interference. These imperfections introduce errors that can impact the final key generation and overall security of the QKD protocol [34]. The main sources of errors can be categorized into transmitter imperfections, receiver imperfections, and channel imperfections. Transmitter imperfections could include, for example, misalignment of optical components or imperfect coupling between the transmitter and the quantum channel (optical fiber). Moreover, there could also be some flaws in the generation of the quantum states. All these imperfections could result in some signal loss and distortions. Receiver imperfections also negatively affect the measurements. Dark counts in detectors can introduce false positive detections even when there are no incoming photons [35,36]. Finally, the transmitting medium can introduce errors by itself. Path loss can reduce the number of photons reaching the detectors [37]. All these imperfections contribute to increasing the QBER of the system. Since the QBER is a crucial parameter in QKD systems, it is fundamental to carefully characterize these imperfections to achieve secure key distribution.

In this work, we make several fundamental assumptions regarding both the quantum channel noise and the potential Eve actions.

System Noise Model Assumption. We assume that bit-flip events induced by "system noise" can be accurately modeled as Bernoulli random variables. Specifically, for each qubit transmitted between Alice and Bob, the occurrence of a bit-flip due to noise is treated as an independent and identically distributed (i.i.d.) Bernoulli trial. We refer to the probability of a bit-flip event for a single qubit as the system noise error probability. This assumption implies that, for l qubits transmitted, the number of bit-flip events follows a binomial distribution with parameters l and the system noise error probability [38]. In this context, an additional assumption adopted throughout the remainder of this work is that the channel and device conditions are stationary with respect to noise; that is, the system noise error probability remains constant over the duration of the QKD protocol execution. The validity of this assumption has been substantiated in practical implementations by [39], who reported that fluctuations in the error rate did not exceed 0.16% during a 70 h monitoring period. Furthermore, we assume that Alice and Bob can estimate the system noise error probability from a preliminary measurement of quantum interference visibility prior to the actual QKD transmission. As demonstrated by [40], the discrepancy between the estimated and experimentally measured QBER values remains within 1% for QKD transmission over 122 km of standard telecommunication fiber.

Eavesdropping Model Assumption. A second key assumption concerns the capabilities of a potential eavesdropper. In this study, we consider the partial intercept–resend attack, a variant of the standard intercept–resend attack. In this scenario, Eve intercepts only a fraction of the qubits transmitted from Alice to Bob, rather than all of them.

Let p denote the interception density, defined as the probability that Eve intercepts a given qubit during its transmission. By adjusting p, Eve balances the trade-off between information gain and risk of detection: a lower p reduces her detection probability but limits the information she acquires, while a higher p increases both the detection probability and her information gain. For the purposes of this analysis, p is assumed to be constant during the entire protocol execution.

The main notations are summarized in Table 3.

4.1. Logical Model

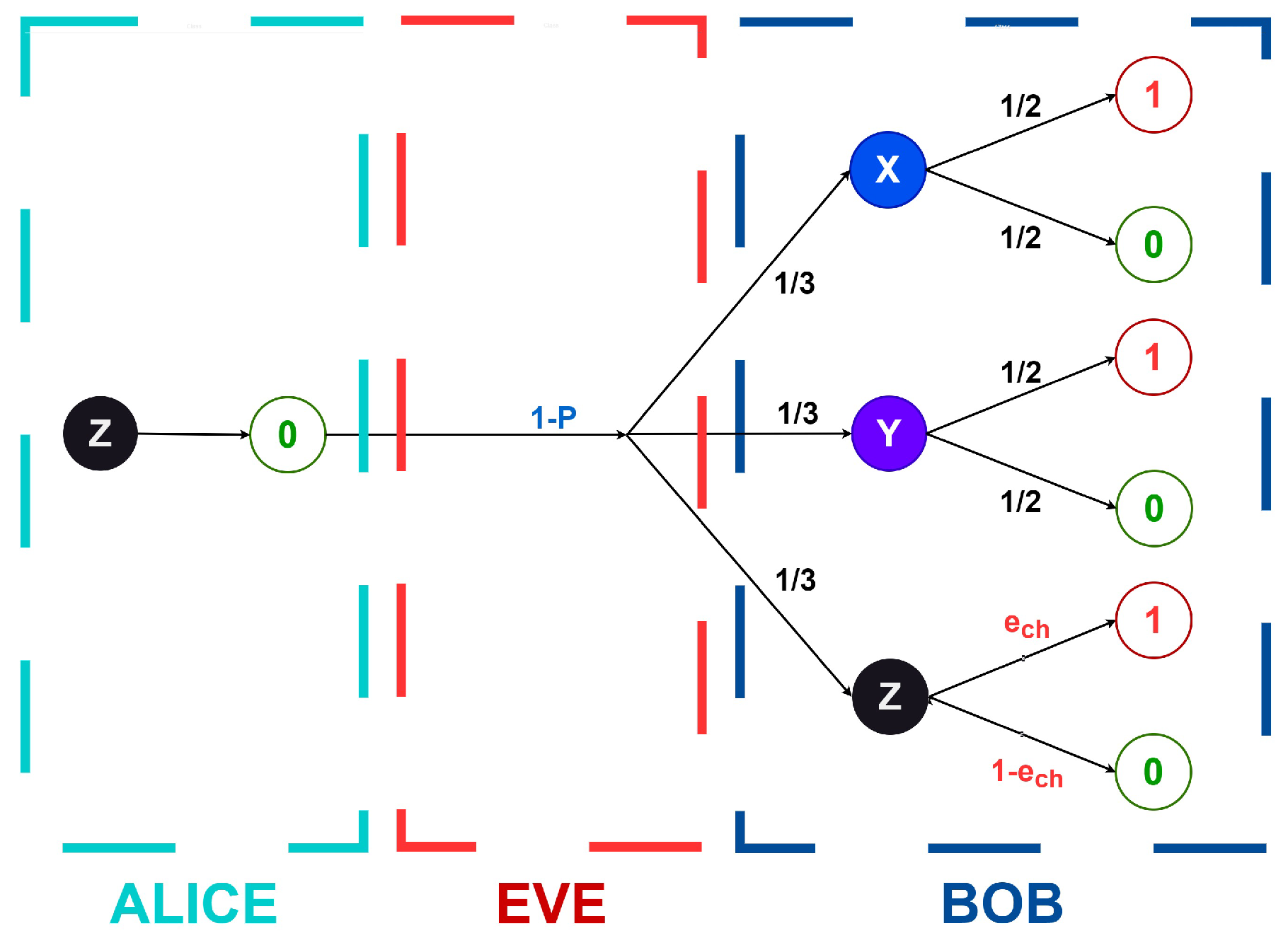

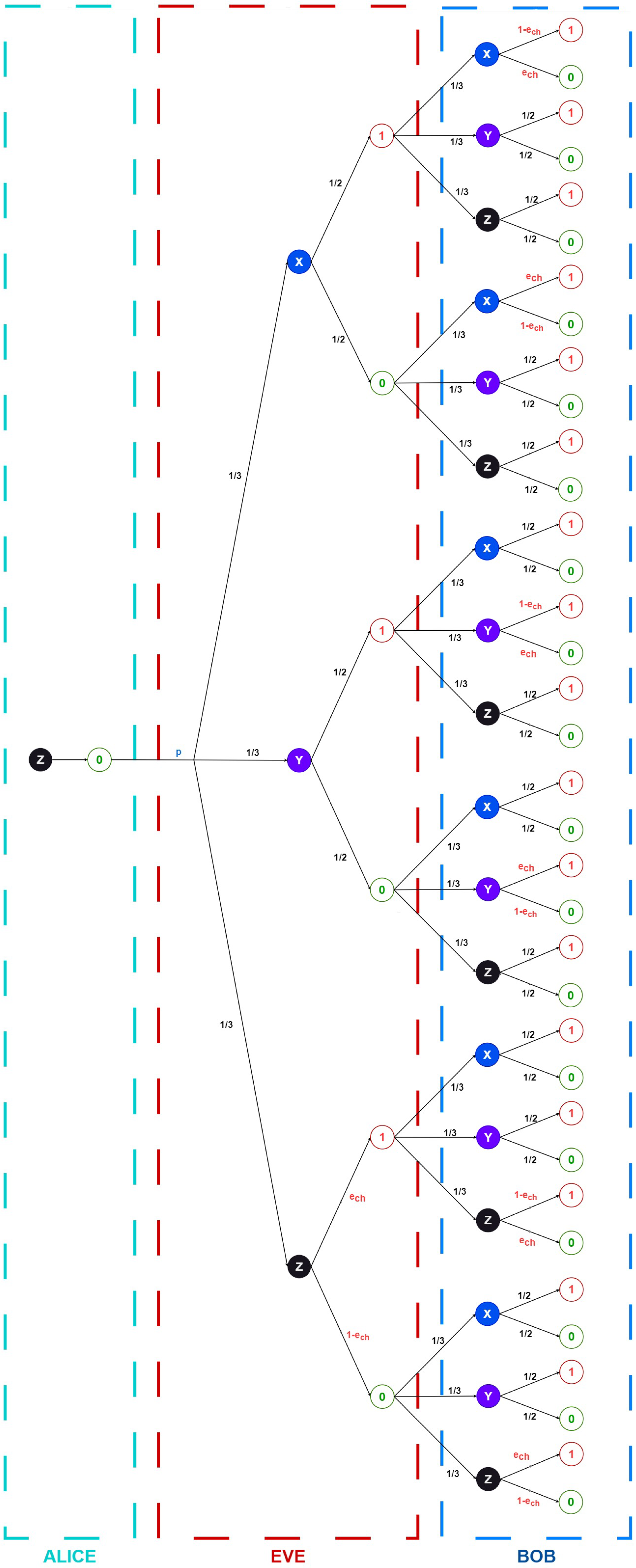

For systematic analysis, we constructed logical diagrams (Figure 1 and Figure 2) enumerating all the possible protocol outcomes in both non-eavesdropped and eavesdropped scenarios. These diagrams explicitly represent bit values rather than qubit states, though the underlying quantum phenomena are implicitly accounted for in determining these values.

These figures illustrate the impact of system noise on qubit transmission. In particular, the presented analysis focuses on Alice transmitting a 0 encoded in the Z basis; however, due to the inherent symmetry of the protocol, these results generalize straightforwardly to other cases. Each transition path in the diagrams is annotated with the corresponding probability of occurrence, including basis selection probabilities and measurement outcomes.

Notably, when all the possible outcomes occur with equal probability, the noise becomes statistically indistinguishable from the inherent randomness of the quantum system, effectively rendering it benign. This equiprobable case represents an important boundary condition where the noise contribution vanishes from an information-theoretic perspective.

The QBER is defined as follows:

$$\mathrm{QBER} = \frac{\text{number of compared sifted bits on which Alice and Bob disagree}}{\text{total number of compared sifted bits}} \tag{1}$$

The numerator represents the number of instances where a bit sent by Alice differs from the bit measured by Bob, considering only the cases in which the same basis is used. The denominator, on the other hand, is the total number of bits in the sifted key that are compared. From this definition, it is possible to derive a theoretical expression for the QBER as follows:

Indeed, the probability for Alice and Bob to choose the same basis in this protocol is $1/3$. By applying the total probability law, we get the following:

As per the protocol definition, the two bit values are equally likely and are treated symmetrically. Leveraging this symmetry, the QBER can be computed as follows:

Referring to Figure 1 and Figure 2, the required error probability can be graphically obtained as follows:

Using Equation (5) and combining it with Equations (2) and (4), we obtain the following:

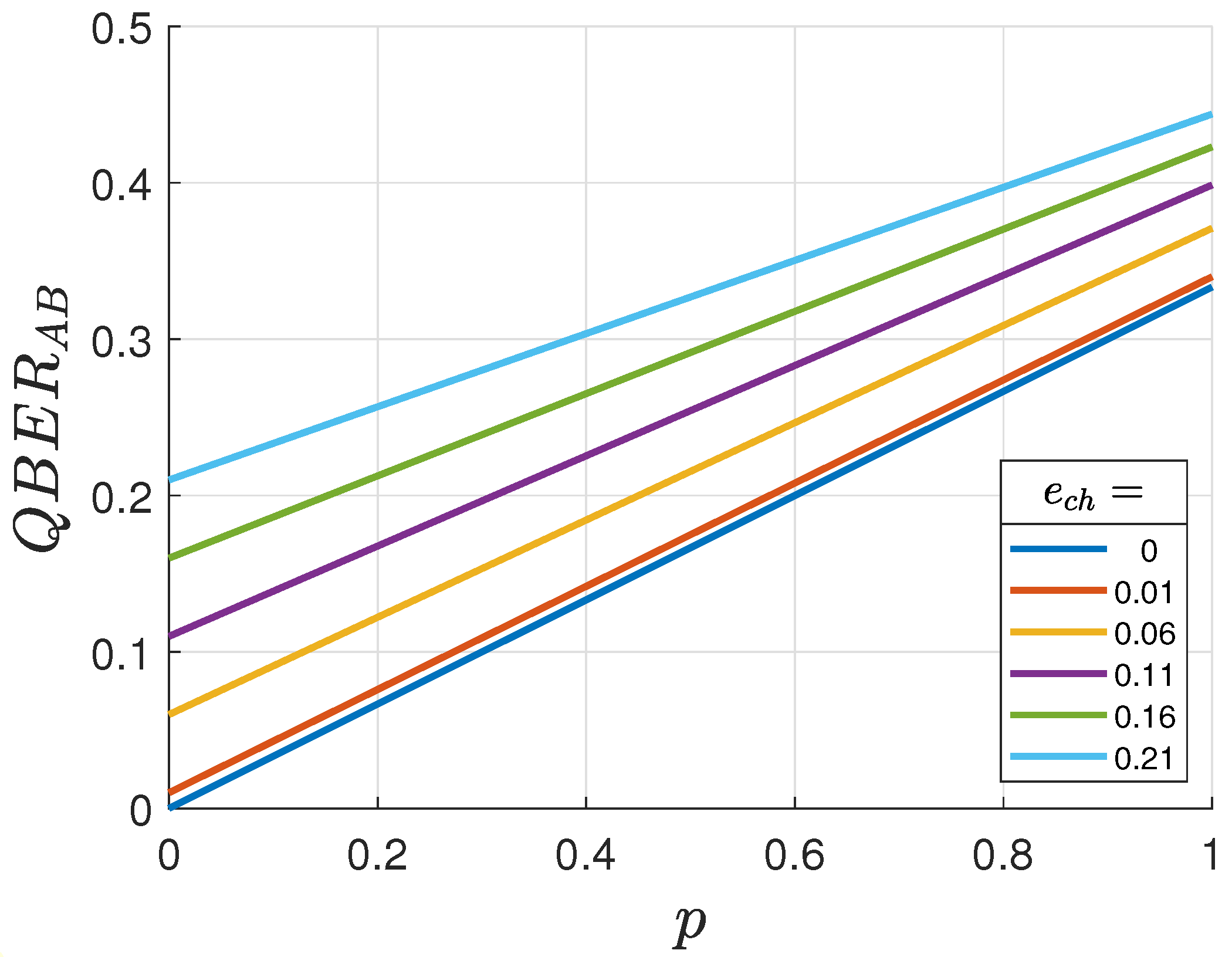

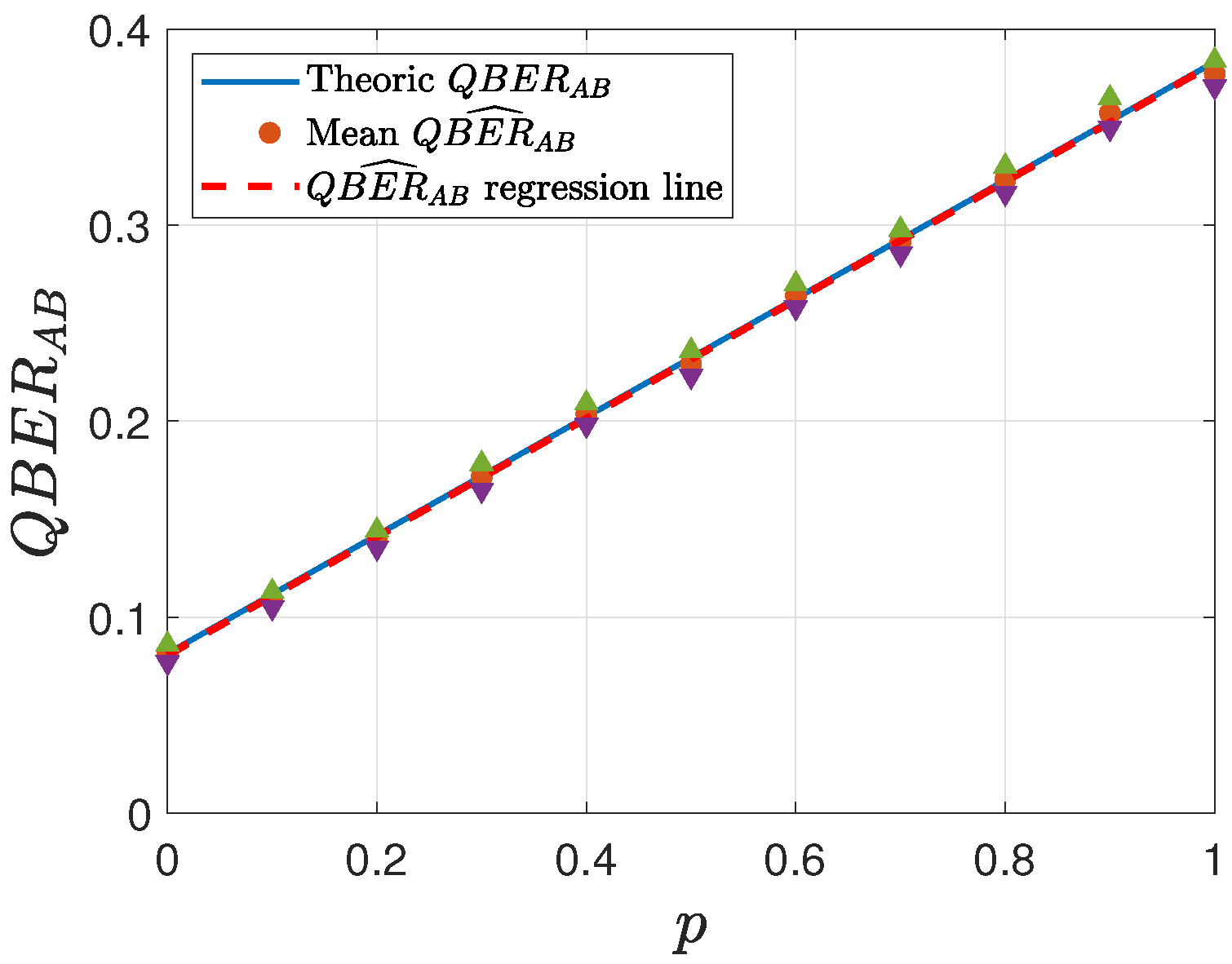

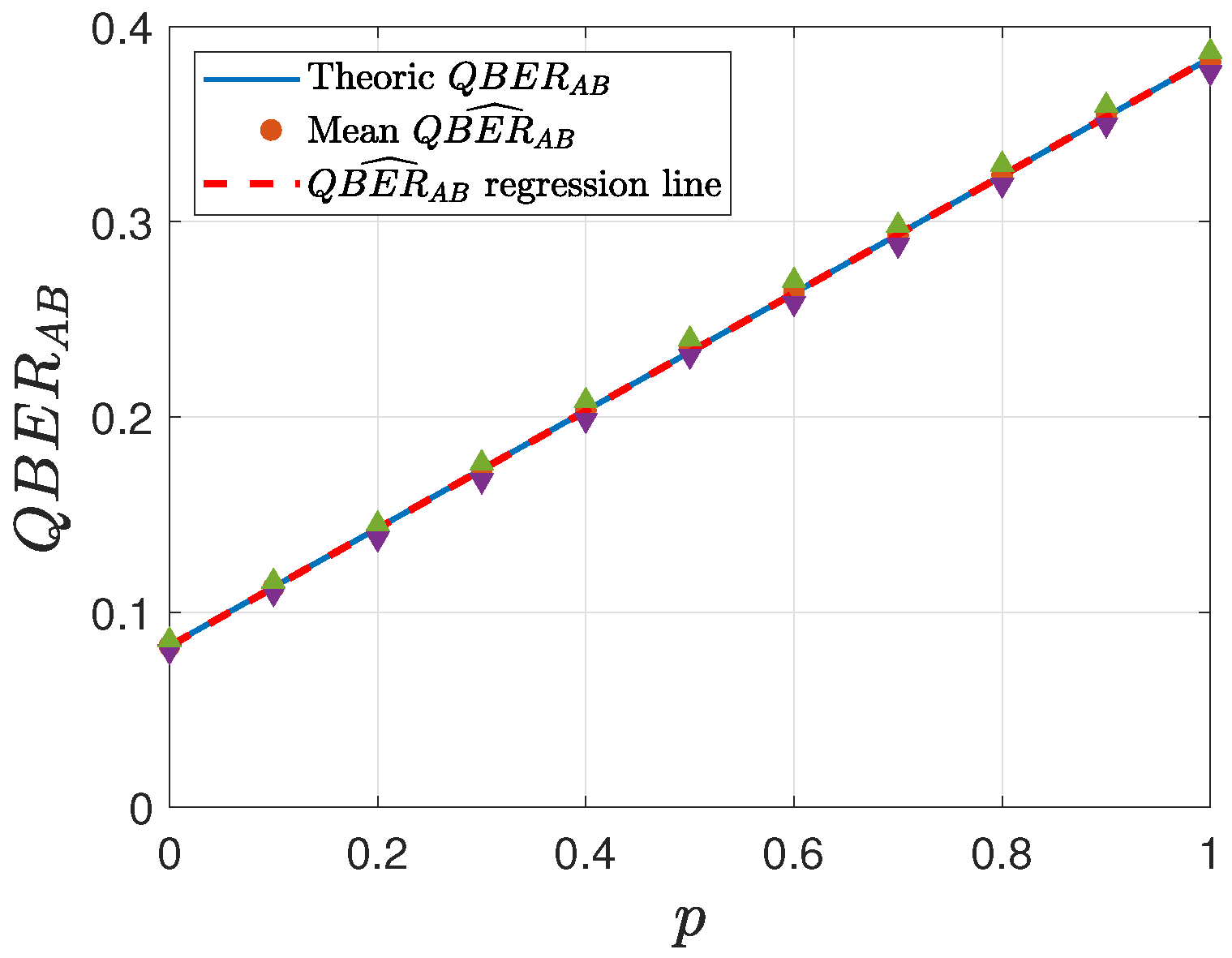

The theoretical QBER versus p is shown in Figure 3. Its analysis reveals several key insights. First, the relationship between the QBER and p exhibits a linear dependence. Second, the system noise introduces a vertical offset in the QBER curve, displacing it from the origin. Furthermore, the slope of the QBER curve becomes slightly less steep when accounting for both noise and Eve’s presence compared to the scenario involving only Eve’s intervention. Notably, the combined effects of noise and eavesdropping can produce a cancellation phenomenon, where a qubit undergoes two consecutive bit flips, one induced by noise and another by Eve, resulting in no net observable change to the transmitted state.
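The qualitative behavior described above (linear dependence on p, a vertical offset due to noise, and a slightly reduced slope) can be reproduced with a small classical Monte Carlo sketch. The model below is an assumption made for illustration: Eve measures and resends in a uniformly random basis, measurements are ideal, and system noise is a single independent bit flip applied to Bob's outcome with probability `p_noise`. Under exactly these assumptions one obtains the closed form `p_noise + (p / 3) * (1 - 2 * p_noise)`; this expression is derived for the sketch and is not quoted from the paper's equations, which may model noise differently.

```python
import random

BASES = ("Z", "X", "Y")


def measure(bit, prep_basis, meas_basis, rng):
    """Ideal measurement: same basis returns the bit, a different MUB returns a fair coin."""
    return bit if prep_basis == meas_basis else rng.randint(0, 1)


def simulate_qber(num_qubits, p, p_noise, seed=0):
    """Estimate the sifted-key QBER under a partial intercept-resend attack (assumed model)."""
    rng = random.Random(seed)
    errors = sifted = 0
    for _ in range(num_qubits):
        bit, basis = rng.randint(0, 1), rng.choice(BASES)
        sent_bit, sent_basis = bit, basis
        if rng.random() < p:  # Eve intercepts this qubit
            eve_basis = rng.choice(BASES)
            sent_bit = measure(bit, basis, eve_basis, rng)
            sent_basis = eve_basis  # she resends the state she observed
        bob_basis = rng.choice(BASES)
        bob_bit = measure(sent_bit, sent_basis, bob_basis, rng)
        if rng.random() < p_noise:  # assumed noise model: one independent bit flip
            bob_bit ^= 1
        if bob_basis == basis:  # sifting keeps matching bases only
            sifted += 1
            errors += int(bob_bit != bit)
    return errors / sifted


def qber_model(p, p_noise):
    """Closed form implied by the assumptions of this sketch."""
    return p_noise + (p / 3) * (1 - 2 * p_noise)


if __name__ == "__main__":
    for p in (0.0, 0.5, 1.0):
        print(p, simulate_qber(150_000, p, 0.05, seed=1), qber_model(p, 0.05))
```

In this model the slope is `(1 - 2 * p_noise) / 3`, which is smaller than the noiseless slope of 1/3, in line with the observation that noise makes the curve slightly less steep.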

4.2. Interception Density Estimation

The sharing of parts of their keys after the sifting allows Alice and Bob to estimate the QBER. As a result, it is possible for them to obtain an estimate of the interception density p by inverting the theoretical expression of the QBER when the system noise error probability is available:

In a practical implementation, Alice and Bob are expected to determine the value of the system noise error probability either through precise calibration measurements during the system installation or from technical specifications provided by the installer. Numerous studies have demonstrated that it can be estimated with high precision over long fiber links [39]. To assess the accuracy in estimating the interception density p, it is useful to analyze the statistical properties of the estimated QBER. Alice and Bob use n bits for the estimation of the QBER. As a result, since the events are assumed to be i.i.d., it follows that the mean of the estimated QBER equals the theoretical QBER and its variance can be expressed as $\mathrm{QBER}(1-\mathrm{QBER})/n$. These results are derived from the assumption that the estimated QBER is obtained as the ratio of the number of errors in the shared key to its total length, assuming each bit is an independent Bernoulli random variable. By calculating the expected value from Equation (7) the following is obtained:

This result demonstrates that the proposed estimator for p is unbiased.

Equation (9) shows that the sample size n directly affects the accuracy of the estimate: as the length of the shared key between Alice and Bob increases, the variance in the estimator decreases, resulting in a more accurate determination of p. This observation aligns with the well-established statistical principle that larger sample sizes yield more precise estimates.
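To make the inversion step concrete, the sketch below estimates p from a measured QBER and evaluates the variance of that estimate. It assumes the linear model QBER = p_noise + (p / 3)(1 − 2 p_noise), which holds for a single independent bit flip at Bob's side and a uniformly random intercept basis; this model, the symbol `p_noise`, and the function names are assumptions of the example and may differ from the paper's Equations (6) to (9).

```python
def estimate_p(qber_hat, p_noise):
    """Invert the assumed linear model QBER = p_noise + (p / 3) * (1 - 2 * p_noise)."""
    return 3 * (qber_hat - p_noise) / (1 - 2 * p_noise)


def estimator_variance(p, p_noise, n):
    """Variance of estimate_p when the QBER is a binomial proportion over n bits."""
    qber = p_noise + (p / 3) * (1 - 2 * p_noise)
    # Var(a * X + c) = a^2 * Var(X), with a = 3 / (1 - 2 * p_noise).
    return 9 * qber * (1 - qber) / (n * (1 - 2 * p_noise) ** 2)


if __name__ == "__main__":
    print(estimate_p(0.20, 0.05))
    for n in (100, 1_000, 10_000):
        print(n, estimator_variance(0.5, 0.05, n))
```

Because the estimator is an affine function of the measured QBER, it inherits unbiasedness from the QBER estimate, and its variance scales as 1/n. Under these assumptions the variance also grows with both p and the noise level.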

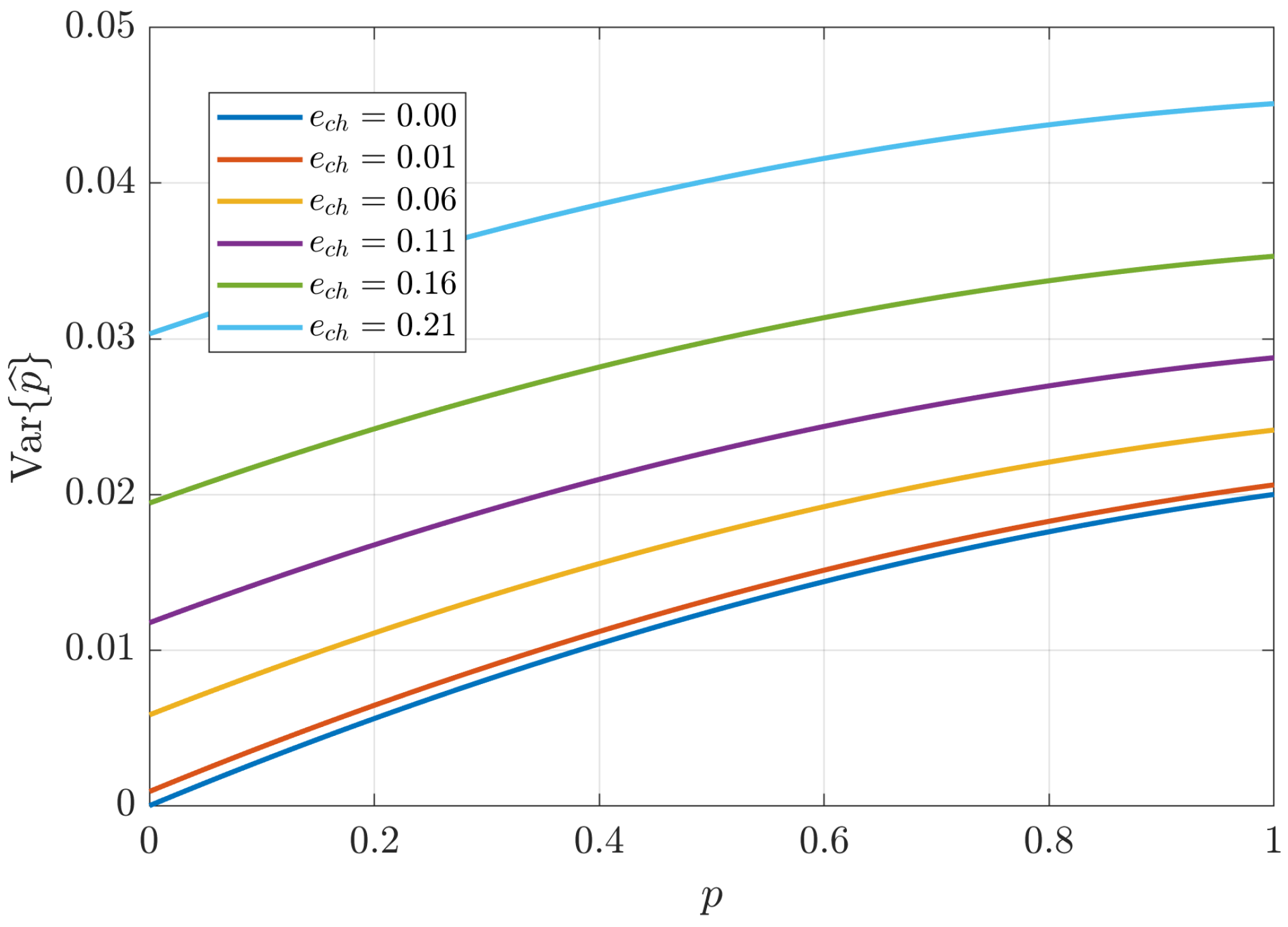

Figure 4 illustrates the dependence of the variance in the estimate of p on the true values of p and of the system noise error probability. Higher values of p correspond to increased variance in the estimate, indicating that as the extent of eavesdropping rises, the difficulty of accurately estimating the interception density also increases. This effect may be attributed to the additional uncertainty introduced by more frequent eavesdropping interventions. Additionally, higher system noise levels lead to further degradation in the accuracy of the estimation. This outcome is intuitive, as elevated noise makes it increasingly challenging to distinguish between errors arising from eavesdropping and those inherent to the quantum channel.

5. Detection Methods

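To detect Eve’s presence, two threshold-based methods are proposed, both designed to minimize FPR and FNR. To evaluate the performance of the proposed detection methods, the Accuracy, FNR, and FPR are defined as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{FNR} = \frac{FN}{FN + TP}, \qquad \mathrm{FPR} = \frac{FP}{FP + TN},$$

where $TP$ denotes true positives, $TN$ true negatives, $FP$ false positives, and $FN$ false negatives.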
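These three metrics can be computed directly from the confusion-matrix counts. The helper below is a minimal sketch; the name `detection_metrics` is an assumption, and the function expects at least one run with Eve present and one with Eve absent so that no denominator is zero.

```python
def detection_metrics(tp, tn, fp, fn):
    """Return accuracy, false negative rate, and false positive rate from raw counts."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        "fnr": fn / (fn + tp),  # missed attacks among all runs with Eve present
        "fpr": fp / (fp + tn),  # false alarms among all runs with Eve absent
    }


if __name__ == "__main__":
    print(detection_metrics(tp=90, tn=95, fp=5, fn=10))
```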

5.1. Method “A”

Given the assumption of i.i.d. channel error events and the possibility of a partial intercept–resend attack by Eve with interception density p, the central limit theorem allows us to approximate the estimated QBER by a normal distribution when n is sufficiently large. Accordingly, the probability density function of the estimated QBER can be approximated as a normal density centered at the theoretical QBER without eavesdropping in the absence of Eve, and as a normal density centered at the theoretical QBER under attack when Eve is present, each with the corresponding binomial variance. The detection algorithm decides the presence of eavesdropping by comparing the measured QBER to a threshold, which is assumed to lie within the interval between these two theoretical values.

By applying Hoeffding’s inequality [25], it is possible to derive an upper bound for both FNR and FPR. Both bounds exhibit exponential dependence on the threshold, the mean of the estimated QBER, and the number of shared bits n. Considering the value of the QBER computed over n bits when Eve is absent, the upper bound for the FPR is given by the following:

Similarly, considering the value of the QBER computed over n bits when Eve is present, the upper bound for the FNR is given by the following:

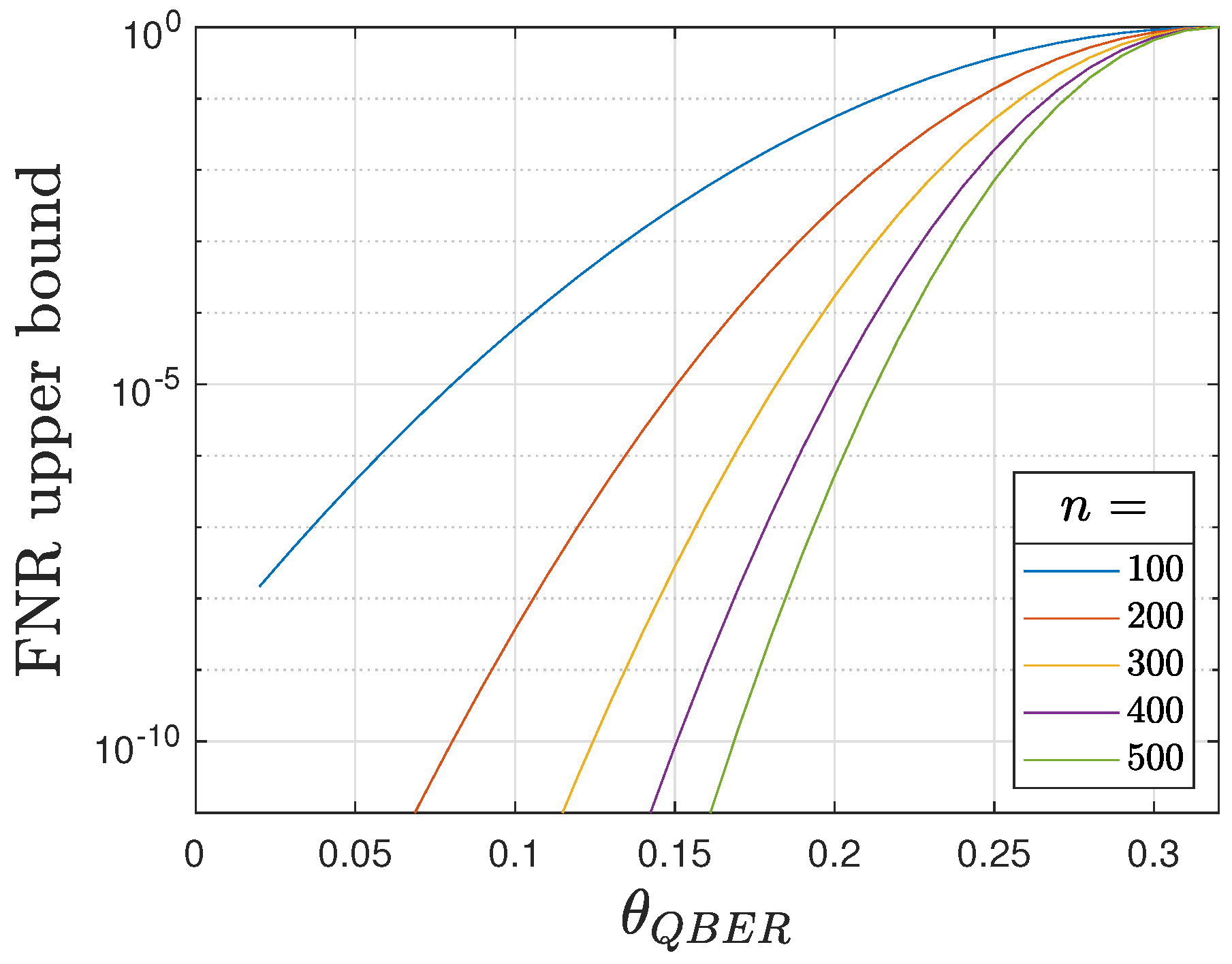

Figure 5 and Figure 6 illustrate the upper bounds for the FNR and FPR, respectively, for various values of n. The curves confirm that the upper bounds decrease exponentially as n increases. Furthermore, decreasing the threshold lowers the upper bound for the FNR but raises the one for the FPR; conversely, increasing the threshold has the opposite effect. Therefore, the selection of the threshold is critical for balancing detection performance. The optimal value of the threshold can be found by solving

where the optimal threshold minimizes the weighted sum of the upper bounds of FNR and FPR. A weighting parameter sets the importance of the FPR relative to the FNR. Both terms in Equation (15) are differentiable with respect to the threshold. Therefore, by differentiating with respect to the threshold and solving, we obtain the following:

which can be rearranged as follows:

It follows:

By restricting the threshold to the interval between the two theoretical QBER values, the expression within the brackets remains strictly positive. Given that n is large, the corresponding exponent approaches zero, allowing the last term to be approximated as unity. Under this approximation, the optimal threshold can be readily computed:

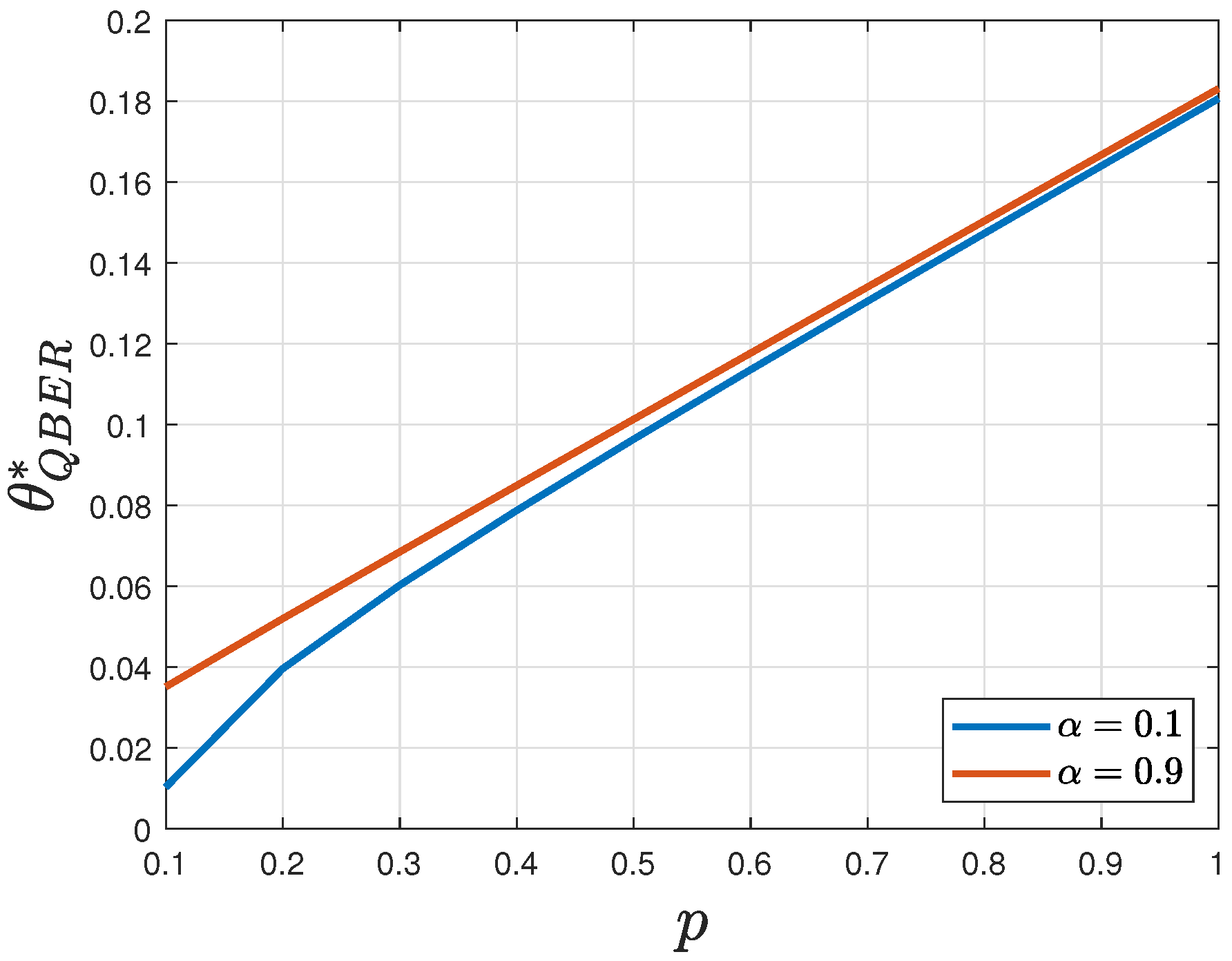

Figure 7 illustrates the optimal threshold as a function of the weighting parameter. As the weighting parameter increases, the threshold also increases, leading to a reduction in the FPR but at the expense of a higher FNR. This effect is particularly pronounced at lower values of p. Thus, by selecting an appropriate weighting parameter, it is possible to assign greater weight to either the FPR or the FNR. For the purpose of a fair comparison between Method A and Method B, the same value of the weighting parameter is used for both methods in this work.
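The threshold selection of Method A can be illustrated numerically. The sketch below assumes the standard Hoeffding bounds for an average of n variables in [0, 1], namely exp(−2n(τ − μ0)²) for the FPR and exp(−2n(μ1 − τ)²) for the FNR, where `mu0` and `mu1` are the theoretical QBER without and with Eve, `tau` is the threshold, and `alpha` weights the FPR term. These symbols, the grid search (used here instead of an analytical solution), and the closed-form approximation (obtained by dropping a logarithmic term in this sketch's stationarity condition) are assumptions of the example and are not taken from the paper's equations.

```python
import math


def hoeffding_bounds(tau, mu0, mu1, n):
    """Hoeffding upper bounds on (FPR, FNR) for a threshold with mu0 < tau < mu1."""
    fpr_bound = math.exp(-2 * n * (tau - mu0) ** 2)
    fnr_bound = math.exp(-2 * n * (mu1 - tau) ** 2)
    return fpr_bound, fnr_bound


def threshold_method_a(mu0, mu1, n, alpha=1.0, steps=10_000):
    """Grid search for the tau in (mu0, mu1) minimizing alpha * FPR bound + FNR bound."""
    best_tau, best_cost = None, math.inf
    for k in range(1, steps):
        tau = mu0 + (mu1 - mu0) * k / steps
        fpr_bound, fnr_bound = hoeffding_bounds(tau, mu0, mu1, n)
        cost = alpha * fpr_bound + fnr_bound
        if cost < best_cost:
            best_tau, best_cost = tau, cost
    return best_tau


def threshold_closed_form(mu0, mu1, n, alpha=1.0):
    """Large-n approximation: midpoint shifted by log(alpha) / (4 n (mu1 - mu0))."""
    return (mu0 + mu1) / 2 + math.log(alpha) / (4 * n * (mu1 - mu0))


if __name__ == "__main__":
    for alpha in (1.0, 10.0):
        print(alpha, threshold_method_a(0.02, 0.18, 500, alpha),
              threshold_closed_form(0.02, 0.18, 500, alpha))
```

With equal weights the minimizer sits at the midpoint between the two means, and a larger `alpha` moves the threshold upward, trading a lower FPR bound for a higher FNR bound.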

5.2. Method “B”

Recalling that the estimated QBER is approximately normally distributed both when Eve is absent and when she is present, an alternative approach to determining the optimal threshold can be considered. In this context, the variances of each distribution are given by:

Moreover, assuming n sufficiently large, FPR and FNR can be expressed as:

where $\Phi$ denotes the cumulative distribution function of the standard normal distribution.

By adopting the same criterion for the optimal threshold as in the previous method, and noting that both FPR and FNR are differentiable functions of the threshold, the following relation holds:

Hence:

Finally, the optimal threshold can be written as follows:

where:

Considerations on the Optimal Threshold. Several considerations arise in the determination of the optimal threshold. Both approaches require knowledge of the system noise error probability, n, and p. The value of n is directly available, as it is set during protocol execution. In contrast, accurately measuring the system noise error probability during the protocol is challenging; consequently, this parameter must be provided by the installer or measured prior to protocol initiation. The interception density p, on the other hand, cannot be known a priori by Alice and Bob. In this work, it is assumed that Eve maintains a constant interception density, compatible with a passive attack strategy, allowing Alice and Bob to accurately estimate p as described in Section 4.2.
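For comparison with Method A, the normal-approximation criterion of Method B can be sketched as follows. The example assumes that the estimated QBER is normal with mean `mu0` (Eve absent) or `mu1` (Eve present) and binomial variance μ(1 − μ)/n, and it minimizes `alpha * FPR + FNR` by grid search rather than through an analytical expression. The symbols, the function names, and the numerical search are assumptions of this sketch.

```python
import math


def gaussian_rates(tau, mu0, mu1, n):
    """Normal-approximation (FPR, FNR) for threshold tau, using binomial variances."""
    sigma0 = math.sqrt(mu0 * (1 - mu0) / n)
    sigma1 = math.sqrt(mu1 * (1 - mu1) / n)
    # 1 - Phi(z) = 0.5 * erfc(z / sqrt(2)); erfc keeps precision in the tails.
    fpr = 0.5 * math.erfc((tau - mu0) / (sigma0 * math.sqrt(2)))
    # Phi(z) = 0.5 * erfc(-z / sqrt(2)) with z = (tau - mu1) / sigma1.
    fnr = 0.5 * math.erfc((mu1 - tau) / (sigma1 * math.sqrt(2)))
    return fpr, fnr


def threshold_method_b(mu0, mu1, n, alpha=1.0, steps=10_000):
    """Grid search for the tau in (mu0, mu1) minimizing alpha * FPR + FNR."""
    best_tau, best_cost = None, math.inf
    for k in range(1, steps):
        tau = mu0 + (mu1 - mu0) * k / steps
        fpr, fnr = gaussian_rates(tau, mu0, mu1, n)
        cost = alpha * fpr + fnr
        if cost < best_cost:
            best_tau, best_cost = tau, cost
    return best_tau


if __name__ == "__main__":
    tau = threshold_method_b(0.02, 0.18, 500)
    print(tau, gaussian_rates(tau, 0.02, 0.18, 500))
```

Because the no-Eve distribution is narrower than the distribution under attack, the threshold found this way lies closer to `mu0` than the midpoint, which is one way the two methods can differ.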

6. Experimental Setup

To verify the effectiveness of the proposed algorithms, we employed a simulator based on the QuantumSolver (QS) library [41]. To allow the replication of the results, the developed code is publicly available on GitHub [42]. Leveraging the open-source Qiskit framework, we can simulate quantum circuits and their associated operations. Qiskit also provides the capability to execute programs locally using a backend that incorporates a realistic noise model, closely reflecting the performance of contemporary quantum hardware. Although execution on an actual IBM quantum device was technically feasible, it was rendered impractical due to excessive queue times.

The simulator initiates with the Key Generation step. During this operation, the following parameters must be specified as inputs:

Key length (K): The number of qubits exchanged during the transmission process.

Shared key length (n): The number of qubits used for the estimation of QBER.

Interception density (p).

Number of iterations: The number of repetitions of the proposed algorithm.

System noise error probability: The expected error rate associated with the quantum channel.

The simulation procedure begins with Alice generating two random arrays of length K, corresponding to the key bits and the bases used for encoding, respectively. Bob and Eve each independently generate one random array of the same length to determine their measurement bases. In the encoding phase, three MUBs are available: Z, X, and Y. All the qubits are initialized in the $|0\rangle$ state.

The encoding of each bit proceeds as follows: If the bit value to be transmitted is “1”, an X gate (logical NOT) is first applied. Subsequently, further operations are performed depending on the selected encoding basis. In particular, no additional gate is applied when the Z-basis is selected, while an H gate is applied in the case of the X-basis. When the Y-basis is selected, an H gate followed by an S gate is applied in sequence.
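The encoding rule just described can be checked with plain 2×2 matrix arithmetic, without any quantum library. The sketch below uses the standard matrix forms of the X, H, and S gates; the helper names `apply` and `encode` are assumptions of the example and do not come from the simulator's code.

```python
import math

SQRT_HALF = 1 / math.sqrt(2)

# Standard single-qubit gates as 2x2 matrices (row-major nested lists).
X_GATE = [[0, 1], [1, 0]]
H_GATE = [[SQRT_HALF, SQRT_HALF], [SQRT_HALF, -SQRT_HALF]]
S_GATE = [[1, 0], [0, 1j]]


def apply(gate, state):
    """Multiply a 2x2 gate by a 2-component state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]


def encode(bit, basis):
    """Encode a bit in basis 'Z', 'X', or 'Y', starting from |0>."""
    state = [1, 0]
    if bit == 1:
        state = apply(X_GATE, state)  # flip |0> to |1>
    if basis in ("X", "Y"):
        state = apply(H_GATE, state)  # move to the X basis
    if basis == "Y":
        state = apply(S_GATE, state)  # rotate the X basis into the Y basis
    return state


if __name__ == "__main__":
    for basis in ("Z", "X", "Y"):
        for bit in (0, 1):
            print(basis, bit, encode(bit, basis))
```

For example, bit 1 in the Y basis yields amplitudes proportional to (1, −i), i.e., the state (|0⟩ − i|1⟩)/√2.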

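The H gate is defined as follows:

$$H = \frac{1}{\sqrt{2}}\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix},$$

while the S gate is defined as follows:

$$S = \begin{pmatrix} 1 & 0 \\ 0 & i \end{pmatrix}.$$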

During this process, an alphanumeric message of length K is generated. Following the encoding phase, Eve intervenes by choosing to intercept each qubit with probability p. For intercepted qubits, Eve performs a measurement in her randomly chosen basis by applying the appropriate gates (either H or ) prior to the resend phase. In contrast, Bob receives all the transmitted qubits (i.e., ) and measures each according to his randomly assigned basis.

Following the measurement, Alice and Bob publicly share their chosen bases over an authenticated classical channel. The sifting phase is then executed, wherein only the bits corresponding to matching bases are retained; typically, m bits survive this phase. Subsequently, the sample QBER and the estimate of the interception density p are computed.
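The whole procedure (encoding, partial intercept-resend, measurement, sifting, and error counting) can be approximated without a quantum simulator. The sketch below is a classical Monte Carlo abstraction, not the QuantumSolver/Qiskit code: it assumes that measuring a signal state in a different mutually unbiased basis yields a uniformly random bit, and it models system noise as an independent bit flip on Bob's result. The names `measure` and `run_round` are hypothetical, and the QBER is computed over the full sifted key rather than over n shared bits.

```python
import random

BASES = ("Z", "X", "Y")

def measure(state, basis, rng):
    """Measure a (basis, bit) signal state in `basis`.

    The outcome is deterministic when the bases agree and uniformly random
    otherwise; the state collapses onto the measured basis and outcome.
    """
    prepared_basis, bit = state
    outcome = bit if basis == prepared_basis else rng.randrange(2)
    return outcome, (basis, outcome)

def run_round(K, p, noise, seed=0):
    """Return (sifted length m, sample QBER over the sifted key)."""
    rng = random.Random(seed)
    sifted = errors = 0
    for _ in range(K):
        a_bit, a_basis = rng.randrange(2), rng.choice(BASES)
        state = (a_basis, a_bit)
        if rng.random() < p:                  # Eve intercepts and resends
            _, state = measure(state, rng.choice(BASES), rng)
        b_basis = rng.choice(BASES)
        b_bit, _ = measure(state, b_basis, rng)
        if rng.random() < noise:              # Bernoulli bit-flip noise
            b_bit ^= 1
        if a_basis == b_basis:                # sifting: keep matching bases
            sifted += 1
            errors += a_bit != b_bit
    return sifted, (errors / sifted if sifted else float("nan"))

print(run_round(K=30000, p=0.5, noise=0.02, seed=1))
```

With full interception and no noise, this abstraction gives a QBER close to one third, the known value for an intercept-resend attack on the six-state protocol.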

7. Simulation Results

The simulation analysis is designed to evaluate the estimation procedures for both QBER and p, which are essential for the proposed eavesdropping detection methods. Subsequently, the performance and effectiveness of these detection methods are discussed.

7.1. QBER Estimation

For this study, multiple IBM backend simulators were employed to model realistic quantum noise environments. The characteristics of the selected backends are summarized in Table 4. These simulators were chosen specifically for their different system noise error probabilities, which allow for a comprehensive assessment of the proposed methods under diverse channel conditions.

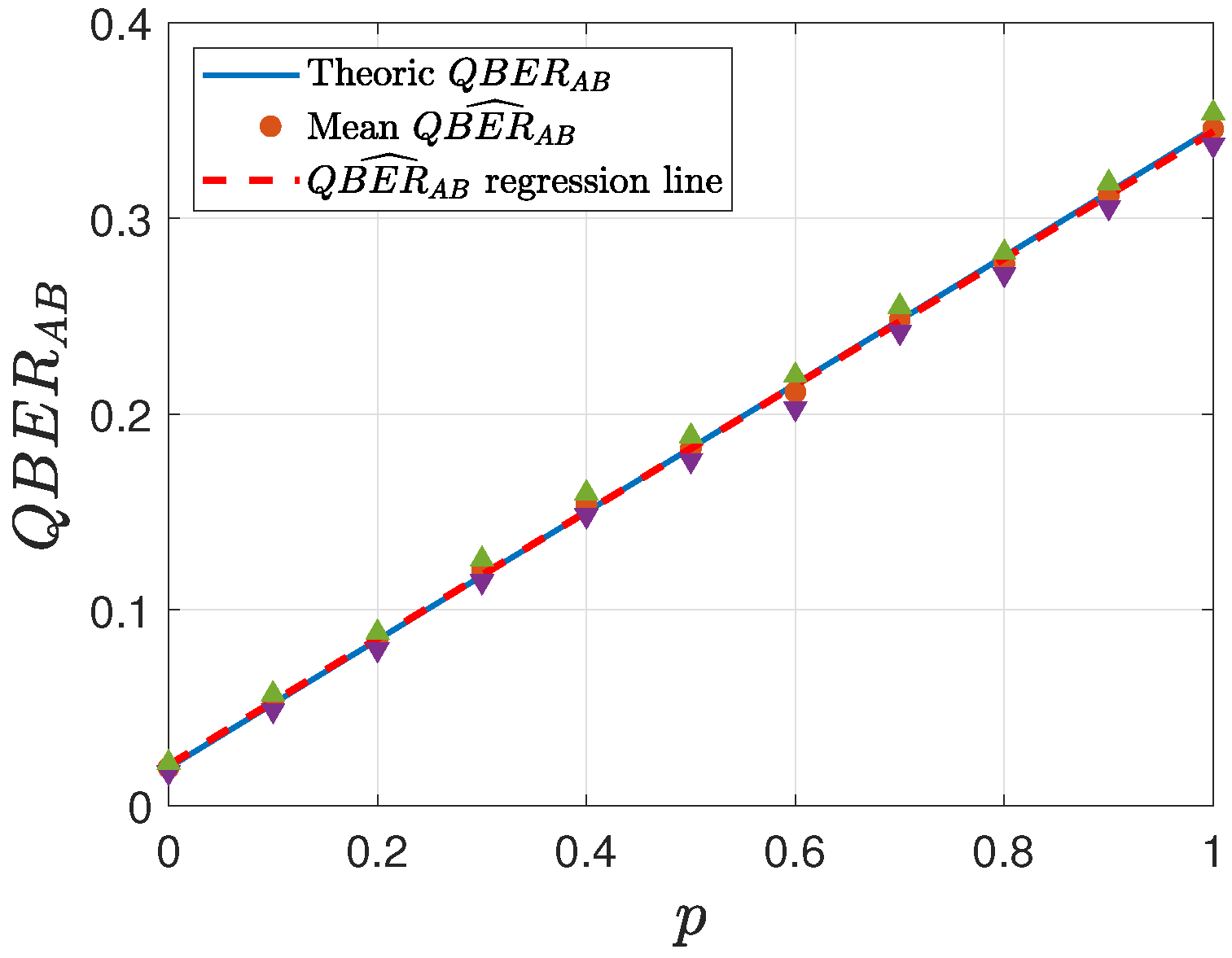

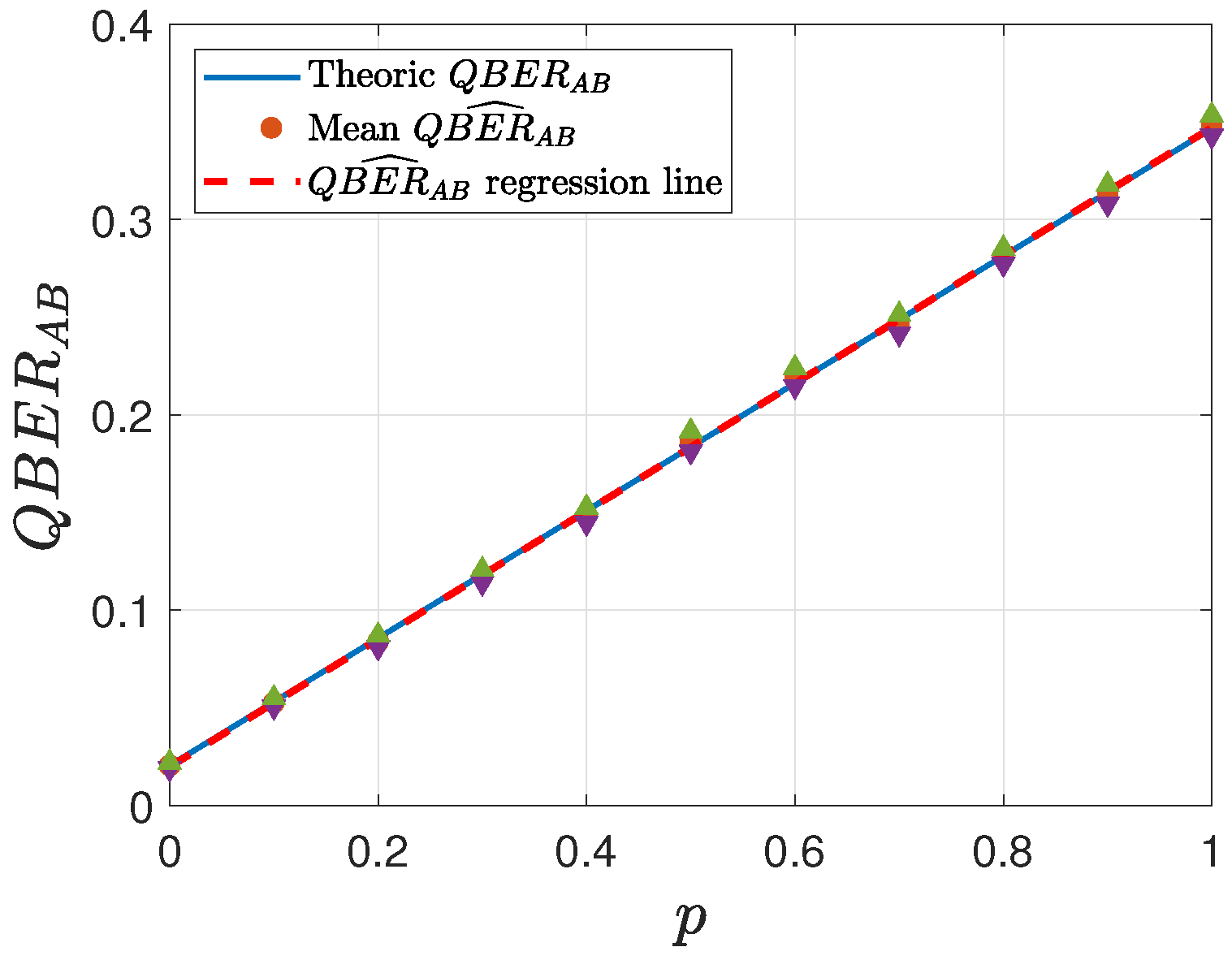

For clarity, we present the results corresponding to the backends with the lowest and highest system noise error probability, namely fake_casablanca and fake_tenerife, respectively. The results obtained with the remaining backends are consistent with the conclusions drawn from these two representative cases.

Figure 8, Figure 9, Figure 10 and Figure 11 report the corresponding estimated values of the QBER, together with the regression line and the confidence intervals (indicated by triangle symbols). For each value of p, every simulation was repeated 50 times, and the mean value was subsequently computed. This study considers two different settings for K. Considering a sifting factor of 1/3 arising from the basis reconciliation phase, and assuming that n corresponds to half of the sifted key length, these choices conservatively ensure at least a twofold security margin with respect to the standard key sizes employed in AES-128 and AES-256. Consequently, they satisfy the post-quantum security requirement against Grover’s algorithm [43]. This selection thus provides a reasonable trade-off between computational robustness and protocol feasibility.
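The key-length reasoning can be made concrete with a short calculation. The sketch below assumes a sifting factor of 1/3 (three bases chosen independently and uniformly), that half of the sifted key is spent on QBER estimation, and that "twofold margin" means the remaining bits number at least twice the AES key size. The function name `min_raw_length` and this reading of the margin are assumptions, and the printed numbers are outputs of that reading rather than values quoted from the text.

```python
import math
from fractions import Fraction

def min_raw_length(aes_bits, margin=2, sift=Fraction(1, 3), test_share=Fraction(1, 2)):
    """Smallest K whose sifted key, after the QBER test, keeps margin * aes_bits bits."""
    usable_share = sift * (1 - test_share)   # fraction of K left after sifting and testing
    return math.ceil(margin * aes_bits / usable_share)

for aes_bits in (128, 256):
    print(aes_bits, min_raw_length(aes_bits))
```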

In all the analyzed cases, the regression line consistently lies within the confidence intervals, indicating the robustness of the estimation procedure. Furthermore, as the key length K increases, the accuracy of the estimation improves, as expected from statistical theory. Additionally, lower values of the system noise error probability yield greater precision in the estimation, as expected.

7.2. Interception Density Estimation

Building upon the QBER estimation, we also obtained estimates for the interception density p. The corresponding results are reported in Table 5 and Table 6. For both the considered values of K, the estimation of p demonstrates good precision. Specifically, the sample standard deviation decreases as K increases, confirming that longer key lengths yield more accurate estimates. Conversely, the sample standard deviation increases with larger values of p, indicating that higher interception densities are more challenging to estimate precisely. Additionally, an increase in the system error rate leads to a degradation in estimation accuracy.
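These three trends can be reproduced with a simple plug-in estimator. The sketch below is not the estimator derived earlier in the paper; it assumes that an intercepted sifted bit is wrong with probability 1/3, that noise flips bits independently, and that the sample QBER over n bits has binomial variance. The function names and the symbol `noise` for the system noise error probability are hypothetical.

```python
import math

def expected_qber(p, noise):
    """Mean QBER: an error occurs when exactly one of (Eve, noise) flips the bit."""
    q_eve = p / 3
    return q_eve * (1 - noise) + noise * (1 - q_eve)

def estimate_p(sample_qber, noise):
    """Invert expected_qber for p and clip the result to [0, 1]."""
    p_hat = 3 * (sample_qber - noise) / (1 - 2 * noise)
    return min(1.0, max(0.0, p_hat))

def std_p_hat(p, noise, n):
    """Approximate standard deviation of the estimate when QBER is sampled on n bits."""
    q = expected_qber(p, noise)
    return 3 * math.sqrt(q * (1 - q) / n) / (1 - 2 * noise)

for n in (256, 512):
    for p in (0.2, 0.8):
        print(n, p, round(std_p_hat(p, noise=0.02, n=n), 4))
```

Under these assumptions the spread shrinks as n grows and widens as either p or the noise level grows, matching the behavior reported in the tables.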

7.3. Detection

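The performance of the proposed thresholding methods is quantitatively assessed through numerical evaluation of FPR and FNR, as defined in Equations (22) and (23). To formalize the accuracy metric, recall Equation (10):

$$\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}.$$

Rewriting the definitions of FPR and FNR, we have the following:

$$FP = \mathrm{FPR}\,(FP + TN), \qquad FN = \mathrm{FNR}\,(FN + TP).$$

Noting that $FP + TN$ accounts for all the trials where Eve is not present (with probability $1 - p$), and $FN + TP$ accounts for all the trials where Eve is present (with probability $p$), we have:

$$\frac{FP}{N} = (1 - p)\,\mathrm{FPR}, \qquad \frac{FN}{N} = p\,\mathrm{FNR},$$

where $N = TP + TN + FP + FN$ is the total number of trials. Thus, $\mathrm{Acc}$ can be rewritten as:

$$\mathrm{Acc} = 1 - \left[(1 - p)\,\mathrm{FPR} + p\,\mathrm{FNR}\right].$$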
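The identity Acc = 1 − [(1 − p)·FPR + p·FNR] can be checked numerically from a confusion matrix. The sketch below uses the standard definitions Acc = (TP + TN)/N, FPR = FP/(FP + TN), and FNR = FN/(FN + TP), which are assumed to match Equations (10), (22), and (23). The counts are hypothetical and p is taken as the fraction of trials in which Eve is present.

```python
def rates(tp, tn, fp, fn):
    """Return (accuracy, FPR, FNR, prevalence p) from confusion-matrix counts."""
    total = tp + tn + fp + fn
    acc = (tp + tn) / total
    fpr = fp / (fp + tn)          # share of Eve-absent trials flagged as attacks
    fnr = fn / (fn + tp)          # share of Eve-present trials that go undetected
    p = (fn + tp) / total         # share of trials in which Eve is present
    return acc, fpr, fnr, p

# Hypothetical counts, for illustration only.
acc, fpr, fnr, p = rates(tp=430, tn=480, fp=20, fn=70)
weighted_error = (1 - p) * fpr + p * fnr
print(acc, 1 - weighted_error)
```

Both printed values agree up to floating-point rounding.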

This result highlights how the overall detection error is a weighted combination of the two principal error types, modulated by the true prevalence of eavesdropping events in the communication. The practical implications are twofold:

Sensitivity to Interception Density (p): As p increases, the contribution of FNR to the total error becomes more significant, emphasizing the importance of minimizing false negatives in high-threat environments.

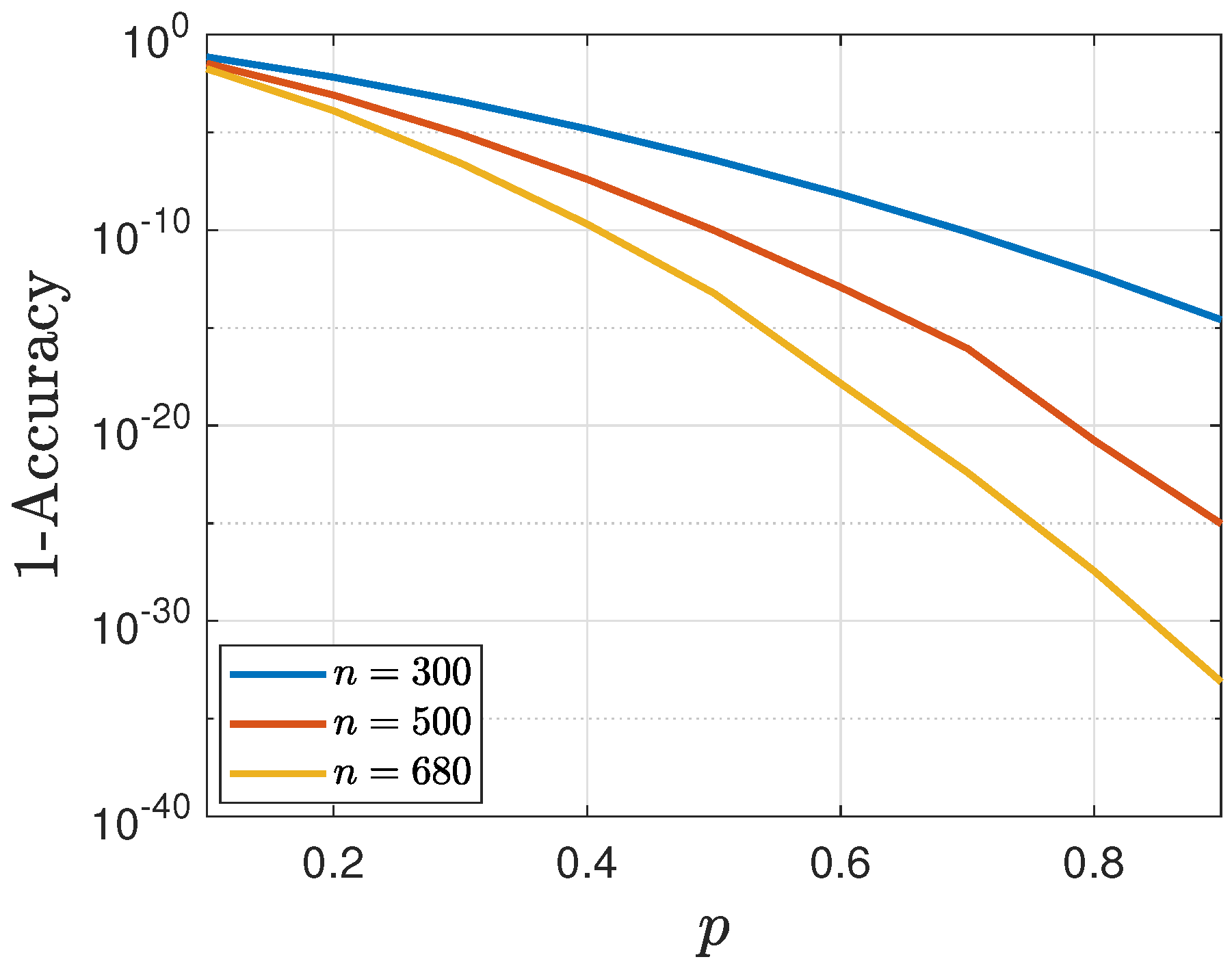

Role of Shared Key Length (n): The accuracy of both methods is found to improve with increasing key length, as shown in Figure 12. This is a direct consequence of the reduction in statistical uncertainty with larger samples, which enhances the reliability of threshold-based detection.
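The effect of n can be illustrated with Hoeffding's inequality, which bounds the probability that the mean of n independent bounded samples exceeds its expectation by t with exp(−2nt²). The sketch below assumes a threshold placed halfway between the noise-only mean QBER and the mean QBER under attack, so that t is half the gap. This midpoint rule and the example gap are illustrative assumptions and do not reproduce either of the paper's thresholds.

```python
import math

def hoeffding_error_bound(n, gap):
    """Upper bound on FPR (and on FNR) for a threshold halfway across `gap`."""
    t = gap / 2                        # distance from each mean to the threshold
    return math.exp(-2 * n * t * t)

# Hypothetical gap between the two mean QBER values.
gap = 0.16
for n in (64, 128, 256, 512):
    print(n, hoeffding_error_bound(n, gap))
```

The bound decays exponentially in n, which is consistent with the accuracy gains observed for longer shared keys.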

Figure 12 further demonstrates that the optimal thresholding method (method “B”) maintains high accuracy across the range of p values tested. Notably, for longer keys, the accuracy asymptotically approaches the theoretical maximum, thereby supporting the adoption of larger key sizes in practical implementations.

It is noteworthy that higher values of p are associated with improved detectability of eavesdropping. At first glance, this observation may appear counterintuitive, as the previous analysis established that increasing p also increases the variance of the sample QBER, potentially degrading the accuracy of the optimal threshold calculation. However, this apparent contradiction can be resolved by examining the relationship between the sample QBER and the detection threshold.

Specifically, although a larger p induces greater variance in the sample QBER, it also leads to a greater separation between the expected system noise mean and the mean QBER in the presence of eavesdropping. Consequently, the average distance between the sample QBER and the detection threshold increases with p, which compensates for the increased variance and enhances the overall probability of correctly identifying an eavesdropper.
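The trade-off between separation and variance can be quantified under a simple model. The sketch below assumes a mean QBER of noise + (1 − 2·noise)·p/3 under attack and a binomial spread for the sample QBER over n bits; the model, the function name, and the parameter values are illustrative assumptions rather than the paper's derivation.

```python
import math

def separation_and_spread(p, noise, n):
    """Return (gap between mean QBERs, standard deviation of the sample QBER)."""
    q_noise = noise
    q_attack = noise + (1 - 2 * noise) * p / 3
    gap = q_attack - q_noise
    spread = math.sqrt(q_attack * (1 - q_attack) / n)
    return gap, spread

for p in (0.2, 0.4, 0.6, 0.8, 1.0):
    gap, spread = separation_and_spread(p, noise=0.02, n=256)
    print(p, round(gap, 4), round(spread, 4), round(gap / spread, 2))
```

In this model the spread grows with p, but the gap grows faster, so the ratio of gap to spread increases monotonically with p.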

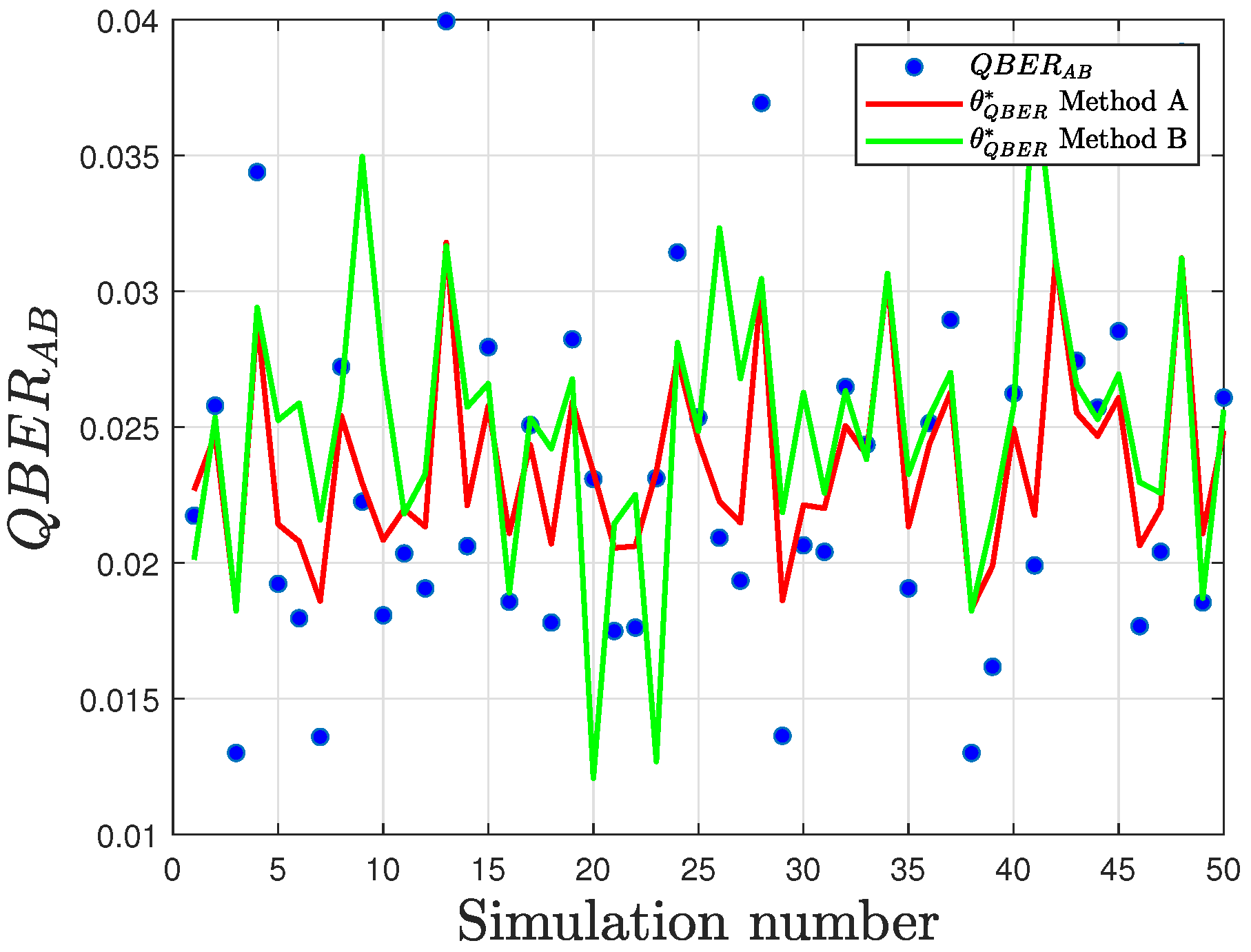

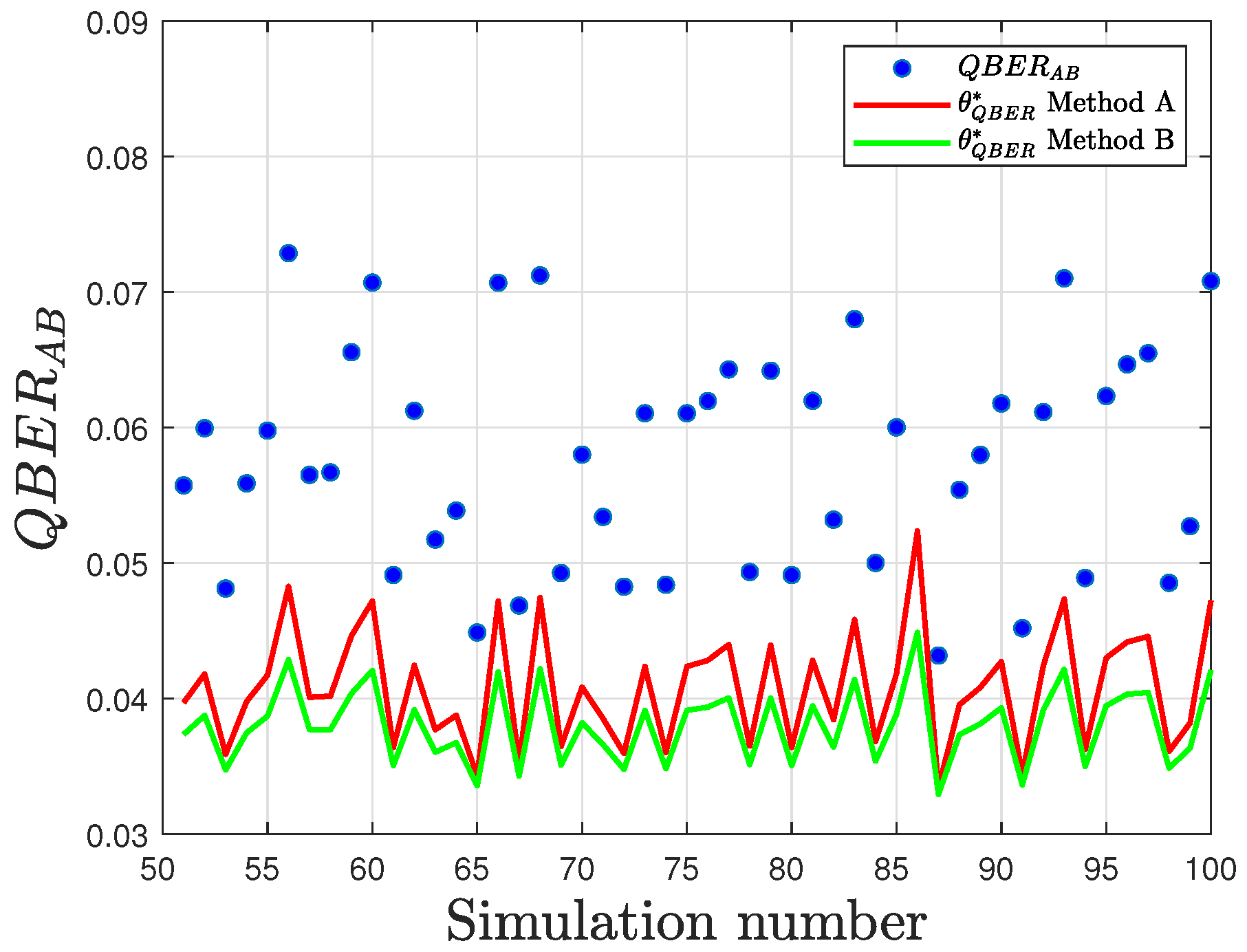

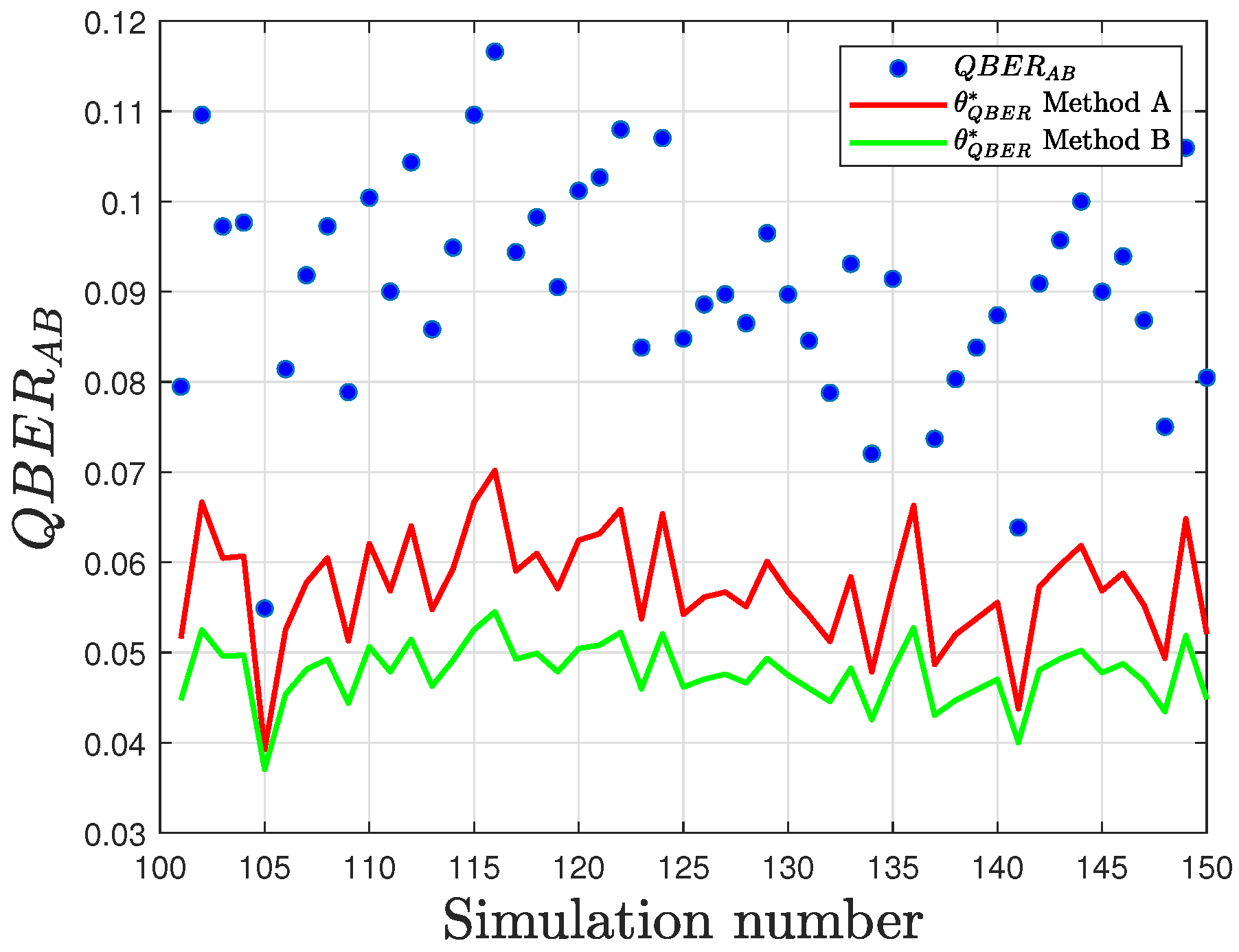

This phenomenon is evident in both the proposed detection methods, as demonstrated by the results in

Figure 13 and

Figure 14. For this analysis, the experimental results obtained using the

fake_armonk backend simulator with a key length of

were considered. For each value of

p (ranging from 0 to 1 in increments of

), the simulation was repeated 50 times. Thus, simulation runs 1–50 correspond to

, 51–100 to

, and so forth.

The quantitative analysis presented in Table 7 reports the measured distances between the sample QBER and the optimal threshold for both methods. Notably, method “B” consistently exhibits a larger separation, resulting in higher detection accuracy, particularly at greater values of interception density.

Table 8 summarizes the results obtained by the two methods for all the considered backends.

Across all the simulations, both detection methods consistently achieve high accuracy, as reported in Table 8. As anticipated, lower levels of system noise correspond to higher detection accuracy. This trend underscores the importance of minimizing channel noise in practical QKD systems to maximize the reliability of eavesdropping detection.

A more detailed examination of the error distribution can be obtained by closely analyzing Figure 13 and Figure 14. By further inspecting Figure 15, Figure 16 and Figure 17, it becomes evident that as p increases, the separation between the sample QBER and the detection threshold also increases. This observation directly confirms the previously discussed relationship between p and detection performance.

Moreover, these figures validate the consistency between the theoretical analysis (as shown in Figure 12) and the experimental outcomes: the vast majority of detection errors are concentrated at low values of p, where the distinction between normal channel noise and eavesdropping-induced errors is less pronounced.

An additional noteworthy result is that, for higher values of p, the difference between the thresholds determined by Method A and Method B increases. This divergence arises because Method A employs a static threshold, which does not adjust to sample variance and is thus less robust in scenarios with higher p or increased channel noise.

7.4. Final Remarks

The detailed comparative analysis of the two threshold-based detection methods highlights both their operational differences and the practical implications for QKD security.

Method B incorporates not only the expected value but also the variance of the sample QBER in the threshold calculation. The resulting threshold thus adapts to the uncertainty inherent in the estimation process and can be expressed as:

where

is the standard deviation of the sample QBER and

is the design parameter determined by the desired balance between the FPR and FNR.

As shown in Table 7, the mean distance between the sample QBER and the threshold is consistently greater for Method B compared to Method A across all the tested values of p. This increased separation is particularly pronounced at higher values of p, where the presence of an eavesdropper introduces a significant shift in the mean QBER. By incorporating variance, Method B dynamically adjusts the threshold to maintain robust discrimination even as sample variability increases.

The experimental results across all the simulated scenarios confirm that Method B yields higher detection accuracy, especially for moderate to high interception densities. Method A, while simple and less computationally demanding, is more susceptible to errors when sample variability is high, as it lacks the ability to adapt to statistical fluctuations in the QBER. Conversely, Method B’s use of variance enables it to distinguish more effectively between normal channel noise and genuine eavesdropping events.

Both methods benefit from larger key lengths K, which reduce statistical uncertainty and thus improve estimation precision. However, the performance gap between the two methods widens at higher values of system noise and p. In particular, Method B’s adaptive threshold offers superior performance under challenging conditions, as it is less prone to both false positives and false negatives.

In summary, the comparative analysis demonstrates that Method B consistently outperforms Method A in terms of both accuracy and robustness, especially in scenarios with high variability or elevated interception density. The findings support the adoption of variance-aware adaptive thresholds for secure and reliable eavesdropping detection in practical QKD systems.

8. Conclusions

This work has advanced the state of the art in eavesdropper detection for the Six-State QKD protocol by proposing, optimizing, and evaluating two threshold-based detection methods in the context of partial intercept–resend attacks with constant interception density. A rigorous system model was adopted, including an explicit and realistic characterization of system noise as independent Bernoulli bit-flip events, and the consequent impact on transmission fidelity was thoroughly analyzed.

The first part of this study focused on the statistical properties of the quantum bit error rate (QBER) and the estimation of the eavesdropper’s interception density p. By employing statistical estimators and variance analysis, we highlighted the critical dependence of estimation accuracy on key system parameters, notably key length and noise rate.

We then presented two detection strategies tailored to the Six-State protocol: a Hoeffding-based thresholding method and an analytically extended probabilistic approach. Both methods aim to minimize the sum of FPR and FNR, but differ fundamentally in their construction. Method B exploits the full probabilistic structure of the QBER estimator. Notably, this study represents the first extension and practical evaluation of these detection schemes in the Six-State context, encompassing realistic partial interception scenarios.

A key strength of this work lies in its comprehensive simulation campaign, implemented in Python using the Qiskit library and the QuantumSolver framework. This approach enabled testing on simulated backends modeled after real IBM quantum hardware, thereby capturing a range of practical noise conditions. The simulation results demonstrate that both detection methods are highly accurate across all the tested scenarios, with Method B exhibiting clear superiority in more challenging noise and attack regimes due to its variance-aware thresholding.

In summary, this research confirms the practical feasibility and robustness of advanced eavesdropper detection in Six-State QKD systems, bridging the gap between theoretical security analysis and real-world implementation. The paper also provides actionable guidance for selecting appropriate thresholding methods based on specific channel conditions. Future research may extend this framework to account for time-varying noise conditions and dynamic eavesdropping strategies through the development of adaptive threshold optimization techniques. Additionally, future work could involve collaborations with experimental groups and industry partners to facilitate real-world validation and practical deployment of the proposed methods.