Efficient Lightweight Image Classification via Coordinate Attention and Channel Pruning for Resource-Constrained Systems

Abstract

1. Introduction

- Unified lightweight framework: We propose a novel lightweight framework that systematically integrates the position-sensitive CA mechanism with L1-regularized structured channel pruning into a single, co-optimized PACB.

- Rigorous empirical and efficiency demonstration: We show empirically that the combined CA-pruning strategy achieves superior efficiency, with substantial reductions in parameters (up to 6.53 M) and FLOPs (24.3%), demonstrating the practical effectiveness of the framework.

- Critical architectural analysis: We perform a comparative analysis, particularly examining the CA mechanism’s architectural limitations on low-resolution datasets, providing crucial insights for the future design of position-aware attention modules in lightweight models.

- Generalizability across baselines: We demonstrate that this integrated methodology offers a robust and generalizable optimization paradigm, showing consistent performance gains when applied to two distinct lightweight architectures: MobileNetV3 and RepVGG.

2. Proposed Lightweight Image Classification Framework

2.1. Datasets

2.1.1. CIFAR-10 [21]

2.1.2. Fashion-MNIST [22]

2.1.3. GTSRB [23]

2.2. Lightweight Networks

2.2.1. MobileNetV3 [8]

2.2.2. RepVGG [9]

2.3. CA [10]

2.4. Channel Pruning [15]

2.5. Algorithmic Complexity Analysis

2.6. Complementarity of CA and Channel Pruning

2.7. Implementation Workflow

- Architecture integration: Embed the CA module after the convolutional layers of each baseline model, yielding the ‘Baseline+CA’ architecture. The placement of the CA module is critical: in both MobileNetV3 and RepVGG, we integrate CA into every repeating feature extraction block (bottlenecks or layers). This uniform placement removes the need for a computationally expensive neural architecture search (NAS) to determine layer-specific attention positions, in keeping with the lightweight principle of minimizing both design complexity and computational overhead. Alternative placements (e.g., inserting CA only in early or late stages) could in principle be explored, but we adopt uniform integration for its simplicity and effectiveness; it also ensures architectural consistency and facilitates deployment across different platforms.

- Sparsity-inducing training: Train the integrated (Baseline+CA) model with L1 regularization applied to the batch normalization (BN) scaling factors, as defined in Equation (7), to encourage channel sparsity.

- Pruning and fine-tuning: After sparsity training, remove the channels whose BN scaling factors fall below a predefined threshold, then fine-tune the smaller pruned network to recover any performance loss.

- Evaluation: Assess the final models (Baseline, Baseline+CA, and the pruned Baseline+CA) in terms of classification accuracy, parameter count, and FLOPs.
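The architecture-integration step relies on a coordinate attention block. Below is a minimal PyTorch sketch of CA following the design described by Hou et al. [10]: pool along height and along width separately, encode the concatenated descriptors with a shared 1×1 convolution, then split them into two sigmoid attention maps that reweight the input. The class names `CoordAtt` and `BlockWithCA`, the reduction ratio of 32, the minimum hidden width of 8, and the hard-swish activation are assumptions for illustration; the exact CA configuration used in this work may differ.

```python
import torch
import torch.nn as nn


class CoordAtt(nn.Module):
    """Coordinate attention sketch (assumed hyperparameters: reduction=32, min width 8)."""

    def __init__(self, channels: int, reduction: int = 32, min_hidden: int = 8):
        super().__init__()
        hidden = max(min_hidden, channels // reduction)
        self.pool_h = nn.AdaptiveAvgPool2d((None, 1))  # (N, C, H, 1): average over width
        self.pool_w = nn.AdaptiveAvgPool2d((1, None))  # (N, C, 1, W): average over height
        self.conv1 = nn.Conv2d(channels, hidden, kernel_size=1)  # shared encoder
        self.bn1 = nn.BatchNorm2d(hidden)
        self.act = nn.Hardswish()
        self.conv_h = nn.Conv2d(hidden, channels, kernel_size=1)
        self.conv_w = nn.Conv2d(hidden, channels, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        _, _, h, w = x.shape
        x_h = self.pool_h(x)                            # (N, C, H, 1)
        x_w = self.pool_w(x).permute(0, 1, 3, 2)        # (N, C, W, 1)
        y = self.act(self.bn1(self.conv1(torch.cat([x_h, x_w], dim=2))))
        y_h, y_w = torch.split(y, [h, w], dim=2)        # back to height / width parts
        a_h = torch.sigmoid(self.conv_h(y_h))                      # (N, C, H, 1)
        a_w = torch.sigmoid(self.conv_w(y_w.permute(0, 1, 3, 2)))  # (N, C, 1, W)
        return x * a_h * a_w                            # broadcasts to (N, C, H, W)


class BlockWithCA(nn.Module):
    """Hypothetical wrapper: apply CA to the output of an existing feature block."""

    def __init__(self, block: nn.Module, out_channels: int):
        super().__init__()
        self.block = block
        self.ca = CoordAtt(out_channels)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.ca(self.block(x))


# Usage: wrap a toy conv block; CA keeps the block's output shape.
block = nn.Sequential(nn.Conv2d(3, 16, 3, padding=1), nn.BatchNorm2d(16), nn.ReLU())
model = BlockWithCA(block, out_channels=16)
out = model(torch.randn(2, 3, 32, 32))  # shape (2, 16, 32, 32)
```

Because the attention output has the same shape as its input, the wrapper can follow any block without changing downstream layer widths, which is what makes uniform placement in every block straightforward.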

2.8. Pseudocode for the PACB

Algorithm 1: Pseudocode of PACB.
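The sparsity-training and pruning steps of Section 2.7 can be sketched in PyTorch as follows, in the style of network-slimming methods. This is a minimal illustration under stated assumptions, not the author's Algorithm 1: Equation (7) is assumed to take the common form "task loss + λ·Σ|γ|" over BN scaling factors, with a hypothetical weight `lam`; the threshold is a fixed value supplied by the caller; and the helper names `bn_l1_penalty`, `sparsity_train_step`, `channel_keep_masks`, and `prune_conv_bn` are hypothetical. `prune_conv_bn` handles only a plain (non-grouped) Conv2d followed by BatchNorm2d; in a full network the next layer's input channels must be sliced with the same indices, and depthwise convolutions or RepVGG's multi-branch blocks need extra bookkeeping.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def bn_l1_penalty(model: nn.Module) -> torch.Tensor:
    """Sum of |gamma| over every BatchNorm2d scaling factor in the model."""
    terms = [m.weight.abs().sum() for m in model.modules() if isinstance(m, nn.BatchNorm2d)]
    return torch.stack(terms).sum() if terms else torch.zeros(())


def sparsity_train_step(model, x, y, optimizer, lam: float = 1e-4) -> float:
    """One sparsity-inducing step: cross-entropy + lam * L1(BN gammas) (assumed form)."""
    model.train()
    optimizer.zero_grad()
    loss = F.cross_entropy(model(x), y) + lam * bn_l1_penalty(model)
    loss.backward()
    optimizer.step()
    return loss.item()


@torch.no_grad()
def channel_keep_masks(model: nn.Module, threshold: float) -> dict:
    """Per-BN-layer boolean masks; True marks a channel whose |gamma| >= threshold."""
    return {name: m.weight.abs() >= threshold
            for name, m in model.named_modules() if isinstance(m, nn.BatchNorm2d)}


@torch.no_grad()
def prune_conv_bn(conv: nn.Conv2d, bn: nn.BatchNorm2d, keep: torch.Tensor):
    """Rebuild a non-grouped Conv2d + BatchNorm2d pair keeping only the masked channels.

    The following layer's input channels must be sliced with the same indices (not shown).
    """
    assert conv.groups == 1, "grouped/depthwise convs need extra bookkeeping"
    idx = keep.nonzero(as_tuple=True)[0]
    new_conv = nn.Conv2d(conv.in_channels, len(idx), conv.kernel_size, conv.stride,
                         conv.padding, conv.dilation, bias=conv.bias is not None)
    new_conv.weight.copy_(conv.weight[idx])
    if conv.bias is not None:
        new_conv.bias.copy_(conv.bias[idx])
    new_bn = nn.BatchNorm2d(len(idx), eps=bn.eps, momentum=bn.momentum)
    for attr in ("weight", "bias", "running_mean", "running_var"):
        getattr(new_bn, attr).copy_(getattr(bn, attr)[idx])
    return new_conv, new_bn
```

Fine-tuning then continues ordinary training on the rebuilt, narrower network; whether the L1 term is kept during fine-tuning is a design choice not fixed by this sketch.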

3. Experimental Results

3.1. Experimental Environment Setup and Implementation Details

3.2. Ablation Study on MobileNetV3 Variants

3.2.1. Overall Performance Comparison of MobileNetV3 Variants

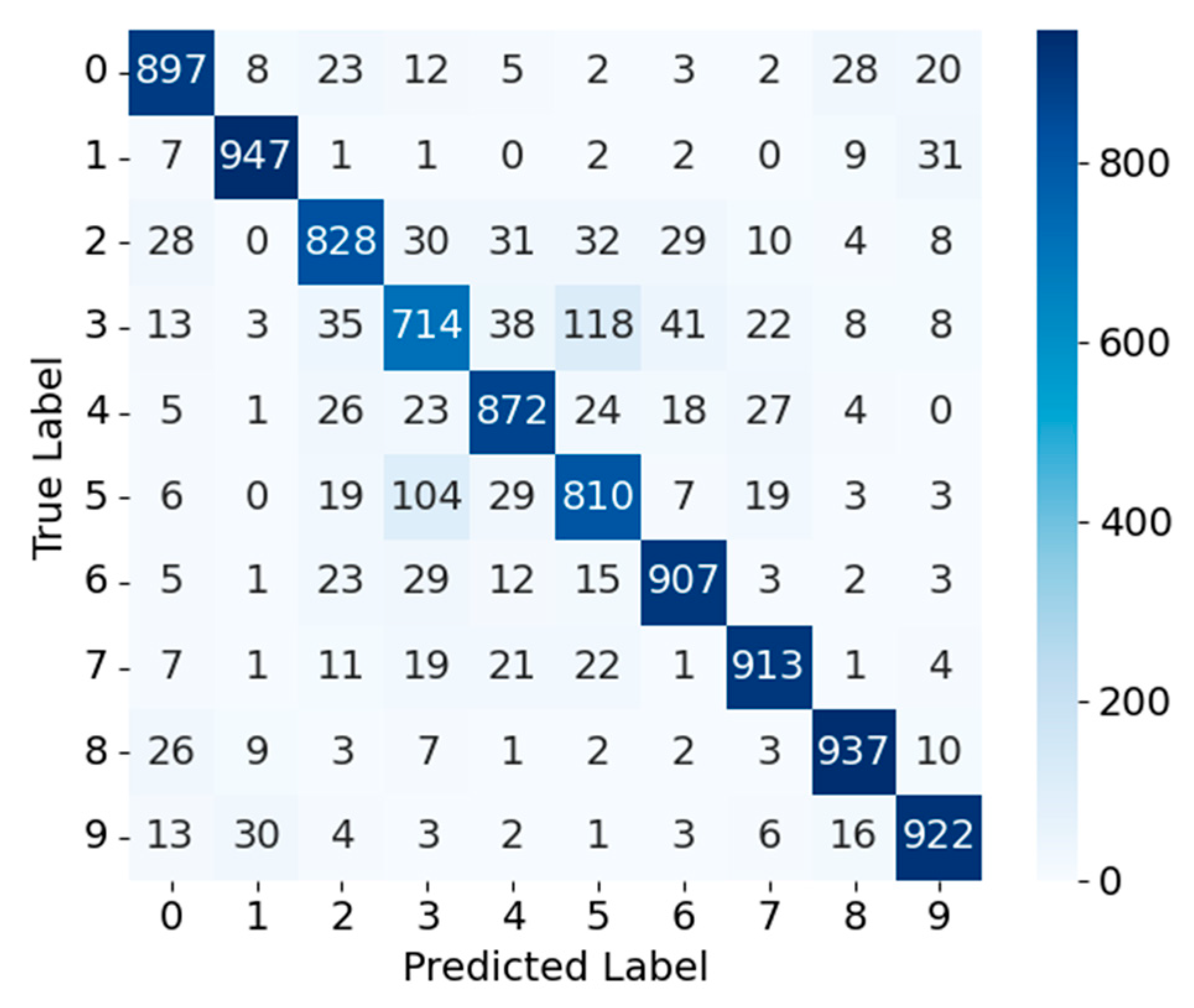

3.2.2. Confusion Matrix Analysis of MobileNetV3_CA and MobileNetV3_CA_Pruned

3.3. Ablation Study on RepVGG Variants

3.3.1. Overall Performance Comparison of RepVGG Variants

3.3.2. Confusion Matrix Analysis of RepVGG_CA and RepVGG_CA_Pruned

3.4. Overall Comparison and Analysis

3.4.1. Impact of CA Across Architectures

3.4.2. Effect of Structured Channel Pruning

3.4.3. Interaction Between CA and Pruning

3.4.4. Cross-Architecture Performance Comparison

3.4.5. Overall Implications

3.5. Application Scenarios

3.6. Inference Latency on Edge Hardware

4. Conclusions and Future Prospects

Funding

Data Availability Statement

Conflicts of Interest

References

1. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

2. Hadji, I.; Wildes, R.P. What do we understand about convolutional networks? arXiv 2018, arXiv:1803.08834.

3. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360.

4. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

5. Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

6. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

7. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

8. Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

9. Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets great again. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13728–13737.

10. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13708–13717.

11. Qu, M.; Jin, Y.; Zhang, G. Lightweight Image Classification Network Based on Feature Extraction Network SimpleResUNet and Attention. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 7–11 May 2024; Available online: https://openreview.net/forum?id=tItq3cwzYc (accessed on 22 September 2025).

12. Li, H.; Kadav, A.; Durdanovic, I.; Samet, H.; Graf, H.P. Pruning filters for efficient ConvNets. arXiv 2016, arXiv:1608.08710.

13. Han, S.; Pool, J.; Tran, J.; Dally, W.J. Learning both weights and connections for efficient neural networks. In Proceedings of the 28th International Conference on Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 7–12 December 2015; pp. 1135–1143.

14. Liu, Z.; Mu, H.; Zhang, X.; Guo, Z.; Yang, X.; Cheng, T.; Sun, J. MetaPruning: Meta learning for automatic neural network channel pruning. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3296–3305.

15. He, Y.; Zhang, X.; Sun, J. Channel pruning for accelerating very deep neural networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1398–1406.

16. Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

17. Rastegari, M.; Ordonez, V.; Redmon, J.; Farhadi, A. XNOR-Net: ImageNet Classification Using Binary Convolutional Neural Networks. In Computer Vision – ECCV 2016, Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 525–541.

18. Guo, Z.; Zhang, X.; Mu, H.; Heng, W.; Liu, Z.; Wei, Y.; Sun, J. Single path one-shot neural architecture search with uniform sampling. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; pp. 544–560.

19. Xue, Y.; Yao, W.; Peng, S.; Yao, S. Automatic Filter Pruning Algorithm for Image Classification. Appl. Intell. 2024, 54, 216–230.

20. Zou, G.; Yao, L.; Liu, F.; Zhang, C.; Li, X.; Chen, N.; Xu, S.; Zhou, J. RemoteTrimmer: Adaptive Structural Pruning for Remote Sensing Image Classification. arXiv 2024, arXiv:2412.12603.

21. Krizhevsky, A. CIFAR-10 Dataset. University of Toronto. Available online: https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 14 September 2025).

22. Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST Dataset. Zalando Research. Available online: https://www.kaggle.com/datasets/zalando-research/fashionmnist (accessed on 14 September 2025).

23. Stallkamp, J.; Schlipsing, M.; Salmen, J.; Igel, C. GTSRB – German Traffic Sign Recognition Benchmark. Available online: https://www.kaggle.com/datasets/meowmeowmeowmeowmeow/gtsrb-german-traffic-sign (accessed on 14 September 2025).

24. Chen, X.; Hu, Q.; Li, K.; Zhong, C.; Wang, G. Accumulated Trivial Attention Matters in Vision Transformers on Small Datasets. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 1003–1012.

25. Zhang, B.; Zhang, Y. MSCViT: A Small-Size ViT Architecture with Multi-Scale Self-Attention Mechanism for Tiny Datasets. arXiv 2025, arXiv:2501.06040.

26. Hou, Y.; Chen, Z.; Li, S. Traffic sign recognition based on deep learning and simplified GTSRB dataset. J. Phys. Conf. Ser. 2021, 1881, 042052.

| Parameter | Specification |
|---|---|
| CPU | Intel Core i7-9700K |
| GPU | NVIDIA GeForce RTX 4090 |
| Random access memory | 32 GB |
| Operating system | Windows 10 |
| Programming language | Python 3.8 |
| Deep learning framework | PyTorch 1.13.1 |

| Dataset | Method | Acc (%) | Params (M) | FLOPs (M) |
|---|---|---|---|---|
| CIFAR-10 | MobileNetV3 (Base) | 95.20 ± 0.12 | 16.239 | 9.073 |
| | MobileNetV3_CA | 87.92 ± 0.18 | 11.596 | 9.192 |
| | MobileNetV3_CA_Pruned | 87.20 ± 0.21 | 9.711 | 7.388 |
| Fashion-MNIST | MobileNetV3 (Base) | 94.61 ± 0.09 | 16.239 | 10.156 |
| | MobileNetV3_CA | 95.16 ± 0.11 | 11.596 | 10.225 |
| | MobileNetV3_CA_Pruned | 91.94 ± 0.15 | 9.709 | 7.502 |
| GTSRB | MobileNetV3 (Base) | 97.09 ± 0.14 | 16.239 | 11.297 |
| | MobileNetV3_CA | 98.81 ± 0.10 | 11.596 | 11.652 |
| | MobileNetV3_CA_Pruned | 97.37 ± 0.16 | 9.871 | 8.552 |

| Dataset | Method | Acc (%) | Params (M) | FLOPs (M) |
|---|---|---|---|---|
| CIFAR-10 | RepVGG (Base) | 86.61 ± 0.20 | 7.683 | 27.774 |
| | RepVGG_CA | 87.47 ± 0.18 | 7.893 | 27.866 |
| | RepVGG_CA_Pruned | 87.05 ± 0.22 | 7.063 | 23.918 |
| Fashion-MNIST | RepVGG (Base) | 93.48 ± 0.12 | 7.683 | 30.218 |
| | RepVGG_CA | 93.50 ± 0.10 | 7.893 | 30.308 |
| | RepVGG_CA_Pruned | 93.33 ± 0.14 | 7.050 | 26.955 |
| GTSRB | RepVGG (Base) | 97.49 ± 0.11 | 7.683 | 31.264 |
| | RepVGG_CA | 97.67 ± 0.09 | 7.893 | 31.336 |
| | RepVGG_CA_Pruned | 96.74 ± 0.15 | 7.093 | 27.918 |
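To make the efficiency figures easy to check, the short Python sketch below recomputes, from the MobileNetV3 and RepVGG tables, the parameters saved (M) and the relative FLOPs reduction (%) of each pruned model with respect to a reference variant. It only performs arithmetic on the reported values; the dictionary layout and the function name `reductions` are illustrative. For example, it gives 6.53 M fewer parameters for MobileNetV3 on Fashion-MNIST and a 24.3% FLOPs reduction for MobileNetV3 on GTSRB, the figures quoted in the Introduction.

```python
# (Params in M, FLOPs in M) copied from the MobileNetV3 and RepVGG tables.
TABLE = {
    "MobileNetV3": {
        "CIFAR-10":      {"Base": (16.239, 9.073),  "CA": (11.596, 9.192),  "CA_Pruned": (9.711, 7.388)},
        "Fashion-MNIST": {"Base": (16.239, 10.156), "CA": (11.596, 10.225), "CA_Pruned": (9.709, 7.502)},
        "GTSRB":         {"Base": (16.239, 11.297), "CA": (11.596, 11.652), "CA_Pruned": (9.871, 8.552)},
    },
    "RepVGG": {
        "CIFAR-10":      {"Base": (7.683, 27.774), "CA": (7.893, 27.866), "CA_Pruned": (7.063, 23.918)},
        "Fashion-MNIST": {"Base": (7.683, 30.218), "CA": (7.893, 30.308), "CA_Pruned": (7.050, 26.955)},
        "GTSRB":         {"Base": (7.683, 31.264), "CA": (7.893, 31.336), "CA_Pruned": (7.093, 27.918)},
    },
}


def reductions(entry: dict, ref: str = "Base") -> tuple:
    """Params saved (M) and FLOPs reduction (%) of CA_Pruned relative to `ref`."""
    p_ref, f_ref = entry[ref]
    p_new, f_new = entry["CA_Pruned"]
    return round(p_ref - p_new, 3), round(100 * (f_ref - f_new) / f_ref, 1)


for arch, per_dataset in TABLE.items():
    for ds, entry in per_dataset.items():
        dp, df = reductions(entry)
        print(f"{arch:12s} {ds:14s} params -{dp:.3f} M  FLOPs -{df:.1f}%")
```

Passing `ref="CA"` isolates the effect of pruning alone, separating it from the parameter change introduced by adding CA (which lowers the count for MobileNetV3 but raises it for RepVGG in the tables).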

| Method | CIFAR-10 | Fashion-MNIST | GTSRB |
|---|---|---|---|
| MobileNetV3 (Base) | 18.7 | 19.2 | 20.5 |
| MobileNetV3_CA | 19.5 | 20.0 | 21.3 |
| MobileNetV3_CA_Pruned | 12.4 | 13.1 | 14.0 |
| RepVGG (Base) | 27.8 | 28.5 | 29.7 |
| RepVGG_CA | 28.6 | 29.3 | 30.5 |
| RepVGG_CA_Pruned | 19.1 | 20.4 | 21.2 |
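The latency table can be summarized the same way. Because the table does not state its unit, the sketch below reports only unit-free ratios: the latency overhead of adding CA relative to the baseline, and the speedup of each pruned model over its Base and CA counterparts. The values are copied from the table; the names `LATENCY`, `DATASETS`, and `latency_summary` are illustrative.

```python
# Latency values copied from the table, ordered as (CIFAR-10, Fashion-MNIST, GTSRB).
LATENCY = {
    "MobileNetV3": {"Base": (18.7, 19.2, 20.5), "CA": (19.5, 20.0, 21.3), "CA_Pruned": (12.4, 13.1, 14.0)},
    "RepVGG":      {"Base": (27.8, 28.5, 29.7), "CA": (28.6, 29.3, 30.5), "CA_Pruned": (19.1, 20.4, 21.2)},
}
DATASETS = ("CIFAR-10", "Fashion-MNIST", "GTSRB")


def latency_summary(arch: str) -> dict:
    """Per-dataset, unit-free ratios: CA overhead (%) and pruned-model speedups."""
    summary = {}
    for i, ds in enumerate(DATASETS):
        base, ca, pruned = (LATENCY[arch][k][i] for k in ("Base", "CA", "CA_Pruned"))
        summary[ds] = {
            "ca_overhead_pct": 100 * (ca - base) / base,
            "speedup_vs_base": base / pruned,
            "speedup_vs_ca": ca / pruned,
        }
    return summary


for arch in LATENCY:
    for ds, r in latency_summary(arch).items():
        print(f"{arch:12s} {ds:14s} CA +{r['ca_overhead_pct']:.1f}%  "
              f"pruned x{r['speedup_vs_base']:.2f} vs Base, x{r['speedup_vs_ca']:.2f} vs CA")
```

Ratios make the comparison independent of the timing unit and easier to compare across the two architectures, whose absolute latencies differ.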

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chung, Y.-L. Efficient Lightweight Image Classification via Coordinate Attention and Channel Pruning for Resource-Constrained Systems. Future Internet 2025, 17, 489. https://doi.org/10.3390/fi17110489
