1. Introduction

5G, representing the latest generation of mobile communication networks, delivers substantial enhancements in device connectivity, data transmission rates, and latency management, thereby establishing the foundation for the Internet of Everything. However, the proliferation of users and the diversification of service demands result in increased network traffic and greater complexity in service processing. Inefficient virtual network function mapping can result in excessive resource consumption, reduced request acceptance rates, increased end-to-end delays, and other performance issues, thereby directly affecting network service quality and resource utilization efficiency. To address this challenge, Service Function Chain (SFC), a pivotal technology arising from the integration of Network Function Virtualization (NFV) and Software-Defined Networking (SDN), not only improves resource utilization and service quality but also enables end-to-end service processing by sequentially orchestrating multiple Virtualized Network Functions (VNFs) according to a defined network service orchestration strategy. It thus provides a flexible and efficient solution to accommodate differentiated and dynamic service demands in the 5G environment [1].

Based on the virtualization capabilities of NFV, Service Function Chains (SFCs) abstract the underlying computing, communication, storage, and other resources, enabling network function instances (such as firewalls and deep packet inspection) to be flexibly deployed on general-purpose hardware [2]. Efficiently orchestrating and scheduling VNF instances in SFCs to handle dynamic fluctuations in resource supply and demand, while simultaneously optimizing end-to-end Quality of Service (QoS), has become a critical challenge. Although the introduction of SFCs enhances the flexibility of network services, it also brings more complex scheduling problems. Traditional scheduling approaches struggle to meet the high concurrency and low-latency requirements of 5G networks, creating an urgent need for intelligent scheduling mechanisms that optimize resource utilization and service performance. As SFCs assume an increasingly central role in network services, their scheduling faces key challenges, including competition for computing, network, and storage resources, strict temporal constraints, and significant operational overhead. Moreover, resource preemption among VNF instances and nonlinear fluctuations in service demand result in imbalanced resource utilization [3]. The strict ordering constraints of SFC topologies further conflict with the scheduling complexity of parallel service chains, exacerbating the mismatch between scheduling behavior and actual demand, and ultimately degrading scheduling efficiency while increasing computational overhead.

Existing SFC scheduling methods (reinforcement learning, mathematical programming, and generative/matching theory) have critical limitations: reinforcement learning suffers from training-data dependency and slow convergence at large scale, mathematical programming lacks stability guarantees and has high computational complexity, and generative/matching-theory methods struggle to balance multiple objectives. This creates an urgent need for an efficient, low-overhead approach that achieves stable multi-objective optimization and global resource coordination.

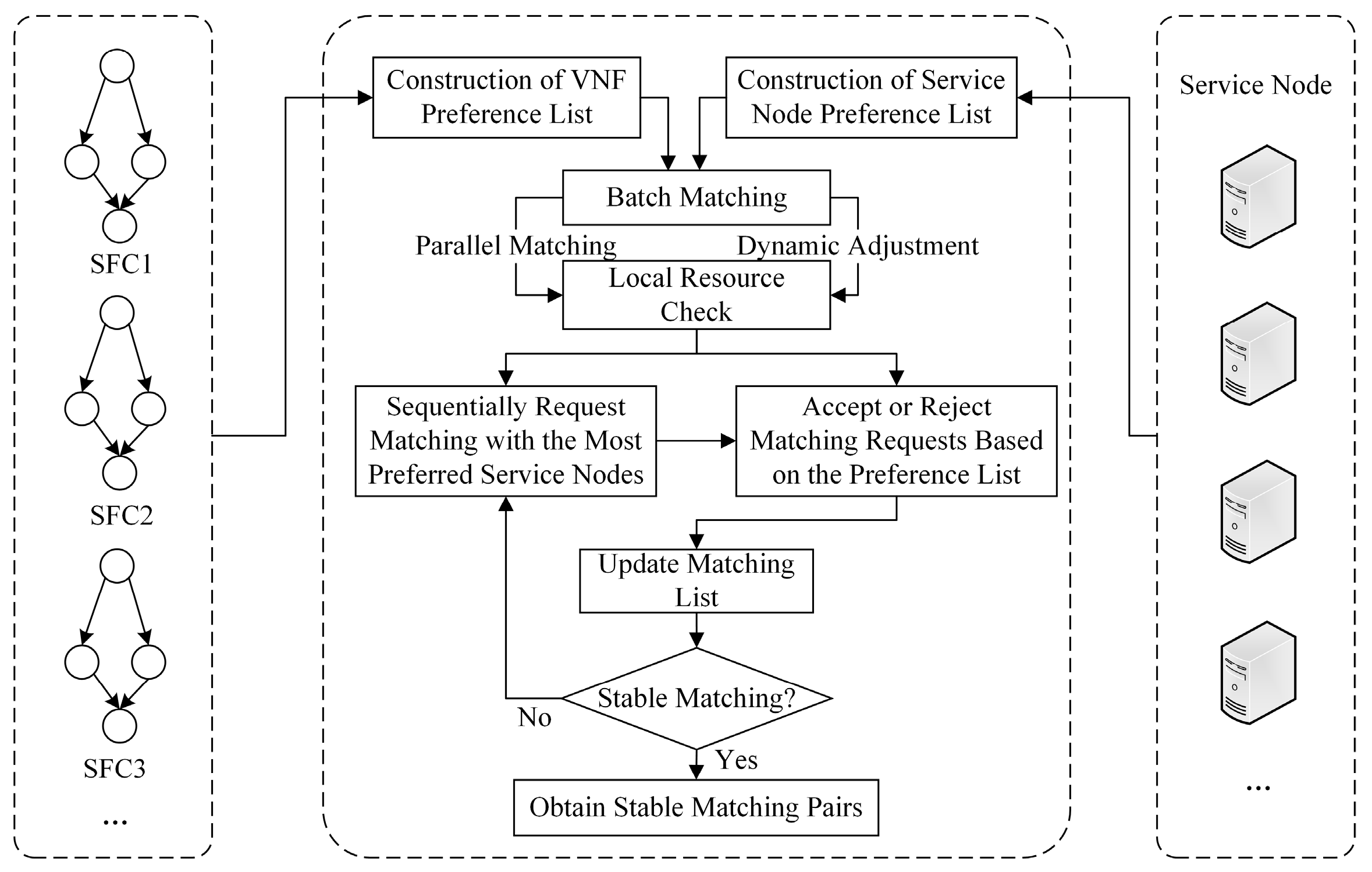

To address the above challenges, this paper proposes a service function chain scheduling algorithm based on a matching game (SFC-GS). By leveraging bidirectional preference matching and a hierarchical batch-processing mechanism, the proposed approach achieves global resource optimization with low overhead, effectively tackling the core challenges in SFC scheduling. The main contributions of this paper are as follows:

We develop a multi-objective SFC scheduling model that considers four objectives: maximizing request acceptance rate, minimizing end-to-end delay, minimizing resource consumption, and minimizing scheduling execution time. This model improves SFC orchestration efficiency and 5G network resource utilization, thereby enhancing the network’s overall service-carrying capacity.

We further propose a two-way preference matching mechanism based on a matching game. The mechanism accounts for VNF resource demands—including computation, storage, and bandwidth—along with physical service node availability and local topology. Multiple rounds of iterative games enable rapid local matching, improving scheduling responsiveness, refining fitness evaluation, and strengthening dynamic adaptability.

We introduce a hierarchical batch scheduling strategy based on resource demand. Service requests are processed in priority-based batches, with resource allocation for subsequent batches dynamically adjusted according to feedback from previous results, enabling iterative optimization. This mechanism ensures timely response to high-priority requests, maintains fairness for low-priority requests, and balances overall resource utilization, thereby enhancing scheduling flexibility and allocation efficiency.
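The batch strategy described in the third contribution can be sketched as follows. This is a minimal illustration and not the SFC-GS implementation: the `Request` fields, the single scalar `capacity`, and the rule of serving smaller demands first inside a batch are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Request:
    name: str
    priority: int  # hypothetical convention: smaller value = more urgent
    demand: int    # hypothetical: one aggregated resource amount


def schedule_in_batches(requests: List[Request], capacity: int) -> Tuple[List[str], List[str]]:
    """Process requests batch by batch, carrying leftover capacity forward."""
    batches: Dict[int, List[Request]] = defaultdict(list)
    for req in requests:
        batches[req.priority].append(req)

    accepted: List[str] = []
    rejected: List[str] = []
    remaining = capacity
    for priority in sorted(batches):  # the high-priority batch goes first
        # Assumed tie-break inside a batch: small demands first, so more fit.
        for req in sorted(batches[priority], key=lambda r: r.demand):
            if req.demand <= remaining:
                remaining -= req.demand  # feedback: later batches see less capacity
                accepted.append(req.name)
            else:
                rejected.append(req.name)
    return accepted, rejected


requests = [
    Request("video", priority=1, demand=6),
    Request("backup", priority=2, demand=5),
    Request("voice", priority=1, demand=3),
    Request("logs", priority=2, demand=1),
]
accepted, rejected = schedule_in_batches(requests, capacity=10)
print(accepted, rejected)
```

Both priority-1 requests are served before any priority-2 request is considered, and the second batch is scheduled against whatever capacity the first batch left over.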

The remainder of this paper is organized as follows:

Section 2 summarizes previous related work conducted by other researchers;

Section 3 describes the system model and problem description;

Section 4 describes in detail the matching model setting and matching preference list construction, and presents the algorithmic steps of multi-objective optimal service function chain scheduling based on matching game;

Section 5 describes the simulation experiment environment construction and design and systematically analyzes the experimental results;

Section 6 summarizes the work of this paper and discusses directions for future work.

2. Related Work

In recent years, research on SFC scheduling has focused on reducing end-to-end latency, improving load balancing, and enhancing system reliability [4]. This section reviews existing approaches from three perspectives: reinforcement learning-based methods, mathematical programming-based methods, and methods based on generative models and matching theory. Their respective strengths and limitations are analyzed, and the potential of matching game-based methods for SFC scheduling is further explored. The details are as follows:

(1) Reinforcement Learning-Based Methods

Jia et al. [5] proposed a reconfigurable time-expanded graph and further developed a dynamic SFC scheduling model. They formulated SFC scheduling under limited resources and time constraints as an integer linear programming problem and solved it with an algorithm combined with deep reinforcement learning. Zhang et al. [6] formulated the SFC scheduling problem as a multi-Markov decision process and employed a multi-state action deep reinforcement learning algorithm to learn the optimal scheduling policy, aiming to minimize the weighted total delay. Song et al. [7] proposed a deep reinforcement learning-based approach for virtual network function deployment, which reduced transmission delay by identifying the shortest matching paths. Additionally, they introduced a service function chain rescheduling mechanism based on network calculus theory to minimize queuing delay and enhance the flexibility of the scheduling algorithm. Cao et al. [8] proposed a task-oriented service provisioning and scheduling model to cope with the dynamic complexity of networks. Building on this model, they formulated the SFC scheduling problem as an integer linear programming problem that maximizes the number of completed tasks under limited resource and time constraints, and proposed a deep reinforcement learning algorithm to solve it. Baharvand et al. [9] proposed a deep Q-learning-based service function chain scheduling method that uses a scheduling mechanism to reorder the functions in the chain. The method explores the simultaneous scheduling of multiple SFCs, aiming to reduce the total completion time, and improves resource utilization and the request acceptance rate.

However, these methods suffer from training-data dependency, slow convergence, and lagging policy updates in very large-scale scenarios. Most existing studies focus on unidirectional resource allocation and ignore the bidirectional preferences between service function chains and resource nodes, such as performance demands on one side and load balancing on the other, which makes them prone to local optima, resource conflicts, and high computational overhead. Notably, in VPN (Virtual Private Network)-secured scenarios, the high computational overhead of training makes it hard for these methods to accommodate additional encryption/decryption resource demands, a gap that the lightweight local resource check of SFC-GS can address.

(2) Mathematical Programming-Based Methods

Jia et al. [10] modeled the SFC scheduling problem for NFV-enabled 5G networks as a mixed-integer nonlinear programming problem and proposed an efficient algorithm to identify redundant virtual network functions, aiming to maximize the number of requests that satisfy both latency and reliability constraints. Nagireddy et al. [11] conducted a rigorous evaluation of different action spaces within the model to optimize the action space across various paths. The results demonstrated that the proposed algorithm is a feasible and effective solution to the SFC request scheduling problem within the given scope. Wang et al. [12] formulated the service function chain scheduling problem as a 0–1 nonlinear integer programming problem and proposed a two-stage heuristic algorithm to solve it. In the first phase, if resources were sufficient, the service function chains were deployed in parallel to the UAV edge servers based on the proposed pairing principle between the service function chains and the UAVs in order to minimize the sum of task completion times. Otherwise, when resources were insufficient, a revenue-maximization heuristic was used to deploy arriving SFCs in a serial service mode.

Despite their theoretical optimality, mathematical programming methods share key drawbacks: they lack stability guarantees (often forming “blocking pairs” that reduce resource utilization and waste network resources) and incur extremely high solution costs in large-scale complex network environments. Adding VPN-specific resource constraints (e.g., secure tunnel bandwidth) would further increase their solving complexity, conflicting with VPN services’ low-latency needs—an issue SFC-GS’s batch matching avoids via simplified local computation.

(3) Generative Model and Matching Theory-Based Methods

Liao et al. [13] addressed SFC scheduling as an integer linear programming problem by dynamically sensing resource availability in different time slots. They proposed a resource-aware SFC scheduling algorithm that combines VNFs and time slots. The algorithm efficiently coordinates computing and network resources in the spatiotemporal domain and optimizes resource utilization while strictly adhering to application latency requirements. Zhang et al. [14] proposed a novel network diffuser based on a conditional generative model. This model formulated the SFC optimization problem as a state sequence generation task for planning purposes and performed graph diffusion over state trajectories, conditioning the extraction of SFC decisions on the optimization constraints and objectives. Pham et al. [15] proposed a matching-based algorithm to solve the NP-hard VNF scheduling problem, aiming to address the challenges of high computational cost and the inability to perform online scheduling while ensuring scheduling stability and satisfying the allocation requirements of all network services.

However, these methods face a shared challenge: when optimizing multiple objectives simultaneously, they struggle with resource trade-offs and coordination. Achieving efficient and fair scheduling of computing, communication, and storage resources under uncertain resource requirements and request priorities remains a key challenge in current SFC scheduling. Notably, existing matching-theory methods rarely consider VPN security, whereas the bilateral preference list of SFC-GS can explicitly weight VPN resource fitness, thereby filling this practical gap.

In summary, current SFC scheduling methods have distinct but overlapping limitations. Few methods address bidirectional preferences between VNFs and resource nodes or adapt to uncertainty in computing, communication, and storage demands. This highlights an urgent need for a VNF scheduling method that accounts for resource states, makes decisions efficiently, and scales well, while simultaneously optimizing scheduling efficiency, resource cost, and QoS to enhance overall 5G network service performance. A central open problem in SFC scheduling is how to determine the execution order of VNF instances at each node so that location and priority constraints are satisfied and the resulting schedule is optimal. A matching game is a game-theoretic approach to resource allocation in which multiple parties make two-way preference choices, yielding efficient and fair resource scheduling. The complex supply-demand relationship between VNF instances and server resources, together with the dynamic nature of service requests, requires scheduling algorithms that adapt quickly. Compared with traditional integer programming or heuristic search methods, the matching game offers higher scalability and better real-time performance, allowing SFC scheduling to adapt more efficiently and flexibly to the dynamic demands of 5G networks. Therefore, matching game-based SFC scheduling has significant research value for improving resource utilization and optimizing resource allocation.
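Two-way preference matching of this kind is commonly realized with deferred acceptance (the Gale-Shapley procedure). The sketch below is a generic many-to-one version in which VNFs propose to nodes; it is an illustration under stated assumptions, not the SFC-GS algorithm itself. The function name, the preference lists, and the per-node `capacity` counts are hypothetical.

```python
from typing import Dict, List


def deferred_acceptance(
    vnf_prefs: Dict[str, List[str]],   # each VNF ranks nodes, best first
    node_prefs: Dict[str, List[str]],  # each node ranks VNFs, best first
    capacity: Dict[str, int],          # how many VNFs each node can host
) -> Dict[str, List[str]]:
    """VNF-proposing deferred acceptance; returns node -> hosted VNFs."""
    rank = {n: {v: i for i, v in enumerate(p)} for n, p in node_prefs.items()}
    hosted: Dict[str, List[str]] = {n: [] for n in node_prefs}
    next_choice = {v: 0 for v in vnf_prefs}
    free = list(vnf_prefs)

    while free:
        vnf = free.pop(0)
        if next_choice[vnf] >= len(vnf_prefs[vnf]):
            continue  # preference list exhausted: the VNF stays unmatched
        node = vnf_prefs[vnf][next_choice[vnf]]
        next_choice[vnf] += 1
        if vnf not in rank[node]:
            free.append(vnf)  # the node does not accept this VNF at all
            continue
        hosted[node].append(vnf)
        if len(hosted[node]) > capacity[node]:
            # Over capacity: the node releases its least-preferred VNF.
            worst = max(hosted[node], key=lambda v: rank[node][v])
            hosted[node].remove(worst)
            free.append(worst)
    return hosted


vnf_prefs = {"fw": ["n1", "n2"], "dpi": ["n1", "n2"], "nat": ["n1", "n2"]}
node_prefs = {"n1": ["dpi", "nat", "fw"], "n2": ["fw", "nat", "dpi"]}
matching = deferred_acceptance(vnf_prefs, node_prefs, {"n1": 1, "n2": 2})
print(matching)
```

In the example all three VNFs prefer `n1`, which can host only one of them and keeps the one it ranks highest; the displaced VNFs fall back to `n2`. No VNF and node would both prefer each other over their assigned partners, which is the stability property that makes matching attractive here.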

3. System Model and Problem Formulation

3.1. System Model

In order to construct a reasonable VNF mapping model, a formal description of the topology of the physical network is required; the mapping model is shown in Figure 1. In this paper, the underlying physical network is defined as an undirected graph $G = (N, E)$, where $N = \{n_1, n_2, \ldots, n_{\max}\}$ denotes the set of physical nodes in NFV and $\max$ represents the maximum number of service nodes. These nodes are composed of generalized high-performance server clusters, which serve as the fundamental hardware infrastructure for hosting VNFs. They possess capabilities in computation, storage, and network communication. Each physical service node is equipped with a certain number of CPU cores, memory modules, and network interface cards, which collectively determine the node’s resource capacity and processing capability. For each physical node $n_k \in N$, there are computational resources $C_k^{\mathrm{cpu}}$ such as the number of CPU cores, storage resources $C_k^{\mathrm{mem}}$ such as memory capacity, and network bandwidth resources $C_k^{\mathrm{bw}}$. The set $E$ denotes the physical links between NFV nodes, which serve as communication channels connecting the physical nodes. These links determine the maximum amount of data that can be transmitted between nodes per unit of time, thereby ensuring the smooth transmission of data within the service function chain. A physical link $e_{kl} \in E$ connecting physical node $n_k$ and physical node $n_l$ has a link capacity $B_{kl}$, which denotes the upper limit of the amount of data that can be transmitted over the link, where $n_k, n_l \in N$ and $k \neq l$. Let $F$ denote the set of all VNFs. The set of service function chains is defined as $S = \{s_1, s_2, \ldots, s_{|S|}\}$, where each service function chain $s_i \in S$ consists of a sequence of VNFs $f_{i,1}, f_{i,2}, \ldots$ arranged in a specific order. For example, to run a web application, a firewall function must first inspect incoming data packets before forwarding them to the web service function.
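The network model described above maps naturally onto a small data structure. The following sketch is one possible representation, assuming Python 3.7+ for `dataclasses`; the class names, field names, and sample values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List


@dataclass
class PhysicalNode:
    cpu: float        # computational resources, e.g. number of CPU cores
    memory: float     # storage resources, e.g. memory capacity
    bandwidth: float  # network bandwidth resources


@dataclass
class PhysicalNetwork:
    nodes: Dict[str, PhysicalNode] = field(default_factory=dict)
    # Undirected links: an unordered pair of node names mapped to capacity.
    links: Dict[FrozenSet[str], float] = field(default_factory=dict)

    def add_link(self, a: str, b: str, capacity: float) -> None:
        if a == b or a not in self.nodes or b not in self.nodes:
            raise ValueError("a link must join two distinct known nodes")
        self.links[frozenset((a, b))] = capacity

    def link_capacity(self, a: str, b: str) -> float:
        # Returns 0.0 when the two nodes are not directly connected.
        return self.links.get(frozenset((a, b)), 0.0)


net = PhysicalNetwork()
net.nodes["n1"] = PhysicalNode(cpu=32, memory=64, bandwidth=10)
net.nodes["n2"] = PhysicalNode(cpu=16, memory=32, bandwidth=5)
net.add_link("n1", "n2", capacity=8.0)

# An SFC is an ordered VNF sequence: the firewall runs before the web service.
web_chain: List[str] = ["firewall", "web_service"]
```

Keying links by `frozenset` makes the graph undirected by construction, so the capacity lookup gives the same answer for either node order.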

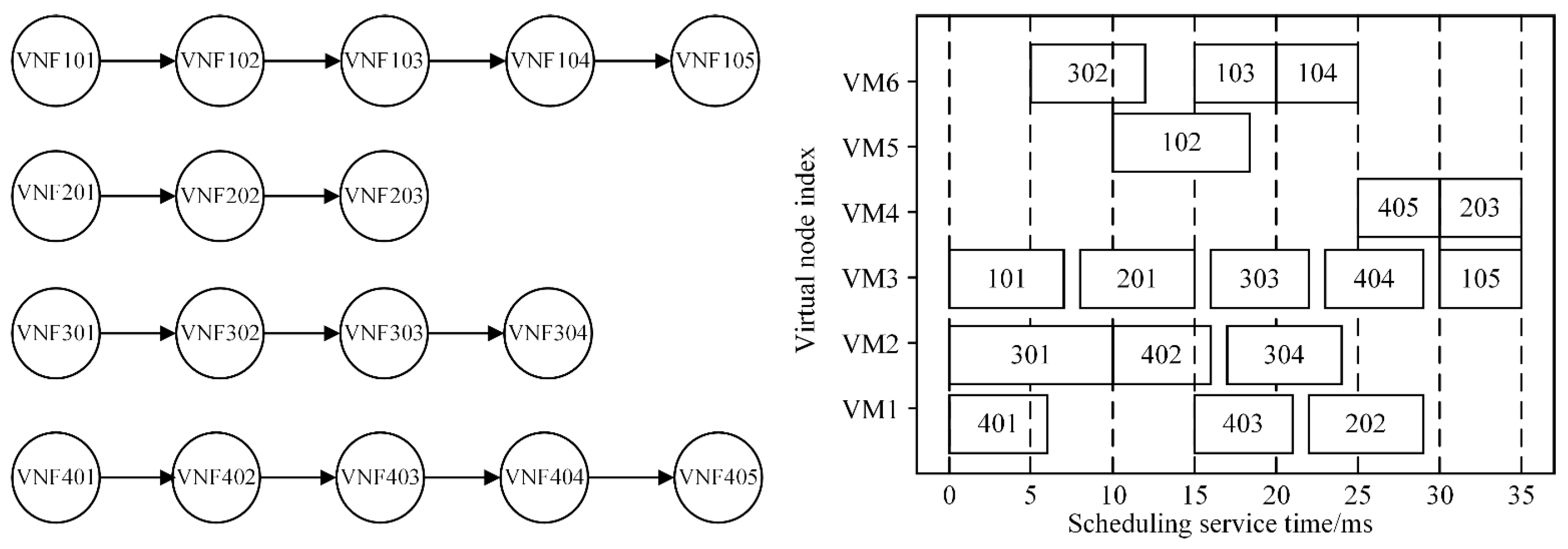

SFC instances have differentiated service requirements and need to be reasonably scheduled based on resource constraints, efficient utilization, and quality of service requirements. This paper investigates how to optimize scheduling algorithms in resource-constrained computing environments to achieve efficient VNF placement on heterogeneous virtual machines, thereby meeting the diverse requirements of different SFC instances. Consider four SFC instances with differentiated service demands to be scheduled, namely, SFC1: VNF101–105, SFC2: VNF201–203, SFC3: VNF301–304, and SFC4: VNF401–405. The scheduling algorithm is required to perform dynamic mapping within a resource pool composed of six heterogeneous virtual machines (VM1–VM6), aiming to allocate VNFs in a demand-driven manner based on the predefined VNF–VM mapping relationships, under the constraint of limited computing resources. The VNF scheduling example is shown in Figure 2.

3.2. Problem Formulation

In this paper, we divide the processing of a service request into multiple time intervals, denote these time slots by the set $T$, and set each time slot $t \in T$ as a processing period for a link mapping. Define a binary variable $x_{i,j}^{k}$ that indicates whether the VNF $f_{i,j}$ (the $j$-th VNF requested by SFC $s_i$) is scheduled on physical node $n_k$: if yes, then $x_{i,j}^{k} = 1$; otherwise $x_{i,j}^{k} = 0$. Define a binary variable $y_{i,j}^{kl}$ that indicates whether the virtual link connecting nodes $f_{i,j}$ and $f_{i,j+1}$ in the service function chain is mapped to the physical link $e_{kl}$ between physical nodes $n_k$ and $n_l$: if yes, then $y_{i,j}^{kl} = 1$; otherwise $y_{i,j}^{kl} = 0$. In this paper, the end-to-end delay mainly consists of the processing delay $D^{\mathrm{proc}}$ of the VNF at the physical node and the propagation delay $D^{\mathrm{prop}}$ of the data traffic on the physical link, and the maximum tolerable delay is defined as $D^{\max}$. The total end-to-end delay $D^{\mathrm{total}}$ is shown in Equation (1).
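The delay definition above, processing delay at the nodes plus propagation delay on the links, can be sketched as a short function. This is an illustration under simplifying assumptions rather than the paper's formula: consecutive VNFs are assumed to sit on the same node or on directly linked nodes, and all names and numbers are hypothetical.

```python
from typing import Dict, FrozenSet, List


def end_to_end_delay(
    placement: List[str],           # node hosting each VNF, in chain order
    processing_delay: List[float],  # processing delay of each VNF on its node
    propagation_delay: Dict[FrozenSet[str], float],  # delay per physical link
) -> float:
    """Sum VNF processing delays and the delays of the links traversed."""
    total = sum(processing_delay)
    for src, dst in zip(placement, placement[1:]):
        if src != dst:  # co-located VNFs add no link delay
            total += propagation_delay[frozenset((src, dst))]
    return total


placement = ["n1", "n1", "n2"]
delay = end_to_end_delay(
    placement,
    processing_delay=[2.0, 1.5, 3.0],
    propagation_delay={frozenset(("n1", "n2")): 0.5},
)
max_tolerable_delay = 8.0  # hypothetical bound
feasible = delay <= max_tolerable_delay
print(delay, feasible)
```

A request whose computed delay exceeds the maximum tolerable delay would be treated as violating its latency requirement.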

In VNF architecture design, physical node selection, along with strategies for VNF mapping and sequencing, are key factors influencing both link transmission delay and processing delay. As critical components of overall network service latency, these factors are directly related to network performance and user experience. In order to optimize network operations and reduce resource consumption and service delay, systematic optimization of key indicators such as end-to-end delay, average request acceptance rate, and resource consumption is required based on actual scenarios to improve VNF performance and network service quality.

In NFV scenarios, the Average Acceptance Rate (AAR) is a key metric for evaluating the system’s service capability. It represents the proportion of SFC requests that are successfully accepted out of the total incoming requests. At any time between 1 and $|T|$, the number of time slots, when request $r$ is successfully accepted, the binary variable $a_r$ takes the value of 1; when request $r$ is rejected due to insufficient resources or constraint conflicts, $a_r$ takes the value of 0. The Average Acceptance Rate is expressed as shown in Equation (2).
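As a small worked example of this definition, the acceptance rate is the mean of the per-request acceptance flags. The function name and the sample flags below are hypothetical.

```python
from typing import Sequence


def average_acceptance_rate(accepted_flags: Sequence[int]) -> float:
    """Fraction of requests whose acceptance flag is 1."""
    if not accepted_flags:
        return 0.0  # assumed convention when no request has arrived
    return sum(accepted_flags) / len(accepted_flags)


aar = average_acceptance_rate([1, 1, 0, 1])
print(aar)
```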

The resource consumption $R$ of the SFC request scheduling represents the normalized sum of the CPU resource consumption $R^{\mathrm{cpu}}$, storage resource consumption $R^{\mathrm{mem}}$, and bandwidth resource consumption $R^{\mathrm{bw}}$ of each virtual network node in the SFC, as shown in Equation (3).
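One way to read "normalized sum" is a weighted sum of per-resource usage ratios. The sketch below takes that reading as an assumption: normalizing by total capacity and using weights that sum to 1 are illustrative choices, and the weight values and dictionary keys are hypothetical.

```python
from typing import Dict, Tuple


def resource_consumption(
    used: Dict[str, float],
    capacity: Dict[str, float],
    weights: Tuple[float, float, float],
) -> float:
    """Weighted sum of CPU, memory, and bandwidth usage ratios."""
    keys = ("cpu", "memory", "bandwidth")
    return sum(w * used[k] / capacity[k] for w, k in zip(weights, keys))


consumption = resource_consumption(
    used={"cpu": 20, "memory": 10, "bandwidth": 5},
    capacity={"cpu": 40, "memory": 40, "bandwidth": 10},
    weights=(0.5, 0.25, 0.25),  # assumed weights summing to 1
)
print(consumption)
```

With weights that sum to 1 and usage that never exceeds capacity, the result stays in the range 0 to 1, which makes values comparable across scenarios of different scale.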

Given that the actual network environment and service requirements are extremely complex, the following constraints need to be introduced in the SFC orchestration process in order to ensure service quality.

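(1) Resource constraints

Computational resource constraints: For each physical node $n_k \in N$, there is $\sum_{i}\sum_{j} x_{i,j}^{k}\, c_{i,j}^{\mathrm{cpu}} \le C_k^{\mathrm{cpu}}$, where $x_{i,j}^{k}$ is the binary placement variable, $c_{i,j}^{\mathrm{cpu}}$ denotes the amount of computational resources required by the VNF $f_{i,j}$, and $C_k^{\mathrm{cpu}}$ is the computational capacity of $n_k$. This limits the computational resource occupancy of the VNFs on a physical node so that it always remains within the available computational capacity of that node, preventing resource overload.

Storage resource constraints: $\sum_{i}\sum_{j} x_{i,j}^{k}\, c_{i,j}^{\mathrm{mem}} \le C_k^{\mathrm{mem}}$, where $c_{i,j}^{\mathrm{mem}}$ denotes the amount of storage resources required by the VNF $f_{i,j}$, ensuring that the storage resources of the node meet the demand.

Network bandwidth constraint: For each physical link $e_{kl} \in E$, $\sum_{i}\sum_{j} y_{i,j}^{kl}\, b_{i,j} \le B_{kl}$, where $y_{i,j}^{kl}$ is the binary link-mapping variable, $b_{i,j}$ denotes the traffic demand from $f_{i,j}$ to $f_{i,j+1}$ in the service function chain, and $B_{kl}$ is the link capacity, ensuring that the link is not congested due to traffic overload.

(2) Sequential constraints

Define the variable $\tau_{i,j}$ to denote the start execution time of $f_{i,j}$ and $p_{i,j}$ to denote the execution time of $f_{i,j}$, with the formula $p_{i,j} = w_{i,j} / v_k$, where $w_{i,j}$ is the amount of task computation and $v_k$ is the computation speed. For the order of VNFs in each service function chain $s_i$, there is $\tau_{i,j} + p_{i,j} \le \tau_{i,j+1}$, which ensures that the VNFs are executed sequentially in the specified order.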
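The two constraint families can be checked with a few lines of code. The sketch below is illustrative only: it covers CPU and memory for the node check, takes execution time as workload divided by speed as stated above, and uses hypothetical function names and numbers.

```python
from typing import Dict, List, Tuple


def node_has_capacity(demands: List[Dict[str, float]], capacity: Dict[str, float]) -> bool:
    """Resource constraint: the summed demand of the VNFs fits the node."""
    return all(
        sum(d[res] for d in demands) <= capacity[res]
        for res in ("cpu", "memory")
    )


def chain_schedule(
    workloads: List[float], speeds: List[float], start: float = 0.0
) -> List[Tuple[float, float]]:
    """Sequential constraint: each VNF starts once its predecessor finishes."""
    schedule = []
    t = start
    for work, speed in zip(workloads, speeds):
        duration = work / speed  # execution time = computation amount / speed
        schedule.append((t, t + duration))
        t += duration
    return schedule


fits = node_has_capacity(
    demands=[{"cpu": 5, "memory": 5}, {"cpu": 10, "memory": 8}],
    capacity={"cpu": 16, "memory": 12},
)
timeline = chain_schedule(workloads=[10, 6], speeds=[2, 3])
print(fits, timeline)
```

In the example the CPU demand fits (15 of 16) but the memory demand does not (13 of 12), so the placement is rejected; the timeline shows the second VNF starting exactly when the first one ends.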

5. Experimental Design and Results Analysis

5.1. Experimental Setup

To comprehensively evaluate the performance of the SFC-GS algorithm, this paper constructs a dynamic service function chain scheduling scenario based on a simulation platform. The simulation runs on Windows 11 with an Intel(R) Core(TM) i5-12490F CPU @ 3.00 GHz, 16 GB DDR4 RAM, and an NVIDIA RTX 3070 GPU, using Python 3.6.12 and TensorFlow 2.4.1.

This paper employs the Monte Carlo method [17], with the physical node topology randomly generated. To mitigate the impact of randomness, the average value of 100 simulation tests is taken as the result. The setup of this experimental scenario follows the load testing scenarios and resource demand distribution methods commonly used in existing research. Two points should be clarified. First, the Monte Carlo method is only used to generate random physical node topologies and reduce randomness in simulation results, not to model real data center environments; for real-world applications, SFC-GS can directly adopt actual data center resource parameters to construct the demand vector, eliminating reliance on random generation. Second, the proposed SFC-GS algorithm does not use deep learning to generate the demand vector or scheduling decisions; deep learning is only applied in the DQN and DDPG baseline algorithms for performance comparison. The core logic of SFC-GS relies on the matching game and batch scheduling, which avoids the additional system load caused by deep learning and aligns with the resource efficiency goal emphasized in the paper.

The experimental parameters are set as follows. The number of SFC requests ranges from 100 to 500, covering light to overloaded scenarios, and increases by 100 requests per phase to simulate load increments. The total input/output bandwidth of each node ranges from 5 to 10 Mbps. SFC requests are assumed to arrive dynamically following a Poisson distribution. Each SFC request consists of multiple VNFs, with the number of VNFs uniformly distributed between 2 and 5. The CPU required for each VNF to operate normally is randomly distributed between 5 and 20, and the memory resource consumption follows a uniform distribution between 5 and 15. The neural networks of the deep learning baselines employ the Adam optimizer and the ReLU activation function, with a four-layer fully connected architecture comprising 256, 128, 64, and 32 neurons, respectively. The learning rate is set to 0.0005, the batch size is 128, and the discount factor is set to 0.7. The simulation parameters are detailed in Table 1. The experimental design covers a load spectrum ranging from 100 to 500 requests and incorporates multi-dimensional performance metrics for comparison, allowing SFC-GS to be evaluated across scenarios of varying scale.
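The workload described above can be generated with the standard library alone. The sketch below is an assumption-laden illustration, not the paper's simulator: Poisson arrivals are produced through exponential inter-arrival times, the CPU demand is assumed uniform, and `arrival_rate`, the function names, and the dictionary layout are hypothetical.

```python
import random
from typing import Callable, Dict, List


def generate_requests(count: int, arrival_rate: float, seed: int = 0) -> List[Dict]:
    """Draw SFC requests using the distributions listed in the setup."""
    rng = random.Random(seed)
    now = 0.0
    requests = []
    for idx in range(count):
        now += rng.expovariate(arrival_rate)  # Poisson process arrivals
        vnfs = [
            {"cpu": rng.uniform(5, 20), "memory": rng.uniform(5, 15)}
            for _ in range(rng.randint(2, 5))  # 2 to 5 VNFs per chain
        ]
        requests.append({"id": idx, "arrival": now, "vnfs": vnfs})
    return requests


def monte_carlo_mean(metric: Callable[[int], float], runs: int = 100) -> float:
    """Average a metric over independently seeded simulation runs."""
    return sum(metric(seed) for seed in range(runs)) / runs


def mean_chain_length(seed: int) -> float:
    reqs = generate_requests(50, arrival_rate=2.0, seed=seed)
    return sum(len(r["vnfs"]) for r in reqs) / len(reqs)


sample = generate_requests(50, arrival_rate=2.0, seed=1)
average_length = monte_carlo_mean(mean_chain_length, runs=100)
print(len(sample), round(average_length, 2))
```

Averaging over 100 seeded runs mirrors the way the reported results are averaged over 100 simulation tests; any scheduling metric can be passed in place of `mean_chain_length`.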

5.2. Baselines

Three benchmark algorithms are selected for performance comparison with SFC-GS, with brief descriptions as follows:

(1) Deep Q-Network (DQN)

A deep reinforcement learning algorithm that approximates the Q-value function via a neural network, learning optimal scheduling policies by interacting with the environment; it is widely used in dynamic resource allocation tasks but faces challenges of slow convergence in large-scale scenarios.

(2) Deep Deterministic Policy Gradient [19]

DDPG is a reinforcement learning algorithm suitable for continuous action spaces, widely applied in resource allocation, such as bandwidth allocation in communication networks and computing resource scheduling in data centers. It outputs specific resource allocation amounts via the actor network, evaluates the allocation scheme with the critic network, and achieves stable learning by combining experience replay and target networks, thereby dynamically optimizing resource allocation strategies to improve core objectives like resource utilization.

(3) Random

A naive scheduling algorithm that randomly allocates VNFs to physical nodes without optimization strategies; it serves as a baseline to verify the effectiveness of optimized algorithms.
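For reference, a random baseline of this kind can be sketched in a few lines. The version below is an assumption about how such a baseline might behave, not the implementation used in the experiments: it considers CPU only, picks a node uniformly at random for each VNF, and rejects the whole chain on the first pick that lacks capacity.

```python
import random
from typing import Dict, List, Optional


def random_placement(
    vnf_cpu: List[float], node_cpu: Dict[str, float], seed: int = 0
) -> Optional[List[str]]:
    """Place each VNF on a uniformly chosen node; None means rejected."""
    rng = random.Random(seed)
    free = dict(node_cpu)  # work on a copy so the caller's data is untouched
    placement = []
    for demand in vnf_cpu:
        node = rng.choice(sorted(free))
        if free[node] < demand:
            return None  # no retry and no search: the request is rejected
        free[node] -= demand
        placement.append(node)
    return placement


placed = random_placement([5, 5], {"a": 100, "b": 100})
rejected = random_placement([50], {"a": 10})
print(placed, rejected)
```

Because the choice ignores both remaining capacity and preferences, requests are rejected even when another node could have hosted them, which is why such a baseline degrades quickly under resource competition.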

5.3. Performance Metrics

Five core metrics are used to evaluate the scheduling performance of all algorithms:

(1) Average Request Acceptance Rate

The proportion of successfully accepted SFC requests to total incoming requests, reflecting the algorithm’s ability to handle service demands.

(2) End-to-End Delay

The total latency of data transmission in SFCs, including VNF processing delay and physical link propagation delay, a key indicator of service quality.

(3) Resource Utilization

The ratio of used resources (CPU, memory, bandwidth) to total available resources in physical nodes, measuring resource allocation efficiency.

(4) Runtime

The total computation time of the scheduling algorithm, reflecting its real-time performance and scalability for large-scale tasks.

(5) Resource Consumption

The normalized sum of CPU, memory, and bandwidth resources consumed by SFC scheduling, evaluating the algorithm’s resource-saving ability.

5.4. Results and Discussion

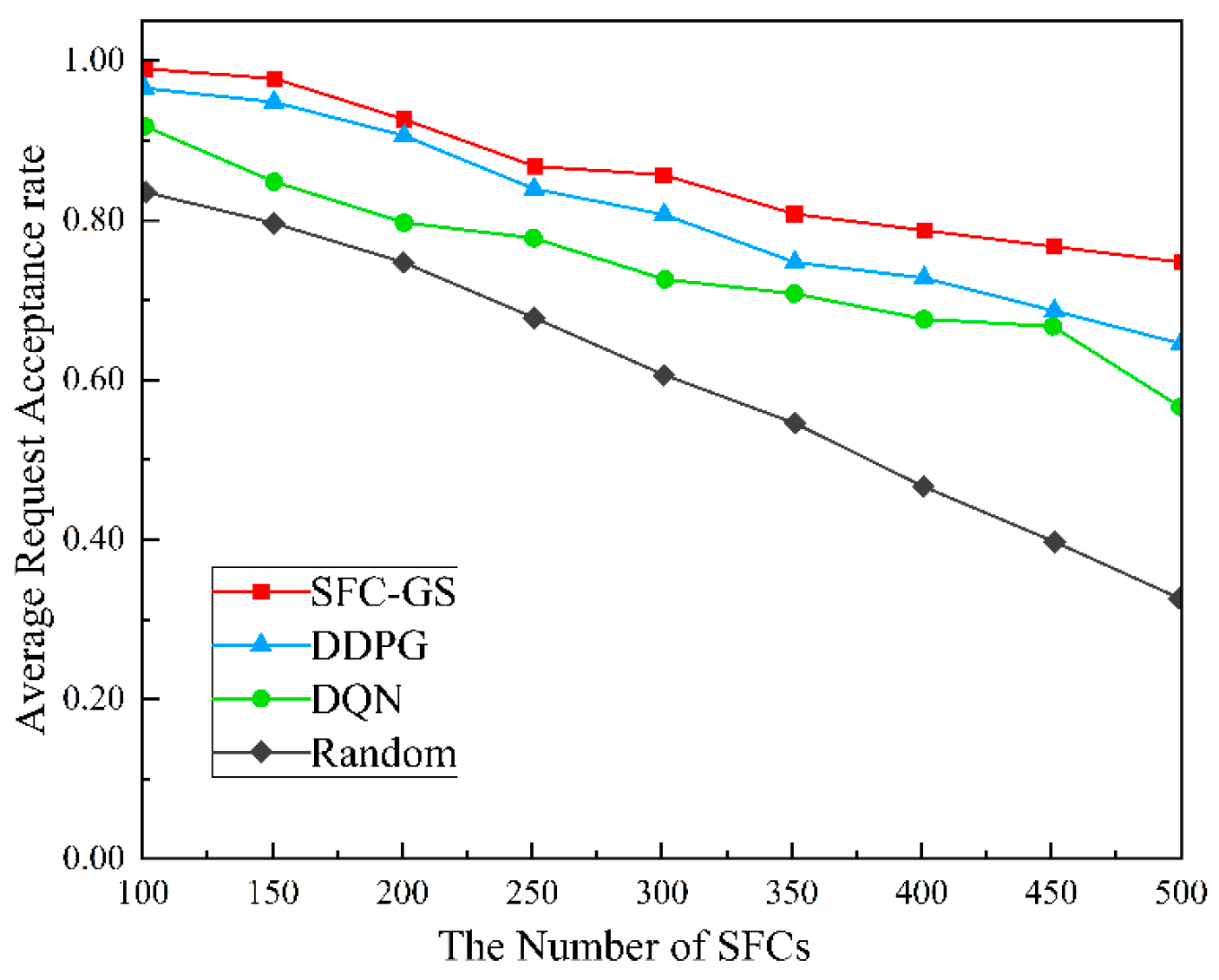

(1) Comparison of average request acceptance rate

Figure 4 illustrates how the average request acceptance rate of the different algorithms changes with the number of SFCs. As the number of SFCs increases, the acceptance rate of all algorithms declines due to higher resource demands and increased scheduling complexity. However, the SFC-GS algorithm consistently outperforms the other three algorithms, achieving an average request acceptance rate that is more than 8.108% higher. Batch-based matching enables SFC-GS to handle a large volume of requests more efficiently, while local resource checking helps prevent conflicts and resource waste during allocation. This mechanism ensures that data packets traverse multiple network service nodes as required by users, maintaining stable and reliable service delivery even under highly complex network conditions. As a result, the algorithm sustains a high acceptance rate under substantial request loads. In comparison, DDPG and DQN, as deep reinforcement learning algorithms, perform well in certain scenarios, but in large-scale SFC scheduling their learning complexity leads to less stable performance than SFC-GS. The Random algorithm allocates resources in a completely arbitrary manner without any optimization strategy, leading to the most rapid decline in the average request acceptance rate under intense resource competition.

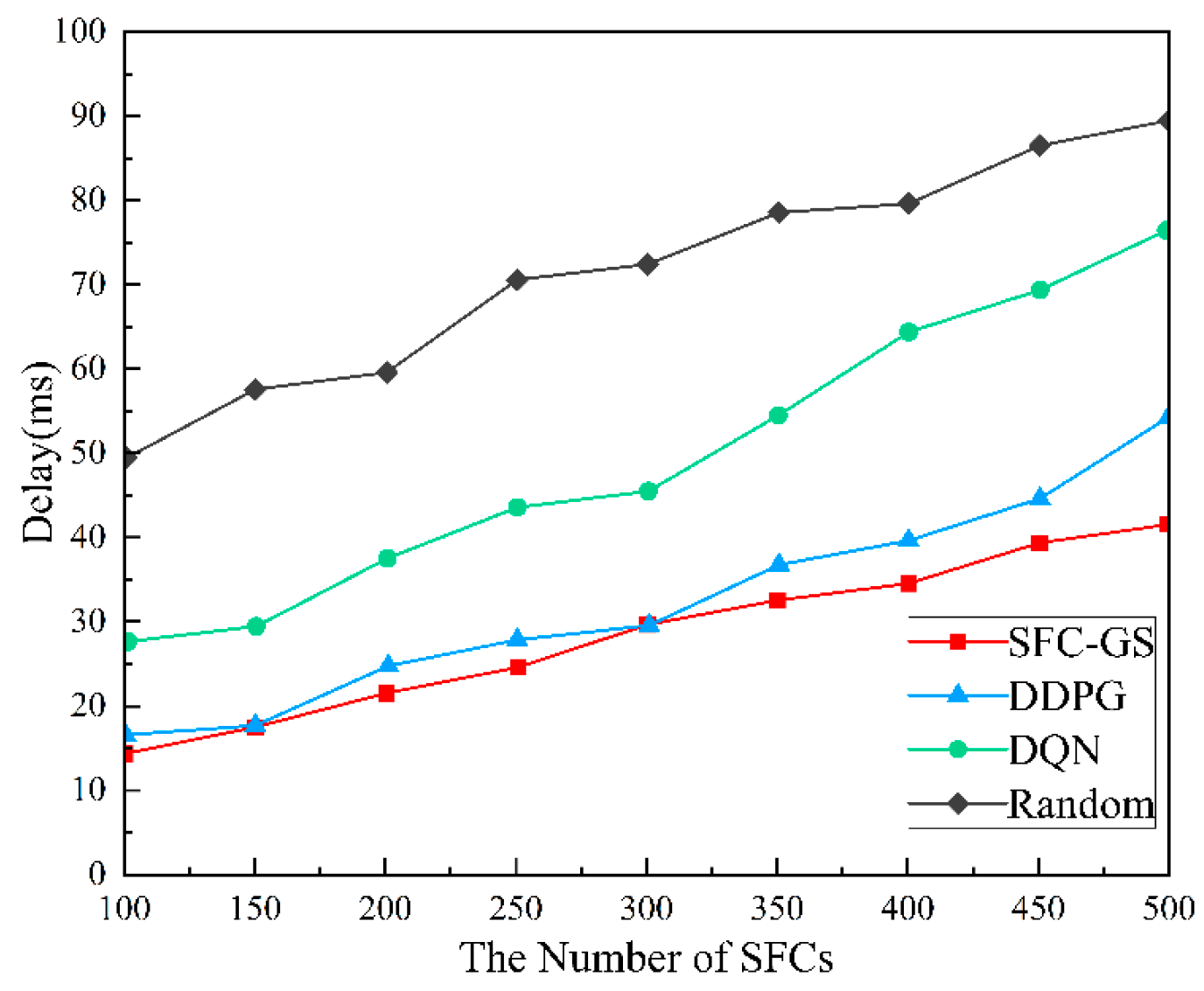

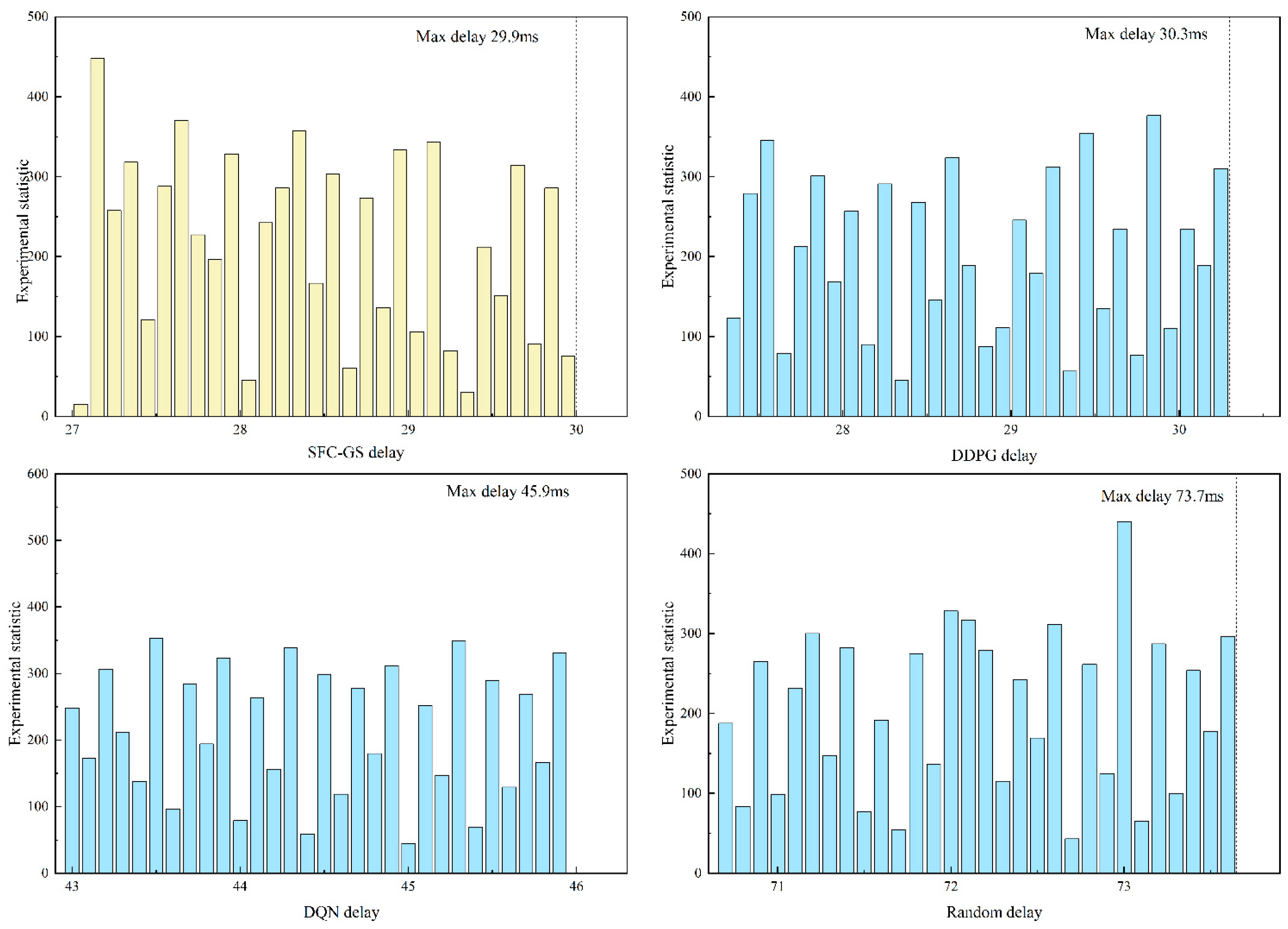

(2) Delay comparison

Figure 5 illustrates the delay performance of different algorithms in handling SFC requests. As the number of SFC requests increases, the end-to-end delay for all algorithms shows an upward trend. However, the SFC-GS algorithm exhibits a delay growth rate that is reduced by more than 10.2% compared to the baseline algorithms, indicating that SFC-GS effectively mitigates the delay increase when handling large-scale SFCs. The SFC-GS algorithm combines the batch matching approach of the Gale-Shapley stable matching algorithm with an improved local resource check method, resulting in more efficient resource allocation. This avoids resource waste and conflicts, thereby reducing delays. The SFC-GS curve remains relatively smooth across the entire range, without the drastic fluctuations observed in the Random algorithm, indicating that it can maintain stable performance under varying loads.

To evaluate the distribution of end-to-end delay under limited resources, the scenario with 300 SFCs was selected. The distribution of training episode counts corresponding to specific latency levels is illustrated in Figure 6. The SFC-GS algorithm exhibits a higher frequency of low-latency occurrences and achieves the lowest maximum latency among all compared algorithms, indicating its clear advantage in mitigating latency escalation. The distribution also shows that a large share of SFC-GS samples have a small end-to-end delay deviation and that SFC-GS attains the lowest end-to-end delay among the compared algorithms. These results demonstrate that SFC-GS exhibits significant stability in latency control for SFC scheduling. Meanwhile, SFC-GS reduces computational redundancy through local resource checking, iteratively refines the matching process over multiple rounds to approximate the global optimum, and enhances allocation efficiency via batched parallel processing. This approach effectively avoids premature acceptance of locally optimal requests that may lead to global resource imbalance, thereby significantly improving the overall network latency performance.

(3) Comparison of resource utilization rate

Figure 7 illustrates the resource utilization of the different scheduling algorithms under varying numbers of service function chains. As the number of SFCs increases, the resource utilization of all algorithms rises, indicating that resource scheduling becomes better optimized as the task load grows. The SFC-GS algorithm performs best across all SFC counts and outperforms the comparison algorithms by more than 8.57%. This indicates that its collaborative matching game strategy, which takes the states of both sides into account, can effectively improve resource utilization. The reinforcement learning baselines are limited by their exploration efficiency and training quality when resources must be allocated within a short period. In contrast, SFC-GS improves matching efficiency and reduces computational resource consumption by employing batch matching to minimize the waiting time of individual matches, and by incorporating local resource checking to avoid invalid matching attempts.

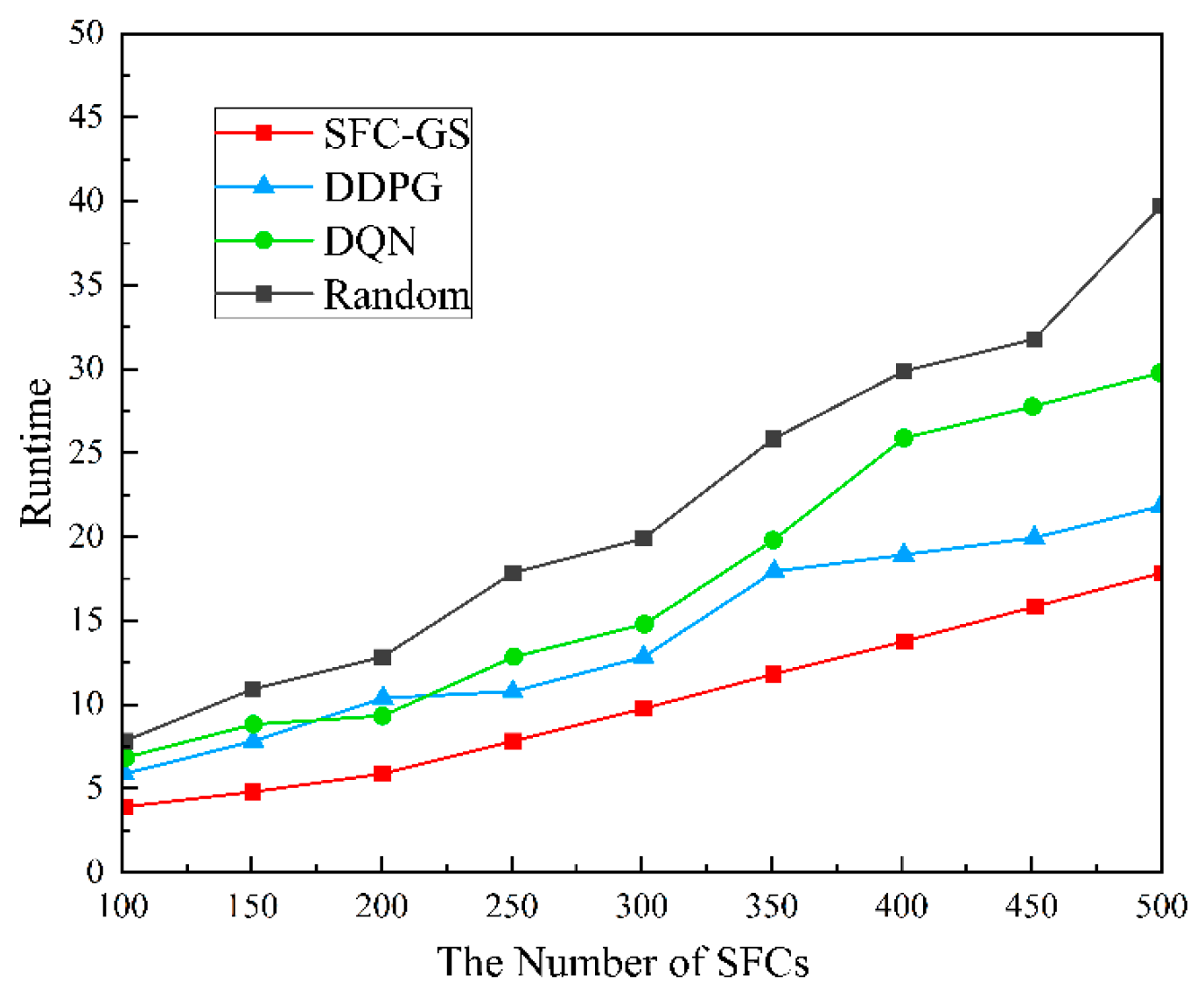

(4) Comparison of Runtime

Figure 8 illustrates the differences in runtime performance of various scheduling algorithms under different numbers of service function chains. The runtime increases with the number of SFCs, indicating that the scheduling computation complexity is positively correlated with the number of SFCs. When the number of SFCs is 500, SFC-GS reduces the computation time by about 25% and 40% compared to DDPG and DQN, respectively. It indicates that reinforcement learning methods still have high computational resource overheads, while SFC-GS reduces unnecessary computations and improves execution efficiency using batch matching and local resource checking. As the number of SFCs increases, the runtime of the Random algorithm rises sharply. When SFC = 500, the runtime of SFC-GS is only 1/3 of that of Random, indicating that unoptimized scheduling incurs extremely high computational overhead and is incapable of effectively adapting to large-scale SFC scheduling scenarios. SFC-GS consistently maintains the lowest runtime throughout the entire process, indicating that its optimized matching game strategy is highly effective in controlling computational complexity. This advantage becomes even more pronounced as the number of SFCs increases, demonstrating its superior scalability and efficiency in large-scale scenarios.

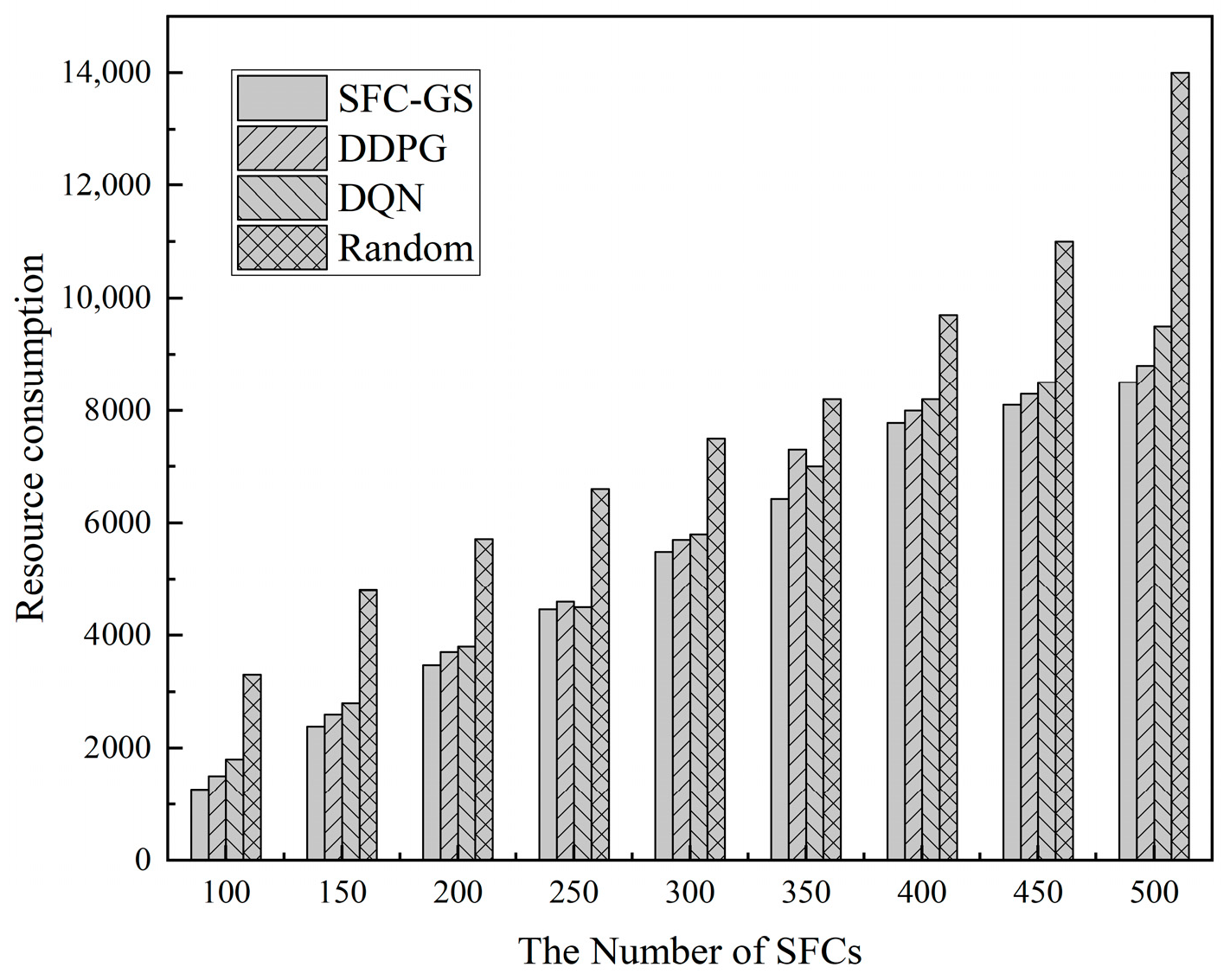

(5) Comparison of Resource Consumption

The resource consumption under varying service request arrival intensities is illustrated in Figure 9. As the number of SFCs increases, the overall resource consumption also rises, which is consistent with resource demand growing with the scale of SFC deployments. The Random algorithm shows the highest resource consumption, indicating that scheduling without an optimization strategy results in significant resource waste. In SFC-GS, the matching result of each batch informs the matching of the subsequent batch, thereby enhancing the overall resource utilization efficiency. The reinforcement learning algorithms optimize resource scheduling, but their resource consumption is still higher than that of SFC-GS because redundant computations remain during training and inference. Through batch matching and local resource checking, SFC-GS achieves lower resource consumption than the DQN, DDPG, and Random algorithms. In large-scale SFC scheduling scenarios, SFC-GS reduces resource consumption by approximately 10.5–15%, demonstrating higher resource utilization and scheduling efficiency and making it a strong choice among the compared scheduling algorithms.

In summary, the SFC-GS algorithm demonstrates significant comprehensive advantages in large-scale SFC scheduling scenarios. As the number of SFCs increases, SFC-GS exhibits the smallest decline in acceptance rate and the slowest, steadiest growth in delay, and it consistently maintains the highest level of resource utilization. At the same time, its running time is 25–40% lower than that of DDPG and DQN, and its resource consumption is 10.5–15% lower than that of the comparison algorithms. In particular, when the number of SFCs reaches 500, its running time is only 1/3 of that of the Random algorithm. Through the co-optimization of batch matching and local resource checking, the algorithm in this paper outperforms the DQN, DDPG, and Random algorithms on five core metrics: average request acceptance rate, delay control, resource utilization, running time, and resource consumption. The matching game strategy effectively balances resource allocation efficiency against computational complexity, providing an efficient and stable scheduling solution for NFV environments under high load.

6. Conclusions

This paper proposes SFC-GS, a multi-objective SFC scheduling algorithm based on matching games, which innovatively integrates three core designs: a bilateral preference matching model for VNFs and service nodes, a local resource check mechanism to reduce invalid attempts, and a hierarchical batch scheduling strategy for iterative optimization. Experimental results show that the proposed method improves request acceptance rate and resource utilization by approximately 8% and reduces latency and resource consumption by approximately 10% compared with DQN, DDPG, and Random algorithms.

Existing SFC scheduling methods still exhibit certain limitations, particularly in multi-objective collaborative optimization and algorithm efficiency. Future research will focus on designing multi-dimensional optimization frameworks for scheduling strategies, aiming to maximize system resource utilization, minimize task response latency, and achieve load-balancing metrics superior to current benchmark algorithms, while exploring novel scheduling paradigms with significantly reduced computational complexity.