Self-Sovereign Identities and Content Provenance: VeriTrust—A Blockchain-Based Framework for Fake News Detection

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Problem Statement

1.3. Research Objectives

1.4. Contributions

- VeriTrust Framework: A pioneering solution that combines SSI and blockchain to detect fake news, filling a major gap in current academic discourse.

- Innovative Cryptographic Protocols: Introduction of cryptographic protocols, namely digital signatures for secure identity verification and content validation, and zero-knowledge proofs for privacy-preserving disclosure.

- Comprehensive Evaluation Framework: A holistic system to assess technical metrics, security robustness, and user experience, grounded in practical deployment scenarios including regulatory and integration considerations.

1.5. Paper Organization

- Section 2 explores the related literature on fake news detection, SSI frameworks, and blockchain-based content tracking, identifying key gaps.

- Section 3 details the VeriTrust architecture and design specifications.

- Section 4 covers implementation, including technology choices and optimization strategies.

- Section 5 presents the discussion and comparative analysis, including security, regulatory, and ethical considerations.

- Section 6 concludes by summarizing contributions and proposing future research directions.

2. Literature Review

2.1. Knowledge-Based Fake News Detection

2.2. Style-Based Fake News Detection

2.3. Propagation-Based Fake News Detection

2.4. Source-Based Fake News Detection

2.5. Blockchain Media and Content Verification

2.5.1. Content Authentication and Integrity

2.5.2. Copyright Protection and Digital Rights Management

2.5.3. Systematic Reviews and Meta-Analysis

2.5.4. Self-Sovereign Identity (SSI): DIDs and Verifiable Credentials

- European Blockchain Services Infrastructure (EBSI): EBSI aims to deliver blockchain-enabled cross-border public services across EU member states, using SSI for educational credentials and healthcare certification. Pilots have issued tamper-proof diplomas and COVID-19 vaccination certificates as verifiable credentials, which holders can share and verifiers can check without contacting the issuer again [31,32,33].

- Hyperledger Indy and Sovrin Network: The Sovrin Foundation built a decentralized identity ecosystem on Hyperledger Indy. In the healthcare sector, Epic Systems’ MyChart application uses Indy-based credentials for privacy-preserving medical data sharing [34,35]. British Columbia’s government also launched the Person Credential initiative, issuing over 100,000 digital identities since 2020 [36].

- Educational Sector Implementations: MIT has issued over 10,000 blockchain-based diplomas, enabling global verification [37].

2.5.5. Digital Content Provenance

3. Proposed Framework: VeriTrust: A Blockchain-SSI Framework for Content Provenance and Fake News Detection

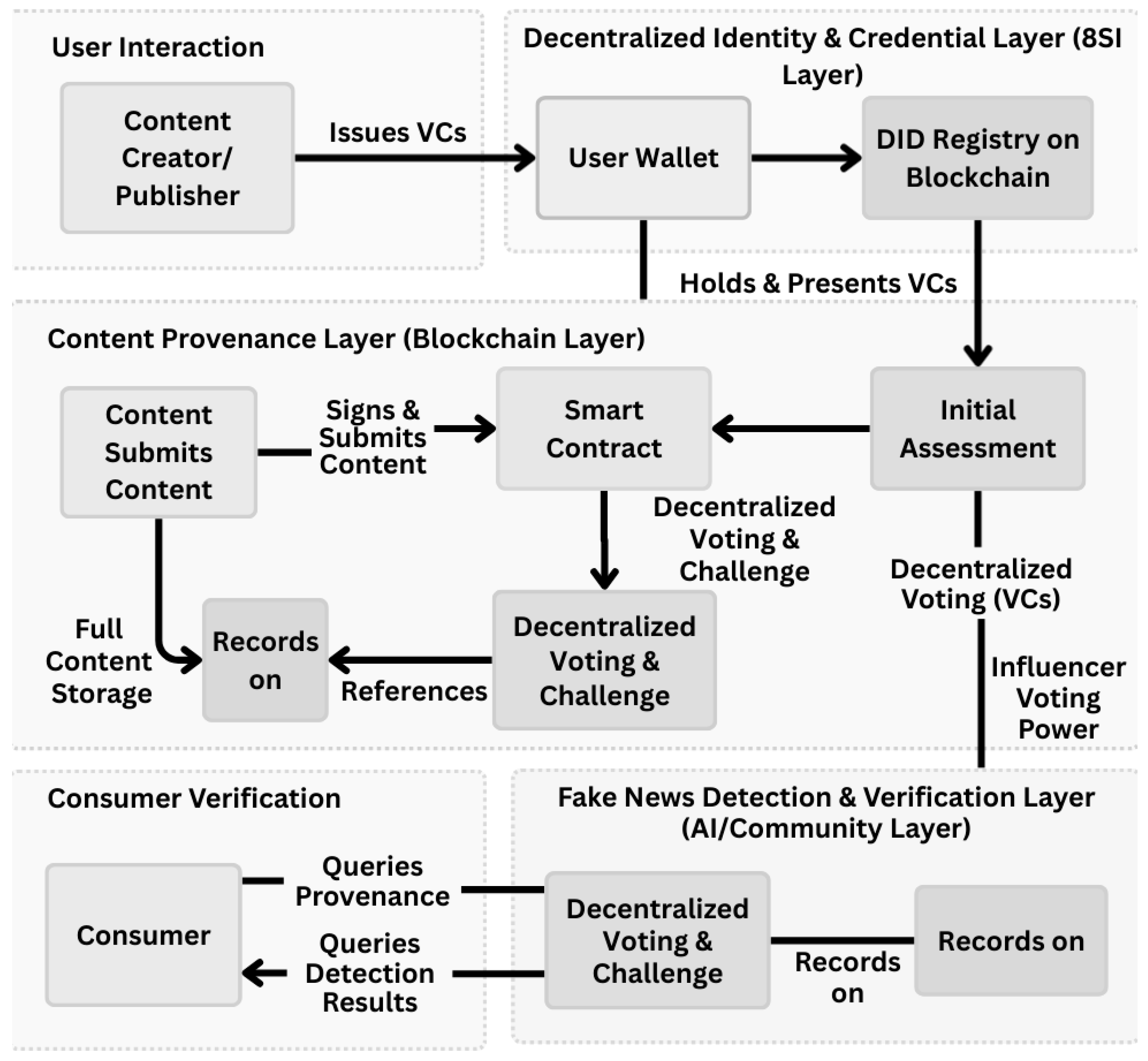

3.1. Architectural Overview

- Decentralized Identity and Credential Layer (SSI layer): This foundational layer manages digital identities through Decentralized Identifiers (DIDs) and Verifiable Credentials (VCs), allowing users to control their own identity data without reliance on centralized providers.

- Content Provenance Layer (Blockchain Layer): This layer acts as the system’s core and maintains an immutable record of content from its origin, promoting transparency and preventing tampering throughout its lifecycle [47].

- Fake News Detection and Verification Layer (AI/Community Layer): Using sophisticated AI algorithms for initial content analysis, this layer also incorporates a decentralized, community-led mechanism to support verification and resolve disputes [58].

3.2. Self-Sovereign Identity Integration

- User Registration and DID Creation: All participants in the content ecosystem, including content creators (e.g., journalists, citizen reporters), publishers, and fact-checkers, register on the VeriTrust platform. During registration, they create their unique Decentralized Identifiers (DIDs). This process ensures that users retain full control over their digital identities, eliminating reliance on centralized services for identity management [59].

- Verifiable Credentials for Roles and Expertise: To enhance credibility, trusted entities such as journalism schools, professional associations, or established media organizations can issue Verifiable Credentials (VCs) to verify a user’s specific role (e.g., “Certified Journalist,” “Expert Fact-Checker”) or demonstrated expertise [36]. These VCs are held securely by the user in their digital wallet and can be selectively disclosed as Verifiable Presentations (VPs) only when necessary for specific verification processes [37].

- Digital Signatures for Content Authentication: As a key component of the SSI integration, every content submission and any later edit must be digitally signed with the private key associated with the creator’s or editor’s Decentralized Identifier (DID) [47]. This cryptographic linkage provides verifiable proof of origin and authorship, makes unauthorized changes detectable, and prevents the signer from later disowning the content.

- Access Control: Leveraging SSI principles, the framework enables granular, user-controlled access to their identity data. Users can selectively disclose specific attributes or credentials for verification purposes, maintaining a high degree of privacy and autonomy over their personal information.

3.3. Content Provenance Mechanism

- Initial Content Registration: When a piece of content is first created or uploaded to the platform, its cryptographic hash, along with the creator’s DID and a timestamp, is immutably recorded on the blockchain [60]. This marks the genesis point of the content, establishing its verifiable origin.

- Lifecycle Tracking: Any subsequent significant events in the content’s lifecycle, such as modifications, re-publications, or flags from fact-checking processes, are also hashed, timestamped, and linked to the relevant DIDs (e.g., the editor’s DID, the fact-checker’s DID) on the blockchain. This creates a transparent and verifiable chain of custody, allowing anyone to trace the content’s complete history.

- Metadata Documentation: Comprehensive metadata associated with the content—including details about the creation device, geographical location, and links to original source materials—is securely linked to its blockchain record. This rich contextual information provides crucial data for verification purposes.

- On-chain/Off-chain Storage Model: To ensure scalability while maintaining immutability, only cryptographic hashes and essential provenance metadata are stored on-chain. The full, larger content files (e.g., high-resolution images, videos, full articles) are stored on decentralized off-chain storage solutions like IPFS or Web3.Storage. This hybrid approach optimizes blockchain performance and storage efficiency.
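The lifecycle tracking and on-chain/off-chain split described above can be illustrated with a small hash-linked event log. This is a minimal sketch under stated assumptions, not the VeriTrust implementation: the field names, the choice to link each event to the digest of its predecessor, and the idea of anchoring only the latest digest (`head()`) are illustrative. The full content stays off-chain and is referenced by a CID string whose format is assumed.

```python
import hashlib
import json
import time
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass
class ProvenanceEvent:
    action: str                     # e.g. "create", "edit", "republish", "flag" (hypothetical labels)
    content_hash: str               # SHA-256 hex digest of this content version
    actor_did: str                  # DID of the creator, editor, or fact-checker
    timestamp: int                  # Unix time in seconds
    prev_event_hash: Optional[str]  # digest of the previous event; None for the genesis event
    offchain_cid: Optional[str] = None  # pointer to the full file in IPFS (assumed format)

    def digest(self) -> str:
        # Deterministic JSON (sorted keys, no spaces) so every verifier computes the same digest.
        payload = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ProvenanceLog:
    """Append-only, hash-linked history of one piece of content (illustrative only)."""

    def __init__(self) -> None:
        self.events: List[ProvenanceEvent] = []

    def append(self, action: str, content_hash: str, actor_did: str,
               timestamp: Optional[int] = None,
               offchain_cid: Optional[str] = None) -> ProvenanceEvent:
        prev = self.events[-1].digest() if self.events else None
        ts = int(time.time()) if timestamp is None else timestamp
        event = ProvenanceEvent(action, content_hash, actor_did, ts, prev, offchain_cid)
        self.events.append(event)
        return event

    def head(self) -> Optional[str]:
        # Digest of the latest event: the small value one would anchor on-chain.
        return self.events[-1].digest() if self.events else None

    def verify_links(self) -> bool:
        # Every event must point at the digest of the event before it.
        prev = None
        for event in self.events:
            if event.prev_event_hash != prev:
                return False
            prev = event.digest()
        return True
```

Editing any earlier event breaks the link from its successor, while a change to the latest event alters `head()`; that is why, in this sketch, the head digest (or a Merkle root over many heads) is the value worth anchoring.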

3.4. Fake News Detection Components

- AI-Powered Content Analysis: A multimodal deep learning model is employed to analyze content across various formats, including text, images, and video, for indicators of misinformation. This AI model leverages federated learning, allowing it to be trained across distributed nodes without centralizing sensitive user data, thereby preserving privacy while maintaining high detection accuracy [61]. This provides an initial, automated assessment of content authenticity.

- Decentralized Voting and Challenge Mechanism: For content flagged as suspicious by the AI or challenged by users, a community-driven decentralized voting system is activated. Verified fact-checkers, whose expertise is attested by VCs, and potentially a broader community of users, can review the content and vote on its authenticity. Stake-based verification models can be implemented to incentivize honest participation and penalize malicious actors [62]. This hybrid intelligence model combines the efficiency of AI with human judgment, aiming for a resilient, self-improving system that leverages the strengths of both while mitigating their individual weaknesses. A key indicator of its robustness would be the ability of the challenge mechanism to correct a high proportion of AI misclassifications even when some participants are adversarial.

- Reputation System: A blockchain-based reputation mechanism tracks the historical accuracy and reliability of all participants: content creators, publishers, and fact-checkers [63]. Participants with a higher reputation, built on consistent contributions of verified content or accurate fact-checking, may be granted more voting power or influence within the decentralized verification process.

- Smart Contracts for Rules and Incentives: Smart contracts are crucial for automating the execution of verification rules, managing the distribution of token rewards to incentivize accurate fact-checking, and implementing penalties for malicious or dishonest behaviour within the system [59].

- Provenance-Based Authenticity Checks: The detection system directly integrates with the immutable provenance data recorded on the blockchain. By cross-referencing the content with its creator’s DID, modification history, and associated metadata, the system can perform robust authenticity checks, providing a foundational layer of trust that goes beyond surface-level content analysis.
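The reputation-weighted voting and incentive rules described above can be sketched as follows. The weighting rule (stake × reputation), the one-vote-per-DID rule, the quorum, and the reputation update step are all hypothetical choices made for illustration; the framework does not specify them, and in VeriTrust this logic would live in smart contracts rather than in Python.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Vote:
    voter_did: str        # DID of a fact-checker (VC-attested) or community member
    says_authentic: bool
    stake: float          # tokens locked behind the vote
    reputation: float     # historical accuracy score in [0, 1]


def tally(votes: List[Vote], quorum_weight: float) -> str:
    """Return "authentic", "disputed", or "undecided" using stake x reputation weights."""
    weight = {True: 0.0, False: 0.0}
    seen = set()
    for vote in votes:
        if vote.voter_did in seen:   # one vote per DID; later duplicates are ignored
            continue
        seen.add(vote.voter_did)
        weight[vote.says_authentic] += vote.stake * vote.reputation
    if weight[True] + weight[False] < quorum_weight or weight[True] == weight[False]:
        return "undecided"
    return "authentic" if weight[True] > weight[False] else "disputed"


def update_reputation(votes: List[Vote], outcome: str, rate: float = 0.1) -> Dict[str, float]:
    """Move majority voters toward 1 and minority voters toward 0 (hypothetical rule)."""
    if outcome == "undecided":
        return {v.voter_did: v.reputation for v in votes}
    winning_side = outcome == "authentic"
    updated = {}
    for v in votes:
        if v.says_authentic == winning_side:
            updated[v.voter_did] = v.reputation + rate * (1.0 - v.reputation)
        else:
            updated[v.voter_did] = v.reputation - rate * v.reputation
    return updated
```

Multiplying stake by reputation means a newcomer cannot buy influence with tokens alone, and a trusted fact-checker still has to put something at risk; other weightings are equally plausible.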

4. Implementation and Validation

4.1. System Components and Tool Stack

- Blockchain Ledger: Hyperledger Besu is selected as the blockchain platform. As an Ethereum-compatible client that supports permissioned networks, it runs smart contracts written in Solidity and offers high throughput, making it suitable for enterprise-level applications such as credential management and high-frequency provenance logging [64]. Its adoption by the European Blockchain Services Infrastructure (EBSI) further supports its suitability [31].

- SSI Framework: Hyperledger Aries and Indy provide the core components for Self-Sovereign Identity. Hyperledger Indy serves as a purpose-built distributed ledger for managing DIDs, while Hyperledger Aries provides a toolkit for building digital wallets and agent-to-agent communication, facilitating the issuance and presentation of VCs. DID methods like did:web or did:key can be used for simple, ledger-independent identity creation.

- Smart Contracts: Solidity is the language of choice for implementing the on-chain logic. Smart contracts will manage the DID registry, the anchoring of Merkle roots for content provenance, and the rules for the decentralized voting and reputation system.

- Off-Chain Storage: IPFS (InterPlanetary File System) is used for storing large content files and detailed provenance records off-chain. This approach addresses blockchain scalability limitations by storing only an immutable content identifier (the IPFS CID, which embeds a cryptographic hash of the data) on the ledger, which points to the full data.

- Verifiable Credentials: Credentials will adhere to the W3C VC Data Model, serialized in JSON-LD format. This ensures interoperability and allows credentials to be machine-readable and cryptographically verifiable across different platforms.

- Frontend Verifier: A browser plugin or API-based service will act as the primary interface for consumer verification. This client-side tool will locally hash content, query the blockchain for the corresponding Merkle root, retrieve the Merkle proof from IPFS, and validate the VC signature against the issuer’s public DID document.
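The tool stack mentions did:key as a ledger-independent DID method. As a hedged illustration (not part of the described stack), the sketch below derives a did:key identifier from a 32-byte Ed25519 public key following the did:key method: the key is prefixed with the ed25519-pub multicodec varint (0xed 0x01), base58btc-encoded, and marked with the multibase prefix `z`. Key generation is out of scope because the Python standard library has no Ed25519 support, so the public key bytes are assumed to come from a wallet.

```python
BASE58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"
ED25519_PUB_MULTICODEC = b"\xed\x01"  # varint encoding of multicodec 0xed (ed25519-pub)


def base58btc_encode(data: bytes) -> str:
    n = int.from_bytes(data, "big")
    encoded = ""
    while n > 0:
        n, remainder = divmod(n, 58)
        encoded = BASE58_ALPHABET[remainder] + encoded
    leading_zeros = len(data) - len(data.lstrip(b"\x00"))
    return "1" * leading_zeros + encoded   # each leading zero byte becomes "1"


def base58btc_decode(text: str) -> bytes:
    n = 0
    for ch in text:
        n = n * 58 + BASE58_ALPHABET.index(ch)   # raises ValueError on invalid characters
    leading_ones = len(text) - len(text.lstrip("1"))
    body = n.to_bytes((n.bit_length() + 7) // 8, "big") if n else b""
    return b"\x00" * leading_ones + body


def did_key_from_ed25519(public_key: bytes) -> str:
    if len(public_key) != 32:
        raise ValueError("an Ed25519 public key is 32 bytes")
    # "z" is the multibase prefix for base58btc.
    return "did:key:z" + base58btc_encode(ED25519_PUB_MULTICODEC + public_key)


def ed25519_key_from_did_key(did: str) -> bytes:
    prefix = "did:key:z"
    if not did.startswith(prefix):
        raise ValueError("not a base58btc did:key")
    raw = base58btc_decode(did[len(prefix):])
    if len(raw) != 34 or raw[:2] != ED25519_PUB_MULTICODEC:
        raise ValueError("not an Ed25519 did:key")
    return raw[2:]
```

Because the multicodec prefix is fixed, Ed25519 did:key identifiers all begin with `did:key:z6Mk`, and resolving one needs no ledger query: the public key is recovered directly from the identifier.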

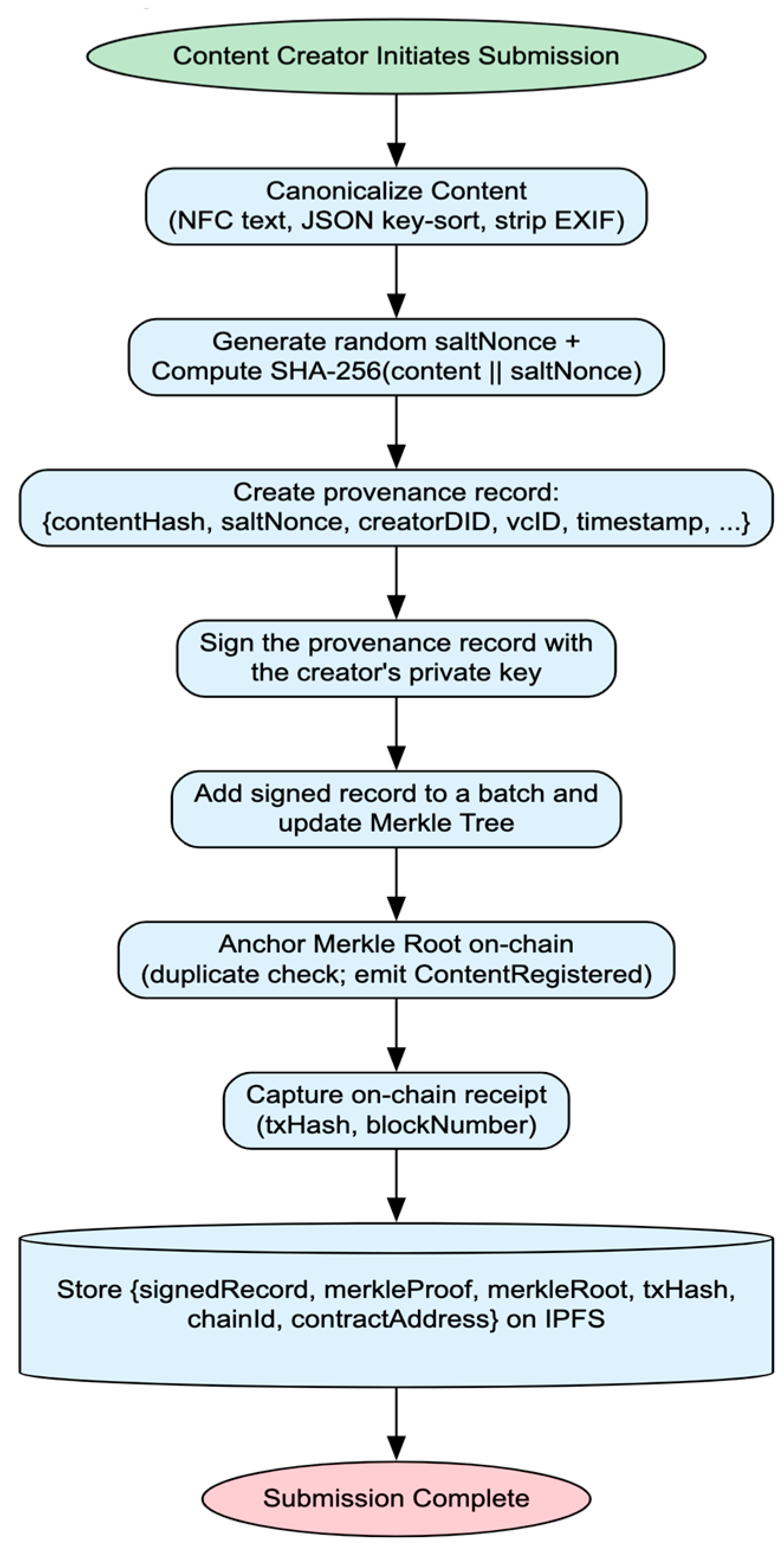

4.2. Implementation Workflow and Data Flow

- Identity Registration: A creator (e.g., a journalist) and a publisher (e.g., a news agency) each generate their own DIDs and associated key pairs using a compatible digital wallet (built with Hyperledger Aries). Their public keys and service endpoints are published in their respective DID Documents, which are registered on the DID ledger (Hyperledger Indy [66]).

- VC Issuance: The publisher, acting as a trusted Issuer, creates a W3C-compliant Verifiable Credential asserting the creator’s role (e.g., “Verified Journalist”). This VC is cryptographically signed with the publisher’s private key and sent to the creator, who stores it securely in their digital wallet [66].

- Content Creation and Hashing: The creator produces a piece of content (e.g., an article). The client application locally computes a SHA-256 hash of the content, creating a unique and tamper-evident fingerprint.

- Provenance Logging: The creator’s application bundles the content hash with relevant metadata (timestamp, author DID, etc.) into a provenance record. This record is digitally signed using the creator’s private key. The record is then added to a batch of other records, which are used to construct a Merkle Tree. The single Merkle root of this tree is submitted to the VeriTrust smart contract on the Hyperledger Besu blockchain. The full provenance record and the content itself are stored on IPFS.

- Verification: A consumer’s client (e.g., a browser plugin) that encounters the content performs the following steps:
  - Locally computes the SHA-256 hash of the canonicalized content it is viewing.
  - Queries the blockchain for the Merkle root anchoring the claimed provenance record.
  - Retrieves the Merkle proof and the signed provenance record from IPFS.
  - Uses the Merkle proof to confirm that the signed provenance record is included under the on-chain Merkle root, and checks that the content hash in that record matches the locally computed hash.
  - Resolves the creator’s DID to fetch their public key and verifies the digital signature on the provenance record.
  - Optionally, requests the creator’s VC and verifies the publisher’s signature on it, confirming the creator’s credentials.

- Content Canonicalization for Dynamic Updates: To mitigate the risk of “hash drift” caused by minor content modifications (e.g., small edits or formatting changes), VeriTrust applies a canonicalization step before hashing. Immutable core elements such as the author’s DID and original timestamp are preserved, while mutable components (e.g., metadata updates or headline corrections) are versioned and linked to new hashes. This approach keeps the evolution of the content traceable without compromising the integrity of the original provenance commitment [67,68].
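The VC Issuance step of this workflow can be made concrete with a skeleton credential shaped after the W3C VC Data Model v1.1 (`@context`, `type`, `issuer`, `issuanceDate`, `credentialSubject`). This is a minimal sketch: the `JournalistRoleCredential` type and the `role` property are hypothetical and would need their own JSON-LD context, and the sorted-JSON signing input stands in for a real Data Integrity proof, which canonicalizes the JSON-LD graph instead. The publisher's actual signing call is omitted.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict

VC_CONTEXT_V1 = "https://www.w3.org/2018/credentials/v1"


def build_role_credential(issuer_did: str, subject_did: str, role: str) -> Dict[str, Any]:
    """Unsigned credential asserting a role (e.g. "Verified Journalist") for subject_did."""
    return {
        "@context": [VC_CONTEXT_V1],
        "type": ["VerifiableCredential", "JournalistRoleCredential"],  # second type is hypothetical
        "issuer": issuer_did,
        "issuanceDate": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "credentialSubject": {"id": subject_did, "role": role},  # "role" is a hypothetical term
    }


def signing_input(credential: Dict[str, Any]) -> bytes:
    """Bytes the issuer would sign: everything except an existing "proof", in sorted-key JSON."""
    unsigned = {k: v for k, v in credential.items() if k != "proof"}
    return json.dumps(unsigned, sort_keys=True, separators=(",", ":")).encode("utf-8")
```

Binding `credentialSubject.id` to the creator's DID is what later lets a verifier check that a presented credential actually describes the person who signed the provenance record.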

4.3. Algorithms and Pseudocode

4.3.1. Content Canonicalization for Stable Hashing

- Textual Data Normalization: To unify character encodings (e.g., so that different Unicode representations of the same accented character are treated as equivalent), all textual data is normalized to Unicode Normalization Form C (NFC), and redundant whitespace, line breaks, and non-semantic formatting characters are removed.

- Structured Data Serialization: To ensure that the same logical record always produces the same serialized representation, regardless of platform or editor, JSON-based metadata is serialized with lexicographically sorted keys, a uniform value format, and no insignificant whitespace.

- Multimedia Content Preprocessing: To prevent irrelevant changes (such as a device automatically rewriting metadata) from altering the fingerprint of the substantive content, hashes of binary artifacts (e.g., images, video, audio) are computed over their raw content bytes after non-essential metadata (e.g., EXIF tags in JPEGs, container-level timestamps in MP4 files) has been removed.
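The text and metadata rules above can be sketched in Python. The set of characters stripped as "non-semantic" is an assumption (only the zero-width space and byte-order mark here), and sorted-key JSON is a simplification of a full canonical JSON scheme such as RFC 8785, which also fixes number and string formatting. Multimedia metadata stripping is not shown because it needs format-specific parsers.

```python
import hashlib
import json
import unicodedata

# Characters treated as non-semantic in this sketch (an assumption; a real policy would be
# broader and would need care with joiners used in emoji and some scripts).
_INVISIBLE = {"\u200b", "\ufeff"}  # zero-width space, byte-order mark


def canonicalize_text(text: str) -> str:
    text = unicodedata.normalize("NFC", text)                # one code-point form per character
    text = "".join(ch for ch in text if ch not in _INVISIBLE)
    return " ".join(text.split())                            # collapse whitespace and line breaks


def canonicalize_metadata(metadata: dict) -> bytes:
    # Sorted keys and no insignificant whitespace.
    return json.dumps(metadata, sort_keys=True, separators=(",", ":"),
                      ensure_ascii=False).encode("utf-8")


def text_fingerprint(text: str) -> str:
    return hashlib.sha256(canonicalize_text(text).encode("utf-8")).hexdigest()
```

A decomposed "e" plus combining accent and a precomposed "é" now yield the same fingerprint, while a genuinely different word does not.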

4.3.2. Algorithm 1: Content Provenance Submission

| Algorithm 1. Content Submission. |

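Following the Provenance Logging step in Section 4.2 (signed records are batched into a Merkle tree whose single root is anchored on-chain), the batching at the core of content submission can be sketched as below. This is not the authors' Algorithm 1. The leaf/node prefixes (0x00/0x01, as in the Merkle hash trees of RFC 6962) and the rule that an unpaired node is promoted unchanged are design assumptions; signing, IPFS upload, and the contract call are omitted.

```python
import hashlib
from typing import List, Tuple

ProofStep = Tuple[bytes, bool]   # (sibling hash, True if the sibling is on the left)


def leaf_hash(record: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + record).digest()        # 0x00 marks a leaf


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()  # 0x01 marks an internal node


def build_batch(records: List[bytes]) -> Tuple[bytes, List[List[ProofStep]]]:
    """Return the Merkle root to anchor on-chain and one inclusion proof per record."""
    if not records:
        raise ValueError("a batch needs at least one record")
    level = [leaf_hash(r) for r in records]
    proofs: List[List[ProofStep]] = [[] for _ in records]
    positions = list(range(len(records)))   # where each record's ancestor sits in `level`
    while len(level) > 1:
        for rec, pos in enumerate(positions):
            sibling = pos ^ 1                     # partner index within the pair
            if sibling < len(level):
                proofs[rec].append((level[sibling], sibling < pos))
            positions[rec] = pos // 2
        # Pair neighbours; an unpaired last node is promoted unchanged.
        level = [node_hash(level[i], level[i + 1]) if i + 1 < len(level) else level[i]
                 for i in range(0, len(level), 2)]
    return level[0], proofs


def verify_inclusion(record: bytes, proof: List[ProofStep], root: bytes) -> bool:
    h = leaf_hash(record)
    for sibling, sibling_is_left in proof:
        h = node_hash(sibling, h) if sibling_is_left else node_hash(h, sibling)
    return h == root
```

Each record's proof holds at most ⌈log₂ N⌉ sibling hashes, so one on-chain root serves the whole batch while client-side verification stays cheap.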

4.3.3. Algorithm 2: Content Verification

| Algorithm 2. Content Verification. |
|---|
| Inputs: content, signedRecord, vc, merkleProof, onChainMerkleRoot |
| Output: True if integrity, signatures, and credentials validate; otherwise False |
| 1. Compute content hash: |
| computedHash ← SHA256(Canonicalize(content)) // canonicalization as in Section 4.3.1 |
| 2. Validate inclusion and integrity: |
| isMerkleProofValid ← verifyMerkleProof(signedRecord, merkleProof, onChainMerkleRoot) |
| isHashMatch ← (computedHash = signedRecord.contentHash) |
| if not (isMerkleProofValid ∧ isHashMatch) then |
| return False // integrity validation failed |
| 3. Verify digital signatures and credentials: |
| creatorDID ← signedRecord.creatorDID |
| creatorPublicKey ← resolveDID(creatorDID) |
| isRecordSignatureValid ← verifySignature(signedRecord, creatorPublicKey) |
| issuerDID ← vc.issuer |
| issuerPublicKey ← resolveDID(issuerDID) |
| isVCSignatureValid ← verifySignature(vc, issuerPublicKey) |
| isSubjectBound ← (vc.credentialSubject.id = creatorDID) // the VC must describe the record’s creator |
| isNotRevoked ← ¬ isRevoked(vc) // revocation registry check (Sections 5.2 and 5.3) |
| 4. Return final result: |
| return (isRecordSignatureValid ∧ isVCSignatureValid ∧ isSubjectBound ∧ isNotRevoked) |
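The steps of Algorithm 2 can be sketched in Python with the ledger, IPFS, and DID-resolution back ends injected as callables, which keeps the control flow testable with stubs. All hook names are hypothetical. Beyond the listed steps, the sketch checks that the VC's `credentialSubject.id` equals the record's creator DID (otherwise any validly signed VC would pass) and consults a revocation check, as required by the revocation discussion in Sections 5.2 and 5.3.

```python
import hashlib
from dataclasses import dataclass
from typing import Any, Callable, Dict

Record = Dict[str, Any]


@dataclass
class VerifierHooks:
    """Hypothetical back-end hooks (IPFS/Besu/Indy clients in a real deployment)."""
    canonicalize: Callable[[bytes], bytes]
    verify_merkle_proof: Callable[[Record, Any, bytes], bool]
    resolve_did: Callable[[str], Any]             # DID -> public key from its DID Document
    verify_signature: Callable[[Record, Any], bool]
    is_revoked: Callable[[Record], bool]          # revocation-registry lookup


def verify_content(content: bytes, signed_record: Record, vc: Record,
                   merkle_proof: Any, on_chain_root: bytes, hooks: VerifierHooks) -> bool:
    # 1. Hash the canonicalized content.
    computed_hash = hashlib.sha256(hooks.canonicalize(content)).hexdigest()
    # 2. The record must sit under the anchored root and commit to this exact content.
    if not hooks.verify_merkle_proof(signed_record, merkle_proof, on_chain_root):
        return False
    if computed_hash != signed_record["contentHash"]:
        return False
    # 3a. The creator must have signed the record.
    creator_did = signed_record["creatorDID"]
    if not hooks.verify_signature(signed_record, hooks.resolve_did(creator_did)):
        return False
    # 3b. The issuer must have signed the VC, the VC must be about this creator,
    #     and it must not be revoked.
    if not hooks.verify_signature(vc, hooks.resolve_did(vc["issuer"])):
        return False
    if vc.get("credentialSubject", {}).get("id") != creator_did:
        return False
    return not hooks.is_revoked(vc)
```

Returning early on the cheap integrity checks avoids DID resolution, the slowest step, whenever the content has already been altered.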

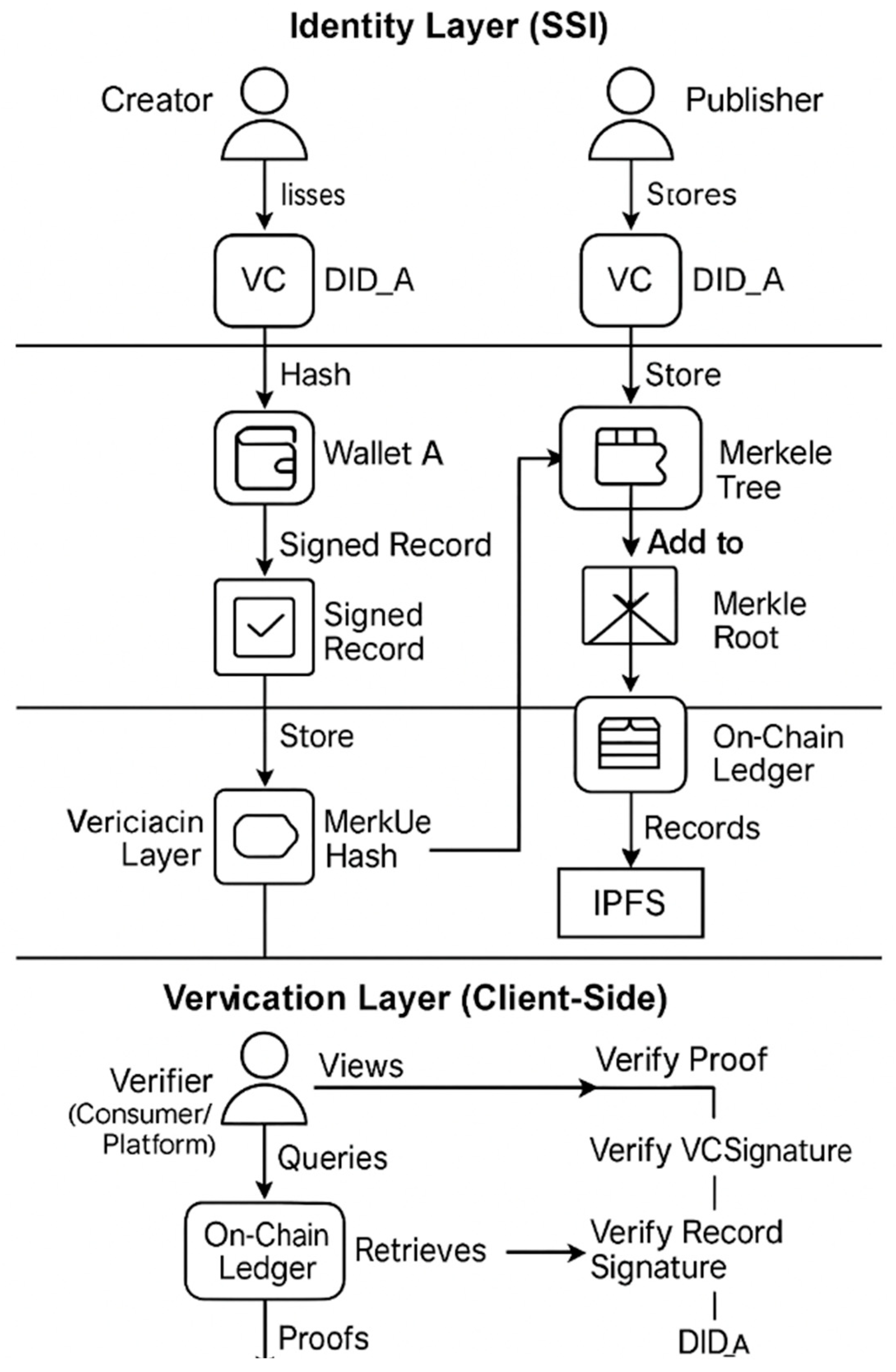

4.4. Framework Diagram and Architecture

- Identity Layer (layer 1): Manages Decentralized Identifiers (DIDs) and Verifiable Credentials (VCs)

- Provenance Layer (layer 2): Establishes content authenticity using cryptographic hashing and Merkle root embedding

- Verification Layer (layer 3): Enables validation against both on-chain records and off-chain data

4.4.1. Layer 1: Identity Layer (Self-Sovereign Identity—SSI)

4.4.2. Layer 2: Provenance Layer (On-Chain + Off-Chain)

4.4.3. Layer 3: Verification Layer (Client-Side)

- Content integrity: Is the local hash part of the Merkle tree rooted on-chain?

- Record authenticity: Is the signed record valid and signed by the creator’s DID?

- Issuer authority: Is the VC valid, and was it issued by a legitimate DID?

4.5. Limitations and Trade-Offs

- Issuers’ Trustworthiness: The framework’s success depends on the integrity of credential issuers. In the absence of formal governance or vetting protocols, malicious actors could compromise trust by generating fraudulent credentials.

- ZKP Overhead: Zero-Knowledge Proofs enhance privacy and support selective disclosure, but they introduce computational complexity that may hinder performance on devices with limited processing power.

- Off-Chain Storage Risks: Since IPFS does not ensure data persistence unless pinning incentives are actively maintained, reliance on it for content storage poses availability concerns.

- Cold-Start Adoption Barrier: For decentralized infrastructure to function, creators, issuers, and verifiers must simultaneously onboard, demanding technical literacy and platform compatibility across stakeholders.

- Ethereum Gas Costs: Though Merkle root batching alleviates some transaction fees, gas costs on Ethereum-based chains remain a potential bottleneck in high-frequency scenarios.

- Regulatory Compliance Challenges: The blockchain’s immutable nature clashes with data protection requirements such as GDPR, particularly with respect to the right to erasure and principles of data minimization.

4.6. Mathematical Validation

- Secure Hashing: Ensuring content integrity through tamper-evident cryptographic digests

- Merkle Trees: Enabling scalable and auditable data structures for efficient provenance anchoring

- ECDSA Signatures: Validating the authenticity of digital credentials via elliptic curve-based cryptography

- Zero-Knowledge Proofs (ZKPs): Providing selective disclosure capabilities while preserving user privacy

4.6.1. Hash-Based Content Integrity

- Preimage Resistance: Given a hash output $y$, it is computationally infeasible to find any input $x$ such that $H(x) = y$.

- Second Preimage Resistance: Given an input $x$, it is computationally infeasible to find another input $x' \neq x$ such that $H(x') = H(x)$.

- Collision Resistance: It is computationally infeasible to find any pair $x, x'$ such that $x \neq x'$ and $H(x) = H(x')$.

4.6.2. Merkle Tree Inclusion Proof
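A standard formulation of Merkle inclusion, written with the hash function $H$ of Section 4.6.1, is sketched below. The domain-separated leaf and node hashes and the left/right convention are assumptions of this formulation rather than details stated in the framework.

```latex
\begin{aligned}
&H_{\mathrm{leaf}}(x) = H(\mathtt{0x00} \,\|\, x), \qquad
 H_{\mathrm{node}}(a, b) = H(\mathtt{0x01} \,\|\, a \,\|\, b) \\
&\ell_i = H_{\mathrm{leaf}}(r_i), \quad i = 1, \dots, N
  && \text{(leaf for provenance record } r_i\text{)} \\
&\pi_i = \big((s_1, d_1), \dots, (s_k, d_k)\big), \quad k \le \lceil \log_2 N \rceil, \; d_j \in \{\mathrm{L}, \mathrm{R}\}
  && \text{(sibling hashes and their sides)} \\
&h_0 = \ell_i, \qquad
 h_j = \begin{cases} H_{\mathrm{node}}(s_j, h_{j-1}) & \text{if } d_j = \mathrm{L} \\ H_{\mathrm{node}}(h_{j-1}, s_j) & \text{if } d_j = \mathrm{R} \end{cases}
  && j = 1, \dots, k \\
&\mathsf{VerifyInclusion}(r_i, \pi_i, R) = 1 \iff h_k = R
\end{aligned}
```

If a verifier accepted a proof for a record that was never committed, then at some level two different inputs would hash to the same value, which is a collision in $H$; the distinct leaf and node prefixes prevent an internal node from being passed off as a leaf. Inclusion soundness therefore reduces to collision resistance. With SHA-256 each sibling costs 32 bytes, so $k = 10$ siblings occupy 320 bytes.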

4.6.3. ECDSA-Based Authenticity

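- $\mathsf{KeyGen}() \rightarrow (sk, pk)$: Generates a private/public key pair.

- $\mathsf{Sign}(sk, m) \rightarrow \sigma$: Generates signature $\sigma$ on message $m$ using private key $sk$.

- $\mathsf{Verify}(pk, m, \sigma) \rightarrow \{0, 1\}$: Verifies the signature using $pk$.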
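The KeyGen/Sign/Verify triple can be illustrated with a textbook ECDSA sketch in pure Python (3.8+ for modular inverses via `pow`). Two assumptions to flag: it uses secp256k1, the curve used by Ethereum, rather than the secp256r1 curve mentioned in Section 4.7.2, and it is not constant-time and draws random nonces, whereas production code should use a vetted library and deterministic nonces (RFC 6979). It only shows the algebra behind Verify.

```python
import hashlib
import secrets
from typing import Optional, Tuple

Point = Optional[Tuple[int, int]]  # None represents the point at infinity

# secp256k1 domain parameters (SEC 2): y^2 = x^3 + 7 over GF(P), base point G of prime order N.
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)


def point_add(p1: Point, p2: Point) -> Point:
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None                                        # Q + (-Q) = infinity
    if p1 == p2:
        lam = 3 * x1 * x1 * pow(2 * y1, -1, P) % P         # tangent slope (curve has a = 0)
    else:
        lam = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P    # chord slope
    x3 = (lam * lam - x1 - x2) % P
    return x3, (lam * (x1 - x3) - y1) % P


def scalar_mult(k: int, point: Point) -> Point:
    result: Point = None
    addend = point
    while k:                                               # double-and-add
        if k & 1:
            result = point_add(result, addend)
        addend = point_add(addend, addend)
        k >>= 1
    return result


def _message_int(message: bytes) -> int:
    return int.from_bytes(hashlib.sha256(message).digest(), "big")


def keygen() -> Tuple[int, Point]:
    sk = secrets.randbelow(N - 1) + 1
    return sk, scalar_mult(sk, G)


def sign(sk: int, message: bytes) -> Tuple[int, int]:
    z = _message_int(message)
    while True:
        k = secrets.randbelow(N - 1) + 1                   # per-signature nonce; never reuse
        r = scalar_mult(k, G)[0] % N
        s = pow(k, -1, N) * (z + r * sk) % N
        if r != 0 and s != 0:
            return r, s


def verify(pk: Point, message: bytes, sig: Tuple[int, int]) -> bool:
    r, s = sig
    if not (1 <= r < N and 1 <= s < N):
        return False
    w = pow(s, -1, N)
    z = _message_int(message)
    point = point_add(scalar_mult(z * w % N, G), scalar_mult(r * w % N, pk))
    return point is not None and point[0] % N == r
```

Verification works because $u_1 G + u_2\,pk = s^{-1}(z + r\,sk)\,G = kG$, so only the holder of $sk$ can produce an $(r, s)$ pair that reproduces $r$.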

4.6.4. Zero-Knowledge Privacy Guarantees

- Completeness: If the statement is true, the verifier accepts.

- Soundness: A false claim passes verification with negligible probability.

- Zero-Knowledge: The verifier learns nothing beyond the statement’s validity.
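These three properties can be made tangible with the simplest classical example: a Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. This is a swapped-in textbook protocol, not the Groth16 or PLONK systems considered for VeriTrust, and the group parameters are deliberately tiny. Completeness is visible directly, but soundness and zero-knowledge only hold (in the random-oracle model) for cryptographically sized groups.

```python
import hashlib
import secrets
from typing import Tuple

# Toy parameters: P = 2Q + 1 with P, Q prime, and G = 4 generating the subgroup of order Q.
# They are far too small to be secure and only show the algebra.
P, Q, G = 2039, 1019, 4


def _challenge(*values: int) -> int:
    # Fiat-Shamir: derive the verifier's challenge by hashing the public transcript.
    data = ",".join(str(v) for v in values).encode("ascii")
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def keygen() -> Tuple[int, int]:
    x = secrets.randbelow(Q - 1) + 1      # secret witness
    return x, pow(G, x, P)                # public statement y = G^x mod P


def prove(x: int, y: int) -> Tuple[int, int]:
    r = secrets.randbelow(Q)              # fresh random nonce; reusing it would leak x
    t = pow(G, r, P)                      # commitment
    c = _challenge(G, y, t)
    s = (r + c * x) % Q                   # response, uniformly distributed because r is
    return t, s


def verify(y: int, proof: Tuple[int, int]) -> bool:
    t, s = proof
    if pow(y, Q, P) != 1 or pow(t, Q, P) != 1:   # both must lie in the order-Q subgroup
        return False
    c = _challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

The verifier learns only $(t, s)$, which it could have simulated itself by picking $s$ and $c$ first; this is the intuition behind the zero-knowledge property listed above.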

4.6.5. Summary of Formal Guarantees

4.7. Benchmarking and Evaluation Methodology

4.7.1. Evaluation Metrics and Performance Objectives

- Latency (ms): Time required to perform identity resolution, content verification, and Merkle proof validation.

- Gas Cost (gas units, priced in Gwei): Estimated gas consumed when anchoring Merkle roots on-chain through Solidity smart contracts.

- Proof Size (bytes): The size of Merkle proof versus Zero-Knowledge Proof (ZKP) required for verifiability.

- Storage Overhead: On-chain vs. off-chain content and metadata storage comparison.

- Scalability: Number of content records anchored per transaction using Merkle batching.

4.7.2. Benchmarking Simulation Scenarios

- Assuming the submission of a single Merkle root to Hyperledger Besu (IBFT-2, private chain).

- Based on similar Solidity smart contract interactions [75], the estimated gas cost is gas units.

- On a permissioned deployment, transaction fees can be negligible or fixed-rate depending on policy enforcement.

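- A typical Merkle proof for a batch of $N$ records involves $\lceil \log_2 N \rceil$ sibling hashes; with 32-byte SHA-256 digests, a batch of up to 1,024 records needs at most 10 hashes, ~320 bytes total.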

- In contrast, a ZKP using Groth16 or Plonk ranges from ~768 bytes to 1.3 KB [76].

- Thus, Merkle batching is more space-efficient for inclusion verification, while ZKPs offer privacy benefits.

- Identity resolution via Hyperledger Indy takes ~180–500 ms, depending on ledger query delay [77].

- VC signature verification over secp256r1 (using ECDSA) typically requires ~20–50 ms per credential [Aries Framework Metrics].

- Total client-side verification latency is thus estimated at roughly 200–550 ms per content artifact, the sum of the identity-resolution and signature-verification ranges above.

- This version does not include direct empirical benchmarking; instead, reproducibility is supported through publicly released artifacts that detail the data formats and verification logic, available at https://github.com/marufrigan/veritrust-artifacts (v0.1.0) [65] (accessed on 25 July 2025).

4.7.3. Storage Efficiency and Off-Chain Trade-Offs

- An average provenance JSON + signature file is ~1.1 KB.

- Offloading this to IPFS reduces smart contract data payloads by 90–95% per record.

- Only the IPFS CID (~46 bytes) and Merkle root (~32 bytes) are stored on-chain.
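The 90–95% figure can be checked directly from the sizes above, treating 1.1 KB as roughly 1,100 bytes and conservatively charging the full CID and Merkle root to a single record (in practice one root is shared by a whole batch):

```latex
\text{payload reduction} \approx 1 - \frac{46\,\text{B (CID)} + 32\,\text{B (root)}}{1100\,\text{B}}
  = 1 - \frac{78}{1100} \approx 1 - 0.071 = 0.929 \approx 93\%
```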

4.7.4. Scalability via Merkle Batching

- ≈6.4× improvement in on-chain anchoring efficiency.

- A 90% reduction in gas fees per individual record.

5. Discussion and Comparative Analysis

5.1. Benchmark vs. State-of-the-Art Systems

- SSI and Credential-Based Trust: VeriTrust is one of the few frameworks to natively incorporate Self-Sovereign Identity (SSI) using Hyperledger Indy and Aries. Unlike centralized key registries used in Factom and ContentProof, VeriTrust binds identity assertions (e.g., “verified journalist”) via W3C Verifiable Credentials issued by decentralized authorities. This greatly improves resistance to impersonation and key forgery [69].

- Revocation Performance and Scalability: Unlike many existing SSI frameworks, which often encounter difficulties with high-latency revocation checks, VeriTrust utilizes Hyperledger Indy’s cryptographic accumulators to enable efficient, privacy-preserving revocation. Previous research shows that revocation proof verification can be completed within ~50–100 ms, supporting scalability for high-volume media ecosystems [84].

- Anchored Provenance via Merkle Trees: TruthChain and ContentProof use direct content hashes to anchor items on-chain. VeriTrust improves this by introducing Merkle tree batching, thereby enabling scalable anchoring of thousands of records per transaction—reducing gas costs and enabling public audit trails. The use of Merkle-based verification also simplifies inclusion proofs on the verifier side [85].

- Future-Proof Privacy with ZKPs: Although ZKP support is still on the VeriTrust roadmap, it is absent from most other systems. ZKPs are critical for privacy-preserving attestations, especially when verifying credentials without revealing full claim content (e.g., “is a certified fact-checker” without revealing identity). Planned integration with Groth16 or PLONK will address this in VeriTrust v2 [76].

- Federated Learning Role: VeriTrust proposes federated learning as a complementary layer serving two purposes: (i) enabling client-side misinformation detection without centralizing user data, and (ii) facilitating collective reputation scoring of issuers and creators. This dual application is intended to strengthen both detection accuracy and trust governance while preserving user privacy.

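- Off-Chain Data and Decentralized Storage: Factom and ContentProof rely on third-party databases for content hosting, raising long-term reliability issues; VeriTrust instead stores content and provenance records on IPFS, with pinning strategies to maintain availability.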

- Compliance-Aware Design: VeriTrust uniquely considers GDPR implications by salting content hashes, supporting revocation registries, and designing DID resolvers with expiration logic. This addresses the immutability-vs.-erasure tension that undermines many blockchain applications in regulated jurisdictions [86].

- Full-stack integration of identity, provenance, and content verification layers

- Cryptographic verifiability via Merkle proofs and digital signatures

- Forward compatibility with zero-knowledge technologies and data privacy laws

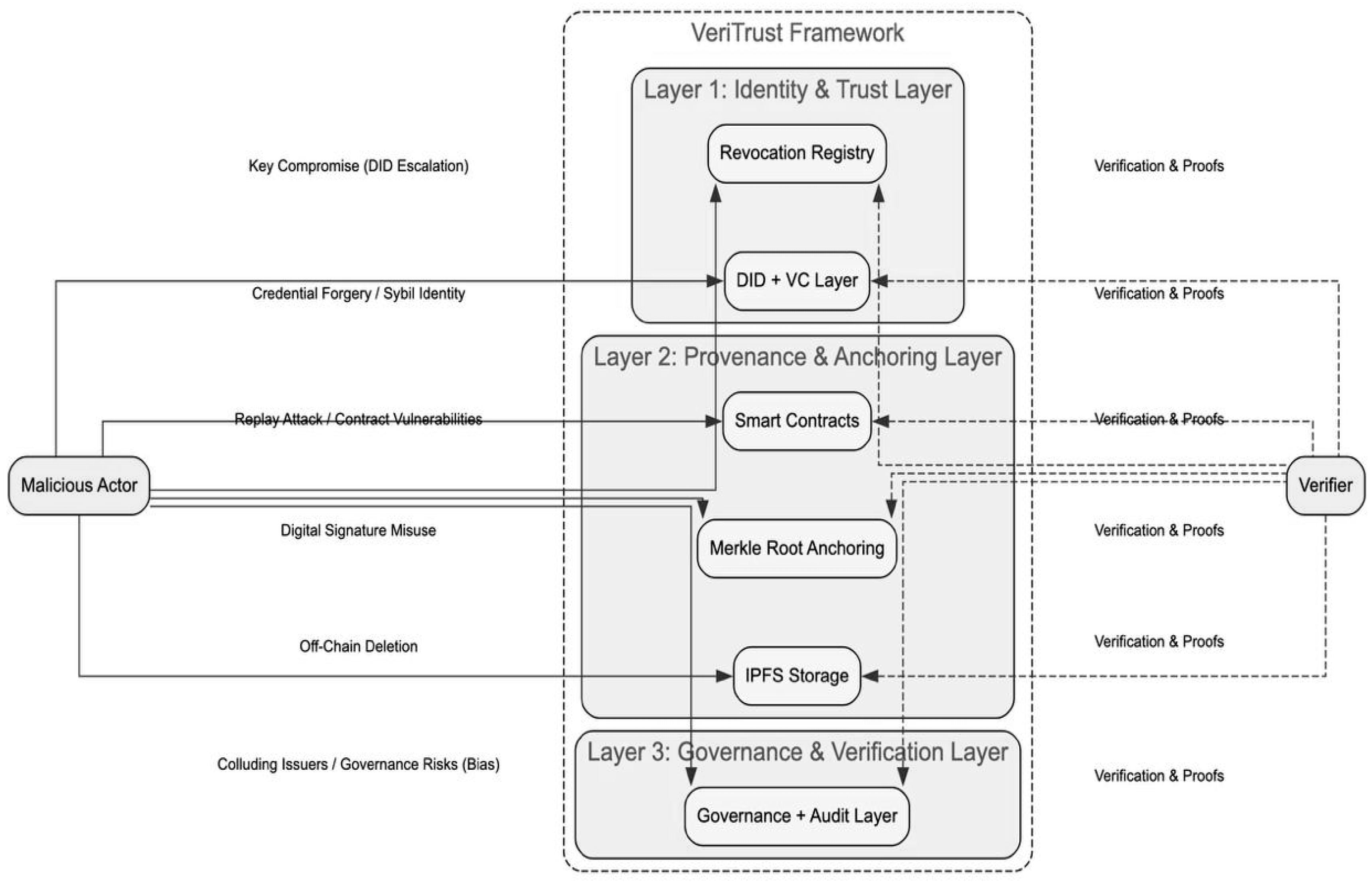

5.2. Security Analysis: Threat Model and Mitigations

- Attack Vector: Malicious actors modify digital content after it has been endorsed or published.

- Mitigation: Content fingerprints are computed via SHA-256 hashes. Any tampering changes the hash, invalidating the Merkle proof. As each record is cryptographically tied to its original content, tampering is instantly detectable [71].

- Attack Vector: An attacker fabricates or reuses Verifiable Credentials to impersonate verified publishers.

- Mitigation: Each VC is signed using the issuer’s private key and verified using the corresponding DID’s public key from the Hyperledger Indy ledger. Revocation registries are checked during validation, preventing the reuse of revoked credentials [67].

- Attack Vector: A previously valid signed content record is resubmitted to falsely prove new authorship.

- Mitigation: Timestamps and nonces are incorporated into the provenance record. Merkle root anchoring ensures each batch is uniquely tied to its time of submission. Duplicate entries trigger rejection logic in the smart contract.

- Attack Vector: Adversaries generate multiple fake identities to flood the system with malicious content.

- Mitigation: VeriTrust relies on verifiable credential issuance by trusted authorities (e.g., academic institutions, journalistic bodies). This renders Sybil identities ineffective unless they obtain VC endorsements, which are cryptographically verified.

- Attack Vector: Provenance records stored in IPFS are garbage-collected or lost due to a lack of pinning.

- Mitigation: Although IPFS provides decentralized storage, unpinned content may eventually be removed. VeriTrust addresses this by incorporating multiple availability strategies:
  - Decentralized Pinning Services (e.g., Pinata, Eternum) replicate data across independent nodes.
  - Institutional Archival Gateways (e.g., university or media organization IPFS nodes) maintain long-term pinning.
  - Hybrid Redundancy Models combine IPFS with conventional cloud storage (e.g., AWS S3) to provide fallback access [87].
  - Multi-Node Replication: Previous research indicates that pinning across ≥3 nodes can achieve retrieval success rates above 99% over extended timeframes [87].

- Immutable commitments via Merkle trees

- Decentralized trust anchors using DIDs and VCs

- Tamper-evidence and content-bound hashes

- Smart contract logic for duplication and replay prevention
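The replay and duplication checks listed in this threat model can be approximated in Python as a stand-in for the smart-contract logic. The record fields (`creatorDID`, `nonce`) and the rule that a given (DID, nonce) pair may be used only once are illustrative assumptions.

```python
import hashlib
import json
from typing import Any, Dict, Set, Tuple


class ReplayGuard:
    """Rejects resubmitted provenance records (illustrative stand-in for contract logic)."""

    def __init__(self) -> None:
        self._record_digests: Set[str] = set()
        self._used_nonces: Set[Tuple[str, str]] = set()

    @staticmethod
    def _digest(record: Dict[str, Any]) -> str:
        payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def accept(self, record: Dict[str, Any]) -> bool:
        digest = self._digest(record)
        nonce_key = (record["creatorDID"], record["nonce"])
        # A byte-identical resubmission, or reuse of a nonce by the same DID, is a replay.
        if digest in self._record_digests or nonce_key in self._used_nonces:
            return False
        self._record_digests.add(digest)
        self._used_nonces.add(nonce_key)
        return True
```

Keying on the nonce, not only the whole-record digest, also rejects a replay in which the attacker changes an unsigned field such as the timestamp.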

5.3. Regulatory and Ethical Considerations

- GDPR Compliance and the Right to Be Forgotten: VeriTrust avoids storing raw content or personal identifiers directly on-chain. Instead, it uses salted cryptographic hashes and off-chain IPFS storage. In line with GDPR Article 17, revocation registries allow credentials to be invalidated, while DID documents can be deactivated or rotated. This design ensures that data subjects retain meaningful control over their verifiable credentials without violating blockchain immutability constraints [86].

- Algorithmic Fairness and Credential Bias: SSI-based systems carry risks of embedded bias, particularly when credential issuers act as gatekeepers (e.g., only certain institutions can issue VCs). VeriTrust proposes a governance model where issuers are vetted by a decentralized trust registry. This guards against monopolistic control and enhances inclusiveness [88].

- Censorship and Decentralized Access: A balance must be struck between content verifiability and freedom of expression. While VeriTrust does not moderate content, it enables third-party verification without central arbitration. All verification logic is transparent, cryptographic, and content-neutral, making the system resistant to political or institutional censorship [89].

- Legal Standing of Cryptographic Evidence: VeriTrust uses digital signatures (ECDSA) and timestamped Merkle proofs to provide evidence trails intended to be admissible in court. Several jurisdictions now recognize such cryptographic attestations under frameworks such as eIDAS (EU), ESIGN (USA), and PKI-based evidence rules [90]. Future versions may support notarization plugins for enhanced legal assurance.

- Ethical Use of AI-Driven Verification Modules: If integrated with AI-based classifiers (e.g., for content quality or misinformation tagging), VeriTrust must ensure transparency and auditability. The use of explainable AI (XAI) and transparent training datasets is encouraged to avoid opacity and hidden bias in downstream use cases [61].

- GDPR Compliance and Data Protection: VeriTrust incorporates specific safeguards to comply with the EU General Data Protection Regulation (GDPR), carefully balancing the blockchain immutability with individual user data rights.

- Salt Management: To mitigate dictionary attacks, hashes are computed with cryptographically secure random salts that are rotated periodically, following NIST guidelines.

- Off-Chain Data Erasure: To uphold the “Right to be Forgotten”, Personally Identifiable Information (PII) is encrypted and stored off-chain. Deleting this off-chain data renders the associated on-chain hash permanently non-resolvable, achieving practical erasure in line with prevailing GDPR interpretations.

- Real-Time Revocation: Credential validity is governed by a Hyperledger Indy revocation registry. Verifiers must perform real-time queries against this registry to ensure that a credential has not been revoked prior to accepting it as valid.

- DPIA Summary: An initial Data Protection Impact Assessment (DPIA) highlights and addresses risks such as unauthorized access and issuer misuse through encryption, credential revocation, and transparent governance practices.
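The salting and off-chain erasure safeguards can be sketched as a salted hash commitment. Assumptions: SHA-256 over a 32-byte random salt prepended to the data, an in-memory dictionary standing in for the encrypted off-chain store, and no salt rotation (rotating would mean re-committing and anchoring new digests). Whether deleting the salt and data amounts to erasure under GDPR is the legal interpretation cited in the text, not something the code can establish.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Optional, Tuple


def commit(personal_data: bytes) -> Tuple[str, bytes]:
    """Return (digest for the chain, salt to keep off-chain)."""
    salt = secrets.token_bytes(32)                   # fresh CSPRNG salt per value
    digest = hashlib.sha256(salt + personal_data).hexdigest()
    return digest, salt


def matches(digest: str, salt: bytes, personal_data: bytes) -> bool:
    candidate = hashlib.sha256(salt + personal_data).hexdigest()
    return hmac.compare_digest(candidate, digest)    # constant-time comparison


class OffChainStore:
    """Stand-in for the encrypted off-chain store (encryption omitted in this sketch)."""

    def __init__(self) -> None:
        self._rows: Dict[str, Tuple[bytes, bytes]] = {}

    def put(self, digest: str, salt: bytes, personal_data: bytes) -> None:
        self._rows[digest] = (salt, personal_data)

    def resolve(self, digest: str) -> Optional[Tuple[bytes, bytes]]:
        return self._rows.get(digest)

    def erase(self, digest: str) -> None:
        # After this, the on-chain digest can no longer be linked back to the data.
        self._rows.pop(digest, None)
```

Because each value gets its own salt, the same personal data committed twice yields unrelated digests, so an attacker cannot precompute a dictionary of likely values against the chain.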

5.4. Reproducibility Statement

6. Conclusions and Future Work

6.1. Summary of Contributions

- Self-Sovereign Identity (SSI): Establishes cryptographically verifiable bindings between content creators/publishers and their identities via Decentralized Identifiers (DIDs) and Verifiable Credentials (VCs).

- Blockchain Technology: Ensures immutable, tamper-evident logging of content provenance by anchoring content hashes, timestamps, and Merkle roots on a distributed ledger.

- Hybrid AI and Community Validation Layer: Combines AI-driven content analysis with participatory verification mechanisms, such as decentralized voting and reputation models, to foster collective trust.

6.2. Concluding Remarks

6.3. Future Research Directions

- Prototype Development and Benchmarking: A proof-of-concept prototype should be developed using Hyperledger Besu, Aries, and Solidity. Key performance metrics such as latency, throughput, and transaction scalability must be rigorously evaluated under varying content volumes. Critical performance indicators, including client-side verification latency, gas costs for varying Merkle batch sizes, and IPFS retrieval success rates with and without pinning, should be thoroughly assessed in controlled settings using information from prior blockchain benchmarking studies [71,87] and decentralized storage research [87].

- Privacy-Preserving Verification via ZKPs: Future study should investigate the integration of efficient Zero-Knowledge Proofs (ZKPs) to enable privacy-preserving verification. While the current design does not yet include the incorporation of ZKPs, their adoption represents a key research direction for enabling privacy-preserving verification without disclosing sensitive identifiers, allowing the maintenance of a critical balance between accountability and privacy.

- Multimodal Provenance Models: Extending provenance to dynamic formats (e.g., deepfake videos, synthetic audio) demands robust PROV-compliant metadata schemas and real-time validation pipelines tailored to composite content verification.

- Decentralized Governance Protocols: Sustainable trust requires community-driven governance models for VC issuer accreditation, protocol evolution, and dispute resolution, potentially drawing from DAO-inspired voting models.

- Ecosystem Interoperability: Cross-chain and cross-platform interoperability must be addressed so that the VeriTrust framework can integrate with varied blockchain networks, such as Polygon and Solana, as well as with open-source media platforms.

- Data Protection Impact Assessment (DPIA): Future work should include systematic Data Protection Impact Assessments (DPIAs) to evaluate adherence to GDPR and related data protection frameworks, with a focus on revocation propagation and anonymization of off-chain metadata.
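The benchmarking item on gas costs for varying Merkle batch sizes rests on a trade-off that can be estimated before any prototype exists. The following sketch assumes a binary Merkle tree with one root anchored per batch. Under that assumption, a batch of n items shares a single anchoring transaction, while each item's inclusion proof grows to ⌈log2 n⌉ sibling hashes of 32 bytes. The sketch models proof size and amortized anchoring only. Actual gas figures would still have to be measured on Besu. The function names are hypothetical.

```python
def proof_length(batch_size: int) -> int:
    """Sibling hashes in a binary Merkle proof: ceil(log2(batch_size))."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    return (batch_size - 1).bit_length()


def batch_profile(batch_size: int, hash_bytes: int = 32) -> dict:
    """Per-item proof size and amortized anchoring for one batch (assumed one root per batch)."""
    depth = proof_length(batch_size)
    return {
        "batch_size": batch_size,
        "proof_hashes": depth,
        "proof_bytes": depth * hash_bytes,
        "anchors_per_item": 1 / batch_size,  # one on-chain root shared by the whole batch
    }


if __name__ == "__main__":
    for n in (1, 16, 256, 4096):
        print(batch_profile(n))
```

For example, a batch of 4,096 items needs 12 sibling hashes (384 bytes) per proof. Larger batches therefore lower on-chain cost per item while only slowly increasing client-side verification work.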

6.4. Limitations of the Study

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Alsuwat, E.; Alsuwat, H. An improved multi-modal framework for fake news detection using NLP and Bi-LSTM. J. Supercomput. 2024, 81, 177. [Google Scholar] [CrossRef]

- Zhang, L. Mechanisms of data mining in analyzing the effects and trends of news dissemination. Appl. Math. Nonlinear Sci. 2024, 9. [Google Scholar] [CrossRef]

- Pava-Díaz, R.A.; Gil-Ruiz, J.; López-Sarmiento, D.A. Self-sovereign identity on the blockchain: Contextual analysis and quantification of SSI principles implementation. Front. Blockchain 2024, 7, 1443362. [Google Scholar] [CrossRef]

- Torky, M.; Nabil, E.; Said, W. Proof of Credibility: A Blockchain Approach for Detecting and Blocking Fake News in Social Networks. Int. J. Adv. Comput. Sci. Appl. 2019, 10, 321–327. [Google Scholar] [CrossRef]

- Nawaz, M.Z.; Nawaz, M.S.; Fournier-Viger, P.; He, Y. Analysis and Classification of Fake News Using Sequential Pattern Mining. Big Data Min. Anal. 2024, 7, 942–963. [Google Scholar] [CrossRef]

- Farokhian, M.; Rafe, V.; Veisi, H. Fake news detection using dual BERT deep neural networks. Multimed. Tools Appl. 2023, 83, 43831–43848. [Google Scholar] [CrossRef]

- Saha, I.; Puthran, S. Fake News Detection: A Comprehensive Review and a Novel Framework. In Proceedings of the 2024 OITS International Conference on Information Technology (OCIT), Vijayawada, India, 12–14 December 2024; pp. 463–469. [Google Scholar] [CrossRef]

- Xia, J.; Li, Y.; Yu, K. Lgt: Long-range graph transformer for early rumor detection. Soc. Netw. Anal. Min. 2024, 14, 100. [Google Scholar] [CrossRef]

- Zhu, X.; Li, L. Research on Detecting Future Fake News Based on Multi-View and Knowledge Graph. In Proceedings of the 2024 4th International Conference on Neural Networks, Information and Communication Engineering (NNICE), Guangzhou, China, 19–21 January 2024; pp. 309–315. [Google Scholar] [CrossRef]

- Verma, P.K.; Agrawal, P.; Madaan, V.; Prodan, R. MCred: Multi-modal message credibility for fake news detection using BERT and CNN. J. Ambient. Intell. Humaniz. Comput. 2022, 14, 10617–10629. [Google Scholar] [CrossRef]

- Hiramath, C.K.; Deshpande, G.C. Fake News Detection Using Deep Learning Techniques. In Proceedings of the 2019 1st International Conference on Advances in Information Technology (ICAIT), Chikmagalur, India, 25–27 July 2019; Volume 4. [Google Scholar] [CrossRef]

- Arunthavachelvan, K.; Raza, S.; Ding, C. A deep neural network approach for fake news detection using linguistic and psychological features. User Model. User-Adapt. Interact. 2024, 34, 1043–1070. [Google Scholar] [CrossRef]

- Tsai, C.M. Stylometric Fake News Detection Based on Natural Language Processing Using Named Entity Recognition: In-Domain and Cross-Domain Analysis. Electronics 2023, 12, 3676. [Google Scholar] [CrossRef]

- Wu, J.; Guo, J.; Hooi, B. Fake News in Sheep’s Clothing: Robust Fake News Detection Against LLM-Empowered Style Attacks. In Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD ’24), Barcelona, Spain, 25–29 August 2024; Volume 33, pp. 3367–3378. [Google Scholar] [CrossRef]

- Zhao, C.; Wei, L.; Qin, Z.; Zhou, W.; Song, Y.; Hu, S. MPPFND: A Dataset and Analysis of Detecting Fake News with Multi-Platform Propagation. arXiv 2025. [Google Scholar] [CrossRef]

- Guo, Z.; Wu, P.; Liu, X.; Pan, L. CoST: Comprehensive structural and temporal learning of social propagation for fake news detection. Neurocomputing 2025, 648, 130618. [Google Scholar] [CrossRef]

- Truică, C.-O.; Apostol, E.-S.; Marogel, M.; Paschke, A. GETAE: Graph Information Enhanced Deep Neural NeTwork Ensemble ArchitecturE for fake news detection. Expert Syst. Appl. 2025, 275, 126984. [Google Scholar] [CrossRef]

- Gong, S.; Sinnott, R.O.; Qi, J.; Paris, C. Less is More: Unseen Domain Fake News Detection via Causal Propagation Substructures. arXiv 2024, arXiv:2411.09389v1. [Google Scholar]

- Bhardwaj, A.; Bharany, S.; Kim, S. Fake Social Media News and Distorted Campaign Detection Framework Using Sentiment Analysis & Machine Learning. Heliyon 2024, 10, e36049. [Google Scholar] [CrossRef] [PubMed]

- Pareek, S.; Goncalves, J. Peer–supplied credibility labels as an online misinformation intervention. Int. J. Hum.-Comput. Stud. 2024, 188, 103276. [Google Scholar] [CrossRef]

- Amri, S.; Boleilanga, H.C.M.; Aimeur, E. ExFake: Towards an Explainable Fake News Detection Based on Content and Social Context Information. arXiv 2023. [Google Scholar] [CrossRef]

- Nasser, M.; Arshad, N.I.; Ali, A.; Alhussian, H.; Saeed, F.; Da’u, A.; Nafea, I. A systematic review of multimodal fake news detection on social media using deep learning models. Results Eng. 2025, 26, 104752. [Google Scholar] [CrossRef]

- Li, X.; Wei, L.; Wang, L.; Ma, Y.; Zhang, C.; Sohail, M. A blockchain-based privacy-preserving authentication system for ensuring multimedia content integrity. Int. J. Intell. Syst. 2022, 37, 3050–3071. [Google Scholar] [CrossRef]

- Liu, Y.; Zhang, J.; Wu, S.; Pathan, M.S. Research on digital copyright protection based on the hyperledger fabric blockchain network technology. PeerJ Comput. Sci. 2021, 7, e709. [Google Scholar] [CrossRef]

- Chen, Q.; Kong, Y.; Cheng, L. A Digital Copyright Protection System Based on Blockchain and with Sharding Network. In Proceedings of the 2023 IEEE 10th International Conference on Cyber Security and Cloud Computing (CSCloud)/2023 IEEE 9th International Conference on Edge Computing and Scalable Cloud (EdgeCom), Xiangtan, China, 1–3 July 2023. [Google Scholar] [CrossRef]

- Shi, Q.; Zhou, Y. Application of blockchain technology in digital music copyright management: A case study of VNT chain platform. Front. Blockchain 2025, 7, 1388832. [Google Scholar] [CrossRef]

- Wang, J.; Zheng, Q.; Shen, Y. Research on New Media Digital Copyright Registration and Trading Based on Blockchain. In Proceedings of the 2024 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Toronto, ON, Canada, 19–21 June 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Xie, R.; Tang, M. A digital resource copyright protection scheme based on blockchain cross-chain technology. Heliyon 2024, 10, e36830. [Google Scholar] [CrossRef] [PubMed]

- Stockburger, L.; Kokosioulis, G.; Mukkamala, A.; Mukkamala, R.R.; Avital, M. Blockchain-Enabled Decentralized Identify Management: The Case of Self-Sovereign Identity in Public Transportation. Blockchain Res. Appl. 2021, 2, 100014. [Google Scholar] [CrossRef]

- Nokhbeh Zaeem, R.; Chang, K.C.; Huang, T.C.; Liau, D.; Song, W.; Tyagi, A.; Khalil, M.; Lamison, M.; Pandey, S.; Barber, K.S. Blockchain-Based Self-Sovereign Identity: Survey, Requirements, Use-Cases, and Comparative Study. In Proceedings of the IEEE/WIC/ACM International Conference on Web Intelligence, Melbourne, VIC, Australia, 14–17 December 2021. [Google Scholar] [CrossRef]

- European Blockchain Services Infrastructure|Shaping Europe’s Digital Future. Available online: https://digital-strategy.ec.europa.eu/en/policies/european-blockchain-services-infrastructure (accessed on 28 July 2025).

- Bashaddadh, O.; Omar, N.; Mohd, M.; Khalid, M.N.A. Machine Learning and Deep Learning Approaches for Fake News Detection: A Systematic Review of Techniques, Challenges, and Advancements. IEEE Access 2025, 13, 90433–90466. [Google Scholar] [CrossRef]

- Hernández-Ramos, J.L.; Karopoulos, G.; Geneiatakis, D.; Martin, T.; Kambourakis, G.; Fovino, I.N. Sharing Pandemic Vaccination Certificates through Blockchain: Case Study and Performance Evaluation. Wirel. Commun. Mob. Comput. 2021, 2021, e2427896. [Google Scholar] [CrossRef]

- Su, Y.-J.; Hsu, W.-C. Hyperledger Indy-based Roaming Identity Management System. Taiwan Ubiquitous Inf. 2023, 8, 545–557. Available online: https://bit.kuas.edu.tw/~jni/2023/vol8/s2/16.JNI-0774.pdf (accessed on 25 July 2025).

- Privacy Policies|Epic. Epic.com. 2025. Available online: https://www.epic.com/en-us/privacypolicies/ (accessed on 30 July 2025).

- Digital Government. Digital Government—Making Digital Service Delivery Easier for Public Service Employees. 2024. Available online: https://digital.gov.bc.ca/design/digital-trust/online-identity/person-credential/ (accessed on 30 July 2025).

- Schembri, F. Digital Diplomas. MIT Technology Review. 2018. Available online: https://www.technologyreview.com/2018/04/25/143311/digital-diplomas/ (accessed on 30 July 2025).

- Hoffmann, T.; Vasquez, M.C.S. The Estonian e-residency programme and its role beyond the country’s digital public sector ecosystem. CES Derecho 2022, 13, 184–204. [Google Scholar] [CrossRef]

- National Digital Identity and Government Data Sharing in Singapore: A Case Study of Singpass and APEX. Available online: https://www.developer.tech.gov.sg/assets/files/GovTech%20World%20Bank%20NDI%20APEX%20report.pdf (accessed on 28 July 2025).

- Pöhn, D.; Grabatin, M.; Hommel, W. Analyzing the Threats to Blockchain-Based Self-Sovereign Identities by Conducting a Literature Survey. Appl. Sci. 2023, 14, 139. [Google Scholar] [CrossRef]

- Grech, A.; Sood, I.; Ariño, L. Blockchain, Self-Sovereign Identity and Digital Credentials: Promise Versus Praxis in Education. Front. Blockchain 2021, 4, 616779. [Google Scholar] [CrossRef]

- Weigl, L.; Barbereau, T.; Fridgen, G. The construction of self-sovereign identity: Extending the interpretive flexibility of technology towards institutions. Gov. Inf. Q. 2023, 40, 101873. [Google Scholar] [CrossRef]

- Brăcăcescu, R.V.; Mocanu, Ş.; Ioniţă, A.D.; Brăcăcescu, C. A proposal of digital identity management using blockchain. Rev. Roum. Sci. Tech. Série Électrotechnique Énergétique 2024, 69, 85–90. [Google Scholar] [CrossRef]

- PROV-O: The PROV Ontology. W3C Recommendation. 2013. Available online: https://www.w3.org/TR/prov-o/ (accessed on 25 July 2025).

- Gil, Y.; Miles, S.; Belhajjame, K.; Deus, H.; Garijo, D.; Klyne, G.; Missier, P.; Soiland-Reyes, S.; Zednik, S. PROV Model Primer: W3C Working Group Note. Research Explorer The University of Manchester. 2013. Available online: https://research.manchester.ac.uk/en/publications/prov-model-primer-w3c-working-group-note (accessed on 30 July 2025).

- Merkle, R.C. Protocols for Public Key Cryptosystems. In Proceedings of the 1980 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 14–16 April 1980. [Google Scholar] [CrossRef]

- Akbarfam, A.J.; Heidaripour, M.; Maleki, H.; Dorai, G.; Agrawal, G. ForensiBlock: A Provenance-Driven Blockchain Framework for Data Forensics and Auditability. In Proceedings of the 2023 5th IEEE International Conference on Trust, Privacy and Security in Intelligent Systems and Applications (TPS-ISA), Atlanta, GA, USA, 1–4 November 2023; pp. 136–145. [Google Scholar] [CrossRef]

- Feng, K.; Ritchie, N.; Blumenthal, P.; Parsons, A.; Zhang, A.X. Examining the Impact of Provenance-Enabled Media on Trust and Accuracy Perceptions. Proc. ACM Hum.-Comput. Interact. 2023, 7, 1–42. [Google Scholar] [CrossRef]

- Jiang, L.; Li, R.; Wu, W.; Qian, C.; Loy, C.C. DeeperForensics-1.0: A Large-Scale Dataset for Real-World Face Forgery Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar] [CrossRef]

- Butt, U.J.; Hussien, O.; Hasanaj, K.; Shaalan, K.; Hassan, B.; Al-Khateeb, H. Predicting the impact of data poisoning attacks in blockchain-enabled supply chain networks. Algorithms 2023, 16, 549. [Google Scholar] [CrossRef]

- Farhan, M.; Sulaiman, R.B. Developing Blockchain Technology to Identify Counterfeit Items Enhances the Supply Chain’s Effectiveness. FMDB Trans. Sustain. Technoprise Lett. 2023, 1, 123–134. [Google Scholar]

- Jahankhani, H.; Jamal, A.; Brown, G.; Sainidis, E.; Fong, R.; Butt, U.J. (Eds.) AI, Blockchain and Self-Sovereign Identity in Higher Education; Springer Nature: Berlin/Heidelberg, Germany, 2023. [Google Scholar]

- Farhan, M.; Nur, A.H.; Sulaiman, R.B.; Butt, U.; Zaman, S. Blockchain-Based Railway Ticketing: Enhancing Security and Efficiency with QR Codes and Smart Contract. In Proceedings of the 2025 4th International Conference on Computing and Information Technology (ICCIT), Tabuk, Saudi Arabia, 13–14 April 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 238–244. [Google Scholar]

- Farhan, M.; Nur, A.H.; Salih, A.; Sulaiman, R.B. Disrupting the ballot box: Blockchain as a catalyst for innovation in electoral processes. In Proceedings of the 2024 International Conference on Advances in Computing, Communication, Electrical, and Smart Systems (iCACCESS), Dhaka, Bangladesh, 8–9 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Maruf, F.; Sulaiman, R.B.; Nur, A.H. Blockchain technology to detect fake products. In Digital Transformation for Improved Industry and Supply Chain Performance; Khan, M.R., Khan, N.R., Jhanjhi, N.Z., Eds.; IGI Global: Hershey, PA, USA, 2024; pp. 1–32. [Google Scholar] [CrossRef]

- Sourav, M.S.U.; Mahmud, M.S.; Talukder, M.S.H.; Sulaiman, R.B. IoMT-blockchain-based secured remote patient monitoring framework for neuro-stimulation devices. In Artificial Intelligence for Blockchain and Cybersecurity Powered IoT Applications; CRC Press: Boca Raton, FL, USA, 2024; pp. 209–225. [Google Scholar]

- Bin Sulaiman, R. Applications of Block-Chain Technology and Related Security Threats. SSRN Electron. J. 2018. Available online: https://ssrn.com/abstract=3205732 (accessed on 30 July 2025). [CrossRef]

- Protocol Labs Research. Design and Evaluation of IPFS: A Storage Layer for the Decentralized Web. 2022. Available online: https://research.protocol.ai/publications/design-and-evaluation-of-ipfs-a-storage-layer-for-the-decentralized-web/ (accessed on 30 July 2025).

- Morales-Alarcón, C.H.; Bodero-Poveda, E.; Villa-Yánez, H.M.; Buñay-Guisñan, P.A. Blockchain and Its Application in the Peer Review of Scientific Works: A Systematic Review. Publications 2024, 12, 40. [Google Scholar] [CrossRef]

- Ramachandran, A.; Ramadevi, P.; Alkhayyat, A.; Yousif, Y.K. Blockchain and Data Integrity Authentication Technique for Secure Cloud Environment. Intell. Autom. Soft Comput. 2023, 36, 2055–2070. [Google Scholar] [CrossRef]

- Roumeliotis, K.I.; Tselikas, N.D.; Nasiopoulos, D.K. Fake News Detection and Classification: A Comparative Study of Convolutional Neural Networks, Large Language Models, and Natural Language Processing Models. Future Internet 2025, 17, 28. [Google Scholar] [CrossRef]

- Schardong, F.; Custódio, R. Self-Sovereign Identity: A Systematic Review, Mapping and Taxonomy. Sensors 2022, 22, 5641. [Google Scholar] [CrossRef]

- Surjatmodjo, D.; Unde, A.A.; Cangara, H.; Sonni, A.F. Information Pandemic: A Critical Review of Disinformation Spread on Social Media and Its Implications for State Resilience. Soc. Sci. 2024, 13, 418. [Google Scholar] [CrossRef]

- Shcherbakov, A. Hyperledger Indy Besu as a permissioned ledger in self-sovereign identity. In Proceedings of the Open Identity Summit 2024 (OID 2024), Porto, Portugal, 20–21 June 2024; Roßnagel, H., Schunck, C.H., Sousa, F., Eds.; GI-Edition: Lecture Notes in Informatics (LNI), P-350; Gesellschaft für Informatik e.V.: Bonn, Germany, 2024; pp. 127–137. [Google Scholar] [CrossRef]

- [R-VERITRUST]. VeriTrust Artifacts (v0.1.0—Conceptual Scaffold). GitHub. 2025. Available online: https://github.com/marufrigan/veritrust-artifacts (accessed on 30 July 2025).

- Mazzocca, C.; Acar, A.; Uluagac, S.; Montanari, R.; Bellavista, P.; Conti, M. A Survey on Decentralized Identifiers and Verifiable Credentials. IEEE Commun. Surv. Tutor. 2025, 1–32. [Google Scholar] [CrossRef]

- Verifiable Credentials Data Model v2.0. W3C Recommendation. 2023. Available online: https://www.w3.org/TR/vc-data-model/ (accessed on 30 August 2025).

- Exclusive XML Canonicalization Version 1.0. W3C Recommendation. 2002. Available online: https://www.w3.org/TR/xml-exc-c14n/ (accessed on 30 August 2025).

- Sporny, M.; Longley, D.; Sabadello, M.; Reed, D.; Steele, O.; Allen, C. Decentralized Identifiers (DIDs) v1.0. W3C Recommendation. 2022. Available online: https://www.w3.org/TR/did-core/ (accessed on 30 July 2025).

- Doshi-Velez, F.; Kim, B. Towards A Rigorous Science of Interpretable Machine Learning. arXiv 2017, arXiv:1702.08608. [Google Scholar] [CrossRef]

- National Institute of Standards and Technology (NIST). FIPS PUB 180-4: Secure Hash Standard (SHS); NIST: Gaithersburg, MD, USA, 2015. [Google Scholar] [CrossRef]

- RFC 6979; Deterministic Usage of the Digital Signature Algorithm (DSA) and Elliptic Curve Digital Signature Algorithm (ECDSA). Internet Engineering Task Force (IETF). 2013. Available online: https://datatracker.ietf.org/doc/html/rfc6979 (accessed on 30 July 2025).

- Siqueira, A.; Da, F.; Rocha, V. Performance Evaluation of Self-Sovereign Identity Use Cases. In Proceedings of the 2023 IEEE International Conference on Decentralized Applications and Infrastructures (DAPPS), Athens, Greece, 17–20 July 2023. [Google Scholar] [CrossRef]

- Kokoris-Kogias, E.; Alp, E.C.; Gasser, L.; Jovanovic, P.; Syta, E.; Ford, B. CALYPSO: Private Data Management for Decentralized Ledgers. Cryptology ePrint Archive. 2018. Available online: https://eprint.iacr.org/2018/209 (accessed on 30 July 2025).

- Gürsoy, G.; Brannon, C.M.; Gerstein, M. Using Ethereum blockchain to store and query pharmacogenomics data via smart contracts. BMC Med. Genom. 2020, 13, 74. [Google Scholar] [CrossRef]

- Ben-Sasson, E.; Bentov, I.; Horesh, Y.; Riabzev, M. Scalable Zero Knowledge with No Trusted Setup. In Advances in Cryptology—CRYPTO 2019, Proceedings of the 39th Annual International Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 2019; Springer eBooks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 701–732. [Google Scholar] [CrossRef]

- Empower Healthcare through a Self-Sovereign Identity Infrastructure for Secure Electronic Health Data Access. arXiv 2022. [CrossRef]

- Zhang, K.; Jacobsen, H. Towards Dependable, Scalable, and Pervasive Distributed Ledgers with Blockchains. In Proceedings of the 2018 IEEE 38th International Conference on Distributed Computing Systems (ICDCS), Vienna, Austria, 2–6 July 2018. [Google Scholar] [CrossRef]

- OpenTimestamps: A Timestamping Proof Standard. 2025. Available online: https://opentimestamps.org/ (accessed on 30 July 2025).

- OriginTrail Decentralized Knowledge Graph. 2025. Available online: https://origintrail.io/ (accessed on 30 July 2025).

- Content Authenticity Initiative (CAI). 2025. Available online: https://contentauthenticity.org (accessed on 30 July 2025).

- Coalition for Content Provenance and Authenticity (C2PA). C2PA Specification, Version 1.3. 2025. Available online: https://c2pa.org/specifications/specifications/1.3 (accessed on 30 July 2025).

- Hyperledger Indy. 2025. Available online: https://www.hyperledger.org/projects/hyperledger-indy (accessed on 30 July 2025).

- Obaid, A.H.; Al-Husseini, K.A.O.; Almaiah, M.A.; Shehab, R. Dynamic key revocation and recovery framework for smart city authentication systems. Int. J. Innov. Res. Sci. Stud. 2025, 8, 2112–2123. [Google Scholar] [CrossRef]

- Benet, J. IPFS—Content Addressed, Versioned, P2P File System. arXiv 2014. [Google Scholar] [CrossRef]

- Suripeddi, M.K.S.; Purandare, P. Blockchain and GDPR—A study on compatibility issues of the distributed ledger technology with GDPR data processing. J. Phys. Conf. Ser. 2021, 1964, 042005. [Google Scholar] [CrossRef]

- IPFS Documentation|IPFS Docs. 2023. Available online: https://docs.ipfs.tech/ (accessed on 30 July 2025).

- Working Groups. Decentralizing Trust in Identity Systems. Decentralized Identity Foundation. 2024. Available online: https://blog.identity.foundation/decentralizing-trust-in-identity-systems/ (accessed on 30 July 2025).

- Blockchain and Freedom of Expression. 2019. Available online: https://www.article19.org/wp-content/uploads/2019/07/Blockchain-and-FOE-v4.pdf (accessed on 25 July 2025).

- Dumortier, J. Regulation (EU) No 910/2014 on Electronic Identification and Trust Services for Electronic Transactions in the Internal Market (EIDAS Regulation); Edward Elgar Publishing: Cheltenham, UK, 2022. [Google Scholar] [CrossRef]

| Area | Representative Work and Methodology | Identified Gap |
|---|---|---|
| Knowledge-Based Fake News Detection | FNACSPM [5] used sequential pattern mining and machine learning classifiers on six datasets. | Relies only on textual content; lacks integration with source identity or cross-modal misinformation signals. Not designed for real-time adaptability. |
| Style-Based Fake News Detection | MCRED [10] combined text, image, and user interaction with contrastive learning and GNNs. Other studies used TF-IDF, Word2Vec, LSTM, and BERT. | Vulnerable to adversarial writing styles and LLM-generated text. No link to verified author identity or provenance. |
| Propagation-Based Fake News Detection | GETAE [17] and CoST [16] leveraged graph transformers and ensemble propagation-text models. | Needs a complete propagation history; unsuitable for early detection. Lacks identity anchoring or source verification. |
| Source-Based Fake News Detection | ExFake and Us-DeFake evaluated user trust via behavioral metadata and social graphs. | Relies heavily on behavioral patterns, which can be manipulated. No cryptographic user identity or tamper-proof accountability. |
| Blockchain for Content Verification | Attestiv, OriginStamp, and Hyperledger solutions focus on immutable timestamps and hash commitments. | Do not verify content authorship. High computational/storage overhead makes real-time applications difficult. |
| Self-Sovereign Identity (SSI) | Implements DIDs and Verifiable Credentials for user-controlled identity in education and healthcare. | Identity proofs are not tied to digital content authorship. No content anchoring or provenance linkage. |
| Digital Content Provenance | W3C PROV, Merkle trees, and ForensiBlock are used for editing trails and forensic verification. | Tracks edits and metadata but lacks a link to the verified identity of the content’s author or source. |
| Integrated Frameworks | No unified framework combining SSI, provenance, and blockchain. | Existing systems work in silos. No holistic pipeline for verifying user identity and content origin simultaneously. |
| Proposed Framework (VeriTrust) | Combines DID/VC-verified authorship, W3C PROV hash lineage, and Besu smart-contract anchoring, with a real-time verifier plugin. | Delivers the first end-to-end trust pipeline: proves who created content (SSI), what was published and when (blockchain + hash), and how it evolved (PROV). |

| Component | Description | Role in Fake News Detection/Content Provenance | Underlying Technology/Standard |
|---|---|---|---|
| Decentralized Identifiers (DIDs) | Unique, cryptographically verifiable identifiers controlled by the user. | Establishes a persistent, user-controlled identity for content creators and verifiers. | W3C DID Standard, DLT (e.g., Ethereum/Polygon) |
| Verifiable Credentials (VCs) | Digital certificates containing claims about a user’s identity, roles, or expertise. | Attests to the credibility and qualifications of content creators and fact-checkers; enables selective disclosure. | W3C VC Standard, Cryptographic Proofs |
| Blockchain Ledger | A distributed, immutable, and tamper-resistant record of transactions. | Stores cryptographic hashes of content, DIDs, timestamps, and provenance events; ensures data integrity and transparency. | Ethereum, Polygon (PoS), Smart Contracts |
| Smart Contracts | Self-executing code stored on the blockchain. | Automates provenance tracking rules, manages decentralized voting mechanisms, and enforces incentive/penalty systems. | Solidity (for EVM-compatible blockchains) |
| Decentralized Storage (IPFS/Web3.Storage) | Peer-to-peer file storage systems. | Stores full content files (articles, images, videos) off-chain, linked by hashes on the blockchain, addressing scalability. | IPFS, Web3.Storage |
| AI Detection Models | Multimodal deep learning algorithms. | Provides an initial automated assessment of content authenticity (text, image, video); identifies potential misinformation. | Deep Learning (CNN, LSTM, Transformer Architectures), Federated Learning |
| Decentralized Voting Mechanism | A community-driven system for verifying content authenticity. | Allows verified fact-checkers and community members to review and vote on content, correcting AI misclassifications and building consensus. | Blockchain, Token Economics, Reputation Systems |
| Reputation System | A blockchain-based mechanism to track the reliability of participants. | Incentivizes honest behaviour and accurate verification; grants influence based on proven trustworthiness. | Blockchain, Token Economics |
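The voting and reputation rows describe influence that depends on proven trustworthiness. One hypothetical way to realize this is reputation-weighted voting. Each verifier's vote counts in proportion to their reputation, and reputations are then raised or lowered according to agreement with the final outcome. The class and function names, the 0.5 threshold, the fixed adjustment step, and the reputation floor are illustrative assumptions, not parameters given for VeriTrust. In the framework itself this logic would run inside a smart contract rather than in Python.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Vote:
    voter: str       # hypothetical: the verifier's DID
    authentic: bool  # True = content judged authentic


def tally(votes: List[Vote], reputation: Dict[str, float], threshold: float = 0.5) -> bool:
    """True if the reputation-weighted share of 'authentic' votes exceeds the threshold."""
    total = sum(reputation.get(v.voter, 0.0) for v in votes)
    if total == 0:
        raise ValueError("no reputation-weighted votes cast")
    weight_for = sum(reputation.get(v.voter, 0.0) for v in votes if v.authentic)
    return weight_for / total > threshold


def update_reputation(votes: List[Vote], reputation: Dict[str, float], outcome: bool,
                      step: float = 0.1, floor: float = 0.01) -> Dict[str, float]:
    """Reward agreement with the outcome, penalize disagreement, never dropping below floor."""
    updated = dict(reputation)
    for v in votes:
        current = updated.get(v.voter, floor)
        delta = step if v.authentic == outcome else -step
        updated[v.voter] = max(floor, current + delta)
    return updated


if __name__ == "__main__":
    rep = {"did:example:a": 0.9, "did:example:b": 0.2, "did:example:c": 0.2}
    ballot = [Vote("did:example:a", True), Vote("did:example:b", False), Vote("did:example:c", False)]
    verdict = tally(ballot, rep)
    print(verdict, update_reputation(ballot, rep, verdict))
```

The floor keeps a penalized verifier from losing all influence. A real scheme would also need Sybil resistance, which the framework delegates to VC issuance by vetted authorities.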

| Component | Version | Security Role |
|---|---|---|
| Hyperledger Besu | 24.3.1 | On-chain anchoring of Merkle roots |
| Solidity Compiler | 0.8.23 | Smart contract bytecode verification |
| Hyperledger Indy | 1.13.2 | DID and schema ledger |
| Hyperledger Aries JS | 0.4.5 | DIDComm messaging and wallet services |
| IPFS (Kubo, formerly go-ipfs) | 0.25.0 | Off-chain storage (provenance content) |
| Docker Engine | 20.10.21 | Containerization and isolation |
| OpenSSL | 3.2.0 | TLS encryption and signature utilities |

| Variable | Type | Domain | Description |
|---|---|---|---|
| content | bytes | $\{0,1\}^*$ | The raw digital content to be submitted. |
| metadata | JSON | Text | Structured contextual metadata (e.g., title, timestamp). |
| vc | JSON-LD | W3C VC | A Verifiable Credential issued to the creator. |
| creatorPrivateKey | bytes | Private Key | The private key used to sign the provenance record. |
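The submission inputs above can be combined in a short sketch. The steps are to hash the content with SHA-256, assemble a provenance record, serialize it deterministically, and sign the resulting digest. Several choices here are illustrative assumptions: the record layout, the field names, the placement of the creator's DID in `credentialSubject.id`, and a sorted-key JSON serialization that stands in for proper JSON-LD canonicalization. The signer is passed in as a callable so that ECDSA keyed by `creatorPrivateKey` can be plugged in. The demo uses an HMAC stand-in (`hmac_stand_in`, hypothetical) only to stay within the Python standard library. HMAC is a shared-key primitive and is not a substitute for a public-key signature.

```python
import hashlib
import hmac
import json
from typing import Callable, Dict

Signer = Callable[[bytes], bytes]


def build_record(content: bytes, metadata: Dict, vc: Dict) -> Dict:
    """Assemble an unsigned provenance record (hypothetical field names)."""
    return {
        "contentHash": hashlib.sha256(content).hexdigest(),
        "creator": vc["credentialSubject"]["id"],  # assumed location of the creator DID
        "credentialId": vc.get("id"),
        "metadata": metadata,
    }


def canonical_bytes(record: Dict) -> bytes:
    # Sorted keys and fixed separators make the bytes independent of dict insertion order.
    return json.dumps(record, sort_keys=True, separators=(",", ":"), ensure_ascii=False).encode("utf-8")


def sign_record(record: Dict, sign: Signer) -> Dict:
    """Sign the SHA-256 digest of the canonical record bytes."""
    digest = hashlib.sha256(canonical_bytes(record)).digest()
    return {"record": record, "signature": sign(digest).hex()}


def hmac_stand_in(key: bytes) -> Signer:
    # Demo-only placeholder; a deployment would sign with ECDSA using creatorPrivateKey.
    return lambda digest: hmac.new(key, digest, hashlib.sha256).digest()


if __name__ == "__main__":
    vc = {"id": "urn:uuid:example-credential", "credentialSubject": {"id": "did:example:alice"}}
    record = build_record(b"article body", {"title": "Example"}, vc)
    print(json.dumps(sign_record(record, hmac_stand_in(b"demo-key")), indent=2))
```

Deterministic serialization matters because signer and verifier must hash identical bytes. Two records with the same fields in a different key order should therefore yield the same signature.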

| Variable | Type | Domain | Description |
|---|---|---|---|
| content | bytes | $\{0,1\}^*$ | The raw content to be verified. |
| signedRecord | JSON | Text | The provenance record retrieved from IPFS. |
| vc | JSON-LD | W3C VC | The Verifiable Credential associated with the creator. |
| merkleProof | list[bytes] | Hashes | Sibling hashes for verifying inclusion under the Merkle root. |
| onChainMerkleRoot | bytes | Hash | The Merkle root stored on-chain. |
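A verifier holding these inputs could check integrity and inclusion as sketched below. The sketch rests on several assumptions. `signedRecord` is a dict whose `record` entry stores the hex SHA-256 digest of the content under a hypothetical `contentHash` field. The Merkle leaf is that raw digest, and internal nodes are SHA-256(left || right). The leaf's position in the batch (`leaf_index`, hypothetical) is also known, because `merkleProof` as listed carries sibling hashes but no left/right flags. Signature and VC checks are deliberately left out.

```python
import hashlib
from typing import Dict, List


def verify_inclusion(leaf: bytes, merkle_proof: List[bytes], leaf_index: int,
                     on_chain_root: bytes) -> bool:
    """Fold sibling hashes from leaf to root; each index bit says which side the sibling is on."""
    node, index = leaf, leaf_index
    for sibling in merkle_proof:
        if index & 1:  # current node is a right child
            node = hashlib.sha256(sibling + node).digest()
        else:          # current node is a left child
            node = hashlib.sha256(node + sibling).digest()
        index >>= 1
    return node == on_chain_root


def verify_content(content: bytes, signed_record: Dict, merkle_proof: List[bytes],
                   leaf_index: int, on_chain_root: bytes) -> bool:
    digest = hashlib.sha256(content).digest()
    # Integrity: the recomputed hash must match the hash committed in the record.
    if digest.hex() != signed_record["record"]["contentHash"]:
        return False
    # Inclusion: the same hash must lead to the root stored on-chain.
    return verify_inclusion(digest, merkle_proof, leaf_index, on_chain_root)
```

Both checks are needed. A matching hash alone shows only that the record describes this content, and a valid path alone shows only that some hash was anchored.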

| Security Goal | Formal Property | Cryptographic Basis |
|---|---|---|
| Integrity | $h_i = \text{SHA-256}(\mathit{content})$ | SHA-256 (FIPS 180-4) |
| Authenticity | $\mathrm{Verify}_{pk}(h_i, \sigma) = \mathrm{true}$ | ECDSA (RFC 6979) |
| Non-repudiation | Signature binds the sender identity | Public Key Infrastructure |
| Inclusion | $R = \mathrm{Merkle}(h_i, P)$ | Merkle Trees (binary hashes) |
| Privacy-preserving | Planned (not in the current design) | ZKPs (Groth16, zk-SNARKs) |
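The Authenticity row names ECDSA with deterministic nonces (RFC 6979). The following pure-Python, standard-library-only sketch shows what that involves. It assumes the secp256k1 curve, which the table does not name, and uses SHA-256 as in the Integrity row. It is written for readability, is not constant-time, and omits RFC 6979's negligible-probability retry when r or s is zero. It should not protect real keys; a deployment would rely on an audited library.

```python
import hashlib
import hmac
from typing import Optional, Tuple

# secp256k1 domain parameters (SEC 2); curve choice is an assumption.
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)
Point = Optional[Tuple[int, int]]  # None is the point at infinity


def point_add(a: Point, b: Point) -> Point:
    if a is None:
        return b
    if b is None:
        return a
    if a[0] == b[0] and (a[1] + b[1]) % P == 0:
        return None  # a + (-a)
    if a == b:
        lam = 3 * a[0] * a[0] * pow(2 * a[1], -1, P) % P  # tangent slope (curve a = 0)
    else:
        lam = (b[1] - a[1]) * pow(b[0] - a[0], -1, P) % P  # chord slope
    x = (lam * lam - a[0] - b[0]) % P
    return (x, (lam * (a[0] - x) - a[1]) % P)


def scalar_mult(k: int, point: Point) -> Point:
    result: Point = None
    while k:  # double-and-add
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def rfc6979_nonce(priv: int, h1: bytes) -> int:
    """Deterministic nonce per RFC 6979 Section 3.2 with HMAC-SHA256 (qlen = hlen = 256)."""
    x = priv.to_bytes(32, "big")
    h = (int.from_bytes(h1, "big") % N).to_bytes(32, "big")  # bits2octets
    v, k = b"\x01" * 32, b"\x00" * 32
    k = hmac.new(k, v + b"\x00" + x + h, hashlib.sha256).digest()
    v = hmac.new(k, v, hashlib.sha256).digest()
    k = hmac.new(k, v + b"\x01" + x + h, hashlib.sha256).digest()
    v = hmac.new(k, v, hashlib.sha256).digest()
    while True:
        v = hmac.new(k, v, hashlib.sha256).digest()
        candidate = int.from_bytes(v, "big")
        if 1 <= candidate < N:
            return candidate
        k = hmac.new(k, v + b"\x00", hashlib.sha256).digest()
        v = hmac.new(k, v, hashlib.sha256).digest()


def sign(priv: int, message: bytes) -> Tuple[int, int]:
    h1 = hashlib.sha256(message).digest()
    z = int.from_bytes(h1, "big") % N
    k = rfc6979_nonce(priv, h1)
    r = scalar_mult(k, G)[0] % N
    s = pow(k, -1, N) * (z + r * priv) % N
    return r, s


def verify(pub: Point, message: bytes, sig: Tuple[int, int]) -> bool:
    r, s = sig
    if not (1 <= r < N and 1 <= s < N):
        return False
    z = int.from_bytes(hashlib.sha256(message).digest(), "big") % N
    w = pow(s, -1, N)
    point = point_add(scalar_mult(z * w % N, G), scalar_mult(r * w % N, pub))
    return point is not None and point[0] % N == r
```

Deterministic nonces remove the dependence on a good random source at signing time. Nonce reuse or bias is the classic way ECDSA keys leak.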

| Framework | SSI Support | Merkle Anchoring | Off-Chain Storage | VC Integration | ZKP Support | GDPR-Aware Design | Key Limitation |
|---|---|---|---|---|---|---|---|
| VeriTrust (Ours) | Yes | Yes | Yes | Yes | Planned | Yes | Deployment maturity (in progress) |
| OriginTrail [80] | Yes | Yes | Yes | Partial | No | No | Primarily focused on supply-chain traceability |
| Content Authenticity Initiative [81] | No | Yes | Yes | No | No | Partial | Lacks decentralized identity and credential layer |
| C2PA Standard [82] | No | Yes (signatures + manifests) | Yes | No | No | Partial | Not blockchain anchored; identity is weakly bound |
| Sovrin / Hyperledger Indy [83] | Yes | No | Yes | Yes | No | Partial | No native content provenance anchoring |

| Threat Category | Risk Description | Mitigation Strategy/Control | Trade-Offs |
|---|---|---|---|
| Content Tampering | Alteration of content after publication | SHA-256 hashing, Merkle root anchoring, tamper-evidence checks [71] | Requires recomputation of hashes on updates |
| Credential Forgery | Attackers forge or reuse credentials | VCs signed with the issuer’s private key; DID-based public key resolution; revocation checks [67] | Reliant on timely issuer participation |
| Replay Attacks | Re-submission of valid but outdated records | Use of nonces + timestamps; duplicate-entry rejection via smart contract | Extra storage/processing overhead |
| Sybil Attacks (Identity Layer) | Mass creation of fake identities to flood the system | VC issuance restricted to vetted authorities; decentralized trust registries | Governance complexity, risk of gatekeeping |
| Off-Chain Content Deletion | Loss of IPFS data due to garbage collection or node churn | Multi-node pinning, decentralized pinning services, institutional mirrors, hybrid cloud fallback [87] | Additional storage/operational costs |
| Malicious or Colluding Issuers | Issuers collude or issue fraudulent credentials | Accreditation via multi-stakeholder trust registries (e.g., Sovrin, EBSI) [70] | Governance overhead, trust in registry |
| Key Compromise/DID Rotation | Stolen or expired private keys undermine authenticity | DID rotation (per W3C DID Core [58]); credential revocation registries | Requires timely revocation propagation |
| Contract-Level Invariants | Logic flaws or replay vulnerabilities in smart contracts | Formal verification (SMTChecker, Certora) [5]; monotonic timestamp and uniqueness constraints | Verification costs, developer expertise |
| Governance Risks in Permissioned Networks | Collusion among validator nodes undermines consensus | Diversity of operators, external audits, migration paths to public/consortium blockchains [79] | Higher coordination and governance overhead |
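The replay-attack and contract-invariant rows call for nonces, timestamps, duplicate-entry rejection, and monotonic timestamps. The sketch below models that contract logic in Python as an in-memory registry. In VeriTrust the equivalent checks would be Solidity `require` statements on Besu. The class name `AnchorRegistry`, the per-creator monotonic rule, and the 300-second freshness window are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class AnchorRegistry:
    max_skew_seconds: int = 300                                     # hypothetical freshness window
    seen: Set[Tuple[str, str]] = field(default_factory=set)        # (creator DID, nonce) pairs used
    roots: Set[str] = field(default_factory=set)                   # Merkle roots already anchored
    last_timestamp: Dict[str, int] = field(default_factory=dict)   # latest accepted time per creator

    def submit(self, creator: str, nonce: str, timestamp: int, merkle_root: str, now: int) -> None:
        """Accept a submission or raise ValueError; state changes only after every check passes."""
        if abs(now - timestamp) > self.max_skew_seconds:
            raise ValueError("stale or future-dated submission")
        if (creator, nonce) in self.seen:
            raise ValueError("replayed nonce")
        if merkle_root in self.roots:
            raise ValueError("duplicate Merkle root")
        if timestamp <= self.last_timestamp.get(creator, -1):
            raise ValueError("timestamp not monotonic for this creator")
        self.seen.add((creator, nonce))
        self.roots.add(merkle_root)
        self.last_timestamp[creator] = timestamp


if __name__ == "__main__":
    registry = AnchorRegistry()
    registry.submit("did:example:alice", "n-1", 1_000, "ab12", now=1_010)
    try:
        registry.submit("did:example:alice", "n-1", 1_020, "cd34", now=1_030)
    except ValueError as err:
        print(err)  # replayed nonce
```

Performing every check before any state update mirrors how a reverted transaction leaves contract storage unchanged.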

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Farhan, M.; Butt, U.; Sulaiman, R.B.; Alraja, M. Self-Sovereign Identities and Content Provenance: VeriTrust—A Blockchain-Based Framework for Fake News Detection. Future Internet 2025, 17, 448. https://doi.org/10.3390/fi17100448

Farhan M, Butt U, Sulaiman RB, Alraja M. Self-Sovereign Identities and Content Provenance: VeriTrust—A Blockchain-Based Framework for Fake News Detection. Future Internet. 2025; 17(10):448. https://doi.org/10.3390/fi17100448

Chicago/Turabian Style: Farhan, Maruf, Usman Butt, Rejwan Bin Sulaiman, and Mansour Alraja. 2025. "Self-Sovereign Identities and Content Provenance: VeriTrust—A Blockchain-Based Framework for Fake News Detection" Future Internet 17, no. 10: 448. https://doi.org/10.3390/fi17100448

APA Style: Farhan, M., Butt, U., Sulaiman, R. B., & Alraja, M. (2025). Self-Sovereign Identities and Content Provenance: VeriTrust—A Blockchain-Based Framework for Fake News Detection. Future Internet, 17(10), 448. https://doi.org/10.3390/fi17100448