Abstract

Today, AI is primarily narrow, meaning that each model or agent can only perform one task or a narrow range of tasks. However, systems with broad capabilities can be built by connecting multiple narrow AIs. Connecting various AI agents in an open, multi-organizational environment requires a new communication model. Here, we develop a multi-layered ontology-based communication framework. Ontology concepts provide semantic definitions for the agents’ inputs and outputs, enabling them to dynamically identify communication requirements and build processing pipelines. Crucially, the ontology concepts are stored on a decentralized storage medium that allows fast reading and writing. The multi-layered design offers flexibility by dividing a monolithic ontology model into semantic layers, allowing for the optimization of read and write latencies. We investigate the impact of this optimization through benchmarking experiments on three decentralized storage mediums (IPFS, Tendermint Cosmos, and Hyperledger Fabric) across a wide range of configurations. The increased read-write speeds allow AI agents to communicate efficiently in a decentralized environment using ontology principles, making it easier for AI to be used widely in various applications.

1. Introduction

There is enormous potential for innovation and disruptive influence across industries due to the widespread deployment of blockchain networks and AI technology [1]. Despite the hype, today and for the foreseeable future, AI is primarily narrow AI and not artificial general intelligence (AGI) [2]. In contrast to AGI, narrow AI is characterized by having a single capability [3], performing a single task. To automatically perform more complex, real-world tasks, multiple narrow AI agents can be interconnected in multi-agent architectures [4,5,6]. Thus, an efficient AI-to-AI communication paradigm is necessary to achieve the full potential of contemporary AI technologies. We can boost creativity, promote information exchange and cross-organizational collaboration, and accelerate the development and application of AI technologies by addressing the interoperability barrier [7,8,9].

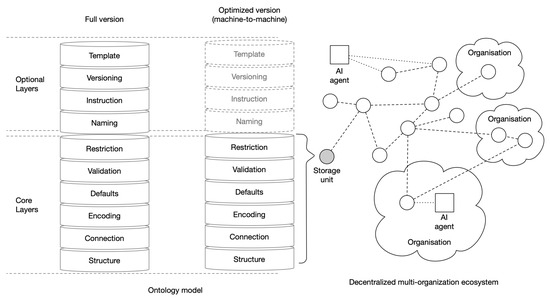

Blockchain networks’ decentralized structure provides transparency, immutability, and security, making it an excellent foundation for organizing and connecting AI agents [10]. However, the broad acceptance and effective application of AI within these networks are hampered by the absence of standardized protocols and smooth integration mechanisms. A decentralized ontology versioning paradigm would allow efficient communication and collaboration among AI agents in a decentralized environment [11,12]. For this, three prerequisites need to be fulfilled. Firstly, the message structure must be declared to facilitate interaction between the AI agents. Secondly, an intuitive approach should be available for software engineers to create linkages between agents according to their inputs and outputs. Lastly, it is essential to specify the operational environment for these agents, ensuring a setting where the agents can be implemented together with their input-output specifications (see [11] for details). The first two of these prerequisites are illustrated in Figure 1 and Figure 2.

Figure 1.

Message layers optimized for machine-to-machine interaction.

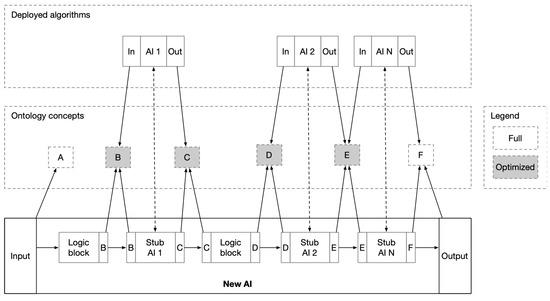

Figure 2.

Algorithm composition.

Figure 1 illustrates the modular architecture governing the exchange of messages between AI agents. The data exchanged among agents adhere to a flexible structure, allowing them to specify their inputs and outputs. This messaging framework is defined by ontology concepts, organized into layers as depicted in Figure 1, where the data represent an actual instance of an ontology concept.

The model’s versatility stems from its layered architecture, which facilitates the operation of multiple agents on different levels of data without requiring the transmission of the entire dataset. Each layer serves a distinct purpose:

- Template Layer: Optional. Offers partial views of the concept’s fields, enabling its use for various purposes where only subsets of fields are necessary.

- Versioning Layer: Optional. Manages requests for evolving the concept’s structure.

- Instruction Layer: Optional. Provides information on the concept’s purpose and usage.

- Naming Layer: Optional. Offers user-friendly labels for concept fields.

- Restriction Layer: Core. Contains cross-field validation rules for a concept.

- Validation Layer: Core. Stores rules to determine whether a given value can be stored in a particular field.

- Defaults Layer: Core. Provides default values for the concept’s fields, ensuring consistency.

- Encoding Layer: Core. Specifies how the fields of a concept are encoded, guiding AI agents on data parsing or encoding during transmission.

- Connection Layer: Core. Stores addresses of complex concepts declared in the structural layer, enabling retrieval of definitions from decentralized storage.

- Structural Layer: Core. Defines the fields of a message, including their names and data types, which may be primitive (e.g., string, number) or complex (e.g., another concept).

Only the core layers are needed in agent-to-agent interactions, even though a full ontology characterization calls for defining all layers (see Figure 1). Thus, agent-to-agent communication can be optimized by including only the core layers. The entire model version, including the optional layers, is shown on the left side of the following diagram. The optimized version, which contains only the minimum number of layers needed for AI agent communication, is shown in the middle of the diagram.
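The split between core and optional layers can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the `Concept` class, its `optimized` method, and the dictionary layout are assumptions introduced here, while the layer names follow the list above.

```python
from dataclasses import dataclass, field

# Layer names follow the list above; the grouping mirrors "Core" vs "Optional".
CORE_LAYERS = ("structural", "connection", "encoding",
               "defaults", "validation", "restriction")
OPTIONAL_LAYERS = ("naming", "instruction", "versioning", "template")


@dataclass
class Concept:
    """Hypothetical in-memory view of a concept: layer name -> layer payload."""
    name: str
    layers: dict = field(default_factory=dict)

    def optimized(self) -> "Concept":
        """Return a copy that keeps only the core layers."""
        kept = {k: v for k, v in self.layers.items() if k in CORE_LAYERS}
        return Concept(self.name, kept)


# A fully specified concept carries all ten layers (payloads left empty here).
full = Concept("ExampleConcept",
               {layer: {} for layer in CORE_LAYERS + OPTIONAL_LAYERS})
slim = full.optimized()
print(len(full.layers), len(slim.layers))  # 10 6
```

Under these assumptions, an agent that only needs to parse and validate a message would read six layer files instead of ten.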

The procedure for connecting AI agents from a logical and structural point of view is shown in Figure 2. Sequences of placeholders in the code connect AI agents, forming a composite AI. Each placeholder represents an AI agent, and its inputs and outputs are defined by, and consistent with, ontology concepts. This allows a software engineer to create links between agents at the base layer of the diagram and instantiate concepts. The middle layer of Figure 2 contains a decentralized medium where the ontology concepts are structurally stored. These concepts can be incorporated into the base-layer logical models of AIs or into the running instances of the agents placed in upper-layer execution environments. The concepts for the overall input and output need to be fully defined because they are intended for human interaction. The internal concepts (shown in gray in Figure 2), however, can use the optimized versions since the optional layers are not required in machine-to-machine communication between AI agents. Consequently, the longer the chain of composition, the more beneficial the application of the optimized model becomes.
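The composition idea can be illustrated with a small sketch. Everything below is hypothetical (the `Agent` type, the `compose` and `internal_concepts` helpers, and the toy agents); it only shows how matching output concepts to input concepts yields a pipeline, and why the number of internal concepts grows with the length of the chain.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Agent:
    """Hypothetical narrow agent: consumes one concept and produces another."""
    name: str
    input_concept: str
    output_concept: str
    run: Callable[[dict], dict]


def compose(agents: List[Agent]) -> Callable[[dict], dict]:
    """Chain agents after checking that adjacent concepts match."""
    for left, right in zip(agents, agents[1:]):
        if left.output_concept != right.input_concept:
            raise ValueError(
                f"{left.name} -> {right.name}: "
                f"{left.output_concept} != {right.input_concept}")

    def pipeline(message: dict) -> dict:
        for agent in agents:
            message = agent.run(message)
        return message

    return pipeline


def internal_concepts(agents: List[Agent]) -> List[str]:
    """Concepts exchanged only between agents (candidates for the optimized form)."""
    return [agent.output_concept for agent in agents[:-1]]


# Toy agents with made-up concept names.
detect = Agent("detect", "Image", "Objects", lambda m: {"objects": ["car"]})
count = Agent("count", "Objects", "Count", lambda m: {"count": len(m["objects"])})
report = Agent("report", "Count", "Report", lambda m: {"text": f"{m['count']} object(s)"})

chain = [detect, count, report]
print(compose(chain)({"pixels": []}))  # {'text': '1 object(s)'}
print(internal_concepts(chain))        # ['Objects', 'Count']
```

A chain of n agents has n - 1 internal concepts but only one external input and one external output, so the share of messages that can use the optimized form increases as the chain gets longer.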

Here, we address AI interoperability with a decentralized ontology versioning paradigm that enables the integration of AI algorithms within blockchain networks. Since communication speed is critical, our primary goal is to decrease communication delays and consequently improve the communication capabilities of AI agents. We propose and experimentally investigate a crucial optimization of the storage and delivery of ontology concepts. These analyses shed light on the ontology model’s efficacy and help develop cooperative, scalable AI systems for decentralized environments.

2. Use Case

To illustrate the role of the ontology, consider a use case where the ontology is designed to facilitate communication between AI agents in a smart city environment. In a smart city, multiple AI agents are responsible for managing different aspects of traffic. These include traffic signal controllers, autonomous vehicles, public transportation systems, and emergency response units. Efficient communication between embedded AI agents is critical for optimizing traffic flow, reducing congestion, and responding to incidents.

The ontology example is as follows:

- Traffic Signal Controller AI:
  - Ontology Concept: TrafficSignalStatus.
  - Fields: SignalID (string), Status (string: “Red”, “Yellow”, “Green”), Timestamp (datetime).
  - Description: The traffic signal controller AI updates the status of each traffic signal in real time. These data are encoded and transmitted using the TrafficSignalStatus concept defined in the ontology.

- Autonomous Vehicle AI:
  - Ontology Concept: VehicleStatus.
  - Fields: VehicleID (string), Location (GPS coordinates), Speed (numeric), Destination (GPS coordinates).
  - Description: Autonomous vehicles periodically share their status, including their current location and speed. This information is structured according to the VehicleStatus concept.

- Public Transportation System AI:
  - Ontology Concept: BusArrivalEstimate.
  - Fields: BusID (string), RouteID (string), EstimatedArrivalTime (datetime).
  - Description: The public transportation system provides real-time updates on bus arrival times, allowing other agents to adjust traffic management strategies accordingly.

- Emergency Response Unit AI:
  - Ontology Concept: EmergencyEvent.
  - Fields: EventID (string), Location (GPS coordinates), Severity (string: “Low”, “Medium”, “High”), Description (string).
  - Description: In case of an incident, the emergency response unit broadcasts information about the event, including the location and severity. Other agents, such as traffic signal controllers and autonomous vehicles, prioritize emergency response routes.
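To make the role of the structural and validation layers concrete, the TrafficSignalStatus concept can be written down as plain data and checked against an instance. This is a sketch under assumptions: the dictionary layout and the `validate` helper are invented for illustration, and Python types stand in for the ontology's data types.

```python
from datetime import datetime, timezone

# Structural layer: field name -> type. Validation layer: allowed values per field.
TRAFFIC_SIGNAL_STATUS = {
    "structural": {"SignalID": str, "Status": str, "Timestamp": datetime},
    "validation": {"Status": {"Red", "Yellow", "Green"}},
}


def validate(concept: dict, instance: dict) -> list:
    """Return a list of problems; an empty list means the instance conforms."""
    problems = []
    for name, expected in concept["structural"].items():
        if name not in instance:
            problems.append(f"missing field {name}")
        elif not isinstance(instance[name], expected):
            problems.append(f"{name} should be {expected.__name__}")
    for name, allowed in concept.get("validation", {}).items():
        if name in instance and instance[name] not in allowed:
            problems.append(f"{name} must be one of {sorted(allowed)}")
    return problems


good = {"SignalID": "S-17", "Status": "Green",
        "Timestamp": datetime.now(timezone.utc)}
bad = {"SignalID": "S-17", "Status": "Blue"}  # wrong value, no timestamp

print(validate(TRAFFIC_SIGNAL_STATUS, good))  # []
print(validate(TRAFFIC_SIGNAL_STATUS, bad))
```

The same pattern would apply to VehicleStatus, BusArrivalEstimate, and EmergencyEvent; only the field tables change.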

An example of a step-by-step communication flow is as follows:

- The Traffic Signal Controller AI detects a change in the signal status at a major intersection and updates the TrafficSignalStatus concept.

- Autonomous vehicles approaching the intersection receive the updated TrafficSignalStatus, adjust their speed, and plan their routes accordingly.

- A bus on the public transportation system calculates its updated arrival time due to the traffic signal change and updates the BusArrivalEstimate concept.

- An emergency occurs nearby, and the Emergency Response Unit AI broadcasts an EmergencyEvent concept. The Traffic Signal Controller AI and Autonomous Vehicle AI reprioritize routes to clear the way for emergency vehicles.

- The data exchanged among these AI agents are stored and retrieved from a decentralized storage system (e.g., IPFS, Tendermint Cosmos, or Hyperledger Fabric), ensuring fast and reliable communication.
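The flow above can be mimicked with a toy publish/subscribe loop. The `Medium` class is a hypothetical in-memory stand-in for the decentralized storage system, and the handlers, identifiers, and delay values are invented for illustration; a real deployment would read and write concept instances through IPFS or a blockchain client instead.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone


class Medium:
    """Hypothetical in-memory stand-in for the decentralized medium."""

    def __init__(self):
        self.handlers = defaultdict(list)  # concept name -> subscribed callbacks
        self.log = []                      # order in which concepts were published

    def subscribe(self, concept, handler):
        self.handlers[concept].append(handler)

    def publish(self, concept, instance):
        self.log.append(concept)
        for handler in self.handlers[concept]:
            handler(instance)


medium = Medium()
vehicle = {"speed": 50, "yielding": False}
signal = {"priority_route": None}


def vehicle_on_signal(msg):
    # The vehicle stops for anything other than a green light.
    if msg["Status"] != "Green":
        vehicle["speed"] = 0


def transit_on_signal(msg):
    # The transit agent republishes its estimate after a signal change.
    eta = msg["Timestamp"] + timedelta(minutes=3)
    medium.publish("BusArrivalEstimate",
                   {"BusID": "B-4", "RouteID": "R-12", "EstimatedArrivalTime": eta})


def on_emergency(msg):
    # Vehicles yield and the signal controller prioritizes the incident location.
    vehicle["yielding"] = True
    signal["priority_route"] = msg["Location"]


medium.subscribe("TrafficSignalStatus", vehicle_on_signal)
medium.subscribe("TrafficSignalStatus", transit_on_signal)
medium.subscribe("EmergencyEvent", on_emergency)

now = datetime.now(timezone.utc)
medium.publish("TrafficSignalStatus",
               {"SignalID": "S-17", "Status": "Red", "Timestamp": now})
medium.publish("EmergencyEvent",
               {"EventID": "E-1", "Location": (46.77, 23.59),
                "Severity": "High", "Description": "Collision"})
print(medium.log)
```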

By utilizing the multi-layered ontology framework, the communication model ensures that only the necessary layers are used for machine-to-machine communication, reducing latency and improving efficiency. For example, in the scenario described above, core layers such as the structural, connection, and encoding layers are used, while optional layers such as the naming and instruction layers are omitted, enhancing read and write speeds.

3. Related Works

Recent advancements in communication models for AI agents have primarily focused on improving the capabilities of large language models (LLMs) and their application in multi-agent systems. Birr et al. [13] introduced an affordance-based task planning system for LLMs, enhancing autonomous task execution. Similarly, Chen et al. [14] and Guo et al. [15] explored frameworks facilitating multi-agent collaboration. However, these studies primarily concentrated on agent systems centered around LLMs without considering the complexities that arise when each AI agent encompasses diverse models potentially running on different nodes. This creates a meshed network of agents that must communicate over the internet, where communication latencies can pose significant challenges.

Our work diverges from these approaches by emphasizing the dynamic optimization of communication storage to address the latency issues inherent in decentralized environments. While Gao et al. [16] examined tool use efficiency with chain-of-abstraction reasoning, and Cobbe et al. [17] focused on reasoning and verification tasks, these studies did not tackle the critical problem of optimizing data exchange mechanisms across distributed systems.

Additionally, Liu et al. [18,19] and Tian et al. [20] investigated conversational agents and AI safety, respectively, without addressing the need for robust communication protocols in a decentralized network of heterogeneous AI models. Our research specifically targets the optimization of decentralized storage mediums, such as IPFS, Tendermint Cosmos, and Hyperledger Fabric [21], to enhance read and write latencies, thereby facilitating more efficient AI agent communication.

In conclusion, while existing research has made significant strides in various aspects of AI and multi-agent systems, it often overlooks the complexities and latency issues associated with a decentralized mesh of diverse AI models. Our work makes a unique contribution by focusing on dynamic storage optimization for efficient communication, enabling the seamless integration and scalability of AI systems across various applications, even in the face of internet communication delays.

Several studies have explored incorporating blockchain technology into various domains, including healthcare, smart cities, e-health, robotics, and cardiovascular medicine. Tagde et al. reviewed the use of blockchain and AI in e-health [22], while Lopes and Alexandre provided an overview of blockchain integration with robotics and AI [23], and Hussien et al. explored the trends and opportunities of blockchain technology in the healthcare industry [24].

Rana et al. [25] introduced a decentralized access control model that combines blockchain technology and AI to enhance secure interoperability in healthcare. Their proposed solution aimed to ensure the privacy, integrity, and confidentiality of healthcare data while allowing efficient and seamless sharing between various providers and systems. Krittanawong et al. also addressed the challenges involved in providing accessibility to healthcare data, specifically in cardiovascular medicine, while maintaining privacy and security. They discussed blockchain integration with AI and potential applications in cardiovascular medicine [26]. Similar to our work, these studies dealt with blockchain-mediated interoperability but differed in their focus on healthcare and organizations instead of AI agents.

In related work, Singh et al. (2020) reviewed the intersection of blockchain technology and AI in Internet of Things (IoT) networks for sustainable smart cities [27]. They highlighted how this connection can support data security, privacy, and general efficiency in applications (IoT devices) related to smart cities. Interconnected IoT devices pose—at a high level—similar challenges to the interconnected AI agents we propose here, in that reliable machine-to-machine communication with flexible content is necessary.

Shifting the focus to integrating blockchain with semantic web technologies, Matthew, Auer, and Domingue presented a framework for the symbiotic development of these domains [28]. Graux et al. investigated the use of blockchain with RDF data and SANSA to profit from kitties on Ethereum [29]. Hoffman et al. introduced the concept of smart papers as dynamic publications stored on the blockchain [30]. Kim et al. proposed the engineering of an ontology of governance at the blockchain protocol level [31], while Sikorski et al. examined the application of blockchain technology in the chemical industry, specifically in the machine-to-machine electricity markets [32].

Sicilia and Visvizi discussed the opportunities and policy-making implications of integrating blockchain with OECD data repositories [33]. In particular, storing ontology concepts and RDF data for knowledge graphs on distributed systems has attracted research interest [34,35]. Cano-Benito et al. explored the integration of blockchain and semantic web technologies, highlighting their synergies [36], while Kruijff and Weigand used enterprise ontology to understand blockchain technology [37]. However, there are limited quantitative experimental results, with only a few articles reporting on benchmarking and comparing storage and compression algorithms for RDF data [38,39].

Tara et al. comprehensively compared read-write performance across three decentralized storage mediums [11,12]. Specifically, IPFS, Hyperledger Fabric, and Tendermint Cosmos were compared while varying the number of peers (or nodes) and concept size. They concluded that IPFS provides the best performance, both for reading and writing speeds, especially as the number of peers increases.

4. Materials and Methods

A series of experiments was carried out to assess how well various decentralized storage backends preserved ontology concepts. These experiments were crafted to store and retrieve concepts within a decentralized environment. The performance was measured by varying four independent parameters—concept sizes, infrastructure topology configurations, and storage engines—for each decentralization technology.

The current paper extends the results in [11] by introducing storage optimization (discarding optional layers during machine-to-machine communication; see Figure 1) and studying its effect across the above-mentioned parameters.

4.1. Concept Sizes

Each concept comprises ten files, one for each concept layer. In addition, as shown in Figure 1, a meta-file holds details about the concept and its creator. All of these files are kept in decentralized storage. To test the scalability of the system, we varied the number of properties in the structural layer from ten to 10,000 in exponential steps. Because each property in the structural layer has a unique name, the number of properties in the naming layer varies accordingly. The upper limit of the number of properties in our system is on the order of magnitude of the 30,000 properties listed in ECLASS [40]. The final concept sizes, measured in bytes, were almost KB, KB, KB, MB, and MB after testing.
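A synthetic concept generator along these lines can be sketched as follows. The JSON encoding, the field naming scheme, and the helper names are assumptions made for illustration, so the resulting byte counts will not match the sizes measured in the experiments; the sketch only shows how the structural and naming layers grow together with the property count.

```python
import json


def make_layers(n_properties: int) -> dict:
    """Build hypothetical structural and naming layers with n properties each."""
    names = [f"field_{i}" for i in range(n_properties)]
    return {
        "structural": {name: "string" for name in names},
        "naming": {name: f"Field {i}" for i, name in enumerate(names)},
    }


def size_bytes(layer: dict) -> int:
    """Size of one layer when serialized as UTF-8 JSON (an assumed encoding)."""
    return len(json.dumps(layer).encode("utf-8"))


# Exponential steps from ten to 10,000 properties.
sizes = {}
for n in (10, 100, 1_000, 10_000):
    layers = make_layers(n)
    sizes[n] = sum(size_bytes(layer) for layer in layers.values())
    print(n, sizes[n])
```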

4.2. Peer-to-Peer Configurations

IPFS is a decentralized storage system that uses content hashing to create file addresses for storage between network nodes. This architecture ensures immutability because any changes made to the content would also affect the hash of the file. In particular, the network operates without requiring consensus across all nodes.
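The link between content hashing and immutability can be shown with a toy content-addressed store. This is a simplification: real IPFS addresses are content identifiers (CIDs) built on multihash and chunked data, whereas the sketch below uses a plain SHA-256 hex digest, and the `ContentStore` class is hypothetical.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: address = SHA-256 hex digest of the content."""

    def __init__(self):
        self._blocks = {}

    def put(self, content: bytes) -> str:
        address = hashlib.sha256(content).hexdigest()
        self._blocks[address] = content
        return address

    def get(self, address: str) -> bytes:
        content = self._blocks[address]
        # Any reader can verify integrity by re-hashing what it received.
        if hashlib.sha256(content).hexdigest() != address:
            raise ValueError("content does not match its address")
        return content


store = ContentStore()
v1 = store.put(b'{"SignalID": "string"}')
v2 = store.put(b'{"SignalID": "string", "Status": "string"}')

print(v1 != v2)        # True: changed content gets a new address
print(store.get(v1))   # the original bytes are still reachable, unchanged
```

Because the address is derived from the bytes themselves, an "update" never overwrites existing content; it produces a new address, which is why no network-wide agreement on the content is needed.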

Using IPFS as the underlying environment, we report the impact of the adjustable parameters listed in Table 1 on the performance of the proposed model. The findings provided in this section were averaged over several iterations. A total of 2700 iterations were carried out, requiring 19.5 h of continuous simulation time.

4.2.1. Infrastructure Topology

Two IPFS topologies were tested. The first consists only of a network of IPFS daemons, or regular peers, which share data among themselves only upon request. In the second, a cluster network runs alongside the daemon network, with one cluster peer corresponding to each peer in the daemon network. By emitting signals within the cluster network, files received by one member of the daemon network are distributed to all peers within that network, which improves replication and fault tolerance.

4.2.2. Storage Engines

As shown in Table 1, we tested three distinct IPFS storage engines: FlatFs, BadgerDs, and Lowpower.

Table 1.

IPFS simulation parameters.


| Parameter | Values |
|---|---|
| Network setup | Default, Cluster |
| Topology (peers count) | 2, 4, 8 |
| Concept size (properties count) | |
| Operation | Read, Write |
| Datastore engine | BadgerDS, FlatFs, Lowpower Profile |
| Warm-up iterations | 5 (each running for 10 s) |
| Execution iterations | 10 (each running for 30 s) |

4.2.3. Public Blockchain

Tendermint Cosmos is a blockchain where each node in the network records transactions in a predetermined sequence. A proof-of-stake consensus algorithm, which verifies members based on their stake in the network, is used to reach consensus on transaction order.

The transaction and memory pool variation experiments comprised a total of 1800 iterations and required 12 h of continuous simulation, while the datastore engine variation studies comprised 2700 iterations and required 18 h of continuous simulation.

4.2.4. Infrastructure Topology

There is only one kind of node in the Tendermint network, instantiated according to the network size. But there are two modes of operation for the nodes: Sync and Commit. They are covered in more detail in the Results section.

4.2.5. Storage Engines

We tested five different storage engines for Tendermint (see Table 2): ClevelDb/GoLevelDb, BadgerDb, BoltDb, RocksDb, and MemDb.

Table 2.

Tendermint simulation parameters.

4.2.6. Permissioned Blockchain

Hyperledger Fabric, a permissioned blockchain platform, uses cryptographic certificates to authenticate validators. Nodes take on specialized roles, such as transaction orderer, validator, and certificate authority, and the issued certificates enable validators to participate in network operations. This model is well suited to business settings.

Using Hyperledger Fabric as the underlying environment, we describe in this section the effects of the different adjustable parameters provided in Table 3 on the performance of the proposed model.

The results were averaged over several iterations. For the transaction and block size variation assessments, a total of 1800 iterations were carried out over the course of 12 h of continuous simulations. Additionally, six hours of continuous simulation time and 900 iterations were needed for the datastore engine variation studies.

4.2.7. Infrastructure Topology

Since Hyperledger Fabric is a permissioned blockchain, several kinds of nodes are needed to create a functional network. Each configuration includes a single orderer node, which receives signed transactions from peers, packages them into blocks, and adds them to the blockchain. The peer nodes, which are responsible for executing, validating, and signing transactions, are the component that varies in this topology. They were divided into two organizations, each with a single certificate authority node responsible for issuing certificates that verify the legitimacy and identity of the organization’s nodes.

4.2.8. Storage Engines

Table 3 shows the two different configurations that were used to test the Hyperledger framework: LevelDb and CouchDb.

Table 3.

Hyperledger Fabric simulation parameters.


| Parameter | Values |
|---|---|
| Topology (peers count) | 2, 4, 8 |
| Concept size (properties count) | |
| Operation | Read, Write |
| Block size | Half, Double |
| Transaction pool | Half, Double |
| Datastore engine | GolevelDB, CouchDB |
| Warm-up iterations | 5 (each running for 10 s) |
| Execution iterations | 10 (each running for 30 s) |

4.3. Hardware Configuration

The experiments were conducted using the Java Microbenchmark Harness (JMH). The experimental procedure strictly followed the guidelines outlined in [41]. Each experiment included five warm-up iterations of 10 s each, followed by ten measurement iterations, each lasting 30 s. The experiments were executed on a machine equipped with an Intel Core i7-7700HQ CPU, 24 GB of RAM, and 1 TB of SSD storage.
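The warm-up and measurement protocol can be approximated in a few lines. This is not JMH and not the benchmark code used in the study; it is a simplified Python analogue in which the function names, the time-boxed loop, and the choice of mean latency per call as the reported metric are all assumptions.

```python
import statistics
import time


def run_iteration(operation, duration_s):
    """Call `operation` repeatedly for about `duration_s` seconds.

    Returns the mean latency per call in seconds.
    """
    calls = 0
    start = time.perf_counter()
    while True:
        operation()
        calls += 1
        elapsed = time.perf_counter() - start
        if elapsed >= duration_s:
            return elapsed / calls


def benchmark(operation, warmup=5, warmup_s=10.0, measure=10, measure_s=30.0):
    """Discard warm-up iterations, then summarize the measured ones."""
    for _ in range(warmup):
        run_iteration(operation, warmup_s)  # result intentionally ignored
    samples = [run_iteration(operation, measure_s) for _ in range(measure)]
    return statistics.mean(samples), statistics.stdev(samples)


# Scaled-down run so the example finishes quickly; the defaults mirror
# the 5 x 10 s warm-up and 10 x 30 s measurement schedule described above.
mean_s, stdev_s = benchmark(lambda: sum(range(1000)),
                            warmup=2, warmup_s=0.01, measure=3, measure_s=0.02)
print(f"mean latency: {mean_s * 1e6:.1f} us (stdev {stdev_s * 1e6:.1f} us)")
```

Warm-up iterations matter on the JVM in particular because just-in-time compilation changes performance during the first seconds of a run; the Python version only reproduces the schedule, not that effect.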

5. Results

Here, we present the experimental findings assessing the replication environments in terms of their suitability for the proposed model and their optimal parameter configurations. The experiments assessed the dependence of the latencies on the four types of parameters mentioned in the previous section for both retrieving and persisting concepts within the underlying environments.

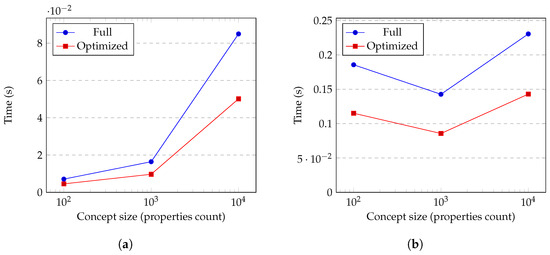

We present the outcomes for 360 distinct experimental configurations. The configuration parameters that were used to obtain the results are specified in Table 1, Table 2 and Table 3. Each experiment consisted of five warm-up iterations and ten execution iterations, with time limits of 10 s and 30 s, respectively. The results were first averaged over the ten execution runs. However, to provide a better overview of the high-level behavior related to the architectures and configuration parameters, we averaged the results over concept sizes without losing critical information (see Figure 3). Similar relationships between concept sizes and latencies also held for the other architectures and configurations and thus are not shown. The values from the 64 h of nonstop simulation were averaged to obtain the results.

Figure 3.

IPFS BadgerDS simulation results. (a) Read. (b) Write.

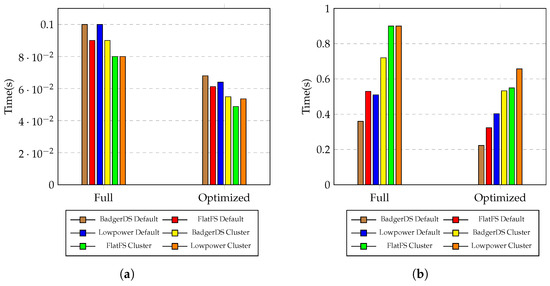

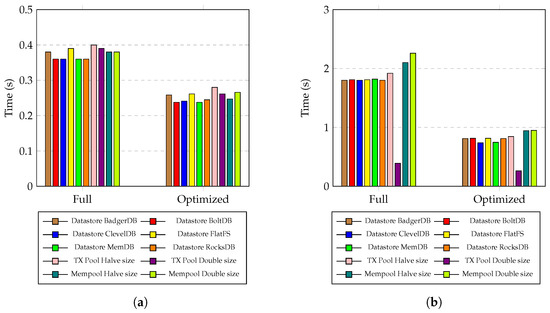

Observation 1: Figure 4 shows the results for the IPFS configurations. On average, persisting the concepts was twice as expensive as reading them. However, compared to Figure 5 and Figure 6, the IPFS setup provided the best throughput for both reading and writing.

Figure 4.

IPFS results. (a) IPFS read results. (b) IPFS write results.

Figure 5.

Tendermint results. (a) Tendermint read results. (b) Tendermint write results.

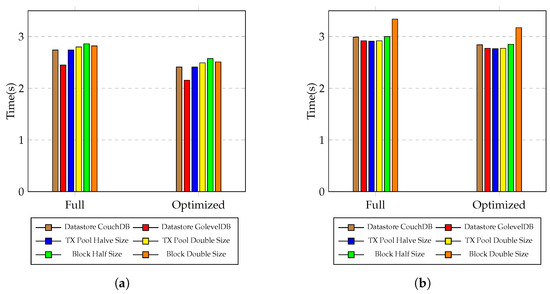

Figure 6.

Hyperledger results. (a) Hyperledger read results. (b) Hyperledger write results.

Observation 2: As demonstrated by the results for the IPFS configuration in Figure 4a, depending on the storage engine, the optimized version of the model achieved a read performance improvement of 30% to 40% compared to the full version. But for persisting the data, as shown in Figure 4b, the optimized model’s improvement was smaller, ranging from 20% to 25%.

Observation 3: Figure 4 also shows that, although IPFS exhibited a linear relationship between concept size and latency, the various storage engines responded differently to the optimized and full versions of the model.

Observation 4: Figure 5 shows the results for Tendermint Cosmos, and it can be seen that the proposed model behaved predictably and stably for both read and write use cases across all configurations. As expected, retrieving information was, on average, three times faster than persisting it.

Observation 5: As demonstrated by the results for the Tendermint configuration in Figure 5a, the optimized version of the model achieved improvements of 30% to 35% in reading concepts compared to the full version, whereas the improvement for persisting the data ranged from 55% to 59% (see Figure 5b).

Observation 6: Figure 6 summarizes the results for the tested Hyperledger Fabric configuration parameters. The proposed model was stable and behaved predictably across all measured configurations, with a slight improvement observed for the CouchDB storage configuration. In addition, write latencies were less than 20% higher than read latencies.

6. Discussion

Here, we have presented a novel communication model that uses a multi-layered ontology approach to improve communication between AI agents in a decentralized, multi-organization context.

We examined the suitability of various blockchain and decentralized storage technologies, such as Tendermint Cosmos, Hyperledger Fabric, and IPFS, as means of communication for AI agents. We identified the solutions that provide the best outcomes for upholding ontology concepts and highlighted possible communication and resource usage bottlenecks through benchmarking and profiling.

By breaking down the monolithic ontology model into semantic layers, our proposed design enables flexibility, mobility, and efficiency in data processing. This flexibility allows for significant optimization of read and write latencies since, in machine-to-machine communication, only the core layers are necessary. Thus, the template, versioning, instruction, and naming layers can be excluded (see Figure 1).

We observed robust improvements for IPFS and Tendermint in both read speeds (30–40% and 30–35%, respectively; see Observations 2 and 5) and write speeds (20–25% and 55–59%, respectively; see Observations 2 and 5). However, for Hyperledger Fabric, the read improvement was only 9–12% (Observation 7), and the improvement for writing was even smaller, at 5%. A similar pattern was observed when comparing read and write latencies independently of the optimization. Hyperledger read concepts only 10–18% faster than it wrote them (see Observation 6). In contrast, reading was about twice as fast as writing on IPFS (see Observation 1) and about three times as fast on Tendermint (see Observation 4). This discrepancy suggests that a component responsible for transporting the information in Hyperledger heavily impacts overall system performance.
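For readers comparing these figures, the following sketch shows one common way to express such results. It assumes that an "improvement" is the fraction of latency removed by the optimization and that read/write comparisons are latency ratios; the text does not state the exact formulas used, and the latencies below are illustrative numbers, not measurements.

```python
def improvement(full_latency: float, optimized_latency: float) -> float:
    """Fraction of latency removed by the optimized model (assumed definition)."""
    return (full_latency - optimized_latency) / full_latency


def speedup(slow_latency: float, fast_latency: float) -> float:
    """How many times faster the fast operation is than the slow one."""
    return slow_latency / fast_latency


# Illustrative values only (milliseconds).
print(f"{improvement(100.0, 65.0):.0%}")  # 35%
print(f"{speedup(30.0, 10.0):.1f}x")      # 3.0x
```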

This research study utilizes ontology principles to improve AI agent communication in decentralized systems. Blockchain technology inherently involves substantial resource and energy costs. However, our proposed multi-layered architecture mitigates these issues by ensuring that each agent can efficiently access the necessary information without incurring the overhead associated with storing or retrieving extraneous data. By optimizing data access and minimizing unnecessary storage, we aim to balance the benefits of blockchain’s decentralized nature with a pragmatic approach to resource management. The multi-layered framework streamlines the interaction and setup between humans and machines and promotes fast communication between machines, enabling easy data exchange and identification among AI entities. This nuanced use of blockchain addresses some typical concerns regarding its sustainability and operational efficiency in practical applications. The proposed model provides a thorough methodology that can open the door for a broader use of AI across various applications.

Future Research

Avenues for future research could include the following:

- Performance Optimization: Further investigation into optimization methodologies could enhance the efficacy of AI agent communication in decentralized systems. This may include investigating ways to improve scalability, reduce resource bottlenecks, and accelerate data transfer rates among agents.

- Security and Privacy Considerations: As decentralized systems raise security and privacy concerns, future research could focus on developing robust protocols that safeguard confidential data during communication among AI agents. This could involve looking at privacy-preserving protocols, access control systems, and encryption techniques.

- Integration with Emerging Technologies: Given the rapid rate at which new technologies are developing, it would be advantageous to look into how the proposed communication model can integrate with technologies such as edge computing, the Internet of Things (IoT), or federated learning. This could enable AI agents to collaborate and communicate effectively under difficult conditions.

- Real-World Deployment and Case Studies: Case studies and real-world implementations can be conducted to obtain useful information on the suitability and effectiveness of the proposed communication model. This could involve applying the model in particular industries or enterprises and evaluating how well it functions, how user-friendly it is, and how it influences choices.

- Standardization and Interoperability: To facilitate widespread adoption and smooth communication among AI agents from different platforms or organizations, future research could also focus on standardizing communication protocols and promoting interoperability. This may involve developing common ontologies, semantic mappings, and communication protocols in order to facilitate smooth integration and cooperation among AI agents.

By pursuing these research avenues, we can improve AI agents’ capabilities in decentralized environments, encouraging their use across a range of industries and advancing the field of AI communication.

Author Contributions

Software, N.N.; Writing—original draft, A.T.; Supervision, H.K.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Conflicts of Interest

Nicolae Natea is an employee of Openfabric Network SRL. The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).