TinyML Algorithms for Big Data Management in Large-Scale IoT Systems

Abstract

1. Introduction

2. Background and Related Work

2.1. Big Data Challenges, Internet of Things, and TinyML

The Big Data Dilemma in IoT

- Storage Capacity and Scalability: Traditional storage systems grapple with the ever-growing influx of data from IoT sources, necessitating the development of more scalable and adaptive solutions.

- Data Processing and Analysis: The heterogeneity of IoT data requires sophisticated, adaptable algorithms and infrastructures to derive meaningful insights efficiently.

- Data Transfer and Network Load: Ensuring efficient and timely data transmission across a myriad of devices without overburdening the network infrastructure remains a paramount concern.

- Data Integrity and Security: As data become increasingly decentralized across devices, ensuring their authenticity and safeguarding them from potential threats are critical.

2.2. TinyML

2.2.1. TinyML as a Novel Facilitator in IoT Big Data Management

- Localized On-Device Processing: TinyML facilitates local data processing, markedly reducing the need for continuous data transfers, thus optimizing network bandwidth and improving system responsiveness.

- Intelligent Data Streamlining: With the ability to perform preliminary on-device analysis, TinyML enables IoT systems to discern and selectively transmit pivotal data, ensuring efficient utilization of storage resources.

- Adaptive Learning Mechanisms: IoT devices embedded with TinyML can continuously refine their data processing algorithms, fostering adaptability to dynamic data patterns and environmental changes.

- Reinforced Security Protocols: By integrating real-time anomaly detection at the device level, TinyML significantly enhances the security framework, providing an early detection system for potential data breaches or threats.
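The selective-transmission and device-level anomaly detection ideas above can be illustrated with a minimal sketch. It assumes a simple rolling z-score rule, which is a stand-in chosen for illustration and not necessarily the detector used in this work; the class name `AnomalyGate`, its parameters, and the sample readings are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class AnomalyGate:
    """Forward a reading only when it deviates strongly from recent history.

    Hypothetical helper: a rolling z-score rule stands in for an on-device model.
    """

    def __init__(self, window=20, z_threshold=3.0, warmup=5):
        self.history = deque(maxlen=window)  # bounded memory for small devices
        self.z_threshold = z_threshold
        self.warmup = warmup  # readings needed before any decision is made

    def should_transmit(self, value):
        transmit = False
        if len(self.history) >= self.warmup:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma == 0:
                # Flat history: any change at all counts as unusual.
                transmit = value != mu
            else:
                transmit = abs(value - mu) / sigma > self.z_threshold
        self.history.append(value)
        return transmit


# Illustrative temperature readings with one obvious outlier.
readings = [21.0, 21.1, 20.9, 21.0, 21.2, 21.1, 35.0, 21.0]
gate = AnomalyGate()
sent = [r for r in readings if gate.should_transmit(r)]
print(sent)
```

With these sample values only the outlier is forwarded, so seven of the eight readings never leave the device. A real deployment would likely also send periodic heartbeats or summaries so that the server can tell a quiet sensor from a dead one.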

2.2.2. Characteristics of Large-Scale IoT Systems

2.2.3. Applications of TinyML on Embedded Devices

2.3. TinyML Algorithms

2.4. Data Management Techniques Utilizing TinyML in IoT Systems

3. Methodology

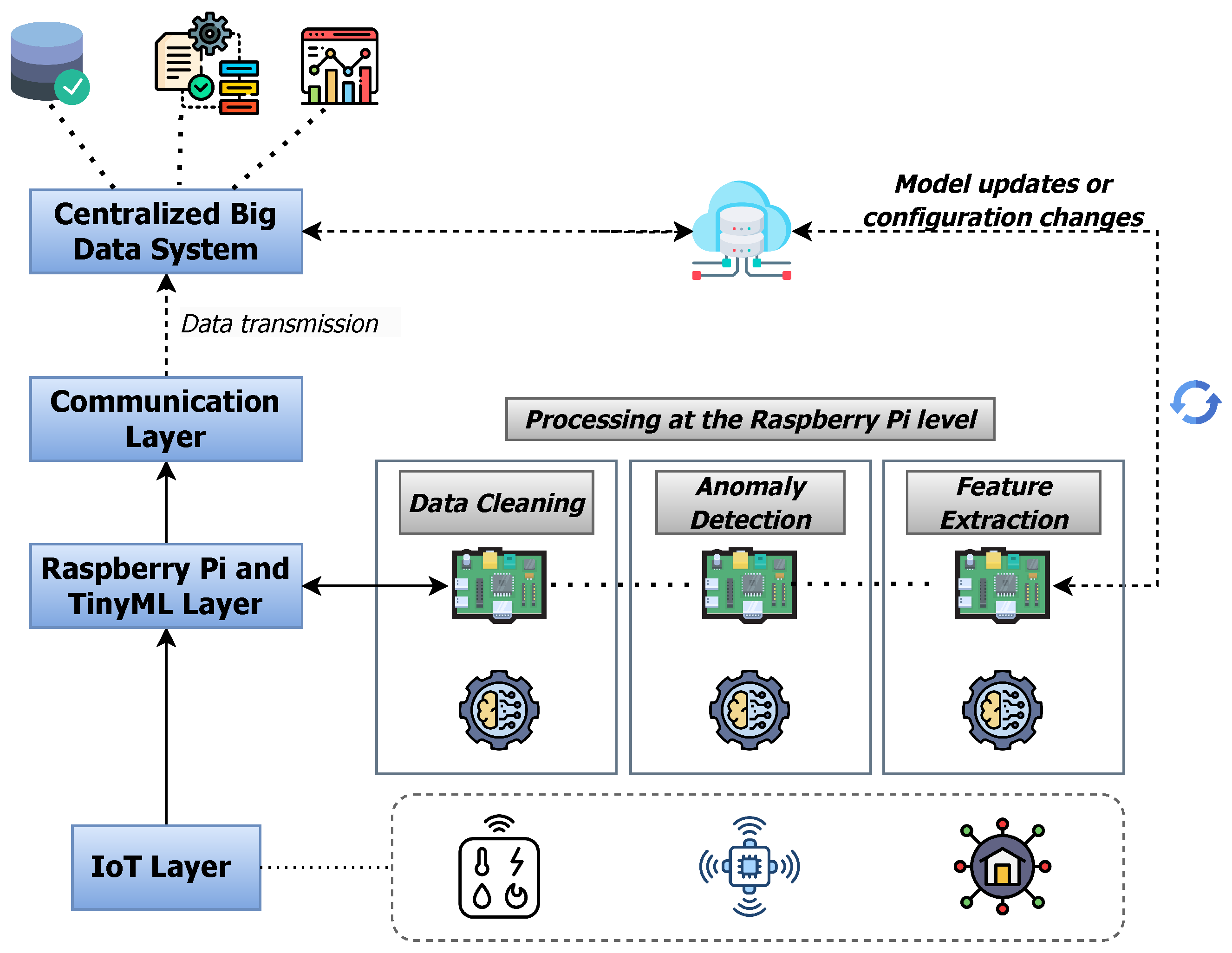

3.1. Advantages of TinyML

- Reduced Latency: Processing data on the Raspberry Pi avoids the round trip of transmitting data to a centralized server and then fetching results, which enables real-time or near-real-time responses.

- Decreased Bandwidth Consumption: Only crucial or processed data may be sent to the central server, reducing network load.

- Enhanced Privacy and Security: On-device processing keeps raw data local, which improves data privacy. Additionally, Raspberry Pis can be equipped with encryption tools to secure data before any transmission.

- Energy Efficiency: Although Raspberry Pis consume more energy than simple sensors, processing data on them locally is far more energy-efficient than transmitting vast amounts of raw data to a distant server.

- Operational Resilience: Raspberry Pis equipped with TinyML can continue operations even when there is no network connectivity.

- Scalability and Flexibility: Raspberry Pis can be equipped with a variety of tools and software, allowing custom solutions for different data types and processing needs.

3.2. Big Data Challenges and Problems Addressed

- Volume: Local processing reduces data volume heading to centralized systems.

- Velocity: Raspberry Pis can handle high-frequency data, making real-time requirements attainable.

- Variety: Given their flexibility, Raspberry Pis can be customized to manage a multitude of data formats and types.

- Veracity: Raspberry Pis can improve data quality by filtering anomalies or errors before transmission.

- Value: On-device processing extracts meaningful insights, ensuring only the most relevant data are transmitted to central systems.
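As a rough illustration of how local processing can cut the volume sent to central systems, the sketch below collapses fixed-size windows of raw readings into compact summary records. The function names, the window size, and the sample humidity values are hypothetical and serve only to show the idea.

```python
from statistics import mean


def summarize_window(samples):
    """Collapse a window of raw readings into one compact summary record."""
    return {
        "count": len(samples),
        "min": min(samples),
        "max": max(samples),
        "mean": round(mean(samples), 2),
    }


def summarize_stream(samples, window_size):
    """Yield one summary per window; the last window may be shorter."""
    for start in range(0, len(samples), window_size):
        yield summarize_window(samples[start:start + window_size])


# Eight raw humidity readings become two summary records.
raw = [48.0, 48.5, 49.0, 47.5, 60.0, 61.0, 59.0, 60.0]
summaries = list(summarize_stream(raw, window_size=4))
for record in summaries:
    print(record)
```

Here eight raw values are reduced to two records. The trade-off is that fine-grained detail inside each window is lost, so the window size would need to match how quickly the monitored quantity changes.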

3.3. Framework Architecture

3.4. Hardware Configuration

- Raspberry Pi Devices:

  - 10 × Raspberry Pi 4 Model B: These are the workhorses of our setup, deployed for edge computing and intensive data processing tasks.

  - 5 × Raspberry Pi Zero W: These smaller units are used for less demanding tasks, primarily for collecting sensor data.

- Sensor Array:

  - 15 × DHT22 Temperature and Humidity Sensors: Key for monitoring environmental conditions, providing accurate temperature and humidity readings.

  - 10 × MPU6050 Gyroscope and Accelerometer Sensors: Employed to track motion and orientation, crucial for applications requiring movement analysis.

  - 8 × LDR Light Sensors: These sensors are tasked with detecting changes in light intensity, useful in both indoor and outdoor settings.

  - 7 × HC-SR04 Ultrasonic Distance Sensors: Utilized primarily for distance measurement and object detection, they play a pivotal role in spatial analysis.

  - 5 × Soil Moisture Sensors: Specifically selected for agricultural applications, these sensors provide valuable data for smart farming solutions.

3.5. Computational Framework for IoT Model Training and Evaluation

3.6. Dataset Configuration for TinyML Evaluation

- Sensor Array Composition:

  - Environmental Data: Sourced from DHT22 sensors, providing continuous insights into temperature and humidity.

  - Motion and Orientation Data: Collected via MPU6050 sensors, capturing detailed information on movement and angular positions.

  - Light Intensity Measurements: Obtained from LDR sensors, these readings reflect variations in ambient lighting conditions.

  - Distance and Proximity Data: Acquired from HC-SR04 ultrasonic sensors, essential for spatial analysis and object detection.

  - Soil Moisture Levels: Recorded by specialized sensors, pivotal for applications in smart agriculture.

- Data Volume:

  - The dataset encompasses over 1 terabyte of collected raw sensor data, providing a substantial foundation for algorithmic testing and optimization.

- Data Collection Frequency:

  - Sensor readings are captured at varying intervals, ranging from high-frequency real-time data streams to periodic updates. This variability simulates different real-world operational scenarios, ensuring robust algorithm testing.

- Data Preparation:

  - Prior to analysis, the data were subjected to essential preprocessing steps, including cleaning and normalization, to ensure consistency and reliability for subsequent TinyML processing.
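The cleaning and normalization steps mentioned above are not specified in detail, so the following is only a minimal sketch of one common choice: dropping missing or implausible readings and then applying min-max scaling. The function names, the plausible range of -40 to 80 (assumed here for a temperature channel), and the sample values are assumptions made for illustration.

```python
import math


def clean(readings, low, high):
    """Drop missing values (None or NaN) and readings outside [low, high]."""
    return [
        r for r in readings
        if r is not None and not math.isnan(r) and low <= r <= high
    ]


def min_max_normalize(values):
    """Rescale values to [0, 1]; a constant series maps to all zeros."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw temperature stream with a gap, a NaN, and a spike.
raw = [20.0, None, 25.0, float("nan"), 150.0, 30.0]
cleaned = clean(raw, low=-40.0, high=80.0)  # assumed plausible range
normalized = min_max_normalize(cleaned)
print(cleaned)
print(normalized)
```

Min-max scaling is sensitive to outliers, which is why the range filter runs first in this sketch; a z-score or robust scaler would be an equally reasonable alternative.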

3.7. Proposed Algorithms

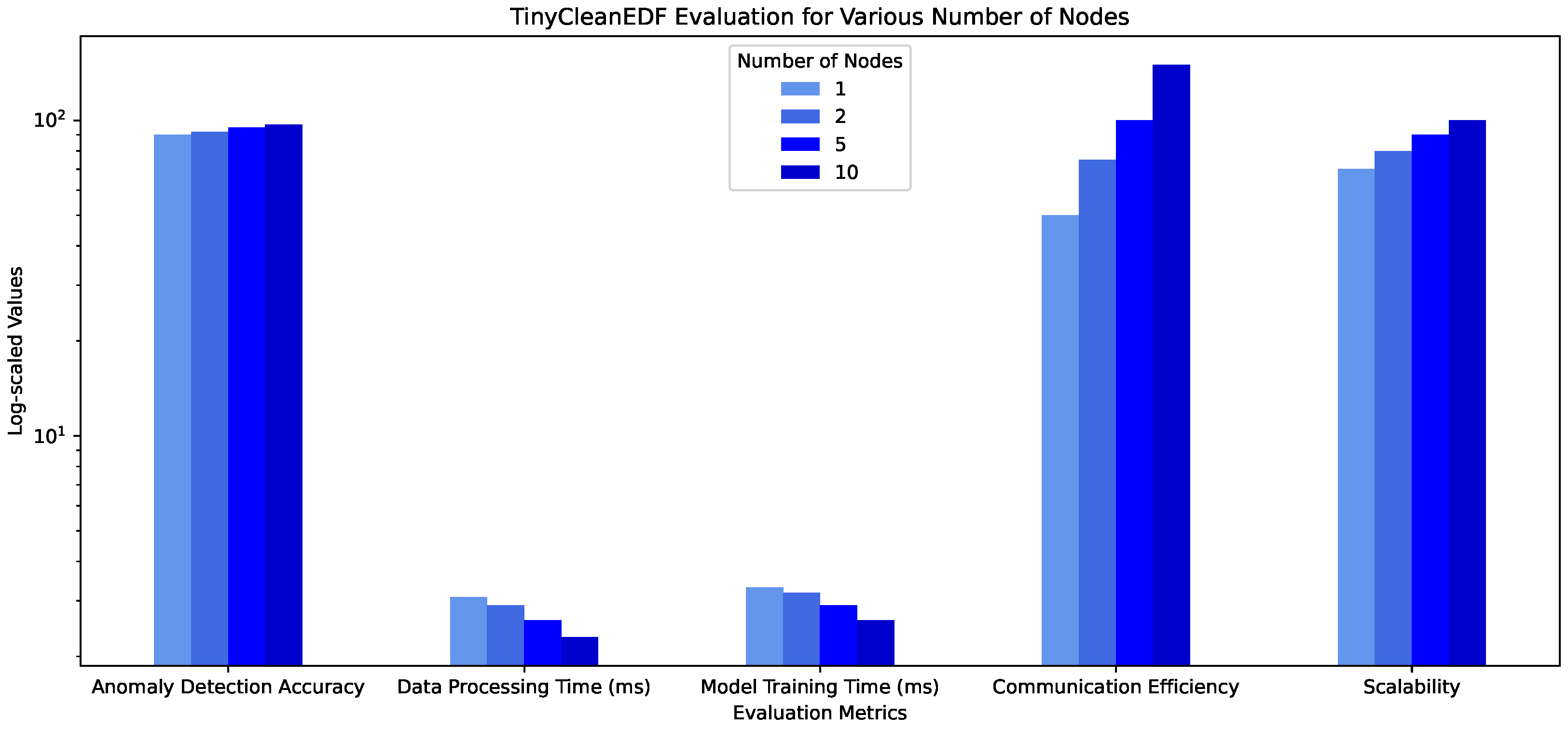

Algorithm 1. TinyCleanEDF: Federated Learning for Data Cleaning and Anomaly Detection with Autoencoder-based Feature Extraction
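As general background on the federated learning component named in the title of Algorithm 1, the sketch below shows plain federated averaging, in which a coordinator combines per-device model weights in proportion to each device's local sample count. This is the generic technique and is not claimed to be the TinyCleanEDF procedure itself; the function name and the toy weight vectors are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Average per-client weight vectors, weighted by local sample counts.

    Generic federated averaging; assumes every client reports a weight
    vector of the same length.
    """
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical edge devices with different amounts of local data.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [100, 300]
global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

Only the weight vectors and sample counts cross the network in this scheme, so raw sensor data stays on each device.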

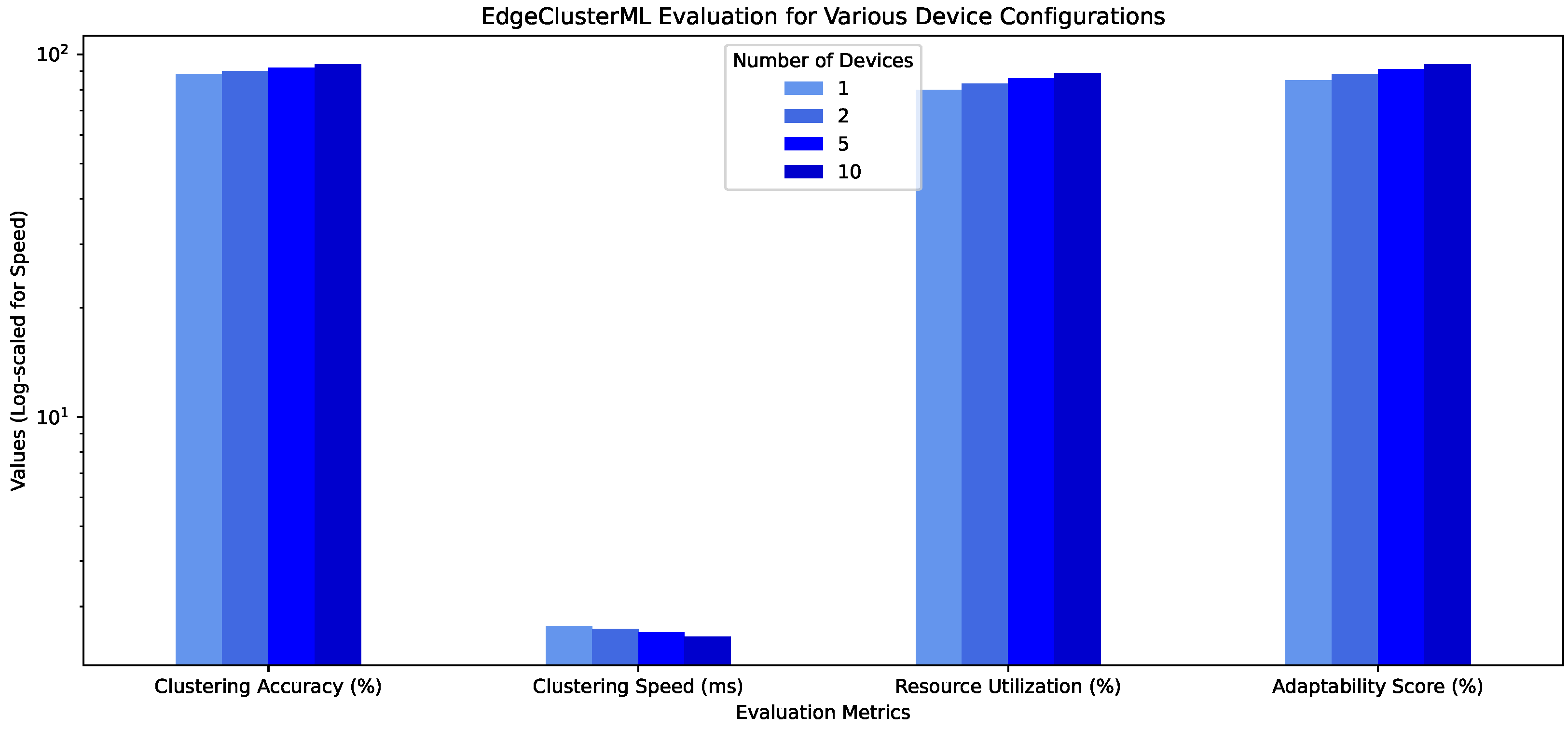

Algorithm 2. EdgeClusterML: Dynamic and Self-Optimizing Clustering at the Edge

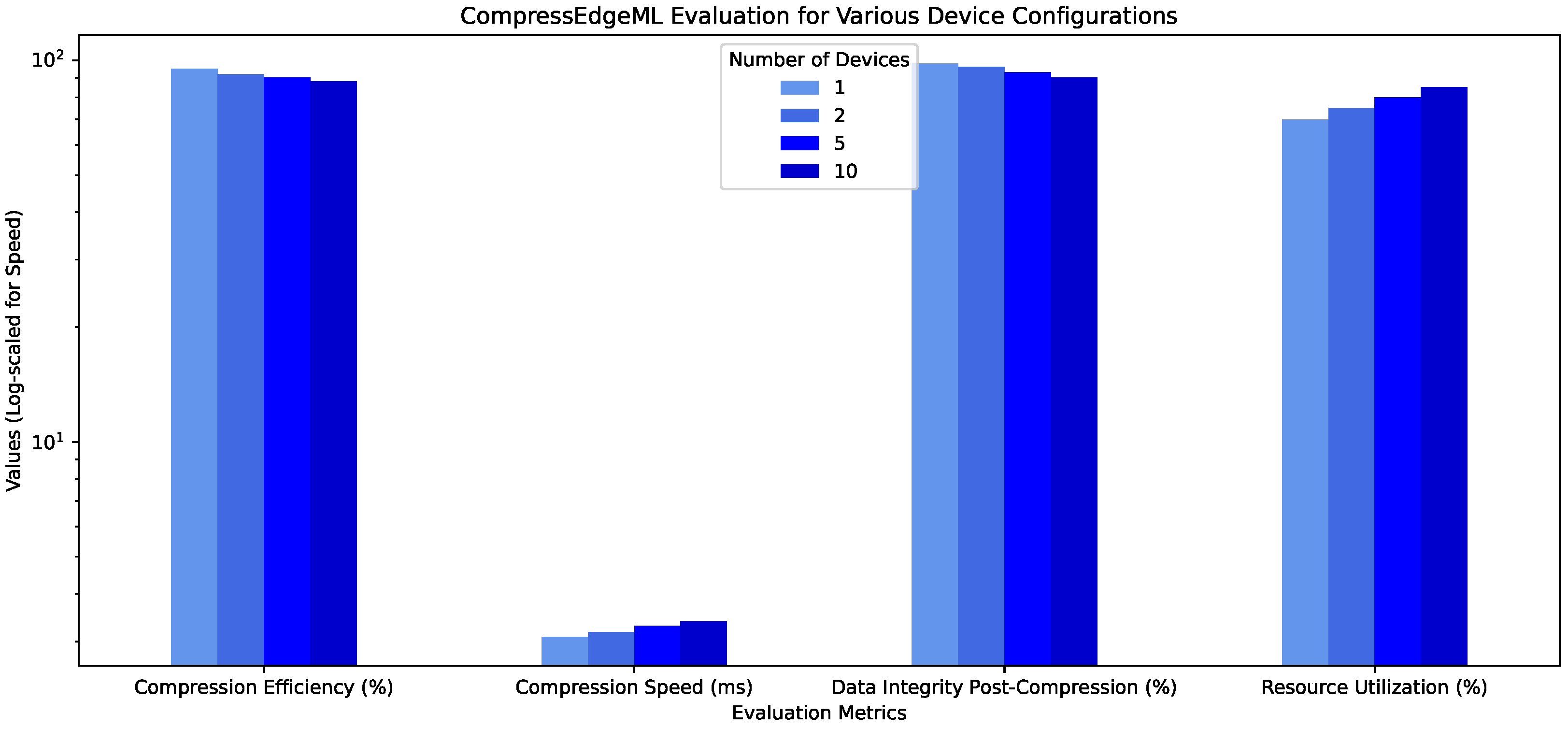

Algorithm 3. CompressEdgeML: Adaptive Data Compression

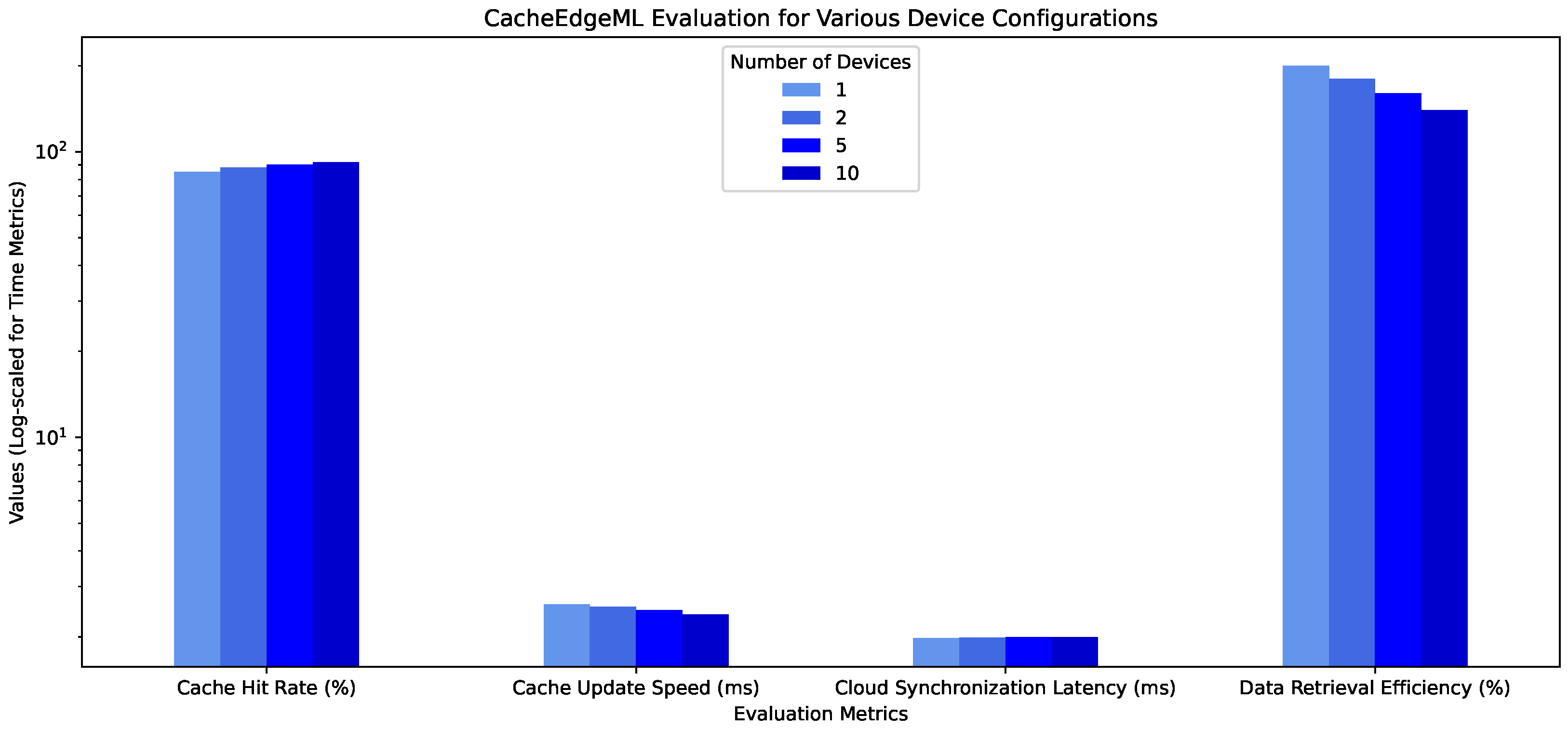

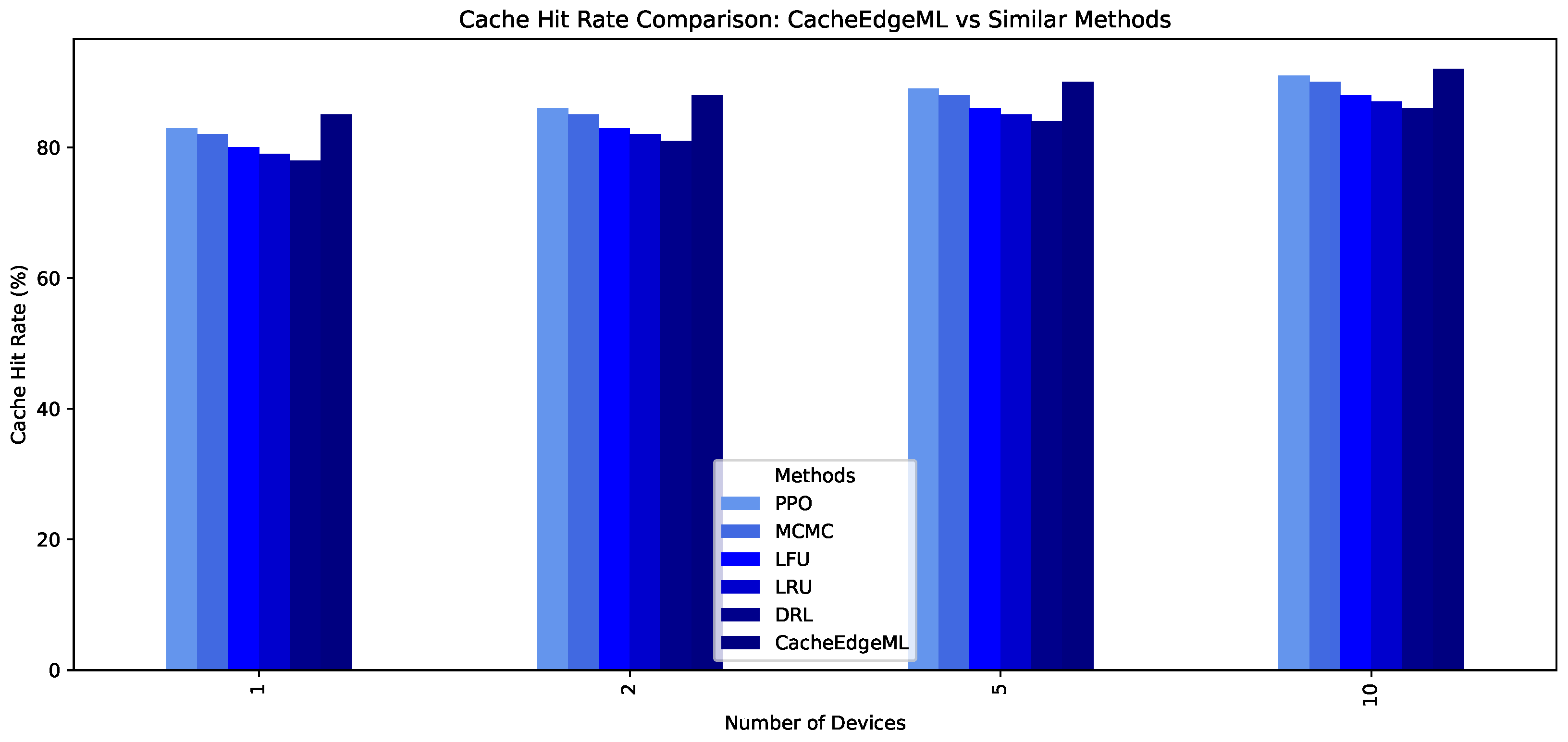

Algorithm 4. CacheEdgeML: Predictive and Tiered Data Caching Strategy

3.8. Comparison among Proposed TinyML Algorithms

Algorithm 5. TinyHybridSenseQ: Data-Aware Hybrid Storage and Quality Assessment for IoT Sensors

4. Experimental Results

4.1. Overview

4.2. Metrics and Methods

- Data Processing Time (ms): In a distributed system with multiple Raspberry Pi devices, such as the one proposed, processing time is reported both as the maximum time taken by any single device and as the average time across all devices. The calculation is given in Equation (1).

- Model Training Time (ms): Training time is reported as both the total cumulative time and the longest individual training time across all devices. The calculation is given in Equation (2).

- Anomaly Detection Accuracy: In a distributed system, the overall accuracy matters, and so does the consistency of anomaly detection across nodes. A weighted approach is used in which the accuracy of each node is weighted by the number of instances it processes, as given in Equation (3).

- Communication Efficiency: In a large-scale distributed setup, communication efficiency should account for the data transmission efficiency, the overhead of synchronization among nodes, the error rate in data transmission, and the effective utilization of available bandwidth. This comprehensive approach ensures a realistic assessment of communication performance in a distributed system.

  The quantities involved are:

  - Communication Efficiency (the resulting metric)

  - Useful Data Transmitted

  - Total Data Transmitted

  - Synchronization Overhead

  - Error Rate

  - Bandwidth Utilization

- Scalability: Scalability in a distributed system can be quantified by measuring how the system’s performance changes with the addition of more nodes, considering factors like throughput, response time, load balancing, system capacity, and cost-effectiveness. A higher throughput ratio, a lower response time ratio, and efficient load balancing with increased nodes indicate better scalability.
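The first three metrics above are described precisely enough in words to sketch in code. The functions below follow those verbal definitions (maximum and mean processing time, total and maximum training time, and instance-weighted accuracy); the function names and the per-device sample values are hypothetical, and the communication efficiency and scalability metrics are omitted because their exact formulas are not given here.

```python
def processing_time_stats(times_ms):
    """Return (maximum, average) processing time across devices."""
    return max(times_ms), sum(times_ms) / len(times_ms)


def training_time_stats(times_ms):
    """Return (total cumulative, longest individual) training time."""
    return sum(times_ms), max(times_ms)


def weighted_accuracy(accuracies, instance_counts):
    """Weight each node's accuracy by the number of instances it processed."""
    total = sum(instance_counts)
    return sum(a * n for a, n in zip(accuracies, instance_counts)) / total


# Hypothetical per-device measurements.
proc_max, proc_avg = processing_time_stats([120.0, 95.0, 140.0, 105.0])
train_total, train_max = training_time_stats([3000.0, 4500.0, 2500.0])
accuracy = weighted_accuracy([0.90, 0.80], [300, 100])
print(proc_max, proc_avg, train_total, train_max, accuracy)
```

Weighting by instance count means that a node handling most of the traffic dominates the reported accuracy, so per-node values are still worth inspecting when consistency across nodes is the concern.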

4.3. Comparison with Similar Works

5. Conclusions and Future Work

Future Work

- Anomaly Detection: There is room to incorporate more advanced machine learning models that improve the accuracy and speed of anomaly detection, especially in environments with complex or noisy data. This would allow more precise identification of irregularities and thereby improve overall data integrity.

- Energy Efficiency: Optimizing the energy consumption of these algorithms is crucial, particularly in environments where energy resources are limited. Research should focus on developing energy-efficient methods that reduce the overall energy demand of the system without sacrificing performance.

- Cloud–Edge Integration: Enhancing the interaction between edge and cloud platforms is essential for improved data synchronization and storage efficiency. This involves developing methods for more seamless data processing and management in hybrid cloud–edge environments.

- Real-Time Data Processing: Optimizing these algorithms for real-time processing of streaming data is imperative. This would enable timely decision-making based on the most current data, a critical aspect in dynamic IoT environments.

- Security and Privacy: Strengthening the security and privacy features of these algorithms is important, especially for applications handling sensitive information. This involves implementing robust security measures to protect data from unauthorized access and ensure user privacy.

- Customization and Adaptability: Improving the adaptability of these algorithms to various IoT environments is necessary. Future work should aim at developing customizable solutions that can be tailored to meet specific requirements of different applications.

- Interoperability and Standardization: Promoting interoperability between diverse IoT devices and platforms and contributing to standardization efforts is crucial. This will facilitate smoother integration and communication across different systems and devices.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Mashayekhy, Y.; Babaei, A.; Yuan, X.M.; Xue, A. Impact of Internet of Things (IoT) on Inventory Management: A Literature Survey. Logistics 2022, 6, 33. [Google Scholar] [CrossRef]

- Vonitsanos, G.; Panagiotakopoulos, T.; Kanavos, A. Issues and challenges of using blockchain for iot data management in smart healthcare. Biomed. J. Sci. Tech. Res. 2021, 40, 32052–32057. [Google Scholar]

- Zaidi, S.A.R.; Hayajneh, A.M.; Hafeez, M.; Ahmed, Q.Z. Unlocking Edge Intelligence Through Tiny Machine Learning (TinyML). IEEE Access 2022, 10, 100867–100877. [Google Scholar] [CrossRef]

- Ersoy, M.; Şansal, U. Analyze Performance of Embedded Systems with Machine Learning Algorithms. In Proceedings of the Trends in Data Engineering Methods for Intelligent Systems: Proceedings of the International Conference on Artificial Intelligence and Applied Mathematics in Engineering (ICAIAME 2020), Antalya, Turkey, 18–20 April 2020; Springer: Berlin/Heidelberg, Germany, 2021; pp. 231–236. [Google Scholar]

- Khobragade, P.; Ghutke, P.; Kalbande, V.P.; Purohit, N. Advancement in Internet of Things (IoT) Based Solar Collector for Thermal Energy Storage System Devices: A Review. In Proceedings of the 2022 2nd International Conference on Power Electronics & IoT Applications in Renewable Energy and its Control (PARC), Mathura, India, 21–22 January 2022; pp. 1–5. [Google Scholar] [CrossRef]

- Ayub Khan, A.; Laghari, A.A.; Shaikh, Z.A.; Dacko-Pikiewicz, Z.; Kot, S. Internet of Things (IoT) Security with Blockchain Technology: A State-of-the-Art Review. IEEE Access 2022, 10, 122679–122695. [Google Scholar] [CrossRef]

- Chauhan, C.; Ramaiya, M.K. Advanced Model for Improving IoT Security Using Blockchain Technology. In Proceedings of the 2022 4th International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 January 2022; pp. 83–89. [Google Scholar] [CrossRef]

- Mohanta, B.K.; Jena, D.; Satapathy, U.; Patnaik, S. Survey on IoT security: Challenges and solution using machine learning, artificial intelligence and blockchain technology. Internet Things 2020, 11, 100227. [Google Scholar] [CrossRef]

- Jiang, Y.; Wang, C.; Wang, Y.; Gao, L. A Cross-Chain Solution to Integrating Multiple Blockchains for IoT Data Management. Sensors 2019, 19, 2042. [Google Scholar] [CrossRef]

- Ren, H.; Anicic, D.; Runkler, T. How to Manage Tiny Machine Learning at Scale: An Industrial Perspective. arXiv 2022, arXiv:2202.09113. [Google Scholar]

- Keserwani, P.K.; Govil, M.C.; Pilli, E.S.; Govil, P. A smart anomaly-based intrusion detection system for the Internet of Things (IoT) network using GWO–PSO–RF model. J. Reliab. Intell. Environ. 2021, 7, 3–21. [Google Scholar] [CrossRef]

- Gibbs, M.; Woodward, K.; Kanjo, E. Combining Multiple tinyML Models for Multimodal Context-Aware Stress Recognition on Constrained Microcontrollers. IEEE Micro 2023, 1–9. [Google Scholar] [CrossRef]

- Chen, Z.; Gao, Y.; Liang, J. LOPdM: A Low-power On-device Predictive Maintenance System Based on Self-powered Sensing and TinyML. IEEE Trans. Instrum. Meas. 2023, 72, 2525213. [Google Scholar] [CrossRef]

- Savanna, R.L.; Hanyurwimfura, D.; Nsenga, J.; Rwigema, J. A Wearable Device for Respiratory Diseases Monitoring in Crowded Spaces. Case Study of COVID-19. In Proceedings of the International Congress on Information and Communication Technology, London, UK, 20–23 February 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 515–528. [Google Scholar]

- Nguyen, H.T.; Mai, N.D.; Lee, B.G.; Chung, W.Y. Behind-the-Ear EEG-Based Wearable Driver Drowsiness Detection System Using Embedded Tiny Neural Networks. IEEE Sens. J. 2023, 23, 23875–23892. [Google Scholar] [CrossRef]

- Hussein, D.; Bhat, G. SensorGAN: A Novel Data Recovery Approach for Wearable Human Activity Recognition. ACM Trans. Embed. Comput. Syst. 2023. [Google Scholar] [CrossRef]

- Zacharia, A.; Zacharia, D.; Karras, A.; Karras, C.; Giannoukou, I.; Giotopoulos, K.C.; Sioutas, S. An Intelligent Microprocessor Integrating TinyML in Smart Hotels for Rapid Accident Prevention. In Proceedings of the 2022 7th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Ioannina, Greece, 23–25 September 2022; pp. 1–7. [Google Scholar] [CrossRef]

- Atanane, O.; Mourhir, A.; Benamar, N.; Zennaro, M. Smart Buildings: Water Leakage Detection Using TinyML. Sensors 2023, 23, 9210. [Google Scholar] [CrossRef]

- Malche, T.; Maheshwary, P.; Tiwari, P.K.; Alkhayyat, A.H.; Bansal, A.; Kumar, R. Efficient solid waste inspection through drone-based aerial imagery and TinyML vision model. Trans. Emerg. Telecommun. Technol. 2023, e4878. [Google Scholar] [CrossRef]

- Hammad, S.S.; Iskandaryan, D.; Trilles, S. An unsupervised TinyML approach applied to the detection of urban noise anomalies under the smart cities environment. Internet Things 2023, 23, 100848. [Google Scholar] [CrossRef]

- Priya, S.K.; Balaganesh, N.; Karthika, K.P. Integration of AI, Blockchain, and IoT Technologies for Sustainable and Secured Indian Public Distribution System. In AI Models for Blockchain-Based Intelligent Networks in IoT Systems: Concepts, Methodologies, Tools, and Applications; Springer: Berlin/Heidelberg, Germany, 2023; pp. 347–371. [Google Scholar]

- Flores, T.; Silva, M.; Azevedo, M.; Medeiros, T.; Medeiros, M.; Silva, I.; Dias Santos, M.M.; Costa, D.G. TinyML for Safe Driving: The Use of Embedded Machine Learning for Detecting Driver Distraction. In Proceedings of the 2023 IEEE International Workshop on Metrology for Automotive (MetroAutomotive), Modena, Italy, 28–30 June 2023; pp. 62–66. [Google Scholar] [CrossRef]

- Nkuba, C.K.; Woo, S.; Lee, H.; Dietrich, S. ZMAD: Lightweight Model-Based Anomaly Detection for the Structured Z-Wave Protocol. IEEE Access 2023, 11, 60562–60577. [Google Scholar] [CrossRef]

- Shabir, M.Y.; Torta, G.; Basso, A.; Damiani, F. Toward Secure TinyML on a Standardized AI Architecture. In Device-Edge-Cloud Continuum: Paradigms, Architectures and Applications; Springer: Berlin/Heidelberg, Germany, 2023; pp. 121–139. [Google Scholar]

- Tsoukas, V.; Gkogkidis, A.; Boumpa, E.; Papafotikas, S.; Kakarountas, A. A Gas Leakage Detection Device Based on the Technology of TinyML. Technologies 2023, 11, 45. [Google Scholar] [CrossRef]

- Hayajneh, A.M.; Aldalahmeh, S.A.; Alasali, F.; Al-Obiedollah, H.; Zaidi, S.A.; McLernon, D. Tiny machine learning on the edge: A framework for transfer learning empowered unmanned aerial vehicle assisted smart farming. IET Smart Cities 2023. [Google Scholar] [CrossRef]

- Adeola, J.O.; Degila, J.; Zennaro, M. Recent Advances in Plant Diseases Detection With Machine Learning: Solution for Developing Countries. In Proceedings of the 2022 IEEE International Conference on Smart Computing (SMARTCOMP), Helsinki, Finland, 20–24 June 2022; pp. 374–380. [Google Scholar] [CrossRef]

- Tsoukas, V.; Gkogkidis, A.; Kakarountas, A. A TinyML-Based System for Smart Agriculture. In Proceedings of the 26th Pan-Hellenic Conference on Informatics, New York, NY, USA, 25–27 November 2023; pp. 207–212. [Google Scholar] [CrossRef]

- Nicolas, C.; Naila, B.; Amar, R.C. TinyML Smart Sensor for Energy Saving in Internet of Things Precision Agriculture platform. In Proceedings of the 2022 Thirteenth International Conference on Ubiquitous and Future Networks (ICUFN), Barcelona, Spain, 5–8 July 2022; pp. 256–259. [Google Scholar] [CrossRef]

- Nicolas, C.; Naila, B.; Amar, R.C. Energy efficient Firmware over the Air Update for TinyML models in LoRaWAN agricultural networks. In Proceedings of the 2022 32nd International Telecommunication Networks and Applications Conference (ITNAC), Wellington, New Zealand, 30 November–2 December 2022; pp. 21–27. [Google Scholar] [CrossRef]

- Viswanatha, V.; Ramachandra, A.C.; Hegde, P.T.; Raghunatha Reddy, M.V.; Hegde, V.; Sabhahit, V. Implementation of Smart Security System in Agriculture fields Using Embedded Machine Learning. In Proceedings of the 2023 International Conference on Applied Intelligence and Sustainable Computing (ICAISC), Zakopane, Poland, 18–22 June 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Botero-Valencia, J.; Barrantes-Toro, C.; Marquez-Viloria, D.; Pearce, J.M. Low-cost air, noise, and light pollution measuring station with wireless communication and tinyML. HardwareX 2023, 16, e00477. [Google Scholar] [CrossRef]

- Li, T.; Luo, J.; Liang, K.; Yi, C.; Ma, L. Synergy of Patent and Open-Source-Driven Sustainable Climate Governance under Green AI: A Case Study of TinyML. Sustainability 2023, 15, 13779. [Google Scholar] [CrossRef]

- Ihoume, I.; Tadili, R.; Arbaoui, N.; Benchrifa, M.; Idrissi, A.; Daoudi, M. Developing a TinyML-Oriented Deep Learning Model for an Intelligent Greenhouse Microclimate Control from Multivariate Sensed Data. In Intelligent Sustainable Systems: Selected Papers of WorldS4 2022; Springer: Berlin/Heidelberg, Germany, 2023; Volume 2, pp. 283–291. [Google Scholar]

- Prakash, S.; Stewart, M.; Banbury, C.; Mazumder, M.; Warden, P.; Plancher, B.; Reddi, V.J. Is TinyML Sustainable? Assessing the Environmental Impacts of Machine Learning on Microcontrollers. arXiv 2023, arXiv:2301.11899. [Google Scholar]

- Soni, S.; Khurshid, A.; Minase, A.M.; Bonkinpelliwar, A. A TinyML Approach for Quantification of BOD and COD in Water. In Proceedings of the 2023 2nd International Conference on Paradigm Shifts in Communications Embedded Systems, Machine Learning and Signal Processing (PCEMS), Nagpur, India, 5–6 April 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Arratia, B.; Prades, J.; Peña-Haro, S.; Cecilia, J.M.; Manzoni, P. BODOQUE: An Energy-Efficient Flow Monitoring System for Ephemeral Streams. In Proceedings of the Twenty-fourth International Symposium on Theory, Algorithmic Foundations, and Protocol Design for Mobile Networks and Mobile Computing, Washington, DC, USA, 23–26 October 2023; pp. 358–363. [Google Scholar]

- Wardana, I.N.K.; Fahmy, S.A.; Gardner, J.W. TinyML Models for a Low-Cost Air Quality Monitoring Device. IEEE Sens. Lett. 2023, 7, 1–4. [Google Scholar] [CrossRef]

- Sanchez-Iborra, R. LPWAN and Embedded Machine Learning as Enablers for the Next Generation of Wearable Devices. Sensors 2021, 21, 5218. [Google Scholar] [CrossRef]

- Hussein, M.; Mohammed, Y.S.; Galal, A.I.; Abd-Elrahman, E.; Zorkany, M. Smart Cognitive IoT Devices Using Multi-Layer Perception Neural Network on Limited Microcontroller. Sensors 2022, 22, 5106. [Google Scholar] [CrossRef]

- Prakash, S.; Callahan, T.; Bushagour, J.; Banbury, C.; Green, A.V.; Warden, P.; Ansell, T.; Reddi, V.J. CFU Playground: Full-Stack Open-Source Framework for Tiny Machine Learning (TinyML) Acceleration on FPGAs. In Proceedings of the 2023 IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), Raleigh, NC, USA, 23–25 April 2023; pp. 157–167. [Google Scholar] [CrossRef]

- Gibbs, M.; Kanjo, E. Realising the Power of Edge Intelligence: Addressing the Challenges in AI and tinyML Applications for Edge Computing. In Proceedings of the 2023 IEEE International Conference on Edge Computing and Communications (EDGE), Chicago, IL, USA, 2–8 July 2023; pp. 337–343. [Google Scholar] [CrossRef]

- Shafique, M.; Theocharides, T.; Reddy, V.J.; Murmann, B. TinyML: Current Progress, Research Challenges, and Future Roadmap. In Proceedings of the 2021 58th ACM/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 5–9 December 2021; pp. 1303–1306. [Google Scholar] [CrossRef]

- Banbury, C.R.; Reddi, V.J.; Lam, M.; Fu, W.; Fazel, A.; Holleman, J.; Huang, X.; Hurtado, R.; Kanter, D.; Lokhmotov, A.; et al. Benchmarking tinyml systems: Challenges and direction. arXiv 2020, arXiv:2003.04821. [Google Scholar]

- Ooko, S.O.; Muyonga Ogore, M.; Nsenga, J.; Zennaro, M. TinyML in Africa: Opportunities and Challenges. In Proceedings of the 2021 IEEE Globecom Workshops (GC Wkshps), Madrid, Spain, 7–11 December 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Sanchez-Iborra, R.; Skarmeta, A.F. TinyML-Enabled Frugal Smart Objects: Challenges and Opportunities. IEEE Circuits Syst. Mag. 2020, 20, 4–18. [Google Scholar] [CrossRef]

- Mishra, N.; Lin, C.C.; Chang, H.T. A Cognitive Oriented Framework for IoT Big-data Management Prospective. In Proceedings of the 2014 IEEE International Conference on Communiction Problem-Solving, Beijing, China, 5–7 December 2014; pp. 124–127. [Google Scholar] [CrossRef]

- Mishra, N.; Lin, C.C.; Chang, H.T. A cognitive adopted framework for IoT big-data management and knowledge discovery prospective. Int. J. Distrib. Sens. Netw. 2015, 11, 718390. [Google Scholar] [CrossRef]

- Huang, X.; Fan, J.; Deng, Z.; Yan, J.; Li, J.; Wang, L. Efficient IoT data management for geological disasters based on big data-turbocharged data lake architecture. ISPRS Int. J. Geo-Inf. 2021, 10, 743. [Google Scholar] [CrossRef]

- Oktian, Y.E.; Lee, S.G.; Lee, B.G. Blockchain-based continued integrity service for IoT big data management: A comprehensive design. Electronics 2020, 9, 1434. [Google Scholar] [CrossRef]

- Lê, M.T.; Arbel, J. TinyMLOps for real-time ultra-low power MCUs applied to frame-based event classification. In Proceedings of the 3rd Workshop on Machine Learning and Systems, Rome, Italy, 8 May 2023; pp. 148–153. [Google Scholar]

- Doyu, H.; Morabito, R.; Brachmann, M. A TinyMLaaS Ecosystem for Machine Learning in IoT: Overview and Research Challenges. In Proceedings of the 2021 International Symposium on VLSI Design, Automation and Test (VLSI-DAT), Hsinchu, Taiwan, 19–22 April 2021; pp. 1–5. [Google Scholar] [CrossRef]

- Lin, J.; Zhu, L.; Chen, W.M.; Wang, W.C.; Han, S. Tiny Machine Learning: Progress and Futures [Feature]. IEEE Circuits Syst. Mag. 2023, 23, 8–34. [Google Scholar] [CrossRef]

- Schizas, N.; Karras, A.; Karras, C.; Sioutas, S. TinyML for Ultra-Low Power AI and Large Scale IoT Deployments: A Systematic Review. Future Internet 2022, 14, 363. [Google Scholar] [CrossRef]

- Alajlan, N.N.; Ibrahim, D.M. TinyML: Enabling of Inference Deep Learning Models on Ultra-Low-Power IoT Edge Devices for AI Applications. Micromachines 2022, 13, 851. [Google Scholar] [CrossRef]

- Han, H.; Siebert, J. TinyML: A Systematic Review and Synthesis of Existing Research. In Proceedings of the 2022 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Jeju Island, Republic of Korea, 21–24 February 2022; pp. 269–274. [Google Scholar] [CrossRef]

- Andrade, P.; Silva, I.; Silva, M.; Flores, T.; Cassiano, J.; Costa, D.G. A TinyML Soft-Sensor Approach for Low-Cost Detection and Monitoring of Vehicular Emissions. Sensors 2022, 22, 3838. [Google Scholar] [CrossRef]

- Wongthongtham, P.; Kaur, J.; Potdar, V.; Das, A. Big data challenges for the Internet of Things (IoT) paradigm. In Connected Environments for the Internet of Things: Challenges and Solutions; Springer: Berlin/Heidelberg, Germany, 2017; pp. 41–62. [Google Scholar]

- Shu, L.; Mukherjee, M.; Pecht, M.; Crespi, N.; Han, S.N. Challenges and Research Issues of Data Management in IoT for Large-Scale Petrochemical Plants. IEEE Syst. J. 2018, 12, 2509–2523. [Google Scholar] [CrossRef]

- Gore, R.; Valsan, S.P. Big Data challenges in smart Grid IoT (WAMS) deployment. In Proceedings of the 2016 8th International Conference on Communication Systems and Networks (COMSNETS), Bangalore, India, 5–10 January 2016; pp. 1–6. [Google Scholar] [CrossRef]

- Touqeer, H.; Zaman, S.; Amin, R.; Hussain, M.; Al-Turjman, F.; Bilal, M. Smart home security: Challenges, issues and solutions at different IoT layers. J. Supercomput. 2021, 77, 14053–14089. [Google Scholar] [CrossRef]

- Kumari, K.; Mrunalini, M. A Framework for Analysis of Incompleteness and Security Challenges in IoT Big Data. Int. J. Inf. Secur. Priv. (IJISP) 2022, 16, 1–13. [Google Scholar] [CrossRef]

- Zhang, Y.; Adin, V.; Bader, S.; Oelmann, B. Leveraging Acoustic Emission and Machine Learning for Concrete Materials Damage Classification on Embedded Devices. IEEE Trans. Instrum. Meas. 2023, 72, 2525108. [Google Scholar] [CrossRef]

- Moin, A.; Challenger, M.; Badii, A.; Günnemann, S. Supporting AI Engineering on the IoT Edge through Model-Driven TinyML. In Proceedings of the 2022 IEEE 46th Annual Computers, Software, and Applications Conference (COMPSAC), Los Alamitos, CA, USA, 27 June–1 July 2022; pp. 884–893. [Google Scholar] [CrossRef]

- David, R.; Duke, J.; Jain, A.; Janapa Reddi, V.; Jeffries, N.; Li, J.; Kreeger, N.; Nappier, I.; Natraj, M.; Wang, T.; et al. Tensorflow lite micro: Embedded machine learning for tinyml systems. Proc. Mach. Learn. Syst. 2021, 3, 800–811. [Google Scholar]

- Qian, C.; Einhaus, L.; Schiele, G. ElasticAI-Creator: Optimizing Neural Networks for Time-Series-Analysis for on-Device Machine Learning in IoT Systems. In Proceedings of the 20th ACM Conference on Embedded Networked Sensor Systems; Association for Computing Machinery: New York, NY, USA, 2023; pp. 941–946. [Google Scholar] [CrossRef]

- Giordano, M.; Baumann, N.; Crabolu, M.; Fischer, R.; Bellusci, G.; Magno, M. Design and Performance Evaluation of an Ultralow-Power Smart IoT Device with Embedded TinyML for Asset Activity Monitoring. IEEE Trans. Instrum. Meas. 2022, 71, 2510711. [Google Scholar] [CrossRef]

- Bamoumen, H.; Temouden, A.; Benamar, N.; Chtouki, Y. How TinyML Can be Leveraged to Solve Environmental Problems: A Survey. In Proceedings of the 2022 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT), Sakheer, Bahrain, 20–21 November 2022; pp. 338–343. [Google Scholar] [CrossRef]

- Athanasakis, G.; Filios, G.; Katsidimas, I.; Nikoletseas, S.; Panagiotou, S.H. TinyML-based approach for Remaining Useful Life Prediction of Turbofan Engines. In Proceedings of the 2022 IEEE 27th International Conference on Emerging Technologies and Factory Automation (ETFA), Stuttgart, Germany, 6–9 September 2022; pp. 1–8. [Google Scholar] [CrossRef]

- Silva, M.; Signoretti, G.; Flores, T.; Andrade, P.; Silva, J.; Silva, I.; Sisinni, E.; Ferrari, P. A data-stream TinyML compression algorithm for vehicular applications: A case study. In Proceedings of the 2022 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), Trento, Italy, 7–9 June 2022; pp. 408–413. [Google Scholar] [CrossRef]

- Ostrovan, E. TinyML On-Device Neural Network Training. Master’s Thesis, Politecnico di Milano, Milan, Italy, 2022. [Google Scholar]

- Signoretti, G.; Silva, M.; Andrade, P.; Silva, I.; Sisinni, E.; Ferrari, P. An Evolving TinyML Compression Algorithm for IoT Environments Based on Data Eccentricity. Sensors 2021, 21, 4153. [Google Scholar] [CrossRef]

- Sharif, U.; Mueller-Gritschneder, D.; Stahl, R.; Schlichtmann, U. Efficient Software-Implemented HW Fault Tolerance for TinyML Inference in Safety-critical Applications. In Proceedings of the 2023 Design, Automation & Test in Europe Conference & Exhibition (DATE), Antwerp, Belgium, 17–19 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6. [Google Scholar]

- Fedorov, I.; Matas, R.; Tann, H.; Zhou, C.; Mattina, M.; Whatmough, P. UDC: Unified DNAS for compressible TinyML models. arXiv 2022, arXiv:2201.05842. [Google Scholar]

- Nadalini, D.; Rusci, M.; Benini, L.; Conti, F. Reduced Precision Floating-Point Optimization for Deep Neural Network On-Device Learning on MicroControllers. arXiv 2023, arXiv:2305.19167. [Google Scholar] [CrossRef]

- Silva, M.; Medeiros, T.; Azevedo, M.; Medeiros, M.; Themoteo, M.; Gois, T.; Silva, I.; Costa, D.G. An Adaptive TinyML Unsupervised Online Learning Algorithm for Driver Behavior Analysis. In Proceedings of the 2023 IEEE International Workshop on Metrology for Automotive (MetroAutomotive), Modena, Italy, 28–30 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 199–204. [Google Scholar]

- Pereira, E.S.; Marcondes, L.S.; Silva, J.M. On-Device Tiny Machine Learning for Anomaly Detection Based on the Extreme Values Theory. IEEE Micro 2023, 43, 58–65. [Google Scholar] [CrossRef]

- Zhuo, S.; Chen, H.; Ramakrishnan, R.K.; Chen, T.; Feng, C.; Lin, Y.; Zhang, P.; Shen, L. An empirical study of low precision quantization for tinyml. arXiv 2022, arXiv:2203.05492. [Google Scholar]

- Krishna, A.; Nudurupati, S.R.; Dwivedi, P.; van Schaik, A.; Mehendale, M.; Thakur, C.S. RAMAN: A Re-configurable and Sparse tinyML Accelerator for Inference on Edge. arXiv 2023, arXiv:2306.06493. [Google Scholar]

- Ren, H.; Anicic, D.; Runkler, T.A. TinyReptile: TinyML with Federated Meta-Learning. arXiv 2023, arXiv:2304.05201. [Google Scholar]

- Ren, H.; Anicic, D.; Runkler, T.A. Towards Semantic Management of On-Device Applications in Industrial IoT. ACM Trans. Internet Technol. 2022, 22, 1–30. [Google Scholar] [CrossRef]

- Chen, J.I.Z.; Huang, P.F.; Pi, C.S. The implementation and performance evaluation for a smart robot with edge computing algorithms. Ind. Robot. Int. J. Robot. Res. Appl. 2023, 50, 581–594. [Google Scholar] [CrossRef]

- Mohammed, M.; Srinivasagan, R.; Alzahrani, A.; Alqahtani, N.K. Machine-Learning-Based Spectroscopic Technique for Non-Destructive Estimation of Shelf Life and Quality of Fresh Fruits Packaged under Modified Atmospheres. Sustainability 2023, 15, 12871. [Google Scholar] [CrossRef]

- Koufos, K.; EI Haloui, K.; Dianati, M.; Higgins, M.; Elmirghani, J.; Imran, M.A.; Tafazolli, R. Trends in Intelligent Communication Systems: Review of Standards, Major Research Projects, and Identification of Research Gaps. J. Sens. Actuator Netw. 2021, 10, 60.

- Ahad, M.A.; Tripathi, G.; Zafar, S.; Doja, F. IoT Data Management—Security Aspects of Information Linkage in IoT Systems. In Principles of Internet of Things (IoT) Ecosystem: Insight Paradigm; Peng, S.L., Pal, S., Huang, L., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 439–464.

- Liu, R.W.; Nie, J.; Garg, S.; Xiong, Z.; Zhang, Y.; Hossain, M.S. Data-Driven Trajectory Quality Improvement for Promoting Intelligent Vessel Traffic Services in 6G-Enabled Maritime IoT Systems. IEEE Internet Things J. 2021, 8, 5374–5385.

- Hnatiuc, B.; Paun, M.; Sintea, S.; Hnatiuc, M. Power management for supply of IoT Systems. In Proceedings of the 2022 26th International Conference on Circuits, Systems, Communications and Computers (CSCC), Crete, Greece, 19–22 July 2022; pp. 216–221.

- Rajeswari, S.; Ponnusamy, V. AI-Based IoT analytics on the cloud for diabetic data management system. In Integrating AI in IoT Analytics on the Cloud for Healthcare Applications; IGI Global: Hershey, PA, USA, 2022; pp. 143–161.

- Karras, A.; Karras, C.; Giotopoulos, K.C.; Tsolis, D.; Oikonomou, K.; Sioutas, S. Peer to peer federated learning: Towards decentralized machine learning on edge devices. In Proceedings of the 2022 7th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Ioannina, Greece, 23–25 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–9.

- Karras, A.; Karras, C.; Giotopoulos, K.C.; Tsolis, D.; Oikonomou, K.; Sioutas, S. Federated Edge Intelligence and Edge Caching Mechanisms. Information 2023, 14, 414.

- Karras, A.; Karras, C.; Karydis, I.; Avlonitis, M.; Sioutas, S. An Adaptive, Energy-Efficient DRL-Based and MCMC-Based Caching Strategy for IoT Systems. In Algorithmic Aspects of Cloud Computing; Chatzigiannakis, I., Karydis, I., Eds.; Springer: Cham, Switzerland, 2024; pp. 66–85.

- Meddeb, M.; Dhraief, A.; Belghith, A.; Monteil, T.; Drira, K. How to cache in ICN-based IoT environments? In Proceedings of the 2017 IEEE/ACS 14th International Conference on Computer Systems and Applications (AICCSA), Hammamet, Tunisia, 30 October–3 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1117–1124.

- Wang, S.; Zhang, X.; Zhang, Y.; Wang, L.; Yang, J.; Wang, W. A survey on mobile edge networks: Convergence of computing, caching and communications. IEEE Access 2017, 5, 6757–6779.

- Zhong, C.; Gursoy, M.C.; Velipasalar, S. A deep reinforcement learning-based framework for content caching. In Proceedings of the 2018 52nd Annual Conference on Information Sciences and Systems (CISS), Princeton, NJ, USA, 21–23 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

| Challenge | Description |
|---|---|
| Data Volume | The increase in interconnected devices leads to unprecedented data generation, surpassing the capacity of conventional storage and processing systems. |
| Data Velocity | Continuous data generation in IoT necessitates real-time analysis and response, stressing the need for prompt processing solutions. |
| Data Variety | Diverse data sources in IoT range from structured to unstructured formats, posing integration and analytical complexities. |
| Data Veracity | The accuracy, authenticity, and reliability of data from varied devices present significant challenges in data verification. |
| Data Integration | Consolidating data from heterogeneous sources while preserving integrity and context remains complex. |
| Security | Increased interconnectivity broadens the risk of cyber attacks, necessitating robust security protocols. |
| Privacy | Balancing the protection of sensitive data within extensive datasets, while maintaining utility, is crucial. |
| Latency | Processing or transmission delays can affect the timeliness and relevance of insights, impacting decisionmaking. |

| Challenge | TinyML Solution |
|---|---|
| Data Overload | On-device preprocessing to reduce data transmission. |
| Real-time Processing | Edge-deployed models for swift decisionmaking. |
| Connectivity Issues | Local processing for uninterrupted operation. |
| Energy Efficiency | Optimized tasks to conserve energy and extend device life. |
| Security | Local data processing to minimize transmission risks. |
| Privacy | In situ data processing to enhance privacy. |
| Device Longevity | Reduced transmission strain to extend device life. |
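
As an illustration of the "on-device preprocessing to reduce data transmission" entry above, the following is a minimal sketch of a send-on-delta (deadband) filter. It is not taken from the paper: the function name `deadband_filter`, the `threshold` parameter, and the sample readings are hypothetical, and a real deployment would typically implement the same logic in C on the microcontroller.

```python
from typing import Iterable, List, Tuple


def deadband_filter(readings: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Return the (index, value) pairs a device would transmit.

    A reading is transmitted only when it differs from the last
    transmitted value by at least `threshold` (hypothetical parameter).
    """
    sent: List[Tuple[int, float]] = []
    last_sent = None
    for i, value in enumerate(readings):
        # Always send the first reading; afterwards send only significant changes.
        if last_sent is None or abs(value - last_sent) >= threshold:
            sent.append((i, value))
            last_sent = value
    return sent


# Illustrative temperature readings (made-up values).
readings = [20.0, 20.1, 20.2, 21.0, 21.1, 19.5]
sent = deadband_filter(readings, threshold=0.5)
print(f"transmitted {len(sent)} of {len(readings)} readings: {sent}")
```

With these sample values, only three of the six readings are transmitted. The threshold trades reconstruction fidelity against radio usage, so it would need tuning per sensor.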

| IoT System Characteristic | Implication | Enhancement with TinyML |
|---|---|---|
| Distributed Topology | Numerous devices scattered across different locations lead to data decentralization and increased latency. | TinyML facilitates edge computation, reducing latency and ensuring real-time insights. |
| Voluminous Data Streams | Continuous data generation can overwhelm storage and transmission channels. | On-device TinyML prioritizes, compresses, and filters data, managing storage and reducing transmission needs. |
| Diverse Device Landscape | Variety in device types introduces inconsistency in data formats and communication protocols. | TinyML standardizes data processing at source, ensuring unified data representation across devices. |
| Power and Resource Constraints | Devices, especially battery-operated ones, have limited computational resources. | TinyML models maximize computational efficiency, conserving device resources. |
| Real-time Processing Needs | Delays in data processing can hinder time-sensitive applications. | TinyML ensures rapid on-device processing for immediate responses to data changes. |

| Use Case | Description |
|---|---|
| Predictive Maintenance | Real-time analysis of sensor data for early fault detection in machinery, reducing downtime and maintenance costs. |
| Health Monitoring | Continuous health monitoring with wearables for vital signs and anomaly detection, enhancing preventative healthcare. |
| Smart Agriculture | Adaptive agriculture practices based on sensor data, optimizing resource use for better crop yield. |
| Voice Recognition | Local processing of voice commands for quicker privacy-focused responses. |
| Face Recognition | Low-latency facial recognition for secure access control and personalization. |
| Anomaly Detection | Immediate detection of irregular patterns in industrial and environmental data for proactive response. |
| Gesture Control | Touch-free device control via gesture recognition, improving user interaction and accessibility. |
| Energy Management | Intelligent energy use in smart grids and homes based on usage patterns and predictive analytics. |
| Traffic Flow Optimization | Real-time traffic analysis for dynamic routing and light sequencing, enhancing urban traffic management. |
| Environmental Monitoring | Continuous monitoring of environmental conditions, with real-time adjustments and alerts. |
| Smart Retail | Analysis of customer behavior for tailored retail experiences and store management. |

| Application | Description | Ref. |
|---|---|---|
| Concrete Materials Damage Classification | Lightweight CNN on MCU for damage recognition in concrete materials, showing TinyML’s potential in structural health. | [63] |
| Predictive Maintenance | TinyML for predictive maintenance in hydraulic systems, improving service quality, performance, and sustainability. | [64] |
| Keyword Spotting | TinyML for efficient keyword detection in voice-enabled devices, reducing processing costs and enhancing privacy. | [65] |
| Time-Series Analysis | ML hardware accelerators for real-time analysis of time-series data in IoT, optimizing neural networks for on-device processing. | [66] |
| Asset Activity Monitoring | TinyML for continuous monitoring of tool usage, identifying usage patterns and potential misuses. | [67] |
| Environmental Monitoring | TinyML for monitoring environmental factors like air quality, contributing to smart systems for sustainability. | [68] |

| Application Area | TinyML Algorithm | Reference |
|---|---|---|
| Predictive Maintenance | RUL prediction using LSTMs and CNNs | [69] |
| Data Compression | Tiny Anomaly Compressor (TAC) | [70] |
| Tool Usage Monitoring | TinyML for handheld power tool monitoring | [67] |
| On-Device Training | Neural network training for dense networks | [71] |
| IoT Compression | Evolving TinyML compression algorithm | [72] |
| Safety-Critical Applications | Software-implemented hardware fault tolerance | [73] |
| Model Optimization | Unified DNAS for compressible models | [74] |

| Study Focus | Key Contributions | Reference |
|---|---|---|
| Deep Neural Network Optimization | Reduced Precision Optimization for DNN on-device learning in MCUs. | [75] |
| Unsupervised Online Learning | Adaptive TinyML algorithm for driver behavior analysis in automotive IoT. | [76] |
| Anomaly Detection | TinyML algorithm for anomaly detection in Industry 4.0 using extreme values theory. | [77] |
| Low Precision Quantization | Empirical study on quantization techniques for TinyML efficiency. | [78] |
| Sparse tinyML Accelerator | Development of RAMAN, a re-configurable and sparse tinyML accelerator for edge inference. | [79] |
| Federated Meta-Learning | TinyReptile: federated meta-learning algorithm for TinyML on MCUs. | [80] |
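
To make the "Low Precision Quantization" row more concrete, here is a minimal sketch of generic 8-bit affine quantization. It is a textbook scheme and not the specific method evaluated in [78] or any other cited study. The helper names `quantize_int8` and `dequantize_int8` and the sample weights are assumptions made for illustration.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to int8 codes using a scale and zero point (affine scheme)."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all values are zero
    zero_point = round(-128 - lo / scale)
    # Round to the nearest code and clamp to the int8 range.
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


# Illustrative weights (made-up values).
weights = [-1.0, 0.0, 0.5, 1.0]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, zero_point, max_error)
```

Each value is stored in one byte instead of four, at the cost of a reconstruction error on the order of `scale`. Whether that error is acceptable depends on the model and task.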

| Technique | Objective | Role of TinyML |
|---|---|---|
| Predictive Imputation | Maintain data integrity by compensating for missing or lost data. | Uses historical and neighboring data to predict and fill missing values, ensuring dataset completeness. |
| Adaptive Data Quantization | Optimize data storage and transmission. | Analyzes current trends and adjusts quantization levels dynamically for optimal representation. |
| Sensor Data Fusion | Integrate data from multiple sensors for a holistic view. | Processes and merges diverse sensor data in real time, enhancing accuracy and context. |
| Anomaly Detection | Identify unusual data patterns or device malfunctions. | Continuously monitors data streams, recognizing and flagging anomalies for prompt action. |
| Intelligent Data Caching | Provide instant access to frequently used or critical data. | Uses predictive analytics to anticipate future data needs, caching relevant data. |
| Edge-Based Clustering | Group similar data at the edge for efficient analytics. | Performs lightweight clustering on-device for efficient aggregation and transmission. |
| Real-time Data Augmentation | Enhance data to improve machine learning performance. | Augments sensor data in real time, enriching them for better analytics. |
| Local Data Lifespan Management | Manage the relevance and storage duration of data. | Predicts data utility, retaining or discarding them for effective local storage management. |
| Contextual Data Filtering | Discard or prioritize data based on the current context. | Filters data relevant to the situation, enhancing decisionmaking processes. |
| On-device Data Labeling | Annotate raw data for subsequent processing. | Automatically labels data based on learned patterns, aiding in categorization and retrieval. |
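
The "Anomaly Detection" row above describes continuous monitoring of a data stream. One lightweight way to approximate this on a constrained device is a running z-score test based on Welford's online mean and variance update. The sketch below is an assumption-laden illustration and is not one of the paper's algorithms: the class name `StreamingAnomalyDetector`, the `z_threshold` and `warmup` parameters, and the sample stream are all hypothetical.

```python
import math


class StreamingAnomalyDetector:
    """Flags readings that deviate strongly from a running mean.

    Uses Welford's online update, so memory use is constant
    regardless of how many readings have been seen.
    """

    def __init__(self, z_threshold: float = 3.0, warmup: int = 5) -> None:
        self.z_threshold = z_threshold  # hypothetical default
        self.warmup = warmup            # readings to observe before flagging
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                   # running sum of squared deviations

    def update(self, x: float) -> bool:
        """Return True if x is flagged as anomalous."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Fold only normal readings into the statistics so that
            # outliers do not distort the baseline.
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self.m2 += delta * (x - self.mean)
        return is_anomaly


# Illustrative stream (made-up values) with one obvious spike.
stream = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 25.0, 10.1]
detector = StreamingAnomalyDetector()
flags = [detector.update(x) for x in stream]
print(flags)
```

Excluding flagged readings from the statistics keeps the baseline stable, but it also means a genuine, lasting shift in the signal would keep being flagged. A production design would likely add a decay or reset policy.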

| Algorithm | Federated Learning | Anomaly Detection | Data Compression | Caching Strategy | Data Quality |
|---|---|---|---|---|---|
| TinyCleanEDF (Algorithm 1) | ✓ | ✓ | × | × | ✓ |
| EdgeClusterML (Algorithm 2) | × | ✓ | × | × | × |
| CompressEdgeML (Algorithm 3) | × | × | ✓ | × | × |
| CacheEdgeML (Algorithm 4) | × | × | × | ✓ | × |
| TinyHybridSenseQ (Algorithm 5) | × | ✓ | × | ✓ | ✓ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Karras, A.; Giannaros, A.; Karras, C.; Theodorakopoulos, L.; Mammassis, C.S.; Krimpas, G.A.; Sioutas, S. TinyML Algorithms for Big Data Management in Large-Scale IoT Systems. Future Internet 2024, 16, 42. https://doi.org/10.3390/fi16020042