The Future of the Human–Machine Interface (HMI) in Society 5.0

Abstract

1. Introduction

1.1. Societal and Industrial Transformation in the Form of Revolutions

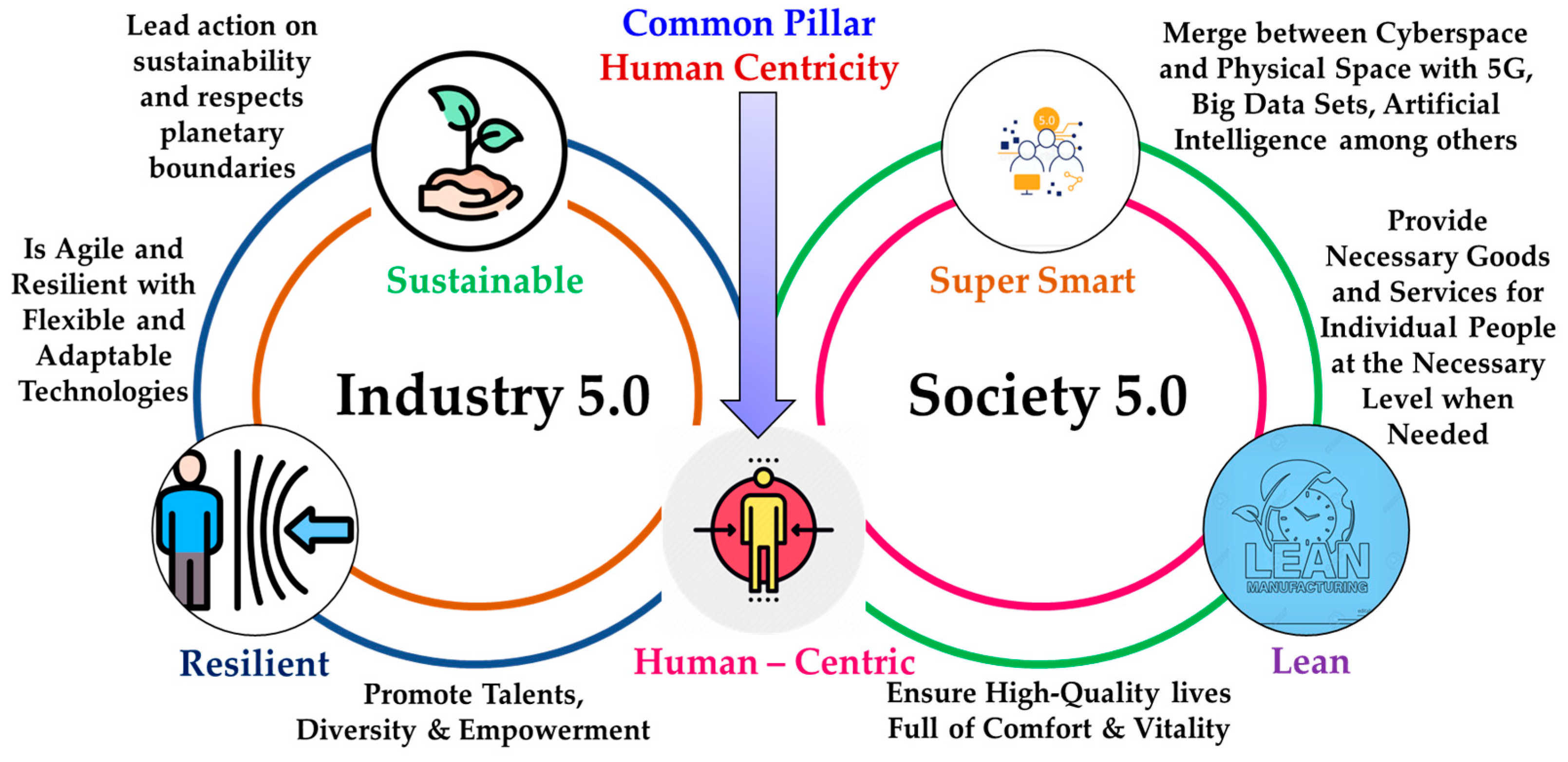

1.2. Vision and Pillars of Industry 5.0 and Society 5.0

- Cyber-physical systems (CPS): this refers to the integration of physical and digital systems, allowing for real-time monitoring and control of manufacturing processes [11].

- Artificial Intelligence (AI) and Machine Learning: AI algorithms can be used to analyze vast amounts of data to identify patterns and optimize manufacturing processes, improving productivity and reducing waste [12].

- The Internet of Things (IoT): The IoT connects devices and sensors throughout the manufacturing process, providing real-time data on the status of equipment and materials [13].

- Additive Manufacturing (AM): AM technologies such as 3D printing allow for the creation of complex and customized parts, reducing waste and increasing efficiency [14].

- eXtended Reality (XR): XR technologies can be used to train workers and provide real-time information on the manufacturing process, improving safety and productivity [15].

1.3. Building Society 5.0: Challenges and Opportunities

1.4. Humachine Definition

Definition: the word “Humachine” first appeared on the cover of a 1999 MIT Technology Review Special Edition and was coined to describe “the symbiosis that is currently developing between human beings and machines–Humachines”.

1.5. Humachine Modus Operandi

- (1) Generate accurate quantitative information, based on sensor signal processing, that exceeds the range of human perception;

- (2) Supervise human actions and provide advice or warnings to human operators when a possible danger or human error is identified.

- (1) Help the machine generate qualitative information that its sensing devices cannot interpret;

- (2) Supervise the tasks executed by the machines and intervene whenever appropriate.
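The two-way supervision listed above can be illustrated with a minimal Python sketch. Everything in it is a hypothetical assumption made for illustration and does not come from the paper: the `Reading` type, the 80 °C limit, and the action and judgement labels. The first function plays the machine's supervisory role towards the human, and the second plays the human's supervisory role towards the machine.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical safety limit, chosen only for illustration.
SAFE_TEMP_C = 80.0


@dataclass
class Reading:
    """A quantitative sensor value that a human could not estimate by feel."""
    temperature_c: float


def machine_advice(reading: Reading, planned_action: str) -> Optional[str]:
    """Machine supervises the human: warn if the planned action looks dangerous."""
    if planned_action == "open_hatch" and reading.temperature_c > SAFE_TEMP_C:
        return (f"WARNING: {reading.temperature_c:.1f} C exceeds "
                f"the {SAFE_TEMP_C:.1f} C limit")
    return None  # no danger identified, no advice needed


def human_review(machine_task: str, operator_judgement: str) -> str:
    """Human supervises the machine: intervene when qualitative judgement says so."""
    if operator_judgement == "defect_suspected":
        return "halt"
    return machine_task  # let the machine carry on with its task
```

In this sketch the machine only advises and the human keeps the final say, which is one possible reading of the division of roles; other allocations of authority are equally conceivable.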

1.6. Paper Organization

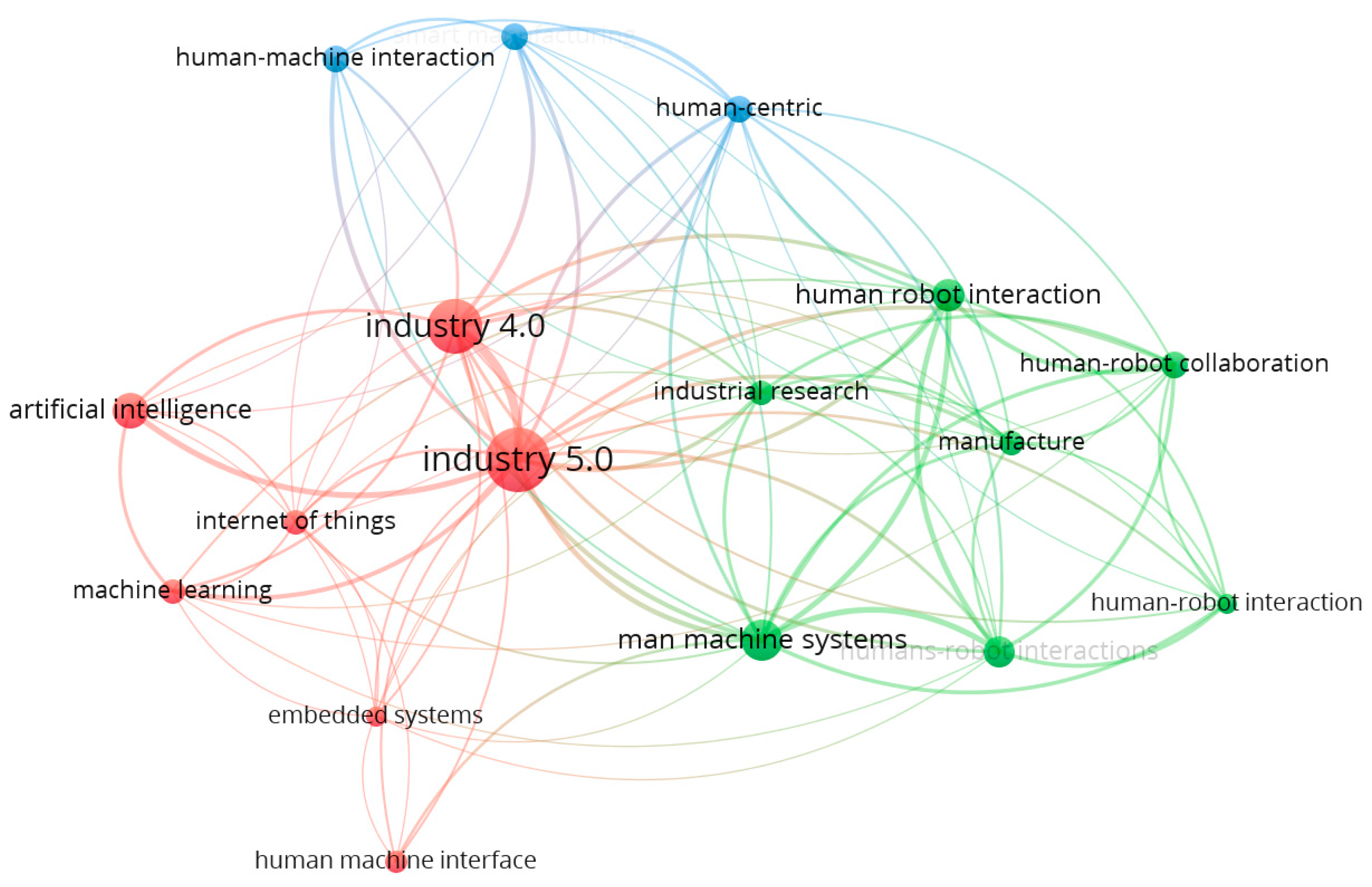

2. Literature Review Methodology

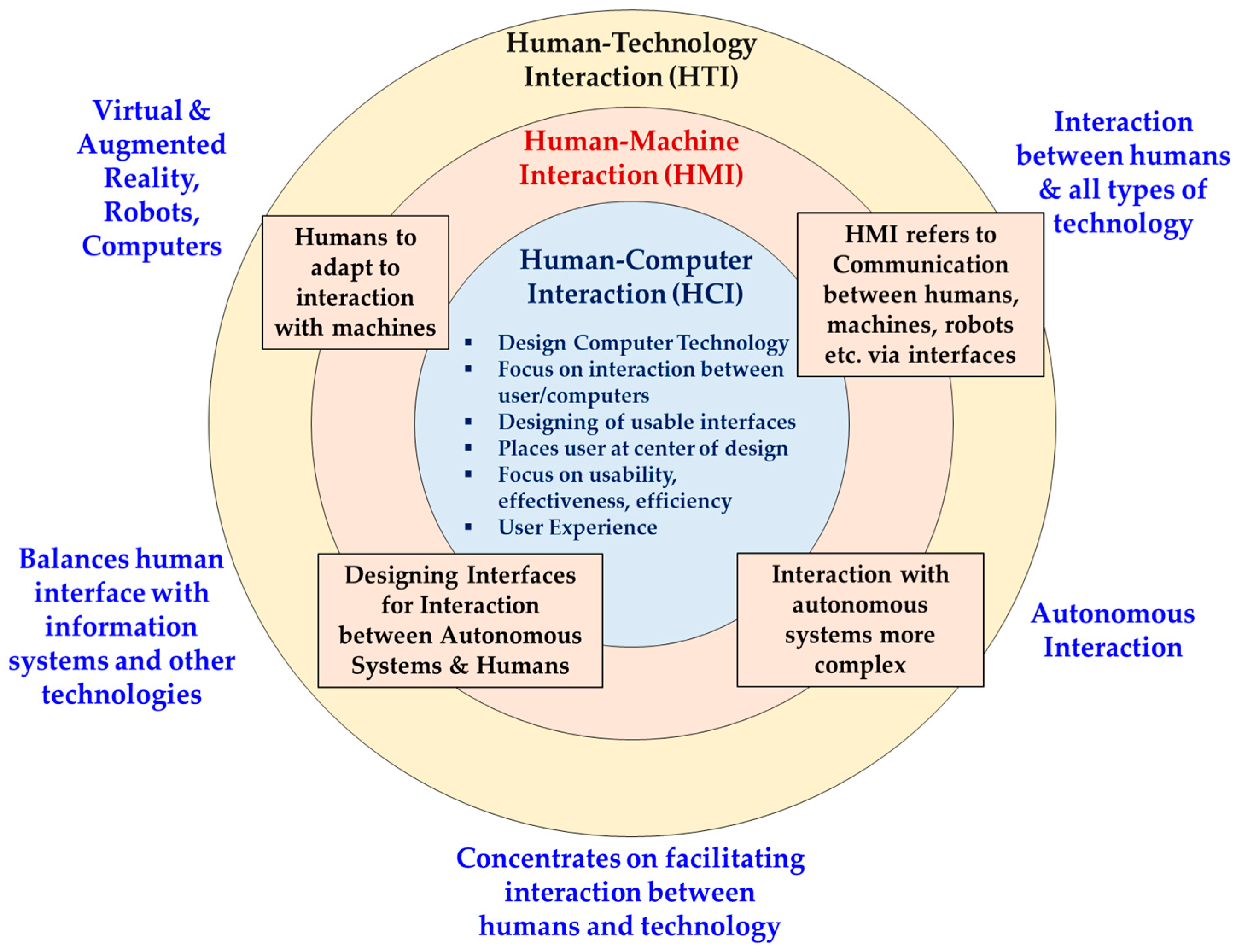

3. Human–Computer Interaction (HCI)

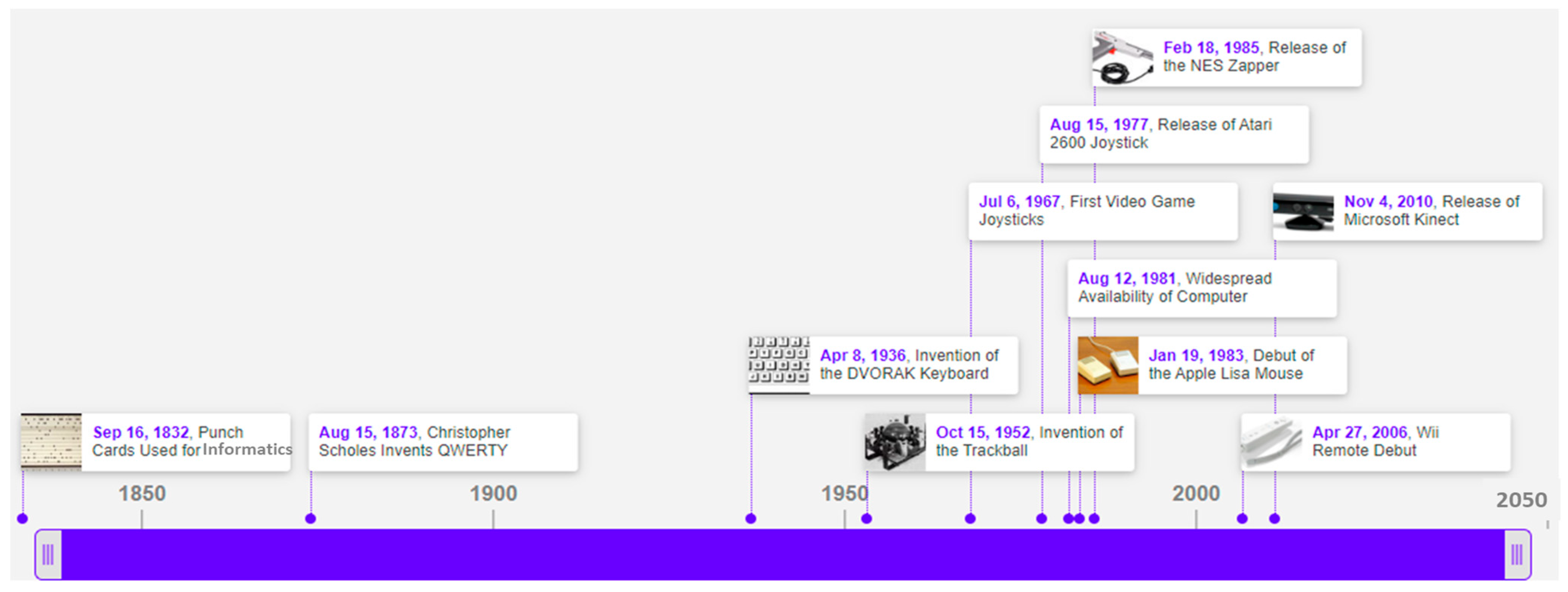

3.1. Key Milestones

- Command-line interface (CLI): The earliest HCI was based on the command-line interface (CLI), which required users to enter text commands to interact with the computer. This type of interface was difficult to use for non-experts and required extensive knowledge of computer commands.

- Graphical user interface (GUI): The graphical user interface (GUI), which became widespread in the 1980s, uses icons, menus, and windows to make computing more intuitive and user-friendly. The GUI made it easier for users to navigate and interact with the computer and is still widely used today.

- Touchscreens: The introduction of touchscreen technology in the 1990s and early 2000s revolutionized HCI by allowing users to interact directly with graphical elements on the screen. This technology made computing even more intuitive and accessible and paved the way for the development of mobile devices.

- Natural language processing (NLP): In recent years, natural language processing (NLP) has become a major area of research in HCI. NLP allows users to interact with computers using spoken or written language rather than commands or mouse clicks. This technology is still in its early stages but has the potential to make computing even more natural and intuitive.

- Virtual Reality (VR) and Augmented Reality (AR): With the advent of VR and AR technologies, HCI is moving beyond the traditional screen-based interface. These technologies allow users to interact with digital content in a more immersive and natural way and have the potential to revolutionize HCI in areas such as gaming, education, and healthcare.

- Metaverse: The metaverse is a virtual world where users can interact in a 3D space using avatars. Human–computer interaction in the metaverse involves natural language processing, haptic feedback, and Virtual Reality to create a more immersive and natural experience. It has the potential to revolutionize the way we interact with digital content and with each other.

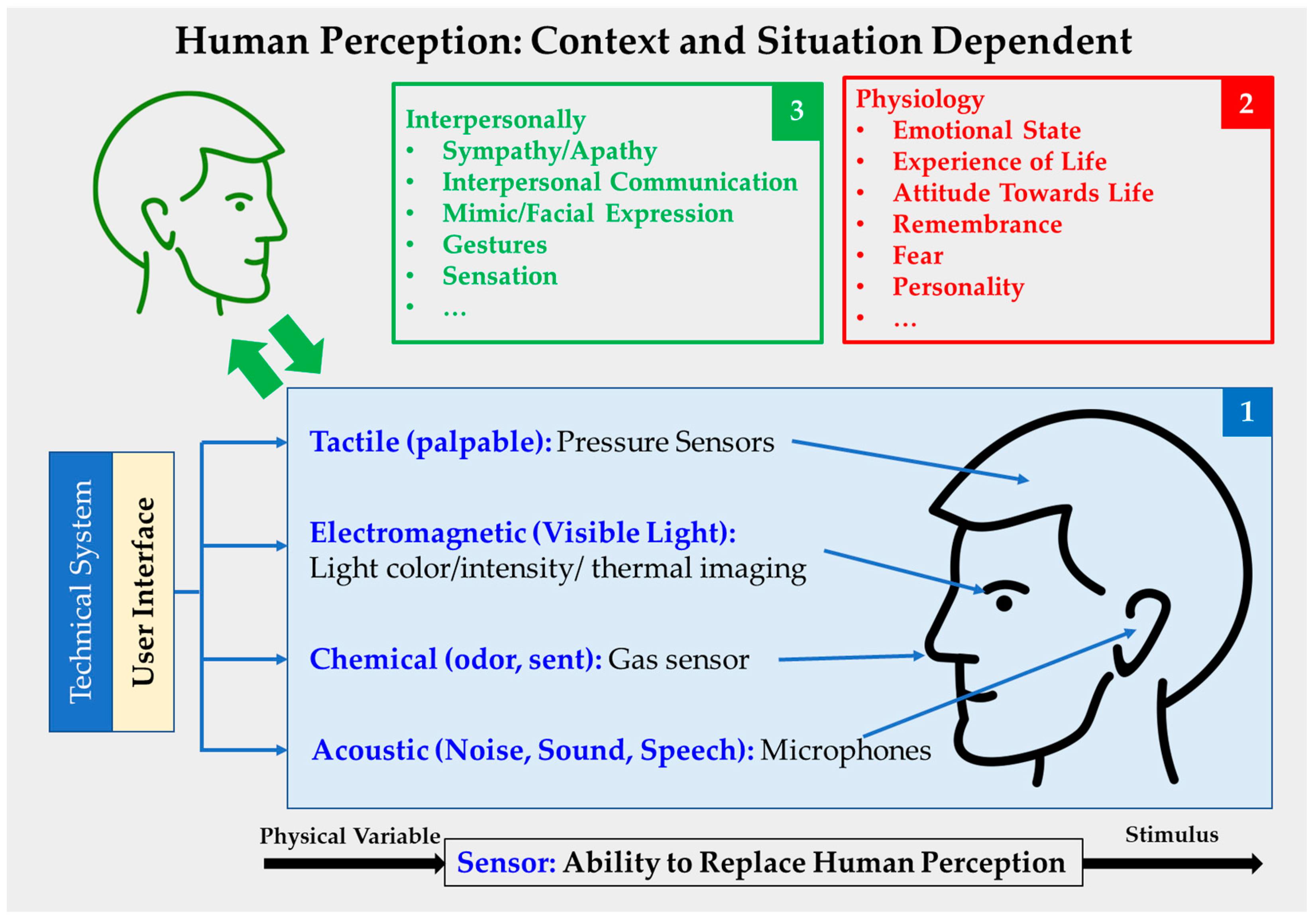

3.2. From HCI to Human Perception

- User interface: traditional human–machine interaction only incorporates human perception from system output;

- Physiology: improved models include personal capabilities, individuality, and actual conditions;

- Interpersonal: models account for the physiological processes involved in interpersonal communication.

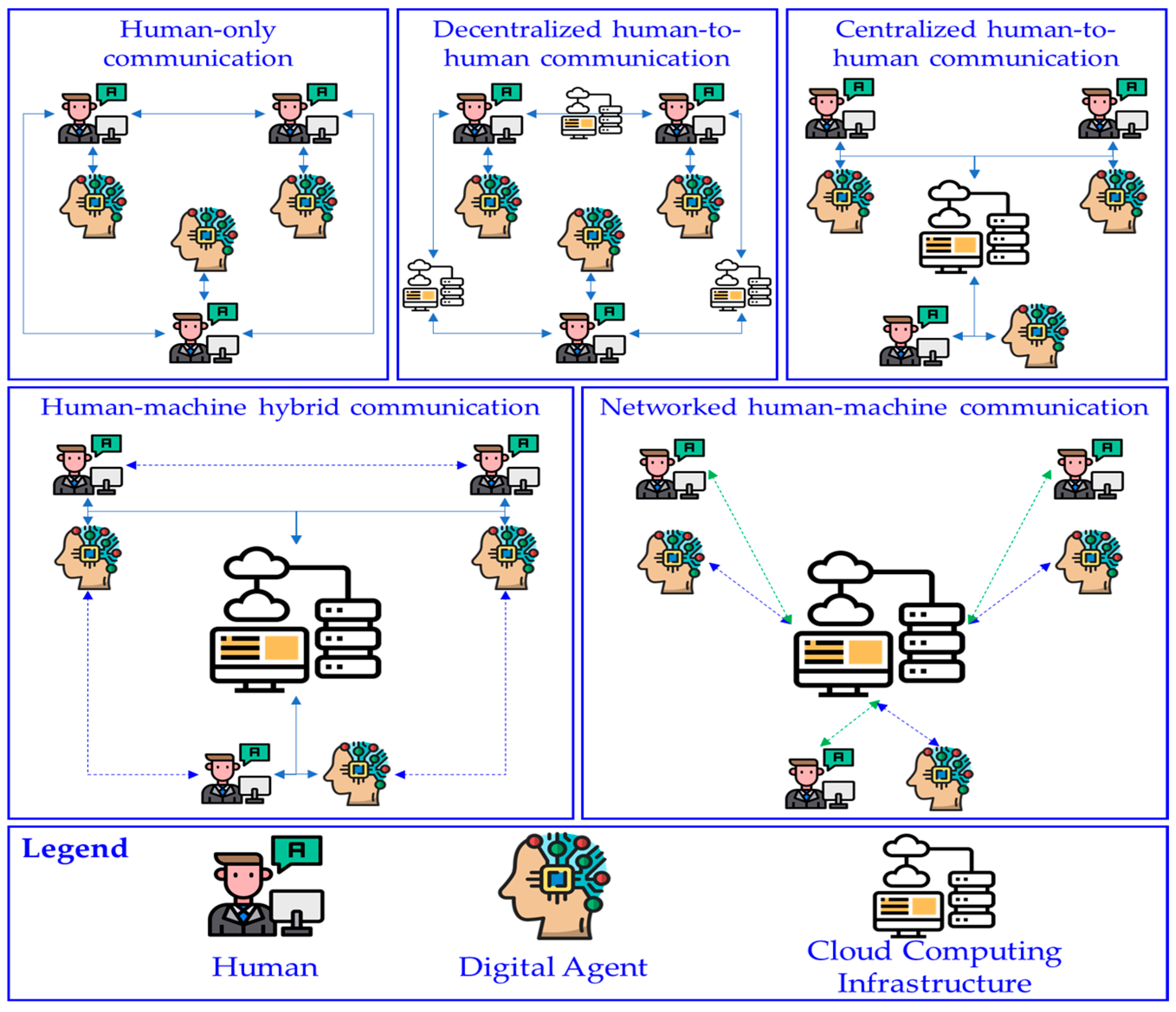

4. Human–Machine Interaction (HMI)

4.1. Key Enabling Technologies and Goals of HMI

4.2. Augmented Intelligence

4.3. Brain Computer Interface (BCI)

- Signal quality: One of the key challenges in the development of BCIs is obtaining high-quality signals from the human brain. By default, brain signals are weak and can be easily contaminated by noise and interference from other sources, such as muscles and other electronic devices. Therefore, accurate detection and interpretation of brain activity is a challenging task [42];

- Invasive vs. non-invasive BCIs: Current BCI implementations fall into two major categories: (i) invasive and (ii) non-invasive. Invasive BCIs require the implantation of electrodes directly into the brain, while non-invasive BCIs use external sensors to detect brain activity. Although invasive BCIs can provide higher-quality signals, they are also riskier and more expensive. In contrast, non-invasive BCIs are safer and more accessible, at the expense of lower-quality signals [43];

- Training and calibration: BCIs require substantial training and calibration effort in order to work effectively. Users must also learn to control their brain activity in a way that the BCI can detect and interpret. This can be a time-consuming, frustrating, and uncomfortable process for some users [44];

- Limited bandwidth: BCIs often have limited bandwidth, thus allowing only a limited range of brain activity to be detected and interpreted. Therefore, the types of actions that can be controlled using a BCI are still limited [45];

- Ethical and privacy concerns: BCIs are promising for the future of human–computer interfaces, but they raise ethical and privacy concerns. For example, legislation on data ownership needs to be established, and mechanisms to prevent or counteract data misuse need to be explored [46].
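The signal-quality challenge above can be made concrete with a small sketch. It assumes a steady-state visual-evoked potential (SSVEP) setting, where the user attends to one of several flickering targets, and uses the Goertzel algorithm to compare signal power at each candidate frequency. The synthetic signal, the 250 Hz sampling rate, the noise level, and all function names are illustrative assumptions, not the method of any cited work.

```python
import math
import random


def goertzel_power(samples, sample_rate_hz, target_hz):
    """Power of `samples` at the DFT bin nearest to target_hz (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate_hz)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def detect_stimulus(samples, sample_rate_hz, candidate_hz):
    """Return the candidate frequency with the highest power in the signal."""
    powers = {f: goertzel_power(samples, sample_rate_hz, f) for f in candidate_hz}
    return max(powers, key=powers.get)


def synthetic_eeg(freq_hz, sample_rate_hz, seconds, noise_std, seed=0):
    """Toy stand-in for a recording: a unit sinusoid buried in Gaussian noise."""
    rng = random.Random(seed)
    n = int(sample_rate_hz * seconds)
    return [
        math.sin(2.0 * math.pi * freq_hz * i / sample_rate_hz) + rng.gauss(0.0, noise_std)
        for i in range(n)
    ]


# A 12 Hz response hidden in noise with twice its amplitude.
signal = synthetic_eeg(freq_hz=12.0, sample_rate_hz=250, seconds=4.0, noise_std=2.0)
detected = detect_stimulus(signal, 250, [8.0, 10.0, 12.0, 15.0])
```

Even though the noise is stronger than the response at every instant, integrating over four seconds concentrates the response in a single frequency bin. This is why longer recording windows improve detection, at the cost of the limited bandwidth noted above.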

5. Human–Centric Manufacturing (HCM)

5.1. Importance of Human-Centric Smart Manufacturing

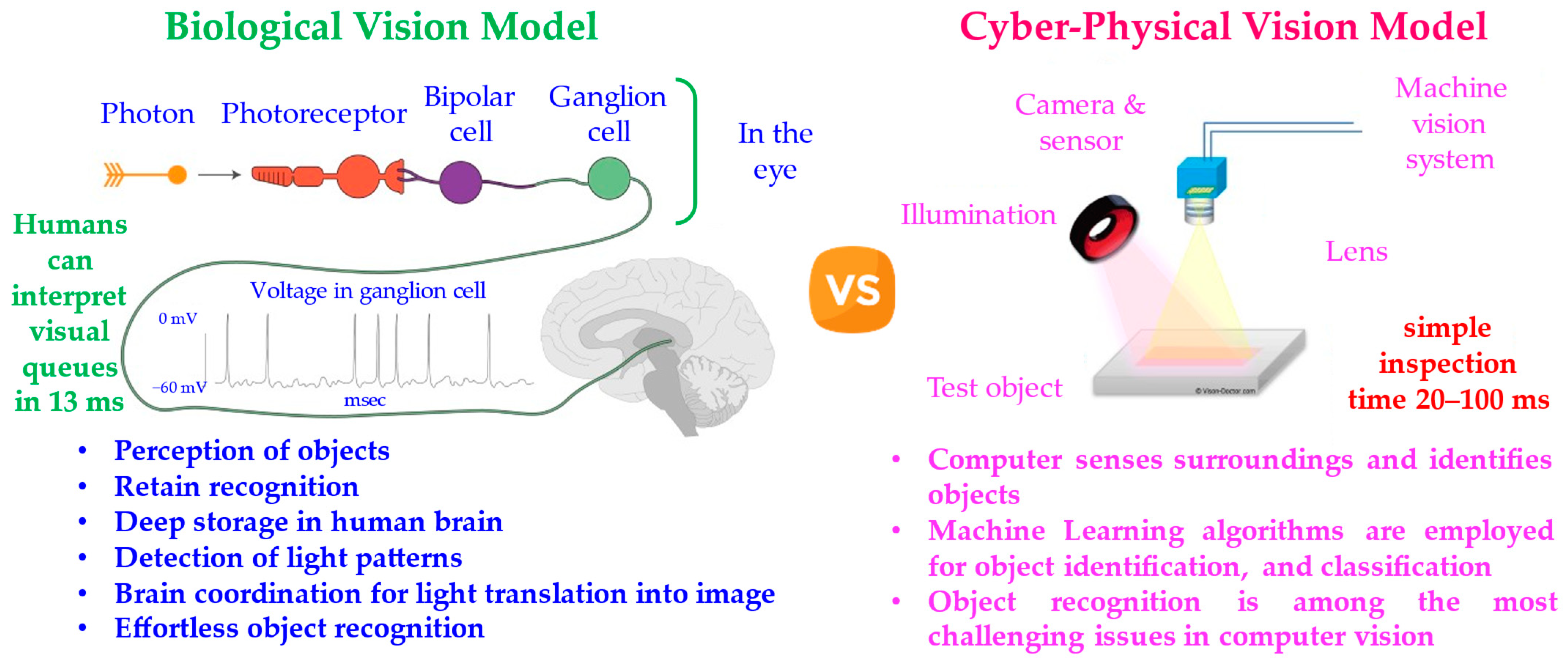

5.2. Parallelism of Biological Vision System with Cyber-Physical Vision System

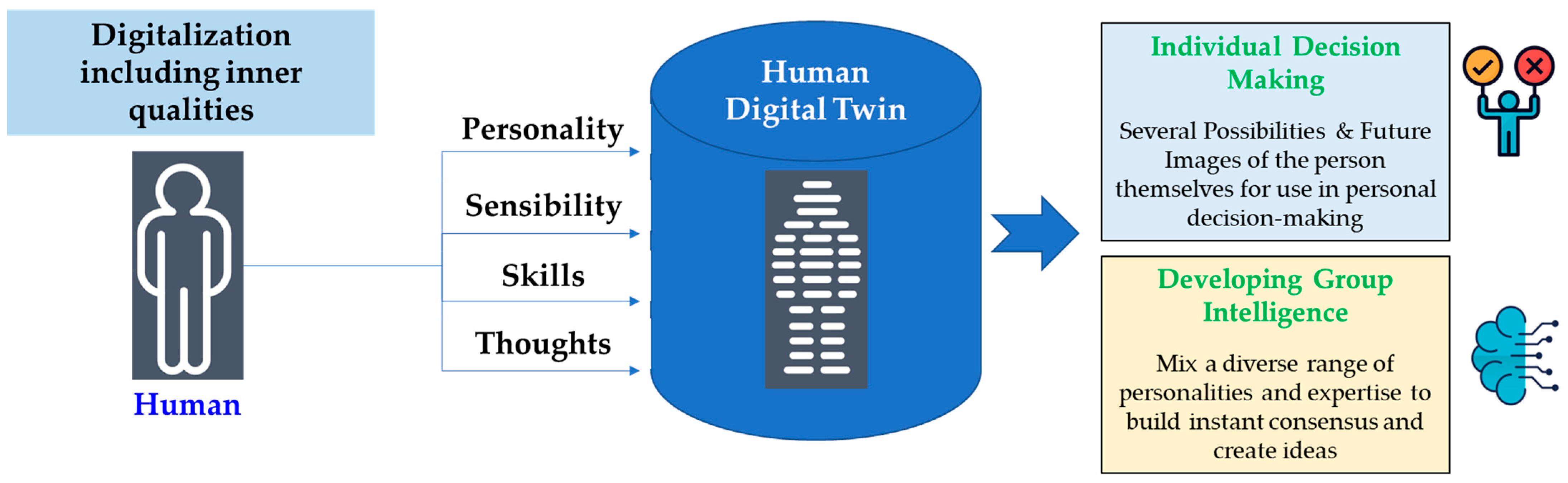

5.3. Human Digital Twins

5.4. Contribution of Human Digital Twins

- Proxy (virtual agent) meetings between digital twins: digital twins with the personality and characteristics of individual people can react as if they were the real people in response to approaches from others in cyberspace [56];

- Creating a personal/virtual agent to work on your behalf: you can extend the range of human activity from the real world to cyberspace using digital twin computing [57];

- Enabling dialogue that would be impossible in the real world: digital twins can also be used to communicate with people who do not currently exist, such as the deceased, to gain knowledge and experience [58];

- Using digital twins as an interface: creation of a derivative of your own digital twin endowed with abilities you do not possess [15];

- Enabling dialogue: language skills can be fabricated by exchanging or merging the abilities of your own digital twin with someone else’s [15];

- Personalized healthcare: Human digital twins can be used to develop personalized treatment plans based on an individual’s unique characteristics and responses to different stimuli. By simulating and predicting how an individual will respond to different medications or therapies, healthcare providers can optimize treatments and improve outcomes [25];

- Disease prevention: Human digital twins can be used to monitor an individual’s health and detect early signs of disease or illness. By analyzing data from wearable devices and other sources, the twin can identify patterns and anomalies that may indicate a potential health problem [59];

- Sports performance optimization: Human digital twins can be used to optimize sports performance by simulating and predicting how an individual will respond to different training regimens and environmental conditions. This can help athletes and coaches develop more effective training plans and prevent injuries [60];

- Workplace safety: Human digital twins can be used to improve workplace safety by simulating and predicting how an individual will respond to different work environments and hazards. This can help to identify and mitigate potential risks before they occur [61];

- Social science research: Human digital twins can be used to study human behavior and social dynamics in a controlled environment. By simulating and predicting how individuals will interact with each other under different conditions, researchers can gain insights into complex social phenomena [62].
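The disease-prevention item above mentions spotting anomalies in wearable data. A minimal sketch of one way to do this is a trailing-window z-score over a stream of heart-rate readings. The window size, the threshold, and the sample values are illustrative assumptions only; a real human digital twin would rely on far richer models and clinically validated limits.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=5, z_threshold=3.0):
    """Return indices whose value deviates strongly from the preceding window."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat histories, where a z-score is undefined.
        if sigma > 0 and abs(values[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical resting heart-rate samples (beats per minute) with one spike.
heart_rate = [62, 64, 63, 65, 63, 64, 62, 110, 64, 63]
suspicious = flag_anomalies(heart_rate)
```

Comparing each reading only with the wearer's own recent history reflects the personalized character of a human digital twin, because the baseline belongs to the individual rather than to a population average.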

5.5. Use Cases of Human DTC

5.5.1. Collective Consensus Building

- A human digital twin has a memory of personal knowledge, experience, etc., so it can think and judge while having the same personality and sense of values as the real-life person and engage in various tasks;

- A digital twin replicates communication that takes place in the real world, so it can engage in advanced tasks that require communication with multiple people;

- A typical example is consensus building during a meeting.
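As a rough illustration of consensus building among digital twins, the sketch below uses DeGroot-style opinion averaging: each twin repeatedly replaces its opinion with a trust-weighted average of all opinions until the spread falls below a tolerance. This model is swapped in purely for illustration and is not the mechanism described in the paper. The opinion values, trust weights, and function name are hypothetical.

```python
def build_consensus(opinions, trust, tolerance=1e-3, max_rounds=100):
    """Iterate trust-weighted averaging until opinions agree within tolerance.

    opinions: one numeric opinion per twin (for example, support in [0, 1]).
    trust:    square matrix; row i holds the weights twin i gives to every twin
              and is assumed to sum to 1.
    Returns the final opinions and the number of rounds used.
    """
    current = list(opinions)
    rounds = 0
    while max(current) - min(current) > tolerance and rounds < max_rounds:
        current = [sum(w * o for w, o in zip(row, current)) for row in trust]
        rounds += 1
    return current, rounds


# Three hypothetical twins that mostly trust themselves but listen to the others.
initial = [0.2, 0.9, 0.5]
trust = [
    [0.6, 0.2, 0.2],
    [0.2, 0.6, 0.2],
    [0.2, 0.2, 0.6],
]
final, rounds_used = build_consensus(initial, trust)
```

Because this particular trust matrix is symmetric, the twins settle on the plain average of their starting opinions. Asymmetric trust would pull the outcome towards the more trusted twins.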

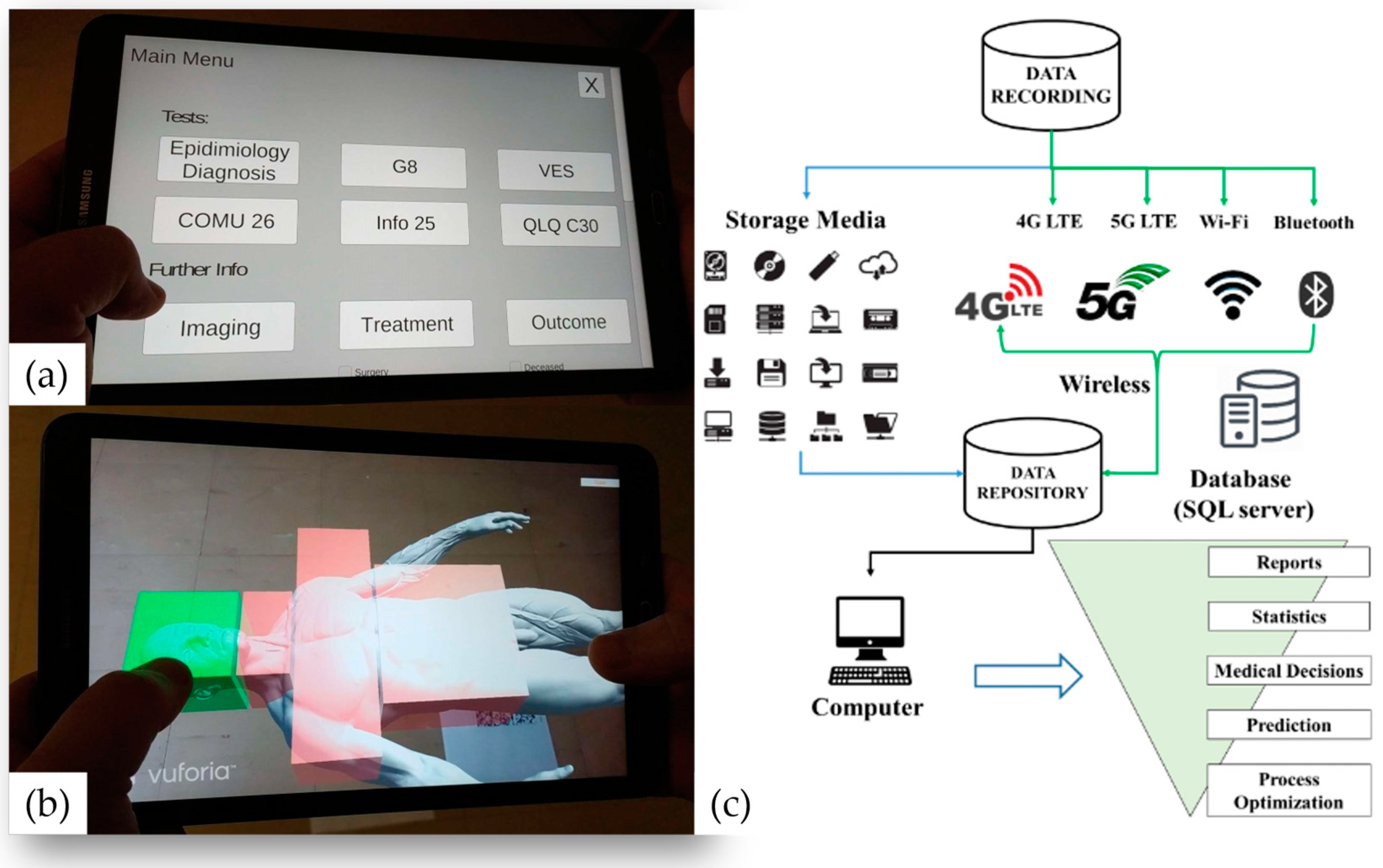

5.5.2. Towards Personalized Healthcare with Augmented Reality and Digital Twins

5.5.3. Future Prediction and Growth Support

6. Discussion

6.1. Humachine Framework

- Enhanced productivity and efficiency: Humachines can augment human capabilities with the speed, accuracy, and consistency of machines, leading to higher productivity and efficiency in many industries;

- Improved decision making: combining human reasoning and intuition with Machine Learning algorithms can lead to better decision making, reducing errors and improving outcomes;

- Advanced healthcare: Humachines can help healthcare professionals in diagnoses, treatment planning, and monitoring, leading to more accurate and personalized healthcare;

- Innovation and creativity: by collaborating with machines, humans can access vast amounts of data, tools, and insights that can fuel innovation and creativity in various fields;

- Automation of mundane tasks: automation of repetitive and mundane tasks can free up human time and energy to focus on more meaningful and creative tasks, leading to higher job satisfaction and engagement.

6.2. Latest Advances in AI

6.3. Limitations and Risks of AI Adoption

7. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Leng, J.; Sha, W.; Wang, B.; Zheng, P.; Zhuang, C.; Liu, Q.; Wuest, T.; Mourtzis, D.; Wang, L. Industry 5.0: Prospect and retrospect. J. Manuf. Syst. 2022, 65, 279–295.

- Huang, S.; Wang, B.; Li, X.; Zheng, P.; Mourtzis, D.; Wang, L. Industry 5.0 and Society 5.0—Comparison, complementation and co-evolution. J. Manuf. Syst. 2022, 64, 424–428.

- Di Marino, C.; Rega, A.; Vitolo, F.; Patalano, S. Enhancing Human-Robot Collaboration in the Industry 5.0 Context: Workplace Layout Prototyping. In Advances on Mechanics, Design Engineering and Manufacturing IV: Proceedings of the International Joint Conference on Mechanics, Design Engineering & Advanced Manufacturing, JCM 2022, Ischia, Italy, 1–3 June 2022; Springer International Publishing: Cham, Switzerland, 2022; pp. 454–465.

- Mourtzis, D. Design and Operation of Production Networks for Mass Personalization in the Era of Cloud Technology; Elsevier: Amsterdam, The Netherlands, 2021; pp. 1–393.

- Firyaguna, F.; John, J.; Khyam, M.O.; Pesch, D.; Armstrong, E.; Claussen, H.; Poor, H.V. Towards industry 5.0: Intelligent reflecting surface (irs) in smart manufacturing. arXiv 2022, arXiv:2201.02214.

- Sanders, N.R.; Wood, J.D. The Humachine: Humankind, Machines, and the Future of Enterprise; Routledge: Abingdon, UK, 2019.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. From industry 4.0 to society 4.0: Identifying challenges and opportunities. In Proceedings of the International Conference on Computers and Industrial Engineering, CIE, Beijing, China, 18–21 October 2019.

- UNESCO. Japan Pushing Ahead with Society 5.0 to Overcome Chronic Social Challenges. UNESCO Science Report: Towards 2030. 2019. Available online: https://www.unesco.org/en/articles/japan-pushing-ahead-society-5 (accessed on 31 March 2023).

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. A Literature Review of the Challenges and Opportunities of the Transition from Industry 4.0 to Society 5.0. Energies 2022, 15, 6276.

- Ivanov, D. The Industry 5.0 framework: Viability-based integration of the resilience, sustainability, and human-centricity perspectives. Int. J. Prod. Res. 2022, 61, 1683–1695.

- Saadati, Z.; Barenji, R.V. Toward Industry 5.0: Cognitive Cyber-Physical System. In Industry 4.0: Technologies, Applications, and Challenges; Springer Nature: Singapore, 2022; pp. 257–268.

- Özdemir, V.; Hekim, N. Birth of industry 5.0: Making sense of big data with artificial intelligence, “the internet of things” and next-generation technology policy. Omics J. Integr. Biol. 2018, 22, 65–76.

- Aslam, F.; Aimin, W.; Li, M.; Ur Rehman, K. Innovation in the era of IoT and industry 5.0: Absolute innovation management (AIM) framework. Information 2020, 11, 124.

- Maddikunta, P.K.R.; Pham, Q.V.; Prabadevi, B.; Deepa, N.; Dev, K.; Gadekallu, T.R.; Liyanage, M. Industry 5.0: A survey on enabling technologies and potential applications. J. Ind. Inf. Integr. 2022, 26, 100257.

- Mourtzis, D.; Panopoulos, N.; Angelopoulos, J.; Wang, B.; Wang, L. Human centric platforms for personalized value creation in metaverse. J. Manuf. Syst. 2022, 65, 653–659.

- Ayhan, E.E.; Akar, Ç. Society 5.0 Vision in Contemporary Inequal World. In Society 5.0 A New Challenge to Humankind’s Future; Sarıipek, D.B., Peluso, P., Eds.; Okur Yazar Association: İstanbul, Turkey, 2022; p. 133.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Blockchain Integration in the Era of Industrial Metaverse. Appl. Sci. 2023, 13, 1353.

- Sá, M.J.; Santos, A.I.; Serpa, S.; Miguel, F.C. Digitainability—Digital competences post-COVID-19 for a sustainable society. Sustainability 2021, 13, 9564.

- Ciobanu, A.C.; Meșniță, G. AI Ethics for Industry 5.0—From Principles to Practice. In Proceedings of the Workshop of I-ESA, Valencia, Spain, 24–25 March 2022; Volume 22.

- Rojas, C.N.; Peñafiel, G.A.A.; Buitrago, D.F.L.; Romero, C.A.T. Society 5.0: A Japanese concept for a superintelligent society. Sustainability 2021, 13, 6567.

- Alimohammadlou, M.; Khoshsepehr, Z. The role of Society 5.0 in achieving sustainable development: A spherical fuzzy set approach. Environ. Sci. Pollut. Res. 2023, 30, 47630–47654.

- Martynov, V.V.; Shavaleeva, D.N.; Zaytseva, A.A. Information technology as the basis for transformation into a digital society and industry 5.0. In 2019 International Conference “Quality Management, Transport and Information Security, Information Technologies” (IT&QM&IS); IEEE: Piscataway, NJ, USA, 2019; pp. 539–543.

- Fukuda, K. Science, technology and innovation ecosystem transformation toward society 5.0. Int. J. Prod. Econ. 2020, 220, 107460.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. A Teaching Factory Paradigm for Personalized Perception of Education based on Extended Reality (XR). SSRN Electron. J. 2022.

- Intelligent Machines—Humachines, From the Editor in Chief John Benditt. MIT Technology Review, 1 May 1999. Available online: https://www.technologyreview.com/1999/05/01/275799/humachines/ (accessed on 31 March 2023).

- Oxford English Dictionary. “Machine”. Available online: https://www.oxfordlearnersdictionaries.com/definition/english/machine_1 (accessed on 31 March 2023).

- Mourtzis, D. Simulation in the design and operation of manufacturing systems: State of the art and new trends. Int. J. Prod. Res. 2020, 58, 1927–1949.

- Van Eck, N.; Waltman, L. Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 2010, 84, 523–538.

- Ho, M.R.; Smyth, T.N.; Kam, M.; Dearden, A. Human-computer interaction for development: The past, present, and future. Inf. Technol. Int. Dev. 2009, 5, 1.

- Duric, Z.; Gray, W.D.; Heishman, R.; Li, F.; Rosenfeld, A.; Schoelles, M.J.; Wechsler, H. Integrating perceptual and cognitive modeling for adaptive and intelligent human-computer interaction. Proc. IEEE 2002, 90, 1272–1289.

- McFarlane, D.C.; Latorella, K.A. The scope and importance of human interruption in human-computer interaction design. Hum. Comput. Interact. 2002, 17, 1–61.

- Romero, D.; Stahre, J.; Wuest, T.; Noran, O.; Bernus, P.; Fast-Berglund, Å.; Gorecky, D. Towards an operator 4.0 typology: A human-centric perspective on the fourth industrial revolution technologies. In Proceedings of the International Conference on Computers and Industrial Engineering (CIE46), Tianjin, China, 29–31 October 2016.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Operator 5.0: A survey on enabling technologies and a framework for digital manufacturing based on extended reality. J. Mach. Eng. 2022, 22, 43–69.

- Jeon, M. Emotions and affect in human factors and human–computer interaction: Taxonomy, theories, approaches, and methods. Emot. Affect. Hum. Factors Hum. Comput. Interact. 2017, 3–26.

- Coetzer, J.; Kuriakose, R.B.; Vermaak, H.J. Collaborative decision-making for human-technology interaction-a case study using an automated water bottling plant. J. Phys. Conf. Ser. 2020, 1577, 012024.

- Wójcik, M. Augmented intelligence technology. The ethical and practical problems of its implementation in libraries. Libr. Hi Tech 2021, 39, 435–447.

- De Felice, F.; Petrillo, A.; De Luca, C.; Baffo, I. Artificial Intelligence or Augmented Intelligence? Impact on our lives, rights and ethics. Procedia Comput. Sci. 2022, 200, 1846–1856.

- Lepenioti, K.; Bousdekis, A.; Apostolou, A.; Mentzas, G. Human-Augmented Prescriptive Analytics with Interactive Multi-Objective Reinforcement Learning. IEEE Access 2021, 9, 100677–100693.

- Li, Q.; Sun, M.; Song, Y.; Zhao, D.; Zhang, T.; Zhang, Z.; Wu, J. Mixed reality-based brain computer interface system using an adaptive bandpass filter: Application to remote control of mobile manipulator. Biomed. Signal Process. Control. 2023, 83, 104646.

- Middendorf, M.; McMillan, G.; Calhoun, G.; Jones, K.S. Brain-computer interfaces based on the steady-state visual-evoked response. IEEE Trans. Rehabil. Eng. 2000, 8, 211–214.

- Kubacki, A. Use of Force Feedback Device in a Hybrid Brain-Computer Interface Based on SSVEP, EOG and Eye Tracking for Sorting Items. Sensors 2021, 21, 7244.

- Huang, D.; Wang, M.; Wang, J.; Yan, J. A Survey of Quantum Computing Hybrid Applications with Brain-Computer Interface. Cogn. Robot. 2022, 2, 164–176.

- Liu, L.; Wen, B.; Wang, M.; Wang, A.; Zhang, J.; Zhang, Y.; Le, S.; Zhang, L.; Kang, X. Implantable Brain-Computer Interface Based On Printing Technology. In Proceedings of the 2023 11th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 20–22 February 2023; pp. 1–5.

- Mu, W.; Fang, T.; Wang, P.; Wang, J.; Wang, A.; Niu, L.; Bin, J.; Liu, L.; Zhang, J.; Jia, J.; et al. EEG Channel Selection Methods for Motor Imagery in Brain Computer Interface. In Proceedings of the 2022 10th International Winter Conference on Brain-Computer Interface (BCI), Gangwon-do, Republic of Korea, 21–23 February 2022; pp. 1–6.

- Cho, J.H.; Jeong, J.H.; Kim, M.K.; Lee, S.W. Towards Neurohaptics: Brain-computer interfaces for decoding intuitive sense of touch. In Proceedings of the 2021 9th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 22–24 February 2021; pp. 1–5.

- Zhang, Y.; Xie, S.Q.; Wang, H.; Zhang, Z. Data analytics in steady-state visual evoked potential-based brain–computer interface: A review. IEEE Sens. J. 2020, 21, 1124–1138.

- Bussmann, S. An agent-oriented architecture for holonic manufacturing control. In Proceedings of the First International Workshop on IMS, Lausanne, Switzerland, 15–17 April 1998; pp. 1–12.

- Cimini, C.; Pirola, F.; Pinto, R.; Cavalieri, S. A human-in-the-loop manufacturing control architecture for the next generation of production systems. J. Manuf. Syst. 2020, 54, 258–271.

- Frank, M.; Roehrig, P.; Pring, B. What to Do When Machines Do Everything: How to Get ahead in a World of AI, Algorithms, Bots, and Big Data; John Wiley & Sons: Hoboken, NJ, USA, 2017; Available online: https://books.google.gr/books (accessed on 31 March 2023).

- Wilson, H.J.; Daugherty, P.R. Collaborative intelligence: Humans and AI are joining forces. Harv. Bus. Rev. 2018, 96, 114–123. Available online: https://hbr.org/2018/07/collaborative-intelligence-humans-and-ai-are-joining-forces (accessed on 31 March 2023).

- Gill, H. From vision to reality: Cyber-physical systems. In Proceedings of the HCSS National Workshop on New Research Directions for High Confidence Transportation CPS: Automotive, Aviation, and Rail, Washington, DC, USA, 18–20 November 2008; pp. 1–29.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N.; Kardamakis, D. A smart IoT platform for oncology patient diagnosis based on ai: Towards the human digital twin. Procedia CIRP 2021, 104, 1686–1691.

- Miller, M.E.; Spatz, E. A unified view of a human digital twin. Hum. Intell. Syst. Integr. 2022, 4, 23–33.

- Zhang, Z.; Wen, F.; Sun, Z.; Guo, X.; He, T.; Lee, C. Artificial intelligence-enabled sensing technologies in the 5G/internet of things era: From virtual reality/augmented reality to the digital twin. Adv. Intell. Syst. 2022, 4, 2100228.

- Lin, Y.; Chen, L.; Ali, A.; Nugent, C.; Ian, C.; Li, R.; Gao, D.; Wang, H.; Wang, Y.; Ning, H. Human Digital Twin: A Survey. arXiv 2022, arXiv:2212.05937.

- Casadei, R.; Pianini, D.; Viroli, M.; Weyns, D. Digital twins, virtual devices, and augmentations for self-organising cyber-physical collectives. Appl. Sci. 2022, 12, 349.

- Montoro, G.; Haya, P.A.; Baldassarri, S.; Cerezo, E.; Serón, F.J. A Study of the Use of a Virtual Agent in an Ambient Intelligence Environment. In Intelligent Virtual Agents. IVA 2008; Lecture Notes in Computer Science; Prendinger, H., Lester, J., Ishizuka, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2022; Volume 5208.

- Bell, I.H.; Nicholas, J.; Alvarez-Jimenez, M.; Thompson, A.; Valmaggia, L. Virtual reality as a clinical tool in mental health research and practice. Dialogues Clin. Neurosci. 2020, 22, 169–177.

- Boulos, M.N.K.; Zhang, P. Digital Twins: From Personalised Medicine to Precision Public Health. J. Pers. Med. 2021, 11, 745.

- Barricelli, B.R.; Casiraghi, E.; Gliozzo, J.; Petrini, A.; Valtolina, S. Human digital twin for fitness management. IEEE Access 2020, 8, 26637–26664.

- Douthwaite, J.A.; Lesage, B.; Gleirscher, M.; Calinescu, R.; Aitken, J.M.; Alexander, R.; Law, J. A modular digital twinning framework for safety assurance of collaborative robotics. Front. Robot. AI 2021, 8, 758099.

- Ravid, B.Y.; Aharon-Gutman, M. The Social Digital Twin: The Social Turn in the Field of Smart Cities. Environ. Plan. B Urban Anal. City Sci. 2022.

- Ye, X.; Du, J.; Han, Y.; Newman, G.; Retchless, D.; Zou, L.; Cai, Z. Developing human-centered urban digital twins for community infrastructure resilience: A research agenda. J. Plan. Lit. 2023, 38, 187–199.

- Singh, M.; Fuenmayor, E.; Hinchy, E.P.; Qiao, Y.; Murray, N.; Devine, D. Digital twin: Origin to future. Appl. Syst. Innov. 2021, 4, 36.

- Aydın, N.; Erdem, O.A. A Research on the New Generation Artificial Intelligence Technology Generative Pretraining Transformer 3. In Proceedings of the 2022 3rd International Informatics and Software Engineering Conference (IISEC), Ankara, Turkey, 15–16 December 2022; pp. 1–6.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Aydın, Ö.; Karaarslan, E. OpenAI ChatGPT Generated Literature Review: Digital Twin in Healthcare. In Emerging Computer Technologies 2; Aydın, Ö., Ed.; İzmir Akademi Dernegi: İzmir, Turkey, 2022; pp. 22–31.

- Lund, B.D.; Wang, T. Chatting about ChatGPT: How may AI and GPT impact academia and libraries? Libr. Hi Tech News 2023.

Similarities between Industry 5.0 and Society 5.0

| Challenges | Opportunities |
|---|---|
| 1. Aging population | 1. Human–cyber-physical systems (HCPS) |
| 2. Resource shortages | 2. Green intelligent manufacturing (GIM) |
| 3. Environmental pollution | 3. Human–robot collaboration (HRC) |
| 4. Complex international situations | 4. Future jobs and operators 5.0 |
| | 5. Human digital twins (HDTs) |

| Term | Definition |
|---|---|
| Human | “Relating to or characteristic of humankind. … Of or characteristic of people as opposed to God or animals or machines, especially in being susceptible to weaknesses. … Showing the better qualities of humankind, such as kindness.” |
| Machine | “An apparatus using mechanical power and having several parts, each with a definite function and together performing a particular task. … Any device that transmits a force or directs its application. … An efficient and well-organized group of powerful people. … A person who acts with the mechanical efficiency of a machine.” |
| Humachine | The combination of the better qualities of humankind—creativity, intuition, compassion, and judgment—with the mechanical efficiency of a machine—economies of scale, Big Data processing capabilities—augmented by Artificial Intelligence, in such a way as to shed the limitations and vices of both humans and machines while maintaining the virtues of both |

| Cluster 1 (7 Items) | Cluster 2 (7 Items) | Cluster 3 (3 Items) |
|---|---|---|
| Artificial Intelligence | Human–robot interaction | Human-centric |
| Embedded systems | Human–robot collaboration | Human-centric interaction |
| Human–machine interface | Human–robot interaction | Smart manufacturing |
| Industry 4.0 | Human–robot interactions | |
| Industry 5.0 | Industrial research | |
| Internet of Things | Man–machine systems | |
| Machine Learning | Manufacture | |

| Model | No. of Parameters | Training Dataset | Max Sequence Length | Release Date |
|---|---|---|---|---|
| GPT 1 | $0.12 \times 10^{9}$ | Common Crawl, BookCorpus | 1024 | 2018 |
| GPT 2 | $1.5 \times 10^{9}$ | Common Crawl, BookCorpus, Web Text | 2048 | 2019 |
| GPT 3 | $175 \times 10^{9}$ | Common Crawl, BookCorpus, Wikipedia, Books, Articles, etc. | 4096 | 2020 |
| GPT 3.5 | $355 \times 10^{9}$ | Common Crawl, BookCorpus, Wikipedia, Books, Articles, etc. | 4096 | 2022 |
| GPT 4 | $1 \times 10^{12}$ | Common Crawl, BookCorpus, Wikipedia, Books, Articles, etc. | 8192 | 2023 |

| Risk | Description |
|---|---|
| Control problem | AI becomes a singularity with a decisive strategic advantage and goals that are orthogonal to human interests |
| Accountability gap | Those most affected by AI have no ownership or control over its development and deployment |
| Affect recognition | Unethical applications of facial recognition technology to judge interior mental states |
| Surveillance | Intrusive gathering of civilian data that undermines privacy and creates security risks from data breaches |
| Built-in bias | When AI is fed data that contains historical prejudices, resulting in bias in, bias out |
| Weaponization | Using bots to negatively impact the public through social media or cyberattacks |
| Deepfakes | Creating lifelike video fakery to sabotage the subject of the video and undermine public trust |
| Wild AI | Unleashing AI applications in public settings without oversight |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. The Future of the Human–Machine Interface (HMI) in Society 5.0. Future Internet 2023, 15, 162. https://doi.org/10.3390/fi15050162