1. Introduction

Performance testing is widely used and is taken into account in the design of information systems, and of applications in particular. Precise determination of application performance is becoming increasingly important for economic reasons, for the reservation of computing resources, and for reliability. For services running on complex server systems with vertical and horizontal scaling, the lack of precisely defined performance thresholds is not a critical problem. Increasingly, however, applications, including critical systems, run on Internet of Things (IoT) devices with limited hardware resources. Traditional programming paradigms may not be sufficient to manage limited resources such as CPU and RAM effectively. Therefore, more attention should be paid to developing techniques that accurately determine parameters such as application performance and the load placed on individual hardware resources as a function of the number of user queries, response size, database queries, etc.

Initial research showed that the number of tools used in performance testing is very large and that new ones, both commercial and distributed under open-source licenses, are being developed all the time. However, this work also identified a problem with the precise definition of the term “performance test”. The literature contains many different definitions of this concept and many different types of this test. Related to this is a much more serious problem: the difficulty of comparing the results of performance tests of an application installed in different software and hardware environments and performed by different entities. Currently, no consistent methods are available that describe how to perform such comparative tests. Thus, based on the analysis of the current state of knowledge and the gaps identified, the authors propose a new model for defining and implementing performance tests. The main objectives and the authors’ own contributions in this article are as follows:

Conceptual ordering related to the implementation of performance tests.

Development of a coherent model that allows comparative tests to be carried out, taking into account the possibility of analyzing the individual components of the system in both software and hardware aspects.

Testing of the developed solution in real conditions.

This paper is organized as follows:

Section 1 provides an introduction and defines the scope of the work conducted.

Section 2 reviews the scientific and specialized literature related to the area of conducted research and identifies gaps in the current state of knowledge.

Section 3 formulates the problem and proposes a new model for the definition of performance tests.

Section 4 presents the test results of the developed model.

Section 5 concludes the article.

2. Literature Review

As part of the preliminary work, we examined how the performance tests currently described in scientific publications, as well as in the professional and specialized literature, are implemented. The first step was a detailed analysis of the leading publishers and databases of scientific publications: IEEE, Springer, Google Scholar, Elsevier, MDPI (Multidisciplinary Digital Publishing Institute), ScienceDirect, Web of Science, and Scopus. The following keywords were used in the search: performance testing, performance test application, performance methodology, stress testing, load testing, application stress test, web application stress test, web server stress test, performance stress test technology, application bottleneck, web server bottleneck, distributed system performance test, web request performance, TCP performance test, and formal software testing.

The paper [1] presents an analysis of various performance testing methodologies and best practices used to evaluate the performance of software systems, including the importance of testing in detecting bottlenecks and optimizing resource utilization. Various performance tests such as load tests, stress tests, and scalability tests are described. The paper emphasizes the importance of designing appropriate and realistic test cases, including but not limited to the use of real user data, to ensure a better and more accurate performance evaluation. The possibilities of using modern tools and technologies in performance testing are analyzed. The importance of integrating continuous testing into the software development and operation cycle is emphasized, which is key to early identification of performance regressions and facilitates rapid troubleshooting. Also highlighted are the challenges and limitations of performance testing, including the complexity of predicting user behavior, the dynamic nature of distributed systems, and the need for effective analysis of test results. The article focuses mainly on describing test data and solutions. However, it does not include an analysis of real tests conducted by the authors in laboratory or test conditions. The authors of the article [2] presented a study of the impact of Asterisk server hardware configuration on VoIP Quality of Service (QoS). They gradually loaded the server with bulk calls and checked the performance of the CPU and RAM. They also scanned network packets and monitored call quality. They sought to determine the threshold number of bulk connections for a given hardware configuration that still guarantees good QoS. The article is strongly practical in nature. However, it lacks a detailed description of the test scenarios themselves. In the publication [3], a tool called Testing Power Web Stress was developed, providing the ability to perform extreme server testing and to analyze the server response time while a given transaction is executed. Three functional areas were created for testing: within the Local Area Network (LAN), from the computer to the Wide Area Network (WAN), and from the computer to the cloud network and then to the WAN environment. In order to verify the accuracy of its functions, a free network load test tool called Pylot was used as a benchmark. Both tools recorded response times; however, they also encountered limitations such as the inability to analyze different computer specifications and the inability to host a website using Hypertext Transfer Protocol Secure (HTTPS). The article limits the range of tests considered, e.g., no server throughput tests were conducted. There is also no information on the consumption of server resources during the tests. The article [4] compares the performance of network testing tools such as Apache JMeter and SoapUI. The key objectives of the article were to evaluate the key technological differences between Apache JMeter and SoapUI and to examine the benefits and drawbacks associated with the use of these technologies. Ultimately, the authors came to the following conclusions: compared to SoapUI, JMeter is more suitable for client–server architecture. JMeter handles a higher load of HTTP user requests than SoapUI; it is also open source, and no infrastructure is required for its installation. The tool is based on Java, so it is platform independent. JMeter can be used to test the load of large projects and generate more accurate results in graphs and Extensible Markup Language (XML) formats. The article does not show what the test environment looks like, nor does it indicate whether the occupancy of server resources can be tracked during testing. Publication [5] proposes a performance testing scheme for mobile applications based on LoadRunner, an automated testing tool developed in the C programming language. The solution supports performance testing of applications in C, Java, Visual Basic (VB), JavaScript, etc. The test tool can generate a large number of concurrent user sessions, or concurrent users, to realize concurrent load. Real-time performance monitoring makes it possible to perform tests on the business system and, consequently, to detect bottlenecks, e.g., memory leaks, overloaded components, and other system defects. The tests performed are well described, but the paper provides neither a general scheme for conducting the test nor exact methods for tracking key resources of the server under test. The authors of the article [6] describe application performance analysis using Apache JMeter and SoapUI. Apache JMeter is pointed out as a useful tool for testing server performance under heavy load. SoapUI, on the other hand, verifies the quality of service of a specific application under variable load. The authors described how load tests should be designed and created a table showing how to identify whether a test is a load test according to various characteristics. The paper [7] analyzed the impact of more realistic dynamic load on network performance metrics. A typical e-commerce scenario was evaluated and various dynamic user behaviors were reproduced. The results were compared with those obtained using traditional workloads. The authors created their test model and showed what their network topology looks like. To define the dynamic workloads, they used the Dweb model, which allows them to model behaviors that cannot be represented with such accuracy using traditional approaches. The authors found that dynamic workloads degraded server performance more than traditional workloads. The authors of the article [8] aimed to present a method for estimating server load based on measurements of external network traffic obtained at an observation point close to the server. In the study, the time between the TCP SYN and SYN + ACK segments on the server side was measured. It was found that the server response time (SRT) varies throughout the day depending on server load. The concept introduced in paper [9] describes an AI-based framework for autonomous performance testing of software systems. It uses model-free reinforcement learning (specifically Q-learning) with multiple experience bases to determine performance breaking points for CPU-intensive, memory-intensive, and disk-intensive applications. This approach allows the system to learn and optimize stress testing without relying on performance models. However, the article primarily focuses on describing the framework and its theoretical use, without providing practical examples of its implementation. Publication [10] presents an analysis of indicators and testing methods for web performance testing and puts forward several testing processes and methods to optimize the testing strategy. The paper has the merit of providing a detailed definition of stress and load testing and a number of strategies for optimizing test performance. However, its focus is strictly on web application testing, which limits the broader applicability of the tests. Article [11] demonstrates the application of model-driven architecture (MDA) to performance testing. The authors do this by extending their own tool to generate test cases capable of checking the performance-specific behavior of a system. However, as the authors themselves acknowledge, the method requires further development, as it does not provide insight into identifying bottlenecks or how different system resources are utilized. It merely assesses overall system performance, indicating the percentage of tests that passed or failed for a given number of test executions. Paper [12] shows an example of analyzing the behavior of a system in its current server environment and then optimizing the configuration of the service and server, with JMeter as the performance testing tool. The researchers focus on testing application performance with load testing and stress testing. The paper presents a single specific example of testing on one system, which limits its universality. Article [13] presents an approach to load testing of websites based on stochastic models of user behavior. Furthermore, the authors describe the implementation of load testing in a visual modeling and performance testbed generation tool. However, the article is limited to load testing only. It does not describe other types of tests. The example only applies to websites. In work [14], the authors apply load and stress testing to software-defined networking (SDN) controllers. In essence, the article focuses only on answering the question “How much throughput can each controller provide?”. While the article explains why the performance tests were performed and briefly describes the methodology, it does not explain why the specific tests were chosen, nor does it delve into the nature of the testing methodology. The article is highly technical, focusing on test results, but lacks justification for the specific testing approaches used.

The scientific papers presented above on performance testing are often characterized by an overly general approach or are limited to a few specific tools, such as JMeter, without a thorough presentation of the methodology or specific examples of its broader application. Some relied only on brief descriptions of the tests in question, while others mentioned what the tests were about and showed only a brief example of their practical use or of the analysis of the results obtained. In addition, the works mainly focused on two types of tests: stress and load tests. Next, we analyzed specialized industry sources, technical reports, and materials from manufacturers of performance testing solutions. In reports [15,16,17] issued by IXIA, one can find descriptions of test parameter settings and a list of possible load tests with simple usage examples. The official user’s guide to IxLoad [18], which contained similar information, was also analyzed. In addition, a number of articles on load testing were found in the online literature. In [19], the definition of stress testing was presented and the most important aspects were briefly described, such as why to do such tests, what to pay attention to when testing, possible benefits, etc. However, the descriptions, metrics, and examples presented were very vague, e.g., the test presented assumed saturation of server resources but gave no explanation of how to achieve it. The text is limited only to stress tests. Reference [20] also describes in general terms what stress testing is and presents two metrics: the Mean time between failures (MTBF) and the Mean time to failure (MTTF). As before, these metrics are too general, as they assume averaging of time. Article [21] describes the differences between the different tests and proposes several testing tools like JMeter but does not provide an example of how to use them. It is also very vague about the metrics. Similarly, item [22] does not present examples or define relevant metrics to describe test results. Items [23,24] are limited only to defining what stress tests are and explaining the benefits of using them. Item [25], compared to the previous two, additionally presents a list of stress testing tools with their characteristics, but no examples of use. In [26], stress tests are presented based on a JavaScript script that simulates the work of a certain number of users for a set period of time. Item [27] described different types of tests and proposed the BlazeMeter tool for conducting them but presented only a brief example of its use.

In summary, the literature items presented provided only general descriptions of load tests (stress and load) and the differences between them and performance tests. These works pointed to exemplary tools, such as JMeter, without a detailed explanation of the methodology, description of specific metrics, or examples of application. Based on the literature analysis and the authors’ experience, the following main problems can be highlighted:

(1) The lack of official standards, including tools or testing methodologies, results in a lack of uniform test scenarios, leading to divergent approaches to testing systems in practice. When there are no clearly defined standards or guidelines for tools or test methodologies, different teams may use different approaches. In addition, the lack of consistent guidelines can result in tests not being conducted in a complete and reliable manner, jeopardizing the reliability of the results and the effectiveness of the system evaluation. For example, a testing team focusing only on stress testing at the expense of load testing may overlook memory leakage issues.

(2) There is a noticeable lack of experience in test preparation teams. Failure to include in a test scenario all the key aspects and variables that occur in a real operating environment runs the risk that the system may perform well under test conditions but fail to meet requirements in real-world applications. For example, skipping stress testing may result in overlooking anomaly handling problems such as a sudden increase in the number of users.

(3) Subjectivity in performance evaluation. When there are no clearly defined and objective evaluation criteria, evaluators may rely on their own beliefs, experiences, and intuition, which in turn leads to uneven and potentially unfair assessments. Subjectivity in interpreting results can also create conflicts and misunderstandings among different stakeholders, who may have different expectations and priorities. As a result, decision-making based on such results becomes less transparent and more prone to error and manipulation.

(4) There is also a noticeable lack of diversity in performance testing, which in practice translates into being limited to only a few types of tests. This can lead to an incomplete understanding of system performance and its behavior under varying conditions. When only stress tests and load tests are described, other important aspects of performance testing that can provide valuable information about the system are omitted. For example, information on scalability, throughput, and endurance tests is very often missing.

(5) Published works and reports very often give a very elaborate description of the test assumptions and the tools used, but in many cases offer only a theoretical description of the tests, without an application or an example of practical use. The analyzed works lack detailed descriptions of test preparation and implementation. This stage is very often overlooked, even though it is crucial for the results obtained.

4. Experiment and Discussion

This section presents the results of applying the above test scheme to some of the most common types of load tests carried out for two real systems (web servers)—the first, without most additional services such as DNS, running under laboratory conditions, and the second running under production conditions.

All tests were performed using the IXIA Novus One traffic generator and the dedicated IxLoad software. The application operates on layers 4–7 of the ISO/OSI model, which makes it possible to test the efficiency of support for specific state protocols and to examine the server’s response in fixed bandwidth traffic. The parameters of the generator used are shown below:

General specifications: OS: Ixia FlixOS Version 2020.2.82.5; RAM: 8 GB; Hard disk: 800 GB; 4 × 10 GBase-T-RJ-45/SFP+; maximum bandwidth: 10 Gbit/s; complies with standards: IEEE 802.1x, IEEE 802.3ah, IEEE 802.1as, IEEE 802.1Qbb, IEEE 1588v2, IEEE 802.1Qat.

Protocols: data link: Fast Ethernet, Gigabit Ethernet, 10 Gigabit Ethernet; network/transport: TCP/IP, UDP/IP, L2TP, ICMP/IP, IPSec, iSCSI, ARP, SMTP, FTP, DNS, POP3, IMAP, DHCPv6, NFS, RTSP, DHCPv4, IPv4, IPv6, SMB v2, SMB v3; routing: RIP, BGP-4, EIGRP, IGMP, OSPFv2, PIM-SM, OSPFv3, PIM-SSM, RIPng, MLD, IS-ISv6, BGP-4+, MPLS, IS-ISv4; VoIP: SIP, RTP.

4.1. Scenario 1

In the first case, an example of the use of the model bypassing the Network Layer is demonstrated, i.e., . The performance test model without a network layer is useful in scenarios where the objective is to directly evaluate the performance of a server or application (let’s denote this as ). In order to achieve the objective, it was decided to run the following types of tests (elements of the set):

—The Connections per Second test (CPS) provides a rough performance metric and allows for determining how well the server can accept and handle new connections. The test aims to establish the maximum number of connections (without transactions) required to maximize CPU resource utilization.

—The throughput test determines the maximum throughput of a server. It should be noted, however, that this refers to the throughput of the application layer and not to a general measurement of throughput as the total number of bits per second transmitted on the physical medium.

In addition, the following elements of the sets were defined:

For : —TCP/UDP Packet Generators, —Servers: Web, Database; —OS Built-in Monitoring Tools.

For : , —HTTP Request Generators, , .

The test notation for the scenario 1 target is as follows:

In this example, the test object was an HTML page hosted on an Apache 2.4 server. Services such as DNS, SSL, or a firewall (possible bottlenecks) were disabled. The system was hosted on a server with the following parameters: OS: Debian 12; RAM: 8 GB; Hard disk: 800 GB; CPU: Intel Pentium i5. The topological pattern of the test is shown in Figure 6. In addition, all data were collected on a dedicated desktop computer which acted as DATA COLLECTOR (DC) and DATA ANALYZER (DA) (see Figure 3).

During the test, TCP connections were attempted and HTTP requests were sent using the GET method to an example resource on the server, in this case a web page called index.html (size: 340 bytes). The web page was almost completely devoid of content. As can be seen in Figure 6, the system contains no additional devices; communication takes place directly between the traffic generator and the server. Network traffic passes through a 1 Gbit/s network interface. The built-in resource usage monitor of Debian 12 was used as the monitoring agent.
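The paper does not say how the built-in Debian monitor derives its utilization figures. As one plausible illustration, the sketch below computes CPU utilization from two snapshots of the aggregate `cpu` line of `/proc/stat`, the standard Linux source for such counters. The function names and the sample values are hypothetical and are not taken from the authors' setup.

```python
from typing import Tuple


def parse_cpu_line(line: str) -> Tuple[int, int]:
    """Return (idle_ticks, total_ticks) from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if len(fields) < 5 or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line of /proc/stat")
    # Field order: user nice system idle iowait irq softirq steal (guest fields ignored).
    ticks = [int(value) for value in fields[1:9]]
    idle = ticks[3] + (ticks[4] if len(ticks) > 4 else 0)  # idle + iowait
    return idle, sum(ticks)


def cpu_utilization(before: str, after: str) -> float:
    """CPU utilization in percent between two snapshots of the 'cpu' line."""
    idle_before, total_before = parse_cpu_line(before)
    idle_after, total_after = parse_cpu_line(after)
    delta_total = total_after - total_before
    if delta_total <= 0:
        raise ValueError("snapshots must be taken at different times")
    delta_idle = idle_after - idle_before
    return 100.0 * (1.0 - delta_idle / delta_total)


# Hypothetical snapshots taken a short interval apart during a test run.
snapshot_1 = "cpu 100 0 100 800 0 0 0 0 0 0"
snapshot_2 = "cpu 200 0 200 900 0 0 0 0 0 0"
print(f"CPU utilization: {cpu_utilization(snapshot_1, snapshot_2):.1f}%")
```

On a live system the two strings would be read from the first line of `/proc/stat` at the start and end of a sampling interval.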

Test . Below (Figure 7) is a graph of the number of connections per second as a function of time and a report (Figure 8) for the CPS test ().

As can be read from Figure 7, the web server was able to handle 30,000 connections per second with a success rate of 92.57% (3,887,403 successful transactions out of 4,199,327 total attempts; see Figure 8). These results mean that the limit on the number of connections that the web server can still establish without a complete communication breakdown has been reached. These results are within expectations.
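The success rate quoted above follows directly from the two counters in the report. A minimal sketch of the arithmetic, with a hypothetical helper name `success_rate`:

```python
def success_rate(successful: int, attempted: int) -> float:
    """Share of successful transactions among all attempts, in percent."""
    if attempted <= 0:
        raise ValueError("attempted must be positive")
    if not 0 <= successful <= attempted:
        raise ValueError("successful must lie between 0 and attempted")
    return 100.0 * successful / attempted


# Counters reported for the CPS test in scenario 1.
print(f"{success_rate(3_887_403, 4_199_327):.2f}%")  # prints 92.57%
```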

Test . In the next step, the support of maximum throughput was tested (the throughput test).

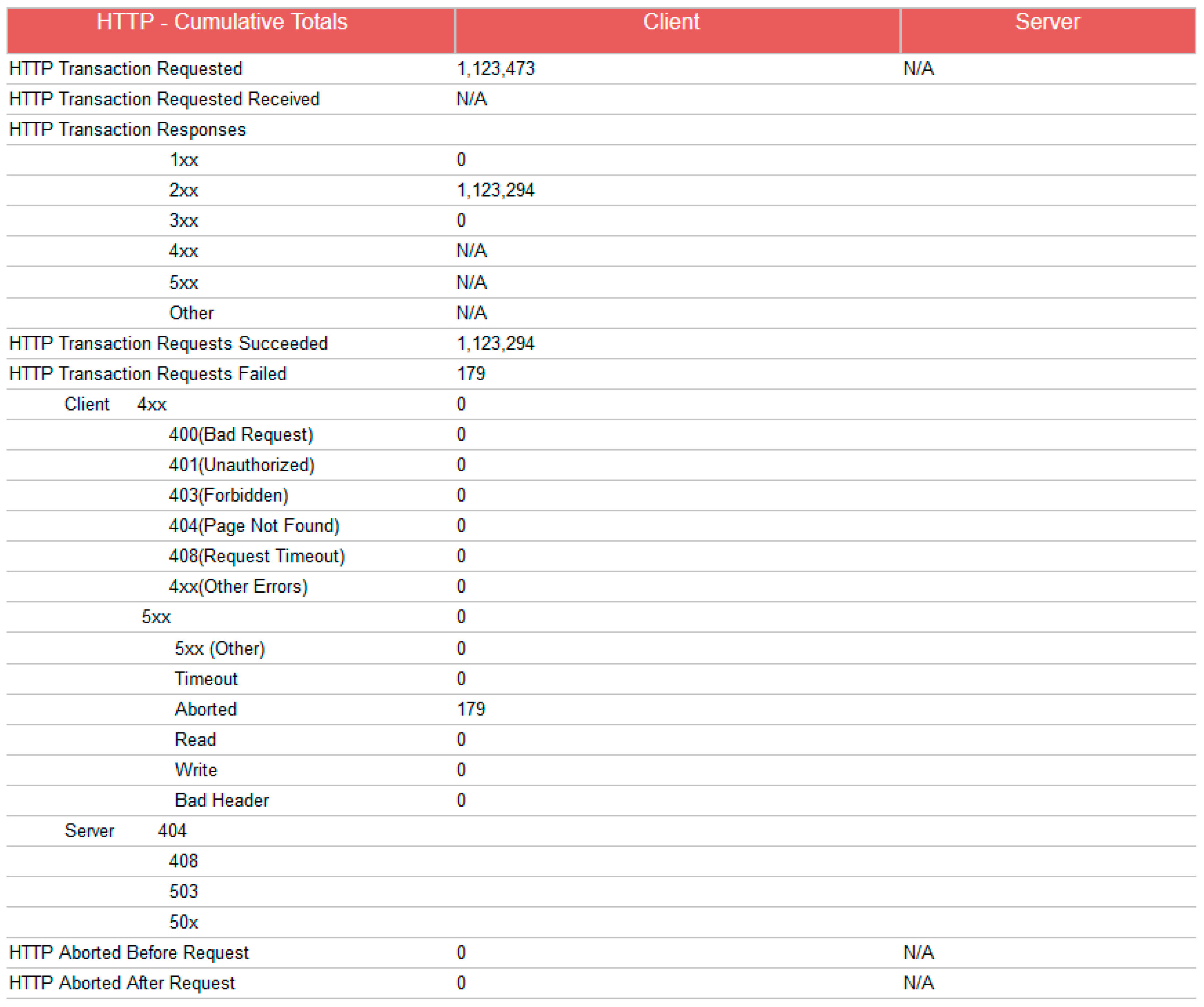

As can be seen in Figure 9, almost the entire 1 Gbit/s communication bandwidth was used on the test server while maintaining almost 100% communication correctness (1,123,294 successful transactions out of 1,123,473 total attempts; see Figure 10). These results are within expectations.

4.2. Scenario 2

Scenario 2 demonstrates an example of the use of a model that considers all three layers, i.e., . This model of performance testing is useful in scenarios where the goal () is to assess the resilience of the system to operate under heavy load. In order to meet the objective, it was decided to run the following types of tests (elements of the set):

—Described in scenario 1.

—Described in scenario 1.

—The concurrent connection (CC) test allows you to determine the maximum number of active simultaneous TCP sessions that the server is able to maintain. In other words, it provides an answer to the question of how many concurrently active sessions the server is able to maintain before it runs out of memory.

In addition, the following elements of the sets were defined (only those elements of the sets that were not defined in scenario 1 will be described):

For : , —Routers, Switches, Firewalls, —Data Analysis Tools: Grafana,

For : , , ,

For : , ,

The test notation for the scenario 2 target is as follows:

Including the network layer in the performance test model allows for a more realistic simulation of actual system operating conditions. Such a model takes into account the impact of network devices and network topology on server and application performance.

In the second scenario, the test object was again a web server, but this time a much more complex system: a working e-learning platform. To replicate real-life conditions as closely as possible, the tests were performed with a DNS server and an SSL certificate in place. The web server functions are implemented by two virtual machines running on a server managed by the ESXi hypervisor. The first machine hosts the Moodle platform, while the second hosts the PostgreSQL database associated with Moodle. The test topology for scenario 2 is shown in Figure 11.

As can be seen in Figure 11, the system is much more complex than the previous one, because the system chosen for testing is as close as possible to real systems. This time, network traffic is not sent directly to the server but passes through an intermediate network layer (a real campus network loaded with normal user traffic).

Test . Below (Figure 12) is a graph of the number of connections per second (CPS) over time, generated by the IxLoad software.

The test on the e-learning platform showed (see Figure 12) that the server is able to handle a maximum of around 450–500 connections per second (this value stabilizes after approximately 40 s). These results are well below expectations.

In addition, it can be seen in Figure 13 that after about 40 s there is a sharp increase in the number of concurrent connections, which is a sign of abnormal communication with the server.

As can be read from Figure 14, out of 280,665 HTTP requests, a correct response (2xx) was received for 10,486 attempts, while 270,177 requests were rejected with an error (5xx abort). This error usually means that the server’s resources have been exhausted. The CPU, RAM, and disk usage percentages on the virtual machines with Moodle and the database are shown below.
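To make the split between successful and failed responses easier to compare across runs, the status codes can be grouped by class and expressed as shares of all requests. The sketch below is only an illustration: the helper names are hypothetical, and the per-class counts are the ones quoted above rather than raw IxLoad output.

```python
from collections import Counter
from typing import Dict, Iterable


def tally_status_classes(status_codes: Iterable[int]) -> Dict[str, int]:
    """Count responses per HTTP status class, e.g. 200 -> '2xx', 503 -> '5xx'."""
    return dict(Counter(f"{code // 100}xx" for code in status_codes))


def class_shares(counts: Dict[str, int], total_requests: int) -> Dict[str, float]:
    """Share of each status class among all requests sent, in percent."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return {cls: 100.0 * n / total_requests for cls, n in counts.items()}


# Per-class counts as reported for the first CPS test in scenario 2.
reported = {"2xx": 10_486, "5xx": 270_177}
for status_class, share in class_shares(reported, 280_665).items():
    print(f"{status_class}: {share:.2f}%")
```

With raw per-request codes available, `tally_status_classes` would produce the `reported` dictionary directly.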

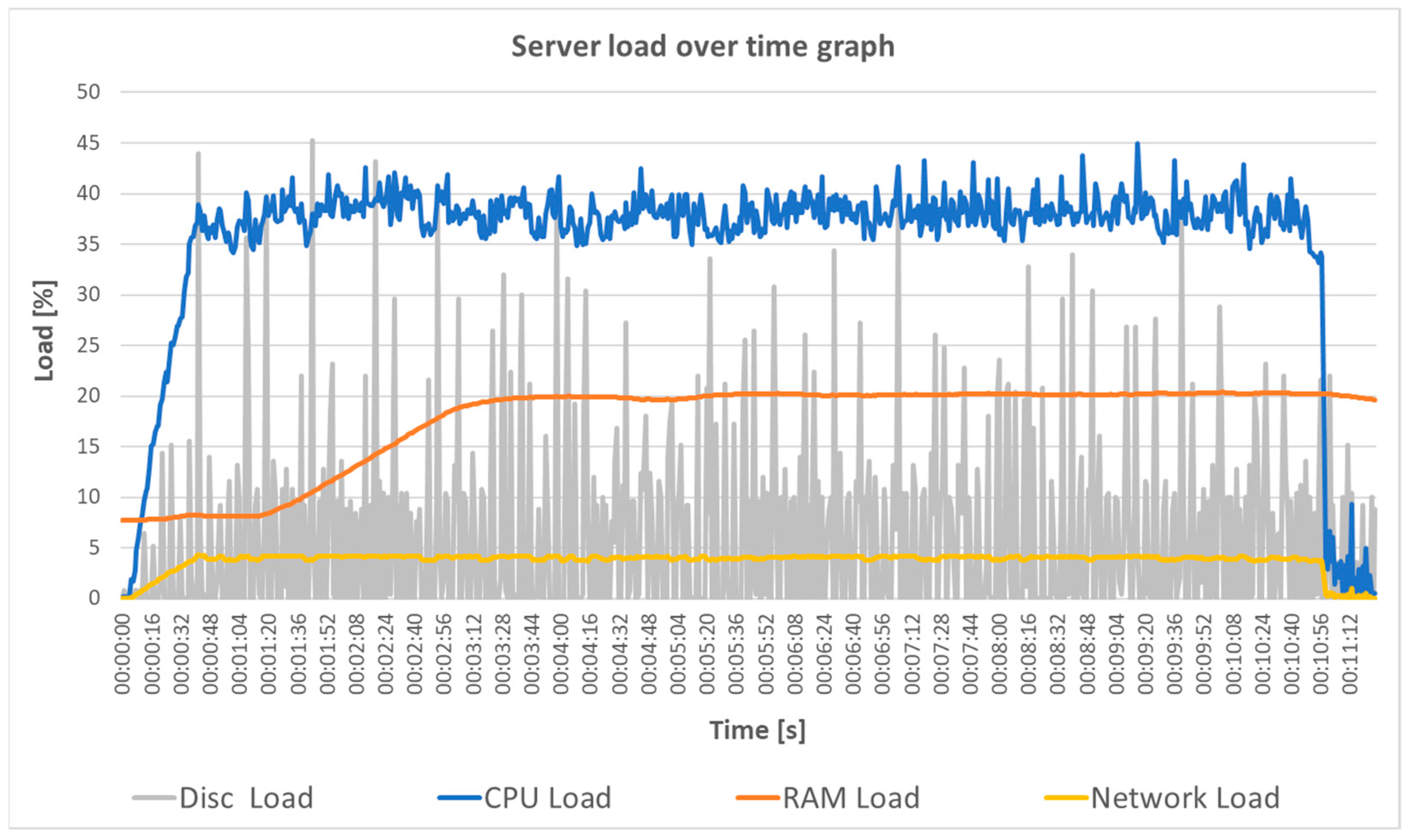

As can be seen in Figure 15, around the 40th second there is a 10% increase in RAM usage (approximately 13 GB) due to the increase in concurrent connections, while the overall resource usage on the Moodle machine does not exceed 50%. For the database VM, by contrast, CPU usage is 100% from around the 40th second and disk usage is around 75–80% (Figure 16).

Taking this into account, the test was repeated, but this time limiting the number of generated connections to 400 per second to determine the maximum CPS value at which the server still maintained 100% correct communication. The limit of 400 connections per second was chosen based on the observation that the database VM’s CPU usage reached 100% and RAM usage increased significantly during the initial test. By reducing the connection rate, we aimed to identify the threshold at which the server could handle the load efficiently without reaching critical resource limits. Additionally, this approach allowed us to verify whether the performance issues consistently occur above 400 CPS or if this value was an anomaly.
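The paper repeats the test at a single, manually chosen limit. If the threshold had to be located more systematically, one option (not the authors' procedure) is a binary search over the CPS limit. The sketch assumes a hypothetical callback `run_test(limit)` that runs one CPS test at the given limit and returns the success rate in percent, and it assumes that the success rate does not improve as the limit grows.

```python
from typing import Callable


def max_clean_cps(run_test: Callable[[int], float],
                  low: int, high: int, step: int = 10) -> int:
    """Largest limit low + k*step <= high for which run_test reports 100% success.

    Assumes monotonic behavior: if a limit loses requests, every higher
    limit loses requests as well.
    """
    if step <= 0 or high < low:
        raise ValueError("need step > 0 and high >= low")
    if run_test(low) < 100.0:
        raise ValueError("requests are lost even at the lowest limit")
    best = low
    lo_idx, hi_idx = 0, (high - low) // step
    while lo_idx <= hi_idx:
        mid_idx = (lo_idx + hi_idx) // 2
        limit = low + mid_idx * step
        if run_test(limit) >= 100.0:
            best = limit          # clean run: try a higher limit
            lo_idx = mid_idx + 1
        else:
            hi_idx = mid_idx - 1  # losses: try a lower limit
    return best


def simulated_server(limit: int) -> float:
    """Stand-in for a real test run: clean up to 400 CPS, lossy above (hypothetical)."""
    return 100.0 if limit <= 400 else 50.0


print(max_clean_cps(simulated_server, low=100, high=500))
```

Each call to `run_test` corresponds to a full test run, so the logarithmic number of runs matters more here than in a typical search.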

At this point, it should be noted that the

test was performed with different call values for the

generator, which was defined as TCP/UDP Packet Generators. It is possible to define more precisely the generator used along with its call parameters, e.g.,

—TCP/UDP Packet Generators (without CPS limit),

—TCP/UDP Packet Generators (with a 400 CPS limit), in which case the test description could be written as follows:

The level of detail in describing a given testing process depends on the requirements defined by the project team.

Figure 17 shows the CPS over time for the repeated test: 400 CPS, as assumed. The number of concurrent connections (Figure 18), although lower than in the first test, is still relatively high, which means that the server load is extremely close to resource saturation; although 100% correct packet transmission was obtained, delays already occur (hence the relatively high number of concurrent connections). Admittedly, Figure 19 shows a 100% success rate for 250,007 HTTP requests, and an analysis of resource usage on the Moodle virtual machine (see Figure 20) shows that the server still has a significant amount of free computing resources, but Figure 21 also shows that CPU usage on the database VM fluctuates around 95%. In summary, 400 connections per second is the extreme value that the server is able to handle without packet loss.
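The link between delays and concurrent connections drawn above can be made explicit with Little's law: in steady state, the mean number of open connections equals the connection arrival rate multiplied by the mean connection lifetime. The sketch below is an illustration under that steady-state assumption; the function names and the lifetimes are hypothetical, since the paper reports the concurrent connection counts only graphically.

```python
def expected_concurrent_connections(cps: float, mean_lifetime_s: float) -> float:
    """Little's law: mean open connections = arrival rate * mean lifetime."""
    if cps < 0 or mean_lifetime_s < 0:
        raise ValueError("cps and mean_lifetime_s must be non-negative")
    return cps * mean_lifetime_s


def mean_connection_lifetime(concurrent: float, cps: float) -> float:
    """Inverse form: mean lifetime in seconds implied by an observed concurrency."""
    if cps <= 0:
        raise ValueError("cps must be positive")
    return concurrent / cps


# At a fixed rate of 400 CPS, slower responses alone raise the concurrency.
for lifetime in (0.25, 0.5):  # hypothetical mean lifetimes in seconds
    print(lifetime, expected_concurrent_connections(400, lifetime))
```

Read this way, a high number of concurrent connections at a capped connection rate points to longer connection lifetimes, which is consistent with the delays noted in the text.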

Test . The CC test was performed as the next step.

As can be seen in Figure 22, the maximum number of concurrent connections obtained is approximately 2200. RAM consumption increased by approximately 10% (13 GB; Figure 23), but the number of concurrent connections is once again significantly lower than expected. Analyzing Figure 24, it can be seen that the CPU consumption on the database VM has again reached 100%.

Test . In the last step, the support of maximum throughput was tested (the throughput test).

As can be seen from Figure 25, the maximum throughput is 112 Mbps. At this throughput, the correctness of communication is almost 100% (Figure 26). However, as in the previous tests, this value is lower than expected. Again, Figure 27 shows that plenty of free resources remain on the virtual machine with Moodle, while on the virtual machine with the database the CPU resources are fully saturated (Figure 28).

The experiments carried out in this section have shown how to use the proposed model to implement the tests. These tests were performed on two different web servers in order to evaluate their performance and identify potential problems associated with their operation. The first stage of testing was carried out on a simple web server, where the results were as expected. This server achieved relatively high CPS and throughput values, confirming that under laboratory conditions the tests prepared on the basis of the proposed framework work correctly and effectively measure server performance.

The second stage of testing included a system operating in production conditions. The tests showed a decrease in performance with increasing intensity of generated loads. CPS, CC, and throughput values were much lower than expected, which suggests the existence of performance problems at the configuration level or at the system level. Further analysis revealed that the main cause of these problems was the saturation of processor resources on the virtual machine on which the database is running. The use of the proposed model turned out to be crucial in the process of preparing the testing process and interpretation of results by different teams working on different components, which allowed for a relatively quick and precise diagnosis.
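The diagnosis described above amounts to comparing per-component utilization against a saturation threshold. A minimal sketch of that comparison follows; the host names, the 95% threshold, and the Moodle figures are illustrative assumptions (the text only states that Moodle usage stayed below 50%), while the database values echo the ranges reported for scenario 2.

```python
from typing import Dict, List, Tuple


def saturated_resources(usage: Dict[str, Dict[str, float]],
                        threshold: float = 95.0) -> List[Tuple[str, str, float]]:
    """Return (host, resource, percent) entries at or above the threshold, highest first."""
    hits = [
        (host, resource, percent)
        for host, resources in usage.items()
        for resource, percent in resources.items()
        if percent >= threshold
    ]
    return sorted(hits, key=lambda hit: hit[2], reverse=True)


# Peak utilization per virtual machine, in percent (partly hypothetical values).
peak_usage = {
    "moodle_vm": {"cpu": 45.0, "ram": 40.0, "disk": 20.0},
    "database_vm": {"cpu": 100.0, "ram": 50.0, "disk": 78.0},
}
for host, resource, percent in saturated_resources(peak_usage):
    print(f"bottleneck candidate: {host} {resource} at {percent:.0f}%")
```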

Table 1 compares the key characteristics of the proposed layered model with the classical approach to performance testing.

The comparative areas were selected on the basis of the literature analysis conducted in chapter 2 with particular emphasis on the areas of concern identified in that analysis ((1)–(5)). As can be read from the table above, the new approach is distinguished from the classic approach by several features. Firstly, in the new model presented, tests are organized in layers corresponding to different stages of preparation and execution, allowing a clear separation of tasks. In contrast, the classical approach is typically linear and handled by a single team, which limits efficiency. Additionally, we use a precise objectives-tests matrix that aligns specific goals with appropriate tests, ensuring better coverage and focus. The classical approach relies more on general practices and experience, which may result in less targeted testing. The layered model is also more modular, allowing easy adjustments to meet the unique needs of each system. The classical approach is less adaptable, making it harder to adjust during different project phases. By isolating tasks, the layered model enables different teams to work concurrently on separate layers, optimizing both time and cost. Moreover, the clear steps and structure of our method make it easier to replicate across various projects, while the classical approach relies heavily on team experience, which makes replication more challenging. In terms of cost efficiency, the layered specialization of the model and targeted task assignment help optimize resource usage, leading to potential cost savings. Of course, the initial stage related to model definition can be costly for simple tests, but in the long run it has a cost-reducing effect. However, the classical model holds an advantage in simplicity and faster deployment for straightforward projects. 
In cases where the system is less complex and testing requirements are standard, the classical approach may be more intuitive and easier to implement, as it relies on well-established and proven practices. This comparison highlights the strengths and limitations of both methods and provides context for why the new approach is better suited to complex and evolving IT environments. During our work, we also analyzed the possibility of using formal methodologies based on UML2 and SysML for test descriptions. These methodologies largely focus on the detailed definition of functional tests, including detailed analysis of correlations between the components of a given subsystem, and define specific parameters in great detail, including performance requirements and the availability of individual components (e.g., [38,39,40]). From the point of view of the proposed model, these tests can be viewed as individual elements of a set, while the approach proposed in the article can be treated as a metamodel. On the other hand, the direct use of systems modeling language models to design and describe a whole range of tests is hampered by the lack of direct support for performance test procedures and by the need for a complex process of converting the notation into formats that testers understand or that can be used to prepare test tool configurations [41]. The requirements defined in the model-based systems engineering (MBSE) approach are generally functional in nature, and using them to describe performance tests requires considerable expertise in selecting testing methods and means [42]. In the proposed model, test definitions indicate the tools used for their implementation, and the model focuses on performance testing, which plays an increasingly important role in deploying small and medium-sized systems in production conditions. Defining a system model for MBSE is complex, time-consuming, and requires expertise; it is often reserved for large, complex IT systems in areas such as aerospace, automotive, defense, and telecommunications.

5. Conclusions

Performance testing of applications and hardware components used to build IT systems is crucial to their design, implementation, and subsequent operation. At present, there are no consistent methods that precisely distinguish between different types of tests and define how to conduct them. This contributes to difficulties in implementing tests and interpreting their results. In this paper, based on an analysis of the literature, a number of problems related to the implementation of performance tests have been identified. A model was then proposed which, in four steps, makes it possible to precisely define the goal of testing and the methods and means to achieve it. The proposed solution can be seen as a design framework. When implementing the developed model, engineering teams can independently define the individual elements of the sets and determine the topology used for test execution. The proposed approach makes it possible to minimize the impact of the problems defined in chapter two ((1)–(5)) on the efficiency and accuracy of the implemented tests, in particular with regard to the precise definition of test scenarios, their differentiability, and their repeatability. In addition, dividing the testing process into layers correlated with the test preparation steps makes it possible to separate quasi-independent areas that can be handled by specialized engineering teams or outsourced to external companies. Such an approach can accelerate performance test execution and reduce its cost.

The developed model was used to plan and conduct tests of a web application in both a laboratory and a real environment. The performance tests identified bottlenecks in the system as well as key parameters that affect its performance. In addition, the team of developers building the production solution was able to understand exactly how the tests were conducted, which system parameters were tested, and how, which resulted in faster development of fixes and optimization of system performance. Using the proposed solution, the developers could also quickly design a new type of test and, using the developed notation, communicate its description to the testing team.

During the research, it turned out that the correct definition of the elements of the sets is especially important for the effective use of the proposed solution. This requires the team to have experience and extensive knowledge of test execution and of the operation of the hardware and software components that make up each layer. Therefore, further work will develop a reference set of elements that can be used by less experienced teams in the process of test planning and execution.