Abstract

Online social networks (OSNs) are inundated with an enormous daily influx of news shared by users worldwide. Information can originate from any OSN user and quickly spread, making the task of fact-checking news both time-consuming and resource-intensive. To address this challenge, researchers are exploring machine learning techniques to automate fake news detection. This paper specifically focuses on detecting the stance of content producers—whether they support or oppose the subject of the content. Our study aims to develop and evaluate advanced text-mining models that leverage pre-trained language models enhanced with meta features derived from headlines and article bodies. We sought to determine whether incorporating the cosine distance feature could improve model prediction accuracy. After analyzing and assessing several previous competition entries, we identified three key tasks for achieving high accuracy: (1) a multi-stage approach that integrates classical and neural network classifiers, (2) the extraction of additional text-based meta features from headline and article body columns, and (3) the utilization of recent pre-trained embeddings and transformer models.

1. Introduction

The influence of online social networks (OSNs) has transformed users worldwide from passive consumers into active content creators [1]. People now generate content in response to news events, personal experiences, product reviews, and more. This user-generated content often blends events, facts, and opinions, reflecting the creators’ personal perspectives, sentiments, cultures, and backgrounds [2,3]. However, the challenge lies in distinguishing facts from opinions within the vast amounts of text, graphics, and videos produced daily. Are users intentionally altering facts, spreading misinformation, or simply sharing content that aligns with their beliefs, regardless of its accuracy [4,5]? This becomes even more complex when information is shared within close-knit networks of friends or like-minded individuals, where the original source may be unknown or unverified [6,7].

Fact-checking platforms such as Snopes.com and FactCheck.org monitor and verify popular claims, relying on manual investigations. In contrast, researchers are exploring machine learning approaches to automate content labeling. These machine learning classifiers are trained on datasets of known claims, aiming to detect correlations between users’ sentiments or stances and the spread of misinformation. Sentiments and stances may seem similar, since both reflect the connection between user emotions or opinions and the content’s subject, but stances are often broader and are influenced by multiple factors beyond mere sentiment.

Stance detection is a crucial area within Natural Language Processing (NLP) and has been extensively studied. It involves determining the polarity of an audience or listener based on textual analysis, identifying whether their preference toward a subject is positive, negative, or neutral. Related concepts, such as stance classification, stance prediction, stance identification, debate stance classification, and debate-side classification, are integral to this research domain [8,9,10,11,12].

Stance detection has found applications across various fields, including the identification of fake news. Numerous researchers have focused on detecting fake news by examining the stance of texts, particularly when the stance is directed toward a specific topic or entity [13]. The Fake News Challenge (FNC) involved the development of machine learning models that predict a stance label given the headline and body of a news article. The stance label is one of the set ‘agree’, ‘disagree’, ‘discuss’, or ‘unrelated’. The provided dataset consists of pairs, each made up of a news headline that serves as a claim and a snippet of text related to the headline. Most of the teams participating in the FNC, including the winning team, used deep learning (DL) approaches to develop their learning and prediction models. Several papers discussed the FNC and its main submissions, such as [14,15,16,17,18,19]. Previous research indicates that traditional text processing methods have been effective. The crucial insight appears to be in comparing features from the headline with those from the body of the text. This approach is intuitive, as the stance is often determined by how the body of the text relates to the headline.

Recent developments in the identification of false news have demonstrated that hybrid models, which incorporate a variety of machine learning approaches, significantly improve detection accuracy. Studies show that, by efficiently processing both short and long news texts, pre-trained Transformer models such as BERT and XLNet achieve high accuracy in identifying false news [20]. Furthermore, zero-shot detection techniques have been made possible by integrating fact verification and false news identification, which helps to overcome the drawbacks of limited datasets [21]. Likewise, detection accuracy has been further enhanced by multi-modal hybrid techniques that include text and picture analysis, particularly for news that contains satirical or distorted material [22]. Other researchers adopted hybrid models with new criteria, such as using complex user behavior and network dynamics [23], using a Long Short-Term Memory (LSTM) recurrent neural network [24], and using large language models and crowdsourcing for the detection of hybrid human–AI misinformation [25].

The main goal of this research is to design and assess text mining models that incorporate the cosine similarity between the headline and the body of news articles to predict user stance. We aim to explore whether this cosine-based feature enhances the models’ prediction accuracy. An initial exploratory study has been published in [26]. In this paper, several submissions from the FNC competition [27] are evaluated as a benchmark. Our goals are threefold. First, we aim to independently verify the results reported in the challenge. Second, we use FNC submissions, in addition to other approaches that were published along with their code, as a benchmark for our study. For each selected submission, we focus on analyzing its architecture, features, and results. Third, we develop and evaluate text mining models based on pre-trained language models, aiming to achieve accuracy improvements compared with the benchmark submissions.

The rest of the paper is organized as follows. Section 2 summarizes a selection of work related to fake news detection in general and stance detection in particular. Section 3 summarizes a selection of FNC submissions, their main approaches, and their evaluation. Section 4 presents the proposed method and our analysis of the FNC dataset. Section 5 reports the experiments and results. Finally, Section 6 concludes the paper.

2. Literature Review

Today, in our digital age, fake news and misinformation have become significant challenges, particularly with the proliferation of social media platforms. As a response, developing advanced techniques for fake news detection and stance detection has flourished since the start of the new millennium. Research in this domain often uses multimedia retrieval systems.

2.1. Fake News

The rise of online misinformation requires robust methods for identifying fake news, and fake news identification and detection have been examined by many researchers. Rubin et al. [28] classified fake news into three categories: (1) fabricated news whose purpose is to mislead and confuse the reader, which is considered entirely fraudulent; (2) rumors, which present information with questionable certainty; and (3) irony and imitation, produced by people who habitually use satire and sarcasm. Fake news is defined in [29] as any kind of news that has been written incorrectly, whether unintentionally or intentionally, or news that is provably false and misleads people. In addition, the authors of [29] classified fake news into two main categories: fake news on traditional media and fake news on social media. Fake news on traditional media involves psychological and social foundations, while fake news on social media involves malicious accounts and echo chambers [29]. They also proposed a model for detecting and fixing fake news problems, specifically on social networks. They detect and categorize the original news of fake news by confirming the feed box beside the official news website extension and confirming it by specifying a link to previously active original news on the news websites.

De Oliveira et al. [30] have proposed a sensitive stylistic approach to detect and recognize fake news on social networks, specifically Twitter. Their approach, based on NLP, uses computational stylistic analysis. They used thirty-three thousand tweets classified as real and proven false. They stated that their approach achieved 86% accuracy and 94% precision.

Another work, by Anjo et al. [31], identifies fake news messages on Twitter using deep learning. They proposed a framework based on a hybrid of Convolutional Neural Network (CNN) and Recurrent Neural Network (RNN) models. Their framework can detect features correlated with fake news without any knowledge of the domain, and it achieved 82% accuracy. Similarly, Boididou et al. [32] tested different combinations of quality and trust-oriented features with the aim of predicting the most likely label (fake or real) for each tweet. They derived progressive forensics features and fused them, including user-based and post-based features, to deal with the news verification dilemma. The drawback of forensic features is that they are labor-intensive: most of them are produced manually and are intended to identify specific traces of alteration, which makes them unsuitable for the actual images that accompany fake news.

Fake images, or fake news images, either contain fake news or are actual images that have been used incorrectly to misrepresent actual news or occasions. In Boididou et al. [33], fake images have been classified into two main categories: (1) tampered images and (2) misleading images. Tampered images are images that have been altered digitally. Misleading images have not been altered; however, the content of the image misleads the reader or viewer. Gupta et al. [34] applied two general categories of features to identify the fake images that were posted during Hurricane Sandy in the United States (US). They used seven user features, such as user age and number of followers, and eighteen tweet features, such as tweet length and count. They used more than ten thousand images to understand how fake images influence social reputation.

The researchers developed several deep learning frameworks to identify COVID-19 misinformation in both Chinese and English [35]. They employed the Long Short-Term Memory (LSTM) model, the Gated Recurrent Unit (GRU) model, and the Bidirectional Long Short-Term Memory (BiLSTM) model to detect fake news. Their findings revealed that the BiLSTM model achieved the highest detection accuracy, reaching 94% for short English texts and 99% for longer English texts. For Chinese texts, the detection accuracy was 82%. Similarly, deep learning networks were also used to develop a new model framework to detect fake news images called Multi-domain Visual Neural Network (MVNN) [36]. The proposed framework models visual contents at semantic levels simultaneously with physical levels using a deep learning network. Their approach combines the pixel domain and the frequency of visual information to detect fake news. They utilized a CNN-based network to capture challenging images associated with different forms of fake news in the domain. To extract visual features across various semantic levels, they applied a multi-branch model combining CNN and RNN architectures. Forensic features have been employed to identify altered images generally. The Block Artifact Grids (BAG) feature is used to help identify fake news. Additionally, Dementieva et al. [37] have introduced Multiverse, a novel feature leveraging multilingual evidence to improve the detection methods of fake news. This approach was tested in data sets that contain general news and a specific dataset focused on fake COVID-19 news, resulting in substantial performance improvements compared to baseline models.

Therefore, research has shown that combining textual and visual information together can significantly improve the accuracy of the detection of fake news. Zhou et al. [38] proposed a multi-modal approach called SAFE (Similarity-Aware FakE news detection), which utilizes both textual content and visual features to identify fake news. Their model outperformed text-only baselines, demonstrating the importance of considering visual elements in fake news detection. Building on this multi-modal approach, Qi et al. [39] introduced MCAN (Multi-modal Co-Attention Networks), which uses a co-attention mechanism to capture the intricate relationships between textual and visual content. Their model showed improved performance in identifying fake news across various datasets.

Recently, the different aspects of detecting fake news on social media using advanced learning techniques have been reviewed by [40,41,42]. They highlighted the potential of deep learning models, particularly with attention mechanisms, for analyzing textual data and capturing complex relationships within content. Reviews emphasize broader challenges and research directions in this domain, including adapting models to various domains, ensuring explainability of decisions, and leveraging social context for better fake news detection.

2.2. Stance Detection

Stance detection, a subtask within the FNC, plays a crucial role in identifying fake news. Stance detection involves determining the attitude of a text towards a specific target. Stance detection in news articles is different from stance detection in online debates and tweets. In news articles, the stance is assessed relative to the article titles: the validity of a title is predicted using the trustworthiness of the sources and the stance of the article [43].

The Fake News Challenge (FNC-1), launched in 2017, was a pivotal competition in the field of fake news detection that focused on stance detection as a subtask. Participants were tasked with classifying the stance of body text relative to a headline into four categories: agrees, disagrees, discusses, or unrelated. The competition provided a dataset of 50,000 headline-body pairs and used a weighted scoring system for evaluation. FNC-1 had a significant impact on the field, providing a standardized dataset and evaluation metric, sparking numerous research papers, and highlighting the importance of stance detection in fake news identification. The first FNC stance detection task was motivated by the work in [44], in which the authors classify the stance of individual news headlines toward a given claim.

Borges et al. [45] used deep learning to tackle the problem of stance detection in the FNC. They integrate a neural attention mechanism with max-pooling and a bidirectional RNN to build representations of the news article body and title, combined with external similarity-based features. Similarly, Shang et al. [46] proposed a framework that offers the reader a complete viewpoint and understanding of the question. They introduced a model consisting of two parts: the first is tree-based and concentrates on handcrafted features, while the second is an RNN that concentrates on only a few of the main sentences. Moreover, Umer et al. [47] proposed a model to detect fake news stances that depends on the body and title of the news. They combine Principal Component Analysis (PCA) and chi-square with LSTM and CNN, wherein chi-square and PCA select the features that are passed to the CNN-LSTM model. Applying these dimensionality reduction methods lowers the feature dimensionality before the features reach the classifier. Their results show that PCA performed better than chi-square and achieved 97.8% accuracy.

Recently, Hardalov et al. [48] proposed a cross-lingual multi-task learning framework for stance detection. Their approach, which leverages pre-trained language models and multi-task learning, achieved state-of-the-art results on several benchmark datasets. In the domain of multimedia stance detection, Jiang et al. [49] introduced MMSD (Multi-Modal Stance Detection), a framework that incorporates textual, visual, and social context information. Their model demonstrated superior performance in detecting stances in social media posts containing both text and images. Other researchers studied fake news and stance detection in multilingual text or other specific non-English text; see, for example, [50,51].

The project outlined herein is an analysis of the data made available by the FNC. As described on the website, the goal of the challenge is “to address the problem of fake news by organizing a competition to foster the development of tools to help human fact-checkers identify hoaxes and deliberate misinformation in news stories using machine learning, NLP, and artificial intelligence” [27].

3. Fake News Challenge Contributions

The FNC team provided a baseline approach based on gradient boosting and the co-occurrence (COOC) of word and character n-grams in the headline and the document, along with lexicon-based features. As the FNC competition has concluded, a brief analysis of the top three competitors by FNC score is discussed in the following subsections, followed by a section that presents some other solutions that are documented in the literature. Afterwards, a new proposed approach is presented that tackles the FNC challenge in a different way, using n-gram features and the cosine distance between the headline and body of the news article, aiming to enhance the weighted accuracy of the fake news detection approach.

3.1. Talos Intelligence (1st Place)

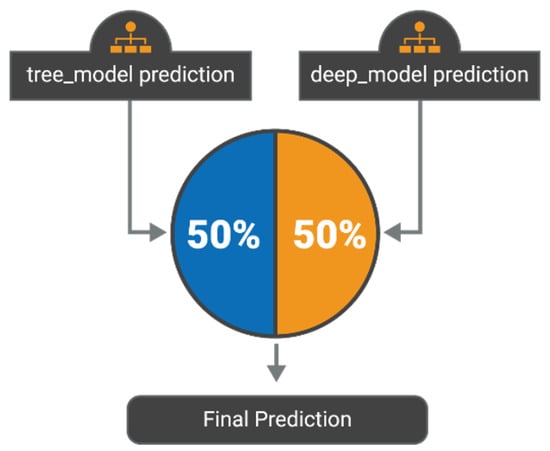

Talos, a Cisco research division, achieved the best score. Talos’ final model is an ensemble that uses a 50/50 weighted average between a deep learning model created with deep convolutional neural networks (DCN) and a gradient-boost decision trees (GBDT) model with lexical features (LF); see Figure 1 [52]. In addition to outlining the models used in their solution, the Talos blog post also notes several feature relationships that the team identified during data exploration. Upon analyzing the dataset, several features emerged as potentially valuable for understanding the relationship between headlines and body text. These include the count of overlapping words between the headline and the body text:

Figure 1.

Talos ensemble.

- Similarities assessed through word count, 2-g, and 3-g (an n-gram is a contiguous sequence of n items from a given sample of text or speech). The items can be phonemes, syllables, letters, words, or base pairs according to the application. The 2-g and 3-g are specific types of n-grams in natural language processing and computational linguistics. For example, the 2-g of “The cat sat on the mat” would be “The cat”, “cat sat”, “sat on”, “on the”, “the mat”, and so on) comparisons.

- Similarities calculated after applying term frequency–inverse document frequency (TF-IDF).

- Weighting and Singular Value Decomposition (SVD) to these counts.
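As a minimal sketch of the n-gram comparisons described above, the following Python snippet extracts word n-grams and counts those shared by a headline and a body. The function names, the lowercasing, and the whitespace tokenization are assumptions made for illustration; they are not taken from the Talos implementation.

```python
def word_ngrams(text, n):
    """Return the contiguous word n-grams of `text` (lowercased, split on whitespace)."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_overlap(headline, body, n):
    """Count the distinct n-grams that occur in both the headline and the body."""
    return len(set(word_ngrams(headline, n)) & set(word_ngrams(body, n)))


# The 2-grams of the example sentence used in the text (lowercased here).
bigrams = word_ngrams("The cat sat on the mat", 2)

# Shared 2-grams between a short headline and the example sentence.
overlap = ngram_overlap("Cat sat on mat", "The cat sat on the mat", 2)
```

Counting distinct shared n-grams is only one possible overlap measure; normalizing by headline length would be an equally reasonable choice.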

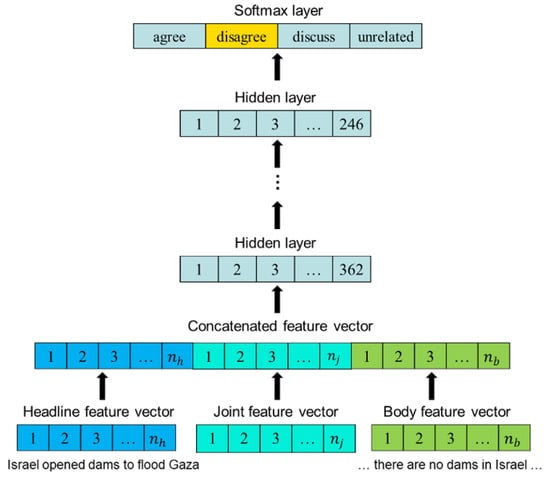

Technische Universität (TU) Darmstadt (2nd place). The second-best score was achieved by the Athene card team; researchers at the UKP Lab TU Darmstadt in Germany [53] used ensemble learning (EL) of five randomly initialized Multilayer Perceptron (MLP) structures; each MLP is constructed with seven hidden layers and is fed with the same set of inputs. The predictions are based on hard voting. The team applied MLP to a combination of baseline features and the additional features. The additional features are outlined in the medium article published by the team [53]. The MLP model is shown in Figure 2, which is provided in the article:

Figure 2.

Talos ensemble.

- BoW: Bag of words uni-grams.

- NMF: Non-Negative Matrix Factorization.

- LSI: Latent Semantic Indexing.

- LSA: Latent Semantic Analysis.

- PPDB: Paraphrase Detection based on Word Embeddings.

The team randomly initialized this MLP to create an ensemble of five models. The final prediction model uses hard voting to consolidate the output of the ensemble.
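Hard voting can be illustrated with a short sketch: each model contributes one predicted label per sample, and the most frequent label wins. The function name, the toy predictions, and the tie-breaking rule are assumptions for illustration only and do not reproduce the team's code.

```python
from collections import Counter


def hard_vote(predictions_per_model):
    """Combine per-model label lists by majority vote, one sample at a time.

    Ties go to the label that appears first among the models, which is an
    arbitrary choice made for this sketch.
    """
    combined = []
    for votes in zip(*predictions_per_model):
        combined.append(Counter(votes).most_common(1)[0][0])
    return combined


# Toy predictions from five hypothetical models for three headline-body pairs.
model_predictions = [
    ["agree", "discuss", "unrelated"],
    ["agree", "discuss", "unrelated"],
    ["discuss", "discuss", "unrelated"],
    ["agree", "disagree", "unrelated"],
    ["disagree", "disagree", "agree"],
]

final_labels = hard_vote(model_predictions)
```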

3.2. University College London (UCL) Machine Reading (3rd Place)

The third-best score was achieved by UCL Machine Reading, an NLP research group at the UCL Computer Science department. The team’s solution is described in [14]. In contrast to the other two top teams, they used a single MLP model with bag-of-words (BoW) features. For our own implementation, Python was chosen because it appears to be the most powerful and versatile tool for machine learning tasks, even though the author had very little prior Python experience. The following libraries were used to facilitate machine learning tasks:

- Pandas: data analysis.

- Scikit-learn: machine learning toolkit used for the following:

  - Text processing.

  - Feature selection.

  - Model training.

  - Cross-validation.

- Seaborn: data charting.

The main goal of this research work is to produce an independent solution to the FNC with a high accuracy rate compared with the ones documented in the literature with which we can benchmark our solution.

3.3. Other Solutions

Following the competition, several studies proposed alternative methods utilizing FNC data. Bhatt [18] introduced a deep recurrent model (DRM) to generate neural embeddings, alongside a weighted n-gram bag-of-words model for capturing statistical features. Additionally, feature engineering heuristics were applied to extract handcrafted external features. These features were then integrated through a deep neural network layer to classify headline-body news pairs into categories: agree, disagree, discuss, or unrelated. This approach achieved an overall weighted accuracy of 83.08%.

Borges [45] employed bi-directional RNNs enhanced with max-pooling and neural attention mechanisms to create detailed representations of both headlines and the body of news articles. These representations were then combined with external similarity features for improved accuracy. Meanwhile, Zhang [12] introduced an end-to-end ranking algorithm using a Multi-Layer Perceptron (MLP), where TF-IDF was utilized to extract features from headlines and article bodies. In a subsequent study, Zhang [54] addressed the classification challenge by proposing a hierarchical model that grouped the ‘agree’, ‘disagree’, and ‘discuss’ categories into a single ‘related’ class for more effective categorization.

Altheneyan and Alhadlaq [55] employed big data technology (Spark) and machine learning to create a stacked ensemble model that followed feature extraction using n-grams, Hashing TF-IDF, and a count vectorizer. The results showed that their model has a classification accuracy of 92.45%. Table 1 summarizes these contributions, their models, and their final weighted accuracy. The table covers only the publications that made their code public and that we were therefore able to include in the comparison.

Table 1.

Related papers contributions comparison.

4. The Proposed Method and FNC Analysis

4.1. FNC Dataset

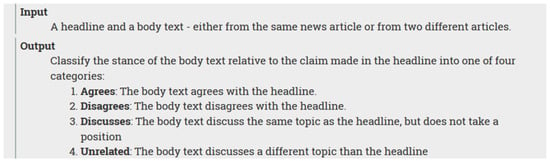

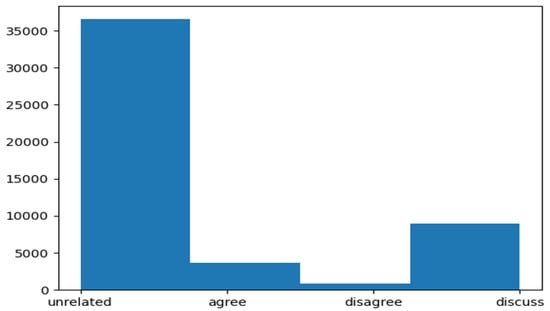

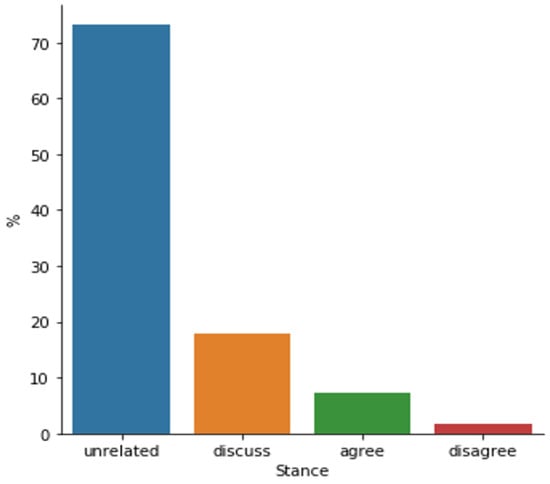

Training and test datasets are provided on the FNC website. An overview of the data is provided on the FNC website and is shown in Figure 3 that shows the provided data description and Figure 4 that shows the distribution of the values in the target variable, Stance. The most frequent value is “Unrelated”, which means the body text discusses a different topic than the headline. This data is available on GitHub in the following form:

Figure 3.

Provided data description.

Figure 4.

Distribution of the outcome variable.

- train_bodies.csv

- train_stances.csv

- compeition_test_bodies.csv

- compeition_test_stances.csv

The bodies and stances are associated with each other using a foreign key called body ID included in the body and stance files.

The training data includes 49,972 news articles, 36,545 of which are unrelated. Figure 5 shows a breakdown of the training data by stance category or label.

Figure 5.

Training data by stance.

All training data may be used to train models since separate test data with 25,414 articles are provided.
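The join between the body and stance files can be sketched as follows. To keep the example self-contained, two tiny in-memory CSV strings stand in for train_bodies.csv and train_stances.csv; the column names (`Body ID`, `articleBody`, `Headline`, `Stance`) and the sample rows are assumptions for illustration and should be checked against the actual files.

```python
import csv
import io

# Tiny stand-ins for train_bodies.csv and train_stances.csv (invented rows,
# assumed column names).
BODIES_CSV = '''Body ID,articleBody
0,"A small meteorite crashed near the capital, officials said."
1,"The company denied reports that it plans to close stores."
'''

STANCES_CSV = '''Headline,Body ID,Stance
Meteorite lands near capital,0,agree
Company to close all stores,1,disagree
Celebrity adopts a puppy,0,unrelated
'''


def load_pairs(bodies_file, stances_file):
    """Join each stance row to its article body through the shared body ID."""
    bodies = {row["Body ID"]: row["articleBody"] for row in csv.DictReader(bodies_file)}
    pairs = []
    for row in csv.DictReader(stances_file):
        pairs.append({
            "headline": row["Headline"],
            "body": bodies[row["Body ID"]],
            "stance": row["Stance"],
        })
    return pairs


pairs = load_pairs(io.StringIO(BODIES_CSV), io.StringIO(STANCES_CSV))
```

With real files, the two `io.StringIO` objects would be replaced by opened file handles; the same body can be paired with several headlines, as the third row shows.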

4.2. Pipeline of the Proposed Method

The dataset provided contains only textual features, making text processing a critical component of the task. Previous analyses have shown that traditional text processing methods are effective, particularly by comparing features of both the headline and the body of the text. This approach is intuitive, as the stance is shaped by the relationship between the two.

To keep the scope of the project focused, only one text processing technique was applied, instead of using multiple methods. While top-performing teams employed various techniques such as word counts, TF-IDF, word embeddings, and sentiment analysis, this project specifically focuses on extracting features using TF-IDF. The TfidfVectorizer from Scikit-learn was used to compute these frequencies. It processes the corpus of all headlines and body text, identifies the top 1000 1-grams, 2-grams, 3-grams, and 4-grams, and then vectorizes the text accordingly.
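To make the TF-IDF step concrete, the sketch below implements a simplified version in plain Python: it keeps the most frequent 1-grams to 4-grams, computes a smoothed inverse document frequency, and L2-normalizes each vector. This is an illustrative stand-in, not the TfidfVectorizer itself; the function names, the tokenization, and the exact smoothing formula are assumptions, and the library's output may differ in detail.

```python
import math
from collections import Counter


def ngrams(text, n_min=1, n_max=4):
    """Return all word n-grams of `text` for n_min <= n <= n_max."""
    tokens = text.lower().split()
    return [" ".join(tokens[i:i + n])
            for n in range(n_min, n_max + 1)
            for i in range(len(tokens) - n + 1)]


def fit_tfidf(corpus, max_features=1000):
    """Select the most frequent n-grams and compute a smoothed IDF for each."""
    doc_grams = [ngrams(doc) for doc in corpus]
    totals = Counter(g for grams in doc_grams for g in grams)
    vocab = [g for g, _ in totals.most_common(max_features)]
    doc_freq = Counter(g for grams in doc_grams for g in set(grams))
    n_docs = len(corpus)
    idf = {g: math.log((1 + n_docs) / (1 + doc_freq[g])) + 1 for g in vocab}
    return vocab, idf


def transform(text, vocab, idf):
    """Return an L2-normalized TF-IDF vector (list of floats) for one text."""
    counts = Counter(ngrams(text))
    vec = [counts[g] * idf[g] for g in vocab]
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


corpus = [
    "police find mass graves",
    "police deny finding graves",
    "the cat sat on the mat",
]
vocab, idf = fit_tfidf(corpus, max_features=5)
headline_vec = transform("police find graves", vocab, idf)
```

Fitting one vocabulary on headlines and bodies together, as described above, ensures that headline and body vectors share the same dimensions and can be compared directly.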

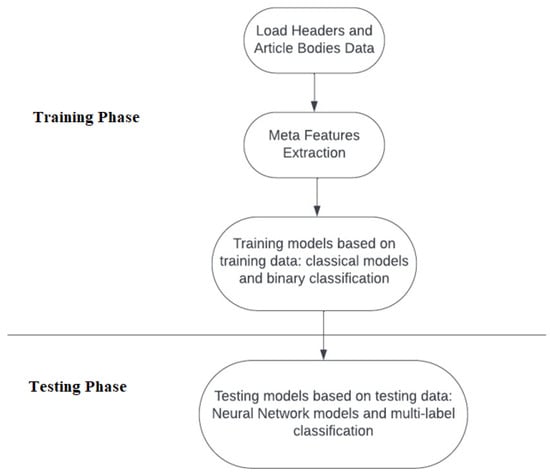

Since headlines and bodies with similar stances are more likely to align in agreement or discussion, the cosine distance was employed to evaluate the similarity between the headline and body based on their TF-IDF frequencies. The Scikit-learn library offers a function called pairwise_distances, which computes the similarity values for each pair of headline and body rows in the dataset. Figure 6 shows the pipeline of the baseline model that we used in the first stage.

Figure 6.

Pipeline of the proposed method.

4.2.1. First Stage: Text-Based Meta Features and Classical Learning Models

First, we used TF-IDF frequencies to extract n-gram features from the headlines and bodies of the article. Second, cosine similarity was used to assess the similarity between the headline and body.
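The relationship between cosine similarity and cosine distance can be sketched in a few lines of plain Python. The function names and the convention of returning zero similarity for an all-zero vector are assumptions made for this illustration.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen for this sketch when a vector is all zeros
    return dot / (norm_u * norm_v)


def cosine_distance(u, v):
    """Cosine distance, defined as one minus the cosine similarity."""
    return 1.0 - cosine_similarity(u, v)


# Toy TF-IDF-like vectors for a headline and a body.
headline_vec = [1.0, 1.0, 0.0]
body_vec = [1.0, 0.0, 0.0]
similarity = cosine_similarity(headline_vec, body_vec)
distance = cosine_distance(headline_vec, body_vec)
```

Because TF-IDF values are non-negative, the similarity of two such vectors lies between 0 and 1, with values near 1 indicating heavily shared vocabulary.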

4.2.2. Second Stage: Pre-Trained Embeddings-Based Neural Network Model, Multi-Label Classification

In the second stage of the experiment, we utilized Neural Network (NN) models in combination with pre-trained word embeddings from the GloVe dataset (specifically the “glove.6B.50d.txt” file). This file contains word vectors with a dimensionality of 50, trained on a corpus of 6 billion tokens from Wikipedia and Gigaword 5.

The architecture of the NN model consists of three layers. The first two layers use the Rectified Linear Unit (ReLU) as the activation function, which is widely used in neural networks because it introduces non-linearity while mitigating the vanishing gradient problem. The ReLU function outputs the input directly if it is positive; otherwise, it outputs zero. The final layer uses the Softmax activation function, which is common in classification tasks as it converts the output into a probability distribution over the predicted classes.
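The forward pass of such a three-layer network can be sketched without any framework. The layer sizes and weight values below are invented for illustration, since the text does not specify them; only the activation pattern (ReLU, ReLU, Softmax) follows the description above.

```python
import math


def relu(xs):
    """Pass positive values through unchanged and replace the rest with zero."""
    return [max(0.0, x) for x in xs]


def softmax(xs):
    """Convert raw scores into probabilities that sum to one."""
    shift = max(xs)  # subtracting the maximum keeps exp() numerically stable
    exps = [math.exp(x - shift) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dense(xs, weights, biases):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, xs)) + b
            for row, b in zip(weights, biases)]


def forward(xs, layers):
    """Run dense+ReLU, dense+ReLU, then dense+Softmax."""
    (w1, b1), (w2, b2), (w3, b3) = layers
    h1 = relu(dense(xs, w1, b1))
    h2 = relu(dense(h1, w2, b2))
    return softmax(dense(h2, w3, b3))


# Hypothetical toy weights: 2 inputs, two hidden layers of 2 units, 3 classes.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, -1.0]),
    ([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], [0.0, 0.0, 0.0]),
]
probabilities = forward([2.0, 1.0], layers)
```

In the actual model, the input would be built from the GloVe embeddings and the weights would be learned during training rather than fixed by hand.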

We trained the model over 120 epochs, meaning the entire training dataset was passed through the model 120 times to update the network’s weights. A relatively small batch size of five was used, meaning the model’s weights were updated after every five samples. This small batch size is beneficial for making more frequent updates to the model parameters, potentially leading to more fine-grained adjustments to the learning process.
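The number of weight updates implied by these settings follows from simple arithmetic. The sketch below assumes, purely for illustration, that all 49,972 training pairs are used and that the final partial batch is kept; the text does not state which subset the second stage was trained on.

```python
import math


def weight_updates(num_samples, batch_size=5, epochs=120):
    """Return (updates per epoch, total updates) for mini-batch training."""
    steps_per_epoch = math.ceil(num_samples / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


# Assumption: the full training set of 49,972 pairs is used.
steps_per_epoch, total_updates = weight_updates(49_972)
```

Under these assumptions the model performs 9995 updates per epoch and 1,199,400 updates in total, which explains why such a small batch size makes training comparatively slow.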

4.2.3. A Hybrid Multi-Stage Approach

A hybrid multi-stage approach combines multiple NLP techniques and machine learning models to enhance accuracy and robustness. To do so, this approach uses pre-trained language models enriched with meta features and combines different models (e.g., CNN and RNN) to leverage their strengths. In evaluating previous analysis, we noticed three aspects that distinguish submissions or models with high accuracy:

- The first is adopting a multi-stage approach rather than a single-stage approach to build the final learning model. Typically, the first stage involves meta features with classical classifiers.

- The second is the use of several meta features extracted from headlines and/or article bodies. We used several features mentioned in those previous submissions, such as cosine similarity or other similarity metrics between headline and body, TF-IDF, Bag of Words, n-grams, and text summarization.

- The third is the use of Neural Network (NN) models together with pre-trained embeddings. In our experiments, we used GloVe, but other embeddings should be evaluated and compared as well. Previous literature showed that some embedding models can perform better depending on the dataset and the domain. We are also planning to use sentence transformers, as they showed significant accuracy in recent literature.

In our pipeline, individual classifiers in the first stage classify the input text as either related or unrelated. Second-stage NN models then classify the related input text as agree, disagree, or discuss.

Therefore, based on these notes, in the hybrid multi-stage approach, we adopted deep learning models using pre-trained embeddings enriched with meta features. The meta features used include (1) cosine similarity and other similarity metrics between headline and body, (2) TF-IDF, (3) Bag of Words, (4) n-grams, and (5) text summarization.
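The control flow of the two-stage design can be sketched as follows. The two stage functions here are deliberately trivial, hypothetical stand-ins (a word-overlap threshold and a keyword rule); in the approach described above they would be a trained classical classifier and a trained NN model, respectively.

```python
def two_stage_predict(pairs, is_related, classify_related):
    """Stage 1 filters out unrelated pairs; stage 2 labels the related ones."""
    labels = []
    for headline, body in pairs:
        if not is_related(headline, body):
            labels.append("unrelated")
        else:
            labels.append(classify_related(headline, body))
    return labels


def overlap_related(headline, body, min_shared=2):
    """Hypothetical stage-1 stand-in: related if enough words are shared."""
    shared = set(headline.lower().split()) & set(body.lower().split())
    return len(shared) >= min_shared


def keyword_stance(headline, body):
    """Hypothetical stage-2 stand-in based on a few cue words."""
    text = body.lower()
    if "denied" in text:
        return "disagree"
    if "confirmed" in text:
        return "agree"
    return "discuss"


pairs = [
    ("Mayor resigns after scandal", "Officials confirmed the mayor resigns this week."),
    ("Mayor resigns after scandal", "The mayor denied he resigns after all."),
    ("Mayor resigns after scandal", "Heavy rain is expected across the region."),
]
predicted = two_stage_predict(pairs, overlap_related, keyword_stance)
```

Separating the stages means the second model only ever sees pairs judged to be related, so errors made in the first stage cannot be corrected later.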

5. Experiments and Results

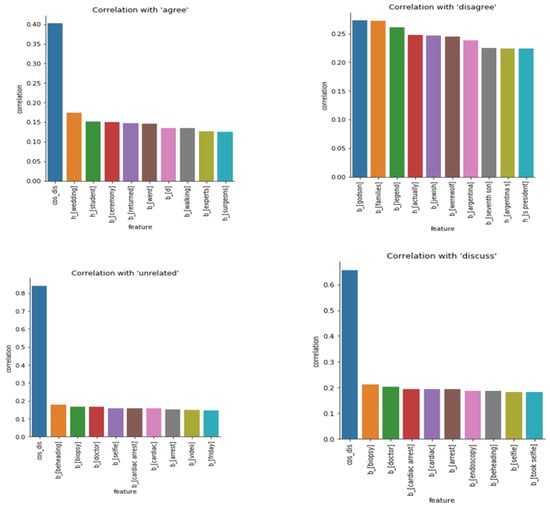

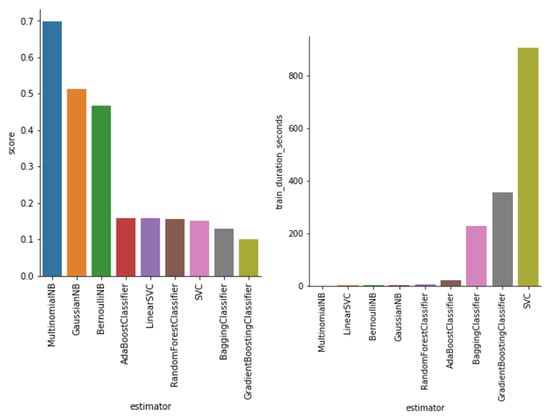

After preprocessing, the dataset comprises 2001 features: 1000 TF-IDFs from the headline, 1000 TF-IDFs from the body, and the cosine similarity between these two vectors (see Figure 7). As anticipated, cosine similarity emerged as the most effective predictor of an article’s stance. Figure 8 illustrates the correlation of each feature with the target stances. The TF-IDF features are labeled to indicate their origin (‘h’ for headline and ‘b’ for body) and the type of n-gram. Cosine similarity consistently proved to be the most reliable predictor, except for the ‘disagree’ stance.

Figure 7.

Feature correlation.

Figure 8.

Classifier scores and training durations (Training Stage).
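One plausible way to compute the correlation between a feature and each stance is to correlate the feature with a 0/1 indicator per stance using the Pearson coefficient. Whether the study used exactly this procedure is an assumption; the function names and toy values below are for illustration only.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y) if std_x and std_y else 0.0


def stance_correlations(feature, stances,
                        labels=("agree", "disagree", "discuss", "unrelated")):
    """Correlate one feature with a 0/1 indicator for each stance label."""
    return {
        label: pearson(feature, [1.0 if s == label else 0.0 for s in stances])
        for label in labels
    }


# Invented cosine similarities and stances for six headline-body pairs.
cosine_sim = [0.9, 0.8, 0.7, 0.1, 0.05, 0.0]
stances = ["agree", "discuss", "disagree", "unrelated", "unrelated", "unrelated"]
correlations = stance_correlations(cosine_sim, stances)
```

In this toy data, low similarity goes hand in hand with the unrelated label, so the correlation with the unrelated indicator is strongly negative.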

The training methods evaluated included support vector machine classifiers, naïve Bayes classifiers, and ensemble techniques such as random forest and gradient boosting classifiers. Scikit-learn facilitates model experimentation as all models implement a consistent set of methods. Figure 8 displays the performance scores and training times for nine classifiers used in the initial stage of our multi-stage models. The performance score represents the percentage of correctly predicted targets.

Among the classifiers tested, multinomial naïve Bayes emerged as the most effective, despite generally low scores. This outcome is attributed to the fact that only one feature, which compares the headline to the body of the article, was used. Alone, the features from the headline and body do not appear to be strong predictors of an article’s stance. Additionally, multinomial naïve Bayes was the fastest to train, although training speed was not a consideration in its selection. Figure 8 also illustrates the training durations of the classifiers assessed. The lengthy preprocessing step posed challenges for experimenting with different methods, extending the feedback loop significantly. To address this, preprocessed data were saved to intermediate CSV files, which helped streamline experimentation with various models and parameters.
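Caching preprocessed features in an intermediate CSV file can be sketched with the standard library alone. The column names, values, and file name below are hypothetical, and a temporary directory is used so the example leaves no files behind.

```python
import csv
import os
import tempfile


def save_features(path, header, rows):
    """Write a header row followed by numeric feature rows to a CSV file."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)


def load_features(path):
    """Read the CSV back, converting every cell to float."""
    with open(path, newline="") as f:
        reader = csv.reader(f)
        header = next(reader)
        rows = [[float(cell) for cell in row] for row in reader]
    return header, rows


# Hypothetical column names and values, used only to show the round trip.
header = ["h_tfidf_0", "b_tfidf_0", "cosine_similarity"]
rows = [[0.0, 0.5, 0.25], [0.7, 0.1, 0.9]]

with tempfile.TemporaryDirectory() as tmp_dir:
    cache_path = os.path.join(tmp_dir, "features.csv")
    save_features(cache_path, header, rows)
    cached_header, cached_rows = load_features(cache_path)
```

Once the cache exists, model experiments can start from `load_features` and skip the slow preprocessing step entirely.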

We used the weighted accuracy suggested by the competition [27]. The weighted accuracy, $Acc_{w}$, is expressed as

$$Acc_{w} = 0.25 \times Acc_{r,u} + 0.75 \times Acc_{a,d,d}$$

where $Acc_{r,u}$ is the binary accuracy across related (agree, disagree, discuss) and unrelated article–claim pairs, and $Acc_{a,d,d}$ is the accuracy for pairs in the related classes only.
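As a worked illustration, the weighted accuracy can be computed with the short sketch below. It assumes the FNC-1 weighting of 0.25 for the related/unrelated decision and 0.75 for the stance among related pairs, and it follows the two accuracy terms as described in the text rather than reproducing the official competition scorer. The function name and the example labels are hypothetical.

```python
RELATED = {"agree", "disagree", "discuss"}


def weighted_accuracy(y_true, y_pred, w_binary=0.25, w_related=0.75):
    """Combine related/unrelated accuracy with accuracy on related pairs."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    pairs = list(zip(y_true, y_pred))
    # Binary accuracy: was each pair placed on the correct related/unrelated side?
    binary_hits = sum((t in RELATED) == (p in RELATED) for t, p in pairs)
    acc_binary = binary_hits / len(pairs)
    # Stance accuracy restricted to pairs whose true label is a related class.
    related_pairs = [(t, p) for t, p in pairs if t in RELATED]
    if related_pairs:
        acc_related = sum(t == p for t, p in related_pairs) / len(related_pairs)
    else:
        acc_related = 0.0
    return w_binary * acc_binary + w_related * acc_related


# Hypothetical labels: one related pair receives the wrong stance.
y_true = ["agree", "disagree", "discuss", "unrelated"]
y_pred = ["agree", "discuss", "discuss", "unrelated"]
score = weighted_accuracy(y_true, y_pred)
print(round(score, 4))
```

In this example every pair is on the correct related/unrelated side (binary accuracy 1.0) and two of the three related pairs receive the correct stance (2/3), giving 0.25 × 1.0 + 0.75 × 2/3 = 0.75 up to floating-point rounding.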

Our final model, the Hybrid Multi-Stage Approach, achieves a weighted accuracy of 86%, a noticeable improvement over the approaches listed in Table 2. Pre-trained models had a significant impact on this result, and we believe that evaluating other pre-trained models or transformers, other types of NN models, and different hyper-parameters could yield models with better accuracy.

Table 2. Proposed approach accuracy compared with the other approaches.

6. Conclusions and Future Work

In this study, we explored the challenge of detecting user stance within news articles, using it as a case study for stance detection on online social platforms. We developed advanced text mining models utilizing n-gram features, complemented by cosine distance measurements between the headline and the body of the news article. Our results indicate that cosine similarity is the most effective predictor of an article’s stance. Future improvements could involve incorporating additional text feature extraction techniques, such as word counts, word embeddings, and sentiment analysis. More sophisticated methods like SVD and Latent Semantic Analysis (LSA) could also be considered. To enhance accuracy further, exploring alternative comparison measures between headlines and bodies might be beneficial. Relying solely on cosine similarity may be inadequate; some top-performing teams also employed metrics like overlap to assess the relationship between headlines and bodies.

Our final model achieved a significant improvement over the FNC winning teams. It integrates approaches from the benchmark models that we evaluated from the competition with recent relevant contributions on the FNC stance detection data.

Author Contributions

Conceptualization, I.A. (Iyad Alazzam) and M.Z.; methodology, I.A. (Izzat Alsmadi); software, M.A.-R.; validation, I.A. (Iyad Alazzam) and M.Z.; formal analysis, I.A. (Izzat Alsmadi); investigation, M.A.-R.; resources, I.A. (Izzat Alsmadi); data curation, M.A.-R.; writing—original draft preparation, I.A. (Iyad Alazzam); writing—review and editing, M.Z.; visualization, M.Z.; supervision, I.A. (Izzat Alsmadi); project administration, I.A. (Izzat Alsmadi). All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| OSNs | Online Social Networks |
| NLP | Natural Language Processing |
| FNC | Fake News Challenge |
| DL | Deep learning |
| CNN | Convolutional Neural Networks |
| GRU | Gated recurrent unit |
| MLP | Multi-Layer Perceptron |
| BiLSTM | Bidirectional long short-term memory |
| MVNN | Multi-domain Visual Neural Network |
| BAG | Block Artifact Grids |
| PCA | Principal Component Analysis |
| COOC | Cooccurrence |
| LSA | Latent Semantic Analysis |
| DCN | Deep convolutional neural networks |
| LF | Lexical features |
| GBDT | Gradient-boosted decision trees |
| TF-IDF | Term frequency–inverse document frequency |
| SVD | Singular Value Decomposition |
| DRM | Deep recurrent model |
| NN | Neural Network |

References

- Kaplan, A.M.; Haenlein, M. Users of the world, unite! The challenges and opportunities of Social Media. Bus. Horiz. 2010, 53, 59–68.

- Cha, M.; Haddadi, H.; Benevenuto, F.; Gummadi, K.P. Measuring user influence in Twitter: The million follower fallacy. In Proceedings of the International AAAI Conference on Web and Social Media, Washington, DC, USA, 23–26 May 2010; Volume 4, No. 1.

- Papacharissi, Z. Affective Publics: Sentiment, Technology, and Politics; Oxford University Press: Oxford, UK, 2015.

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151.

- Lazer, D.M.; Baum, M.A.; Benkler, Y.; Berinsky, A.J.; Greenhill, K.M.; Menczer, F.; Zittrain, J.L. The science of fake news. Science 2018, 359, 1094–1096.

- Metzger, M.J.; Flanagin, A.J. Credibility and trust of information in online environments: The use of cognitive heuristics. J. Pragmat. 2013, 59, 210–220.

- Sundar, S.S. The MAIN Model: A Heuristic Approach to Understanding Technology Effects on Credibility; Metzger, M.J., Flanagin, A.J., Eds.; MacArthur Foundation Digital Media and Learning: Cambridge, MA, USA, 2007; pp. 73–100.

- Anand, P.; Walker, M.; Abbott, R.; Tree, J.E.F.; Bowmani, R.; Minor, M. Cats Rule and Dogs Drool!: Classifying Stance in Online Debate. In Proceedings of the 2nd Workshop on Computational Approaches to Subjectivity and Sentiment Analysis (WASSA 2011), Portland, OR, USA, 11–17 June 2011; pp. 1–9.

- Hasan, K.S.; Ng, V. Stance Classification of Ideological Debates: Data, Models, Features, and Constraints. In Proceedings of the Sixth International Joint Conference on Natural Language Processing, Nagoya, Japan, 14–19 October 2013; pp. 1348–1356.

- Qiu, M.; Sim, Y.; Smith, N.A.; Jiang, J. Modeling User Arguments, Interactions, and Attributes for Stance Prediction in Online Debate Forums. In Proceedings of the 2015 SIAM International Conference on Data Mining, Vancouver, BC, Canada, 30 April–2 May 2015; pp. 855–863.

- Walker, M.; Anand, P.; Abbott, R.; Grant, R. Stance Classification Using Dialogic Properties of Persuasion. In Proceedings of the 2012 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Montréal, QC, Canada, 3–8 June 2012; pp. 592–596.

- Zhang, Q.; Yilmaz, E.; Liang, S. Ranking-Based Method for News Stance Detection. In Proceedings of the Web Conference, Lyon, France, 23–27 April 2018; pp. 41–42.

- Mohammad, S.; Kiritchenko, S.; Sobhani, P.; Zhu, X.; Cherry, C. SemEval-2016 Task 6: Detecting Stance in Tweets. In Proceedings of the 10th International Workshop on Semantic Evaluation (SemEval-2016), San Diego, CA, USA, 16–17 June 2016; pp. 31–41.

- Riedel, B.; Augenstein, I.; Spithourakis, G.P.; Riedel, S. A simple but tough-to-beat baseline for the Fake News Challenge stance detection task. arXiv 2017, arXiv:1707.03264.

- Thorne, J.; Chen, M.; Myrianthous, G.; Pu, J.; Wang, X.; Vlachos, A. Fake News Detection Using Stacked Ensemble of Classifiers; Association for Computational Linguistics: Stroudsburg, PA, USA, 2017.

- Bourgonje, P.; Schneider, J.M.; Rehm, G. From clickbait to fake news detection: An approach based on detecting the stance of headlines to articles. In Proceedings of the 2017 EMNLP Workshop: Natural Language Processing Meets Journalism, Copenhagen, Denmark, 7 September 2017; pp. 84–89.

- Hanselowski, A.; PVS, A.; Schiller, B.; Caspelherr, F.; Chaudhuri, D.; Meyer, C.M.; Gurevych, I. A retrospective analysis of the fake news challenge stance detection task. arXiv 2018, arXiv:1806.05180.

- Bhatt, G.; Sharma, A.; Sharma, S.; Nagpal, A.; Raman, B.; Mittal, A. Combining neural, statistical and external features for fake news stance identification. In Proceedings of the Web Conference 2018, Lyon, France, 23–27 April 2018; pp. 1353–1357.

- Slovikovskaya, V. Transfer learning from transformers to fake news challenge stance detection (FNC-1) task. arXiv 2019, arXiv:1910.14353.

- Schütz, M.; Schindler, A.; Siegel, M.; Nazemi, K. Automatic Fake News Detection with Pre-trained Transformer Models. In Proceedings of the Pattern Recognition, ICPR International Workshops and Challenges (ICPR 2021), Virtual Event, 10–15 January 2021; Del Bimbo, A., Cucchiara, R., Sclaroff, S., Farinella, G.M., Mei, T., Bertini, M., Escalante, H.J., Vezzani, R., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2021; Volume 12667.

- Li, Q.; Zhou, W. Connecting the Dots Between Fact Verification and Fake News Detection. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 1820–1825.

- Segura-Bedmar, I.; Alonso-Bartolome, S. Multimodal Fake News Detection. Information 2022, 13, 284.

- Dedeepya, P.; Yarrarapu, M.; Kumar, P.P.; Kaushik, S.K.; Raghavendra, P.N.; Chandu, P. Fake News Detection on Social Media Through a Hybrid SVM-KNN Approach Leveraging Social Capital Variables. In Proceedings of the 2024 3rd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 2–4 July 2024; pp. 1168–1175.

- Dev, D.G.; Bhatnagar, V.; Bhati, B.; Gupta, M.; Nanthaamornphong, A. LSTMCNN: A hybrid machine learning model to unmask fake news. Heliyon 2024, 10, e25244.

- Zeng, X.; La Barbera, D.; Roitero, K.; Zubiaga, A.; Mizzaro, S. Combining Large Language Models and Crowdsourcing for Hybrid Human-AI Misinformation Detection. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR ’24), Washington, DC, USA, 14–18 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 2332–2336.

- Alsmadi, I.; Alazzam, I.; Al-Ramahi, M. Stance Detection in the Context of Fake News. In Proceedings of the Annual IDeaS Conference: Disinformation, Hate Speech, and Extremism, CMU, Online, 12–13 July 2021.

- Fake News Challenge Stage 1 (FNC-I): Stance Detection. 2017. Available online: http://www.fakenewschallenge.org/ (accessed on 1 October 2023).

- Rubin, V.L.; Chen, Y.; Conroy, N.K. Deception Detection for News: Three Types of Fakes. Proc. Assoc. Inf. Sci. Technol. 2015, 52, 1–4.

- Bedi, A.; Pandey, N.; Khatri, S.K. A Framework to Identify and Secure the Issues of Fake News and Rumours in Social Networking. In Proceedings of the 2019 2nd International Conference on Power Energy, Environment and Intelligent Control (PEEIC), Greater Noida, India, 18–19 October 2019; pp. 70–73.

- De Oliveira, N.R.; Medeiros, D.S.; Mattos, D.M. A Sensitive Stylistic Approach to Identify Fake News on Social Networking. IEEE Signal Process. Lett. 2020, 27, 1250–1254.

- Ajao, O.; Bhowmik, D.; Zargari, S. Fake News Identification on Twitter with Hybrid CNN and RNN Models. In Proceedings of the 9th International Conference on Social Media and Society, Copenhagen, Denmark, 18–20 July 2018; pp. 226–230.

- Boididou, C.; Papadopoulos, S.; Dang-Nguyen, D.-T.; Boato, G.; Kompatsiaris, Y. The CERTH-UNITN Participation @ Verifying Multimedia Use 2015. In Proceedings of the MediaEval 2015 Workshop, Wurzen, Germany, 14–15 September 2015.

- Boididou, C.; Andreadou, K.; Papadopoulos, S.; Dang-Nguyen, D.-T.; Boato, G.; Riegler, M.; Kompatsiaris, Y. Verifying Multimedia Use at MediaEval 2016. In Proceedings of the MediaEval 2016 Workshop, Hilversum, The Netherlands, 20–21 October 2016.

- Gupta, A.; Lamba, H.; Kumaraguru, P.; Joshi, A. Faking Sandy: Characterizing and Identifying Fake Images on Twitter During Hurricane Sandy. In Proceedings of the 22nd International Conference on World Wide Web, Rio de Janeiro, Brazil, 13–17 May 2013; pp. 729–736.

- Chen, M.; Lai, Y.; Lian, J. Using Deep Learning Models to Detect Fake News about COVID-19. ACM Trans. Internet Technol. 2023, 23, 2.

- Qi, P.; Cao, J.; Yang, T.; Guo, J.; Li, J. Exploiting Multi-Domain Visual Information for Fake News Detection. In Proceedings of the 2019 IEEE International Conference on Data Mining (ICDM), Beijing, China, 8–11 November 2019; pp. 518–527.

- Dementieva, D.; Kuimov, M.; Panchenko, A. Multiverse: Multilingual Evidence for Fake News Detection. J. Imaging 2023, 9, 77.

- Zhou, X.; Wu, J.; Zaiane, O.R. SAFE: Similarity-Aware multi-modal Fake news detection. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Singapore, 11–14 May 2020; Springer: Cham, Switzerland, 2020; pp. 354–367.

- Qi, P.; Cao, J.; Yang, T.; Guo, J.; Li, J. Exploiting multi-domain visual information for fake news detection. In Proceedings of the IEEE International Conference on Data Engineering (ICDE), Beijing, China, 8–11 November 2021; pp. 1598–1609.

- Mishra, A.; Sadia, H. A Comprehensive Analysis of Fake News Detection Models: A Systematic Literature Review and Current Challenges. Eng. Proc. 2023, 59, 28.

- Singh, M.; Ahmed, J.; Alam, A.; Raghuvanshi, K.; Kumar, S. A comprehensive review on automatic detection of fake news on social media. Multimed. Tools Appl. 2023, 83, 47319–47352.

- Nikumbh, D.; Thakare, A. A Comprehensive review of fake news detection on social media: Feature engineering, feature fusion, and future research directions. Int. J. Syst. Innov. 2023, 7, 6.

- Popat, K.; Mukherjee, S.; Strötgen, J.; Weikum, G. Where the Truth Lies: Explaining the Credibility of Emerging Claims on the Web and Social Media. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, 3–7 April 2017; pp. 1003–1012.

- Ferreira, W.; Vlachos, A. Emergent: A Novel Data-Set for Stance Classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1163–1168.

- Borges, L.; Martins, B.; Calado, P. Combining Similarity Features and Deep Representation Learning for Stance Detection in the Context of Checking Fake News. J. Data Inf. Qual. 2019, 11, 1–26.

- Shang, J.; Shen, J.; Sun, T.; Liu, X.; Gruenheid, A.; Korn, F.; Lelkes, Á.D.; Yu, C.; Han, J. Investigating Rumor News Using Agreement-Aware Search. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 2117–2125.

- Umer, M.; Imtiaz, Z.; Ullah, S.; Mehmood, A.; Choi, G.S.; On, B.-W. Fake News Stance Detection Using Deep Learning Architecture (CNN-LSTM). IEEE Access 2020, 8, 156695–156706.

- Hardalov, M.; Arora, A.; Nakov, P.; Augenstein, I. A survey on stance detection for mis-and disinformation identification. arXiv 2021, arXiv:2103.00242.

- Jiang, Y.; Petrak, J.; Song, X.; Bontcheva, K.; Maynard, D. Team Bertha von Suttner at SemEval-2022 Task 4: Multi-modal Stance Detection using Visual and Textual Cues. In Proceedings of the 16th International Workshop on Semantic Evaluation (SemEval-2022), Seattle, WA, USA, 14–15 July 2022; pp. 416–424.

- Zotova, E.; Agerri, R.; Nuñez, M.; Rigau, G. Multilingual Stance Detection in Tweets: The Catalonia Independence Corpus. In Proceedings of the 12th Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; European Language Resources Association: Paris, France, 2020; pp. 1368–1375.

- Guo, Z.; Zhang, Q.; Ding, F.; Zhu, X.; Yu, K. A Novel Fake News Detection Model for Context of Mixed Languages through Multiscale Transformer. IEEE Trans. Comput. Soc. Syst. 2023, 11, 5079–5089.

- Sean, B.; Pan, Y. Talos Targets Disinformation with Fake News Challenge Victory. 2017. Available online: https://blog.talosintelligence.com/2017/06/talos-fake-news-challenge.html (accessed on 1 February 2021).

- Hanselowski, A.; Avinesh, P.V.S.; Schiller, B.; Caspelherr, F. Description of the System Developed by Team Athene in the FNC-1. 2017. Available online: https://github.com/hanselowski/athene_system/blob/master/system_description_athene.pdf (accessed on 13 August 2024).

- Zhang, Q.; Liang, S.; Lipani, A.; Ren, Z.; Yilmaz, E. From stances’ imbalance to their hierarchical representation and detection. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; ACM: New York, NY, USA, 2019; pp. 2323–2332.

- Altheneyan, A.; Alhadlaq, A. Big Data ML-Based Fake News Detection Using Distributed Learning. IEEE Access 2023, 11, 29447–29463.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).