1. Introduction

With the development of fifth-generation mobile networks (5G) and more advanced mobile communication networks, the number of mobile device users is expected to increase from 5.17 billion to 7.33 billion by the end of 2023, according to Statista [1]. In this rapidly evolving digital landscape, multi-access edge computing (MEC) servers, as a pivotal technology for enhancing the computational capabilities of mobile devices, offer users improved quality of service (QoS) and quality of experience (QoE). By decentralizing cloud computing, MEC manages growing data traffic, mitigates network congestion, and reduces latency, and it has evolved to incorporate diverse access technologies, including WiFi [2] and fixed access [3] (ETSI ISG [4]).

Amidst such advancements, MEC networks face the intricate challenge of efficient task management and coordination due to their compositional complexity and inherent variability. This complexity is greater still in distributed layouts, which, unlike their centralized counterparts that optimize globally, offer the flexibility and scalability essential for large networks operating without central oversight.

Device-to-Device (D2D) communication has surfaced as a promising paradigm within MEC to bolster collaborative offloading efforts [5]. Studies [6,7] have shown improvements in network capacity, energy efficiency, and latency through D2D-facilitated offloading. In resource-constrained environments, Zhou et al. employed game theory to devise a D2D-assisted offloading algorithm that optimizes task computation time [8], while Xiao et al. tackled content caching through an improved multi-objective bat algorithm [9]. Abbas et al. considered cooperative-aware task offloading to balance the number of completed tasks against energy and cost [10], and Li et al. proposed a security-conscious, energy-aware task offloading framework [11].

While the existing research has laid a robust groundwork for harnessing idle computational resources in MEC, optimizing the utilization of these resources remains a significant challenge. The integration of D2D technology introduces a spectrum of offloading modalities and platforms, enriching user services but also complicating offloading decisions with additional considerations like local computational capacities and the availability of proximate nodes.

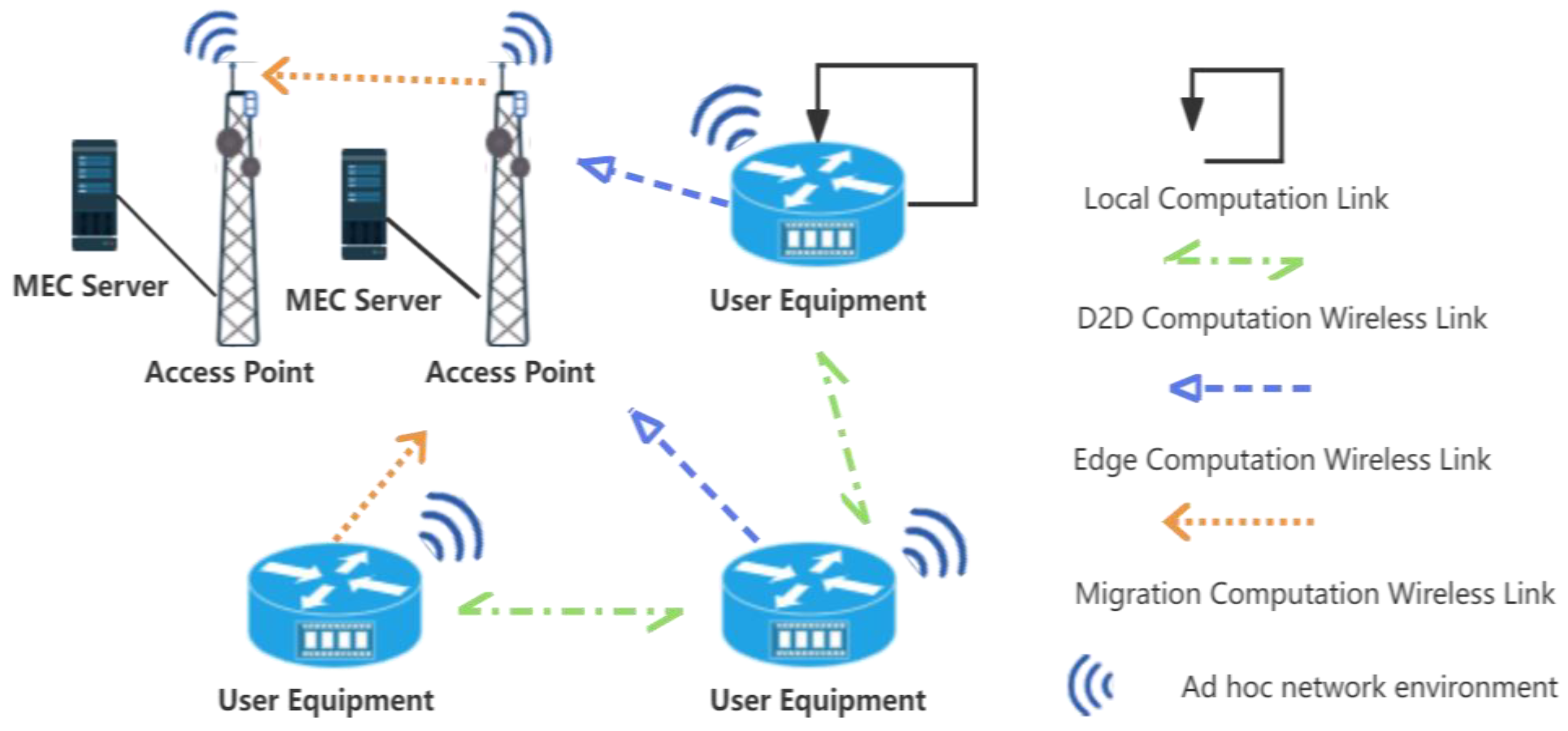

Addressing the dynamic, transient nature of D2D-integrated MEC networks, this paper proposes a proximal policy optimization (PPO)-based D2D-assisted computation offloading and resource allocation scheme. As shown in Figure 1, we consider a single unit in the MEC network, comprising two access points (APs) and multiple requesters and collaborators deployed at the edge of the network. Within the coverage of the APs, each user equipment (UE) can choose the computation method that suits its needs: local computation, edge computation, D2D computation, or migration computation. Our contributions are as follows:

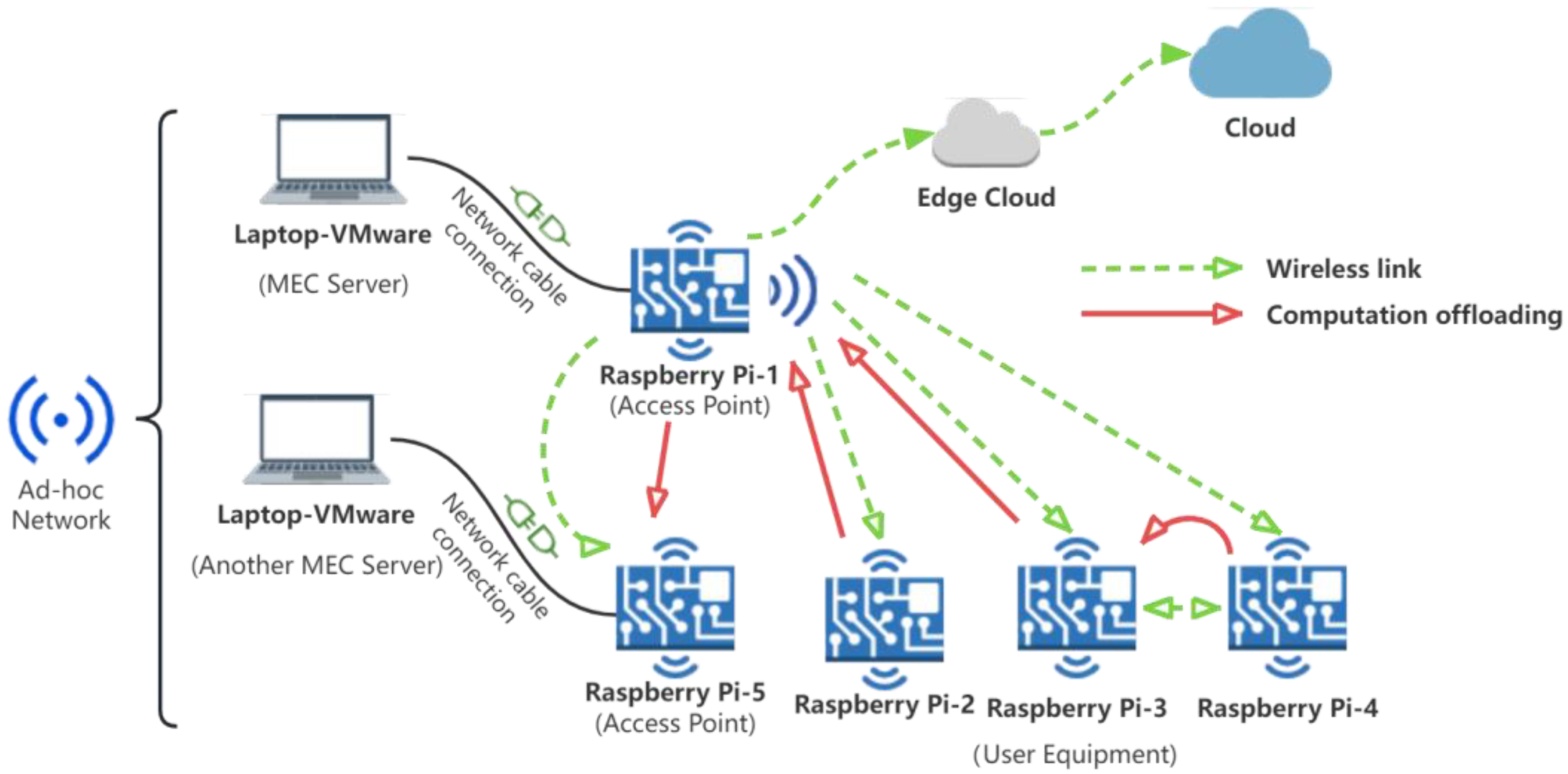

We construct an authentic MEC network environment mirroring the actual MEC architecture, utilizing ad hoc wireless technology and computational devices, moving beyond mere simulation-based studies.

Considering the MEC network’s complexity, dynamics, and randomness, we refine the neural network training features on the UE to include CPU utilization, transmission delay, task execution time, task count, and the aggregate of transmission and computation energy consumption.

We introduce a PPO-based D2D-assisted computational offloading and resource allocation scheme to amplify terminal device resource utilization. By formulating a Markov decision process (MDP) model, we seek to minimize the time loss and energy consumption, deriving an optimal offloading strategy via PPO application.

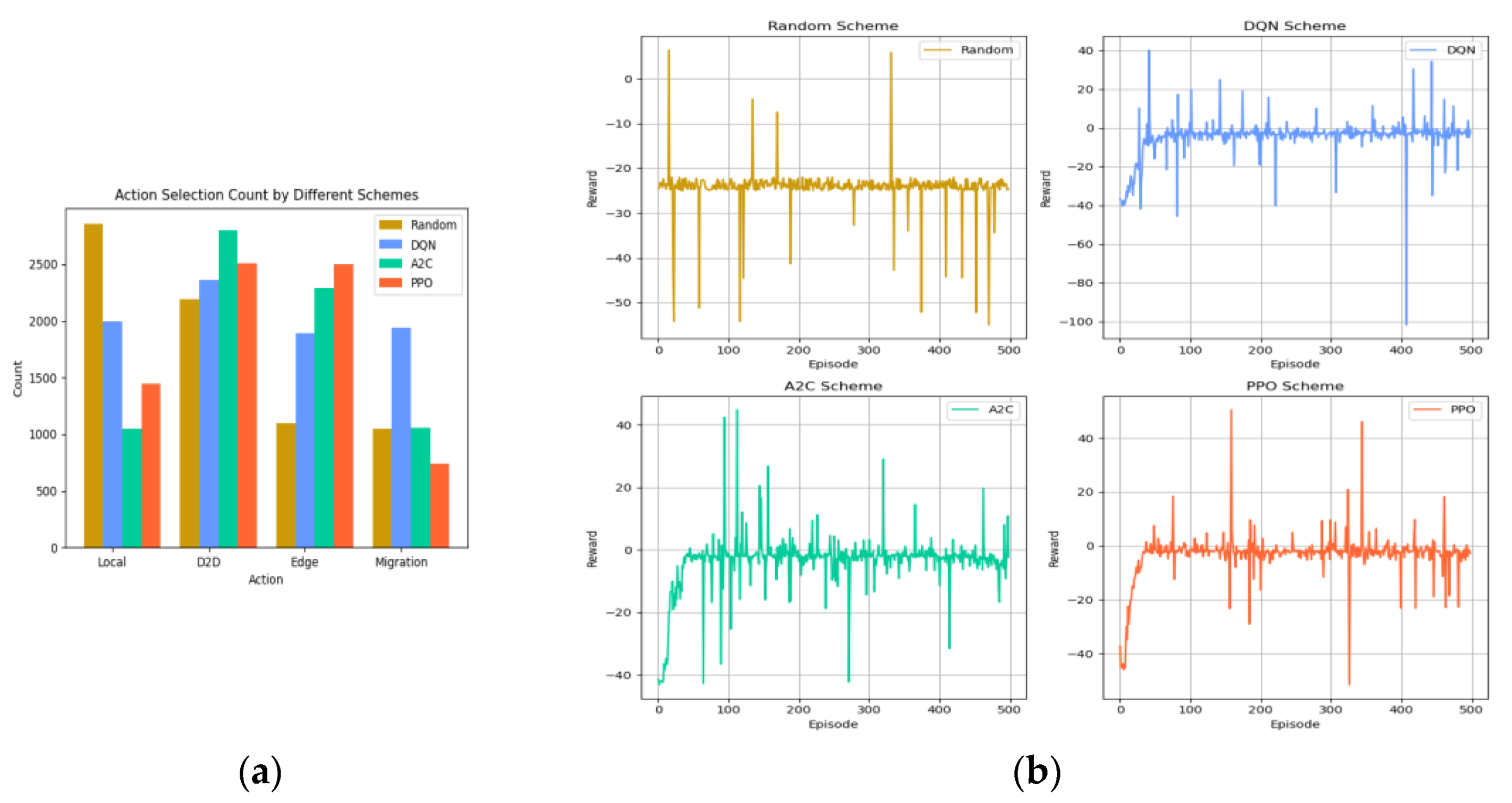

The experimental results show that our scheme outperforms the other three baselines in terms of delay, energy consumption, and algorithm convergence.

The remaining sections are organized as follows. In Section 2, we review the related work. Section 3 defines the system model, including the communication and computation models, and formally defines the D2D-assisted computation offloading and resource allocation problem. Section 4 describes the MDP model for this problem and the specific application of the PPO algorithm to this experiment. Section 5 describes the experimental environment and parameter settings and compares this scheme with three other baselines. Section 6 concludes the paper.

2. Related Work

In the multi-access edge computing (MEC) domain, computational offloading emerges as a multifaceted challenge, demanding innovative solutions [12,13,14], which are crucial for enhancing network efficiency. Research in this domain has predominantly concentrated on three approaches: mathematical optimization algorithms, control algorithms, and artificial intelligence (AI)-driven optimization algorithms. Among these, AI and machine learning (ML)-based algorithms have shown particular promise in dynamic and complex MEC environments due to their adaptability and learning capabilities [15].

Studies to date have focused on optimizing latency and energy consumption during computational offloading. Abbas et al. utilized meta-heuristic algorithms, such as ant colony, whale, and grey wolf optimization, to establish an offloading strategy aimed at minimizing energy and delay, albeit only for a single MEC server [16]. While meta-heuristic algorithms are robust, their complexity and numerous parameters often complicate the tuning process. In the realm of control algorithms, Lin et al. proposed drone-assisted dynamic resource allocation leveraging Lyapunov optimization to ensure delay efficiency and reliability of offloading services [17]. Chang et al. contributed a balanced framework using SDN-based controller load balancing with Lyapunov optimization for improved offloading efficiency and reduced latency [18].

The precision of mathematical optimization for linear problems contrasts with its constrained adaptability to dynamic changes, whereas control algorithms excel in system stability and robustness but can be complex and less suitable for non-linear systems. Deep reinforcement learning (DRL), marrying deep learning with reinforcement learning, has emerged as a potent approach in MEC for managing dynamic tasks, with techniques like deep Q-network (DQN) and advantage actor–critic (A2C) algorithms gaining traction [19,20,21].

To overcome the limitations of mathematical optimization and control algorithms, many researchers have adopted DRL methods for MEC resource allocation because of their self-learning and self-adaptation qualities. In [19], the authors demonstrated the advantages and applications of ML and deep learning (DL) methods in MEC environments for two key tasks: automatic identification and offloading of “untrusted tasks” and task scheduling on MEC servers, in particular using a flow-shop-like scheduling approach. Gao et al. developed an enhanced scheme based on the deep deterministic policy gradient (DDPG), which exhibited notable performance in reducing energy consumption, managing load status, and minimizing latency. However, this scheme did not adequately consider the behavioral characteristics of the devices involved [20]. To minimize the computing delay and energy consumption of UE, Liang et al. used DQN and DDPG to process large-scale state spaces and obtained the task offloading ratio and power allocation suitable for each UE. Silva et al. used A2C to empower UEs to make intelligent offloading decisions and demonstrated its efficacy in simulated OpenAI Gym scenarios, but did not fully capture the dynamism of mobile devices [21].

Although DRL demonstrates its superiority in resource management, the challenge of high user concurrency persists, often impacting QoS and QoE due to resource contention [22]. D2D communication’s potential in offloading via high-speed, direct connections between devices has thus garnered academic focus. Li et al. addressed the energy consumption optimization problem for UE-assisted MEC computational offloading in mobile environments and proposed a DRL-based computational offloading model for D2D-MEC. They demonstrated that this scheme incurs the lowest energy consumption and cost in continuous time [23]. Lin et al. investigated a D2D collaborative computing offloading design for two users dynamically exchanging computational loads over a D2D link. The design achieved minimax optimization for a given finite time horizon [24]. Guan et al. studied the multi-user collaborative partial offloading and computational resource allocation problem, using a DQN algorithm to maximize the number of collaborative devices, and thereby the available computational resources, under the application’s maximum latency constraint and limited computational resources [25]. Liu et al. investigated a computational offloading scheme in a multi-user UAV system, where a UAV with a computational task can offload part of the task to a nearby assistant to satisfy a latency constraint [26]. Fan et al. used the Lagrange multiplier method to solve the problem of computing resource allocation and adopted a greedy strategy to select D2D users, substantially reducing the average execution delay of the task [27]. All of these approaches increase computing power by adding devices rather than by fully utilizing per-device computing resources.

D2D communication, a key component in enhancing MEC, has also received significant attention. Its ability to facilitate high-speed, short-range connections makes it a valuable asset in offloading tasks. However, research in this area has often focused on increasing the computational power through additional devices rather than optimizing per-device resource utilization. Our study contributes to this field by proposing a PPO-based D2D-assisted computation offloading and resource allocation scheme. This scheme not only addresses the dynamic nature of MEC networks but also optimizes resource utilization at a per-device level, filling a critical gap in the current research landscape.

3. System Model

In this study, we propose a D2D-assisted MEC server computation offloading and resource allocation scheme for multi-access edge computing scenarios. This model aims to minimize the total offloading cost, including task execution time and energy consumption. The proposed system model, as shown in Figure 2, is crucial to understanding how UE can optimize offloading decisions based on the device state, proximity to computational resources, and the nature of computational tasks. The system model consists of three parts: the network model, the communication model, and the computational model, which will be introduced in detail.

3.1. Network Model

The network model consists of multiple UEs and distributed MEC servers. In an ad hoc network [28,29] environment, each UE can generate computationally intensive tasks, while the MEC servers and available computing nodes nearby provide the necessary computational resources. $W_{\max}$ represents the maximum workload of the MEC server and $F_{\max}$ represents the maximum computational resources provided by the MEC server. Among the network entities, let $\mathcal{U} = \{u_1, u_2, \ldots, u_N\}$ be the set of all UEs in the network. Each UE can be represented as $u_i = (D_i, f_i, T_i^{\max}, \lambda_i)$, where $D_i$ denotes the size of the user task, $f_i$ is the operating frequency of the CPU for processing computational tasks, and $T_i^{\max}$ represents the maximum tolerable time for the current UE transmission or computation. The variable $\lambda_i \in \{0, 1\}$ represents the current computing state: when $u_i$ executes a computation task, $\lambda_i = 1$; otherwise, $\lambda_i = 0$. The system offloading decision set is denoted by $\mathcal{A} = \{a_l, a_d, a_e, a_m\}$, whose elements represent the different offloading strategies: $a_l$ for local computation, $a_d$ for offloading the task via D2D, $a_e$ for offloading the task via the current MEC server, and $a_m$ for migrating the task to another MEC server, which is called migration computation in this article.

3.2. Communication Model

The communication model defines the channels through which UEs and MEC servers interact. When a UE requires edge server computation, the task to be offloaded must be transferred from the UE to the AP, and the AP then assigns it to the MEC server for computation. This process requires data transmission over the network, so the network bandwidth is one of the key factors affecting the overall system performance. To optimize the offloading process, we adopt a communication model that imposes a bandwidth limit between the UE and the AP. This measure is designed to ensure equitable bandwidth distribution among UEs, thereby mitigating potential network congestion. It also bounds energy expenditure while maintaining a balance between system performance and operational costs.

The details are as follows. We take $\mathcal{K} = \{1, 2, \ldots, K\}$ as the set of communication links of the AP. $B$ is the bandwidth of the AP, and we divide the available bandwidth resource of the AP into $K$ equal-width subchannels, so the bandwidth of each subchannel can be denoted as

$$B_k = \frac{B}{K}.$$

The matrix $X = [x_k]$ denotes the transmission state of the AP, with $x_k \in \{0, 1\}$: $x_k = 1$ indicates that the AP provides an offloading service on communication link $k$, and $x_k = 0$ indicates that no offloading service is being provided on that link. Thus, the bandwidth resource in use is denoted as:

$$B_{\mathrm{used}} = \sum_{k \in \mathcal{K}} x_k B_k.$$

In the resource-constrained scenario, when the requester offloads the task to the MEC server, the task is first sent from the requester $u_i$ to the AP via wireless transmission. We assume that the wireless channel state remains constant while each computational task is transmitted between the UE and the AP. $p_i$ denotes the transmission power with which requester $i$ sends to the AP, $h_i$ denotes the channel gain during its transmission, $\sigma^2$ represents the power of the noise, $d_i$ denotes the distance between the requester $u_i$ and the AP, and $\alpha$ is a standardized path loss propagation exponent so that the path loss can be denoted as $d_i^{-\alpha}$; then, the signal-to-noise ratio (SNR) can be computed as:

$$\mathrm{SNR}_i = \frac{p_i h_i d_i^{-\alpha}}{\sigma^2}.$$

After obtaining the SNR, the transmission rate from the requester to the AP can be calculated as

$$r_{i,e} = B_k \log_2\left(1 + \mathrm{SNR}_i\right).$$

To avoid interference among UEs transmitting over D2D communication links, we assume that each D2D communication link is assigned an orthogonal subchannel, and $B_d$ denotes the bandwidth of that subchannel. Similarly, we denote the signal-to-noise ratio of the D2D communication link as $\mathrm{SNR}_{i,c}$; the transmission rate of D2D communication between requester $u_i$ and collaborator $c$ is then denoted as follows:

$$r_{i,c} = B_d \log_2\left(1 + \mathrm{SNR}_{i,c}\right).$$

Since the executor that performs the task of the requester may be a MEC server or another UE (collaborator), based on the above expressions, the transmission rate between the requester and the executor may be expressed as $r_{i,e}$ or $r_{i,c}$.
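The rate computation described in this subsection can be sketched in a few lines of Python. This is only an illustration, assuming the usual path-loss SNR and Shannon-capacity forms; the function names and all numeric values are hypothetical and are not taken from the experiments.

```python
import math


def snr(tx_power_w, channel_gain, distance_m, path_loss_exp, noise_power_w):
    """Linear SNR with a distance-based path loss of distance^(-path_loss_exp)."""
    path_loss = distance_m ** (-path_loss_exp)
    return tx_power_w * channel_gain * path_loss / noise_power_w


def shannon_rate(bandwidth_hz, snr_linear):
    """Achievable rate in bit/s on a single subchannel."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)


# Hypothetical values, chosen only to make the arithmetic easy to follow.
ap_bandwidth_hz = 20e6
num_subchannels = 10
subchannel_hz = ap_bandwidth_hz / num_subchannels  # equal-width split

link_snr = snr(tx_power_w=0.3, channel_gain=1.0, distance_m=10.0,
               path_loss_exp=2.0, noise_power_w=1e-3)
uplink_rate = shannon_rate(subchannel_hz, link_snr)
print(f"SNR = {link_snr:.2f}, rate = {uplink_rate / 1e6:.2f} Mbit/s")
```

The same two functions apply to the D2D link by passing the D2D subchannel bandwidth and the requester-to-collaborator distance instead.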

3.3. Computational Model

We studied four computing models for devices to choose from under the MEC network environment: local computing, D2D peer devices computing, edge computing, and migration computing. The computation delay and energy consumption for each model are as follows.

3.3.1. Local Computing

If the computational requirements of the computing tasks are within the processing power of the UE, the device performs the computing tasks locally. We assume that the CPU in $u_i$ operates at a frequency of $f_i$, with $\phi_i$ representing the local computational power in terms of CPU cycles. Therefore, the local computation time can be calculated as follows:

$$T_i^{l} = \frac{\phi_i}{f_i}.$$

Parameter $\phi_i$ is based on the findings presented in reference [30]. Local energy consumption can be mathematically represented as

$$E_i^{l} = \kappa f_i^{2} \phi_i.$$

Incorporating the influence of chip architecture [31], the effective switching capacitance κ is introduced in the equation. The total consumption is provided by the following expression ($\omega_t$ and $\omega_e$ are loss factors):

$$C_i^{l} = \omega_t T_i^{l} + \omega_e E_i^{l}.$$
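As a rough numerical illustration of the local computing model, the sketch below assumes the common form in which execution time is the required CPU cycles divided by the CPU frequency and energy is the switching capacitance times the squared frequency times the cycles. The weights and all values are hypothetical placeholders, not the paper's settings.

```python
def local_cost(cycles, cpu_hz, kappa, w_time, w_energy):
    """Return (time_s, energy_j, weighted_cost) for local execution.

    Assumed model: time = cycles / f, energy = kappa * f^2 * cycles,
    cost = w_time * time + w_energy * energy.
    """
    time_s = cycles / cpu_hz
    energy_j = kappa * cpu_hz ** 2 * cycles
    return time_s, energy_j, w_time * time_s + w_energy * energy_j


# Hypothetical task: 1e9 cycles on a 1 GHz CPU with kappa = 1e-27.
t, e, c = local_cost(cycles=1e9, cpu_hz=1e9, kappa=1e-27,
                     w_time=0.5, w_energy=0.5)
print(f"time = {t:.3f} s, energy = {e:.3f} J, cost = {c:.3f}")
```

Because energy grows with the square of the frequency while time falls only linearly, raising the CPU frequency trades energy for delay, which is why the two terms are weighted rather than minimized separately.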

3.3.2. Edge Computing

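When the computing power of local devices is insufficient to handle tasks efficiently, or when tasks require a fast response, edge computing can provide lower latency due to its proximity to the data source. $D_i$ is the size of the task from the requester $u_i$ and $r_{i,e}$ represents the rate at which $u_i$ transmits tasks to the MEC server; the transmission delay is

$$T_{i,e}^{\mathrm{tr}} = \frac{D_i}{r_{i,e}}.$$

The time requirement of edge computing is

$$T_{i,e}^{\mathrm{cmp}} = \frac{\phi_i}{f_e},$$

where $\phi_i$ is the number of CPU cycles the task requires and $f_e$ is the CPU frequency that the MEC server allocates to it. $p_{i,e}$ denotes the transmission power with which requester $u_i$ sends to the MEC server. The transmission energy loss is denoted as

$$E_{i,e}^{\mathrm{tr}} = p_{i,e} T_{i,e}^{\mathrm{tr}}.$$

Computation energy loss is denoted as

$$E_{i,e}^{\mathrm{cmp}} = \kappa f_e^{2} \phi_i.$$

The total consumption, including the loss of time and energy, is

$$C_i^{e} = \omega_t \left(T_{i,e}^{\mathrm{tr}} + T_{i,e}^{\mathrm{cmp}}\right) + \omega_e \left(E_{i,e}^{\mathrm{tr}} + E_{i,e}^{\mathrm{cmp}}\right),$$

where $\omega_t$ and $\omega_e$ are the loss factors for time and energy.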
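The offloading cost described here combines a transmission part and a computation part. The following sketch shows one way to compute it, assuming that transmission energy is power times transmission time and that the executor's computation energy follows the same switching-capacitance model as local execution. All names and numbers are hypothetical.

```python
def offload_cost(task_bits, rate_bps, tx_power_w,
                 cycles, exec_hz, kappa, w_time, w_energy):
    """Weighted time-plus-energy cost of offloading one task to an executor.

    The executor can be a MEC server or a D2D collaborator; only the
    rate and the executor frequency differ between the two cases.
    """
    t_tx = task_bits / rate_bps              # transmission delay
    t_cmp = cycles / exec_hz                 # computation delay at the executor
    e_tx = tx_power_w * t_tx                 # transmission energy
    e_cmp = kappa * exec_hz ** 2 * cycles    # computation energy (assumed model)
    return w_time * (t_tx + t_cmp) + w_energy * (e_tx + e_cmp)


# Hypothetical example: a 4 Mbit task over a 4 Mbit/s link to a 2 GHz executor.
cost = offload_cost(task_bits=4e6, rate_bps=4e6, tx_power_w=0.5,
                    cycles=2e9, exec_hz=2e9, kappa=1e-28,
                    w_time=1.0, w_energy=1.0)
print(f"offloading cost = {cost:.2f}")
```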

3.3.3. Migrating the Computation

When the MEC server experiences an excessive workload or when neighboring servers have available resources, $u_i$ can migrate its computational tasks to the adjacent servers. This migration incurs additional transmission overhead for $u_i$, which involves transferring data between MEC servers. The magnitude of the transmission overhead is determined based on the allocated computational resources provided by the target server in response to the migration request from $u_i$. The superscript $m$ in $r_i^{m}$, $p_i^{m}$, and $T_i^{m}$ signifies the transmission from $u_i$ to the AP where another MEC server is located; therefore, the transmission delay is

$$T_i^{m} = \frac{D_i}{r_i^{m}}.$$

Assuming that the migration cost only depends on the task size, which is denoted as $D_i$, the transmission energy loss is

$$E_i^{m} = p_i^{m} T_i^{m}.$$

The computational delay and energy consumption of $u_i$ are computed based on the resource allocation strategy of the migration server, with the computational formula remaining unchanged. Denoting them by $T_i^{m,\mathrm{cmp}}$ and $E_i^{m,\mathrm{cmp}}$, the total cost of the migration computation is expressed as

$$C_i^{m} = \omega_t \left(T_i^{m} + T_i^{m,\mathrm{cmp}}\right) + \omega_e \left(E_i^{m} + E_i^{m,\mathrm{cmp}}\right),$$

where $\omega_t$ and $\omega_e$ are the loss factors for time and energy.

3.3.4. D2D Device Computing

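$D_i$ is the size of the task and $r_{i,c}$ represents the rate at which the requester, $u_i$, transmits tasks to the collaborator; thus, the transmission delay is

$$T_{i,c}^{\mathrm{tr}} = \frac{D_i}{r_{i,c}}.$$

$\phi_{i,c}$ and $f_c$ represent the number of CPU cycles required by the collaborator $c$ to complete the computational task of the requester $u_i$, and the current CPU working frequency, respectively. The time requirement of D2D computing is

$$T_{i,c}^{\mathrm{cmp}} = \frac{\phi_{i,c}}{f_c}.$$

$p_{i,c}$ denotes the transmission power with which requester $u_i$ sends to the collaborator. The transmission energy loss is denoted as

$$E_{i,c}^{\mathrm{tr}} = p_{i,c} T_{i,c}^{\mathrm{tr}}.$$

Computation energy loss is denoted as

$$E_{i,c}^{\mathrm{cmp}} = \kappa f_c^{2} \phi_{i,c}.$$

The total consumption is

$$C_i^{d} = \omega_t \left(T_{i,c}^{\mathrm{tr}} + T_{i,c}^{\mathrm{cmp}}\right) + \omega_e \left(E_{i,c}^{\mathrm{tr}} + E_{i,c}^{\mathrm{cmp}}\right),$$

where $\omega_t$ and $\omega_e$ are the loss factors for time and energy.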

3.4. Problem Formulation

To enhance the efficiency of computational task offloading, we constructed a mathematical model tailored to minimize the total execution cost within the MEC framework. This model integrates the complexities of the network architecture, the communication resources in use, and the computation dynamics at play in MEC environments. Recognizing the finite nature of MEC server resources and the associated costs of server utilization, our model incorporates economic considerations. Specifically, because MEC servers have limited resources, a policy that repeatedly selects the edge computing strategy incurs a high cost. Therefore, we assign very low rewards to policies that successively select the MEC server as the main task processor, so that even if such a policy emerges, it gradually disappears over the training iterations.

We used the following equation to express the cost consumption of the four task offloading strategies of the UE:

Since the aim of this study was to minimize the total cost of delay and energy consumption of UEs, the bandwidth resources of AP should be satisfied:

where

represents the bandwidth of AP.

represents all UE devices, which can be calculated as

Under the constraints of maximum tolerable delay and energy consumption, the problem is expressed as

Based on satisfying the QoS and QoE required by users, the solution of this problem can be expressed as solving the minimum total offloading cost under the optimal offloading policy vector .
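To make the structure of the constrained problem concrete, the sketch below picks, for a single UE, the cheapest of the four strategies whose delay stays within the maximum tolerable delay. This greedy per-UE selection is only an illustration of the objective and the delay constraint; it is not the PPO-based method of this paper, and the cost and delay values are hypothetical.

```python
def cheapest_feasible(costs, delays, max_delay):
    """Return the lowest-cost strategy whose delay meets the deadline.

    costs and delays map a strategy name to its weighted cost and its
    total delay in seconds. Returns None when no strategy is feasible.
    """
    feasible = {name: cost for name, cost in costs.items()
                if delays[name] <= max_delay}
    if not feasible:
        return None
    return min(feasible, key=feasible.get)


# Hypothetical per-strategy values for one UE.
costs = {"local": 3.0, "d2d": 2.0, "edge": 1.0, "migrate": 2.5}
delays = {"local": 1.0, "d2d": 0.5, "edge": 2.0, "migrate": 0.8}

# Edge computing is cheapest but misses the 1 s deadline, so D2D is chosen.
print(cheapest_feasible(costs, delays, max_delay=1.0))
```

A per-UE greedy choice of this kind ignores the shared AP bandwidth and the effect of one UE's decision on the others, which is the coupling that motivates a learned policy.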

4. Multi-Objective Deep Reinforcement Learning Based on the PPO Algorithm

In this section, we introduce the application of the proximal policy optimization (PPO) algorithm, a robust deep reinforcement learning approach, under the MEC environment. The PPO model was chosen for its notable benefits in multi-objective optimization, characterized by stability, adaptability, and efficient sample utilization, making it highly suitable for the dynamic and uncertain environments of MEC. The problem of computational offloading and resource allocation was first constructed as a Markov decision process (MDP) model, and the PPO algorithm was used to obtain the optimal solution.

4.1. Markov Decision Process

Our approach constructs the computational offloading and resource allocation problem as an MDP. The MDP is defined by a state space encompassing key metrics such as transfer latency, computational delay, and energy consumption; an action space of offloading strategies; and a reward function designed to minimize latency and energy consumption. This formulation is crucial for generating optimized offloading policies based on the current state of the network. In the context of computational offloading and resource allocation within edge environments, we define the MDP as a quintuple, encompassing the state space ($\mathcal{S}$), action space ($\mathcal{A}$), state transition probability matrix ($\mathcal{P}$), reward function ($\mathcal{R}$), and the discount factor ($\gamma$). The crux of the MDP’s application in this scenario lies in its ability to generate a policy that dictates offloading decisions based on the current state.

State: The state of the environment consists of several metrics for the local device, the MEC server, and the collaborator devices: the transfer latency of the computational tasks, computational latency, transfer energy consumption, computational energy consumption, CPU utilization of the current device, and the number of computational tasks.

Action: The action space consists of four offloading strategies: local computing, D2D computing, edge computing, and migration computing.

Probability: The state probability transfer matrix can be expressed as

$$P(s' \mid s, a) = \Pr\left[s_{t+1} = s' \mid s_t = s, a_t = a\right].$$

Reward: The rewards are provided at each episode. Considering the goal of minimizing UE latency and energy consumption, the defined reward should be negatively correlated with this goal. It can be expressed as follows:

$\gamma$: the discount factor, $\gamma \in [0, 1]$.

For the computational model of the system, the state can be composed of a number of metrics from the local device, the MEC server, collaborator devices, and the offloaded device. After executing each decision, the agent receives an immediate reward that measures the quality of the current decision [32]. The reward function is shown in Equation (26), and the value of a state depends on the current reward and the possible future rewards. The return of state $s_t$ is defined as follows:

$$G_t = \sum_{k=0}^{\infty} \gamma^{k} r_{t+k}.$$

$\gamma$ is used to estimate the current value of future rewards, then the long-term payoff can be expressed as the expected value of $G_t$, defined as

$$V^{\pi}(s) = \mathbb{E}_{\pi}\left[G_t \mid s_t = s\right] = \mathbb{E}_{\pi}\left[r_t + \gamma V^{\pi}(s') \mid s_t = s\right].$$

$V^{\pi}(s)$ denotes the value function of state $s$ under a given strategy $\pi$, $s'$ denotes the next state, and $\pi$ denotes the strategy.

The problem is thus formulated as solving the optimal policy $\pi^{*}$ of the system model to maximize the long-term value of the model $V^{\pi}(s)$, expressed as follows:

$$\pi^{*} = \arg\max_{\pi} V^{\pi}(s).$$
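The discounted return that underlies the state value can be computed from a finite reward sequence with a single backward pass. The sketch below assumes the standard definition, in which each return equals the immediate reward plus the discount factor times the following return. The reward values are hypothetical.

```python
def discounted_returns(rewards, gamma):
    """Return G_t for every step t of one finite episode.

    Uses the recursion G_t = r_t + gamma * G_{t+1}, with G = 0 after
    the final step.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Rewards are negative costs in this setting; values are hypothetical.
print(discounted_returns([-1.0, -1.0, -1.0], gamma=0.5))
# -> [-1.75, -1.5, -1.0]
```

Averaging such returns over many episodes that start in the same state gives a Monte Carlo estimate of the value of that state under the policy that generated the episodes.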

4.2. PPO Algorithm Application

The PPO algorithm optimizes the policy function using a neural network. This approach is well suited to the non-convex optimization problems characteristic of intricate MEC scenarios. Through its objective function and policy update mechanism, PPO balances the exploration of new strategies with the exploitation of known effective actions [33].

To evaluate the versatility of various reinforcement learning (RL) algorithms and to fine-tune hyperparameters for broader applicability, we decoupled the environmental module from the neural network. This separation enables the environmental module to focus exclusively on generating environmental feedback and computing rewards, a critical step in enhancing the scheme’s generality, as illustrated in Figure 3. The environmental module’s interfaces, namely reset and step, are integral to the RL process. The reset interface initializes or refreshes the environment to a baseline state, providing the initial observation post-training initiation or episode completion. In contrast, the step interface progresses the environment in response to the agent’s actions, delivering subsequent observations, instant rewards, termination flags, and additional context-specific data. This delineation of responsibilities ensures a coherent and systematic interaction between the PPO algorithms and the environment module, promoting an efficient learning cycle predicated on action-derived feedback.
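A minimal sketch of an environment module exposing the reset and step interfaces described above is shown below. The class name, the two state features, and the cost table are hypothetical placeholders chosen for illustration; they do not reproduce the testbed or the reward function of this paper.

```python
import random

ACTIONS = ("local", "d2d", "edge", "migrate")


class ToyOffloadEnv:
    """Toy environment with Gym-style reset/step (illustrative only)."""

    def __init__(self, episode_len=5, seed=0):
        self.episode_len = episode_len
        self.rng = random.Random(seed)
        self.steps = 0
        self.state = None

    def _observe(self):
        # Hypothetical features: CPU utilization and number of queued tasks.
        return [self.rng.random(), self.rng.randint(1, 10)]

    def reset(self):
        """Return the environment to a baseline state and give the first observation."""
        self.steps = 0
        self.state = self._observe()
        return self.state

    def step(self, action):
        """Apply an action index; return (observation, reward, done, info)."""
        if self.state is None:
            raise RuntimeError("call reset() before step()")
        if action not in range(len(ACTIONS)):
            raise ValueError(f"unknown action {action}")
        cpu_util, n_tasks = self.state
        base = (1.0, 0.6, 0.4, 0.8)[action]             # placeholder unit costs
        load = (1.0 + cpu_util) if action == 0 else 1.0  # busy CPU slows local runs
        reward = -base * n_tasks * load                  # reward is the negative cost
        self.steps += 1
        done = self.steps >= self.episode_len
        self.state = self._observe()
        return self.state, reward, done, {"strategy": ACTIONS[action]}


env = ToyOffloadEnv(episode_len=3)
obs = env.reset()
done = False
while not done:
    obs, reward, done, info = env.step(2)  # always offload to the edge
    print(info["strategy"], round(reward, 2), done)
```

Keeping the reward computation inside step, as here, is what allows the same environment to be reused with different RL algorithms without touching the learning code.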

Within the neural network module, the PPO algorithm updates the neural network parameters based on state returns by sampling historical offloading data. This process involves two distinct neural networks: the actor network, which updates the current policy by determining action probabilities, and the critic network, which evaluates the policy’s expected returns. Upon receiving the current environment state as input, the actor network employs the neural network’s parameters, $\theta$, to ascertain the likelihood of undertaking a particular action within state s. Concurrently, the critic network computes the value of the current policy, effectively estimating the expected returns. This dual-structured approach enables the PPO algorithm to balance exploration, which involves experimenting with novel actions, against exploitation, which optimizes known effective actions. Through this iterative interaction, the PPO algorithm learns and refines strategies, progressively moving towards an optimal policy.

The objective function of the policy, denoted as $L(\theta)$ (Equation (30)), integrates with $\hat{A}_t$, the estimator of the advantage function at time step $t$, and $\pi_{\theta}$ is the strategy function.

The PPO algorithm utilizes the principle of importance sampling to update the strategy with the experience generated by the old strategy. This removes the need, common in reinforcement learning, to recollect experience after each policy update, thus making effective use of past experience and speeding up the learning process. The principle of importance sampling requires the probability ratio $r_t(\theta)$ (expressed as Equation (32)) to be as close to 1 as possible, and here, a restriction is placed on the objective function of the online network, see Equation (31), in which the clipping parameter $\epsilon$ is generally taken to be 0.2 [34].
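The restriction on the probability ratio can be illustrated with the clipped surrogate objective from the PPO literature. The sketch below assumes that standard form, in which the ratio between the new and old action probabilities is clipped to the interval from 1 minus the clipping parameter to 1 plus the clipping parameter; whether Equations (31) and (32) match it term by term is an assumption. The log-probabilities and advantages are hypothetical.

```python
import math


def clipped_surrogate(new_logp, old_logp, advantages, eps=0.2):
    """Mean clipped surrogate objective over a batch of samples.

    ratio = exp(new_logp - old_logp) is the importance-sampling weight;
    clipping it to [1 - eps, 1 + eps] removes the incentive to move the
    new policy far from the policy that collected the data.
    """
    total = 0.0
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)
        clipped = max(1.0 - eps, min(ratio, 1.0 + eps))
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Hypothetical batch: one ratio above the clip range, one below it.
new_logp = [math.log(1.5), math.log(0.5)]
old_logp = [0.0, 0.0]
advantages = [1.0, -1.0]
print(round(clipped_surrogate(new_logp, old_logp, advantages), 3))
```

In the first sample the ratio of 1.5 is clipped to 1.2, so the gain from a positive advantage is capped; in the second the ratio of 0.5 is clipped to 0.8, so the pessimistic (lower) value is kept for the negative advantage.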

Optimal policies can be obtained by optimizing the metric with gradient-based algorithms: $\theta_{t+1} = \theta_t + \alpha \nabla_{\theta} L(\theta_t)$, where $\nabla_{\theta} L(\theta_t)$ is the gradient of $L$ with respect to $\theta$, $t$ is the time step, and $\alpha$ is the optimization rate. Summarizing the above, the algorithm training process is presented in Algorithm 1.

Algorithm 1. PPO training procedure.

Initialize the network parameters, the number of updates per training step Rp, and the replay buffer;

for episode = 1, …, 500 do

Obtain the observation s[n] from the environment;

Execute the action a[n];

Evaluate the reward r[n], the policy entropy S(s[n]), and the log-probability of a[n], and store these variables in the experience buffer;

Store the transition {s[n], a[n], r[n], s[n+1]} in the experience buffer;

if mod(n, N) = 0 then

reset the training environment;

end if

n = n + 1;

Calculate the advantage function A[n];

for up = 1, …, Rp do

Calculate the current policy entropy S(s[n]) and the log-probability of a[n];

Update the policy entropy and log-probability in the experience buffer;

end for

Clear the experience buffer;

end for
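Algorithm 1 calls for an advantage estimate but does not state which estimator is used. One common choice in PPO implementations is generalized advantage estimation (GAE); the sketch below shows it purely as an assumed example, with hypothetical rewards, value estimates, and hyperparameters.

```python
def gae_advantages(rewards, values, last_value, gamma=0.99, lam=0.95):
    """Generalized advantage estimation for one trajectory segment.

    rewards[t] and values[t] are the reward and critic estimate at step t;
    last_value is the critic estimate for the state after the final step
    (0.0 if the episode terminated there).
    """
    advantages = [0.0] * len(rewards)
    running = 0.0
    next_value = last_value
    for t in reversed(range(len(rewards))):
        # One-step temporal-difference error at step t.
        delta = rewards[t] + gamma * next_value - values[t]
        running = delta + gamma * lam * running
        advantages[t] = running
        next_value = values[t]
    return advantages


# With gamma = lam = 1 and a zero critic, GAE reduces to the reward-to-go.
print(gae_advantages([1.0, 1.0], [0.0, 0.0], last_value=0.0, gamma=1.0, lam=1.0))
# -> [2.0, 1.0]
```

Setting lam to 0 gives the one-step temporal-difference error, which has low variance but relies heavily on the critic, while values near 1 approach the Monte Carlo return minus the baseline.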

6. Conclusions

In this paper, we proposed a PPO-based D2D-assisted computation offloading and resource allocation scheme for multi-access edge computing environments. The proposed approach leverages the PPO actor–critic method in reinforcement learning to optimize offloading decisions based on the task attributes and device performance, resulting in reduced latency and lower energy consumption. Additionally, the collaborative offloading strategy between the D2D and MEC servers greatly improved the utilization of the edge computational resources. The implementation of this scheme marks a significant advancement in addressing the complex challenges inherent in MEC networks, which is particularly relevant in the evolving 5G landscape. However, we recognize two limitations of the current study: the channel parameters of the communication model vary over time under the influence of the environment, and sensitive user information and data must be protected during D2D communication. In future work, we will use real-time monitoring and sensing techniques to accurately measure the dynamic characteristics of the channel parameters, and we will incorporate privacy-preserving aspects into the common offloading process within D2D-assisted MEC networks.