Abstract

The rapid advancement of networking, computing, sensing, and control systems has introduced a wide range of cyber threats, including threats from new devices deployed as new application scenarios are developed. With recent advancements in automobiles, medical devices, smart industrial systems, and other technologies, system failures resulting from external attacks or internal process malfunctions are increasingly common. Restoring such a system to a stable state requires autonomous intervention through a self-healing process that maintains service quality. This paper, therefore, aims to analyse the state of the art and identify where self-healing using machine learning can be applied to cyber–physical systems to enhance security and prevent failures within the system. The paper describes three key components of self-healing functionality in computer systems: anomaly detection, fault alert, and fault auto-remediation. These components matter because self-healing functionality cannot be practical unless all three are addressed. Understanding the self-healing theories that guide the implementation of these functionalities, and their real-life implications, is crucial. There are strong indications that self-healing functionality in cyber–physical systems is an emerging area of research that holds great promise for the future of computing technology. It has the potential to provide seamless self-organising and self-restoration functionality to cyber–physical systems, increasing system security and improving user experience. For instance, a functional self-healing system implemented on a power grid would react autonomously when a threat or fault occurs, restoring power to communities and preserving critical services after outages or defects without human intervention.
This paper presents the existing vulnerabilities, threats, and challenges and critically analyses the current self-healing theories and methods that use machine learning for cyber–physical systems.

1. Introduction

This narrative review paper presents a descriptive review of manuscripts on cyber–physical self-healing systems using machine learning. Cyber–physical systems (CPSs) are integrated systems that bridge the physical and cyber domains, enabling the seamless integration of physical processes and computing systems [1]. Self-healing in CPSs refers to the ability of these systems to automatically detect and respond to faults or failures without human intervention, enhancing their resilience and reliability [2]. While self-healing capabilities can improve the performance and robustness of CPSs, self-healing CPSs also face several vulnerabilities, threats, and challenges that need to be addressed [3]. These include vulnerabilities in hardware and software components that may be susceptible to cyber-attacks and other threats, compromising the self-healing process [4]. The lack of standardisation in self-healing mechanisms and technologies creates system interoperability issues, leading to vulnerabilities and integration challenges [2]. The complexity of CPSs, with their multiple interconnected components, makes faults difficult to identify and diagnose, which in turn makes effective self-healing mechanisms challenging to implement [5]. Human error during system design, implementation, and maintenance can create vulnerabilities and compromise the self-healing capabilities of the system [2]. The lack of visibility into CPS self-healing systems can also hinder fault identification and compromise system operations [6]. CPS self-healing systems are also vulnerable to malicious attacks, including denial-of-service attacks, malware, and hacking, which can compromise the system’s integrity and availability [7]. Because CPS self-healing systems are vital for critical infrastructure, such as transportation systems and power grids, failures or vulnerabilities in these systems can have severe safety implications [8].
Addressing these vulnerabilities, threats, and challenges is essential to ensure the security, reliability, and safety of critical infrastructure supported by self-healing capabilities in CPSs [2].

The increased adoption of digital systems in human socioeconomic affairs, including business, manufacturing, healthcare provision, and government services, comes with the attendant risk of increased threats to computer systems and networks. These threats can take the form of cyber-attacks at the individual or organisational level. For example, attackers targeted people isolated at home during the COVID-19 pandemic lockdowns, as well as schools, businesses, hospitals, manufacturing plants, and social infrastructure. With the widespread adoption of digital systems, communities have become more susceptible to malicious cyber-attacks; hence, research on computer system self-healing has become increasingly important in recent years. This review examines existing approaches and methodologies to address the need for robust self-healing and self-configuring systems that secure cyber–physical systems against security threats. The selection of methods for this review paper was based on a systematic literature search of major scientific databases such as IEEE Xplore, ACM Digital Library, and Google Scholar. Keywords such as “computer systems self-healing”, “cyber–physical systems security”, and “self-configuring systems” were used to identify relevant articles published in peer-reviewed journals and conference proceedings. A total of 40 articles were included in this review, covering a wide range of topics related to self-healing and self-configuring systems in the context of cyber–physical systems. The selected articles provide insights into the current state of the art, open challenges, and future directions in this field. By examining these approaches, this review aims to contribute to the development of robust self-healing and self-configuring systems for securing current and future cyber–physical systems.
Safety within cyber–physical systems cannot be overemphasised: it is not only an economic imperative but, in some settings such as healthcare, can become a matter of life or death. There is, therefore, broad scope for finding solutions that can aid the development of robust self-healing or self-configuring systems capable of securing current and future cyber–physical systems against security threats.

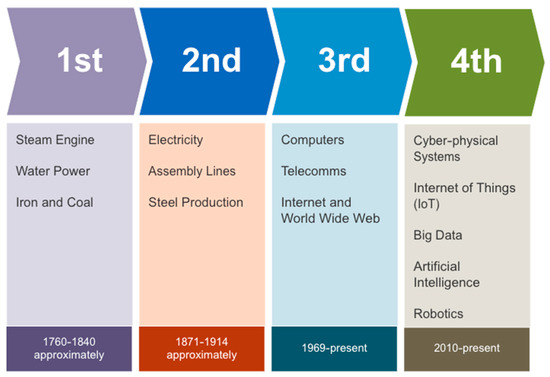

Cyber–physical systems are among the Industry 4.0 devices that use the power of the Internet to convert existing Industry 3.0 devices into smart industrial devices. These include cyber–physical systems deployed in smart manufacturing, smart grids, smart cities, and smart automobiles. Figure 1 highlights the cyber–physical system as part of Industry 4.0; it focuses on the physical components of Industry 4.0, including cyber–physical systems and IoT, while underscoring the self-healing capability of CPSs in modern manufacturing systems that use digital technologies such as cloud computing. An example of the transition from manual intervention to automated, self-healing system protection is noted in [9]. The study reports that operational costs associated with network failures account for approximately 23% to 26% of mobile network revenue and are predicted to increase exponentially as providers migrate from 4G to more robust 5G networks. To control these expenses, mobile network providers migrating to 5G are shifting towards automating system protection through self-healing. Self-healing systems are also being deployed in electricity distribution plants worldwide, although most deployments are burdened with latency, bandwidth, and scalability problems, as highlighted in [10]. That work presents a standardised architecture for distributed power control that uses self-healing functionality to resolve system faults. The proposed system increases reliability during normal operations and resilience during threat events, and the results of the self-healing experiment in [10] are currently undergoing field implementation by Duke Energy.
Deploying machine learning to build self-healing functionality into the power grid is very important as populations grow because, according to [11], frequent power outages impose a considerable cost on the economy and adversely affect people’s quality of life. The authors of [11] proposed using a fault-solving library coupled with a machine-learning algorithm to create self-healing functionality in computer systems.

Figure 1.

Chronological progression of industrial revolutions: from the 1st to the 4th.

This paper briefly discusses that proposal, particularly its twin model approach. Self-healing functionality is also vital for providing a high quality of service (QoS) in cloud computing. QoS is increasingly critical to the services offered by cloud computing vendors, such as software as a service (SaaS), platform as a service (PaaS), and infrastructure as a service (IaaS), and self-healing functions give the network environment the ability to recover from failures that may occur within the software, network, or hardware parts of the system, as described in [12]. The authors of [12] developed a technique called RADAR for the self-configuration and self-healing of cloud-based resources. In the smart grid, the principal issue affecting optimal network performance is multifaceted failure across multiple areas of the network, such as network overload, system intrusion, and system misconfiguration. These failures can cause severe setbacks to the economy and the quality of human life, which can be mitigated by applying self-healing functionality to the system, as demonstrated in recent research studies. One of the many proposed solutions uses a fault-solving strategy library on a twin model system together with a machine-learning (ML) algorithm to implement a self-healing mechanism in a smart grid. The ML algorithm is trained on the dataset derived from the fault-solving library and is then deployed to detect anomalies within the cyber–physical system. Anomaly detection is the first step towards implementing self-healing: the self-healing functionality is triggered only after the fault classification process has completed and a viable mitigation solution is found within the fault-solving library.
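The detect, classify, and remediate sequence described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method of [1] or [11]: the fault-solving library is modelled as a hypothetical dictionary `FAULT_LIBRARY`, the training samples and feature names are invented, and a nearest-centroid classifier stands in for the unspecified ML algorithm.

```python
# Sketch only: FAULT_LIBRARY, TRAINING, and the features are hypothetical;
# a nearest-centroid classifier stands in for the ML algorithm.
from math import dist
from statistics import fmean

# Hypothetical fault-solving library: fault label -> remediation action.
FAULT_LIBRARY = {
    "overload": "shed load and reroute power",
    "intrusion": "isolate the compromised node",
    "misconfiguration": "restore last known-good configuration",
}

# Hypothetical labelled samples derived from the library.
# Features: (voltage deviation, traffic rate, configuration drift).
TRAINING = {
    "normal": [(0.0, 1.0, 0.0), (0.1, 1.1, 0.0)],
    "overload": [(0.9, 1.0, 0.0), (1.0, 1.2, 0.1)],
    "intrusion": [(0.1, 5.0, 0.0), (0.2, 6.0, 0.1)],
    "misconfiguration": [(0.1, 1.0, 1.0), (0.0, 1.1, 0.9)],
}


def centroid(samples):
    """Component-wise mean of a list of feature vectors."""
    return tuple(fmean(column) for column in zip(*samples))


# "Training": one centroid per class.
CENTROIDS = {label: centroid(samples) for label, samples in TRAINING.items()}


def classify(reading):
    """Fault classification: label of the nearest class centroid."""
    return min(CENTROIDS, key=lambda label: dist(reading, CENTROIDS[label]))


def self_heal(reading, anomaly_threshold=0.5):
    """Return a remediation action, or None when no action is taken."""
    # Step 1: anomaly detection against the "normal" profile.
    if dist(reading, CENTROIDS["normal"]) <= anomaly_threshold:
        return None
    # Step 2: classify the detected anomaly.
    label = classify(reading)
    # Step 3: self-heal only if the library holds a viable solution.
    return FAULT_LIBRARY.get(label)


print(self_heal((0.05, 1.05, 0.0)))  # None
print(self_heal((0.95, 1.1, 0.05)))  # shed load and reroute power
```

Because the library lookup is the last step, an anomaly whose class has no entry in the library produces no action, mirroring the requirement that a viable mitigation solution must exist before self-healing is triggered.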

This paper adopts a narrative research method within a qualitative methodology, using existing literature to highlight the theories, machine-learning algorithms, and network architectures for implementing self-healing functionality that can be deployed to protect the security of cyber–physical systems. The paper surveys existing literature and shows the research areas where similar systems have been implemented and where gaps still exist, to aid future studies. The goals and objectives of this paper comprise the following four points, which aim to advance knowledge in the field of study:

- This paper aims to enhance knowledge by highlighting current trends in the area of study;

- The main objective of this paper is to identify the latest machine-learning tools, methods, and algorithms for integrating self-healing functionality into cyber–physical systems;

- The self-healing capability of cyber–physical systems will be evaluated against state-of-the-art techniques, and the machine-learning tools and methods used to implement self-healing functions will be explored;

- The existing literature will be critically reviewed to identify current tools, methods, algorithms, classification models, frameworks, networks, and architectures currently deployed for a self-healing approach.

The implications of the existing approaches for future research are discussed, emphasising how the literature review and findings can contribute to advancing future experiments. This paper’s structure includes sections on self-healing theories, self-healing for cyber–physical systems, self-healing methods, an analytical comparison of promising approaches, and a critical discussion of the presented theories and techniques. The taxonomy of the literature is summarised in Table 1.

Table 1.

Taxonomy of the literature.

2. Self-Healing Theories

Self-healing theories are areas of research that seek to formulate the fundamental principles to be considered when implementing self-healing functionality and to explain the relationship between self-healing and other areas of science. The self-healing cyber–physical system section describes what it means to implement self-healing functionality in a cyber–physical system, and the self-healing methods section details the models, frameworks, and network architectures that underpin that implementation.

Hence, different self-healing theories are presented and discussed in the following subsections.

2.1. Negative and Positive Selection

Negative and positive selection are two processes in the immune system that ensure only healthy cells are present in the body. A self-healing system is a system that can repair itself when damaged or infected, and the immune system serves as the biological model for such a system: it uses both negative and positive selection to ensure that only healthy cells remain. If a cell is found to be harmful, the immune system eliminates it and then begins to repair and regenerate healthy cells. From the biological viewpoint, negative selection is the process in which the immune system removes cells that recognise self-antigens, ensuring that immune cells do not attack healthy cells. Likewise, positive selection is the process in which the immune system selects cells that recognise foreign antigens, priming the immune system to identify and eliminate harmful cells or pathogens. The CPS self-healing theory of negative and positive selection replicates this biological immune response in computer science. An example is using a genetic algorithm to detect system intrusions and then deploying the algorithm’s self-healing functionality to remediate the threat.

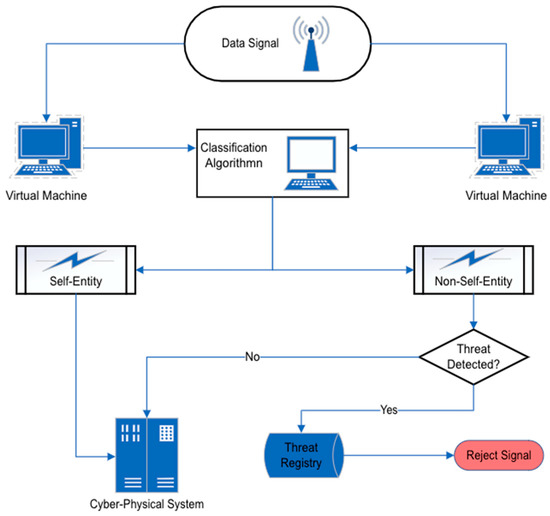

Characterising anomalies is essential for ascertaining where potential threats or faults are located within a system, and the theory relied upon to achieve this is negative and positive selection. Identifying threats before deploying self-healing functionality is vital for appropriate remediation. Negative selection for anomaly detection is called “non-self” detection, and positive selection for anomaly detection is called “self” detection [13]. The central concept of negative selection, as shown in Figure 2, is to construct a set of “non-self” entities that do not pass a similarity test with any pre-existing “self” entity. If a new entity is detected that matches the “non-self” entities, it is rejected as foreign.

Figure 2.

Negative and positive selection in self-healing systems.

Similarly, the positive selection principle removes one step from the algorithm: instead of matching a new entity against a constructed “non-self” set, it matches the entity against the pre-existing “self” set and rejects the entity if no match is found. D’haeseleer, in [13], posits that negative selection has the properties of a successful immune system and requires no prior knowledge of intrusions, because it is, at its core, a general anomaly detection method. Negative selection is self-learning because its set of detectors evolves naturally: when obsolete detectors die, new detectors are generated from current event traffic. Dasgupta, cited in [13], argued that negative and positive selection produce comparable results despite their fundamentally different approaches. Both approaches raise an alarm when an unknown entity infiltrates the system.
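The difference between the two selection principles can be shown with a small artificial immune system sketch. It assumes, for illustration only, that entities are 6-bit strings and that two strings “match” when their Hamming distance is at most 1; the function names and the self set are hypothetical rather than taken from [13]. Negative selection first generates detectors that match no self entity, whereas positive selection skips that step and compares directly against the self set.

```python
# Sketch only: bit-string entities, Hamming-distance matching, and the
# self set are illustrative assumptions, not details from [13].
import random

RADIUS = 1  # hypothetical matching threshold (max differing bits)


def matches(a, b, radius=RADIUS):
    """Two equal-length bit strings match if they differ in <= radius positions."""
    return sum(x != y for x, y in zip(a, b)) <= radius


def train_negative_selection(self_set, n_detectors, length, seed=0, max_attempts=10_000):
    """Generate random candidates and keep only those matching NO self entity."""
    rng = random.Random(seed)
    detectors = set()
    for _ in range(max_attempts):
        if len(detectors) >= n_detectors:
            break
        candidate = "".join(rng.choice("01") for _ in range(length))
        if not any(matches(candidate, s) for s in self_set):
            detectors.add(candidate)  # survives censoring: a "non-self" detector
    return detectors


def negative_selection_is_foreign(entity, detectors):
    """Negative selection: reject the entity if it matches any non-self detector."""
    return any(matches(entity, d) for d in detectors)


def positive_selection_is_foreign(entity, self_set):
    """Positive selection: reject the entity if it matches no self entity."""
    return not any(matches(entity, s) for s in self_set)


SELF = {"000000", "000001", "000011"}  # hypothetical "self" profile
detectors = train_negative_selection(SELF, n_detectors=64, length=6)
print(negative_selection_is_foreign("111111", detectors))  # True
print(positive_selection_is_foreign("111111", SELF))       # True
print(positive_selection_is_foreign("000001", SELF))       # False
```

With such a small string space the random generator ends up covering nearly every valid detector; in realistic settings the detector set is only a sample, so some non-self regions may remain uncovered.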

2.2. Danger Theory

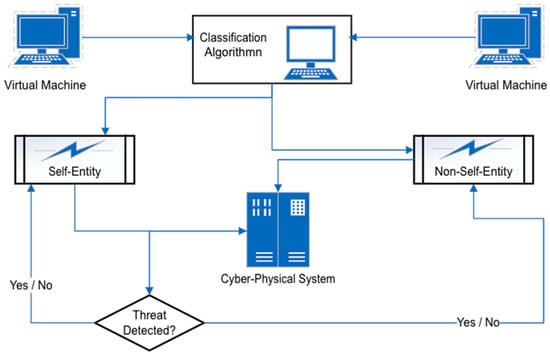

Danger theory is the approach in which immune responses are triggered by danger signals rather than simply by the presence of “self” or “non-self” objects. Entities identified through negative or positive selection are tolerated until signals indicate that they pose a threat. For example, as shown in Figure 3, within the immune system on which this theory is modelled, if harmful activity is detected, the immune response is triggered, attacking either all foreign entities or only local entities, depending on the severity of the danger signal. As noted in [13], Burges et al. (1998) were among the first to propose using biologically inspired danger theory to detect and react to harmful activity in computer systems. Danger theory establishes the link between artificial immune systems and intrusion detection systems. Mazinger, in [5], argued that danger theory rests on the concept that the immune system does not strictly differentiate between self and non-self but between events that have the potential to cause damage and events that do not. Once the system understands itself, it can extend its pattern recognition capabilities and respond to dangerous circumstances.

Figure 3.

Harnessing the power of danger theory to optimise self-healing systems.

Ref. [13] proposed an intrusion detection self-healing system based on danger theory, in which an anomaly score is calculated for every event in the system. Each event has three computed values: event type (ET), anomaly value (AV), and danger value (DV). The ET is based on predefined types or automated event clustering. The AV quantifies how abnormal the event is, based on “non-self” computations. The DV increases when any strange or potentially dangerous signal is associated with the event. These three values are combined to calculate the threat total value (TV), which is the perceived potential of a particular event to cause damage or to form part of a sequence of events that can cause system failure. Three main system flows originate from dangerous events [13]:

- New event analysis: When a new event is detected, it should be added to the timeline and checked against known dangerous patterns;

- Danger signal processing: When a danger signal is detected, the system must decide whether any pattern can be related to the danger signal and then act accordingly;

- Warning signal processing: When a warning arrives from another host carrying information about a danger signal and the related dangerous sequence of events, the host’s timeline should be checked to verify that it does not contain a similar dangerous sequence.
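The event scoring and the three flows above can be sketched as follows. The text does not specify how ET, AV, and DV are combined, so this sketch assumes, hypothetically, that TV is a per-type weight multiplied by AV + DV; the event types, weights, threshold, dangerous pattern, and the three-event window used when relating a danger signal are all illustrative rather than taken from [13].

```python
# Sketch only: weights, threshold, pattern, and the TV formula are hypothetical.
from dataclasses import dataclass, field

TYPE_WEIGHT = {"login_fail": 1.0, "priv_escalation": 2.0, "config_change": 1.5}
DANGEROUS_PATTERNS = [("login_fail", "priv_escalation", "config_change")]
TV_THRESHOLD = 3.0


@dataclass
class Event:
    et: str          # event type (ET)
    av: float        # anomaly value (AV) from "non-self" computations
    dv: float = 0.0  # danger value (DV), raised by danger signals

    def total_value(self) -> float:
        """Hypothetical TV: type weight multiplied by (AV + DV)."""
        return TYPE_WEIGHT.get(self.et, 1.0) * (self.av + self.dv)


def contains_sequence(timeline, pattern):
    """True if the pattern's event types occur in order (gaps allowed)."""
    remaining = iter(event.et for event in timeline)
    # `in` on an iterator consumes it, so each step must appear after the previous one.
    return all(step in remaining for step in pattern)


@dataclass
class Host:
    timeline: list = field(default_factory=list)

    def new_event(self, event):
        """Flow 1: add the event to the timeline, then check TV and dangerous patterns."""
        self.timeline.append(event)
        return event.total_value() >= TV_THRESHOLD or any(
            contains_sequence(self.timeline, p) for p in DANGEROUS_PATTERNS
        )

    def danger_signal(self, boost=1.0, window=3):
        """Flow 2: relate a danger signal to the last `window` events by raising their DV."""
        for event in self.timeline[-window:]:
            event.dv += boost
        return [e for e in self.timeline if e.total_value() >= TV_THRESHOLD]

    def warning(self, sequence):
        """Flow 3: check whether a peer's dangerous sequence also occurs locally."""
        return contains_sequence(self.timeline, sequence)
```

Flow 2 here simply boosts the most recent events; a fuller implementation would correlate the danger signal with specific patterns before acting, as the text describes.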

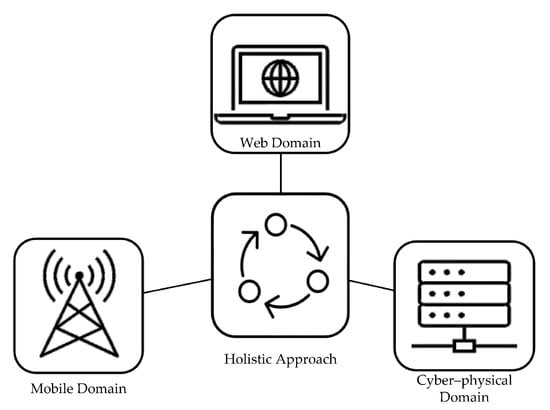

2.3. Holistic Self-Healing Theory

The holistic self-healing theory applies the principle of holism to reinforce the resilience of complex systems. It recognises that improving the resilience of one part of a system can inadvertently introduce fragility in another. In mobile network management, for instance, Ref. [10] argued that this approach, as depicted in Figure 4, means that different management domains and levels are not considered in isolation. Although the management domains may operate on different time scales and manage different objects, each domain needs to be aware of the threat events that occur in every segment of the whole so that it can react to the danger and trigger appropriate remedial action. Effective communication between the subdomains of the system allows danger theory to be applied to protect the overall design as a single whole.

Figure 4.

A holistic approach to maintenance and repair of the self-healing system.
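The cross-domain awareness required by the holistic approach can be illustrated with a shared threat channel. In this sketch, which assumes a simple publish/subscribe design, each management domain registers a handler for the threat types it cares about, so a threat observed in one segment reaches every interested domain regardless of its time scale; the `ThreatBus` class, the `cell_outage` threat type, and the domain actions are hypothetical and not taken from [10].

```python
# Sketch only: ThreatBus, threat types, and domain actions are hypothetical.
from collections import defaultdict


class ThreatBus:
    """Minimal publish/subscribe channel shared by all management domains."""

    def __init__(self):
        self.subscribers = defaultdict(list)

    def subscribe(self, threat_type, handler):
        """Register a domain's handler for one threat type."""
        self.subscribers[threat_type].append(handler)

    def publish(self, threat_type, source):
        """Notify every subscribed domain, not only the one that saw the event."""
        return [handler(threat_type, source) for handler in self.subscribers[threat_type]]


bus = ThreatBus()
# Two domains operating on different time scales react to the same threat.
bus.subscribe("cell_outage", lambda t, s: f"radio: reassign neighbours after {t} in {s}")
bus.subscribe("cell_outage", lambda t, s: f"transport: reroute backhaul around {s}")
actions = bus.publish("cell_outage", "cell-17")
print(actions)
```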

3. Self-Healing for Cyber–Physical Systems

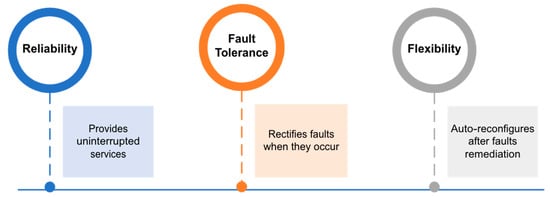

Alhomoud, in [13], described a self-healing system as a resilient system that can carry on its normal functions even when under attack. A self-healing system is equipped with measures to identify and prevent attacks arising from internal or external events and to recover autonomously. A system with self-healing functionality monitors its environment by constructing a pattern from the sequence of events and using that pattern to detect anomalies, after which remedial functions that correct or eliminate the anomalous events can be deployed. Only when this autonomous remediation has been successfully achieved can the system be described as having demonstrated self-healing functionality. The main characteristic of a self-healing or self-organising system is the ability to react to problems through self-adaptive principles, as shown in [19] with a platform termed PREMiuM. The system must be able to distinguish attacks from everyday activities and take remedial actions to mitigate their impact. The PREMiuM platform proposed in [19] is designed to realise self-healing functionality in manufacturing systems, focusing on increasing efficiency during manufacturing processes. Its top-level architecture consists of several mutually independent services, i.e., interactive, self-healing, proactive, communication, modelling, and security services, which are deployed to achieve predictive maintenance of manufacturing systems. The self-healing service detects or predicts failures in the system in support of the self-healing and self-adaptive functionality. The intrusion detection system (IDS) proposed in [13] is based on anomaly detection: the IDS monitors the system’s environment, constructs a pattern of events, and then uses the pattern to detect anomalies (sometimes called outliers) in the system.
When the IDS detects outliers, it triggers the system’s defence mechanisms and then notifies other parts of the system and/or system administrators of the detected anomalies. In a similar self-healing approach, Ref. [1] proposed using machine-learning (ML) algorithms to implement an IDS in smart grid construction by integrating traditional power grid strategy with the computer network. It then established a distribution fault-solving strategy library, which made the grid self-adaptive. The method abstracted the power grid into an integrated domain with the cyber–physical system through data sharing, with the grid state at each node corresponding to a twin matrix, so that the grid is fully modelled and digitised. In the event of failure, the grid therefore uses the fault-solving strategy library to correct itself through the functions of the distribution network. The critical characteristics of self-healing are reliability, fault tolerance, and flexibility. These characteristics are reflected in the principles of self-adaptive systems research in [27], in which self-healing is one of the fundamental properties alongside self-protection, self-configuration, and self-optimisation.

RADAR, the self-configuring and self-healing resource management technique from [12], was evaluated using the CloudSim toolkit, and the results are promising. Compared with state-of-the-art resource management techniques, the fault detection rate improved by 16.88%, resource utilisation increased by 8.79%, throughput increased by 14.50%, availability increased by 5.96%, and reliability increased by 11.23%, while resource contention decreased by 6.64%, SLA breaches of QoS decreased by 14.50%, energy consumption decreased by 9.73%, waiting time decreased by 19.75%, turnaround time decreased by 17.45%, and execution time decreased by 5.83%. The critical contributions of RADAR are listed in [12]:

- Provides self-configuration of resources by reinstalling newer versions of obsolete software dependencies, and manages errors through self-healing;

- Automatically schedules resource provisioning and optimises QoS without the need for human intervention;

- Provides algorithms for a four-phase approach of monitoring, analysis, planning, and execution of QoS values. These four phases are triggered by corresponding alerts to help preserve the system’s efficiency;

- Reduces the breach of service level agreement (SLA) and increases the QoS expectation of the user by improving the availability and reliability of services.
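The four alert-driven phases listed above can be sketched as a chain of small functions in which the analysis phase raises alerts that drive planning and execution. The SLA targets, metric names, and corrective actions below are hypothetical placeholders; this is not RADAR’s implementation from [12], only an illustration of how the phases fit together.

```python
# Sketch only: SLA targets, metrics, and actions are hypothetical placeholders.
SLA = {"availability": 0.99, "response_ms": 200.0}
ACTIONS = {
    "availability": "restart failed instance",
    "response_ms": "provision extra VM",
}


def monitor(sample):
    """Monitor: collect current QoS values (passed in directly here)."""
    return dict(sample)


def analyse(qos):
    """Analyse: raise an alert for each metric that breaches its SLA target."""
    alerts = []
    if qos["availability"] < SLA["availability"]:
        alerts.append("availability")
    if qos["response_ms"] > SLA["response_ms"]:
        alerts.append("response_ms")
    return alerts


def plan(alerts):
    """Plan: map each alert to a corrective action."""
    return [ACTIONS[alert] for alert in alerts]


def execute(actions, log):
    """Execute: apply the actions (recorded in a log in this sketch)."""
    log.extend(actions)
    return log


def mape_step(sample, log):
    """One pass of the monitor-analyse-plan-execute loop."""
    return execute(plan(analyse(monitor(sample))), log)


log = []
mape_step({"availability": 0.995, "response_ms": 120.0}, log)  # no alerts
mape_step({"availability": 0.97, "response_ms": 350.0}, log)
print(log)  # ['restart failed instance', 'provision extra VM']
```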

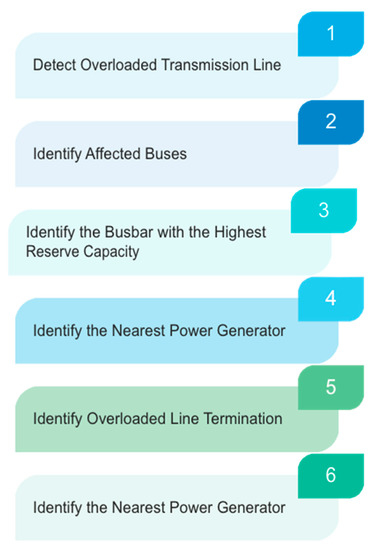

A prominent issue in current research is enabling systems to intelligently identify “zero-day”, or never-before-seen, anomaly events. The proposal presented in [33] uses a knowledge-based algorithm to construct an intrusion detection system (IDS) that effectively prevents fault line intrusion in the power grid. Experiments were conducted on a testbed of a six-bus mesh network, modelled to identify fault events within the system and concurrently perform mitigating actions using a novel protocol proposed in [33], referred to as autonomous isolation strategies. These strategies reroute power flows within the power grid once a threat intrusion is detected. Simulations were conducted using PowerWorld Simulator, MATLAB, and SimAuto (PowerWorld’s automation server, through which external programs such as MATLAB access the simulator). As reported in [33], MATLAB extracts the network parameters, after which self-healing strategies are triggered by rerouting network processes to other distribution areas, stabilising the system. The self-healing process initiated by the knowledge-based algorithm continues until all overloaded lines on the grid are cleared and all effects of the threat are eradicated. Ref. [33] lists the following supervised-learning guidelines for the knowledge-based algorithm as applied to the electric power network:

- Detection of overloaded transmission lines in the power network;

- Identification of buses that have overloaded transmission lines connected to them;

- Identification of the busbar that has the highest reserve capacity and that can then serve as a viable option for a power restoration strategy;

- Identification of the nearest distribution generator to the overloaded transmission line;

- Identification of the termination point of the overloaded lines;

- Establishment of line connection using the references of the reserve busbar index.
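The six guidelines above can be traced on a toy network. This sketch assumes a hypothetical six-bus grid with invented coordinates, reserve capacities, line flows, and ratings, and takes “nearest generator” to mean Euclidean distance to the overloaded line’s midpoint and “termination point” to mean the line’s second bus; it illustrates the steps and is not the knowledge-based algorithm of [33].

```python
# Sketch only: the grid data and the distance rule are hypothetical.
from math import dist

BUSES = {  # bus index -> ((x, y) position, reserve capacity in MW)
    1: ((0, 0), 5.0), 2: ((1, 0), 0.0), 3: ((2, 0), 20.0),
    4: ((0, 1), 10.0), 5: ((1, 1), 2.0), 6: ((2, 1), 8.0),
}
LINES = {  # (from bus, to bus) -> (flow in MW, rating in MW)
    (1, 2): (120.0, 100.0), (2, 5): (40.0, 80.0), (4, 5): (90.0, 60.0),
}
GENERATORS = {"DG-A": (2, 2), "DG-B": (0, 2)}  # distributed generator positions


def midpoint(start, end):
    """Geometric midpoint of a line between two buses."""
    (x1, y1), (x2, y2) = BUSES[start][0], BUSES[end][0]
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def isolation_plan():
    plans = []
    # 1. Detect overloaded transmission lines (flow above rating).
    overloaded = [line for line, (flow, rating) in LINES.items() if flow > rating]
    for start, end in overloaded:
        # 2. Buses connected to the overloaded line.
        affected = {start, end}
        # 3. Reserve busbar: highest reserve capacity among the unaffected buses.
        reserve = max((b for b in BUSES if b not in affected), key=lambda b: BUSES[b][1])
        # 4. Nearest distributed generator to the overloaded line.
        nearest_dg = min(GENERATORS, key=lambda g: dist(GENERATORS[g], midpoint(start, end)))
        # 5. Termination point of the overloaded line (its second bus here).
        terminal = end
        # 6. New line connection referenced by the reserve busbar index.
        plans.append({"line": (start, end), "reserve_bus": reserve,
                      "generator": nearest_dg, "new_link": (terminal, reserve)})
    return plans


print([p["new_link"] for p in isolation_plan()])  # [(2, 3), (5, 3)]
```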

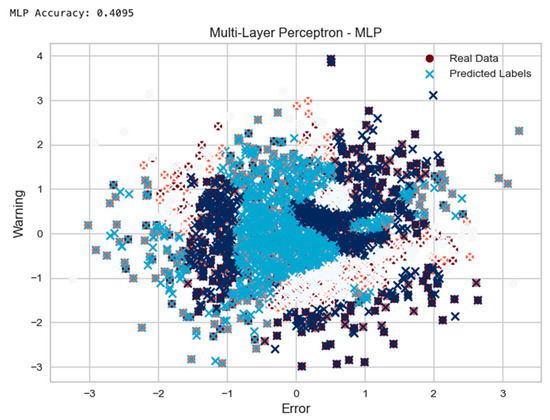

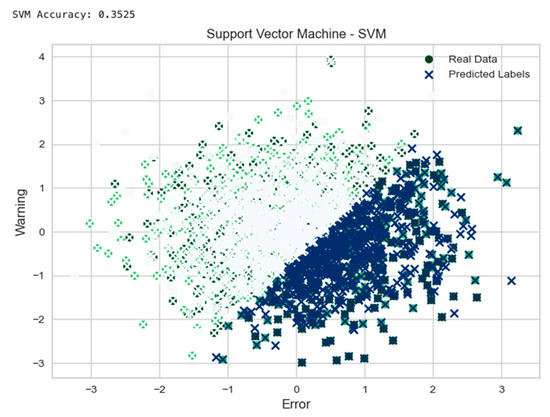

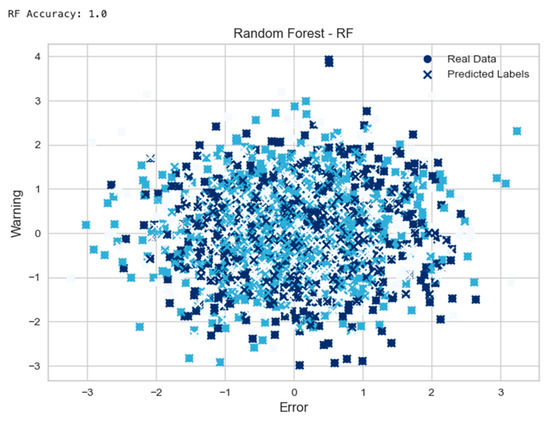

Other anomaly detection systems for network diagnosis have been proposed in previous studies, such as ARCD [34], which uses data logs collected from large-scale monitoring systems to identify the root causes of problems in a cellular network. Experiments in [34] showed that ARCD achieved rates above 90% for both anomaly detection accuracy and detection rate. The drive towards automatic diagnosis of failures in mobile cellular networks is propelled by the industry’s need for efficient means of identifying problems within the network. Notably, Ref. [35] observed that mobile network operators spend a quarter of their revenues on network maintenance, so a move towards maintenance automation will drive down costs. Ref. [35] proposed a solution that relies on a random forest (RF), a convolutional neural network (CNN), and a neuromorphic deep-learning module to perform fault diagnostics. The proposal uses reference signal received power (RSRP) maps of faults, represented as images, to provide an AI-based fault diagnostic solution. Such fault diagnostic solutions noticeably reduce costs and improve the end user’s overall quality of service (QoS). Experiments in [35] show that the proposed system identified all the faults in the image datasets.
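The two figures reported for ARCD, accuracy and detection rate, are commonly defined from a binary confusion matrix. The sketch below uses those standard definitions, with detection rate taken as recall on the anomaly class; [34] may compute its rates differently, and the sample labels are invented.

```python
def detection_metrics(y_true, y_pred):
    """Return (accuracy, detection rate) for binary labels: 1 = anomaly, 0 = normal.

    Standard definitions are assumed; [34] may compute its rates differently.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # anomalies caught
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # normal events passed
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # anomalies missed
    accuracy = (tp + tn) / len(pairs)
    detection_rate = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, detection_rate


# Invented labels: 4 anomalies (3 detected), 6 normal events (1 false alarm).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
print(detection_metrics(y_true, y_pred))  # (0.8, 0.75)
```

Reporting both numbers matters because a detector can reach high accuracy on mostly normal traffic while still missing a large share of the rare anomalies.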

Similarly, Ref. [3] proposed a system that is resilient to intrusions, built using Python-based libraries, software-defined networking, and virtual machine composition. Called Shar-Net, the system was tested in a smart grid environment, and the experimental results demonstrate a viable IDS that can prevent cyber-attacks and, at the same time, mitigate their effects through automatic reconfiguration of the system’s network. The principal components of the proposed system are an intrusion detection system (IDS), an intrusion mitigation system (IMS), and an alert management system (AMS). Zolli and Healy, in [10], describe the resilience of a system as its ability to recover from failure or attack. This differs from robustness: a robust system is built to withstand anticipated threats. Although the two terms describing the core functionality of a self-healing-capable system are sometimes used interchangeably, it is essential to note the difference between them. A robust system is designed around threats that have been seen before, which allows its designers to build countermeasures proactively. A resilient system, on the other hand, reacts retroactively to unforeseen or “zero-day” attacks and applies countermeasures accordingly. The authors of [10] listed the following principles of a resilient system:

- Monitoring and adaptation: It must be responsive to unforeseen attacks;

- Redundancy, decoupling, and modularity: It must have a decentralised structure to prevent the threats from spreading to the other constituent parts of the network or the system’s host;

- Focusing: The system must be able to focus resources where they are most needed and avoid overusing them, since overuse would be counterproductive to shoring up the system’s resilience;

- Diverse at the edge and simple at the core: The system should use shared protocols through simply defined processes, but it should also retain an element of diversity to resist widespread attacks.

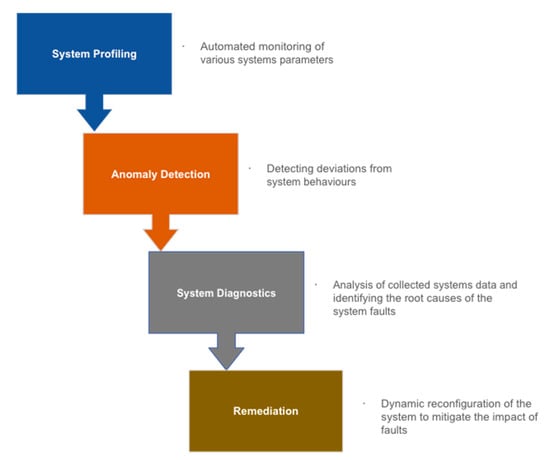

Self-healing functions can be implemented in four stages (Figure 5): profiling the system’s normal state, detecting deviations from that normal state, diagnosing the system’s failures, and taking corrective actions to mitigate their impact. The choice of profiling algorithm for a self-healing system depends on the scope of the design requirements and on further considerations such as:

Figure 5.

Four stages of a self-healing system: implementation and functions.

- The system’s architecture;

- The available datasets;

- Profile scope;

- Profile features;

- Feature distribution or subset;

- Understandability.
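The four stages can be sketched end to end on a pair of KPIs. The assumptions here are illustrative only: the normal profile is the mean and population standard deviation of each KPI, deviation is measured as a z-score against a hypothetical limit of 3, the diagnosis is simply the most deviant KPI, and corrective actions come from a hypothetical lookup table. Real profiling choices depend on the considerations listed above.

```python
# Sketch only: KPI names, Z_LIMIT, and CORRECTIONS are hypothetical.
from statistics import fmean, pstdev

Z_LIMIT = 3.0
CORRECTIONS = {"drop_rate": "reset radio unit", "latency_ms": "rebalance traffic"}


def profile(history):
    """Stage 1: profile the normal state as (mean, std) per KPI."""
    return {kpi: (fmean(values), pstdev(values)) for kpi, values in history.items()}


def detect(prof, sample):
    """Stage 2: z-score of each KPI; constant KPIs (std 0) are treated as non-deviant."""
    return {kpi: (abs(sample[kpi] - mean) / std if std else 0.0)
            for kpi, (mean, std) in prof.items()}


def diagnose(scores):
    """Stage 3: most deviant KPI, or None if every KPI is within the limit."""
    kpi = max(scores, key=scores.get)
    return kpi if scores[kpi] > Z_LIMIT else None


def correct(kpi):
    """Stage 4: corrective action for the diagnosed KPI (None if no fault)."""
    return CORRECTIONS.get(kpi) if kpi else None


def heal(history, sample):
    return correct(diagnose(detect(profile(history), sample)))


history = {"drop_rate": [0.01, 0.02, 0.01, 0.02], "latency_ms": [20, 22, 21, 19]}
print(heal(history, {"drop_rate": 0.015, "latency_ms": 21}))  # None
print(heal(history, {"drop_rate": 0.2, "latency_ms": 21}))    # reset radio unit
```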

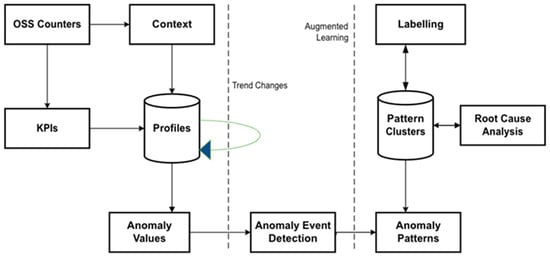

Figure 6 illustrates anomaly detection and diagnosis for radio access networks (RANs), showing the profiling, detection, and diagnosis of RAN-related anomaly events. The self-healing function is implemented according to a selected event context, such as the occurrence of a threat event. When a profile is created, key performance indicators (KPIs) are calculated in time-series format. Anomaly events with unique characteristics are detected based on their anomaly level. The diagnosis function then analyses the detected anomalies and identifies their root causes to ascertain whether corrective measures are needed to lessen the potential threats, and the corrective workflow is triggered if needed [10]. The major problem affecting the optimal performance of the smart grid network today is system failure caused by multifaceted fault areas, such as system overload, system intrusion, and system misconfiguration, among others [1]. Such failures within the smart grid can cause significant economic setbacks, with consequences that sometimes negatively impact human livelihoods or quality of life. To address the system’s inability to self-heal after failures, Ref. [1] proposed using a fault-solving strategy library based on a twin model system and a machine-learning (ML) algorithm to implement a self-healing mechanism in a smart grid. The algorithm is trained on the dataset derived from the fault-solving library to detect anomalies within the system, and the self-healing function is triggered once classification is complete and a viable mitigation solution is found. Self-healing methods can be helpful in a variety of contexts where uptime, reliability, and performance are critical, such as:

Figure 6.

Anomaly detection for radio access.

- Manufacturing: In a manufacturing environment, production lines and equipment must always be operational and available to ensure maximum output. Self-healing mechanisms can detect and respond to faults or failures automatically, thereby minimising downtime and reducing the need for manual intervention;

- Transportation: Transportation systems, such as trains, planes, and automobiles, rely on sensors and other technology to monitor and control their operations. Self-healing mechanisms can detect faults or failures and take corrective action to ensure the system’s safety;

- Power grids: Power grids are critical infrastructure that must always be operational to ensure reliable access to electricity. Self-healing mechanisms can detect and respond to faults or failures, preventing cascading failures and reducing the impact of outages;

- Healthcare: Healthcare systems rely on technology to monitor and provide critical care. Self-healing mechanisms can ensure that these systems are always operational, minimising the risk of disruption that could compromise patient safety;

- Internet of Things (IoT): IoT devices are becoming increasingly common in homes, businesses, and public spaces. Self-healing mechanisms can detect and respond to faults or failures, ensuring these devices remain operational and connected to the Internet.
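The profile, detect, and diagnose flow described above for RANs can be sketched in a few lines of Python. This is a minimal illustration, not the method of [10]: it assumes that a KPI’s anomaly level is its absolute z-score against a baseline profile built from normal-period data, and the KPI names and root-cause rule table are hypothetical.

```python
from statistics import mean, stdev

def build_profile(history):
    """Profile each KPI by the mean and standard deviation of a normal period."""
    return {kpi: (mean(values), stdev(values)) for kpi, values in history.items()}

def anomaly_levels(profile, sample):
    """Anomaly level of each KPI in a new sample: its absolute z-score."""
    levels = {}
    for kpi, value in sample.items():
        mu, sigma = profile[kpi]
        levels[kpi] = abs(value - mu) / sigma if sigma > 0 else 0.0
    return levels

# Hypothetical diagnosis rules: anomalous KPI -> suspected root cause.
ROOT_CAUSE_RULES = {
    "drop_rate": "radio link failure",
    "latency": "congestion or backhaul fault",
}

def detect_and_diagnose(profile, sample, threshold=3.0):
    levels = anomaly_levels(profile, sample)
    anomalous = sorted(k for k, level in levels.items() if level > threshold)
    causes = sorted({ROOT_CAUSE_RULES.get(k, "unknown") for k in anomalous})
    # Request the corrective workflow whenever at least one KPI is anomalous.
    return {"anomalous_kpis": anomalous, "root_causes": causes,
            "trigger_correction": bool(anomalous)}

history = {"drop_rate": [0.010, 0.012, 0.011, 0.009, 0.010],
           "latency": [20, 21, 19, 20, 22]}
result = detect_and_diagnose(build_profile(history), {"drop_rate": 0.05, "latency": 20.5})
```

Here the drop rate lies far outside its profile while latency does not, so only the drop-rate KPI is diagnosed and the corrective workflow is requested.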

4. Self-Healing Approaches

Self-healing approaches are the strategies and techniques used to detect, diagnose, and resolve CPS problems automatically, without human intervention. These approaches are commonly used in complex systems such as software applications, networks, and hardware systems so that they can recover from failures and continue to operate without disruption. Table 2 lists the most common machine-learning tools for cyber–physical self-healing systems.

Table 2.

List of machine-learning tools for cyber–physical self-healing systems.

Common self-healing approaches include:

- Redundancy: Redundancy involves having duplicate components or systems that can take over if the primary system fails. For example, if one node fails in a computer cluster, another node can take over and continue processing the request;

- Automated recovery: Automated recovery involves setting up automated processes to detect and resolve problems. For example, a computerised process can restart a server or move its workload to another server if it goes down;

- Predictive maintenance: Predictive maintenance involves using sensors and data analytics to predict when a system is likely to fail and proactively take action to prevent the failure from occurring. For example, an aircraft engine can be monitored for signs of wear and tear, and maintenance can be scheduled before failure occurs;

- Machine learning: Machine learning involves using algorithms to analyse data and learn patterns that can be used to detect and resolve problems. For example, machine-learning algorithms can analyse network traffic and detect anomalies that may indicate security breaches;

- Fault tolerance: Fault tolerance involves designing systems that can continue to operate even if one or more components fail. For example, a database cluster can be designed to replicate data across multiple nodes. If one node fails, then other nodes can continue providing data access.
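The redundancy and automated-recovery approaches can be combined in a primary/standby pattern. The sketch below assumes a hypothetical cluster in which each node’s health is a boolean flag set by an external monitor; a real system would use heartbeats or health probes.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    name: str
    healthy: bool = True  # set by an external health monitor (assumed)

@dataclass
class FailoverCluster:
    """Primary/standby redundancy with automated failover (illustrative only)."""
    primary: Node
    standbys: list = field(default_factory=list)
    log: list = field(default_factory=list)

    def check_and_recover(self):
        """Return the name of the node serving requests after one check."""
        if self.primary.healthy:
            return self.primary.name
        self.log.append(f"{self.primary.name} failed")
        for standby in self.standbys:
            if standby.healthy:
                # Promote the first healthy standby; the failed node joins the
                # standby pool so it can rejoin once repaired.
                self.standbys.remove(standby)
                self.standbys.append(self.primary)
                self.primary = standby
                self.log.append(f"failover to {standby.name}")
                return standby.name
        raise RuntimeError("no healthy node available")

cluster = FailoverCluster(Node("db-1"), [Node("db-2"), Node("db-3")])
cluster.primary.healthy = False
serving = cluster.check_and_recover()
```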

Training datasets are the bedrock of ML-based self-healing functions in cyber–physical systems and must be considered carefully. Self-healing algorithms use data to identify errors, analyse their causes, and take remedial actions. To improve system reliability and fulfil the self-healing functionality, they draw on data derived from the system itself, such as the following:

- Log data: This contains information about the system events, such as error messages and other data that can be used to diagnose problems;

- Performance metrics: These include CPU utilisation, memory usage, network latency, and disk input/output;

- Configuration data: This includes data on the system’s configuration changes and parameters;

- Environment data: These data identify issues relating to environmental conditions, such as overheating and excessive humidity;

- User behaviour data: These data identify patterns in the system’s user behaviours, such as the response times or the frequency of errors.

4.1. Self-Healing Models and Frameworks

Bodrog [10] presented a self-organising network tool, the self-organised network (SON) experiment framework, with which self-healing functionality is implemented and demonstrated using data from actual network instances and live integrations. The framework is implemented in the R language and has a user interface for visualising anomaly detection and the diagnosis process. Further research includes using transfer learning to investigate the remediation aspect of the self-healing system. A recurring theme in the literature on self-healing methods is the feedback loop: in describing self-healing software techniques, [36] noted that they are modelled after the observe–orient–decide–act (OODA) loop. The OODA model identifies where to apply protection by observing the system’s behaviour. The system is monitored to detect the fault and determine its parameters, such as the type of fault, the input or sequence of events that led to it, the approximate areas of the system affected by the defect, and any information that may be useful in mitigating the fault. Self-healing based on OODA is more appealing than traditional defence mechanisms, which prioritise terminating attack processes and restarting the system in the event of an attack. Self-healing techniques succeed by preventing code injection or the misuse of legitimate code, whereas the traditional defence method may allow system faults to persist even after the attack process has been terminated and the system restarted.
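The OODA loop described in [36] can be outlined as four small functions chained together. The event format, fault types, and protection actions below are hypothetical placeholders, chosen to mirror the fault parameters listed above (fault type, triggering input, affected area).

```python
from dataclasses import dataclass

@dataclass
class FaultReport:
    fault_type: str
    trigger_input: str
    affected_component: str

def observe(events):
    """Observe: keep only monitored events flagged as faults."""
    return [e for e in events if e.get("fault")]

def orient(faults):
    """Orient: derive fault parameters (type, triggering input, affected area)."""
    return [FaultReport(f["fault"], f.get("input", "unknown"),
                        f.get("component", "unknown")) for f in faults]

# Hypothetical targeted protections, used instead of a full restart.
PROTECTIONS = {"code_injection": "filter_input", "code_misuse": "restrict_call"}

def decide(reports):
    """Decide: choose a protection for each affected component."""
    return [(r.affected_component, PROTECTIONS.get(r.fault_type, "isolate_component"))
            for r in reports]

def act(decisions, applied):
    """Act: record the applied protections; the next cycle observes their effect."""
    applied.extend(decisions)
    return applied

events = [
    {"fault": "code_injection", "input": "q=<script>", "component": "web-frontend"},
    {"cpu": 0.4},  # normal telemetry, ignored by observe()
]
applied = act(decide(orient(observe(events))), [])
```

The point of the structure is that the response targets the affected component rather than terminating and restarting the whole system.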

Anomaly detection lies at the core of self-healing functionality. Once an anomaly is detected using one of the methods currently available, such as a network intrusion detection system (NIDS) or a host-based intrusion detection system (HIDS), remediation must be performed through triggered actions to protect the system and realise the self-healing function. A self-healing system must be resilient, that is, able to take corrective measures that return the system to its routine or default state after a failure or attack. Systems capable of remedial actions against losses and/or attacks from outside sources were proposed by Lui in [37]. However, that proposal cannot trigger automatic remediation to mitigate attack events in real time and still requires human intervention to perform the remediation process. Therefore, Golomb, in [37], proposed an automatic self-healing framework that uses collaborative host-based learning to incorporate a self-healing mechanism into Internet of Things (IoT) devices, in a study where a lightweight HIDS designed for IoT was deployed. The authors of [33] described conventional self-restoration in electrical systems as the ability of a system to reconfigure itself automatically to restore supply during an unexpected power disruption. Automation within the power grid refers to autonomously restoring operations affected by power failures and restoring the power supply by instantaneously redirecting power flow from stable lines to the fault-affected areas.

The automation principle enables the existing power grid to be reformed so that it responds dynamically to fault detection, applies deterministic isolation techniques, and executes rerouting operations in real time. An example is the framework demonstrated by [37], a tool for detecting anomaly events in cyber–physical systems. The system sends alerts through the HIDS and triggers the best available remediation action, neutralising the effects of the attack and returning the IoT devices to their normal state. The auto-remediation model it deploys uses an evolutionary-computation algorithm that imitates natural evolution, in which the fittest individuals are the most likely to survive through a process akin to natural selection. Following this principle, multiple candidate solutions are created, and the best among them are selected during the evolutionary process. Accordingly, candidate solutions are defined for the different ML models implemented on IoT devices connected to a local area network (LAN).

The best model, which becomes more adept at selecting the correct remediation process, is chosen more often than the rest. The evolutionary algorithm uses fitness criteria that select models according to the health score the algorithm provides. In addition, the algorithm generates new ML models from the current attributes of individual models, with variation introduced by mutation. To initialise the automatic remediation models, Ref. [37] proposed a lab setup in which multiple versions of auto-remediation models are trained to provide corrective countermeasures by classifying system attacks and can then be deployed on IoT devices. This approach expedites collaborative training and improves the ability of the auto-remediation agents to trigger corrective countermeasures against actual attacks in a real system environment.
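The selection-and-mutation scheme can be illustrated with a small genetic algorithm. As a simplifying assumption, each "model" here is a lookup policy mapping an attack class to a remediation action rather than an LSTM, and the health score (fitness) is the fraction of attack classes the policy remediates correctly against a hypothetical ground truth. The best policies are kept (elitism) and mutated copies replace the rest, loosely mirroring the mutation-driven generation of new models described in [37].

```python
import random

ACTIONS = ["restart_service", "kill_process", "block_port", "restore_config"]
# Hypothetical ground truth: the remediation that restores a device for each attack.
CORRECT = {"malware": "kill_process", "port_scan": "block_port",
           "config_tamper": "restore_config", "service_crash": "restart_service"}
ATTACKS = list(CORRECT)

def health_score(policy):
    """Fitness: fraction of attack classes the policy remediates correctly."""
    return sum(policy[a] == CORRECT[a] for a in ATTACKS) / len(ATTACKS)

def mutate(policy, rate, rng):
    """New model from a parent's current attributes, varied by random mutation."""
    child = dict(policy)
    for a in ATTACKS:
        if rng.random() < rate:
            child[a] = rng.choice(ACTIONS)
    return child

def evolve(pop_size=20, elite=5, generations=30, rate=0.2, seed=0):
    rng = random.Random(seed)
    population = [{a: rng.choice(ACTIONS) for a in ATTACKS} for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=health_score, reverse=True)
        parents = population[:elite]              # selection by health score
        children = [mutate(rng.choice(parents), rate, rng)
                    for _ in range(pop_size - elite)]
        population = parents + children           # elitism keeps the best models
    return max(population, key=health_score)

best = evolve()
```

Because the elite survive each generation unchanged, the best health score can never decrease from one generation to the next.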

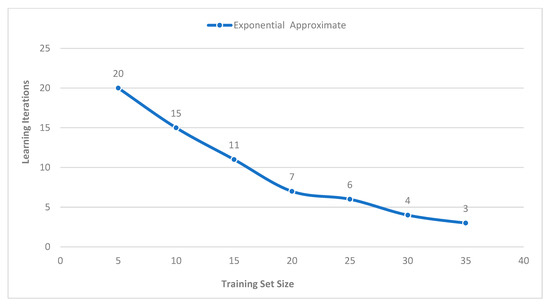

A physical platform of 35 Raspberry Pi devices, each with the same hardware and software, was used for experimentation. All the devices were connected to a network switch on a LAN. To run the experiment, a long short-term memory (LSTM) model was initialised with random weights on each IoT device. Attacks were then launched on the devices using attack simulators based on the Red Hat Ansible engine, an IT automation tool. After each episode, the genetic algorithm was executed to update the LSTM models through a learning iteration. The number of learning iterations needed for the devices on the testbed to respond to the attack, by taking a countermeasure that returns them to their normal state, was measured. A key finding of the experiment is that increasing the number of devices connected to the testbed (i.e., the training sets) decreases the learning time exponentially, as shown in Figure 7.

Figure 7.

Learning iteration of long short-term memory model (LSTM).

In presenting their work, Goasduff [37] noted that Gartner Inc. predicted 5.8 billion IoT devices by the end of 2020, a 21% increase from the previous year, and that the number is expected to reach 64 billion devices by 2025. Theoretical studies show that ML models that learn autonomously from experience and collaboration can serve as the basis for new cyber security defences. In future, automatic remediation algorithms could be built into IoT devices to provide self-healing safeguards and reduce the maintenance costs that ordinarily arise from system failures or attacks. Future work on the theory would centre on adding more attack classes to the ML models to bolster their learning capacity and on exploring the feasibility of transferring a trained automatic remediation model between multiple IoT devices.

Similarly, Shahnia [26] described a self-healing function within the microgrid electricity network as part of a control and operation mechanism that introduces a level of automation into the network. The self-healing mechanism involves many decision-making processes and can be presented as a three-tier hierarchical structure. The bottom tier deploys a well-established control scheme to solve fault problems locally, without requiring intervention from a central command. The goal of this strategy is to reduce costs and increase the speed of operation. According to Wang and Wang [26], this goal prompted many researchers to explore local decision-making models.

Consequently, Wang and Wang [26] proposed a novel sectionalised self-healing approach for decentralised systems to resolve electrical energy distribution problems. The authors’ objective is to guarantee power supply by balancing loads within each subsection of the decentralised system, either by adjusting the power outputs of the distribution network or by load shedding. A suitable case study of self-healing network deployment is a platform by [20] called fault location, isolation, and service restoration (FLISR), deployed and tested on a network simulator. The test results indicate that the solution reduces the cost of commissioning on grid networks, and the platform has been deployed in grid networks in several countries, including the Netherlands, France, Vietnam, and Cuba. On the transmission network of a smart grid, self-healing functionality is achieved through optimal voltage control with a genetic algorithm, a unified power flow controller (UPFC), and islanding. Distribution network approaches include designs based on the propulsion system, the ant colony algorithm, multi-agent control systems (MACS) for the intelligent distribution network, and FLISR. For virtual machine (VM) networks, Ref. [9] presented an automatic prediction model for system failure, recovery, and self-healing that uses intelligent hypervisors. There, self-healing is achieved with a predictive model that recovers failed VM instances on a physical machine through a self-healing algorithm that uses the VMs’ memory snapshots; the algorithms used include decision tree, Gaussian naive Bayes (GNB), and support vector machine (SVM). Ref. [38] presented self-healing functionality for a database that extracts useful information identifying the type and subtype of events from the text fields of the database’s event reports. The extracted information was analysed using Python version 3.6.3, the Natural Language Toolkit (NLTK), Scikit-learn, regular expressions (re), and Pandas. Several self-healing methods have been proposed for the smart grid, such as those described in [16], which together exhibit all the known features of self-healing. To be considered adequate, smart grid self-healing systems must meet the following criteria:

- Quick detection of system faults;

- Redistribution of network resources to protect the system;

- Reconfiguration of the system to maintain service, irrespective of the situation;

- Minimal interruption of service during reconfiguration or self-healing period.
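The four criteria above, together with the FLISR and load-shedding ideas discussed earlier, can be illustrated on a simple radial feeder. This sketch is not the algorithm of [20] or [26]; it assumes a hypothetical topology in which sections are ordered from the substation outwards and a normally open tie switch connects the far end to a backup feeder with limited spare capacity. Loads beyond that capacity are shed in order of increasing priority.

```python
from dataclasses import dataclass

@dataclass
class Section:
    name: str
    load_kw: float
    priority: int  # lower value = shed first

def flisr(feeder, faulted, tie_capacity_kw):
    """Fault location, isolation, and service restoration on a radial feeder.

    feeder: sections ordered from the substation outwards; a tie switch to a
    backup feeder is assumed to sit after the last section.
    """
    idx = next(i for i, s in enumerate(feeder) if s.name == faulted)  # location
    isolated = feeder[idx]          # open the switches on both sides of the fault
    upstream = feeder[:idx]         # re-energised from the substation
    downstream = feeder[idx + 1:]   # re-energised through the tie switch
    # Keep the highest-priority downstream loads that fit within tie capacity.
    via_tie = sorted(downstream, key=lambda s: s.priority, reverse=True)
    shed = []
    while sum(s.load_kw for s in via_tie) > tie_capacity_kw:
        shed.append(via_tie.pop())  # removes the lowest-priority remaining load
    return {
        "isolated": isolated.name,
        "from_substation": [s.name for s in upstream],
        "from_tie": sorted(s.name for s in via_tie),
        "shed": [s.name for s in shed],
    }

feeder = [Section("S1", 300, 3), Section("S2", 200, 2), Section("S3", 250, 3),
          Section("S4", 150, 1), Section("S5", 200, 2)]
plan = flisr(feeder, faulted="S3", tie_capacity_kw=300)
```

With a fault in S3, sections S1 and S2 stay on the substation, S5 is restored through the tie, and S4 (the lowest priority) is shed because S4 and S5 together exceed the backup capacity.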

4.1.1. Twin Model

In behavioural genetics, the twin model is a statistical model used to study the heritability of traits and behaviours, assuming that both genetic and environmental factors contribute to their variation. In the cyber–physical context, the term refers instead to a virtual replica of a physical system: researchers may combine such a twin model with ML algorithms to analyse data from sensors and other sources and to detect and diagnose anomalies. The twin model proposed by [1] integrates a strategy network, a valuation network, and a fast decision network into the twin matrix of the power grid. It facilitates analysis of the smart grid by replicating the operation of the actual power grid virtually (on a computer), with a use case that analyses the functionalities of a smart grid. As the merging of energy flow and information flow becomes vital to the optimal operation of the smart grid, failures in either can cause successive losses in the transmission between the data network and the physical network of the power grid. Such failures may even precipitate the collapse of the computing system and the entire smart grid. To better describe the grid state, Ref. [1] introduced a virtual network, the twin model, which precisely represents the physical system state and the corresponding data in the smart grid. Their relationship is defined in the following equation.

The data of each node in the grid are compared with the twin matrix. Each node in the grid occupies the first column of a row of the matrix, and the subsequent columns of that row record the different types of fault (voltage, frequency, etc.) that may appear at the corresponding node. With this representation of the actual grid, if a node develops a fault, the failed node can easily be identified by analysing the data changes in the twin matrix.
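The comparison step can be sketched in Python under an assumed layout consistent with the description: one row per node, the node identifier in the first column, and expected values for each fault type (voltage in per unit, frequency in Hz) in the subsequent columns. A node is flagged when a measurement deviates from its twin value by more than a hypothetical relative tolerance.

```python
FAULT_TYPES = ("voltage", "frequency")             # columns after the node column
TOLERANCE = {"voltage": 0.05, "frequency": 0.01}   # hypothetical relative limits

def build_twin_matrix(nodes):
    """One row per node: [node id, expected voltage (p.u.), expected frequency (Hz)]."""
    return [[n["id"]] + [n[f] for f in FAULT_TYPES] for n in nodes]

def find_faulty_nodes(twin, measured):
    """Compare live node data with the twin matrix; list (node, fault type) pairs."""
    faults = []
    for row in twin:
        node, expected = row[0], dict(zip(FAULT_TYPES, row[1:]))
        for ftype in FAULT_TYPES:
            deviation = abs(measured[node][ftype] - expected[ftype]) / expected[ftype]
            if deviation > TOLERANCE[ftype]:
                faults.append((node, ftype))
    return faults

twin = build_twin_matrix([{"id": "N1", "voltage": 1.0, "frequency": 50.0},
                          {"id": "N2", "voltage": 1.0, "frequency": 50.0}])
measured = {"N1": {"voltage": 0.99, "frequency": 50.0},
            "N2": {"voltage": 0.80, "frequency": 50.2}}
faults = find_faulty_nodes(twin, measured)
```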

4.1.2. QoS Model

The quality of service (QoS) model is described by [17] as an expression of the correlation between changes in QoS and environment primitives (EPs) or control primitives (CPs). QoS models can be a powerful tool for automatically helping cloud providers adapt cloud-based services and applications. As noted by [37], they help determine the extent to which services and applications can exploit CPs to support QoS objectives, and they take into account the QoS sensitivity of both EPs and CPs. A self-healing QoS model can automatically detect and correct errors in a system without human intervention, maintaining high accuracy and reliability despite unexpected events or changes. One approach to building a self-healing ML QoS model combines supervised and unsupervised learning: supervised learning trains the model on labelled data, while unsupervised learning identifies patterns and anomalies in the data.
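One way to combine the two learning modes is sketched below: a supervised least-squares fit relates an environment primitive (workload) to QoS (response time), and an unsupervised rule based on the median absolute deviation (MAD) of the fit residuals flags observations that do not follow the learned relationship. The single-feature linear model, the threshold, and the data are simplifying assumptions, not the models of [17] or [2].

```python
from statistics import mean, median

def fit_qos_model(workload, response_ms):
    """Supervised step: least-squares line, response_ms ~ a + b * workload."""
    mx, my = mean(workload), mean(response_ms)
    sxy = sum((x - mx) * (y - my) for x, y in zip(workload, response_ms))
    sxx = sum((x - mx) ** 2 for x in workload)
    b = sxy / sxx
    return my - b * mx, b

def flag_anomalies(model, workload, response_ms, k=3.5):
    """Unsupervised step: flag residuals far from the median, in MAD units."""
    a, b = model
    residuals = [y - (a + b * x) for x, y in zip(workload, response_ms)]
    med = median(residuals)
    mad = median(abs(r - med) for r in residuals) or 1e-9  # avoid division by zero
    return [i for i, r in enumerate(residuals) if abs(r - med) / mad > k]

workload = [10, 20, 30, 40, 50]        # requests per second (environment primitive)
response_ms = [12, 22, 32, 90, 52]     # observed QoS; index 3 is a spike
model = fit_qos_model(workload, response_ms)
anomalies = flag_anomalies(model, workload, response_ms)
```

The MAD rule is used because, unlike a standard-deviation threshold, it is not itself inflated by the spike it is trying to find.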

4.1.3. Auto-Regressive Moving Average with Exogenous Input Model

The auto-regressive moving average with exogenous input (ARMAX) model is a statistical model that combines auto-regressive (AR) and moving average (MA) components with an exogenous input. Ref. [2] explored combining an artificial neural network (ANN) and the ARMAX model to show how primitives correspond adaptively to quality of service (QoS), based on the related primitive matrix. Through experiments, Ref. [2] demonstrated a middleware that incorporates a self-adaptive approach based on a feedback control mechanism. When tested using the RUBiS benchmark and the FIFA 1998 dataset, the models (ANN and ARMAX) produced more accurate results than state-of-the-art models. The resulting models are S-ANN and S-ARMAX. The former handles dynamic QoS sensitivity better and achieves higher accuracy when QoS fluctuations occur, whereas the latter makes fewer errors when QoS fluctuations decrease. The ARMAX model is commonly used in time-series analysis to predict future values of a time series from past values and exogenous inputs. The model can be estimated using methods such as maximum likelihood estimation or least-squares estimation and evaluated using measures such as the Akaike information criterion (AIC) or the Bayesian information criterion (BIC).
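For reference, a standard form of the ARMAX model is given below, with notation assumed here rather than taken from [2]: $y_t$ is the output (for example, a QoS metric), $u_t$ is the exogenous input (for example, workload), $\varepsilon_t$ is white noise, and $p$, $r$, and $q$ are the AR, exogenous, and MA orders, with a one-step input delay. In the information criteria, $m$ is the number of estimated parameters, $N$ the number of observations, and $\hat{L}$ the maximised likelihood.

$$
y_t = \sum_{i=1}^{p} a_i\,y_{t-i} + \sum_{j=1}^{r} b_j\,u_{t-j} + \sum_{k=1}^{q} c_k\,\varepsilon_{t-k} + \varepsilon_t
$$

$$
\mathrm{AIC} = 2m - 2\ln\hat{L}, \qquad \mathrm{BIC} = m\ln N - 2\ln\hat{L}
$$

Ordinary least squares applies directly only when $q = 0$ (the ARX case), because the past noise terms $\varepsilon_{t-k}$ are unobserved; with an MA part, iterative or maximum likelihood estimation is needed. Lower AIC or BIC values indicate a better trade-off between fit and model size.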

4.2. Network Architecture

The principal challenge of research into self-healing functionality on computer networks is achieving reliability, one of the three core characteristics of a self-healing system described in [27,29]. To address this reliability challenge, a method was proposed that uses shared operation and spare nodes in each neural network layer to compensate for any faulty node. The method is implemented in VHSIC hardware description language (VHDL), and the simulation results were obtained on an Altera Arria 10 GX FPGA. The experiment by [21] looked at overcoming the area overhead caused by using redundancy, and the results demonstrate an overhead reduction of 27% for four nodes within a layer and of 15% for ten nodes within a layer. When designing self-healing systems, several network architecture issues need to be considered:

- Redundancy: The network should be designed with redundant components to minimise the impact of failures by providing fail-safe functionality to the system;

- Automation: The network should be automated to reduce the need for manual intervention and speed up the recovery process;

- Monitoring: The network should have robust monitoring capabilities to detect and diagnose issues as soon as they occur;

- Resiliency: The network should be designed to be resilient to common failures, such as power outages, hardware failures, and software bugs;

- Security: The network should be designed with security in mind to mitigate attacks that could cause outages.

The above will ensure that the three main self-healing characteristics (Figure 8), as defined by [16], can be realised.

Figure 8.

Characteristics of a self-healing system.
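The spare-node idea from [21] can be illustrated in software. This sketch assumes ReLU neurons, a stuck-at-zero fault model, and spares preloaded with the failed node’s weights; it does not reproduce the shared-operation hardware design or its VHDL implementation.

```python
def neuron(weights, bias, inputs):
    """ReLU neuron."""
    return max(0.0, sum(w * x for w, x in zip(weights, inputs)) + bias)

class SelfHealingLayer:
    """Dense layer whose spare nodes take over the work of failed nodes."""

    def __init__(self, weights, biases, n_spares):
        self.weights, self.biases = weights, biases
        self.free_spares = list(range(n_spares))
        self.faulty = set()   # physical nodes that have failed
        self.remap = {}       # failed node -> spare now computing its output

    def inject_fault(self, node):
        self.faulty.add(node)

    def heal(self):
        """Assign a free spare (preloaded with the same weights) to each unhealed fault."""
        for node in sorted(self.faulty - set(self.remap)):
            if not self.free_spares:
                break         # faults beyond the spare budget stay unhealed
            self.remap[node] = self.free_spares.pop(0)

    def forward(self, inputs):
        out = []
        for node, (w, b) in enumerate(zip(self.weights, self.biases)):
            if node in self.faulty and node not in self.remap:
                out.append(0.0)                   # stuck-at-zero fault
            else:
                out.append(neuron(w, b, inputs))  # healthy node, or its spare
        return out

layer = SelfHealingLayer([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0], n_spares=1)
layer.inject_fault(1)
before = layer.forward([2.0, 3.0])
layer.heal()
after = layer.forward([2.0, 3.0])
```

The number of spares per layer is the design lever: more spares tolerate more faults but add the area overhead that [21] sought to reduce.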

Having evaluated self-healing approaches within cluster platform architectures, Ref. [14] proposed a self-adaptive method that identifies and recovers from abnormal events in cluster platforms such as Kubernetes or Docker. The approach introduces different anomalies into the cluster architecture of an edge computing platform. An experiment using generated workload data measured the effects of the anomalies on the edge computing nodes under varying system settings, which allowed relationships between system components to be identified and aided the system’s adaptation towards its overall self-healing goals. The results show that the proposed approach detects anomalous events with an accuracy of 98% and achieves a recovery rate of 99%. In evaluating self-healing in virtual networks (VNs), Ref. [29] presented a multi-commodity flow problem (MFP)-based approach called MFP-VNMH, which enhances the mapping of VNs. The approach enables self-healing capabilities in network virtualisation. Self-healing functionality was achieved using sessions between the service provider (SP) and the infrastructure provider (InP), supervised by a set of control protocols. Experiments demonstrate the approach’s efficiency and effectiveness in restoring service after network failures.
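Detect-and-recover behaviour on cluster platforms is often built around a reconciliation loop that repeatedly compares the observed state with the desired state. The sketch below is a hypothetical, in-process illustration of that pattern, not the Kubernetes API or the method of [14]; it assumes that a restart clears transient faults and that a replica which keeps failing should be replaced.

```python
from dataclasses import dataclass, field
from itertools import count

@dataclass
class Replica:
    rid: int
    healthy: bool = True
    restarts: int = 0

@dataclass
class Deployment:
    desired: int
    max_restarts: int = 2
    replicas: list = field(default_factory=list)
    ids: count = field(default_factory=count)

    def reconcile(self):
        """One control-loop pass: recover unhealthy replicas, then converge on `desired`."""
        actions = []
        for r in list(self.replicas):
            if r.healthy:
                continue
            if r.restarts < self.max_restarts:
                r.restarts += 1
                r.healthy = True          # assumption: a restart clears transient faults
                actions.append(f"restart {r.rid}")
            else:
                self.replicas.remove(r)   # persistent fault: replace the replica
                actions.append(f"evict {r.rid}")
        while len(self.replicas) < self.desired:
            r = Replica(next(self.ids))
            self.replicas.append(r)
            actions.append(f"create {r.rid}")
        return actions

d = Deployment(desired=3)
first = d.reconcile()
d.replicas[1].healthy = False
second = d.reconcile()
d.replicas[1].healthy = False
d.reconcile()
d.replicas[1].healthy = False
last = d.reconcile()
```

Escalating from restart to replacement keeps recovery cheap for transient faults while still converging on the desired number of healthy replicas.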

4.2.1. Strategy Network

A network comprising tens of thousands of fault-solving strategy libraries was proposed by [1]. The authors argued that the essence of the strategy network in their study is to offer a combination of solutions when dealing with the risk of power grid failures. A global strategy alone does not solve power failure problems accurately in a power grid scenario; hence, the sub-strategies of individual nodes are combined to solve a global fault, guided by the general direction of the global strategy. The combined sub-strategies then constitute a strategy network. The framework for the network consists of several layers, each with its own strategies:

- Perception layer: This layer monitors the network to detect failures and anomalies. Strategies in this layer include using sensors to monitor network traffic, analysing system logs, and applying ML techniques to identify abnormal patterns;

- Analysis layer: This layer is responsible for analysing the data collected by the perception layer to identify the root cause of failures. Strategies in this layer include using ML algorithms to analyse the data and identify patterns that indicate the cause of a failure;

- Planning layer: This layer is responsible for developing a plan to address the identified failures. Strategies in this layer include using ML algorithms to determine the optimal recovery strategy and selecting the appropriate recovery mechanism;

- Execution layer: This layer is responsible for executing the recovery plan. Strategies in this layer include using automation to execute the recovery plan and providing feedback to the other layers to optimise the recovery process;

- Knowledge layer: This layer stores and manages knowledge about the network and the recovery process. Strategies in this layer include using databases to store information about the network topology and previous failures and using algorithms to learn from previous failures and improve the self-healing process.
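The five layers can be wired into a single pass of a self-healing loop, as in this minimal sketch. The symptom-to-cause mapping, the playbook of recovery actions, and the `run` callback are hypothetical; the knowledge layer is reduced to a counter of (cause, action, outcome) records that later passes could learn from.

```python
from collections import Counter

# Hypothetical symptom -> root-cause mapping used by the analysis layer.
CAUSES = {"cpu": "overload", "auth_failures": "intrusion", "config_drift": "misconfiguration"}

class LayeredSelfHealer:
    def __init__(self, playbook):
        self.playbook = playbook    # planning knowledge: root cause -> recovery action
        self.history = Counter()    # knowledge layer: (cause, action, succeeded) counts

    def perceive(self, telemetry, limits):
        """Perception: readings above their limits become symptoms."""
        return {k: v for k, v in telemetry.items() if v > limits.get(k, float("inf"))}

    def analyse(self, symptoms):
        """Analysis: map symptoms to root causes."""
        return sorted({CAUSES[s] for s in symptoms if s in CAUSES})

    def plan(self, root_causes):
        """Planning: pick a recovery action, escalating unknown causes."""
        return [(c, self.playbook.get(c, "escalate_to_operator")) for c in root_causes]

    def execute(self, plan, run):
        """Execution: run each action and feed the outcome back to the knowledge layer."""
        for cause, action in plan:
            self.history[(cause, action, run(action))] += 1
        return self.history

healer = LayeredSelfHealer({"overload": "scale_out", "intrusion": "isolate_host"})
symptoms = healer.perceive({"cpu": 0.95, "auth_failures": 40, "mem": 0.5},
                           {"cpu": 0.90, "auth_failures": 10})
plan = healer.plan(healer.analyse(symptoms))
history = healer.execute(plan, run=lambda action: True)  # stand-in executor
```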

4.2.2. Valuation Network

In artificial intelligence and deep learning, a valuation network refers to a neural network model designed to estimate or assign a value to a particular input or set of information [1]. Its purpose is to evaluate the quality, significance, or relevance of the input data against a specific criterion or objective. Valuation networks are commonly used in reinforcement learning, where the network is trained to estimate the value or expected return of different actions or states in an environment. They can also be used in recommendation systems to assess the preference or utility of items for a user, or in natural language processing to assign a score or sentiment to text inputs. The architecture of a valuation network varies with the specific task and requirements, but it typically involves multiple layers of neurons that process and transform the input data, with the final output representing the estimated value or score. In [1], the valuation network solves the problem of configuring the local strategy by judging the effective-solution rate of sub-strategies in specific power grid scenarios; at its core is machine learning from data. The effective-solution rate refers to how often a combination of sub-strategy configurations resolves the fault, taking its global impact into account. The valuation network, Ref. [1] argued, does not guess which sub-strategy to take; instead, it takes an optimal global perspective to predict the effective-solution rate (or effective probability) of the fault being resolved under different local strategy configurations. The valuation network is a vital component in the continuous improvement of the fault-solving strategy library. By analysing the network’s usage patterns, it can identify areas where resources are underutilised or overutilised and recommend adjustments that improve performance and reduce costs.
Valuation networks can help improve the system’s reliability, scalability, and cost-effectiveness by continuously monitoring performance and optimising resource utilisation.
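A tabular stand-in can illustrate what the valuation network estimates. Instead of a neural network, the sketch below counts past outcomes for each complete sub-strategy configuration (one local strategy per node) and returns a smoothed success rate, so that configurations are judged by their overall effect rather than node by node. The strategy names and the add-one prior are assumptions.

```python
from collections import defaultdict

class ValuationTable:
    """Tabular stand-in for a valuation network (not a neural network).

    A configuration is a tuple holding one local strategy per node; its value is
    the smoothed fraction of past cases in which that whole configuration
    resolved the fault.
    """

    def __init__(self, prior_successes=1, prior_failures=1):
        self.counts = defaultdict(lambda: [prior_successes, prior_failures])

    def update(self, config, resolved):
        self.counts[config][0 if resolved else 1] += 1

    def value(self, config):
        successes, failures = self.counts[config]
        return successes / (successes + failures)

    def best(self, candidates):
        return max(candidates, key=self.value)

table = ValuationTable()
for resolved in [True] * 8 + [False] * 2:
    table.update(("shed_load", "reroute"), resolved)
table.update(("trip_breaker", "reroute"), True)
```

The prior keeps a configuration seen only once from looking certain: one success gives 2/3, whereas 8 successes in 10 trials give 9/12 = 0.75.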

4.2.3. Fast Decision Network

In the context of self-healing, a fast decision network architecture can make quick, accurate decisions that enable rapid failure recovery. Speedy decision-making is critical for minimising downtime and maintaining system availability. A fast decision network can be implemented using ML algorithms that quickly analyse network data and make decisions based on real-time information. The algorithms can be trained to recognise network traffic patterns and behaviours and to identify potential issues before they result in a system failure. For example, if a fast decision network detects high CPU usage or any other performance issue, it can quickly take action to mitigate the problem, such as diverting traffic to other devices, scaling up resources to handle the increased load, or automatically triggering a self-healing mechanism to recover from the failure. Ref. [1] describes the fast decision network as a representation of decision-making speed. Starting from the current judgement position, it quickly applies a local strategy to resolve the grid risk at each node. After a sub-strategy has been configured for each node up to the final node, the network records a good or bad result and calculates the statistical probability for each corresponding node to obtain the overall probability. The fast decision network does not consider the effect of local strategy configuration on the global strategy. It plays a significant role in speeding up fault resolution during actual online operation, and it works more effectively when combined with a valuation network in a strategy configuration.
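The fast decision step can be sketched as a greedy choice: each node takes its locally best strategy, and the overall probability is the product of the per-node probabilities. The product assumes independent node outcomes, and, as described above, the greedy choice ignores the global effect of local configurations; the probabilities and strategy names are hypothetical.

```python
from math import prod

def fast_decide(node_options):
    """Greedy sketch of a fast decision step.

    node_options: {node: {strategy: estimated probability of clearing the node's risk}}
    Each node takes its locally best strategy; the overall probability is the
    product of the per-node probabilities, assuming independent outcomes.
    """
    chosen = {node: max(opts, key=opts.get) for node, opts in node_options.items()}
    overall = prod(node_options[node][s] for node, s in chosen.items())
    return chosen, overall

chosen, overall = fast_decide({
    "N1": {"reroute": 0.90, "shed_load": 0.70},
    "N2": {"trip_breaker": 0.80, "reclose": 0.95},
})
```

Because it evaluates nodes one at a time, this step is fast but can miss combinations that only work well together, which is why the text pairs it with a global estimate.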

4.2.4. Virtual Machine

A virtual machine (VM) is a software emulation of a computer system that can run an operating system and applications like a physical computer. VMs can support self-healing mechanisms in several ways, for example:

- Isolation: A VM can isolate applications and services from each other. If one application or service experiences a failure, it can be restarted within the VM without affecting the other applications or services running on the same physical machine;

- Redundancy: Multiple VMs can be deployed to provide redundancy for critical applications or services. If one VM fails, another can take over its workload to ensure continuity of service;

- Rapid provisioning: VMs can quickly be configured to meet changing workload demands, enabling the system to scale up or down as needed so that performance and availability are maintained;

- Testing and validation: VMs can be used to test and validate self-healing mechanisms before they are deployed in a production environment, helping to ensure that the mechanisms are effective and do not cause unintended consequences.

In their study, Ref. [17] investigated heterogeneous scenarios in which different software stacks run on virtual machines (VMs) and physical machines (PMs) within a cloud environment, each operating at varying levels of primitives and capacity. As a result, the same primitives tend to correspond to heterogeneous QoS for different service instances. To overcome this heterogeneity problem, adaptive QoS models were created for each service instance. Here, a service instance on a VM uses the same computational resources as other service instances running on different VMs. Network virtualisation has been regarded as a novel approach for implementing promising heterogeneous services and building the next-generation network architecture [29]. Network virtualisation through VMs promises better resource utilisation and improved network service delivery. These possibilities prompted [29] to propose a novel VN restoration approach, MFP-VNMH, which enhances VN mapping and service restoration. By isolating applications and services, providing redundancy, enabling rapid provisioning, and facilitating testing and validation, VMs can play a critical role in implementing effective self-healing mechanisms.

4.2.5. Phasor Measurement Unit

A phasor measurement unit (PMU) can be used for self-healing to monitor and analyse the state of the power grid in real time. PMUs measure the magnitude and phase angle of electrical quantities, such as voltage and current, at high speed and with high accuracy. PMUs can support self-healing mechanisms in several ways, such as:

- Real-time monitoring: PMUs can be used to monitor the state of the power grid in real time, providing high-resolution data on voltage, current, and frequency. These data can be used to detect and diagnose faults and other anomalies in the grid;

- Fault detection and isolation: PMUs can detect faults in the power grid, such as short circuits or equipment failures, by analysing the magnitude and phase angle of electrical quantities. Their measurements can help identify the location and extent of the fault;

- Restoration: PMUs can be used to facilitate the restoration of power after a fault has occurred. By providing real-time data on the state of the grid, PMUs can help operators quickly identify the source of the fault and take steps to restore power;

- Protection: PMUs can be used to protect critical equipment and infrastructure. By monitoring the state of the power grid in real time, PMUs can detect abnormal conditions and trigger protective measures, such as tripping circuit breakers or isolating faulty equipment.
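The magnitude and phase angle that a PMU reports can be illustrated with a full-cycle discrete Fourier transform (DFT) phasor estimate, X = (√2/N) Σ x[k] e^(−j2πk/N), a common textbook approach, followed by a simple threshold check for fault detection. This is a sketch under stated assumptions: the input is exactly one cycle of evenly spaced samples, and the sag and overcurrent thresholds (0.9 pu and 2.0 pu) are hypothetical values chosen for illustration, not settings from a particular PMU or from [18].

```python
import cmath
import math


def estimate_phasor(samples: list[float]) -> complex:
    """Full-cycle DFT phasor from exactly one fundamental cycle of evenly spaced samples.

    Returns an RMS phasor: abs() gives the RMS magnitude and cmath.phase()
    the phase angle (radians) relative to the first sample.
    """
    n = len(samples)
    acc = sum(x * cmath.exp(-2j * math.pi * k / n) for k, x in enumerate(samples))
    return math.sqrt(2) / n * acc


def classify(v: complex, i: complex, v_nominal: float, i_rated: float,
             sag: float = 0.9, overcurrent: float = 2.0) -> str:
    """Label one voltage/current phasor pair using hypothetical per-unit thresholds."""
    v_pu = abs(v) / v_nominal
    i_pu = abs(i) / i_rated
    if v_pu < sag and i_pu > overcurrent:
        return "fault"        # deep sag with high current: candidate short circuit
    if v_pu < sag:
        return "voltage sag"
    return "normal"


def cycle(rms: float, angle: float, n: int = 32) -> list[float]:
    """Synthetic one-cycle cosine waveform, used only to exercise the functions above."""
    return [rms * math.sqrt(2) * math.cos(2 * math.pi * k / n + angle) for k in range(n)]


if __name__ == "__main__":
    v = estimate_phasor(cycle(230.0, 0.5))
    print(round(abs(v), 3), round(cmath.phase(v), 3))  # 230.0 0.5
    print(classify(estimate_phasor(cycle(150.0, 0.2)),
                   estimate_phasor(cycle(500.0, -1.0)), 230.0, 100.0))  # fault
```

Real PMUs additionally timestamp each phasor against a common time reference, which is what allows phase angles from different substations to be compared.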

Ref. [18] implemented a PMU-based self-healing feature on the power grid that provides real-time monitoring and load balancing through three components, which together facilitate the self-adaptation and self-healing functionality of the network. The following list describes these three components:

- Intrusion detection system (IDS): Relies on the PMU network logs and phasor measurements to detect different classes of abnormal events within the network;

- Alert management system (AMS): Generates alerts based on the anomaly rules defined in the IDS and, if abnormal events are detected, forwards the alerts to the IMS for remedial action;

- Intrusion mitigation system (IMS): Once the IDS detects an anomaly, receives the alerts generated by the AMS through a publisher–subscriber interface and takes appropriate remedial action.

The AMS comprises three sub-components: alert manager subscriber1, subscriber2, and subscriber3. Subscriber1 collects the alert logs of detected anomalies and forwards them to the IMS. Subscriber2 sends the received alert messages to the namespace orchestrator, which triggers the orchestration process on a given substation namespace. Subscriber3 sends the received alert messages to the application programming interface (API) of the central management application [18].
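The alert flow described for [18], in which the IDS detects an event, the AMS turns it into an alert, and three subscribers fan it out over a publisher–subscriber interface, can be sketched in a few lines of Python. The in-process `Broker`, the phase-jump rule in `ids_detect`, and its 10-degree threshold are hypothetical stand-ins; the IMS, namespace orchestrator, and central management API of [18] are represented here only by log entries.

```python
from collections import defaultdict
from typing import Callable, Optional


class Broker:
    """Minimal in-process publisher-subscriber interface (stand-in for a real message bus)."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        for handler in self._subscribers[topic]:
            handler(message)


def ids_detect(prev_angle: float, angle: float, substation: str) -> Optional[dict]:
    """IDS: hypothetical rule flagging a phase-angle jump above 10 degrees between reports."""
    delta = angle - prev_angle
    if abs(delta) > 10.0:
        return {"substation": substation, "event": "phase_jump", "delta": delta}
    return None


def ams_forward(broker: Broker, detection: Optional[dict]) -> None:
    """AMS: turn an IDS detection into an alert and publish it."""
    if detection is not None:
        broker.publish("alerts", {**detection, "severity": "high"})


def build_pipeline(log: list) -> Broker:
    broker = Broker()
    # subscriber1: forward alert logs to the IMS for remedial action
    broker.subscribe("alerts", lambda a: log.append(("IMS", a["substation"])))
    # subscriber2: trigger orchestration on the affected substation namespace
    broker.subscribe("alerts", lambda a: log.append(("namespace_orchestrator", a["substation"])))
    # subscriber3: notify the central management application's API
    broker.subscribe("alerts", lambda a: log.append(("central_api", a["event"])))
    return broker
```

Decoupling detection from the subscribers in this way means a new remediation consumer can be added by registering another handler, without changing the IDS or AMS.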

4.2.6. Mesh-Type Configuration Network

A mesh-type configuration network is a network topology in which each device is connected to multiple other devices, forming a mesh-like structure. This type of network is often used in self-healing because it provides redundancy and resilience against network failures: if one device fails, the network can automatically reroute traffic through an alternate path, maintaining connectivity and availability. This approach allows the network to keep operating even when specific devices or links are unavailable. A proposal by [33] uses a mesh-type configuration, together with an autonomous self-healing technique, to achieve the full potential of a power restoration scheme. Critics of the proposal argue that a mesh-type configuration network introduces complications, is costly, and has a higher probability of bi-directional fault current flow; nevertheless, recent studies have shown that a mesh-type configuration network provides flexibility and security and can operate independently. By design, a mesh-type configuration network provides multiple redundant paths and therefore avoids single points of failure, which makes the system resilient and makes the coupling or decoupling of network sections easy. It also decentralises systems management and paves the way for the independent control of multi-level architectures.

A mesh-type configuration was demonstrated in the implementation of wireless mesh networks (WMNs) in [30]; WMNs are multi-hop wireless networks with instant deployment, self-healing, self-organisation, and self-configuration features. WMNs offer multiple redundant communication paths throughout the network, and the network automatically reroutes packets through alternative paths when one section of the network fails or when a threat interferes with a particular path.
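The rerouting behaviour of a mesh-type network can be illustrated with a breadth-first search (BFS) that finds the fewest-hop path while skipping failed links or devices. The five-node topology `MESH` and the function name `reroute` are hypothetical; practical WMN routing protocols use richer link metrics than hop count.

```python
from collections import deque

# Hypothetical five-node mesh (adjacency lists must be symmetric).
MESH = {
    "A": ["B", "C"],
    "B": ["A", "C", "D"],
    "C": ["A", "B", "D", "E"],
    "D": ["B", "C", "E"],
    "E": ["C", "D"],
}


def reroute(adj, src, dst, failed_links=frozenset(), failed_nodes=frozenset()):
    """Breadth-first search for a fewest-hop path that avoids failed links and nodes.

    failed_links holds frozenset({u, v}) pairs; returns None if dst is unreachable.
    """
    if src in failed_nodes or dst in failed_nodes:
        return None
    parent = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk parent pointers back to the source
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nbr in adj[node]:
            if nbr in parent or nbr in failed_nodes or frozenset((node, nbr)) in failed_links:
                continue
            parent[nbr] = node
            queue.append(nbr)
    return None


if __name__ == "__main__":
    print(reroute(MESH, "A", "E"))                                   # ['A', 'C', 'E']
    print(reroute(MESH, "A", "E", failed_links={frozenset("CE")}))   # ['A', 'B', 'D', 'E']
```

Because every node in the mesh has at least two neighbours, a single link or device failure leaves an alternative path, which is the redundancy property described above.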

4.2.7. Agent Architecture

Agent architecture is the design and implementation of intelligent software agents that can autonomously detect and respond to faults or errors in a system. These agents are designed to operate in a distributed system, with each agent responsible for monitoring a specific aspect of the system and taking action to resolve issues when they arise.

Agent architecture is described in [31] as a rule-based architecture that uses an “if-then” reasoning scheme, in which the consequent relies on prior experience. The choice of agent architecture therefore influences how well the system’s agents handle their operating environment through inference. As noted by Cardoso [31], the scope for simultaneous internal and external monitoring of the system is limited by what is referred to as a threading obstacle in JADE/JESS integration. A viable alternative is to route agent communication from JADE agents to the JESS inference engine, either by granting JESS access to agent communication language (ACL) messages or by allowing JESS to add ACL message objects to the JESS working memory. Agent architecture in a self-healing system typically consists of the following components:

- Sensing: Agents can monitor the system and gather data about its current state. The data may include system performance metrics, error logs, and other relevant information;

- Diagnosis: Agents use data gathered during the sensing phase to analyse the system’s current state and identify any faults or errors. Various techniques can be employed to achieve this, such as comparing current data to historical trends or utilising machine-learning algorithms to detect and pinpoint abnormal behaviours;

- Decision-making: Once a fault or error has been detected, agents must decide how to respond. In such scenarios, the process may involve selecting from a predetermined set of response options, such as restarting a process or diverting traffic to a backup system;

- Action: Agents take action to resolve the fault or error using predefined or adaptive responses. This process may involve coordinating with other agents to initiate a synchronised response or adjusting the system configuration to prevent future occurrences;

- Learning: Agents continually learn from their experiences and adapt their behaviour over time to improve effectiveness. To adapt effectively, agents may need to adjust their response strategies based on the outcomes of past responses and update their system models using new data.
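The sensing, diagnosis, decision-making, action, and learning components listed above can be combined into one loop. The following Python sketch assumes hypothetical metrics (`error_rate`, `cpu`), if-then rules in the spirit of the rule-based architecture of [31], a fixed menu of response options, and an epsilon-greedy choice among them based on smoothed success rates. The learning rule is an illustrative assumption, not taken from [31], and the sketch does not model JADE or JESS.

```python
import random

# Hypothetical if-then rules: (condition on the sensed state, diagnosed fault).
RULES = [
    (lambda s: s["error_rate"] > 0.05, "service_degraded"),
    (lambda s: s["cpu"] > 0.95, "overload"),
]
# Predetermined response options for each fault.
RESPONSES = {
    "service_degraded": ["restart_process", "divert_to_backup"],
    "overload": ["divert_to_backup", "scale_out"],
}


class SelfHealingAgent:
    def __init__(self, execute, epsilon: float = 0.1, seed: int = 0):
        self.execute = execute  # callback: (fault, action) -> True if the fault was resolved
        self.epsilon = epsilon  # probability of trying a non-preferred option
        self.rng = random.Random(seed)
        self.stats = {}         # (fault, action) -> (successes, attempts)

    def sense(self, system: dict) -> dict:
        return dict(system)     # snapshot of the current metrics

    def diagnose(self, state: dict) -> list:
        return [fault for condition, fault in RULES if condition(state)]

    def success_rate(self, fault: str, action: str) -> float:
        wins, tries = self.stats.get((fault, action), (0, 0))
        return (wins + 1) / (tries + 2)  # Laplace-smoothed estimate

    def decide(self, fault: str) -> str:
        options = RESPONSES[fault]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(options)  # explore
        return max(options, key=lambda a: self.success_rate(fault, a))  # exploit

    def step(self, system: dict) -> list:
        """One sense -> diagnose -> decide -> act -> learn cycle."""
        outcomes = []
        for fault in self.diagnose(self.sense(system)):
            action = self.decide(fault)
            resolved = self.execute(fault, action)  # action
            wins, tries = self.stats.get((fault, action), (0, 0))
            self.stats[(fault, action)] = (wins + int(resolved), tries + 1)  # learning
            outcomes.append((fault, action, resolved))
        return outcomes
```

With `epsilon=0`, an agent whose first choice fails switches to the alternative on the next cycle and keeps it once it succeeds, which illustrates how past outcomes reshape the response strategy.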

4.2.8. Host Intrusion Detection System on IoT

The host intrusion detection system (HIDS) is an approach for protecting IoT devices against threats. As noted by [37], HIDS achieves this by installing detection agents on each device. The alternative to HIDS is the network intrusion detection system (NIDS), which is the most common approach and is more scalable because it does not require software installation on the IoT device. However, NIDS has more limited detection capability than HIDS, especially when traffic on the IoT network is encrypted. A framework proposed by [37] can detect system attacks in real time and react to remediate their effects. The framework is an automatic and collaborative host-based self-healing mechanism for IoT devices that uses HIDS to protect a collection of IoT devices deployed in an IoT environment, such as a smart city. In the study by [37], multiple IoT devices collaborate to train a deep-learning model, and the best possible remediation is triggered once the HIDS issues an alert to the model, fulfilling the framework’s self-healing functionality. The proposed self-healing architecture consists of three modules: HIDS, health monitoring, and auto-remediation. The HIDS module collects information from the IoT devices, analyses it, and determines whether a threat could compromise the IoT device. The health-monitoring module assesses the health state of the IoT device by collecting data from multiple sources, such as memory usage, disk space, and network metrics. The auto-remediation module acts to remedy the effects of a malfunction or intrusion on the IoT device.
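The three modules of the framework in [37] can be outlined as a small pipeline. In this sketch the HIDS module is a deliberately simple rule (flagging processes that are not on an allow list) standing in for the collaboratively trained deep-learning detector of [37]; the telemetry fields, thresholds, allow list, and remediation commands are all hypothetical.

```python
from dataclasses import dataclass

ALLOWED_PROCESSES = {"sensord", "mqtt-client", "sshd"}  # hypothetical allow list


@dataclass
class Telemetry:
    """Hypothetical health and activity report from one IoT device."""
    device_id: str
    processes: list
    memory_used: float  # fraction of RAM in use
    disk_free: float    # fraction of storage still free


def hids_module(t: Telemetry) -> list:
    """Return processes that are not on the allow list (possible intrusion)."""
    return sorted(set(t.processes) - ALLOWED_PROCESSES)


def health_module(t: Telemetry, max_memory: float = 0.9, min_disk: float = 0.05) -> list:
    """Return the health issues found in the device's resource metrics."""
    issues = []
    if t.memory_used > max_memory:
        issues.append("memory")
    if t.disk_free < min_disk:
        issues.append("disk")
    return issues


def auto_remediation(suspicious: list, issues: list) -> list:
    """Map findings to remediation commands (command names are placeholders)."""
    actions = [f"kill {p}" for p in suspicious]
    if "memory" in issues:
        actions.append("restart services")
    if "disk" in issues:
        actions.append("purge old logs")
    return actions


def self_heal(t: Telemetry) -> list:
    return auto_remediation(hids_module(t), health_module(t))
```

Keeping detection, health assessment, and remediation as separate functions mirrors the modular split in [37], so the rule-based detector could be swapped for a learned model without touching the other two modules.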

4.2.9. Multi-Area Microgrid

A multi-area microgrid is a distributed energy system consisting of multiple interconnected microgrids that can operate independently or in coordination. This type of microgrid is often used to supply energy to multiple buildings or communities, and it can be designed with self-healing capabilities to improve its resilience and reliability. Ref. [26] proposed a two-area microgrid whose areas can operate independently and used it to analyse a multi-machine system. The multi-area configuration was selected so that, in a simulated fault event, the core system could be separated into sections, allowing the application to adopt distributed control and making it easier to assert control during a system fault. The areas of the microgrid are equipped with dispatchable and non-dispatchable distributed generators, respectively. In an experiment, Ref. [26] deployed three diesel power plants and one hydropower plant, all based on synchronous generators, together with two types of load: controlled and uncontrolled. The proposed approach uses machine-learning techniques to detect event signatures in the power system features. A multiclass classification algorithm was then applied to the generated feature data, enabling self-healing through post-fault decision-making that restored the standalone microgrid system without intervention from the central power station.
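The post-fault decision step can be illustrated with a multiclass classifier that maps a feature vector of event signatures to an event class and then to a predefined action. This sketch uses a nearest-centroid classifier as a simple stand-in, because the specific algorithm of [26] is not described here; the three features (frequency deviation, voltage magnitude, and rate of change of frequency), the training samples, and the action table are all hypothetical.

```python
import math


def fit_centroids(samples):
    """samples: iterable of (feature_vector, label); returns label -> mean feature vector."""
    sums, counts = {}, {}
    for x, label in samples:
        acc = sums.setdefault(label, [0.0] * len(x))
        for j, value in enumerate(x):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify_event(centroids, x):
    """Assign x to the class whose centroid is nearest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


# Hypothetical labelled signatures: (frequency deviation Hz, voltage pu, df/dt Hz/s).
TRAINING = [
    ((0.01, 1.00, 0.00), "normal"),
    ((-0.02, 0.99, 0.01), "normal"),
    ((-0.10, 0.55, -0.20), "line_fault"),
    ((-0.15, 0.60, -0.30), "line_fault"),
    ((-0.80, 0.95, -1.50), "generator_trip"),
    ((-0.70, 0.93, -1.20), "generator_trip"),
]
# Hypothetical post-fault decisions for each predicted class.
POST_FAULT_ACTION = {
    "normal": "no action",
    "line_fault": "isolate the faulted section",
    "generator_trip": "shed uncontrolled load and redispatch remaining generators",
}


def post_fault_decision(centroids, x):
    return POST_FAULT_ACTION[classify_event(centroids, x)]
```

In practice the features would usually be standardised first, since nearest-centroid distances are sensitive to feature scale, and a stronger multiclass model could replace `classify_event` without changing the decision table.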

4.3. Machine-Learning Algorithms

Machine-learning (ML) algorithms can be used individually or in combination to create more accurate and comprehensive models of system behaviour. By analysing system data in real time, ML algorithms can enable self-healing cyber–physical systems to detect, diagnose, and potentially correct faults or failures before they cause significant damage or downtime. Table 3 lists the state-of-the-art algorithms used in self-healing systems for sensing, mining, and prediction.

Table 3.

Classification of the existing self-healing algorithms.

4.3.1. Monte Carlo Tree Search

Monte Carlo tree search (MCTS) is an algorithm used in decision-making processes, especially when the outcome of an action is uncertain. It works by incrementally building a tree of possible future states (originally, game states) and simulating many random playouts from those states to estimate which actions are most likely to lead to successful outcomes.

MCTS can be used to decide how to recover from failures in a complex system. For example, suppose that a large computer network loses one of its nodes. The self-healing system would need to determine the best course of action to recover from this failure, considering factors such as the system’s current state, the possible causes of the loss, and the likely effectiveness of different recovery strategies. MCTS is described by [1] as a method for making optimal decisions in artificial intelligence problems that combines stochastic simulation with the accuracy of tree search. The algorithm builds the search tree through a large number of random samples, gains progressively greater insight into the system, and uses the sampled results to calculate the optimal strategy. For example, when faced with unexpected risks in the power grid scenario, MCTS chooses the optimal strategy configuration through sampling and estimation; as the number of samples increases, the obtained strategy approaches the optimal one. In the context of self-healing, MCTS can construct a tree of possible recovery strategies, simulate the effect of each strategy on the system, and then select the strategy most likely to result in successful recovery. The simulation can take into account factors such as the likelihood of further failures, the time each strategy needs to take effect, and the potential impact on other parts of the system. MCTS is therefore a powerful tool for self-healing systems, enabling them to make informed decisions in complex and uncertain environments. However, MCTS is only as effective as the models used to construct the tree, and it may require significant computational resources to run effectively in large-scale systems.
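The following Python sketch applies MCTS with upper confidence bound (UCB1) selection, the common UCT variant, to a toy recovery problem: a failed node can be repaired by one of three actions, each with a hypothetical success probability and time cost, and the controller has at most three attempts. The action names, probabilities, costs, and reward are assumptions for illustration and are not the power-grid formulation of [1]. Each iteration selects actions by UCB1 down the existing tree, expands one new state, estimates its value with a random rollout, and back-propagates the sampled return.

```python
import math
import random

# Hypothetical recovery options: name -> (probability the action restores the node, time cost).
ACTIONS = {
    "restart_node": (0.2, 1.0),
    "reroute_traffic": (0.8, 2.0),
    "failover_replica": (0.95, 5.0),
}
MAX_ATTEMPTS = 3        # attempts allowed before the controller gives up
RECOVERY_REWARD = 10.0  # reward for restoring the node; time costs are subtracted


class Node:
    """Decision state 'node still failed, k attempts left', with per-action statistics."""

    def __init__(self, attempts_left: int):
        self.attempts_left = attempts_left
        self.children = {}                      # action -> Node reached if the action fails
        self.visits = {a: 0 for a in ACTIONS}
        self.total = {a: 0.0 for a in ACTIONS}  # sum of sampled returns per action
        self.n = 0


def ucb1(node: Node, action: str, c: float = 1.4) -> float:
    if node.visits[action] == 0:
        return math.inf  # try every action once before exploiting
    mean = node.total[action] / node.visits[action]
    return mean + c * math.sqrt(math.log(node.n) / node.visits[action])


def rollout(attempts_left: int, rng: random.Random) -> float:
    """Default policy: pick random actions until recovery or no attempts remain."""
    ret = 0.0
    while attempts_left > 0:
        p, cost = ACTIONS[rng.choice(list(ACTIONS))]
        ret -= cost
        if rng.random() < p:
            return ret + RECOVERY_REWARD
        attempts_left -= 1
    return ret


def iterate(node: Node, rng: random.Random) -> float:
    """One MCTS iteration from `node`: select, expand, simulate, back-propagate."""
    action = max(ACTIONS, key=lambda a: ucb1(node, a))          # selection
    p, cost = ACTIONS[action]
    if rng.random() < p:                                         # sampled chance outcome
        ret = RECOVERY_REWARD - cost
    elif node.attempts_left == 1:
        ret = -cost                                              # failed on the last attempt
    elif action not in node.children:
        node.children[action] = Node(node.attempts_left - 1)     # expansion
        ret = -cost + rollout(node.attempts_left - 1, rng)       # simulation
    else:
        ret = -cost + iterate(node.children[action], rng)        # descend the tree
    node.n += 1                                                  # back-propagation
    node.visits[action] += 1
    node.total[action] += ret
    return ret


def plan(iterations: int = 2000, seed: int = 0) -> tuple[str, Node]:
    rng = random.Random(seed)
    root = Node(MAX_ATTEMPTS)
    for _ in range(iterations):
        iterate(root, rng)
    best = max(ACTIONS, key=lambda a: root.visits[a])  # most-visited root action
    return best, root
```

Returning the most-visited root action rather than the highest mean is a common, robust choice. Raising `iterations` generally improves the estimates, at the computational cost noted above.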

4.3.2. Deep Learning

Deep learning (DL) is a subfield of ML that uses neural networks with multiple layers to extract high-level features from raw data. It can be used to develop models that automatically detect and diagnose failures in complex systems so that appropriate recovery actions can be taken. One application of DL in self-healing systems is predictive maintenance, where models are trained to detect anomalies in system data that may indicate a potential failure. The models can be trained on historical data to learn the system’s expected behaviour and then use that knowledge to detect deviations that may indicate a failure. Once a failure is detected, the self-healing system can take appropriate actions to prevent or mitigate its effects. A study by Zhiyuan in [1] demonstrated that DL is an efficient feature extraction method in machine learning. DL aims to build a deep model that combines less representative, low-level features of the data into representations that capture more detailed characteristics of the data. According to [1], the three main aspects of DL are unsupervised training, data sample alignment, and data sample testing. The authors of [1] noted that the essence of DL is to find more valuable features of a dataset by constructing a model with many hidden layers and training it on an extensive dataset to improve the accuracy of classification and prediction. The predictive nature of DL can also be used for system fault diagnosis, where models identify the root cause of a failure. These models can be trained to analyse sensor data or other system inputs to identify patterns associated with specific types of failures. Once the root cause is identified, the self-healing system can take appropriate actions to address the underlying issue and prevent future failures.
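The predictive-maintenance idea of learning normal behaviour and flagging deviations can be sketched with a small autoencoder trained only on data from normal operation, where a large reconstruction error marks a sample as anomalous. This NumPy sketch is an illustration under stated assumptions rather than the method of [1]: the layer sizes, learning rate, number of epochs, synthetic sensor data, and the 99th-percentile threshold are all hypothetical choices.

```python
import numpy as np


class Autoencoder:
    """Fully connected autoencoder with tanh hidden layers and a linear output layer."""

    def __init__(self, sizes: list[int], seed: int = 0):
        rng = np.random.default_rng(seed)
        # Scaled Gaussian initialisation keeps early activations in tanh's near-linear range.
        self.W = [rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
        self.b = [np.zeros(n) for n in sizes[1:]]

    def forward(self, X: np.ndarray) -> list[np.ndarray]:
        """Return the activations of every layer, input first and reconstruction last."""
        acts = [X]
        last = len(self.W) - 1
        for i, (W, b) in enumerate(zip(self.W, self.b)):
            z = acts[-1] @ W + b
            acts.append(z if i == last else np.tanh(z))
        return acts

    def fit(self, X: np.ndarray, epochs: int = 3000, lr: float = 0.1) -> "Autoencoder":
        """Full-batch gradient descent on the batch-mean of the per-sample squared error."""
        for _ in range(epochs):
            acts = self.forward(X)
            grad = 2.0 * (acts[-1] - X) / len(X)  # dLoss/d(linear output)
            for i in reversed(range(len(self.W))):
                grad_W = acts[i].T @ grad
                grad_b = grad.sum(axis=0)
                if i > 0:
                    # Propagate through W[i] (before updating it) and the tanh derivative.
                    grad = (grad @ self.W[i].T) * (1.0 - acts[i] ** 2)
                self.W[i] -= lr * grad_W
                self.b[i] -= lr * grad_b
        return self

    def score(self, X: np.ndarray) -> np.ndarray:
        """Per-sample mean squared reconstruction error; large values suggest anomalies."""
        return np.mean((self.forward(X)[-1] - X) ** 2, axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    mixing = np.array([[1.0, 0.5, 0.0, 1.0], [0.0, 1.0, 1.0, -0.5]])
    # Synthetic "normal" readings: four correlated sensors driven by two hidden factors.
    normal = rng.normal(0.0, 0.5, (500, 2)) @ mixing + rng.normal(0.0, 0.01, (500, 4))
    model = Autoencoder([4, 8, 2, 8, 4]).fit(normal)
    threshold = np.percentile(model.score(normal), 99)
    reading = np.array([[1.0, -1.0, 1.0, 1.0]])  # breaks the learned sensor correlations
    print(model.score(reading)[0] > threshold)
```

The two-unit bottleneck forces the network to capture only the correlations present in normal data, so a reading that violates them is reconstructed poorly; in a self-healing system, such a flag would trigger the diagnosis and remediation steps discussed earlier.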

4.3.3. Intensive Learning