Abstract

Due to the limited local range of the perception and object recognition mechanisms used by autonomous vehicles, incorrect decisions can be made, which can jeopardize fully autonomous operation. A connected and autonomous vehicle should be able to combine its local perception with the perceptions of other vehicles to improve its capability to detect and predict obstacles. Such a collective perception system aims to expand the field of view of autonomous vehicles, augmenting their decision-making process and, as a consequence, increasing driving safety. Despite the benefits of a collective perception system, autonomous vehicles must intelligently select which data should be shared, with whom, and when, in order to conserve network resources while maintaining the overall perception accuracy and timeliness. In this context, this article analyzes the operational impact and benefits of a redundancy reduction mechanism for collective perception among connected autonomous vehicles. We propose VILE, a reliable redundancy mitigation mechanism for collective perception services that reduces the transmission of unnecessary messages. VILE organizes the cooperative perception process into three phases: knowledge, selection, and perception. The results show that VILE reduces the absolute number of redundant objects by 75% and the number of generated packets by up to 55%. Finally, we discuss possible research challenges and trends.

1. Introduction

The operation of a connected and autonomous vehicle (CAV) is based on three layers, namely sensing, perception, and decision making [1,2]. At the sensing layer, CAVs are equipped with an array of onboard sensors, such as high-resolution cameras, RADAR, light detection and ranging (LIDAR), global positioning system (GPS), inertial measurement unit (IMU), and ultrasonic sensors [3,4]. In this way, the sensing layer provides a vast amount of environmental and contextual data [5,6]. This sensing capability is a prerequisite for a vehicle to perceive the surrounding environment and make proper and timely decisions that ensure the comfort and safety of passengers [7,8]. However, regardless of the technology used, onboard sensors are limited by the CAV's field of view (FoV) and by occlusions caused by other moving vehicles or roadside objects.

The perception layer is responsible for providing the vehicle with knowledge of the surrounding environment, allowing it to identify and track pedestrians, animals, and other vehicles, as well as accidents and dangerous situations, among other tasks [9]. In summary, the perception layer must: (i) provide precise information about the driving environment; (ii) operate reliably without frequent malfunctions; and (iii) avoid processing delays that affect real-time applications (e.g., overtaking maneuvers) [10,11]. However, the sensors' restricted FoV and potential inaccuracy result in an incomplete or erroneous perception of the surrounding environment [10,12]. Consequently, the limitations and inherent challenges of the perception layer can cause complex driving applications, such as collision avoidance systems, blind crossing, location prediction, and high-definition maps, to malfunction [13,14].

To cope with this issue, CAVs should not depend only on onboard sensors or on mechanisms that operate locally, because the decision layer can make more precise decisions when the perception layer also provides information from other vehicles, sensors, people, cities, etc. [15,16]. In this sense, collective perception (CP) emerges as an alternative to enhance the sensing layer information with data from other vehicles: vehicles share their sensed and perceived data via wireless communication to obtain a more accurate and comprehensive perception of their surroundings and to ensure the safety of CAVs [17,18]. CP services provide more information to detect safety-critical objects, avoid blind spots, detect non-connected road users, and better track objects [16,19]. Hence, CP provides an updated view of a larger environment by considering information from neighboring vehicles [15,16]. Finally, the decision layer is responsible for continuously taking efficient and safe driving actions in real time, such as overtaking maneuvers, platoon formation, predictions, route and motion planning, and obstacle avoidance. These decisions are possible through the collective knowledge of the scenario provided by the perception layer [20,21].

However, CP can lead to a significant amount of information being exchanged between vehicles, which decreases the performance of the vehicle-to-everything (V2X) network as well as the effectiveness of CP. One way to mitigate this problem is to reduce redundant shared data, which can be achieved by correlating different data sources or by taking vehicle mobility patterns into account. Moreover, redundant data indicate that many vehicles may be perceiving the same region simultaneously [22]. It is important to note that the processing delay of a given piece of data should not be too long; otherwise, the processing results would be out of date with respect to the real state of the environment. Therefore, CP may suffer increased transmission latency if no technique is used to reduce transmission redundancy [23,24,25,26].

Existing studies [27,28,29,30] seek to reduce the overhead of the CP system by controlling the redundant information exchanged between vehicles. For instance, some works [27,31] propose schemes that enable CAVs to adapt the transmission of each tracked object based on information such as position, carrying density, and road geometry. However, to the best of our knowledge, the data selection works in the literature do not consider the presence of several regions of interest in the CP scheme. A region-based data selection scheme reduces frequent message transmissions, enabling CP applications to operate while maintaining the same perception of the environment and the same number of detected objects.

In this article, we describe the operational impacts and benefits associated with CP for CAVs. To this end, we review the state of the art of CP for CAVs and the critical research challenges related to CP. Based on our examination of the relevant literature, we identify four research challenges, namely, redundant information, perception fusion and dissemination, privacy, and security. In addition, we introduce a cooperatiVe perceptIon for the autonomous vehicLEs mechanism, called VILE, which shows the benefits of CP by reducing the amount of redundant data while maintaining the number of detected objects compared to the basic transmission approach. To achieve that, we divide the scenario into a set of regions, and in each region, vehicles hold perception data, such as the number of objects detected. VILE relies on a data selection algorithm that chooses a given vehicle to share the perception of a specific area, reducing communication overhead. Furthermore, VILE considers three phases (i.e., knowledge, selection, and perception) in the CP process. Evaluation results demonstrate the efficiency of VILE in reducing the amount of redundant data by up to 85%, while maintaining the number of detected objects compared to the basic transmission approach defined by the European Telecommunications Standards Institute (ETSI) [32]. VILE also meets the requirements for the operation of CP applications, as it maintains the same number of pedestrians and vehicles detected and shared with the other CAVs. The main contributions of this article are as follows: (i) the VILE mechanism to mitigate data redundancy for the CAV object detection application; (ii) the evaluation of CP to mitigate data redundancy for the CAV object detection application; and (iii) the identification of potential research challenges, opportunities, and requirements for CP in CAVs.

The remainder of this article is structured as follows. Section 2 describes the current state of the art in CP for CAVs. Section 3 presents the system model and operation of the proposed VILE mechanism. Section 4 introduces the simulation scenario and methodology and discusses the results obtained. Section 5 presents the key research challenges related to CP. Section 6 concludes this article and presents directions for future work.

2. State of the Art in Collective Perception for Connected and Autonomous Vehicles

This section provides an overview of CP applied to CAVs. In addition, we introduce the state-of-the-art requirements and opportunities for CP in CAVs in terms of congestion control and redundancy mitigation; data selection and fusion for CAV applications that may increase vehicle perception; and the safety aspects of CP applications. Based on a detailed study of the literature and state of the art of CP in CAVs, we classified existing works into different areas, namely communication, data selection and fusion, and privacy and security.

2.1. CP Overview

CP allows vehicles to exchange collective perception messages (CPMs) with each other to obtain a more accurate and comprehensive perception of their surroundings, improving traffic safety and efficiency for CAVs [16]. ETSI defines the CPM within a basic service protocol; the message contains information about objects perceived by CAVs. Specifically, ETSI defines the message format and the rules for CPM generation [32,33]. In this way, CPMs allow CAVs to share information about their surroundings (e.g., objects detected by sensors such as cameras, RADAR, and LIDAR) [34,35]. A CPM contains data about the vehicle that generated it, its onboard sensors (e.g., range and FoV), and the detected objects (e.g., position, speed, distance, and size) [22,27].

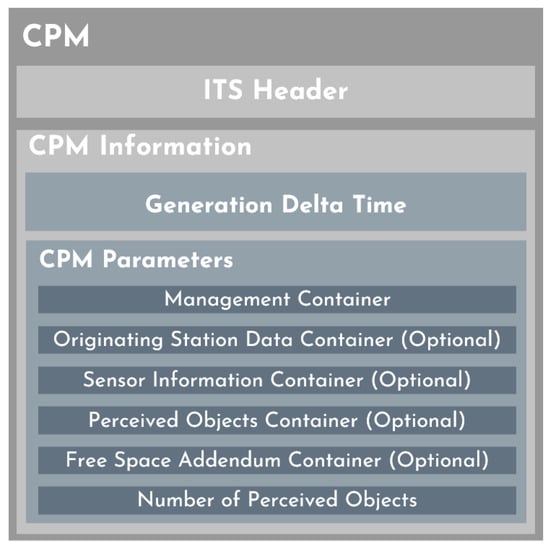

In addition, CPMs can be used to send information about tracked objects; a tracked object is included in a CPM when at least one of the following conditions holds: (i) the detected object has moved at least 4 m since its last CPM transmission; (ii) the speed of the detected object has changed by more than a threshold value (in m/s) since its last CPM transmission; (iii) the detected object has not been included in recent CPMs. Figure 1 presents the general structure of a CPM. A CPM consists of one standard intelligent transport system (ITS) protocol data unit (PDU) header and multiple containers. The ITS PDU header includes the protocol version and the message type. The CPM information must include the parameters describing the objects identified within the CAV's FoV.
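The inclusion conditions above can be sketched as a per-object check. This is a minimal sketch, assuming that an object is included when any one condition holds and that a newly detected object is always included. The 4 m threshold comes from the text; the 0.5 m/s speed threshold and the 1 s maximum interval are assumptions based on the ETSI TR 103 562 generation rules, and `ObjectState` and `should_include` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional

DIST_THRESHOLD_M = 4.0      # stated in the text
SPEED_THRESHOLD_MPS = 0.5   # assumption (ETSI TR 103 562)
MAX_INTERVAL_S = 1.0        # assumption (ETSI TR 103 562)


@dataclass
class ObjectState:
    x: float      # position in metres
    y: float
    speed: float  # absolute speed in m/s
    t: float      # timestamp in seconds


def should_include(current: ObjectState, last_sent: Optional[ObjectState]) -> bool:
    """True if a tracked object should be included in the next CPM (any rule suffices)."""
    if last_sent is None:  # assumption: an object never sent before is included
        return True
    moved = math.hypot(current.x - last_sent.x, current.y - last_sent.y)
    if moved >= DIST_THRESHOLD_M:  # rule (i): moved at least 4 m
        return True
    if abs(current.speed - last_sent.speed) > SPEED_THRESHOLD_MPS:  # rule (ii)
        return True
    return current.t - last_sent.t >= MAX_INTERVAL_S  # rule (iii)
```

Because the rules are evaluated per object, a CPM produced this way carries only the objects that changed noticeably, which is the property the redundancy mitigation schemes discussed below try to push further.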

Figure 1.

ETSI collective perception message (CPM) general structure [32].

A CPM can be broadcast either by a CAV or by a roadside unit (RSU). Some CPM fields are optional and describe the perception observed within the sensor FoV. The management container provides fundamental information about the transmitting entity, whether it is a CAV or an RSU. The sensor information container contains the data for each sensor equipped on a vehicle, such as its range and its horizontal and (optional) vertical opening angles. Furthermore, the free space addendum container may represent varying confidence levels for a given sensor's FoV. Finally, the perceived object container includes a detailed description of each detected object's dynamic state (i.e., speed, acceleration, and direction) and other properties related to the object's behavior.
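The container layout described above can be summarized as a plain data structure. This is an illustrative sketch only: the class and field names are hypothetical, the types are simplified, and the structure does not follow the ASN.1 definition in the ETSI specification.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ItsPduHeader:
    protocol_version: int
    message_id: int  # identifies the message type (CPM)
    station_id: int


@dataclass
class ManagementContainer:
    station_type: str                       # "vehicle" or "rsu"
    reference_position: Tuple[float, float]  # (x, y) in metres


@dataclass
class SensorInformation:
    sensor_id: int
    range_m: float
    horizontal_opening_deg: float
    vertical_opening_deg: Optional[float] = None  # optional field


@dataclass
class PerceivedObject:
    object_id: int
    position: Tuple[float, float]
    speed: float
    acceleration: float
    heading_deg: float


@dataclass
class CollectivePerceptionMessage:
    header: ItsPduHeader
    management: ManagementContainer
    sensors: List[SensorInformation] = field(default_factory=list)
    perceived_objects: List[PerceivedObject] = field(default_factory=list)
    free_space_addendum: Optional[list] = None  # optional container

    def is_from_rsu(self) -> bool:
        """True when the transmitting entity is a roadside unit."""
        return self.management.station_type == "rsu"
```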

2.2. Communication Aspect

Regarding CPM creation, the frequency of CPM generation is crucial, since vehicles that create and send many CPMs can overload the communication channel. Therefore, ETSI has proposed a first set of CPM generation rules for CP applications [32]. These rules dictate when CAVs must transmit CPMs and what the messages must contain. Frequent transmission of CPMs might enhance knowledge about the CAVs' surroundings. However, the CPM transmission rate should lie between 5 Hz and 10 Hz, and without coordination among vehicles, multiple observations of the same object are frequently broadcast through the network. The increased network demand might result in transmission delays, reducing the usefulness of CP applications. As a result, it is important to consider advanced techniques that dynamically control the redundancy of CPMs transmitted over the wireless channel while maintaining high awareness of the driving environment.
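A back-of-the-envelope estimate shows how quickly uncoordinated generation adds up. This sketch assumes every vehicle sends one CPM per generation period, uses the average object size of about 30 bytes mentioned in Section 3.1, and adds a hypothetical fixed overhead of 100 bytes per message; the vehicle and object counts are illustrative inputs, not measured values.

```python
BYTES_PER_OBJECT = 30        # average per-object size (Section 3.1)
HEADER_OVERHEAD_BYTES = 100  # hypothetical fixed header/container overhead


def offered_load_kbps(n_vehicles: int, rate_hz: float, objects_per_cpm: int) -> float:
    """Aggregate CPM load (kbit/s) when every vehicle transmits independently."""
    cpm_bytes = HEADER_OVERHEAD_BYTES + BYTES_PER_OBJECT * objects_per_cpm
    return n_vehicles * rate_hz * cpm_bytes * 8 / 1000


for rate in (5, 10):
    print(f"{rate} Hz: {offered_load_kbps(100, rate, 20):.0f} kbit/s")
# 5 Hz: 2800 kbit/s
# 10 Hz: 5600 kbit/s
```

The load grows linearly with both the number of transmitters and the generation rate, so reducing the number of vehicles that report the same area is as effective as reducing the rate.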

Huang et al. [22] investigated the data redundancy issue in highway scenarios and demonstrated that repeated transmissions might lead to significant network loads, especially in dense traffic. The authors also discussed the effects of transmission redundancy and proposed a probabilistic data selection scheme to suppress redundant transmissions. The scheme enables CAVs to adapt the transmission of each tracked object based on position, carrying density, and road geometry. The results demonstrated that the proposed technique can reduce the communication cost for both V2V- and V2I-based CP while retaining an appropriate perception sharing ratio.
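The general idea of probabilistic suppression can be illustrated with a deliberately simple rule. This is a generic sketch, not the scheme of [22] (whose probability depends on position, density, and road geometry): each of the k vehicles observing the same object shares it with a hypothetical probability 1/k, so that about one copy is transmitted on average.

```python
import random


def transmit_probability(n_observers: int, target_copies: float = 1.0) -> float:
    """Hypothetical rule: aim for about `target_copies` transmissions per object."""
    return min(1.0, target_copies / max(n_observers, 1))


def simulate(n_observers: int, trials: int = 10_000, seed: int = 1) -> float:
    """Average number of vehicles that end up sharing the same object."""
    rng = random.Random(seed)
    p = transmit_probability(n_observers)
    total = sum(
        sum(rng.random() < p for _ in range(n_observers)) for _ in range(trials)
    )
    return total / trials


print(simulate(1), simulate(8))  # both close to 1.0 copy per object
```

The weakness of such a plain rule is coverage: an object observed by k vehicles is not shared at all with probability (1 - 1/k)^k, about 0.34 for k = 8, which is why practical schemes weight the probability with additional context.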

Thandavarayan et al. [31] proposed an algorithm that modifies the ETSI generation rules to rearrange the transmission of CPM data, so that CAVs broadcast fewer CPMs than under the ETSI standard, with each CPM carrying information about a larger number of detected objects. As a result, the method decreased the channel load, enhanced the reliability of V2X communications, and improved CAV sensing capabilities.

Ishihara et al. [30] presented an overview of congestion control for collective awareness and CP applications and proposed a relative position-based priority method to control congestion. This method regulates the frequency of beacon message transmissions based on the relative positions of neighboring vehicles: CAVs decide how often beacon messages with information about detected objects are sent based on how close they are to other vehicles. The method can be combined with other strategies, such as the current ETSI generation rule and its variations. It reduces CPM traffic by applying a simple rule based on the position of a CAV relative to the surrounding vehicles.

Schiegg et al. [27] examined the performance of the CP service in IEEE 802.11p networks with regard to its impact on the environmental perception acquired by traffic participants and concluded that the service should be developed further. The primary objective is to support the ongoing ETSI standardization work in this domain. In addition, the authors adapt the analytical model used to evaluate the performance of the CP service over C-V2X.

Garlichs et al. [29] proposed a set of generation rules for the CP service that provides a trade-off between two factors that the rules can significantly impact: the service quality delivered to receiving intelligent transport system stations and the network load generated on the radio channel. The proposed generation rules also include a message segmentation mechanism that enables the generation of sequential, independent CPMs. The rules are assessed in a thorough simulation study of their behavior in various traffic scenarios and radio configurations.

Delooz and Festag [28] investigated three schemes for filtering the objects included in messages, thereby adjusting the network load and optimizing the transmission of perceived objects. The simulation-based performance evaluation shows that filtering is an effective method for improving network-related performance metrics, with only a minor reduction in perception quality. The authors also investigate whether filtering the perceived objects carried in CPMs is an appropriate mechanism for optimizing vehicle perception capabilities, and they see filtering as a complement to the message generation rules based on vehicle dynamics.
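Object filtering can be pictured as capping the number of objects per CPM and ranking candidates by a priority. The sketch below assumes a hypothetical distance-based priority (objects closest to the sender first); it illustrates filtering in general and is not one of the three schemes evaluated in [28].

```python
import math
from typing import List, Tuple

Obj = Tuple[int, float, float]  # (object_id, x, y) in metres


def filter_objects(objects: List[Obj], ego: Tuple[float, float], max_objects: int) -> List[Obj]:
    """Keep at most `max_objects` objects, preferring those closest to the sender."""
    ranked = sorted(objects, key=lambda o: math.hypot(o[1] - ego[0], o[2] - ego[1]))
    return ranked[:max_objects]


print(filter_objects([(1, 50.0, 0.0), (2, 10.0, 0.0), (3, 100.0, 0.0)], (0.0, 0.0), 2))
```

Any other priority (object speed, collision relevance, time since last inclusion) can be swapped in through the `key` function without changing the cap logic.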

2.3. Data Selection and Fusion Aspect

CP over wireless networks cannot depend on the exchange of raw sensor data, because the limited availability of communication resources makes it necessary to select or fuse data before transmission. Therefore, raw sensor data must be effectively combined to save storage space and transmission resources. Several strategies [36,37,38] have been presented for handling and processing such raw sensor data. Data fusion approaches may integrate the shared perceptual information provided by CP applications to increase the accuracy of detected objects. In addition to minimizing resource consumption, these strategies enhance the identification of obstacles in the driving environment by integrating multi-sensor data, increasing the navigational safety of autonomous cars [17,39].

Data fusion using a large set of redundant information leads to an overconfident estimate; thus, it is important to use adaptive methods to select the information to be fused [36,38]. As CP techniques rely on broadcast data without universally interpretable object identifiers, participating CAVs must perform data association to sort and organize the received data before fusing it. In addition, it is crucial to examine the spatial and temporal relevance of the objects whose data will be fused, as well as its effect on the results produced [10].

Abdel-Aziz et al. [36] studied the joint problem of associating vehicles, allocating resource blocks (RBs), and selecting the content of the exchanged CPMs to maximize the vehicles' satisfaction in terms of received sensory information. The authors consider a quadtree-based point cloud compression mechanism and analyze a reinforcement learning (RL)-based approach to vehicular association, RB allocation, and CPM content selection.

Yoon et al. [40] proposed and evaluated a unified CP framework based on vehicle-to-vehicle (V2V) connectivity. The authors presented a generalized framework for decentralized multi-vehicle CP, as well as a systematic analysis of the framework's inherent limitations and opportunities. The framework must take into account the ad hoc and random nature of traffic and connectivity, providing scalability and robustness across various traffic densities and scenarios.

Lima et al. [37] studied the split covariance intersection filter, which is a method capable of handling both independent and arbitrarily correlated estimates and observation errors. Various filtering solutions based on the covariance intersection filter or split covariance intersection filter were presented.
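The covariance intersection (CI) filter that underlies the split variant fuses two estimates $(\hat{x}_a, P_a)$ and $(\hat{x}_b, P_b)$ whose cross-correlation is unknown as $P^{-1} = \omega P_a^{-1} + (1-\omega) P_b^{-1}$ and $\hat{x} = P(\omega P_a^{-1}\hat{x}_a + (1-\omega) P_b^{-1}\hat{x}_b)$, with $\omega \in [0, 1]$ typically chosen to minimize the trace of $P$. The sketch below implements plain (not split) CI for 2-D position estimates, choosing $\omega$ by a simple grid search; it illustrates the standard technique and is not the filter studied in [37].

```python
from typing import List, Tuple

Mat = List[List[float]]
Vec = List[float]


def inv2(m: Mat) -> Mat:
    """Inverse of a 2x2 matrix."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]


def combine(w: float, a: Mat, b: Mat) -> Mat:
    """Weighted sum w*a + (1 - w)*b of two 2x2 matrices."""
    return [[w * a[i][j] + (1 - w) * b[i][j] for j in range(2)] for i in range(2)]


def matvec(m: Mat, v: Vec) -> Vec:
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def covariance_intersection(xa: Vec, pa: Mat, xb: Vec, pb: Mat,
                            steps: int = 100) -> Tuple[Vec, Mat, float]:
    """Fuse two 2-D estimates with unknown cross-correlation (grid search over w)."""
    ia, ib = inv2(pa), inv2(pb)
    best = None
    for k in range(steps + 1):
        w = k / steps
        p = inv2(combine(w, ia, ib))  # fused covariance for this weight
        trace = p[0][0] + p[1][1]
        if best is None or trace < best[0]:
            best = (trace, w, p)
    _, w, p = best
    ya, yb = matvec(ia, xa), matvec(ib, xb)  # information vectors
    x = matvec(p, [w * ya[0] + (1 - w) * yb[0], w * ya[1] + (1 - w) * yb[1]])
    return x, p, w
```

For example, fusing an estimate that is precise in x (`pa = [[1, 0], [0, 4]]`) with one that is precise in y (`pb = [[4, 0], [0, 1]]`) selects w = 0.5 and yields a fused estimate that leans toward each source along its precise axis, without assuming the two errors are independent.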

Miller et al. [38] created a perception and localization system to allow a vehicle with low-fidelity sensors to incorporate high-fidelity observations from a vehicle in front of it, allowing both vehicles to operate autonomously. The system shares observed vehicle tracks and their associated uncertainties, as well as localization estimates and uncertainties. The associated measurements are then fused in an extended Kalman filter to provide high-fidelity estimates of tracked vehicle states at the current time.
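The extended Kalman filter used in [38] linearizes nonlinear motion and measurement models; for a directly observed scalar state, its measurement step reduces to the standard linear update sketched below. This simplified illustration assumes independent Gaussian errors and an identity measurement model, and it is not the system of [38].

```python
from typing import Tuple


def kalman_update(x_pred: float, p_pred: float, z: float, r: float) -> Tuple[float, float]:
    """Scalar Kalman measurement update with a direct observation (H = 1).

    x_pred, p_pred: local (e.g., low-fidelity) estimate and its variance
    z, r:           received (e.g., high-fidelity) observation and its variance
    """
    k = p_pred / (p_pred + r)          # Kalman gain: trust in the observation
    x_new = x_pred + k * (z - x_pred)  # corrected estimate
    p_new = (1 - k) * p_pred           # reduced variance after fusion
    return x_new, p_new


x, p = kalman_update(10.0, 4.0, 12.0, 1.0)  # x is about 11.6, p about 0.8
```

Unlike covariance intersection, this update assumes the two error sources are uncorrelated; fusing the same shared observation repeatedly would therefore shrink the variance too much, which is the overconfidence problem mentioned at the start of this subsection.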

Aoki et al. [41] presented a CP scheme with deep reinforcement learning to improve the detection accuracy of surrounding objects. The scheme reduces the network load in vehicular communication networks and improves communication reliability by using deep reinforcement learning to select the data to transmit. The authors also demonstrated a collective and intelligent vehicle simulation platform, which was used to design, test, and validate the CP scheme.

Masi et al. [10] presented a dedicated system design with a custom data structure and light-weight routing protocol for easy data encapsulation, fast interpretation, and transmission. The authors also proposed a comprehensive problem formulation and an efficient fitness-based sorting algorithm to choose the most valuable data to display at the application layer.

2.4. Privacy and Security Aspect

As soon as data leave the vehicle, it is important to guarantee that any personal data are processed lawfully. Several works [42,43,44] are still evaluating how to comply with data protection regulations in CP applications. CP deals with mobility and sensor data; thus, monitoring CAVs might breach users' privacy. Furthermore, relying on CP applications for continuous and comprehensive environmental data may raise privacy problems [17,32].

Perception data could reveal the movements and actions of the driver, who is not necessarily the vehicle owner. Therefore, broadcasting CPMs with user location information may pose a privacy risk for both the car owner and the driver [17,32]. However, privacy issues may be addressed if the information is filtered, anonymized, and aggregated (for anonymity and data quality reasons) by trustworthy back-end systems before being shared with other parties. Volk et al. [42] introduced a safety metric for object perception that incorporates several perception parameters and returns a single, easily interpretable safety assessment score. This metric is compared with state-of-the-art metrics using real-world and virtual datasets. It uses responsibility-sensitive safety (RSS) definitions to identify collision-relevant zones and penalizes hidden collision-relevant objects. The RSS model consists of 34 definitions of various safety distances, timings, and procedural rules that mathematically codify human judgment in various traffic conditions. RSS rules specify the proper behavior of an autonomous vehicle and provide a mathematical description of safe conduct.

Shan et al. [45] investigated and demonstrated, using representative experiments, how a CAV achieves improved safety and robustness when perceiving and interacting with RSUs, using CPM information from an intelligent infrastructure in various traffic environments and with different setups. The authors showed how CAVs can interact autonomously and safely with walking and running pedestrians using only CPM information from the RSUs via vehicle-to-infrastructure (V2I) communication.

Guo et al. [43] provided a trustworthy information-sharing framework for CAVs, in which vehicles measure each other's trust using the Dirichlet-categorical model. The authors apply trust modeling and trust management techniques developed for vehicular networks to CAVs in order to achieve trustworthy perception information sharing. Enhanced super-resolution generative adversarial networks are used to improve the resolution of images containing distant objects, allowing a vehicle to assess the trustworthiness of more of the data transmitted by others. Based on the results of shared object detection, the work first crops out all distant objects from captured images and then detects potential objects by applying the super-resolution model to these blurry images.

Hurl et al. [46] examined a novel approach for CAVs to communicate perceptual observations, tempered by trust modeling of the peers providing the reports. Based on the accuracy of reported object detections as verified locally, transmitted messages can be fused to improve perception performance beyond line of sight and at long distances from the ego vehicle. The authors proposed a method for integrating trust modeling and collective perception into an end-to-end distributed perception model, as well as the TruPercept dataset, a multi-vehicle perspective perception dataset for CAVs collected in a realistic environment.

3. Collective Perception Mechanism for Autonomous Vehicles

This section describes the VILE mechanism, which considers three phases in the CP process, namely, knowledge, selection, and perception. In the knowledge phase, the scenario is divided into a set of regions (i.e., hexagonal perception regions), and the RSU receives beacons from the CAVs to build a dynamic graph. Next, in the selection phase, the RSU selects, in each region, the vehicle closest to the hexagon's center and sends it a message requesting its perception of the surrounding environment. Finally, in the perception phase, the chosen CAVs broadcast their perception to the other CAVs in the scenario. In the following, we introduce the network and system model, as well as the mechanism operation.

3.1. Network and System Model

We consider a CAV scenario composed of $n$ autonomous vehicles (nodes) moving in a multi-lane urban or highway area, where each vehicle $v_i$ has an individual identity ($i \in \{1, \dots, n\}$). These vehicles are represented in a dynamic graph $G(t) = (V, E(t))$, where the vertices $V = \{v_1, \dots, v_n\}$ represent a finite set of vehicles, and the edges $E(t)$ form a finite set of asymmetric wireless links between neighboring vehicles $v_i$ and $v_j$. Each vehicle moves in a certain direction ($\theta_i$) following a travel path (a set of roads connected by intersections) with a speed ranging between a minimum ($s_{min}$) and a maximum ($s_{max}$) speed limit. Vehicles periodically broadcast beacon messages via V2X communication, and VILE includes extra information in the beacon, i.e., vehicle position, speed, and direction. VILE thus takes advantage of these beacon transmissions to acquire information and build the dynamic graph $G(t)$ without extra overhead. RSUs collect this information and build the knowledge necessary for their decision making (i.e., the selection and perception phases). Furthermore, the RSU divides the scenario into a set of hexagonal regions $H = \{h_1, \dots, h_m\}$, where $L_h$ represents the center position of hexagonal region $h$, which has size $S$.
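Building the dynamic graph from beacons can be sketched as follows. The text does not say how link asymmetry arises; this sketch assumes, hypothetically, that each beacon also advertises a per-vehicle transmission range, and it draws a directed edge from vehicle i to vehicle j when j lies within i's range. `Beacon`, `tx_range_m`, and `build_graph` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Beacon:
    vehicle_id: int
    x: float            # position in metres
    y: float
    speed: float        # m/s
    heading_deg: float  # direction of travel
    tx_range_m: float   # hypothetical: per-vehicle transmission range


def build_graph(beacons: List[Beacon]) -> Dict[int, Set[int]]:
    """Directed adjacency: i -> j if j lies within i's transmission range."""
    graph: Dict[int, Set[int]] = {b.vehicle_id: set() for b in beacons}
    for a in beacons:
        for b in beacons:
            if a.vehicle_id == b.vehicle_id:
                continue
            if math.hypot(a.x - b.x, a.y - b.y) <= a.tx_range_m:
                graph[a.vehicle_id].add(b.vehicle_id)
    return graph
```

Rebuilding the adjacency at every beacon interval gives the time-indexed graph; since the inputs are beacons that vehicles send anyway, no extra messages are needed.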

At each time interval t, the CAV detects a set of road objects that are inside the sensor FoV. Specifically, an object is the state space representation of a physically perceived entity inside the sensor's perceptual range (i.e., its FoV). The sensors detect all objects that are not shadowed by buildings or other vehicles using a simple ray-tracing-like approach. The perception information consists of each object's position, type, size, and dynamic state (i.e., speed, acceleration, and direction), which can be estimated from the vehicle's own sensor measurements. Sensor noise and other inaccuracies are not modeled. In this way, the perception information of each object can be extracted to build the local perception of the CAV. The local perception is included in the CPM, using the format proposed by ETSI, and shared with the other CAVs. The size of each CPM is calculated dynamically based on the number of objects it includes, with each object contributing an average of about 30 bytes [32].
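A simple line-of-sight check conveys the idea of the ray-tracing-like detection and of the dynamic CPM size. This sketch assumes a 360° FoV with the 150 m range used in Section 4.1, approximates buildings and vehicles as circles (an assumption; the simulator's actual obstacle model is not reproduced), and counts only the 30-byte per-object payload. All names are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Circle = Tuple[float, float, float]  # (cx, cy, radius): hypothetical occluder model

SENSOR_RANGE_M = 150.0  # value used in the evaluation (Section 4.1)
BYTES_PER_OBJECT = 30   # average object size stated above


def segment_hits_circle(p: Point, q: Point, c: Circle) -> bool:
    """True if the segment p-q passes strictly inside the circle."""
    (px, py), (qx, qy), (cx, cy, r) = p, q, c
    dx, dy = qx - px, qy - py
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        t = 0.0
    else:  # projection of the circle center onto the segment, clamped to [0, 1]
        t = max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / length_sq))
    nearest_x, nearest_y = px + t * dx, py + t * dy
    return math.hypot(cx - nearest_x, cy - nearest_y) < r


def visible_objects(ego: Point, objects: List[Point], occluders: List[Circle]) -> List[Point]:
    """Objects within range whose line of sight is not blocked (360-degree FoV)."""
    return [
        o for o in objects
        if math.hypot(o[0] - ego[0], o[1] - ego[1]) <= SENSOR_RANGE_M
        and not any(segment_hits_circle(ego, o, c) for c in occluders)
    ]


def cpm_object_bytes(n_objects: int) -> int:
    """Per-object payload of a CPM carrying n_objects objects."""
    return BYTES_PER_OBJECT * n_objects


seen = visible_objects((0.0, 0.0), [(50.0, 0.0), (100.0, 0.0), (0.0, 200.0), (0.0, 50.0)],
                       [(75.0, 0.0, 2.0)])
print(seen, cpm_object_bytes(len(seen)))
```

In this example the object at (100, 0) is hidden behind the occluder at (75, 0), and the object at (0, 200) is out of range, so only two objects (60 bytes of object payload) would enter the CPM.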

For VILE to work properly, the following assumptions are made:

- Every CAV can detect and share an object that is inside the sensor FoV. The sensors detect all objects not occluded by buildings or other vehicles;

- Other errors, sensor noise, and processing latency are not modeled;

- Each CAV knows its own position and orientation by means of positioning and orientation systems, such as GPS and an IMU.

3.2. VILE Operation

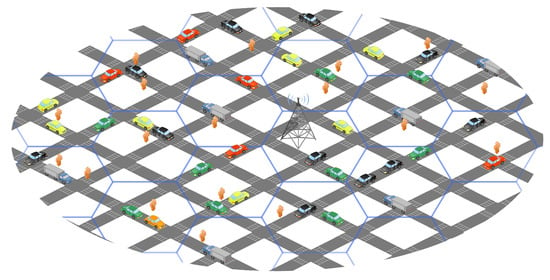

In summary, the VILE mechanism considers three phases for the CP service, namely, the knowledge, selection, and perception phases. The VILE mechanism assumes a set H of hexagonal regions that divide a given map, as shown in Figure 2, which depicts the division of the areas considered in the mechanism's selection phase. Mapping is a critical component of autonomous sensing and navigation systems, since it provides the CAV with the ability to locate itself and plan a collision-free course, as well as a means of communication between people and the CAV. The hexagonal grid-based map is one of the most widely used approaches for representing the surroundings. Each RSU knows the regions within its coverage radius, but the partition itself is global and not associated with a specific RSU. VILE assumes that an area segmentation algorithm, such as the one presented by Uber [47], is used to partition the scenario into hexagons. Each hexagonal region h of the set of regions H has a fixed size S and a diameter equal to the maximum range of a vehicle's sensors.
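The text delegates the partition to an existing segmentation system such as Uber's H3 [47]. To stay dependency-free, the sketch below uses a plain planar hexagonal grid instead (axial coordinates, pointy-top orientation), mapping a position to the hexagon that contains it and back to that hexagon's center. It assumes local planar coordinates in metres and a hexagon diameter measured corner to corner, so the circumradius is half the 150 m sensor range; the function names are hypothetical.

```python
import math
from typing import Tuple

SENSOR_RANGE_M = 150.0
HEX_SIZE_M = SENSOR_RANGE_M / 2  # assumption: diameter measured corner to corner


def point_to_hex(x: float, y: float, size: float = HEX_SIZE_M) -> Tuple[int, int]:
    """Axial (q, r) coordinates of the pointy-top hexagon containing (x, y)."""
    qf = (math.sqrt(3) / 3 * x - y / 3) / size
    rf = (2 / 3 * y) / size
    sf = -qf - rf
    q, r, s = round(qf), round(rf), round(sf)
    # Cube rounding: fix the coordinate with the largest rounding error.
    dq, dr, ds = abs(q - qf), abs(r - rf), abs(s - sf)
    if dq > dr and dq > ds:
        q = -r - s
    elif dr > ds:
        r = -q - s
    return q, r


def hex_center(q: int, r: int, size: float = HEX_SIZE_M) -> Tuple[float, float]:
    """Cartesian center (the L_h of the text) of hexagon (q, r)."""
    return size * math.sqrt(3) * (q + r / 2), size * 1.5 * r
```

Any vehicle position can thus be assigned to a region id `(q, r)` with constant-time arithmetic, which is what makes a global, RSU-independent partition cheap to evaluate.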

Figure 2.

VILE knowledge phase.

Grid map systems are essential for analyzing large-scale geographic perception systems, since they segment the Earth into recognizable grid cells [48,49]. A hexagonal grid map can be used for mapping static and dynamic environments. Although the cell shape is not essential for dynamic approaches, the advantages of the hexagonal shape are preserved. Other publications have already shown the advantages of dividing areas using hexagons [50,51]. Fewer hexagonal cells are needed to represent the map, which reduces computing time and storage requirements. Another advantage of hexagonal areas is that sampling with hexagons results in minimal quantization error for a given sensor resolution [49]. In addition, the distance between a cell and each of its immediate neighbors is the same in all six principal directions, and curved structures are represented more precisely than with rectangular cells [50,51]. In this context, in the knowledge phase, the RSU collects the position, speed, and orientation information shared by the CAVs.

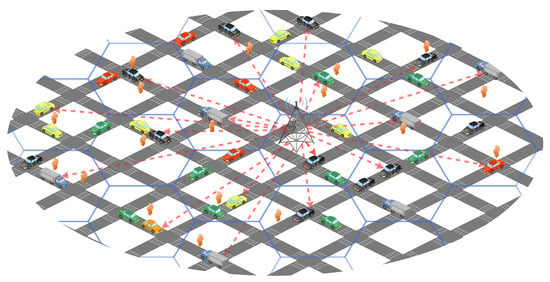

Next, the selection phase chooses the CAV closest to the center position of each hexagonal region to share its set of detected objects with the other vehicles after the end of the time window W, as shown in Figure 3. This CAV is in charge of representing the perception of the defined region. Algorithm 1 outlines how the VILE mechanism is implemented and how the selection process is carried out based on the distance between the position of each CAV and the center of each hexagonal region. The inputs to this process are the center position L of each hexagonal region and the set V of CAVs under the RSU coverage; its output is the set of CAVs selected to share their sensor perception with other vehicles.

Algorithm 1: VILE algorithm.

Figure 3.

VILE selection phase.

Algorithm 1 thus describes the VILE process. The set of vehicles V and the hexagonal regions H created by the RSU to divide a given map are provided to VILE. For each vehicle $v_i$ inside the RSU coverage area, the algorithm identifies the hexagonal region h that contains the vehicle's position (Lines 7–9). If the position of $v_i$ lies in a region h covered by the RSU, the RSU calculates the distance between the position of $v_i$ and the center position $L_h$ of region h. For this, VILE uses the Euclidean distance between the two points (Line 10). If this distance is the shortest among the set of CAVs in region h, the CAV is added to the set of selected vehicles (Lines 11–12). Finally, the RSU sends a message on the network requesting the perception of each region from the selected CAVs (Line 13).
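The selection logic described above can be sketched as follows. This is a hedged reconstruction from the prose, not the authors' code: the names are hypothetical, region centers are assumed to be known, and the region containing a vehicle is taken to be the one with the nearest center, which holds for a regular hexagonal tiling because each hexagon is exactly the set of points closest to its own center.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def select_vehicles(vehicles: Dict[str, Point], centers: Dict[str, Point]) -> Dict[str, str]:
    """Return {region_id: vehicle_id} with the vehicle closest to each region center.

    vehicles: position of every CAV under the RSU coverage (set V)
    centers:  center position L_h of every hexagonal region h in H
    """
    best: Dict[str, Tuple[float, str]] = {}
    for vid, pos in vehicles.items():
        # Identify the region containing the vehicle (nearest center in a hex tiling).
        region = min(centers, key=lambda h: math.dist(pos, centers[h]))
        d = math.dist(pos, centers[region])  # Euclidean distance to L_h
        if region not in best or d < best[region][0]:
            best[region] = (d, vid)  # new closest vehicle for this region
    return {h: vid for h, (_, vid) in best.items()}


selected = select_vehicles(
    {"a": (10.0, 0.0), "b": (-5.0, 3.0), "c": (120.0, 5.0), "d": (60.0, 0.0)},
    {"h1": (0.0, 0.0), "h2": (130.0, 0.0)},
)
print(selected)  # the RSU would then request the perception of h1 and h2 from these CAVs
```

Regions without vehicles simply get no representative, and ties are resolved in favor of the first vehicle examined; a single pass over V suffices, so the cost is linear in the number of vehicles times the number of candidate regions.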

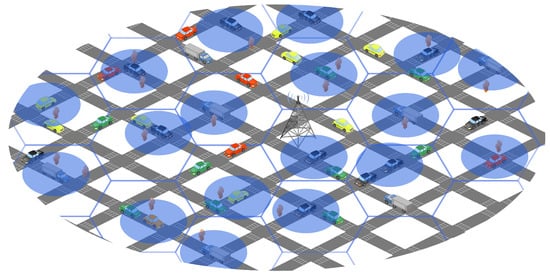

After the selection phase, the chosen CAVs broadcast CPMs containing their position and dynamic state (e.g., velocity, acceleration, and orientation), as well as all or a subset of their perceptual information; this is known as the perception phase, as shown in Figure 4. Thus, each selected CAV is responsible for sensing a single hexagonal region. The CAVs chosen by the mechanism send the message to all neighboring CAVs and to the RSU. In this way, the CP service can build the perception of each region separately and share the objects or obstacles detected with the neighboring regions, so that vehicles can gather information from nearby regions for future use. The RSU is also responsible for keeping the perception of all areas updated after the time window W has ended.
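How the RSU keeps region perceptions up to date is not specified beyond the time window W. A minimal sketch, assuming the RSU stores the latest CPM content per region and flags regions whose data are older than W for a new selection round; the class names, the staleness rule, and the window value used in any example are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple

Obj = Tuple[float, float]  # perceived object position


@dataclass
class RegionPerception:
    objects: List[Obj]
    received_at: float  # time the CPM arrived (s)


@dataclass
class RsuPerceptionStore:
    window_s: float  # time window W (value is an assumption)
    regions: Dict[str, RegionPerception] = field(default_factory=dict)

    def on_cpm(self, region_id: str, objects: Iterable[Obj], now: float) -> None:
        """Store the perception broadcast by the representative of a region."""
        self.regions[region_id] = RegionPerception(list(objects), now)

    def stale_regions(self, now: float) -> List[str]:
        """Regions whose perception is older than W and needs a new selection."""
        return [h for h, p in self.regions.items() if now - p.received_at > self.window_s]

    def objects_near(self, region_ids: Iterable[str]) -> List[Obj]:
        """Objects reported for a set of regions (e.g., a region and its neighbors)."""
        return [o for h in region_ids if h in self.regions for o in self.regions[h].objects]
```

Keeping one entry per region bounds the RSU state by the number of regions rather than by the number of vehicles, mirroring the one-representative-per-region idea of the selection phase.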

Figure 4.

VILE perception phase.

Figure 2, Figure 3, and Figure 4 illustrate each step for establishing the perception of each hexagonal region h in H using the VILE mechanism. Assume that vehicles $v_1$, $v_2$, and $v_3$ move within a hexagonal region $h_1$ and form a portion of the set of CAVs V. After the knowledge phase, the RSU has the position information of each vehicle inside region $h_1$, since each CAV knows its position from a positioning system such as GPS. During the selection phase, the RSU applies the VILE algorithm to compute, for each vehicle inside the region, the Euclidean distance between its position and the center position $L_{h_1}$ of the hexagonal region. The vehicle with the shortest Euclidean distance among the CAVs in region $h_1$ is selected as the representative vehicle that shares the perception of region $h_1$.
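The example can be reproduced numerically with hypothetical coordinates, chosen only for illustration: three vehicles `v1`, `v2`, and `v3` in region `h1`, whose center is placed at the origin.

```python
import math

center_h1 = (0.0, 0.0)  # hypothetical center of region h1
positions = {"v1": (30.0, 40.0), "v2": (-12.0, 5.0), "v3": (20.0, -21.0)}  # hypothetical

# Euclidean distance from each vehicle to the region center.
distances = {
    v: math.sqrt((x - center_h1[0]) ** 2 + (y - center_h1[1]) ** 2)
    for v, (x, y) in positions.items()
}
selected = min(distances, key=distances.get)  # closest vehicle represents h1
print(distances)  # {'v1': 50.0, 'v2': 13.0, 'v3': 29.0}
print(selected)   # v2
```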

4. Evaluation

This section evaluates the VILE redundancy mitigation mechanism in the CAV scenario against a baseline, the ETSI CPM generation rules [32]. It describes the simulation scenario and setup, and presents the results for the CPMs generated and received. The baseline serves as the main benchmark for analyzing the behavior of the proposal. From the collective perception service perspective, we also evaluate object redundancy and the number of pedestrians and vehicles detected under each mechanism.

4.1. Simulation Scenario Description

We used the Veins and OMNeT++ frameworks to evaluate the implemented mechanisms. Veins is an open-source framework that implements the IEEE 802.11p protocol stack for inter-vehicle communication and an obstacle model for signal attenuation. For traffic and vehicle mobility, we employed Simulation of Urban MObility (SUMO), an open-source traffic simulator, to model and manipulate objects in the road scenario. This allows us to reproduce the desired vehicle movements with random cruise speeds and V2I interactions according to empirical data. We conducted 33 simulation runs with different randomly generated seeds, and the results are reported with 95% confidence intervals.
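How these confidence intervals are computed is not detailed here. A common choice, assumed in this sketch, is a Student-t interval around the sample mean; with 33 runs there are 32 degrees of freedom, whose two-sided 95% critical value is about 2.037. The function name `mean_ci95` is hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_ci95(samples: Sequence[float], t_crit: float = 2.037) -> Tuple[float, float]:
    """Return (mean, half_width) of a Student-t 95% confidence interval.

    The default t_crit is the two-sided 95% critical value for 32 degrees
    of freedom (33 runs); pass another value for a different run count.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two runs")
    mean = statistics.fmean(samples)
    sem = statistics.stdev(samples) / math.sqrt(n)  # standard error of the mean
    return mean, t_crit * sem
```

The interval is then reported as mean ± half_width for each metric and vehicle density.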

We conducted simulations in a Manhattan grid scenario to evaluate the impact of vehicular mobility on the CP mechanisms. The scenario is a 1 km² fragment of Manhattan, USA, with several blocks and two-way streets so that vehicles can move in opposite directions. Vehicle density varied from 100 to 200 vehicles/km². All vehicles in the simulation share the same dimensions, mean speed, and speed standard deviation. In addition, we applied a random mobility model to produce a unique route for each vehicle, depending on the replication and density. Table 1 summarizes the main simulation parameters. We chose the sensor parameters according to other approaches that evaluate CP in CAV scenarios [22,32]. Thus, we chose a 360° FoV and a 150 m range so that CPMs contain more detections in the scenario.

Table 1.

Simulation parameters.
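The sensor configuration (360° FoV, 150 m range) can be illustrated with a simple geometric detection check. This is a sketch under stated assumptions: positions are planar coordinates in metres, occlusion by buildings or vehicles is ignored, and the function name `in_sensor_fov` is hypothetical. With a 360° FoV the check reduces to a range test; the angular branch only shows how a narrower FoV would be handled.

```python
import math
from typing import Tuple


def in_sensor_fov(
    sensor_xy: Tuple[float, float],
    heading_deg: float,
    target_xy: Tuple[float, float],
    range_m: float = 150.0,
    fov_deg: float = 360.0,
) -> bool:
    """Return True if the target lies within the sensor's range and angular FoV."""
    dx = target_xy[0] - sensor_xy[0]
    dy = target_xy[1] - sensor_xy[1]
    if math.hypot(dx, dy) > range_m:
        return False  # beyond the sensing range
    if fov_deg >= 360.0:
        return True  # omnidirectional sensor: the range check is enough
    bearing = math.degrees(math.atan2(dy, dx))
    # Smallest signed angle between the target bearing and the sensor heading.
    offset = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0
```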

In terms of collective perception evaluation, metrics such as the number of messages, the channel busy ratio, and the packet delivery ratio capture the network costs of the CP service, but they are not enough to assess the quality of the perception for CAV applications because they do not capture the redundancy of the data content related to sensor perception [32]. For example, the number of redundant detected objects reflects how often the sensors have caught the same object, such as a pedestrian or a CAV; a higher redundancy value therefore means worse channel utilization. We also evaluate the number of detected objects for each vehicle on the road: to obtain the absolute number of detected objects, we measure the average number of unique shared perceived objects for each CAV over its simulation time. The number of CPMs generated and received, in turn, measures the network cost of each approach based on the total number of packets generated and sent through the shared channel. Lower numbers of generated CPMs mean that a technique uses fewer messages on the shared communication channel to transmit the detected objects.
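The redundancy and unique-object metrics can be sketched as follows, assuming each CAV logs the object IDs contained in every CPM it receives. The input format, the function names, and counting over the whole simulation (rather than per time window) are assumptions; a report is counted as redundant when it repeats an object the vehicle has already received.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def perception_metrics(received_object_ids: Sequence[Hashable]) -> Tuple[int, int]:
    """Return (unique_objects, redundant_reports) for one CAV.

    received_object_ids lists the object IDs found in all CPMs the CAV
    received (hypothetical log format).
    """
    counts = Counter(received_object_ids)
    unique = len(counts)                                # distinct objects known
    redundant = sum(c - 1 for c in counts.values())     # repeated reports
    return unique, redundant


def average_unique(per_vehicle_ids: Sequence[Sequence[Hashable]]) -> float:
    """Average number of unique shared objects over all CAVs."""
    values = [perception_metrics(ids)[0] for ids in per_vehicle_ids]
    return sum(values) / len(values) if values else 0.0
```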

4.2. Simulations Results

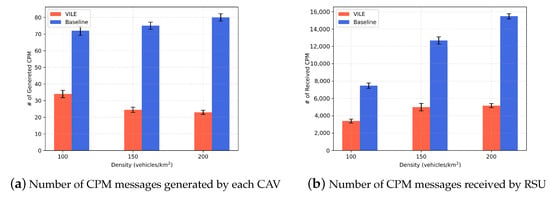

To evaluate the cost of the collective perception service, we measured the absolute number of CPMs generated by each approach in the scenario, as shown in Figure 5. The number of CPMs generated and received follows a trend similar to the redundancy results for detected objects. The VILE mechanism reduces the number of generated packets by up to 55% compared to the baseline. The largest number of generated CPMs occurred at a density of 100 vehicles, with 32 messages per CAV. Furthermore, the number of messages generated by each vehicle and received by the RSU remains roughly constant for VILE. This happens because VILE chooses a single candidate to share the perception of each predefined region, so the number of vehicles does not directly influence the number of candidates chosen. In contrast, the number of messages generated and transmitted by the baseline increases significantly, as it has no redundancy control mechanism. In this context, we observed an increase of at least 25% in the messages received by the RSU.

Figure 5.

Absolute number of CPM-type messages generated and received by CAVs.
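The percentage reductions in this section are presumably computed relative to the baseline. Assuming so, and writing N for the number of CPMs generated by an approach, the reduction is given below; a 55% reduction thus means VILE generates 0.45 times as many CPMs as the baseline. The second relation expresses why VILE's message count stays roughly constant: it selects at most one CAV per hexagonal region in each time window W, so the set of selected senders (written S_W, a symbol introduced here for illustration) is bounded by the number of regions, independent of the number of vehicles.

```latex
\text{Reduction} = \frac{N_{\text{Baseline}} - N_{\text{VILE}}}{N_{\text{Baseline}}} \times 100\%,
\qquad
|S_W| \le |H| \quad \text{for every time window } W
```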

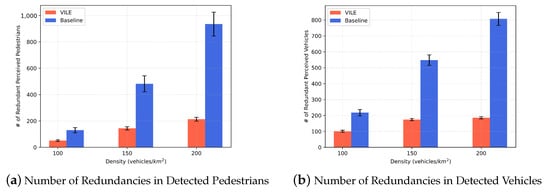

Unlike the communication metrics described above, which quantify the costs of the CP service, the environmental perception metrics quantify its benefits and show the impact of vehicular communication on CP applications. Figure 6 presents the redundancy of detected objects (i.e., pedestrians and vehicles) for the baseline and VILE. Figure 6a shows that VILE reduces the absolute number of redundant pedestrians by 75% compared to the baseline. This is because VILE chooses only the node closest to the center of each region to share its perception with neighboring CAVs, reducing the number of objects detected more than once. Therefore, VILE can reduce the number of transmitted messages and the processing they require. The results also show an association between the number of vehicles and the number of redundant objects recognized by the system: the number of redundant objects shared by the baseline increases by at least 25% as the number of vehicles grows.

Figure 6.

Redundancy results of detected objects (pedestrians and vehicles).

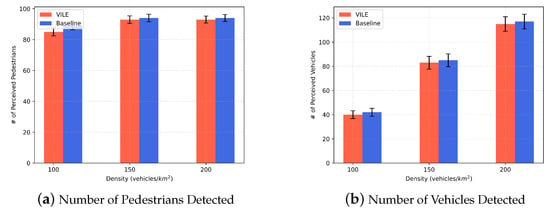

As Figure 7 shows, VILE keeps the same number of detected unique objects as the baseline. The simulated scenario includes 100 pedestrians with random positions and mobility. As the number of vehicles increases, the number of detected pedestrians also increases. For vehicles, the effect on detection is more pronounced: the more vehicles in the scenario (200 vehicles), the greater the chances of detecting and sharing other vehicles. Thus, VILE meets the requirements of collective perception applications, as it maintains the same number of pedestrians and vehicles detected and shared with the other CAVs while reducing the total number of CPMs generated and transmitted.

Figure 7.

Absolute number of objects detected with collective perception.

5. Open Issues about Collective Perception

Based on our analysis of the relevant literature, we identify four research issues in the proposed CP architecture for CAVs (i.e., redundant information, perception fusion and dissemination, privacy, and security). First, application-level control of the transmission of detected objects is often studied without sophisticated V2X communication models. When multiple CAVs perceive the same object, redundant and unnecessarily frequent updates about that object are sent in CPMs, increasing the channel load. A high channel load may lead to frequent CPM losses, which in turn may degrade the performance of the CP service. To reduce the message size, the CP service may optionally omit a subset of the perceived objects that meet predefined redundancy mitigation rules. However, studies rarely use a precise V2X communication simulation model to evaluate redundant information from several CAVs. V2X communication must therefore be modeled with care, since redundancy mitigation techniques are sensitive to CPM losses caused by radio propagation or interference: a locally perceived object may be omitted from a new CPM even though a remote CAV failed to receive the historical CPM that contained it.
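ETSI TR 103 562 [32] discusses redundancy mitigation rules that let the CP service omit objects already reported by others. The sketch below illustrates one rule of this kind, a frequency-based rule, under assumptions: the class name, the 1 s window, and the threshold of three reports are hypothetical values chosen for illustration, not values taken from the standard or from VILE. It also makes the loss problem concrete: the filter only knows about CPMs this vehicle received, so a neighbor that missed them may never learn about an omitted object.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Hashable, Iterable, List


class FrequencyRedundancyFilter:
    """Omit an object if >= threshold received CPMs reported it within window_s."""

    def __init__(self, window_s: float = 1.0, threshold: int = 3) -> None:
        self.window_s = window_s    # look-back window in seconds (assumed)
        self.threshold = threshold  # reports needed to omit an object (assumed)
        self._seen: Dict[Hashable, Deque[float]] = defaultdict(deque)

    def on_cpm_received(self, now: float, object_ids: Iterable[Hashable]) -> None:
        """Record the reception time of every object reported in a received CPM."""
        for oid in object_ids:
            self._seen[oid].append(now)

    def _recent_count(self, oid: Hashable, now: float) -> int:
        times = self._seen[oid]
        while times and now - times[0] > self.window_s:
            times.popleft()  # drop receptions older than the window
        return len(times)

    def select(self, now: float, local_object_ids: Iterable[Hashable]) -> List[Hashable]:
        """Return the locally perceived objects that should still be transmitted."""
        return [oid for oid in local_object_ids
                if self._recent_count(oid, now) < self.threshold]
```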

The data load created by CP is primarily governed by the frequency at which messages are generated and the number of objects they convey. The former determines the delay between two consecutive CPMs, while the latter affects the vehicle's environmental awareness. When messages are transmitted too often, they increase the channel load while improving perception only modestly; when they are generated rarely, they contribute little to local perception. One option for specifying the CPM generation frequency is to set lower and upper limits on it. Furthermore, most techniques assume that the data association between locally perceived objects and those received through CPMs is correct. Consequently, incorrect object information or the wrong omission of object information may reduce the performance of the CP service.
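As an illustration of a bounded generation frequency, the sketch below clamps the CPM generation interval and applies a dynamics-based check that decides whether an object should be included again. The bounds (100 ms and 1 s) and thresholds (4 m, 0.5 m/s, 4°, 1 s) are assumed defaults that we understand to follow the ETSI CPM generation rules [32]; they should be verified against the standard before reuse, and all names here are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional

# Assumed bounds on the interval between two consecutive CPMs (verify against the standard).
T_GEN_MIN_S = 0.1  # at most 10 CPMs per second
T_GEN_MAX_S = 1.0  # at least 1 CPM per second


def clamp_interval(requested_s: float) -> float:
    """Bound a requested CPM generation interval to [T_GEN_MIN_S, T_GEN_MAX_S]."""
    return min(max(requested_s, T_GEN_MIN_S), T_GEN_MAX_S)


@dataclass
class ObjState:
    t: float        # timestamp, s
    x: float        # m
    y: float        # m
    speed: float    # m/s
    heading: float  # degrees


def needs_inclusion(last_sent: Optional[ObjState], current: ObjState,
                    d_pos: float = 4.0, d_speed: float = 0.5,
                    d_heading: float = 4.0, d_time: float = 1.0) -> bool:
    """Include the object if it is new or changed enough since it was last sent."""
    if last_sent is None:
        return True  # never transmitted before
    moved = math.hypot(current.x - last_sent.x, current.y - last_sent.y)
    turned = abs((current.heading - last_sent.heading + 180.0) % 360.0 - 180.0)
    return (moved > d_pos
            or abs(current.speed - last_sent.speed) > d_speed
            or turned > d_heading
            or current.t - last_sent.t > d_time)
```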

Future research should also compare the factors that affect low latency and accurate fusion to find a good balance between the two. Because of sensor preprocessing and communication imperfections, spatial and temporal deviations, and false positives or negatives in sensor data, direct data matching is impossible; the data must be fused so that the CAV planning component can use it. There are two major types of sensors in CP for CAVs: (i) onboard sensors, which are mounted directly on the ego vehicle, and (ii) shared sensors, whose information is shared by other vehicles or RSUs. The fusion time depends on the input format of the data to fuse, the fusion method, the output format, and the accompanying object detection and tracking method. Incorporating tracking mechanisms into fusion can improve object detection and fusion but slows down the fusion process itself.

An essential task of the data fusion mechanism is to add a state estimate to the list of objects currently perceived by a CAV when a sensor detects a new object. The data fusion system must also maintain a data structure for storing detected objects and update previously tracked objects with the new measurements associated with them. If previously tracked objects are no longer associated with new measurements, the system must delete them from the list of tracked objects. The data fusion mechanism also predicts the state of each object at timestamps for which no sensor measurement is available. In addition, it associates objects from other sensors mounted on the station or received from other CAVs with objects in the tracking list. It is essential to examine the different prediction approaches used in this context and to propose new strategies for maintaining the prioritization of objects in the list.
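The track-management tasks listed above (create, update, predict, associate, delete) can be sketched as a minimal track list. This is an illustrative assumption, not a method from the surveyed works: prediction uses a constant-velocity model, association uses nearest-neighbour gating, an update simply overwrites the state with the associated measurement instead of filtering it (a Kalman filter would normally be used), and the 2 m gate and 1 s deletion age are hypothetical values.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Track:
    tid: int
    x: float
    y: float
    vx: float
    vy: float
    t: float  # time of the last update, s


class TrackList:
    """Minimal track manager: predict, associate, update, create, delete."""

    def __init__(self, gate_m: float = 2.0, max_age_s: float = 1.0) -> None:
        self.gate_m = gate_m        # association gate radius (assumed)
        self.max_age_s = max_age_s  # delete tracks not updated for this long (assumed)
        self.tracks: List[Track] = []
        self._next_id = 0

    @staticmethod
    def predict(track: Track, t: float) -> Tuple[float, float]:
        """Constant-velocity prediction of a track's position at time t."""
        dt = t - track.t
        return track.x + track.vx * dt, track.y + track.vy * dt

    def update(self, t: float, measurements: List[Tuple[float, float, float, float]]) -> None:
        """measurements: (x, y, vx, vy) from onboard sensors or received CPMs."""
        unmatched = list(self.tracks)
        for mx, my, mvx, mvy in measurements:
            best, best_d = None, self.gate_m
            for tr in unmatched:  # nearest predicted track inside the gate
                px, py = self.predict(tr, t)
                d = math.hypot(mx - px, my - py)
                if d <= best_d:
                    best, best_d = tr, d
            if best is not None:  # associated: overwrite state with the measurement
                unmatched.remove(best)
                best.x, best.y, best.vx, best.vy, best.t = mx, my, mvx, mvy, t
            else:  # new object: add its state to the track list
                self.tracks.append(Track(self._next_id, mx, my, mvx, mvy, t))
                self._next_id += 1
        # Delete tracks that have not been confirmed by recent measurements.
        self.tracks = [tr for tr in self.tracks if t - tr.t <= self.max_age_s]
```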

Finally, the assumption that information obtained through collective perception is correct may not hold in real-world situations, and malicious attackers may exploit models that lack mechanisms to detect and eliminate false information. Malicious CPMs can cause accidents or inaccurate CAV behavior. In general, encryption and signatures can ensure data security. However, incorrect CPM content may also have causes other than malicious intent, such as a simple malfunction or a lack of calibration. As a result, research seeks to broaden the field through mutual trust networks.

6. Conclusions and Future Work

CP improves traffic safety and efficiency by supporting various CAV applications. However, one source of inefficiency in this paradigm is that the same object can be detected and tracked by multiple CAVs on the road, resulting in redundant data transmissions over the network. For example, without coordination, if three CAVs observe the same object, three observations of it will often be sent over the network. Additionally, if the network is overloaded, messages may take longer to be delivered, which makes them less useful for collective perception. In this situation, data redundancy mitigation mechanisms are needed to make collective perception more accurate and useful.

In this article, we discussed the implications and benefits of redundancy reduction mechanisms for CP in the context of CAVs. In addition, we introduced and evaluated a redundancy mitigation mechanism, called VILE, that reduces communication costs while maintaining the system's overall perception. Simulation results show that, compared to the baseline, VILE achieves collective perception with up to 55% fewer generated packets and 75% fewer redundant objects. Finally, we discussed collective perception trends in the context of CAVs, as well as potential research challenges and opportunities.

In future work, we aim to compare VILE with other proposals in the literature and evaluate it in more complex scenarios. We also intend to consider the perception and processing latency of the sensors, for example, by adding the processing model defined by activity estimation [52], and to evaluate the transmission delay and a prediction model that chooses the CAV that will stay longest inside the hexagonal region. We will also examine the security of the proposal, assessing how the introduction of misleading information affects the behavior of the approach and the perception of CAVs.

Author Contributions

Conceptualization, W.L., P.M. and D.R.; methodology, W.L., P.M. and D.R.; software, W.L. and D.R.; validation, W.L., P.M., D.R., E.C. and L.A.V.; formal analysis, W.L., P.M., D.R., E.C. and L.A.V.; investigation, W.L., P.M., D.R., E.C. and L.A.V.; resources, P.M., D.R. and L.A.V.; data curation, W.L. and D.R.; writing—original draft preparation, W.L., P.M. and D.R.; writing—review and editing, W.L. and D.R.; funding acquisition, P.M. and D.R. All authors have read and agreed to the published version of the manuscript.

Funding

The authors would like to thank the São Paulo Research Foundation (FAPESP) for the financial support, grant #2019/19105-3.

Data Availability Statement

Simulation data can be provided after contacting the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Wang, J.; Liu, J.; Kato, N. Networking and communications in autonomous driving: A survey. IEEE Commun. Surv. Tutorials 2018, 21, 1243–1274.

- Liu, S.; Tang, J.; Zhang, Z.; Gaudiot, J.L. Computer architectures for autonomous driving. Computer 2017, 50, 18–25.

- Shladover, S.E. Opportunities and Challenges in Cooperative Road Vehicle Automation. IEEE Open J. Intell. Transp. Syst. 2021, 2, 216–224.

- Schiegg, F.A.; Llatser, I.; Bischoff, D.; Volk, G. Collective perception: A safety perspective. Sensors 2020, 21, 159.

- Lobato, W.; de Souza, A.M.; Peixoto, M.L.; Rosário, D.; Villas, L. A Cache Strategy for Intelligent Transportation System to Connected Autonomous Vehicles. In Proceedings of the IEEE 92nd Vehicular Technology Conference (VTC-Fall), Virtual, 18 November–16 December 2020; pp. 1–5.

- da Costa, J.B.; Meneguette, R.I.; Rosário, D.; Villas, L.A. Combinatorial optimization-based task allocation mechanism for vehicular clouds. In Proceedings of the IEEE 91st Vehicular Technology Conference (VTC-Spring), Antwerp, Belgium, 25–28 May 2020; pp. 1–5.

- Ambrosin, M.; Alvarez, I.J.; Buerkle, C.; Yang, L.L.; Oboril, F.; Sastry, M.R.; Sivanesan, K. Object-level perception sharing among connected vehicles. In Proceedings of the IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 1566–1573.

- Ding, L.; Wang, Y.; Wu, P.; Li, L.; Zhang, J. Kinematic information aided user-centric 5G vehicular networks in support of cooperative perception for automated driving. IEEE Access 2019, 7, 40195–40209.

- Delooz, Q.; Festag, A.; Vinel, A. Revisiting message generation strategies for collective perception in connected and automated driving. In Proceedings of the 9th International Conference on Advances in Vehicular Systems, Technologies and Applications (VEHICULAR), Porto, Portugal, 18–22 October 2020.

- Masi, S.; Xu, P.; Bonnifait, P.; Ieng, S.S. Augmented Perception with Cooperative Roadside Vision Systems for Autonomous Driving in Complex Scenarios. In Proceedings of the IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 1140–1146.

- Tang, S.; Gu, Z.; Fu, S.; Yang, Q. Vehicular Edge Computing for Multi-Vehicle Perception. In Proceedings of the Fourth International Conference on Connected and Autonomous Driving (MetroCAD), Detroit, MI, USA, 28–29 April 2021; pp. 9–16.

- Thandavarayan, G.; Sepulcre, M.; Gozalvez, J. Cooperative perception for connected and automated vehicles: Evaluation and impact of congestion control. IEEE Access 2020, 8, 197665–197683.

- Chen, Q.; Ma, X.; Tang, S.; Guo, J.; Yang, Q.; Fu, S. F-cooper: Feature based cooperative perception for autonomous vehicle edge computing system using 3D point clouds. In Proceedings of the 4th ACM/IEEE Symposium on Edge Computing, Arlington, VA, USA, 7–9 November 2019; pp. 88–100.

- Kuutti, S.; Fallah, S.; Katsaros, K.; Dianati, M.; Mccullough, F.; Mouzakitis, A. A survey of the state-of-the-art localization techniques and their potentials for autonomous vehicle applications. IEEE Internet Things J. 2018, 5, 829–846.

- Krijestorac, E.; Memedi, A.; Higuchi, T.; Ucar, S.; Altintas, O.; Cabric, D. Hybrid vehicular and cloud distributed computing: A case for cooperative perception. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), Taipei, Taiwan, 7–11 December 2020; pp. 1–6.

- Yang, Q.; Fu, S.; Wang, H.; Fang, H. Machine-learning-enabled cooperative perception for connected autonomous vehicles: Challenges and opportunities. IEEE Netw. 2021, 35, 96–101.

- SAE J3016-2018; Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. Society of Automotive Engineers: Warrendale, PA, USA, 2018.

- Garlichs, K.; Wegner, M.; Wolf, L.C. Realizing collective perception in the artery simulation framework. In Proceedings of the IEEE Vehicular Networking Conference (VNC), Taipei, Taiwan, 5–7 December 2018; pp. 1–4.

- Miucic, R.; Sheikh, A.; Medenica, Z.; Kunde, R. V2X applications using collaborative perception. In Proceedings of the IEEE 88th Vehicular Technology Conference (VTC-Fall), Chicago, IL, USA, 27–30 August 2018; pp. 1–6.

- Hussain, R.; Zeadally, S. Autonomous cars: Research results, issues, and future challenges. IEEE Commun. Surv. Tutorials 2018, 21, 1275–1313.

- Wang, Y.; Cai, P.; Lu, G. Cooperative autonomous traffic organization method for connected automated vehicles in multi-intersection road networks. Transp. Res. Part C Emerg. Technol. 2020, 111, 458–476.

- Huang, H.; Li, H.; Shao, C.; Sun, T.; Fang, W.; Dang, S. Data redundancy mitigation in V2X based collective perceptions. IEEE Access 2020, 8, 13405–13418.

- Xu, W.; Zhou, H.; Cheng, N.; Lyu, F.; Shi, W.; Chen, J.; Shen, X. Internet of vehicles in big data era. IEEE/CAA J. Autom. Sin. 2017, 5, 19–35.

- Qazi, S.; Sabir, F.; Khawaja, B.A.; Atif, S.M.; Mustaqim, M. Why is internet of autonomous vehicles not as plug and play as we think? Lessons to be learnt from present internet and future directions. IEEE Access 2020, 8, 133015–133033.

- Chen, Q.; Xie, Y.; Guo, S.; Bai, J.; Shu, Q. Sensing system of environmental perception technologies for driverless vehicle: A review of state of the art and challenges. Sens. Actuators A Phys. 2021, 319, 112566.

- Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous vehicle perception: The technology of today and tomorrow. Transp. Res. Part C Emerg. Technol. 2018, 89, 384–406.

- Schiegg, F.A.; Bischoff, D.; Krost, J.R.; Llatser, I. Analytical performance evaluation of the collective perception service in IEEE 802.11p networks. In Proceedings of the Wireless Communications and Networking Conference (WCNC), Seoul, Republic of Korea, 25–28 May 2020; pp. 1–6.

- Delooz, Q.; Festag, A. Network load adaptation for collective perception in v2x communications. In Proceedings of the IEEE International Conference on Connected Vehicles and Expo (ICCVE), Graz, Austria, 4–8 November 2019; pp. 1–6.

- Garlichs, K.; Günther, H.J.; Wolf, L.C. Generation rules for the collective perception service. In Proceedings of the IEEE Vehicular Networking Conference (VNC), Los Angeles, CA, USA, 4–6 December 2019; pp. 1–8.

- Ishihara, S.; Furukawa, K.; Kikuchi, H. Congestion Control Algorithms for Collective Perception in Vehicular Networks. J. Inf. Process. 2022, 30, 22–29.

- Thandavarayan, G.; Sepulcre, M.; Gozalvez, J. Generation of cooperative perception messages for connected and automated vehicles. IEEE Trans. Veh. Technol. 2020, 69, 16336–16341.

- ETSI TR 103 562; Intelligent Transport System (ITS); Vehicular Communications; Basic Set of Applications; Analysis of the Collective-Perception Service (CPS). European Telecommunications Standards Institute: Sophia-Antipolis, France, 2019.

- Iranmanesh, S.; Abkenar, F.S.; Jamalipour, A.; Raad, R. A Heuristic Distributed Scheme to Detect Falsification of Mobility Patterns in Internet of Vehicles. IEEE Internet Things J. 2021, 9, 719–727.

- Tsukada, M.; Oi, T.; Ito, A.; Hirata, M.; Esaki, H. AutoC2X: Open-source software to realize V2X cooperative perception among autonomous vehicles. In Proceedings of the IEEE 92nd Vehicular Technology Conference (VTC-Fall), Virtual, 18 November–16 December 2020; pp. 1–6.

- Zhou, P.; Kortoçi, P.; Yau, Y.P.; Finley, B.; Wang, X.; Braud, T.; Lee, L.H.; Tarkoma, S.; Kangasharju, J.; Hui, P. AICP: Augmented Informative Cooperative Perception. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22505–22518.

- Abdel-Aziz, M.K.; Perlecto, C.; Samarakoon, S.; Bennis, M. V2V cooperative sensing using reinforcement learning with action branching. In Proceedings of the IEEE International Conference on Communications (ICC), Virtual, 12–14 October 2021; pp. 1–6.

- Lima, A.; Bonnifait, P.; Cherfaoui, V.; Al Hage, J. Data Fusion with Split Covariance Intersection for Cooperative Perception. In Proceedings of the IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 1112–1118.

- Miller, A.; Rim, K.; Chopra, P.; Kelkar, P.; Likhachev, M. Cooperative perception and localization for cooperative driving. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 1256–1262.

- Coutinho, R.W.; Boukerche, A. Guidelines for the design of vehicular cloud infrastructures for connected autonomous vehicles. IEEE Wirel. Commun. 2019, 26, 6–11.

- Yoon, D.D.; Ayalew, B.; Ali, G.M.N. Performance of decentralized cooperative perception in v2v connected traffic. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6850–6863.

- Aoki, S.; Higuchi, T.; Altintas, O. Cooperative perception with deep reinforcement learning for connected vehicles. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 328–334.

- Volk, G.; Gamerdinger, J.; von Bernuth, A.; Bringmann, O. A comprehensive safety metric to evaluate perception in autonomous systems. In Proceedings of the IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–8.

- Guo, J.; Yang, Q.; Fu, S.; Boyles, R.; Turner, S.; Clarke, K. Towards Trustworthy Perception Information Sharing on Connected and Autonomous Vehicles. In Proceedings of the International Conference on Connected and Autonomous Driving (MetroCAD), Detroit, MI, USA, 27–28 February 2020; pp. 85–90.

- Masuda, H.; El Marai, O.; Tsukada, M.; Taleb, T.; Esaki, H. Feature-based Vehicle Identification Framework for Optimization of Collective Perception Messages in Vehicular Networks. IEEE Trans. Veh. Technol. 2022, 23, 1–11.

- Shan, M.; Narula, K.; Wong, Y.F.; Worrall, S.; Khan, M.; Alexander, P.; Nebot, E. Demonstrations of cooperative perception: Safety and robustness in connected and automated vehicle operations. Sensors 2020, 21, 200.

- Hurl, B.; Cohen, R.; Czarnecki, K.; Waslander, S. Trupercept: Trust modelling for autonomous vehicle cooperative perception from synthetic data. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 341–347.

- Brodsky, I. H3: Hexagonal Hierarchical Geospatial Indexing System. Uber Open Source Retrieved. 2018. Available online: h3geo.org (accessed on 1 December 2022).

- Duszak, P.; Siemiątkowska, B. The application of hexagonal grids in mobile robot Navigation. In Proceedings of the International Conference Mechatronics, Ilmenau, Germany, 18–20 March 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 198–205.

- Brennand, C.A.; Filho, G.P.R.; Maia, G.; Cunha, F.; Guidoni, D.L.; Villas, L.A. Towards a fog-enabled intelligent transportation system to reduce traffic jam. Sensors 2019, 19, 3916.

- Duszak, P.; Siemiątkowska, B.; Więckowski, R. Hexagonal Grid-Based Framework for Mobile Robot Navigation. Remote Sens. 2021, 13, 4216.

- Li, T.; Xia, M.; Chen, J.; Gao, S.; De Silva, C. A hexagonal grid-based sampling planner for aquatic environmental monitoring using unmanned surface vehicles. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 3683–3688.

- Deepa, J.; Ali, S.A.; Hemamalini, S. Intelligent energy efficient vehicle automation system with sensible edge processing protocol in Internet of Vehicles using hybrid optimization strategy. Wirel. Netw. 2023, 29, 1–17.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).