Abstract

Homomorphic encryption (HE) has emerged as a pivotal technology for secure neural network inference (SNNI), offering privacy-preserving computations on encrypted data. Despite active developments in this field, HE-based SNNI frameworks are impeded by three inherent limitations. Firstly, they cannot evaluate non-linear functions such as ReLU, the most widely adopted activation function in neural networks. Secondly, the permitted number of homomorphic operations on ciphertexts is bounded, which limits the depth of neural networks that can be evaluated. Thirdly, the computational overhead associated with HE is prohibitively high, particularly for deep neural networks. In this paper, we introduce a novel paradigm designed to address these three limitations. Our approach, called iLHE, is interactive and based solely on HE. Using the idea of iLHE, we present two protocols: a ReLU evaluation protocol, which evaluates the ReLU function directly on encrypted data and tackles the first limitation, and a ciphertext refresh protocol, which extends the feasible depth of neural network computations and mitigates the computational overhead, thereby addressing the second and third limitations. Based on these two protocols, we build a new framework for SNNI, named HeFUN. We prove that our protocols and the framework are secure in the semi-honest security model. Empirical evaluations demonstrate that HeFUN surpasses current HE-based SNNI frameworks in multiple aspects, including security, accuracy, the number of communication rounds, and inference latency. Specifically, for a convolutional neural network with four layers on the MNIST dataset, HeFUN achieves higher accuracy and lower inference latency than CryptoNets, the popular HE-based framework proposed by Gilad-Bachrach et al.

1. Introduction

Over the last decade, the field of machine learning (ML), particularly deep learning (DL), has witnessed a tremendous rise, achieving superhuman-level performance in many areas, such as image recognition [1,2], natural language processing [3,4], and speech recognition [5,6]. The success of deep neural networks (DNNs) relies on training large-scale models with billions of parameters on extensive datasets. For instance, ChatGPT [7], a well-known large language model with a total of 175 billion parameters, was trained on a diverse and wide-ranging dataset derived from books, websites, and other texts. Training DNNs is therefore time-consuming and requires a huge number of training samples, which makes it challenging for individual users to build DNNs of this scale. In response to this challenge, Machine Learning as a Service (MLaaS) has been proposed, enabling individual users to perform inference on their data without training a model locally.

The MLaaS paradigm can be described in more detail as follows. A pre-trained neural network (NN), denoted by F, is deployed on a remote cloud server. A client transmits a data sample, denoted by x, to the server for inference. After receiving x, the cloud server performs neural network inference on x to obtain the output F(x), which is returned to the client as the prediction result. Although MLaaS has clear benefits, it also raises serious privacy concerns for both the client and the server. Firstly, from the client’s perspective, the data x and the prediction result F(x) may contain sensitive personal information; e.g., in healthcare applications, x can be a medical record and the prediction is whether the client has contracted a certain disease. Therefore, the client may hesitate to share x with the server and may want to hide F(x) from the server. Secondly, from the server’s perspective, the pre-trained NN, F, represents the service provider’s intellectual property and has great commercial value. Hence, the server does not want to share F with clients. Furthermore, the NN may leak information about the training data [8]. Consequently, it is highly desirable and of great practical importance to design secure MLaaS frameworks in which the client learns F(x) but nothing else about the server’s model F, while the server learns nothing about the client’s input x or the prediction result F(x). Throughout this paper, we refer to this goal as secure neural network inference (SNNI) (other names sometimes used in the literature include privacy-preserving inference and oblivious inference).

Existing approaches to the SNNI problem naturally rely on privacy-preserving techniques of modern cryptography, in particular, multi-party computation (MPC) and homomorphic encryption (HE). In secure two-party computation (2PC), a special case of MPC with two parties, the client and the server interact with each other to securely compute the prediction result without revealing any individual values. After three decades of theoretical and applied work improving and optimizing 2PC protocols, we now have very efficient implementations, e.g., refs. [9,10,11,12,13]. The main advantage of 2PC protocols is that they are computationally inexpensive and can evaluate arbitrary operators. However, 2PC protocols require the structure (e.g., the boolean circuit) of the NN to be public and involve multiple rounds of interaction between the client and the server. On the other hand, HE-based SNNI is a non-interactive approach (the client and the server do not need to communicate during the inference process) and keeps all information about the NN secret from the client. The main bottlenecks of HE-based SNNI frameworks are their computational overhead and their inability to evaluate non-linear operators, e.g., the comparison operator. When accuracy and inference time are the priority, 2PC is the better candidate. When security and low communication are the priority, HE is the better candidate. Our motivation is to design a novel framework that achieves the best of both worlds: the security and low communication cost characteristic of the HE-based approach, coupled with the computational efficiency and ability to evaluate non-linear functions inherent in the 2PC-based approach.

In this paper, we focus on the HE-based approach to SNNI. HE is a cryptographic technique that allows computations to be performed on encrypted data without decrypting it. HE-based SNNI can be described as follows. The client encrypts x and sends the resulting ciphertext to the server. The server evaluates F on the ciphertext and sends the result to the client; by the homomorphic property, this result is an encryption of F(x). Finally, the client decrypts the returned ciphertext and obtains F(x). The main contribution of our paper is a novel interactive approach to SNNI, based solely on HE, that overcomes the aforementioned issues in 2PC and HE. The novelty of our approach is that it relies only on HE, whereas existing interactive approaches combine HE with 2PC protocols. Hence, we avoid the communication complexity caused by 2PC protocols.
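The encrypt, evaluate, decrypt flow described above can be illustrated with a minimal sketch. It does not use CKKS or any scheme from this paper; it swaps in a toy Paillier scheme (additively homomorphic only) with insecure, hypothetical parameters, purely to show a server computing one linear layer on data it cannot read. All names (`encrypt`, `decrypt`, `linear_layer`) are illustrative assumptions.

```python
import math
import random

# Toy Paillier parameters (hypothetical and far too small to be secure).
P, Q = 1009, 1013
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # private key: lcm(P-1, Q-1)
MU = pow(LAM, -1, N)  # valid because the generator is fixed to N + 1


def encrypt(m: int) -> int:
    """Client side: encrypt an integer m (reduced modulo N) with fresh randomness."""
    while True:
        r = random.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m % N, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    """Client side: recover m, mapping the upper half of [0, N) to negatives."""
    m = ((pow(c, LAM, N2) - 1) // N * MU) % N
    return m - N if m > N // 2 else m


def linear_layer(enc_x, weights, bias):
    """Server side: compute an encryption of w . x + b from encrypted x.

    Multiplying ciphertexts adds the plaintexts; raising a ciphertext to a
    plain weight multiplies the plaintext by that weight.
    """
    acc = encrypt(bias)
    for c, w in zip(enc_x, weights):
        acc = (acc * pow(c, w % N, N2)) % N2
    return acc


if __name__ == "__main__":
    x = [3, -2, 5]                                   # client's private input
    enc_x = [encrypt(v) for v in x]                  # sent to the server
    enc_y = linear_layer(enc_x, [2, 4, -1], 10)      # server never sees x
    print(decrypt(enc_y))                            # client decrypts w . x + b
```

Because this toy scheme supports only additions and plaintext multiplications, it cannot evaluate a non-linear activation, which is exactly the gap the interactive approach targets.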

Existing (non-interactive) HE-based SNNI frameworks face three inherent drawbacks, which our approach will address.

- Firstly, existing HE schemes only support addition and multiplication, while other operators, such as comparison, are not readily available and are usually replaced by expensive (high-degree) polynomial approximations [9,10]. Hence, current HE-based SNNI frameworks cannot practically evaluate non-linear activation functions, e.g., the ReLU function, which are widely adopted in NNs.

The usual solution for this issue is to replace these activation functions with (low-degree) polynomial approximations, e.g., the square function. However, this replacement degrades the accuracy of the NN [11] and requires a costly re-training of the NN. Moreover, training NNs with the square activation function can lead to unstable behavior when running the gradient descent algorithm, especially for deep NNs. For example, gradient explosion or overfitting may occur [12]. This is because the derivative of the square function, unlike that of the ReLU function, is unbounded. This paper proposes a novel protocol that evaluates the ReLU function on encrypted data, which addresses the challenge of evaluating ReLU in current HE-based SNNI frameworks.

- Secondly, in all existing HE schemes, ciphertexts contain noise that accumulates with each homomorphic operation. At some point, the noise in a ciphertext may become too large, and the ciphertext can no longer be decrypted. A family of HE schemes that supports a predetermined number of homomorphic operations is called leveled HE (LHE). LHE is widely adopted in SNNI frameworks (LHE-based SNNI frameworks). Therefore, an inherent drawback of existing LHE-based SNNI frameworks is that they limit the depth of NNs.

The bootstrapping technique, proposed by Gentry [13], can address this issue. Bootstrapping produces a new ciphertext that encrypts the same message with a lower noise level, so that more homomorphic operations can be performed on it. Roughly speaking, bootstrapping homomorphically decrypts the ciphertext using an encryption of the secret key, called the bootstrapping key, which yields a fresh encryption of the same message. This requires an extra security prerequisite, termed the circular security assumption: disclosing an encryption of the secret key is presumed to be secure.

The circular security assumption remains poorly studied and poorly understood, and proving circular security for HE schemes remains an open problem. Furthermore, bootstrapping is generally considered an expensive operation, so one usually tries to avoid it as much as possible [14]. This paper proposes a novel protocol that refreshes ciphertexts in HE schemes to address the noise growth issue. Our protocol is much faster than bootstrapping (the empirical comparison results are shown in Section 5.2) and does not require circular security.

- Thirdly, LHE-based SNNI suffers from huge computational overhead. The computational cost of LHE grows dramatically with the number of multiplication levels that the scheme needs to support. This means LHE-based SNNI is impractical for deep NNs, which require a very large multiplication level. Interestingly, our ciphertext refresh protocol naturally reduces the size of the LHE scheme’s parameters, hence significantly reducing computational overhead (details in Section 5.3).

The non-interactive LHE-based SNNI approach cannot efficiently address the three above challenges. A natural approach that appears in the literature is interactive LHE-based approaches [11,15,16]. There are two branches in interactive LHE-based approaches. The first branch is solely based on LHE, which we call iLHE, and the second branch combines HE and 2PC protocols, known as the HE-MPC hybrid approach in the literature.

nGraph-HE2 [11] is the only iLHE-based SNNI framework. nGraph-HE2 adopts a client-aided architecture in which the server sends the encrypted values of intermediate layers (before the non-linear activation function) to the client; the client decrypts those values and evaluates the activation function on them; finally, the client encrypts the resulting values (after the non-linear activation function) and sends them back to the server. It is worth noting that this decrypt-then-encrypt process also acts as a noise refresher. This solution addresses the three mentioned issues of non-interactive LHE-based SNNI. However, nGraph-HE2 leaks information about the network, namely the NN’s architecture and all intermediate layers’ values. Recent studies have highlighted the risks of this leakage: a malicious client could feasibly reconstruct all of the neural network’s parameters by exploiting these intermediate values [17,18]. nGraph-HE2 is an ad hoc solution to the issues of LHE-based SNNI frameworks and is not considered a separate approach to SNNI in the literature. This paper proposes a novel iLHE-based SNNI framework that addresses the drawbacks of existing non-interactive LHE-based SNNI frameworks while keeping the NN’s architecture private and not leaking information about intermediate layers’ values. We argue that the iLHE-based approach deserves more attention from the research community.

The second branch of interactive LHE-based SNNI is the HE-MPC hybrid approach, which wisely combines HE and MPC. In particular, HE schemes were used to compute linear layers, i.e., fully connected layer and convolution layer, while 2PC protocols, e.g., garbled circuit (GC) [19] and ABY [20], were used to compute an exact non-linear activation function. The major bottleneck of the approach is the communication complexity caused by 2PC protocols. On the other hand, our framework is an iLHE-based approach, which is solely based on HE. Hence, we naturally avoid expensive 2PC protocols.

To address the three challenges faced by non-interactive LHE-based SNNI, this paper designs two novel iLHE-based protocols. Firstly, we design a protocol to evaluate the ReLU activation function, an essential non-linear function widely adopted in NNs. This protocol addresses the first challenge of non-linear function evaluation in HE-based SNNI. Compared to current LHE-based SNNI frameworks, our protocol evaluates the ReLU function exactly. Compared to existing HE-MPC hybrid frameworks, i.e., Gazelle [15] and MP2ML [16], our protocol requires fewer communication rounds (we detail this in Section 5.1). Secondly, we design a protocol to refresh HE ciphertexts, which enables further homomorphic operations. This protocol addresses the second challenge of noise accumulation in HE-based SNNI. With the refresh protocol, rather than selecting HE parameters large enough to support the entire NN, the HE parameters need only be large enough to support the computation on the linear layers between activation function layers. For instance, to perform secure inference on a seven-layer NN that contains convolution layers and fully connected layers followed by activation function layers, our framework (with the refresh protocol) requires smaller HE parameters than current non-interactive LHE-based SNNI frameworks (without it) (details in Section 5.3). Therefore, the refresh protocol also addresses the third challenge of computational overhead due to large multiplication depth in LHE-based SNNI. Within the HE scheme, the refresh protocol plays the role of a bootstrapping procedure, which aims to reduce the noise in ciphertexts. Our experiments show that it outperforms bootstrapping in running time (we detail this in Section 5.2). Interestingly, the two protocols can run in parallel, which saves communication rounds.
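The effect of refreshing on parameter size can be made concrete by counting multiplicative levels. The sketch below is a back-of-the-envelope model under stated assumptions, not the paper's measurement: every linear layer and every polynomial activation is assumed to consume one level, and an interactive activation is assumed to return a fresh ciphertext. The layer labels and function names are hypothetical.

```python
from typing import List

# Hypothetical layer labels for this illustration only.
LINEAR = "linear"                # assumed to consume one level
POLY_ACT = "poly_act"            # e.g. square activation, assumed one level
REFRESHED_ACT = "refreshed_act"  # interactive activation that resets the budget


def depth_without_refresh(layers: List[str]) -> int:
    """Levels needed when one ciphertext budget must cover the whole network."""
    return sum(1 for layer in layers if layer in (LINEAR, POLY_ACT))


def depth_with_refresh(layers: List[str]) -> int:
    """Levels needed when each refreshed activation resets the budget."""
    worst = 0
    current = 0
    for layer in layers:
        if layer == REFRESHED_ACT:
            current = 0  # a fresh ciphertext comes back from the interaction
        else:
            current += 1
            worst = max(worst, current)
    return worst


if __name__ == "__main__":
    non_interactive = [LINEAR, POLY_ACT] * 3 + [LINEAR]
    interactive = [LINEAR, REFRESHED_ACT] * 3 + [LINEAR]
    print(depth_without_refresh(non_interactive))  # budget for the whole network
    print(depth_with_refresh(interactive))         # budget for one linear block
```

Under these assumptions the required level no longer grows with network depth, which is why the HE parameters can stay small.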

Our contribution. In this paper, we first design two novel protocols, one for ReLU evaluation and one for ciphertext refreshing, to address the drawbacks of existing LHE-based SNNI frameworks. Then, we leverage these two protocols to build a new framework for SNNI called HeFUN. The idea of HeFUN is to use the first protocol to evaluate the activation function and the second to refresh intermediate neural network layers. The benefits of our proposed method are twofold. Firstly, compared to current HE-based frameworks, our approach significantly reduces inference time while achieving superior accuracy. Secondly, compared to current 2PC-based frameworks, our approach reduces communication rounds and safeguards circuit privacy for service providers. We highlight the advantages of our approach over current approaches in Section 2. A comparison summary is shown in Table 1. The contributions of this paper are as follows:

- We propose a novel iLHE-based protocol that exactly evaluates the ReLU function based solely on HE and requires fewer communication rounds than current HE-MPC hybrid frameworks. The analysis is presented in Section 5.1.

- We propose a novel iLHE-based protocol that refreshes the noise in ciphertexts to enable circuits of arbitrary depth. The protocol also reduces the size of the HE parameters, hence significantly reducing computation time. For the purpose of ciphertext refreshing, it outperforms bootstrapping in computation time (we detail this in Section 5.2). Furthermore, our protocol departs from Gentry’s bootstrapping technique, so we bypass the circular security requirement.

- We build a new iLHE-based framework for SNNI, named HeFUN, which uses the first protocol to evaluate the activation function and the second to refresh intermediate neural network layers.

- We provide security proofs for the proposed protocols. Both protocols are proven secure in the semi-honest model using the simulation paradigm [21]. Our framework, HeFUN, is built by composing these protocols sequentially, and its security proof is based on modular sequential composition [22].

- Experiments show that HeFUN outperforms previous HE-based SNNI frameworks. In particular, we achieve higher accuracy and better inference time.

Table 1. Comparison of SNNI frameworks. Columns are grouped under Security, Accuracy, and Efficiency.

| Approach | Framework | Circuit Privacy | Comparable Accuracy (a) | Non-Linear | Unbounded | Non-Interactive (b) | SIMD | Small HE Params (c) |
|---|---|---|---|---|---|---|---|---|
| LHE | CryptoNets | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | CryptoDL | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | BNormCrypt | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | Faster-CryptoNets | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | HCNN | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | E2DM | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | LoLa | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | CHET | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | SEALion | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| | nGraph-HE | ✓ | ✓ | ✗ | ✗ | ✓ | ✓ | ✗ |
| FHE | FHE-DiNN | ✓ | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ |
| | TAPAS | ✓ | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ |
| | SHE | ✓ | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ |
| MPC | DeepSecure | ✗ | ✗ | ✓ | ✓ | ✗ | - | - |
| | XONN | ✗ | ✗ | ✓ | ✓ | ✗ | - | - |
| | GarbledNN | ✗ | ✗ | ✓ | ✓ | ✗ | - | - |
| | QUOTIENT | ✗ | ✓ | ✓ | ✓ | ✗ | - | - |
| | Chameleon | ✗ | ✓ | ✓ | ✓ | ✗ | - | - |
| | ABY3 | ✗ | ✓ | ✓ | ✓ | ✗ | - | - |
| | SecureNN | ✗ | ✓ | ✓ | ✓ | ✗ | - | - |
| | FalconN | ✗ | ✓ | ✓ | ✓ | ✗ | - | - |
| | CrypTFlow | ✗ | ✓ | ✓ | ✓ | ✗ | - | - |
| | Crypten | ✗ | ✓ | ✓ | ✓ | ✗ | - | - |
| HE-MPC hybrid | Gazelle | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| | MP2ML | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| iLHE | nGraph-HE2 | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| | HeFUN (this work) | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |

(a) Among the frameworks considered to have accuracy comparable to the plain model, LHE-based frameworks are less accurate than the others because they approximate non-linear functions by polynomials, while the MPC-based, HE-MPC hybrid, and iLHE-based approaches achieve the same accuracy as the plain model (details in Section 5.3).

(b) HeFUN requires fewer communication rounds than the HE-MPC hybrid approach (details in Section 5.1).

(c) The size of the HE parameters directly impacts the inference time of SNNI frameworks. HeFUN uses smaller HE parameters than current LHE-based frameworks, hence significantly reducing inference time (details in Section 5.3).

The rest of the paper is organized as follows. Section 2 reviews current SNNI frameworks, Section 3 provides the necessary cryptographic background, Section 4 presents our proposed approach, Section 5 presents our implementation and evaluation results, Section 6 provides some discussions and potential future works, and Section 7 concludes our work.

2. Related Works

This section provides a summary of current approaches for SNNI. Existing solutions for SNNI can be categorized into four main approaches: LHE-based (Section 2.1), TFHE-based (Section 2.2), MPC-based (Section 2.3), and HE-MPC hybrid approach (Section 2.4). We now overview those approaches. Table 1 summarizes the comparison between existing SNNI approaches.

2.1. LHE-Based SNNI

This approach utilizes leveled homomorphic encryption (LHE) schemes, e.g., BFV [23] and CKKS [24]. Such schemes allow a limited and predefined number of operations (e.g., additions and multiplications) to be performed on encrypted data. The computational cost of LHE-based SNNI frameworks grows dramatically with the number of layers in NNs. CryptoNets [12] by Microsoft Research is the first work on LHE-based SNNI. In that paper, the authors presented an SNNI framework for a five-layer NN on the MNIST dataset. CryptoNets triggered significant interest in using LHE for SNNI. Building on the idea of CryptoNets, many subsequent works improved the performance, scaled to deeper networks, and integrated with deep NN compiler technologies, e.g., CryptoDL [25], BNormCrypt [26], Faster-CryptoNets [27], HCNN [28], E2DM [29], LoLa [30], CHET [31], SEALion [32], nGraph-HE [33], and nGraph-HE2 [11]. An essential feature of LHE schemes is that they offer the Single-Instruction-Multiple-Data (SIMD) technique [34]: multiple plain data values are packed into a single ciphertext, and a homomorphic operation on that ciphertext corresponds to performing the operation on all packed values simultaneously. This feature amortizes the cost of homomorphic operations and thus increases the throughput of the inference process. However, LHE-based SNNI frameworks have three inherent shortcomings.

Firstly, LHE-based SNNI frameworks cannot evaluate the ReLU function, which is commonly used in deep learning. This is because existing LHE schemes do not support the comparison operation, which is required to compute the ReLU function. One widely adopted solution is to replace the ReLU function with a polynomial approximation, e.g., CryptoNets replaces the ReLU function with the square function. However, this solution compromises the accuracy of the NN [11]. Additionally, it requires re-training the entire NN, which is a costly procedure. Furthermore, training an NN with a polynomial approximation such as the square function may lead to unstable behavior because, unlike that of the ReLU function, the derivative of the square function is unbounded.

Secondly, LHE-based SNNI restricts the depth of the NNs, as LHE schemes allow only a limited number of operations to be performed on the encrypted data. Thus, this method is neither scalable nor suitable for deep learning, where NNs can contain tens, hundreds, or sometimes thousands of layers [35,36]. This issue can be addressed by using the bootstrapping technique [13]. However, bootstrapping requires circular security and is a costly procedure, so one usually tries to avoid it as much as possible [14].

Thirdly, and perhaps more importantly, even with many efforts to improve the performance, the computational cost of LHE-based SNNI frameworks is prohibitively large. This is because inference on deep NNs requires a large multiplicative level, leading to large LHE schemes’ parameters.

HeFUN addresses all three mentioned drawbacks of LHE-based SNNI thanks to two novel protocols. The ReLU evaluation protocol exactly evaluates the ReLU function, which addresses the first drawback, and the ciphertext refresh protocol refreshes ciphertexts, which addresses the second and third drawbacks.
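The remark in the first shortcoming, that the square activation has an unbounded derivative while ReLU does not, can be checked numerically. The following is a small illustrative sketch with hypothetical helper names; it only compares how the derivative of repeatedly applied activations grows and is not a training experiment.

```python
from typing import Callable


def relu(x: float) -> float:
    return x if x > 0 else 0.0


def relu_grad(x: float) -> float:
    # Bounded: the derivative is always 0 or 1.
    return 1.0 if x > 0 else 0.0


def square(x: float) -> float:
    return x * x


def square_grad(x: float) -> float:
    # Unbounded: the derivative grows linearly with the input.
    return 2.0 * x


def chained_grad(act: Callable[[float], float],
                 grad: Callable[[float], float],
                 x: float, depth: int) -> float:
    """Derivative of applying `act` `depth` times to x (chain rule)."""
    total = 1.0
    for _ in range(depth):
        total *= grad(x)
        x = act(x)
    return total


if __name__ == "__main__":
    for depth in (1, 2, 3):
        print(depth,
              chained_grad(relu, relu_grad, 3.0, depth),
              chained_grad(square, square_grad, 3.0, depth))
```

With ReLU the chained derivative stays at most one, whereas with the square function it grows rapidly with depth, which is consistent with the gradient explosion concern.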

2.2. TFHE-Based SNNI

TFHE [37] is an HE scheme that represents ciphertexts in the torus modulo 1. TFHE can execute rapid binary operations on encrypted bits. Furthermore, TFHE is equipped with a fast bootstrapping technique. Compared to LHE schemes, TFHE has two advantages. Firstly, unlike LHE schemes, which approximate the ReLU function by expensive polynomial operations, TFHE can exactly evaluate the function by boolean operations. Secondly, TFHE allows an unbounded number of homomorphic operations on a ciphertext, thanks to the fast bootstrapping technique. However, TFHE only works on binary gates, and current TFHE-based SNNI frameworks work with binarized neural networks (BNNs) [38]. BNNs restrict the weights and inputs of their linear layers to the set {−1, 1} and employ the sign activation function, which is straightforward to implement in boolean circuits. FHE-DiNN [39] is the first TFHE-based SNNI framework. While FHE-DiNN performs inference on MNIST faster than CryptoNets, its accuracy is notably lower because it is limited to supporting smaller BNNs.

Subsequent works, i.e., TAPAS [40] and SHE [41], improved the efficiency of FHE-DiNN, but their core idea remained that of FHE-DiNN, i.e., using TFHE over BNNs. Due to the limitation of operating on BNNs, the accuracy of TFHE-based frameworks falls short of that of the plain NN (running inference on the original NN). For example, ref. [42] evaluated the most prevalent HE schemes for SNNI, namely CKKS, BFV, and TFHE; while CKKS and BFV achieved accuracy comparable to the plain NN, TFHE’s accuracy was significantly lower. As shown in Table 1, while other approaches achieve accuracy comparable to the plain NN, the TFHE-based approach falls short. Furthermore, since the packing technique does not easily align with the TFHE scheme, processing large amounts of data concurrently may incur extra running time and memory overhead.

Compared to the TFHE-based approach, HeFUN is more accurate (we achieve the same accuracy as the plain NN) and more efficient (it supports SIMD). Furthermore, HeFUN also achieves unbounded depth and can exactly evaluate the activation function.

2.3. MPC-Based SNNI

MPC is a cryptographic technique that enables two or more parties to jointly compute a function f over their respective secret inputs without revealing those inputs to each other. SNNI is a special case of MPC, i.e., secure two-party computation (2PC), where the client and the server jointly evaluate the prediction function on private data (held by the client) and the NN’s parameters (held by the server). GC is an MPC protocol commonly used in MPC-based SNNI frameworks. In the context of SNNI, when the bit length of the numbers in use is fixed, all the operations in an NN can be represented using a boolean circuit. This means the entire NN can be translated into one (likely extensive) boolean circuit. The GC protocol [19] provides a method to evaluate such a boolean circuit securely. DeepSecure [43] pioneered SNNI frameworks that rely purely on GC. Following this, XONN [44] and GarbledNN [45] adopted the same purely GC-based approach. Such frameworks suffer from three drawbacks. Firstly, boolean circuits are unsuitable for performing the arithmetic operations used in NNs. Hence, all these frameworks require some modification of the NN to make it compatible with boolean circuits, e.g., XONN works on BNNs. This modification significantly reduces the accuracy of the NN. Secondly, the purely GC-based approach is bulky and incurs a large overhead [46]. This is considered the major hurdle of the purely GC-based approach. Thirdly, it leaks the entire NN architecture to the client, i.e., the number of layers, the type of each layer, and the number of neurons in each layer.

Secret Sharing (SS) [47] and Boolean SS (also known as GMW) [48] are other common MPC protocols used in MPC-based SNNI. Many SS/GMW-based frameworks have been proposed to address SNNI, e.g., SecureML [49], ABY2 [50], QUOTIENT [51], Chameleon [52], ABY3 [20], SecureNN [53], FalconN [54], CrypTFlow [55], and Crypten [56]. These frameworks are highly efficient and typically outperform other existing SNNI approaches [46]. It is worth noting that many of them also support private training (not only SNNI). Although SS/GMW-based frameworks offer many benefits, such as being able to evaluate any function, including ReLU, and being very efficient, they suffer from the same serious security issue as the purely GC-based approach: they leak the entire network architecture to the client.

Overall, MPC-based SNNI frameworks provide a lower level of security than other SNNI approaches (including HeFUN), as they leak the NN’s entire architecture.

2.4. HE-MPC Hybrid SNNI

HE-MPC hybrid SNNI judiciously combines HE and MPC. In particular, HE-MPC hybrid SNNI frameworks compute linear layers, e.g., fully connected and convolutional layers, using HE, and non-linear activation functions, e.g., ReLU, using MPC. Gazelle [15] was the first work to combine an LHE scheme, i.e., BFV, for computing linear layers with GC for computing non-linear layers. Gazelle [15] opened a new approach, which outperformed the other approaches, i.e., LHE-based, TFHE-based, and MPC-based, at the time of publication (2018). Building on the idea of Gazelle, MP2ML [16] combines the CKKS scheme with ABY [57] (BFV was replaced by CKKS and GC was replaced by ABY). Although Gazelle and MP2ML can evaluate the ReLU function, offer efficient solutions, and keep the NN architecture secret from the client, the main bottleneck of these frameworks is the GC/ABY block, where the client and the server have to interact to jointly compute non-linear functions. Additionally, HE-MPC hybrid frameworks require converting between HE ciphertexts and MPC values.

On the other hand, our framework, HeFUN, is an MPC-free interactive SNNI framework in which HE is the only cryptographic primitive. Compared to HE-MPC hybrid frameworks, HeFUN is simpler and requires fewer communication rounds, as it avoids the main bottleneck block, i.e., GC/ABY. We detail this in Section 5.1.

Our framework, HeFUN, overcomes the shortcomings of previous approaches by introducing two novel protocols, one for evaluating the ReLU function and one for refreshing ciphertexts. Table 1 shows a comparison between HeFUN and existing approaches. HeFUN satisfies the security, accuracy, and efficiency criteria. Notably, HeFUN is the first framework that meets all criteria using only LHE. The idea is that we allow the client and the server to interact solely based on HE (without the need for any MPC protocols). We call this approach interactive LHE (iLHE).

3. Cryptographic Preliminaries

3.1. Notation

We denote scalars by lower-case letters, e.g., x, and vectors by lower-case bold letters; the elements of a vector are indexed starting from one. For any two vectors of the same length, addition and multiplication are component-wise, while the dot product is the sum of their component-wise products. The rotation of a vector by r positions is its cyclic leftward shift by r positions, with the understanding that a negative r corresponds to a rightward shift. We denote matrices by upper-case bold letters and refer to the columns of a matrix by their index. Vector–matrix multiplication is defined in the usual way. Additionally, we work over the cyclotomic ring Z[X]/(X^N + 1), where N is a power of two, and over its residue ring modulo q.

3.2. Homomorphic Encryption

Homomorphic encryption (HE) is a distinctive form of encryption that permits computation on ciphertexts without the need for decryption, thereby ensuring data confidentiality, even within untrusted environments. Current HE schemes vary, particularly concerning the data types they accommodate. For instance, integer-based HE schemes facilitate operations on integer vectors, whereas real number-based HE schemes cater to computations involving real vectors. Although our proposed frameworks are compatible with both types, our primary focus is on the CKKS scheme [10], which is prevalent in SNNI due to its inherent compatibility with real numbers.

In CKKS, a ciphertext is a pair of polynomials in the cyclotomic ring with coefficients modulo q, with q being a prime number. The scheme encrypts a vector of real numbers into the slots of a single ciphertext, thanks to the batching technique [10]. CKKS supports homomorphic operations that apply identical computations to each slot concurrently. In particular, given the ciphertexts of two vectors, the homomorphic operators are: addition of two ciphertexts, addition of a ciphertext and a plaintext vector, multiplication of two ciphertexts, multiplication of a ciphertext by a plaintext vector, and rotation of the encrypted slots.

LHE-based SNNI. Leveraging the operators supported by homomorphic encryption, numerous LHE-based SNNI frameworks have been developed (as can be seen in Table 1). In NNs, two common operators that can be efficiently evaluated using HE schemes are matrix multiplication and convolution. A comprehensive explanation of these methods is beyond the scope of this paper; for detailed insights, we direct readers to [58,59,60,61]. We briefly overview the operators that we used in this paper.

Given a vector and a matrix , the vector–matrix multiplication can be efficiently evaluated in the HE scheme, i.e., , where ⊙ denotes homomorphic (encrypted) vector and (plain) matrix multiplication. In the context of convolution, given an input image (we repurpose the symbol , typically used for vectors, to represent images in this context) and a filter (for clarity, we presume the filter possesses a singular map; in scenarios with multiple maps, the operation can be repeated accordingly), we have , where ∗ and ⊛ denote convolution and homomorphic convolution, respectively. In this paper, we employ convolution based on [60], in which convolution between an encrypted vector and a plain matrix can be computed based on element-wise operators in HE schemes. Additionally, it is noteworthy that the dot product is essentially a specific instance of matrix multiplication, illustrated as .

LHE. In CKKS, each ciphertext carries a non-negative integer, termed its level, which represents its remaining capacity for homomorphic multiplications. After each homomorphic multiplication, the level is reduced by one through the rescaling procedure. Once the level ℓ reaches zero, the ciphertext can no longer support further multiplications. The initial setting of ℓ is therefore crucial and is determined by the expected number of multiplications. For deep NNs, ℓ is large, which increases the CKKS parameters and, in turn, the computational overhead. Another way to extend a ciphertext’s computational capacity is the bootstrapping procedure [13], which turns a zero-level ciphertext into a higher-level ciphertext, thereby enabling further homomorphic multiplications. However, bootstrapping is commonly considered a costly procedure, so one usually tries to avoid it as much as possible [14].
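As an illustration of the level mechanism, the following toy Python model tracks only the level bookkeeping described above. It is a sketch under simplifying assumptions: `ToyCiphertext` is a hypothetical plaintext stand-in, no encryption or noise is modeled, and a product is assumed to sit one level below the lower-level operand.

```python
class ToyCiphertext:
    """Plaintext stand-in for a CKKS ciphertext; only the level is modeled."""

    def __init__(self, values, level):
        self.values = list(values)
        self.level = level

    def multiply(self, other):
        # A ciphertext at level zero cannot take part in another multiplication.
        if self.level == 0 or other.level == 0:
            raise ValueError("level exhausted: no further multiplication possible")
        values = [a * b for a, b in zip(self.values, other.values)]
        # Rescaling after the multiplication consumes one level.
        return ToyCiphertext(values, min(self.level, other.level) - 1)


ct = ToyCiphertext([1.5, -2.0], level=2)
ct = ct.multiply(ct)  # level drops from 2 to 1
ct = ct.multiply(ct)  # level drops from 1 to 0

try:
    ct.multiply(ct)
    third_multiplication_allowed = True
except ValueError:
    third_multiplication_allowed = False
```

In this model, a network that needs more sequential multiplications than the initial level either requires larger parameters or a way to restore the level, which is the trade-off discussed above.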

Security of HE. An essential attribute of CKKS is its semantic security. This ensures that a computationally bounded adversary cannot distinguish which of two different messages a given ciphertext encrypts. Notably, even if two messages are identical (i.e., ), their respective ciphertexts will differ (i.e., ). This is due to the random noise introduced during the encryption process. The semantic security of CKKS is based on the Ring Learning with Errors (RLWE) assumption [62]. The RLWE problem has undergone extensive scrutiny within the cryptographic community. Significantly, it is deemed a computationally hard problem that is resistant to quantum attacks, thereby positioning RLWE-based schemes like CKKS as viable options in the quantum era.

3.3. Threat Model and Security

We prove the security of our proposed protocols against a semi-honest adversary by following the simulation paradigm in [21]. We consider two parties, and , and jointly compute a function by running a protocol . A computationally bounded adversary corrupts either or at the beginning of the protocol and follows the protocol specification honestly but attempts to infer additional sensitive information (e.g., information about the input of the other party) from the observed messages during protocol execution. We now give the formal definition of security within the semi-honest model. Intuitively, the definition guarantees that everything a party observes from the received message of a legitimate execution is essentially inferred from its output (coupled with its corresponding input). In other words, any knowledge the party acquires from the protocol execution is fundamentally implied in the output itself, leaving the party with only the output as new knowledge.

Definition 1.

A protocol Π between a party and a party is said to securely compute a function f in the semi-honest model if it satisfies the following guarantees:

- Correctness: For any ’s input and ’s input , the probability that, at the end of the protocol, outputs and outputs is 1.

- Security:

  - Corrupted : For that follows the protocol, there exists a simulator such that where denotes the view of in the execution of (the view includes the ’s input, randomness, and the message received during the protocol), and denotes the ultimate output received at the end of Π.

  - Corrupted : For that follows the protocol, there exists a simulator such that where denotes the view of in the execution of and denotes the ultimate output received at the end of Π.

Our framework (Section 4) is created by composing sequential secure protocols. To prove the security of our framework, we invoke modular sequential composition, as introduced in [22]. The details of the modular sequential composition are out of the scope of this work. Please refer to [22] for details. We now briefly describe the idea of modular sequential composition, which we used to prove security for our framework. The idea is that two parties and run a protocol and use calls to an ideal functionality to compute a function f (e.g., and may privately compute f by transmitting their inputs to a trusted third party and subsequently receiving the output) in . If we can prove that is secure in the semi-honest model and if we have a protocol that privately computes f in the same model, then we can replace the ideal calls to by the execution of in ; the new protocol denoted is then secure in the semi-honest model.

4. HeFUN: An iLHE-Based SNNI Framework

This section provides a detailed description of our proposed framework. We first state the problem we try to solve throughout this section (Section 4.1). Secondly, we present a protocol to homomorphically evaluate the function, i.e., (Section 4.2). Thirdly, we present a protocol to refresh ciphertexts, i.e., (Section 4.3). Fourthly, we present a naive framework for SNNI, i.e., , that straightforwardly applies the and , but it leaks some unexpected information to the client (Section 4.4). Then, we revise to prevent the potential information leakage, which results in our “ultimate” framework, i.e., (Section 4.5). Throughout this section, we denote the client by and the server by .

4.1. Problem Statement

We consider a standard scenario for cloud-based prediction services. In this context, possesses an NN, and sends the data to and subsequently obtains the corresponding prediction. The problem is formally defined as:

where is the input data, is the linear transformation applied in the ith layer, is the (usually non-linear) activation function, and and are the weight and bias in the ith layer of the NN. The problem we address is SNNI. That is, after each prediction, obtains the prediction , while learns nothing about and , and learns nothing about and with except . In this paper, we focus on the function, i.e., . The final activation function, i.e., , can be omitted in the inference stage without compromising the accuracy of predictions. This is because an NN prediction relies on the index of the maximum value in the output vector, and since the is monotonically increasing, applying it or not does not affect the prediction. The following sections elaborate on how to homomorphically evaluate layers in NNs in the framework.

Linear layers (). These layers apply a linear transformation on input. In NNs, there are two common linear transformations, i.e., fully connected layers and convolution layers.

Fully connected layer. The fully connected layer can be considered as the multiplication between an encrypted vector () and a plain weight matrix () and addition with a bias vector (). Based on operators supported by the CKKS scheme (as described in Section 3.2), the fully connected layer can be efficiently evaluated in the ciphertext domain. In particular, .

Convolution layer. A convolutional layer consists of filters that act on input values. Convolutional layers aim to extract features from the given image. Every filter is an square that moves with a certain stride. By convolving the image pixels, we compute the dot product between filter values and the values adjacent to a particular pixel. Similar to the fully connected layer, the convolution layer can be efficiently evaluated in the ciphertext domain, i.e., .

After linear layers, a non-linear activation function () is usually applied. Unfortunately, the activation function cannot be evaluated in the ciphertext domain. The next section presents our method to address this problem.

4.2. : Homomorphic Evaluation Protocol

After a linear layer, a non-linear activation function is applied to introduce non-linearity into the NN and allow it to capture complex patterns and relationships in the data. In this paper, we focus on the function.

Evaluating requires the comparison operator, which is not natively supported by existing HE schemes. Hence, the activation function layer cannot be directly computed in the ciphertext domain. To address this issue, we propose a protocol, , in which interacts with to perform computation.

Homomorphic evaluation problem. holds secret key , the server has public key but cannot access . holds ciphertext () encrypted under . obtains without leaking to and .

We now present our protocol that solves the homomorphic evaluation problem described above. Here, means applying the function on each element of , i.e., . Our protocol () is described in Protocol 1. We now give the intuition behind the protocol and then present the proof for the correctness and security of the protocol (as illustrated in Lemma 1).

Intuition. Our approach builds on the fact that evaluating the ReLU function is essentially equivalent to evaluating the sign function. In particular, given $x$, $\mathrm{ReLU}(x)$ can be implemented as follows:

$$\mathrm{ReLU}(x) = \frac{x + x \cdot \mathrm{sign}(x)}{2}, \qquad (2)$$

where $\mathrm{sign}(x) = 1$ if $x > 0$, $\mathrm{sign}(x) = 0$ if $x = 0$, and $\mathrm{sign}(x) = -1$ if $x < 0$. It is noteworthy that the sign function as defined in this paper is consistent with the standard interpretation found in the literature [9,10,63,64]. This function is widely employed in various real-world applications, including machine learning algorithms, such as support vector machines [65], cluster analysis [66], and gradient boosting [67].
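The identity behind this intuition can be checked numerically. The sketch below assumes that the two functions in question are ReLU and sign, with Formula (2) read as ReLU(x) = (x + x·sign(x))/2; the helper names are illustrative only.

```python
def sign(x):
    """Return 1 for positive x, 0 for zero, and -1 for negative x."""
    return (x > 0) - (x < 0)


def relu_via_sign(x):
    """ReLU expressed through sign, following the assumed form of Formula (2)."""
    return (x + x * sign(x)) / 2


samples = [-3.5, -1, 0, 2, 4.25]
matches_max = all(relu_via_sign(v) == max(0, v) for v in samples)
```

For a negative input the two terms cancel, for a positive input they double and the division by 2 restores the input, and for zero both terms vanish.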

Therefore, the fundamental question now is as follows: How can (holds ) securely evaluate ? We start from a trivial (non-secure) solution to evaluate as follows: sends to ; decrypts the ciphertext (using ) and obtains , then obtains ; encrypts the result and sends the ciphertext to . Obviously, this trivial solution reveals the entire to . To hide from , first homomorphically multiplies with a random vector (line 2), then sends to (line 3). After that, can decrypt and obtain , which hides from in an information-theoretic way (it is a one-time pad). At ’s side, based on the sign of and sign of , this allows to obtain the sign of . In particular, as , (illustrated in Table 2). Using , can homomorphically compute based on Formula (2). The correctness and security of Protocol 1 is shown in Lemma 1.

| Protocol 1 : Homomorphic evaluation protocol |

Input : Secret key Input : Public key , ciphertext Output :

|

Table 2.

Logic table for function.
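The masking logic described in the intuition can be sketched in plain Python. This is a toy simulation under stated assumptions, not the protocol itself: all values are plaintext floats (no encryption takes place), the mask is assumed to be nonzero in every slot, the mask distribution is an arbitrary choice made here, and all function names are hypothetical.

```python
import random


def sign(v):
    return (v > 0) - (v < 0)


def server_mask(x, rng):
    """Server: draw a nonzero random mask r and blind x slot-wise as x * r."""
    r = [rng.choice([-1, 1]) * rng.uniform(1.0, 100.0) for _ in x]
    masked = [xi * ri for xi, ri in zip(x, r)]
    return r, masked


def client_sign(masked):
    """Client: after decrypting (omitted here), return sign(x * r) per slot."""
    return [sign(m) for m in masked]


def server_finish(x, r, masked_signs):
    """Server: recover sign(x) = sign(x * r) * sign(r), then apply the sign-based ReLU."""
    signs = [s * sign(ri) for s, ri in zip(masked_signs, r)]
    return [(xi + xi * si) / 2 for xi, si in zip(x, signs)]


def toy_relu_protocol(x, seed=0):
    rng = random.Random(seed)
    r, masked = server_mask(x, rng)
    return server_finish(x, r, client_sign(masked))


result = toy_relu_protocol([-2.0, 0.0, 3.5, -0.25, 7.0])
```

Because a masked slot is zero exactly when the original slot is zero, the simulation also makes visible that the client can tell which slots are zero.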

Lemma 1.

protocol is secure in the semi-honest model.

Proof.

We prove that the security of our protocol follows Definition 1. We first prove the correctness and then analyze the security of the protocol.

Correctness. The correctness of the protocol is straightforward. We first prove the correctness of computing . At line 6, we have:

.

Now, we prove the correctness of computing . At line 7, we have:

(Formula (2)).

Security. We now consider the two cases of Definition 1, i.e., corrupted and corrupted .

Corrupted : ’s view is . Given , we build a simulator as below:

1. Pick ;

2. Output .

Even can decrypt and obtain , because was randomly chosen by ; hence, from the view of : .

Corrupted : ’s view is . Given , we build a simulator as below:

1. Pick ;

2. Output .

Based on the semantic security of HE schemes, it is obvious that . □

The idea of computing the function is based on Equation (2), i.e., . This equation requires a division by 2, which is equivalent to multiplying by in CKKS. However, division is not supported by most other schemes that work on integers in the ring , such as BFV [23] and BGV [68]. In those schemes, the division by 2 can be replaced by multiplying by , where is the inverse of 2 in . By doing so, our protocol is compatible with both CKKS and integer-based HE schemes.
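The replacement of the division by a modular inverse can be illustrated on plain integers. The sketch below is an assumption-laden example: the modulus name `t` and its value are chosen here for illustration, `t` must be odd for the inverse of 2 to exist, residues are interpreted as signed integers in a centered range, and the sign is computed directly rather than obtained through the interactive protocol.

```python
def relu_mod_t(x, t):
    """ReLU on residues modulo an odd t, multiplying by the inverse of 2 instead of dividing."""
    inv2 = pow(2, -1, t)  # modular inverse of 2 (requires Python 3.8+ and odd t)
    centered = x if x <= t // 2 else x - t  # read the residue as a signed integer
    s = (centered > 0) - (centered < 0)     # sign of the signed value
    return ((x + x * s) * inv2) % t


t = 65537  # illustrative odd modulus
positive_case = relu_mod_t(7, t)      # encodes +7
negative_case = relu_mod_t(t - 5, t)  # encodes -5
```

For an even modulus, `pow(2, -1, t)` raises `ValueError`, which reflects the fact that 2 has no inverse in that case.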

allows to obtain without leaking to and C. However, it leaks some unexpected information about to , namely, it leaks whether the ith slot in is zero or not (we denote it by ). This leakage occurs at line 4 in Protocol 1. In particular, knows a slot if the ith slot in is 0 (as ). We address the information leakage by proposing a novel NN permutation. We detail this in Section 4.5.

4.3. : Refreshing Ciphertexts

LHE schemes limit the depth of the circuit that can be evaluated. Furthermore, for deep NNs, the multiplicative depth of the HE scheme must be large, which causes computational overhead (as mentioned in Section 3.2). To address these issues, we design a simple but efficient protocol to regularly refresh intermediate layers in NNs, . In this section, we use to denote that the ciphertext is at level ℓ (i.e., ℓ multiplications can be performed on this ciphertext).

Ciphertext refreshing problem. holds secret key , the server has public key but cannot access . holds ciphertext () encrypted under . obtains , s.t. , without leaking to and .

We now present our protocol that solves the ciphertext refreshing problem described above. The protocol is shown in Protocol 2. We now give the intuition behind the protocol and then present the proof for the correctness and security of the protocol (as illustrated in Lemma 2).

| Protocol 2 : Ciphertext refreshing protocol |

Input : Secret key Input : Public key , Output :

|

Lemma 2.

protocol is secure in the semi-honest model.

Intuition. The idea of the protocol is as follows. First, additively blinds with a random mask (line 2) and sends the masked ciphertext to (line 3). Then, decrypts the ciphertext and obtains the masked message , which perfectly hides from in an information-theoretic way (it is a one-time pad). Next, encrypts the masked message into . This ciphertext is at the highest level L, as it has not undergone any multiplication. The decryption–encryption procedure thus acts as the ciphertext refresher. Finally, homomorphically subtracts and obtains a new ciphertext, which is an L-level ciphertext. is essential to enable continued computation without increasing the encryption parameters. Rather than being large enough to support the entire NN, the encryption parameters now only need to be large enough to support the linear layers and the computation in the protocol.
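The blind, re-encrypt, and unblind flow can be sketched with a toy model. This is a plaintext simulation under stated assumptions: `ToyCiphertext`, `MAX_LEVEL`, and the function names are hypothetical, decryption and encryption are replaced by copying values, and the mask range is an arbitrary choice made here.

```python
import random

MAX_LEVEL = 3  # hypothetical highest level L of the toy scheme


class ToyCiphertext:
    """Plaintext stand-in for a ciphertext; stores values and a level only."""

    def __init__(self, values, level):
        self.values = list(values)
        self.level = level


def server_blind(ct, rng):
    """Server: add a random mask r (addition does not change the level)."""
    r = [rng.uniform(-1e6, 1e6) for _ in ct.values]
    blinded = ToyCiphertext([v + ri for v, ri in zip(ct.values, r)], ct.level)
    return r, blinded


def client_reencrypt(blinded):
    """Client: decrypt x + r and encrypt it again as a fresh top-level ciphertext."""
    masked_plain = list(blinded.values)            # stands in for decryption
    return ToyCiphertext(masked_plain, MAX_LEVEL)  # stands in for encryption


def server_unblind(fresh, r):
    """Server: subtract r (subtraction does not change the level)."""
    return ToyCiphertext([v - ri for v, ri in zip(fresh.values, r)], fresh.level)


def toy_refresh(ct, seed=0):
    rng = random.Random(seed)
    r, blinded = server_blind(ct, rng)
    return server_unblind(client_reencrypt(blinded), r)


exhausted = ToyCiphertext([0.5, -1.25, 3.0], level=0)
refreshed = toy_refresh(exhausted)
```

The refreshed values agree with the originals up to floating-point rounding, which loosely mirrors the approximate arithmetic of CKKS, while the level is back at its maximum.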

Proof.

We first prove the correctness and then analyze the security of the protocol based on Definition 1.

Correctness. The correctness of the protocol is straightforward. We first consider the level of the ciphertext. At line 5, encrypts the plain message into . This new ciphertext has not undergone any computation; hence, it is at the highest level of the HE scheme, i.e., L. In line 6, the ciphertext involves one homomorphic subtraction; hence, the resulting ciphertext remains at the level L.

From now on, for the sake of simplicity, we omit the level notation in ciphertexts. At line 6 of the protocol, .

Security. We now consider the two cases of Definition 1, i.e., corrupted and corrupted .

Corrupted : ’s view is . Given , we build a simulator as below:

1. Pick ;

2. Output .

Even can decrypt , and obtain , because was randomly chosen by ; hence, from the view of : .

Corrupted : ’s view is . Given , we build a simulator as below:

1. Pick ;

2. Output .

Based on the semantic security of HE schemes, it is obvious that . □

We now leverage and to design an SNNI framework. We start from a simple framework, , which straightforwardly applies in SNNI (Section 4.4). almost satisfies the requirements of SNNI, except that it leaks to . We then improve to to prevent such information leakage, which results in the protocol (Section 4.5).

4.4. Protocol

This section presents our method to solve the SNNI problem, which uses HE to evaluate linear layers (as described in Section 4.1), uses the protocol to evaluate the activation function, and uses the protocol to refresh intermediate layers. Interestingly, and can be run in parallel on the same input . By doing so, we save communication rounds. The protocol is shown in Protocol 3. straightforwardly applies the protocol to compute the activation function. As shown in Section 4.2, it leaks to when and run (line 4). In the next section, we present a refined version of , i.e., , which overcomes this data leakage.

| Protocol 3 framework |

Input : Data , secret key Input : public key , trained weights and biases with Output : , where is function.

|

4.5.

We first present the intuition behind how we can hide from .

Intuition. To tackle the issues of leaking to , implements a random permutation on prior to executing the protocol. Then, cannot determine the original position of a value, concealing whether the actual value of a particular slot is zero. Thus, only learns the number of zero values, which can be obscured by adding dummy elements. However, in SNNI, is in encrypted form, i.e., . Unfortunately, permutation on is a challenging task. This is because the permutation requires swapping slots in the ciphertext, which requires numerous homomorphic rotations—a costly operator [11,15].

This approach is not practical when the number of neurons in NN layers is large. The section below describes how we can overcome this challenge.

Given a vector , and is a permutation over , let denote the permutation ’s slots according to , i.e., the ith slot in the permuted vector () is (instead of ). Given a matrix , we use to denote permutation of by columns, i.e., the ith column in the permuted matrix () is (instead of ). Here are some obvious facts:

- Permuting two vectors with the same permutation preserves element-wise addition:

- Permuting two vectors with the same permutation preserves their dot product:

- Permuting a vector and every column of a matrix with the same permutation preserves the vector–matrix product: where denotes that we apply the permutation to every column of .

- In vector–matrix multiplication, permuting (only) the matrix by columns leads to the same permutation on the result.
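The four facts listed above can be checked on a small example. The sketch below uses plain Python lists and zero-based positions; the function names are illustrative, and the matrix is stored as a list of rows, so applying a permutation to every column amounts to reordering the rows.

```python
def permute_vec(x, pi):
    """Slot i of the result holds x[pi[i]]."""
    return [x[p] for p in pi]


def permute_columns(W, pi):
    """Column i of the result is column pi[i] of W (W is a list of rows)."""
    return [[row[p] for p in pi] for row in W]


def permute_within_columns(W, pi):
    """Apply pi to every column of W, which reorders the rows of W."""
    return [W[p] for p in pi]


def add(x, y):
    return [a + b for a, b in zip(x, y)]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def vec_mat(x, W):
    return [dot(x, [row[j] for row in W]) for j in range(len(W[0]))]


x, y = [1, 2, 3], [4, 5, 6]
W = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]
pi = [2, 0, 1]

# Element-wise addition is preserved under a common permutation.
addition_ok = add(permute_vec(x, pi), permute_vec(y, pi)) == permute_vec(add(x, y), pi)
# The dot product is preserved under a common permutation.
dot_ok = dot(permute_vec(x, pi), permute_vec(y, pi)) == dot(x, y)
# Permuting the vector and every column of the matrix preserves the product.
product_ok = vec_mat(permute_vec(x, pi), permute_within_columns(W, pi)) == vec_mat(x, W)
# Permuting only the matrix columns permutes the result in the same way.
column_ok = vec_mat(x, permute_columns(W, pi)) == permute_vec(vec_mat(x, W), pi)
```

Integer inputs are used so that the equalities hold exactly; with approximate arithmetic the same relations hold up to rounding.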

Proof.

Proof of Property (3):

Proof of Property (4):

Proof of Property (5):

Proof of Property (6):

□

Based on Property (6) and the fact that , where is the identity matrix, we can achieve permutation of by just permuting identity matrix , namely . Based on this observation, we propose a novel algorithm, i.e., , to permute slots inside a ciphertext without the rotation operator. The algorithm is shown in Protocol 4. The correctness of the protocol is straightforward, as below.

| Protocol 4 : Homomorphic permutation |

Input: ciphertext , permutation Output:

|
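The observation that a permutation can be realized through a permuted identity matrix can be sketched on plaintext data. In the sketch below, all names are hypothetical, positions are zero-based, and an ordinary vector–matrix product stands in for the homomorphic one; undoing the permutation is shown with the inverse permutation.

```python
def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def permute_columns(W, pi):
    """Column i of the result is column pi[i] of W (W is a list of rows)."""
    return [[row[p] for p in pi] for row in W]


def vec_mat(x, W):
    return [sum(x[k] * W[k][j] for k in range(len(x))) for j in range(len(W[0]))]


def permute_via_identity(x, pi):
    """Permute x by multiplying it with the column-permuted identity matrix."""
    return vec_mat(x, permute_columns(identity(len(x)), pi))


def inverse_permutation(pi):
    inv = [0] * len(pi)
    for i, p in enumerate(pi):
        inv[p] = i
    return inv


x = [10, 20, 30, 40]
pi = [3, 1, 0, 2]
permuted = permute_via_identity(x, pi)
restored = permute_via_identity(permuted, inverse_permutation(pi))
```

Only one vector–matrix product is involved per permutation, and no rotation of slots is needed in this formulation.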

The algorithm allows us to permute any (encrypted) intermediate layers, i.e., , prior to executing the protocol. Hence, we can hide from . Suppose each intermediate layer (before the layer) is permuted by a permutation . Then, the value obtained by (after running ) is (not ). This value will be the input for the next linear layer. Therefore, to compute the next linear layer, first needs to unpermute. To do so, runs on and , which results in . Then, can homomorphically evaluate the next linear layer on , which results in . Finally, can run to permute using permutation . Interestingly, for a fully connected layer, we can achieve permutation without the need of . This is because the fully connected layer can be homomorphically evaluated directly on by permuting each column in by (based on Property (5)), namely . Therefore, we achieve permutation for free in fully connected layers.
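The claim that fully connected layers absorb the permutation can be illustrated on plaintext data. The sketch below is one possible reading, stated as an assumption: the rows of the weight matrix are reordered by the input permutation (that is, every column is permuted), and the columns and the bias are reordered by the output permutation, so that a permuted input directly yields a permuted output. All names and values are hypothetical.

```python
def permute_vec(x, pi):
    """Slot i of the result holds x[pi[i]]."""
    return [x[p] for p in pi]


def vec_mat(x, W):
    return [sum(x[k] * W[k][j] for k in range(len(x))) for j in range(len(W[0]))]


def permuted_fc_weights(W, pi_in, pi_out):
    """Reorder rows by pi_in (undoes the input permutation) and columns by pi_out."""
    rows = [W[p] for p in pi_in]
    return [[row[q] for q in pi_out] for row in rows]


def fc_on_permuted(x_perm, W, b, pi_in, pi_out):
    """Evaluate the layer on a permuted input and return the output permuted by pi_out."""
    W_perm = permuted_fc_weights(W, pi_in, pi_out)
    b_perm = permute_vec(b, pi_out)
    return [v + c for v, c in zip(vec_mat(x_perm, W_perm), b_perm)]


x = [1, -2, 3]
W = [[1, 0], [2, -1], [0, 4]]
b = [5, -20]
pi_in, pi_out = [2, 0, 1], [1, 0]

plain = [v + c for v, c in zip(vec_mat(x, W), b)]
result = fc_on_permuted(permute_vec(x, pi_in), W, b, pi_in, pi_out)
```

Since the weights and biases are known to the party that evaluates the layer, the reordering can be prepared ahead of time, which is why no separate permutation step is needed for this layer type.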

Assume that chooses L random permutations corresponding to layer 1 to layer L, respectively. Notably, can be chosen offline by before the inference process. Hence, we can save the inference time. The details of are shown in Protocol 5, in which means fully connected layer and means convolution layer. ’s security follows Lemma 3. We now provide an analysis of the correctness and security of the protocol.

| Protocol 5 framework |

Input : Data , secret key Input : public key , trained weights and biases with Output : , where is function.

|

Lemma 3.

protocol is secure in the semi-honest model.

Proof. Correctness. We first consider the fully connected layers. At line 12, we have:

We now consider convolution layers, i.e., lines 14, 15, and 16. The correctness of convolution layers is straightforward based on the correctness of the algorithm and the homomorphic property.

The above process, i.e., computing the linear layer then computing the activation function, is repeated through layers. At the end, obtains (line 18). Because sends to the permutation of the last layer, i.e., (line 17), can unpermute , and obtains .

Security. We prove the security using modular sequential composition introduced in [22]. We first construct framework , in which and call ideal functionalities and to refresh ciphertexts and evaluate the function. The protocols and are already secure in the semi-honest model (according to Lemma 2 and Lemma 1, respectively). Now, we need to prove that (with the calls to and ) is secure in the semi-honest model. Then, we can replace the calls to ideal functionalities and by protocols and , respectively, and conclude the security of by invoking modular sequential composition (as presented in Section 3.3). We now consider the two cases of Definition 1, i.e., corrupted and corrupted . For a corrupted , it is obvious that receives nothing from ideal calls and . Hence, we just need to consider the case of corrupted , as below:

Corrupted : ’s view is

Given , we build a simulator as below:

1. Pick with ;

2. Output .

Given the semantic security guaranteed by the HE scheme, coupled with the entropic uncertainty introduced by our homomorphic permutation algorithm, it naturally follows that . Hence, . □

5. Experiments

In the evaluation of SNNI, we consider three critical criteria: security, accuracy, and efficiency. The comparative analysis outlined in Table 1 leads to several observations. (1) MPC-based frameworks fail to meet the security criterion, as they disclose the entire architecture of NNs; (2) TFHE-based frameworks fall short on accuracy, since they are limited to BNNs; and (3) LHE-based frameworks, HE-MPC hybrid frameworks, and our proposed framework appear to fulfill all three criteria (it should be noted that the fulfillment of these requirements is not absolute, and some issues still persist, as outlined in Table 1). Hence, in this section, we compare our proposed approach, , with HE-MPC hybrid-based frameworks and LHE-based frameworks. The core architecture of both and prevailing HE-MPC hybrid frameworks encompasses two fundamental components: linear layer evaluation and the evaluation of the non-linear function, specifically the function. While the linear layer evaluation in these frameworks uniformly employs homomorphic encryption, the difference arises in the treatment of non-linear layer evaluation, namely, implements , whereas HE-MPC hybrid-based frameworks opt for either the GC or ABY protocol. This section delves into the communication complexity inherent in the evaluation within as compared to that within existing HE-MPC hybrid frameworks (Section 5.1). serves as a potential alternative to the GC/ABY protocols in HE-MPC hybrid-based frameworks, which reduces the communication complexity in SNNI. To compare with LHE-based frameworks, we first compare the ciphertext refreshing component. In particular, we compare with the bootstrapping technique in the existing HE schemes (Section 5.2). Subsequently, we benchmark alongside the prevalent LHE-based frameworks using a real-world dataset (Section 5.3). All experiments are run on a PC with a single Intel i9-13900K running at 5.80 GHz and 64 GB of RAM, running Ubuntu 22.04. 
Overall, the results presented below show that:

- Compared to current HE-MPC frameworks, requires fewer communication rounds to evaluate the activation function (Section 5.1).

- Compared to existing bootstrapping procedures, is much faster (Section 5.2).

- Compared to current LHE-based frameworks, performs better in terms of accuracy and inference time (Section 5.3).

5.1. Comparison with Hybrid HE-MPC Approach

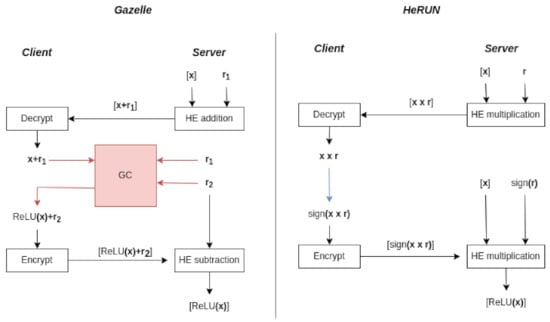

The current state-of-the-art HE-MPC hybrid-based approach is Gazelle [15]. Within Gazelle’s framework, the server directly computes linear layers using HE operators of the LHE scheme in an offline phase. To evaluate non-linear layers, Gazelle leverages GC [19] to handle the bitwise operations required by (online phase). Finally, because an NN alternates between linear and non-linear layers, Gazelle also provides an efficient method to switch between the two aforementioned primitives using a novel technique based on additive secret sharing. Gazelle’s primary bottleneck is the cost of evaluating GC for the activation function [15,69]. Compared to Gazelle, HeFUN can evaluate the function solely based on an HE scheme, thereby avoiding the expensive GC protocol, which significantly reduces communication rounds and communication cost. We now compare the procedure of evaluating the function between and Gazelle regarding communication rounds and communication cost. In both frameworks, i.e., and Gazelle, after computing the linear layers homomorphically or receiving the client’s encrypted input, the server holds an encrypted vector . The objective for both and Gazelle is to enable the server to obtain . In Gazelle, the server and the client perform the following steps:

- Conversion from HE to MPC: The server additively blinds with a random mask and sends the masked ciphertext to the client.

- MPC circuit evaluation: The client decrypts . Then, the client (holds ) and the server (holds and a randomness ) run GC to compute without leaking to the server and and to the client. Finally, the client sends to the server.

- Conversion from MPC to HE: The server homomorphically subtracts and obtains .

The main bottleneck of Gazelle is step 2, i.e., the client and the server run GC, which requires a large amount of communication [15]. The comparison shown in Figure 1 illustrates that surpasses Gazelle by reducing both communication rounds and costs. Specifically, performs evaluation in just two rounds, whereas Gazelle requires more communication rounds, namely two rounds (to convert from HE to MPC and back) plus the communication rounds required by GC. In , two ciphertexts are transmitted between the client and the server, while the number of messages exchanged in Gazelle is larger, namely two ciphertexts ( and ) plus the large number of messages exchanged in the GC protocol.

Figure 1.

Comparison between Gazelle [15] and . The main bottleneck of Gazelle is GC. is solely based on HE, hence avoiding the expensive GC procedure. outperforms Gazelle in terms of communication rounds and communication cost.

In Gazelle, the client must take part in the GC procedure, which is undesirable in SNNI. In contrast, for , the client only needs to perform decryption and encryption, which are simple algorithms in the HE scheme, while the heavy computation takes place on the server.

One should acknowledge that in Gazelle, the server’s operations include homomorphic addition and subtraction; conversely, in , the server performs homomorphic multiplication (as delineated in line 7, Protocol 1), which necessitates an LHE scheme with larger parameters due to the additional multiplications required. Where Gazelle operates with a multiplicative depth of 1, requires a depth of 3.

Another HE-MPC hybrid-based SNNI framework is MP2ML [16], which uses the ABY protocol [20] instead of GC to evaluate the function. In the comparative analysis (see Figure 1), the GC component is substituted with an ABY component. Clearly, demonstrates superior performance over MP2ML in terms of both the number of communication rounds and communication costs. Overall, outperforms the current HE-MPC hybrid-based approach in evaluating in terms of communication complexity. This is primarily because eliminates the communication-intensive GC or ABY protocols. However, requires a higher multiplicative depth of 3, in contrast to the multiplicative depth of 1 required by Gazelle and MP2ML.

5.2. Experimental Results

Within an HE scheme, plays the same role as the bootstrapping procedure: both aim to reduce the noise in ciphertexts. We compare with the bootstrapping technique for CKKS [70], implemented in OpenFHE. We report results for refreshing the noise of a single ciphertext. To ensure the reliability of the results, we repeat the experiment 1000 times and take the average. The comparison results are shown in Table 3. The depth is the number of expected homomorphic multiplications performed before noise refreshing, N is the degree of the polynomial used in the CKKS scheme, and the level is the multiplicative level required for noise refreshing. Bootstrapping homomorphically computes the decryption equation in the encrypted domain. This procedure consumes a number of homomorphic multiplications, so the level of the CKKS scheme must be large enough to accommodate it. The details of the bootstrapping procedure are out of the scope of this work. Please refer to [70] for details. In contrast, DoubleR (Protocol 2) requires only simple computations, i.e., encryption, decryption, addition, and subtraction, so the level of CKKS does not need to be increased. A larger CKKS level leads to a larger value of N to meet the security requirement. With simpler operators and smaller parameters, DoubleR is much faster than bootstrapping. For example, with a depth of 1, DoubleR is nearly 300 times faster than bootstrapping. For a depth of 4, with the same N, is nearly 225 times faster than bootstrapping.

Table 3.

Comparison between Double-CKKS protocol and bootstrapping.

5.3. Comparison between and LHE (CryptoNets)

This section aims to compare with the LHE-based approaches. The foundational concept for LHE-based approaches originates from the landmark study presented in CryptoNets [12], which utilizes an LHE scheme with a predetermined depth that aligns with the NN’s architecture. For the purposes of this analysis, the term ‘CryptoNets’ will be used to refer to the conventional LHE-based approach.

5.3.1. Experimental Setup

The NNs in this section were trained using PyTorch [71]. To implement HE, we employed TenSEAL [60], with CKKS as the underlying scheme. All parameters in the following sections are chosen to comply with the recommendations on HE standards [72] and provide 128-bit security.

5.3.2. Dataset and NN Setup

We evaluated the performance of NN inference on two distinct datasets, i.e., MNIST [73] and AT&T faces datasets [74]. The MNIST dataset has a standard split containing grayscale images of Arabic numerals 0 to 9 of 50,000 training images and 10,000 test images. MNIST is the standard benchmark for homomorphic inference tasks [11,12,25,33]. The AT&T faces dataset includes grayscale images of 40 individuals, with 10 different images of each individual. The dataset is considered a classic dataset in computer vision for experimenting with techniques for face recognition. It offers a more realistic scenario for SNNI, allowing for the recognition of faces while maintaining the confidentiality of the individual images.

The neural networks (NNs) under consideration are composed of convolutional layers, activation functions, and fully connected layers. For the framework, the activation function employed is the function, while LHE-based SNNI typically utilizes the square function as the activation function, as extensively documented in the literature [12,25,26]. To diversify our experimental evaluation, we selected two distinct NN architectures, i.e., a small NN and a large NN (the term “large NN” refers to an NN with more complexity than our “small NN”, but its size is tailored to stay within the operational limits of LHE-based frameworks for meaningful comparisons). The NN architectures for the MNIST dataset are detailed below.

- Small NN:

  - Convolution layer: The input image is 28 × 28. It contains 5 kernels, each in size, with a stride of 2 and no padding. The output is a tensor.

  - Activation function: This layer applies the approximate activation function to each input value. It is a square function in CryptoNets, and the function in .

  - Fully connected layer: It connects the 845 incoming nodes to 100 outgoing nodes.

  - Activation function: It is the square function in CryptoNets, and the function in .

  - Fully connected layer: It connects the 100 incoming nodes to 10 outgoing nodes (corresponding to 10 classes in the MNIST dataset).

  - Activation function: It is the sigmoid activation function.

- Large NN:

  - Convolution layer: It contains 5 kernels, each in size, with a stride of 2 and no padding. The output is a tensor.

  - Activation function: It is the square function in CryptoNets, and the function in .

  - Fully connected layer: It connects the 845 incoming nodes to 300 outgoing nodes.

  - Activation function: It is the square function in CryptoNets, and the function in .

  - Fully connected layer: It connects the 300 incoming nodes to 100 outgoing nodes.

  - Activation function: It is the square function in CryptoNets, and the function in .

  - Fully connected layer: It connects the 100 incoming nodes to 10 outgoing nodes.

  - Activation function: It is the sigmoid activation function.
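The layer sizes listed above can be sanity-checked with a short shape calculation. The sketch below uses the usual floor convention for convolution output size (as in PyTorch) and searches for kernel sizes that, for a 28 × 28 input with 5 kernels, stride 2, and no padding, flatten to the 845 nodes fed into the first fully connected layer. The kernel size is not stated in this text, so the search result is only what is consistent with the numbers given here, and the parameter counts assume one bias per output node.

```python
def conv_output_size(input_size, kernel_size, stride, padding=0):
    """Spatial output size of a convolution (floor convention)."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def consistent_kernel_sizes(input_size, stride, channels, flat_size, max_kernel=9):
    """Kernel sizes whose unpadded output flattens to exactly `flat_size` values."""
    return [
        k
        for k in range(1, max_kernel + 1)
        if channels * conv_output_size(input_size, k, stride) ** 2 == flat_size
    ]


def dense_parameter_count(widths):
    """Weights plus biases of consecutive fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(widths, widths[1:]))


# Fully connected widths as listed for the MNIST networks.
SMALL_NN_WIDTHS = [845, 100, 10]
LARGE_NN_WIDTHS = [845, 300, 100, 10]

if __name__ == "__main__":
    print("kernel sizes consistent with 845 nodes:",
          consistent_kernel_sizes(28, stride=2, channels=5, flat_size=845))
    print("small NN dense parameters:", dense_parameter_count(SMALL_NN_WIDTHS))
    print("large NN dense parameters:", dense_parameter_count(LARGE_NN_WIDTHS))
```

The 845 nodes correspond to 5 feature maps of 13 × 13 values each, and the fully connected layers of the large NN hold roughly three times as many parameters as those of the small NN.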

In the case of the AT&T faces dataset, the neural network retains a similar layer structure as used with the MNIST dataset. However, adjustments are made to the neuron count per layer to accommodate the dataset’s structure. For instance, the output layer features 40 neurons, corresponding to the 40 distinct classes represented in the dataset, in contrast to the 10-neuron configuration used for datasets like MNIST.

As mentioned in Section 4.1, the last sigmoid activation function can be removed in the inference phase without affecting prediction accuracy.
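The reason is that the sigmoid is strictly increasing, so it preserves the ordering of the output scores and therefore the predicted class. A minimal plaintext check, with made-up scores chosen only for illustration:

```python
import math


def sigmoid(x):
    """Logistic sigmoid, a strictly increasing function."""
    return 1.0 / (1.0 + math.exp(-x))


def argmax(values):
    """Index of the largest entry."""
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical pre-activation scores of the last fully connected layer.
scores = [-1.2, 0.3, 2.5, 0.29, -4.0]
probabilities = [sigmoid(s) for s in scores]

# The predicted class is the same with or without the final sigmoid.
same_prediction = argmax(scores) == argmax(probabilities)
```

Skipping this layer also saves the server from evaluating one more non-linear function on encrypted data.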

5.3.3. HE Parameter

The main parameters defining the CKKS scheme [24] are the degree N of the polynomial modulus , the coefficient modulus q, and the multiplicative depth L. The multiplicative depth L is chosen to align with the NN’s depth. The modulus q is then chosen to support the specified multiplicative depth L while meeting the security criterion of 128 bits. Within the framework, the protocol is employed to refresh the outputs of intermediate layers, thereby facilitating additional multiplications. Table 4 details the parameters. CryptoNets () and CryptoNets () stand for CryptoNets () on small NN and large NN, respectively. We choose N = 16,384 in both frameworks. Notably, in the case of CryptoNets, moving from the small NN to the large NN necessitates an increase in L (from 5 to 7), which in turn requires a larger modulus q within the 128-bit security bound. Conversely, maintains a constant and smaller multiplicative depth regardless of NN depth (specifically 3, as per Table 4) thanks to its intermediate ciphertext refreshing mechanism. Larger values of L and q increase inference time.
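The depth values quoted above can be reproduced with a simple level count. The sketch below assumes that every linear layer (convolution or fully connected) consumes one level and that a square activation consumes one level, with the final sigmoid omitted as discussed in Section 4.1. For the refreshed setting it assumes a refresh after every activation and a hypothetical activation cost of 2 levels; that cost is chosen only so that the toy model reproduces the constant depth of 3 quoted in the text, and the actual breakdown of those 3 levels is not given here.

```python
SMALL_NN = ["conv", "act", "fc", "act", "fc"]
LARGE_NN = ["conv", "act", "fc", "act", "fc", "act", "fc"]


def level_cost(layer, act_cost):
    """Levels consumed by one layer; linear layers are assumed to cost 1."""
    return act_cost if layer == "act" else 1


def depth_without_refresh(layers, act_cost=1):
    """Total multiplicative depth when the ciphertext is never refreshed."""
    return sum(level_cost(layer, act_cost) for layer in layers)


def depth_with_refresh(layers, act_cost):
    """Largest depth between two refreshes, assuming a refresh after each activation."""
    worst = current = 0
    for layer in layers:
        current += level_cost(layer, act_cost)
        worst = max(worst, current)
        if layer == "act":
            current = 0  # the refresh restores the full level budget
    return worst


if __name__ == "__main__":
    for name, net in (("small", SMALL_NN), ("large", LARGE_NN)):
        print(name,
              "no refresh:", depth_without_refresh(net),
              "with refresh:", depth_with_refresh(net, act_cost=2))
```

Without refreshing, the required depth grows with the number of layers (5 and 7 here), whereas with refreshing it is bounded by the deepest segment between two refreshes and does not depend on how many such segments the network contains.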

Table 4. LHE’s parameters of CryptoNets and . The best results are in bold font.

5.3.4. Experimental Results

Table 5 and Table 6 present the experimental results on the MNIST and AT&T faces datasets, respectively, upon which we base our analysis of the accuracy and inference time associated with and CryptoNets.

Table 5. Comparison between frameworks and CryptoNets1 on MNIST dataset. The best results are in bold font.

Table 6. Comparison between frameworks and CryptoNets1 on AT&T faces dataset. The best results are in bold font.

Accuracy. It can be seen that outperforms CryptoNets in accuracy. For the MNIST dataset, and achieve accuracy of and , respectively, while CryptoNets and CryptoNets achieve accuracy of and , respectively. The difference is caused by the activation functions used in the frameworks: uses the activation function, whereas CryptoNets uses the square function. Similarly, for the AT&T faces dataset, and achieve accuracy of and , respectively, while CryptoNets and CryptoNets achieve accuracy of and , respectively.

Inference. The timing results reported in Table 5 and Table 6 are the total running time at the client and server. As shown in Table 5 and Table 6, is faster than CryptoNets for both the small NN and large NN. This speedup arises because the LHE parameters in are smaller than those in CryptoNets. For instance, in Table 5, with an increase in NN depth from 5 to 7, CryptoNets shows a significant jump in inference time, from s to s. This increase is due to the larger LHE parameters necessitated by a deeper network. Conversely, benefits from the protocol, which mitigates noise accumulation at intermediate layers and allows for consistent LHE parameters regardless of NN depth. Consequently, in , a similar increase in NN depth results in a modest increase in inference time, from s to s. Interestingly, in , the computation time of a given layer remains almost unchanged regardless of the depth of the NN, because its LHE parameters do not change. In CryptoNets, on the other hand, deeper NNs require larger LHE parameters, which increases the computation time. For instance, the layer Fully connected 1 takes s in both and , while it takes s in CryptoNets, and takes s in CryptoNets. This observation reinforces the scalability of in terms of computation time when compared to CryptoNets as NN complexity increases.

We now analyze the running time of the activation function layers. As shown in Table 5 and Table 6, is slower than CryptoNets at these layers. This difference stems from the intrinsic complexity of the activation functions utilized: CryptoNets employs a square function as the activation, which is a single homomorphic multiplication of two ciphertexts, whereas utilizes the more complex protocol for evaluating the function, as detailed in Table 7.

Table 7. Comparison between protocol and square function evaluation in CryptoNets.

Despite this, has the advantage of being independent of the NN’s depth. Specifically, maintains a constant multiplicative depth of 3 regardless of the NN’s depth, which avoids the growth of LHE parameters (and consequently of inference times) seen with CryptoNets as the NN becomes deeper. To illustrate, the Activation 1 layer in consistently requires s for both small and large NNs ( and ), in contrast to CryptoNets, which records s for a small NN (CryptoNets) and increases to s for a large NN (CryptoNets).

6. Discussion on Security of

6.1. Model Extraction Attack

While ensures security against semi-honest adversaries by protecting client data and the model’s parameters, it remains vulnerable to model extraction attacks. Adversaries can potentially exploit a series of inference queries to reverse-engineer the model’s parameters [75,76], reconstruct training samples [77,78], or deduce the presence of specific samples in the training set [8]. Indeed, all the frameworks in Table 1 remain vulnerable to such attacks. We acknowledge that addressing model extraction is a distinct challenge from SNNI and is not the primary focus of our current work.
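To see why secure inference alone does not prevent extraction, consider a deliberately simple toy case: a linear model exposed only through a query interface. The sketch below is an illustration under that assumption and is far simpler than the attacks on neural networks cited above; the function names and the model are hypothetical. The attacker uses nothing but legitimate inference results, which any SNNI protocol must deliver to the client.

```python
def make_oracle(weights, bias):
    """Black-box inference service for a hypothetical linear model w.x + b."""
    def query(x):
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return query


def extract_linear_model(query, dim):
    """Recover weights and bias with dim + 1 queries (zero vector, then unit vectors)."""
    bias = query([0.0] * dim)
    weights = []
    for i in range(dim):
        unit = [0.0] * dim
        unit[i] = 1.0
        weights.append(query(unit) - bias)
    return weights, bias


# The "secret" model is known only to the server.
secret_weights, secret_bias = [2.0, -1.5, 0.25], 0.5
oracle = make_oracle(secret_weights, secret_bias)

recovered_weights, recovered_bias = extract_linear_model(oracle, dim=3)
```

Defenses against this class of attack typically act on the query interface (for example, by limiting or perturbing answers) and are orthogonal to the cryptographic protocol.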

6.2. Fully Private SNNI

hides all the NN’s parameters from the client and hides clients’ data from the server. However, does not completely hide the network architecture. Our protocol leaks the number of layers and the number of neurons in each layer to the client. To further enhance privacy, we can adopt the padding technique used in the Gazelle framework, which involves adding dummy layers and neurons to conceal the network’s true architecture at the cost of additional computational resources. Furthermore, our protocol obviously leaks the type of activation function used in the NN to the client. It should be noted that all frameworks in the MPC-based and HE-MPC hybrid-based approaches (in Table 1) leak such information. Among current SNNI approaches, LHE-based and FHE-based approaches do not expose any details about the NN, thereby providing a superior level of security. Despite this, such methods are constrained by their inability to efficiently compute certain functions like , their limitation in handling deep NNs, and the significant computational overhead involved. These limitations reduce their scalability and practical applicability in complex SNNI tasks.
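The padding idea can be illustrated on plaintext weights. The sketch below adds dummy hidden neurons with zero incoming and outgoing weights to a two-layer network with a square activation, so the layer appears wider while the output is unchanged. It is a minimal illustration of the general idea under these assumptions, not the actual procedure used in Gazelle, and all names and values are hypothetical.

```python
def dense(weights, bias, x):
    """Fully connected layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def square(values):
    """Element-wise square activation."""
    return [v * v for v in values]


def forward(w1, b1, w2, b2, x):
    """Two-layer network: dense, square activation, dense."""
    return dense(w2, b2, square(dense(w1, b1, x)))


def pad_hidden_layer(w1, b1, w2, extra):
    """Append `extra` dummy hidden neurons with zero incoming and outgoing weights."""
    n_in = len(w1[0])
    w1_padded = [row[:] for row in w1] + [[0.0] * n_in for _ in range(extra)]
    b1_padded = list(b1) + [0.0] * extra
    w2_padded = [row[:] + [0.0] * extra for row in w2]
    return w1_padded, b1_padded, w2_padded


# Hypothetical model with 2 inputs, 2 hidden neurons, and 1 output.
w1, b1 = [[1.0, -2.0], [0.5, 3.0]], [0.0, 1.0]
w2, b2 = [[1.0, -1.0]], [0.25]
x = [2.0, 1.0]

w1_p, b1_p, w2_p = pad_hidden_layer(w1, b1, w2, extra=3)
original_output = forward(w1, b1, w2, b2, x)
padded_output = forward(w1_p, b1_p, w2_p, b2, x)
```

The hidden layer now appears to have 5 neurons instead of 2, and every dummy neuron adds work to both the linear layer and the activation evaluation, which is the computational cost mentioned above.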

7. Conclusions and Future Works

This paper introduces iLHE, a novel interactive LHE-based approach for SNNI. Building upon iLHE, we developed two protocols that address the limitations of current HE-based SNNI methods. Our protocol enables the evaluation of the activation function with fewer communication rounds than existing HE-MPC hybrid solutions. We also introduce , a refreshing protocol that facilitates deep computation by allowing additional homomorphic operations without increasing HE parameters, thereby accelerating HE scheme computations. Uniquely, sidesteps the need for Gentry’s bootstrapping and its associated circular security assumptions. Leveraging and , we propose a comprehensive framework for SNNI that offers enhanced security, improved accuracy, fewer communication rounds, and faster inference times, as demonstrated by our experimental results. We prove that this framework is secure in the semi-honest model.

This work introduces an iLHE-based method for efficiently evaluating the activation in NNs, circumventing the communication overhead associated with MPC protocols. An avenue for future research lies in extending iLHE-based techniques to other non-linear functions, such as max-pooling and softmax. Such advancements could significantly enhance the performance of existing SNNI frameworks.

Author Contributions

Conceptualization, D.T.K.N.; Methodology, D.T.K.N.; Validation, D.T.K.N., D.H.D. and T.A.T.; Formal analysis, D.T.K.N. and D.H.D.; Investigation, D.T.K.N.; Writing–original draft, D.T.K.N.; Writing–review & editing, D.H.D., W.S., Y.-W.C. and T.A.T.; Supervision, D.H.D., W.S. and Y.-W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available in this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 5485–5551.

- Park, D.S.; Chan, W.; Zhang, Y.; Chiu, C.C.; Zoph, B.; Cubuk, E.D.; Le, Q.V. Specaugment: A simple data augmentation method for automatic speech recognition. arXiv 2019, arXiv:1904.08779.

- Gulati, A.; Qin, J.; Chiu, C.C.; Parmar, N.; Zhang, Y.; Yu, J.; Han, W.; Wang, S.; Zhang, Z.; Wu, Y.; et al. Conformer: Convolution-augmented transformer for speech recognition. arXiv 2020, arXiv:2005.08100.

- OpenAI. ChatGPT. 2023. Available online: https://chat.openai.com (accessed on 3 November 2023).

- Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership inference attacks against machine learning models. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 3–18.

- Lee, E.; Lee, J.W.; No, J.S.; Kim, Y.S. Minimax approximation of sign function by composite polynomial for homomorphic comparison. IEEE Trans. Dependable Secur. Comput. 2021, 19, 3711–3727.

- Cheon, J.H.; Kim, D.; Kim, D. Efficient homomorphic comparison methods with optimal complexity. In Advances in Cryptology–ASIACRYPT 2020, Proceedings of the 26th International Conference on the Theory and Application of Cryptology and Information Security, Daejeon, Republic of Korea, 7–11 December 2020; Proceedings, Part II 26; Springer: Cham, Switzerland, 2020; pp. 221–256.

- Boemer, F.; Costache, A.; Cammarota, R.; Wierzynski, C. nGraph-HE2: A high-throughput framework for neural network inference on encrypted data. In Proceedings of the 7th ACM Workshop on Encrypted Computing & Applied Homomorphic Cryptography, London, UK, 11 November 2019; pp. 45–56.

- Gilad-Bachrach, R.; Dowlin, N.; Laine, K.; Lauter, K.; Naehrig, M.; Wernsing, J. Cryptonets: Applying neural networks to encrypted data with high throughput and accuracy. In Proceedings of the International Conference on Machine Learning, PMLR, New York, NY, USA, 20–22 June 2016; pp. 201–210.