1. Introduction

Temporal knowledge graphs (TKGs) are a type of dynamic knowledge structure in which knowledge may change over time. Compared with traditional static knowledge graphs, TKGs take into account the influence of the time dimension. TKGs with multiple timestamps can be represented as a series of knowledge graph (KG) snapshots, where each snapshot contains all facts within the same timestamp.

For static knowledge graph completion, methods such as DiSMult [

1], ComplEx [

2], RGCN [

3], ConvE [

4], and RotatE [

5] have been proposed. TKG reasoning tasks can perform temporal reasoning and evolution analysis of knowledge, mainly divided into interpolation and extrapolation [

6]. Interpolation is to complete missing events based on existing facts; extrapolation is to predict future events based on past events. Predicting future events based on the evolution of historical knowledge graphs is very important and challenging. Obtaining possible future events in a timely manner based on temporal relations can support applications such as financial risk control, disaster relief, etc.

This paper focuses on extrapolation research. The challenge of this task is how to better obtain relevant historical information that can reflect future behaviors. Currently, there are two methods that can be used for TKG extrapolation, namely, query-specific and entire graph-based methods [

7]. The former includes RE-NET [

6], CyGNet [

8], xERTE [

9], TITer [

10], CENET [

11], etc., using high-frequency historical facts related to a query’s topics and relations to predict future trend, ignoring structural dependencies within snapshot; the latter includes RE-GCN [

12], CEN 7, TANGO [

13], TiRGN [

14], L2TKG [

15], etc., using historical knowledge graph as input to obtain the evolutionary patterns of TKG.

The above two methods do not consider the historical features from different perspectives, ignoring potentially useful information. Relying solely on either local or global knowledge graphs may result in information loss, affecting prediction results. However, future events may depend on both local structural dependencies and global temporal patterns. Historical knowledge from varying perspectives likely influences future occurrences to differing degrees.

In this work, we propose a model to capture multi-scale evolutionary features of historical information, called Multi-Scale Evolutionary Network (MSEN). Typically, different features manifest at different scales, providing diverse semantic information. The local memory focuses on contextual knowledge, while the global memory recalls long-range patterns. By integrating both scales, MSEN can better model TKG evolution for reasoning.

The main contributions are:

- (1)

In the local memory encoder, a hierarchical transfer aware graph neural network (HT-GNN) is proposed to enriches structural semantics within each timestamp;

- (2)

In the global memory encoder, a time related graph neural network (TR-GNN) is proposed to extract periodic and non-periodic temporal patterns globally across timestamps;

- (3)

Through experiments on typical event-based datasets, the paper demonstrates the effectiveness of our proposed model MSEN.

2. Related Work

In this section, this paper reviews the existing methods for TKG Reasoning under the interpolation setting and the extrapolation setting, and summarizes the methods for event prediction.

2.1. TKG Reasoning under the Interpolation Setting

For the interpolation setting, models try to complete missing facts in the past timestamps. TTransE [

16], TA-TransE [

17], and TA-DistMult [

17] embed temporal information into scoring functions and relations, respectively, to obtain the evolution information of facts; HyTE [

18] proposes a time-aware model based on hyperplanes, and projects entities and subgraphs onto specific hyperplanes; TNTComplEx [

19] introduces complex numbers to factorize a fourth-order tensor to generate timestamp embeddings. DE-SimplE [

20] accomplishes the task of knowledge graph completion by making feature representations of temporal information; ChronoR [

21] captures the rich interactions between time and multi-relational features in knowledge graphs by learning embeddings of entities, relations, and time; TempCaps [

22] is a capsule network model that uses information retrieved through dynamic routing to construct entity embeddings. TKGC [

23] maps entities and relations in TKG to multivariate Gaussian processes, thereby simulating overall and local trends. However, these models cannot obtain embeddings of future events.

2.2. TKG Reasoning under the Extrapolation Setting

Recently, several studies have attempted to infer future events, and existing methods can be divided into two categories: query-specific and entire graph-based methods [

7] They all use historical information to predict future trends.

2.2.1. Query-Specific Models

The methods focus on obtaining historical information of a specific query. GHNN [

24] predicts the occurrence of future events through obtaining the dynamic sequence of evolving graphs; xERTE [

9] uses iterative sampling and attention propagation techniques to extract closed subgraphs around specific queries. Both CluSTeR [

25] and TITer [

10] are based on reinforcement learning to discover paths for specific queries in the historical KG; RE-NET [

6] can effectively extract sequential and structural information related to a specific query in the TKG to solve the entity prediction task for a given query, but cannot model long-term KG information; CyGNet [

8] combines two reasoning modes, copy mode and generation mode, making predictions based on repeated entities in the historical vocabulary or the entire entity vocabulary, but ignores higher-order semantic dependencies between co-occurring entities; CENET [

11] proposes a novel historical comparative learning approach for knowledge graph reasoning that uses temporal reasoning patterns mined from historical knowledge graph versions to make more accurate predictions.

2.2.2. Entire Graph-Based Models

The methods focus on obtaining the history of the latest KG of fixed length. Glean [

26] introduces unstructured event description information to enrich entity representations, but description information is not available for all events in real applications; TANGO [

13] extends neural ordinary differential equations to multi-relational graph convolutional networks to model structural information, but the model’s utilization of long-distance information is still limited; RE-GCN [

12] and CEN [

7] both model the evolution of representations of all entities by capturing fixed-length historical information, but neither of these two models considers the evolutionary features of historical information from different perspectives; TiRGN [

14] introduces a new recurrent graph network model that incorporates local and global historical patterns in temporal knowledge graphs to improve the accuracy of link prediction over time.

Some recent works have incorporated both local and global modeling for TKG reasoning. However, MSEN proposes new designs for each module and their integration, achieving better results. The hierarchical design in the local module enriches semantic learning, while the periodic and aperiodic temporal modeling in the global module improves temporal encoding.

3. The Proposed Method

In this section, this paper mainly introduces Temporal Knowledge Graph (TKG) Reasoning under the extrapolation setting and the proposed method.

Table 1 shows some of the important symbols of the paper and the corresponding explanations.

3.1. Problem Formulation

A TKG is a KG sequence. In each KG, is a directed multi-relational graph, where Ε is the set of entities; is the set of relations; is the set of facts at timestamp t. A fact in can be expressed as a quaternion (s,r,o,t), where and . The goal of the TKG entity reasoning task is to complete tail entity prediction (s,r,?,) or head entity prediction (?,r,o,) based on the historical knowledge graph sequence . For each quaternion (s,r,o,t), an inverse quaternion is added to the KG. Therefore, the prediction of head entity (?,r,o,) can be transformed into the prediction of tail entity ().

3.2. Model Overview

In this section, a Multi-Scale Evolutionary Network (MSEN) is proposed to solve the problem of feature extraction at different scales. The overall framework of a MSEN is illustrated in

Figure 1. There are three parts in our model: (1) A local memory encoder that applies a hierarchical transfer-aware graph neural network (HT-GNN) to obtain structural features within each timestamp’s knowledge graph snapshot. The local encoder also performs temporal encoding of entities and relations using a gated recurrent unit (GRU); (2) a global memory encoder that employs a time-related graph neural network (TR-GNN) to mine temporal-semantic dependencies across the historical knowledge graph, yielding entity and relation embeddings; (3) a decoder that integrates the local and global encoder outputs and utilizes a scoring function to predict future entities.

Specifically, the input is a series of temporal knowledge graph snapshots. The local encoder extracts per-timestamp features using HT-GNN and temporal dynamics via the GRU. The global encoder identifies long-range patterns using TR-GNN. Finally, the decoder combines local and global embeddings to predict future entities using the scoring function. This multi-scale approach allows MSEN to capture both contextual knowledge and global evolutions for enhanced temporal reasoning.

3.3. Local Memory Encoder

The local memory encoder focuses on obtaining the memories of the m historical knowledge graphs adjacent to the query time. It aggregates each knowledge graph snapshot to capture structural dependencies within timestamps. Additionally, sequence neural networks are utilized to learn temporal features of entities and relations, enabling the model to better represent temporal dynamics across knowledge graphs.

3.3.1. HT-GNN

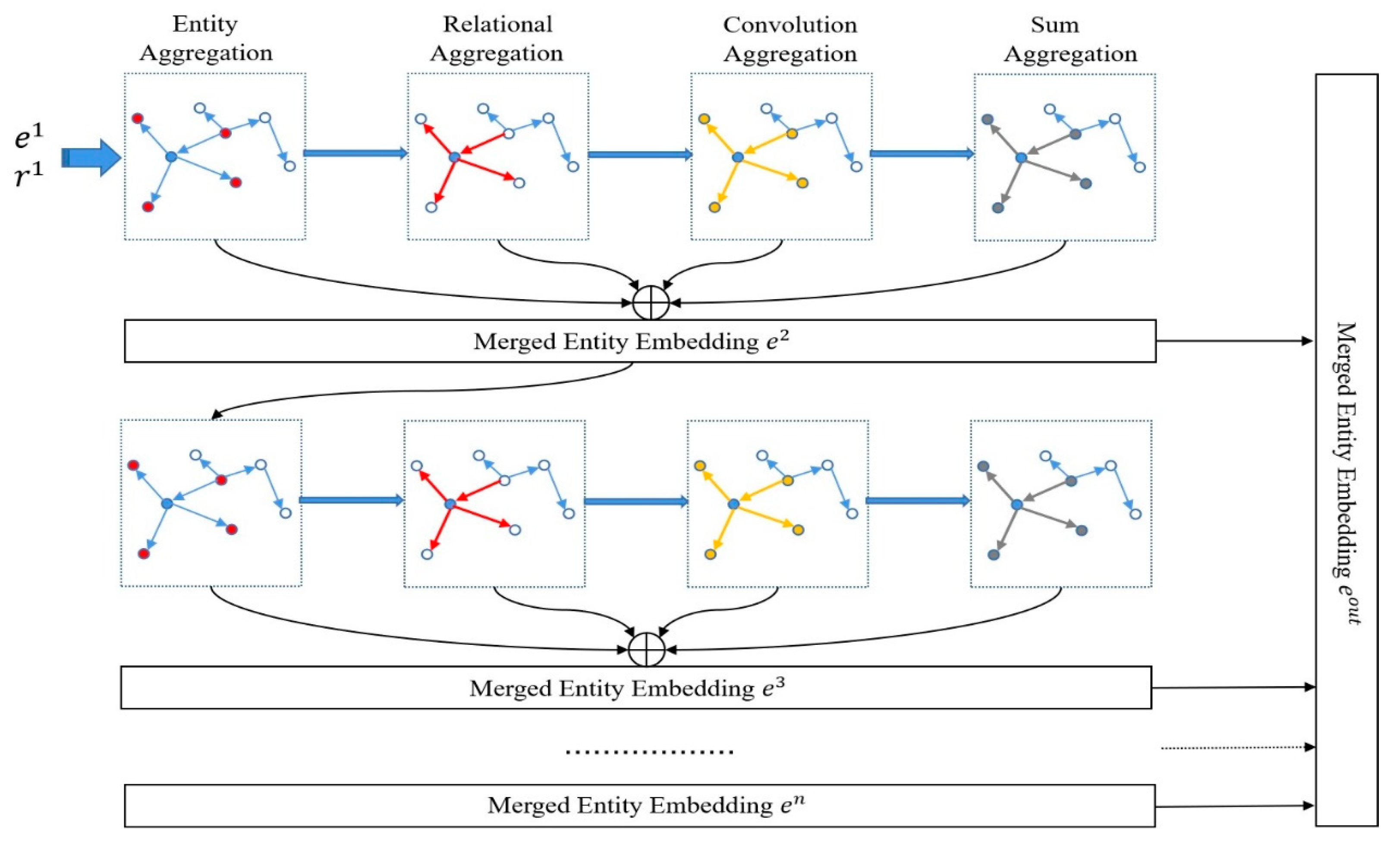

Obtaining informative embedding representations for each temporal knowledge graph is critical for effective local encoding. To better capture semantic information, we propose a novel graph neural network-based knowledge graph embedding method called Hierarchical Transfer-Aware Graph Neural Network (HT-GNN). As illustrated in

Figure 2, HT-GNN performs sequential aggregation at each layer: entity aggregation, relation aggregation, convolution aggregation, and sum aggregation. The blue nodes serve as aggregation centers. Different aggregation results are merged as input to the next layer. Finally, the outputs from the multi-layer aggregations are combined as the overall model output.

Specifically, entity aggregation allows nodes to incorporate semantics from adjacent entities based on co-occurrence, capturing semantic dependencies. The entity aggregation function and weight calculation are:

where

is embedding representation of entity o.

is the embedding representation of entity s which is a neighboring node of entity o.

is a learnable weight matrix.

represents the neighboring entities and relations of entity o.

is a nonlinear activation function.

is the aggregation weight, calculated as follows:

where

is the LeakyRelu activation function. L

represents the fully connected function.

represents the concatenation operation.

The result of entity aggregation is used as input to relation aggregation. The relation aggregation infuses relation semantics into the entity representations, thereby obtaining the co-occurrence of each node and relation in the knowledge graph. The relation aggregation function and corresponding weight calculation method used in the paper are as follows:

where

is the embedding representation of the neighboring relation r of entity o.

is a learnable weight matrix.

is the relation aggregation weight, calculated as follows:

The result of relation aggregation is used as input to convolution aggregation. For convolution aggregation, it obtains the hidden relation between entity s, relation r, and entity o, which enriches the semantic information of entities. The convolution aggregation function and weight calculation method used in the paper are as follows:

where

is the convolution operation.

is a learnable weight matrix.

is the aggregation weight, calculated as follows:

The result of convolution aggregation is used as input to sum aggregation. For sum aggregation, information is obtained through relational paths between entities, and sum-obtained information is similar to translation model TransE [

27]. The sum aggregation function and corresponding weight calculation method used in the paper are as follows:

where

and

are the embedding representations of neighboring entity s and relation r of entity o.

is a learnable weight matrix.

is the relation aggregation weight, calculated as follows:

Finally, the final entity embedding can be expressed as:

3.3.2. Local Memory Representation

In order to obtain the temporal features of entities and relations in adjacent historical knowledge graphs, following RE-GCN [

12], GRU is adopted to update the representations of entities and relations. The update of entity embedding representations is as follows:

where

is the entity embedding at time t + 1.

is the entity embedding obtained after aggregating the knowledge graph using HT-GNN.

Similarly, in order to obtain the temporal embedding representation of the relationship, the following method is used for calculation:

where

is the relation embedding at time t + 1.

represents mean pooling operation to retain all entity embeddings related to the relationship r and average them.

3.4. Global Memory Encoder

To extract cross-timestamp entity dependencies, we construct a global knowledge graph by associating historical snapshots containing the same entities [

28]. We introduce a Time-Related Graph Neural Network (TR-GNN) to effectively encode this global graph.

The global graph aggregates facts across timestamps, including periodic and non-periodic occurrences. To fully utilize this temporal information, we design separate periodic and aperiodic time vector representations:

where

is the periodic time vector;

is the non-periodic time vector.

and

are learnable vectors, representing frequency and phase respectively.

is a periodic activation function, which is sin function in the paper. t represents the time interval

between two connected entities in the global knowledge graph.

To obtain the time-semantic dependencies between entities in the global knowledge graph, the paper designs TR-GNN to encode the knowledge graph:

where

is the encoded entity embedding.

is a learnable weight parameter.

and

are weights for entities and relations respectively, calculated as follows:

Using the non-periodic time vector to compute weights for entities and relations better captures the temporal-semantic relationships between entities. Finally, the updated entity embeddings are nonlinearly transformed to obtain the embedding representations of each entity in the global knowledge graph.

3.5. Gating Integration

In order to better integrate the embeddings vectors obtained by the local memory encoder and the global memory encoder for reasoning, the paper applies a learnable gating function [

29] to adaptively adjust the weights of local memory embeddings and global memory embeddings, finally obtaining a vector:

where

is the sigmoid function, aiming to restrict the range of each element to [0, 1]. g is the gate parameter to adjust the weights.

is element-wise multiplication.

3.6. Scoring Function and Loss Function

In this section, the paper mainly introduces the details of scoring function and the loss function. In order to obtain the probability of an event occurring at timestamp t + 1 in the future, ConvTransE [

12] is utilized as the decoder to calculate the probability of the existence between entities at timestamp t + 1 under the relation r:

where

is the sigmoid function.

and

are the embedding representations of entities and relations at timestamp t + 1.

is the embedding representations of entities after global and local fusion.

Following RE-GCN [

12], the loss function during training is defined as:

The final loss is

, where

is the loss for predicting entities,

is the loss for predicting relations,

is the hyperparameter balancing different tasks.

is

norm, and

is to control the penalty term. The overall training algorithm is shown in Algorithm 1.

| Algorithm 1. Training of MSEN |

Input: The train set: ; the number of epoch n.

Output: The minimum Loss on the train set. |

Init.normal_(), //Initialize the model’ parameters For each do + + + //Obtaining the semantic information using HT-GNN //local memory representation Construct a global graph G; //Obtaining time-semantic dependencies using TR-GNN //Gating Integration ConvTranE() LossCalculate the sum of and Update parameters End for Return Loss

|

4. Experiments

In this chapter, the paper compares MSEN with several baselines on two datasets. Meanwhile, ablation experiments are conducted to analyze the ability of acquiring semantic information at different scales. Comparison experiments evaluate the effects of different GCNs. In addition, the paper also studies the parameter settings of the model.

4.1. Datasets

To evaluate the effect of the MSEN model, experiments are conducted on two typical event-based datasets, including: ICEWS14 [

17], ICEWS18 [

6]. Both datasets are from the event-based dataset Integrated Crisis Early Warning System [

30], with the time interval of 24 h. The statistical details of the datasets are listed in

Table 2.

4.2. Baselines

The paper compares MSEN with two types of methods: static KG reasoning and TKG reasoning methods.

For static KG methods: DiSMult [

1], ComplEx [

2], RGCN [

3], ConvE [

4], and RotatE [

5] are selected.

For TKG reasoning under the extrapolation setting: CyGNet [

8], RE-NET [

6], xERTE [

9], RE-GCN [

12], TITer [

10], CENET [

11], and TiRGN [

14] are selected.

4.3. Evaluation Metrics

This paper adopts mean reciprocal rank (MRR) and Hit@k (k is 1 and 10) to evaluate the performance of models for entity prediction. For the fairness of the comparison, the ground truth is used when performing multi-step reasoning, and the experimental results are reported under the time-aware filtered setting. MRR is the average of the reciprocal ranks of all relevant candidate entities, measuring the rank of the highest-ranking relevant entity. Hit@k represents the proportion of cases where the true target entity is included in the top k predicted candidates, reflecting the model’s ability to contain the target entity within the top k.

4.4. Implementation Details

In the experiments, the embedding dimension was fixed at 200 for all methods. The Adam optimizer was used for parameter training with a learning rate of 0.001. The hyperparameter for balancing different tasks was set to 0.7. To ensure fairness of comparison with other models, for the local memory encoder, the number of adjacent historical knowledge graphs obtained was 3 and 6 on the ICEWS14 and ICEWS18 datasets respectively; for the global memory encoder, the number of adjacent historical knowledge graphs obtained was 10 and 10 on the ICEWS14 and ICEWS18 datasets, respectively. The number of layers in HT-GNN was 2 on both datasets. The number of layers in TR-GNN was 2 and 3 on the ICEWS14 and ICEWS18 datasets respectively. For the ConvTranE decoder, the number of channels used was 50 and the kernel size was 2 × 3.

5. Results

5.1. Performance Comparison

As shown in

Table 3 and

Table 4, entity prediction experiments are conducted on facts at future timestamps. The tables list the predictive performance of MSEN and the baseline models on ICEWS14 and ICEWS18 datasets. The results of baseline models in the table are from [

15].

In the experimental results, the MSEN model outperforms other baseline models. The results show the effectiveness of the MSEN model in predicting entities at future timestamps. Compared with TiRGN*, MSEN improves the MRR by 3.5 and 1.83 percentage points on ICEWS14 and ICEWS18, respectively.

Probing deeper, we evaluated recall capabilities against TiRGN* on ICEWS14. At recall@1, MSEN achieved 32.78%, while TiRGN* achieved 34.88%. For recall@10, the models achieved near identical results, with MSEN at 61.98% versus 62.21% for TiRGN*. While marginally behind in recall@k, MSEN still demonstrates competitive retrieval ability.

5.2. Ablation Study

The encoder of the MSEN model is mainly composed of two submodules: Local Memory Encoder (LME) and Global Memory Encoder (GME). To further analyze the effect of each module on the final entity prediction results, the paper reports the results of LME (HT-GNN) and GME (TR-GNN) on the ICEWS14 dataset using MRR and Hit@k (k = 1, 3, 10) metrics. LME (HT-GNN) represents removing the GME module from the MSEN model; GME (TR-GNN) represents removing the LME module from the MSEN model. As can be seen from

Table 5, the performance of LME (HT-GNN) is worse than MSEN, because this part only extracts local features and the obtained embedding representations have less semantic information. The performance of GME (TR-GNN) has greatly improved compared to LME (HT-GNN), but integrating both using a gating function can further improve the accuracy of entity prediction.

To study the effects of HT-GNN and TR-GNN in LME and GME, respectively, the paper compares these two models with different types of GNN-based models on the ICEWS14 dataset using MRR and Hit@k (k = 1, 3, 10) metrics. In LME, RGCN [

3], KBGAT [

31], and CompGCN (add) [

32] are used to replace the HT-GNN model; in GME, RGCN [

3], KBGAT [

31], CompGCN (add) [

32], and HRGNN [

28] are used to replace the TR-GNN model. The experimental results in

Table 5 show that the MRR of HT-GNN is 0.83 percentage points higher than CompGCN (add); in GME, the MRR of TR-GNN is 0.31 percentage points higher than HRGNN. Overall, both can effectively improve the accuracy of entity prediction in LME and GME respectively. The improvement of HT-GNN may be due to the hierarchical transfer method that enriches the semantic information of entities in the final knowledge graph. The improvement of TR-GNN may be due to its full utilization of both periodic and non-periodic time in the global knowledge graph.

5.3. Sensitivity Analysis

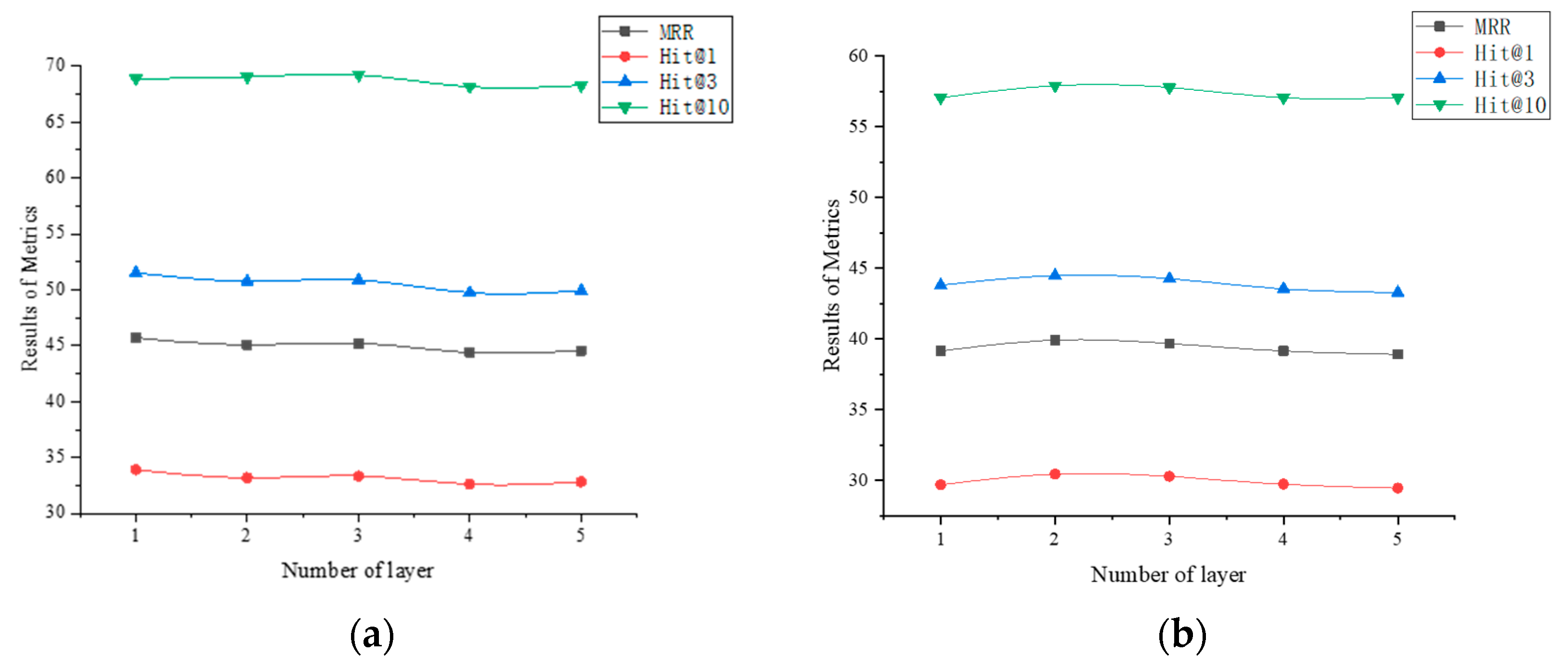

The paper further studied the effect of the number of layers in TR-GNN and HT-GNN on entity prediction performance for GME and LME modules using MRR and Hit@k (k = 1, 3, 10) metrics, respectively.

Figure 3 show the experimental results with different numbers of layers on the ICEWS14 dataset. The experimental results show that with just 1 layer, the TR-GNN module can already effectively extract temporal-semantic information between entities. As the number of layers increases, the extracted features may interfere with entity embeddings. The HT-GNN model can extract sufficient semantic information with 2 layers, thus achieving higher entity prediction accuracy. Through experiments, it was proven that the different number of layers in TR-GNN and HT-GNN can affect the extraction of semantic information in the knowledge graph, thus influencing the effect of entity prediction.

5.4. Training Process Analysis

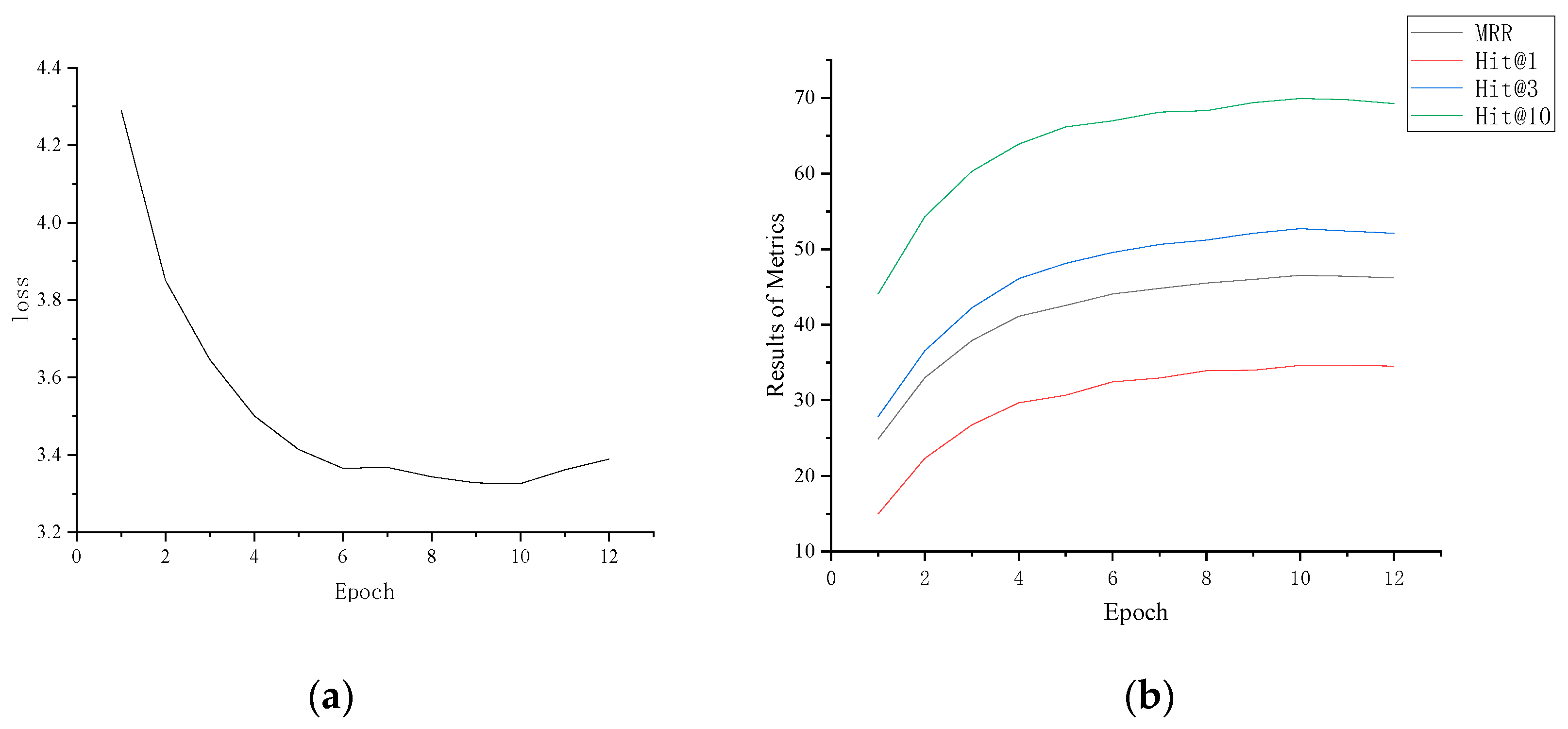

This paper studied the evolution of loss and evaluation metrics of the MSEN model during the training process.

Figure 4 shows how the experimental results change with increasing epoch on the validation dataset of ICEWS14. The experimental results show that as the number of training epochs increases, the loss first gradually decreases, reaching the lowest point at the 10th epoch, and then slowly increases again; the values of the evaluation metrics MRR, Hit@1, Hit@3, and Hit@10 gradually increase, reaching a plateau after the 10th epoch. The results indicate that after 10 epochs of training on the ICEWS14 dataset, the model starts overfitting as evidenced by the increasing loss and stabilizing evaluation metrics. Therefore, 10 epoch is an appropriate number for training the MSEN model on the ICEWS14 dataset to achieve good performance without overfitting.

5.5. Statistical Analysis

In order to demonstrate the reliability of the results, both permutation tests and multiple repeated experiments were conducted in this study.

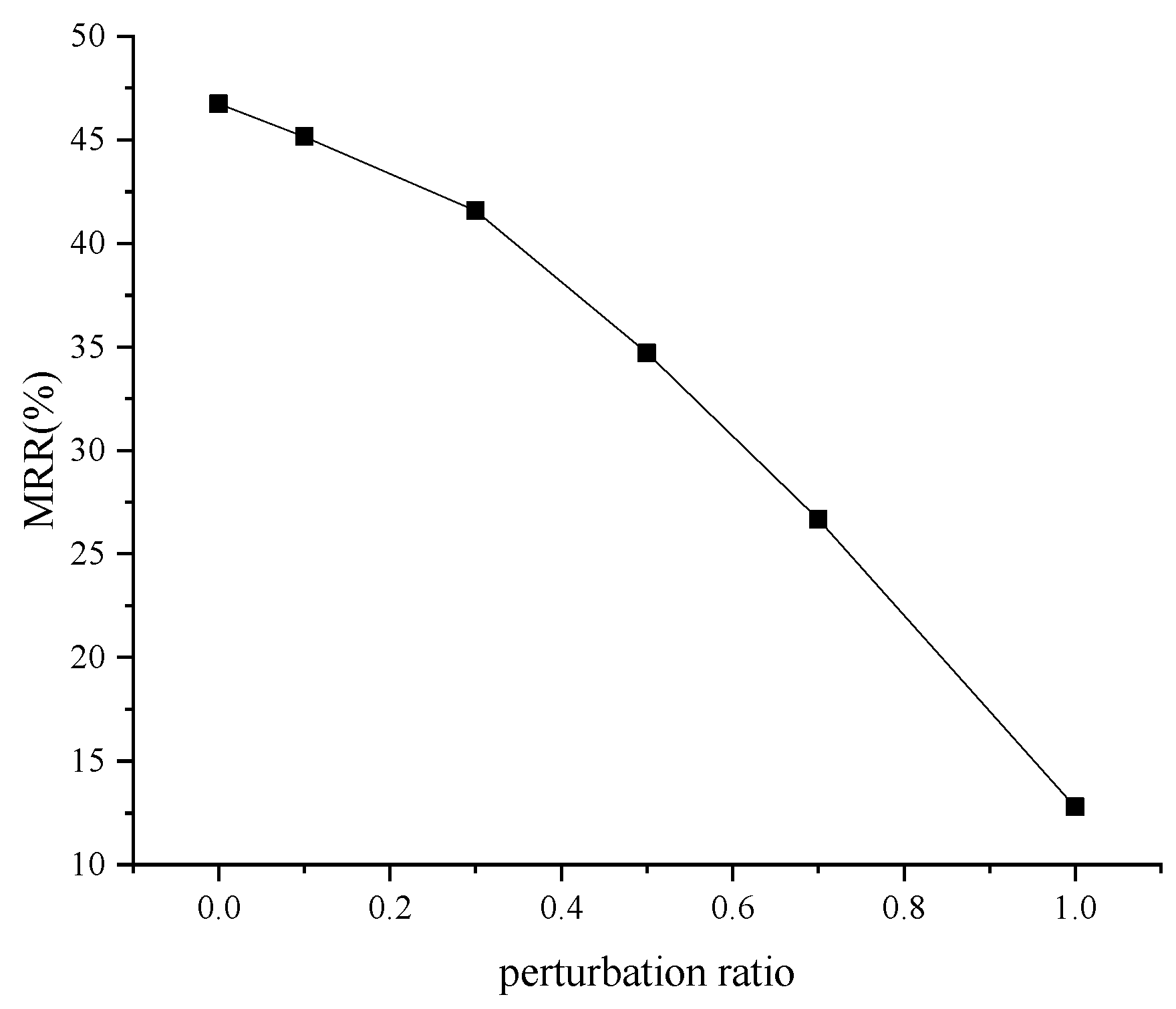

For the permutation test, we first trained the proposed MSEN model on the original training set and recorded the model’s performance (MRR) on the test set as the true observation. We then created perturbed training sets by replacing different proportions of head or tail entities in the original training set. The MSEN model was re-trained on these perturbed sets and achieved varying MRRs on the test set. The p-value was calculated using the following formula:

where M is the number of times the MRR obtained using the perturbed datasets was greater than the true observation, and N is the number of experiments conducted with the perturbed datasets.

As illustrated in

Figure 5, the MSEN model achieved lower MRRs when trained on more heavily perturbed sets, with p-values below the 0.05 significance level. This suggests the model has genuinely captured meaningful patterns in the training data.

Additionally, the experiment was repeated three times using the original training set, yielding consistent MRRs of 46.68%, 46.75%, and 46.72%, with an average of 46.72%. This further demonstrates the reliability and reproducibility of the proposed model.

6. Discussion

The experimental results demonstrate that the proposed MSEN model achieves superior performance compared to several baselines on typical benchmark datasets. The better results validate the effectiveness of modeling multi-scale evolutionary information for predicting future events in TKGs.

The ablation studies in

Section 5.2 provide insights into the contribution of each module in MSEN. Removing the global memory encoder leads to a significant performance drop, showing the importance of capturing long-distance temporal dependencies. The local memory encoder also contributes steady improvements by aggregating rich semantics within each timestamp. Using HT-GNN and TR-GNN as the graph neural network encoders also leads to better results compared to alternative GNN models, demonstrating their capabilities in encoding structural and temporal patterns from TKGs.

The multi-scale modeling in MSEN aligns with findings from previous works such as TiRGN [

14] which show combining local and global patterns is beneficial. However, MSEN proposes new designs for each module, leading to better results. The hierarchical design in HT-GNN enriches semantic learning, while the periodic and aperiodic temporal modeling in TR-GNN improves temporal encoding.

7. Conclusions and Future work

In this work, we propose a new method Multi-Scale Evolutionary Network (MSEN) to solve temporal knowledge graph (TKG) reasoning under the extrapolation setting. First, a hierarchical transfer graph neural network (HT-GNN) is designed in the local memory encoder to acquire rich semantic information from the local knowledge graph, and sequence neural networks are used to learn temporal features of entities and relations. Second, in the global memory encoder, a time-related GNN (TR-GNN) is designed to make full use of temporal information in the global knowledge graph, obtaining periodic and aperiodic temporal-semantic dependencies in the global knowledge graph, providing different weights for entities and relations, improving performance of downstream tasks. Finally, embeddings from different scales are integrated to complete entity prediction. Experimental results on a typical benchmark demonstrate the effectiveness of MSEN in solving TKG reasoning problems.

While MSEN achieves good results, there remain several promising directions for future investigation: (1) Model Compression: The current MSEN model entails relatively high computational complexity. To enable real-time inference and deployment under resource constraints, we can explore model compression techniques such as pruning and knowledge distillation. By simplifying the model while retaining predictive power, we can enhance its applicability to real-world systems. (2) Robustness to Noisy Data: Historical knowledge graphs often suffer from incomplete, biased, or noisy data. The robustness of MSEN’s predictions rely on the quality of the historical inputs. Advanced data filtering, denoising, and imputation methods can be studied to handle imperfect historical graphs. Techniques such as outlier detection and data estimation can help improve the model’s resilience against noise and missing facts. (3) Advanced GNN Architectures: Further improving the local and global graph neural network encoders with more sophisticated architectures such as graph attention networks is a promising direction. This can potentially capture more complex structural, semantic, and temporal patterns.

In the future, we will aim to enhance MSEN along these directions to make broader impacts in real-world applications of temporal knowledge graph reasoning.