COVID-Related Misinformation Migration to BitChute and Odysee

Abstract

1. Introduction

- Migration of misinformation from YouTube, a mainstream platform, to the alternative low content moderation platforms BitChute and Odysee.

- Analysis of the characteristics of misinformation dissemination on BitChute and Odysee.

- Analysis of how users engage with misinformation videos on BitChute and Odysee.

- RQ1: Are misleading videos posted on mainstream platforms migrating to the low content moderation platforms BitChute and Odysee?

- RQ2: Are there similarities between the dissemination of misinformation on BitChute/Odysee and on YouTube?

- RQ3: How do users engage with misinformation videos on BitChute and Odysee?

- RQ4: Do users who share misleading videos on BitChute and Odysee maintain accounts on other platforms?

2. Related Work

2.1. Health-Related Misinformation and Conspiracy Theories

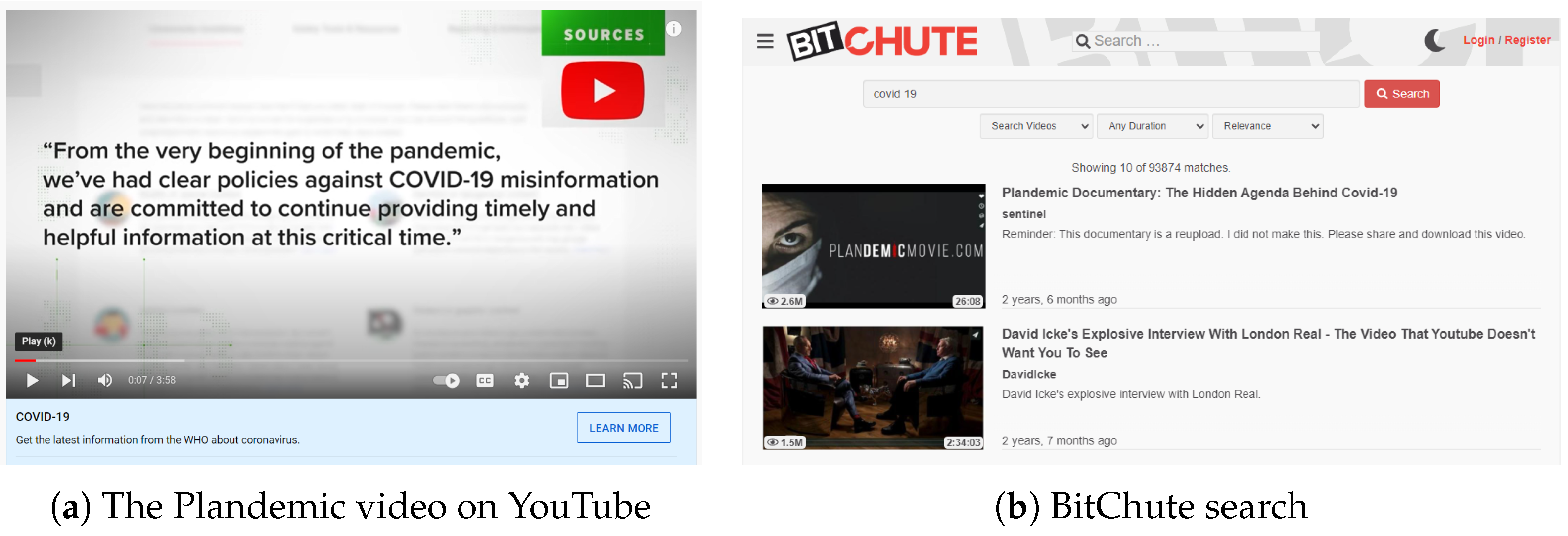

2.2. Misinformation on Mainstream Social Media and Video Platforms

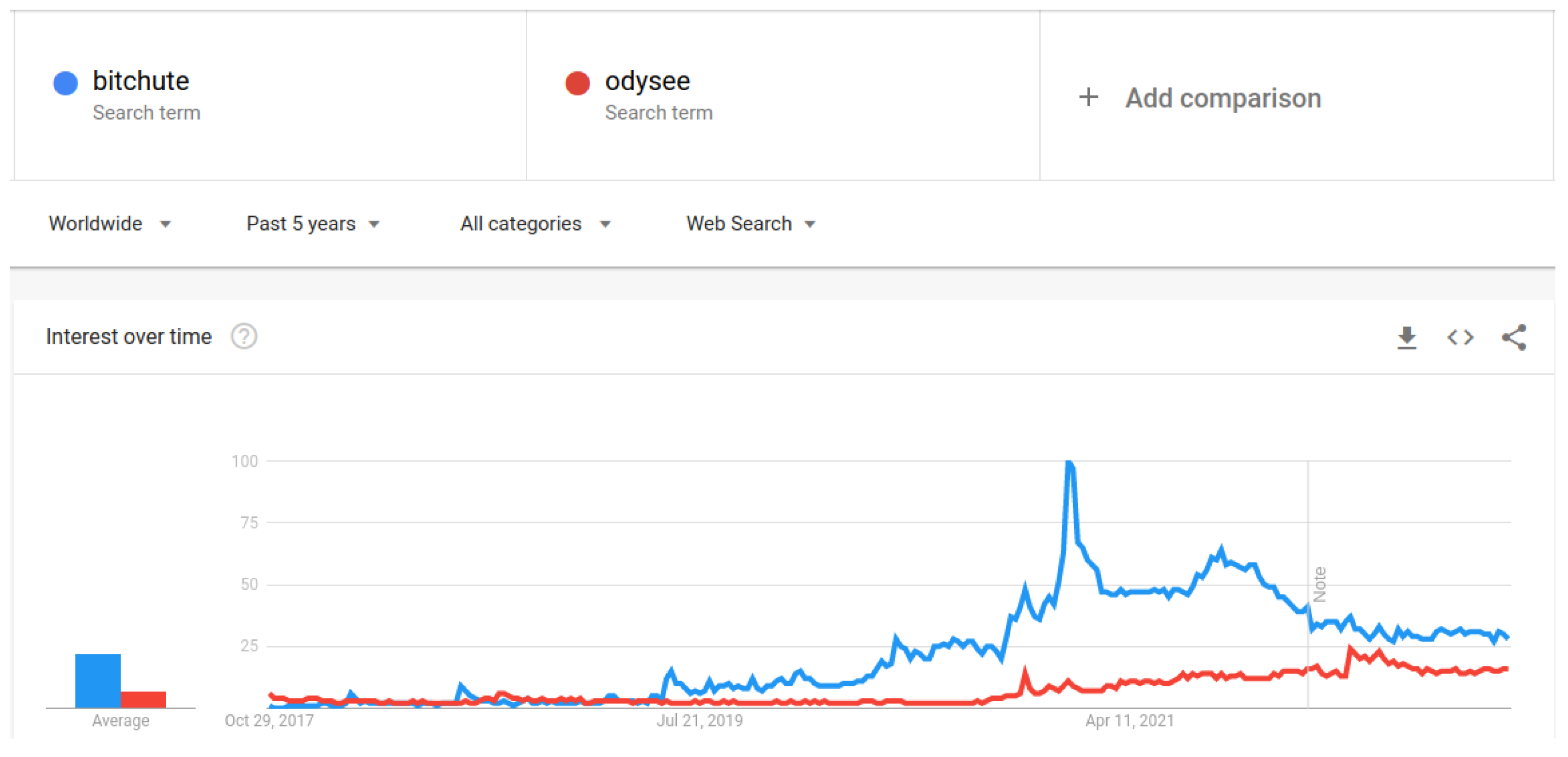

2.3. Misinformation on Low Content Moderation Platforms

3. Methodology

3.1. Oxford COVID-Related Misinformation Dataset

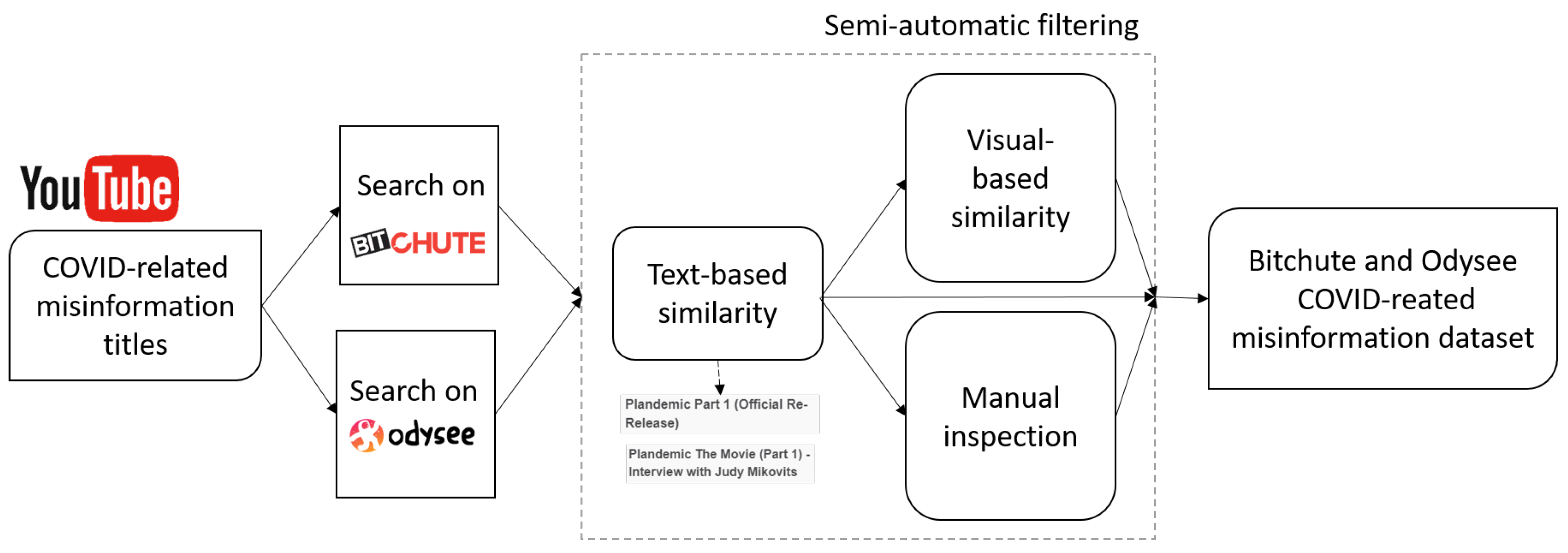

3.2. Video Retrieval in BitChute and Odysee

3.2.1. Text Similarity

3.2.2. Visual Similarity

3.2.3. Manual Similarity

BitChute and Odysee Dataset

- We used a semi-automated process to recover these videos, so some videos that migrated may not have been recovered by our process;

- In the first step, we relied solely on video titles to search for and compare videos; a video posted on the target platforms under a different title could therefore not be recovered.
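The title-based first step described in the limitations above can be illustrated with a minimal sketch. It is not the authors' implementation: this excerpt does not state the exact text similarity measure, so Python's standard `difflib.SequenceMatcher` is used as a stand-in, and the function names (`normalize_title`, `title_similarity`, `candidate_matches`) and the 0.8 threshold are hypothetical choices made for illustration. The example titles are the ones listed in the article's table of YouTube video title variants.

```python
import re
import unicodedata
from difflib import SequenceMatcher


def normalize_title(title: str) -> str:
    """Lowercase, strip accents and punctuation, and collapse whitespace."""
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^a-z0-9\s]", " ", text.lower())
    return " ".join(text.split())


def title_similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] between two normalized titles."""
    return SequenceMatcher(None, normalize_title(a), normalize_title(b)).ratio()


def candidate_matches(query, candidates, threshold=0.8):
    """Return (score, title) pairs at or above the threshold, best first.

    The threshold is an assumed value, not one reported in the paper.
    """
    scored = [(title_similarity(query, c), c) for c in candidates]
    return sorted([(s, c) for s, c in scored if s >= threshold], reverse=True)


if __name__ == "__main__":
    youtube_title = (
        "EXCLUSIVE Dr Rashid Buttar BLASTS Gates, Fauci, EXPOSES Fake "
        "Pandemic Numbers As Economy Collapses"
    )
    platform_titles = [
        "Dr. Rashid Buttar BLASTS Gates, Fauci, EXPOSES Fake Pandemic "
        "Numbers As Economy Collapses",
        "EXCLUSIVE# Dr Rashid Buttar BLASTS Gates, Fauci, EXPOSES Fake "
        "Pandemic Numbers As Economy Collapses",
    ]
    for score, title in candidate_matches(youtube_title, platform_titles):
        print(f"{score:.3f}  {title}")
```

A sketch like this tolerates small edits such as a dropped prefix or added punctuation, but, as the second limitation notes, it cannot recover a video that was re-uploaded under an entirely different title.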

4. BitChute Analysis

4.1. Temporal Distribution

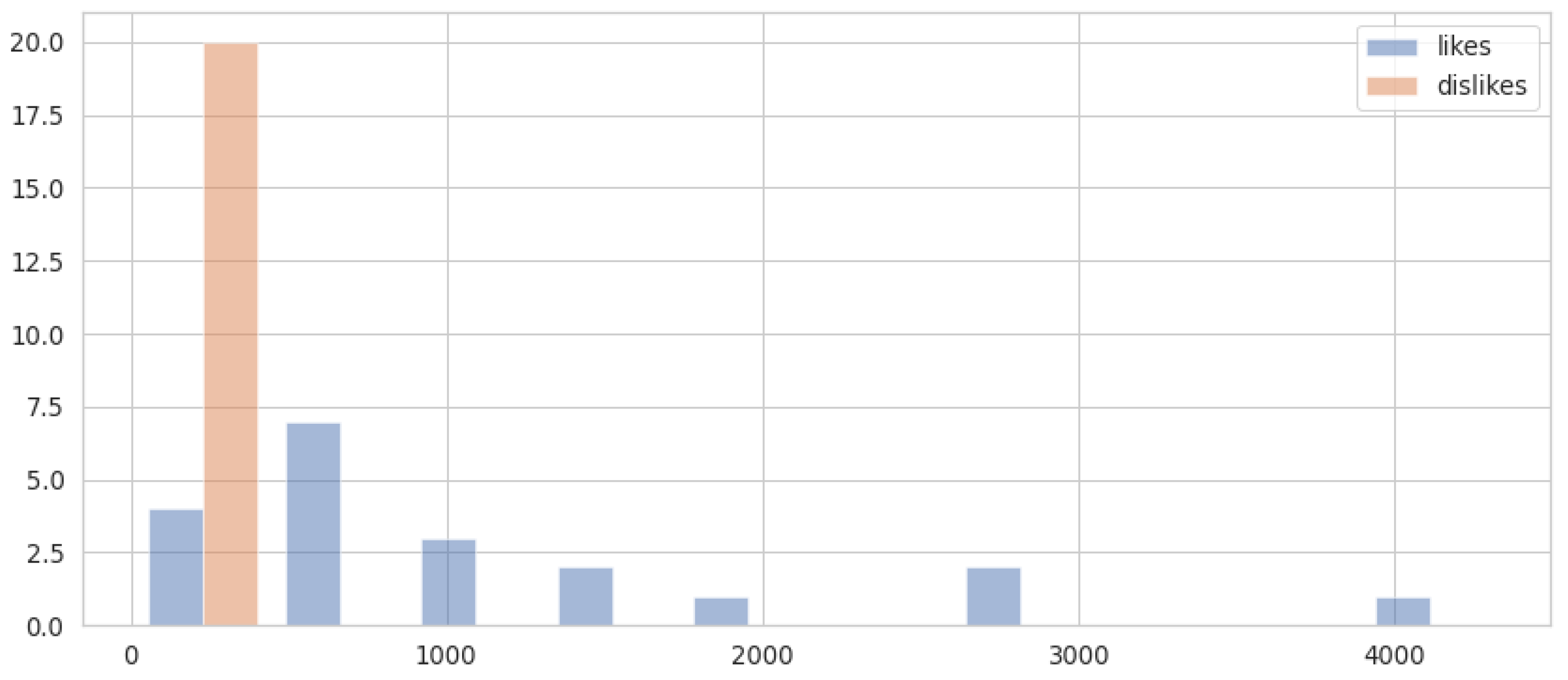

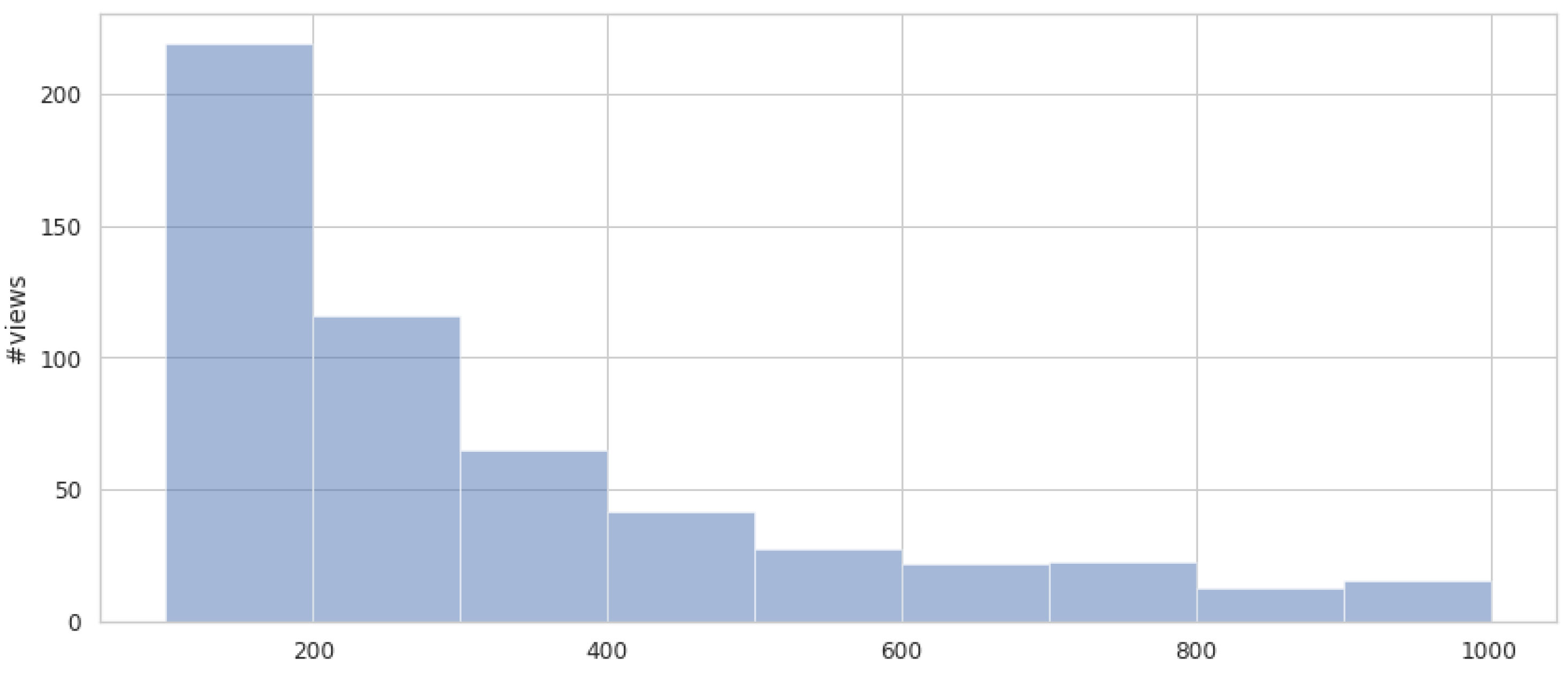

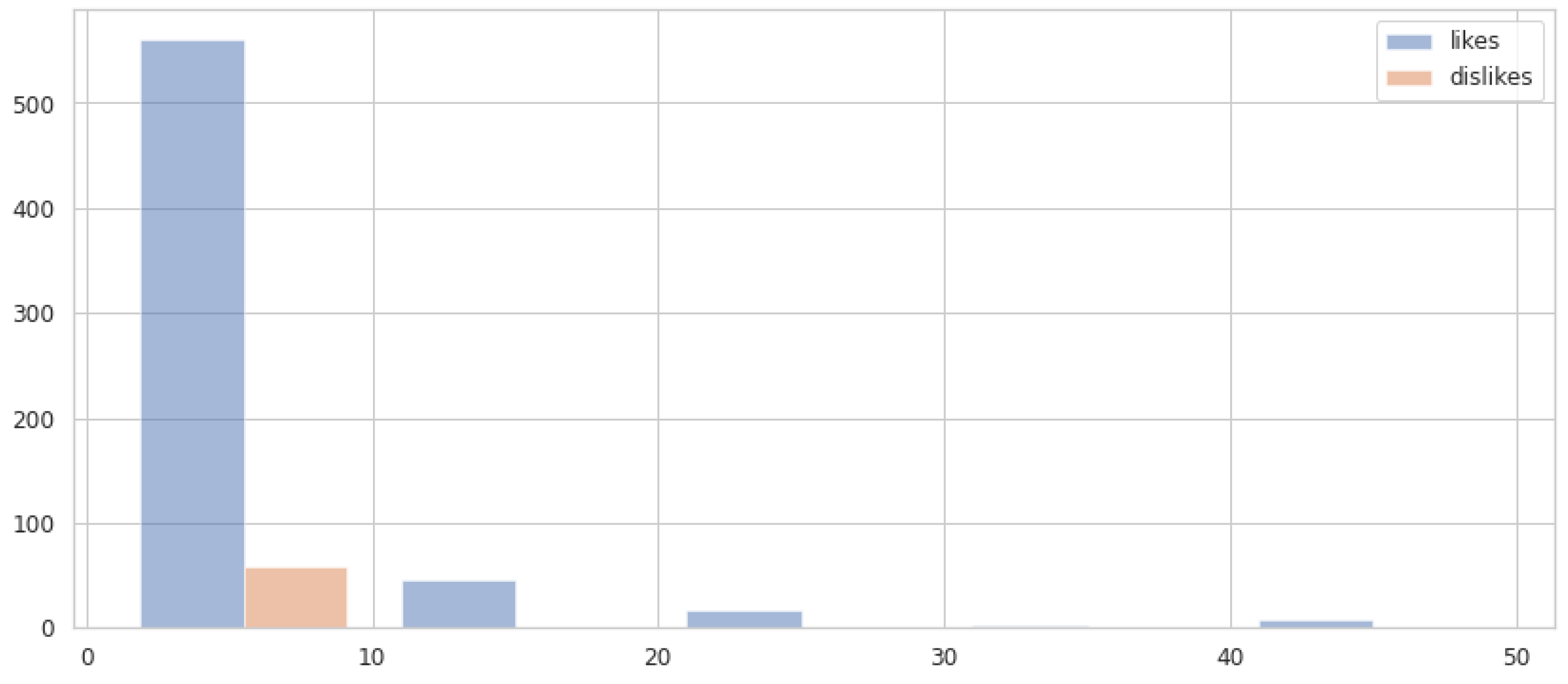

4.2. Video Interactions

4.3. Titles and Descriptions

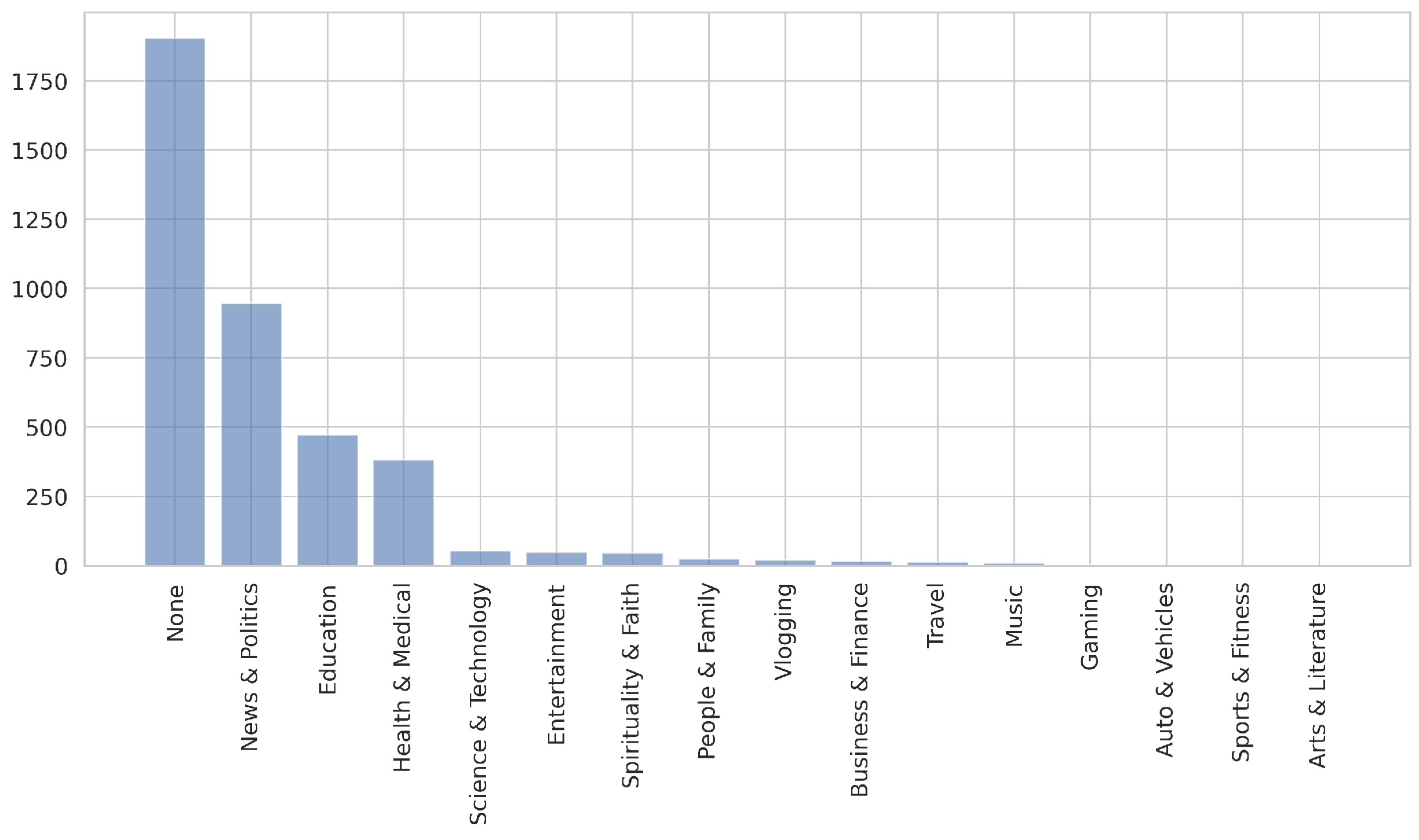

4.4. Categories

4.5. BitChute and IDs

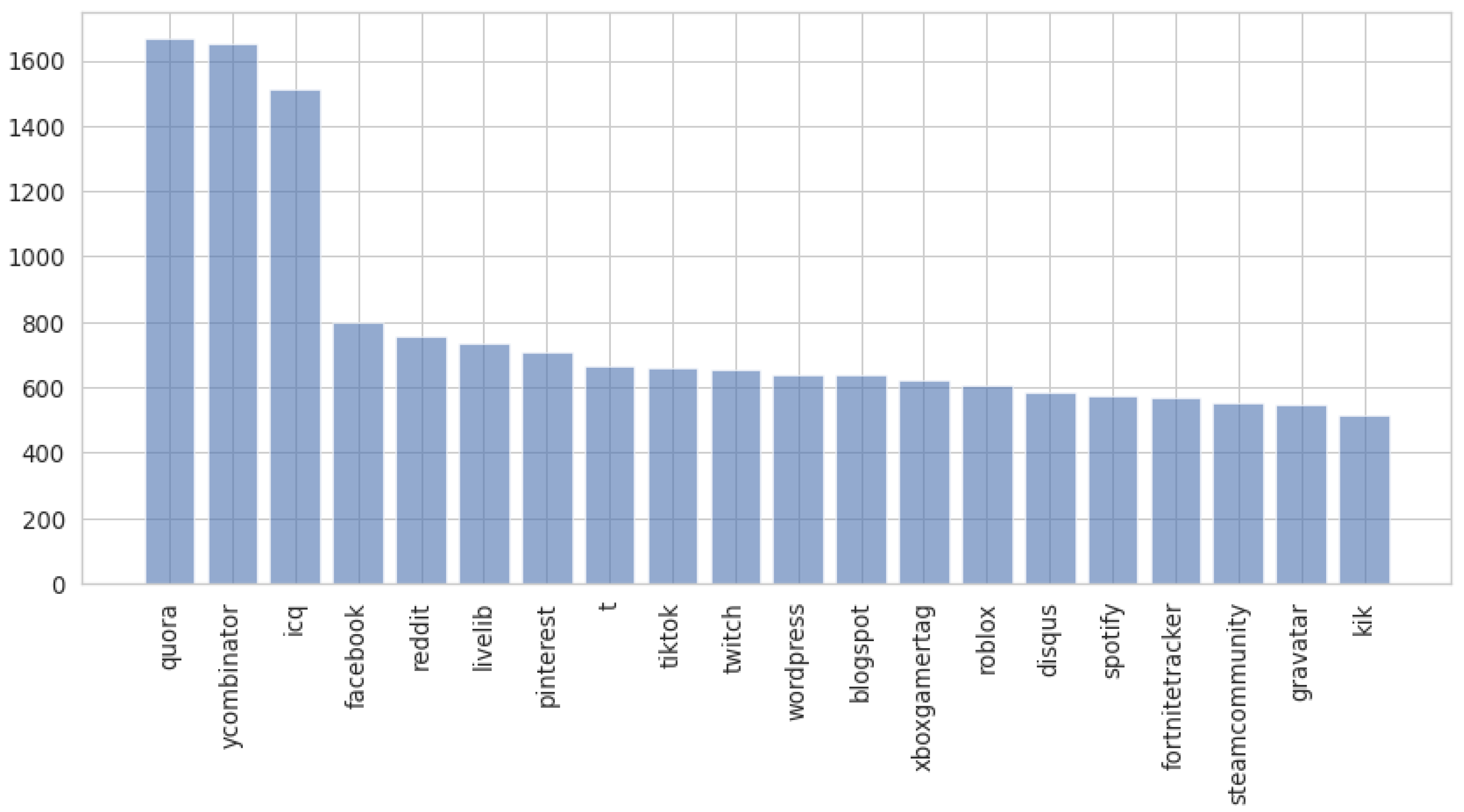

4.6. Cross-Platform Diffusion

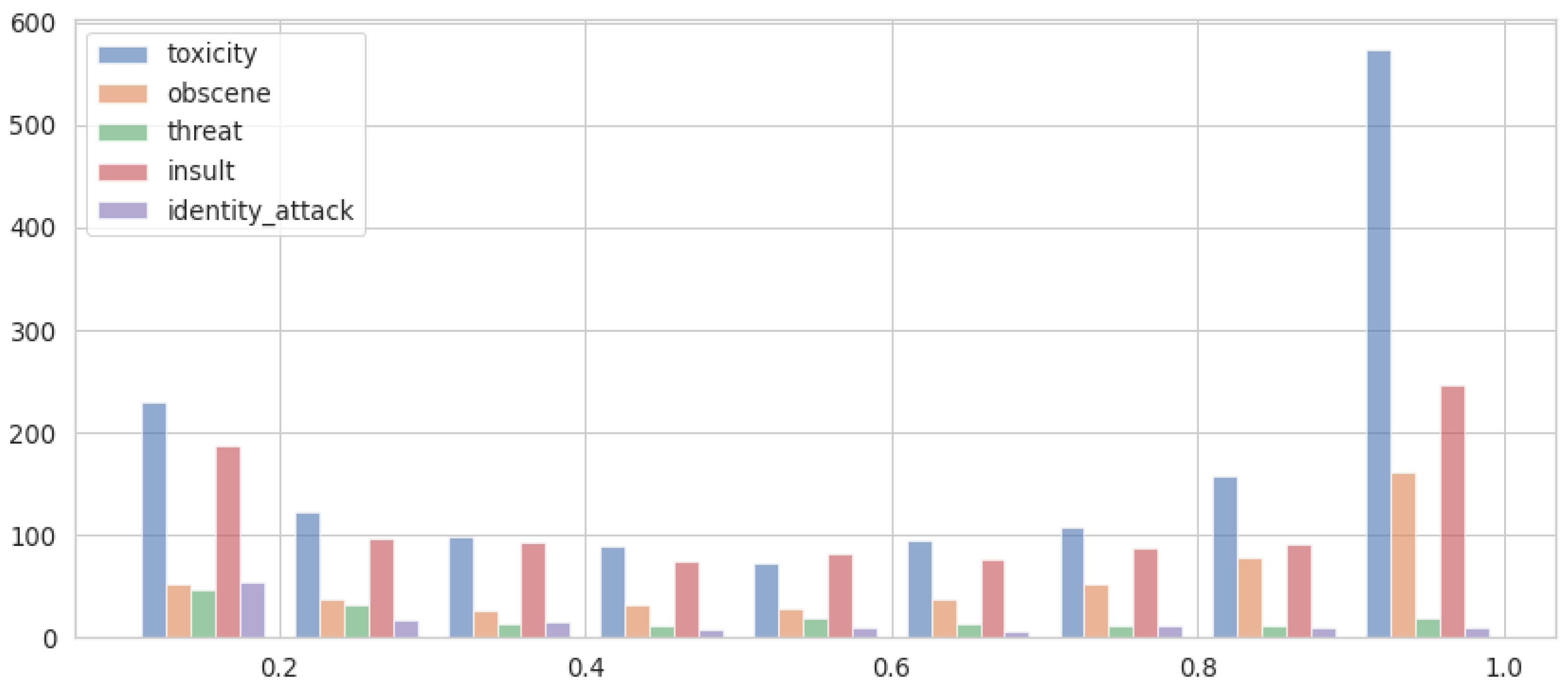

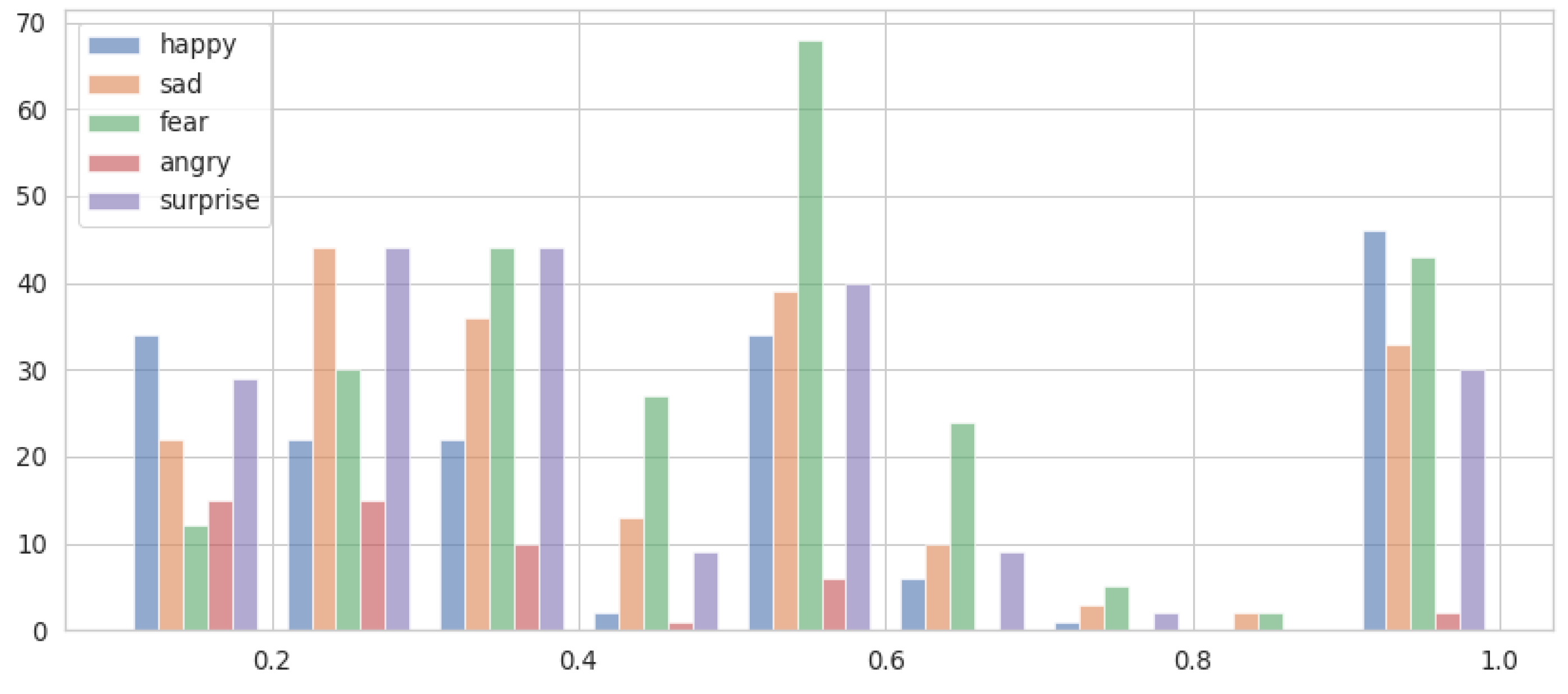

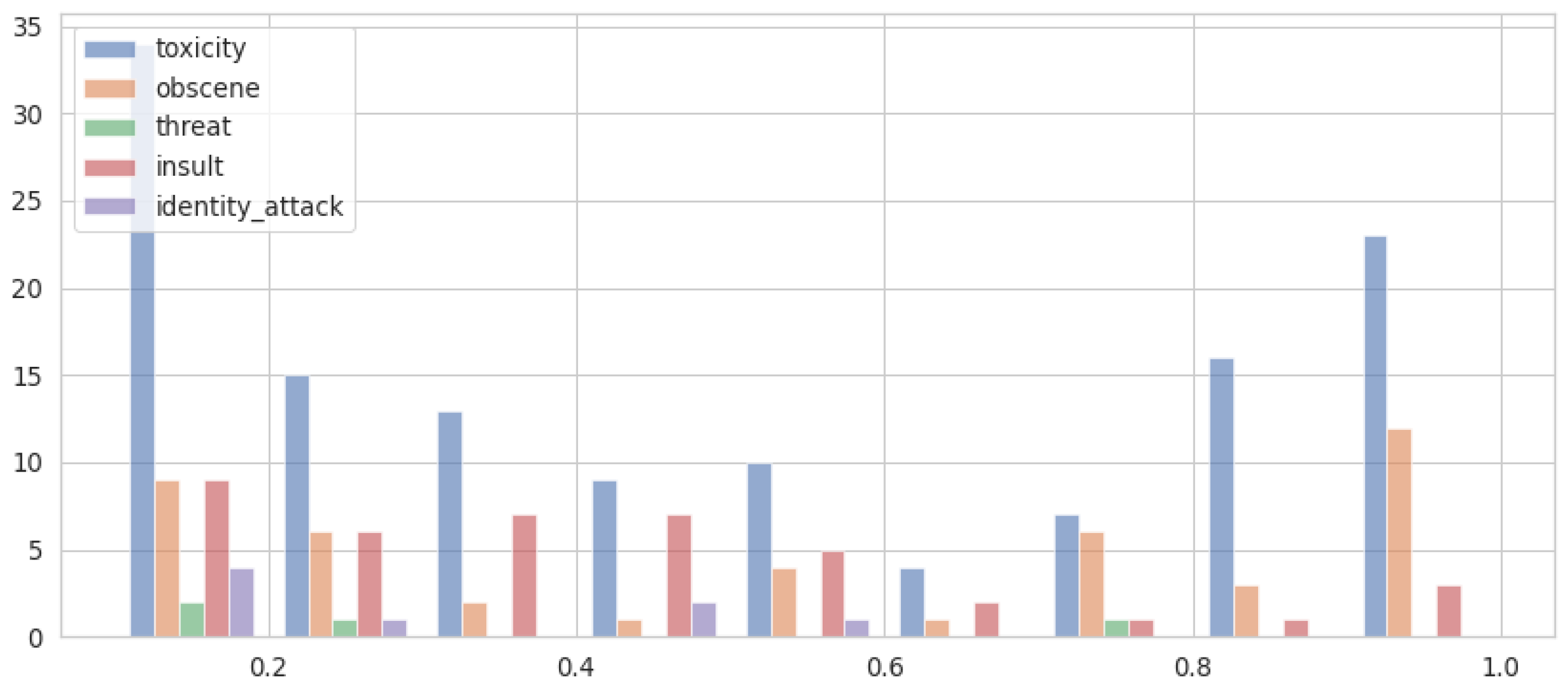

4.7. Comments

4.8. Channels

4.9. Comparison with MeLa Dataset

5. Odysee Analysis

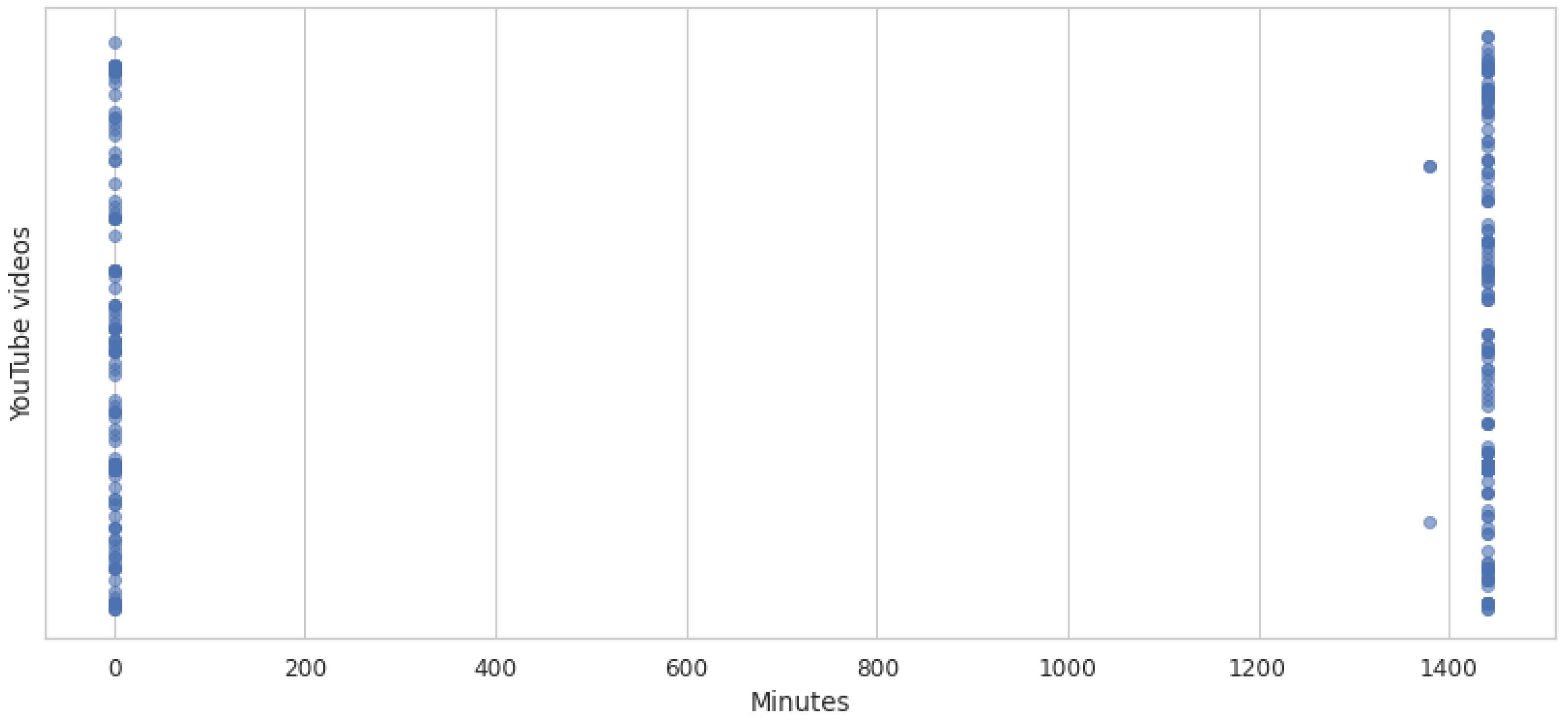

5.1. Temporal Distribution

5.2. Video Interactions

5.3. Video Titles and Descriptions

5.4. Cross-Platform Diffusion

5.5. Comments

5.6. Channels

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| YT Video Title |
|---|
| EXCLUSIVE Dr Rashid Buttar BLASTS Gates, Fauci, EXPOSES Fake Pandemic Numbers As Economy Collapses |
| Dr. Rashid Buttar BLASTS Gates, Fauci, EXPOSES Fake Pandemic Numbers As Economy Collapses |
| EXCLUSIVE# Dr Rashid Buttar BLASTS Gates, Fauci, EXPOSES Fake Pandemic Numbers As Economy Collapses |

| Language | #Videos | Percentage |
|---|---|---|
| EN | 3162 | 43.8% |
| ES | 840 | 11.6% |
| DE | 709 | 9.8% |
| PT | 480 | 6.5% |
| FR | 311 | 4.3% |

| Platform | Textual #dupl | Textual #yt | Visual #dupl | Visual #yt | Manual #dupl | Manual #yt | Total/Unique #dupl | Total #yt |
|---|---|---|---|---|---|---|---|---|
| BitChute | 3860 | 893 | 4256 | 372 | 1293 | 182 | 9409/4096 | 1048 |
| Odysee | 2088 | 487 | 487 | 119 | 968 | 222 | 3543/1810 | 591 |

| Positive | Negative |
|---|---|
| Cheers for uploading this critically important information | What’s it like being an idiot? Do people laugh at you or do they just walk away? |
| Great video i wish many more see this. | You sound like a hateful Nazi demon Troll! |
| I love that man | Gary…Shut up!!! |
| Excellent documentary. | why is it that evil people like Faucci get away with murder? |
| Glad I found this, and you, here on BitChute | Age of deceit! |

| Channels | Subscribers | #Videos |
|---|---|---|
| banned-dot-video | 155,242 | 18 |
| styxhexenhammer666 | 143,602 | 1 |
| sgt-report | 125,163 | 2 |
| fallcabal | 114,087 | 7 |
| amazingpolly | 113,036 | 1 |
| computingforever | 112,613 | 1 |
| davidicke | 103,933 | 7 |
| thecrowhouse | 89,838 | 13 |
| timpool | 84,212 | 1 |
| free-your-mind | 74,222 | 11 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Papadopoulou, O.; Kartsounidou, E.; Papadopoulos, S. COVID-Related Misinformation Migration to BitChute and Odysee. Future Internet 2022, 14, 350. https://doi.org/10.3390/fi14120350
