YOLO-DFAN: Effective High-Altitude Safety Belt Detection Network

Abstract

1. Introduction

2. Related Work

2.1. Object Detection

2.2. Attention Mechanism

3. Proposed Method

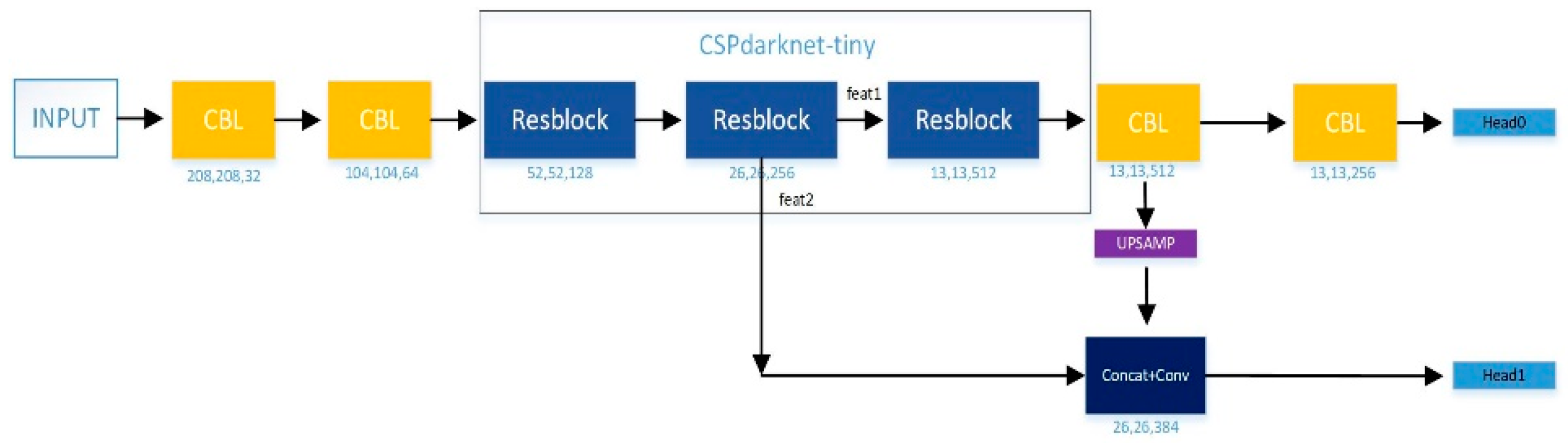

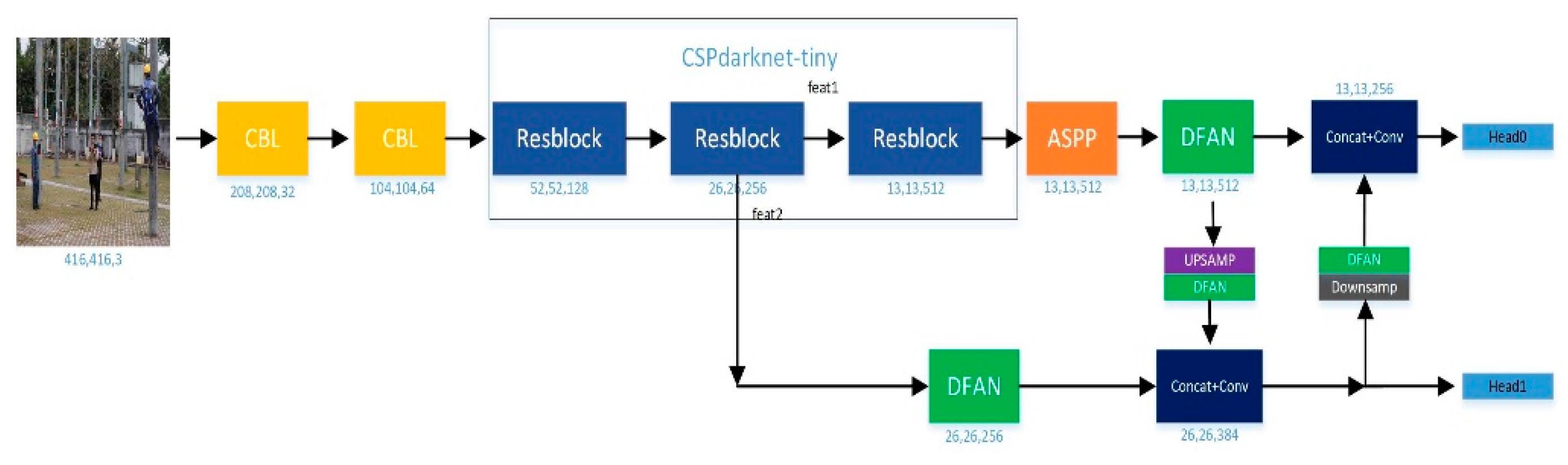

3.1. YOLO-DFAN

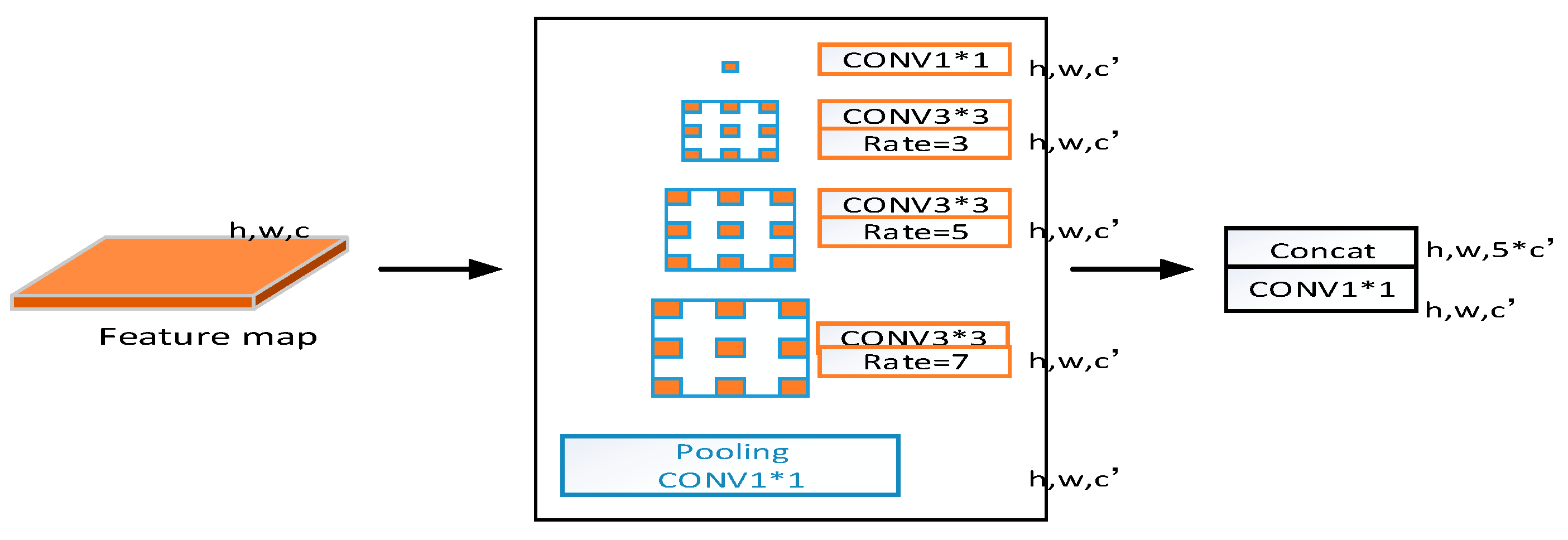

3.2. Atrous Spatial Pyramid Pooling Network

3.3. Dependency Fusing Attention Network

3.4. PANet with DFAN

4. Results

4.1. Environmental and Experimental Settings

4.2. Dataset

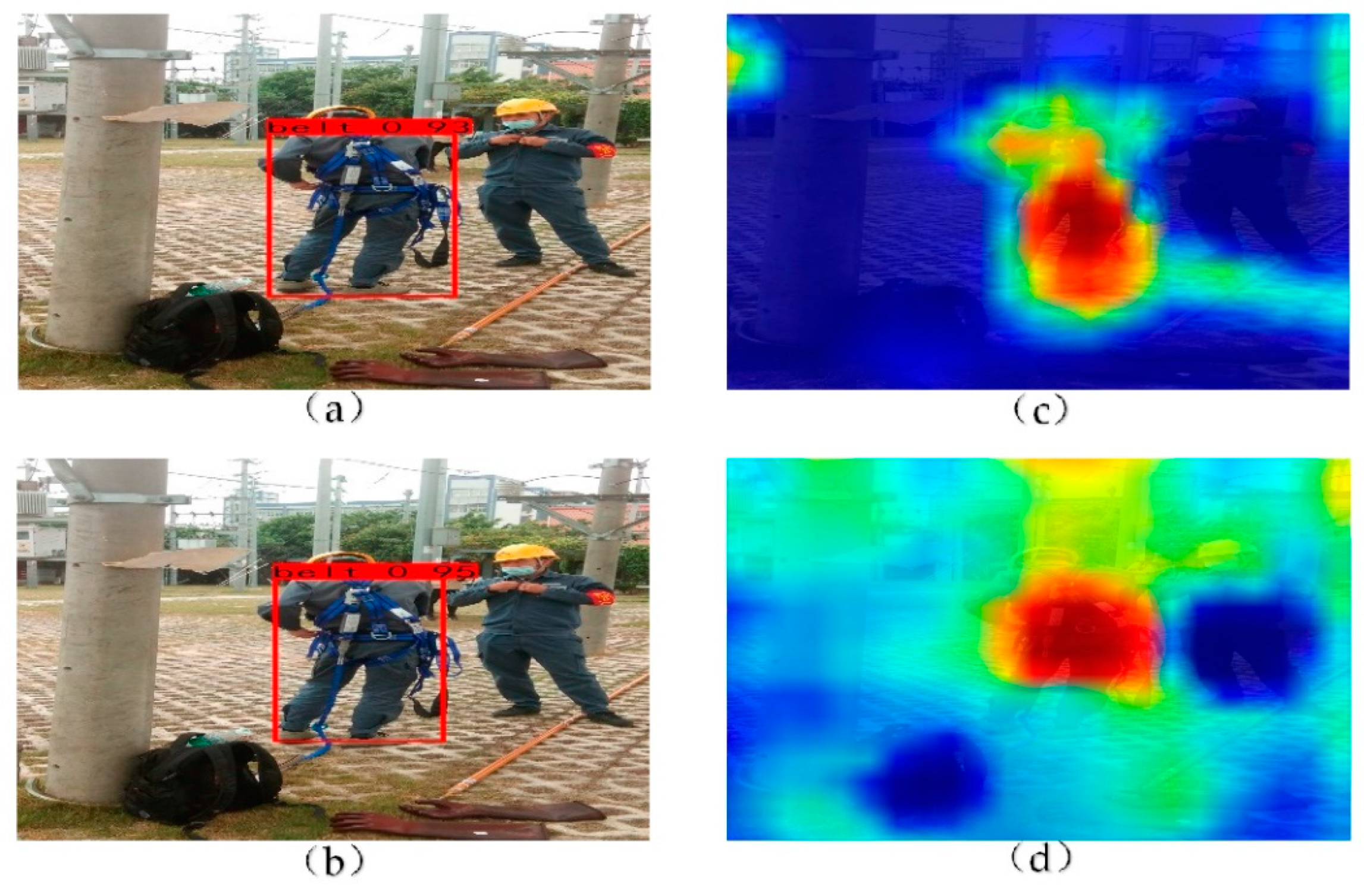

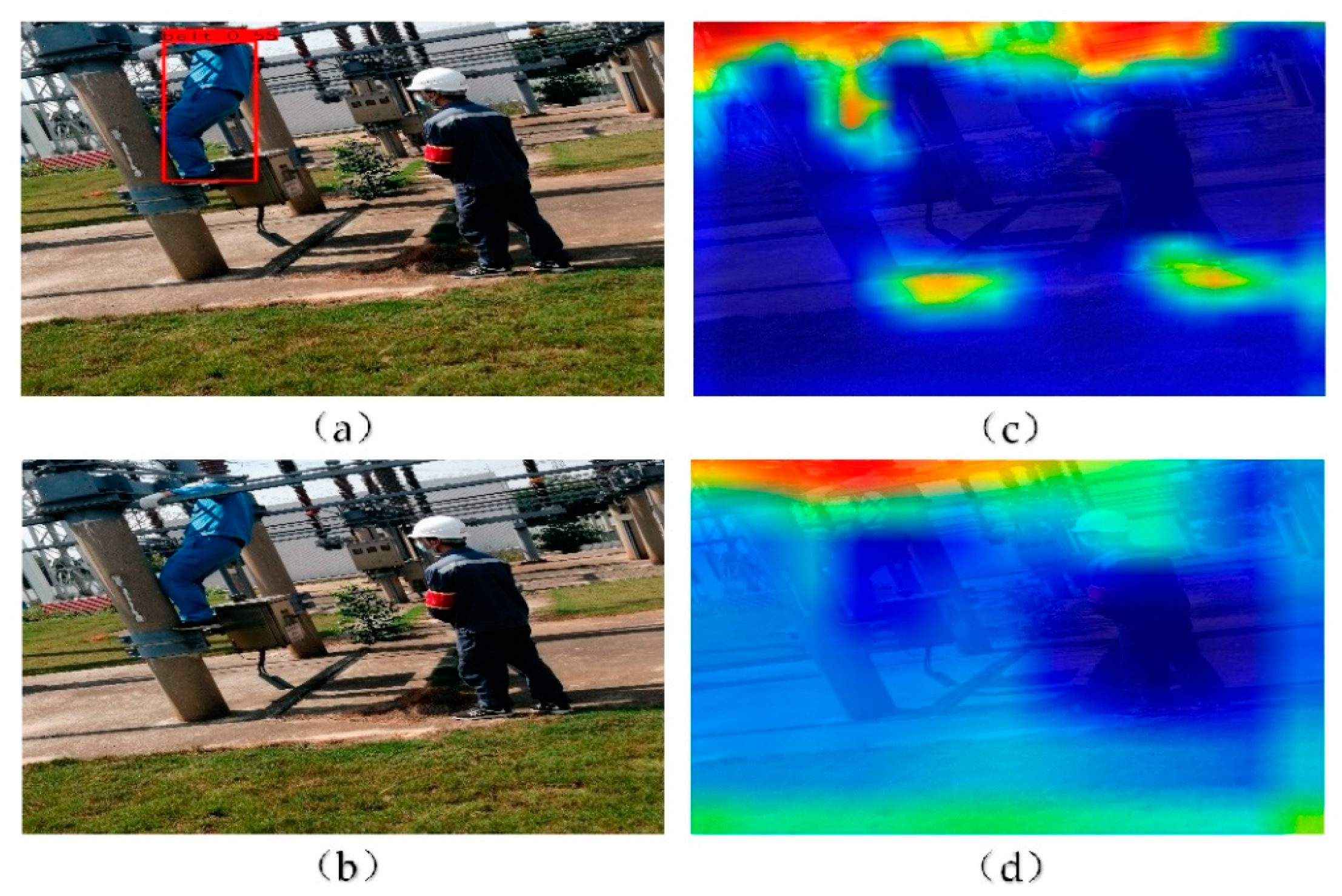

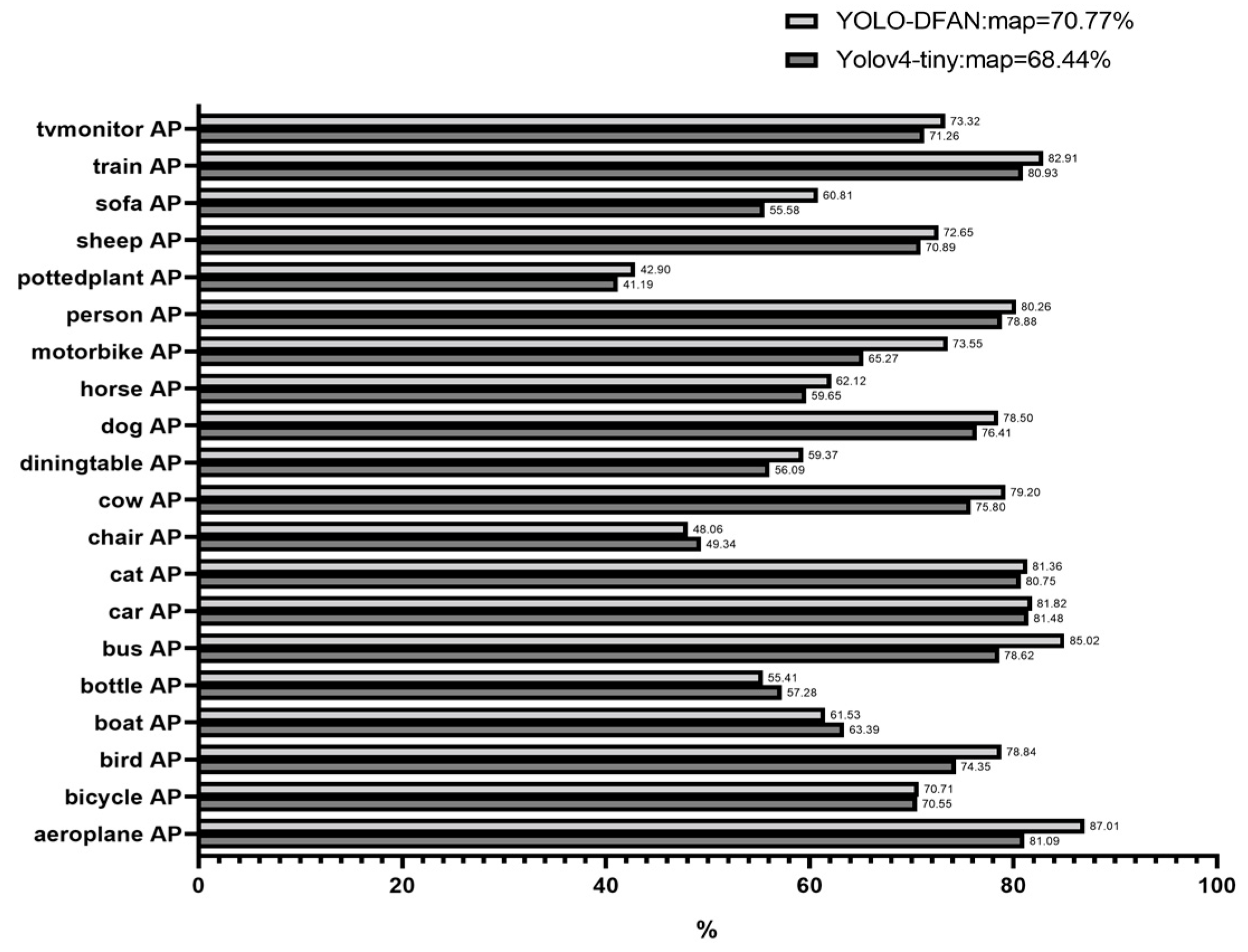

4.3. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Detection Network | mAP (%) | F1 | Recall (%) | Precision (%) | Rate (s/picture) |
|---|---|---|---|---|---|
| YOLOv4-tiny | 74.03 | 0.76 | 67.33 | 84.44 | 0.0039 |
| EfficientDet | 72.36 | 0.77 | 64.29 | 85.36 | 0.0031 |
| YOLOv4 | 80.28 | 0.82 | 72.28 | 86.05 | 0.0365 |
| YOLO-DFAN | 79.16 | 0.80 | 71.29 | 84.56 | 0.0055 |
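
The Rate column reports seconds per picture, which is harder to compare at a glance than throughput. The sketch below only inverts those latencies and sets YOLO-DFAN against YOLOv4. All numbers are copied from the table; the helper names `pictures_per_second` and `compare` are hypothetical and chosen here for illustration.

```python
# Values copied from the comparison table: (mAP in %, seconds per picture).
RESULTS = {
    "YOLOv4-tiny": (74.03, 0.0039),
    "EfficientDet": (72.36, 0.0031),
    "YOLOv4": (80.28, 0.0365),
    "YOLO-DFAN": (79.16, 0.0055),
}


def pictures_per_second(seconds_per_picture: float) -> float:
    """Invert a per-picture latency into throughput (hypothetical helper)."""
    return 1.0 / seconds_per_picture


def compare(name: str, reference: str) -> tuple[float, float]:
    """Return (mAP difference in points, speedup factor) of `name` over `reference`."""
    map_a, time_a = RESULTS[name]
    map_b, time_b = RESULTS[reference]
    return map_a - map_b, time_b / time_a


if __name__ == "__main__":
    for network, (map_pct, seconds) in RESULTS.items():
        rate = pictures_per_second(seconds)
        print(f"{network:12s} mAP={map_pct:.2f}%  {rate:6.1f} pictures/s")
    map_gap, speedup = compare("YOLO-DFAN", "YOLOv4")
    print(f"YOLO-DFAN vs YOLOv4: {map_gap:+.2f} mAP points, {speedup:.1f}x faster")
```

Read this way, the table shows YOLO-DFAN giving up about 1.1 mAP points to YOLOv4 while processing pictures roughly 6.6 times faster.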

| Algorithm | mAP (%) |
|---|---|
| YOLOv4-tiny | 74.03 |
| +SE | 75.28 |
| +CBAM | 75.96 |
| +ECA | 74.98 |
| +CA | 76.24 |
| +DFAN | 77.00 |
| +ASPP | 76.23 |
| +PANET | 75.67 |
| +ASPP+DFAN+PANET | 79.16 |
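
The ablation figures are easier to interpret as gains over the baseline. The following is a minimal sketch, assuming each "+" row denotes YOLOv4-tiny with only the listed module or modules added (the table layout suggests this, but it is an assumption). The helpers `gain` and `additive_estimate` are hypothetical names, and the mAP values are copied from the table.

```python
BASELINE_MAP = 74.03  # YOLOv4-tiny row of the ablation table

# mAP (%) of each variant, copied from the ablation table.
ABLATION_MAP = {
    "SE": 75.28,
    "CBAM": 75.96,
    "ECA": 74.98,
    "CA": 76.24,
    "DFAN": 77.00,
    "ASPP": 76.23,
    "PANET": 75.67,
    "ASPP+DFAN+PANET": 79.16,
}


def gain(variant: str) -> float:
    """mAP gain in percentage points of one variant over the baseline."""
    return ABLATION_MAP[variant] - BASELINE_MAP


def additive_estimate(modules: list[str]) -> float:
    """Sum of single-module gains, i.e. what a purely additive effect would give."""
    return sum(gain(module) for module in modules)


if __name__ == "__main__":
    for variant in ABLATION_MAP:
        print(f"+{variant:16s} {gain(variant):+.2f} mAP points")
    naive = additive_estimate(["ASPP", "DFAN", "PANET"])
    actual = gain("ASPP+DFAN+PANET")
    print(f"sum of single gains: {naive:.2f}, measured combined gain: {actual:.2f}")
```

Among the attention modules listed, DFAN yields the largest single gain (2.97 points). The combined model gains 5.13 points, less than the 6.81 points obtained by summing the three single-module gains, so the contributions overlap rather than add up exactly.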

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yan, W.; Wang, X.; Tan, S. YOLO-DFAN: Effective High-Altitude Safety Belt Detection Network. Future Internet 2022, 14, 349. https://doi.org/10.3390/fi14120349
