Exploring the Design of a Mixed-Reality 3D Minimap to Enhance Pedestrian Satisfaction in Urban Exploratory Navigation

Abstract

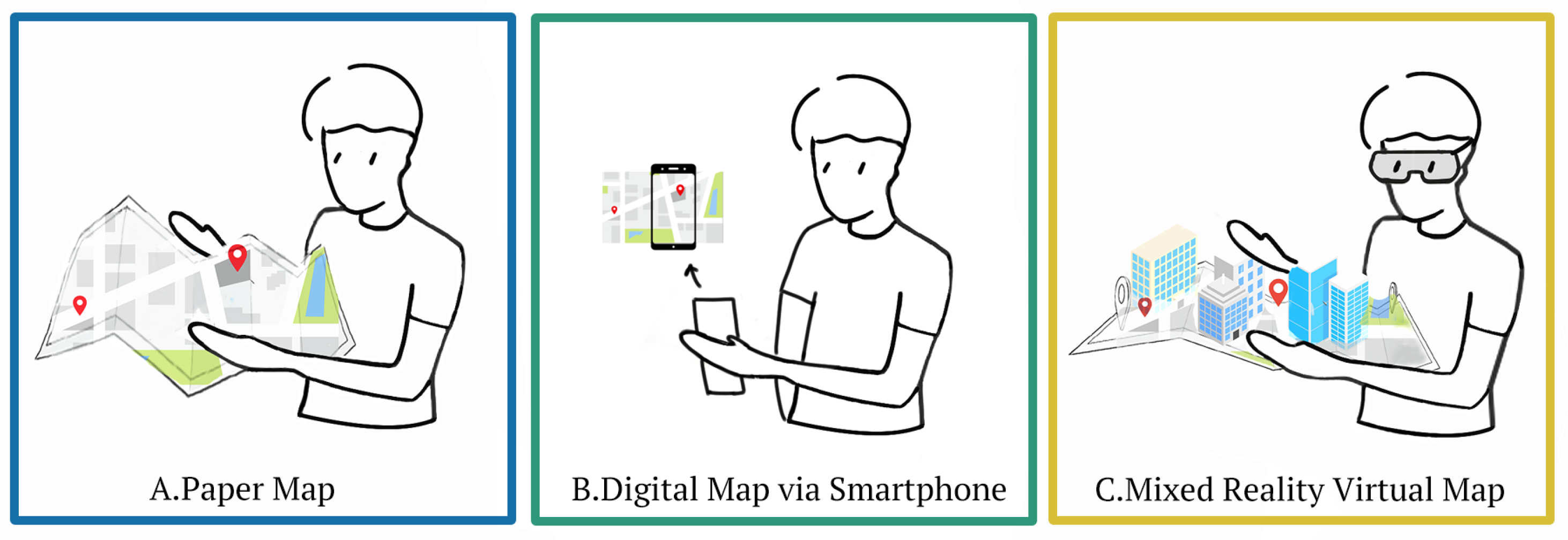

1. Introduction

- RQ1: Which map mode results in better performance in exploratory navigation?

- RQ2: Which map mode results in a lower perceived workload in exploratory navigation?

- RQ3: Which map mode results in a better user experience in exploratory navigation?

2. Related Work

2.1. Mental Satisfaction in Pedestrian Navigation

2.2. Novel Interface to Support Exploratory Navigation

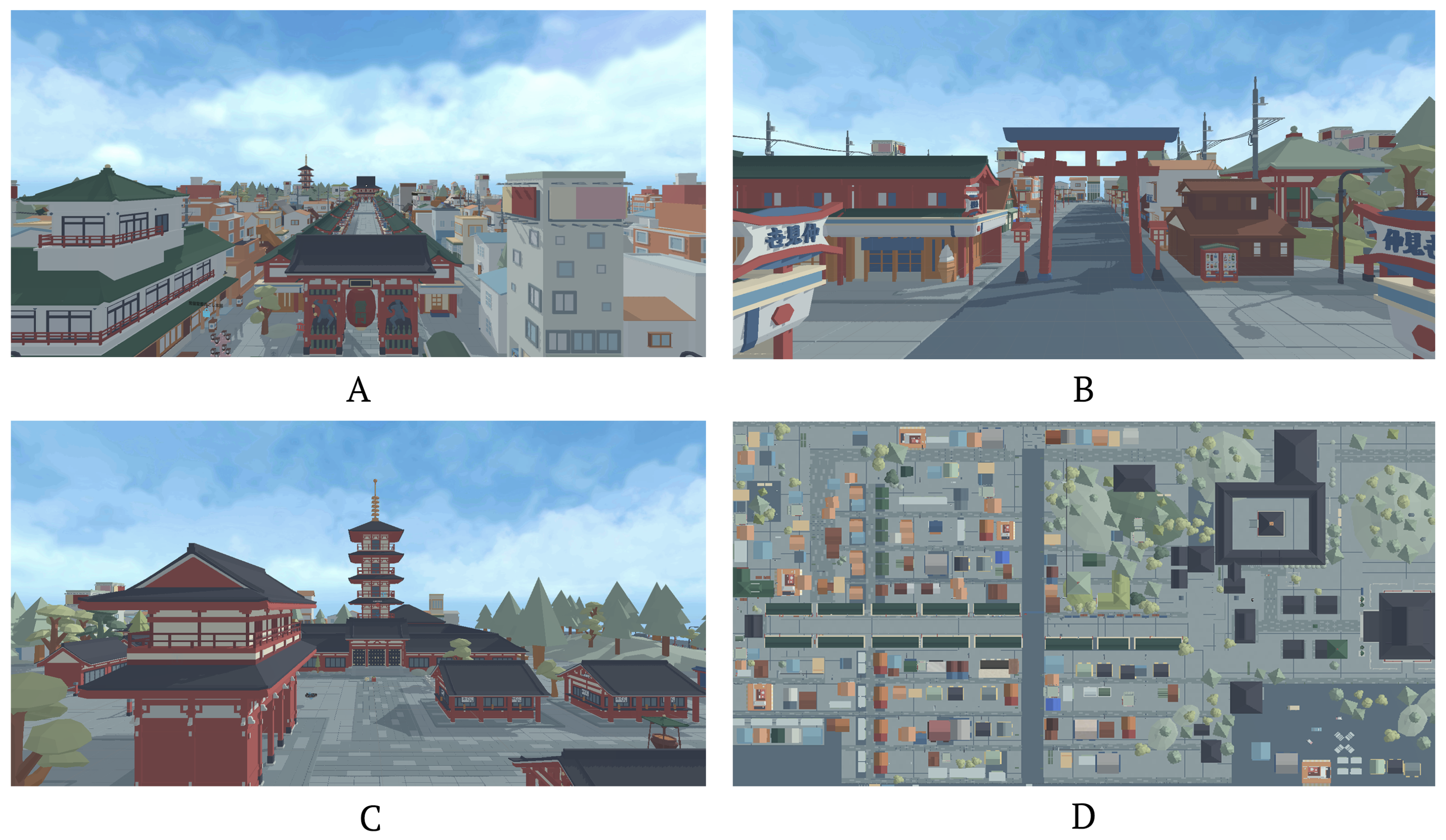

3. Mixed-Reality 3D Minimap

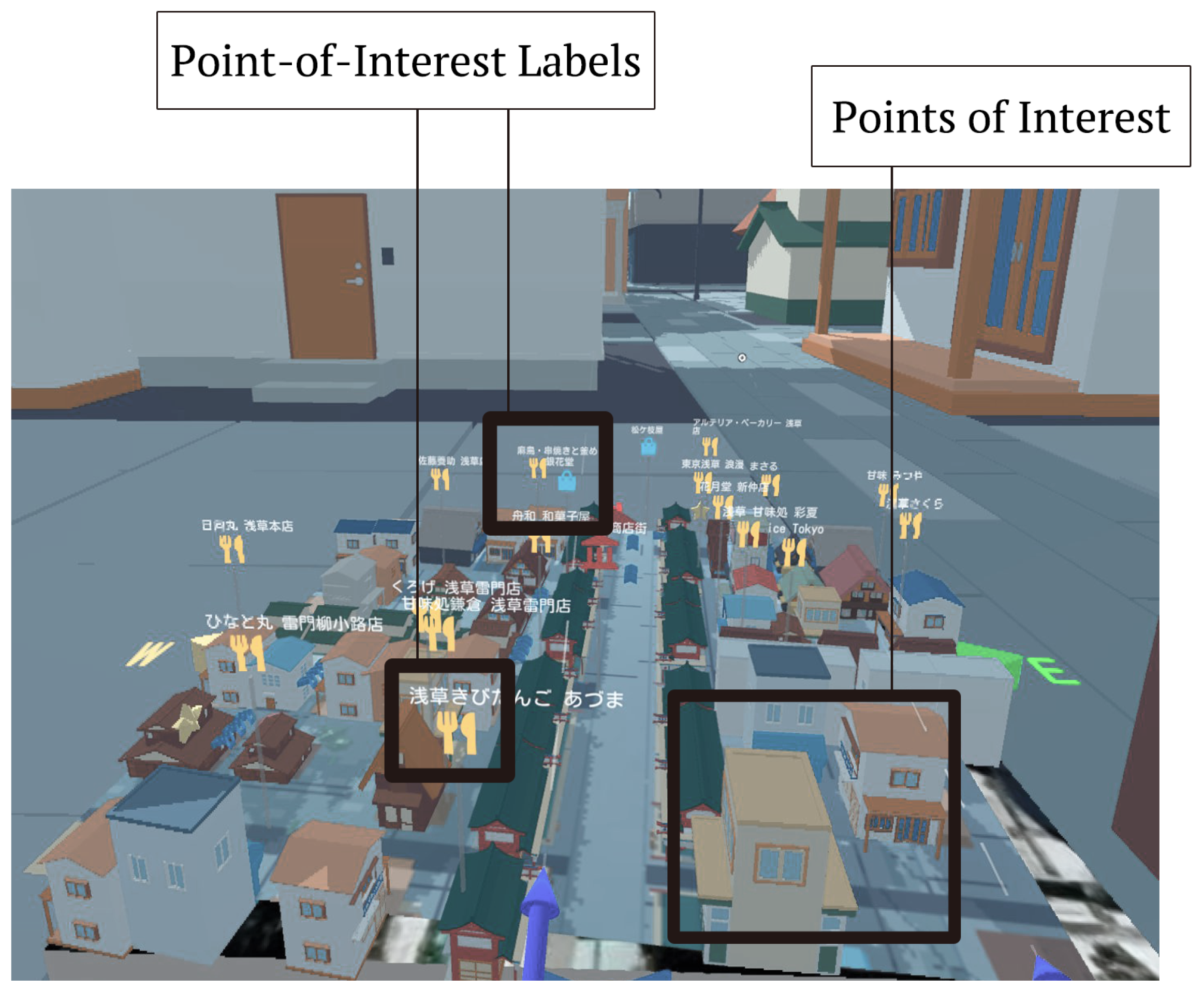

3.1. Interface Component

3.1.1. Point of Interest and Landmark

3.1.2. Navigation

3.1.3. Layer of Control

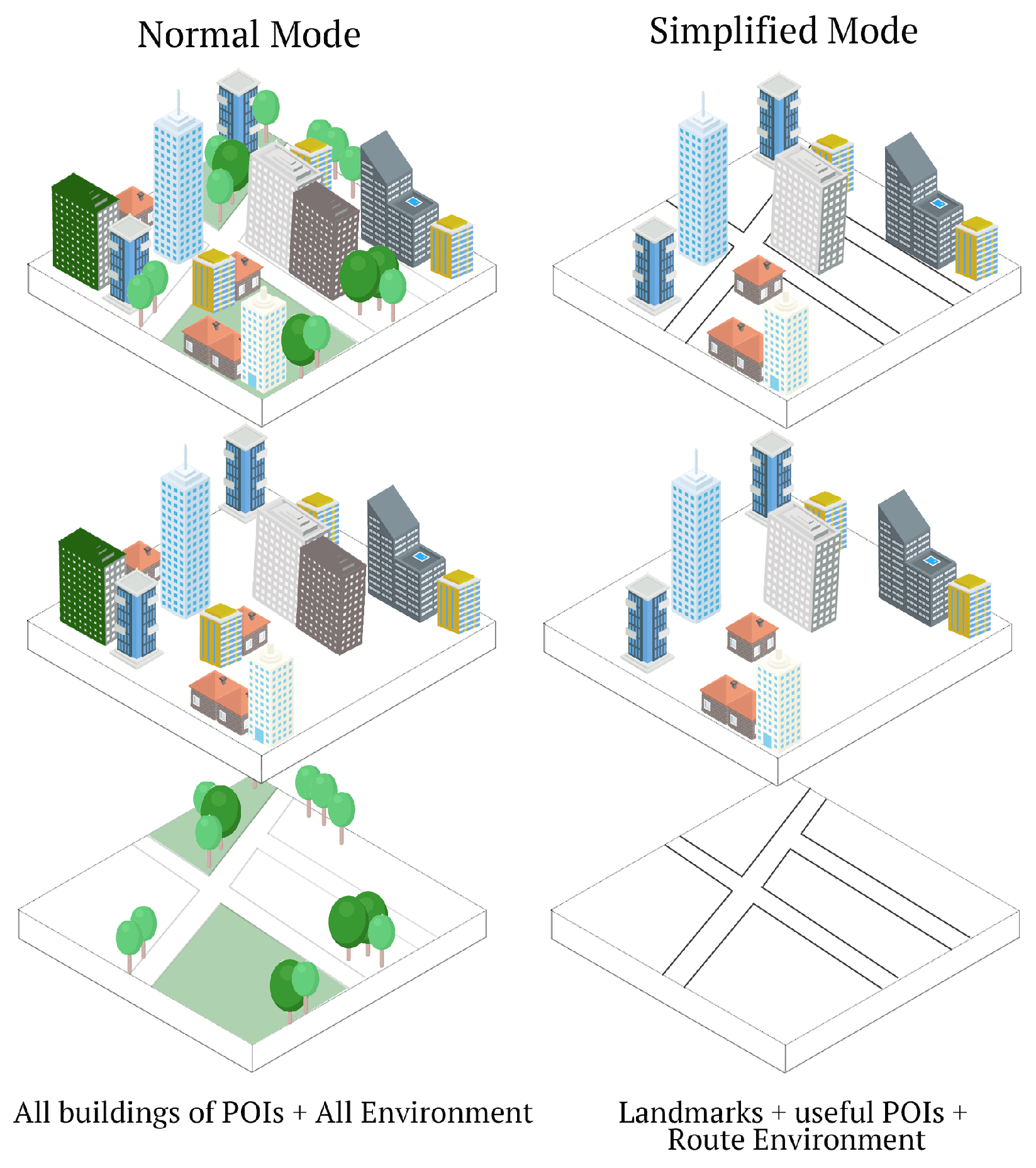

3.2. Different Levels of Detail of Map Interface

3.2.1. Preliminary Study

3.2.2. Two Different Levels of Detail Map Design

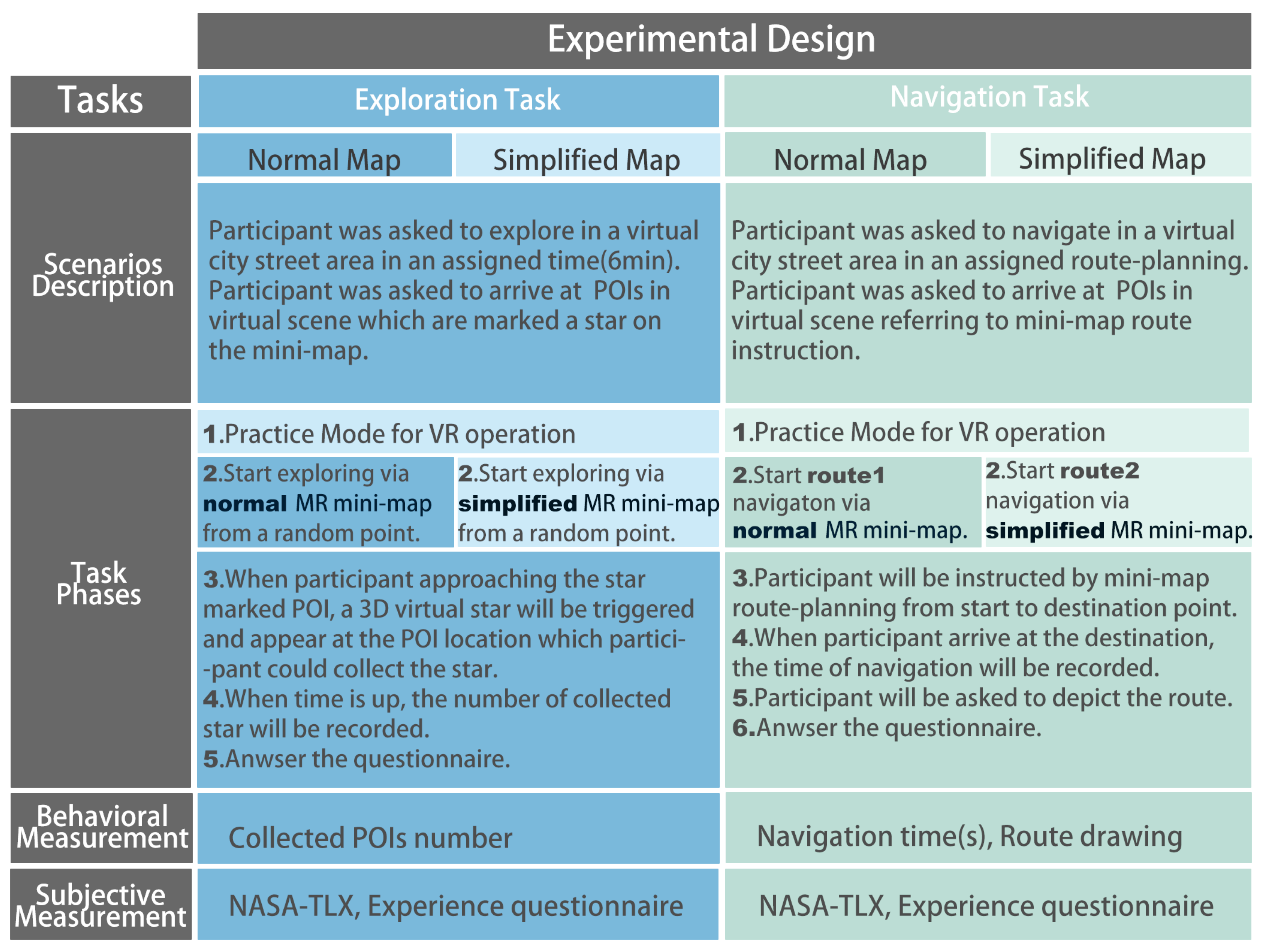

4. Experiment

4.1. Experimental Design

4.2. Participants

4.3. Experimental Procedure

4.4. Results

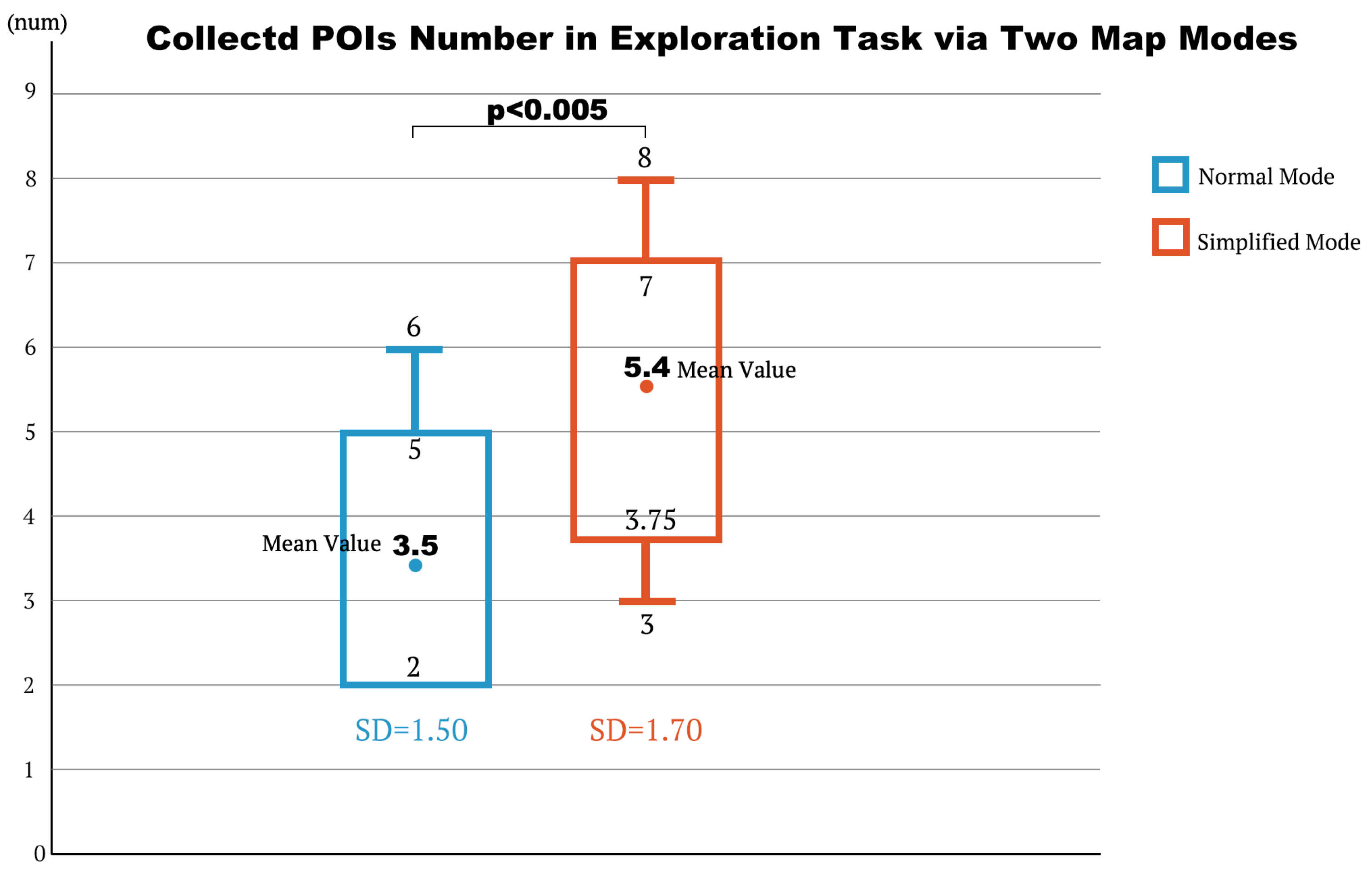

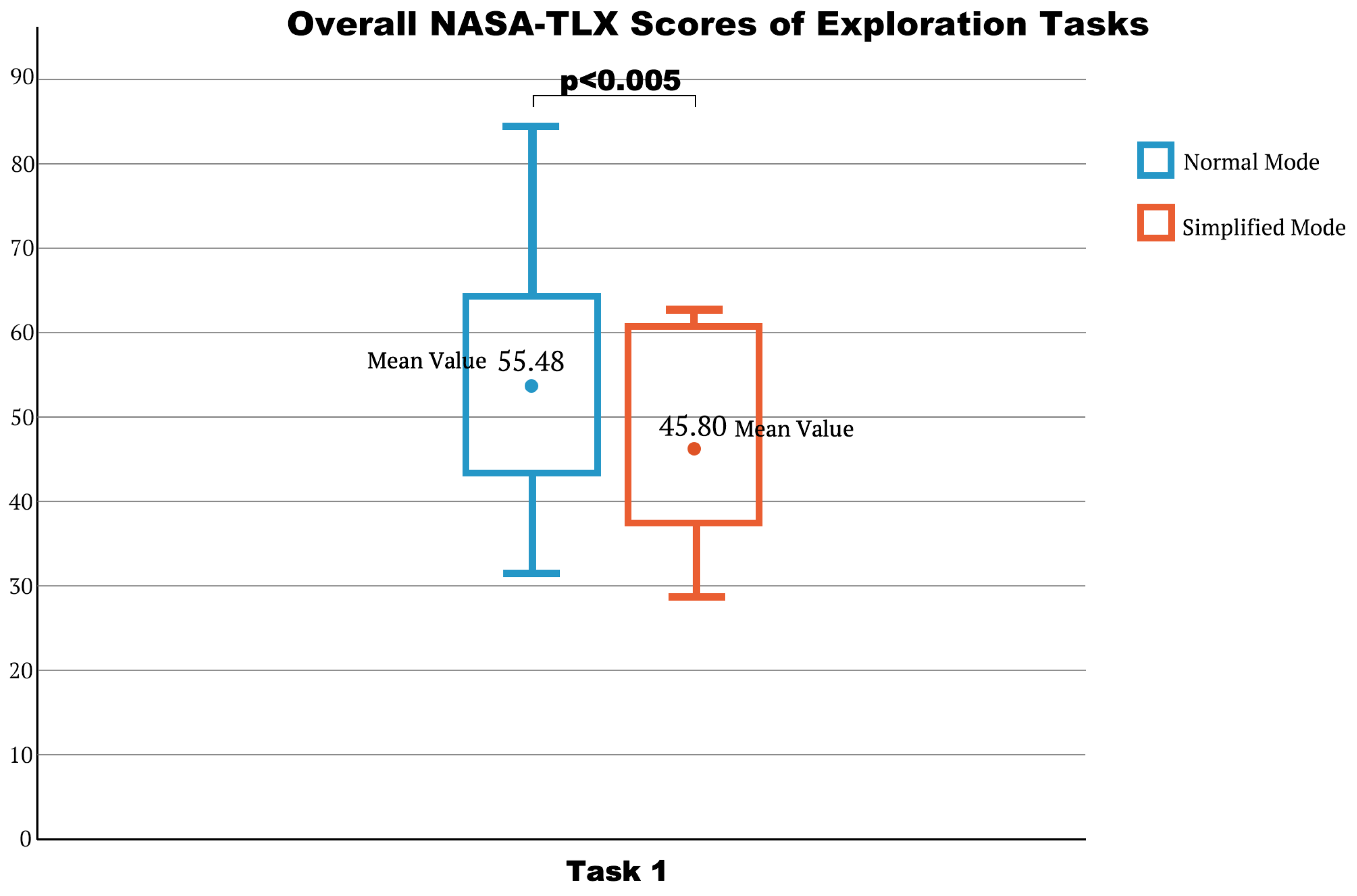

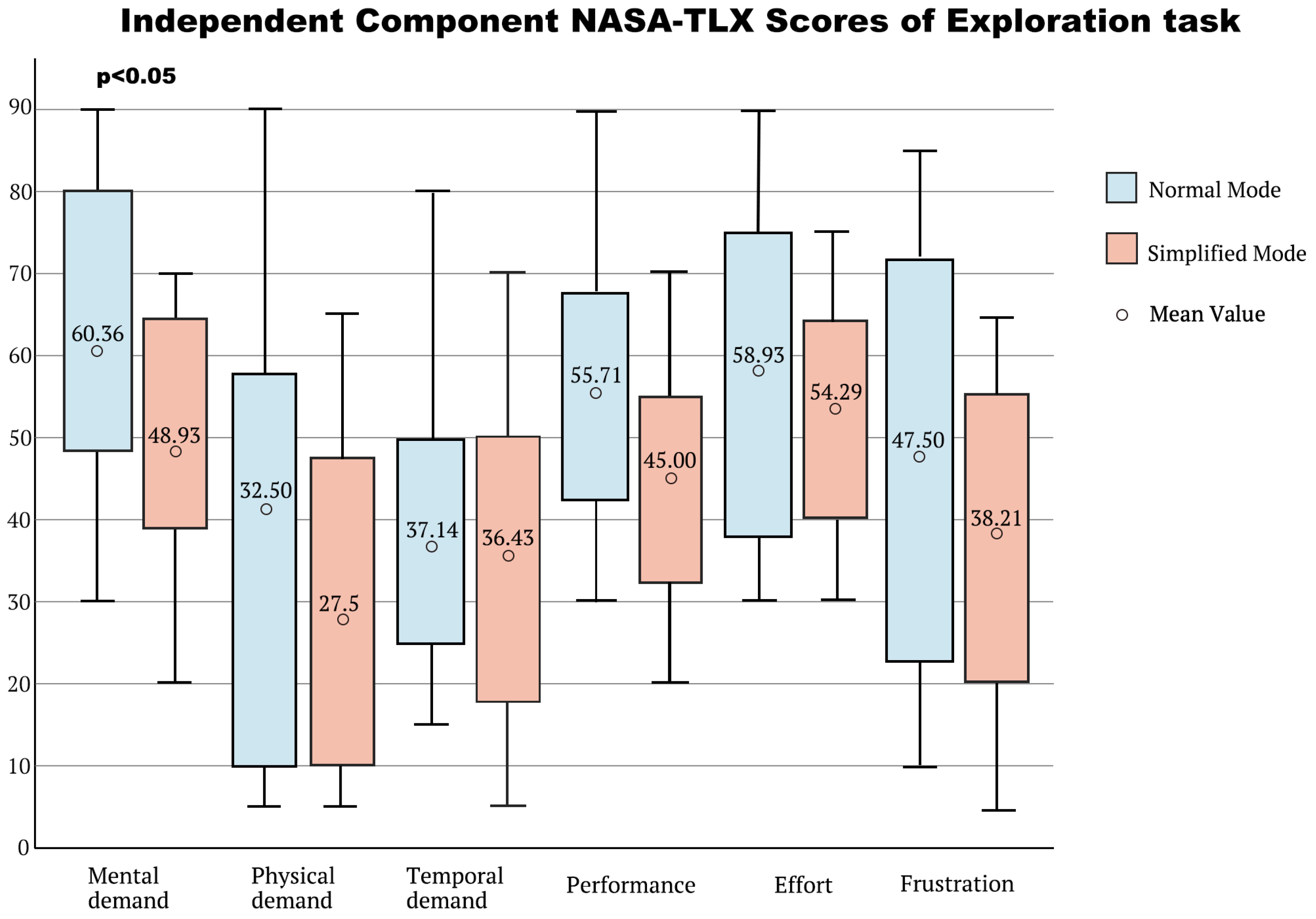

4.4.1. Quantitative Results

- Results of Exploration Task

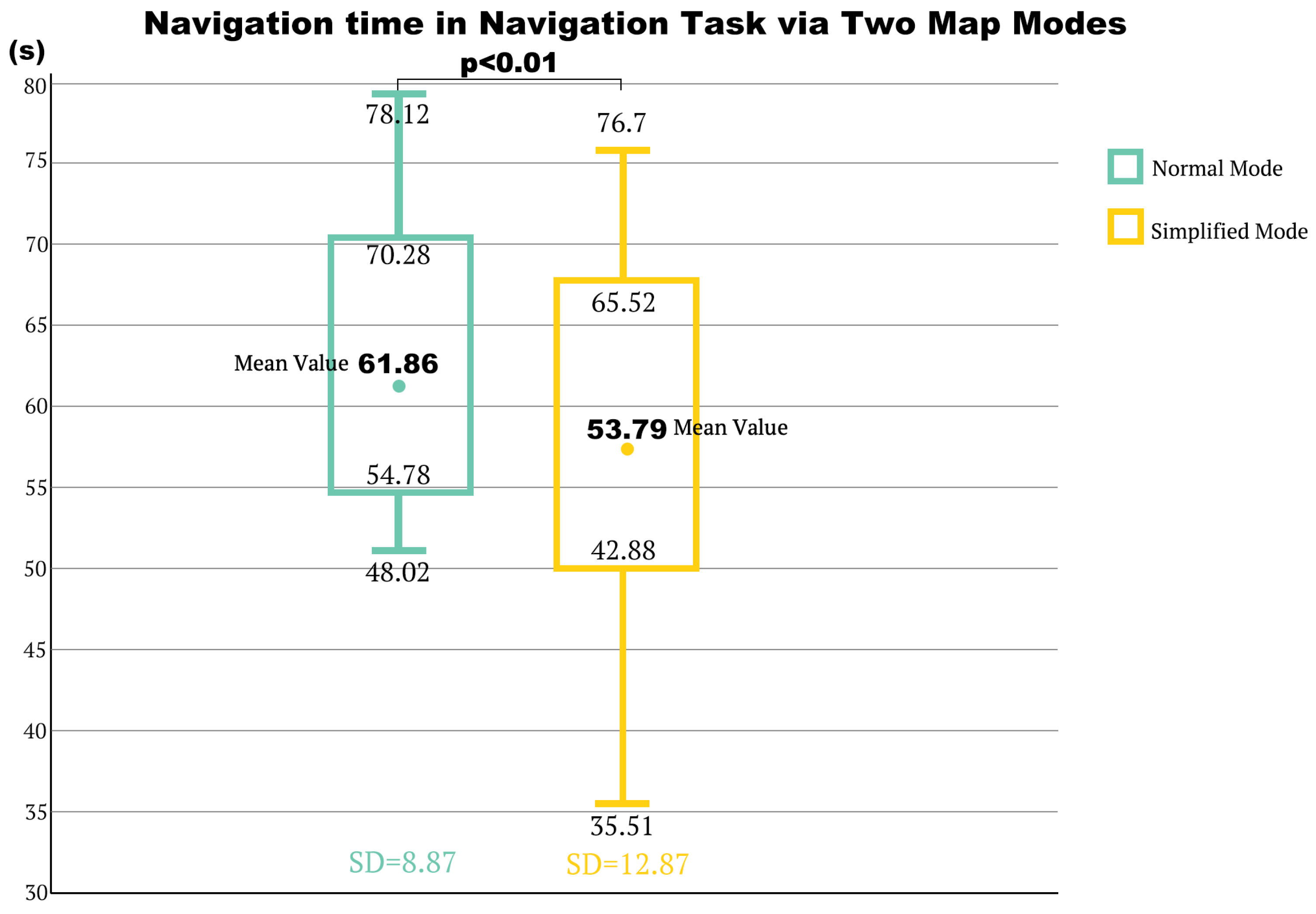

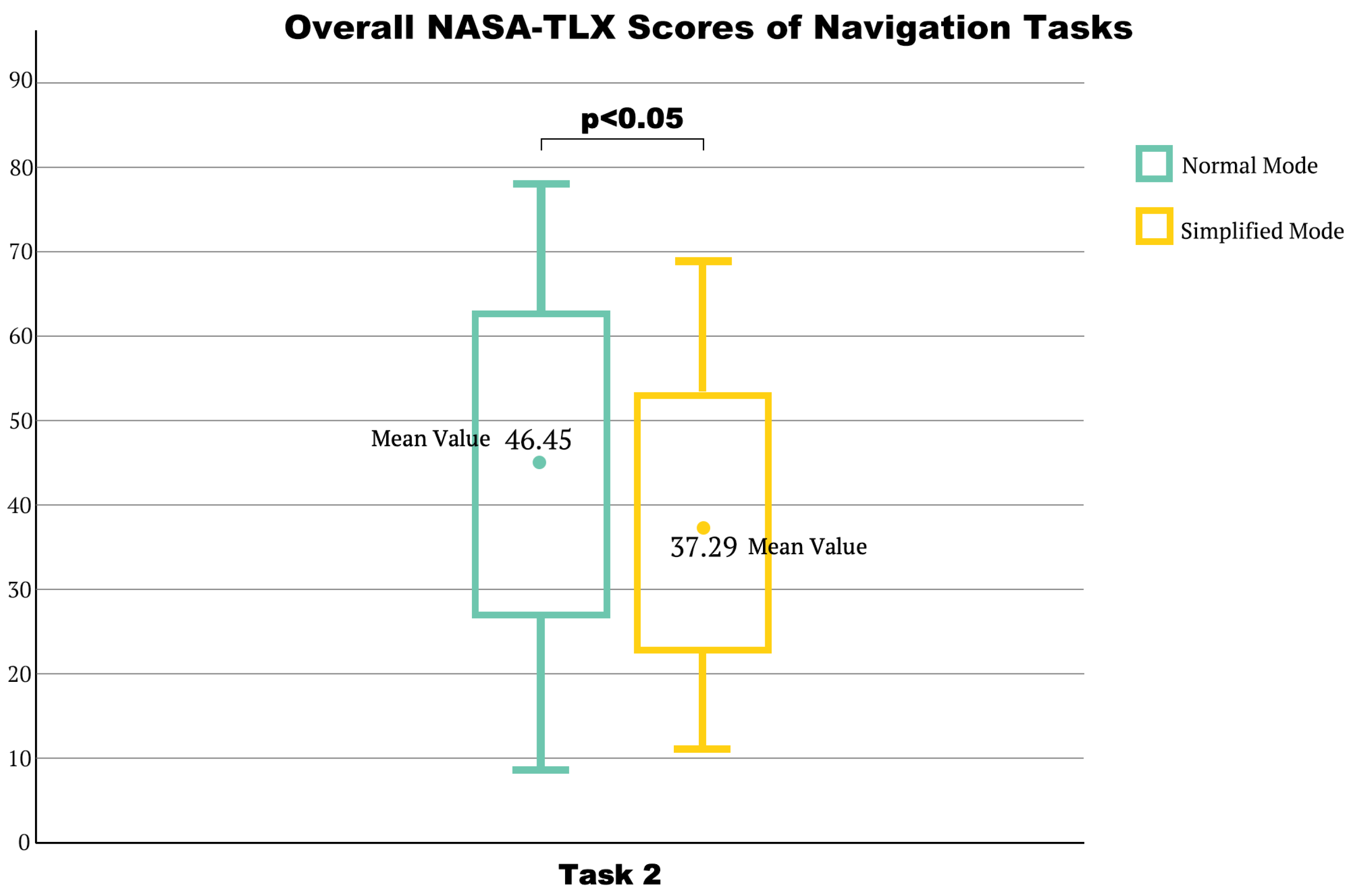

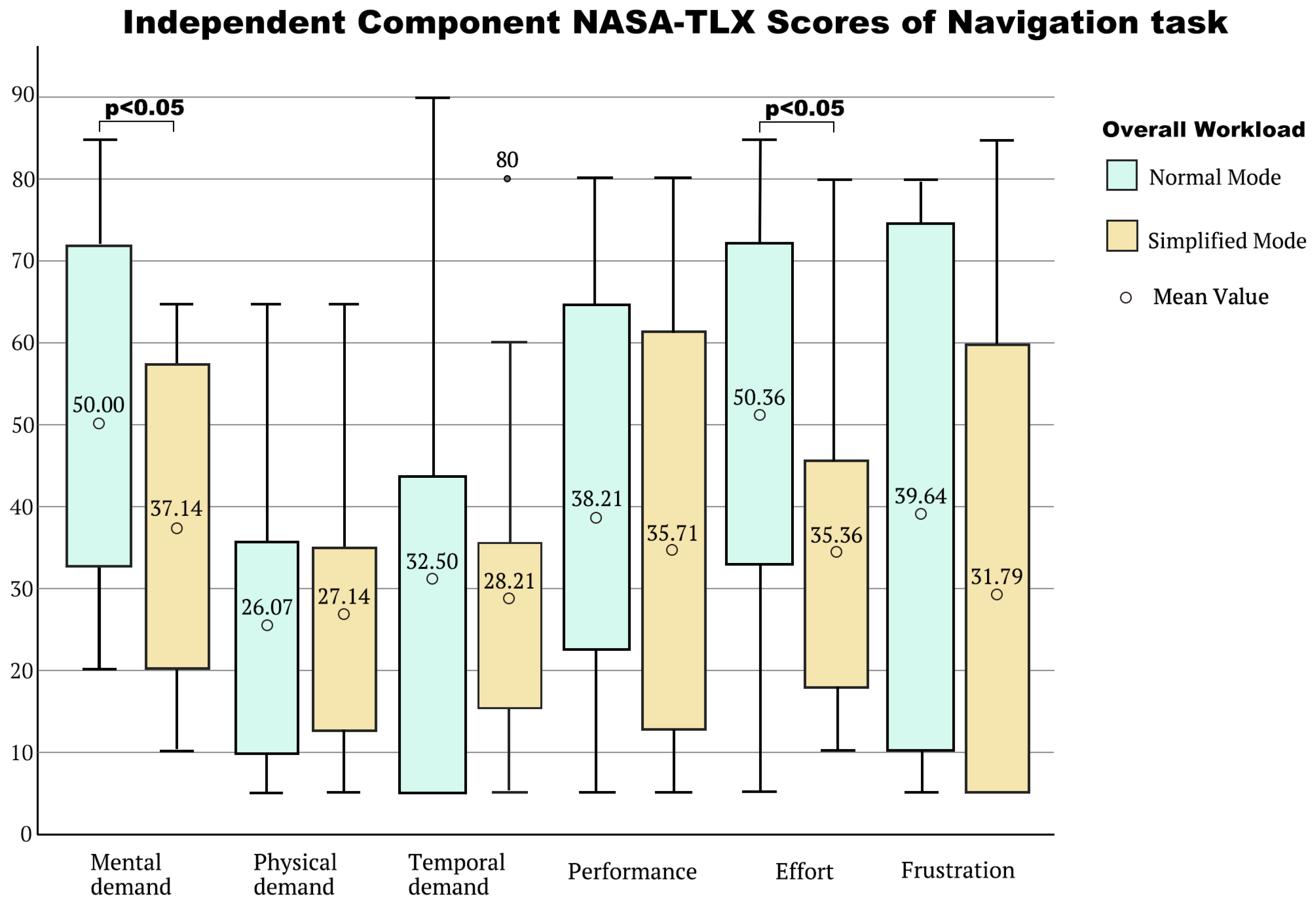

- Results of Navigation Task

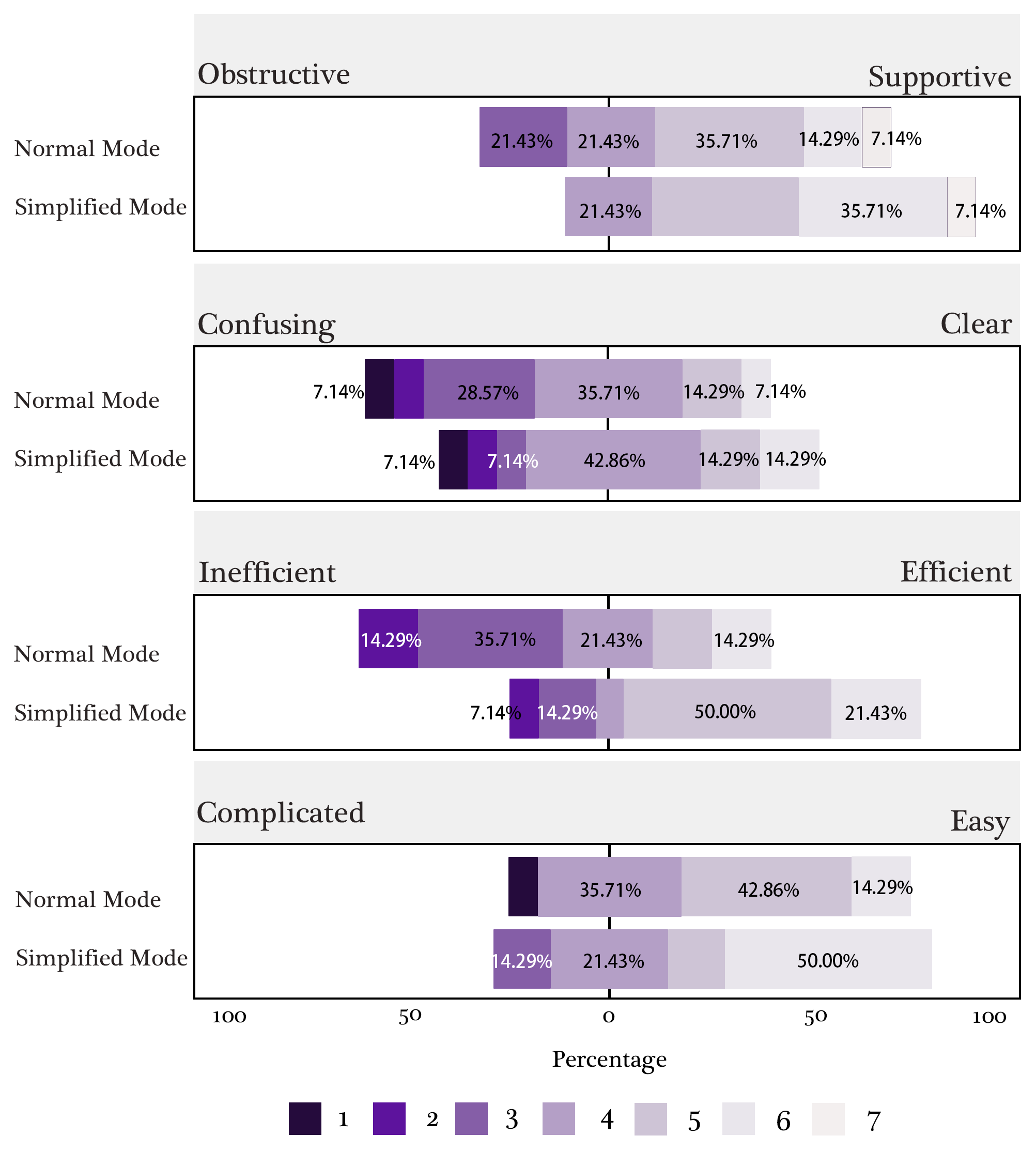

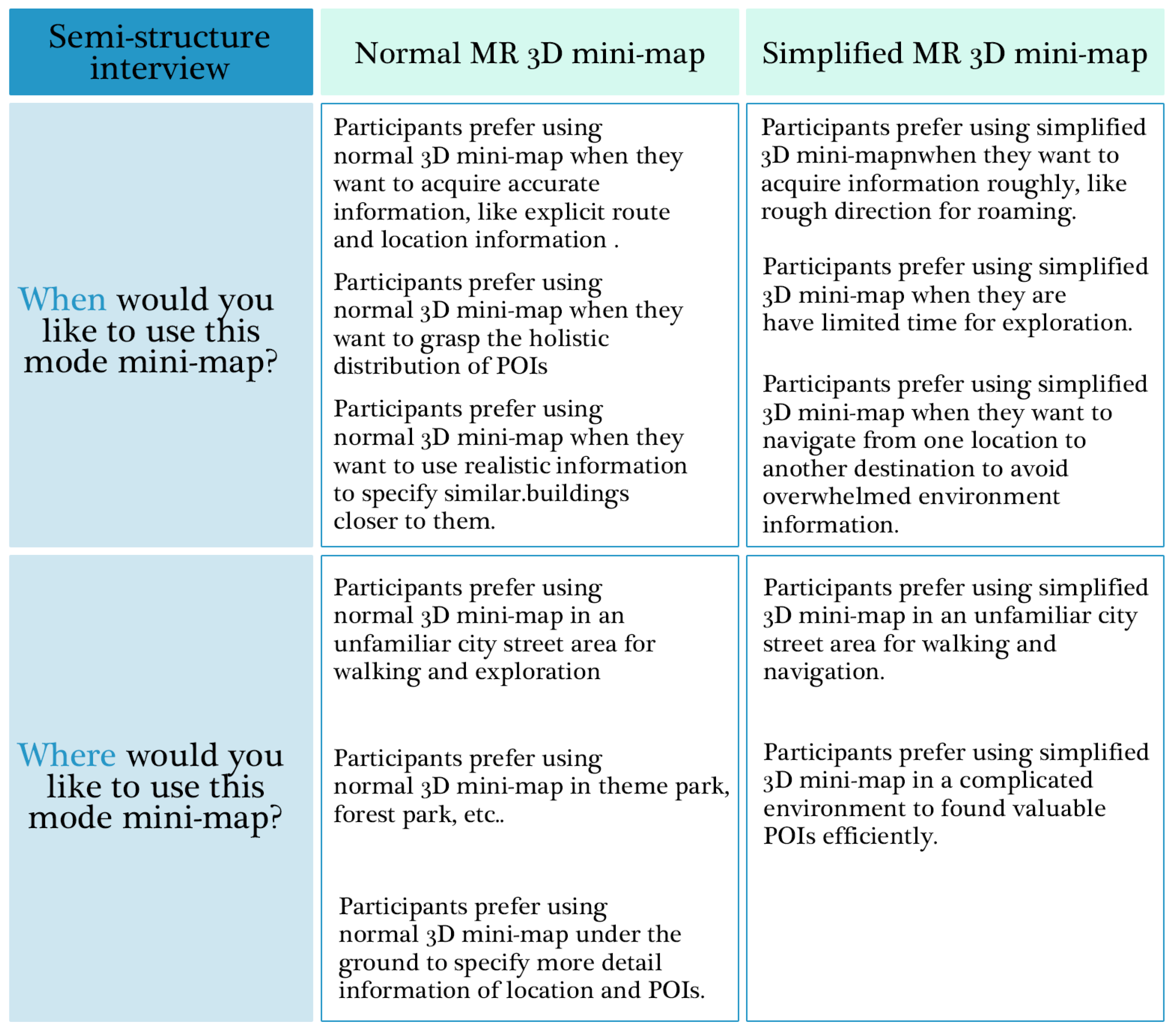

4.4.2. Qualitative Results

5. Discussion

5.1. Performance in Exploratory Navigation

5.2. Perceived Workload

5.3. User Experience

5.4. Other Findings

5.5. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Y.; Nakajima, T. Exploring the Design of a Mixed-Reality 3D Minimap to Enhance Pedestrian Satisfaction in Urban Exploratory Navigation. Future Internet 2022, 14, 325. https://doi.org/10.3390/fi14110325
