Real-Time Image Detection for Edge Devices: A Peach Fruit Detection Application

Abstract

1. Introduction

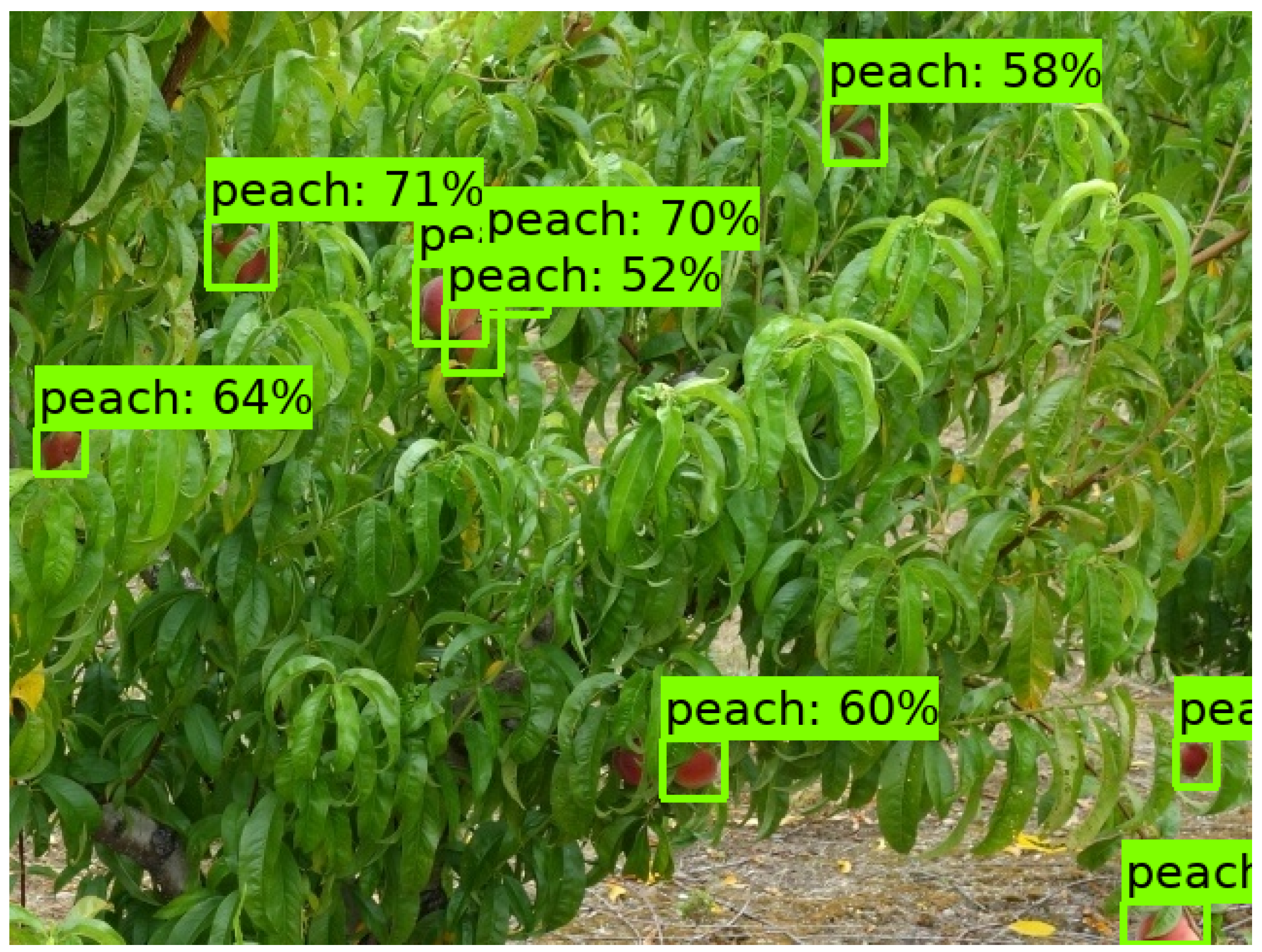

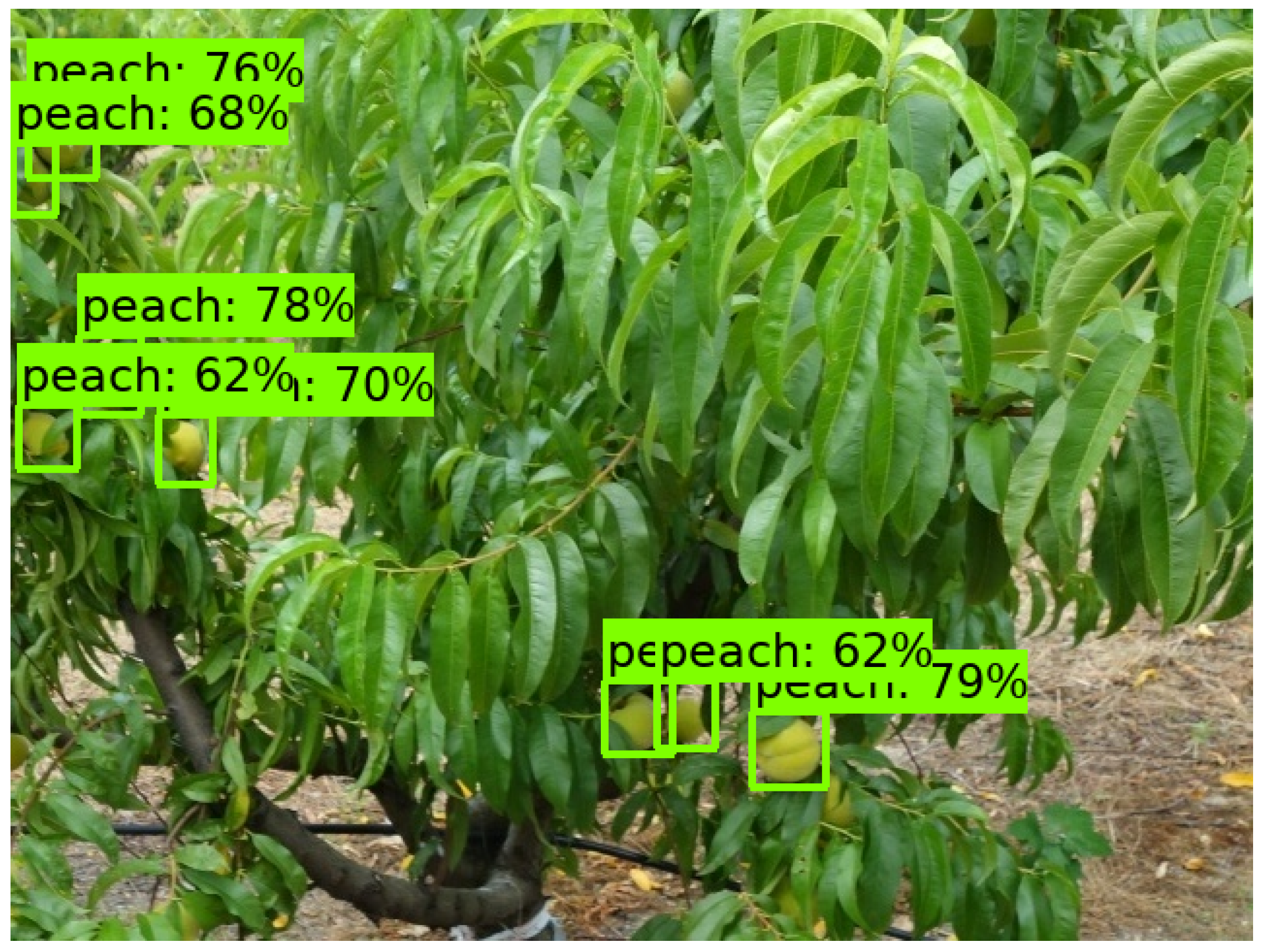

- We propose a lightweight, hardware-aware MobileDet detector for real-time peach fruit detection, deployed on a Raspberry Pi target device with a Coral Edge TPU accelerator.

- We present a novel annotated dataset of three peach cultivars and make it available for further study (to our knowledge, this is the first work of its kind).

2. Materials and Methods

2.1. Dataset Description

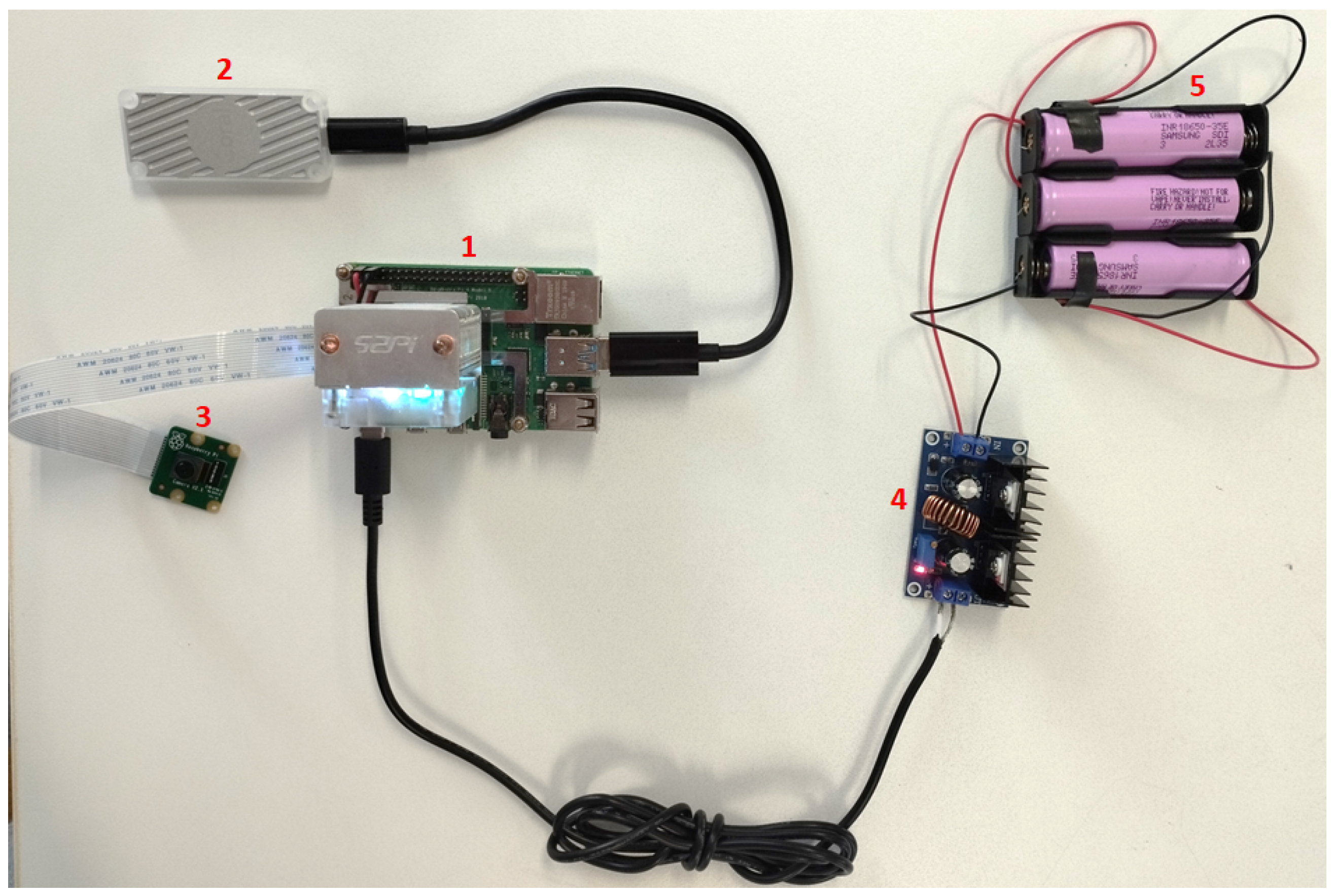

2.2. Hardware for Inference

2.3. SSD: Single Shot Detector

2.3.1. MobileNetV1

2.3.2. MobileNetV2

2.3.3. MobileNet Edge TPU

2.3.4. MobileDet

2.4. Model Optimizations

2.5. Network Training

2.6. Model Assessment

3. Results and Discussions

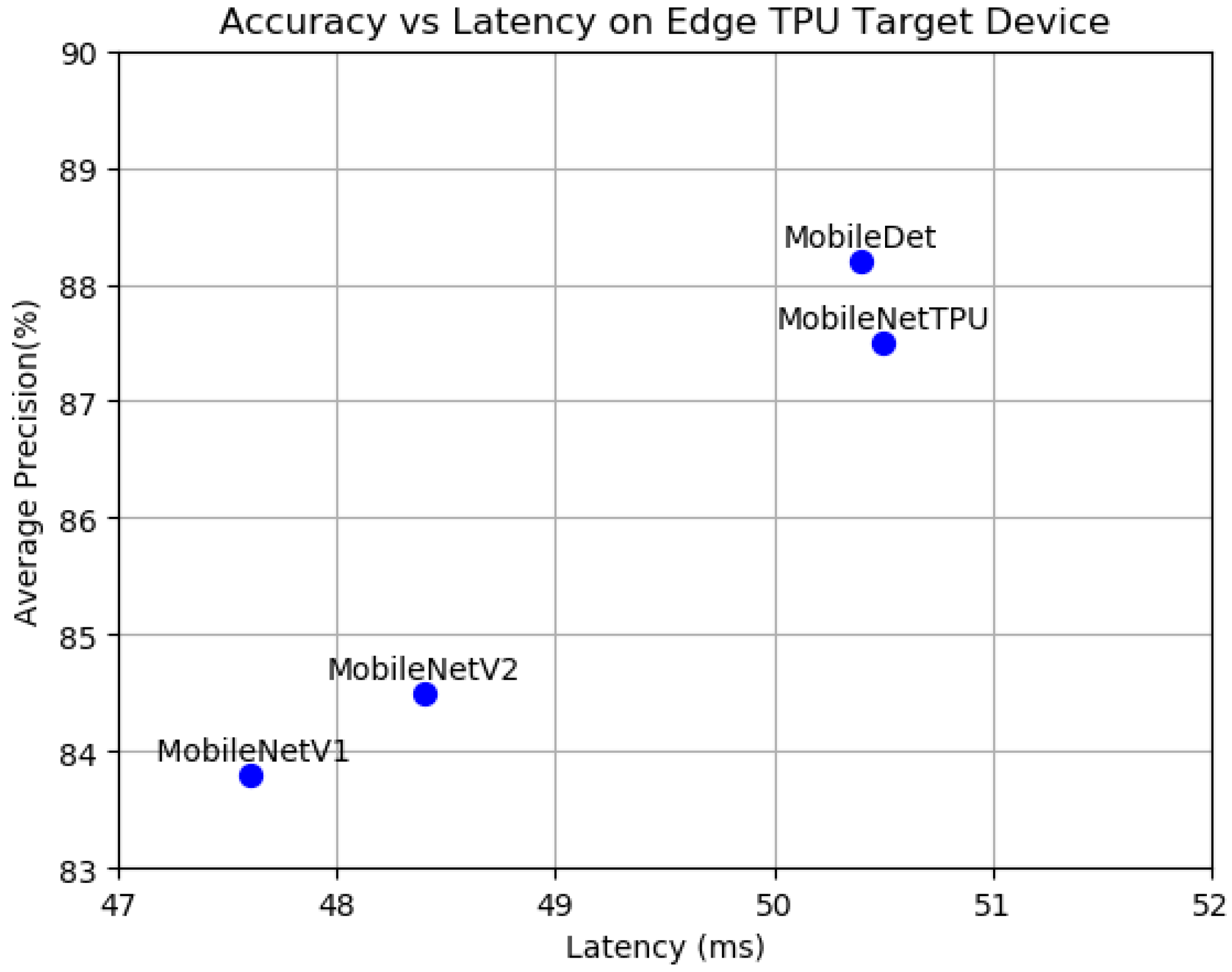

3.1. Ablation Studies

3.2. Inference Time

3.3. Accuracy and Inference Time Trade-Off

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Sample Availability

Abbreviations

| Abbreviation | Definition |
|---|---|
| AP | Average precision |
| FPS | Frames per second |
| GPU | Graphics processing unit |
| TPU | Tensor processing unit |
| DSP | Digital signal processor |
| SSD | Single-shot detector |
| YOLO | You only look once |
| CNN | Convolutional neural network |
| AutoML | Automated machine learning |
| PTQ | Post-training quantization |
| QAT | Quantization-aware training |


| Cultivar | Sample Image | Fruit Density | Color |
|---|---|---|---|
| Royal Time |  | Low | Red |
| Sweet Dream |  | Medium | Dark Red |
| Catherine |  | High | Yellow |

| Split | Cultivar | Images | Fruits (Labels) |
|---|---|---|---|
| Train | Sweet Dream | 270 | 2015 |
| Train | Royal Time | 248 | 1066 |
| Train | Catherine | 305 | 4564 |
| Test | Sweet Dream | 66 | 453 |
| Test | Royal Time | 63 | 270 |
| Test | Catherine | 76 | 1480 |
| Total of training | | 823 | 7645 |
| Total of testing | | 205 | 2203 |
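
The totals in the dataset split table can be re-derived from the per-cultivar rows. The following is a minimal sketch that only re-uses the counts printed above; the names `TRAIN`, `TEST`, `split_totals`, and `fruits_per_image` are illustrative and not part of the authors' code.

```python
# Image and label counts copied from the dataset split table above.
# Each value is (number of images, number of labeled fruits).
TRAIN = {
    "Sweet Dream": (270, 2015),
    "Royal Time": (248, 1066),
    "Catherine": (305, 4564),
}
TEST = {
    "Sweet Dream": (66, 453),
    "Royal Time": (63, 270),
    "Catherine": (76, 1480),
}


def split_totals(split):
    """Return (total images, total labeled fruits) for one split."""
    images = sum(n_images for n_images, _ in split.values())
    fruits = sum(n_fruits for _, n_fruits in split.values())
    return images, fruits


def fruits_per_image(split):
    """Return the mean number of labeled fruits per image for each cultivar."""
    return {
        name: n_fruits / n_images
        for name, (n_images, n_fruits) in split.items()
    }


train_images, train_fruits = split_totals(TRAIN)
test_images, test_fruits = split_totals(TEST)
train_fraction = train_images / (train_images + test_images)

print(f"train: {train_images} images, {train_fruits} fruits")
print(f"test:  {test_images} images, {test_fruits} fruits")
print(f"share of images used for training: {train_fraction:.1%}")
for name, density in sorted(fruits_per_image(TRAIN).items(), key=lambda kv: kv[1]):
    print(f"{name}: {density:.1f} labeled fruits per training image")
```

The computed totals agree with the "Total of training" and "Total of testing" rows, and roughly 80% of the images fall in the training split. The per-image label counts (about 4.3 for Royal Time, 7.5 for Sweet Dream, and 15.0 for Catherine) follow the same Low, Medium, High ordering as the fruit density column of the cultivar table.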

| Model | Baseline AP (%) | EdgeTPU AP (%) | Drop from Baseline to EdgeTPU (percentage points) |
|---|---|---|---|
| SSDLite MobileDet | 89 | 88.2 | 0.8 |
| MobileNet EdgeTPU | 88 | 87.5 | 0.5 |
| SSD MobileNetV2 | 86 | 84.5 | 1.5 |
| SSD MobileNetV1 | 85 | 83.8 | 1.2 |
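
The drop column is the difference between the baseline AP and the EdgeTPU AP. As a small worked check, assuming only the values printed in the table above, the sketch below recomputes the absolute drop and also derives a relative drop, which the table does not report. The helper names `ap_drop` and `relative_drop` are hypothetical.

```python
# (baseline AP %, EdgeTPU AP %) copied from the AP table above.
AP = {
    "SSDLite MobileDet": (89.0, 88.2),
    "MobileNet EdgeTPU": (88.0, 87.5),
    "SSD MobileNetV2": (86.0, 84.5),
    "SSD MobileNetV1": (85.0, 83.8),
}


def ap_drop(baseline, edgetpu):
    """Absolute AP drop in percentage points, rounded to one decimal."""
    return round(baseline - edgetpu, 1)


def relative_drop(baseline, edgetpu):
    """AP drop expressed as a percentage of the baseline AP (not in the table)."""
    return 100.0 * (baseline - edgetpu) / baseline


drops = {model: ap_drop(b, e) for model, (b, e) in AP.items()}
smallest_drop = min(drops, key=drops.get)           # model that loses the least AP
best_on_tpu = max(AP, key=lambda m: AP[m][1])       # highest AP after conversion

for model, (b, e) in AP.items():
    print(f"{model}: drop {drops[model]} pp ({relative_drop(b, e):.2f}% of baseline)")
print("smallest drop:", smallest_drop)
print("highest EdgeTPU AP:", best_on_tpu)
```

With these numbers, MobileNet EdgeTPU loses the least AP, while SSDLite MobileDet keeps the highest AP on the EdgeTPU.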

| Model | CPU Latency (ms) | EdgeTPU Latency (ms) | FPS |
|---|---|---|---|
| SSD MobileNetV1 | 847.9 | 47.6 | 21.01 |
| SSDLite MobileDet | 1045.9 | 50.4 | 19.84 |
| MobileNet EdgeTPU | 1232 | 50.5 | 19.80 |
| SSD MobileNetV2 | 773.1 | 48.4 | 20.66 |
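
The FPS values in the latency table are consistent with FPS = 1000 / (EdgeTPU latency in ms). The sketch below reproduces that relation and adds the CPU-to-EdgeTPU speedup, which is not a column in the table. It assumes one frame per inference and ignores image capture and pre/post-processing time; the function names are illustrative.

```python
# (CPU latency ms, EdgeTPU latency ms) copied from the latency table above.
LATENCY_MS = {
    "SSD MobileNetV1": (847.9, 47.6),
    "SSDLite MobileDet": (1045.9, 50.4),
    "MobileNet EdgeTPU": (1232.0, 50.5),
    "SSD MobileNetV2": (773.1, 48.4),
}


def fps(latency_ms):
    """Frames per second for a per-frame latency in milliseconds.

    Assumption: one inference per frame, with no other per-frame overhead.
    """
    return 1000.0 / latency_ms


def speedup(cpu_ms, tpu_ms):
    """How many times faster the EdgeTPU run is than the CPU run."""
    return cpu_ms / tpu_ms


for model, (cpu_ms, tpu_ms) in LATENCY_MS.items():
    print(
        f"{model}: {fps(tpu_ms):.2f} FPS on EdgeTPU, "
        f"{speedup(cpu_ms, tpu_ms):.1f}x faster than CPU"
    )
```

Under this assumption, the EdgeTPU runs are roughly 16 to 24 times faster than the CPU runs for the four models in the table.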

| Model | Device | Accelerator | Price (€) | Input Size | Fruit | AP (%) | Latency (ms) |
|---|---|---|---|---|---|---|---|
| Approach_1 | Jetson Nano | GPU | 108 | 608 × 608 | Citrus | 93.32 | 180 |
| Approach_2 | Jetson Xavier | GPU | 429 | - | Apple | 85 | 45 |
| Ours | Raspberry Pi | TPU | 141 | 640 × 480 | Peach | 88.2 | 50.4 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Assunção, E.; Gaspar, P.D.; Alibabaei, K.; Simões, M.P.; Proença, H.; Soares, V.N.G.J.; Caldeira, J.M.L.P. Real-Time Image Detection for Edge Devices: A Peach Fruit Detection Application. Future Internet 2022, 14, 323. https://doi.org/10.3390/fi14110323
