1. Introduction

Appropriate management techniques help address agricultural challenges related to productivity, environmental impact, food security, and sustainability [1]. Because agricultural environments are heterogeneous and complex, managing them requires monitoring, measurement, and continuous analysis of the physical aspects and phenomena involved [2]. In fields cultivated with woody species, individual tree canopy identification is important for plant counting, growth assessment, and yield estimation. However, tree detection and mapping are costly activities that consume considerable time and effort when performed with traditional field techniques [3,4]. Practical approaches for efficiently identifying and mapping such trees are therefore needed to improve agricultural management.

Remote sensing data have been widely used for agricultural management as well as for plant analysis. In such evaluations, the data of interest are collected individually for each plant in the field. In particular, Unmanned Aerial Vehicles (UAVs) are the most frequently used remote sensing platforms for this activity because they provide images with fine spatial resolution, allow on-demand imaging and timely acquisition and processing of information, and are cost-effective compared with manned aerial or equivalent orbital imaging [5]. Ref. [6] evaluated the automatic detection of tobacco plants for agricultural management using RGB images acquired by UAVs. Ref. [7] used RGB UAV images to identify individual rice plants in agricultural fields in order to estimate productivity. Ref. [8] used multispectral UAV images to derive spectral attributes (i.e., vegetation indices) for identifying greening disease in orange plantations, recognizing individual canopies before classifying them.

The imaging equipment commonly used on UAVs includes RGB digital cameras, multispectral and hyperspectral sensors and, less commonly, thermal and light detection and ranging (LiDAR) systems [5]. RGB cameras are considerably more accessible than the other sensors. Photogrammetry techniques and algorithms, such as Structure from Motion (SfM) and Multi-View Stereo (MVS), make it possible to derive data beyond the imaged scenes, such as dense three-dimensional point clouds, Digital Elevation Models (DEM), and ortho-rectified image mosaics [9].

Integrating images with these three-dimensional data allows for robust vegetation monitoring, not only because individual plants can be identified but also because canopy morphology parameters, such as height, diameter, perimeter, and volume, can be estimated [10,11,12]. Canopy detection techniques for remote sensing images rely on different concepts, and their suitability depends on the type of canopy studied and the characteristics of the evaluated areas. The classic methods for this task are the Local Maxima (LM) algorithm, Marker-Controlled Watershed Segmentation (MCWS), template matching, region growing, and edge detection [13]. However, the major limitation of these methods is that specific parameters must be configured manually for each type of target or image [14,15], which requires specific knowledge from the analyst and reduces the feasibility of developing automated processing frameworks.

In addition to classical methods, Deep Learning (DL) models have been widely used in recent years because of their ability to handle various computer vision problems [16], especially models based on Convolutional Neural Network (CNN) architectures. One of the advances in the field of CNNs was the development of Region-based CNNs (R-CNN) [17], an architecture able to perform image instance segmentation, i.e., the individual identification of objects belonging to the same semantic class (e.g., the discrimination of individual treetops within the vegetation class).

The latest advance of R-CNNs is the Mask R-CNN architecture [18], which identifies individual instances and delineates the contour of each object of interest at the pixel level. Mask R-CNN has been widely used in remote sensing of vegetation, and the results obtained indicate its great potential for the detection and delineation of targets. Ref. [13] compared the performance of classic methods with that of Mask R-CNN for the identification of Chinese fir and concluded that Mask R-CNN performs better, reaching an F1-score of up to 0.95. Ref. [19] investigated the model parameter settings for training samples with different levels of refinement, reaching F1-scores of 0.90 and 0.97 for potato and lettuce plantations, respectively.

Even with the application of DL, using region-based or other CNN models, tree canopy detection still requires further investigation, for example regarding the use of a single model for areas with heterogeneous spatial characteristics, or for crops with high planting densities, where contiguity and overlap between neighboring crowns occur naturally and make individual identification a difficult task [20,21]. On the other hand, studies of instance segmentation applied to natural scenes have shown that model performance improves when scene depth information is combined with RGB images [22]. Therefore, structural/morphological information from photogrammetry-based data, linked to the crown images, can be a relevant factor for identifying each crown within a group of touching crowns.

To the best of our knowledge, few studies have evaluated the use of three-dimensional information from RGB images as a proxy for individualizing contiguous treetops. Ref. [10] evaluated the ability to identify individual chestnut tree crowns from SfM-based DEM products. The process suggested by the authors is based mainly on morphological filters, since the chestnut crowns, despite touching each other, clearly maintain their circular shape when seen from nadir. Ref. [12] analyzed combinations of different RGB and SfM-based features for crown identification and height calculation of Chinese fir; however, the crowns in that study also do not present a high level of density. Furthermore, some crops differ from the species mentioned and have extremely dense crowns, such as orange trees and grapevines. Ref. [11] developed a tool based on dense point clouds and DEM-derived products for surveying vineyard biomass. The authors did not focus on the individual identification of each plant to calculate biomass but on the identification of gaps caused by decreases in canopy density.

An additional major challenge for the use of DL is the need for a large and varied set of training samples for network learning, which requires considerable manual labeling work. Because of this, some studies simplify tree detection by identifying trees with an enclosing rectangle, without delineating the contour of each crown [20,23], or even with a point representation, without the two-dimensional delineation of the plant [24,25,26]. Nevertheless, identifying trees with a canopy mask offers a range of possibilities for analysis because it discriminates the canopy area from its surroundings (i.e., soil and shade). It enables approaches aimed at individual location, tree counting, and the extraction of morphological information, as mentioned, but above all the use of the spectral response exclusive to the plant canopy, as in the identification of phytopathologies [27].

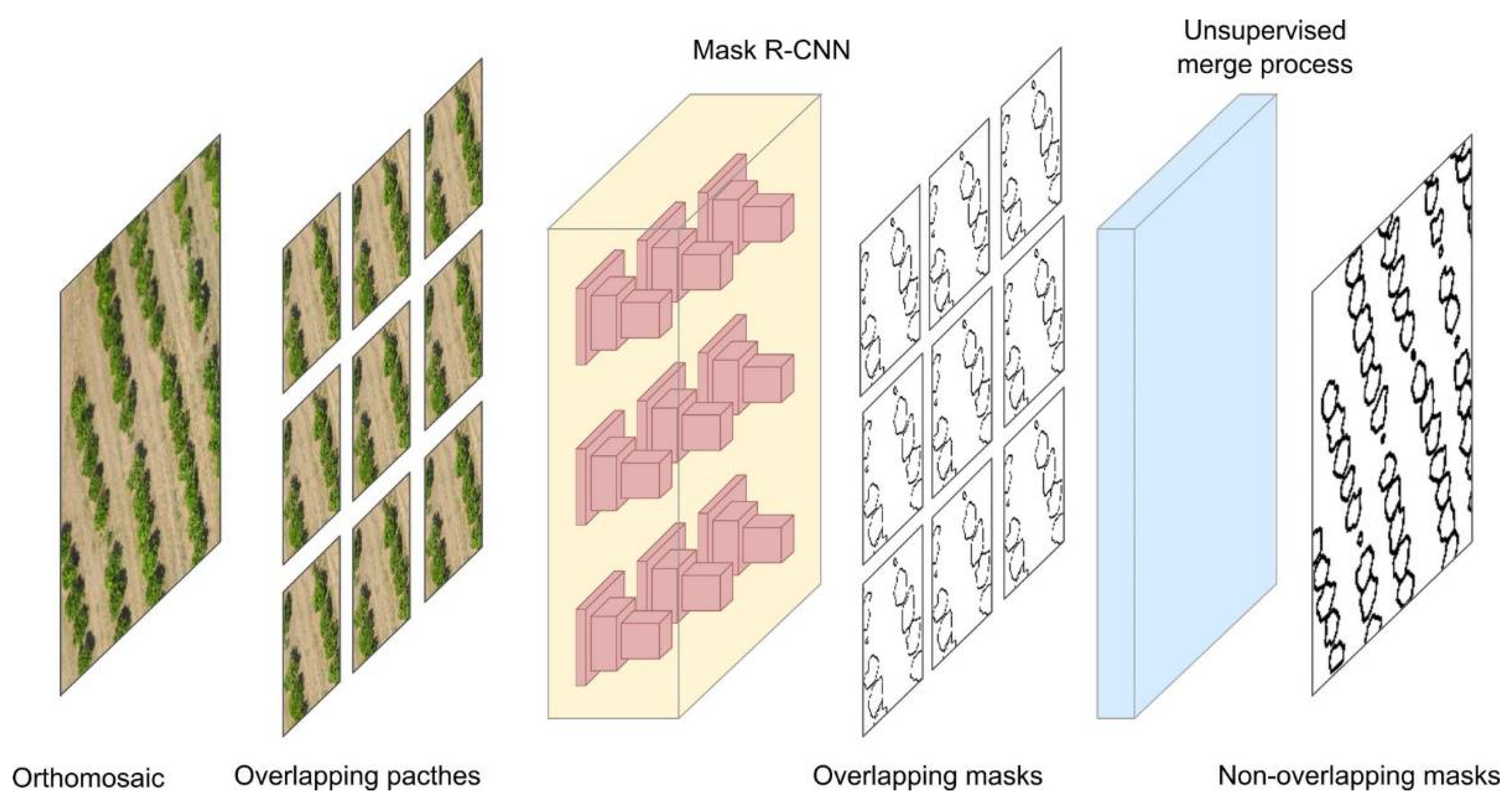

Another challenge for using DL-based models is adapting the images to the model architectures, as these are usually built for natural images of fixed proportions and, especially, of smaller sizes than orbital or UAV images. Therefore, using remote sensing images in these models requires subdividing the original image into several square patches, which require post-processing to eliminate the mosaic effect [6,13] and make it difficult to develop automated detection and counting frameworks. To the best of our knowledge, few studies have addressed a methodology for aggregating the final result into a single scene. Through a modification of the Non-Maximum Suppression (NMS) method, Ref. [28] proposed an approach for center-pivot irrigation systems, which differ from trees in agricultural plots because they have extremely regular shapes and do not overlap. To integrate the results of forest tree canopy identification, Ref. [29] adopted its own method. Without much detail about the procedure, the authors suggest merging any two crowns that overlap and are located at the edges of the clippings (patches), disregarding the possibility of real overlap between trees.

In the existing literature, few studies have reported the evaluation of instance segmentation in remote sensing images using only RGB imagery and photogrammetric DEM-based data for the detection and delineation of dense (touching/overlapping) treetops. The analysis carried out in this study aims (I) to contribute to the identification and counting of plants and the delineation of their canopies, (II) to increase the feasibility of the annotation process in studies involving contiguous tree canopies in agricultural orchards, and (III) to propose a method for automating the segmentation of canopies with different planting densities over large scenes by mosaicking the results.

2. Materials and Methods

2.1. Study Area

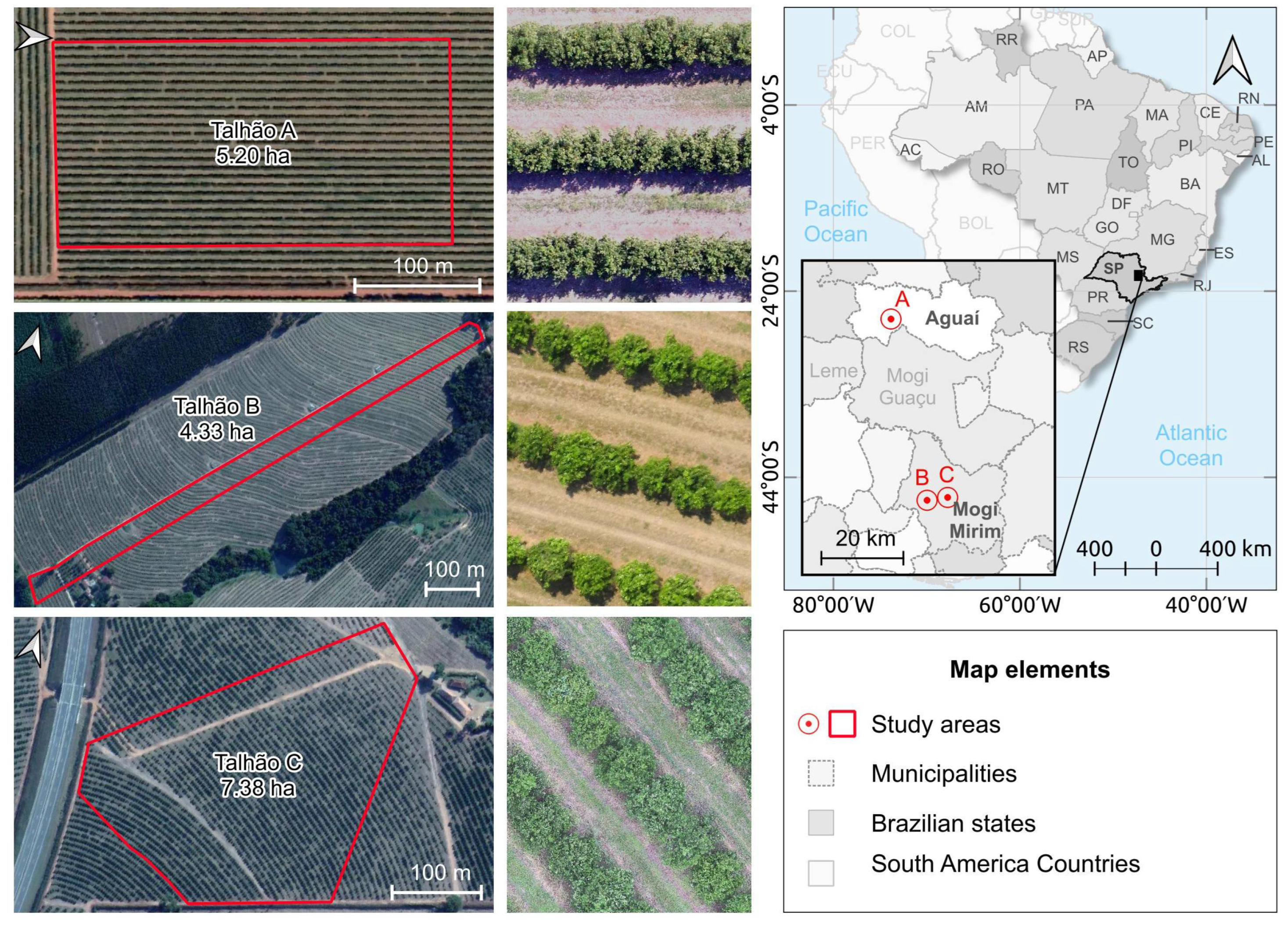

The study area (Figure 1) comprises three plot sections located in São Paulo State, Brazil, referred to as plots A (47.1285° W, 22.0543° S), B (47.0491° W, 22.4555° S), and C (47.0003° W, 22.4508° S). These plots cover 5.20, 4.33, and 7.38 hectares, respectively, totaling 16.9 ha of orange plantations of the Hamlin, Baianinha, Valencia, Pêra, and Natal varieties. Altogether, the plot sections contain 9064 trees and have different spatial characteristics, such as planting age, crown height and diameter, spacing between trees and rows, and soil cover.

2.2. Image Acquisition and Processing

The RGB images of the three plots were acquired on different dates and with different equipment. Plots A and C were imaged with a multi-rotor UAV, the DJI Phantom 3 (DJI, Shenzhen, China), carrying an RGB digital camera, the PowerShot S100 (zoom lens 5.2 mm; 12.1-megapixel CMOS sensor; 4000 × 3000 resolution). Plot B was imaged with a fixed-wing UAV, senseFly's eBee (senseFly SA, Lausanne, Switzerland), integrated with the senseFly Duet T camera, which has an RGB sensor, the senseFly S.O.D.A. (zoom lens 35 mm; 5472 × 3648 resolution), and a thermal sensor that was not considered in this study.

Table 1 summarizes information related to image acquisition, including equipment and flight configuration.

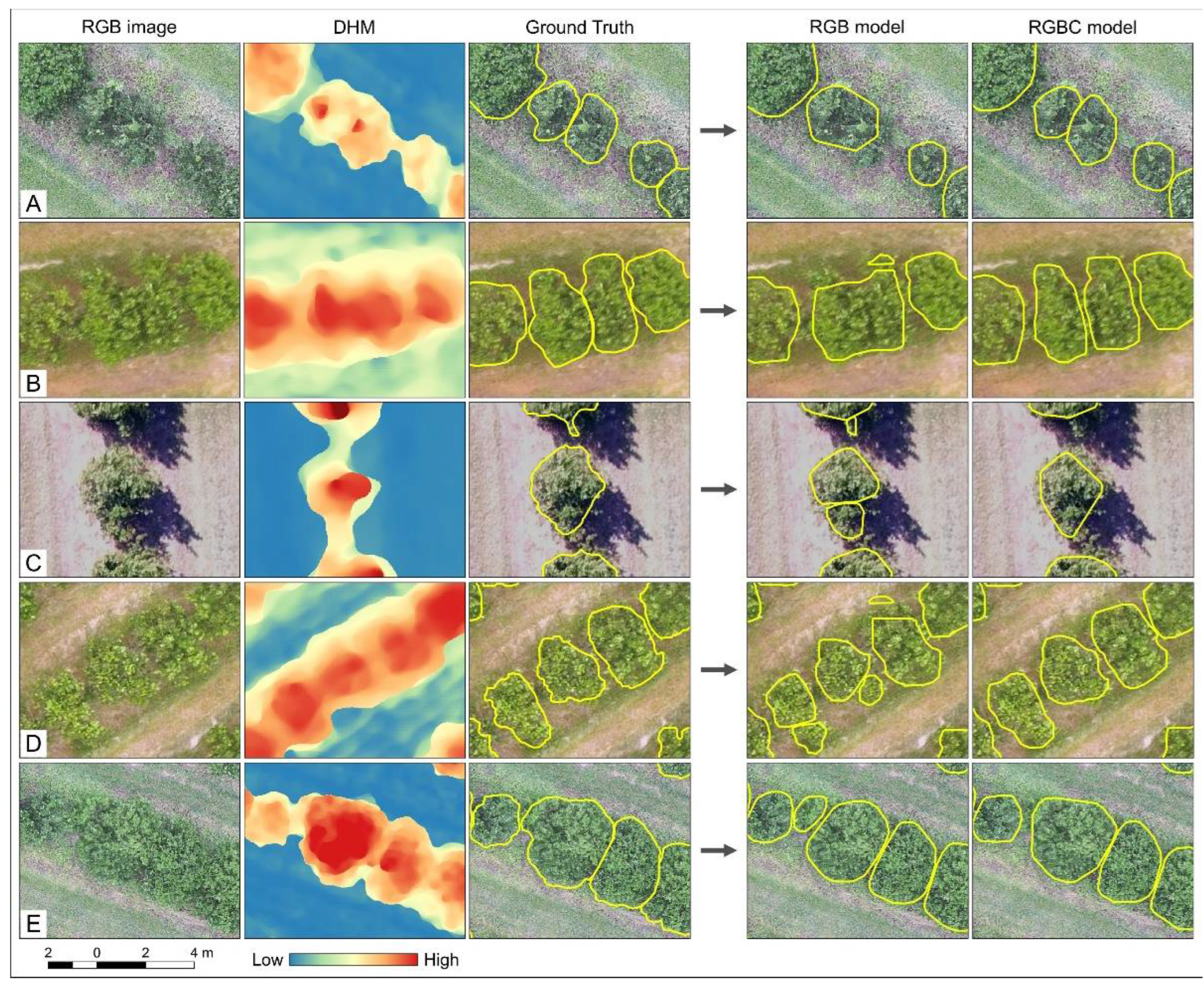

Pix4Dmapper Pro software (Pix4D SA, Lausanne, Switzerland) was used for the photogrammetric processing of the images acquired by the UAVs, using sequentially the following techniques: alignment optimization, construction of dense mesh of points, classification of ground points, elaboration of DEM-based data (such as the Digital Surface Model (DSM) and Digital Terrain Model (DTM)) and ortho-mosaics. In addition to the products generated by the software, the Canopy Height Model (CHM) of each plot was also computed. The CHM is the result of the subtraction of the DSM by the DTM, generating an elevation model that considers only the heights above the ground and eliminates the terrain slope. The canopy height information is important to define the separation between two dense canopies. At

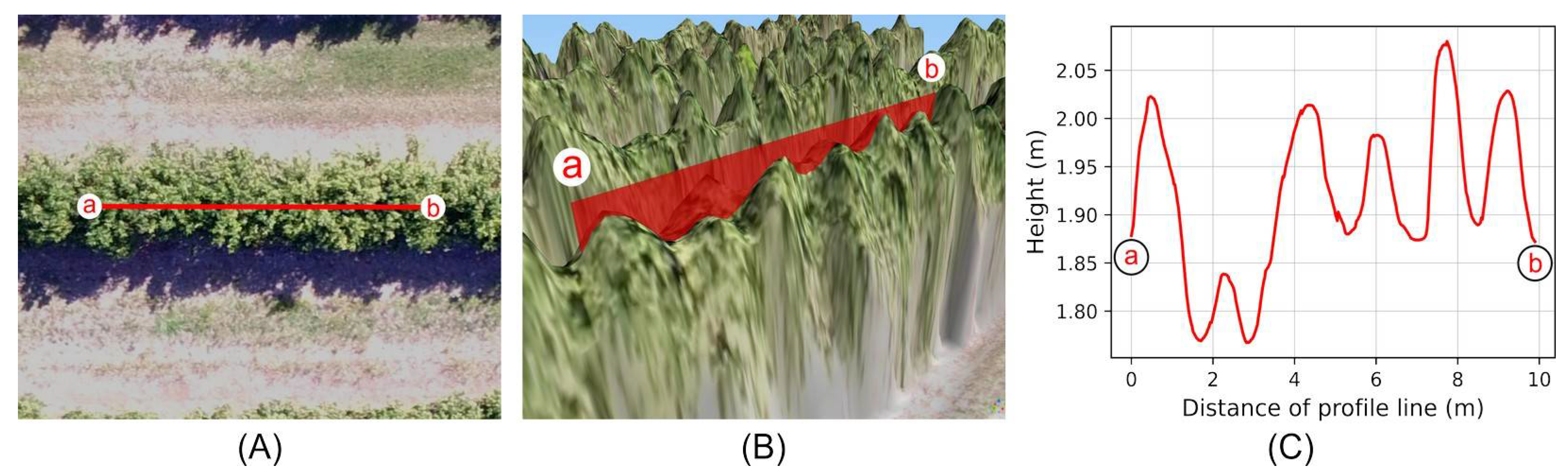

Figure 2, one observes that from the RGB image at nadir, it is difficult to differentiate between the canopies even by visual interpretation. However, the altitude information of the canopies suggests the separation from the formation of the saddle point between the top points.
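The CHM computation and the saddle-point idea can be sketched in a few lines of NumPy. This is a minimal illustration, assuming the DSM and DTM are already co-registered arrays of equal shape; clipping negative heights to zero and the helper name `saddle_index` are assumptions made for the example, not steps stated in the text.

```python
import numpy as np


def canopy_height_model(dsm: np.ndarray, dtm: np.ndarray, clip_negative: bool = True) -> np.ndarray:
    """Return CHM = DSM - DTM for two rasters on the same grid (heights in metres)."""
    if dsm.shape != dtm.shape:
        raise ValueError("DSM and DTM must be co-registered rasters of equal shape")
    chm = dsm.astype(np.float64) - dtm.astype(np.float64)
    if clip_negative:
        # Assumption: small negative heights (interpolation noise) are treated as ground.
        chm = np.clip(chm, 0.0, None)
    return chm


def saddle_index(profile: np.ndarray, peak_a: int, peak_b: int) -> int:
    """Hypothetical helper: index of the lowest CHM value between two treetop peaks on a transect."""
    lo, hi = sorted((peak_a, peak_b))
    return lo + int(np.argmin(profile[lo:hi + 1]))


dsm = np.array([[612.0, 615.5], [611.2, 613.0]])
dtm = np.array([[611.0, 611.5], [611.4, 611.0]])
print(canopy_height_model(dsm, dtm))  # heights 1.0, 4.0, 0.0 (clipped) and 2.0

transect = np.array([0.0, 2.1, 3.4, 2.8, 2.5, 3.6, 2.0, 0.0])
print(saddle_index(transect, 2, 5))  # 4
```

Along a transect crossing two touching crowns, the saddle is simply the height minimum between the two peaks, which is the cue a visual interpreter looks for in the CHM.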

2.3. The Mask R-CNN

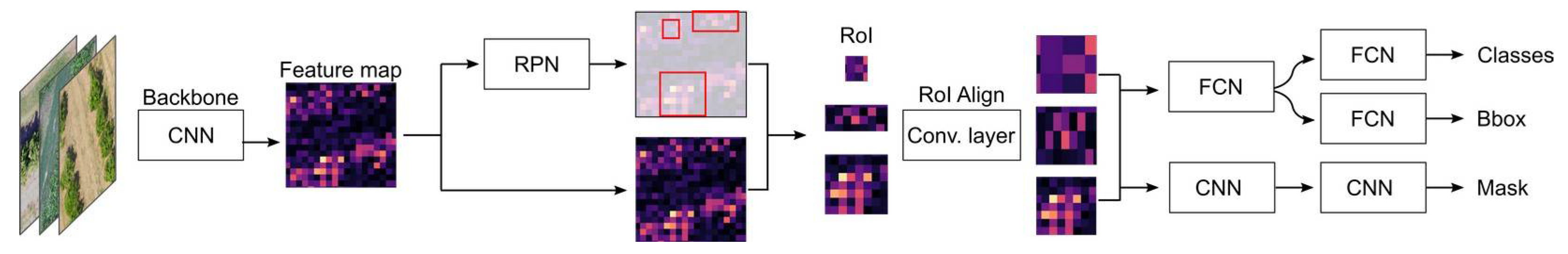

The detection and delimitation of treetops was performed using the Mask R-CNN [

18], one of the main frameworks currently used for instance segmentation in remote sensing images [

30]. This mask operates in two modules, one for detecting regions where the presence of the object has been detected and discriminated and the other for segmentation at the pixel level of object boundaries. Its structure (

Figure 3) is defined by adding this second module to the structure of the Faster R-CNN [

31], a network which can detect objects without defining their borders. The following describes its functioning.

The input image is fed into the backbone, which performs a set of convolution and pooling operations, extracting visual features at different scales. In this study, the ResNet101 architecture was used. The feature maps generated by the backbone are used as input to the Region Proposal Network (RPN), which computes the regions likely to contain the desired objects from the patterns in these features. For each region with a high probability of object occurrence, multiple anchor boxes (or RoIs, Regions of Interest) are computed, depending on the scale and ratio parameters defined for the model. In this study, scales of 4, 8, 16, 32, and 64 pixels and ratios of 0.5, 1, and 2 were used, resulting in 15 anchor boxes for each region found.
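The arithmetic behind the 15 anchor boxes (5 scales × 3 ratios) can be made concrete with a short sketch. It assumes a common convention in which the ratio is width/height and every anchor keeps an area equal to the square of its scale; how the implementation in [32] distributes these anchors across feature levels is not reproduced here.

```python
import itertools
import math

SCALES = (4, 8, 16, 32, 64)  # anchor side lengths in pixels (from the text)
RATIOS = (0.5, 1, 2)         # aspect ratios (from the text)


def anchor_shapes(scales, ratios):
    """(width, height) of every scale/ratio combination.

    Assumed convention: ratio = width / height and each anchor keeps an area
    of scale**2, so width = scale * sqrt(ratio) and height = scale / sqrt(ratio).
    """
    return [(s * math.sqrt(r), s / math.sqrt(r)) for s, r in itertools.product(scales, ratios)]


def anchors_at(cx, cy, scales=SCALES, ratios=RATIOS):
    """Anchor boxes (x_min, y_min, x_max, y_max) centred on one position."""
    return [(cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2) for w, h in anchor_shapes(scales, ratios)]


boxes = anchors_at(128.0, 128.0)
print(len(boxes))  # 15
```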

These parameters were defined according to the coverage and proportions of the crowns in images of different spatial resolutions. The same scale interval was maintained, although slightly different values were found by [19]. Because the RoIs have different sizes and proportions, the algorithm homogenizes their sizes through a layer called RoIAlign. The resulting fixed-size RoIs are used as input to three different processes: (1) a softmax classifier, preceded by fully connected layers, that indicates the class and respective probability of each identified object; (2) a bounding box regressor, also preceded by fully connected layers, that delimits the object's bounding box; and (3) the branch added to Faster R-CNN by Mask R-CNN, which delineates the object's mask at the pixel level through convolutional layers [18].

The Mask R-CNN implementation was obtained from [32] and adapted. The adaptation allows the use of images with more than three bands and in TIFF format, preserving the geospatial information that supported the geolocation of the inferred masks. The implementation uses COCO-style annotations in JSON format to represent the ground truth required during training.

2.4. Individual Tree Canopy Dataset

To create the ground truth dataset, a methodology based on image processing techniques was adopted to reduce the time and effort demanded by manual work. This methodology simplifies the process and can be used in future works to create tree canopy datasets containing not only the point location or bounding rectangles of the instances but also masks that outline their limits. From a mask, the point and rectangle representations can be derived by extracting its centroid and bounding box, respectively.
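As a minimal sketch of that last point, assuming a canopy mask stored as a boolean NumPy array in pixel coordinates, the centroid and bounding box follow directly from the mask's pixel indices. The function name and the exclusive-maximum box convention are choices made for this example.

```python
import numpy as np


def centroid_and_bbox(mask: np.ndarray):
    """Derive the point (centroid) and rectangle (bounding box) representations of a canopy mask.

    Returns ((x, y), (x_min, y_min, x_max, y_max)) in pixel coordinates, where the
    max values are exclusive so that x_max - x_min is the box width.
    """
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("empty mask")
    centroid = (float(cols.mean()), float(rows.mean()))
    bbox = (int(cols.min()), int(rows.min()), int(cols.max()) + 1, int(rows.max()) + 1)
    return centroid, bbox


canopy = np.zeros((5, 5), dtype=bool)
canopy[1:4, 2:4] = True  # a 3 x 2 pixel crown
print(centroid_and_bbox(canopy))  # ((2.5, 2.0), (2, 1, 4, 4))
```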

The proposed methodology for canopy delineation (Figure 4) followed this sequence:

1. Extraction of the Color Index of Vegetation Extraction (CIVE) [33], given by

$$\mathrm{CIVE} = 0.441\,\mathit{red} - 0.811\,\mathit{green} + 0.385\,\mathit{blue} + 18.78745$$

where red, green, and blue are the respective bands of the image;

2. Application of morphological opening with two different window sizes (3 and 5 pixels) and of smoothing to the CIVE image;

3. Thresholding of the CIVE image, with a specific threshold for each image;

4. Conversion of the thresholded image from raster to vector (polygons) and selection of the area represented by the set of canopies;

5. Individualization of the polygon representing the set of canopies by manual clipping.

As a result of this sequence, manual work (Step 5) was only necessary to determine the separation line (or, where applicable, the intersection) between two canopies in regions where they were in contact, so full manual identification and delineation of each canopy became unnecessary.
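Steps 1 to 3 can be sketched with NumPy alone. This is an illustration under stated assumptions, not the authors' code: the openings are applied in the order 3 then 5 pixels, "smoothing" is taken to be a 3 × 3 mean filter, borders are edge-replicated, and vegetation is assumed to fall below the threshold because green is weighted negatively in CIVE. The threshold value itself is image-specific in the text and is hypothetical here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def cive(rgb):
    """Colour Index of Vegetation Extraction for an (H, W, 3) red-green-blue array."""
    rgb = rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return 0.441 * r - 0.811 * g + 0.385 * b + 18.78745


def _window_filter(img, size, reducer):
    """Apply `reducer` over a size x size neighbourhood (edge-replicated borders)."""
    pad = size // 2
    windows = sliding_window_view(np.pad(img, pad, mode="edge"), (size, size))
    return reducer(windows, axis=(-2, -1))


def grey_opening(img, size):
    """Morphological opening: erosion (min filter) followed by dilation (max filter)."""
    return _window_filter(_window_filter(img, size, np.min), size, np.max)


def vegetation_mask(rgb, threshold, sizes=(3, 5), smooth=3):
    """CIVE -> openings (3 and 5 px) -> mean smoothing -> threshold."""
    index = cive(rgb)
    for size in sizes:
        index = grey_opening(index, size)
    index = _window_filter(index, smooth, np.mean)  # assumed smoothing: 3 x 3 mean filter
    # Green is weighted negatively in CIVE, so vegetation takes the lower values.
    return index < threshold


patch = np.empty((20, 20, 3))
patch[...] = (150, 120, 90)        # bare soil
patch[5:15, 5:15] = (40, 120, 40)  # green crown
mask = vegetation_mask(patch, threshold=0.0)
print(mask[10, 10], mask[0, 0])  # True False
```

The resulting boolean mask would then be vectorized (Step 4) in the GIS before the manual clipping of Step 5.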

At the point of contact between two very dense trees, visually identifying the line that separates them can be difficult and biased if done only from the RGB image. However, considering the morphology of the canopies, neighboring trees commonly form a saddle point, regardless of how close they are, which facilitates their individual discrimination. Therefore, the CHM was used in addition to the RGB image to visually identify the borders between canopies; it provided information on the relief of the treetops, which was needed especially in areas where the trees could not be individualized from the color and texture of the RGB image.

Furthermore, to increase the feasibility of manual canopy delineation, an algorithm was developed to convert geographic data into COCO-style annotations, the format used by the Mask R-CNN implementation. Thus, all the steps described in this section were carried out in a GIS environment (QGIS version 3.16), and the masks represented by vector features (polygons) were then automatically converted into annotations (JSON format) referenced and georeferenced to their respective images.
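A conversion of this kind can be sketched as follows. It is a hypothetical illustration, not the authors' algorithm: it assumes a north-up raster described by a GDAL-style geotransform (no rotation terms), a single canopy class with `category_id` 1, and polygons already clipped to the patch. The annotation keys (`segmentation`, `area`, `bbox` as `[x, y, width, height]`, `iscrowd`) follow the COCO object annotation format.

```python
import json


def world_to_pixel(x, y, geotransform):
    """Map coordinates to (column, row) with a GDAL-style geotransform.

    Assumes a north-up raster: geotransform = (x_origin, pixel_width, 0, y_origin, 0, -pixel_height).
    """
    x0, px_w, _, y0, _, px_h = geotransform
    return (x - x0) / px_w, (y - y0) / px_h


def shoelace_area(points):
    """Polygon area from its vertices (shoelace formula)."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0


def polygon_to_coco(ring, geotransform, ann_id, image_id, category_id=1):
    """Convert one canopy polygon (map coordinates) into a COCO-style annotation dict."""
    if ring[0] == ring[-1]:
        ring = ring[:-1]  # drop the closing vertex repeated by GIS formats
    pts = [world_to_pixel(x, y, geotransform) for x, y in ring]
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    x_min, y_min = min(xs), min(ys)
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,  # single "canopy" class (assumed id 1)
        "segmentation": [[c for p in pts for c in p]],
        "area": shoelace_area(pts),
        "bbox": [x_min, y_min, max(xs) - x_min, max(ys) - y_min],
        "iscrowd": 0,
    }


geotransform = (500000.0, 0.5, 0.0, 7500000.0, 0.0, -0.5)  # hypothetical 0.5 m pixels
crown = [(500002.0, 7499998.0), (500007.0, 7499998.0), (500007.0, 7499993.0),
         (500002.0, 7499993.0), (500002.0, 7499998.0)]
print(json.dumps(polygon_to_coco(crown, geotransform, ann_id=1, image_id=1)))
```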

2.5. Training of Models

The preprocessed images were cut into square patches (aspect ratio = 1) to be used in the segmentation models. The patches overlap horizontally and vertically, and their size is proportional to the spatial resolution of each original image. In addition to the images, the vector ground truth data delineating the canopies were cut following the same grid. Thus, each sample used for training or inference corresponds to a patch.
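One way to lay out such an overlapping grid is sketched below. The patch size and overlap values are hypothetical (the actual values per image are in Table 2), and shifting the last patch so that it ends flush with the image border is an assumption of this sketch.

```python
def patch_origins(length, patch, overlap):
    """Start offsets of square patches along one axis, with a fixed overlap."""
    stride = patch - overlap
    if stride <= 0:
        raise ValueError("overlap must be smaller than the patch size")
    if length <= patch:
        return [0]
    origins = list(range(0, length - patch + 1, stride))
    if origins[-1] != length - patch:
        origins.append(length - patch)  # last patch flush with the image edge (assumption)
    return origins


def patch_grid(width, height, patch, overlap):
    """(x, y, size, size) windows covering a width x height image."""
    xs = patch_origins(width, patch, overlap)
    ys = patch_origins(height, patch, overlap)
    return [(x, y, patch, patch) for y in ys for x in xs]


print(patch_origins(1000, 256, 64))         # [0, 192, 384, 576, 744]
print(len(patch_grid(1000, 600, 256, 64)))  # 15
```

With this layout, any crown whose extent along each axis does not exceed the overlap lies entirely inside at least one patch, which is the property later relied on by the merge process.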

Two segmentation models were defined: the first (RGB model) uses images with the three original orthomosaic bands (red, green, and blue), and the second (RGBC model) uses four-band images (red, green, blue, and CHM). To homogenize the radiometric resolution across the bands of the RGBC images, the CHM was converted to 8 bits by a linear transformation. Hence, all bands of the patches used in each model have pixel values between 0 and 255.
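A minimal sketch of the 8-bit conversion and band stacking follows. Min-max scaling is assumed as the linear transformation; the height range may be taken from the raster itself or fixed by the caller (for instance, to keep heights comparable across plots), which is a design choice the text does not specify.

```python
import numpy as np


def chm_to_uint8(chm, h_min=None, h_max=None):
    """Linearly rescale canopy heights to 0-255.

    Assumption: min-max scaling; by default the range is taken from the raster itself.
    """
    chm = np.asarray(chm, dtype=np.float64)
    lo = chm.min() if h_min is None else h_min
    hi = chm.max() if h_max is None else h_max
    if hi <= lo:
        raise ValueError("height range must be positive")
    scaled = (np.clip(chm, lo, hi) - lo) / (hi - lo) * 255.0
    return np.round(scaled).astype(np.uint8)


def stack_rgbc(rgb, chm):
    """Append the 8-bit CHM as a fourth band to an 8-bit RGB patch."""
    return np.dstack([rgb.astype(np.uint8), chm_to_uint8(chm)])


chm = np.array([[0.0, 2.0], [4.0, 8.0]])
print(chm_to_uint8(chm))
```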

The analysis considered the three study areas jointly, without distinguishing between them, since the focus of the work is to evaluate and compare the canopy detection capability of RGB and RGBC images, considering the spatial complexity and variability of planting characteristics, such as orange variety, orchard age, presence of vegetation cover or exposed soil between planting rows, and different tree heights and planting densities. Therefore, training, training validation, and inference were performed considering the three plots uniformly.

In total, 1715 patches were used for each of the two models: 1031 (60%) for training, 342 (20%) for training validation, and 342 (20%) for testing. The characteristics of each patch set are shown in Table 2. Across all patches of the three fields, the maximum number of instances (canopies or canopy fractions) per patch was 30.

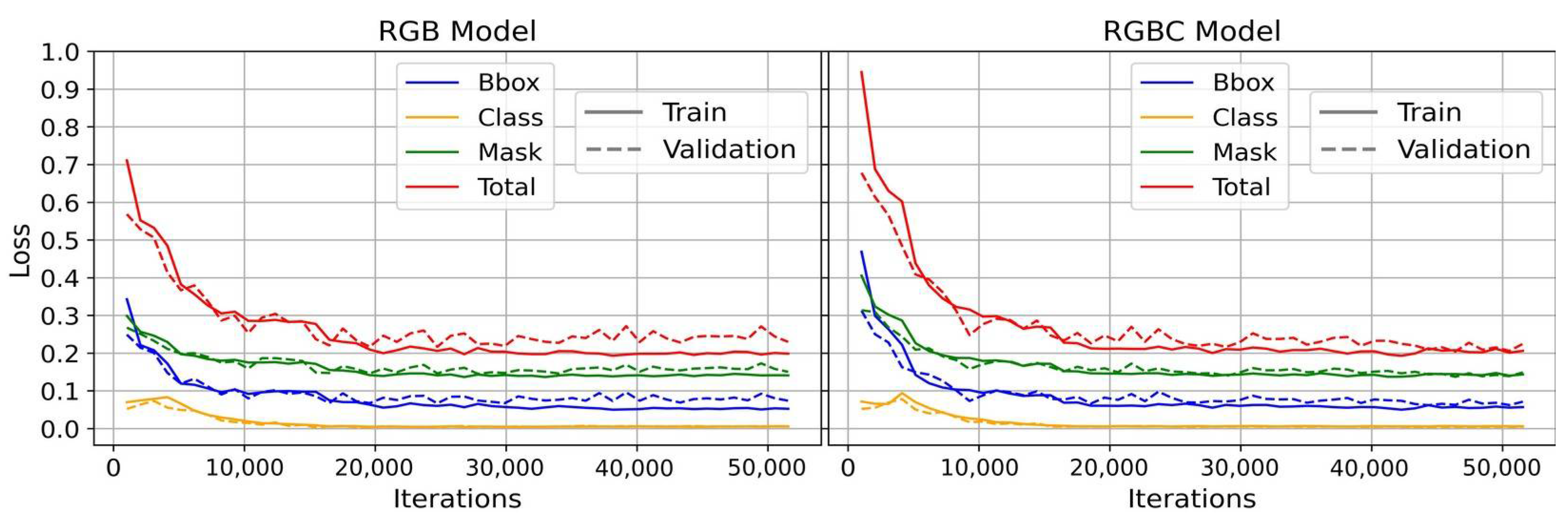

Both models were trained in the same way, with an initial learning rate of 0.001 and a momentum of 0.9. An epoch corresponds to one pass over all training samples and is divided into iterations, each processing one batch of the same number of samples. Fifty epochs were adopted, each with 1031 iterations, and, to refine learning, the learning rate was reduced 10-fold in successive stages: to 0.0001 between epochs 15 and 27, to 0.00001 between epochs 27 and 39, and to 0.000001 between epochs 39 and 50.
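The step schedule described above can be written as a small function. Whether each drop applies from the start of epoch 15, 27, and 39 (counting from 0) or from the following epoch is not stated in the text; the sketch assumes the former.

```python
BASE_LR = 0.001
MILESTONES = (15, 27, 39)  # epochs at which the rate drops 10x (from the text)
EPOCHS = 50
ITERATIONS_PER_EPOCH = 1031


def learning_rate(epoch, base_lr=BASE_LR, milestones=MILESTONES, factor=0.1):
    """Step schedule; `epoch` is 0-based and a drop takes effect at each milestone (assumption)."""
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * factor ** drops


for epoch in (0, 14, 15, 27, 39, 49):
    print(epoch, learning_rate(epoch))
print("total iterations:", EPOCHS * ITERATIONS_PER_EPOCH)  # 51550
```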

The models were not initialized randomly. Both networks were loaded with weights pre-trained on the COCO dataset. However, these weights correspond to pre-training on RGB images, and because the RGBC model uses four-band images, the weights of its first layer were initialized randomly. Additionally, data augmentation was randomly applied to the training images before they entered the models; each sample was altered by one or two of the following transformations: (1) horizontal mirroring, (2) vertical mirroring, (3) rotation by 90°, 180°, or 270°, and (4) change of pixel brightness values within the interval of 50% to 150%.
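The augmentation policy can be sketched with NumPy as follows. Assumptions of this sketch: the brightness change is a multiplicative factor drawn uniformly from [0.5, 1.5] and applied to every band of the patch (including the CHM band in the RGBC case), geometric transformations are applied identically to the instance masks, and the function name `augment` is hypothetical.

```python
import numpy as np


def augment(image, masks, rng):
    """Apply one or two randomly chosen transformations to an image patch and its masks.

    image: (H, W, C) uint8 patch (C = 3 for RGB, 4 for RGBC); masks: (H, W, N) boolean
    instance masks. Geometric transforms are applied to both; brightness only to the image.
    """
    n_ops = int(rng.integers(1, 3))  # one or two transformations
    for op in rng.choice(4, size=n_ops, replace=False):
        if op == 0:    # (1) horizontal mirroring
            image, masks = image[:, ::-1], masks[:, ::-1]
        elif op == 1:  # (2) vertical mirroring
            image, masks = image[::-1], masks[::-1]
        elif op == 2:  # (3) rotation by 90, 180 or 270 degrees
            k = int(rng.integers(1, 4))
            image, masks = np.rot90(image, k, axes=(0, 1)), np.rot90(masks, k, axes=(0, 1))
        else:          # (4) brightness scaled by a factor in [0.5, 1.5]
            factor = rng.uniform(0.5, 1.5)
            image = np.clip(image.astype(np.float64) * factor, 0, 255).astype(np.uint8)
    return np.ascontiguousarray(image), np.ascontiguousarray(masks)


rng = np.random.default_rng(0)
img = np.full((8, 8, 4), 100, dtype=np.uint8)
masks = np.zeros((8, 8, 2), dtype=bool)
masks[0:2, 0:3, 0] = True
out_img, out_masks = augment(img, masks, rng)
```

Because the patches are square, 90° and 270° rotations keep the patch shape unchanged.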

The training and validation of Mask R-CNN are evaluated with a set of loss metrics, each assessing a particular aspect of the detection task. The metrics used in this study are (1) class loss, which indicates how close the model is to the correct class, i.e., canopy or background; (2) bounding box loss, the difference between the reference and inferred bounding box parameters (height and width); (3) mask loss, the pixel-level difference between the reference and inferred masks; and (4) total loss, the sum of the other losses. After the training phase, the metrics were computed, and the learning evolution of the two models is shown in Figure 5.

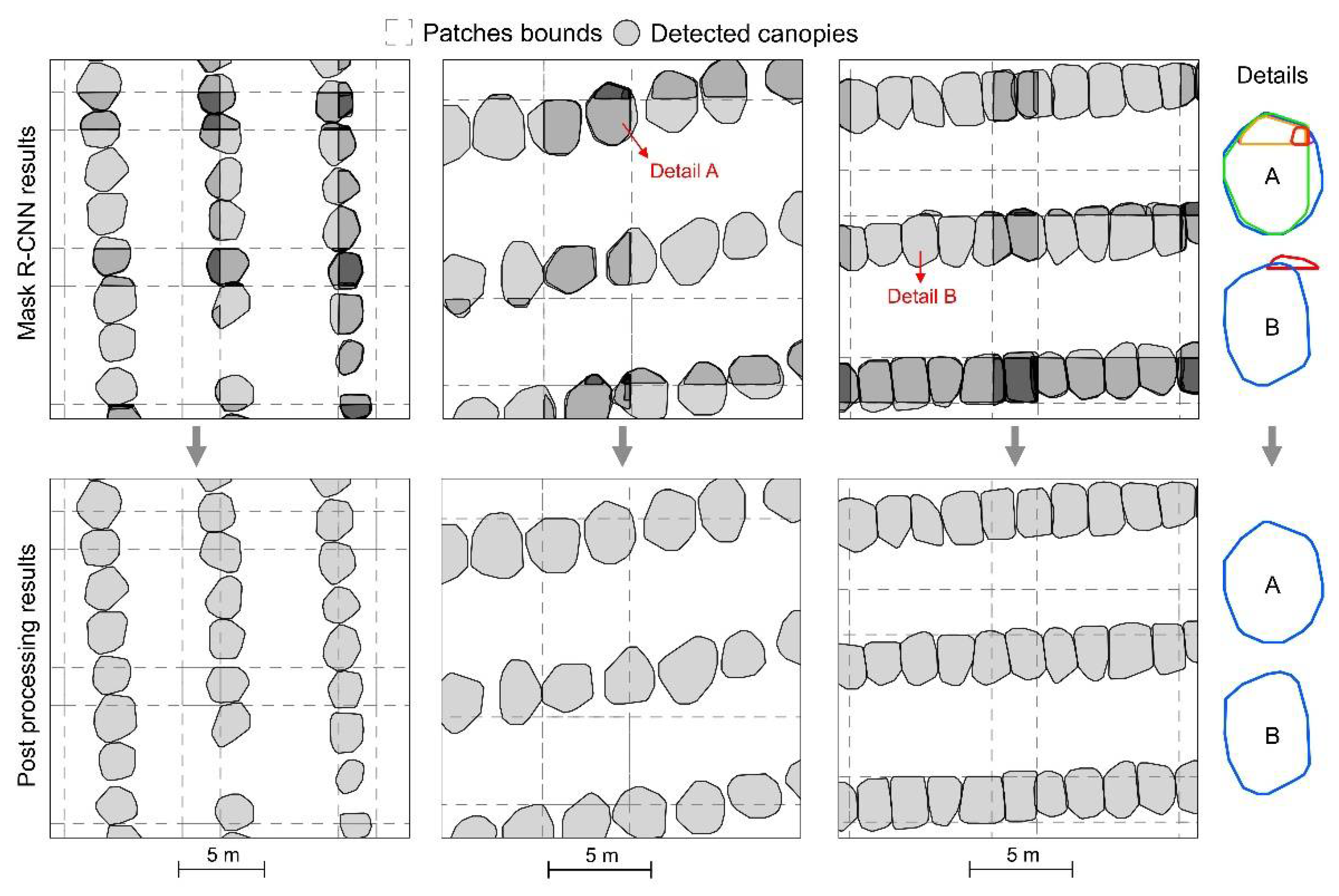

2.6. Unsupervised Merge Process

When the models are applied for inference, they yield the possible limits of the trees in the original images, fragmented by the patches. However, because the patches overlap, some trees may be detected redundantly. A redundancy can be a part of a tree located at the edge of a patch or an entirely duplicated canopy, and it is identified by the overlap of two or more masks related to the same tree. To prevent redundancies from degrading detection accuracy, a result aggregation method was developed based on the topological relationships between the inferred candidate masks.

The overlap between patches was defined so that at least one of the generated patches fully covers each treetop in the intersection region. Therefore, refining the result only requires identifying and deleting redundant masks; merging two or more masks into a final polygon is unnecessary. Candidate masks that do not overlap any other mask are left unchanged.

It is noteworthy that in stands with high plant density, overlap between trees can occur naturally; given this possibility, overlapping tree segments cannot simply be excluded. During the analysis, it was observed that doing so excludes non-redundant masks and consequently reduces detection accuracy.

The unsupervised algorithm proposed for this activity consists of the following steps:

1. For each candidate mask, calculate its intersections with all other masks;

2. Evaluate the spatial relationship between the intersections and the mask, and exclude the mask if any of the following conditions is met:

- 2.a At least one intersection has an area of at least 50% of the area of the mask;

- 2.b The sum of all intersection areas of the mask is at least 50% of its area;

- 2.c The mask area is less than 1/3 of the area of any other mask intersecting it.

The refinement conditions were selected empirically from the raw results obtained in the different plots. Natural overlap between treetops is subtle and does not exceed 50% of either canopy, whether it is unilateral (condition 2.a) or bilateral (condition 2.b). Such overlap occurs between two large trees of similar area, and a larger overlap is unlikely given the homogeneous spacing adopted in planting. The overlap between a small canopy and a large canopy (condition 2.c) helps remove false detections, often related to weeds in the plants' surroundings.
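The exclusion rules can be sketched on rasterized masks. This is a simplified illustration, not the authors' implementation: it assumes all candidate masks have been burned into boolean arrays on the common mosaic grid (instead of vector polygons), and it evaluates masks from smallest to largest, removing each excluded mask from the pool before the next one is checked. The text does not specify this order; evaluating all masks simultaneously would delete both copies of an exact duplicate, since each intersects the other completely.

```python
import numpy as np


def merge_candidates(masks, frac=0.5, small_ratio=1 / 3):
    """Return the indices of candidate masks kept after conditions 2.a, 2.b and 2.c.

    masks: list of boolean arrays sharing one raster grid (assumed rasterization).
    """
    areas = [int(m.sum()) for m in masks]
    active = set(range(len(masks)))
    # Assumption: smallest masks first; excluded masks no longer count for later checks.
    for i in sorted(range(len(masks)), key=lambda k: areas[k]):
        if areas[i] == 0:
            active.discard(i)
            continue
        inters = [(j, int(np.logical_and(masks[i], masks[j]).sum())) for j in active if j != i]
        inters = [(j, a) for j, a in inters if a > 0]
        cond_a = any(a >= frac * areas[i] for _, a in inters)               # 2.a
        cond_b = sum(a for _, a in inters) >= frac * areas[i]               # 2.b
        cond_c = any(areas[i] < small_ratio * areas[j] for j, _ in inters)  # 2.c
        if cond_a or cond_b or cond_c:
            active.discard(i)
    return sorted(active)


grid = (20, 20)
tree = np.zeros(grid, bool); tree[2:10, 2:10] = True          # full crown
fragment = np.zeros(grid, bool); fragment[2:10, 6:10] = True  # same crown cut at a patch edge
neighbor = np.zeros(grid, bool); neighbor[2:10, 9:17] = True  # touching neighbour (natural overlap)
isolated = np.zeros(grid, bool); isolated[12:14, 12:14] = True
weed = np.zeros(grid, bool); weed[9:12, 14:17] = True         # small detection overlapping a crown
print(merge_candidates([tree, fragment, neighbor, isolated, weed]))  # [0, 2, 3]
```

In the example, the edge fragment is removed by condition 2.a, the small overlapping detection by condition 2.c, and the two touching crowns both survive because their natural overlap is small.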

The development of this algorithm allowed the adoption of a unified, automated framework that includes all the segmentation procedures of the two previously trained models. It receives as input the orthomosaics and CHMs from preprocessing, and its output is the vector data delineating the canopies over the entire inference area, without any trace of the patch division adopted for Mask R-CNN (Figure 6). After inference, the treetops were validated by comparison with the manually extracted ground truth data.

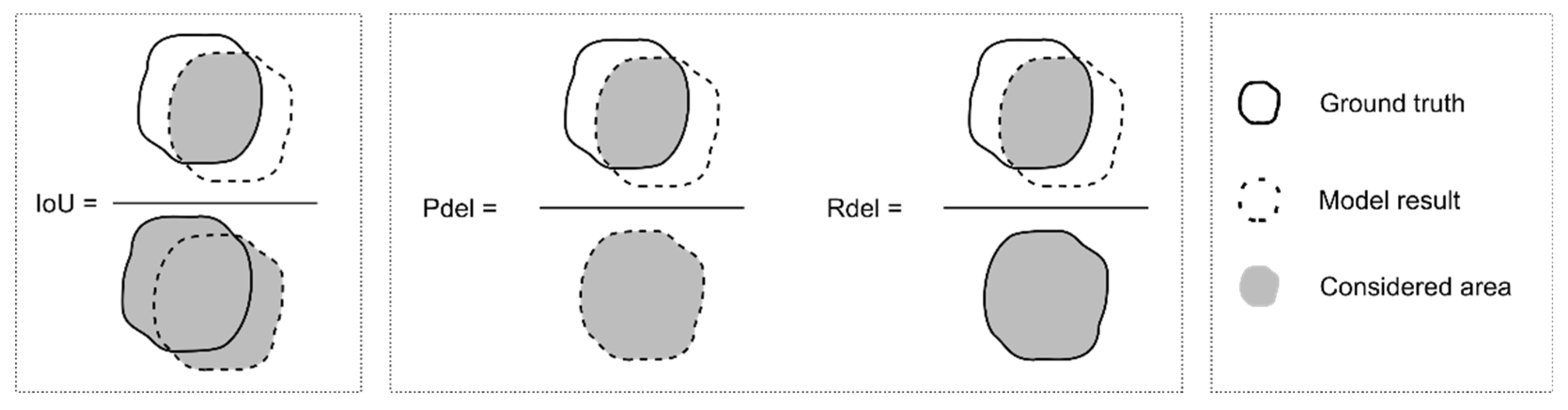

2.7. Evaluation Metrics

To validate the proposed method, two evaluation approaches were applied to each segmentation model. The first focused on the performance of individual tree detection in the test area, and the second on the precision of the delineation of each identified canopy. Because the metrics of the two approaches have similar names, a suffix distinguishes them: _det for detection and _del for delineation. In both cases, the validation considered the entire set of inferred and reference canopies.

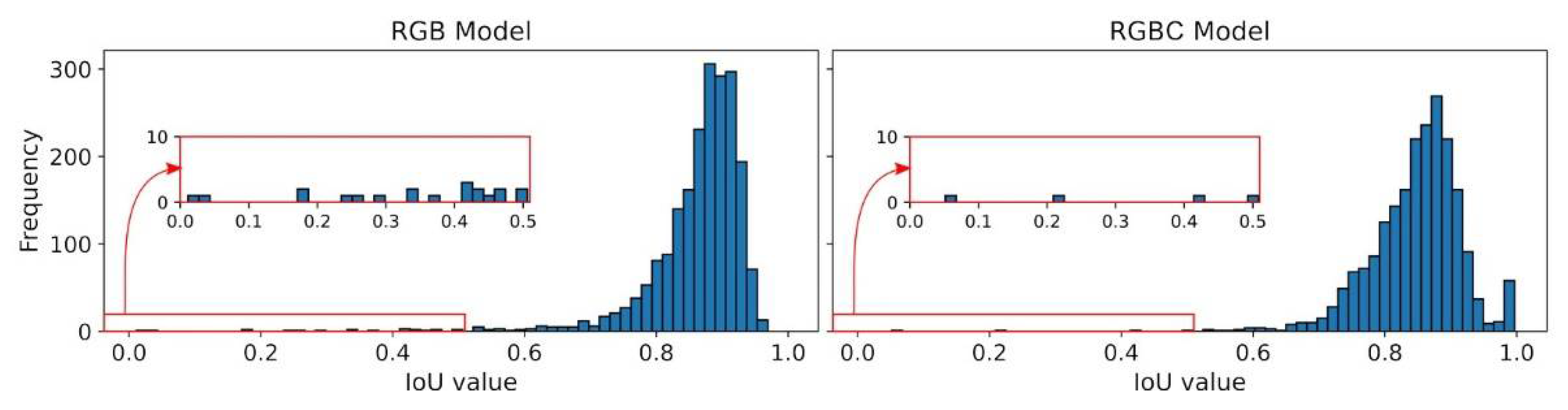

2.7.1. Number of Detected Trees

All inferred trees were validated through the spatial relationship between their limits and the borders of their respective ground truth, using the Intersection over Union (IoU) metric (Figure 7). IoU is the ratio between the area of the intersection of the corresponding pair (ground truth and inference) and the area of their union:

$$\mathrm{IoU} = \frac{\mathrm{area}(M_{ref} \cap M_{inf})}{\mathrm{area}(M_{ref} \cup M_{inf})}$$

where $M_{ref}$ and $M_{inf}$ are the reference and inferred masks. The closer the outlines of the two masks, the closer the IoU value is to 1. A sample was considered a true positive (TP) when IoU > 0.5, and, to guarantee the integrity of the validation, each reference was used only once when compared with the inferred canopies.

Hence, three possible results were considered: (1) TP, when the tree was correctly identified; (2) false negatives (FN), when an omission error occurred, i.e., an existing plant was not identified; and (3) false positives (FP), when an inferred mask did not correspond to an existing plant, resulting in a commission error.

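From these results, precision_det (Pdet), recall_det (Rdet), F1-score (Fdet), and overall accuracy (OA) were computed for each of the two models using the following equations:

$$P_{det} = \frac{TP}{TP + FP}$$

$$R_{det} = \frac{TP}{TP + FN}$$

$$F_{det} = \frac{2\,P_{det}\,R_{det}}{P_{det} + R_{det}}$$

$$OA = \frac{TP}{TP + FP + FN}$$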
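The matching rule and the detection metrics can be sketched together. The greedy strategy below (each inferred mask takes the unused reference with the highest IoU above 0.5) is an assumption; the text only requires IoU > 0.5 and that each reference be used once. Masks are assumed to be boolean arrays on a common grid.

```python
import numpy as np


def iou(a, b):
    """Intersection over Union of two boolean masks on the same grid."""
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0


def detection_metrics(inferred, references, threshold=0.5):
    """Greedy one-to-one matching (IoU > threshold) followed by P, R, F1 and OA."""
    used, pairs = set(), []
    for i, inf in enumerate(inferred):
        best_j, best_iou = None, threshold
        for j, ref in enumerate(references):
            if j in used:
                continue
            value = iou(inf, ref)
            if value > best_iou:  # strict: IoU must exceed the threshold
                best_j, best_iou = j, value
        if best_j is not None:
            used.add(best_j)  # each reference is used only once
            pairs.append((i, best_j, best_iou))
    tp = len(pairs)
    fp, fn = len(inferred) - tp, len(references) - tp
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    oa = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"TP": tp, "FP": fp, "FN": fn, "P": p, "R": r, "F1": f1, "OA": oa, "pairs": pairs}


ref0 = np.zeros((10, 10), bool); ref0[0:4, 0:4] = True
ref1 = np.zeros((10, 10), bool); ref1[6:10, 6:10] = True
spurious = np.zeros((10, 10), bool); spurious[0:2, 6:8] = True
m = detection_metrics([ref0.copy(), spurious], [ref0, ref1])
print(m["TP"], m["FP"], m["FN"])  # 1 1 1
```

With OA defined as above, OA = F1 / (2 − F1), so the two detection metrics always move together.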

2.7.2. Tree Canopy Delineation

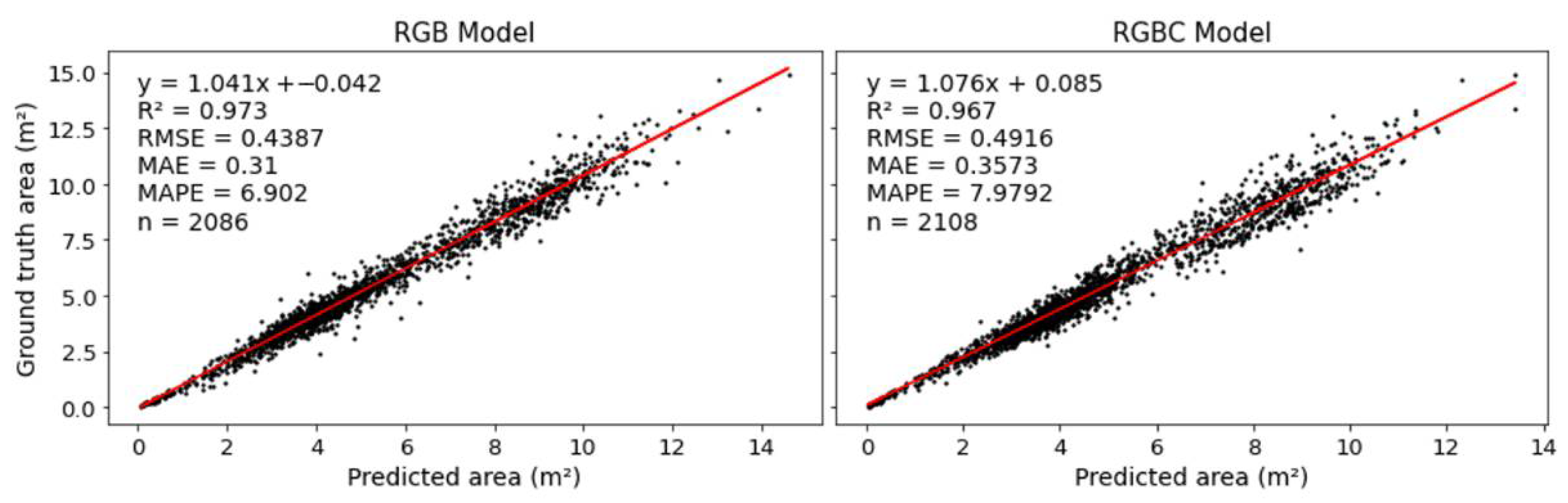

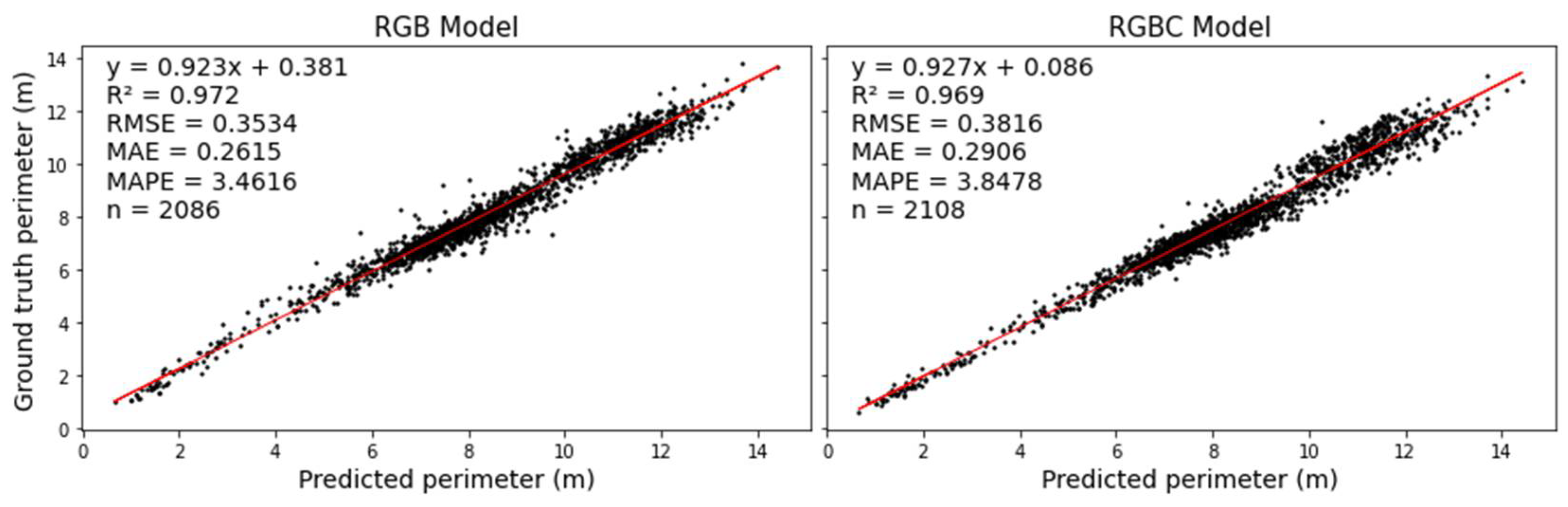

The validation of object delineation can be performed with different methods. In this study, more than one approach was adopted to allow comparison with the existing literature and to support future works. The validation included the IoU value itself, the metrics precision_del (Pdel), recall_del (Rdel), and F1-score_del (F1del), and a statistical analysis of the canopy area values by linear regression.

The Pdel and Rdel metrics were calculated by extending the approach proposed by [29], who analyzed the relationship between the areas of the bounding boxes of the inferred and reference segments and their intersections. The modification relative to that work is that the delineating masks themselves were considered instead of the bounding boxes. Pdel is the ratio between the intersection area of each corresponding pair and the area of the inferred mask; Rdel is the ratio between the intersection area and the area of the reference mask; and F1-score_del is obtained from the F1-score equation (equation IV), with Pdel and Rdel replacing Pdet and Rdet, respectively.

Figure 7 displays each metric graphically.
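For a single matched pair, the delineation metrics reduce to area ratios. The sketch below assumes boolean masks on a common grid; averaging over all matched pairs, as in the reported mean values, would simply take the mean of these per-pair scores.

```python
import numpy as np


def delineation_scores(inferred, reference):
    """Pdel, Rdel and F1del for one matched pair of boolean masks."""
    inter = float(np.logical_and(inferred, reference).sum())
    a_inf, a_ref = float(inferred.sum()), float(reference.sum())
    p = inter / a_inf if a_inf else 0.0
    r = inter / a_ref if a_ref else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


reference = np.zeros((6, 6), dtype=bool)
reference[1:5, 1:5] = True  # 16-pixel reference crown
inferred = np.zeros((6, 6), dtype=bool)
inferred[1:5, 1:3] = True   # 8-pixel inferred mask lying inside the reference
print(delineation_scores(inferred, reference))  # Pdel = 1.0, Rdel = 0.5, F1del ≈ 0.667
```

An inferred mask that lies entirely inside its reference has perfect Pdel but reduced Rdel, which is why both are needed to describe the quality of a delineation.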

A regression analysis was performed to evaluate the relationship between the area and perimeter of the reference canopies and those estimated by the proposed method. For each analysis, four metrics were computed: the coefficient of determination (R²), which indicates the degree of correlation between the two variables; the Root Mean Squared Error (RMSE); the Mean Absolute Error (MAE); and the Mean Absolute Percentage Error (MAPE). The higher the R², the stronger the correlation between the variables; the lower the RMSE, MAE, and MAPE, the closer the estimated values are to the reference values.
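The four regression metrics can be computed as follows. This sketch assumes R² is taken from an ordinary least-squares line of estimated versus reference values and that the errors are estimated minus reference; the example areas are hypothetical.

```python
import numpy as np


def regression_metrics(reference, estimated):
    """R² of a least-squares line (estimated vs reference) plus RMSE, MAE and MAPE (%)."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimated, dtype=np.float64)
    slope, intercept = np.polyfit(ref, est, 1)
    fitted = slope * ref + intercept
    r2 = 1.0 - np.sum((est - fitted) ** 2) / np.sum((est - est.mean()) ** 2)
    err = est - ref
    return {
        "R2": float(r2),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAE": float(np.mean(np.abs(err))),
        "MAPE": float(100.0 * np.mean(np.abs(err / ref))),
    }


# Hypothetical canopy areas (m²) for four matched trees.
print(regression_metrics([10, 20, 30, 40], [12, 18, 33, 39]))
```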

5. Conclusions

In this study, we evaluated the use of images captured by UAVs and of photogrammetry techniques based on the SfM algorithm for the identification and delineation of treetops at different spatial densities using deep learning. The adopted model architecture, Mask R-CNN, represents the state of the art in instance segmentation, and the images used came exclusively from the RGB sensors of digital cameras. Additionally, a methodology for producing the ground truth was adopted, which increased the feasibility of the analysis. An unsupervised algorithm was developed that contributes to the automation of instance segmentation in remote sensing images.

Therefore, the main contributions of the proposed approach are: (1) reduced effort to produce the ground truth and the training samples; (2) a model that identifies and delineates dense tree canopies in images with different characteristics with highly accurate results (detection: overall accuracy and F1-score of 97.01% and 98.48%, respectively; delineation: average IoU of 0.848 for detections with IoU > 0.5 and average F1-score of 91.6%); and (3) a methodology that encapsulates all the procedures adopted, reducing the need for manual operation.

The analysis did not cover all possibilities for improving the instance segmentation process with SfM-based data. Future works will explore other agricultural and forestry crops whose crowns also present high spatial density, using data with different imaging characteristics.