Abstract

Video delivery is exploiting 5G networks to enable higher server consolidation and deployment flexibility. Performance optimization is also a key target in such network systems. We present a multi-objective optimization framework for service function chain deployment in the particular context of Live-Streaming in virtualized content delivery networks using deep reinforcement learning. We use an Enhanced Exploration, Dense-reward mechanism over a Dueling Double Deep Q Network (E2-D4QN). Our model assumes network function virtualization at the container level. We carefully model processing times as a function of current resource utilization in data ingestion and streaming processes. We assess the performance of our algorithm under bounded network resource conditions to build a safe exploration strategy that enables the market entry of new bounded-budget vCDN players. Trace-driven simulations with real-world data reveal that, compared with state-of-the-art algorithms designed for general-case service function chain deployment, our approach is the only one that adapts to the complexity of the particular context of Live-Video delivery. In particular, our simulation tests revealed a substantial QoS/QoE improvement in terms of session acceptance ratio over the compared algorithms while keeping operational costs within proper bounds.

1. Introduction

Video traffic occupies more than three-quarters of total internet traffic nowadays, and its share keeps growing [1]. Such growth is mainly characterized by Over-The-Top (OTT) video delivery. Content providers need OTT Content Delivery systems to be efficient, scalable, and adaptive [2]. For this reason, cost and Quality of Service (QoS) optimization for video content delivery systems is an active research area. Much of the research effort in this context focuses on the optimization [3] and modeling [4] of Content Delivery systems’ performance.

Content Delivery Networks (CDN) are distributed systems that optimize the end-to-end delay of content requests over a network. CDN systems are based on the redirection of requests and content reflection. Well-designed CDN systems warrant good Quality of Service (QoS) and Quality of Experience (QoE) for widely distributed users. CDNs use replica servers as proxies for the content providers’ origin servers. Traditional CDN systems are deployed on dedicated hardware and thereby incur high capital and operational expenditures. On the other hand, virtualized Content Delivery Networks (vCDN) use Network Function Virtualization (NFV) [5] and Software-defined Networking (SDN) [6] to deploy their software components on virtualized network infrastructures like virtual machines or containers. NFV and SDN are key enablers for 5G networks [7]. Over the last two years, numerous Internet service providers (ISP), mobile virtual network operators (MVNO), and other network market players have been exploiting 5G networks to broaden the spectrum of network services they offer [8]. In this context, virtualized CDN systems are taking advantage of 5G networks to enable higher server consolidation and flexibility during deployment [9,10]. vCDNs reduce both capital and operational expenditures with respect to CDNs deployed on dedicated hardware [11]. Further, vCDNs are edge-computing compliant [12] and make win-win strategies between ISP and CDN providers possible [13].

1.1. Problem Definition

Virtualized network systems are usually deployed as a composite chain of Virtual Network Functions (VNF), often called a service function chain (SFC). Every incoming request to a virtualized network system is mapped to a corresponding deployed SFC. The problem of deploying an SFC inside a VNF infrastructure is called VNF Placement or SFC Deployment [14]. Many service requests can share the same SFC deployment scheme, or their SFC deployments can differ. Given two service requests that share the same requested chain of VNFs, their SFC deployments differ when at least one pair of same-type VNFs is deployed at different physical locations for the two requests.

This work focuses on the particular case of Live-Video delivery, also referred to as live-streaming. In such a context, each service request is associated with a Live-Video streaming session. CDNs have proved essential to meet scalability, reliability, and security requirements in Live-Video delivery scenarios. One important Quality of Experience (QoE) measure in live-video streaming is the session startup delay, which is the time the end-user waits between requesting the content and seeing the video displayed. One important factor that influences the startup delay is the round-trip-time (RTT) of the session request, which is the time between sending the content request and receiving the response. In Live-Streaming, the data requested by each session is determined only by the particular content provider or channel requested. Notably, cache HIT and cache MISS events may result in very different request RTTs. Consequently, a realistic Live-Streaming vCDN model should keep track of the caching memory status of every cache-VNF module for fine-grained RTT simulation.

Different SFC deployments may result in different RTTs for live-video sessions. The QoS/QoE quality of a particular SFC deployment policy is generally measured by the mean acceptance ratio (AR) of client requests, where the acceptance ratio is defined as the percentage of requests whose RTT is below a maximum threshold [14,15,16]. Notice that RTT differs from the total delay, which is the total propagation time of the data stream between the origin server and the end-user.
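As a minimal sketch of the acceptance-ratio definition (not code from the cited works), the ratio can be computed from per-request RTTs and a threshold. The function and parameter names are hypothetical, the sketch returns a fraction rather than a percentage, and it counts a request as accepted when its RTT does not exceed the threshold.

```python
from typing import Sequence

def acceptance_ratio(rtts: Sequence[float], max_rtt: float) -> float:
    """Fraction of requests whose RTT is within max_rtt (multiply by 100 for a percentage)."""
    if len(rtts) == 0:
        raise ValueError("acceptance ratio is undefined without requests")
    accepted = sum(1 for rtt in rtts if rtt <= max_rtt)  # requests meeting the RTT threshold
    return accepted / len(rtts)

# Example: two of four requests meet a threshold of 100 time units.
print(acceptance_ratio([80.0, 120.0, 95.0, 300.0], max_rtt=100.0))  # 0.5
```

The mean AR of a policy would then be the average of this value over time-steps.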

Another important factor that influences RTT computation is the request processing time, which depends notably on the current VNF utilization. To model VNF utilization in a video-delivery context, major video streaming companies [17] recommend considering not only the content-delivery tasks but also the resource consumption associated with content-ingestion processes. In other words, any VNF must ingest a particular data stream before being able to deliver it through its own client connections, and such ingestion incurs non-negligible resource usage. Further, a realistic vCDN delay model must incorporate VNF instantiation times, as they may notably increase the startup delay of any video-streaming session. Finally, both instantiation time and resource consumption may differ significantly depending on the specific characteristics of each VNF [3].

In this paper, we model a vCDN following the NFV Management and Orchestration (NFV-MANO) framework specified by the ETSI standards group [9,18,19,20]. We propose elastic container-based virtualization of a CDN inspired by [5,19]. One of the management software components of a vCDN in the ETSI standard is the Virtual Network Orchestrator (VNO), which is generally responsible for the dynamic scaling of the containers’ resource provisioning [19]. Resource scaling is triggered when needed, for example, when traffic bursts occur. Such resource scaling may influence the resource provisioning costs, also called hosting costs, especially in a cloud-hosted vCDN context [20]. Also, data-transportation (DT) routes in the substrate network of a vCDN may be affected by different SFC deployment decisions. Consequently, DT costs may also vary [9], especially in a multi-cloud environment such as the one considered in this work. Thus, DT costs may also be an important part of the operational costs of a vCDN.

Finally, Online SFC Deployment implies taking fine-grained control decisions over the deployment scheme of SFCs while the system is running. For example, one could associate one SFC deployment with each incoming request to the system, or keep the same SFC deployment for different requests but be able to change it whenever requested. In all cases, Online SFC Deployment implies that the SFC policy can be adapted each time a new SFC request comes into the system. Offline deployment instead makes one-time decisions over aggregations of input traffic to the system [21]. The effectiveness of offline optimization relies on the estimation accuracy of future environment characteristics [22]. Such estimation may not be trivial, especially in contexts where incoming traffic patterns may be unpredictable or request characteristics might be heterogeneous, as is the case in Live-Streaming [9,14,23,24]. Consequently, in this work, we consider the Online optimization of a Live-Streaming vCDN SFC deployment, and we seek such optimization in terms of both QoS/QoE and operational costs, where the latter comprise hosting costs and data-transportation costs.

1.2. Related Works

SFC Deployment is an NP-hard problem [25]. Several exact optimization models and sub-optimal heuristics have been proposed in recent years. Ibn-Khedher et al. [26] defined a protocol for optimal VNF placement based on SDN traffic rules and an exact optimization algorithm. This work solves the optimal VNF placement, migration, and routing problem, modeling various system and network metrics as costs and user satisfaction. HPAC [27] is a heuristic proposed by the same authors to scale the solutions of [26] to bigger topologies based on the Gomory-Hu tree transformation of the network. Their call for future work includes the need for dynamically triggered adaptation, for example by monitoring user demands, network loads, and other system parameters. Yala et al. [28] present a resource allocation and VNF placement optimization model for vCDN and a two-phase offline heuristic for solving it in polynomial time. This work models server availability through an empirical probabilistic model and optimizes this score alongside the VNF deployment costs. Their algorithm produces near-optimal solutions in minutes for a Network Function Virtualization Infrastructure (NFVI) deployed on a substrate network of as many as 600 physical machines. They base their dimensioning criteria on extensive QoE-aware benchmarking of video streaming VNFs.

Authors in [20] keep track of resource utilization in an optimization model for multi-cloud placement of VNF chains. Utilization statistics per node and network statistics per link are taken into account in a simulation/optimization framework for VNF placement in vCDN in [29]. This offline algorithm can handle large-scale graph topologies, as it is designed to run on a parallel supercomputer environment. This work analyzes the effect of routing strategies on the results of the placement algorithm, which performs better with a greedy max-bandwidth routing approach. The caching state of each cache-VNF is modeled with a probabilistic function in this work. Offline optimization of Value Added Service (VAS) chains in vCDN is proposed in [30], where authors model an Integer Linear Programming (ILP) problem to optimize QoS and provider costs. This work models license costs for each VNF added in a new physical location. An online alternative is presented in [31], where authors model the cost of VNF instantiations when optimizing online VNF placement for vCDN. However, this model does not penalize the Round Trip Time (RTT) of requests with the instantiation time of such VNFs. More scalable solutions for this problem are obtained with heuristic-based approaches like the one in [32]. On the other hand, regularization-based techniques are used to present an online VNF placement algorithm for geo-distributed VNF chain requests in [32]. This work optimizes different costs and the end-to-end delay, providing near-optimal solutions in polynomial time.

Robust Optimization (RO) has also been applied to solve various network-related optimization problems. RO and stochastic programming techniques have been used to model optimization under scenarios characterized by data uncertainty. Uncertainty in network traffic fluctuations or in the amount of requested resources can be modeled when seeking to minimize network power consumption [33] or the costs related to cloud resource provisioning [34], for example. In [35], Marotta et al. present a VNF placement and SFC routing optimization model that minimizes power consumption, taking into account that resource requirements are uncertain and fluctuate in virtual Evolved Packet Core scenarios. This algorithm is enhanced in subsequent work [36], where the authors improve the scalability of their solution by dividing the task into sub-problems and adopting various heuristics. This improvement permits solving high-scale VNF placement in less than a second, making the algorithm suitable for online optimization. Remarkably, congestion-induced delay is modeled in this work. Ito et al. [37] instead provide various models of the VNF placement problem where the objective is to guarantee probabilistic failure recovery with the minimum required backup capacity. The authors in [37] model uncertainty in both failure events and virtual machine capacity.

Deep Reinforcement Learning (DRL) based approaches have also been proposed to solve SFC deployment. DRL algorithms have recently evolved to solve problems on high-dimensional action spaces through state-space discretization [38], Policy Learning [39,40], and sophisticated Value learning algorithms [41]. Network-related problems like routing [42] and VNF forwarding graph embedding [43,44,45] have been solved with DRL techniques. Authors in [46] use the Deep Q-learning framework to implement a VNF placement algorithm that is aware of server reliability. A policy learning algorithm is used for optimizing operational costs and network throughput in SFC Deployment in [14]. A fault-tolerant version of SFC Deployment is presented in [47], where authors use a Double Deep Q-network (DDQN) and propose different resource reservation schemes to balance the waste of resources and ensure service reliability. Authors in [48] assume accurate incoming traffic predictions as input and use a DDQN-based algorithm for choosing small-scale network sub-regions to optimize every 15 min. That work uses a threshold-based policy to optimize the number of fixed-dimensioned VNF instances. A Proximal Policy Optimization DRL scheme is used in [49] to jointly minimize packet loss and server energy consumption in a cellular network SFC deployment environment. The advantage of DRL approaches with respect to traditional optimization models is the constant time complexity of decisions reached after training. A well-designed DRL framework has the potential to achieve complex feature learning and near-optimal solutions even in previously unseen situations [23].

1.3. Main Contribution

To the best of the authors’ knowledge, this work is the first VNF-SFC deployment optimization model for the particular case of Live-Streaming vCDN. We propose a vCDN model that takes into account, at the same time:

- VNF-instantiation times,

- Content-delivery and content-ingestion resource usage,

- Utilization-dependent processing times,

- Fine-grained cache-status tracking,

- Operational costs composed of data-transportation costs and hosting costs,

- Multi-cloud deployment characteristics,

- No a-priori knowledge of session duration.

We seek to jointly optimize QoS and operational costs with an Online DRL-based approach. To achieve this objective, we propose a dense-reward model and an enhanced-exploration mechanism over a dueling-DDQN agent, a combination that leads to convergence to sub-optimal SFC deployment policies.

Further, in this work, we model bounded network resource availability to simulate network overload scenarios. Our aim is to create and validate a safe-exploration framework that facilitates the assessment of market-entry conditions for new cloud-hosted Live-Streaming vCDN operators.

Our experiments show that our proposed algorithm is the only one that adapts to the model conditions, maintaining an acceptance ratio above those of the state-of-the-art techniques for SFC deployment optimization while keeping a satisfactory balance between network throughput and operational costs. This paper can be seen as an upgrade proposal for the framework presented in [14]. The optimization objective of SFC deployment that we pursue is the same: maximizing the QoS and minimizing the operational costs. We enhance the algorithm used in [14] to find a suitable DRL technique for the particular case of Live-Streaming in vCDN scenarios.

2. Materials and Methods

2.1. Problem Modeling

We now rigorously model our SFC Deployment optimization problem. First, the system elements that are part of the problem are identified. We then briefly formulate a high-level optimization statement. Subsequently, our optimization problem’s decision variables, penalty terms, and feasibility constraints are described. Finally, we formally define the optimization objective.

2.1.1. Network Elements and Parameters

We model three-node categories in the network infrastructure of a vCDN. The content provider (CP) nodes, denoted as , produce live-video streams that are routed through the SFC to reach the end-users. The VNF hosting nodes, , are the cloud-hosted virtual machines that instantiate container VNFs and interconnect through each other to form the SFCs. Lastly, we consider nodes representing geographic clusters of clients, . Geographic client clusters are created in such a way that every client in the same geographic cluster is considered to have the same data-propagation delays with respect to the hosting nodes in . Client cluster nodes will be referred to as client nodes from now on. Notice that different hosting nodes may be deployed on different cloud providers. We denote the set of all nodes of the vCDN substrate network as:

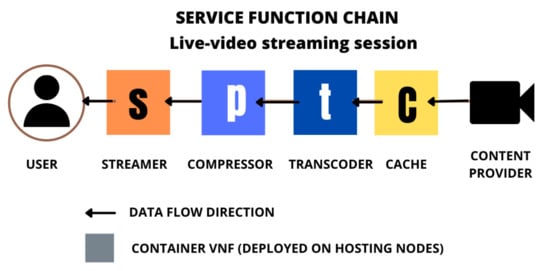

We assume that each live-streaming session request r is always mapped to a VNF chain containing a Streamer, a Compressor, a Transcoder, and a Cache module [19,50]. In a live-streaming vCDN context, the caching module acts as a proxy that ingests video chunks from a Content Provider, stores them in memory, and sends them to the clients through the rest of the SFC modules. Caching modules prevent origin server overloads, keep the total delay acceptable, and reduce session startup times, which are a measure of QoE in the context of live-streaming. Compressors, instead, may help to decrease video quality when requested. On the other hand, transcoding functionalities are necessary whenever the requested video codec differs from the original one. Finally, the streamer acts as a multiplexer for the end-users [19]. The order in which the VNF chain is composed is shown in Figure 1.

Figure 1.

The assumed Service Function Chain composition for every Live-Video Streaming session request. We assume that every incoming session needs a streamer, a compressor, a transcoder, and a cache VNF module. We assume container-based virtualization of a vCDN.

We will denote the set of VNF types considered in our model as K:

$$K = \{\text{streamer}, \text{compressor}, \text{transcoder}, \text{cache}\}$$

Any k-type VNF instantiated at a hosting node i will be denoted as . We assume that every hosting node is able to instantiate a maximum of one k-type VNF. Note that, at any time, there might be multiple SFCs whose k-type module is assigned to a single hosting node i.

We define fixed-length time windows, denoted as t, which we call simulation time-steps, following [14]. At each t, the VNO releases the resources of timed-out sessions and processes the incoming session requests, denoted as . It should be stressed that every r requests an SFC composed of all the VNF types in K. We denote the k-type VNF requested by r as . Key notations for our vCDN SFC Deployment Problem are listed in Table 1.

Table 1.

Key notations for our vCDN SFC Deployment Problem.

We now list all the network elements and parameters that are part of the proposed optimization problem:

- N is the set of all nodes of the CDN network,

- K is the set of VNF types considered in our model,

- , is the set of links between nodes in N, so that ,

- , is the set of resource types for every VNF container,

- The resource cost matrix , where is the per-unit resource cost of resource at node i,

- D is the link delay matrix, so that variable represents the data-propagation delay between the nodes i and j. We assume for . Notice that D is symmetric,

- O is the data-transportation cost matrix, where is the unitary data-transportation cost for the link that connects i and j. We assume for . Notice that O is symmetric,

- are the parameters indicating the instantiation times of each k-type VNF.

- is the set of incoming session requests during time-step t. Every request r is characterized by , the scaled maximum bitrate, , the mean scaled payload, , the requested content provider, and , the user cluster from which r originated,

- T is a fixed parameter indicating the maximum RTT threshold value for the incoming Live-Streaming requests.

- is the optimal processing times matrix, where is the processing-time contribution of the resource in any k-type VNF assuming optimal utilization conditions,

- is the time degradation matrix, where is the parameter representing the degradation base for the resource in any k-type VNF,

- are a set of variables indicating the resource capacity of VNF during t.

2.1.2. Optimization Statement

Given a Live-Streaming vCDN constituted by the parameters listed in Section 2.1.1, we must decide the SFC deployment scheme for each incoming session request r, considering the penalties in the resulting RTT caused by the possible instantiation of VNF containers, cache MISS events, and over-utilization of network resources. We must also consider that the magnitude of the vCDN operational costs depends on our SFC deployments. We must deploy SFCs for every request so as to maximize the resulting QoS and minimize Operational Costs.

2.1.3. Decision Variables

We propose a discrete optimization problem: For every incoming request , the decision variables in our optimization problem are the binary variables that equal 1 if is assigned to , and 0 otherwise.

2.1.4. Penalty Terms and Feasibility Constraints

We model two penalty terms and two feasibility constraints for our optimization problem. The first penalty term is the Quality of Service penalty term and is modeled as follows. The acceptance ratio during time-step t is computed as:

where the binary variable indicates whether the SFC assigned to r respects its maximum tolerable RTT, denoted by T:

Notice that is the round-trip-time of r and is computed as:

where:

- The binary variable equals 1 if the link between nodes i and j is used to reach , and 0 otherwise,

- The parameter represents the data-propagation delay between the nodes i and j. (We assume a unique path between every node i and j),

- The binary variable equals 1 if is assigned to , and 0 otherwise,

- The binary variable equals 1 if is instantiated at the beginning of t, and 0 otherwise,

- is a parameter indicating the instantiation time of any k-type VNF.

- is the processing time of r in .

Notice that, by modeling the RTT with (3), we include data-propagation delays, processing-time delays, and VNF instantiation times when needed. The delay to the content provider is included in the RTT only in the case of a cache MISS. In other words, if i is a CP node, then will be 1 only if was not ingesting content from i at the time of receiving the assignation of . On the other hand, whenever is assigned to but is not instantiated at the beginning of t, the VNO instantiates it, and the corresponding delay penalties are added to , as shown in (3). Notice that we approximate the VNF instantiation states in the following manner: any VNF that is not instantiated during t and receives a VNF request to manage starts its own instantiation and finishes it at the beginning of the next time-step, t+1. From that moment on, unless the VNF has been turned off in the meantime because all its managed sessions have timed out, the VNF is considered ready to manage new incoming requests without any instantiation-time penalty.
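The structure of the RTT model in (3) can be illustrated with a small Python sketch. It assumes a hypothetical representation in which the chain is an ordered list of hops starting next to the client and ending at the cache VNF, link delays live in a symmetric dictionary with zero delay between a node and itself, and per-hop processing times are already known. The content-provider link is added only on a cache MISS, and the instantiation time only for VNFs not yet running at the start of t. The chain order and whether link delays are one-way or round-trip are assumptions of the sketch, not statements about the original model.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Node = str
Delays = Dict[Tuple[Node, Node], float]

@dataclass
class Hop:
    """One VNF of the chain as seen by request r (hypothetical fields)."""
    host: Node                 # hosting node the VNF request is assigned to
    processing_time: float     # processing time of r at this VNF
    instantiated: bool         # True if the VNF was already running at the start of t
    instantiation_time: float  # instantiation time of this VNF type

def link_delay(delays: Delays, i: Node, j: Node) -> float:
    """Symmetric propagation-delay lookup; zero between a node and itself."""
    if i == j:
        return 0.0
    return delays[(i, j)] if (i, j) in delays else delays[(j, i)]

def session_rtt(client: Node, chain: List[Hop], cp: Node,
                cache_hit: bool, delays: Delays) -> float:
    """RTT of one request: chain[0] is next to the client, chain[-1] is the cache VNF."""
    rtt = 0.0
    previous = client
    for hop in chain:
        rtt += link_delay(delays, previous, hop.host)  # propagation over the used link
        rtt += hop.processing_time                     # utilization-dependent processing
        if not hop.instantiated:
            rtt += hop.instantiation_time              # penalty for starting the VNF
        previous = hop.host
    if not cache_hit:
        rtt += link_delay(delays, previous, cp)        # cache MISS: reach the CP
    return rtt

delays = {("c1", "h1"): 10.0, ("h1", "h2"): 5.0, ("h2", "cp1"): 20.0}
chain = [Hop("h1", 1.0, True, 0.0), Hop("h1", 1.0, True, 0.0),
         Hop("h2", 2.0, True, 0.0), Hop("h2", 1.0, False, 30.0)]
print(session_rtt("c1", chain, "cp1", cache_hit=True, delays=delays))   # 50.0
print(session_rtt("c1", chain, "cp1", cache_hit=False, delays=delays))  # 70.0
```

In the example, the cache VNF at h2 is not yet running, so its 30-unit instantiation time dominates the RTT, and a cache MISS adds the h2 to cp1 delay on top.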

Recall that we model three resource types for each VNF: CPU, Bandwidth, and Memory. We model the processing time of any r in as the sum of the processing times related to each of these resources:

where are each of the resource processing time contributions for r in , and each of such contributions is computed as:

where:

- the parameter is the processing-time contribution of in any k-type VNF assuming optimal utilization conditions,

- is a parameter representing the degradation base for in any k-type VNF,

- the variable is the utilization in at the moment when is assigned.
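The decomposition of the processing time into per-resource contributions can be sketched as follows. The exact functional form of (5) is not reproduced here; purely as an assumption, the sketch treats the degradation base as the base of an exponential in the utilization, so each contribution equals its optimal-condition value at zero utilization and grows as utilization rises. All names are hypothetical.

```python
from typing import Dict

RESOURCES = ("cpu", "bandwidth", "memory")

def resource_time(optimal_time: float, degradation_base: float, utilization: float) -> float:
    """ASSUMED form of one contribution: optimal time * degradation_base ** utilization."""
    return optimal_time * degradation_base ** utilization

def processing_time(optimal: Dict[str, float], base: Dict[str, float],
                    utilization: Dict[str, float]) -> float:
    """Processing time of r in a VNF as the sum of per-resource contributions, as in (4)."""
    return sum(resource_time(optimal[res], base[res], utilization[res]) for res in RESOURCES)

optimal = {"cpu": 1.0, "bandwidth": 1.0, "memory": 1.0}
base = {"cpu": 2.0, "bandwidth": 2.0, "memory": 2.0}
print(processing_time(optimal, base, {"cpu": 0.0, "bandwidth": 0.0, "memory": 0.0}))  # 3.0
print(processing_time(optimal, base, {"cpu": 1.0, "bandwidth": 0.0, "memory": 0.0}))  # 4.0
```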

Note that (5) models utilization-dependent processing times. The resource utilization in any , denoted as is computed as:

where is the instantaneous resource usage in , and is the resource capacity of during t. The value of is fixed during an entire time-step t and depends on the dynamic resource provisioning algorithm applied by the VNO. In this work, we assume a bounded greedy resource provisioning policy as specified in Appendix A.1. On the other hand, if we denote by the subset of that contains the requests already accepted at the current moment, we can compute as:

where:

- The variable indicates the resource demand in at the beginning of time-step t,

- The binary variable was already defined and it indicates if is assigned to ,

- is the resource demand faced by any k-type VNF when serving r, and we call it the client resource-demand,

- The binary variable is 1 if is currently ingesting content from content provider l, and 0 otherwise,

- The parameter models the resource demand faced by any k-type VNF when ingesting content from any content provider.

Notice that, by modeling resource usage with (7), we take into account not only the resource demand associated with content transmission but also the resource usage related to each content-ingestion task the VNF is currently executing.
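A sketch of the bookkeeping behind (6) and (7) for a single resource of a single VNF is shown below. The names are hypothetical, and the sketch assumes, as the text describes, that the ingestion demand is the same for every content provider and is counted once per provider currently being ingested.

```python
from typing import Iterable

def resource_usage(initial_demand: float, client_demands: Iterable[float],
                   ingested_cps: Iterable[str], ingestion_demand: float) -> float:
    """Usage of one resource in one VNF, mirroring the structure of (7).

    initial_demand: demand at the beginning of the time-step.
    client_demands: client resource demands of already-accepted requests assigned here.
    ingested_cps: content providers this VNF currently ingests from (duplicates ignored).
    ingestion_demand: demand of ingesting one CP stream for this VNF type.
    """
    delivery = sum(client_demands)
    ingestion = ingestion_demand * len(set(ingested_cps))  # one term per ingested CP
    return initial_demand + delivery + ingestion

def utilization(usage: float, capacity: float) -> float:
    """Utilization as in (6): instantaneous usage over the capacity provisioned for t."""
    return usage / capacity

usage = resource_usage(1.0, [0.5, 0.25], ["cp1", "cp2", "cp1"], 0.5)
print(usage, utilization(usage, 5.5))  # 2.75 0.5
```

Counting each CP once reflects the binary ingestion indicator: a VNF ingesting a stream pays the ingestion demand once, no matter how many of its sessions request that CP.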

The resource demand that any k-type VNF faces when serving a session request r is computed as:

where is a fixed parameter that indicates the maximum possible resource consumption implied by serving any session request incoming to any k-type VNF. The variable , instead, indicates the session workload of r, which depends on the specific characteristics of r. In particular, the session workload depends on the normalized maximum bitrate and the mean payload per time-step of r, denoted as and , respectively:

In (9), the parameters do not depend on r and are fixed normalization exponents that balance the contributions of and in .
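The session workload and the client resource demand can be sketched as below. The text only states that the workload depends on the normalized maximum bitrate and mean payload through two fixed exponents; the multiplicative form `b**alpha * p**beta` used here, and reading (8) as the maximum per-session consumption scaled by the workload, are assumptions for illustration, as are all names.

```python
def session_workload(norm_max_bitrate: float, norm_mean_payload: float,
                     alpha: float, beta: float) -> float:
    """ASSUMED multiplicative form of the session workload: b**alpha * p**beta."""
    return (norm_max_bitrate ** alpha) * (norm_mean_payload ** beta)

def client_resource_demand(max_consumption: float, workload: float) -> float:
    """Client resource demand, reading (8) as max per-session consumption times workload."""
    return max_consumption * workload

w = session_workload(0.25, 1.0, alpha=0.5, beta=1.0)  # sqrt(0.25) * 1.0
print(w, client_resource_demand(4.0, w))
```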

Recall that the binary variable indicates whether the SFC assigned to r respects its maximum tolerable RTT. Notice that we can assess the total throughput served by the vCDN during t as:

The second penalty term is related to the Operational Costs, which comprise both the hosting costs and the Data-Transportation costs. We can compute the Hosting Costs for our vCDN during t as:

where

- are the total Hosting Costs at the end of time-step ,

- are the hosting costs related to the timed-out sessions at the beginning of time-step t,

- is the set of resources we model, i.e., Bandwidth, Memory, and CPU,

- is the per-unit resource cost of resource at node i.

Recall that is the resource capacity at during t. Notice that different nodes may have different per-unit resource costs as they may be instantiated in different cloud providers. Thus, modeling the hosting costs using (11), we have considered a possible multi-cloud vCDN deployment. Notice also that, using (11), we keep track of the current total hosting costs for our vCDN assuming that timed-out session resources are released at the end of each time-step.
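A minimal sketch of the core of the hosting-cost computation, the sum of per-unit resource cost times provisioned capacity over nodes, VNF types, and resources, is shown below. Per-node unit costs are what let different hosting nodes sit on different cloud providers. The running-total bookkeeping of (11), which carries over the previous total and subtracts the costs of timed-out sessions, is not reproduced; the dictionary layout and names are hypothetical.

```python
from typing import Dict, Tuple

def hosting_cost(unit_cost: Dict[Tuple[str, str], float],
                 capacity: Dict[Tuple[str, str, str], float]) -> float:
    """Sum over (node, VNF type, resource) of per-unit cost times provisioned capacity.

    unit_cost[(node, resource)]: per-unit cost at that node; it may differ per cloud provider.
    capacity[(node, vnf_type, resource)]: capacity provisioned for that VNF during t.
    """
    return sum(cap * unit_cost[(node, res)] for (node, _vnf_type, res), cap in capacity.items())

unit_cost = {("h1", "cpu"): 2.0, ("h2", "cpu"): 3.0}  # h2 on a pricier cloud (hypothetical)
capacity = {("h1", "cache", "cpu"): 1.0, ("h1", "streamer", "cpu"): 2.0,
            ("h2", "cache", "cpu"): 1.0}
print(hosting_cost(unit_cost, capacity))  # 9.0
```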

We now model the Data-Transportation Costs. In our vCDN model, each hosting node instantiates a maximum of one VNF of each type. Consequently, all the SFCs that exploit the same link for transferring the same content between the same pair of VNFs share a single connection. Therefore, to realistically assess DT costs, we introduce the notion of amortized session DT cost:

where is a parameter indicating the unitary DT cost for the link between i and j, and is the set of SFCs that are using the link between i and j to transmit the content of the same CP requested by r. Notice that DT costs for r are proportional to the mean payload . Recall that indicates if the link between i and j is used to reach . According to (12), we compute the session DT cost for any session request r as follows: for each link used by the SFC of r, we first compute the whole DT cost along that link. We then compute the number of concurrent sessions that are using that link to transfer the same content requested by r. Lastly, we compute the ratio between these quantities and sum such ratios over every hop in the SFC of r to obtain the whole amortized session DT cost. The total amortized DT costs during t are then computed as:

where

- are the total DT costs at the end of the time-step,

- are the total DT costs regarding the timed-out sessions at the beginning of time-step t,

- is the session DT cost for r computed with (12). Recall that indicates if r was accepted or not based on its resultant RTT.
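The amortization procedure described for (12), together with the restriction of (13) to accepted sessions, can be sketched as follows. Links are hypothetical (node, node) tuples that the caller must orient consistently with the dictionary keys, and the number of sharing sessions includes r itself. The running-total terms of (13) are omitted.

```python
from typing import Dict, List, Tuple

Link = Tuple[str, str]

def session_dt_cost(hops: List[Link], mean_payload: float,
                    unit_dt_cost: Dict[Link, float],
                    sharing_sessions: Dict[Link, int]) -> float:
    """Amortized DT cost of one session, following the procedure described for (12).

    hops: links used by the SFC of r (same orientation as the dictionary keys).
    sharing_sessions[link]: SFCs, r included, moving the same CP content over link.
    """
    cost = 0.0
    for link in hops:
        link_cost = unit_dt_cost[link] * mean_payload  # whole DT cost along the link
        cost += link_cost / sharing_sessions[link]     # split among the sharing sessions
    return cost

def total_dt_cost(session_costs: List[float], accepted: List[bool]) -> float:
    """Only accepted sessions (RTT within the threshold) contribute, as in (13)."""
    return sum(cost for cost, ok in zip(session_costs, accepted) if ok)

hops = [("h1", "h2"), ("h2", "cp1")]
unit = {("h1", "h2"): 1.0, ("h2", "cp1"): 3.0}
sharing = {("h1", "h2"): 1, ("h2", "cp1"): 4}
c = session_dt_cost(hops, 2.0, unit, sharing)
print(c, total_dt_cost([c, 1.0, 2.0], [True, False, True]))  # 3.5 5.5
```

Because four sessions share the h2 to cp1 link for the same content, each is charged only a quarter of that link's cost.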

As for the feasibility constraints, the first one is the VNF assignation constraint: for any live-streaming request r, every k-type VNF request must be assigned to one and only one node in . We express such a constraint as follows:

Finally, the second constraint is the minimum service constraint. For any time-step t, the acceptance ratio must be greater than or equal to . We express such a constraint as:

One could optimize operational costs by discarding a significant percentage of the incoming requests instead of serving them. The fewer requests are served, the lower the resource consumption and the hosting costs. Also, data-transfer costs are reduced when less traffic is generated due to the rejection of live-streaming requests. However, the constraint in (15) is introduced to rule out such naive solutions to our optimization problem.
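The two feasibility constraints can be checked with simple predicates. In this sketch, assignments are hypothetically encoded as a binary indicator per (VNF request, hosting node) pair, mirroring the decision variables of Section 2.1.3, and the minimum acceptance-ratio threshold is passed in as a parameter whose name is an assumption.

```python
from typing import Dict

def satisfies_assignment(x: Dict[str, Dict[str, int]]) -> bool:
    """(14): each VNF request is assigned to one and only one hosting node.

    x[vnf_request][node] is a binary indicator (hypothetical encoding of the decision variables).
    """
    return all(sum(row.values()) == 1 for row in x.values())

def satisfies_min_service(acceptance_ratio: float, min_acceptance_ratio: float) -> bool:
    """(15): the acceptance ratio of the time-step must reach the minimum threshold."""
    return acceptance_ratio >= min_acceptance_ratio

x = {"r1/streamer": {"h1": 1, "h2": 0}, "r1/cache": {"h1": 0, "h2": 1}}
print(satisfies_assignment(x), satisfies_min_service(0.8, 0.7))  # True True
```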

2.1.5. Optimization Objective

We model a multi-objective SFC deployment optimization: At each simulation time-step t, we measure the accomplishment of three objectives:

- Our first goal is to maximize the network throughput as defined in (10), and we express such objective as ,

- Our second goal is to minimize the hosting costs as defined in (11), and we express such objective as ,

- Our third goal is to minimize the DT cost as defined in (13), and such objective can be expressed as .

We tackle such a multi-objective optimization goal with a weighted-sum method that leads to a single objective function:

where , , and are parametric weights for the network throughput, hosting costs, and data transfer costs, respectively.
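The weighted-sum scalarization can be written as a one-line function. The sign convention (throughput rewarded, both costs penalized, so the result is maximized) follows from the three stated goals; the function and weight names are hypothetical.

```python
def weighted_objective(throughput: float, hosting_cost: float, dt_cost: float,
                       w_throughput: float, w_hosting: float, w_dt: float) -> float:
    """Single objective to maximize: reward throughput, penalize hosting and DT costs."""
    return w_throughput * throughput - w_hosting * hosting_cost - w_dt * dt_cost

print(weighted_objective(10.0, 4.0, 2.0, w_throughput=1.0, w_hosting=0.5, w_dt=0.5))  # 7.0
```

Raising a cost weight relative to the throughput weight shifts the preferred deployments toward cheaper, possibly lower-throughput solutions.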

2.2. Proposed Solution: Deep Reinforcement Learning

Any RL framework is composed of an optimization objective, a reward policy, an environment, and an agent. In RL scenarios, a Markov Decision Process (MDP) is modeled, where the Environment conditions are the nodes of a Markov Chain (MC) and are generally referred to as state-space samples. The agent iteratively observes the state-space and chooses actions from the action-space to interact with the Environment. Each action yields a reward signal and leads to the transition to another node in the Markov Chain, i.e., to another point in the state-space. Reward signals are generated by an action-reward policy that drives learning towards optimal action policies under dynamic environment conditions.

In this work, we propose a DRL-based framework to solve our Online SFC Deployment problem. To do that, we first need to embed our optimization problem in a Markov Decision Process (MDP). We then need to create an action reward mechanism that drives the agent to learn optimal SFC Deployment policies, and finally, we need to specify the DRL algorithm we will use for solving the problem. The transition between states of the MDP will be indexed by in the rest of this paper.

2.2.1. Embedding SFC Deployment Problem into a Markov Decision Process

Following [14,32,48,51,52], we propose to serialize the SFC Deployment process into single-VNF assignation actions. In other words, our agent interacts with the Environment each time a particular VNF request, , has to be assigned to a particular VNF instance, of some hosting node i in the vCDN. Consequently, the actions of our agent, denoted by are the single-VNF assignation decisions for each VNF request of a SFC.
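The serialization can be sketched as follows: each SFC becomes a sequence of single-VNF requests, and each request is one MDP transition. The names `VNFRequest`, `serialize_sfc`, and `deploy`, the placeholder VNF types, and the convention that the pending counter reaches 0 at the last assignment are illustrative assumptions, not the simulator's code.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, List, Tuple


@dataclass
class VNFRequest:
    session_id: int
    vnf_type: str
    pending: int  # VNFs still to assign after this one (0 = last of the SFC)


def serialize_sfc(session_id: int, sfc: List[str]) -> Iterator[VNFRequest]:
    """Split one SFC deployment into single-VNF assignation requests."""
    n = len(sfc)
    for k, vnf_type in enumerate(sfc):
        yield VNFRequest(session_id, vnf_type, pending=n - k - 1)


def deploy(session_id: int, sfc: List[str],
           policy: Callable[[VNFRequest], int]) -> List[Tuple[str, int]]:
    """One agent decision (a hosting node index) per VNF request, i.e., per MDP transition."""
    return [(req.vnf_type, policy(req)) for req in serialize_sfc(session_id, sfc)]


sfc = ["vnf_1", "vnf_2", "vnf_3"]  # hypothetical chain
print([req.pending for req in serialize_sfc(7, sfc)])  # [2, 1, 0]
print(deploy(7, sfc, lambda req: req.pending % 2))     # [('vnf_1', 0), ('vnf_2', 1), ('vnf_3', 0)]
```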

Before taking any action, the agent observes the environment’s conditions. We propose to embed such conditions into a vector that contains a snapshot of the current incoming request and of the hosting nodes’ conditions. In particular, will be formed by the concatenation of three vectors:

where:

- is called the request vector, and contains information about the VNF request under assignment. In particular, codifies the requested CP, , the client cluster from which the request originated, , the request’s session workload, , and the number of pending VNFs to complete the deployment of the current SFC, . Notice that and that goes each time from 4 to 0 as our agent performs the assignation actions regarding r. Note that the first three components of are invariable for the whole set of VNF requests regarding the SFC of r. Notice also that, using a one-hot encoding for , and , the dimension of is ,

- is a binary vector where equals 1 if is ingesting the CP requested by r and 0 otherwise,

- , where is the utilization value of the resource that is currently the most utilized resource in .
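A minimal sketch of how the three vectors could be concatenated into the state vector is given below. The topology sizes (41 CP nodes, 4 client clusters, 16 hosting nodes) are those of Section 2.3.1. Encoding the CP and client cluster as one-hot vectors, keeping the workload and pending count as scalars, indexing the ingestion and utilization vectors per hosting node, and the resource names are assumptions made for the example.

```python
from typing import Dict, List, Sequence

N_CP, N_CLUSTERS, N_NODES = 41, 4, 16  # topology sizes from Section 2.3.1


def one_hot(index: int, size: int) -> List[float]:
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def build_state(cp: int, cluster: int, workload: float, pending: int,
                ingesting: Sequence[Sequence[int]],
                utilization: Sequence[Dict[str, float]]) -> List[float]:
    """Concatenate the request, ingestion, and max-utilization vectors.

    ingesting[i]: CPs currently ingested by hosting node i (assumed layout).
    utilization[i]: resource name -> utilization in [0, 1] for node i.
    """
    request_vec = (one_hot(cp, N_CP) + one_hot(cluster, N_CLUSTERS)
                   + [workload, float(pending)])
    ingestion_vec = [1.0 if cp in ingesting[i] else 0.0 for i in range(N_NODES)]
    # Keep only the most utilized resource of each node.
    max_util_vec = [max(utilization[i].values()) for i in range(N_NODES)]
    return request_vec + ingestion_vec + max_util_vec


# Example: even-indexed nodes ingest CP 3; every node has the same utilization profile.
ingesting = [[3] if i % 2 == 0 else [] for i in range(N_NODES)]
utilization = [{"cpu": 0.2, "mem": 0.5, "bw": 0.1} for _ in range(N_NODES)]
state = build_state(cp=3, cluster=1, workload=0.7, pending=4,
                    ingesting=ingesting, utilization=utilization)
print(len(state))  # 79 = (41 + 4 + 2) + 16 + 16
```

Under these assumptions the ANN input layer would have 79 neurons; a different encoding of the request vector would change only the first block.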

2.2.2. Action-Reward Schema

When designing the action-reward schema, we take extreme care in giving the right magnitude to the penalty of resource over-utilization, as it seriously affects QoS. We also include a cost-related penalty in our reward function to jointly optimize QoS and Operational Costs. Recall that the actions taken by our agent are the single-VNF assignation decisions for each VNF request of a SFC. At each iteration τ, our agent observes the state information s_τ, takes an action a_τ, and receives a corresponding reward r_τ computed as:

where:

- The parameters , , and are weights for the reward contributions relating to the QoS, the DT costs, and the Hosting Costs, respectively,

- is the reward contribution that is related to the QoS optimization objective,

- is a binary variable that equals 1 if action a corresponds to the last assignation step of the last session request that arrived in the current simulation time-step t, and 0 otherwise.

Recall that and are the total DT costs and hosting costs of our vCDN at the end of the simulation time-step t. Using (18), we subtract a penalty proportional to the current overall hosting and DT costs in the vCDN only at the last transition of each simulation time-step, i.e., when we assign the last VNF of the last SFC in . Such a sparse cost penalty was also proposed in [14].
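The structure of (18), a dense QoS term plus a cost penalty applied only on the last transition of each time-step, can be sketched as follows. The weighted-sum form and the default weights are assumptions; the QoS term itself is defined by (19) and is simply passed in as a number here.

```python
def total_reward(r_qos: float, last_of_timestep: bool,
                 dt_cost: float, hosting_cost: float,
                 w_qos: float = 1.0, w_dt: float = 1.0, w_host: float = 1.0) -> float:
    """Dense QoS reward plus a sparse cost penalty (illustrative form of (18)).

    dt_cost and hosting_cost are the vCDN totals at the end of time-step t;
    they are subtracted only on the last transition of that time-step.
    """
    reward = w_qos * r_qos
    if last_of_timestep:
        reward -= w_dt * dt_cost + w_host * hosting_cost
    return reward


# Three transitions in one time-step; only the last one pays the cost penalty.
qos_terms = [0.5, 0.75, 1.0]
rewards = [total_reward(q, k == len(qos_terms) - 1, dt_cost=0.25, hosting_cost=0.25)
           for k, q in enumerate(qos_terms)]
print(rewards)  # [0.5, 0.75, 0.5]
```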

When modeling the QoS-related contribution of the reward, instead, we propose the use of an inner delay-penalty function, denoted as . In practice, will be continuous and non-increasing. We design in such a way that . Recall that T is a fixed parameter indicating the maximum RTT threshold value for the incoming Live-Streaming requests. We specify the inner delay-penalty function used in our simulations in Appendix A.2.

Whenever our agent performs an assignation action a for a VNF request in r, we compute the generated contribution to the RTT of r. In particular, we compute the processing time of r in the assigned VNF, any VNF instantiation time, and the transmission delay to the chosen node. We sum such RTT contributions at each assignation step to form the current partial RTT, which we denote as . The QoS-related part of the reward assigned to a is then computed as:

If we look at the first line of (19), we see that a positive reward is given for every assignment that results in a non-prohibitive partial RTT. Moreover, such a positive reward is inversely proportional to (the number of pending assignations for the complete deployment of the SFC of r). Notice that, since is cumulative, we give larger rewards to the later assignation actions of an SFC, as it is more difficult to avoid surpassing the RTT limit at the end of the SFC deployment than at the beginning.

The second line in (19) shows, instead, that a negative reward is given to the agent whenever exceeds T. Further, such a negative reward worsens in proportion to the prematureness of the assignation action that caused to surpass T. Such a worsening makes sense because bad assignation actions are more likely to occur at the end of the SFC assignation process than at the beginning, so an early violation signals a particularly poor decision.

Finally, the third and fourth lines in (19) correspond to the case when the agent performs the last assignation action of an SFC. The third line indicates that the QoS-related reward is equal to 1 whenever a complete SFC request r is deployed, i.e., when every in the SFC of r has been assigned without exceeding the RTT limit T, and the last line tells us that the reward will be 0 whenever the last assignation action results in an unacceptable RTT for r.
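The four cases of (19) can be illustrated with the sketch below. The exact expressions of (19) and the delay-penalty function of Appendix A.2 are not reproduced here: the linear `delay_penalty`, dividing it by the pending count in the first case, and using the negated pending count in the second case are hypothetical choices. They only respect the properties described in the text: a continuous, non-increasing penalty, positive rewards that grow towards the end of the SFC, and negative rewards that are harsher for premature violations.

```python
def delay_penalty(rtt: float, T: float) -> float:
    """Hypothetical inner delay-penalty: continuous, non-increasing, 1 at 0 and 0 at T."""
    return max(0.0, 1.0 - rtt / T)


def qos_reward(partial_rtt: float, pending: int, T: float, f=delay_penalty) -> float:
    """Illustrative QoS reward for one VNF assignation, following the four cases of (19)."""
    if pending > 0:                              # intermediate assignation
        if partial_rtt <= T:
            return f(partial_rtt, T) / pending   # grows as pending shrinks
        return -float(pending)                   # earlier violation, harsher penalty
    # Last assignation of the SFC: 1 if the whole chain respects T, else 0.
    return 1.0 if partial_rtt <= T else 0.0


T = 100.0  # hypothetical RTT threshold in ms
print(qos_reward(50.0, 2, T), qos_reward(50.0, 1, T))    # 0.25 0.5
print(qos_reward(120.0, 3, T), qos_reward(120.0, 1, T))  # -3.0 -1.0
print(qos_reward(90.0, 0, T), qos_reward(120.0, 0, T))   # 1.0 0.0
```

Because almost every transition returns a non-zero value, the sketch also shows why the schema is dense: only the failed final assignation yields 0.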

This reward schema is the main contribution of our work. According to the MDP embedding proposed in Section 2.2.1, the majority of actions taken by our agent are given a non-zero reward. Such a reward mechanism is called dense, and it improves the training convergence to optimal policies. Notice also that, in contrast to [14], our reward mechanism penalizes bad assignments instead of ignoring them. Such an inclusion enhances the agent’s exploration of the action space and reduces the possibility of converging to local optima.

2.2.3. DRL Algorithm

Any RL agent receives a reward for each action taken, . The function that RL algorithms seek to maximize is called the discounted future reward and is defined as:

where is a fixed parameter known as the discount factor. The objective is then to learn an action policy that maximizes the discounted future reward. Given a specific policy , the action value function, also called the Q-value function, indicates how valuable it is to take a specific action in state :

From (21), we can derive the recursive Bellman equation:

Notice that if we denote the final state with , then . The Temporal Difference learning mechanism uses (22) to approximate the Q-values for state-action pairs in the traditional Q-learning algorithm. However, in large state or action spaces, it is not always feasible to use tabular methods to approximate the Q-values.
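As a reminder of the tabular case that deep approximators replace, the sketch below computes a discounted return backwards, which is the same recursion that underlies the Bellman equation, and applies one temporal-difference Q-learning update. The function names and the dictionary-based Q-table are illustrative; the terminal case uses the pure reward, as noted above for the final state.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence, Tuple


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """sum_k gamma**k * r_k, accumulated backwards as G = r + gamma * G_next."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def q_learning_update(Q: Dict[Tuple[Hashable, int], float], s, a: int, r: float,
                      s_next, actions: Sequence[int], gamma: float, lr: float,
                      terminal: bool) -> float:
    """One tabular TD step towards r + gamma * max_a' Q(s', a') (pure r if terminal)."""
    target = r if terminal else r + gamma * max(Q[(s_next, b)] for b in actions)
    Q[(s, a)] += lr * (target - Q[(s, a)])
    return Q[(s, a)]


print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1.75
Q = defaultdict(float)
print(q_learning_update(Q, "s0", 0, 1.0, "s1", [0, 1], gamma=0.9, lr=0.5, terminal=False))  # 0.5
```

Storing one entry per state-action pair is exactly what becomes infeasible for the vCDN state-space, which motivates the function approximators discussed next.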

To overcome the limitations of traditional Q-learning, Mnih et al. [53] proposed the use of a deep Artificial Neural Network (ANN) as an approximator of the Q-value function. To avoid convergence to local optima, they proposed an ϵ-greedy policy, where actions are sampled from the ANN with probability 1 − ϵ and from a random distribution with probability ϵ, and ϵ decays slowly at each MDP transition during training. They also used the Experience Replay (ER) mechanism: a data structure stores past transitions, from which uncorrelated training samples are drawn to improve learning stability. ER mitigates the high correlation present in sequences of observations during online learning. Moreover, the authors of [54] implemented two neural network approximators for (21), the Q-network and the Target Q-network, indicated by and , respectively. In [54], the target network is updated only periodically to reduce the variance of the target values and further stabilize learning with respect to [53]. The authors of [54] use stochastic gradient descent to minimize the following loss function:

where the minimization of (23) is done with respect to the parameters of . Van Hasselt et al. [55] applied the concepts of Double Q-Learning [56] to large-scale function approximators. They replaced the target value in (23) with a more sophisticated target value:

With this replacement, the authors of [55] avoided the overestimation of Q-values that characterizes (23). This technique is called Double Deep Q-Learning (DDQN), and it also helps to decorrelate the noise introduced by from the noise of . Notice that are the parameters that approximate the function used to choose the best actions, while are the parameters of the approximator used to evaluate the choices. Like standard Q-learning, DDQN is an off-policy method: the greedy policy being learned differs from the ϵ-greedy policy used to collect experience.
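The difference between the DQN target in (23) and the DDQN target in (24) can be seen on a single transition. In this sketch (function names and numbers are illustrative), suppose the target network assigns a spuriously high value to action 0 in the next state. DQN picks that value through the max, whereas DDQN lets the online network choose the action and uses the target network only to evaluate it.

```python
from typing import Sequence


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


def dqn_target(r: float, q_next_target: Sequence[float], gamma: float, done: bool) -> float:
    """Target inside (23): the target network both selects and evaluates the next action."""
    return r if done else r + gamma * max(q_next_target)


def ddqn_target(r: float, q_next_online: Sequence[float],
                q_next_target: Sequence[float], gamma: float, done: bool) -> float:
    """Target of (24): the online network selects, the target network evaluates."""
    return r if done else r + gamma * q_next_target[argmax(q_next_online)]


def mse_loss(targets: Sequence[float], predictions: Sequence[float]) -> float:
    """Mean squared temporal-difference error over a mini-batch."""
    return sum((y - q) ** 2 for y, q in zip(targets, predictions)) / len(targets)


q_online_next = [1.0, 3.0, 2.0]  # online network estimates for s'
q_target_next = [4.0, 1.0, 2.0]  # target network estimates; 4.0 is spuriously high
print(dqn_target(0.0, q_target_next, 0.5, False))                  # 2.0
print(ddqn_target(0.0, q_online_next, q_target_next, 0.5, False))  # 0.5
```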

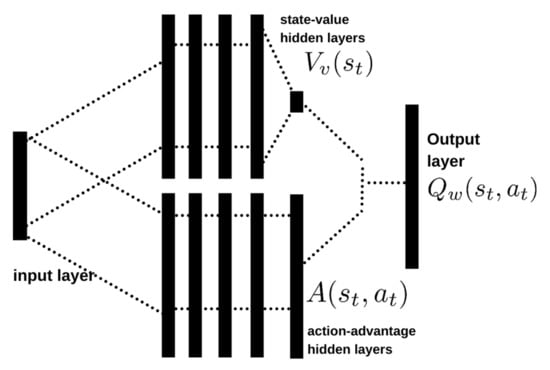

Wang et al. [41], instead, proposed a change in the architecture of the ANN approximator of the Q-function: they decomposed the action value function into the sum of two other functions, the action-advantage function and the state-value function:

The authors of [41] proposed a two-stream architecture for an ANN approximator, where one stream approximated and the other approximated . They integrated such contributions at the final layer of the ANN using:

where are the parameters of the first layers of the ANN approximator, while and are the parameters encoding the action-advantage and the state-value heads, respectively. This architectural innovation works as an attention mechanism for states where actions are more relevant than in other states, and is known as Dueling DQN. Dueling architectures can generalize learning in the presence of many similar-valued actions.
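The two-stream forward pass and the aggregation of its outputs can be sketched in plain Python. The mean-subtracted form below is one of the two aggregations proposed in [41] (the one used in their experiments); whether (26) uses the mean or the max of the advantages is an assumption here, as are the toy weights and helper names. In the SFC Deployment setting, each output would correspond to one candidate assignation action.

```python
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weight rows, biases)


def linear(x: Sequence[float], layer: Layer) -> List[float]:
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def relu(xs: Sequence[float]) -> List[float]:
    return [max(0.0, x) for x in xs]


def dueling_aggregate(state_value: float, advantages: Sequence[float]) -> List[float]:
    """Q(s, a) = V(s) + A(s, a) - mean over a' of A(s, a')."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]


def dueling_forward(state: Sequence[float], shared: Layer,
                    value_head: Layer, adv_head: Layer) -> List[float]:
    """Shared fully connected layer, then a 1-unit value stream and an advantage stream."""
    h = relu(linear(state, shared))
    v = linear(h, value_head)[0]
    return dueling_aggregate(v, linear(h, adv_head))


shared = ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
value_head = ([[1.0, 1.0]], [0.0])
adv_head = ([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]], [0.0, 0.0, 0.0])
print(dueling_forward([1.0, 2.0], shared, value_head, adv_head))  # [3.0, 4.0, 2.0]
```

Subtracting the mean makes the decomposition identifiable: the Q-values average to V(s), so the value stream cannot absorb arbitrary offsets of the advantage stream.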

For our SFC Deployment problem, we propose the use of the DDQN algorithm [55], where the ANN approximator of the Q-value function uses the dueling mechanism as in [41]. Each layer of our Q-value function approximator is a fully connected layer. Consequently, it can be classified as a multilayer perceptron (MLP), even though it has a two-stream architecture. Although we approximate and with two streams, the final output layer of our ANN approximates the Q-value for each action using (26). The input neurons receive the state-space vectors specified in Section 2.2.1. Figure 2 schematizes the proposed topology for our ANN. The parameters of our model are detailed in Table 2.

Figure 2.

Dueling-architecture DDQN topology for our SFC Deployment agent: a two-stream deep neural network. One stream approximates the state-value function, and the other approximates the action advantage function. These values are combined to get the state-action value estimation in the output layer. The inputs are the action taken and the current state.

Table 2.

Deep ANN Assigner topology Parameters.

We index the training episodes with e ∈ {1, …, M}, where M is a fixed training hyper-parameter. We assume that an episode ends when all the requests of a fixed number of simulation time-steps have been processed. Notice that each simulation time-step t may have a different number of incoming requests, , and that every incoming request r is mapped to an SFC of length , which coincides with the number of MDP transitions in each SFC deployment process. Consequently, the number of transitions in an episode e is given by

where and are the initial and final simulation timesteps of episode e, respectively (Recall that ).
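Equation (27) counts one MDP transition per VNF of every request in the episode. A minimal sketch, where the request identifiers and the constant SFC length of 5 are hypothetical:

```python
from typing import Callable, Hashable, Sequence


def transitions_in_episode(requests_per_step: Sequence[Sequence[Hashable]],
                           sfc_length: Callable[[Hashable], int]) -> int:
    """Sum the SFC lengths of all requests over all time-steps of the episode."""
    return sum(sfc_length(r) for step in requests_per_step for r in step)


# Three time-steps with a different number of incoming requests each.
steps = [["r1", "r2"], [], ["r3"]]
print(transitions_in_episode(steps, lambda r: 5))  # 15
```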

To improve training performance and avoid convergence to local optima, we use the ϵ-greedy mechanism. We introduce a high number of randomly chosen actions at the beginning of our training phase and progressively diminish the probability of taking such random actions. Such randomness should help to reduce the bias in the initialization of the ANN approximator parameters. To gradually lower the number of random moves as our agent learns the optimal policy, our ϵ-greedy policy is characterized by an exponentially decaying ϵ as:

where we define , , and as fixed hyper-parameters such that

Notice that and
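A common way to implement an exponentially decaying ϵ, together with the ϵ-greedy choice itself, is sketched below. The functional form ϵ = ϵ_end + (ϵ_start − ϵ_end)·exp(−τ/λ) and the default values are assumptions, not necessarily the exact form of (28) or the hyper-parameters of Appendix A.4.

```python
import math
import random
from typing import Sequence


def epsilon(step: int, eps_start: float = 1.0, eps_end: float = 0.05,
            decay: float = 10_000.0) -> float:
    """Exploration probability after `step` MDP transitions (assumed exponential form)."""
    return eps_end + (eps_start - eps_end) * math.exp(-step / decay)


def epsilon_greedy(q_values: Sequence[float], eps: float, rng: random.Random) -> int:
    """Random action with probability eps, greedy action with probability 1 - eps."""
    if rng.random() < eps:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


rng = random.Random(42)
for step in (0, 10_000, 50_000):
    print(step, round(epsilon(step), 3))
print(epsilon_greedy([0.1, 0.9, 0.3], eps=0.0, rng=rng))  # 1 (pure exploitation)
```

With this form, ϵ starts at ϵ_start, never drops below ϵ_end, and λ controls how many transitions the agent spends mostly exploring.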

We call our algorithm Enhanced-Exploration Dense-Reward Dueling DDQN (E2-D4QN) SFC Deployment. Algorithm 1 describes the training procedure of our E2-D4QN DRL agent. We call the ANN approximator used to choose actions the learning network. In lines 1 to 3, we initialize the replay memory, the parameters of the first layers (), the action-advantage head (), and the state-value head () of the ANN approximator. We then initialize the target network with the same parameter values as the learning network. We train our agent for M episodes, each of which contains MDP transitions. In lines 6–10, we set an ending-episode signal. We need such a signal because, when the final state of an episode has been reached, the loss should be computed with respect to the pure reward of the last action taken, by definition of . At each training iteration, our agent observes the environment conditions, takes an action using the ϵ-greedy mechanism, obtains a corresponding reward, and transitions to another state (lines 11–14). Our agent stores the transition in the replay buffer and then randomly samples a batch of stored transitions to run stochastic gradient descent on the loss function in (24) (lines 15–25). Notice that the target network is updated with the parameter values of the learning network only every U iterations to increase training stability, where U is a fixed hyper-parameter. The complete list of hyper-parameters used for training is given in Appendix A.4.

| Algorithm 1 E2-D4QN. |
|---|
| 1: Initialize the replay memory |
| 2: Initialize the first-layer, action-advantage-head, and state-value-head parameters of the learning network randomly |
| 3: Initialize the corresponding parameters of the target network with the values of the learning network |
| 4: for episode e = 1, …, M do |
| 5: while τ ≤ N_e do |
| 6: if τ = N_e then |
| 7: τend ← True |
| 8: else |
| 9: τend ← False |
| 10: end if |
| 11: Observe state s_τ from the simulator |
| 12: Update ϵ using (28) |
| 13: With probability ϵ, sample a random assignation action a_τ; otherwise, a_τ ← argmax_a Q(s_τ, a; Θ) |
| 14: Obtain the reward r_τ using (18) and the next state s_{τ+1} from the environment |
| 15: Store the transition tuple (s_τ, a_τ, r_τ, s_{τ+1}, τend) in the replay memory |
| 16: Sample a random batch of transition tuples from the replay memory |
| 17: for all (s_j, a_j, r_j, s_{j+1}, τend) in the batch do |
| 18: if τend = True then |
| 19: y_j ← r_j |
| 20: else |
| 21: y_j ← r_j + γ · Q(s_{j+1}, argmax_a Q(s_{j+1}, a; Θ); Θ⁻) |
| 22: end if |
| 23: Compute the temporal difference error L(Θ) using (24) |
| 24: Compute the loss gradient ∇_Θ L(Θ) |
| 25: Θ ← Θ − lr · ∇_Θ L(Θ) |
| 26: Update Θ⁻ ← Θ only every U steps |
| 27: end for |
| 28: end while |
| 29: end for |
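The core of Algorithm 1 (lines 15–26) can be sketched without a deep-learning framework by letting two dictionaries stand in for the learning and target networks. This is a simplified, hypothetical illustration: the class and function names, the toy transitions, and the tabular update are assumptions, not the authors' PyTorch implementation. It shows the replay memory, the ending-episode flag selecting the pure-reward target, the DDQN target, and the periodic copy of the learning parameters into the target network.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-capacity buffer of (s, a, r, s_next, done) transition tuples."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size, rng):
        return rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))

    def __len__(self):
        return len(self.buffer)


def train_step(q_online, q_target, batch, n_actions, gamma, lr):
    """Update the tabular stand-in of the learning network on one mini-batch."""
    for s, a, r, s_next, done in batch:
        if done:  # ending-episode transition: pure reward
            y = r
        else:     # DDQN target: online table selects, target table evaluates
            best = max(range(n_actions), key=lambda b: q_online.get((s_next, b), 0.0))
            y = r + gamma * q_target.get((s_next, best), 0.0)
        q_sa = q_online.get((s, a), 0.0)
        # Gradient step on 0.5 * (y - Q(s, a))^2 for a tabular parameter.
        q_online[(s, a)] = q_sa + lr * (y - q_sa)


rng = random.Random(0)
memory = ReplayMemory(capacity=1000)
q_online, q_target = {}, {}
N_E, U = 6, 2  # hypothetical episode length and target-update period
for tau in range(1, N_E + 1):
    done = tau == N_E                          # ending-episode signal
    memory.push(tau - 1, 0, 1.0, tau, done)    # toy transition: state, action 0, reward 1
    train_step(q_online, q_target, memory.sample(4, rng), n_actions=2, gamma=0.9, lr=0.5)
    if tau % U == 0:
        q_target = dict(q_online)              # copy learning parameters every U steps
```

In the actual agent, the dictionaries would be replaced by the dueling network and its delayed copy, and the tabular step by a stochastic gradient step on the mini-batch loss.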

2.3. Experiment Specifications

2.3.1. Network Topology

We used a real-world dataset to construct a trace-driven simulation for our experiment. We consider the topology of the proprietary CDN of an Italian Video Delivery operator in our experiments. Such an operator delivers Live video from content providers distributed around the globe to clients located in the Italian territory. This operator’s network consists of 41 CP nodes, 16 hosting nodes, and 4 client cluster nodes. The hosting nodes and the client clusters are distributed in the Italian territory, while CP nodes are distributed worldwide. Each client cluster emits approximately Live-Video requests per minute. The operator gave us access to the access log files concerning service from 25–29 July 2017.

2.3.2. Simulation Parameters

We used the data from the first four days to train our SFC Deployment agent and the last day’s trace for evaluation. Given a fixed simulation time-step interval of 15 seconds and a fixed number of time-steps per episode, we trained our agent for 576 episodes, which correspond to 2 runs of the 4-day training trace. At any moment, the vCDN conditions consist of the VNF instantiation states, the caching VNF memory states, the container resource provisioning and utilization, etc. In the test phase of every algorithm, we should fix the initial network conditions to reduce evaluation bias. However, setting the initial network conditions to those encountered at the end of the training cycle might also bias the evaluation of any DRL agent. We want to evaluate every agent’s capacity to recover the steady state from general environment conditions. Such an evaluation requires initial conditions that differ from the steady state achieved during training. In every experiment, we set the initial vCDN conditions to those registered at the end of the fourth day under a greedy SFC deployment policy. We fix the QoS, hosting cost, and DT-cost weight parameters in (16) to , , and , respectively.
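The 15-second time-step and the 576 training episodes over two runs of the 4-day trace determine the episode length. The short calculation below (variable names are illustrative) derives it, confirms the 72 episodes per evaluation day reported in Section 3, and gives the wall-clock start of any evaluation episode.

```python
STEP_S = 15                                  # simulation time-step, seconds
TRAIN_DAYS, RUNS, TRAIN_EPISODES = 4, 2, 576

steps_per_day = 24 * 3600 // STEP_S                                       # 5760
steps_per_episode = TRAIN_DAYS * RUNS * steps_per_day // TRAIN_EPISODES   # 80
episode_minutes = steps_per_episode * STEP_S // 60                        # 20
episodes_per_day = steps_per_day // steps_per_episode                     # 72


def episode_start(k: int) -> str:
    """Clock time (HH:MM) at which evaluation episode k (0-based) starts."""
    minutes = k * episode_minutes
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


print(steps_per_episode, episode_minutes, episodes_per_day)  # 80 20 72
print(episode_start(15))  # 05:00
```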

In the context of this research, we did not have access to any information related to data-transmission delays. Thus, for our experiments, we randomly generated fixed data-transmission delays under the following realistic assumptions. We assume that the delay between a content provider and a hosting node is generally larger than the delay between any two hosting nodes. We also assume that the delay between two hosting nodes is usually larger than that between hosting and client-cluster nodes. Consequently, in our experiment, delays between CP nodes and hosting nodes were generated uniformly in the interval 120–700 [ms], delays between hosting nodes in the interval 20–250 [ms], and delays between hosting nodes and client clusters were randomly sampled from the interval 8–80 [ms]. The unit data-transportation costs were also randomly generated to resemble a multi-cloud deployment scenario. For links between CP nodes and hosting nodes, we assume that unit DT costs range between and USD per GB (https://cloud.google.com/cdn/pricing (accessed on 26 October 2021)). For links between hosting nodes, the unit DT costs were randomly generated between and USD per GB, while the DT cost between hosting nodes and client cluster nodes is assumed to be null.
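The delay generation can be sketched as below. The intervals are those given above, and the topology sizes follow Section 2.3.1. Drawing every delay uniformly (including the host-to-client delays, described only as randomly sampled), using symmetric host-to-host delays, the seed, and the function name are assumptions.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]

CP_HOST_MS = (120.0, 700.0)
HOST_HOST_MS = (20.0, 250.0)
HOST_CLIENT_MS = (8.0, 80.0)


def generate_delays(n_cp: int, n_hosts: int, n_clients: int,
                    seed: int = 0) -> Tuple[Matrix, Matrix, Matrix]:
    """Draw fixed link delays (ms) once; they stay constant during the simulation."""
    rng = random.Random(seed)
    cp_host = [[rng.uniform(*CP_HOST_MS) for _ in range(n_hosts)] for _ in range(n_cp)]
    host_host = [[0.0] * n_hosts for _ in range(n_hosts)]
    for i in range(n_hosts):
        for j in range(i + 1, n_hosts):
            host_host[i][j] = host_host[j][i] = rng.uniform(*HOST_HOST_MS)
    host_client = [[rng.uniform(*HOST_CLIENT_MS) for _ in range(n_clients)]
                   for _ in range(n_hosts)]
    return cp_host, host_host, host_client


cp_host, host_host, host_client = generate_delays(n_cp=41, n_hosts=16, n_clients=4)
```

Fixing the seed keeps the delays identical across the compared algorithms, so differences in RTT come only from their assignation decisions.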

The rest of the simulation parameters are given in Appendix A.3.

2.3.3. Simulation Environment

The training and evaluation procedures for our experiment were run on a Google Colab-Pro hardware-accelerated Environment equipped with a Tesla P100-PCIE-16GB GPU, an Intel(R) Xeon(R) CPU @ 2.30GHz processor with two threads, and 13 GB of main memory. The source code for our vCDN simulator and our DRL framework’s training cycles was written in Python 3.6.9. We used PyTorch (torch v. 1.7.0+cu101) as the deep learning framework. The whole code is available online in our public repository (https://github.com/QwertyJacob/e2d4qn_vcdn_sfc_deployment (accessed on 26 October 2021)).

2.3.4. Compared State-of-Art Algorithms

We compare our algorithm with the NFVDeep framework presented in [14]. For an exhaustive comparison with E2-D4QN, we also created three progressive enhancements of the NFVDeep algorithm. NFVDeep is a policy-gradient DRL framework for maximizing network throughput and minimizing operational costs in general-case SFC deployment. Xiao et al. designed a backtracking method: if a resource shortage or an exceeded-latency event occurs during SFC deployment, the controller ignores the request, and no reward is given to the agent. Consequently, NFVDeep is characterized by sparse rewards. The first algorithm we compare with is a reproduction of NFVDeep in our particular Live-Streaming vCDN Environment. The second algorithm introduces our dense-reward scheme into the NFVDeep framework, and we call it NFVDeep-Dense. The third method is an adaptation of NFVDeep that introduces our dueling DDQN framework but keeps the same reward policy as the original algorithm in [14], and we call it NFVDeep-D3QN. The fourth algorithm is called NFVDeep-Dense-D3QN, and it adds our dense reward policies to NFVDeep-D3QN. Notice that the difference between NFVDeep-Dense-D3QN and our E2-D4QN algorithm is that the latter does not use the backtracking mechanism: in contrast to any of the compared algorithms, we allow our agent to make wrong VNF assignations and to learn from its mistakes to escape from local optima.

Finally, we also compare our proposed algorithm with a greedy-policy lowest-latency and lowest-cost (GP-LLC) assignation agent, based on the work presented in [57]. GP-LLC is an extension of the algorithm in [57] that includes server-utilization, channel-ingestion-state, and resource-cost awareness in the decisions of a greedy policy. For each incoming VNF request, GP-LLC assigns a hosting node. This greedy policy tries not to overload nodes with assignation actions and always chooses the best available action in terms of QoS. Moreover, given a set of candidate nodes respecting such a greedy QoS-preserving criterion, the LLC criterion tends to optimize hosting costs. Appendix B describes the GP-LLC SFC Deployment algorithm in detail.
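A simplified greedy choice in the spirit of GP-LLC is sketched below. It is not the algorithm of Appendix B: the `Node` fields, the 0.9 overload threshold, the lexicographic ordering (ingestion state, then latency, then cost), and the fallback to all nodes when every node is overloaded (consistent with GP-LLC accepting every request, as discussed in Section 3.4) are assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    name: str
    max_utilization: float  # utilization of the most-used resource, in [0, 1]
    ingests_channel: bool   # already ingesting the requested channel
    latency_ms: float       # estimated delay contribution if chosen
    unit_cost: float        # hosting cost per resource unit


def gp_llc_choose(nodes: List[Node], overload_threshold: float = 0.9) -> Node:
    """Greedy lowest-latency, lowest-cost choice for one VNF request (simplified)."""
    candidates = [n for n in nodes if n.max_utilization < overload_threshold]
    if not candidates:  # never reject: fall back to every node
        candidates = nodes
    # QoS first (ingestion state, then latency); hosting cost breaks ties.
    return min(candidates, key=lambda n: (not n.ingests_channel, n.latency_ms, n.unit_cost))


nodes = [
    Node("A", 0.95, True, 10.0, 1.0),  # best QoS, but overloaded
    Node("B", 0.50, False, 5.0, 0.5),
    Node("C", 0.60, True, 20.0, 2.0),
    Node("D", 0.40, True, 20.0, 1.0),
]
print(gp_llc_choose(nodes).name)  # D
```

Such a rule looks only at the current snapshot; it has no mechanism to reserve cheap nodes for future traffic bursts.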

3. Results

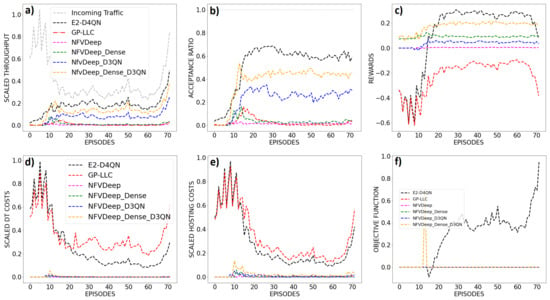

Various performance metrics for all the algorithms mentioned in Section 2.3.4 are presented in Figure 3. Recall that the measurements in this figure are taken during the 1-day evaluation trace, as mentioned in Section 2.3.2. Notice that, given the time-step duration and number of time-steps per episode specified in Section 2.3.2, the one-day trace consists of 72 episodes, starting at 00:00:00 and finishing at 23:59:59 on 29 July 2017.

Figure 3.

Basic evaluation metrics of E2-D4QN, GP-LLC, NFVDeep, and three variants of the latter, presented in Section 2.3.4. (a) Scaled mean network throughput per episode. (b) Mean Acceptance Ratio per episode. (c) Mean rewards per episode. (d) Mean scaled total Data-Transportation Costs per episode. (e) Mean scaled total hosting costs per episode. (f) Mean scaled optimization objective per episode.

3.1. Mean Scaled Network Throughput per Episode

The network throughput for each simulation time-step was computed using (10), and the mean values for each episode were scaled and plotted in Figure 3a. The scaled incoming traffic is also plotted in the same figure. In the first twenty episodes of the trace, which correspond to the period from 0:00 to 6:00, the incoming traffic goes from intense to moderate. From episode 20 to episode 60, incoming traffic shows only minor oscillations compared with the preceding decline, and it starts to grow again from the sixtieth episode on, which corresponds to the period from 18:00 to the end of the trace.

The initial ten episodes are characterized by comparable throughput for GP-LLC, E2-D4QN, and NFVDeep-Dense-D3QN. From the 20th episode on, however, the throughput of the policy-based NFVDeep variants decreases. From episode 15 on, which corresponds to the period from 05:00 to the end of the day, the throughput of our proposed algorithm is higher than that of every other algorithm.

3.2. Mean Acceptance Ratio per Episode

The AR for each simulation time-step was computed using (1), and the mean values for each episode are plotted in Figure 3b. At the beginning of the test, corresponding to the first five episodes, E2-D4QN has superior AR performance. From episode 5 to 15, only E2-D4QN and NFVDeep-Dense-D3QN keep increasing their acceptance ratio. For the rest of the day, E2-D4QN is the only algorithm that holds a satisfactory acceptance ratio. It should be stressed that the AR cannot reach one in our experiments because the parameter configuration described in Section 2.3.2 deliberately reproduces overloaded network conditions.

3.3. Mean Rewards per Episode

Figure 3c shows the mean rewards per episode. We plot the rewards obtained at every assignation step. Notice that this selection corresponds to the non-null rewards in the sparse-reward models.

During the first 15 episodes (00:00 to 05:00), both GP-LLC and E2-D4QN increase their mean rewards, starting from a worse performance than the NFVDeep algorithms. This is because E2-D4QN and GP-LLC, owing to the Enhanced-Exploration mechanism, are the only algorithms whose reward assignation includes negative rewards. From episode 15 to 20, E2-D4QN reaches the rewards obtained by NFVDeep-Dense-D3QN, and from episode 20 on, E2-D4QN performs better than the NFVDeep variants, mostly due to the lower operational costs of this algorithm. Finally, with the exception of the last five episodes, E2-D4QN alone dominates the rest of the trace in the mean reward metric.

3.4. Total Scaled Data-Transportation Costs per Episode

The total DT costs per time-step, as defined in (13), were computed, and the mean values per episode were scaled and plotted in Figure 3d. During the whole evaluation period, both E2-D4QN and GP-LLC incur higher DT costs than every other algorithm. This phenomenon is explained by the Enhanced Exploration mechanism, which permits E2-D4QN and GP-LLC to accept requests even when the resulting RTT is over the acceptable threshold. Unlike the backtracking algorithms, which ignore requests upon a resource shortage or an exceeded latency, E2-D4QN and GP-LLC accept every incoming request. Notice, however, that E2-D4QN and GP-LLC progressively reduce DT costs due to the creation of common paths for similar SFCs. From the twentieth episode on, however, only E2-D4QN minimizes such costs while maintaining an acceptance ratio greater than .

3.5. Total Scaled Hosting Costs per Episode

A similar explanation can be given for the behavior of the total hosting cost. This cost was computed for each time-step using (11), and the mean values per episode were scaled and plotted in Figure 3e. Given the adaptive resource provisioning algorithm described in Appendix A.1, we can argue that, in general, the hosting cost is high for E2-D4QN and GP-LLC because their throughput is high. The hosting-cost burst that characterizes the first twenty episodes, however, can be explained by the network initialization state during the experiments: every algorithm is evaluated from a network state that is uncommon with respect to the steady state reached during training. The algorithms equipped with the Enhanced-Exploration mechanism tend to worsen such a performance drop at the beginning of the testing trace because of the unconstrained nature of this mechanism. It is the Enhanced-Exploration mechanism, however, that drives our proposed agent to learn sub-optimal policies that maximize the network acceptance ratio.

3.6. Optimization Objective

The optimization objective as defined in (16) was computed at each time-step, and the mean values per episode were scaled and plotted in Figure 3f. Recall that (16) is invalid whenever the acceptance ratio is below the minimum threshold of 0.5, as mentioned in Section 2.1.4. For this reason, in Figure 3f, we set the optimization value to zero whenever the minimum service constraint was not met. Notice that no algorithm achieves the minimum acceptance ratio during the first ten episodes of the test. This behavior can be explained by the greedy initialization with which every test was carried out: the initial network state for every algorithm is very different from the states observed during training. From the tenth episode on, however, E2-D4QN is the only algorithm to achieve a satisfactory acceptance ratio, and thus the optimization objective function has a non-zero value.

4. Discussion

Trace-driven simulations have revealed that our approach adapts to the complexity of the particular context of Live-Streaming in vCDN better than the state-of-the-art algorithms designed for general-case SFC deployment. In particular, our simulation test revealed decisive QoS performance improvements in terms of acceptance ratio with respect to every backtracking algorithm. Our agent progressively learns to adapt to complex environment conditions such as different user cluster traffic patterns, different channel popularities, unit resource provisioning costs, VNF instantiation times, etc.

We assess the algorithm’s performance in a bounded-resource scenario, aiming to build a safe-exploration strategy that enables the market entry of new vCDN players. Our experiments have shown that the proposed algorithm, E2-D4QN, is the only one to adapt to such conditions, maintaining an acceptance ratio above that of the general-case state-of-the-art techniques while keeping a delicate balance between network throughput and operational costs.

Based on the results in the previous section, we now discuss the main reasons that make E2-D4QN the most suitable algorithm for online optimization of SFC Deployment in a Live-video delivery vCDN scenario. The main reason for our proposed algorithm’s advantage is the combination of enhanced exploration with a dense-reward mechanism in a dueling DDQN framework. We argue that such a combination leads the agent to discover, during training, actions that are convenient in the long term rather than only in the short term.

4.1. Environment Complexity Adaptation

As explained in Section 2.3.4, we have compared our E2-D4QN agent with the NFVDeep algorithm presented in [14], with three progressive enhancements of that algorithm, and with an extension of the algorithm presented in [57], which we called GP-LLC. The authors of [14] assumed utilization-independent processing times. A consequence of this assumption is that the remaining latency budget with respect to the RTT threshold can be computed before each assignment. In our work, instead, we argue that realistic processing times should be modeled as utilization-dependent. Moreover, Xiao et al. modeled neither ingestion-related utilization nor VNF instantiation time penalties. These assumptions simplify the environment and help on-policy DRL schemes like the one in [14] to converge to suitable solutions. Lastly, NFVDeep also assumes prior knowledge of each SFC session’s duration. This feature helps the agent learn to accept longer sessions to increase the throughput. Unfortunately, it is not realistic to assume knowledge of session durations when modeling Live-Streaming in a vCDN context. Our model is agnostic to this feature and maximizes the overall throughput by optimizing the acceptance ratio. This paper shows that the NFVDeep algorithm cannot reach a good AR in SFC Deployment optimization without all the aforementioned relaxations.

4.2. State Value, Advantage Value and Action Value Learning

In this work, we propose the use of the dueling-DDQN framework for implementing a DRL agent that optimizes SFC Deployment. Such a framework is meant to learn approximators for the state value function, , the action advantage function, , and the action-value function, . Learning such functions helps to differentiate between relevant actions in the presence of many similar-valued actions. This is the main reason why NFVDeep-D3QN improves AR with respect to NFVDeep: learning the action advantage function helps to identify convenient long-term actions among a set of similar-valued actions. For example, preserving the resources of low-cost nodes for future popular-channel bursts can be more convenient in the long term than adopting a round-robin load-balancing strategy during low-traffic periods. Moreover, with such round-robin dispatching, the SFC routes to content providers would not tend to divide the hosting nodes into non-overlapping clusters. This provokes more resource usage in the long run: almost every node ends up ingesting the content of almost every content provider. Since content ingestion is generally much heavier in resource usage than content serving, this strategy accentuates the resource leakage on the vCDN in the long run, leading to poor QoS performance. During the training phase, our E2-D4QN learns to polarize the SFC routes to minimize content-ingestion resource usage. Over the whole evaluation period, such a biased policy performs better than any of the compared algorithms.
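The ingestion argument can be made concrete with a toy count. In this hypothetical example (3 hosting nodes, 4 channels, 12 requests; names illustrative), round-robin dispatching makes every node ingest every channel, whereas a channel-sticky assignment, similar in spirit to the polarized routes described above, needs a single ingestion per channel. Load limits, delays, and costs are ignored.

```python
from typing import Callable, List, Set, Tuple

N_NODES = 3
CHANNELS = ["a", "b", "c", "d"]


def ingestion_pairs(requests: List[str],
                    assign: Callable[[int, str], int]) -> Set[Tuple[int, str]]:
    """(node, channel) pairs that must be ingested under an assignment rule."""
    return {(assign(i, ch), ch) for i, ch in enumerate(requests)}


requests = [CHANNELS[i % len(CHANNELS)] for i in range(12)]

round_robin = ingestion_pairs(requests, lambda i, ch: i % N_NODES)
sticky = ingestion_pairs(requests, lambda i, ch: CHANNELS.index(ch) % N_NODES)
print(len(round_robin), len(sticky))  # 12 4
```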

4.3. Dense Reward Assignation Policies

Our agent converges to sub-optimal policies thanks to a carefully designed reward schema such as the one presented in Section 2.2.2. Our algorithm assigns a specific reward at each MDP transition, considering the optimality of VNF assignments in terms of QoS, hosting costs, and data transfer costs. This dense-reward schema enhances the agent’s convergence. Indeed, in our experiments, we have also noticed that the dense-reward algorithms improve on the results of their sparse-reward counterparts: Figure 3b shows that NFVDeep-Dense performs slightly better than NFVDeep, and NFVDeep-Dense-D3QN performs better than NFVDeep-D3QN. This improvement exists because dense rewards provide valuable feedback at each assignation step of the SFC, improving the convergence of the DRL agents to shorter RTTs. On the other hand, we have also observed that, even though cost-related penalties are subtracted only sparsely in our experiments, the proposed DRL agent learns to optimize SFC deployment not only with respect to QoS but also with respect to operational costs.

4.4. Enhanced Exploration

Notice that GP-LLC does not learn the inherent network traffic dynamics, which makes it unable to distinguish convenient long-term actions from greedy ones. For example, GP-LLC will not adopt long-term resource consolidation policies, in which each channel is ingested by a defined subset of nodes to amortize the overall ingestion resource consumption. On the other hand, we argue that the main reason keeping NFVDeep below a satisfactory performance is the lack of the enhanced exploration mechanism. Indeed, we attribute the fact that the acceptance ratio of E2-D4QN surpasses that of every other algorithm to the enhanced exploration we add: it enriches the experience replay buffer with non-optimal SFC deployments during training, preventing the agent from getting stuck in local optima, which we believe is the main problem of NFVDeep under our environment conditions.

In practice, in all the NFVDeep and backtracking algorithms, i.e., those without the enhanced-exploration mechanism, the candidate nodes are filtered at each VNF assignment according to their utilization availability: only non-overloaded nodes are candidates, and if the agent chooses an overloaded node, the action is ignored and no reward is given. Our model, instead, makes assignment decisions without being constrained by current node utilization, in order to enhance the exploration of the action space. The carefully designed state space encodes important features that drive learning towards near-optimal VNF placements:

- The request vector encodes useful information about incoming requests, which helps our agent extract the incoming traffic patterns.

- The ingestion vector helps our agent optimize ingestion-related resource demand by avoiding session assignments to VNF instances that are not ingesting the requested content at assignment time.

- The maximum utilization vector gives our agent resource-utilization awareness, allowing it to converge to assignment policies that optimize processing times and preserve QoS.

Consequently, our agent learns optimal SFC deployment policies without knowing the actual bounds of the resource provision or the current instantiation state of the VNF instances that compose the vCDN. Thanks to our carefully designed reward assignment scheme, it learns to recognize the maximum resource provisioning of the VNFs and to avoid assigning sessions to non-initialized VNFs.
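The state features and the unconstrained action selection described above can be sketched as follows. The vector layout, the function names, and the use of plain ε-greedy selection are assumptions made for illustration; the sketch only contrasts the masked candidate set of the baselines with the unmasked one used for enhanced exploration.

```python
import numpy as np


def build_state(request_onehot: np.ndarray, ingesting: np.ndarray,
                utilization: np.ndarray) -> np.ndarray:
    """Concatenate the three feature groups (hypothetical layout).

    request_onehot: encoding of the incoming request, shape (k,)
    ingesting:      True per hosting node if it already ingests the
                    requested content, shape (num_nodes,)
    utilization:    current utilization per node and resource type,
                    shape (num_nodes, num_resources)
    """
    max_util = utilization.max(axis=1)  # worst resource utilization per node
    return np.concatenate(
        [request_onehot, ingesting.astype(np.float32), max_util]
    ).astype(np.float32)


def select_node(q_values: np.ndarray, overloaded: np.ndarray, epsilon: float,
                rng: np.random.Generator, masked: bool):
    """Epsilon-greedy choice of a hosting node.

    masked=True mimics the baselines: overloaded nodes are filtered out.
    masked=False mimics enhanced exploration: every node is a candidate,
    and poor choices are discouraged only through the reward.
    """
    candidates = np.arange(len(q_values))
    if masked:
        candidates = candidates[~overloaded]
        if candidates.size == 0:
            return None  # no admissible node for this VNF
    if rng.random() < epsilon:
        return int(rng.choice(candidates))  # explore
    return int(candidates[np.argmax(q_values[candidates])])  # exploit
```

With Q-values `[5, 1, 3]` and node 0 overloaded, a purely greedy masked agent picks node 2, while the unmasked agent picks node 0; the resulting transition, with its penalty, is exactly the kind of non-optimal experience that enriches the replay buffer.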

4.5. Work Limitations and Future Research Directions

We have based the experiments in this paper on a real-world data set from a particular video delivery operator, whose proprietary CDN hosting nodes are deployed across Italy. However, such a medium-scale deployment is not the only possible CDN configuration. Consequently, as future work, we plan to obtain or generate data on large-scale topologies to assess the scalability of our algorithm in such scenarios.

Further, this paper assesses the performance of various DRL-based algorithms. However, the real-world data set available to the authors was limited to a five-day trace. Consequently, the algorithms presented in this work were trained on a four-day trace and evaluated on a single day. Future research directions include assessing our agent's training and evaluation performance on data covering longer periods.

Finally, in this work, we have used a greedy and reactive VNF resource-provisioning algorithm, as specified in Appendix A.1. A DRL-based resource-provisioning policy would instead take proactive actions that pay off in the long term. Combined with the SFC deployment policy presented in this work, such a resource-provisioning policy could further optimize QoS and costs. Thus, future work also includes the development of a multi-agent DRL framework for the joint optimization of resource provisioning and SFC deployment in the context of live-streaming in vCDNs.

Author Contributions

Conceptualization and methodology, J.F.C.M., L.R.C., R.S. and A.S.R.; software, J.F.C.M. and R.S.; investigation, validation and formal analysis J.F.C.M., R.S. and L.R.C.; resources and data curation, J.F.C.M., L.R.C. and R.S.; writing—original draft preparation, J.F.C.M.; writing—review and editing, J.F.C.M., R.S., R.P.C.C., L.R.C., A.S.R. and M.M.; visualization, J.F.C.M.; supervision and project administration, L.R.C., A.S.R. and M.M.; funding acquisition, R.P.C.C. and M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by ELIS Innovation Hub within a collaboration with Vodafone and partly funded by ANID—Millennium Science Initiative Program—NCN17_129. R.C.C was funded by ANID Fondecyt Postdoctorado 2021 # 3210305.

Data Availability Statement

Not applicable; the study does not report any data.

Acknowledgments

The authors wish to thank L. Casasús, V. Paduano and F. Kieffer for their valuable insights.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| CDN | Content Delivery Network |
| CP | Content Provider |
| DT | Data-Transportation |
| GP-LLC | Greedy Policy of Lowest Latency and Lowest Cost algorithm |
| ILP | Integer Linear Programming |
| ISP | Internet Service Provider |
| MANO | Management and Orchestration framework |
| MC | Markov Chain |
| MDP | Markov Decision Process |
| MVNO | Mobile Virtual Network Operator |
| NFV | Network Function Virtualization |
| NFVI | Network Function Virtualization Infrastructure |
| OTT | Over-The-Top Content |
| QoE | Quality of Experience |
| QoS | Quality of Service |
| RTT | Round-Trip-Time |
| SDN | Software Defined Networking |
| SFC | Service Function Chain |
| vCDN | virtualized Content Delivery Network |
| VNF | Virtual Network Function |
| VNO | Virtual Network Orchestrator |

Appendix A. Further Modelisation Details

Appendix A.1. Resource Provisioning Algorithm

In this paper, we assume that the VNO component applies a greedy resource-provisioning algorithm, i.e., the resource provision of each VNF instance for the next time-step is computed as:

where the parameters involved are the maximum resource capacity available for the VNF instance and a fixed desired utilization of that instance after the adaptation takes place and before further session requests are received; recall that the current resource utilization of the instance is also an input. The resource adaptation procedure is triggered periodically, every fixed number of time-steps. On the other hand, each time a VNF instance is instantiated, the VNO allocates a fixed minimum capacity for each resource type of that instance.
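Since the provisioning formula itself is not reproduced in this text, the following is only a hypothetical greedy rule consistent with the verbal description: it resizes the provision so that the load currently in use would sit at the desired utilization, capped by the maximum capacity, and it runs every fixed number of time-steps. The default period of 20 and the cap of 20 used below come from Table A1; the function names and the exact form of the rule are assumptions, and the minimum allocation at instantiation is not modeled.

```python
def next_provision(provision: float, utilization: float,
                   desired_utilization: float, max_capacity: float) -> float:
    """Hypothetical greedy rule: resize so that the load currently in use
    would sit at `desired_utilization`, never exceeding `max_capacity`."""
    in_use = provision * utilization  # resource units currently consumed
    return min(in_use / desired_utilization, max_capacity)


def adaptation_due(time_step: int, period: int = 20) -> bool:
    """Adaptation is triggered periodically, every `period` time-steps."""
    return time_step % period == 0
```

For example, an instance with 10 provisioned units at 90% utilization and a desired utilization of 50% would be resized to 18 units, whereas one with 15 units at full utilization would be capped at 20.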

Appendix A.2. Inner Delay-Penalty Function

The core of our QoS-related reward is the delay-penalty function, which has the properties specified in Section 2.2.1. The function that we used in our experiments is the following:

Notice that the domain of this function is the RTT of any SFC deployment, and its co-domain is a bounded segment. Notice also that:

Such a bounded co-domain helps to stabilize and improve the learning performance of our agent. It is worth noting, however, that similar functions could easily be designed for other values of T.
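As an illustration of how a similar bounded function could be built for an arbitrary T, the sketch below uses a hyperbolic tangent. It is not the function used in our experiments; it assumes that T acts as an RTT threshold and that the desired properties are monotonic decrease in the RTT, a zero crossing at T, and values confined to [-1, 1].

```python
import math


def delay_penalty(rtt_ms: float, threshold_ms: float) -> float:
    """Illustrative bounded penalty (NOT the paper's function).

    Positive for RTTs below the threshold, zero at the threshold,
    negative above it, and always within [-1, 1] because tanh is bounded.
    """
    return math.tanh((threshold_ms - rtt_ms) / threshold_ms)
```

Rescaling by `threshold_ms` makes the shape independent of the chosen threshold, so the same expression can be reused for other values of T.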

Appendix A.3. Simulation Parameters

Table A1 lists all our simulation parameters. Every simulation uses these values unless other values are explicitly specified.

Table A1.

List of simulation parameters.


| Parameter | Description | Value |
|---|---|---|
| | CPU Unit Resource Costs (URC) (for each cloud provider) | |
| | Memory URC | |
| | Bandwidth URC | |
| | Maximum resource provision parameter (assumed equal for all the resource types) | 20 |
| | Minimum resource provision parameter (assumed equal for all the resource types) | 5 |
| | Payload workload exponent | |
| | Bit-rate workload exponent | |
| | Optimal CPU Processing Time (PT) (baseline of over-usage degradation) | |
| | Optimal memory PT | |
| | Optimal bandwidth PT | |
| | CPU exponential degradation base | 100 |
| | Memory exponential degradation base | 100 |
| | Bandwidth exponential degradation base | 100 |
| | cache VNF Instantiation Time Penalization (ITP), in ms | 10,000 |
| | streamer VNF ITP, in ms | 8000 |
| | compressor VNF ITP, in ms | 7000 |
| | transcoder VNF ITP, in ms | 11,000 |
| | Time-steps per greedy resource adaptation | 20 |
| | Desired resulting utilization after adaptation | |
| | Optimal resource utilization (assumed equal for every resource type) | |

Appendix A.4. Training Hyper-Parameters

Table A2 lists the hyper-parameter values used in our training cycles. Every training procedure uses these values unless other values are explicitly specified.

Table A2.

List of hyper-parameters’ values for our training cycles.


| Hyper-Parameter | Value |
|---|---|
| Discount factor (γ) | |
| Learning rate | |
| Time-steps per episode | 80 |
| Initial ε-greedy action probability | |
| Final ε-greedy action probability | |
| ε-greedy decay steps | |
| Replay memory size | |
| Optimization batch size | 64 |
| Target-network update frequency | 5000 |
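The ε-greedy and target-network entries of Table A2 interact with training as sketched below. The linear shape of the ε decay and the interpretation of the update frequency as a number of optimization steps are assumptions; the table gives only the initial and final probabilities, the number of decay steps, and the value 5000.

```python
def epsilon_at(step: int, eps_start: float, eps_end: float,
               decay_steps: int) -> float:
    """Linearly anneal the epsilon-greedy probability from eps_start to
    eps_end over decay_steps, then hold it at eps_end (assumed shape)."""
    fraction = min(step / decay_steps, 1.0)
    return eps_start + fraction * (eps_end - eps_start)


def should_sync_target(step: int, update_every: int = 5000) -> bool:
    """Copy the online weights into the target network every
    `update_every` steps (unit of the table entry assumed to be steps)."""
    return step > 0 and step % update_every == 0
```

Holding ε at its final value after the decay keeps a small amount of exploration for the rest of training, while the periodic target synchronization stabilizes the Double-DQN targets.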

Appendix B. GP-LLC Algorithm Specification

In this paper, we have compared our E2-D4QN agent with a greedy policy lowest-latency and lowest-cost (GP-LLC) SFC deployment agent, whose behavior is described in Algorithm A1. Note that the lowest-latency and lowest-cost (LLC) criterion can be seen as a procedure that, given a set of candidate hosting nodes, chooses the hosting node on which to deploy the current VNF request of an SFC request r. This procedure is at the core of the GP-LLC algorithm, while the outer part of the algorithm chooses the hosting nodes that form the candidate set according to a QoS maximization criterion. The LLC criterion works as follows. Given a set of candidate hosting nodes and a VNF request, it partitions the candidate nodes into subsets according to the cloud provider they belong to. It then chooses, from the subset of the cheapest cloud provider, the hosting node whose route generates the lowest transportation delay, i.e., the fastest route.

The outer part of the algorithm works as follows. Every time a VNF request needs to be processed, the GP-LLC agent monitors the network conditions. The agent identifies the set of hosting nodes that are currently not overloaded, the set of still-scalable hosting nodes (those whose current resource provision is below the maximum for every resource type), and the set of hosting nodes that currently have an instance of the requested VNF ingesting the content of the content provider requested by r (lines 2–4). The still-scalable set thus contains the nodes whose resource provision can still be augmented by the VNO. Choosing an ingesting node to host the VNF request avoids a Cache MISS event and consequently guarantees the acceptance of r. If some non-overloaded node is also ingesting the requested content, the agent assigns the VNF request to one of these nodes following the LLC criterion. Otherwise, if the non-overloaded set is at least not empty, a node from it is chosen using the LLC criterion. If, instead, no node is non-overloaded, a node from the still-scalable set is chosen with the LLC criterion. Finally, if both the non-overloaded and the still-scalable sets are empty, a random hosting node is chosen (lines 5–16). Choosing a random node in this last case, instead of applying the LLC criterion over all hosting nodes, prevents the assignment policy from being biased towards cheap nodes with fast routes, and thereby improves the overall load balance among the hosting nodes.

Notice that, whenever possible, GP-LLC avoids assigning VNFs to overloaded nodes and always chooses the best actions in terms of QoS. Moreover, given a set of candidate nodes satisfying this greedy QoS-preserving criterion, the inner LLC criterion tends to optimize hosting costs and data-transmission delays. Notice also that GP-LLC does not take data-transportation costs into account for SFC deployment.

| Algorithm A1 GP-LLC VNF Assignation procedure. |
|---|
| 1: for each VNF request in r do |
| 2: Get the set N_o of non-overloaded hosting nodes |
| 3: Get the set N_s of still-scalable hosting nodes |
| 4: Get the set N_i of hosting nodes that currently have an instance of the requested VNF ingesting the requested content |
| 5: if \|N_o\| > 0 then |
| 6: if \|N_o ∩ N_i\| > 0 then |
| 7: use the LLC criterion to choose from N_o ∩ N_i |
| 8: else |
| 9: use the LLC criterion to choose from N_o |
| 10: end if |
| 11: else |
| 12: if \|N_s\| > 0 then |
| 13: use the LLC criterion to choose from N_s |
| 14: else |
| 15: choose a random node from all the hosting nodes |
| 16: end if |
| 17: end if |
| 18: end for |
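A compact Python sketch of GP-LLC is given below to make the branching explicit. It follows our reading of lines 1–16, in particular that the first preference goes to non-overloaded nodes that already ingest the requested content; the `HostingNode` fields, the per-provider cost table, and the function names are hypothetical, and node state is not updated between successive VNF assignments.

```python
import random
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Tuple


@dataclass(frozen=True)
class HostingNode:
    name: str
    provider: str          # cloud provider owning the node
    route_delay_ms: float  # transport delay of the route to this node
    overloaded: bool       # currently in overload conditions
    scalable: bool         # provision still below the maximum for every resource
    # (VNF type, content provider) pairs currently ingested on this node
    ingesting: FrozenSet[Tuple[str, str]] = frozenset()


def llc(candidates: List[HostingNode],
        provider_cost: Dict[str, float]) -> HostingNode:
    """LLC criterion: group candidates by cloud provider, keep the cheapest
    provider's group, and return its node with the fastest route."""
    groups: Dict[str, List[HostingNode]] = {}
    for node in candidates:
        groups.setdefault(node.provider, []).append(node)
    cheapest = min(groups, key=lambda p: provider_cost[p])
    return min(groups[cheapest], key=lambda n: n.route_delay_ms)


def gp_llc_assign(vnf_type: str, content_provider: str,
                  nodes: List[HostingNode], provider_cost: Dict[str, float],
                  rng: random.Random) -> HostingNode:
    """Choose a hosting node for one VNF request (lines 2-16)."""
    non_overloaded = [n for n in nodes if not n.overloaded]
    scalable = [n for n in nodes if n.scalable]
    if non_overloaded:
        # Prefer nodes already ingesting the content: no cache MISS.
        warm = [n for n in non_overloaded
                if (vnf_type, content_provider) in n.ingesting]
        return llc(warm or non_overloaded, provider_cost)
    if scalable:
        return llc(scalable, provider_cost)
    # Random fallback avoids biasing the policy towards cheap, fast nodes.
    return rng.choice(nodes)


def gp_llc_deploy(sfc: List[str], content_provider: str,
                  nodes: List[HostingNode], provider_cost: Dict[str, float],
                  rng: random.Random) -> List[str]:
    """Outer loop over the VNF requests of an SFC request (line 1)."""
    return [gp_llc_assign(v, content_provider, nodes, provider_cost, rng).name
            for v in sfc]
```

Passing a seeded `random.Random` makes the random fallback reproducible across simulation runs.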

References

- Cisco, V. Cisco Visual Networking Index: Forecast and Methodology 2016–2021. Complet. Vis. Netw. Index (VNI) Forecast. 2017, 12, 749–759.

- Budhkar, S.; Tamarapalli, V. An overlay management strategy to improve QoS in CDN-P2P live streaming systems. Peer-to-Peer Netw. Appl. 2020, 13, 190–206.

- Demirci, S.; Sagiroglu, S. Optimal placement of virtual network functions in software defined networks: A survey. J. Netw. Comput. Appl. 2019, 147, 102424.

- Li, J.; Lu, Z.; Tong, Y.; Wu, J.; Huang, S.; Qiu, M.; Du, W. A general AI-defined attention network for predicting CDN performance. Future Gener. Comput. Syst. 2019, 100, 759–769.