1. Introduction

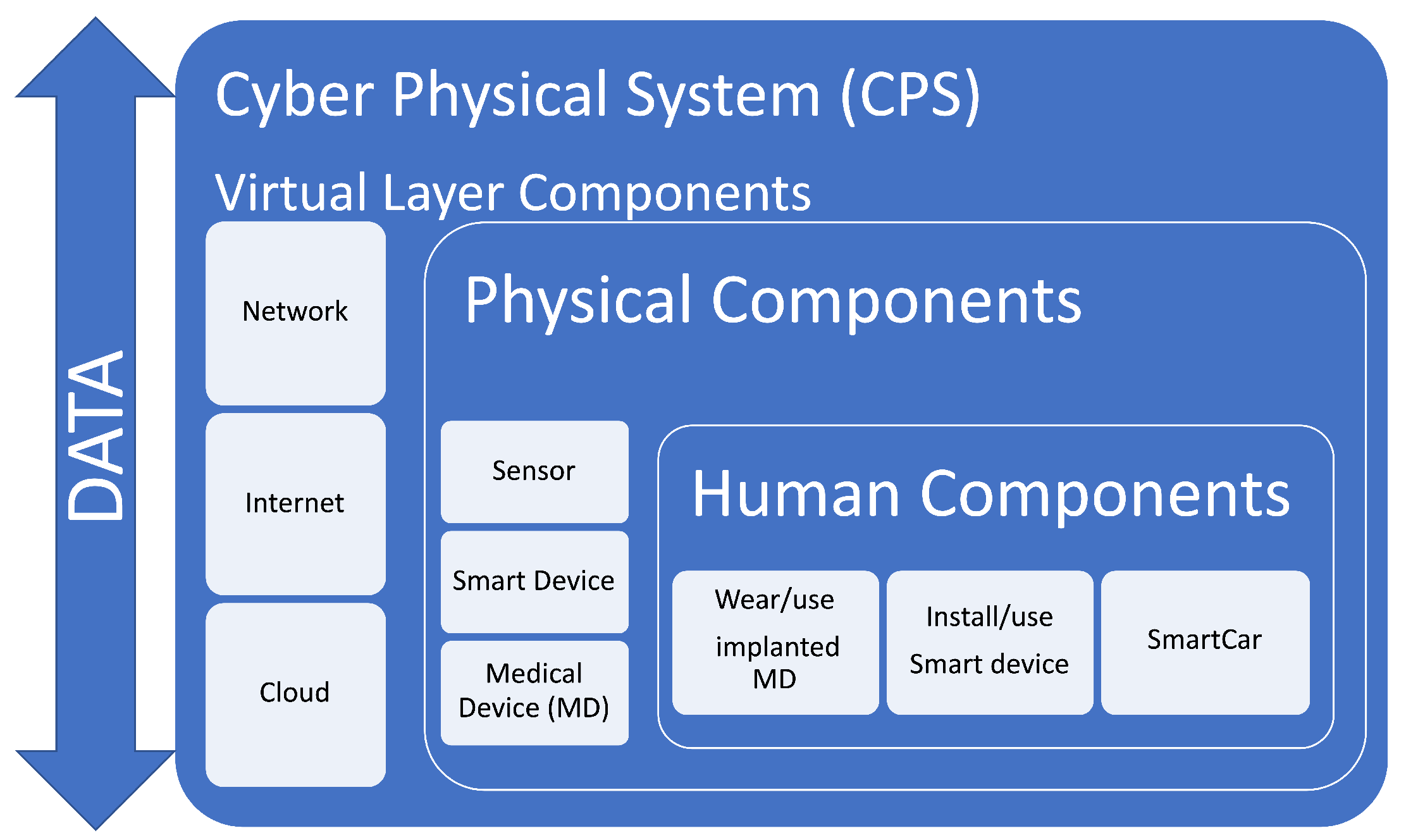

Cyber Physical Systems (CPS) are “integrations of computation and physical processes” [1]. Thus, CPS can combine human, physical and technological components and allow these to communicate seamlessly to derive value (see Figure 1). The value generated will depend on the perspective of the user. For example, for the individual who uses their smart phone to communicate with their heating system, the value will be in having a warm home when they get there. For the electricity company, the value may be in providing the power needed on-demand. In addition, the electricity company may also derive value from, for example, being able to remotely read the customer’s meter, or use the data generated to predict when the customer will next need additional power to be supplied. However, this also raises a number of security and privacy concerns that need to be explored and addressed.

While a lot of research has been done around what the security threats, vulnerabilities and implications of CPS might be [2], most of this work considers privacy only as a sub-set of security considerations [3]. We contend that privacy must be considered in its own right and on a more holistic basis. Some work has been done in this area, discussing how privacy might be affected by the use of CPS and highlighting the need for improved decision making to ensure privacy is preserved in CPS [4], a notion that we subscribe to. We contend that not only does privacy need to be discussed, but a practical framework for doing so in a holistic manner is also sorely needed.

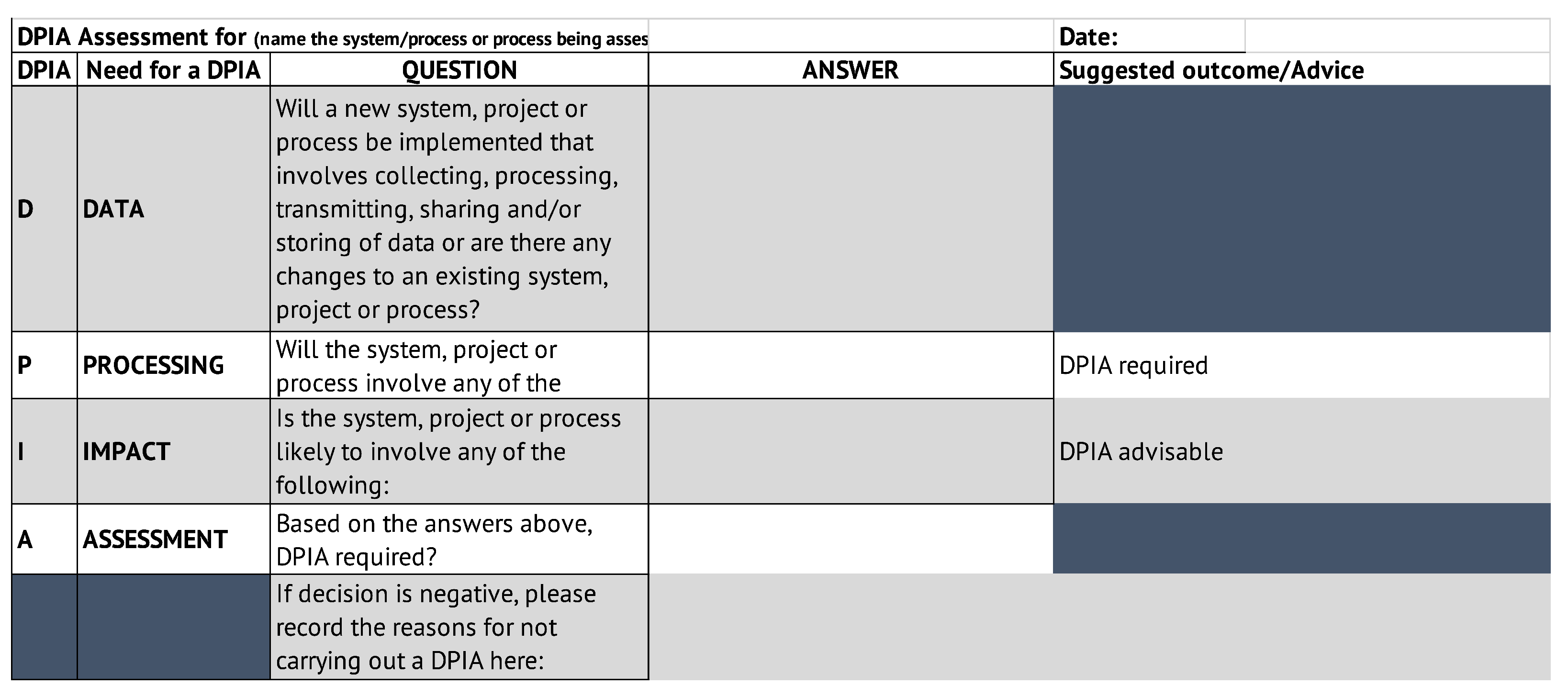

This paper seeks to address this gap by introducing the DPIA Data Wheel, a privacy risk assessment framework devised for supporting informed decision making around privacy risk and conducting Data Protection Impact Assessments (DPIA) for any system, project or scenario that involves high risk processing of data. This, we contend, will include most CPS.

Contribution

The creation of the DPIA Data Wheel came about as part of an action intervention study conducted to guide a local Charity in the UK through implementing the changes brought in by the General Data Protection Regulation (GDPR) across the organisation [5]. As part of this implementation, a number of complementary elements arose, including a need for a privacy risk assessment that would allow the organisation to assess the privacy implications of their data processing on their customers in a consistent, repeatable manner, allowing them to meet their obligations for conducting DPIAs under Article 35 of GDPR [6]. This paper discusses this complementary element, and provides a detailed explanation of how this DPIA framework was created, and the considerations taken into account in the design.

The findings from this study showed that including contextual questions in a practical guided manner within the privacy risk assessment helped raise awareness of what is meant by context in terms of privacy risk assessment, and also identified contextual nuances that might not otherwise be apparent when conducting traditional privacy impact assessments (PIAs). However, in-depth training will need to be provided to ensure assessors appreciate and know how to assess privacy risk in context; this is discussed in Section 7. Further, the results show that for optimum contextual consideration to be included in the privacy risk assessment, it is advisable to consult with a wide range of stakeholders as part of the consultation process. This also widens the scope for more privacy risks to be identified and included in the privacy risk assessment. We also found that while organisations are comfortable assessing risks from an organisational perspective, they struggled to appreciate that the DPIA requires them to consider risks from the perspective of their customers or service recipients and what this means in practice (see Section 7.1).

In presenting the DPIA Data Wheel, three contributions are presented. First, we provide an exemplar model for how Contextual Integrity (CI) can be used in practice, to ensure context is included as standard, within the privacy risk assessment process, from the perspective of both the organisation and their service recipients. Second, we provide organisations with an empirically evaluated, repeatable, consistent approach to privacy risk assessment, that will facilitate users in assessing the privacy risks associated with any CPS likely to involve high risk data processing, and identify suitable mitigations for each privacy risk identified. Third, we show how, by using the DPIA Data Wheel, organisations can use the privacy risk assessments created, towards demonstrating compliance with the GDPR.

The rest of this paper is organised as follows. Section 2 explains the background to the creation of a practical DPIA framework. Next, in Section 3, the underpinning concepts and work upon which this DPIA framework was created are outlined, and we explain how CI was used to create a DPIA framework for assessing privacy risks to the individual. This DPIA framework was designed using concepts devised in a pilot study, CLIFOD (Section 3.1), and aligned with official guidance (Section 3.2). The design of the DPIA Data Wheel itself is provided in Section 4. Section 5 explains how this framework was empirically evaluated in three different practical environments, using real data. This is followed by a review of related work in Section 6. We then discuss the outcomes of the evaluations in Section 7 before concluding in Section 8 by re-iterating and reflecting on the contributions made to the wider community.

3. The DPIA Framework

The DPIA Framework was created as part of a GDPR implementation study, conducted in collaboration with a local Charity in the UK [

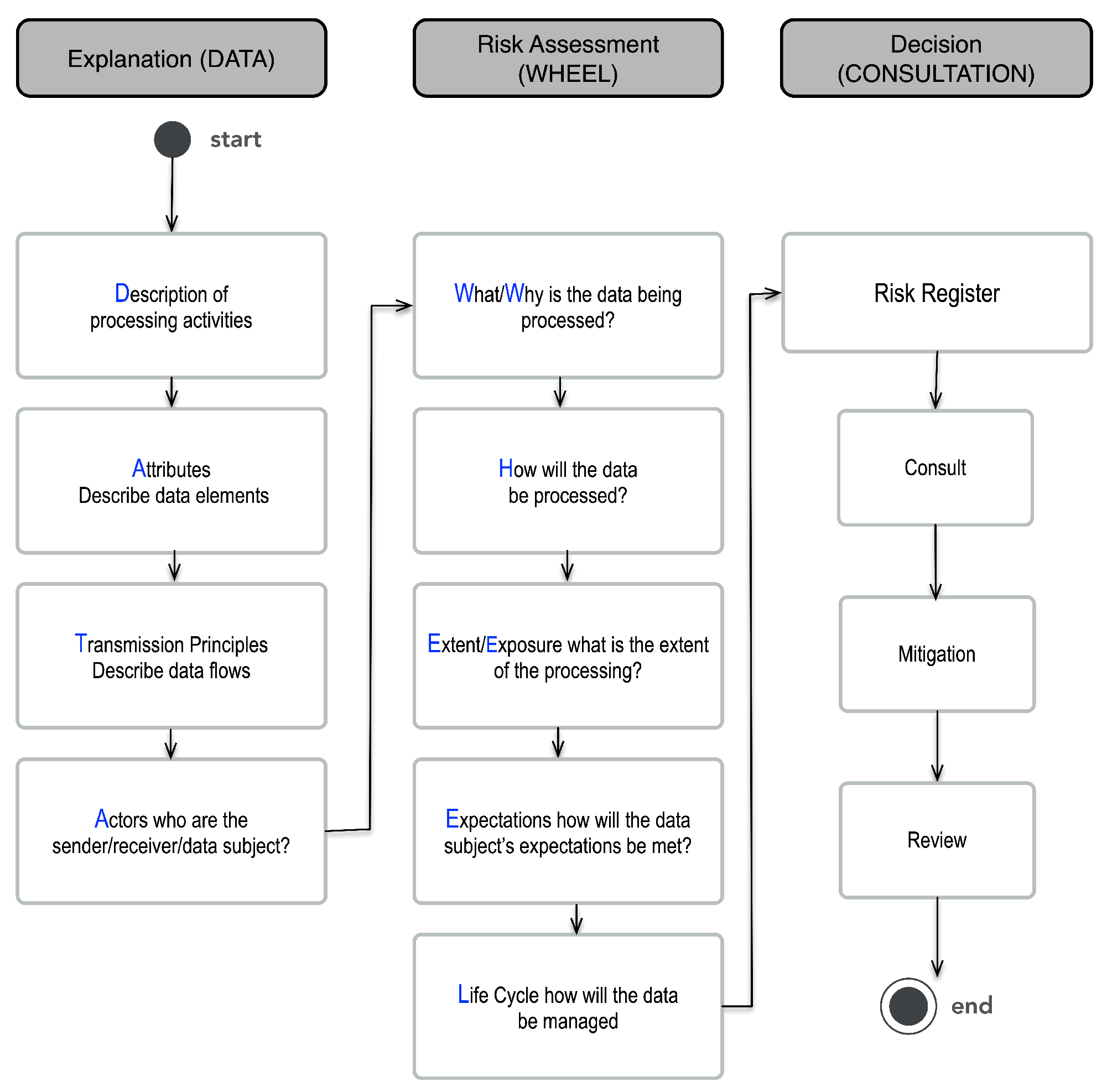

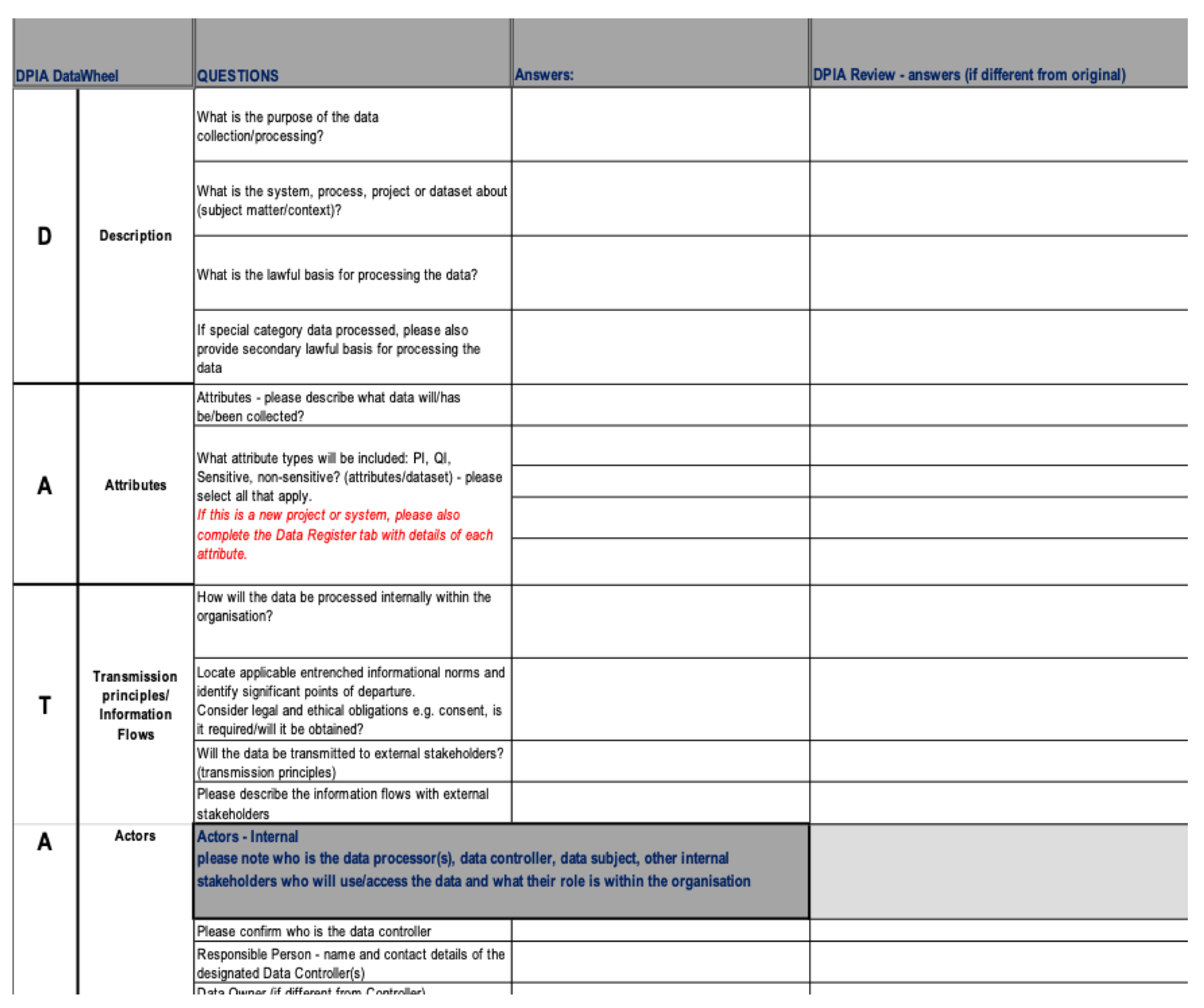

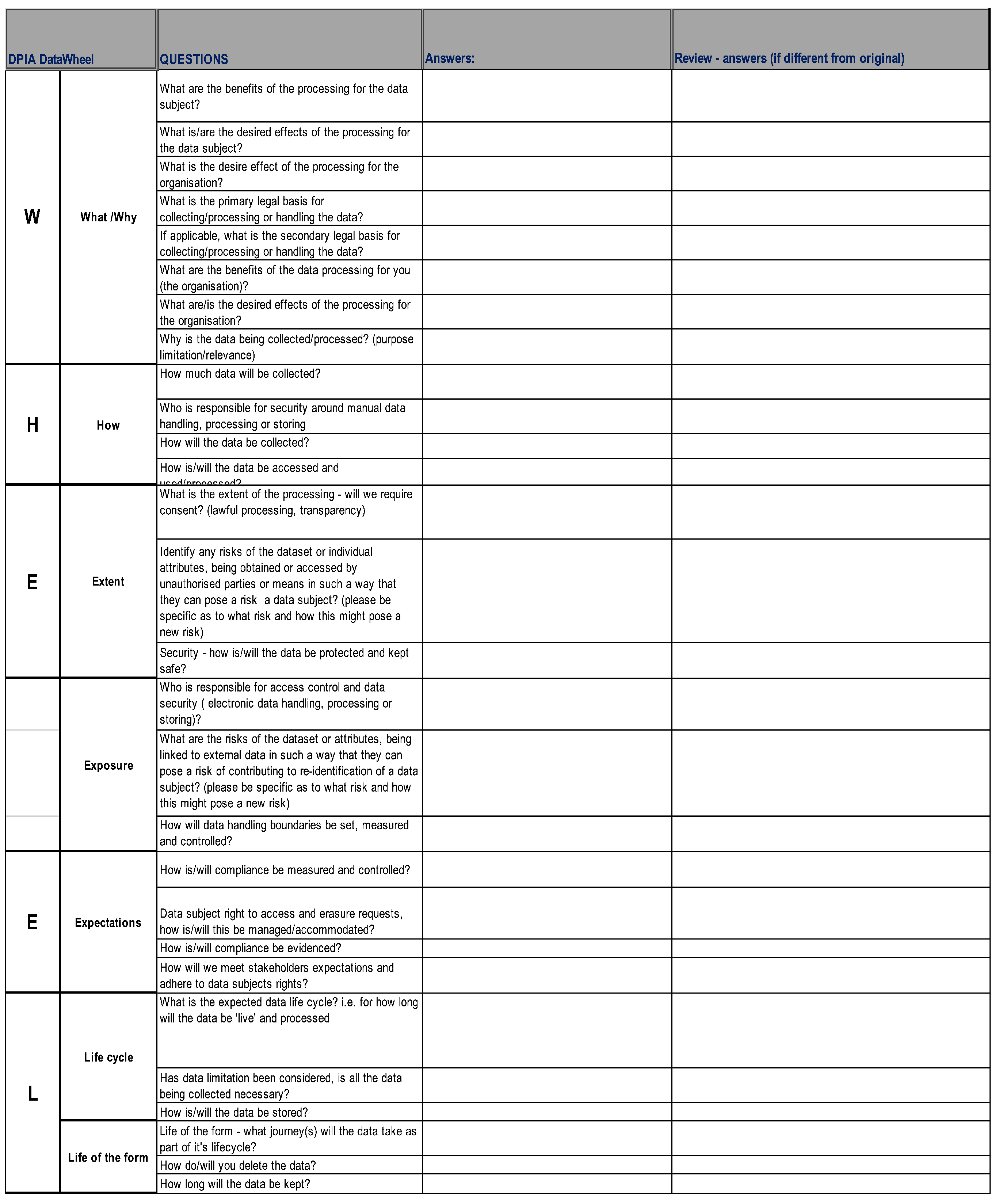

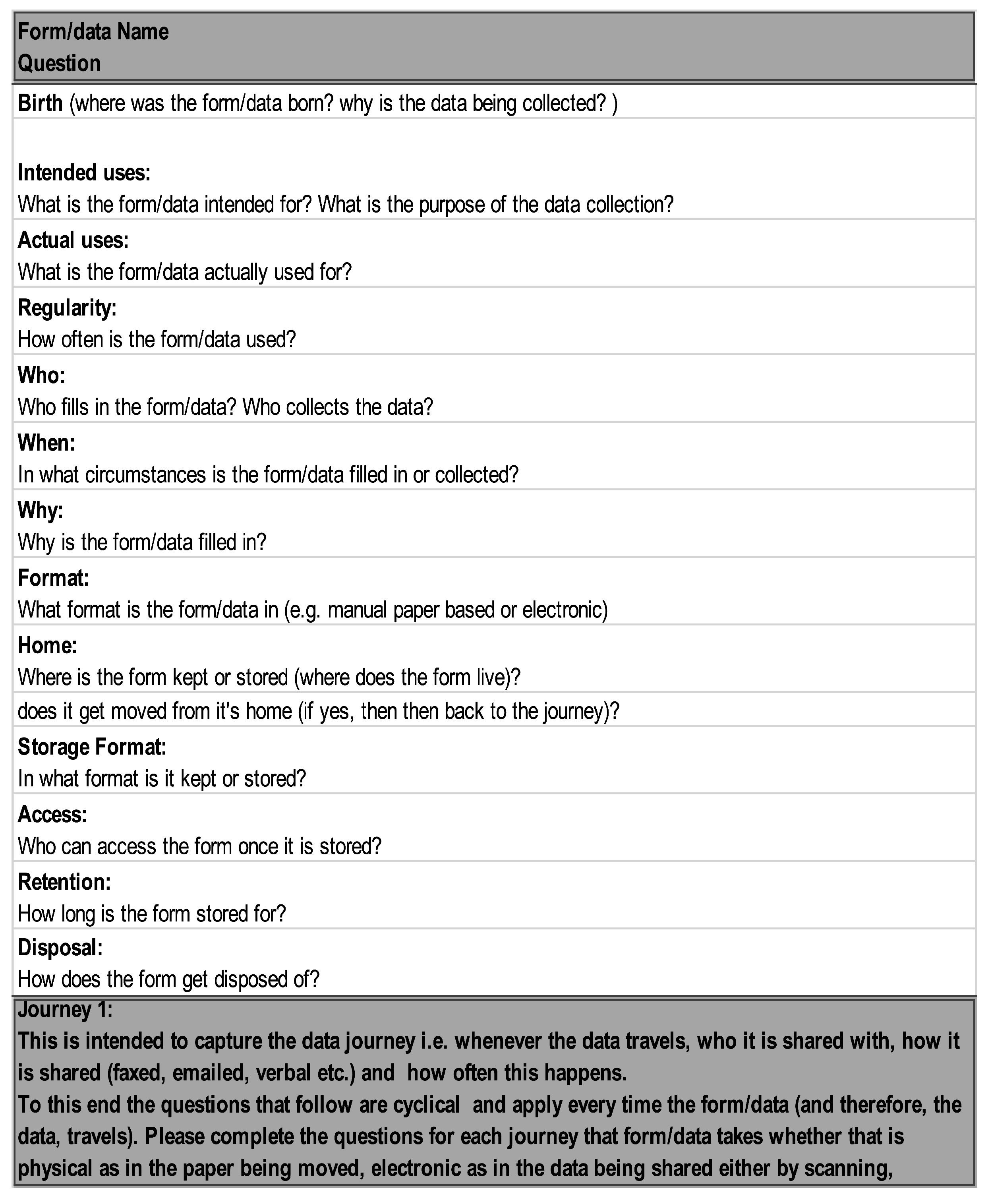

5]. One outcome of this study, was a data protection impact assessment, “the DPIA Data Wheel” (see

Figure 2), devised to facilitate the charity’s assessment of the privacy risks of their processing activities by including context as part of the design.

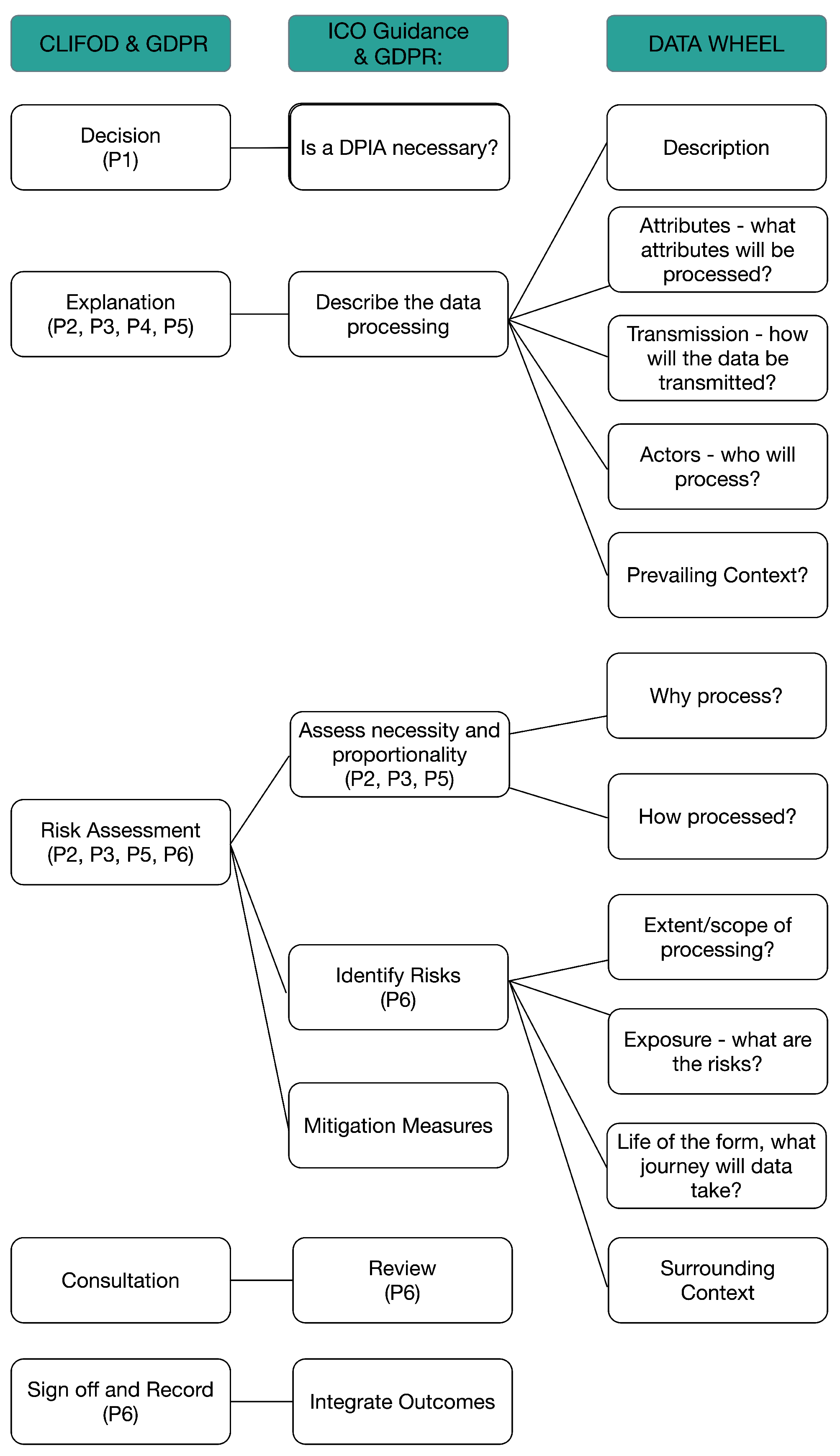

In creating the DPIA Data Wheel, we sought to integrate CI with GDPR obligations around DPIA and establish how CI could be incorporated into the DPIA process, using the concepts devised in a pilot study, ContextuaL Integrity for Open Data (CLIFOD) [16] (see Section 3.1). This was done through an analysis that first sought to align the guidance provided by the Information Commissioner’s Office (ICO) in the UK around DPIAs [17] with GDPR and advice from the EU (see Section 3.2). This was then aligned to CI through CLIFOD (see Section 3.3) to create the final framework (see Section 4).

The DPIA Data Wheel was empirically evaluated as part of the Charity GDPR implementation study in three real-world settings. First, the DPIA framework was evaluated in an organisational setting, working in collaboration with management at a charitable organisation. The second evaluation involved consulting and collaborating with staff at the charity through a series of training workshops to further validate the framework. Third, the participation was widened to include peers by evaluating the DPIA framework in four seminars, held as part of a one-day workshop for a group of 40 charitable organisations based in the local area (see Section 5).

3.1. CLIFOD Pilot Study

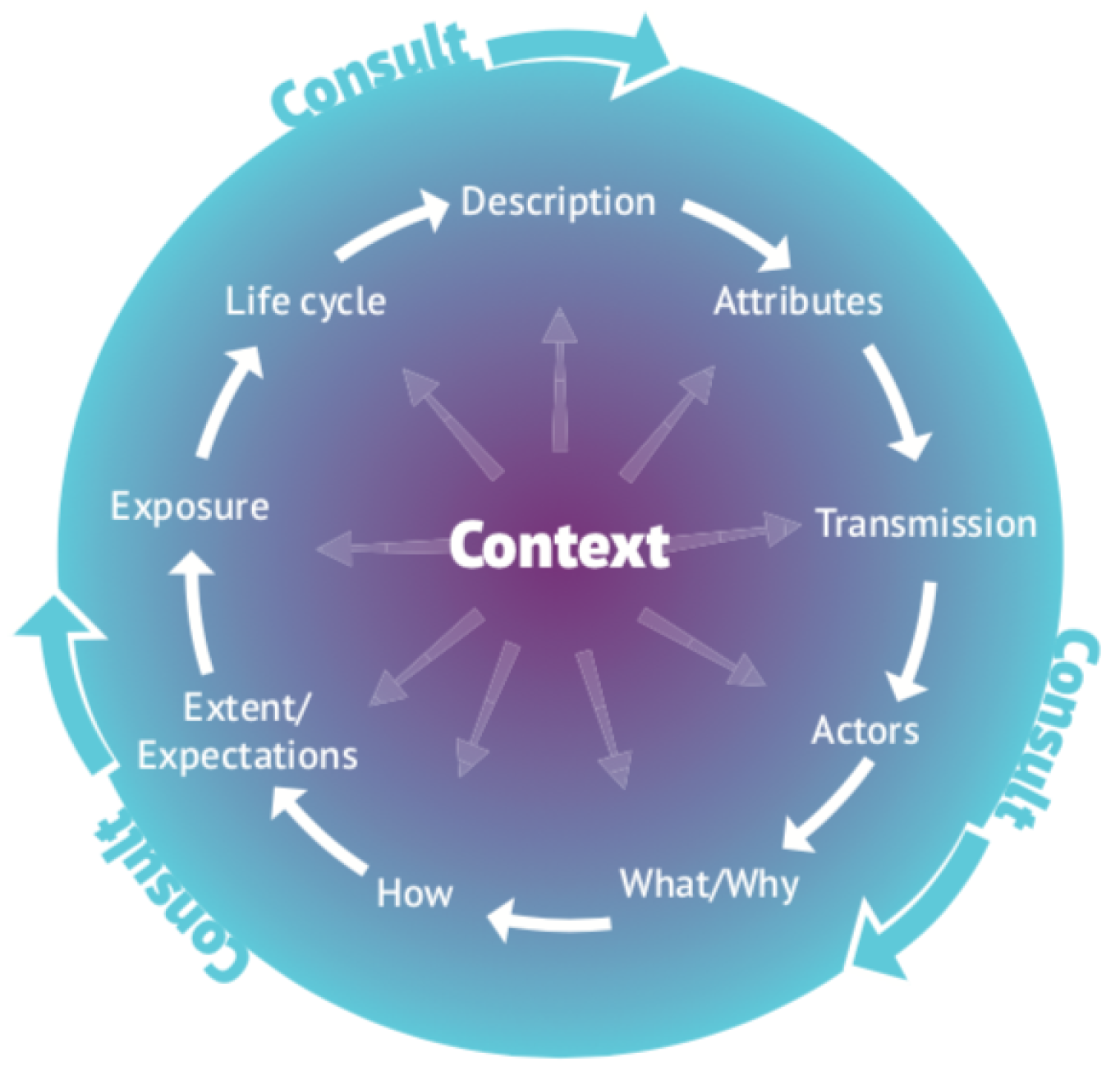

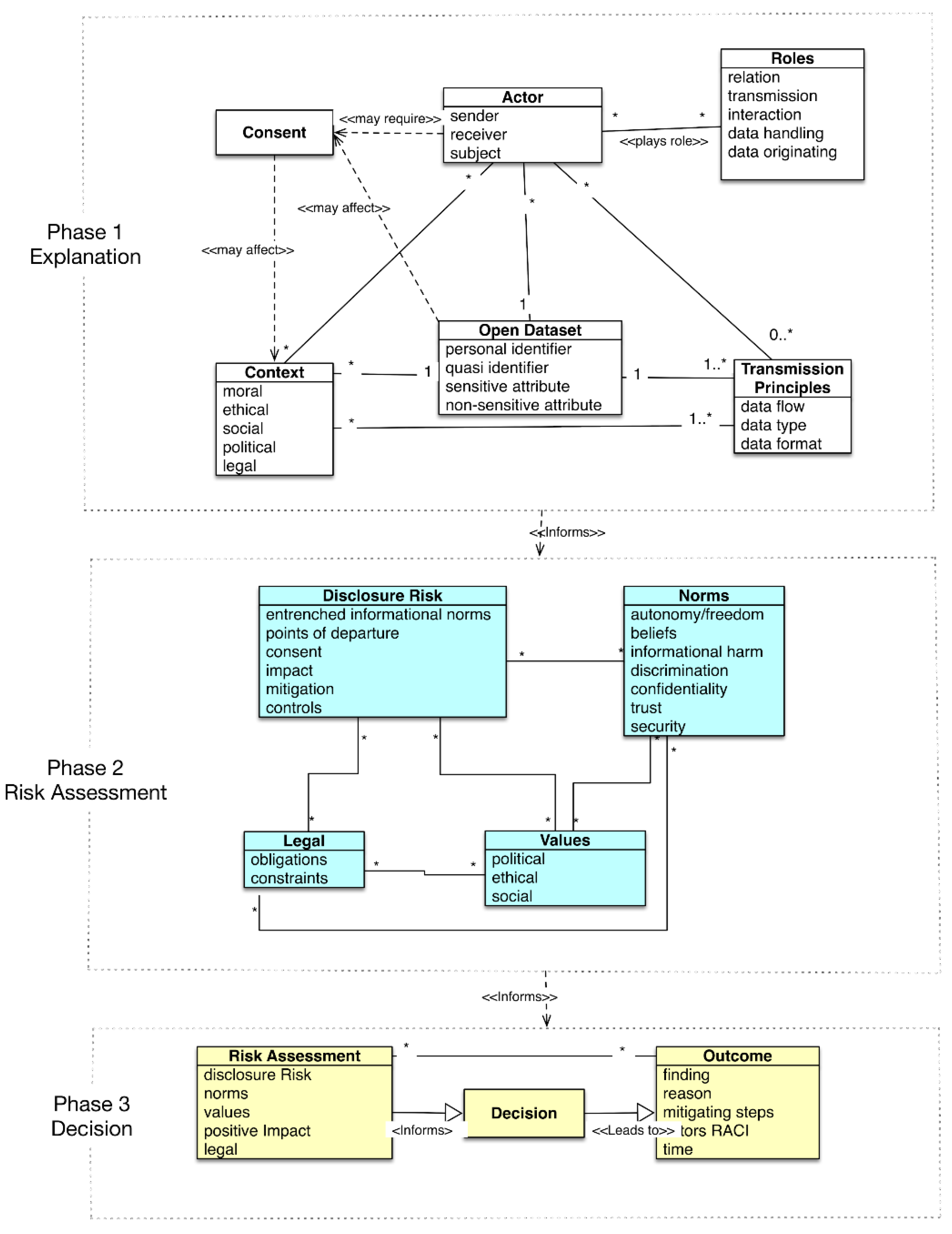

To explain how the concepts within the DPIA Data Wheel were created, it is necessary to first explain how CI was translated into a practical risk assessment framework for supporting organisational decision-making around privacy. This process began with the creation of a meta-model of CI (see Figure 3) that illustrates how CI can be translated and represented in a visual model that can be applied in practice [18].

From this, we expanded on the idea of using CI to understand privacy risks by applying the concepts of CI to the decision-making process for open data publication in a pilot study, CLIFOD. In that work, the CI principles were used to create a privacy risk assessment questionnaire to aid decision makers in assessing the risks of making data available in open format [16].

The CLIFOD framework sought to incorporate the meta-model of CI into privacy risk assessment to facilitate informed decision-making for whether or not data can be published as open data. In creating CLIFOD, the two core elements of CI were used.

3.1.1. CI Core Elements Used in CLIFOD

3.2. Aligning GDPR and ICO Guidance

We used the concepts derived in the CLIFOD pilot study to incorporate CI into the DPIA process. However, the first step in creating an effective DPIA framework was to ensure it would comply with GDPR obligations and guidance for conducting DPIAs. To this end, the text of GDPR (Articles 5 & 35 and Recitals 90–94) and the recommendations of Working Party 29 on DPIAs [19] (hereafter jointly referred to as GDPR), together with the guidance of the Information Commissioner’s Office (ICO), the governing authority for data protection in the UK [17], were used as the starting point.

This analysis began by looking at what the DPIA is actually seeking to accomplish, which is to support an effective, repeatable and detailed privacy assessment of data processing activities throughout the data’s lifecycle within the organisation, and then at how this could be adapted to fall into the CI phases devised in previous work: explanation, risk assessment and decision, as described in Section 3.1.1 and [20].

To present this analysis, a logic model method was used. Research shows that modelling is an effective tool for communicating a course of action, a programme or a process. For example, visualisation has been shown to help explain “how to get there” [21], and to enhance understanding and communication, for example, of risks [22]. Therefore, a decision was made to depict the transition from the identified documentation in a visual manner, and a logic model was created to try to fit the process into some form of acronym or model that would provide a visual representation that practitioners could use for a quick overview.

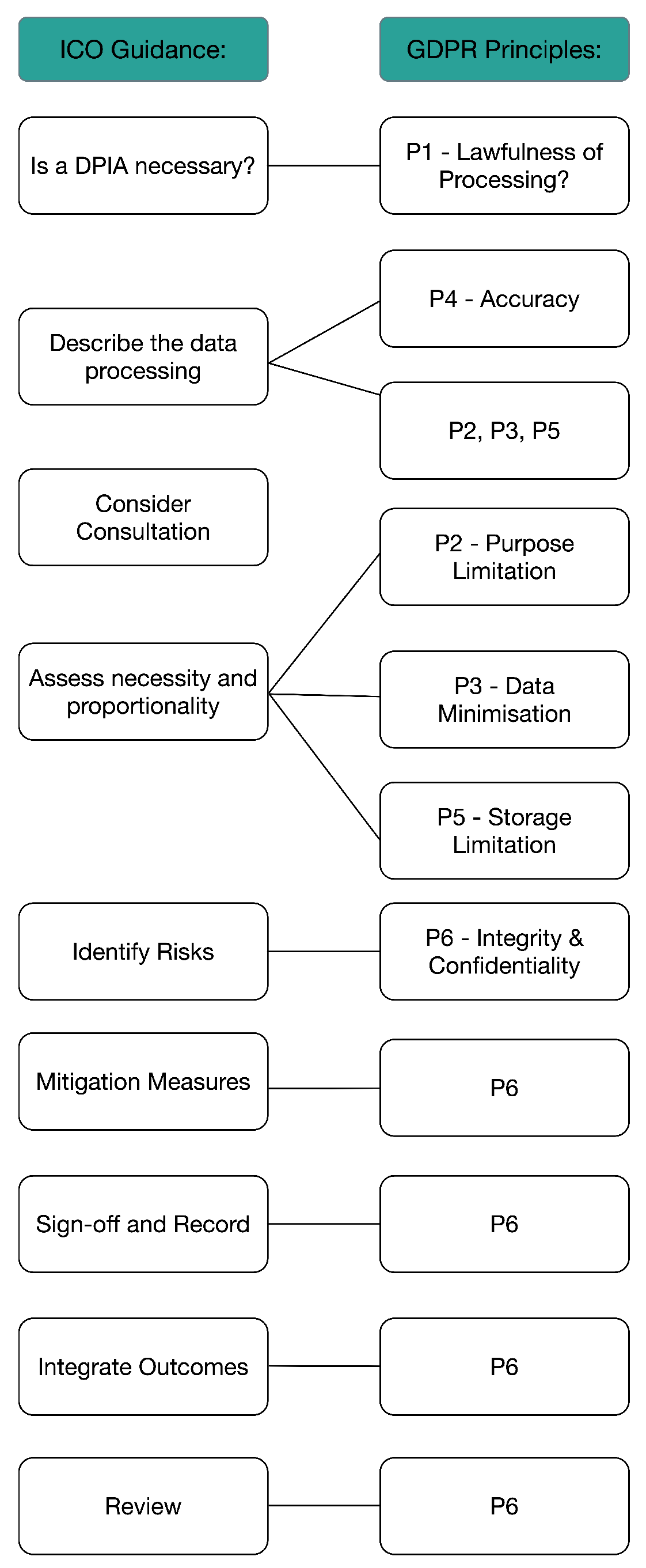

Thus, starting with the nine steps outlined by the ICO for conducting a DPIA [

17], these were mapped through a series of logic models to the six GDPR principles according to how they relate to each step. This is depicted in

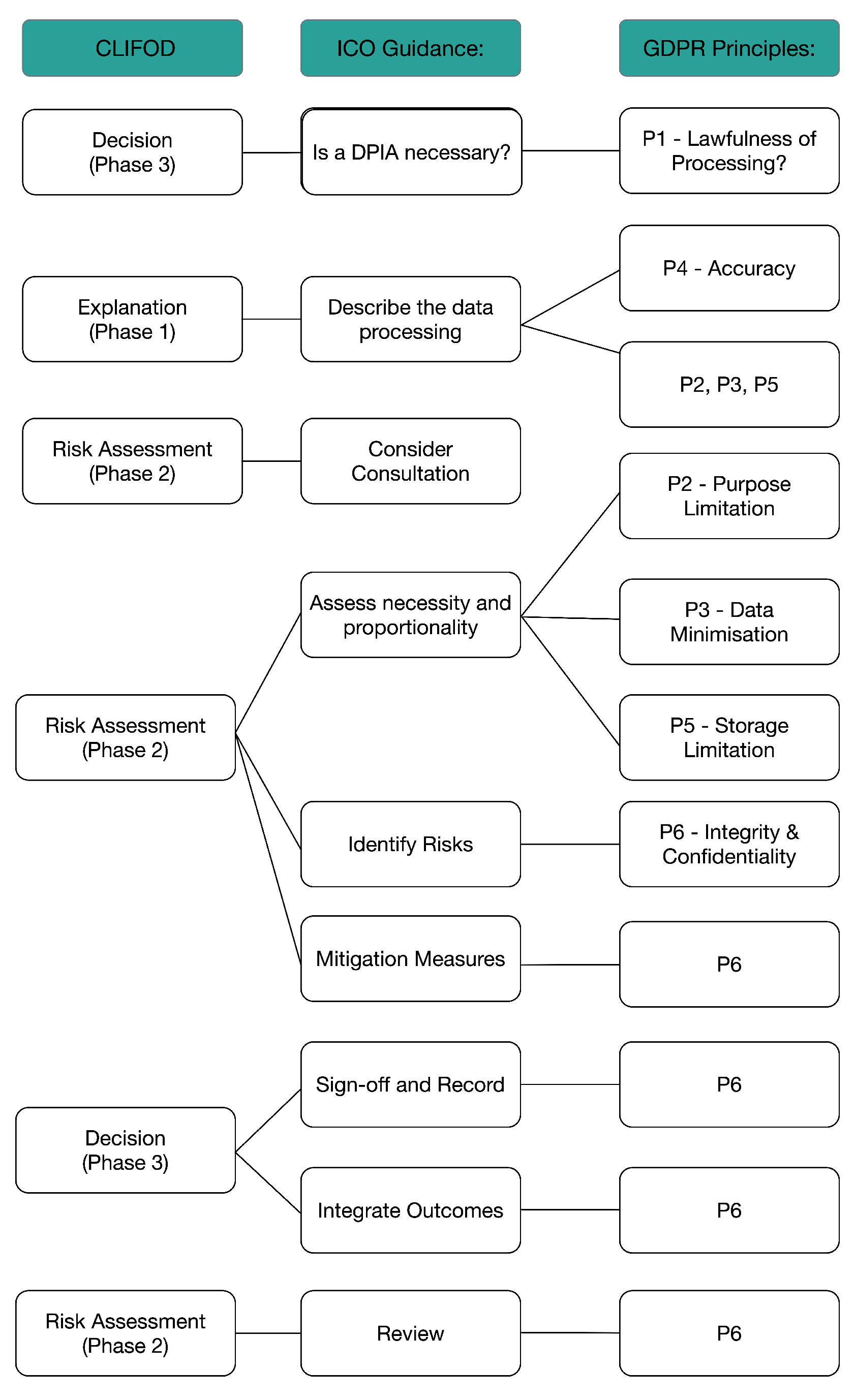

Figure 4, which shows the ICO principles in the first column, and then how these have been aligned to each of the GDPR principles in the second column.

3.3. Aligning CLIFOD to ICO Guidance and GDPR

Next, to incorporate CI, we used the outcomes from the CLIFOD pilot study as the basis for introducing context into the DPIA framework (see Section 3.1.1). To this end, work began on analysing how the CI principles used in CLIFOD could be mapped to the meta-model of Contextual Integrity in Figure 3 and aligned to the ICO DPIA Guidance and the GDPR Principles in Figure 4.

Figure 5 shows how the three phases of contextual integrity (CI) used in the meta-model and CLIFOD, namely Explanation, Risk Assessment and Decision (see Section 3.1), have been aligned first to each of the ICO steps (column 2) and then to the GDPR principles to which they relate (column 3).

5. Evaluating the DPIA Data Wheel

The evaluation of the DPIA Data Wheel was carried out over a three-month period. As part of this process, the DPIA Data Wheel was subject to three empirical evaluations as follows:

Evaluation One: Assessment of the DPIA questions by three groups of assessors (Academics, Practitioners and Peers);

Evaluation Two: Initial completion of the DPIA Data Wheel and Staff consultation, consisting of three training sessions attended by 29 staff;

Evaluation Three: Peer Consultation, consisting of a workshop and four seminar groups, attended by 40 charity sector employees.

5.1. Evaluation One

In the first evaluation, three groups of assessors were approached and asked to comment on the format, layout and the questions devised for the DPIA Data Wheel framework. These groups were:

- Academics: three academics, two who specialise in Security, HCI, CPSs and privacy and one with a process and methodology background;

- Practitioners: three practitioners with a background in security and/or data management;

- Peers: three peers (PhD students with expertise in risk and/or security).

Each assessor provided valuable feedback resulting in revisiting and revising some of the questions. This included adding a glossary and amalgamating the instructions for each worksheet within the prototype spreadsheet into an introductory worksheet containing instruction for how to work through the framework, and details about what each worksheet covers.

In total, seven evaluations and consultation sessions were conducted. These were attended by 69 participants who between them identified a total of 88 different privacy risks. At the end of Evaluations Two and Three, both participants and facilitators were asked to complete a post-training questionnaire (these can be found in the case study protocol, Appendices I and J respectively; see Section 5 for link).
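The totals above are consistent with counting only the group sessions of Evaluations Two and Three. This reading is an assumption, since the text does not itemise the totals; on it, the nine assessors of Evaluation One and the two managers who first completed the framework are not included. A short Python check of the arithmetic, using only figures reported in this section:

```python
# Attendance figures as reported in Section 5 (Evaluations Two and Three).
staff_training_sessions = 3   # Evaluation Two
staff_attendees = 29
seminar_groups = 4            # Evaluation Three
workshop_attendees = 40

total_sessions = staff_training_sessions + seminar_groups
total_participants = staff_attendees + workshop_attendees

print(total_sessions)      # 7
print(total_participants)  # 69
```

The 88 privacy risks are not broken down per session in the text, so no corresponding check is attempted for that figure.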

5.2. Evaluation Two

Evaluation two began with the DPIA Data Wheel being completed and evaluated by Management at the charity. Two Managers, the Chief Financial Officer (CFO) and one of the Directors, collaborated to complete all the elements of the DPIA framework for six different forms used within the organisation, with each form corresponding to a data handling process in regular use within the organisation. This resulted in a number of privacy risks being identified for each process. For example, the completed DPIA Data Wheel for the client care plan, one of the most frequently used forms within the organisation, resulted in 17 risks being identified, mostly related to unauthorised access to data or loss of data as a result of external interference or influences of some description.

The data captured on the data registers for each of these was transferred onto the Master Data Register. This aided the DPIA assessment itself, and helped the charity keep their data register up to date, thereby demonstrating compliance with GDPR to the UK Information Commissioner’s Office (ICO).

Based on the completed DPIA assessments from Management, a decision was then made to use the client care plan as the basis for consultation as part of the staff training session. Research suggests that incorporating known scenarios into the teaching materials leads participants (in this case, staff) to better, more lasting outcomes. This means they are more likely to apply their learning in practice [24]. This, therefore, influenced the decision to use this DPIA. However, the main reasons for choosing to use this DPIA assessment were as follows. First, the care plan contains a lot of very personal and highly sensitive information that requires safeguarding under GDPR. Second, the charity was in the throes of changing how the care plan is completed and used in practice, from a manual handling process to electronic recording and processing in future. This change would introduce an automated element to the charity’s data processing whereby any updates made by staff on their smart devices (phones and/or iPads) would be transmitted via the organisation network and automatically synchronised with the rest of the connected devices and the cloud. This meant that the data transmission principles would need to change as a result. Third, using a process that staff were very familiar with meant they would have practical experience of how the form is handled as part of the daily routine within the organisation and, therefore, a good idea about what sort of risks might be associated with this handling of the form.

The next stage of this evaluation consisted of the staff training sessions, which used the pre-completed manual process form for the care plan. Each session began with training on the changes introduced by GDPR and the DPIA, before moving on to the evaluation of the DPIA. As part of this evaluation, staff were provided with a handout (a copy of which can be found in the case study protocol, Appendix H; see Section 5 for link) containing information about GDPR, how this affects them and their work with data, and the pre-completed DPIA Data Wheel.

The staff training sessions were attended by 29 members of staff. Of these participants, 22 considered themselves to have limited understanding of GDPR, and most claimed to have little knowledge about DPIAs prior to attending the training. However, after the session, 21 felt very or reasonably confident in attempting to conduct a DPIA for themselves going forward. Across all sessions, 36 privacy risks were elicited, with potential mitigation strategies identified for each. This not only provided staff with an opportunity to consider the risks of how they handle data as part of their work activities; it also allowed them to identify solutions for overcoming some of the obstacles identified. We believe this makes it more likely they will implement the required data handling mitigations in future [24,25].

5.3. Evaluation Three

The third evaluation took place as part of a one-day workshop where 40 participants were invited to attend from all the local charitable organisations in the area. The format of the workshop was to inform local charities of the changes brought in by GDPR and to help them demystify the DPIA process and give them confidence in conducting DPIAs in practice. To this end, as part of the workshop, participants were divided into four seminar groups for a practical run-through of completing a DPIA.

For these seminar sessions, we used the same DPIA Data Wheel assessment that had been pre-completed by the charity’s management in the second evaluation as the basis for the simulations. However, participants were asked to consider how the proposed change from manual to CPS processing might affect the privacy of the individuals involved.

The seminar groups were facilitated by individuals with experience and backgrounds in data management and security. The facilitators were instructed to facilitate rather than steer the privacy risk discussion to avoid bias being introduced into the evaluation or resulting privacy risk register (a copy of this can be found in the case study protocol, Appendix H; see Section 5 for link).

The format of the session was designed so that participants would first review the Data Wheel answers provided by the management team and, second, discuss what potential risks might be associated with the change in data transmission principles from manual to CPS, with the session facilitators noting these on the privacy risk register within the framework. Seminar participants were provided with handouts providing details of GDPR and a copy of the pre-completed Data Wheel (as used for the staff training).

Thus, these seminar sessions were used as an opportunity to: (i) further evaluate the DPIA Data Wheel; (ii) widen the DPIA consultation to an extended peer group within the charity sector, thereby getting a broader perspective on potential privacy risks; and (iii) use this and the training sessions as an opportunity to conduct two DPIA risk assessments on the client care plan, one for the existing manual process and one for the proposed new process.

5.4. Evaluation Results

Most participants in Evaluation Three did not consider themselves security or privacy experts, with just over half reporting that they considered themselves to have some prior knowledge of GDPR. Similarly, most participants stated they had little or no prior knowledge or understanding of DPIAs prior to attending the workshop. Following the workshop, when asked how likely they were to use the learning from the workshop and seminar in their daily work in future, just over half of the participants reported that they were extremely or very likely to apply what they had learnt in practice.

7. Discussion

7.1. Individual vs. Organisational Risks

The different perspective from which privacy risks need to be assessed when conducting DPIAs appeared to be a greater challenge than originally anticipated. Both the workshop and staff training sessions were preceded by explanatory sessions and talks about GDPR and DPIAs, which made it clear that, in conducting a DPIA, the desired outcome was to identify privacy risks to both the data subject and to the organisation. Yet, despite this, as part of the evaluation, the participants identified only risks to the organisation. There were no risks to the individual included at all from any of the sessions.

This lack of individual risks clearly showed that the research team underestimated the effect of changing perspectives and how difficult this would be for practitioners to appreciate. The fact that mandatory privacy risk assessment requires privacy risk to be assessed from the perspective of the individual (see Section 6.2) appeared to be more difficult for the practitioners to comprehend than originally envisaged. This became quite clear throughout the evaluations of the DPIA Data Wheel where, although participants identified many appropriate risks, most of these related to the organisation and/or the industry sector (charities), rather than the individuals whose data was being processed. This indicates either that the distinction between the two different perspectives (data subject vs. organisation) was not highlighted sufficiently or, perhaps, that participants were so pre-conditioned to assess risks from an organisational perspective that they failed to recognise or fully appreciate the difference. Future work will look at this to establish how best to address and overcome this issue.
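One simple safeguard against this imbalance would be to tag each entry in the privacy risk register with the perspective it is assessed from, and to check that both perspectives are represented before the DPIA is signed off. The following Python sketch is illustrative only: the `RegisterEntry` type, its field names and the perspective labels are assumptions and are not taken from the DPIA Data Wheel spreadsheet.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisterEntry:
    """One row of a privacy risk register (hypothetical field names)."""
    description: str
    perspective: str  # "organisation" or "data_subject" (assumed labels)


def perspective_gaps(entries, required=("organisation", "data_subject")):
    """Return the required perspectives that have no risk recorded."""
    counts = Counter(entry.perspective for entry in entries)
    return [p for p in required if counts[p] == 0]


# Illustrative entries only; the perspective tags are assigned for this example.
register = [
    RegisterEntry("loss of data", "organisation"),
    RegisterEntry("unauthorised access to data", "organisation"),
]

print(perspective_gaps(register))  # ['data_subject']
```

A non-empty result would prompt the facilitator to return to the data subject's perspective before closing the session.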

7.2. Using Context for Discovery

Having a distinct context section that contained specific guided questions around the values and norms that need to be considered as part of contextual inquiry within the Wheel (see Section 4.2.2) provided a very useful aid for guiding the discussion; this helped participants apply contextual inquiry in practice. However, to fully appreciate and get the most from the practical application of context, a more in-depth explanation of these concepts should form part of the consultation process in any future applications of the framework. This will help raise awareness of contextual inquiry and of how to consider context, which in turn will help identify more privacy risks and suitable mitigation strategies.

7.3. Context as a Form of Provocation

When completing the supporting questionnaires during Evaluation Two, the most varied and vibrant area for discussion about risks was the context sections. The questions prompted the management to think about how the actions of staff might be perceived by service recipients. Thus, when they were asked probing questions about the prevailing and surrounding context of how staff and volunteers handle (process) the data and how this might be perceived by service recipients or other stakeholders, the discussion became interesting. Initially, the prevailing context questions did not seem to raise too many concerns. For example, with regard to discrimination, one of the four challenges identified by Wachter [4], staff felt that potential discrimination was unlikely as this was an area staff and volunteers received a lot of guidance and training on. This would indicate that, at least for some areas, the charity has ensured sufficient training and awareness is raised among staff to safeguard service recipients from harm involving potential discrimination. Nonetheless, the assessment did raise concerns that had not been previously considered by management.

Context was used in a similar manner in Evaluation Three. Interestingly, in these sessions, no changes had been recorded in the context sections for any of the groups. This could have been due to lack of understanding on the part of the participants and/or facilitators. This was highlighted by one of the facilitators as part of their feedback where they stated: “I don’t think the instructions were lacking it was more my lack of experience with facilitating that may have impeded the process” (F2). Further, the facilitators had been specifically instructed not to ‘direct or lead’ the discussion, rather, let the participants discuss the answers among themselves. This, in hindsight, might have been a mistake as a more in-depth discussion of context could have provided some insight and understanding of how context affects data flow, thereby helping participants appreciate the wider implications of assessing privacy risks. A similar sentiment was provided in the evaluation feedback provided by participants, with one attendee commenting: “Some areas seem grey and it is so individual to each charity it seems a minefield” (A17).

7.4. Using Context to Identify Data Handling Power Asymmetries

When the questions in the surrounding context section required participants to consider whether data handling might pose a power imbalance or threat to the freedom of the data subject, a few concerns were highlighted as a result of the prompts provided by the questions. For example, service recipients in active treatment reside on the premises of the charity. While service recipients are in residence, staff have the power to ask service recipients to leave if they endanger other service recipients or break “house rules”. This could be perceived by service recipients as an abuse of power in that they would have to leave the house. One potential consequence of this would be the cessation of treatment, which in turn would increase the risk of a relapse into addiction. The risk of this occurring was rated as low, but the impact on the individual would be significant given that a relapse could potentially endanger the client’s life.

After the assessment, the findings were presented and discussed with the management team, who explained that all service recipients are informed of these rules. They are then asked to sign to signify they understand and agree to them, prior to moving into one of the houses. However, the management team conceded that a suitable mitigation strategy of more staff training on how to avoid potential abuse of power situations might be appropriate. This was noted on the DPIA form, and signposted for action by management.
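The low-likelihood, high-impact risk described in this section shows why a register entry benefits from recording likelihood and impact separately rather than as a single combined rating. The Python sketch below is a minimal illustration under stated assumptions: the three-point `Level` scale, the likelihood-times-impact score, the review threshold and all field names are hypothetical, and the mapping of "significant" impact to `HIGH` is an interpretation rather than the rating scheme used in the DPIA Data Wheel.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Assumed three-point ordinal scale; the source does not define one."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class PrivacyRisk:
    description: str
    likelihood: Level
    impact: Level
    mitigation: str = ""

    def score(self) -> int:
        """Conventional likelihood x impact product (an assumption)."""
        return int(self.likelihood) * int(self.impact)

    def needs_review(self, threshold: int = 4) -> bool:
        """Flag on score, or on high impact alone, so that rare but
        severe harms to the individual are not averaged away."""
        return self.score() >= threshold or self.impact is Level.HIGH


relapse = PrivacyRisk(
    description="Resident asked to leave; treatment ceases; risk of relapse",
    likelihood=Level.LOW,
    impact=Level.HIGH,
    mitigation="Staff training on avoiding abuse-of-power situations",
)

print(relapse.score())         # 3
print(relapse.needs_review())  # True
```

With a score-only rule this entry would fall below the threshold; flagging on impact as well keeps it visible, which matches the decision to record a mitigation despite the low likelihood.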

7.5. Role-Specific Privacy Risks

During Evaluation Two, the risks identified by staff were all different from the risks identified by managers. Where management had focused on risks originating from external parties gaining access to data, staff viewed most risks as originating from internal threat actors such as service recipients seeing or gaining access to information they should not be privy to or staff errors resulting in potential data leakage. The aspects around context were not really discussed beyond the answers provided by management initially. This was partly due to time constraints and partly, due to staff not feeling they could add anything to the list of risks already identified by the management team.

7.6. Generic and Specific Privacy Risks

In the seminar sessions in Evaluation Three, each seminar group elicited a different set of risks, ranging from generic risks, for example, “loss of data”, to much more detailed risks such as “Disgruntled employee may deliberately leak information and then report the leak to the ICO”. Similarly, in discussing potential mitigation strategies, the seminar groups who came up with generic risks also identified generic mitigation strategies, for example, “back up data”, whereas the group with the more detailed risks also produced more detailed mitigation strategies, for example, “Ensure staff sign confidentiality agreement, staff training, criminal liability – appropriate staff contract, DBS check all staff. Access control on different types of data. Make everyone accountable”.

Although participants were randomly selected for each of the groups, some would have more experience of risk assessment than others. According to the facilitators’ feedback, some of the participants had significant risk knowledge and understanding, although this was thought to relate more to security risk than to privacy risk. Therefore, the disparities in the level of detail could perhaps be attributed to the level of expertise within each seminar group. The seminar sessions still proved useful in identifying and examining how changes in transmission principles would change the risks to the organisation, and what mitigations might help prevent those risks occurring.

8. Conclusions

In this paper, we presented the DPIA Data Wheel: an empirically evaluated DPIA framework. The DPIA Data Wheel exemplifies how PbD can be achieved through designing CI into the DPIA process. The framework was devised to provide a useful tool that decision makers can use to ensure contextual considerations are included as part of their privacy risk assessment process.

The DPIA Data Wheel framework was designed with charities in mind. However, it can equally be applied and used in any organisation that is required to conduct privacy risk assessments and/or DPIAs. This DPIA Data Wheel provides an exemplar, GDPR compliant DPIA process that incorporates two prototype spreadsheets (a Data Register and the life of the form, devised to capture details of the data flow within the organisation) from the GDPR implementation case study (see [5]). These prototypes have been included in the DPIA Data Wheel to help organisations collate appropriate evidence for demonstrating compliance with GDPR. For context, the DPIA framework integrates most of the questions from CLIFOD, including all the contextual questions. Finally, the DPIA framework also incorporates a privacy risk assessment, and the recording of suitable mitigation strategies to address any privacy risks identified, designed to aid practitioners in determining what privacy risks might arise from the data processing involved in implementing a new system, project or process, or other data processing activity associated with CPS (see Section 3).

The DPIA framework presented in this paper provides decision makers with an exemplar for conducting a combined DPIA and PIA assessment in practice based on CI. Moreover, this DPIA framework not only helps decision makers conduct consistent and repeatable privacy risk assessments, it also guides them towards making informed decisions about privacy risks and provides them with demonstrable evidence of GDPR compliant processes and practices. This, we contend, is particularly useful in scenarios where boundaries between different processes or systems are blurred, such as CPS.

Some of the outcomes from the evaluations in Section 5 should not be entirely surprising. Historically, risk assessment is invariably concerned with what the consequences and likelihood of the risk occurring would be for the business and how this might negatively affect the organisation, rather than how they might affect the individual. Thus, decision makers might be pre-conditioned into thinking about risks in terms of organisational risks. Therefore, asking them to now look at risk from the perspective of the individual without first providing more direction and guidance is unlikely to result in the DPIA assessments that Data Protection Regulation Authorities might hope for.

Our work demonstrates that the wider the consultation on the DPIA risks identified, the more in-depth and complete the resulting privacy risk register is likely to be. Moreover, in terms of context, what our evaluation illustrates is that, the more stakeholders are consulted, the more context is likely to be included in the assessment. This shows the importance of allowing each stakeholder group to add their different perspectives, thereby broadening the context being considered. Stakeholder consultation is also an opportunity for reciprocal learning, making participants more likely to implement and use the lessons learnt which, in turn, reduces the likelihood of the risks identified occurring.

While the breadth of the evaluation, and therefore the consultation, was inclusive and comprehensive, more emphasis needs to be placed on the procedure for conducting the DPIA and how to contextualise and relate the risks to the individual rather than the organisation to ensure wide stakeholder engagement. To address this, consideration needs to be given to whether and how the questions can be adapted to encourage a change in perspective, or illustrative examples can be incorporated into the framework questions.

To ensure the decision makers understand the distinction between risks to the individual and risks to the organisation, more emphasis also needs to be placed on making sure the underlying principles are fully understood by participants when conducting training or consultation on DPIAs. This can be addressed by incorporating more direction about context, and how it can be considered as part of the evaluation. One way this can be achieved would be to provide some worked examples as part of the learning material or, as mentioned above, as part of the instructions provided with the framework.

Future work will examine how more guidance and worked examples can be provided in future iterations of the DPIA Data Wheel and associated documentation. It will also look at how the DPIA Data Wheel can be applied to the design of CPSs, and used more effectively to provide better directed education and training. This will allow users to assess risks to the individual, before using these to direct how they can implement effective safeguards and practices around their personal data processing activities in future.