Spatiotemporal Heterogeneity of Forest Park Soundscapes Based on Deep Learning: A Case Study of Zhangjiajie National Forest Park

Abstract

1. Introduction

2. Data and Methods

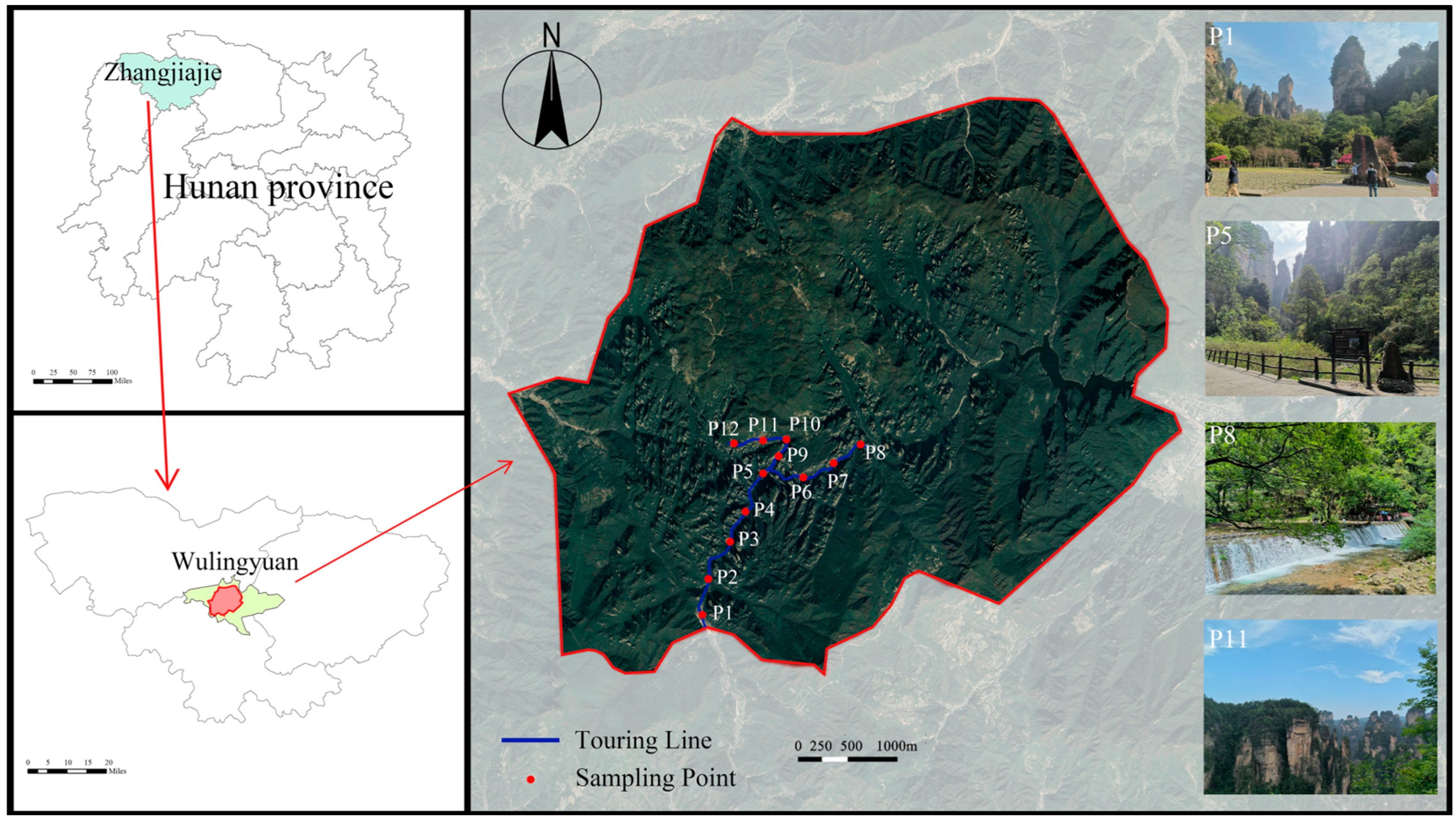

2.1. Study Area

2.2. Data Sources

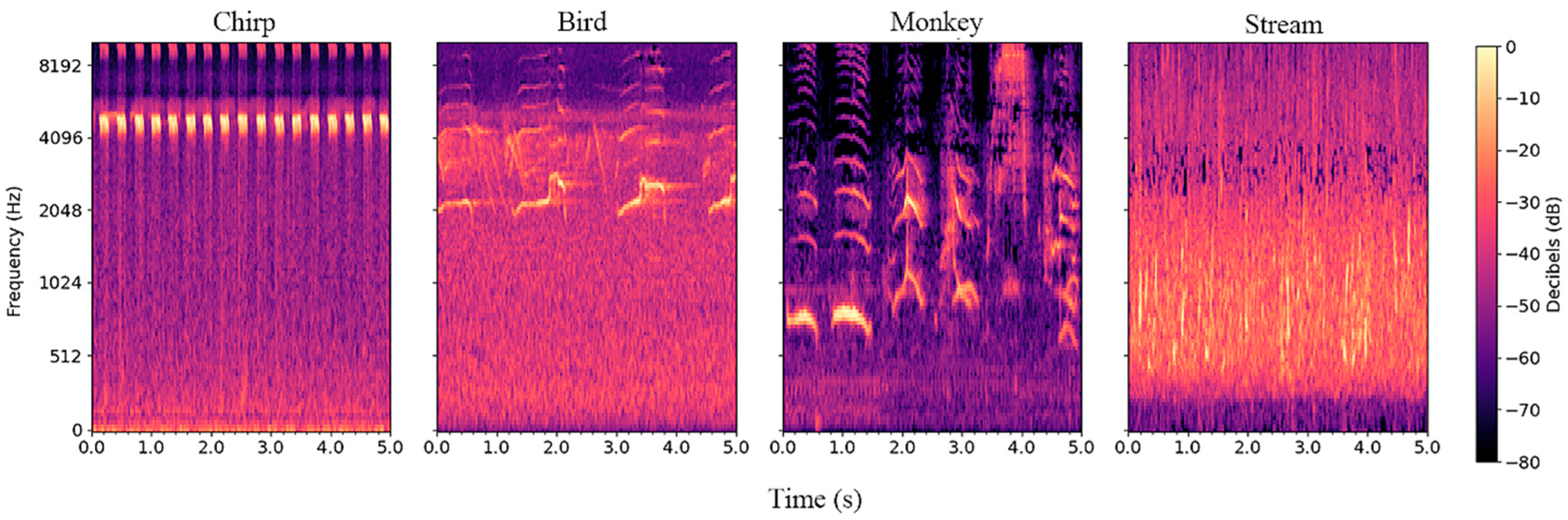

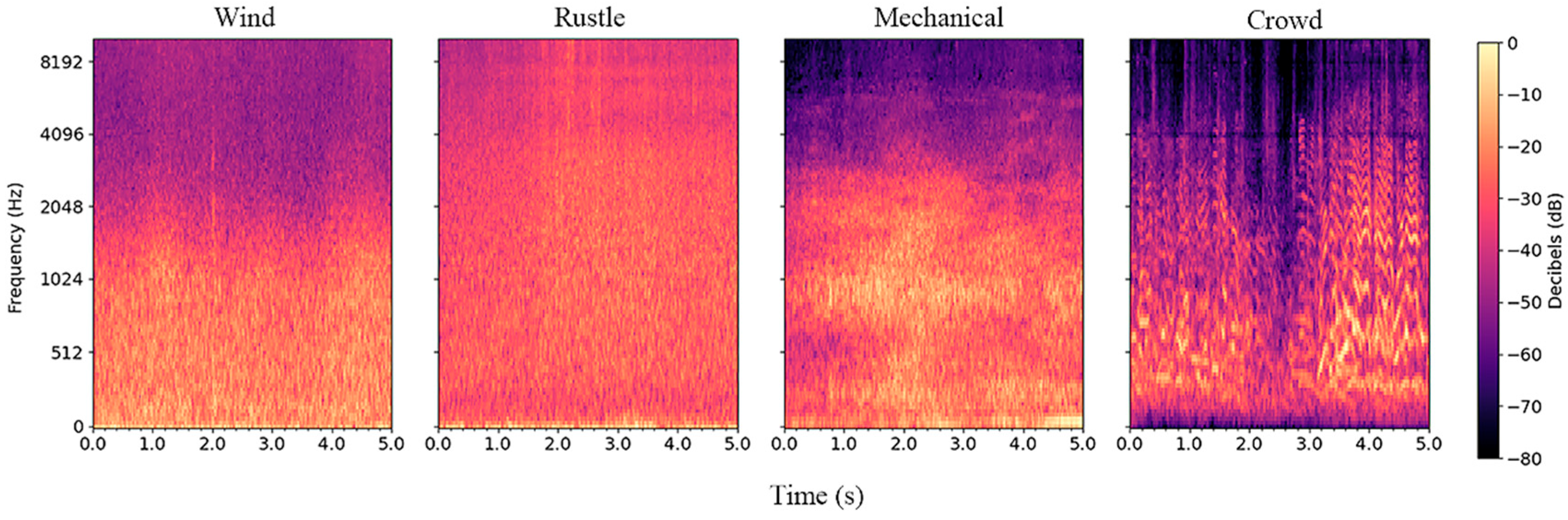

2.3. Data Preprocessing and Feature Extraction

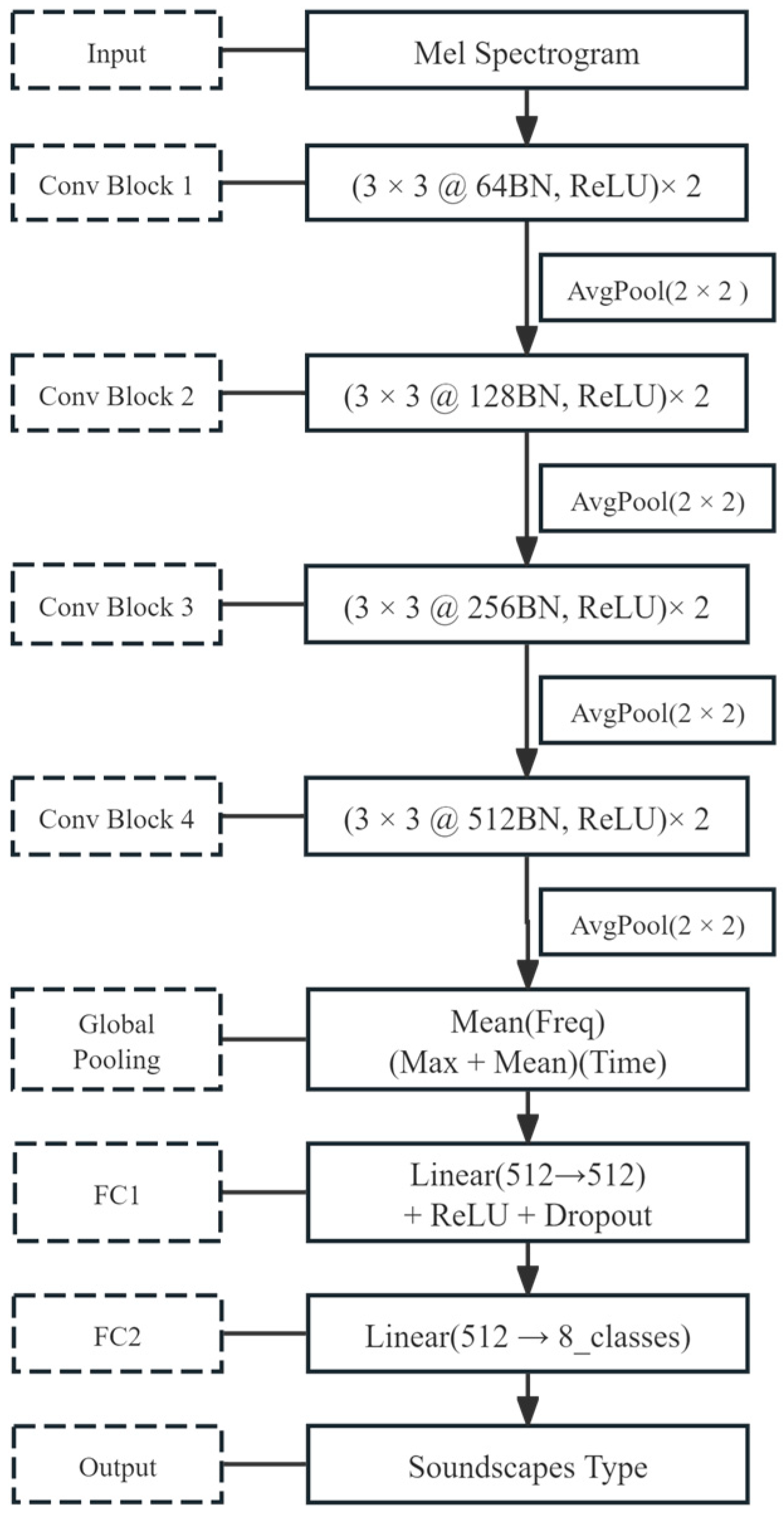

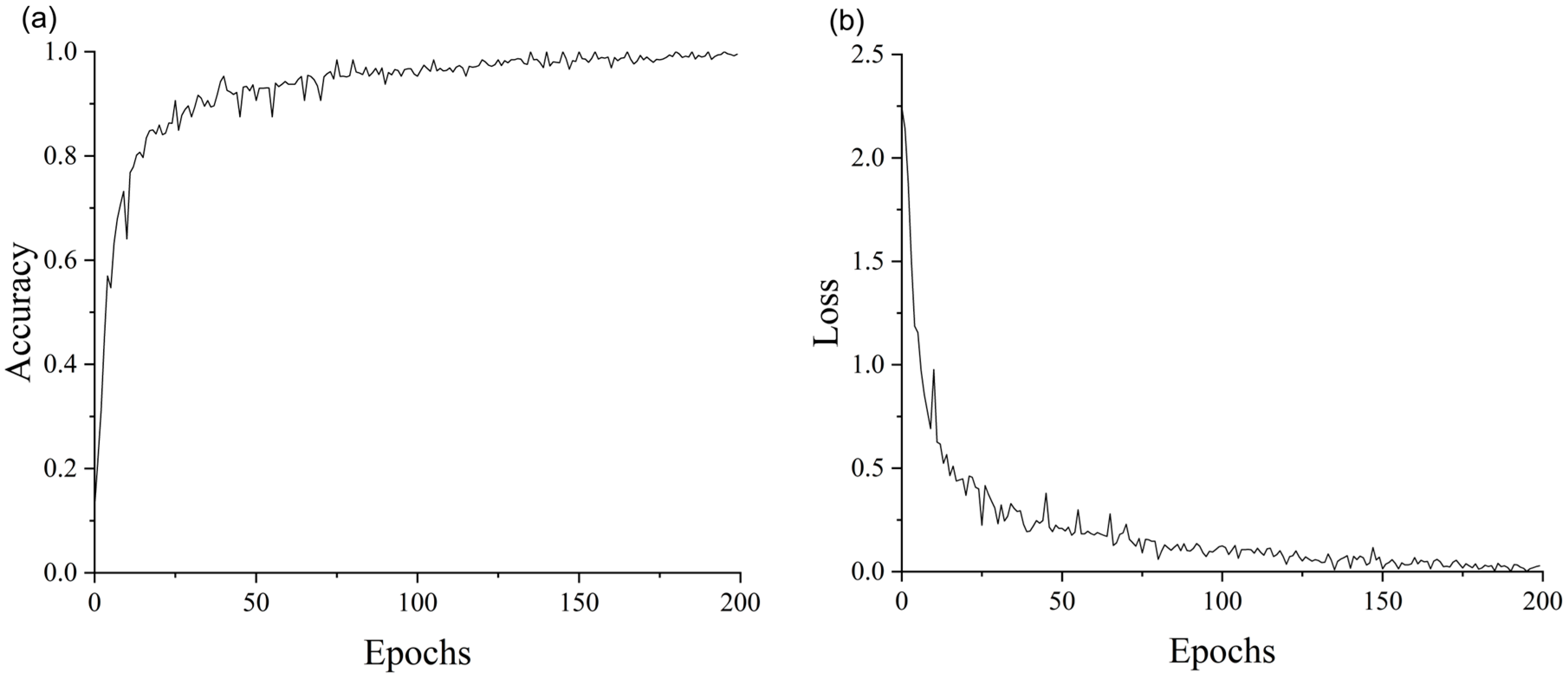

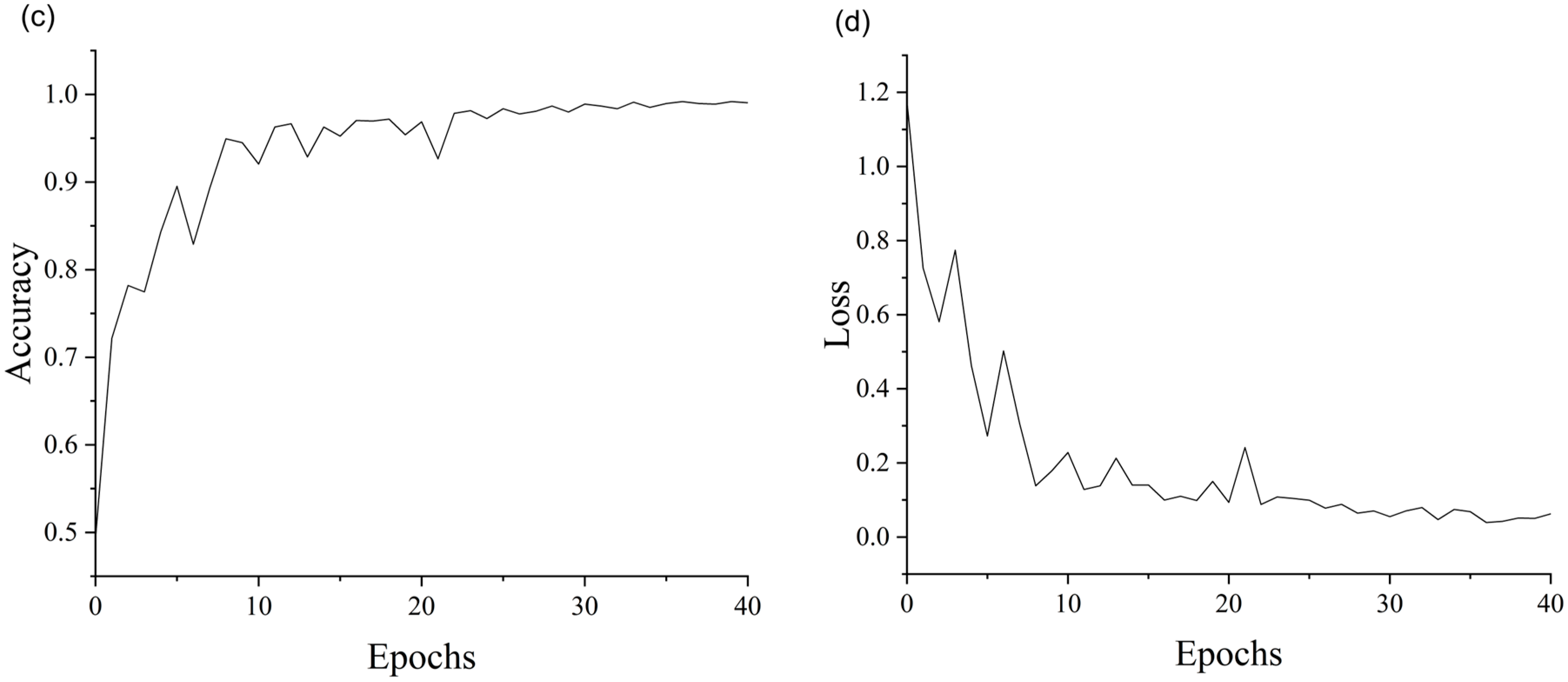

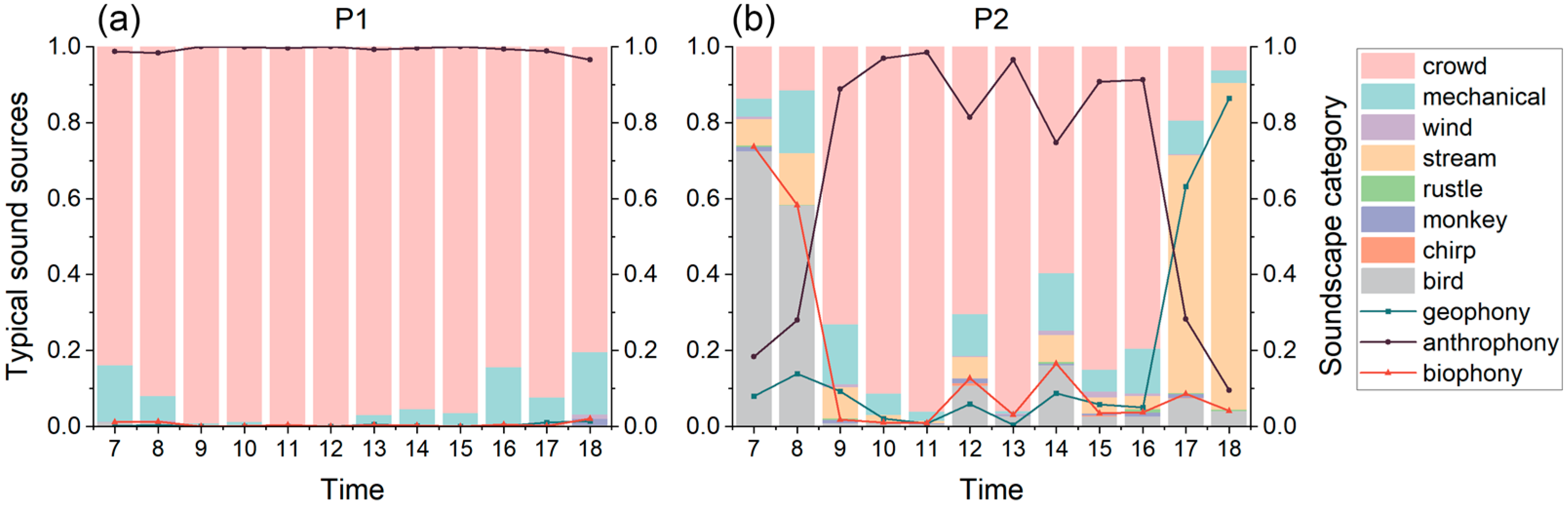

2.4. Deep Learning Model Construction

2.5. Model Training and Evaluation

3. Results and Analysis

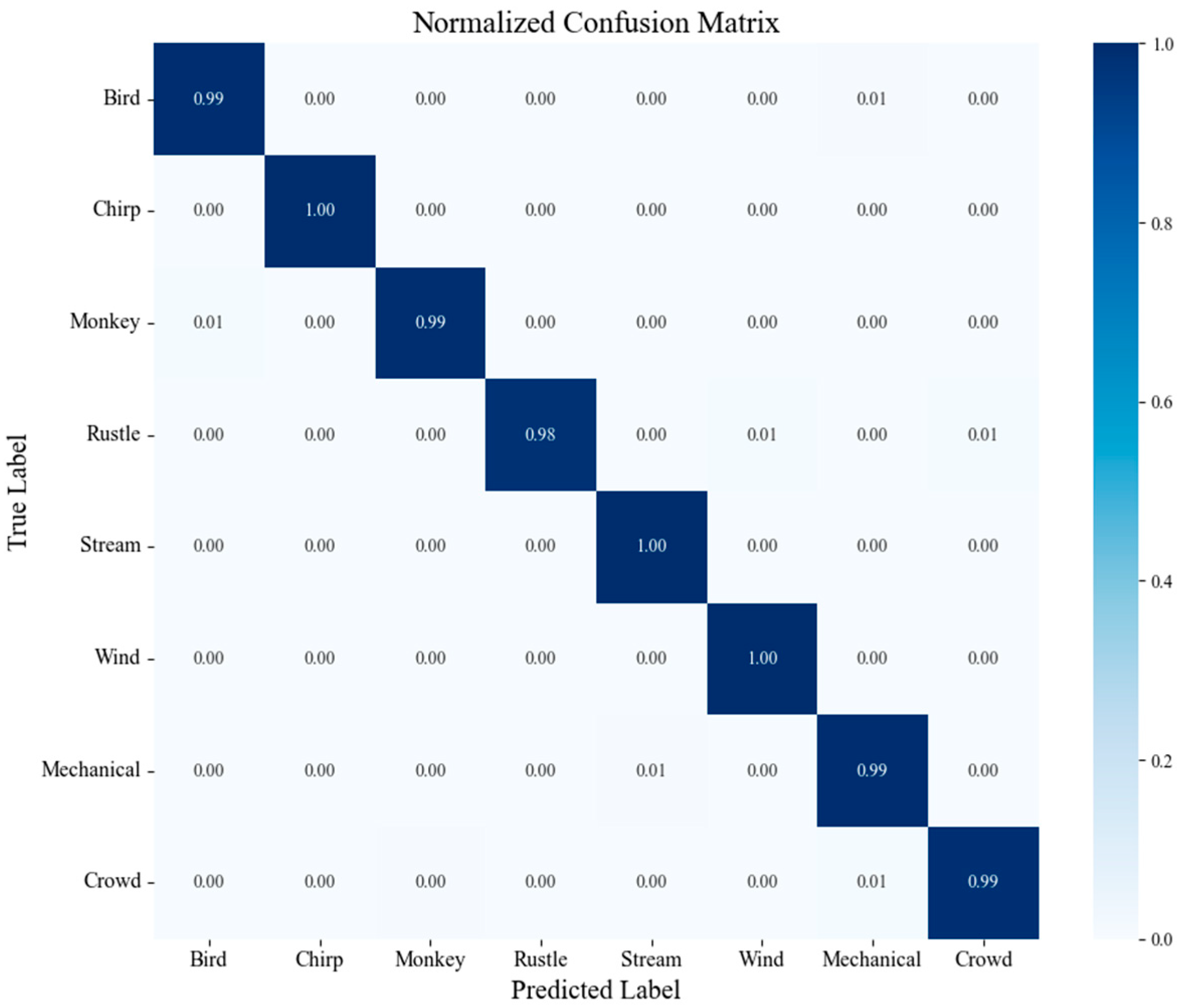

3.1. Temporal Dynamics of Forest Park Soundscapes

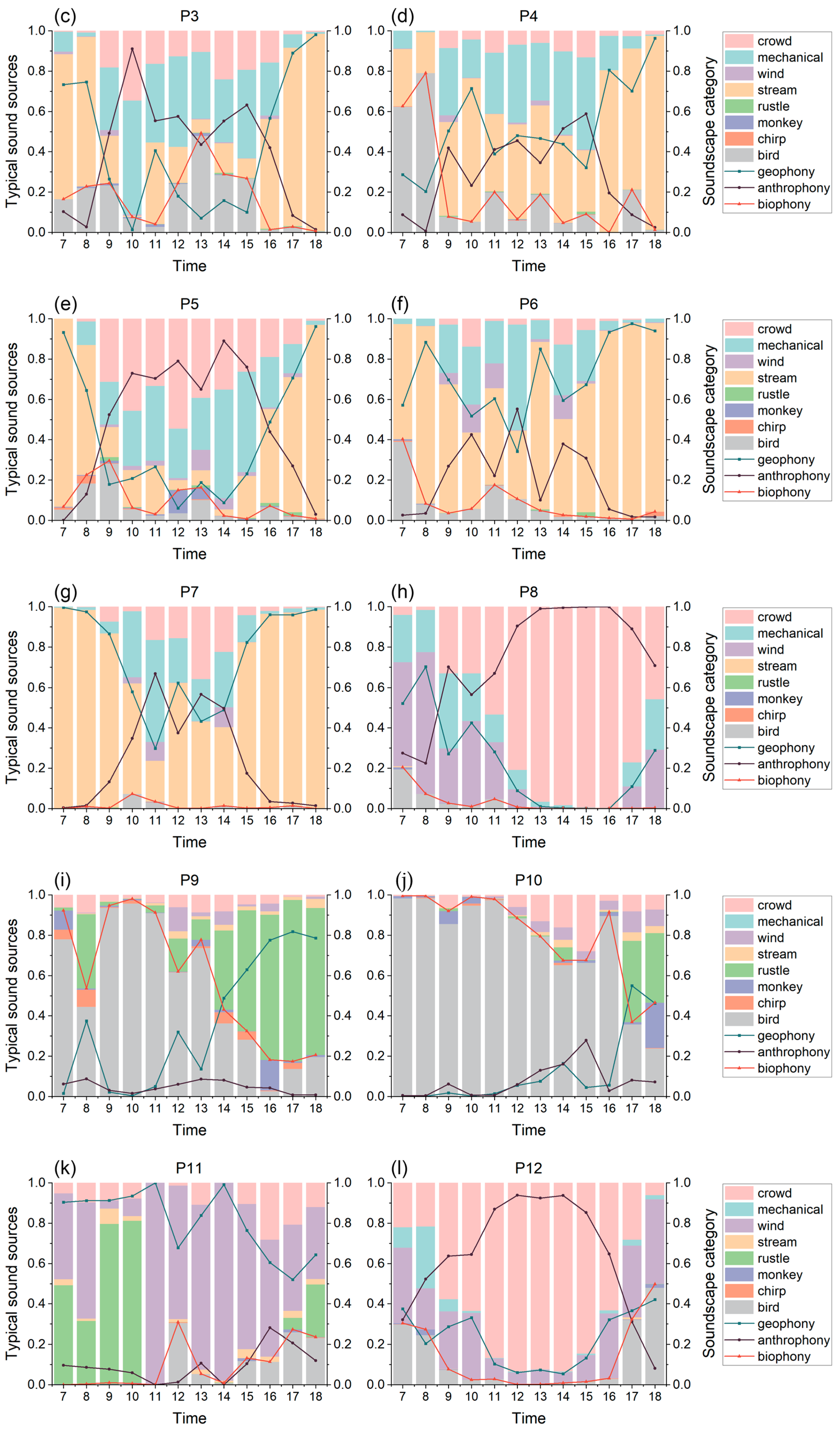

3.1.1. Temporal Characteristics of Soundscapes Under Different Dominant Sound Source Types

3.1.2. Temporal Characteristics of Soundscapes Across Different Time Periods

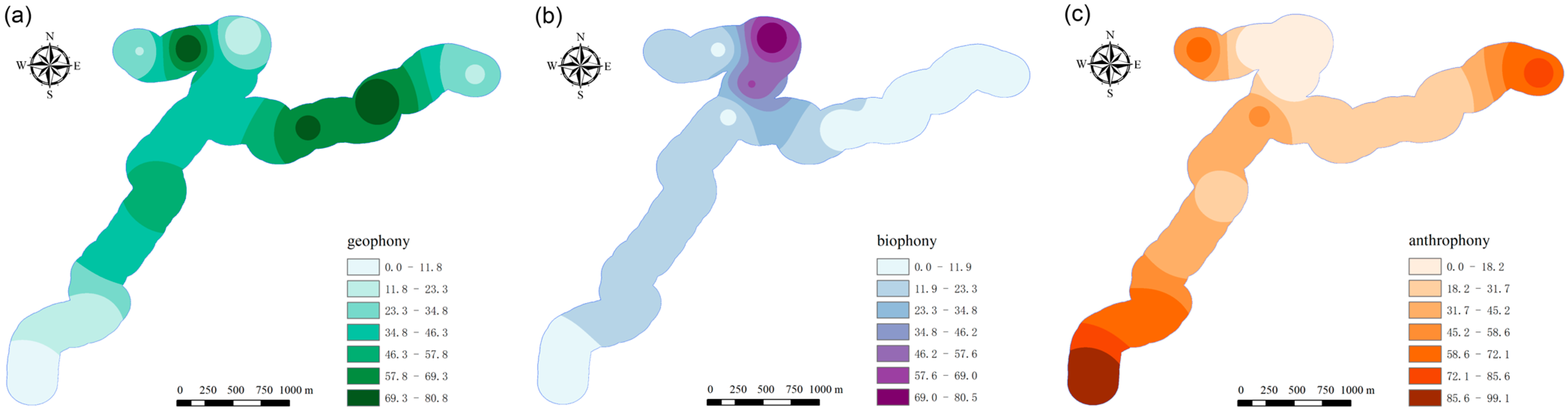

3.2. Spatial Pattern of Forest Park Soundscapes

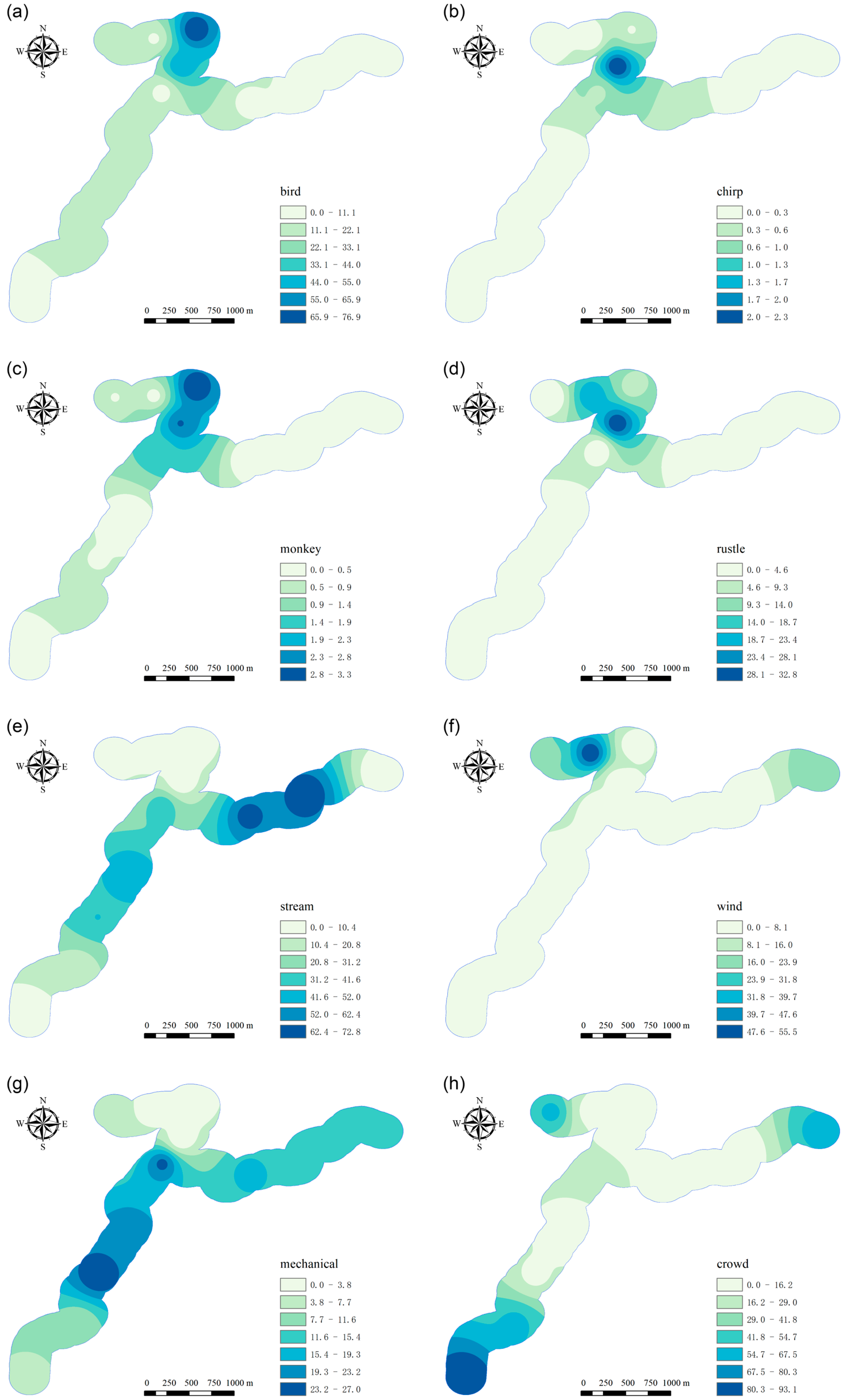

3.2.1. Spatial Distribution Patterns of Typical Sound Sources

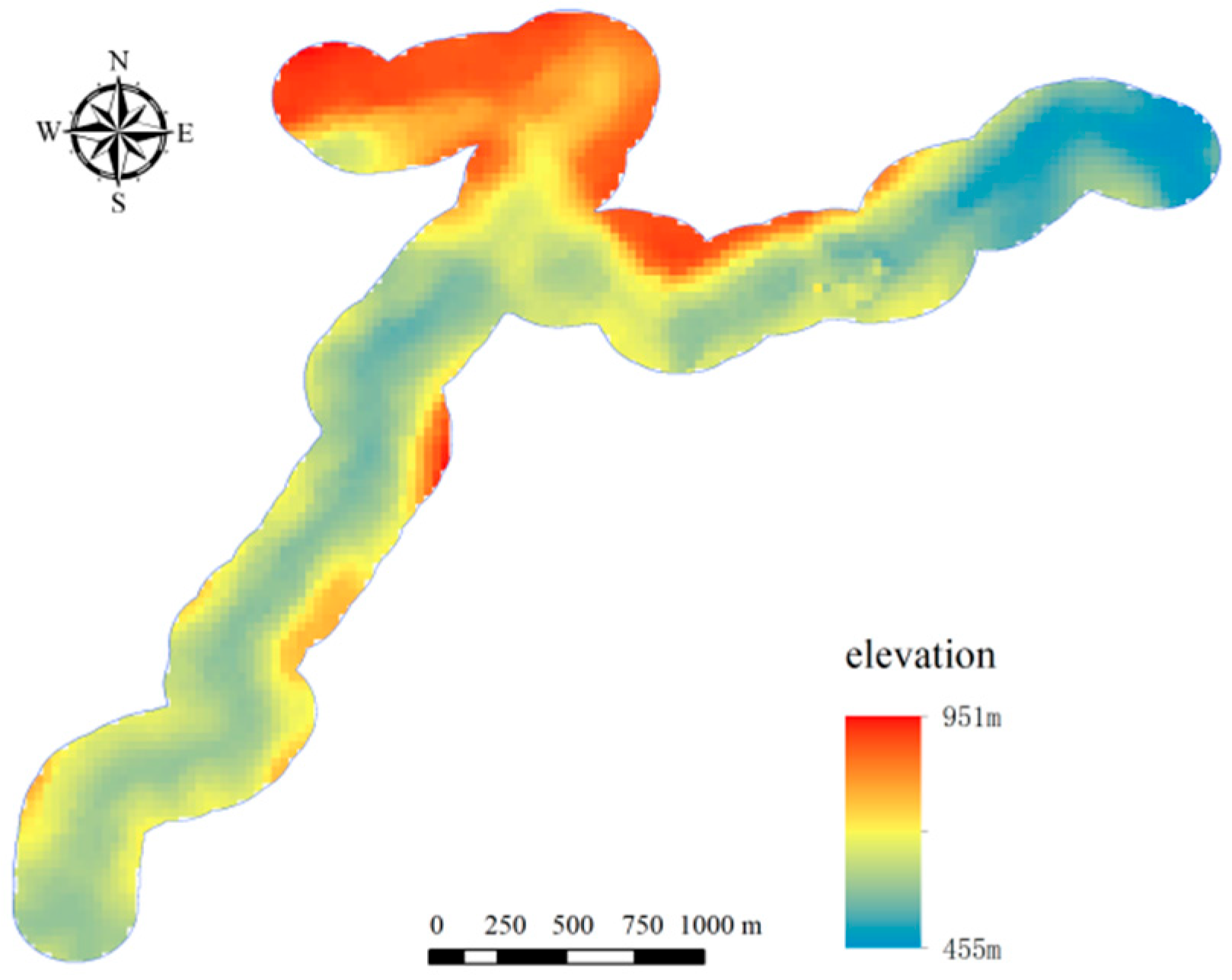

3.2.2. Analysis of Topographic Characteristics of Soundscape Distribution

4. Discussion and Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Number | Sampling Point | Environmental Characteristics |
|---|---|---|
| P1 | Oxygen Bar Plaza | Located at the park entrance; a large open plaza that serves as the starting point for visitor dispersal and guided tours. |
| P2 | Divine Eagle Protecting the Whip | Iconic viewpoint and popular tourist attraction along the route. |
| P3 | Golden Whip Stream Poetry | Dense vegetation close to the stream; intact natural surroundings. |
| P4 | Rock of Literary Star | Open understory with several sets of seating for short stops. |
| P5 | Meet from Afar | Open terrain at the intersection of two tour routes, with frequent visitor traffic and stops. |
| P6 | Jumping Fish Pool | Close to a body of water with strong currents; has a resting pavilion. |
| P7 | Sandstone Peak Forest | Complex terrain, strong currents, and vending machines. |
| P8 | Four Gates Waterside | Junction of multiple routes with outstanding scenery, where visitors tend to linger. |
| P9 | Viewing Platform | Large platform with a wide view, equipped with benches and other facilities for visitors to rest. |
| P10 | Winding Slope | Narrow trail, uneven terrain, and high vegetation cover; a transitional scenic spot. |
| P11 | Rear Garden | At the forest edge, away from the main tourist route; relatively quiet environment. |
| P12 | Enchanted Stand | High vantage point on one of the main thoroughfares, frequently visited by tourists. |

| Soundscape Category | Typical Sound Source |
|---|---|
| Biophony | Chirp, Bird, Monkey |
| Geophony | Stream, Wind, Rustle |
| Anthrophony | Mechanical, Crowd |
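The table above defines a fixed mapping from the eight classifier labels to the three soundscape categories. As a minimal illustration, not the authors' pipeline, the Python sketch below shows one way per-clip predictions could be rolled up into category shares and a dominant category for a recording session. The functions `category_shares` and `dominant_category` and the input list of predicted labels are hypothetical.

```python
from collections import Counter

# Label-to-category mapping transcribed from the soundscape category table.
CATEGORY_OF = {
    "Chirp": "Biophony", "Bird": "Biophony", "Monkey": "Biophony",
    "Stream": "Geophony", "Wind": "Geophony", "Rustle": "Geophony",
    "Mechanical": "Anthrophony", "Crowd": "Anthrophony",
}
CATEGORIES = ("Biophony", "Geophony", "Anthrophony")


def category_shares(predicted_labels):
    """Fraction of clips per soundscape category.

    `predicted_labels` is a hypothetical list of per-clip classifier outputs,
    each one of the eight labels in CATEGORY_OF.
    """
    counts = Counter(CATEGORY_OF[label] for label in predicted_labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cat: counts.get(cat, 0) / total for cat in CATEGORIES}


def dominant_category(predicted_labels):
    """Category with the largest share (first in CATEGORIES order on ties)."""
    shares = category_shares(predicted_labels)
    return max(shares, key=shares.get) if shares else None


if __name__ == "__main__":
    session = ["Bird", "Bird", "Stream", "Crowd"]
    print(category_shares(session))
    print(dominant_category(session))
```

For the example session, two of four clips are bird calls, so Biophony receives a share of 0.5 and is reported as dominant. Counting clips is only one possible weighting; a study could instead weight by clip duration or classifier confidence.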

| Label | Field Recorded | Online Audio | Data Augmentation | Total |
|---|---|---|---|---|
| Chirp | 400 | 400 | / | 800 |
| Bird | 400 | 400 | / | 800 |
| Monkey | 200 | 200 | AL 100; PS 100; TS 100; EC 100 | 800 |
| Stream | 400 | 400 | / | 800 |
| Wind | 400 | 400 | / | 800 |
| Rustle | 400 | 400 | / | 800 |
| Mechanical | 400 | 400 | / | 800 |
| Crowd | 400 | 400 | / | 800 |
| Total | 3000 | 3000 | 400 | 6400 |
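The composition table can be checked with a few lines of Python. The counts are transcribed from the table; the dictionary layout and helper names are illustrative assumptions. The check also makes explicit that augmentation was applied only to the Monkey label, whose 200 field-recorded and 200 online clips were supplemented with 100 clips from each of the four augmentation methods (AL, PS, TS, EC, abbreviations kept as given in the table), so that every label ends up with 800 clips.

```python
# Clip counts per label, transcribed from the dataset composition table.
COMPOSITION = {
    label: {"field": 400, "online": 400, "augmented": {}}
    for label in ("Chirp", "Bird", "Stream", "Wind", "Rustle", "Mechanical", "Crowd")
}
# Monkey is the only label with augmented clips; abbreviations as in the table.
COMPOSITION["Monkey"] = {
    "field": 200,
    "online": 200,
    "augmented": {"AL": 100, "PS": 100, "TS": 100, "EC": 100},
}


def label_total(entry):
    """Total clips for one label: field + online + all augmented clips."""
    return entry["field"] + entry["online"] + sum(entry["augmented"].values())


def column_totals(composition):
    """Return (field, online, augmented, grand total) summed over all labels."""
    field = sum(e["field"] for e in composition.values())
    online = sum(e["online"] for e in composition.values())
    augmented = sum(sum(e["augmented"].values()) for e in composition.values())
    return field, online, augmented, field + online + augmented


if __name__ == "__main__":
    print(column_totals(COMPOSITION))
    print({label: label_total(e) for label, e in COMPOSITION.items()})
```

The resulting dataset is balanced at 800 clips for each of the eight labels, which matters when interpreting averaged precision, recall, and F1 scores.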

| Model | Precision | Recall | F1-Score |
|---|---|---|---|
| PANNs | 0.99405 | 0.99376 | 0.99376 |
| CAM++ | 0.99182 | 0.99142 | 0.99139 |
| ECAPA-TDNN | 0.98363 | 0.98284 | 0.98282 |
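The model comparison reports a single precision, recall, and F1 value per model for an eight-class problem, so per-class scores must have been averaged in some way; the averaging scheme is not stated here. The sketch below assumes macro averaging (unweighted mean over classes) computed from a confusion matrix, which is the same quantity scikit-learn's `precision_recall_fscore_support` returns with `average="macro"`. It also illustrates why an F1 column need not equal the harmonic mean of the averaged precision and recall: averaging per-class F1 scores generally gives a different number.

```python
def per_class_scores(confusion):
    """Per-class (precision, recall, F1) from a square confusion matrix.

    confusion[i][j] is the number of clips of true class i predicted as class j.
    """
    n = len(confusion)
    scores = []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores.append((precision, recall, f1))
    return scores


def macro_scores(confusion):
    """Unweighted mean of per-class precision, recall, and F1 (assumed scheme)."""
    scores = per_class_scores(confusion)
    n = len(scores)
    return tuple(sum(s[i] for s in scores) / n for i in range(3))


if __name__ == "__main__":
    # Toy two-class confusion matrix, for illustration only.
    p, r, f1 = macro_scores([[9, 1], [2, 8]])
    print(p, r, f1, 2 * p * r / (p + r))
```

With balanced classes, as in this study's dataset, macro-averaged and support-weighted recall coincide, but precision and F1 can still differ slightly between the two schemes.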

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhuo, D.; Yan, C.; Xie, W.; He, Z.; Hu, Z. Spatiotemporal Heterogeneity of Forest Park Soundscapes Based on Deep Learning: A Case Study of Zhangjiajie National Forest Park. Forests 2025, 16, 1416. https://doi.org/10.3390/f16091416