Automatic Detection of Ceroxylon Palms by Deep Learning in a Protected Area in Amazonas (NW Peru)

Abstract

1. Introduction

2. Materials and Methods

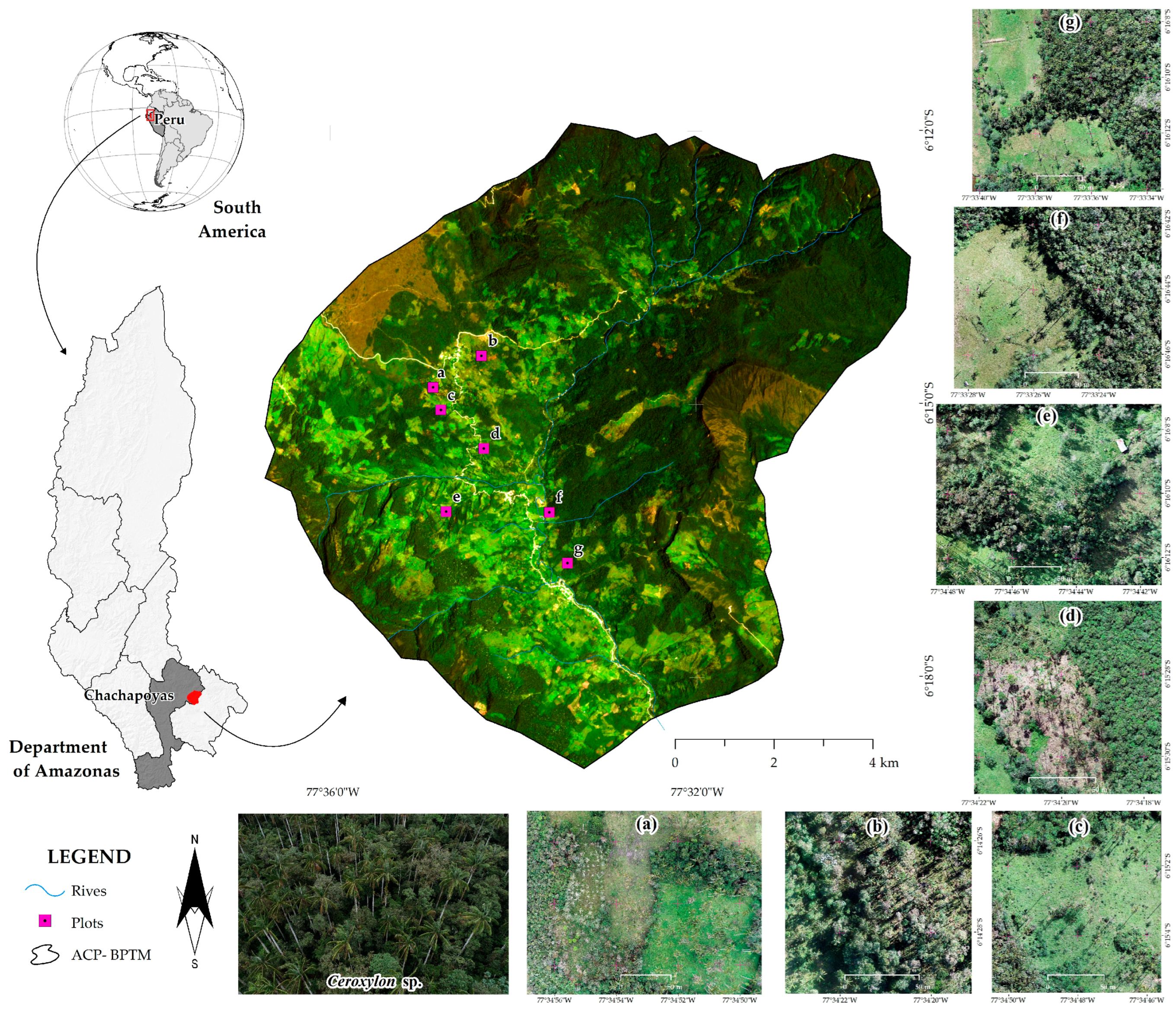

2.1. Study Area

2.2. Data Processing and Orthomosaic Generation

2.3. Palm Tree Detection Methods (YOLOv8, YOLOv10, and YOLOv11)

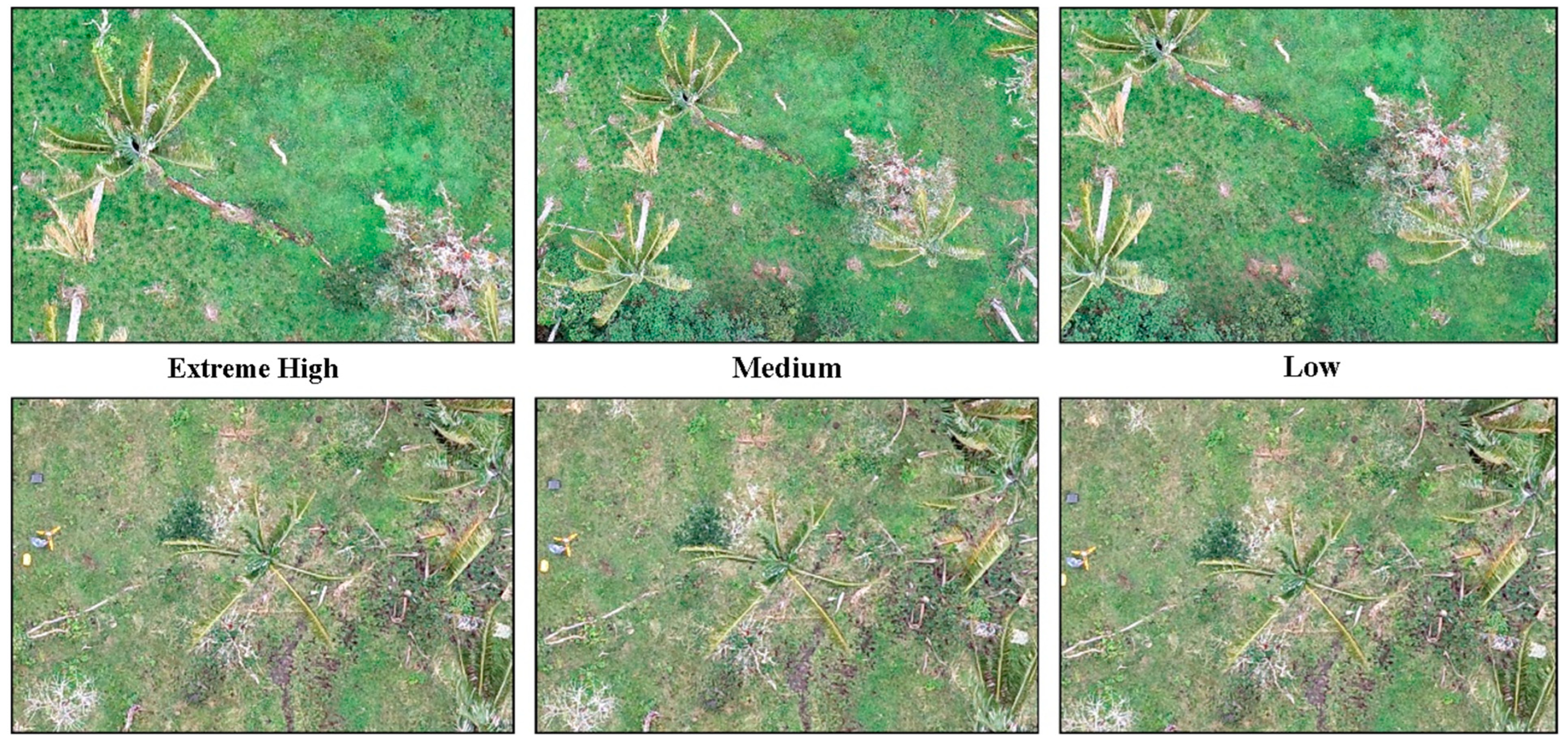

2.3.1. Preparation of Pre-Training Data

2.3.2. Data Augmentation

- A.HorizontalFlip (p = 0.6): Horizontal flip of the image with probability 0.6.

- A.Rotate (limit = 20, border_mode = 0, p = 0.6): Image rotation of up to 20 degrees in either direction to account for slightly tilted positions.

- A.RandomScale (scale_limit = 0.5, p = 0.6): Random rescaling by up to 50%, both enlarging and reducing, so that palm trees are recognized at different apparent distances.

- A.RandomBrightnessContrast (brightness_limit = 0.2, contrast_limit = 0.2, p = 0.6): Randomly adjusts the brightness and contrast of images by up to 20%, improving the model’s ability to work in different lighting conditions.

- A.Sharpen (alpha = (0.1, 0.3), p = 0.6): Adds a sharpening effect to images to improve edge detection of palm fronds.

- A.HueSaturationValue (hue_shift_limit = 10, sat_shift_limit = 20, val_shift_limit = 10, p = 0.6): Shifts hue by up to 10, saturation by up to 20, and value (brightness) by up to 10 to simulate different environmental conditions.

- A.RandomFog (fog_coef_lower = 0.1, fog_coef_upper = 0.3, p = 0.6): Adds a fog effect with a fog coefficient between 0.1 and 0.3 to improve the identification of palm trees in low visibility.

- A.MotionBlur (blur_limit = 5, p = 0.6): Simulates camera movement by adding blur.
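Because these transforms are applied to object-detection data, geometric operations such as the horizontal flip have to update the bounding-box labels together with the pixels. The following minimal sketch illustrates this for a single label in normalized YOLO format. It is not Albumentations code: the function name `maybe_hflip_box` and the example box are hypothetical, and only the probability p = 0.6 comes from the list above.

```python
import random


def maybe_hflip_box(box, p=0.6, rng=random):
    """Mirror a normalized YOLO box (x_center, y_center, width, height)
    horizontally with probability p. Returns (box, flipped)."""
    x_c, y_c, w, h = box
    if rng.random() < p:
        # Mirroring about the vertical axis moves the center to 1 - x_center;
        # the vertical position and the box size are unchanged.
        return (1.0 - x_c, y_c, w, h), True
    return box, False


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the illustration is repeatable
    box = (0.25, 0.40, 0.10, 0.20)  # hypothetical palm annotation
    draws = 10_000
    flips = sum(maybe_hflip_box(box, rng=rng)[1] for _ in range(draws))
    print(f"flipped in {flips / draws:.1%} of draws")
```

With p = 0.6 the printed share should be close to 60%, and every flipped label keeps its width and height while its center moves from x to 1 - x.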

2.3.3. Model Selection and Implementation of YOLO

- Batch size: two images per batch (to optimize computational efficiency);

- Learning rate: 0.0005;

- Epochs: 500;

- Early stopping: patience of 30 epochs (training stops when performance has not improved for 30 consecutive epochs, which helps to find the optimal point of the model [75]);

- Optimizer: Adam.
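The interaction between the 500-epoch limit and the 30-epoch early-stopping window can be sketched as follows. This is an illustrative stand-in, not the training code used in the study: the function name `run_with_early_stopping` and the synthetic fitness curve are assumptions, and the fitness value stands for whatever validation score the training framework monitors.

```python
def run_with_early_stopping(fitness_per_epoch, max_epochs=500, patience=30):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training halts once `patience` epochs have passed without a new best
    fitness value, or when `max_epochs` is reached, whichever comes first.
    """
    best_fitness = float("-inf")
    best_epoch = 0
    history = fitness_per_epoch[:max_epochs]
    for epoch, fitness in enumerate(history, start=1):
        if fitness > best_fitness:
            best_fitness, best_epoch = fitness, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(history)


if __name__ == "__main__":
    # Synthetic curve (assumption): improves until epoch 28, then plateaus.
    curve = [min(epoch, 28) / 28 for epoch in range(1, 501)]
    best, stopped = run_with_early_stopping(curve)
    print(f"best epoch: {best}, stopped at epoch: {stopped}")
```

Under these assumptions a run stops well before the 500-epoch limit, so the limit acts as an upper bound rather than as the expected training length.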

2.3.4. Model Evaluation Metrics

- Precision: Evaluates how many of the positive predictions are actually correct, that is, the proportion of predicted positives that are true positives.

- Recall: Measures the model’s ability to detect all positive cases.

- F1-Score: Represents the harmonic mean of recall and precision and is useful for assessing the balance between both metrics.
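The three metrics follow directly from the counts of true positives (TP), false positives (FP), and false negatives (FN). A minimal sketch is given below; the function name `precision_recall_f1` and the counts in the usage example are hypothetical and are not taken from the study.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from detection counts."""
    # Precision: share of predicted palms that are real palms.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Recall: share of real palms that were detected.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1: harmonic mean of precision and recall.
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    # Hypothetical counts: 90 correct detections, 10 false alarms, 30 misses.
    p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
    print(f"P = {p:.2f}, R = {r:.2f}, F1 = {f1:.2f}")
```

Because F1 is a harmonic mean, it is pulled toward the lower of the two values, so a model cannot reach a high F1 by maximizing only precision or only recall.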

3. Results

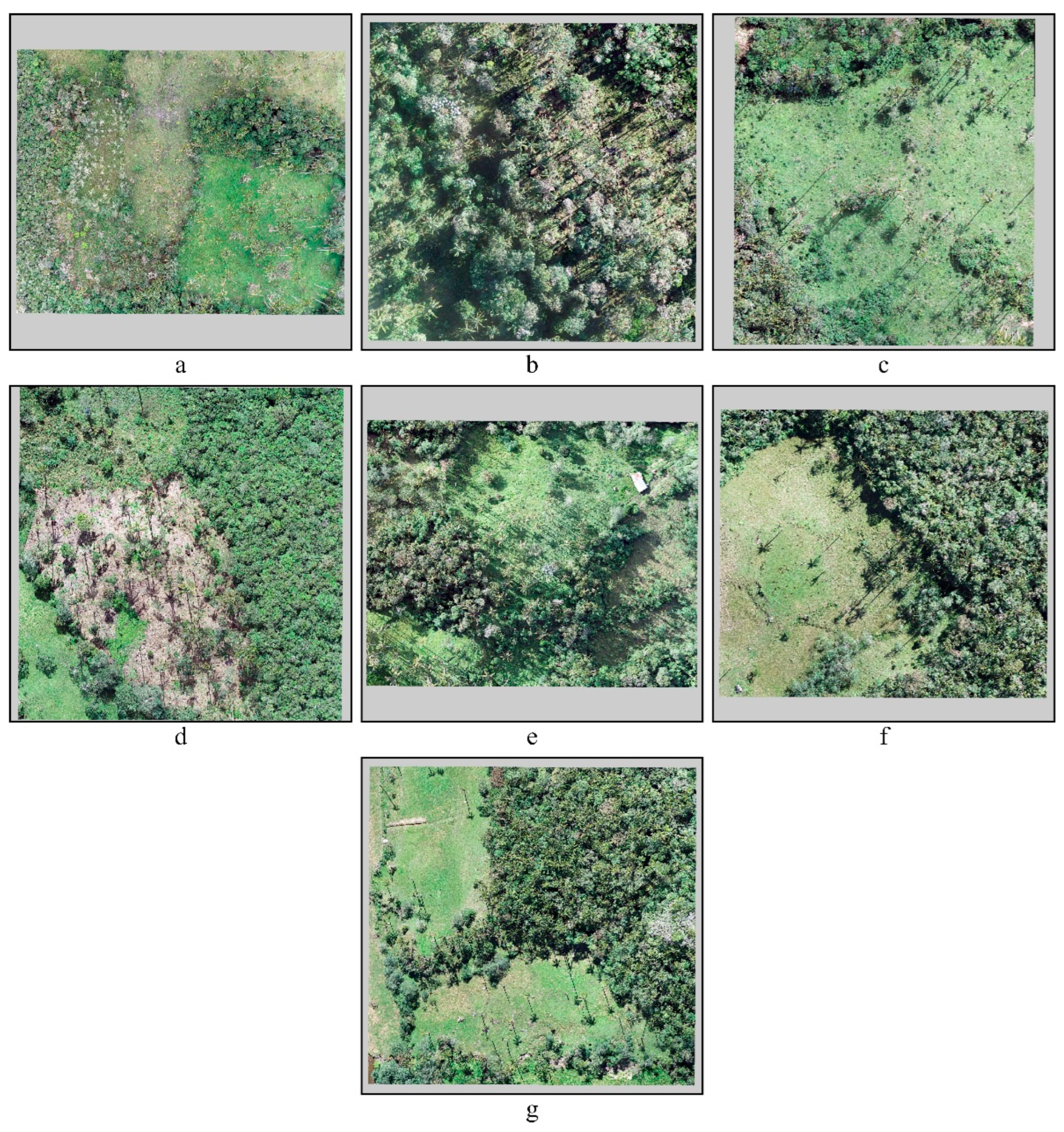

3.1. Generation of Orthomosaics

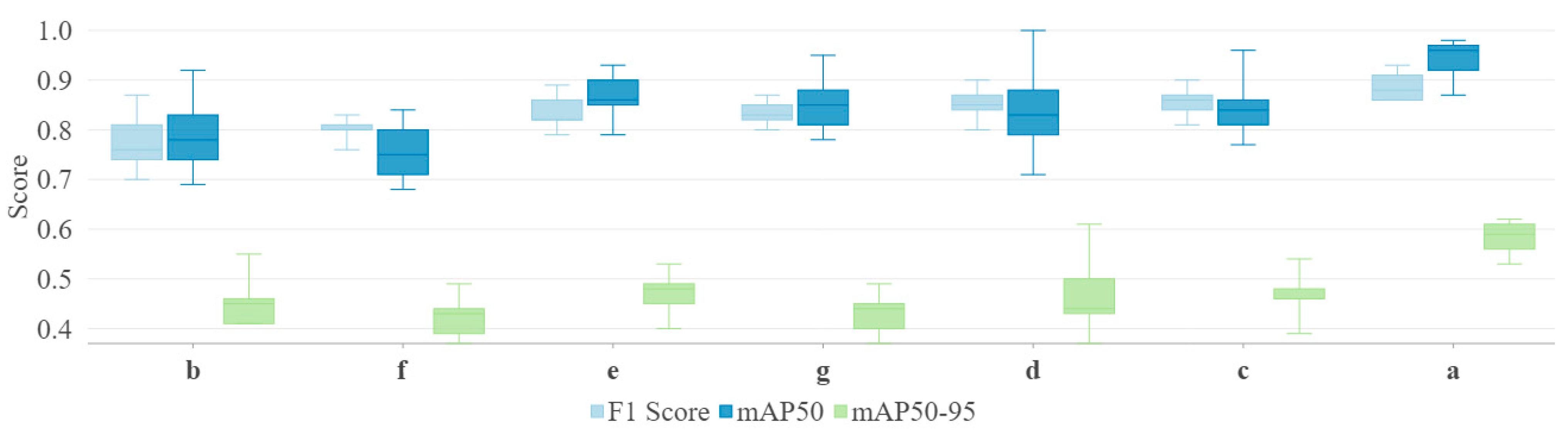

3.2. Evaluation of the Mosaic Clipping Size Parameter

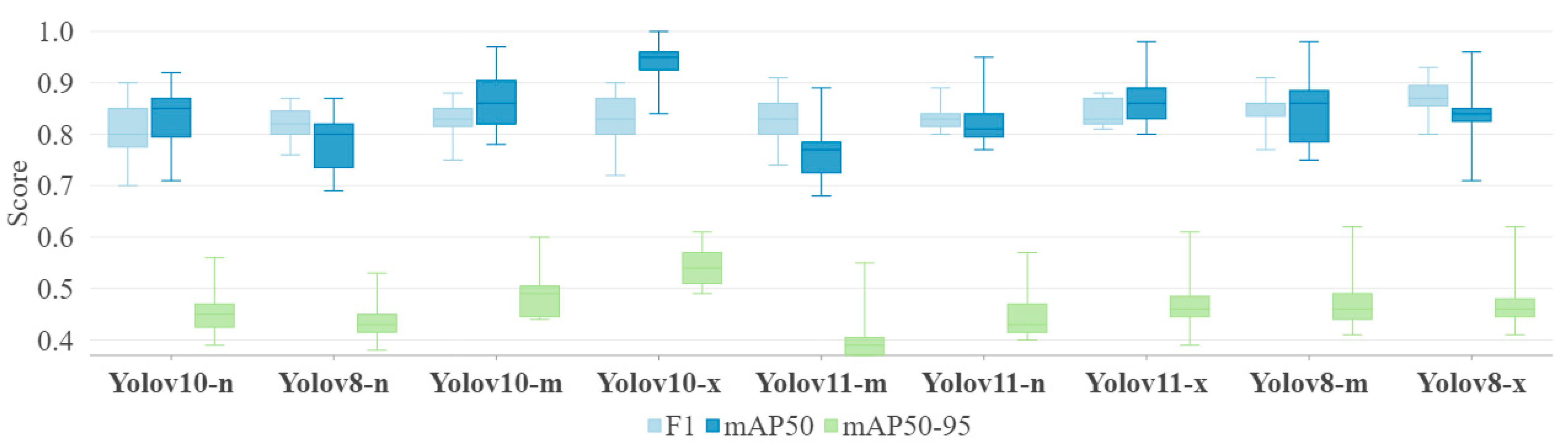

3.3. Training of the Nine Configurations at YOLO

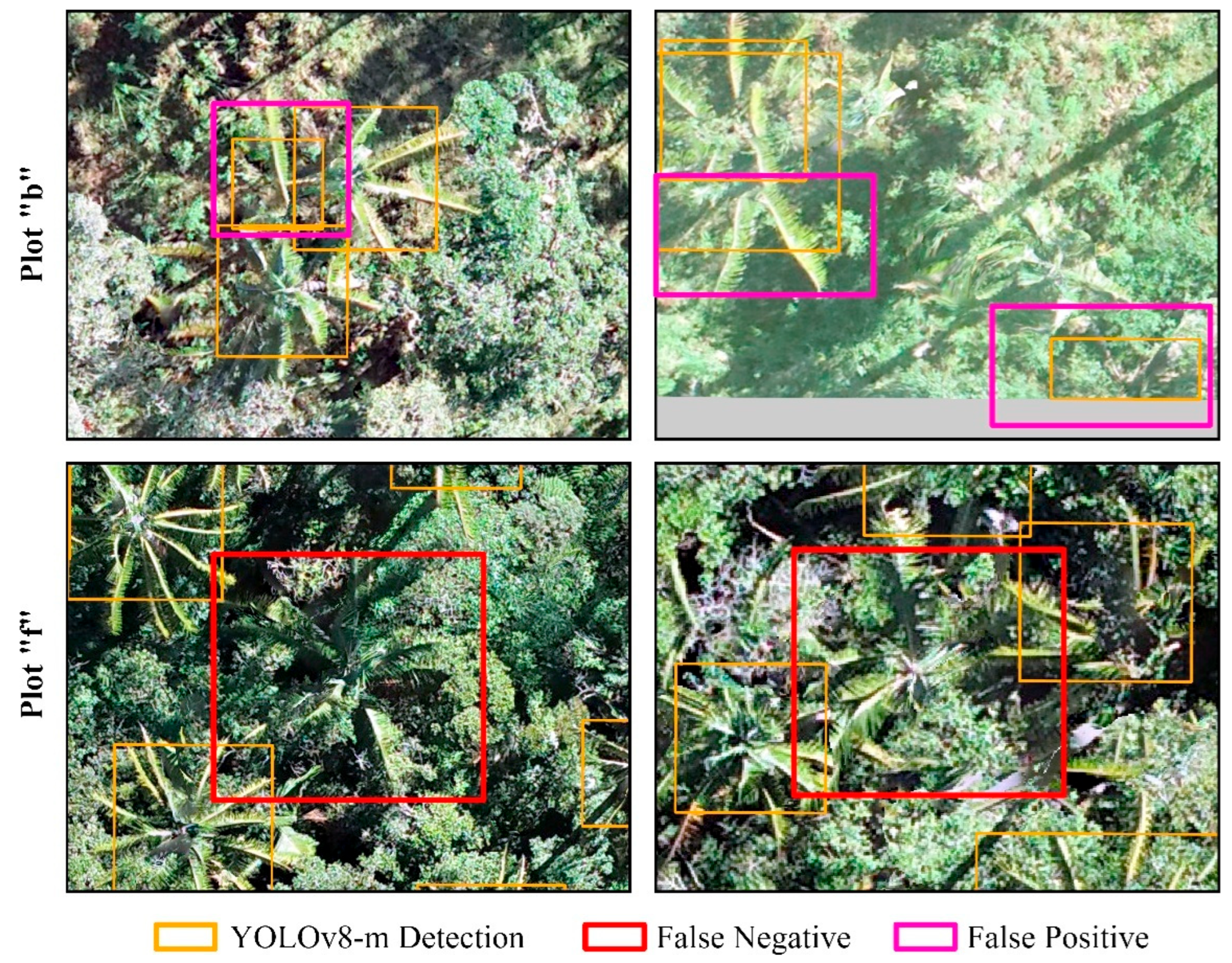

3.4. Application of Tests on Orthomosaics

3.5. Detection of Palms of the Genus Ceroxylon—YOLOv8m

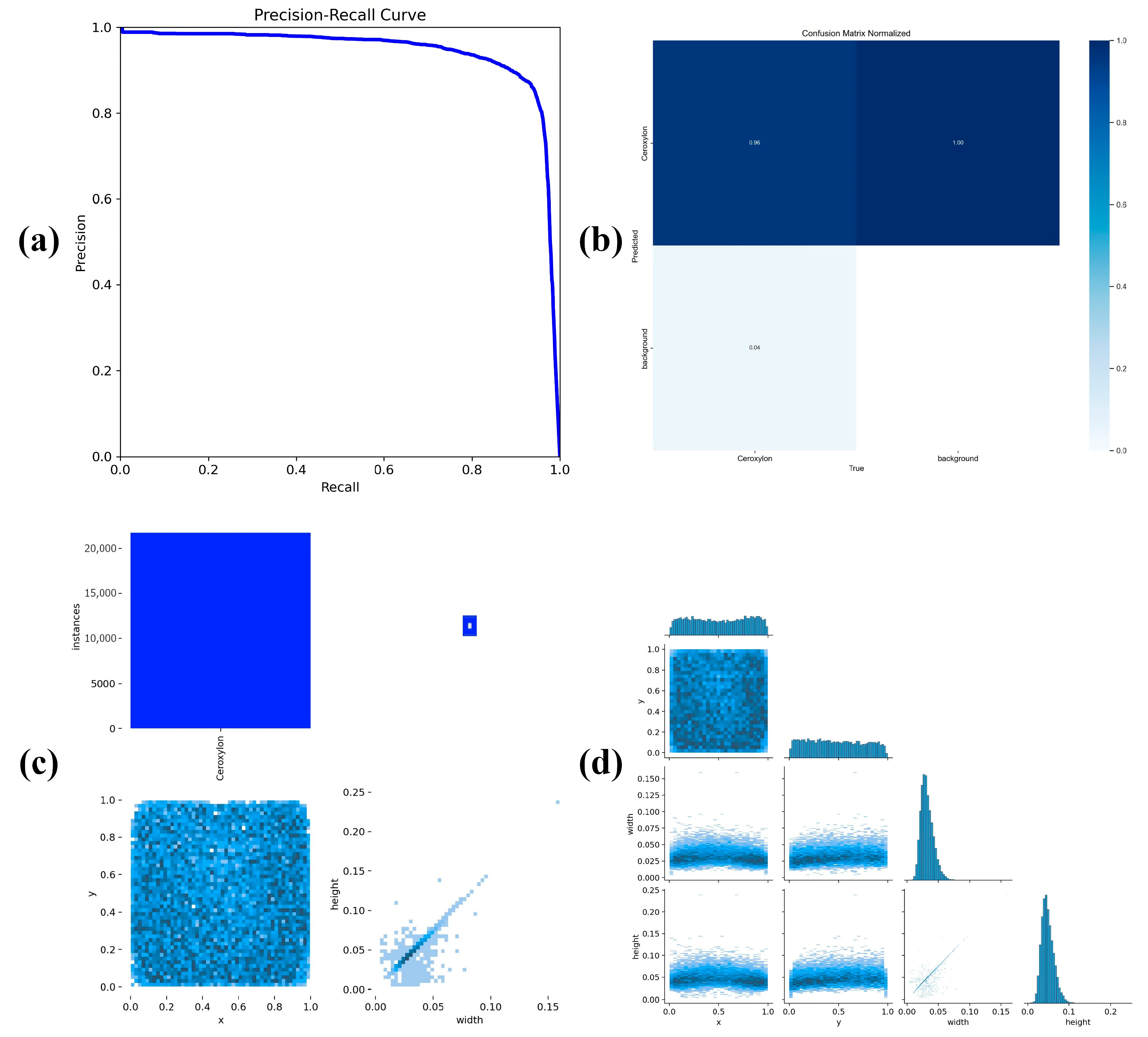

- Model Performance (Precision–Recall Curve, Figure 7a): The precision–recall curve analysis demonstrates outstanding model performance, with an mAP50 of 0.929. The curve remains at a precision close to 1.0, decreasing significantly only when the maximum recall is reached, indicating robust detection capability with minimal false positives. This curve shape is characteristic of a well-calibrated model with high discriminative power.

- Confusion Matrix (Figure 7b): The normalized confusion matrix shows a high accuracy (0.941) in detecting Ceroxylon, a background classification accuracy of 1.00, and low levels of false positives and false negatives.

- Distribution of Detections (Figure 7c): Samples are strongly concentrated in the deep blue region. The scatter plot shows correlations between model parameters, and the distribution graph suggests a substantial accumulation of features relevant for correct identification. Together, the plots suggest a uniform distribution of detections and a consistent correlation between the variables analyzed.

- Detection Characteristics (Figure 7d): This panel is a statistical analysis of the annotated Ceroxylon palms. It comprises scatter plots for the variable pairs (x, y), (x, width), (x, height), (y, width), (y, height), and (width, height), in which darker shades indicate a higher concentration of data at those positions, together with individual histograms for x, y, width, and height. The variables x and y are the normalized coordinates of the center of the bounding box within the image, and width and height are the box dimensions as a proportion of the total image; all four range from 0 to 1 [20]. The graphs show how these variables interact and indicate a uniform spatial distribution with positive correlations in the detections. The well-defined concentration peaks in the histograms support the consistency of the model.
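The normalized variables x, y, width, and height can be obtained from a pixel-space bounding box as in the following sketch. The function name `to_yolo_box`, the 1600 × 1600 tile size, and the example box are assumptions chosen for illustration.

```python
def to_yolo_box(x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel box (corner format) to normalized YOLO format:
    (x_center, y_center, width, height), each between 0 and 1."""
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return x_center, y_center, width, height


if __name__ == "__main__":
    # Hypothetical palm crown on a hypothetical 1600 x 1600 pixel tile.
    box = to_yolo_box(400, 800, 560, 1000, img_w=1600, img_h=1600)
    print(tuple(round(value, 4) for value in box))
```

Normalizing by the image size makes labels independent of the tile resolution, which is why all four variables in the plots share the 0 to 1 range.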

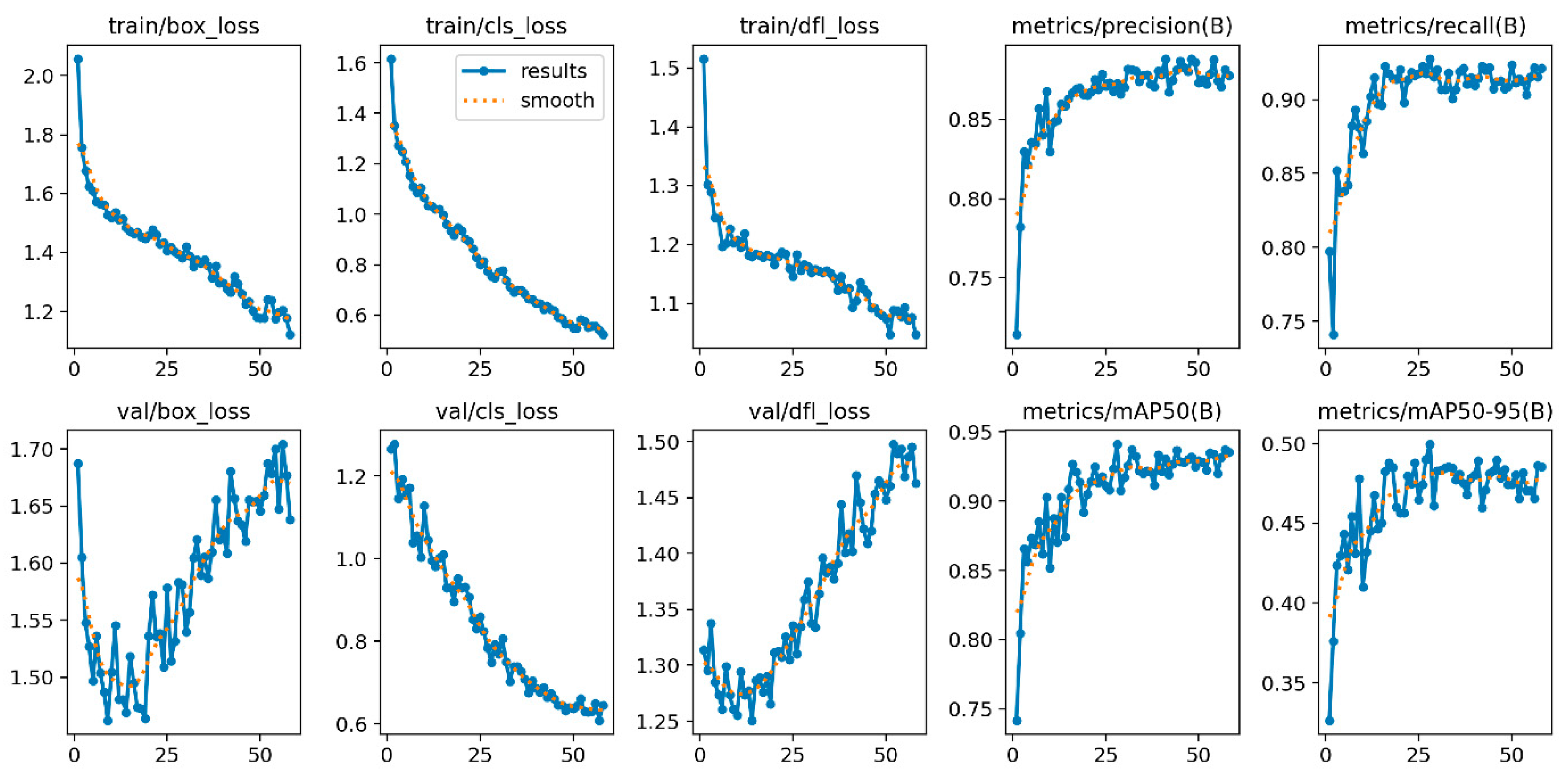

- Training and Validation Metrics (Figure 8): The figure shows nine graphs that represent the data obtained in the training of the model:

  - The downward trend in all three training losses (train/box_loss, train/cls_loss, train/dfl_loss) indicates that the model learned efficiently, with no signs of overfitting during training.

  - Validation losses (val/box_loss, val/cls_loss, val/dfl_loss) follow a similar pattern with some controlled fluctuations.

  - Precision and recall (metrics/precision(B), metrics/recall(B)) improve consistently during training and stabilize at high values of up to ~0.9, which indicates a robust model with a low risk of false positives and false negatives.

  - Similarly, the validation mAP50 (metrics/mAP50(B)) increases progressively up to ~0.9, whereas the more rigorous metric (metrics/mAP50-95(B)) stabilizes at ~0.45, which is typical of objects that are difficult to segment or that show morphological variability (for example, because of failures in the reconstruction of orthomosaics).
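The gap between mAP50 and mAP50-95 follows from how a detection is counted as correct. mAP50 accepts a predicted box when its intersection over union (IoU) with the annotated box is at least 0.50, whereas mAP50-95 averages the result over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows a single prediction that passes the lenient thresholds but fails the strict ones; the helper names and the two boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95.
THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def thresholds_passed(pred, truth):
    """List the IoU thresholds at which `pred` counts as a correct match."""
    score = iou(pred, truth)
    return [t for t in THRESHOLDS if score >= t]


if __name__ == "__main__":
    truth = (0, 0, 100, 100)   # hypothetical annotated crown, in pixels
    pred = (10, 10, 110, 110)  # hypothetical prediction, shifted by 10 px
    print(f"IoU = {iou(pred, truth):.3f}")
    print("counts as correct at:", thresholds_passed(pred, truth))
```

In this example a 10-pixel offset on a 100-pixel crown is enough to fail six of the ten thresholds, which illustrates why a model can score about 0.9 on mAP50 and still stay near 0.45 on mAP50-95.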

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Culqui, L.; Leiva-Tafur, D.; Haro, N.; Juarez-Contreras, L.; Vigo, C.N.; Maicelo Quintana, J.L.; Oliva-Cruz, M. Native Species Diversity Associated with Bosque Palmeras de Ocol in the Amazonas Region, Peru. Trees For. People 2024, 16, 100580. [Google Scholar] [CrossRef]

- Prajapati, V.; Modi, K.; Mehta, Y.; Malhotra, S. Assessment of Drug Marketing Literature Systematically Using the WHO Criteria. Natl. J. Physiol. Pharm. Pharmacol. 2023, 13, 2100–2104. [Google Scholar] [CrossRef]

- Kalogiannidis, S.; Kalfas, D.; Loizou, E.; Chatzitheodoridis, F. Forestry Bioeconomy Contribution on Socioeconomic Development: Evidence from Greece. Land 2022, 11, 2139. [Google Scholar] [CrossRef]

- Ge, Y.; Hu, S.; Ren, Z.; Jia, Y.; Wang, J.; Liu, M.; Zhang, D.; Zhao, W.; Luo, Y.; Fu, Y.; et al. Mapping Annual Land Use Changes in China’s Poverty-Stricken Areas from 2013 to 2018. Remote Sens. Environ. 2019, 232, 111285. [Google Scholar] [CrossRef]

- Leberger, R.; Rosa, I.M.D.; Guerra, C.A.; Wolf, F.; Pereira, H.M. Global Patterns of Forest Loss across IUCN Categories of Protected Areas. Biol. Conserv. 2020, 241, 108299. [Google Scholar] [CrossRef]

- Kupec, P.; Marková, J.; Pelikán, P.; Brychtová, M.; Autratová, S.; Fialová, J. Urban Parks Hydrological Regime in the Context of Climate Change—A Case Study of Štěpánka Forest Park (Mladá Boleslav, Czech Republic). Land 2022, 11, 412. [Google Scholar] [CrossRef]

- Aguirre-Forero, S.E.; Piraneque-Gambasica, N.V.; Abaunza-Suárez, C.F. Especies Con Potencial Para Sistemas Agroforestales En El Departamento Del Magdalena, Colombia. Inf. Tecnol. 2021, 32, 13–28. [Google Scholar] [CrossRef]

- Leite-Júnior, D.P.; de Oliveira-Dantas, E.S.; de Sousa, R.; de Sousa, M.; Durigon, L.; Sehn, M.; Siqueira, V. Burning Season: Challenges to Conserve Biodiversity and the Critical Points of a Planet Threatened by the Danger Called Global Warming. Int. J. Environ. Clim. Change 2021, 11, 60–90. [Google Scholar] [CrossRef]

- Rivers, M.; Newton, A.C.; Oldfield, S. Scientists’ Warning to Humanity on Tree Extinctions. Plants People Planet 2023, 5, 466–482. [Google Scholar] [CrossRef]

- Medina Medina, A.J.; Salas López, R.; Zabaleta Santisteban, J.A.; Tuesta Trauco, K.M.; Turpo Cayo, E.Y.; Huaman Haro, N.; Oliva Cruz, M.; Gómez Fernández, D. An Analysis of the Rice-Cultivation Dynamics in the Lower Utcubamba River Basin Using SAR and Optical Imagery in Google Earth Engine (GEE). Agronomy 2024, 14, 557. [Google Scholar] [CrossRef]

- Zhong, H.; Zhang, Z.; Liu, H.; Wu, J.; Lin, W. Individual Tree Species Identification for Complex Coniferous and Broad-Leaved Mixed Forests Based on Deep Learning Combined with UAV LiDAR Data and RGB Images. Forests 2024, 15, 293. [Google Scholar] [CrossRef]

- Budei, B.C.; St-Onge, B.; Hopkinson, C.; Audet, F.A. Identifying the Genus or Species of Individual Trees Using a Three-Wavelength Airborne Lidar System. Remote Sens. Environ. 2018, 204, 632–647. [Google Scholar] [CrossRef]

- Fassnacht, F.E.; Latifi, H.; Stereńczak, K.; Modzelewska, A.; Lefsky, M.; Waser, L.T.; Straub, C.; Ghosh, A. Review of Studies on Tree Species Classification from Remotely Sensed Data. Remote Sens. Environ. 2016, 186, 64–87. [Google Scholar] [CrossRef]

- Braga, J.R.G.; Peripato, V.; Dalagnol, R.; Ferreira, M.P.; Tarabalka, Y.; Aragão, L.E.O.C.; de Campos Velho, H.F.; Shiguemori, E.H.; Wagner, F.H. Tree Crown Delineation Algorithm Based on a Convolutional Neural Network. Remote Sens. 2020, 12, 1288. [Google Scholar] [CrossRef]

- Kentsch, S.; Caceres, M.L.L.; Serrano, D.; Roure, F.; Diez, Y. Computer Vision and Deep Learning Techniques for the Analysis of Drone-Acquired Forest Images, a Transfer Learning Study. Remote Sens. 2020, 12, 1287. [Google Scholar] [CrossRef]

- Zhang, Z.; Han, C.; Wang, X.; Li, H.; Li, J.; Zeng, J.; Sun, S.; Wu, W. Large Field-of-View Pine Wilt Disease Tree Detection Based on Improved YOLO v4 Model with UAV Images. Front. Plant Sci. 2024, 15, 1381367. [Google Scholar] [CrossRef]

- Almeida, D.R.A.D.; Broadbent, E.N.; Ferreira, M.P.; Meli, P.; Zambrano, A.M.A.; Gorgens, E.B.; Resende, A.F.; de Almeida, C.T.; do Amaral, C.H.; Corte, A.P.D.; et al. Monitoring Restored Tropical Forest Diversity and Structure through UAV-Borne Hyperspectral and Lidar Fusion. Remote Sens. Environ. 2021, 264, 112582. [Google Scholar] [CrossRef]

- Terryn, L.; Calders, K.; Bartholomeus, H.; Bartolo, R.E.; Brede, B.; D’hont, B.; Disney, M.; Herold, M.; Lau, A.; Shenkin, A.; et al. Quantifying Tropical Forest Structure through Terrestrial and UAV Laser Scanning Fusion in Australian Rainforests. Remote Sens. Environ. 2022, 271, 112912. [Google Scholar] [CrossRef]

- Botterill-James, T.; Yates, L.A.; Buettel, J.C.; Aandahl, Z.; Brook, B.W. Southeast Asian Biodiversity Is a Fifth Lower in Deforested versus Intact Forests. Environ. Res. Lett. 2024, 19, 113007. [Google Scholar] [CrossRef]

- Wang, J.; Zhang, H.; Liu, Y.; Zhang, H.; Zheng, D. Tree-Level Chinese Fir Detection Using UAV RGB Imagery and YOLO-DCAM. Remote Sens. 2024, 16, 335. [Google Scholar] [CrossRef]

- Xu, X.; Zhou, Z.; Tang, Y.; Qu, Y. Individual Tree Crown Detection from High Spatial Resolution Imagery Using a Revised Local Maximum Filtering. Remote Sens. Environ. 2021, 258, 112397. [Google Scholar] [CrossRef]

- Qin, H.; Zhou, W.; Yao, Y.; Wang, W. Individual Tree Segmentation and Tree Species Classification in Subtropical Broadleaf Forests Using UAV-Based LiDAR, Hyperspectral, and Ultrahigh-Resolution RGB Data. Remote Sens. Environ. 2022, 280, 113143. [Google Scholar] [CrossRef]

- Wang, Y.; Zhu, X.; Wu, B. Automatic Detection of Individual Oil Palm Trees from UAV Images Using HOG Features and an SVM Classifier. Int. J. Remote Sens. 2018, 40, 7356–7370. [Google Scholar] [CrossRef]

- Diez, Y.; Kentsch, S.; Lopez Caceres, M.L.; Nguyen, H.T.; Serrano, D.; Roure, F. Comparison of Algorithms for Tree-Top Detection in Drone Image Mosaics of Japanese Mixed Forests. In Proceedings of the 9th International Conference on Pattern Recognition Applications and Methods ICPRAM, Valletta, Malta, 22–24 February 2020; Volume 1, pp. 75–87. [Google Scholar] [CrossRef]

- Puliti, S.; Astrup, R. Automatic Detection of Snow Breakage at Single Tree Level Using YOLOv5 Applied to UAV Imagery. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102946. [Google Scholar] [CrossRef]

- Yu, K.; Hao, Z.; Post, C.J.; Mikhailova, E.A.; Lin, L.; Zhao, G.; Tian, S.; Liu, J. Comparison of Classical Methods and Mask R-CNN for Automatic Tree Detection and Mapping Using UAV Imagery. Remote Sens. 2022, 14, 295. [Google Scholar] [CrossRef]

- Liu, S.; Xue, J.; Zhang, T.; Lv, P. Research Progress and Prospect of Key Technologies of Fruit Target Recognition for Robotic Fruit Picking. Front. Plant Sci. 2024, 15, 1423338. [Google Scholar] [CrossRef] [PubMed]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar] [CrossRef]

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- Qiu, Q.; Lau, D. Assessment of Trees’ Structural Defects via Hybrid Deep Learning Methods Used in Unmanned Aerial Vehicle (UAV) Observations. Forests 2024, 15, 1374. [Google Scholar] [CrossRef]

- Shen, Y.; Liu, D.; Chen, J.; Wang, Z.; Wang, Z.; Zhang, Q. On-Board Multi-Class Geospatial Object Detection Based on Convolutional Neural Network for High Resolution Remote Sensing Images. Remote Sens. 2023, 15, 3963. [Google Scholar] [CrossRef]

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo Algorithm Developments. Procedia Comput. Sci. 2021, 199, 1066–1073. [Google Scholar] [CrossRef]

- Jintasuttisak, T.; Edirisinghe, E.; Elbattay, A. Deep Neural Network Based Date Palm Tree Detection in Drone Imagery. Comput. Electron. Agric. 2022, 192, 106560. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976. [Google Scholar]

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar] [CrossRef]

- Lou, X.; Huang, Y.; Fang, L.; Huang, S.; Gao, H.; Yang, L.; Weng, Y.; Hung, I.K. Measuring Loblolly Pine Crowns with Drone Imagery through Deep Learning. J. For. Res. 2022, 33, 227–238. [Google Scholar] [CrossRef]

- Chen, Y.; Xu, H.; Zhang, X.; Gao, P.; Xu, Z.; Huang, X. An Object Detection Method for Bayberry Trees Based on an Improved YOLO Algorithm. Int. J. Digit. Earth 2023, 16, 781–805. [Google Scholar] [CrossRef]

- Dong, C.; Cai, C.; Chen, S.; Xu, H.; Yang, L.; Ji, J.; Huang, S.; Hung, I.K.; Weng, Y.; Lou, X. Crown Width Extraction of Metasequoia Glyptostroboides Using Improved YOLOv7 Based on UAV Images. Drones 2023, 7, 336. [Google Scholar] [CrossRef]

- Xue, Z.; Lin, H.; Wang, F. A Small Target Forest Fire Detection Model Based on YOLOv5 Improvement. Forests 2022, 13, 1332. [Google Scholar] [CrossRef]

- Qin, B.; Sun, F.; Shen, W.; Dong, B.; Ma, S.; Huo, X.; Lan, P. Deep Learning-Based Pine Nematode Trees’ Identification Using Multispectral and Visible UAV Imagery. Drones 2023, 7, 183. [Google Scholar] [CrossRef]

- Cruz, M.; Pradel, W.; Juarez, H.; Hualla, V.; Suarez, V. Deforestation Dynamics in Peru. A Comprehensive Review of Land Use, Food Systems, and Socio-Economic Drivers; International Potato Center: Lima, Peru, 2023; 43p. [Google Scholar] [CrossRef]

- MINAM. GEOBOSQUES: Bosque y Pérdida de Bosque. Available online: https://geobosques.minam.gob.pe/geobosque/view/perdida.php (accessed on 22 June 2025).

- De Sy, V.; Herold, M.; Achard, F.; Beuchle, R.; Clevers, J.; Lindquist, E.; Verchot, L. Land Use Patterns and Related Carbon Losses Following Deforestation in South America. Environ. Res. Lett. 2015, 10, 124004. [Google Scholar]

- Meza-Mori, G.; Rojas-Briceño, N.B.; Cotrina Sánchez, A.; Oliva-Cruz, M.; Olivera Tarifeño, C.M.; Hoyos Cerna, M.Y.; Ramos Sandoval, J.D.; Torres Guzmán, C. Potential Current and Future Distribution of the Long-Whiskered Owlet (Xenoglaux loweryi) in Amazonas and San Martin, NW Peru. Animals 2022, 12, 1794. [Google Scholar] [CrossRef] [PubMed]

- Galán-De-mera, A.; Campos-De-la-cruz, J.; Linares-Perea, E.; Montoya-Quino, J.; Torres-Marquina, I.; Vicente-Orellana, J.A. A Phytosociological Study on Andean Rainforests of Peru, and a Comparison with the Surrounding Countries. Plants 2020, 9, 1654. [Google Scholar] [CrossRef]

- Linares-Palomino, R.; Cardona, V.; Hennig, E.I.; Hensen, I.; Hoffmann, D.; Lendzion, J.; Soto, D.; Herzog, S.K.; Kessler, M. Non-Woody Life-Form Contribution to Vascular Plant Species Richness in a Tropical American Forest. Plant Ecol. 2009, 201, 87–99. [Google Scholar] [CrossRef] [Green Version]

- Maicelo-Quintana, J.L. Sustainability Indicators in Soil Function and Carbon Sequestration in the Biomass of Ceroxylon peruvianum Galeano, Sanin and Mejía from the Middle Utcubamba River Basin, Amazonas Peru. Ecol. Apl. 2012, 11, 33. [Google Scholar] [CrossRef] [Green Version]

- Rimachi, Y.; Oliva, M. Evaluación de La Regeneración Natural de Palmeras Ceroxylon parvifrons En El Bosque Andino Amazónico de Molinopampa, Amazonas. Rev. Investig. Agroproducción Sustentable 2018, 2, 42. [Google Scholar] [CrossRef]

- García-Pérez, A.; Rubio Rojas, K.B.; Meléndez Mori, J.B.; Corroto, F.; Rascón, J.; Oliva, M. Estudio Ecológico de Los Bosques Homogéneos En El Distrito de Molinopampa, Región Amazonas. Rev. Investig. Agroproducción Sustentable 2018, 2, 73. [Google Scholar] [CrossRef]

- Oliva, M.; Rimachi, Y. Selección Fenotípica de Árboles plus de Tres Especies Forestales Maderables En Poblaciones Naturales En El Distrito de Molinopampa (Amazonas). Rev. Investig. Agroproducción Sustentable 2017, 1, 36. [Google Scholar] [CrossRef]

- Oliva, M.; Pérez, D.; Vela, S. Priorización de Especies Forestales Nativas Como Fuentes Semilleros Del Proyecto PD 622/11 En Molinopampa, Amazonas, Perú; IIAP: Loreto, Peru, 2011. [Google Scholar]

- Zabaleta-Santisteban, J.A.; López, R.S.; Rojas-Briceño, N.B.; Fernández, D.G.; Medina Medina, A.J.; Tuesta Trauco, K.M.; Rivera Fernandez, A.S.; Crisóstomo, J.L.; Oliva-Cruz, M.; Silva-López, J.O. Optimizing Landfill Site Selection Using Fuzzy-AHP and GIS for Sustainable Urban Planning. Civ. Eng. J. 2024, 10, 1698–1719. [Google Scholar] [CrossRef]

- Bernal, R.; Sanín, M.J.; Galeano, G. Plan de Conservación, Manejo y Uso Sostenible de la Palma de Cera del Quindío (Ceroxylon quindiuense), Árbol Nacional de Colombia; Ministerio del Ambiente y Desarrollo Sostenible: Bogota, Colombia, 2015; ISBN 9789588901039.

- Taddia, Y.; Stecchi, F.; Pellegrinelli, A. Using Dji Phantom 4 Rtk Drone for Topographic Mapping of Coastal Areas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. ISPRS Arch. 2019, 42, 625–630. [Google Scholar] [CrossRef]

- Gibril, M.B.A.; Shafri, H.Z.M.; Al-Ruzouq, R.; Shanableh, A.; Nahas, F.; Al Mansoori, S. Large-Scale Date Palm Tree Segmentation from Multiscale UAV-Based and Aerial Images Using Deep Vision Transformers. Drones 2023, 7, 93. [Google Scholar] [CrossRef]

- Ariyadi, M.R.N.; Pribadi, M.R.; Widiyanto, E.P. Unmanned Aerial Vehicle for Remote Sensing Detection of Oil Palm Trees Using You Only Look Once and Convolutional Neural Network. In Proceedings of the 10th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Palembang, Indonesia, 20–21 September 2023; pp. 226–230. [Google Scholar] [CrossRef]

- Tinkham, W.T.; Swayze, N.C. Influence of Agisoft Metashape Parameters on Uas Structure from Motion Individual Tree Detection from Canopy Height Models. Forests 2021, 12, 250. [Google Scholar] [CrossRef]

- Shen, L.; Lang, B.; Song, Z. DS-YOLOv8-Based Object Detection Method for Remote Sensing Images. IEEE Access 2023, 11, 125122–125137. [Google Scholar] [CrossRef]

- Shaikh, I.M.; Akhtar, M.N.; Aabid, A.; Ahmed, O.S. Enhancing Sustainability in the Production of Palm Oil: Creative Monitoring Methods Using YOLOv7 and YOLOv8 for Effective Plantation Management. Biotechnol. Rep. 2024, 44, e00853. [Google Scholar] [CrossRef]

- YOLOv8—Ultralytics YOLO Documentos. Available online: https://docs.ultralytics.com/es/models/yolov8/#overview (accessed on 18 December 2024).

- Wada, K. Labelme: Image Polygonal Annotation with Python. Available online: https://github.com/wkentaro/labelme (accessed on 18 December 2024).

- Wardana, D.P.T.; Sianturi, R.S.; Fatwa, R. Detection of Oil Palm Trees Using Deep Learning Method with High-Resolution Aerial Image Data. In Proceedings of the 8th International Conference on Sustainable Information Engineering and Technology (SIET ‘23), Bali, Indonesia, 24–25 October 2023; Association for Computing Machinery: New York, NY, USA; pp. 90–98. [Google Scholar] [CrossRef]

- Putra, Y.C.; Wijayanto, A.W. Automatic Detection and Counting of Oil Palm Trees Using Remote Sensing and Object-Based Deep Learning. Remote Sens. Appl. Soc. Environ. 2023, 29, 100914. [Google Scholar] [CrossRef]

- Bounding Boxes Augmentation for Object Detection—Albumentations Documentation. Available online: https://albumentations.ai/docs/examples/example-bboxes/ (accessed on 22 June 2025).

- Zhorif, N.N.; Anandyto, R.K.; Rusyadi, A.U.; Irwansyah, E. Implementation of Slicing Aided Hyper Inference (SAHI) in YOLOv8 to Counting Oil Palm Trees Using High-Resolution Aerial Imagery Data. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 869–874. [Google Scholar] [CrossRef]

- Inicio—Ultralytics YOLO Docs. Available online: https://docs.ultralytics.com/es#yolo-a-brief-history (accessed on 18 December 2024).

- UbiAI. Why YOLO v7 Is Better than CNNs. Available online: https://ubiai.tools/why-yolov7-is-better-than-cnns/ (accessed on 13 June 2025).

- Sharma, A.; Kumar, V.; Longchamps, L. Comparative Performance of YOLOv8, YOLOv9, YOLOv10, YOLOv11 and Faster R-CNN Models for Detection of Multiple Weed Species. Smart Agric. Technol. 2024, 9, 100648. [Google Scholar] [CrossRef]

- Vilcapoma, P.; Meléndez, D.P.; Fernández, A.; Vásconez, I.N.; Hillmann, N.C.; Gatica, G.; Vásconez, J.P. Angle Detection in Dental Panoramic X-Rays. Sensors 2024, 24, 6053. [Google Scholar] [CrossRef]

- Ayturan, K.; Sarıkamış, B.; Akşahin, M.F.; Kutbay, U. SPHERE: Benchmarking YOLO vs. CNN on a Novel Dataset for High-Accuracy Solar Panel Defect Detection in Renewable Energy Systems. Appl. Sci. 2025, 15, 4880. [Google Scholar] [CrossRef]

- Wang, X.; Zhang, C.; Qiang, Z.; Liu, C.; Wei, X.; Cheng, F. A Coffee Plant Counting Method Based on Dual-Channel NMS and YOLOv9 Leveraging UAV Multispectral Imaging. Remote Sens. 2024, 16, 3810. [Google Scholar] [CrossRef]

- Wu, J.; Xu, W.; He, J.; Lan, M. YOLO for Penguin Detection and Counting Based on Remote Sensing Images. Remote Sens. 2023, 15, 2598. [Google Scholar] [CrossRef]

- Yu, C.; Yin, H.; Rong, C.; Zhao, J.; Liang, X.; Li, R.; Mo, X. YOLO-MRS: An Efficient Deep Learning-Based Maritime Object Detection Method for Unmanned Surface Vehicles. Appl. Ocean Res. 2024, 153, 104240. [Google Scholar] [CrossRef]

- Chen, Z.; Cao, L.; Wang, Q. YOLOv5-Based Vehicle Detection Method for High-Resolution UAV Images. Mob. Inf. Syst. 2022, 2022, 11. [Google Scholar] [CrossRef]

- Nurhabib, I.; Seminar, K.B.; Sudradjat. Recognition and Counting of Oil Palm Tree with Deep Learning Using Satellite Image. IOP Conf. Ser. Earth Environ. Sci. 2022, 974, 012058. [Google Scholar] [CrossRef]

- Al-Saad, M.; Aburaed, N.; Mansoori, S.A.; Ahmad, H.A. Autonomous Palm Tree Detection from Remote Sensing Images-UAE Dataset. In Proceedings of the 2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 2191–2194. [Google Scholar] [CrossRef]

- Bakewell-Stone, P. Elaeis Guineensis (African Oil Palm). In PlantwisePlus Knowledge Bank; CABI International: Wallingford, UK, 2022. [Google Scholar] [CrossRef]

- Kurihara, J.; Koo, V.C.; Guey, C.W.; Lee, Y.P.; Abidin, H. Early Detection of Basal Stem Rot Disease in Oil Palm Tree Using Unmanned Aerial Vehicle-Based Hyperspectral Imaging. Remote Sens. 2022, 14, 799. [Google Scholar] [CrossRef]

- Sanín, M.J.; Galeano, G. A Revision of the Andean Wax Palms, Ceroxylon (Arecaceae). In Phytotaxa; Magnolia Press: Auckland, New Zealand, 2011; Volume 34, ISBN 9781869778194. [Google Scholar]

- Martínez, B.; López Camacho, R.; Castillo, L.S.; Bernal, R. Phenology of the Endangered Palm Ceroxylon quindiuense (Arecaceae) along an Altitudinal Gradient in Colombia. Rev. Biol. Trop. 2021, 69, 649–664. [Google Scholar] [CrossRef]

- Yandouzi, M.; Berrahal, M.; Grari, M.; Boukabous, M.; Moussaoui, O.; Azizi, M.; Ghoumid, K.; Elmiad, A.K. Semantic Segmentation and Thermal Imaging for Forest Fires Detection and Monitoring by Drones. Bull. Electr. Eng. Inform. 2024, 13, 2784–2796. [Google Scholar] [CrossRef]

- Sanín, M.J.; Kissling, W.D.; Bacon, C.D.; Borchsenius, F.; Galeano, G.; Svenning, J.C.; Olivera, J.; Ramírez, R.; Trénel, P.; Pintaud, J.C. The Neogene Rise of the Tropical Andes Facilitated Diversification of Wax Palms (Ceroxylon: Arecaceae) through Geographical Colonization and Climatic Niche Separation. Bot. J. Linn. Soc. 2016, 182, 303–317. [Google Scholar] [CrossRef]

- Chacón-Vargas, K.; García-Merchán, V.H.; Sanín, M.J. From Keystone Species to Conservation: Conservation Genetics of Wax Palm Ceroxylon quindiuense in the Largest Wild Populations of Colombia and Selected Neighboring Ex Situ Plant Collections. Biodivers. Conserv. 2019, 29, 283–302. [Google Scholar] [CrossRef]

- Moreira, S.L.S.; dos Santos, R.A.F.; Paes, É.d.C.; Bahia, M.L.; Cerqueira, A.E.S.; Parreira, D.S.; Imbuzeiro, H.M.A.; Fernandes, R.B.A. Carbon Accumulation in the Soil and Biomass of Macauba Palm Commercial Plantations. Biomass Bioenergy 2024, 190, 107384. [Google Scholar] [CrossRef]

- Li, G.; Li, C.; Jia, G.; Han, Z.; Huang, Y.; Hu, W. Estimating the Vertical Distribution of Biomass in Subtropical Tree Species Using an Integrated Random Forest and Least Squares Machine Learning Mode. Forests 2024, 15, 992. [Google Scholar] [CrossRef]

| Plot | Area (ha) | Characteristics | Longitude (W), WGS84 | Latitude (S), WGS84 |
|---|---|---|---|---|
| a | 4.39 | Pastures | 77°34′52.857″ | 6°14′47.948″ |
| b | 1.54 | Wooded | 77°34′20.977″ | 6°14′27.352″ |
| c | 2.45 | Pastures | 77°34′47.814″ | 6°15′2.952″ |
| d | 2.01 | Pastures | 77°34′19.681″ | 6°15′28.578″ |
| e | 3.76 | Wooded | 77°34′44.634″ | 6°16′10.013″ |
| f | 3.34 | Wooded | 77°33′25.055″ | 6°16′44.255″ |
| g | 4.16 | Wooded | 77°33′36.697″ | 6°16′10.842″ |

| Process | Parameter | Value |
|---|---|---|
| Photo orientation | Accuracy | High |
| Photo orientation | Reference preselection | Source |
| Photo orientation | Key points per photo | 40,000 |
| Photo orientation | Tie points per photo | 4500 |
| Model creation | Source data (mesh) | Depth maps |
| Model creation | Quality | Medium |
| Model creation | Interpolation | Enabled (default) |
| Model creation | Depth filtering | Moderate |
| Orthomosaic creation | Surface | Mesh |
| Orthomosaic creation | Blending mode | Mosaic (default) |

| Model | Size | Training Time (Hours/Minutes/Seconds) | Best Epoch | mAP50 |
|---|---|---|---|---|
| YOLO v8-s | 640 | 0 h 37 m 19 s | 86 | 0.881 |
| YOLO v8-s | 960 | 0 h 30 m 7 s | 28 | 0.917 |
| YOLO v8-s | 1280 | 1 h 56 m 56 s | 35 | 0.928 |
| YOLO v8-s | 1600 | 5 h 50 m 49 s | 32 | 0.929 |

| Model | Variant | Training Time (Hours/Minutes/Seconds) | Validation P | Validation R | Validation F1 Score | Validation mAP50 | Validation mAP50-95 |
|---|---|---|---|---|---|---|---|
| YOLO v8 | n | 0 h 18 m 33 s | 0.86 | 0.85 | 0.85 | 0.89 | 0.43 |
| YOLO v8 | m | 0 h 32 m 37 s | 0.86 | 0.92 | 0.89 | 0.94 | 0.48 |
| YOLO v8 | x | 3 h 55 m 34 s | 0.86 | 0.91 | 0.88 | 0.93 | 0.48 |
| YOLO v10 | n | 0 h 36 m 4 s | 0.83 | 0.83 | 0.83 | 0.89 | 0.45 |
| YOLO v10 | m | 1 h 18 m 10 s | 0.88 | 0.90 | 0.89 | 0.92 | 0.48 |
| YOLO v10 | x | 35 h 18 m 19 s | 0.87 | 0.90 | 0.89 | 0.93 | 0.50 |
| YOLO v11 | n | 0 h 33 m 45 s | 0.88 | 0.87 | 0.87 | 0.91 | 0.47 |
| YOLO v11 | m | 0 h 43 m 52 s | 0.87 | 0.91 | 0.89 | 0.93 | 0.49 |
| YOLO v11 | x | 8 h 28 m 16 s | 0.89 | 0.90 | 0.89 | 0.93 | 0.48 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sánchez-Vega, J.A.; Silva-López, J.O.; Salas Lopez, R.; Medina-Medina, A.J.; Tuesta-Trauco, K.M.; Rivera-Fernandez, A.S.; Silva-Melendez, T.B.; Oliva-Cruz, M.; Barboza, E.; da Silva Junior, C.A.; et al. Automatic Detection of Ceroxylon Palms by Deep Learning in a Protected Area in Amazonas (NW Peru). Forests 2025, 16, 1061. https://doi.org/10.3390/f16071061
