Developing Interpretable Deep Learning Model for Subtropical Forest Type Classification Using Beijing-2, Sentinel-1, and Time-Series NDVI Data of Sentinel-2

Abstract

1. Introduction

2. Materials and Methods

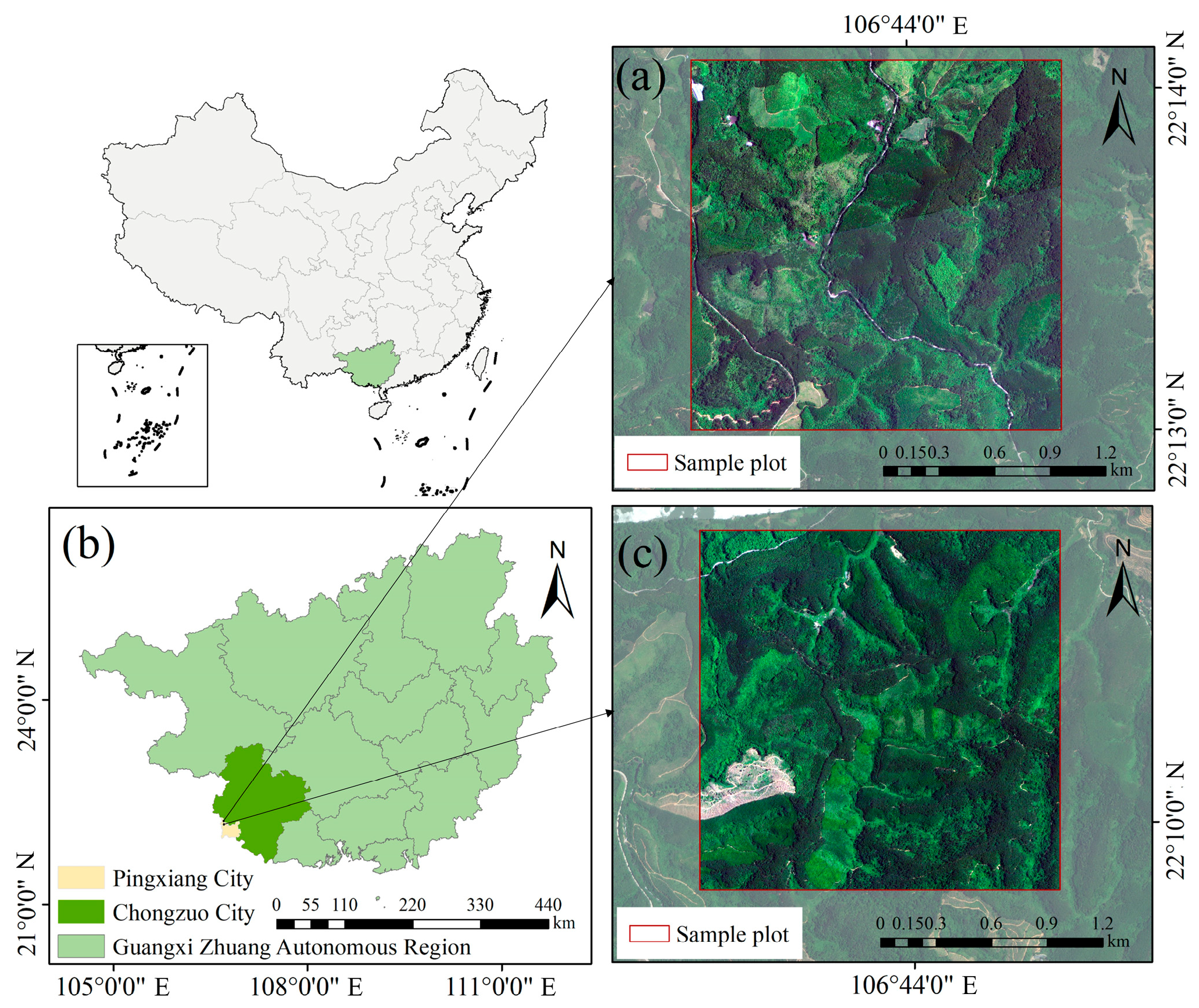

2.1. Study Area

2.2. Remote Sensing Data

2.3. Constructing the Sample Set

3. Methods

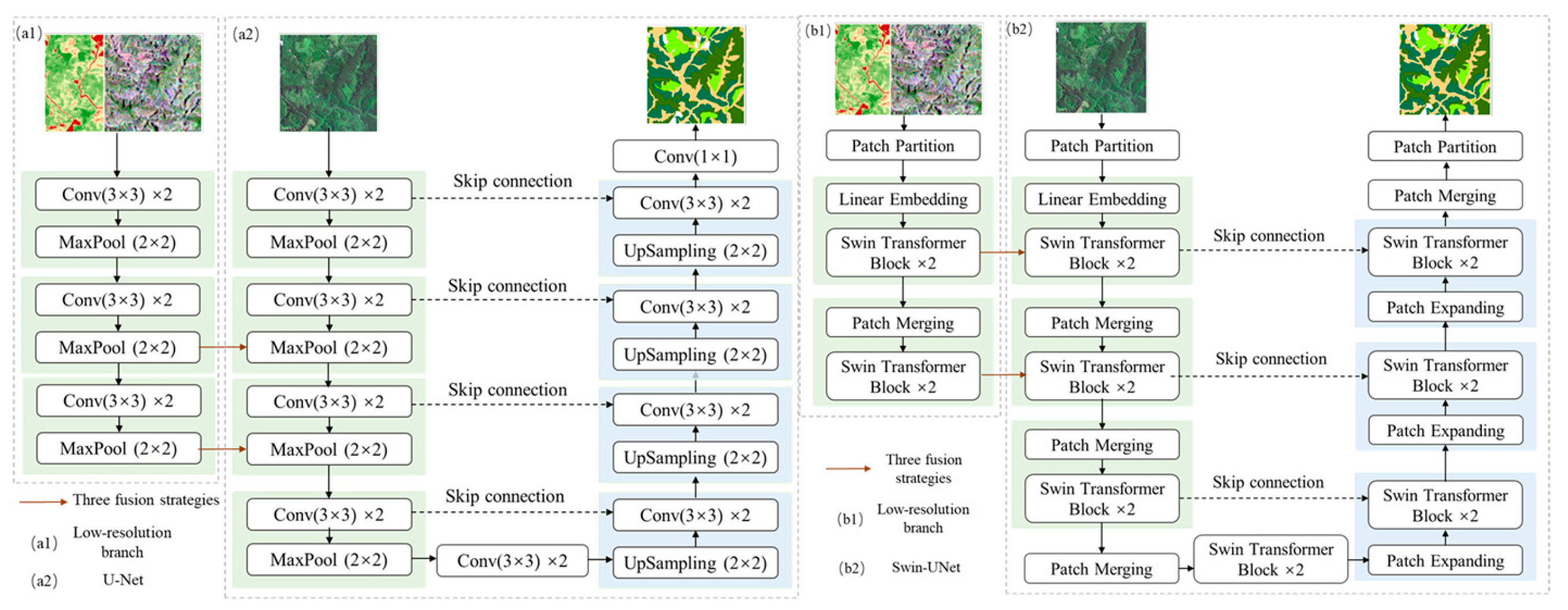

3.1. Baseline Models

3.2. Feature Fusion Strategies

3.2.1. Direct Concatenation

3.2.2. Gated Mechanism

3.2.3. Attention Mechanism

3.3. Experimental Setup and Evaluation Metrics

3.3.1. Training Configuration

3.3.2. Performance Evaluation

3.4. Model Interpretability

3.4.1. Feature Contribution

3.4.2. Spatial Visualization

3.4.3. Occlusion Sensitivity

4. Results

4.1. Overall Model Performance and Classification Accuracy

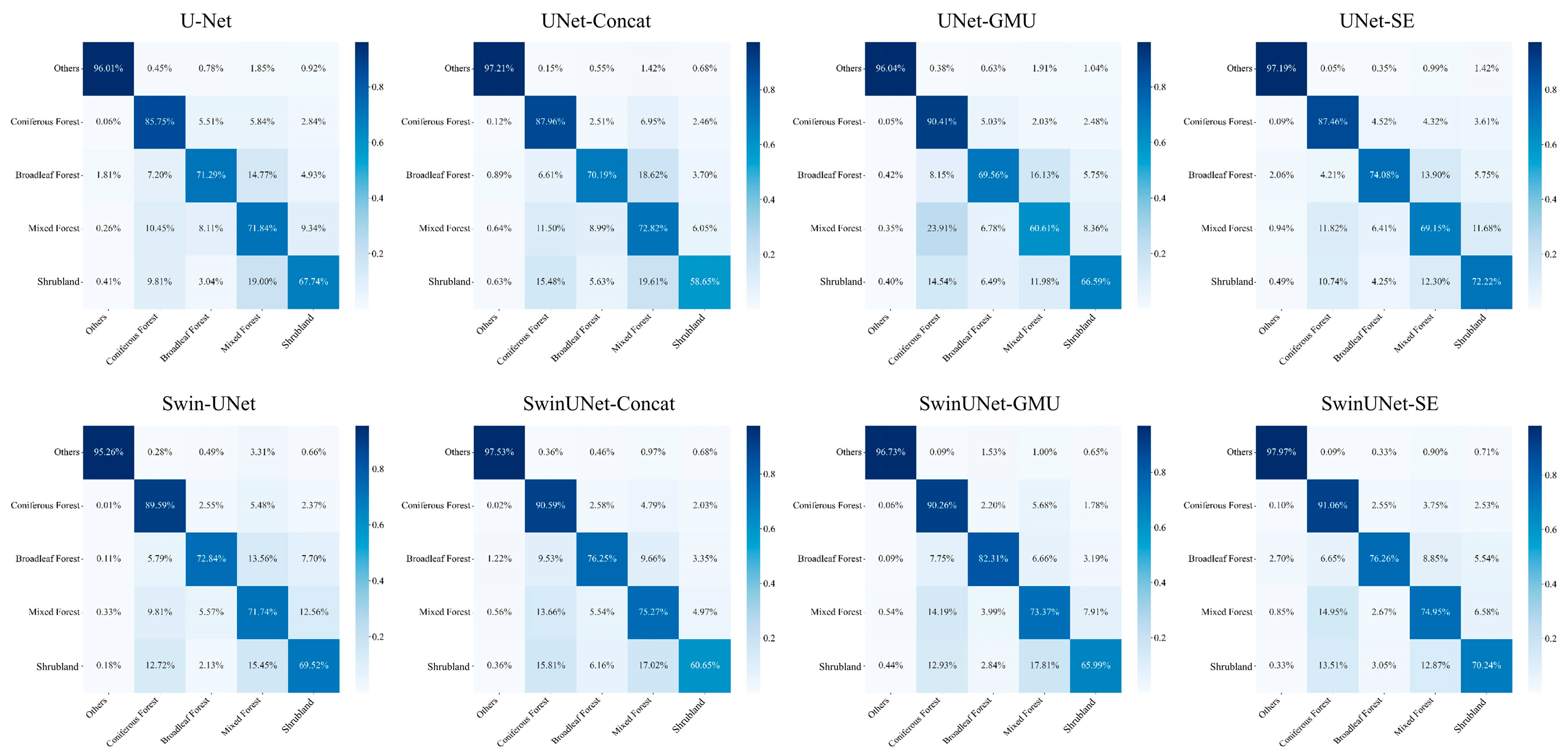

4.1.1. Comparison of Overall Performance

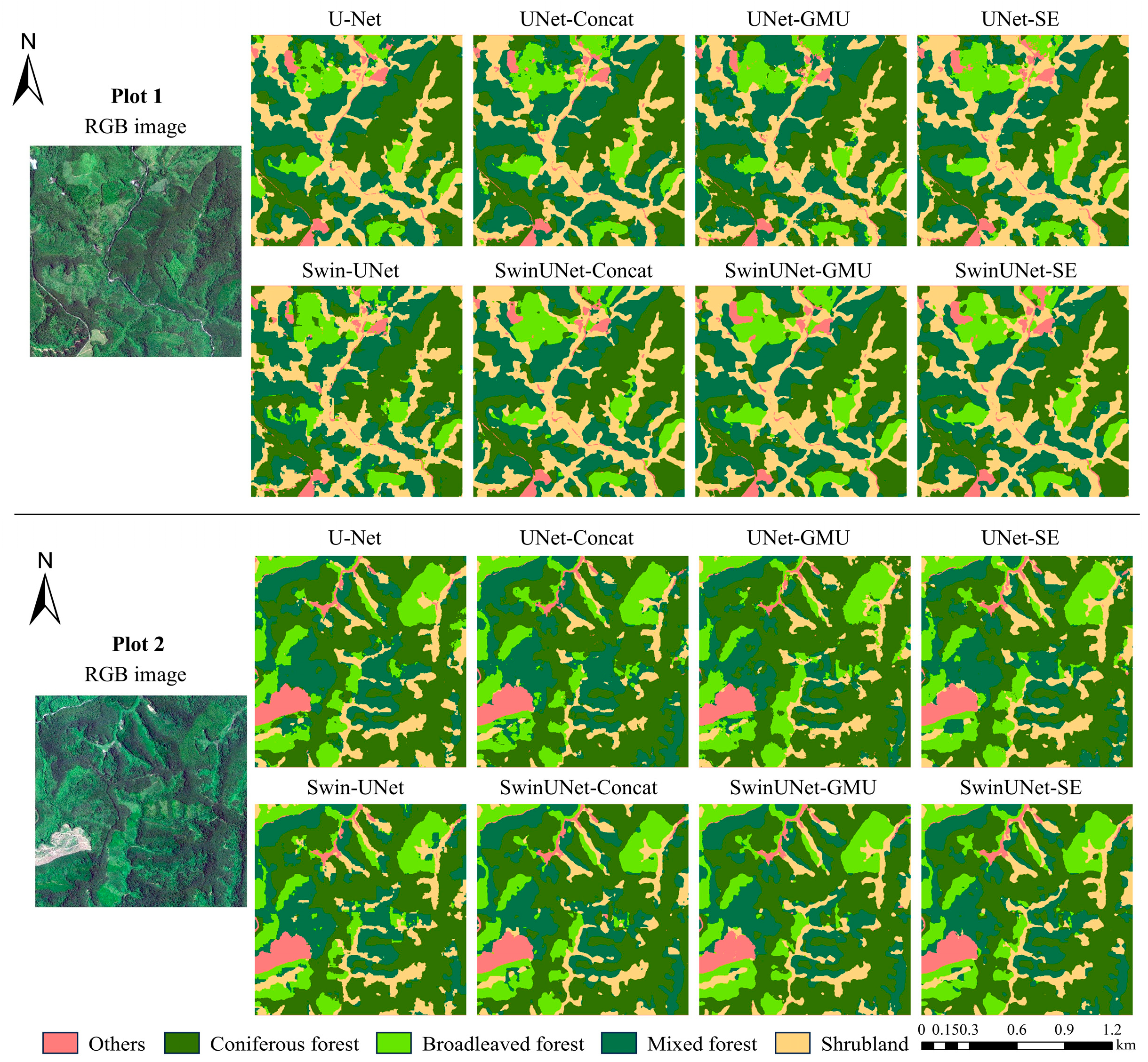

4.1.2. Classification Results

4.2. Modal Contribution Analysis

4.3. Model Interpretability Analysis

4.3.1. SHAP Analysis of Different Features

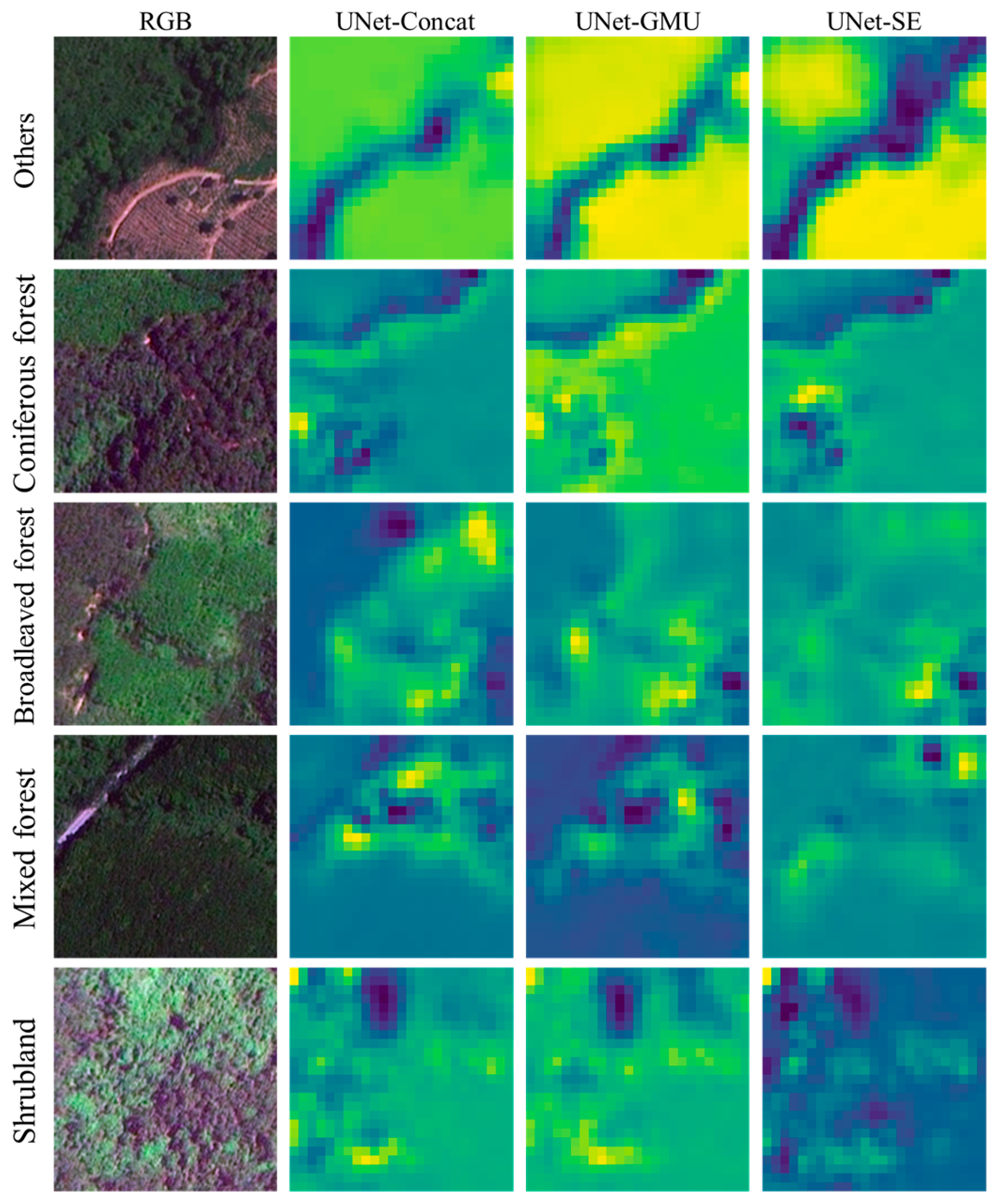

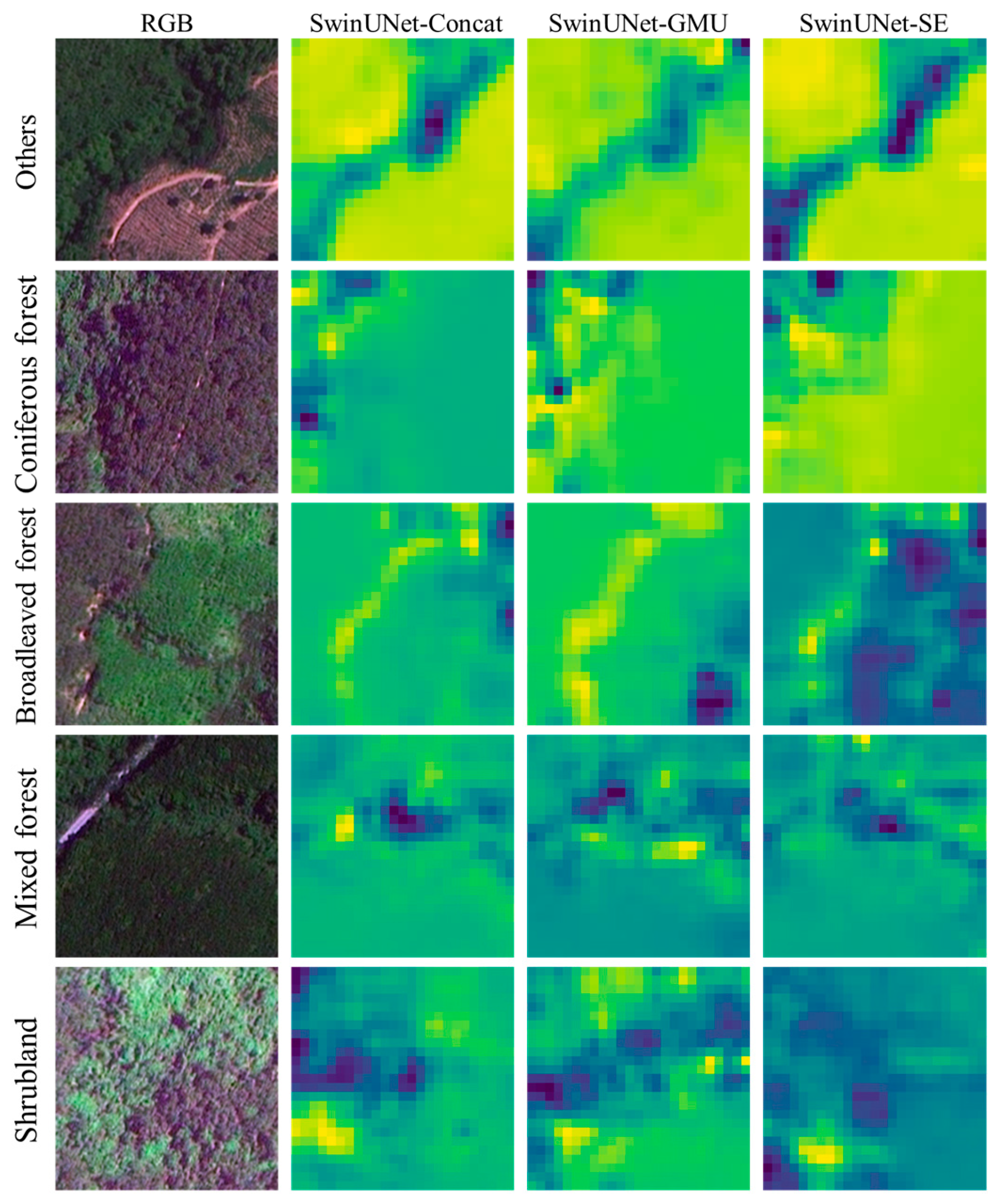

4.3.2. Visualization of Grad-CAM++ Analysis

4.3.3. Occlusion Sensitivity Analysis

5. Discussion

5.1. Analysis of Modality Complementarity

5.2. Differences Across Network Architectures

5.3. Effectiveness of Multimodal Fusion Strategies

5.4. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sample Set | Others | Coniferous Forest | Broadleaf Forest | Mixed Forest | Shrubland |
|---|---|---|---|---|---|
| Training (pixels) | 1,078,932 | 10,499,302 | 3,998,494 | 7,614,322 | 5,448,950 |
| Validation (pixels) | 294,064 | 3,736,658 | 1,076,566 | 2,276,734 | 855,978 |
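The pixel counts in the sample-set table can be turned into per-class shares to make the class imbalance explicit. The following is a minimal sketch; the counts are copied from the table, while the names `CLASSES`, `TRAIN`, `VAL`, and `class_shares` are illustrative assumptions and not part of the original study.

```python
# Pixel counts copied from the sample-set table (class order as in the header).
CLASSES = ["Others", "Coniferous Forest", "Broadleaf Forest",
           "Mixed Forest", "Shrubland"]
TRAIN = [1_078_932, 10_499_302, 3_998_494, 7_614_322, 5_448_950]
VAL = [294_064, 3_736_658, 1_076_566, 2_276_734, 855_978]


def class_shares(counts):
    """Return each class's fraction of the total pixel count."""
    total = sum(counts)
    return [c / total for c in counts]


if __name__ == "__main__":
    print(f"training pixels:   {sum(TRAIN):,}")
    print(f"validation pixels: {sum(VAL):,}")
    for name, tr, va in zip(CLASSES, class_shares(TRAIN), class_shares(VAL)):
        print(f"{name:<18} train {tr:6.2%}   val {va:6.2%}")
```

Under these counts the "Others" class is the smallest in both sets and coniferous forest the largest, which is worth keeping in mind when reading per-class and mean metrics.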

| Model | Params (M) | FLOPs (G) | FPS |
|---|---|---|---|
| U-Net | 17.26 | 24.48 | 179.41 |
| UNet-Concat | 18.58 | 25.18 | 160.3 |
| UNet-GMU | 19.38 | 28.38 | 132.53 |
| UNet-SE | 19.33 | 28.13 | 148.71 |
| Swin-UNet | 21.99 | 5.24 | 184.89 |
| SwinUNet-Concat | 25.57 | 4.53 | 116.2 |
| SwinUNet-GMU | 25.74 | 4.70 | 105.8 |
| SwinUNet-SE | 25.71 | 4.66 | 106.57 |

| Model | RGB+NDVI+SAR: OA | RGB+NDVI+SAR: MIoU | RGB+NDVI+SAR: Mean F1 | RGB+NDVI: OA | RGB+NDVI: MIoU | RGB+NDVI: Mean F1 | RGB+SAR: OA | RGB+SAR: MIoU | RGB+SAR: Mean F1 |
|---|---|---|---|---|---|---|---|---|---|
| UNet-Concat | 78.74% | 0.6398 | 0.7689 | 50.50% | 0.2834 | 0.3476 | 66.84% | 0.3423 | 0.4620 |
| UNet-GMU | 77.18% | 0.6324 | 0.7624 | 55.11% | 0.3165 | 0.4269 | 72.35% | 0.4908 | 0.6447 |
| UNet-SE | 79.42% | 0.6452 | 0.7757 | 60.42% | 0.3735 | 0.5241 | 70.84% | 0.4238 | 0.5724 |
| SwinUNet-Concat | 81.62% | 0.6790 | 0.8001 | 79.08% | 0.6440 | 0.7726 | 65.70% | 0.3069 | 0.4172 |
| SwinUNet-GMU | 82.27% | 0.7009 | 0.8159 | 81.68% | 0.6841 | 0.8048 | 67.11% | 0.3249 | 0.4359 |
| SwinUNet-SE | 82.76% | 0.6885 | 0.8102 | 81.25% | 0.6578 | 0.7883 | 66.65% | 0.3679 | 0.5066 |
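For readers unfamiliar with the three metrics reported in this table, the sketch below shows how OA, MIoU, and Mean F1 are commonly derived from a pixel-level confusion matrix. It assumes the standard definitions with unweighted (macro) averaging over classes; the exact averaging used in the study is not stated here, and the function name `segmentation_metrics` and the toy matrix are illustrative only.

```python
def segmentation_metrics(conf):
    """Compute (OA, MIoU, Mean F1) from a square confusion matrix.

    conf[i][j] is the number of pixels whose true class is i and whose
    predicted class is j. Assumes every class occurs at least once in the
    reference or the prediction; otherwise a division by zero is raised.
    """
    n = len(conf)
    total = sum(sum(row) for row in conf)
    tp = [conf[i][i] for i in range(n)]                                # correct pixels per class
    fn = [sum(conf[i]) - tp[i] for i in range(n)]                      # missed pixels per class
    fp = [sum(conf[r][i] for r in range(n)) - tp[i] for i in range(n)]  # false alarms per class

    oa = sum(tp) / total                                               # overall accuracy
    ious = [tp[i] / (tp[i] + fp[i] + fn[i]) for i in range(n)]         # per-class IoU
    f1s = [2 * tp[i] / (2 * tp[i] + fp[i] + fn[i]) for i in range(n)]  # per-class F1
    return oa, sum(ious) / n, sum(f1s) / n


if __name__ == "__main__":
    # Toy two-class example, not data from the study.
    toy = [[8, 2],
           [1, 9]]
    oa, miou, mean_f1 = segmentation_metrics(toy)
    print(f"OA={oa:.2%}  MIoU={miou:.4f}  Mean F1={mean_f1:.4f}")
```

Because MIoU and Mean F1 average over classes without weighting by pixel count, a poorly classified minority class lowers them far more than it lowers OA, which is consistent with the large gaps between OA and MIoU in some columns of the table.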

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, S.; Wang, X.; Shi, M.; Tao, G.; Qiao, S.; Chen, Z. Developing Interpretable Deep Learning Model for Subtropical Forest Type Classification Using Beijing-2, Sentinel-1, and Time-Series NDVI Data of Sentinel-2. Forests 2025, 16, 1709. https://doi.org/10.3390/f16111709
