1. Introduction

Particleboard is an engineered wood product manufactured by processing natural wood, branch materials, or other cellulose-containing substances through chipping, drying, adhesive bonding, and hot pressing [

1,

2,

3]. Due to its high resource utilization, structural strength, sound insulation properties, and impact resistance, particleboard is extensively used in furniture manufacturing, packaging, and architectural decoration industries [

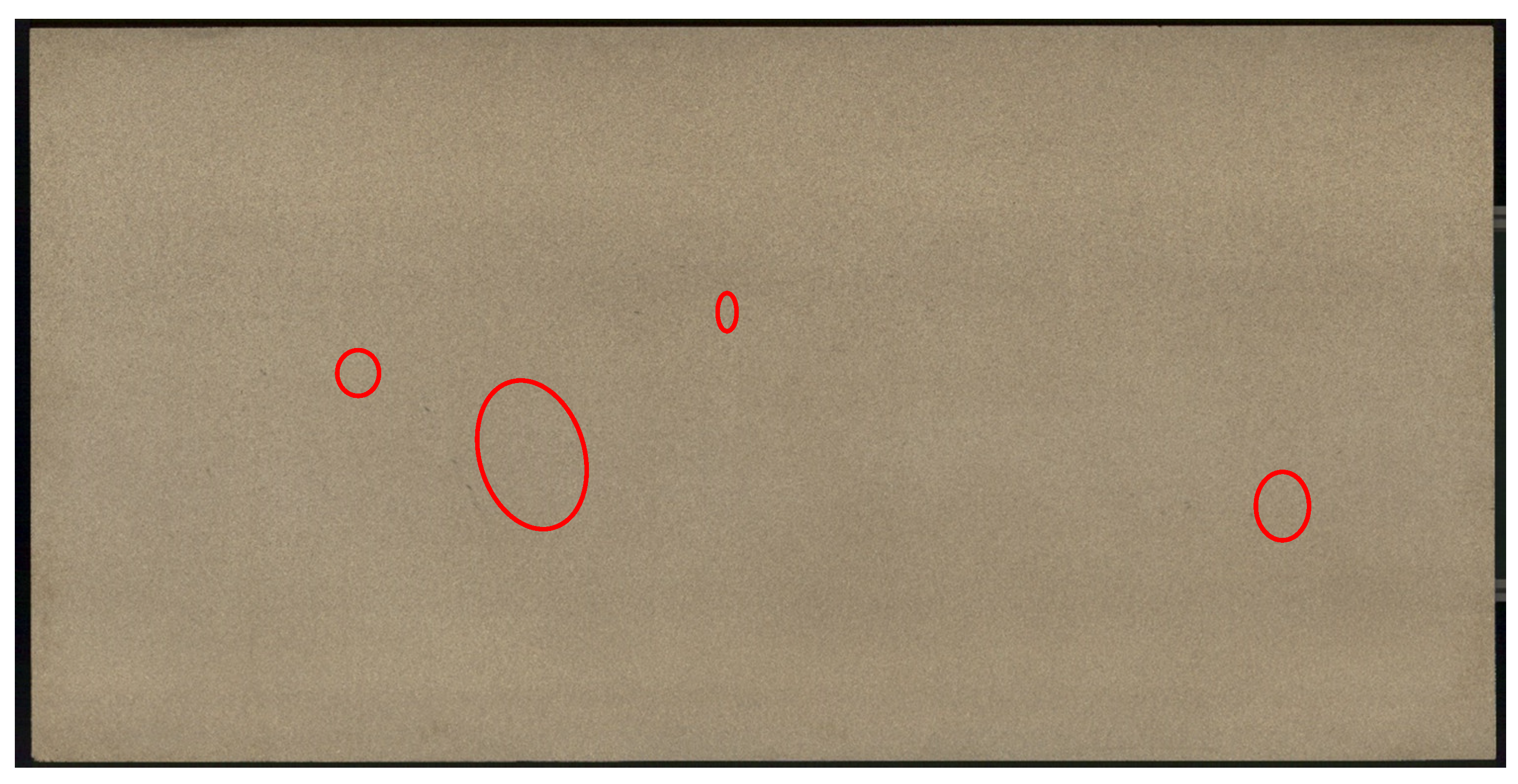

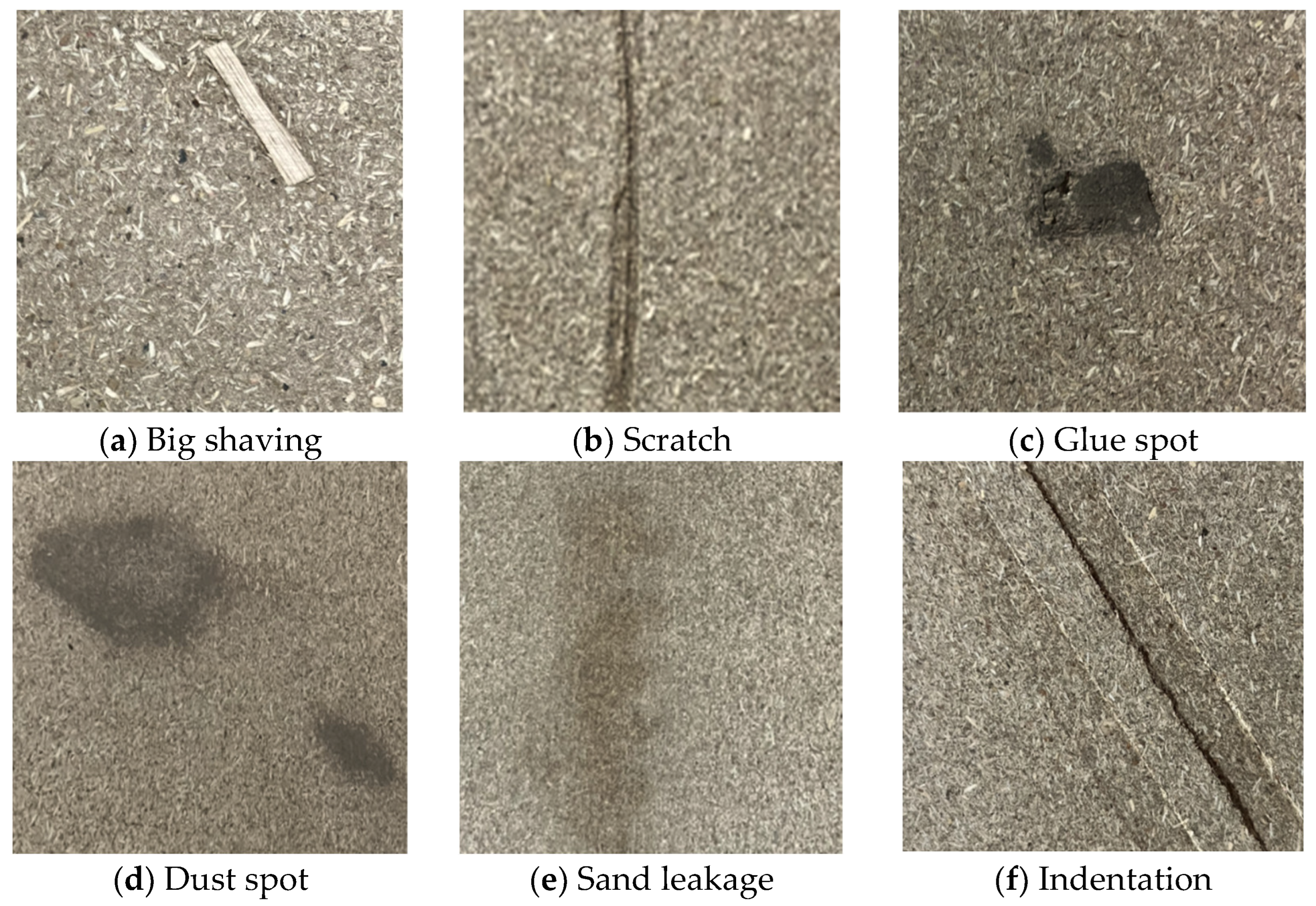

4]. During production, surface defects can compromise board quality and hinder subsequent lamination processes. Quality control oversights can lead to economic losses and, more seriously, damage to corporate reputation. Currently, surface quality inspection of domestic and international particleboard production lines primarily relies on manual visual inspection, where workers visually identify defects and grade their severity. However, prolonged inspection work causes visual fatigue among workers, resulting in high miss and false detection rates while also affecting production efficiency. Therefore, developing intelligent surface defect detection and classification systems is crucial for improving particleboard product quality and production efficiency in China.

Machine vision technology, which replaces human visual inspection with automated detection and measurement, offers high efficiency and speed. Researchers have successfully applied this technology in agriculture, aerospace, and other fields, and it is gradually being adopted in wood-based panel manufacturing [5,6]. Guo Hui et al. [7] developed a particleboard defect detection algorithm using a gray-level co-occurrence matrix and hierarchical clustering. They tested 600 images (512 × 512 pixels) containing five defect types: debris, oil stains, glue spots, big shavings, and soft spots. The algorithm achieved 92.2% precision and 91.8% recall, with a 5% false detection rate for normal boards. The miss rates for glue spots, oil stains, and soft spots were 1.6%, 4.0%, and 6.6%, respectively, and the average processing time was 0.867 s per image.

With the advancement of neural networks, particleboard surface inspection has evolved from traditional machine learning to deep learning. Zhang et al. [8] proposed a dual attention mechanism with dense connection (DC-DACB), combining Ganomaly and ResNet networks to detect five defect types (shavings, scratches, chalk marks, soft spots, and adhesive spots), achieving 93.1% accuracy. To improve real-time detection, Zhao et al. [9] modified YOLOv5 by incorporating gamma-ray transformation and image differentiation methods to correct uneven illumination. They added Ghost Bottleneck lightweight deep convolution modules in the backbone block and replaced Conv with depth-wise convolution (DWConv) in the Neck module to compress network parameters. Testing on five defect types (big shavings, sand leakages, glue spots, soft spots, and oil pollution) achieved a 91.22% recall and a 94.5% F1-score, with oil pollution accuracy at 81.7% and a single-image detection time of 0.031 s. These results indicate that the detection accuracy of existing methods for specific defects, such as big shaving and glue spot, still needs improvement.

Because particleboard has a large surface area and its defects vary in size and shape while sharing similar characteristics, smaller defects are prone to missed or false detection. Small defects typically have low pixel resolution and poor visual quality, and traditional CNN methods struggle to process details and textures in large-scale images. Image super-resolution reconstruction technology can overcome existing hardware limitations by enhancing the feature information of small defects in particleboard images, improving surface image quality and defect clarity and thereby increasing detection accuracy.

Image super-resolution technology is an image processing method that aims to reconstruct high-resolution images from low-resolution images [10,11]. Liang et al. [12] designed a model called SwinIR, which is based on the Transformer architecture and utilizes a self-attention reconstruction mechanism to extract shallow features, mine deep features, and ultimately reconstruct images. Extensive experimental comparisons showed that the restored image quality surpassed that of CNN models by 0.14~0.45 dB in PSNR, with over 60% reduction in model parameters, showing significant research potential, though model stability still needs improvement. Deeba et al. [13] developed a modified wide residual network by increasing network width and reducing depth, which was validated on public remote sensing datasets. Compared to the EDSR and SRResNet networks, it reduced memory usage by 21% and 34%, respectively, improving training loss efficiency. Huang et al. [14] introduced the 2 structure incorporating directional operators and multi-scale fusion to enhance medical radiographic image reconstruction, achieving excellent performance in the PSNR, SSIM, NIQE, and PI metrics. Our team member Yu Wei [15] proposed the SRDAGAN model for particleboard surface image super-resolution reconstruction. Evaluated using the PSNR, SSIM, and LPIPS metrics, it produced clearer textures and more authentic feature expression in reconstructed images. However, this technology has not yet been applied in defect detection models.

This paper employs machine vision technology and artificial intelligence algorithms for particleboard surface defect detection. For defects with small areas and unclear contours, super-resolution technology is introduced before defect detection to obtain higher-quality particleboard surface images with enhanced details. The system implements intelligent particleboard surface defect recognition based on super-resolution reconstruction, reducing hardware costs while improving detection accuracy and efficiency. This has significant implications for promoting the transformation and upgrading of the particleboard industry and for making its equipment more intelligent. The main contribution of this paper is the establishment of a large-scale veneer image acquisition system based on machine vision. The acquired images were processed using an improved Transformer algorithm for image super-resolution, and the processed images were then used to verify defect recognition accuracy with the YOLOv8 algorithm.

3. Results

In this study, all super-resolution reconstruction model training, validation, and testing were conducted under identical hardware and software conditions. The experiments used Python 3.10, the PyTorch 2.2 deep learning framework with CUDA 11.8, the BasicSR 1.4 image super-resolution framework, and the Ultralytics 8.3 object detection library. Code was edited in VS Code.

During training, we used the Adam optimizer with an initial learning rate of 1 × 10⁻⁴. The entire training process covered a total of 500,000 epochs, and the learning rate was halved at epochs 250,000, 400,000, 450,000, and 475,000. The feature weight parameter was set to 1, with 180 feature maps and a batch size of 4.
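The step schedule described above can be written as a small function. This is a minimal sketch, assuming the rate is halved at the moment each milestone is reached; the names `BASE_LR`, `MILESTONES`, `DECAY`, and `learning_rate` are hypothetical, and this is not the training configuration used in the experiments.

```python
from bisect import bisect_right

# Values taken from the text; the names themselves are illustrative.
BASE_LR = 1e-4
MILESTONES = (250_000, 400_000, 450_000, 475_000)
DECAY = 0.5  # "halved" at each milestone


def learning_rate(step: int) -> float:
    """Return the learning rate in effect at a given training step.

    Assumption: the decay applies as soon as `step` equals a milestone.
    """
    milestones_passed = bisect_right(MILESTONES, step)
    return BASE_LR * DECAY ** milestones_passed


if __name__ == "__main__":
    for step in (0, 250_000, 400_000, 450_000, 475_000):
        print(step, learning_rate(step))
```

In PyTorch, `torch.optim.lr_scheduler.MultiStepLR` with `milestones=[250000, 400000, 450000, 475000]` and `gamma=0.5` expresses the same kind of schedule.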

Upon inputting low-resolution particleboard images into the trained model, super-resolution reconstructed images and the corresponding evaluation metrics were obtained. The detailed metrics are presented in Table 2. The results demonstrate that our algorithm achieved notable improvements across all metrics. Compared to the traditional bicubic interpolation method (BICUBIC), ESRGAN improved the PSNR by 4.88 dB and the SSIM by 0.1629 and reduced the LPIPS by 0.2548, indicating that deep learning-based super-resolution is effective for particleboard image reconstruction.
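For reference, PSNR (higher is better) is derived from the mean squared error between the reconstructed image and the ground-truth high-resolution image. The following is a minimal sketch, assuming 8-bit pixel values flattened into plain Python sequences; the function name `psnr` and the toy pixel values are illustrative and are not taken from the experiments.

```python
import math


def psnr(reference, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(reconstructed):
        raise ValueError("images must contain the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - s) ** 2 for r, s in zip(reference, reconstructed)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    # Hypothetical 8-pixel example, for illustration only.
    high_res = [52, 55, 61, 59, 79, 61, 76, 61]
    restored = [53, 55, 60, 59, 78, 62, 76, 60]
    print(f"PSNR: {psnr(high_res, restored):.2f} dB")
```

Because PSNR is logarithmic in the error, a gain of a few dB corresponds to a multiplicative reduction in mean squared error rather than an additive one.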

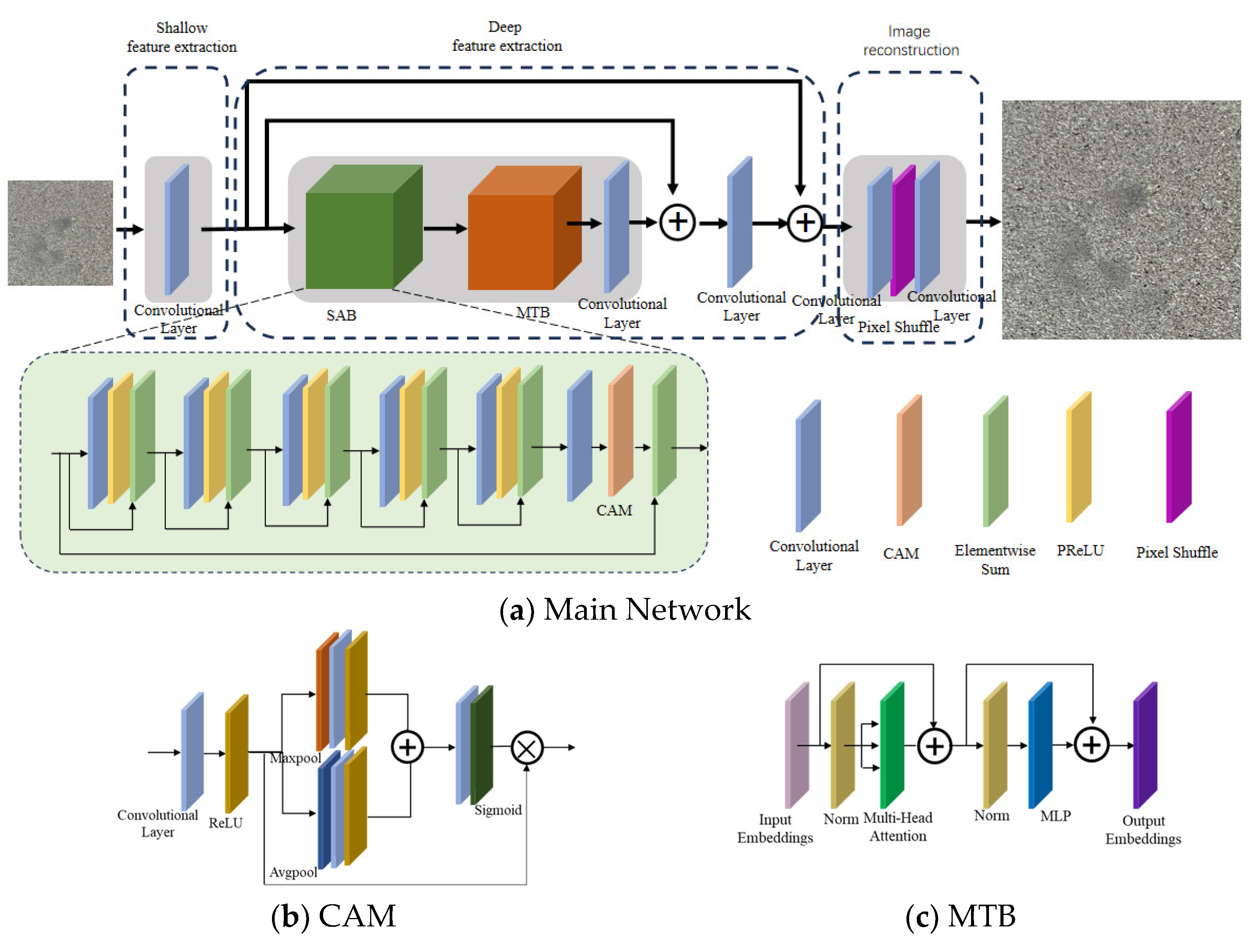

Compared to ESRGAN, SwinIR improved the PSNR by 7.32 dB and the SSIM by 0.0833, but increased the LPIPS by 0.1249. This indicates that the Transformer-based SwinIR method achieves higher pixel-level fidelity than the CNN-based ESRGAN in particleboard image reconstruction. SwinIR's fusion of shallow and deep features performs well on metrics related to image noise, brightness, contrast, and structural similarity, with super-resolution pixel values closely matching the high-resolution ones. However, the 0.1249 increase in LPIPS indicates poorer perceptual quality: the images have high pixel resolution but over-smoothed, unclear defect edges and textures.

Our improved Transformer-based network, designed to produce high-quality images with clear details and edge information, showed slight improvements over SwinIR in the PSNR and SSIM metrics while reducing the LPIPS by 0.1317. This significant improvement in the visual perception metric is attributed to the comprehensive loss function incorporating perceptual loss, which yields visually clearer defect edges and more refined texture details.
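As a hedged illustration of how a loss function can incorporate a perceptual term, one common formulation adds a feature-space distance to a pixel-wise distance. The form below is an assumption for illustration only: the symbols $\lambda$ (a weighting factor), $\phi$ (features of a fixed pretrained network), $I_{SR}$, and $I_{HR}$ are introduced here and may differ from the exact terms and weights used in this work.

$$
\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{pix}} + \lambda\,\mathcal{L}_{\text{percep}},
\qquad
\mathcal{L}_{\text{pix}} = \lVert I_{SR} - I_{HR} \rVert_1,
\qquad
\mathcal{L}_{\text{percep}} = \lVert \phi(I_{SR}) - \phi(I_{HR}) \rVert_1
$$

In a formulation of this kind, the pixel term drives PSNR and SSIM, while the perceptual term penalizes differences in texture and edge features, which is the behavior an LPIPS reduction reflects.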

Figure 5 shows the comparison of reconstructed image results from different algorithms. As observed, deep learning-based methods produce better-quality images compared to traditional methods. Both our network and the ESRGAN network reconstruct high-resolution images with visually clear edge information. However, ESRGAN produces artifacts that appear as a film over the image, resulting in poor visual perception. While the SwinIR network produces images with high resolution, the texture is overly smoothed, which is disadvantageous for defect detection in particleboard images with high noise levels.

Our network effectively captures both deep and shallow information through the combined use of Transformer and residual network structures. By fusing this information, it obtains rich detail and texture features. Additionally, the use of a comprehensive loss function aimed at achieving both high pixel resolution and high visual perception results in high-resolution images with distinct defect edges and clear texture details, which is beneficial for improving particleboard surface defect recognition rates.

4. Discussion

The purpose of super-resolution reconstruction of particleboard images is to improve surface defect recognition rates. The YOLO network offers high detection accuracy and fast detection speed and effectively avoids background misidentification, showing good performance in particleboard surface defect detection. Therefore, this paper uses the YOLO network to validate defect recognition on super-resolution images.

The low-resolution particleboard images from the test set and their corresponding super-resolution reconstructions were each input into the pre-trained YOLOv8s model, and the P-R curves for the two image sets were obtained, as shown in Figure 6. Using low-resolution particleboard images, defect detection achieved a mAP of 70.9%, with Average Precision values of 0.592, 0.596, 0.739, 0.554, 0.918, and 0.856 for big shaving, dust spot, glue spot, scratch, sand leakage, and indentation, respectively. The average detection time per image was 3.6 ms.

In comparison, defect detection using super-resolution particleboard images achieved a mAP of 96.5%, an improvement of 25.6 percentage points. The Average Precision values for big shaving, dust spot, indentation, scratch, glue spot, and sand leakage were 0.936, 0.982, 0.954, 0.927, 0.995, and 0.993, respectively. The average detection time per image was 11.5 ms.
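The reported mAP values can be cross-checked against the per-class Average Precision values. The sketch below assumes that mAP is the unweighted mean over the six defect classes; the variable and function names are illustrative.

```python
# Per-class Average Precision values as reported in the text.
ap_low_res = {
    "big shaving": 0.592,
    "dust spot": 0.596,
    "glue spot": 0.739,
    "scratch": 0.554,
    "sand leakage": 0.918,
    "indentation": 0.856,
}
ap_super_res = {
    "big shaving": 0.936,
    "dust spot": 0.982,
    "indentation": 0.954,
    "scratch": 0.927,
    "glue spot": 0.995,
    "sand leakage": 0.993,
}


def mean_ap(ap_by_class):
    """Unweighted mean of per-class AP (assumed definition of mAP here)."""
    return sum(ap_by_class.values()) / len(ap_by_class)


map_low_res = mean_ap(ap_low_res)
map_super_res = mean_ap(ap_super_res)

if __name__ == "__main__":
    print(f"low-resolution mAP:   {map_low_res:.4f}")
    print(f"super-resolution mAP: {map_super_res:.4f}")
    for name in ap_low_res:
        print(f"{name:<13} AP gain: {ap_super_res[name] - ap_low_res[name]:+.3f}")
```

Under that assumption, the means come out at roughly 0.709 and 0.9645, in line with the reported 70.9% and 96.5%.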

Comparative analysis shows that defects such as big shaving, dust spot, and scratch have color and texture features extremely similar to the particleboard background. In low-resolution images their texture contours were unclear, which led to missed detections by the YOLOv8s model. After our model was introduced, the improved image resolution, more authentic expression of detail features, and more distinct texture contours improved recognition accuracy across all defect categories when using YOLOv8s for detection.

The detection confusion matrices for low-resolution and super-resolution particleboard images are shown in Figure 7. For low-resolution images in Figure 7a, the model’s recall rates for the six defect types (big shaving, dust spot, indentation, scratch, glue spot, and sand leakage) were 0.61, 0.57, 0.83, 0.54, 0.72, and 0.90, respectively. The confusion matrix shows that defects are most easily confused with the particleboard background. Among defect types, dust spots are often confused with glue spots, and glue spots with indentations, due to unclear defect textures in low-resolution images.

For super-resolution images in Figure 7b, the recall rates improved to 0.77, 0.93, 0.95, 0.92, 1.00, and 0.99, respectively. Comparative analysis shows that confusion between different defect types almost disappeared after super-resolution reconstruction, with the remaining confusion mainly between defects and the base board. Recall improved for all defect types, with the largest gains for scratch (0.54 to 0.92) and dust spot (0.57 to 0.93), and indentation reached a recall of 0.95. Indentations, with their subtle color differences, were previously often misidentified as scratches in low-resolution images because only their outer contours were detected while the compressed inner area was ignored. After image reconstruction, the elongated compressed regions became clearer, with texture details distinctly different from qualified particleboard areas, improving detection accuracy.
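The per-class recall values quoted for Figure 7a and Figure 7b can be compared directly. The following sketch simply tabulates the differences; the variable names are illustrative, and the numbers are those given in the text.

```python
CLASSES = ("big shaving", "dust spot", "indentation",
           "scratch", "glue spot", "sand leakage")

# Recall per class as quoted for Figure 7a (low-resolution)
# and Figure 7b (super-resolution).
recall_low_res = (0.61, 0.57, 0.83, 0.54, 0.72, 0.90)
recall_super_res = (0.77, 0.93, 0.95, 0.92, 1.00, 0.99)

# Absolute recall gain per class, rounded to the precision of the inputs.
recall_gain = {
    name: round(after - before, 2)
    for name, before, after in zip(CLASSES, recall_low_res, recall_super_res)
}

if __name__ == "__main__":
    # List classes from largest to smallest recall gain.
    for name, gain in sorted(recall_gain.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name:<13} {gain:+.2f}")
```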

For thin, elongated defects like scratches, which have small areas and minimal color variation, low-resolution images failed to express the corresponding defect information, leading to scratch information being eliminated and misclassified as background. Therefore, image super-resolution reconstruction was necessary to avoid such misdetections.

Our method significantly improved particleboard defect detection performance. Images processed through our super-resolution model expressed richer texture details and contour shapes, producing clearer, higher-quality images that enhanced feature differentiation among defect types, facilitating subsequent detection and recognition and improving overall particleboard defect identification effectiveness.

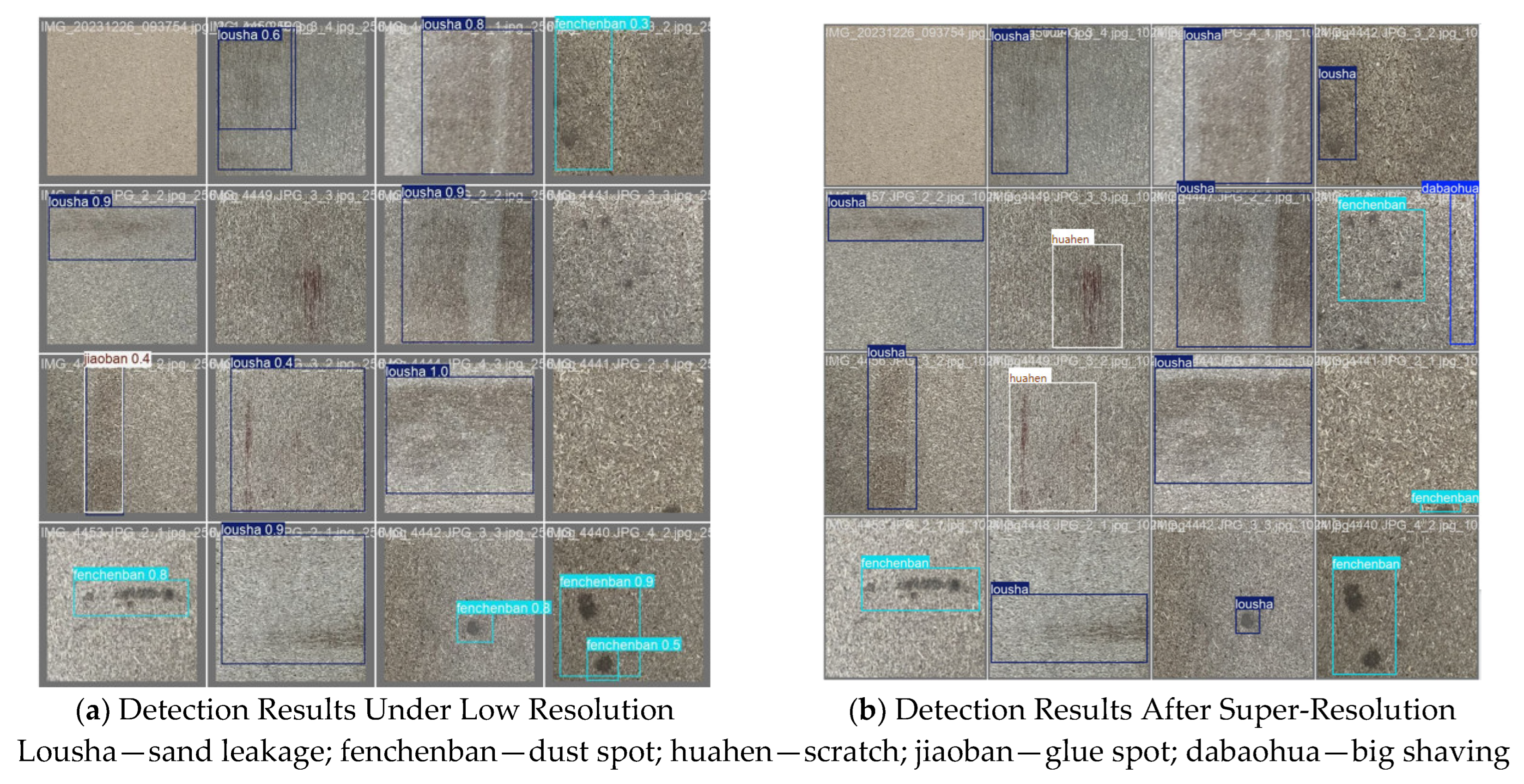

The detection results of particleboard defect images are shown in Figure 8. Figure 8a shows the results of the YOLOv8s model on low-resolution images, while Figure 8b shows its results on the same particleboard images after super-resolution processing. Comparing the recognition results for the same images shows that, in the low-resolution images, the dust spot and big shaving in the fourth image of the second row, the scratch in the second image of the second row, and the glue spot in the fourth image of the third row were not detected. In addition to missed detections, there are also recognition errors: the scratch in the second image of the third row was mistaken for sand leakage in the low-resolution image.

In contrast, all these defects are correctly identified in Figure 8b. This demonstrates that under the same model, particleboard images processed through our super-resolution algorithm show clearer defects, particularly for big shaving, scratch, dust spot, and glue spot, contributing to improved overall recognition performance of the YOLOv8s model.

In addition, defect detection experiments were carried out with the classical Faster R-CNN, YOLOv5, and YOLOv7 algorithms for comparison. Under the same experimental conditions, the corresponding low-resolution and super-resolution data sets were input into each network for training and testing. The experimental results are shown in Table 3.

The comparison shows that, for Faster R-CNN, YOLOv5, YOLOv7, and YOLOv8 alike, the super-resolution data sets yield better detection performance. Compared with the original low-resolution data sets, the mAP values improved to varying degrees, and YOLOv8 maintained the best detection performance. These experiments show that image super-resolution helps improve the recognition rate of the detection model.

5. Conclusions

In order to improve the recognition rate of particleboard surface defects, this paper proposes an improved super-resolution reconstruction method of particleboard surface images based on a Transformer network (ours). The model introduces a deep information extraction structure combining a channel attention mechanism and residual module in the deep feature extraction module, and integrates the shallow information extracted by the translation convolution kernel through the convolutional neural network. This design can effectively take into account both local texture features and global shape information, which is helpful to deal with the surface defects of particleboard with large scale changes, so as to promote the extraction of particleboard defect features and image reconstruction.

Our network achieved a PSNR of 39.27 dB, an SSIM of 0.9169, and an LPIPS of 0.2213. When validated with the YOLOv8 recognition model, the reconstructed images achieved a defect detection mAP of 96.5%, which is 25.6 percentage points higher than that of non-super-resolution images. Particularly significant improvements were observed in recognizing big shaving, glue spot, and dust spot, verifying the effectiveness of image super-resolution reconstruction technology in improving defect recognition rates.

In future work, we will focus on improving the speed of particleboard defect recognition and detection, preparing for implementation in factory online inspection systems.