Abstract

With the ever-improving advances in computer vision and Earth observation capabilities, Unmanned Aerial Vehicles (UAVs) allow extensive forest inventory and the indirect description of stand structure. We performed several flights with different UAVs and popular sensors at several flight levels over two sites with coniferous forests of different ages, using the default settings preset by the solution suppliers. The data were processed using image-matching techniques, yielding digital surface models, which were further analyzed using the lidR package in R. Consumer-grade RGB cameras were consistently more successful in identifying individual trees at all of the flight levels (77–84% for the Phantom 4), whereas the success of multispectral cameras decreased with higher flight levels and smaller crowns (54–77% for the RedEdge-M). Regarding the accuracy of the measured crown diameters, RGB cameras yielded satisfactory results (mean absolute error, MAE, of 0.79–0.99 m and 0.88–1.16 m for the Phantom 4 and Zenmuse X5S, respectively), whereas multispectral cameras yielded larger crown diameter errors, especially in the full-grown forest (MAE = 1.26–1.77 m). We conclude that widely used low-cost RGB cameras yield very satisfactory results for describing structural forest information at a 150 m flight altitude. When (multi)spectral information is needed, we recommend reducing the flight level to 100 m in order to acquire sufficient structural forest information. The study contributes to current knowledge by directly comparing widely used consumer-grade UAV cameras and by providing a clear elementary workflow that helps entry-level users with the initial steps and supports the use of such data in practice.

1. Introduction

Forests are complex terrestrial ecosystems covering almost 4 billion hectares globally and providing an immense number of ecosystem services [1], i.e., ecological, climatic, economic, cultural, and social services. Forest ecosystems accumulate most of the standing biomass [2], protect watersheds, prevent soil erosion, sequester large quantities of carbon via photosynthesis, mitigate climate change [3], and harbour a massive number of species [4]. However, the pressure on the production of these ecosystem services is increasing [5,6], forest disturbances are intensifying [7], and the global forested area is continuously decreasing. It is therefore necessary to keep improving our ability to monitor forest ecosystems.

With the availability of Earth observation techniques, knowledge of the distribution, health status, biomass storage, stand structure, and composition of the world's forests continuously increases [8]. Satellites and airborne systems supplement terrestrial inventory data and facilitate the monitoring, mapping, and modelling of forests with unprecedented spatial resolution across large extents. The choice of platform always depends on the intended analysis or application. While satellites may capture scenes across large areas, conventional airborne systems may provide better spatial and temporal resolutions. In addition to those systems, unmanned aerial vehicles (UAVs) may be used. Despite their still-limited payload capacity, flight endurance, and data storage and processing capabilities [9], unmanned systems bring great potential benefits to forestry applications [10,11,12] due to their relatively low acquisition costs, ever-increasing user-friendliness, and high flexibility regarding temporal and sensor variability. Over the last few years, their popularity has increased [13], and these systems are now used for plant species classification [14,15,16], tree stress detection [17,18,19], individual tree detection [20,21,22], the complex assessment of forest structures [23,24], biomass estimation [25], microclimate modelling [26], or terrain reconstruction [27]. Many approaches and applications are described in recent reviews; see, e.g., [11,12].

Thanks to its penetrating pulses, LiDAR (Light Detection and Ranging) can capture both the canopy and the under-canopy forest structure [28,29,30,31]. Passive systems (e.g., spectral cameras), which are far less expensive, capture only the upper canopy; they may, however, still describe the forest structure in sufficient detail for most purposes, including, for example, aboveground biomass estimation [32,33], while keeping data acquisition costs low. While more or less standardised protocols of data acquisition and processing have been verified for traditional remote sensing approaches over the years, unmanned systems are still relatively novel; thus, researchers may face challenges during data acquisition and processing [34]. These challenges could be overcome by creating and implementing standardised protocols, including accuracy assessment [35], which would be especially helpful for users without in-depth education in remote sensing data acquisition and processing, such as forest managers. Besides not being familiar with the processing and calibration of aerial data, such users often do not have a large budget and are, therefore, likely to opt for low-cost solutions, such as suitable sensors mounted on off-the-shelf commercial UAVs, as even data from such consumer-grade RGB sensors may be sufficiently effective and reliable for deriving basic tree parameters.

Several studies evaluating different tree parameters through individual tree detection in different environments have been published so far. Ghanbari et al. [36] compared tree canopy parameters derived from UAV-based photogrammetric and LiDAR point clouds, Mohan et al. [37] compared UAV-based imagery and UAV-based LiDAR, and Gallardo-Salazar et al. [38] evaluated UAV-based tree height, area, and crown diameter. Kuzmin et al. [39] combined UAV-borne RGB and multispectral imagery for the detection of European aspen, Nguyen et al. [40] used such imagery for the detection of infested fir trees, and Qin et al. [41] for the identification of pine nematode disease. Diez et al. [42] recently published a review of deep learning methods for processing RGB data in forestry, and Mohan et al. [37] have even published a beginner's guide to tree detection. Individual tree detection has also been evaluated from the perspective of SfM software [43]. However, to the best of our knowledge, no study has yet compared how effectively different UAV platforms and sensors, flown at different altitudes, estimate the number of trees and their crown size.

The question remains how well an inexperienced user relying on such a solution with supplier-preset parameters will fare in imagery acquisition. The quality of such imagery also depends on other flight parameters, such as the flight altitude. The objectives of the presented study were (i) to provide entry-level users with a basic processing workflow for the identification of tree crowns and their size; (ii) to provide information about the performance and reliability of such low-cost systems, which can be useful when assessing information derived from studies using such preset solutions; and (iii) to determine the optimal flight altitude for individual widely used ready-made UAV solutions in the default modes preset by the suppliers, i.e., the altitude that yields imagery best suited for the detection of tree crowns and their size (which can be further used, e.g., for aboveground biomass estimates).

2. Materials and Methods

2.1. Study Area

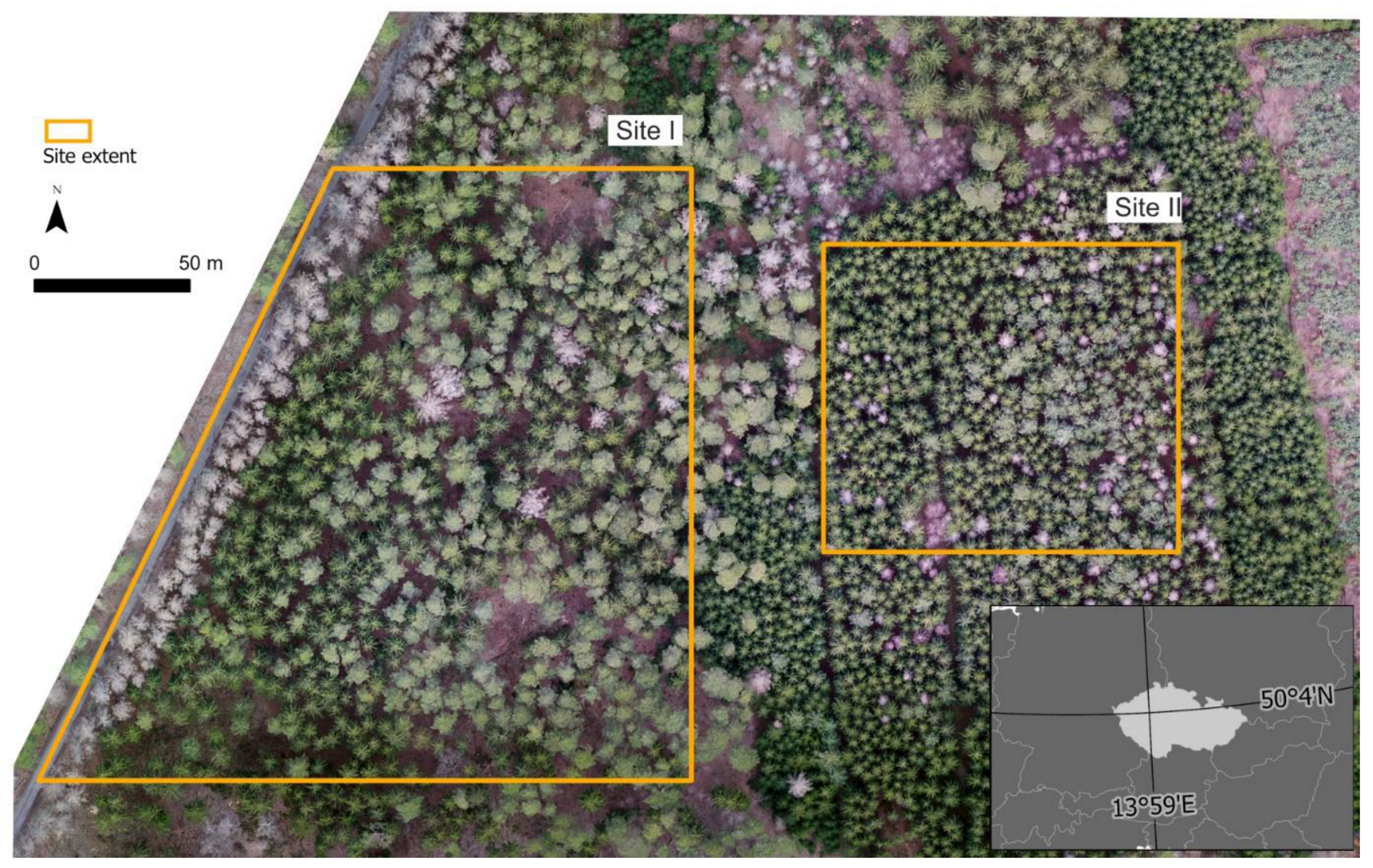

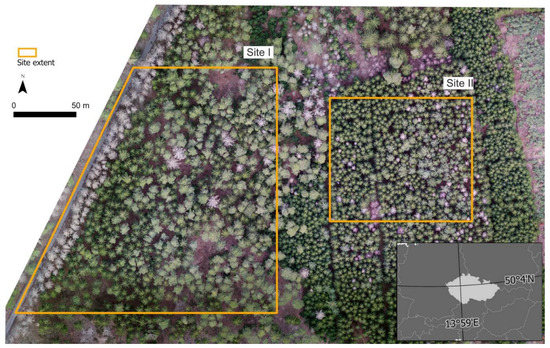

The study site (50°04′ N, 13°59′ E) is located 30 km west of the city of Prague, Czech Republic. The elevation in the 15 ha study area ranges from 420 to 430 m above mean sea level, with a mostly south or southwest aspect. It is part of the Křivoklátsko Protected Landscape Area (681 km²), which was included among the UNESCO Biosphere Reserves in 1977. The study site represents a typical Central European spruce-pine forest managed for timber production (standard silvicultural treatments are carried out). Two different forest compositions can be found on the site: (i) full-grown 80–100-year-old coniferous forests consisting of pine (Pinus sylvestris, 40%), spruce (Picea abies, 40%), and larch (Larix decidua, 20%), with a mean crown size of 7.1 ± 1.4 m; and (ii) 20–40-year-old coniferous forests consisting of spruce (60%), pine (20%), and larch (20%), with a mean crown size of 4.5 ± 0.9 m; see Figure 1.

Figure 1.

Study site overview—two tested sites: (Site I) full-grown 80–100 year-old mixed coniferous forest; (Site II) forest with the dominance of spruce, 20–40 years old. Basemap source: orthomosaics acquired from a Phantom 4 Pro at 200 m above ground.

2.2. Imagery Acquisition

Flights with four sensors mounted on three different UAVs were performed in the study area (see Table 1 for details). The imagery was collected in late winter, on 26 February 2019; the flight conditions were favourable: a mostly cloudy sky, a temperature of around 12 °C, and a northwest wind of 2–5 m⋅s−1. A total of eight ground control points (GCPs), surveyed with a Leica 1200 GNSS receiver in RTK mode, were placed throughout the study site.

Table 1.

Specifications of the used UAV-mounted cameras.

The three UAVs included: (a) a lightweight fixed-wing UAV, the Disco Pro Ag (Parrot SA, Paris, France), a ready-to-deploy solution for agricultural and forestry applications with a maximum take-off weight (MTOW) of 0.94 kg, mounted with a Sequoia camera; and two rotary-wing systems, namely (b) the Phantom 4 Pro (DJI, Shenzhen, China), probably the most popular lightweight (MTOW of 1.39 kg) universal commercial UAV, with an integrated camera, and (c) the Matrice 210 (DJI, China), a professional adjustable enterprise solution with an MTOW of 6.6 kg, mounted with a Zenmuse X5S FC6520 camera. In addition, (d) a fourth UAS (Unmanned Aerial System, i.e., a ready-to-fly solution including all of the necessary components) was created by mounting a RedEdge-M camera on the Phantom 4 Pro platform. Except for the fixed-wing UAV, for which the producer restricts the flight altitude, the flights were performed at 100, 150, and 200 m above ground level (AGL). For the fixed-wing UAV, we used the minimum and maximum allowed flight altitudes (125 m and 150 m) and an intermediate one (135 m). The flight missions were performed using (i) the DJI Ground Station Pro application for both the Phantom 4 Pro and the Matrice 210, and (ii) the Pix4Dcapture application for the Parrot Disco Pro Ag. The flights were conducted using perpendicular flight lines with 80% forward (longitudinal) and 70% side (lateral) overlap. The UAVs followed predefined flight plans across the study sites. The sensors' triggering option was set to Overlaps (one image acquisition per waypoint) or, where this was not possible, Time-lapse (a fixed time between two consecutive acquisitions). In order to simulate the behaviour of a user without in-depth knowledge of remote sensing techniques, we evaluated the performance of the systems in the default mode, i.e., with no adjustments to the vendor-preset image acquisition parameters (shutter speed/aperture preference, ISO, etc.).
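The relation between flight altitude, the 80%/70% overlaps, and the resulting spacing of exposures and flight lines can be sketched as follows. This is a minimal illustration under simplifying assumptions (nadir images over flat terrain, long side of the sensor oriented across track); it is not how DJI Ground Station Pro or Pix4Dcapture compute their plans, and the Phantom 4 Pro values used in the example (13.2 × 8.8 mm sensor, 8.8 mm focal length) are nominal datasheet figures that we assume rather than values taken from Table 1.

```python
def footprint_m(altitude_m, sensor_w_mm, sensor_h_mm, focal_mm):
    """Ground footprint (across-track, along-track) of one nadir image, in metres.

    Assumes flat terrain and the long sensor side oriented across track.
    """
    scale = altitude_m / focal_mm  # similar triangles: ground size / sensor size = H / f
    return sensor_w_mm * scale, sensor_h_mm * scale


def flight_plan_spacing(altitude_m, sensor_w_mm, sensor_h_mm, focal_mm,
                        forward_overlap=0.80, side_overlap=0.70):
    """Distance between consecutive exposures and between adjacent flight lines (m)."""
    across, along = footprint_m(altitude_m, sensor_w_mm, sensor_h_mm, focal_mm)
    exposure_spacing = (1 - forward_overlap) * along  # photo base along a flight line
    line_spacing = (1 - side_overlap) * across        # distance between flight lines
    return exposure_spacing, line_spacing


if __name__ == "__main__":
    # Assumed nominal Phantom 4 Pro values: 13.2 x 8.8 mm sensor, 8.8 mm lens.
    for h in (100, 150, 200):
        b, s = flight_plan_spacing(h, 13.2, 8.8, 8.8)
        print(f"{h} m AGL: exposure every {b:.1f} m, flight lines every {s:.1f} m")
```

With these assumed values, an exposure is triggered every 20 m and flight lines are 45 m apart at 100 m AGL; both distances grow linearly with altitude, which is why higher flights need fewer images for the same overlaps.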

2.3. Image Alignment and Surface Reconstruction

Agisoft Metashape Professional (version 1.5.5, Agisoft LLC, St. Petersburg, Russia) image-matching software was used to generate point clouds and reconstruct the 3D surface [44]. Metashape reads the image metadata (EXIF), including the band information and the coordinates recorded by the on-board GNSS units. As the first step, we loaded the images and estimated their quality. Images taken during take-off, landing, and taxiing, as well as those with a quality below 0.5 (as automatically evaluated by Metashape), were excluded from further processing [45]. The numbers of acquired images are listed in Table 2.
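The exclusion rule can be expressed as a simple filter. The sketch below assumes that, for each image, the quality value estimated in Metashape and the altitude above ground at the time of capture have been exported to a table. The `Photo` record, its field names, and the ±10% altitude tolerance used to drop take-off, landing, and taxiing frames are hypothetical choices for illustration; the paper does not state how those frames were identified.

```python
from dataclasses import dataclass


@dataclass
class Photo:
    name: str
    quality: float       # image quality value as estimated in Metashape
    altitude_agl: float  # hypothetical field: altitude above ground at capture (m)


def keep_for_alignment(photos, planned_agl, min_quality=0.5, agl_tolerance=0.10):
    """Keep sharp images taken close to the planned flight altitude.

    Images below the quality threshold (0.5, as in the text) are dropped, and
    so are images captured outside planned_agl * (1 +/- agl_tolerance), which
    here stands in for take-off, landing and taxiing frames (assumed rule).
    """
    low = planned_agl * (1 - agl_tolerance)
    high = planned_agl * (1 + agl_tolerance)
    return [p for p in photos
            if p.quality >= min_quality and low <= p.altitude_agl <= high]


if __name__ == "__main__":
    photos = [Photo("IMG_0001", 0.82, 101.0),  # kept
              Photo("IMG_0002", 0.41, 99.5),   # blurred: quality below 0.5
              Photo("IMG_0003", 0.90, 12.0),   # captured during take-off
              Photo("IMG_0004", 0.50, 95.0)]   # kept: exactly at the threshold
    print([p.name for p in keep_for_alignment(photos, planned_agl=100)])
```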

Table 2.

Number of images acquired by each camera at each flight level, and the Agisoft Metashape processing parameters.

After the image orientations had been determined, i.e., after geometrical processing [46], the sparse point clouds were checked for outliers, referenced to the surveyed GCPs, and subsequently densified. Following the Metashape manual, a digital surface model (DSM) was constructed from the dense point clouds and an orthomosaic was built; see the processing parameters in Table 2. Ground points in the dense point clouds were classified in order to construct a digital terrain model (DTM). In accordance with [47], the ground-filtering parameters were tuned to obtain the best possible terrain model for each input.

2.4. Deriving Tree Variables and Statistical Analysis

The evaluated tree parameters (the number of detected trees and the crown diameter) were derived in R (version 3.4.3, R Core Team, Vienna, Austria). First, we subtracted the terrain models from the surface models in order to obtain normalised heights, i.e., canopy height models (CHMs). Subsequently, the CHMs from all 24 datasets (3 altitude levels, 2 sites, and 4 cameras) were processed with the same workflow in the lidR package [48,49]. The workflow included (i) the identification of individual trees and (ii) the delineation of tree crowns. Individual trees were detected by local maxima filtering using focal statistics [50,51], while the crowns were delineated by watershed-based object detection [52,53]. The crown diameter was then calculated with an automatic workflow consisting of (i) the approximation of each detected crown by a circle with the same area as the tree crown polygon, and (ii) the calculation of the circle diameter. Thus, the final output contained the total number of trees along with the location (coordinates) and crown diameter (m) of each individual tree/shrub.
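The core of this CHM-based workflow can be illustrated without lidR. The following sketch, in plain Python on small nested-list rasters, computes a CHM as DSM minus DTM, finds treetops with a fixed 3 × 3 local maximum window, grows crowns from those treetops with a marker-controlled (priority-flood) watershed, and converts each crown area A into an equivalent-circle diameter d = 2√(A/π). It is a simplified stand-in for the lidR functions used in the study, not a reproduction of them; the window size, the 2 m minimum height, and the 4-connected growth rule are our assumptions.

```python
import heapq
import math


def canopy_height_model(dsm, dtm):
    """Normalised heights: CHM = DSM - DTM, cell by cell (same grid assumed)."""
    return [[s - t for s, t in zip(srow, trow)] for srow, trow in zip(dsm, dtm)]


def local_maxima(chm, window=3, min_height=2.0):
    """Treetops = cells that are the highest within a square moving window."""
    rows, cols, r = len(chm), len(chm[0]), window // 2
    tops = []
    for i in range(rows):
        for j in range(cols):
            h = chm[i][j]
            if h < min_height:  # ignore ground and low vegetation
                continue
            neighbourhood = [chm[a][b]
                             for a in range(max(0, i - r), min(rows, i + r + 1))
                             for b in range(max(0, j - r), min(cols, j + r + 1))]
            if h >= max(neighbourhood):  # note: flat plateaus yield several tops
                tops.append((i, j))
    return tops


def watershed_crowns(chm, tops, min_height=2.0):
    """Marker-controlled watershed: flood the inverted CHM from the treetops,
    always processing the highest queued cell first."""
    rows, cols = len(chm), len(chm[0])
    labels = [[0] * cols for _ in range(rows)]
    heap = []
    for k, (i, j) in enumerate(tops, start=1):
        labels[i][j] = k
        heapq.heappush(heap, (-chm[i][j], i, j))
    while heap:
        _, i, j = heapq.heappop(heap)
        for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1)):
            if (0 <= a < rows and 0 <= b < cols and labels[a][b] == 0
                    and chm[a][b] >= min_height):
                labels[a][b] = labels[i][j]  # inherit the label of the flooding crown
                heapq.heappush(heap, (-chm[a][b], a, b))
    return labels


def crown_diameters(labels, cell_size):
    """Equivalent-circle diameter d = 2 * sqrt(A / pi) of every labelled crown."""
    counts = {}
    for row in labels:
        for k in row:
            if k:
                counts[k] = counts.get(k, 0) + 1
    return {k: 2 * math.sqrt(n * cell_size ** 2 / math.pi) for k, n in counts.items()}


if __name__ == "__main__":
    # Toy 5 x 7 rasters (0.5 m cells): a flat DTM and a DSM with two crowns.
    heights = [[0, 0, 0, 0, 0, 0, 0],
               [0, 5, 6, 5, 4, 5, 0],
               [0, 6, 9, 6, 5, 7, 0],
               [0, 5, 6, 5, 4, 5, 0],
               [0, 0, 0, 0, 0, 0, 0]]
    dtm = [[420.0] * 7 for _ in range(5)]
    dsm = [[420.0 + h for h in row] for row in heights]
    chm = canopy_height_model(dsm, dtm)
    tops = local_maxima(chm)
    crowns = watershed_crowns(chm, tops)
    print(tops, crown_diameters(crowns, cell_size=0.5))
```

A fixed window is the simplest choice; in practice the window size controls the trade-off between merging neighbouring small crowns and splitting large ones, which is one reason why the resolution of the input CHM matters.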

Reference values for the number and diameters of the tree crowns were obtained by an operator through manual detection on the orthomosaic. The number of trees was also surveyed in the field. The tree crown diameters were manually measured in the north-south and west-east directions in ArcGIS software, version 10.7.1 (ESRI, Redlands, CA, USA). Because the treetop positions in the CHMs from the individual UASs differed slightly from the reference, the treetops on the individual orthomosaics were automatically matched (Near function in ArcGIS) before further processing. Multiple treetops within a radius of 2 m were considered to represent a single tree [15]. In addition, the results were visually inspected.
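The pairing of automatically detected treetops with reference treetops can be sketched as a greedy nearest-neighbour match within the 2 m radius. This only approximates the procedure described above (the authors used the Near tool in ArcGIS together with visual inspection); the greedy shortest-distance-first rule and the use of projected x/y coordinates in metres are our assumptions.

```python
import math


def match_treetops(detected, reference, radius=2.0):
    """Greedy one-to-one matching of detected and reference treetops.

    detected, reference: lists of (x, y) in a projected CRS (metres).
    Candidate pairs within `radius` are accepted shortest distance first.
    Extra detections within `radius` of an already matched tree are simply
    left unmatched, so several treetops near one tree count as one tree.
    """
    candidates = sorted(
        (math.dist(d, r), i, j)
        for i, d in enumerate(detected)
        for j, r in enumerate(reference)
        if math.dist(d, r) <= radius
    )
    used_det, used_ref, matches = set(), set(), []
    for dist, i, j in candidates:
        if i not in used_det and j not in used_ref:
            used_det.add(i)
            used_ref.add(j)
            matches.append((i, j, dist))
    return matches


if __name__ == "__main__":
    reference = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
    detected = [(0.5, 0.3), (5.2, -0.4), (5.9, 0.5), (20.0, 0.0)]
    matches = match_treetops(detected, reference)
    print(f"detected {len(matches)} of {len(reference)} reference trees")
```

The detection accuracy of a model is then the number of matches divided by the number of reference trees.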

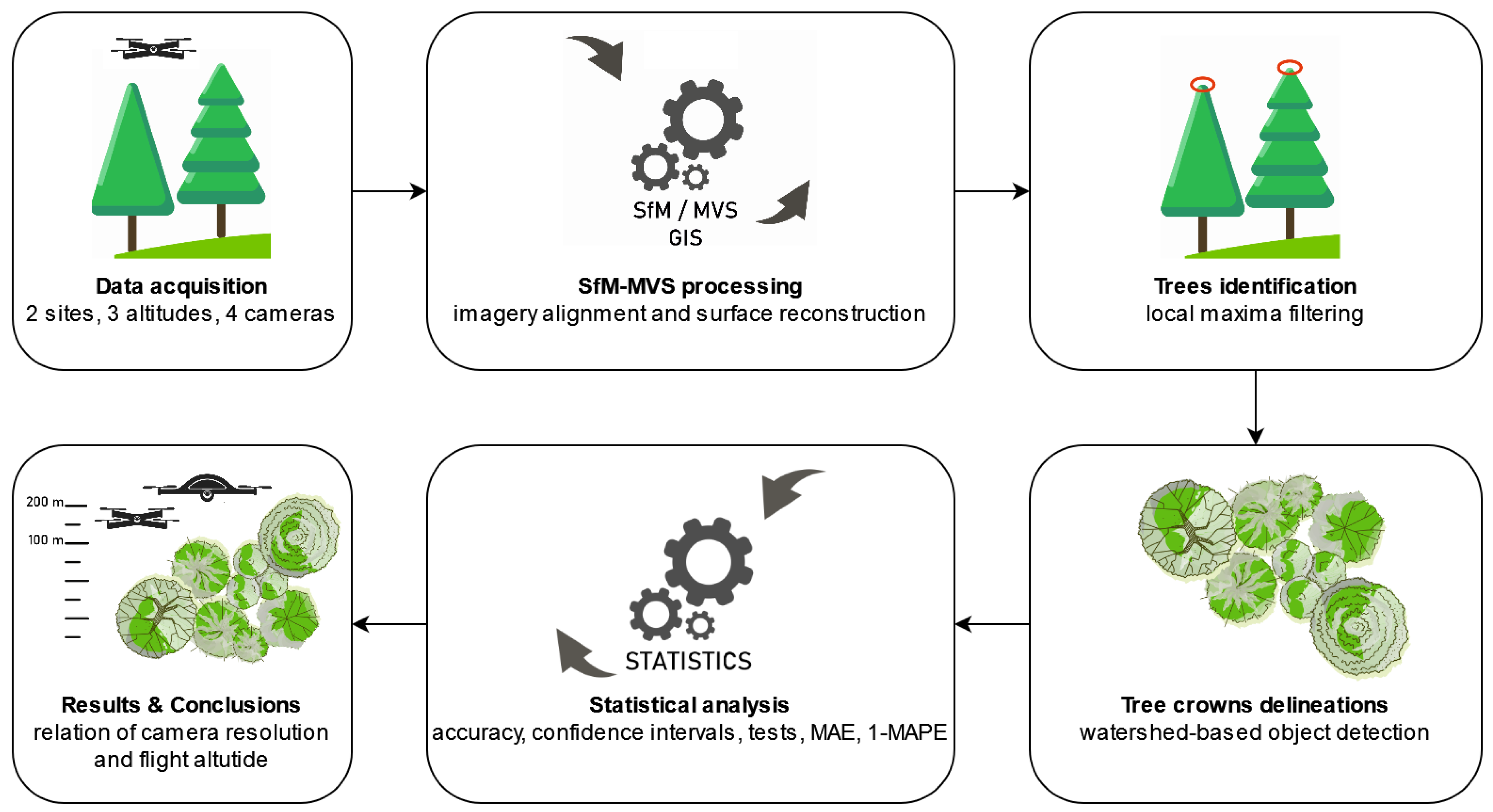

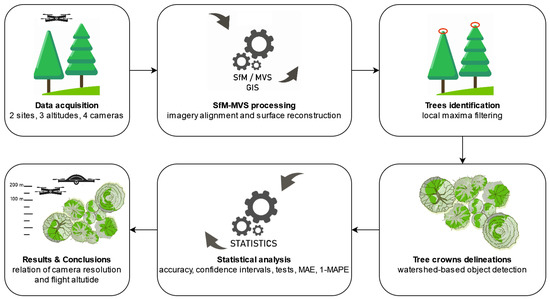

The accuracy of individual tree detection, i.e., the proportion of correctly detected trees at both study sites, was evaluated and expressed as the total accuracy with 95% confidence intervals (Table 3). In total, 100 trees were randomly selected within each study site. The normality of the distribution of the individual crown areas was tested with the Shapiro–Wilk test, with outliers both included and excluded. Depending on the result, we applied Student's t-test (normal distribution) or the Wilcoxon signed-rank test (non-normal distribution) (Table 4) to detect significant differences between the automatically delineated crown areas and the reference values (i.e., to compare the performance of the models). The accuracy of the delineated crowns was expressed as the Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE); model performance was expressed as 1-MAPE (Table 4). The study pipeline is shown in Figure 2.
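For clarity, the crown error measures can be written out explicitly. The sketch assumes paired lists of automatically derived and manually measured crown diameters (m) for the same trees; the example values are invented for illustration. The Shapiro–Wilk check and the subsequent t-test or Wilcoxon test are not reproduced here.

```python
def crown_accuracy(predicted, reference):
    """Return MAE (m), MAPE (as a fraction) and model performance 1 - MAPE."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("need two non-empty lists of equal length")
    abs_err = [abs(p - r) for p, r in zip(predicted, reference)]
    mae = sum(abs_err) / len(abs_err)
    mape = sum(e / r for e, r in zip(abs_err, reference)) / len(reference)
    return mae, mape, 1 - mape


if __name__ == "__main__":
    auto = [7.5, 6.0, 4.0, 5.0]    # hypothetical automatic diameters (m)
    manual = [7.0, 6.5, 5.0, 4.5]  # hypothetical reference diameters (m)
    mae, mape, performance = crown_accuracy(auto, manual)
    print(f"MAE = {mae:.3f} m, MAPE = {mape:.1%}, 1-MAPE = {performance:.1%}")
```

Because MAPE divides each error by the reference diameter, the same absolute error weighs more for small crowns, so 1-MAPE is the more comparable measure between the two sites.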

Table 3.

Descriptive statistics of the models’ performance: the accuracy as a percentage of the identified trees (Detected Trees) and the 95% confidence interval (CI 95) for the achieved accuracy; the values of the accuracies of 70% or more (within the range), together with the 95% confidence intervals, are in bold. In total, 612 detected trees were used for reference at each site.
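The paper does not state which method was used for the 95% confidence intervals of the detection accuracy. As one common option, the sketch below computes a Wilson score interval for a proportion of correctly detected trees; the choice of the Wilson method and the example counts are assumptions.

```python
import math
from statistics import NormalDist


def wilson_interval(successes, n, confidence=0.95):
    """Wilson score confidence interval for a binomial proportion."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("require n > 0 and 0 <= successes <= n")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95 %
    p = successes / n
    denom = 1 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z / denom * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return centre - half, centre + half


if __name__ == "__main__":
    # Hypothetical example: 84 of 100 reference trees detected.
    low, high = wilson_interval(84, 100)
    print(f"accuracy 84%, 95% CI {low:.1%} to {high:.1%}")
```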

Table 4.

Tree crown delineation: p values of Student's t-test or the Wilcoxon test, Mean Absolute Error (MAE), and model performance (1-MAPE). Non-significant values are in bold; such models are considered not to differ from the reference values.

Figure 2.

Study processing pipeline. Using four UAVs, data were collected at three different flight levels at two sites. The data were subsequently processed by SfM-MVS methods into the form of orthomosaics and digital surface/terrain models. Then, the treetops were automatically detected and the tree crowns were delineated using the lidR package in R. The accuracy of the automatic methods against the reference data was further evaluated statistically.

3. Results

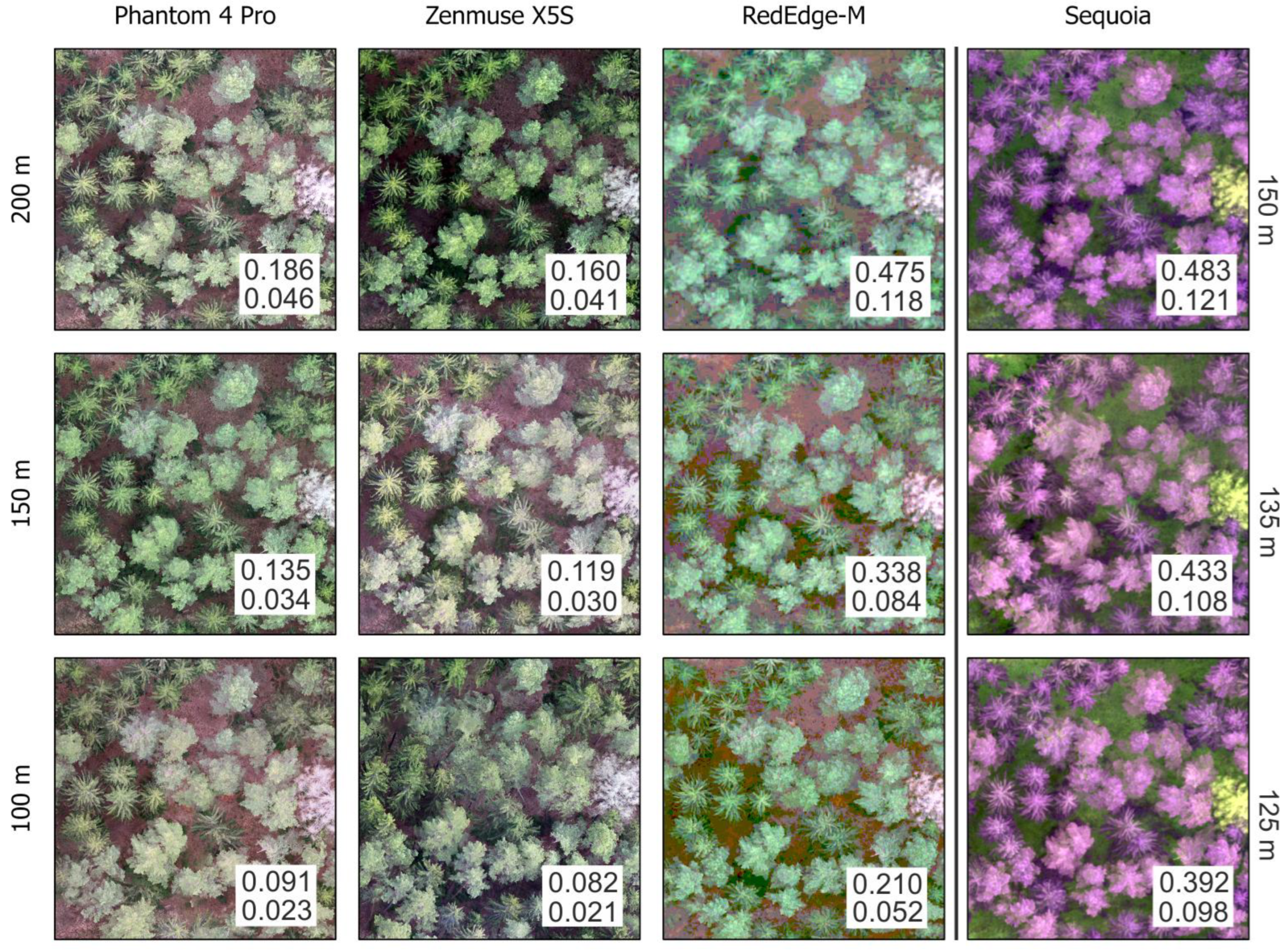

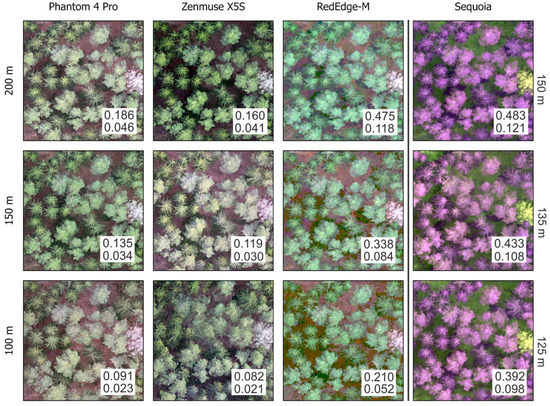

In total, 24 canopy height models, together with orthorectified mosaics, were derived from the low-altitude aerial surveys carried out with three different UAVs (four UAV-camera combinations) across two forested study sites. Details of the orthomosaics and their resulting spatial resolutions are shown in Figure 3.

Figure 3.

Details of Site I: the created models with the resulting spatial resolution (in metres) of the digital elevation models (first rows) and orthomosaics (second rows).

3.1. Number of Trees

As far as the number of trees is concerned, all of the models followed a similar trend: cameras with a higher resolution and a larger sensor captured the forest canopy in more detail than those with a lower resolution and smaller sensors (the latter tended to smooth out slight vertical differences). Generally, the sensors with higher spatial resolution, i.e., the Phantom 4 Pro and Zenmuse X5S, performed better at higher flight levels (150 or 200 m AGL), while the sensors with lower resolution (RedEdge-M and Sequoia) performed better at their lowest flight levels (100 and 125 m AGL, respectively); see the descriptive statistics in Table 3.
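The resolution argument can be made concrete with the usual nadir ground sampling distance (GSD) relation, GSD = H · w / (f · N), where H is the flight height, w the sensor width, f the focal length, and N the image width in pixels. The sensor values below (Phantom 4 Pro: 13.2 mm, 8.8 mm, 5472 px; RedEdge-M: 4.8 mm, 5.5 mm, 1280 px) are nominal datasheet figures that we assume here rather than values taken from Table 1, and the GSD is computed at ground level, ignoring terrain and canopy height.

```python
def gsd_cm(altitude_m, sensor_width_mm, focal_mm, image_width_px):
    """Nadir ground sampling distance in cm/pixel (flat terrain assumed)."""
    pixel_pitch_mm = sensor_width_mm / image_width_px
    return altitude_m * pixel_pitch_mm / focal_mm * 100  # metres -> centimetres


# Assumed nominal specifications: (sensor width mm, focal length mm, width px).
CAMERAS = {
    "Phantom 4 Pro": (13.2, 8.8, 5472),
    "RedEdge-M": (4.8, 5.5, 1280),
}

if __name__ == "__main__":
    for name, (w, f, px) in CAMERAS.items():
        row = ", ".join(f"{h} m: {gsd_cm(h, w, f, px):.1f} cm" for h in (100, 150, 200))
        print(f"{name}: {row}")
```

Under these assumptions, the RedEdge-M at 100 m (about 6.8 cm per pixel) is still coarser than the Phantom 4 Pro at 200 m (about 5.5 cm per pixel), which is consistent with the lower-resolution sensors needing the lowest flight levels.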

Two sites were tested: a full-grown 80–100-year-old forest with a mean crown size of 7.1 ± 1.4 m (Site I), and a younger, 20–40-year-old forest with a mean crown size of 4.5 ± 0.9 m (Site II). The Phantom 4 Pro and Zenmuse X5S offered similar results at both sites, while the RedEdge-M and Sequoia performed much better at Site I, probably because the larger tree crowns there partly compensate for the lower resolution of these sensors. Considering the success rate of individual tree detection, the best results were achieved with the widely used consumer-grade DJI Phantom 4 Pro flying at 150 m AGL, which correctly identified 84% of the trees at both typical Central European forest sites. The Zenmuse X5S achieved very similar results, with a detection rate of 81–83% at a 150 m flight height. On the other hand, the RedEdge-M detected 75–78% of the trees across both sites at 100 m AGL, and the Sequoia 50–71%. In general, the Sequoia camera performed worse than the RedEdge-M, which could be caused by its poorer optical system quality, resulting in lower detail. However, the inferior results could also be associated with its fixed capture settings, which differ from those of the RedEdge-M.

3.2. Tree Crown Diameters

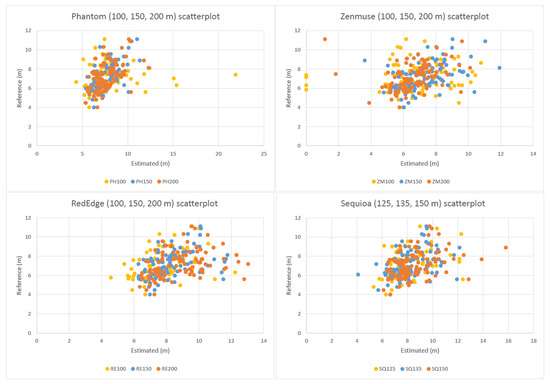

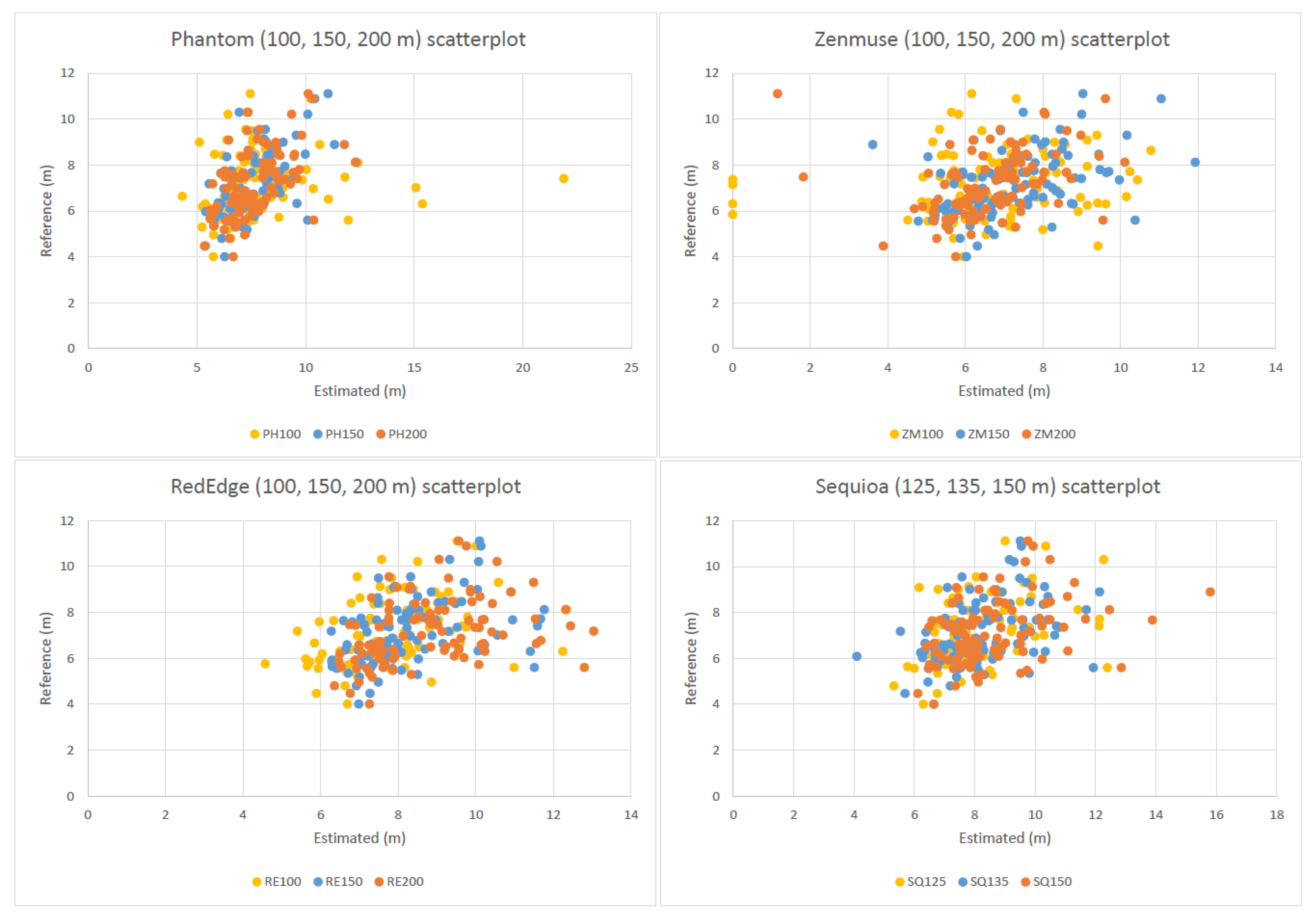

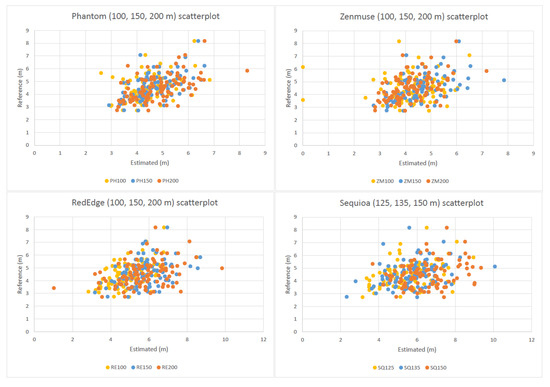

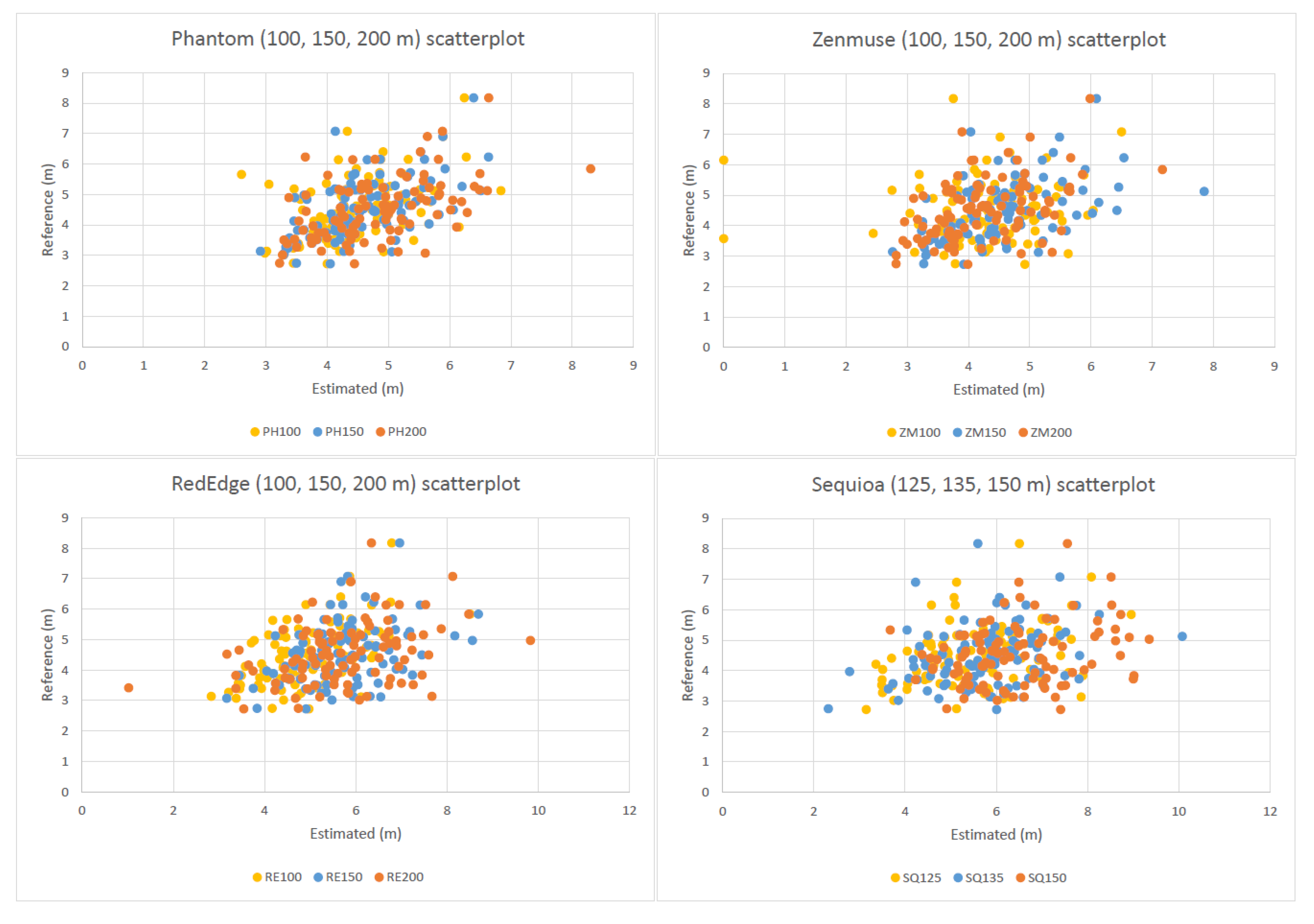

As far as the tree crown diameters are concerned, the best results (i.e., the lowest errors) were achieved with the Phantom 4 Pro and Zenmuse X5S. The MAE of the tree crown diameters was 0.79–0.99 m for the Phantom 4 Pro, 0.88–1.16 m for the Zenmuse X5S, 1.03–1.60 m for the RedEdge-M, and 1.27–1.81 m for the Sequoia. Sensors with higher resolutions generally achieved close to 80% accuracy in crown diameter estimation (calculated as 1-MAPE) at all flight levels; among the sensors with lower resolution, only the 100 m flight level was worth a closer look. The full results are given in Table 4 and visualized in scatterplots (Figures A1 and A2).

Sensors with higher resolution performed better at higher flight altitudes (150–200 m AGL), with accuracies above 80%. They performed particularly well at 150 m (86% accuracy for the Phantom 4 Pro and 84–85% for the Zenmuse X5S). Following the trend observed in tree identification, the multispectral cameras with much lower resolution performed better at low flight altitudes, where the RedEdge-M (100 m AGL) and the Sequoia achieved promising accuracies of 81% and 69–80%, respectively.

The crown diameter estimates of the higher-resolution sensors remained balanced across the sites. In contrast, the performance of the low-resolution sensors declined with decreasing crown diameters (especially for the Sequoia). At Site I, with a mean crown diameter of 7.1 ± 1.4 m, the accuracy of the RedEdge-M and Sequoia ranged between 72% and 81%. When the mean crown diameter decreased to 4.5 ± 0.9 m (Site II), the accuracy dropped markedly, to 51–81%. However, the lowest value (around 50%) applied only to the fixed-wing UAV at its highest altitude, which may be unsuitable for this type of analysis; all of the remaining flights, even with low-resolution sensors, yielded accuracies of 65% or more.

4. Discussion

Many studies have pointed out the potential of remote sensing for forestry purposes. Over the last few years, the popularity of UAVs has increased, and many applications have been explored and described [54]. Specifically, UAVs equipped with various cameras have been successfully used to count trees and to measure their crowns and heights [21,55,56]. The potential of consumer-grade (low-cost) solutions is also being explored in practice [57]. This paper therefore compares the performance of four widely used cameras for counting trees and measuring their crown diameters. The presented results indicate that even consumer-grade, non-professional cameras have potential for measuring tree parameters, especially for users without in-depth education in remote sensing data acquisition and processing, such as forest managers. Moreover, the data can be processed solely in open-source software [58], thereby reducing costs.

The highest tree detection success rate and the most accurate crown delineation with the consumer-grade RGB cameras were observed for imagery taken at a flight altitude of 150 m AGL (approximately 120–140 m above the canopy, depending on the site) at both sites, i.e., in the full-grown as well as the young dense forest. The RGB cameras generally yielded better results at 200 m AGL than at 100 m AGL. On the other hand, the multispectral cameras yielded better results at the lowest flight altitude, i.e., 100 m AGL (approximately 70–90 m above the canopy). Increasing the flight altitude led to a marked decline in the results of the multispectral cameras, especially in tree detection.

LiDAR is often considered the superior method for scanning forests, as it can better penetrate the canopy. This is true where tree heights are measured [59]; however, for tree detection and tree crown delineation, SfM methods can provide results of similar accuracy. While the best result achieved with our simple workflow was 84% of individual trees detected, St-Onge et al. [60] achieved comparable accuracy (83%) using LiDAR in boreal forests. Kuželka et al. [29] reported as much as 98–99% successfully identified trees using UAV-LiDAR; however, they analysed a 100–130-year-old coniferous forest with a low tree density. Similarly to our study, Mohan et al. [21] reported an accuracy of more than 80% for a mixed coniferous forest using a DJI Phantom 3. For a forest under standard silvicultural management, a detection rate exceeding 80% is excellent. The success rate may, however, exceed 90% in plantations and orchards; Guerra-Hernández et al. [61] reported 80–96% accuracy in eucalyptus plantations.

Regarding tree crown diameters, Panagiotidis et al. [52], using a Sony NEX-5R camera in a very similar environment, reported lower accuracy than our study, with MAEs of 2.6 and 2.8 m. Qiu et al. [62] achieved accuracies of 76% and 63% in a rainforest and a coniferous forest, respectively. Our results correspond to those of Zhou et al. [63], who achieved an accuracy of 86% in a mixed-growth forest; the same authors reported success rates as high as 92% in monoculture environments.

UAV image acquisition results may generally be affected by weather conditions, especially wind speed, precipitation, shadows, and light conditions, which cause differences in exposure or blurriness [45]. This study aimed to minimise these factors by performing the flights at low wind speeds, with no precipitation, and under stable light conditions. However, the capture settings were left in the default/auto mode without user adjustment, so differences in the cameras' behaviour (F-stop, shutter speed, ISO) might also have affected the image quality and, in effect, the detection results.

In addition, the quality of the reconstructed forest canopy geometry may also be affected by the angle of the acquired imagery. Combining different camera angles during acquisition may significantly reduce the systematic error of the models and the error in the determined interior orientation parameters [64]; oblique image acquisition might, therefore, make forest canopy imagery even more suitable for tree crown delineation. Moreover, lower flight levels of a few tens of metres are usually believed to improve the overall accuracy. However, lower flight levels increase the number of images and, in effect, the computational time and costs; such (perhaps unnecessarily high) spatial resolution may also increase bias and uncertainty in the canopy geometry.
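The effect of flight level on the number of images can be made explicit with an idealised estimate. The simplifications are ours: nadir images over flat terrain, and no extra images at line ends or turns. With flight height $H$, focal length $f$, sensor dimensions $s_w \times s_h$, forward and side overlaps $o_f$ and $o_s$, and surveyed area $A$:

```latex
\begin{aligned}
W &= \frac{H\,s_w}{f}, \qquad L = \frac{H\,s_h}{f}
  && \text{(image footprint across and along track)}\\
B &= (1 - o_f)\,L, \qquad S = (1 - o_s)\,W
  && \text{(exposure spacing and flight-line spacing)}\\
N &\approx \frac{A}{B\,S}
   = \frac{A\,f^{2}}{(1 - o_f)(1 - o_s)\,s_w\,s_h\,H^{2}}
  && \Rightarrow\; N \propto H^{-2}
\end{aligned}
```

Under these assumptions and for the same overlaps, raising the flight level from 100 m to 150 m reduces the number of images by a factor of (150/100)² = 2.25, and raising it to 200 m by a factor of 4.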

The results may also be affected by the processing workflow used for tree detection and crown delineation. The lidR package, which was used in this study, was developed for forestry applications of airborne LiDAR data [49]; however, it can also be applied to UAV-borne data [65]. The method used consists of local maxima filtering and watershed-based object detection on a (normalised) digital elevation model. Such a workflow is typical for the detection and delineation of tree crowns in various environments [56,66,67]. On the other hand, other methods, such as Geographic Object-Based Image Analysis (GEOBIA) [68,69], neural network and machine learning approaches, or semi-supervised feature extraction [70,71], have also been successfully employed [72,73]. Methods based on the analysis of spectral information can increase accuracy compared to CHM-based methods [74]; nevertheless, CHM-based tree crown detection and delineation methods offer accuracies of approximately 70–90% (depending on the environment). Thanks to ready-made processing workflows, CHM-based methods can be used even by users without in-depth education in remote sensing data processing, whereas GEOBIA or machine learning methods require advanced knowledge and experience in remote sensing data processing.

5. Conclusions

Close-range remote sensing techniques support current forestry practices and may benefit even forest managers without in-depth education in remote sensing. The successful implementation of UAVs depends not only on their availability, flexibility, and user-friendliness but also on their financial affordability and reliability. UAV-borne canopy height models allow the derivation of numerous parameters, including tree crown detection and delineation and tree heights, which could be used, among other things, to estimate aboveground forest biomass. This study assessed the effect of widely used consumer-grade cameras and flight altitudes on the detection and delineation of typical Central European conifer trees. The study compares the performance of four solutions (three of which are ready-made commercial solutions suitable for "out of the box" use), providing information on the reliability of data acquired by inexperienced users of these systems. In addition, it presents a clear elementary workflow for inexperienced users, making such data easier to use in forestry practice.

Our results demonstrate a relationship between the flight altitude and the quality of the resulting product. While RGB cameras with higher spatial resolutions performed better at higher altitudes, multispectral cameras with much coarser spatial resolution required lower flight altitudes to yield sufficient accuracy. For the multispectral cameras, the success of tree detection and crown diameter determination decreased with increasing flight altitude, i.e., with the declining detail of the forest canopy.

We found that the imagery acquisition altitude of 150 m AGL was associated with the best performance, especially for sensors with a higher spatial resolution (i.e., consumer-grade RGB cameras); an additional benefit of this flight altitude compared to lower ones is the smaller number of images, which leads to shorter computational time and lower costs. On the other hand, more expensive multispectral cameras with a much lower spatial resolution can also achieve promising results, but these sensors require lower flight levels of approximately 100 m AGL.

Author Contributions

All of the authors contributed in a substantial way to the manuscript. J.K. and T.K. invented, conceived, designed and performed the experiment, and wrote significant manuscript parts. J.K. was responsible for project management and administration. P.K. contributed by acquiring the UAV imagery. J.K. processed all of the input remote sensing data. K.H. performed the formal analysis and statistical evaluation. J.K. and T.K. ensured the project funding. All of the authors read and approved the submitted manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

The research was supported by the Technology Agency of the Czech Republic under the Grant Nos. CK02000203 and TJ02000283.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to data licensing reasons.

Acknowledgments

We would like to thank David Balhar for organising and performing the aerial surveys and Dominika Gulková for measuring the crown diameters. Also, many thanks to Jaroslav Janošek for his helpful comments.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

Scatterplots showing the association between the manually and automatically delineated tree crowns—examples from Site I.

Figure A2.

Scatterplots showing the association between the manually and automatically delineated tree crowns—examples from Site II.

References

- Peña, L.; Casado-Arzuaga, I.; Onaindia, M. Mapping recreation supply and demand using an ecological and a social evaluation approach. Ecosyst. Serv. 2015, 13, 108–118.

- Ciais, P.; Schelhaas, M.J.; Zaehle, S.; Piao, S.L.; Cescatti, A.; Liski, J.; Luyssaert, S.; Le-Maire, G.; Schulze, E.-D.D.; Bouriaud, O.; et al. Carbon accumulation in European forests. Nat. Geosci. 2007, 1, 2000–2004.

- Pan, Y.; Birdsey, R.A.; Phillips, O.L.; Jackson, R.B. The Structure, Distribution, and Biomass of the World’s Forests. Annu. Rev. Ecol. Evol. Syst. 2013, 44, 593–622.

- Estreguil, C.; Caudullo, G.; de Rigo, D.; San-Miguel-Ayanz, J. Forest Landscape in Europe: Pattern, Fragmentation and Connectivity. Eur. Sci. Tech. Res. 2013, 25717, 18.

- Lewis, S.L.; Edwards, D.P.; Galbraith, D. Increasing human dominance of Tropical Forests. Science 2015, 349, 827–832.

- Smith, P.; House, J.I.; Bustamante, M.; Sobocká, J.; Harper, R.; Pan, G.; West, P.C.; Clark, J.M.; Adhya, T.; Rumpel, C.; et al. Global change pressures on soils from land use and management. Glob. Chang. Biol. 2016, 22, 1008–1028.

- Kautz, M.; Meddens, A.J.H.; Hall, R.J.; Arneth, A. Biotic disturbances in Northern Hemisphere forests—A synthesis of recent data, uncertainties and implications for forest monitoring and modelling. Glob. Ecol. Biogeogr. 2017, 26, 533–552.

- White, J.C.; Coops, N.C.; Wulder, M.A.; Vastaranta, M.; Hilker, T.; Tompalski, P. Remote Sensing Technologies for Enhancing Forest Inventories: A Review. Can. J. Remote Sens. 2016, 42, 619–641.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, aircraft and satellite remote sensing platforms for precision viticulture. Remote Sens. 2015, 7, 2971–2990.

- Banu, T.P.; Borlea, G.F.; Banu, C. The Use of Drones in Forestry. J. Environ. Sci. Eng. B 2016, 5, 557–562.

- Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicle Forest Remote Sensing—A Systematic Review. Part I: A General Framework. Forests 2021, 12, 327.

- Dainelli, R.; Toscano, P.; Di Gennaro, S.F.; Matese, A. Recent Advances in Unmanned Aerial Vehicles Forest Remote Sensing—A Systematic Review. Part II: Research Applications. Forests 2021, 12, 397.

- Gambella, F.; Sistu, L.; Piccirilli, D.; Corposanto, S.; Caria, M.; Arcangeletti, E.; Proto, A.R.; Chessa, G.; Pazzona, A. Forest and UAV: A bibliometric review. Contemp. Eng. Sci. 2016, 9, 1359–1370.

- Brovkina, O.; Cienciala, E.; Surový, P.; Janata, P. Unmanned aerial vehicles (UAV) for assessment of qualitative classification of Norway spruce in temperate forest stands. Geo-Spatial Inf. Sci. 2018, 21, 12–20.

- Nevalainen, O.; Honkavaara, E.; Tuominen, S.; Viljanen, N.; Hakala, T.; Yu, X.; Hyyppä, J.; Saari, H.; Pölönen, I.; Imai, N.N.; et al. Individual tree detection and classification with UAV-Based photogrammetric point clouds and hyperspectral imaging. Remote Sens. 2017, 9, 185.

- Abdollahnejad, A.; Panagiotidis, D. Tree Species Classification and Health Status Assessment for a Mixed Broadleaf-Conifer Forest with UAS Multispectral Imaging. Remote Sens. 2020, 12, 3722.

- Minařík, R.; Langhammer, J.; Lendzioch, T. Detection of Bark Beetle Disturbance at Tree Level Using UAS Multispectral Imagery and Deep Learning. Remote Sens. 2021, 13, 4768.

- Cessna, J.; Alonzo, M.G.; Foster, A.C.; Cook, B.D. Mapping Boreal Forest Spruce Beetle Health Status at the Individual Crown Scale Using Fused Spectral and Structural Data. Forests 2021, 12, 1145.

- Guerra-Hernández, J.; Díaz-Varela, R.A.; Álvarez-González, J.G.; Rodríguez-González, P.M. Assessing a novel modelling approach with high resolution UAV imagery for monitoring health status in priority riparian forests. For. Ecosyst. 2021, 8, 1–21.

- Klouček, T.; Komárek, J.; Surový, P.; Hrach, K.; Janata, P.; Vašíček, B. The use of UAV mounted sensors for precise detection of bark beetle infestation. Remote Sens. 2019, 11, 1561.

- Mohan, M.; Silva, C.A.; Klauberg, C.; Jat, P.; Catts, G.; Cardil, A.; Hudak, A.T.; Dia, M. Individual tree detection from unmanned aerial vehicle (UAV) derived canopy height model in an open canopy mixed conifer forest. Forests 2017, 8, 340.

- Almeida, A.; Gonçalves, F.; Silva, G.; Mendonça, A.; Gonzaga, M.; Silva, J.; Souza, R.; Leite, I.; Neves, K.; Boeno, M.; et al. Individual Tree Detection and Qualitative Inventory of a Eucalyptus sp. Stand Using UAV Photogrammetry Data. Remote Sens. 2021, 13, 3655.

- Tuominen, S.; Balazs, A.; Honkavaara, E.; Pölönen, I.; Saari, H.; Hakala, T.; Viljanen, N. Hyperspectral UAV-Imagery and photogrammetric canopy height model in estimating forest stand variables. Silva Fenn. 2017, 51, 7721.

- Wallace, L.; Lucieer, A.; Malenovský, Z.; Turner, D.; Vopěnka, P. Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 2016, 7, 62.

- Banerjee, B.P.; Spangenberg, G.; Kant, S. Fusion of Spectral and Structural Information from Aerial Images for Improved Biomass Estimation. Remote Sens. 2020, 12, 3164.

- Kašpar, V.; Hederová, L.; Macek, M.; Müllerová, J.; Prošek, J.; Surový, P.; Wild, J.; Kopecký, M. Temperature buffering in temperate forests: Comparing microclimate models based on ground measurements with active and passive remote sensing. Remote Sens. Environ. 2021, 263, 112522.

- Štroner, M.; Urban, R.; Lidmila, M.; Kolář, V.; Křemen, T. Vegetation filtering of a steep rugged terrain: The performance of standard algorithms and a newly proposed workflow on an example of a railway ledge. Remote Sens. 2021, 13, 3050.

- Almeida, D.R.A.; Broadbent, E.N.; Zambrano, A.M.A.; Wilkinson, B.E.; Ferreira, M.E.; Chazdon, R.; Meli, P.; Gorgens, E.B.; Silva, C.A.; Stark, S.C.; et al. Monitoring the structure of forest restoration plantations with a drone-lidar system. Int. J. Appl. Earth Obs. Geoinf. 2019, 79, 192–198.

- Kuželka, K.; Slavík, M.; Surový, P. Very high density point clouds from UAV laser scanning for automatic tree stem detection and direct diameter measurement. Remote Sens. 2020, 12, 1236.

- Lindberg, E.; Holmgren, J. Individual Tree Crown Methods for 3D Data from Remote Sensing. Curr. For. Reports 2017, 3, 19–31.

- Slavík, M.; Kuželka, K.; Modlinger, R.; Tomášková, I.; Surový, P. UAV laser scans allow detection of morphological changes in tree canopy. Remote Sens. 2020, 12, 3829.

- Alonzo, M.; Andersen, H.E.; Morton, D.C.; Cook, B.D. Quantifying boreal forest structure and composition using UAV structure from motion. Forests 2018, 9, 119.

- Navarro, A.; Young, M.; Allan, B.; Carnell, P.; Macreadie, P.; Ierodiaconou, D. The application of Unmanned Aerial Vehicles (UAVs) to estimate above-ground biomass of mangrove ecosystems. Remote Sens. Environ. 2020, 242, 111747.

- Berra, E.F.; Peppa, M.V. Advances and Challenges of UAV SFM MVS Photogrammetry and Remote Sensing: Short Review; IEEE: Piscataway, NJ, USA, 2020; pp. 533–538.

- Meinen, B.U.; Robinson, D.T. Mapping erosion and deposition in an agricultural landscape: Optimization of UAV image acquisition schemes for SfM-MVS. Remote Sens. Environ. 2020, 239, 111666.

- Ghanbari Parmehr, E.; Amati, M. Individual Tree Canopy Parameters Estimation Using UAV-Based Photogrammetric and LiDAR Point Clouds in an Urban Park. Remote Sens. 2021, 13, 2062.

- Mohan, M.; Leite, R.V.; Broadbent, E.N.; Wan Mohd Jaafar, W.S.; Srinivasan, S.; Bajaj, S.; Dalla Corte, A.P.; Do Amaral, C.H.; Gopan, G.; Saad, S.N.M.; et al. Individual tree detection using UAV-lidar and UAV-SfM data: A tutorial for beginners. Open Geosci. 2021, 13, 1028–1039.

- Gallardo-Salazar, J.L.; Pompa-García, M. Detecting Individual Tree Attributes and Multispectral Indices Using Unmanned Aerial Vehicles: Applications in a Pine Clonal Orchard. Remote Sens. 2020, 12, 4144.

- Kuzmin, A.; Korhonen, L.; Kivinen, S.; Hurskainen, P.; Korpelainen, P.; Tanhuanpää, T.; Maltamo, M.; Vihervaara, P.; Kumpula, T. Detection of European Aspen (Populus tremula L.) Based on an Unmanned Aerial Vehicle Approach in Boreal Forests. Remote Sens. 2021, 13, 1723.

- Nguyen, H.T.; Caceres, M.L.L.; Moritake, K.; Kentsch, S.; Shu, H.; Diez, Y. Individual Sick Fir Tree (Abies mariesii) Identification in Insect Infested Forests by Means of UAV Images and Deep Learning. Remote Sens. 2021, 13, 260.

- Qin, J.; Wang, B.; Wu, Y.; Lu, Q.; Zhu, H. Identifying Pine Wood Nematode Disease Using UAV Images and Deep Learning Algorithms. Remote Sens. 2021, 13, 162.

- Diez, Y.; Kentsch, S.; Fukuda, M.; Caceres, M.L.L.; Moritake, K.; Cabezas, M. Deep Learning in Forestry Using UAV-Acquired RGB Data: A Practical Review. Remote Sens. 2021, 13, 2837.

- Kameyama, S.; Sugiura, K. Effects of Differences in Structure from Motion Software on Image Processing of Unmanned Aerial Vehicle Photography and Estimation of Crown Area and Tree Height in Forests. Remote Sens. 2021, 13, 626.

- Verhoeven, G. Taking computer vision aloft - archaeological three-dimensional reconstructions from aerial photographs with photoscan. Archaeol. Prospect. 2011, 18, 67–73.

- Puliti, S.; Ørka, H.O.; Gobakken, T.; Næsset, E. Inventory of small forest areas using an unmanned aerial system. Remote Sens. 2015, 7, 9632–9654.

- Snavely, N.; Seitz, S.M.; Szeliski, R. Modeling the world from Internet photo collections. Int. J. Comput. Vis. 2008, 80, 189–210.

- Klápště, P.; Fogl, M.; Barták, V.; Gdulová, K.; Urban, R.; Moudrý, V. Sensitivity analysis of parameters and contrasting performance of ground filtering algorithms with UAV photogrammetry-based and LiDAR point clouds. Int. J. Digit. Earth 2020, 0, 1672–1694.

- Roussel, J.-R.; Auty, D. Airborne LiDAR Data Manipulation and Visualization for Forestry Applications. R Package Version 3.1.0. Available online: https://cran.r-project.org/package=lidR (accessed on 22 March 2022).

- Roussel, J.R.; Auty, D.; Coops, N.C.; Tompalski, P.; Goodbody, T.R.H.; Meador, A.S.; Bourdon, J.F.; de Boissieu, F.; Achim, A. lidR: An R package for analysis of Airborne Laser Scanning (ALS) data. Remote Sens. Environ. 2020, 251, 112061.

- Ke, Y.; Quackenbush, L.J. A review of methods for automatic individual tree-crown detection and delineation from passive remote sensing. Int. J. Remote Sens. 2011, 32, 4725–4747.

- Vauhkonen, J.; Ene, L.; Gupta, S.; Heinzel, J.; Holmgren, J.; Pitkänen, J.; Solberg, S.; Wang, Y.; Weinacker, H.; Hauglin, K.M.; et al. Comparative testing of single-tree detection algorithms under different types of forest. Forestry 2012, 85, 27–40.

- Panagiotidis, D.; Abdollahnejad, A.; Surový, P.; Chiteculo, V. Determining tree height and crown diameter from high-resolution UAV imagery. Int. J. Remote Sens. 2017, 38, 2392–2410.

- Surový, P.; Almeida Ribeiro, N.; Panagiotidis, D. Estimation of positions and heights from UAV-sensed imagery in tree plantations in agrosilvopastoral systems. Int. J. Remote Sens. 2018, 39, 4786–4800.

- Iglhaut, J.; Cabo, C.; Puliti, S.; Piermattei, L.; O’Connor, J.; Rosette, J. Structure from Motion Photogrammetry in Forestry: A Review. Curr. For. Reports 2019, 5, 155–168.

- Ferreira, M.P.; de Almeida, D.R.A.; Papa, D.d.A.; Minervino, J.B.S.; Veras, H.F.P.; Formighieri, A.; Santos, C.A.N.; Ferreira, M.A.D.; Figueiredo, E.O.; Ferreira, E.J.L. Individual tree detection and species classification of Amazonian palms using UAV images and deep learning. For. Ecol. Manage. 2020, 475, 118397.

- Miraki, M.; Sohrabi, H.; Fatehi, P.; Kneubuehler, M. Individual tree crown delineation from high-resolution UAV images in broadleaf forest. Ecol. Inform. 2021, 61, 101207.

- Komárek, J. The perspective of unmanned aerial systems in forest management: Do we really need such details? Appl. Veg. Sci. 2020, 23, 718–721.

- Vacca, G. Overview of open source software for close range photogrammetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci.-ISPRS Arch. 2019, 42, 239–245.

- Ganz, S.; Käber, Y.; Adler, P. Measuring tree height with remote sensing-a comparison of photogrammetric and LiDAR data with different field measurements. Forests 2019, 10, 694.

- St-Onge, B.; Audet, F.-A.; Bégin, J. Characterizing the Height Structure and Composition of a Boreal Forest Using an Individual Tree Crown Approach Applied to Photogrammetric Point Clouds. Forests 2015, 6, 3899–3922.

- Guerra-Hernández, J.; Cosenza, D.N.; Rodriguez, L.C.E.; Silva, M.; Tomé, M.; Díaz-Varela, R.A.; González-Ferreiro, E. Comparison of ALS- and UAV(SfM)-derived high-density point clouds for individual tree detection in Eucalyptus plantations. Int. J. Remote Sens. 2018, 39, 5211–5235.

- Qiu, L.; Jing, L.; Hu, B.; Li, H.; Tang, Y. A New Individual Tree Crown Delineation Method for High Resolution Multispectral Imagery. Remote Sens. 2020, 12, 585.

- Zhou, Y.; Wang, L.; Jiang, K.; Xue, L.; An, F.; Chen, B.; Yun, T. Individual tree crown segmentation based on aerial image using superpixel and topological features. J. Appl. Remote Sens. 2020, 14, 022210.

- Štroner, M.; Urban, R.; Seidl, J.; Reindl, T.; Brouček, J. Photogrammetry Using UAV-Mounted GNSS RTK: Georeferencing Strategies without GCPs. Remote Sens. 2021, 13, 1336.

- Krause, S.; Sanders, T.G.M.; Mund, J.-P.P.; Greve, K. UAV-based photogrammetric tree height measurement for intensive forest monitoring. Remote Sens. 2019, 11, 758.

- Berra, E.F. Individual tree crown detection and delineation across a woodland using leaf-on and leaf-off imagery from a UAV consumer-grade camera. J. Appl. Remote Sens. 2020, 14, 034501.

- Huang, H.; Li, X.; Chen, C. Individual tree crown detection and delineation from very-high-resolution UAV images based on bias field and marker-controlled watershed segmentation algorithms. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 2253–2262.

- Díaz-Varela, R.A.; de la Rosa, R.; León, L.; Zarco-Tejada, P.J. High-resolution airborne UAV imagery to assess olive tree crown parameters using 3D photo reconstruction: Application in breeding trials. Remote Sens. 2015, 7, 4213–4232.

- Yurtseven, H.; Akgul, M.; Coban, S.; Gulci, S. Determination and accuracy analysis of individual tree crown parameters using UAV based imagery and OBIA techniques. Meas. J. Int. Meas. Confed. 2019, 145, 651–664.

- Luo, F.; Zou, Z.; Liu, J.; Lin, Z. Dimensionality reduction and classification of hyperspectral image via multi-structure unified discriminative embedding. IEEE Trans. Geosci. Remote Sens. 2021, 11, 3128764.

- Duan, Y.; Huang, H.; Wang, T. Semisupervised Feature Extraction of Hyperspectral Image Using Nonlinear Geodesic Sparse Hypergraphs. IEEE Trans. Geosci. Remote Sens. 2021, 10, 3110855.

- Adhikari, A.; Kumar, M.; Agrawal, S.; Raghavendra, S. An Integrated Object and Machine Learning Approach for Tree Canopy Extraction from UAV Datasets. J. Indian Soc. Remote Sens. 2020, 49, 471–478.

- Kestur, R.; Angural, A.; Bashir, B.; Omkar, S.N.; Anand, G.; Meenavathi, M.B. Tree Crown Detection, Delineation and Counting in UAV Remote Sensed Images: A Neural Network Based Spectral–Spatial Method. J. Indian Soc. Remote Sens. 2018, 46, 991–1004.

- Gu, J.; Grybas, H.; Congalton, R.G. A Comparison of Forest Tree Crown Delineation from Unmanned Aerial Imagery Using Canopy Height Models vs. Spectral Lightness. Forests 2020, 11, 605.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).