Probabilistic Distributed Algorithms for Energy Efficient Routing and Tracking in Wireless Sensor Networks

Abstract

1. Introduction

1.1. A Brief Description of Wireless Sensor Networks

1.2. Critical Challenges

- The number of sensor particles in a sensor network is extremely large compared to that in a typical ad-hoc network.

- Sensor networks are typically prone to faults.

- Because of faults as well as energy limitations, sensor nodes may (permanently or temporarily) join or leave the network. This leads to highly dynamic network topology changes.

- The density of deployed devices in sensor networks is much higher than in ad-hoc networks.

- The limitations in energy, computational power and memory are much more severe in sensor networks.

Scalability

Efficiency

Fault-tolerance

1.3. Models and Relations between them

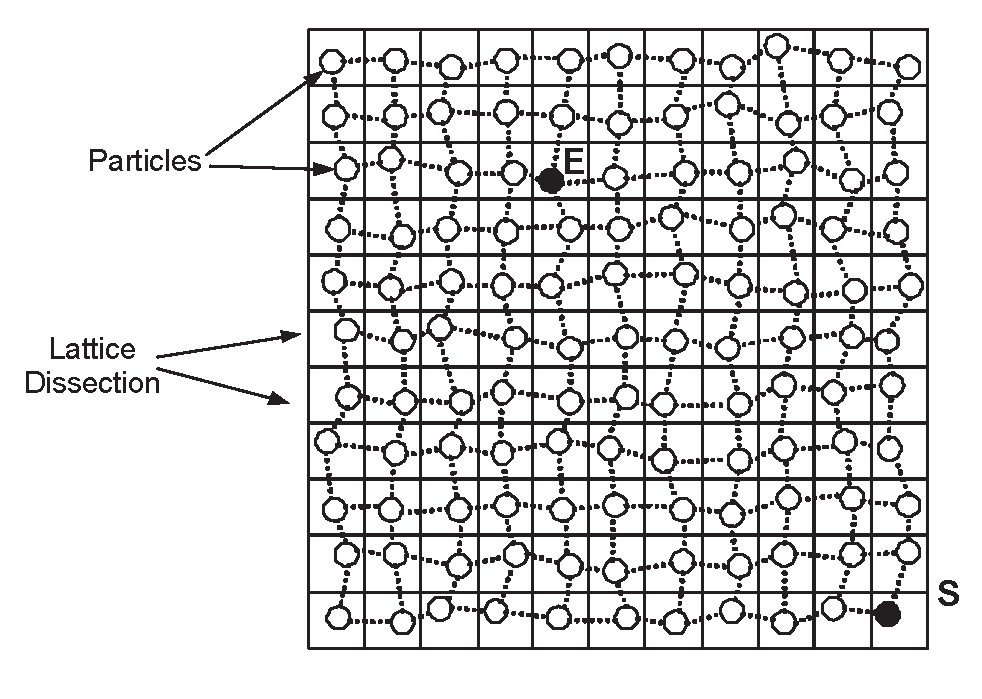

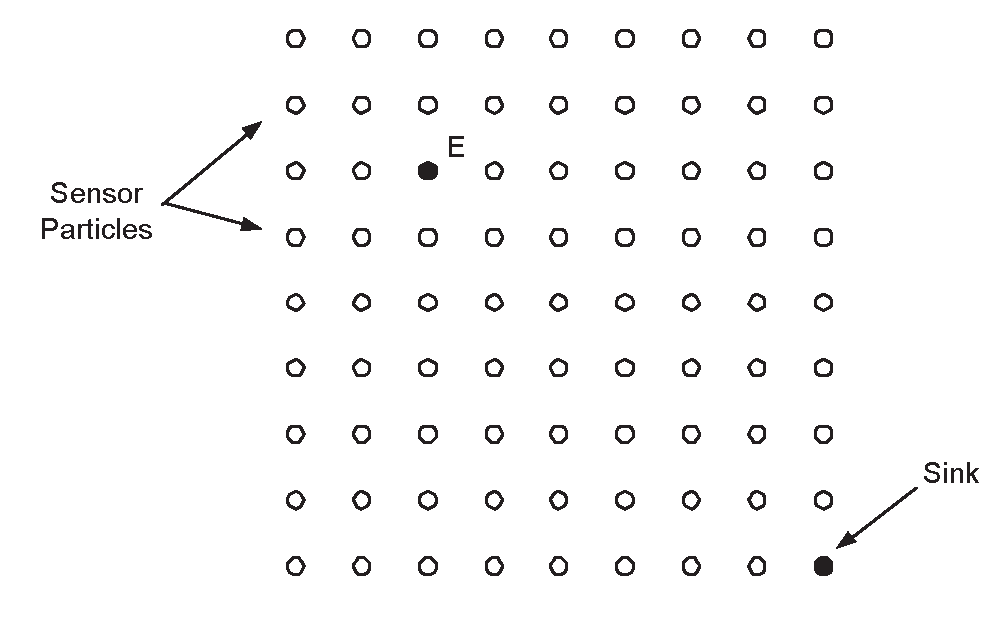

Basic Model M0

A stronger model M1

Variations

1.4. The Energy Efficiency Challenge in Routing and Tracking

- The Local Target Protocol (LTP), which performs a local optimization that tries to minimize the number of data transmissions.

- The Probabilistic Forwarding Protocol (PFR), which creates redundant data transmissions that are probabilistically optimized, in order to trade off energy efficiency against fault-tolerance.

- The Energy Balanced Protocol (EBP), which focuses on guaranteeing the same per-sensor energy dissipation, in order to prolong the lifetime of the network.

2. LTP: A Hop-by-Hop Data Propagation Protocol

2.1. The Protocol

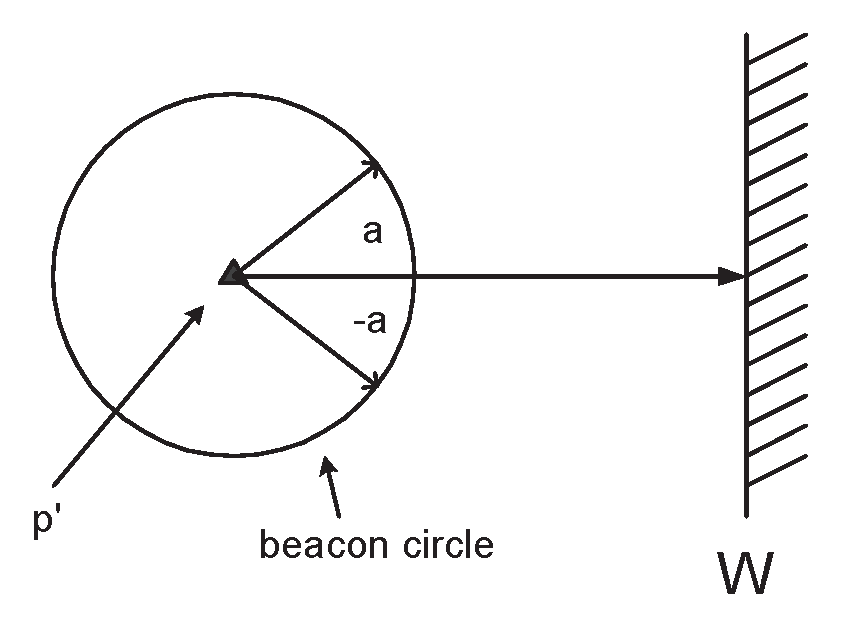

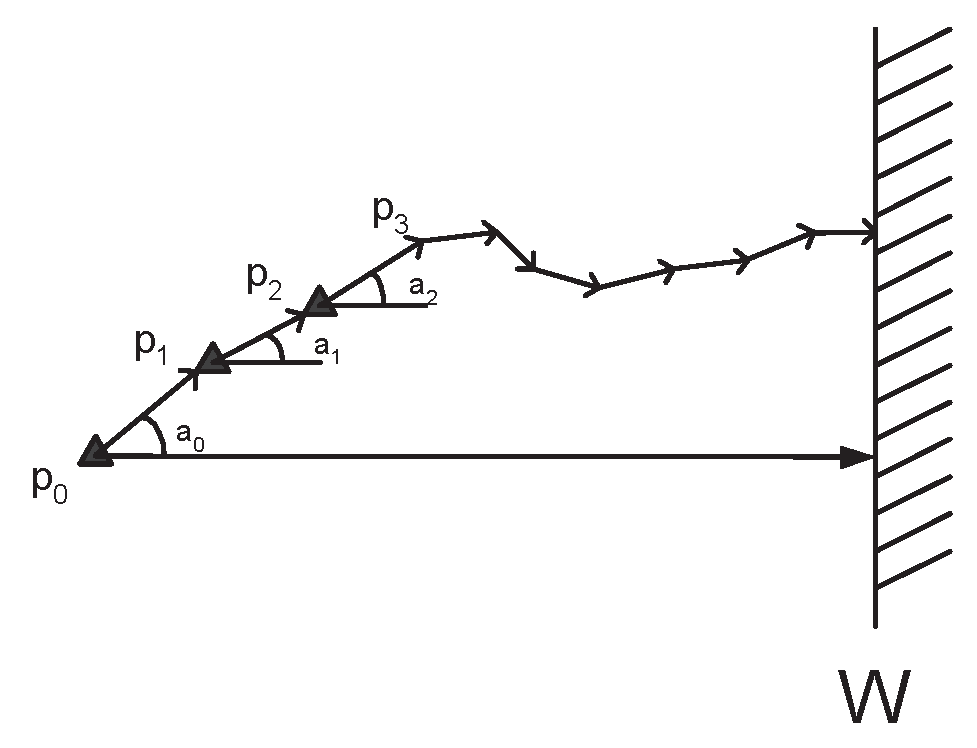

- Search Phase: The particle p′ currently holding the information uses a periodic low-energy directional broadcast in order to discover a particle nearer to the sink S than itself (i.e., a particle p″ where d(p″, S) < d(p′, S)).

- Direct Transmission Phase: Then, p′ sends info() to p″.

- Backtrack Phase: If consecutive repetitions of the search phase fail to discover a particle nearer to the sink S, then p′ sends info() back to the particle from which it originally received the information.
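The three phases above can be illustrated with a minimal, centralized simulation. This is only a sketch under stated assumptions, not the protocol as implemented on real devices: particles are points in the plane with a common transmission range, a single search stands in for the "consecutive repetitions" of the search phase, and when several nearer particles are found the one closest to the sink is chosen. The names `ltp_route`, `positions`, `sink` and `radio_range` are hypothetical and introduced only for illustration.

```python
import math


def dist(a, b):
    """Euclidean distance between two points in the plane."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def ltp_route(positions, source, sink, radio_range):
    """Simulate hop-by-hop propagation with backtracking.

    positions   : dict mapping particle id -> (x, y)   (assumed layout)
    source      : id of the particle that first holds the information
    sink        : (x, y) position of the sink
    radio_range : common transmission range (an assumption of this sketch)

    Returns the sequence of particles that held the information, in order,
    or None if every reachable particle turned out to be a dead end.
    """
    stack = [source]   # current forward path; used for backtracking
    hops = [source]    # every particle that held the information, in order
    failed = set()     # particles whose search phase found nothing

    while stack:
        current = stack[-1]
        here = positions[current]
        if dist(here, sink) <= radio_range:
            return hops  # the sink itself is within range

        # Search phase: look for particles in range that are nearer to the sink.
        nearer = [
            q for q, pos in positions.items()
            if q != current and q not in failed
            and dist(here, pos) <= radio_range
            and dist(pos, sink) < dist(here, sink)
        ]
        if nearer:
            # Direct transmission phase (tie-breaking rule is an assumption).
            nxt = min(nearer, key=lambda q: dist(positions[q], sink))
            stack.append(nxt)
            hops.append(nxt)
        else:
            # Backtrack phase: return the information to the previous holder.
            failed.add(current)
            stack.pop()
            if stack:
                hops.append(stack[-1])
    return None
```

For instance, calling `ltp_route` on a small layout in which the greedy choice leads into a dead end returns a hop sequence that revisits the previous holder before continuing along another particle, which is exactly the extra cost that backtracking introduces.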

2.2. Theoretical Analysis

2.3. Local Optimization: The Min-two Uniform Targets Protocol (M2TP)

3. PFR - A Probabilistic Forwarding Protocol

3.1. The Protocol

- physical damage of sensors,

- deliberate removal of some of them (possibly by an adversary in military applications),

- changes in the position of the sensors due to a variety of reasons (weather conditions, human interaction etc.), and

- physical obstacles blocking communication.

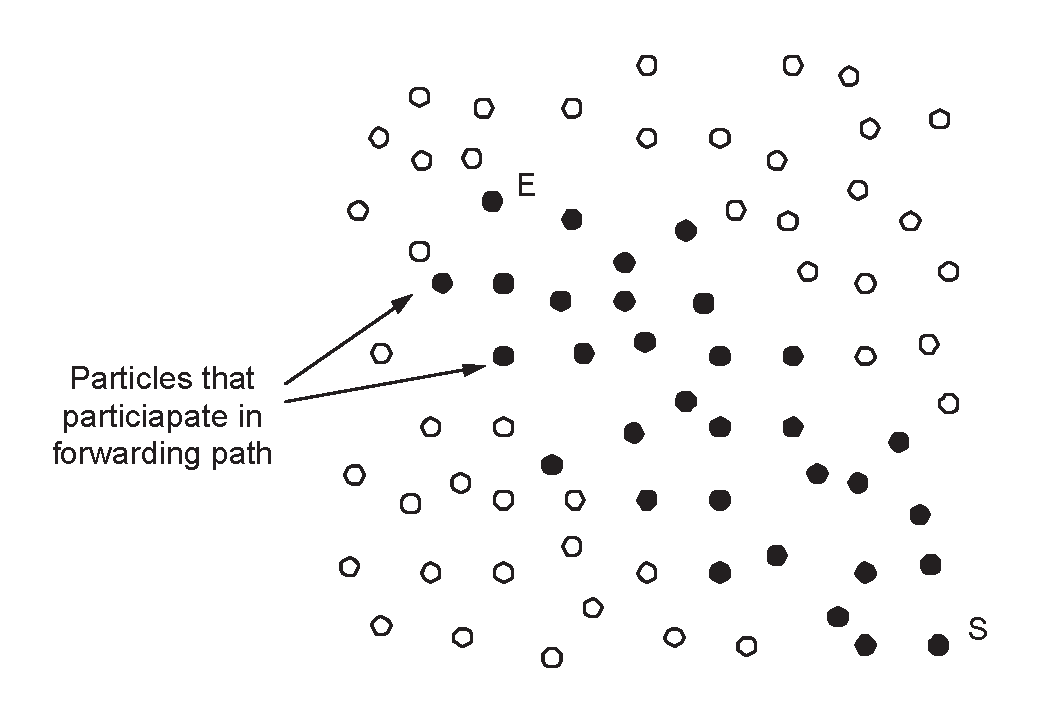

- Phase 1: The “Front” Creation Phase. Initially the protocol builds (by using flooding that is limited in terms of rounds) a sufficiently large “front” of particles, in order to guarantee the survivability of the data propagation process. During this phase, each particle that has received the data to be propagated deterministically forwards them towards the sink. In particular, for a sufficiently large number of steps, each particle broadcasts the information to all its neighbors, towards the sink. Note that to implement this phase, and in particular to count the number of steps, we use a counter in each message. This counter needs only a number of bits that is logarithmic in the number of steps.

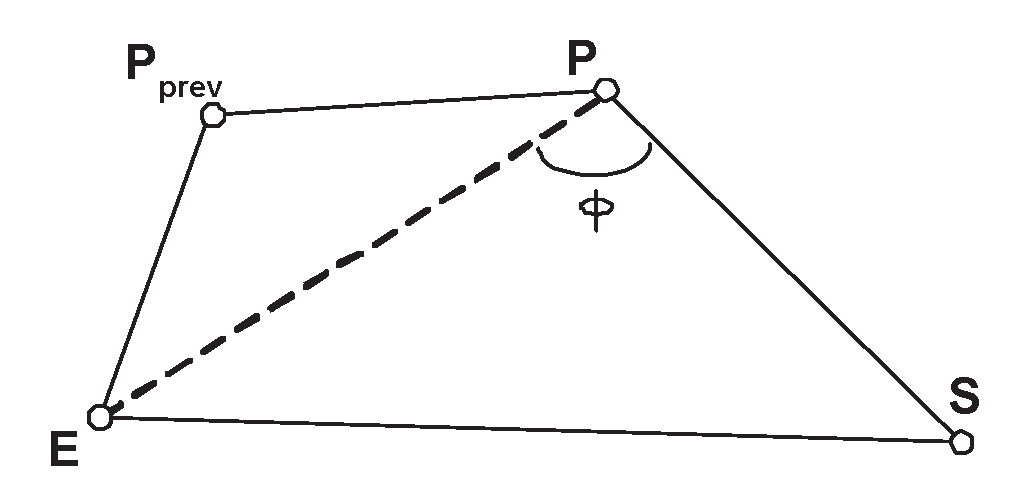

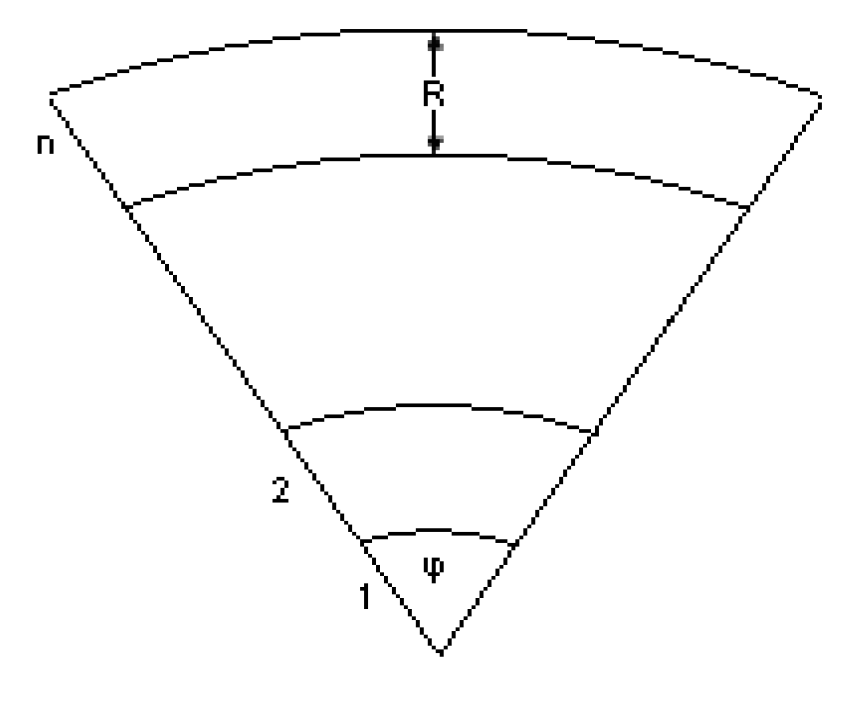

- Phase 2: The Probabilistic Forwarding Phase. During this phase, each particle P possessing the information under propagation calculates an angle ϕ by calling the subprotocol “ϕ-calculation” (see description below) and broadcasts info() to all its neighbors with probability $\mathbb{P}_{fwd}$ (or it does not propagate any data with probability $1 - \mathbb{P}_{fwd}$), defined as follows:

  $$\mathbb{P}_{fwd} = \begin{cases} 1 & \text{if } \phi \geq \phi_{threshold} \\ \phi / \pi & \text{otherwise} \end{cases}$$

  where ϕ is the angle defined by the line EP and the line PS, and ϕ_threshold = 134° (the reasons for selecting this ϕ_threshold can be found in [9]).
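The forwarding decision of Phase 2 can be sketched in a few lines. The sketch assumes the forwarding rule described for PFR in [9]: forward with probability 1 when ϕ is at least the threshold, and with probability ϕ/π otherwise. It also assumes, purely for illustration, that the coordinates of E, P and S are known, so that ϕ can be computed directly; this replaces the ϕ-calculation subprotocol and is not how a real particle would obtain the angle. The function names are hypothetical.

```python
import math
import random

PHI_THRESHOLD_DEG = 134.0  # threshold value stated in the text


def angle_at_p(e, p, s):
    """Angle (in degrees) at P between the segments PE and PS.

    Coordinates are an assumption of this sketch; the protocol itself
    relies on the phi-calculation subprotocol instead.
    """
    v1 = (e[0] - p[0], e[1] - p[1])
    v2 = (s[0] - p[0], s[1] - p[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        return 180.0  # P coincides with E or S: treat it as lying on the line ES
    cos_phi = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_phi = max(-1.0, min(1.0, cos_phi))  # guard against rounding errors
    return math.degrees(math.acos(cos_phi))


def forwarding_probability(phi_deg):
    """Assumed rule: 1 if phi >= threshold, phi/pi otherwise (phi in degrees here)."""
    if phi_deg >= PHI_THRESHOLD_DEG:
        return 1.0
    return phi_deg / 180.0


def should_forward(e, p, s, rng=random):
    """Draw the random forwarding decision for particle P."""
    return rng.random() < forwarding_probability(angle_at_p(e, p, s))
```

Under this rule, particles lying close to the line ES see an angle near 180° and always forward, while particles far from that line forward only occasionally, which is what concentrates the transmissions in a thin zone around ES.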

The ϕ-calculation subprotocol (see Figure 5)

3.2. Properties of PFR

- Correctness. Π must guarantee that data arrives at the position S, given that the whole network exists and is operational.

- Robustness. Π must guarantee that data arrives at enough points in a small interval around S, in cases where part of the network has become inoperative.

- Efficiency. If Π activates k particles during its operation, then Π should have a small ratio r = k/N of the number of activated particles over the total number N of particles. Thus r is an energy efficiency measure of Π.

3.3. The Correctness of PFR

3.4. The Energy Efficiency of PFR

3.5. The Robustness of PFR

4. The Energy Balance Problem

4.1. The Model and the Problem

4.2. EBP: The Energy Balance Protocol

4.3. Basic Definitions - Preliminaries

4.4. The General Solution

4.5. A Closed Form

4.6. Further research on energy balance

5. Tracking Moving Entities

5.1. Problem Description

5.2. Our Contribution

5.3. Related Work and Comparison

Coverage

Tracking

Centralized Approaches

Distributed Approaches

5.4. The Model and its Combinatorial Abstraction

Sensors and Sensor Network

A model for targets

The combinatorial model

- (a) that the union of all sets is U.

- (b) that each e in U belongs to at least 3 sets initially.
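The two conditions above are easy to check mechanically for a given instance. The following is a small sketch, assuming the universe U and the family of sets are given explicitly as Python collections; the function name `satisfies_model` and the parameter `min_multiplicity` are hypothetical and introduced only for illustration.

```python
from collections import Counter


def satisfies_model(universe, sets, min_multiplicity=3):
    """Check conditions (a) and (b) of the combinatorial model.

    (a) the union of all sets equals the universe U;
    (b) every element of U belongs to at least `min_multiplicity` sets.
    """
    universe = set(universe)

    # Condition (a): the sets together cover exactly U.
    if set().union(*sets) != universe:
        return False

    # Condition (b): count in how many sets each element appears.
    counts = Counter(e for s in sets for e in set(s))
    return all(counts[e] >= min_multiplicity for e in universe)
```

A family that fails the check either leaves some element of U uncovered, contains elements outside U, or covers some element fewer than three times.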

5.5. A way to compute near optimal FMDs

The randomized rounding method

- (1)

- (2)

- Let be the collection of all Σ containing element e. Then, for each element e,

- (1)

- (2)

5.6. The triangulation issue

5.7. Alternative collaborative processing methods for our approach

6. Conclusions

References and Notes

- Akyildiz, I.F.; Su, W.; Sankarasubramaniam, Y.; Cayirci, E. Wireless sensor networks: a survey. J. Comput. Networks 2002, 38, 393–422.

- Alon, N.; Moshkovitz, D.; Safra, M. Algorithmic construction of sets for k-restrictions. ACM Trans. Algorithms 2006, 2, 153–177.

- Antoniou, T.; Boukerche, A.; Chatzigiannakis, I.; Mylonas, G.; Nikoletseas, S. A New Energy Efficient and Fault-tolerant Protocol for Data Propagation in Smart Dust Networks using Varying Transmission Range. In Proc. 37th Annual ACM/IEEE Simulation Symposium (ANSS’04), 2004.

- Akyildiz, I.F.; Su, W.; Sankarasubramaniam, Y.; Cayirci, E. A Survey on Sensor Networks. IEEE Commun. Mag. 2002, 40, 102–114.

- Aspnes, J.; Goldberg, D.; Yang, Y. On the computational complexity of sensor network localization. In Proc. 1st Int. Workshop on Algorithmic Aspects of Wireless Sensor Networks (ALGOSENSORS 2004); Springer-Verlag: Heidelberg, Germany, 2004; Lecture Notes in Computer Science, LNCS 3121; pp. 32–44.

- Boukerche, A.; Cheng, X.; Linus, J. Energy-Aware Data-Centric Routing in Microsensor Networks. In Proc. of ACM Modeling, Analysis and Simulation of Wireless and Mobile Systems, September 2003; pp. 42–49.

- Chakrabarty, K.; Iyengar, S.S.; Qi, H.; Cho, E. Grid coverage for surveillance and target location in distributed sensor networks. IEEE Trans. Comput. 2002, 51, 1448–1453.

- Chatzigiannakis, I.; Nikoletseas, S.; Spirakis, P. Smart Dust Protocols for Local Detection and Propagation. In Proc. 2nd ACM Workshop on Principles of Mobile Computing (POMC’2002), 2002. This article also appeared as: Chatzigiannakis, I.; Nikoletseas, S.; Spirakis, P. Efficient and Robust Protocols for Local Detection and Propagation in Smart Dust Networks. Mobile Networks and Applications 2005, 10, 133–149.

- Chatzigiannakis, I.; Dimitriou, T.; Nikoletseas, S.; Spirakis, P. A Probabilistic Algorithm for Efficient and Robust Data Propagation in Smart Dust Networks. In Proc. 5th European Wireless Conference on Mobile and Wireless Systems beyond 3G (EW 2004), 2004. This article also appeared as: Chatzigiannakis, I.; Dimitriou, T.; Nikoletseas, S.; Spirakis, P. A probabilistic algorithm for efficient and robust data propagation in wireless sensor networks. Ad-Hoc Networks 2006, 4, 621–635.

- Chatzigiannakis, I.; Dimitriou, T.; Mavronicolas, M.; Nikoletseas, S.; Spirakis, P. A Comparative Study of Protocols for Efficient Data Propagation in Smart Dust Networks. In Proc. International Conference on Parallel and Distributed Computing (EURO-PAR), 2003. Euro-Par 2003 Parallel Processing; Springer-Verlag: Heidelberg, Germany, 2003; Lecture Notes in Computer Science, Chapter 15, pp. 1003–1016. This article also appeared as: Chatzigiannakis, I.; Dimitriou, T.; Mavronicolas, M.; Nikoletseas, S.; Spirakis, P. A Comparative Study of Protocols for Efficient Data Propagation in Smart Dust Networks. Parallel Processing Letters 2003, 13, 615–627.

- Chatzigiannakis, I.; Nikoletseas, S. A Sleep-Awake Protocol for Information Propagation in Smart Dust Networks. In Proc. 3rd Workshop on Mobile and Ad-Hoc Networks (WMAN), IPDPS Workshops; IEEE Press, 2003; p. 225. This article also appeared as: Chatzigiannakis, I.; Nikoletseas, S. Design and analysis of an efficient communication strategy for hierarchical and highly changing ad-hoc mobile networks. Mobile Networks and Applications 2004, 9, 319–332.

- Chen, B.; Jamieson, K.; Balakrishnan, H.; Morris, R. SPAN: An energy efficient coordination algorithm for topology maintenance in ad-hoc wireless networks. In Proc. ACM/IEEE International Conference on Mobile Computing (MOBICOM), 2001.

- Dhillon, S.S.; Chakrabarty, K.; Iyengar, S.S. Sensor placement for grid coverage under imprecise detections. In Proc. 5th International Conference on Information Fusion (FUSION’02), Annapolis, MD, Jul 2002; pp. 1–10.

- Duh, R.-C.; Fuerer, M. Approximation of k-Set Cover by Semi-Local Optimization. In Proc. 29th Annual ACM Symposium on the Theory of Computing, 1997; Association for Computing Machinery: New York, NY, USA, 1997; pp. 256–264.

- Estrin, D.; Govindan, R.; Heidemann, J.; Kumar, S. Next Century Challenges: Scalable Coordination in Sensor Networks. In Proc. 5th ACM/IEEE International Conference on Mobile Computing, (MOBICOM’99), 1999.

- Euthimiou, H.; Nikoletseas, S.; Rolim, J. Energy Balanced Data Propagation in Wireless Sensor Networks. In Proc. 4th International Workshop on Algorithms for Wireless, Mobile, Ad-Hoc and Sensor Networks (WMAN ’04), 2004. This article also appeared as: Euthimiou, H.; Nikoletseas, S.; Rolim, J. Energy balanced data propagation in wireless sensor networks. Wireless Networks 2006, 12, 691–707.

- Feige, U. A Threshold of ln n for Approximating Set Cover. J. ACM 1998, 45, 634–652.

- Gui, C.; Mohapatra, P. Power conservation and quality of surveillance in target tracking sensor networks. In Proc. ACM MobiCom Conference, 2004.

- Gupta, R.; Das, S.R. Tracking moving targets in a smart sensor network. In Proc. VTC Symp., 2003.

- Halldorsson, M.M. Approximating Discrete Collections via Local Improvements. In Proc. ACM SODA, 1995.

- Halldorsson, M.M. Approximating k-Set Cover and Complementary Graph Coloring. In Proc. IPCO, 1996.

- Hollar, S.E.A. COTS Dust. M.Sc. Thesis, University of California, Berkeley, USA, 2000.

- Heinzelman, W.R.; Chandrakasan, A.; Balakrishnan, H. Energy-Efficient Communication Protocol for Wireless Microsensor Networks. In Proc. 33rd Hawaii International Conference on System Sciences (HICSS’2000), 2000.

- Heinzelman, W.R.; Kulik, J.; Balakrishnan, H. Adaptive Protocols for Information Dissemination in Wireless Sensor Networks. In Proc. 5th ACM/IEEE International Conference on Mobile Computing (MOBICOM’1999), 1999.

- Intanagonwiwat, C.; Govindan, R.; Estrin, D. Directed Diffusion: A Scalable and Robust Communication Paradigm for Sensor Networks. In Proc. 6th ACM/IEEE International Conference on Mobile Computing (MOBICOM’2000), 2000.

- Intanagonwiwat, C.; Govindan, R.; Estrin, D.; Heidemann, J.; Silva, F. Directed Diffusion for Wireless Sensor Networking. IEEE/ACM Trans. Networking 2003, 11, 2–16 (extended version of [25]).

- Intanagonwiwat, C.; Estrin, D.; Govindan, R.; Heidemann, J. Impact of Network Density on Data Aggregation in Wireless Sensor Networks. Technical Report 01-750. University of Southern California Computer Science Department, November 2001.

- Jain, S.; Demmer, M.; Patra, R.; Fall, K. Using Redundancy to Cope with Failures in a Delay Tolerant Network. In Proc. SIGCOMM, 2005.

- Kahn, J.M.; Katz, R.H.; Pister, K.S.J. Next Century Challenges: Mobile Networking for Smart Dust. In Proc. 5th ACM/IEEE International Conference on Mobile Computing, September 1999; pp. 271–278.

- Karp, B. Geographic Routing for Wireless Networks. Ph.D. Thesis, Harvard University, Cambridge, USA, 2000.

- Kleinrock, L. Queueing Systems Theory; John Wiley & Sons: New York, NY, USA, 1975; Vol. I, p. 100.

- Liu, J.; Liu, J.; Reich, J.; Cheung, P.; Zhao, F. Distributed group management for track initiation and maintenance in target localization applications. In Proc. Int. Workshop on Information Processing in Sensor Networks (IPSN), 2003.

- Lund, C.; Yannakakis, M. On the hardness of approximating minimization problems. J. ACM 1994, 960–981.

- μ-Adaptive Multi-domain Power aware Sensors. http://www-mtl.mit.edu/research/icsystems/uamps April 2001.

- Manjeshwar, A.; Agrawal, D.P. TEEN: A Routing Protocol for Enhanced Efficiency in Wireless Sensor Networks. In Proc. 2nd International Workshop on Parallel and Distributed Computing Issues in Wireless Networks and Mobile Computing, satellite workshop of the 16th Annual International Parallel & Distributed Processing Symposium (IPDPS’02), 2002.

- Mehlhorn, K.; Näher, S. LEDA: A Platform for Combinatorial and Geometric Computing; Cambridge University Press: Cambridge, UK, 1999.

- Nikoletseas, S.; Spirakis, P. Efficient Sensor Network Design for Continuous Monitoring of Moving Objects. In Proc. of the Third International Workshop on Algorithmic Aspects of Wireless Sensor Networks (ALGOSENSORS 07); Springer-Verlag: Heidelberg, Germany, 2008. Lecture Notes in Computer Science (LNCS), Volume 4837, pp. 18–31. This article also appeared in: Theoretical Computer Science 2008, 402, 56–66.

- Nikoletseas, S.; Chatzigiannakis, I.; Euthimiou, H.; Kinalis, A.; Antoniou, A.; Mylonas, G. Energy Efficient Protocols for Sensing Multiple Events in Smart Dust Networks. In Proc. 37th Annual ACM/IEEE Simulation Symposium (ANSS’04), 2004.

- Perkins, C.E. Ad Hoc Networking; Addison-Wesley: Boston, MA, USA, 2001.

- Poduri, S.; Sukhatme, G.S. Constrained coverage in mobile sensor networks. In Proc. IEEE Int. Conf. Robotics and Automation (ICRA’04), New Orleans, LA, USA, April-May 2004; pp. 40–50.

- Rao, N.S.V. Computational complexity issues in operative diagnostics of graph based systems. IEEE Trans. Comput. 1993, 42, 447–457.

- Ross, S.M. Stochastic Processes, 2nd ed.; John Wiley and Sons: Hoboken, NJ, USA, 1995.

- Shorey, R.; Ananda, A.; Chan, M.C.; Ooi, W.T. Mobile, Wireless, and Sensor Networks: Technology, Applications, and Future Directions. Wiley, 2006.

- Schurgers, C.; Tsiatsis, V.; Ganeriwal, S.; Srivastava, M. Topology Management for Sensor Networks: Exploiting Latency and Density. In Proc. MOBICOM, 2002.

- TinyOS: A Component-based OS for the Network Sensor Regime. http://webs.cs.berkeley.edu/tos/, October 2002.

- Triantafilloy, P.; Ntarmos, N.; Nikoletseas, S.; Spirakis, P. NanoPeer Networks and P2P Worlds. In Proc. 3rd IEEE International Conference on Peer-to-Peer Computing, 2003.

- Ye, W.; Heidemann, J.; Estrin, D. An Energy-Efficient MAC Protocol for Wireless Sensor Networks. In Proc. 12th IEEE International Conference on Computer Networks (INFOCOM), New York, NY, USA, June 23-27 2002.

- Wireless Integrated Sensor Networks. http://www.janet.ucla.edu/WINS, April 2001.

- Xu, Y.; Heidemann, J.; Estrin, D. Geography-informed energy conservation for ad-hoc routing. In Proc. ACM/IEEE International Conference on Mobile Computing (MOBICOM), Rome, Italy, July 16-21 2001.

- Warneke, B.; Last, M.; Liebowitz, B.; Pister, K.S.J. Smart dust: communicating with a cubic-millimeter computer. Computer 2001, 34, 44–51.

- Zhang, W.; Cao, G. Optimizing tree reconfiguration for mobile target tracking in sensor networks. In Proc. IEEE InfoCom, 2004.

- Zhao, F.; Guibas, L. Wireless Sensor Networks: An Information Processing Approach; Morgan Kaufmann Publishers: San Francisco, CA, USA, 2004.

- Zou, Y.; Chakrabarty, K. Sensor deployment and target localization based on virtual forces. In Proc. IEEE InfoCom, San Francisco, CA, April 2003; pp. 1293–1303.

- Ringwald, M.; Roemer, K. BitMAC: a deterministic, collision-free, and robust MAC protocol for sensor networks. In Proc. of the Second European Workshop on Sensor Networks, 2005; pp. 57–69.

- Oh, S.; Russell, S.J.; Sastry, S.S. Markov Chain Monte Carlo Data Association For General Multiple-Target Tracking Problems. In Proc. Forty-third IEEE Conference on Decision and Control; IEEE Press: Piscataway, NJ, USA, 2004; Vol. 1, pp. 735–742.

- Demirbas, M.; Arora, A.; Gouda, M.G. A Pursuer-Evader Game for Sensor Networks. In Proc. Self-Stabilizing Systems, San Francisco, CA, USA, 2003; pp. 1–16.

- He, T.; Gu, L.; Luo, L.; Yan, T.; Stankovic, J.A.; Son, S.H. An overview of data aggregation architecture for real-time tracking with sensor networks. In Proc. IPDPS, Rhodes Island, Greece, 29 April 2006.

- Powell, O.; Leone, P.; Rolim, J. Energy optimal data propagation in wireless sensor networks. J. Parallel Distrib. Comput. 2007, 67, 302–317.

- Jarry, A.; Leone, P.; Powell, O.; Rolim, J. An Optimal Data Propagation Algorithm for Maximizing the Lifespan of Sensor Networks. In Proc. DCOSS, San Francisco, CA, USA, June 18-20 2006.

© 2009 by the authors; licensee Molecular Diversity Preservation International, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Nikoletseas, S.; Spirakis, P.G. Probabilistic Distributed Algorithms for Energy Efficient Routing and Tracking in Wireless Sensor Networks. Algorithms 2009, 2, 121-157. https://doi.org/10.3390/a2010121
