1. Introduction

Crop mapping, the process of identifying and monitoring crop types and their spatial distribution, has become a cornerstone of modern agriculture. Crop mapping from remote sensing data plays a crucial role in enhancing food security, particularly in the face of climate change and population growth. Accurate and timely information on crop health and expected yield allows farmers to make informed decisions regarding resource allocation and crop rotation strategies, ultimately leading to increased productivity and sustainability [1,2,3,4]. Hyperspectral remote sensing (HRS) has undergone significant developments and achieved numerous milestones in crop monitoring and mapping over the past several decades. Initially focused on mineral exploration in the 1960s, its application to agriculture gained momentum in the 1970s and 1980s with projects such as LACIE and AgRISTARS, which demonstrated the potential of remote sensing for crop monitoring [5]. The transition from multispectral to hyperspectral sensors marked a significant milestone, allowing the capture of hundreds of contiguous spectral bands. For example, hyperspectral data from the Hyperion sensor onboard EO-1 were evaluated for crop classification in northeastern Greece and yielded higher accuracy than Landsat 5 TM data; the inclusion of Hyperion’s SWIR bands enhanced crop discrimination, highlighting the potential of hyperspectral imaging for precision agriculture [6]. More generally, this advancement enabled more detailed analysis of crop characteristics and environmental conditions [7]. The ability to distinguish between crop types and to detect stress factors such as drought and nutrient deficiencies has been a major achievement of HRS, enhancing precision agriculture practices [8,9]. Thus, hyperspectral data hold considerable potential for the accurate and efficient representation of agricultural landscapes, which is vital for sustainable agricultural practices, resource governance, and prudent decision-making.

The high dimensionality of hyperspectral data presents several challenges that complicate its analysis and application. One primary issue is the “curse of dimensionality”: as the number of spectral bands grows, the volume of the feature space grows exponentially, so a fixed number of samples covers it ever more sparsely, while computational and storage burdens increase [10,11]. High dimensionality also means that information is often strongly correlated across bands, and this redundancy, together with noise, can degrade classification accuracy [12]. Additionally, the large number of spectral bands relative to the limited number of training samples can lead to overfitting in machine learning models, reducing their generalization capability [13].

The complexity of hyperspectral data also includes challenges such as spectral mixing and spectral variability, which complicate target detection and classification tasks [14]. To address these issues, various dimensionality reduction (DR) techniques have been developed. These include methods such as principal component analysis (PCA) and partial least squares (PLS), which project the spectra onto a small number of components while retaining the information essential for classification [15]. Advanced methods such as semi-supervised spatial spectral regularized manifold local scaling cut (S3RMLSC) and locally adaptive dimensionality reduction metric learning (LADRml) have been proposed to exploit both spectral and spatial information, improving classification accuracy by preserving the data’s original distribution and handling complex data structures [16,17]. Furthermore, methods such as the improved spatial-spectral weight manifold embedding (ISS-WME) and local and global sparse representation (LGDRSR) integrate spatial and spectral information to enhance the discriminative performance of hyperspectral images [18,19]. These approaches illustrate ongoing efforts to mitigate the challenges posed by high dimensionality in hyperspectral data, aiming to improve both processing efficiency and classification outcomes.

In recent years, deep learning models, particularly convolutional neural networks (CNNs), have become central to remote sensing, driving significant advances in applications such as object detection, image classification, and change detection. CNNs excel at automatically extracting and representing features from remote sensing imagery [20,21]. Their adaptability is evident in land cover mapping, where they have been used extensively to classify and segment images without manual feature extraction [22]. CNNs have also been employed to address challenges such as color consistency, where they help maintain texture details while correcting color discrepancies across images [23]. In change detection, CNNs such as SSCFNet have been developed to enhance the extraction of both high-level and low-level semantic features, improving the detection of changes in remote sensing images [24]. The versatility of CNNs is further demonstrated in multimodal data classification, where frameworks such as CCR-Net integrate data from different sources, such as hyperspectral and LiDAR data, to improve classification accuracy [25]. Despite their success, deploying CNNs in resource-constrained environments, such as spaceborne platforms, is challenging because of their computational demands. Solutions such as the automatic deployment of CNNs on FPGAs have been proposed to optimize performance while minimizing power consumption [26]. Additionally, architectures such as the multi-granularity fusion network (MGFN) and Siamese CNNs have been developed to enhance scene classification by capturing deep spatial features and addressing the limitations of small-scale datasets [27,28]. Overall, CNNs continue to evolve, offering robust solutions to complex remote sensing problems, while ongoing research addresses their limitations and explores new applications [29].

U-Net and its variants, such as U-Net++, U-Net Atrous, and others, have proven well suited to semantic segmentation and pixel-wise classification in remote sensing, including hyperspectral imagery. The base U-Net model is widely recognized for its effectiveness in generating pixel-wise segmentation maps, which are central to the analysis of remote sensing imagery [30]. Variants such as UNeXt, which integrates ConvNeXt and Transformer architectures, have been developed to address challenges posed by high-resolution remote sensing images, such as scale variations and redundant information, while achieving high accuracy and efficiency [31].

The incorporation of attention mechanisms and feature enhancement modules, as in several U-Net variants, further improves segmentation accuracy by focusing on regions of interest and suppressing irrelevant information [32]. CM-Unet enhances segmentation accuracy by integrating channel attention mechanisms and multi-feature fusion, demonstrating superior performance in complex scenes [33]. The DSIA U-Net variant employs a deep-shallow interaction mechanism with attention modules to improve segmentation efficiency, particularly for water bodies, and reports high accuracy [34]. The SA-UNet variant uses atrous spatial pyramid pooling to expand the receptive field and integrate multiscale features, significantly improving classification accuracy in agricultural applications [35]. The CAM-UNet framework, which incorporates channel attention and multi-feature fusion, likewise improves classification accuracy by effectively combining spectral, spatial, and semantic features [36]. CTMU-Net and AFF-UNet further optimize segmentation through combined attention mechanisms and adaptive feature fusion, respectively, improving the retention of feature detail and segmentation accuracy across various datasets [37,38].

Collectively, these advancements in U-Net and its variants underscore their robust applicability and adaptability for semantic segmentation tasks in remote sensing, including hyperspectral image analysis, by addressing specific challenges such as computational efficiency, feature detail retention, and segmentation accuracy.

This work advances the state of hyperspectral crop classification through a systematic integration of deep learning architectures with dimensionality-reduction strategies. In contrast to prior studies that emphasize architectural novelty [39], airborne data, or dimensionality handling [40] in isolation, we provide an operationally oriented, spaceborne analysis grounded in EnMAP imagery. Our main contributions are as follows:

Benchmarking of U-Net Variants on Spaceborne Hyperspectral Data: We provide a direct comparison of U-Net, U-Net++, and Atrous U-Net architectures using EnMAP data, thereby addressing the growing need for scalable, satellite-based crop monitoring solutions.

Comprehensive Dimensionality-Reduction Framework: Four complementary strategies (LDA, SHAP, ACS, and OMP) are systematically integrated with deep models, offering a balanced view of statistical, model-driven, and unsupervised feature selection methods.

Evidence of Architecture–Feature Space Interaction: Results demonstrate that the superiority of a given architecture is conditional on the input representation, with U-Net++ dominating on ACS subsets, U-Net excelling under LDA, and Atrous U-Net benefiting from SHAP-driven compact inputs.

Operationally Relevant Efficiency Gains: We show that dimensionality reduction, particularly via ACS and SHAP, can reduce the spectral space by approximately 70% and 90%, respectively, while retaining competitive classification accuracy, thereby improving reproducibility and deployment feasibility.

Targeting Fine-Grained Crop Discrimination: The analysis focuses on perennial fruit crops of high agronomic importance (stone fruits, pome fruits, industrial peach, nut trees, vineyards), offering insights beyond generic land-cover classification.

Collectively, these contributions establish a reproducible and interpretable framework for hyperspectral crop mapping, highlighting the importance of jointly optimizing data representation and model architecture.

2. Materials and Methods

This section outlines the frameworks and methodologies employed throughout the study. By leveraging deep learning techniques in conjunction with dimensionality reduction approaches, the goal is to develop and evaluate efficient and accurate crop classification models for hyperspectral imagery. The high dimensionality inherent in such data presents a significant challenge for effective processing and analysis [

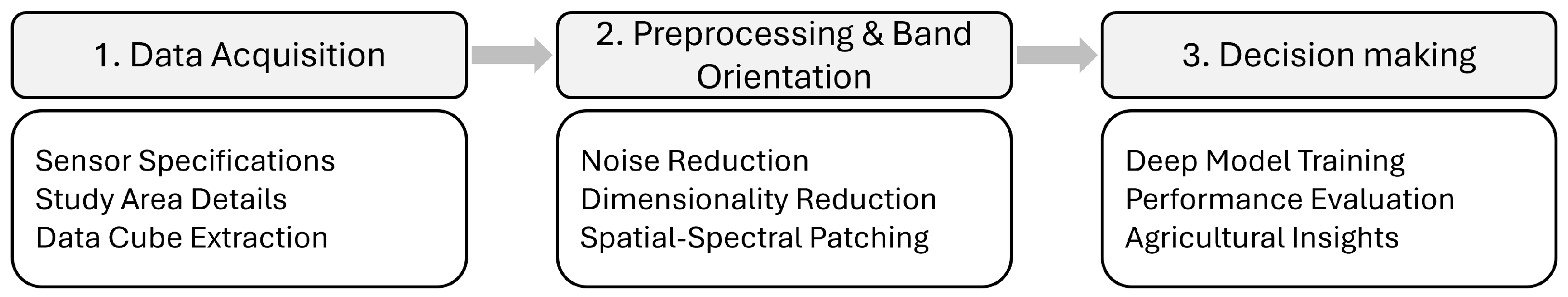

41], especially in the context of agricultural land cover mapping. An overview of the methodology is presented in

Figure 1.

The initial stage, Data Acquisition (Section 2.1), involved obtaining hyperspectral images from the EnMAP satellite over the diverse agricultural region of Lake Vegoritida, Greece. This step established the foundation of our analysis, providing the rich spectral information necessary for fine-grained crop discrimination across the study area.

Subsequently, the Preprocessing and Band Orientation phase (Section 2.2) prepared the raw hyperspectral data for deep learning analysis. This involved critical steps such as removing bad and overlapping bands, alongside noise removal and band transformation through the Minimum Noise Fraction (MNF) transform to enhance data quality. A further key component of this phase was the application of dimensionality-reduction techniques, namely LDA-based, SHAP-based, histogram-based clustering, and reconstruction-driven feature selection, which optimized the spectral information content and thereby streamlined the data for efficient model processing.

Finally, the Decision Making stage (Section 2.3) encompassed the core analytical processes of the study. Here, the preprocessed data were used to train and evaluate three U-Net-based deep learning architectures, namely U-Net, U-Net++, and U-Net Atrous, for agricultural crop semantic segmentation. This phase allowed a comprehensive assessment of each model’s performance, ultimately guiding the identification of optimal strategies for hyperspectral crop classification and yielding insights for practical applications.

2.1. Study Area and Data Acquisition

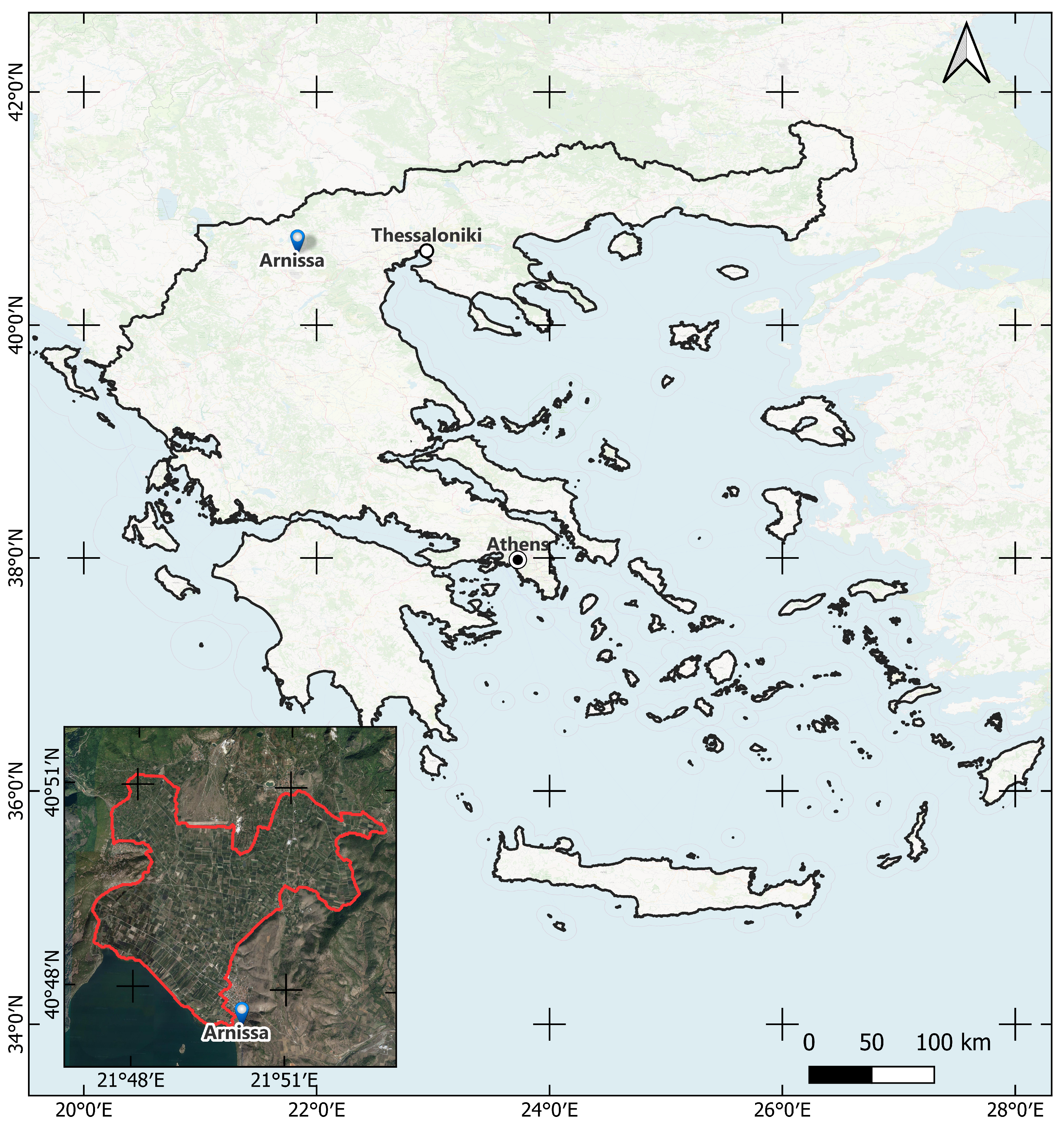

The study area (Figure 2) is located northwest of the town of Arnissa, in the Pella regional unit, approximately 115 km west–northwest of Thessaloniki, in northern Greece. This rural region is primarily known for its fruit plantations, predominantly stone fruits and pome fruits, with minor plantations of tree nuts and vineyards. Hyperspectral data require preprocessing to address sensor artifacts, remove noise, correct for atmospheric effects, and enhance overall data quality to facilitate accurate analysis.

In this study, EnMAP hyperspectral imagery was used. The Environmental Mapping and Analysis Program (EnMAP) is a German hyperspectral satellite mission aimed at systematically assessing and characterizing the Earth’s environmental conditions on a global scale. EnMAP data support the quantification of geochemical, biochemical, and biophysical parameters, providing essential insights into the status and dynamics of both terrestrial and aquatic ecosystems.

The EnMAP mission, launched in 2022, carries two spectrometers that capture electromagnetic radiation from the visible to the shortwave infrared range, encompassing 246 distinct bands. EnMAP has a spatial resolution of 30 m × 30 m and a revisit interval of 27 days at nadir, reduced to four days with off-nadir pointing. The spectral sampling interval varies between 6.5 nm (VNIR) and 12 nm (SWIR), providing detailed spectral coverage [42]. EnMAP data are freely accessible to the scientific community via the official Data Access Portal (https://planning.enmap.org/, accessed on 20 August 2023), subject to mandatory user registration. The EnMAP image was acquired on 7 October 2022, the same year as the crop mapping ground truth data obtained from the official Greek Payment Authority Common Agricultural Policy database.

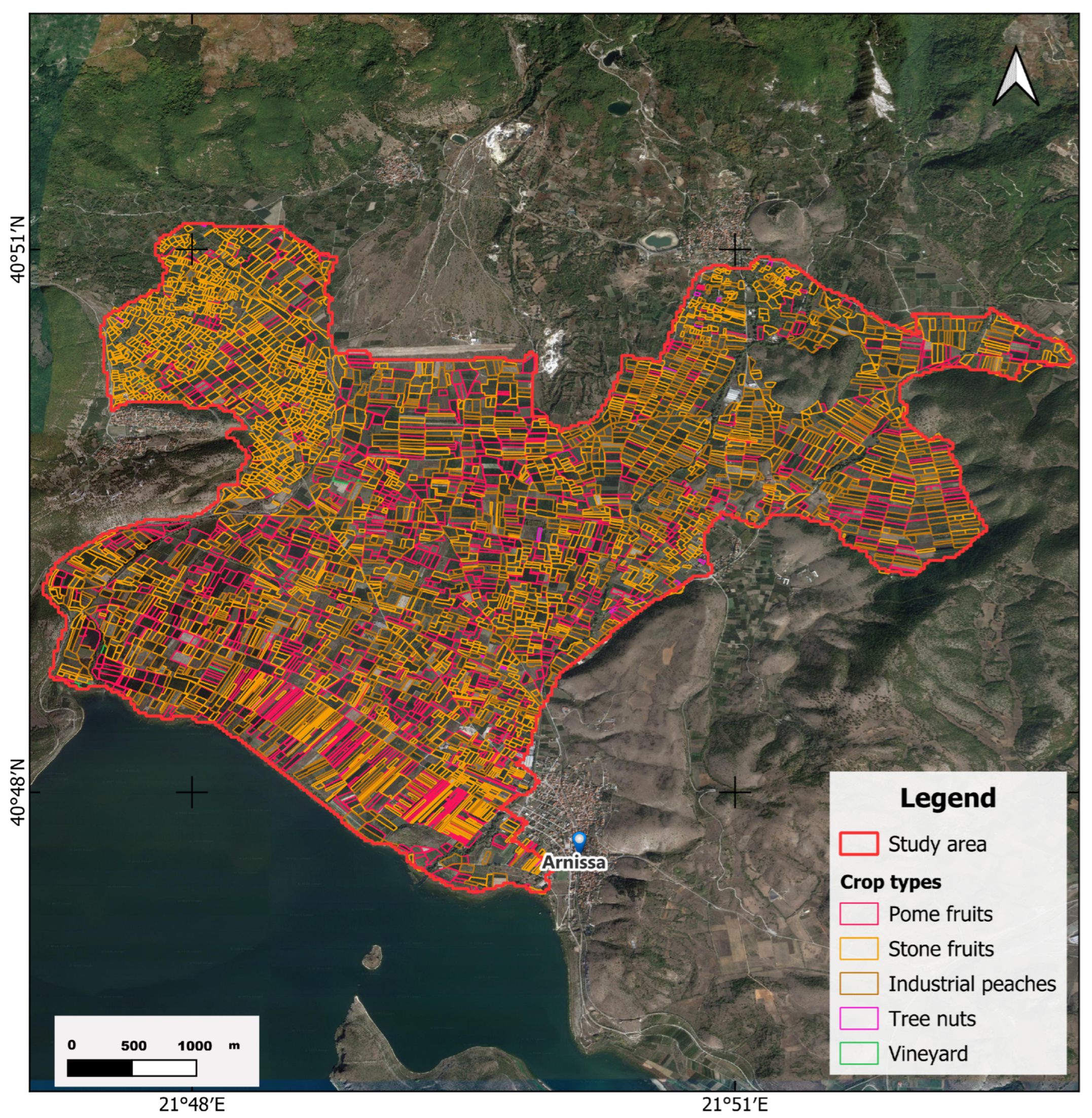

The ground truth data (Figure 3) were obtained from the Greek Payment Authority of the Common Agricultural Policy in shapefile format for the study area. The study area contains 4103 cultivated parcels in total: 1314 pome fruits, 2749 stone fruits (of which 867 are registered as industrial peach), 33 tree nuts, and 7 vineyards.

Bad/Overlapping Band Removal: Due to the existence of two different spectrometers (VNIR and SWIR), spectral overlap occurs between 900 nm and 1000 nm (from 902.2 nm to 993.0 nm). To avoid possible mismatches or co-registration errors between the sensors, overlapping VNIR bands were excluded from the analysis. Additionally, spectrally degraded or unreliable bands—such as those affected by sensor artifacts, strong water vapor absorption, or other anomalies—were excluded by referencing the image’s XML metadata file, where bad bands are flagged with a value of zero (0). After this procedure, 208 spectral bands remained for initial consideration.
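A minimal sketch of the band-filtering logic follows. It assumes that the per-band centre wavelengths, sensor labels, and good/bad flags have already been parsed from the image’s XML metadata into arrays (the parsing itself is not shown), and the function name `select_valid_bands` is hypothetical:

```python
import numpy as np


def select_valid_bands(wavelengths_nm, sensor, good_flags,
                       overlap_range=(902.2, 993.0)):
    """Return indices of bands kept after bad/overlapping band removal.

    wavelengths_nm : centre wavelength of each band (nm)
    sensor         : "VNIR" or "SWIR" label for each band
    good_flags     : 1 = usable, 0 = flagged as bad in the metadata
    """
    wl = np.asarray(wavelengths_nm, dtype=float)
    sensor = np.asarray(sensor)
    good = np.asarray(good_flags) == 1
    lo, hi = overlap_range
    # VNIR bands inside the VNIR/SWIR overlap are dropped; SWIR bands are kept.
    vnir_overlap = (sensor == "VNIR") & (wl >= lo) & (wl <= hi)
    return np.flatnonzero(good & ~vnir_overlap)
```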

Noise Removal and Minimum Noise Fraction (MNF) Transformation: Hyperspectral data inherently contain noise from various sources, including sensor characteristics, atmospheric interference, and environmental conditions. To address this, the Minimum Noise Fraction (MNF) transformation was applied [42]. MNF reduces noise and dimensionality by decorrelating the data and separating noise from meaningful signal: it projects the data into a new coordinate system whose components are ordered by decreasing signal-to-noise ratio. The leading MNF components, with high eigenvalues, were retained because they encapsulate most of the significant spectral information while suppressing noise and redundancy. Specifically, seven MNF components from the VNIR range and two MNF components from the SWIR range were retained. Because the two spectrometers have differing signal-to-noise characteristics, the inverse MNF transformation was applied separately to the VNIR and SWIR data, projecting the retained components back into the original spectral space with reduced noise.
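The MNF steps described above can be sketched as a noise-whitened principal component transform. This is an illustrative re-implementation, not ENVI’s algorithm: the noise covariance is assumed to come from horizontal shift differences between neighbouring pixels, and `mnf_denoise` is a hypothetical helper. Following the text, it would be called once per spectrometer, e.g. with `n_keep=7` on a VNIR cube and `n_keep=2` on a SWIR cube.

```python
import numpy as np
from scipy.linalg import eigh


def mnf_denoise(cube, n_keep):
    """Forward MNF, zero all but the first n_keep components, inverse MNF.

    cube   : (rows, cols, bands) array for ONE spectrometer
    n_keep : number of leading (highest-SNR) components to retain
    """
    cube = np.asarray(cube, dtype=float)
    rows, cols, bands = cube.shape
    X = cube.reshape(-1, bands)
    mean = X.mean(axis=0)
    Xc = X - mean

    # Assumed noise model: horizontal shift differences between neighbours;
    # halved because each difference contains two independent noise draws.
    diff = (cube[:, 1:, :] - cube[:, :-1, :]).reshape(-1, bands)
    noise_cov = np.cov(diff, rowvar=False) / 2.0
    data_cov = np.cov(Xc, rowvar=False)

    # Generalised eigenproblem data_cov v = lam * noise_cov v (lam ~ SNR + 1).
    eigvals, V = eigh(data_cov, noise_cov)
    order = np.argsort(eigvals)[::-1]          # highest SNR first
    eigvals, V = eigvals[order], V[:, order]

    scores = Xc @ V                            # forward MNF
    scores[:, n_keep:] = 0.0                   # drop noise-dominated components
    X_hat = scores @ np.linalg.inv(V) + mean   # inverse MNF back to band space
    return X_hat.reshape(rows, cols, bands), eigvals
```

Solving the generalized eigenproblem directly is equivalent to whitening the noise and then running PCA, and it keeps the inverse transform a single matrix inverse.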

2.2. Preprocessing and Band Orientation

Preprocessing of the EnMAP imagery was performed in ENVI 6.0. The EnMAP image was provided as Level-2A atmospherically corrected surface reflectance data. Preprocessing included the removal of spectrally degraded or unreliable bands based on the XML metadata flags and the handling of the spectral overlap between the VNIR and SWIR spectrometers. This stage resulted in 208 bands, of which 196 were ultimately retained for further analysis following noise reduction via the MNF transformation and visual assessment.

The judicious reduction of hyperspectral dimensionality plays a decisive role in remote-sensing analytics. When dozens or hundreds of highly correlated bands are left unfiltered, the resulting parameter space inflates computational demands, aggravates multicollinearity, and triggers the Hughes effect, whereby classifier performance deteriorates as dimensionality outpaces the availability of labelled samples [12]. By selecting, or projecting onto, a limited set of spectrally and statistically salient features, one can suppress sensor noise, discard redundant information, and stabilize covariance estimates, while also shortening training times for machine-learning models. Equally important, a compact spectral signature clarifies the physical interpretation of class separability, revealing the wavelengths that truly govern biogeochemical or land-cover distinctions.
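The covariance argument can be made concrete with simple arithmetic. As an illustration only (the study does not use this classifier), a Gaussian maximum-likelihood classifier must estimate, for every class, a mean vector with d entries and a symmetric covariance matrix with d(d + 1)/2 free entries, so the number of parameters grows quadratically with the number of bands d:

```python
def gaussian_params_per_class(n_bands):
    """Free parameters of one class-conditional Gaussian: mean + covariance."""
    mean_params = n_bands
    cov_params = n_bands * (n_bands + 1) // 2   # symmetric matrix: upper triangle only
    return mean_params + cov_params


# Band counts appearing in this study: before/after screening and after DR.
for d in (208, 196, 60, 19, 6):
    print(d, gaussian_params_per_class(d))
# 208 21944
# 196 19502
# 60 1890
# 19 209
# 6 27
```

Going from 196 to 19 bands shrinks the per-class parameter count by roughly two orders of magnitude, which is why covariance estimates become far more stable for a fixed number of labelled pixels.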

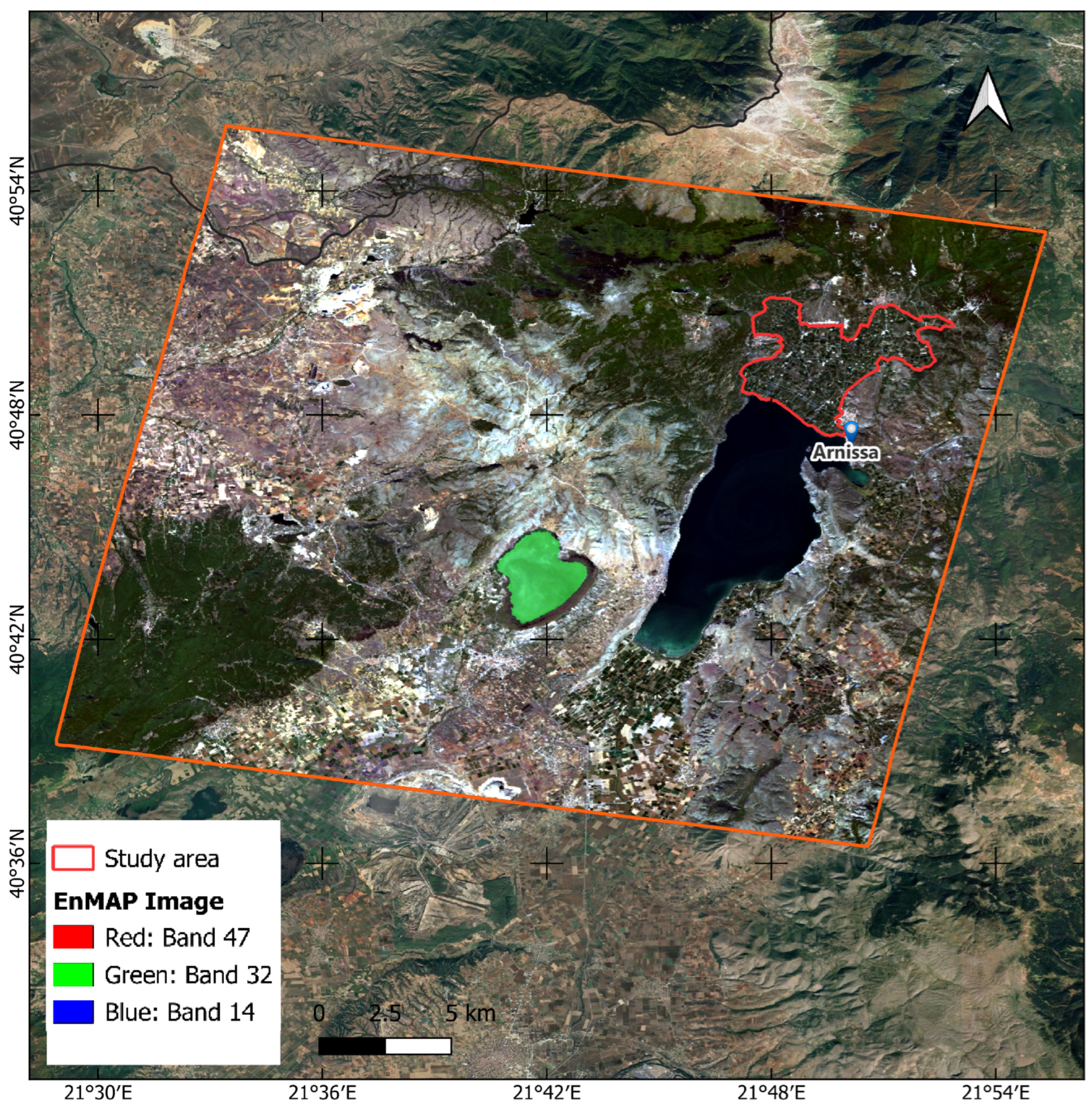

From a practical standpoint, early-stage dimensionality reduction decreases storage and transmission loads, an asset in large-area or near-real-time monitoring pipelines, while guarding against overfitting when ground truth is scarce. For these reasons, a carefully designed dimensionality-reduction step is not an optional convenience but rather a prerequisite for extracting robust, reproducible, and scientifically meaningful information from hyperspectral data. Following a visual assessment of the MNF-inverted dataset, 196 of the 208 EnMAP spectral bands remaining after bad/overlapping band removal were retained for further analysis based on their information content and spectral quality. The outcome of this process is shown in Figure 4, and the selected bands, along with their corresponding central wavelengths, are listed in Table 1.

2.3. Decision Making

Semantic segmentation of Earth-observation imagery demands models that can preserve fine spatial detail while reasoning over broader context. Architectures derived from the U-Net lineage fulfill these requirements by combining a contracting encoder path, which gathers multi-scale semantic features, with a symmetric expanding decoder that restores full resolution through explicit skip-connections. The present work evaluates three successive members of this family—standard U-Net, U-Net++, and an atrous-enabled U-Net variant—in order to quantify the incremental benefits of deeper fusion and multi-scale context aggregation.

The study adopted the U-Net family of convolutional neural networks, namely the original U-Net, U-Net++, and an atrous (dilated-convolution) U-Net, because this encoder–decoder paradigm offers a judicious balance of architectural parsimony, data efficiency, and spatial fidelity that is well suited to hyperspectral scene analysis. A symmetric contracting–expanding path with skip connections preserves fine-grained localization while aggregating high-level semantic context, enabling precise pixel-wise delineation even when training data are scarce, a frequent constraint in remote-sensing campaigns.

The nested dense skip connections of U-Net++ refine feature fusion across multiple semantic scales, mitigating the semantic gap between encoder and decoder and often accelerating convergence. Conversely, the introduction of atrous convolutions enlarges the receptive field without inflating the parameter count, allowing the atrous variant to capture long-range contextual cues that are essential for resolving spectrally similar but spatially dispersed classes. Collectively, these three complementary configurations provide a systematic framework for interrogating the trade-offs between model complexity, receptive-field size, and segmentation accuracy, while retaining a common backbone whose proven robustness in biomedical imaging has translated effectively to hyperspectral land-cover mapping.

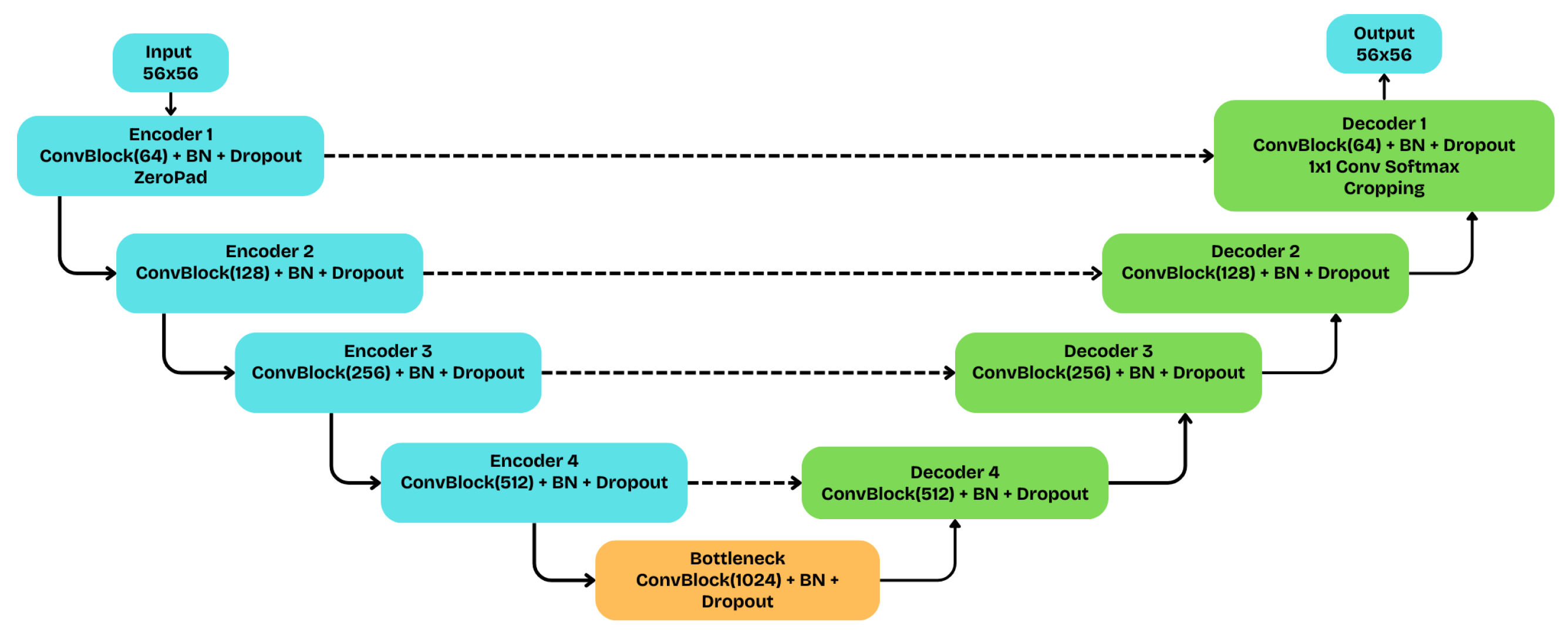

The implemented baseline U-Net, whose fundamental structure is schematically depicted in Figure 5, adopts a symmetric five-level encoder (blue)–decoder (green) topology. In the contracting path (encoder), each level consists of a convolutional block with two sequential convolutions, each followed by Batch Normalization and a ReLU activation. Spatial downsampling is achieved through a max-pooling operation, while the number of filters doubles at each level, starting from 64 and reaching 1024 at the bottleneck. For regularization and to prevent overfitting, a Dropout layer with a rate of 0.1 is applied at the end of each convolutional block.

In the symmetric expansive path (decoder), spatial resolution is restored through upsampling (UpSampling2D). At each level, the upsampled feature map is concatenated with the corresponding encoder feature map via skip connections, thereby reintroducing high-resolution spatial information. To handle input patches whose dimensions are not divisible by 32, the model zero-pads the input and crops the output correspondingly. The network terminates with a final convolution and a softmax activation, which produce the class probabilities for each pixel. This architecture balances depth with parameter economy, making it suitable for the semantic segmentation of hyperspectral data.
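The pad-then-crop strategy can be sketched in NumPy as follows, using the divisor of 32 stated above. Padding only at the bottom and right edges is an assumption (symmetric padding works equally well as long as the crop mirrors it), and the helper names are hypothetical:

```python
import numpy as np


def pad_to_multiple(patch, multiple=32):
    """Zero-pad an (H, W, C) patch at the bottom/right so H and W divide `multiple`."""
    h, w = patch.shape[:2]
    pad_h, pad_w = (-h) % multiple, (-w) % multiple   # 0 when already divisible
    padded = np.pad(patch, ((0, pad_h), (0, pad_w), (0, 0)), mode="constant")
    return padded, (h, w)


def crop_to_original(prediction, original_hw):
    """Undo the padding on an (H', W', n_classes) prediction map."""
    h, w = original_hw
    return prediction[:h, :w, ...]


patch = np.zeros((50, 70, 6))
padded, hw = pad_to_multiple(patch)
print(padded.shape)                         # (64, 96, 6)
print(crop_to_original(padded, hw).shape)   # (50, 70, 6)
```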

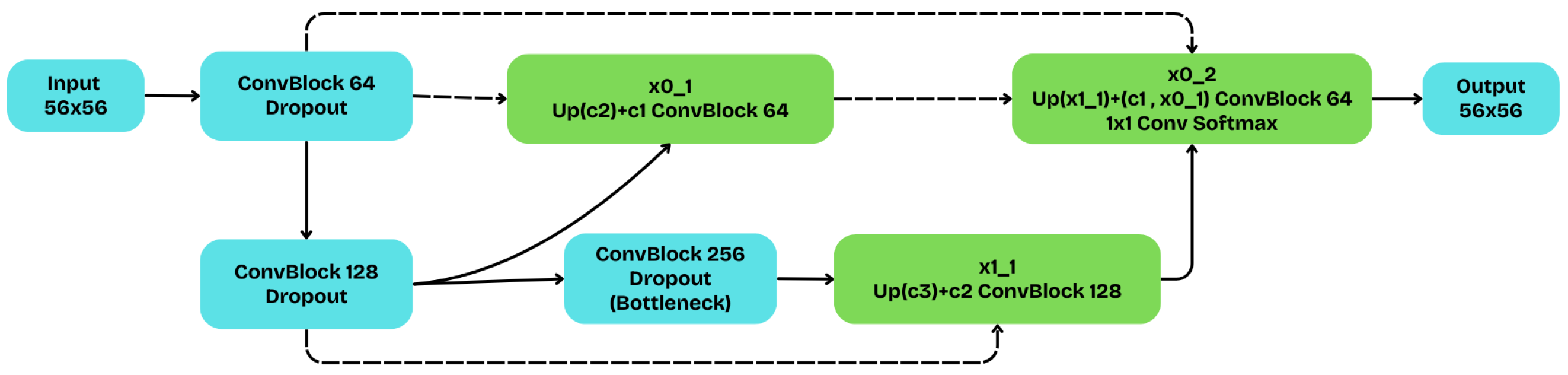

The implemented U-Net++ architecture, whose fundamental structure is schematically depicted in Figure 6, extends the classic U-Net by introducing a set of nested and densely connected skip pathways. This design is intended to bridge the semantic gap between encoder and decoder features, improving segmentation quality.

This implementation is a shallower variant with a depth of three levels. The encoder (blue) processes the input through three stages with 64, 128, and 256 filters, respectively. Each convolutional block consists of two convolutions with ReLU activation. Unlike the other two architectures, this one omits Batch Normalization but incorporates Dropout with a rate of 0.1 at the end of each block for regularization.

The nested skip pathways allow features to accumulate gradually. The final segmentation map is generated from node $X^{0,2}$, which combines features from three sources: the initial encoder feature map ($X^{0,0}$), the first-level skip node ($X^{0,1}$), and the upsampled deeper node ($X^{1,1}$). This dense connectivity enables the network to produce sharp object boundaries. The final prediction is delivered by a convolutional layer with a softmax activation, which yields per-pixel class probabilities.
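The wiring of the nested skip pathways follows a simple rule in the usual U-Net++ notation: node $X^{i,j}$ (encoder level $i$, position $j$ along the skip pathway) concatenates all earlier nodes at the same level, $X^{i,0}, \dots, X^{i,j-1}$, with the upsampled node $X^{i+1,j-1}$. The hypothetical helper below only enumerates these connections (no tensors are built), which makes the composition of the final node explicit for the three-level variant:

```python
def unetpp_node_inputs(depth):
    """Map each U-Net++ decoder node X^{i,j} to the nodes it concatenates.

    i = encoder level (0 = full resolution), j = position along the skip
    pathway; decoder nodes exist for j >= 1 and i + j <= depth - 1.
    """
    inputs = {}
    for j in range(1, depth):
        for i in range(0, depth - j):
            same_level = [f"X{i},{k}" for k in range(j)]   # dense skip inputs
            deeper = f"Up(X{i + 1},{j - 1})"               # upsampled node from below
            inputs[f"X{i},{j}"] = same_level + [deeper]
    return inputs


for node, sources in unetpp_node_inputs(3).items():
    print(node, "<-", sources)
```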

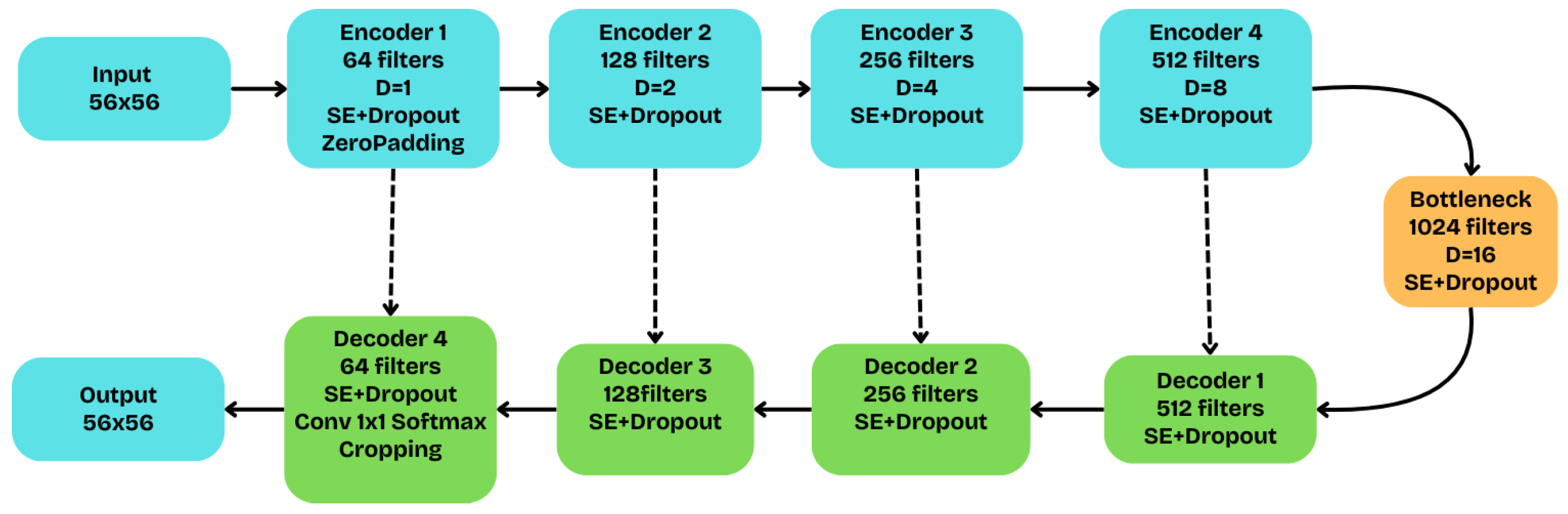

The Atrous U-Net, whose fundamental structure is schematically depicted in Figure 7, is a Squeeze-and-Excitation (SE) dilated U-Net designed to enlarge the receptive field without degrading spatial resolution. Along the encoder path (blue), the dilation rate doubles at each of the five stages, while the number of filters increases from 64 to 1024. Each convolutional block incorporates two dilated convolutions together with Batch Normalization, an SE module with a reduction ratio of 16, and Dropout with a rate of 0.1. The SE mechanism allows the model to dynamically recalibrate channel-wise feature responses, emphasizing the most informative channels. To ensure dimensional compatibility during the successive downsampling operations of the encoder, the input is zero-padded and the output is cropped correspondingly.
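The SE recalibration can be sketched in NumPy for a single feature map: global average pooling (squeeze), a bottleneck fully connected layer with ReLU, a second fully connected layer with sigmoid (excitation), and channel-wise rescaling. The weights here are random placeholders rather than trained parameters, and the function name is hypothetical; in the network, the two fully connected layers are learned jointly with the convolutions.

```python
import numpy as np


def squeeze_excitation(features, w1, b1, w2, b2):
    """SE-style channel recalibration of an (H, W, C) feature map.

    w1 : (C, C // r) and w2 : (C // r, C), r being the reduction ratio.
    """
    z = features.mean(axis=(0, 1))                 # squeeze: global average pooling -> (C,)
    s = np.maximum(z @ w1 + b1, 0.0)               # bottleneck FC + ReLU -> (C // r,)
    gates = 1.0 / (1.0 + np.exp(-(s @ w2 + b2)))   # FC + sigmoid -> (C,), values in (0, 1)
    return features * gates                        # rescale each channel by its gate


rng = np.random.default_rng(0)
C, r = 64, 16                                      # reduction ratio 16 as in the text
fmap = rng.normal(size=(32, 32, C))
out = squeeze_excitation(fmap,
                         rng.normal(size=(C, C // r)), np.zeros(C // r),
                         rng.normal(size=(C // r, C)), np.zeros(C))
print(out.shape)  # (32, 32, 64)
```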

In summary, the three U-Net variants examined here offer complementary routes to reconciling spectral richness with spatial precision.

The baseline U-Net supplies a lean, well-tested backbone whose encoder–decoder symmetry and direct skip connections already yield reliable pixel-level delineation. Building on that foundation, U-Net++ densifies the skip topology, narrowing the semantic gap between encoding and decoding stages and thereby sharpening object boundaries without a prohibitive rise in parameters. Finally, the atrous U-Net enlarges the receptive field through systematic dilation, endowing the network with long-range contextual awareness that is especially beneficial when class separability hinges on broader scene structure. All these architectures map a spectrum of design trade-offs—depth versus width, locality versus context—providing a robust experimental framework from which the most task-appropriate configuration, or an ensemble thereof, can be selected for operational hyperspectral segmentation.

3. Experimental Setup

This section outlines the experimental design adopted to evaluate the impact of different dimensionality-reduction strategies on hyperspectral crop classification with U-Net-based architectures. Our analysis was conducted using the EnMAP hyperspectral scene described in Section 2, combined with parcel-level ground truth data for multiple perennial crop classes. To ensure rigorous evaluation, we designed a parcel-based spatial holdout with buffer enforcement, thereby preventing information leakage due to spatial autocorrelation.

We investigated four complementary band selection approaches, chosen to represent statistical, model-driven, and unsupervised paradigms. Specifically, (i) Linear Discriminant Analysis (LDA), wrapped in sequential feature selection, was employed as a supervised method that maximizes class separability; (ii) SHAP-based feature attribution was applied to an XGBoost baseline to identify the most informative bands, yielding compact yet interpretable subsets; (iii) Agglomerative Clustering Selection (ACS) was used as an unsupervised strategy to group spectrally redundant bands and retain a representative subset (from 196 to 60 bands); and (iv) a greedy procedure akin to Orthogonal Matching Pursuit (OMP) was implemented as a sparse approximation technique to recover the bands that best reconstruct the full spectral structure. These reduced feature sets were subsequently used as inputs to the three deep architectures under study: U-Net, U-Net++, and Atrous U-Net.

The combination of diverse band selection methods and deep segmentation models provides a systematic framework to evaluate how different spectral representations interact with network design, thereby allowing us to disentangle architectural effects from data-related factors.

3.1. Spectral Band Selection via Statistical and Model-Driven Methods

Dimensionality reduction plays a critical role in hyperspectral image analysis by addressing challenges such as spectral redundancy, sensor noise, and the curse of dimensionality. To explore this design space comprehensively, we adopted a diverse set of supervised and unsupervised strategies for band selection. Specifically, two supervised techniques were implemented: a statistical method based on Linear Discriminant Analysis (LDA) with sequential feature selection, and a model-driven approach using SHAP (SHapley Additive exPlanations) values derived from gradient-boosted decision trees. To complement these, two unsupervised schemes were introduced: a distribution-based clustering method leveraging Jensen–Shannon distances between band histograms, and a reconstruction-driven greedy selection procedure inspired by column subset selection. Together, these approaches provide a multifaceted view of the problem, balancing statistical optimality, model interpretability, and, in the unsupervised case, data-driven compactness without reliance on class labels.

The first approach combines LDA with a forward Sequential Feature Selection (SFS) wrapper. After removing unknown-class pixels from the Vegoritida hyperspectral cube, a class-stratified bootstrap sample of 5000 valid pixels was drawn to mitigate class imbalance. The SFS algorithm iteratively added the band that maximized five-fold cross-validated LDA accuracy. Although subsets of up to 15 bands were evaluated, an inspection of the LDA loadings revealed that six spectral channels (TIF band indices listed in Table 2) captured the entire discriminative variance (explained-variance ratio = 1.00) and accounted for approximately 92% of the aggregate importance.
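A minimal scikit-learn sketch of the SFS–LDA step is shown below. It assumes that the valid pixels have been flattened into a (pixels × bands) matrix `X` with labels `y`, and it interprets the class-stratified bootstrap as drawing an equal number of pixels per class with replacement; both the helper names and that interpretation are assumptions rather than the exact procedure used:

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.feature_selection import SequentialFeatureSelector


def balanced_bootstrap(y, n_total, rng):
    """Draw, with replacement, the same number of pixel indices from each class."""
    classes = np.unique(y)
    per_class = n_total // len(classes)
    idx = [rng.choice(np.flatnonzero(y == c), per_class, replace=True)
           for c in classes]
    return np.concatenate(idx)


def sfs_lda(X, y, n_select, n_total=5000, seed=0):
    """Forward SFS wrapped around LDA, scored by 5-fold CV accuracy."""
    rng = np.random.default_rng(seed)
    idx = balanced_bootstrap(y, n_total, rng)
    sfs = SequentialFeatureSelector(LinearDiscriminantAnalysis(),
                                    n_features_to_select=n_select,
                                    direction="forward", cv=5)
    sfs.fit(X[idx], y[idx])
    return np.flatnonzero(sfs.get_support())   # indices of the selected bands
```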

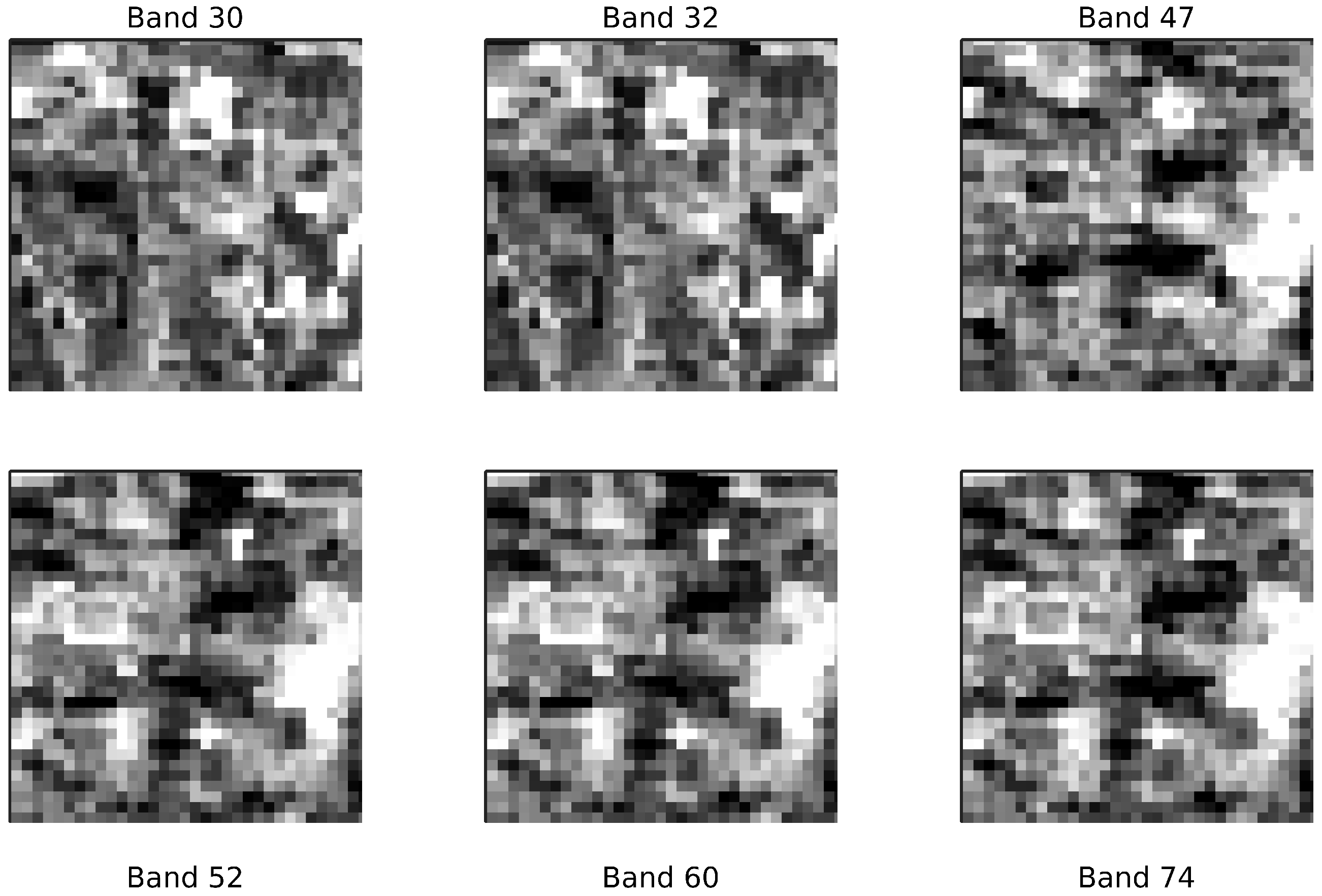

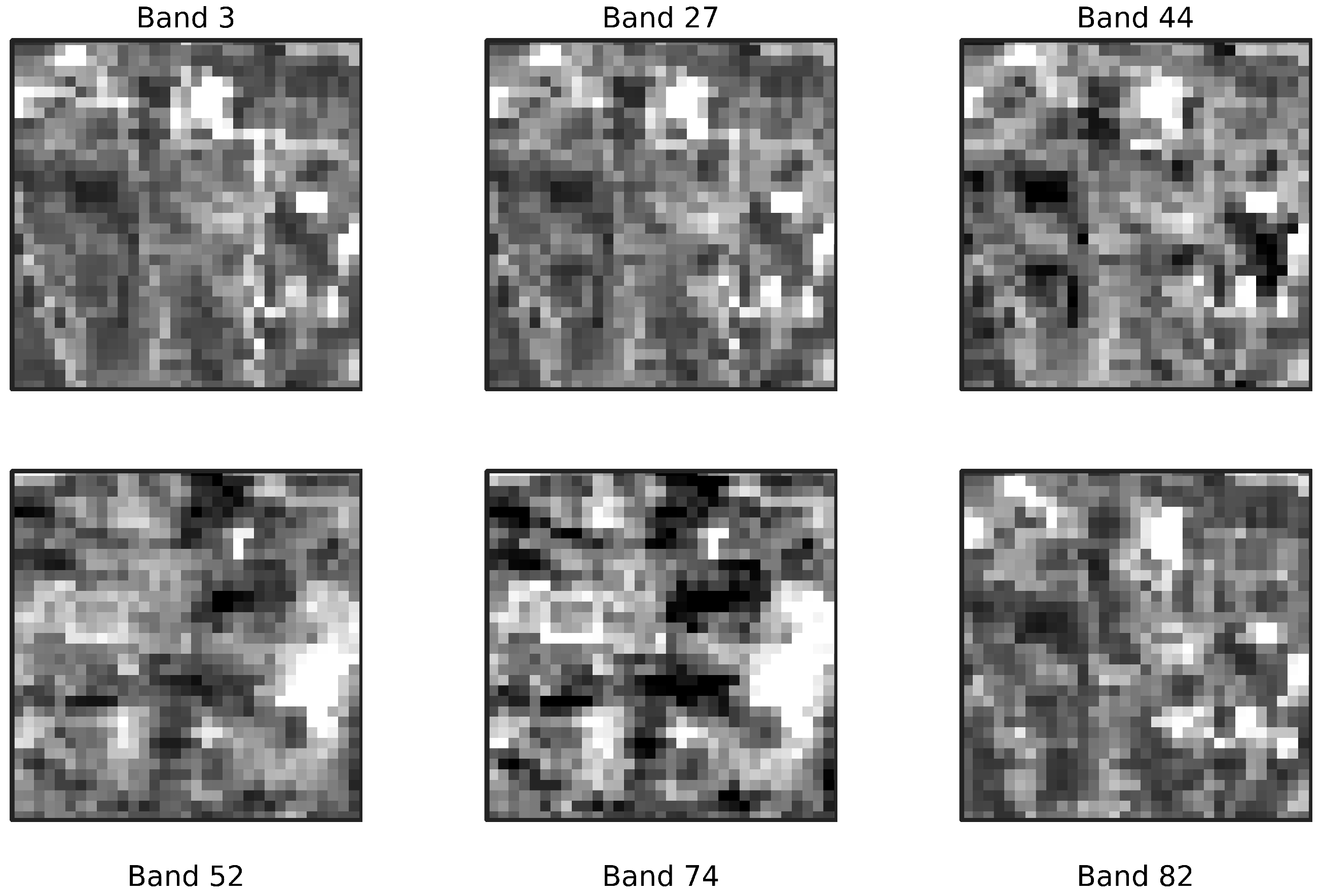

Figure 8 provides an indicative visualization of all six bands over a randomly selected image patch. These bands were used to construct a compact six-band GeoTIFF, facilitating both efficient storage and faster model training. All band rankings and component variances were exported to CSV for reproducibility and future analysis.

The second dimensionality-reduction method follows a model-centered strategy based on gradient-boosted decision trees (XGBoost). After removing unknown-class pixels, a stratified 70/30 train–test split with a fixed random seed was used, and class imbalance was mitigated via inverse-frequency sample weights. A conservative, lightweight configuration of 100 boosting rounds with multiclass log-loss optimization and a fixed seed was adopted to minimize overfitting and avoid injecting tuning bias into subsequent attributions. All other hyperparameters were kept at their default values in the scikit-learn interface.
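Inverse-frequency sample weights can be computed as n / (K · n_c), where n is the number of training samples, K the number of classes, and n_c the size of the sample’s class, so that every class contributes the same total weight (this is the same formula scikit-learn uses for its "balanced" option). The helper name is hypothetical:

```python
import numpy as np


def inverse_frequency_weights(y):
    """Per-sample weights n / (K * n_c): every class gets equal total weight."""
    classes, inverse, counts = np.unique(y, return_inverse=True, return_counts=True)
    return len(y) / (len(classes) * counts[inverse])


w = inverse_frequency_weights(np.array([0, 0, 0, 1]))
print(w)  # minority-class sample weighted 3x each majority-class sample
```

The resulting array would be passed through the `sample_weight` argument of `XGBClassifier.fit` in XGBoost’s scikit-learn interface.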

To prevent information leakage, SHAP attributions (computed with TreeExplainer) were derived from the training split only. A transparent, reproducible selection rule defined the reduced subset: a band was retained if it appeared in the per-class top-5 for at least two crop classes or if its global mean importance exceeded the 75th percentile; the final subset size was fixed at 19 bands. Using the same stratified split, balanced weights, and seed, the 19-band model achieved OA = 0.747 and macro-F1 = 0.740 on the held-out test set, versus OA = 0.762 and macro-F1 = 0.756 with all 196 bands (ΔOA = −1.5 pp; Δmacro-F1 = −1.6 pp; 95% bootstrap CI for ΔOA: … to … pp). This represents a modest performance reduction given the 90.3% dimensionality decrease (from 196 to 19 bands), a trade-off that substantially improves parsimony and interpretability while preserving nearly all of the predictive signal.
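The band-selection rule can be expressed compactly, assuming that the SHAP values have already been reduced to a (classes × bands) matrix of mean absolute attributions per class. How the candidate set is trimmed to exactly 19 bands is not specified in the text; the sketch ranks candidates by their global mean attribution as an assumption, and the function name is hypothetical:

```python
import numpy as np


def shap_band_rule(mean_abs_shap, top_k=5, min_classes=2, pct=75, n_final=19):
    """Select bands from a (n_classes, n_bands) matrix of mean |SHAP| values."""
    n_classes, n_bands = mean_abs_shap.shape
    # How many classes rank each band in their own top-k?
    top_counts = np.zeros(n_bands, dtype=int)
    for row in mean_abs_shap:
        top_counts[np.argsort(row)[::-1][:top_k]] += 1
    global_mean = mean_abs_shap.mean(axis=0)
    candidates = (top_counts >= min_classes) | (
        global_mean > np.percentile(global_mean, pct))
    cand_idx = np.flatnonzero(candidates)
    # Assumed trimming step: keep the n_final candidates with the largest global mean.
    ranked = cand_idx[np.argsort(global_mean[cand_idx])[::-1]]
    return np.sort(ranked[:n_final])


print(round(1 - 19 / 196, 3))  # 0.903, i.e. the 90.3% dimensionality decrease
```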

The TIF band indices retained by the SHAP analysis are presented in Table 3. Figure 9 provides an indicative visualization of 6 of the 19 selected bands over a randomly selected image patch.

An unsupervised histogram-based agglomerative clustering strategy was also introduced to identify representative spectral channels based on the similarity of their value distributions. The pixel values of each band, which lie within the surface reflectance range, were converted into a probability histogram (30 bins with common edges). The pairwise divergence between histograms was quantified using the Jensen–Shannon distance, a symmetric and bounded metric well suited to comparing spectral distributions. The resulting distance matrix was clustered via agglomerative clustering with average linkage, targeting a reduced subset of approximately 30% of the original dimensionality (60 of the 196 bands). Within each cluster, the medoid band (i.e., the one with the minimum cumulative divergence to its peers) was retained as representative. The selected bands span both contiguous and non-contiguous regions of the spectrum, suggesting that distributional diversity is effectively captured. The full set of indices is reported in Table 4.
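A minimal sketch of this histogram, Jensen–Shannon, and average-linkage pipeline using SciPy is given below. Several details are assumptions for illustration only: the function name, the (H, W, B) cube layout, and reflectance scaled to [0, 1] for the common bin edges. Cutting the dendrogram with the `maxclust` criterion is one way to obtain at most `n_select` clusters.

```python
import numpy as np
from scipy.spatial.distance import jensenshannon, squareform
from scipy.cluster.hierarchy import linkage, fcluster

def histogram_medoid_bands(cube, n_select, n_bins=30, value_range=(0.0, 1.0)):
    """Hypothetical helper. cube: (H, W, B) reflectance array; returns sorted medoid band indices."""
    n_bands = cube.shape[-1]
    flat = cube.reshape(-1, n_bands)
    # Common bin edges for all bands so the histograms are directly comparable
    edges = np.linspace(value_range[0], value_range[1], n_bins + 1)
    hists = np.empty((n_bands, n_bins))
    for b in range(n_bands):
        counts, _ = np.histogram(flat[:, b], bins=edges)
        hists[b] = counts / max(counts.sum(), 1)  # probability histogram
    # Pairwise Jensen–Shannon distances (symmetric; bounded in [0, 1] with base 2)
    dist = np.zeros((n_bands, n_bands))
    for i in range(n_bands):
        for j in range(i + 1, n_bands):
            dist[i, j] = dist[j, i] = jensenshannon(hists[i], hists[j], base=2)
    # Average-linkage agglomerative clustering on the condensed distance matrix
    Z = linkage(squareform(dist, checks=False), method="average")
    labels = fcluster(Z, t=n_select, criterion="maxclust")
    selected = []
    for c in np.unique(labels):
        members = np.flatnonzero(labels == c)
        # Medoid: the member with minimum cumulative divergence to its peers
        sub = dist[np.ix_(members, members)]
        selected.append(int(members[np.argmin(sub.sum(axis=1))]))
    return sorted(selected)
```

Bands whose distributions nearly coincide end up in the same cluster, and only one of them (the medoid) survives. This is what keeps the retained subset distributionally diverse.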

A second unsupervised approach, based on greedy reconstruction-driven selection, was designed to select bands that best preserve the global variance structure of the hyperspectral cube. Inspired by column subset selection methods, we adopted a greedy procedure akin to Orthogonal Matching Pursuit (OMP): at each step, the band that minimized the residual reconstruction error of the covariance matrix was added to the subset. This criterion ensures that the chosen bands collectively span the spectral feature space with minimal redundancy. Fixing the target subset size to 30% of the spectrum yielded 59 bands, covering diverse regions while maintaining linear representational capacity. Compared with clustering-based methods, this reconstruction-driven strategy emphasizes global covariance preservation rather than distributional dissimilarity. The selected band indices are given in Table 5.
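The greedy criterion can be illustrated with a short NumPy sketch, assuming one concrete definition of the reconstruction error: the Frobenius norm of the residual left after projecting every column of the covariance matrix C onto the span of the selected columns. The function name and the (n_pixels, n_bands) input layout are hypothetical. The exhaustive scan at each step is the simplest correct form of the procedure, not an optimized implementation.

```python
import numpy as np

def greedy_covariance_band_selection(X, n_select):
    """Hypothetical helper. X: (n_pixels, n_bands); returns band indices in selection order."""
    C = np.cov(X, rowvar=False)  # (n_bands, n_bands) spectral covariance
    n_bands = C.shape[0]
    selected = []
    for _ in range(n_select):
        best_band, best_err = None, np.inf
        for b in range(n_bands):
            if b in selected:
                continue
            cols = C[:, selected + [b]]
            # Least-squares projection of every column of C onto span(cols)
            coef, *_ = np.linalg.lstsq(cols, C, rcond=None)
            err = np.linalg.norm(C - cols @ coef, "fro")
            if err < best_err:
                best_band, best_err = b, err
        selected.append(best_band)
    return selected
```

Once the selected columns span the column space of C, the residual drops to (numerically) zero. This is the sense in which the subset "spans the spectral feature space with minimal redundancy."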

In summary, the four dimensionality-reduction strategies explored in this study, i.e., SFS–LDA, SHAP, histogram-based clustering, and reconstruction-driven selection, offer complementary perspectives on hyperspectral band selection. While the supervised methods emphasize discriminative power and predictive accuracy, the unsupervised schemes prioritize redundancy reduction and preservation of intrinsic spectral structure. This methodological diversity ensures that both class-separability and data-intrinsic criteria are represented, yielding compact subsets that are efficient, interpretable, and well suited as inputs for the comparative evaluation of U-Net architectures in subsequent experiments.

3.2. Dataset Preparation

The dataset preparation begins with the spatial alignment of the hyperspectral cube and the ground-truth data to ensure geometric consistency. Next, a sliding window of fixed size (56 × 56 pixels) is applied with a stride of two pixels to generate a dense set of overlapping patches. Each patch then undergoes a quality control step: if more than 40% of its pixels belong to the unknown class, the patch is discarded.
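The patch extraction and quality-control step can be sketched as below, assuming an aligned (H, W, B) cube and an (H, W) label raster. The label value `0` for "unknown" and the function name are hypothetical choices for illustration. The patch centre is taken as the pixel at offset `patch // 2`.

```python
import numpy as np

def extract_patches(cube, labels, patch=56, stride=2, unknown=0, max_unknown=0.4):
    """Hypothetical helper: sliding-window patches, dropping those with > max_unknown unknown pixels."""
    H, W, B = cube.shape
    patches, masks, centers = [], [], []
    for r in range(0, H - patch + 1, stride):
        for c in range(0, W - patch + 1, stride):
            m = labels[r:r + patch, c:c + patch]
            # Quality control: fraction of pixels carrying the (assumed) unknown label
            if np.mean(m == unknown) > max_unknown:
                continue
            patches.append(cube[r:r + patch, c:c + patch, :])
            masks.append(m)
            centers.append((r + patch // 2, c + patch // 2))
    # reshape keeps the expected dimensions even when no patch survives
    return (np.array(patches).reshape(-1, patch, patch, B),
            np.array(masks).reshape(-1, patch, patch),
            np.array(centers, dtype=int).reshape(-1, 2))
```

The recorded patch centres are reused later, when patches are filtered against the buffer zone around the test parcels.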

Importantly, the use of spatial patches, rather than single-pixel spectral vectors, captures essential contextual information that would otherwise be lost [43,44]. While per-pixel approaches leverage the spectral signature of each point, they ignore the spatial structure of the surrounding area, which often contains crucial clues for differentiating between spectrally similar crop types. By incorporating both spatial and spectral information, the patch-based method supports more robust feature learning, especially in the presence of intra-class variability or noise.

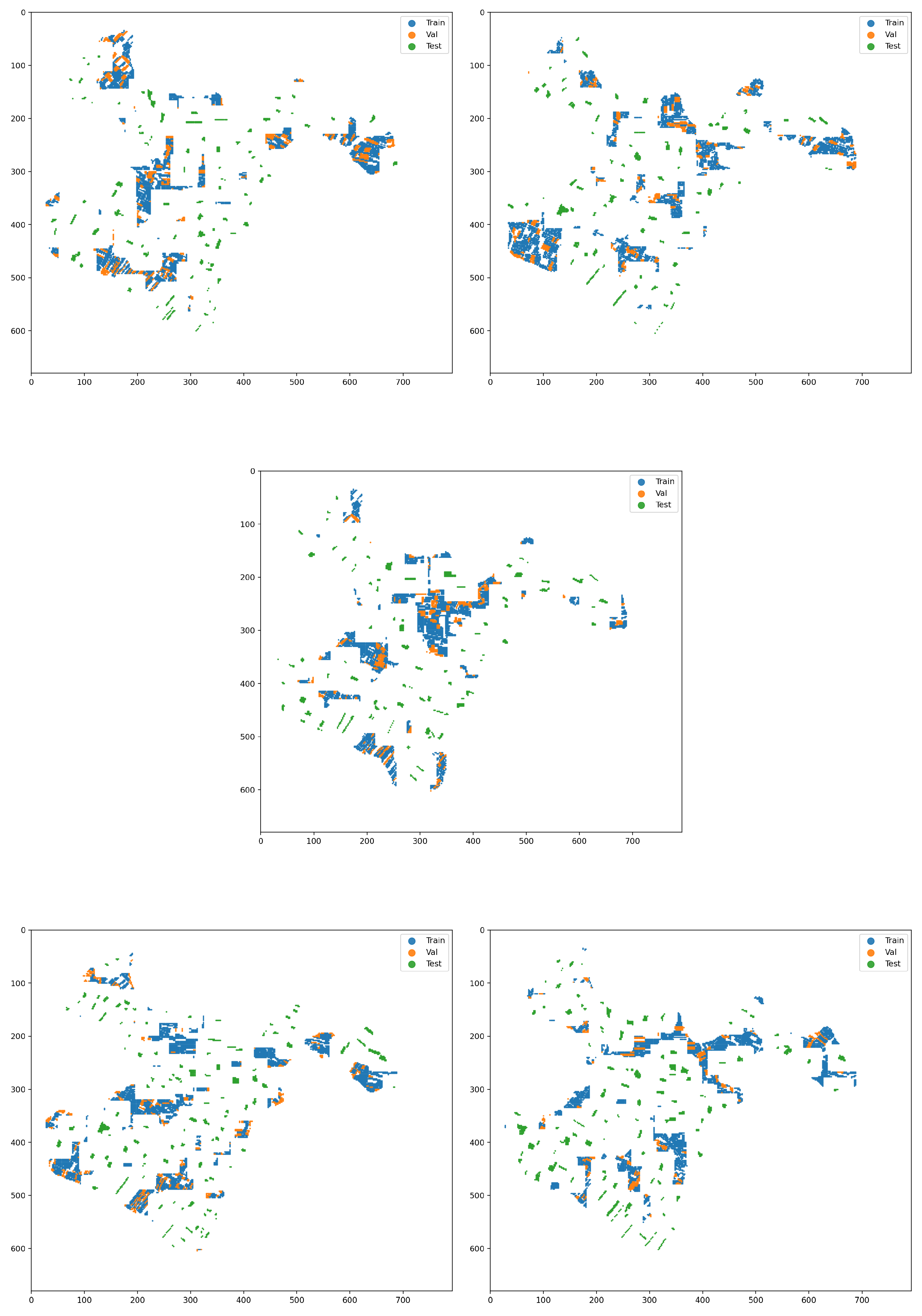

To address spatial autocorrelation and avoid overly optimistic performance estimates, a strict data splitting strategy known as spatial holdout was adopted (see Figure 10). Instead of randomly sampling patches, which would leak information between the training and evaluation sets, the procedure leverages the agricultural parcel boundaries provided in a shapefile. Specifically, all patches originating from the same parcel are grouped together, and the division into training (70%), validation (15%), and test (15%) sets is performed at the parcel level. This guarantees that each parcel is assigned exclusively to one of the three sets, ensuring that the model is trained and evaluated on geographically distinct and independent regions.
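A parcel-level split can be sketched as follows. The sketch assumes that each patch carries the identifier of the parcel it originates from, and that the 70/15/15 proportions are applied to the number of parcels. The text does not state whether the fractions refer to parcels, patches, or area, so this is an assumption. The function name is hypothetical.

```python
import numpy as np

def parcel_level_split(parcel_ids, seed, fractions=(0.70, 0.15, 0.15)):
    """Hypothetical helper. parcel_ids: per-patch parcel identifier.

    Returns (train_idx, val_idx, test_idx) patch index arrays; no parcel spans two sets.
    """
    parcel_ids = np.asarray(parcel_ids)
    rng = np.random.default_rng(seed)
    parcels = rng.permutation(np.unique(parcel_ids))
    n = len(parcels)
    n_train = int(round(fractions[0] * n))
    n_val = int(round(fractions[1] * n))
    groups = (parcels[:n_train],
              parcels[n_train:n_train + n_val],
              parcels[n_train + n_val:])
    # Map each parcel group back to the indices of all patches it contains
    return tuple(np.flatnonzero(np.isin(parcel_ids, g)) for g in groups)
```

Each of the five experimental seeds produces a different parcel permutation, and therefore a different, equally valid spatial split.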

To achieve strict spatial isolation and eliminate any possibility of contact, the methodology dynamically defines a buffer zone around the test-set parcels. The width of this zone is automatically set to the patch radius plus one pixel (radius + 1). Patches are rejected based on the position of their central pixel: if the center of a training or validation patch falls within this buffer zone, the entire patch is discarded. Because the buffer is wider than the distance from a patch's center to its edge, this strategy guarantees a minimum gap of one pixel between any training/validation patch and the test area. Consequently, the datasets neither overlap nor touch.
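The buffer rule can be sketched with a morphological dilation of the test-parcel mask. A square structuring element (Chebyshev distance) is used here because the patches are square; the exact distance metric used by the authors is not stated and is an assumption. Patch centres are assumed to follow the convention `row/col + patch // 2`. The function name is hypothetical.

```python
import numpy as np
from scipy.ndimage import binary_dilation

def outside_test_buffer(test_mask, centers, patch=56):
    """Hypothetical helper. test_mask: (H, W) bool raster of test parcels;
    centers: (N, 2) int array of patch centres. Returns a bool array: True = keep patch."""
    radius = patch // 2
    width = radius + 1  # buffer width = patch radius + 1 pixel
    # Square footprint: dilation by Chebyshev distance `width`
    footprint = np.ones((2 * width + 1, 2 * width + 1), dtype=bool)
    buffer_zone = binary_dilation(test_mask, structure=footprint)
    return ~buffer_zone[centers[:, 0], centers[:, 1]]
```

A training patch whose centre lies 30 columns from the test area (width 29 for 56 × 56 patches) is kept, and its right edge still stays two pixels short of the test parcel. A centre 29 columns away falls inside the buffer and is discarded.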

Finally, normalization statistics (mean and standard deviation) are calculated exclusively from the training data to prevent information leakage. The normalized patches and their corresponding masks are stored in HDF5 files for fast I/O during training. To ensure statistical reliability and reduce the risk that the results reflect a single favorable split, the entire experimental procedure, from the spatial split to the final training, is repeated five times using five different random seeds. Each seed produces a different but equally valid spatial split of the data. Critically, this set of five seeds remained fixed across all evaluated architectures, providing a consistent and unbiased basis for comparing their performance.
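The leakage-free normalization can be sketched in a few lines. Per-band statistics are fitted on the training patches only and then applied unchanged to the validation and test patches. Computing statistics per band (rather than globally) and using a small `eps` floor for constant bands are assumptions. The HDF5 storage step is omitted.

```python
import numpy as np

def fit_band_stats(train_patches, eps=1e-8):
    """Hypothetical helper. train_patches: (N, H, W, B). Per-band mean/std over all training pixels."""
    mean = train_patches.mean(axis=(0, 1, 2))
    std = train_patches.std(axis=(0, 1, 2))
    return mean, np.maximum(std, eps)  # floor avoids division by zero for constant bands

def normalize(patches, mean, std):
    # Broadcasting over the trailing band axis; the same training stats serve every split
    return (patches - mean) / std
```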

3.3. Model Training and Optimization

To investigate the performance of different architectures and select the appropriate model for the data, a structured random search strategy was adopted to ensure the reproducibility and interpretability of the findings. This approach allowed for an effective exploration of a broad, discrete hyperparameter space in a controlled and systematic manner.

All models were trained, validated, and tested on a dataset of 56 × 56 image patches, which was split into 500 training, 500 validation, and 500 test samples.

Initially, for each of the three model families (U-Net, U-Net++, Atrous U-Net), a search space was defined that included all possible combinations of critical hyperparameters (Table 6).

From this complete space, 10 unique architectures were randomly sampled for each family, leading to a total of 30 experiments. The sampling process was initialized with a fixed seed to guarantee the full reproducibility of the results. Regarding the remaining parameters, the learning rate was held constant across all trials at a widely accepted value for the Adam optimizer, ensuring a fair comparison between architectures.
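Seeded sampling of unique configurations from a discrete grid can be sketched with the standard library. The hyperparameter names in the usage example are hypothetical placeholders, since the actual search space is listed in Table 6. The seed value is likewise an assumption. Sampling without replacement from the full Cartesian product guarantees that the 10 configurations drawn per family are distinct.

```python
import itertools
import random

def sample_architectures(search_space, n_samples=10, seed=42):
    """Hypothetical helper. search_space: dict name -> list of discrete values."""
    keys = sorted(search_space)  # fixed key order keeps sampling reproducible
    grid = list(itertools.product(*(search_space[k] for k in keys)))
    rng = random.Random(seed)
    picks = rng.sample(grid, n_samples)  # without replacement -> unique configurations
    return [dict(zip(keys, combo)) for combo in picks]

# Usage with placeholder hyperparameters (not the study's actual Table 6 entries)
space = {"depth": [3, 4, 5], "base_filters": [16, 32, 64],
         "dropout": [0.0, 0.2, 0.3], "batch_size": [8, 16]}
configs = sample_architectures(space, n_samples=10, seed=7)
```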

The final selection of the best architecture from each family was based on a clear criterion: the highest macro F1-score on the validation set. This metric was chosen because it provides a balanced assessment of performance in multi-class classification problems. All tested models (Table 7) and the selected models (Table 8) are presented below.
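The selection criterion can be made concrete with a NumPy sketch of macro-F1 computed from a confusion matrix, followed by the argmax over candidate configurations. It assumes that pixels of the unknown class have been masked out beforehand and that labels are integers in `[0, n_classes)`. Classes absent from both references and predictions are excluded from the average, mirroring the default behavior of scikit-learn's `f1_score(average="macro")`. Function names are hypothetical.

```python
import numpy as np

def macro_f1(y_true, y_pred, n_classes):
    """Hypothetical helper: unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)  # rows: truth, cols: prediction
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp
    fn = cm.sum(axis=1) - tp
    denom = 2 * tp + fp + fn
    f1 = np.divide(2 * tp, denom, out=np.zeros_like(tp), where=denom > 0)
    return f1[denom > 0].mean()  # ignore classes absent from both truth and prediction

def select_best(val_scores):
    """val_scores: dict configuration name -> validation macro-F1."""
    return max(val_scores, key=val_scores.get)
```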

The training procedure was kept consistent across all experiments to ensure a fair comparison between the architectures (see Table 9). Each model was trained for a maximum of 50 epochs with an early stopping mechanism, which terminated training if the validation loss (val_loss) did not improve for 10 consecutive epochs (patience = 10), thus preventing overfitting. Concurrently, a model checkpoint always saved the model state that achieved the lowest validation loss. The models were fed image patches of 56 × 56 pixels, extracted with a stride of 2.
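The early stopping and checkpoint logic is framework-independent and can be sketched as a plain loop. The `validate(epoch)` callback is a hypothetical stand-in for "train one epoch, then return val_loss", and the checkpoint write is indicated only by a comment. If the study used Keras (the framework is not stated here), the same behavior corresponds to the `EarlyStopping(monitor="val_loss", patience=10)` and `ModelCheckpoint(..., save_best_only=True)` callbacks.

```python
def train_with_early_stopping(validate, max_epochs=50, patience=10):
    """Hypothetical sketch. validate(epoch) -> validation loss after that epoch.

    Returns (best_epoch, last_epoch_run), both 0-based.
    """
    best_loss, best_epoch, wait = float("inf"), None, 0
    for epoch in range(max_epochs):
        val_loss = validate(epoch)
        if val_loss < best_loss:
            best_loss, best_epoch, wait = val_loss, epoch, 0
            # checkpoint: save model weights here (lowest val_loss so far)
        else:
            wait += 1
            if wait >= patience:  # no improvement for `patience` consecutive epochs
                return best_epoch, epoch
    return best_epoch, max_epochs - 1
```

With validation losses that improve for three epochs and then plateau, training halts 10 epochs after the last improvement. The checkpoint from the best epoch is then used for evaluation.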

3.4. Experimental Results

The experimental process involves three U-Net variants and five spectral input configurations (the four band selection approaches plus the full 196-band spectrum), resulting in fifteen distinct model configurations. Each configuration was trained and evaluated on identically preprocessed input data at its specific spectral dimensionality. The five-seed spatial holdout described in Section 3.2 was applied. Depending on the dimensionality reduction strategy (Section 2.2), input tensors varied spectrally from 196 bands (all channels) to 6 bands (SFS–LDA). The output consistently comprised a single-channel segmentation mask for pixel-level crop classification.

The experiments reveal three key findings. First, dimensionality reduction enhances efficiency without compromising accuracy: ACS reduces the spectral space by nearly 70% (196 to 60 bands) while sustaining or improving performance, and SHAP achieves an even more compact representation (19 bands, a reduction of over 90%) with competitive accuracy when paired with Atrous U-Net.

Second, U-Net++ generally delivers the strongest results regardless of the band selection approach, reaching a peak mean F1-score of 0.77 with ACS-selected bands. Third, class-level analysis shows that nut trees and vineyards remain the most challenging classes, whereas stone fruits and industrial peach are consistently well distinguished. These highlights set the stage for the detailed results that follow.

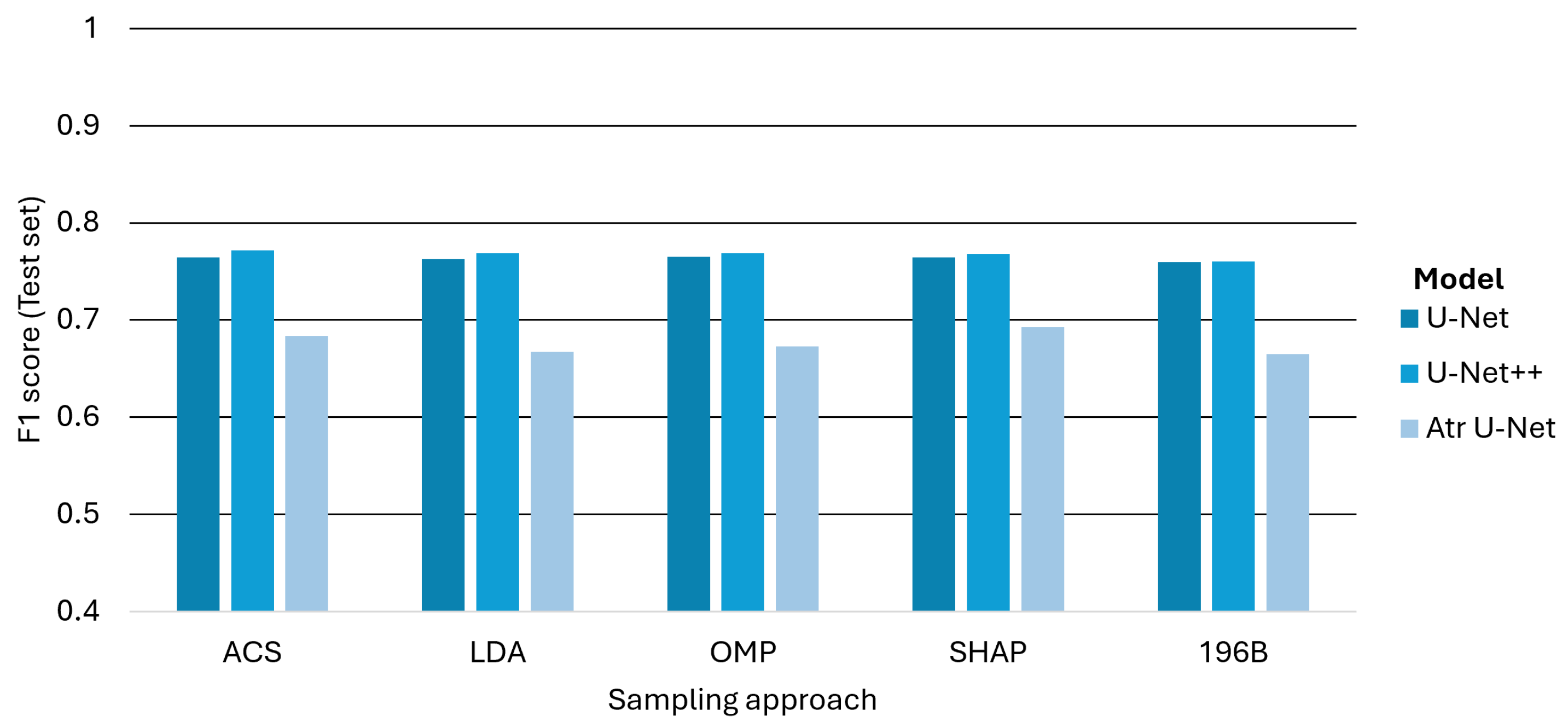

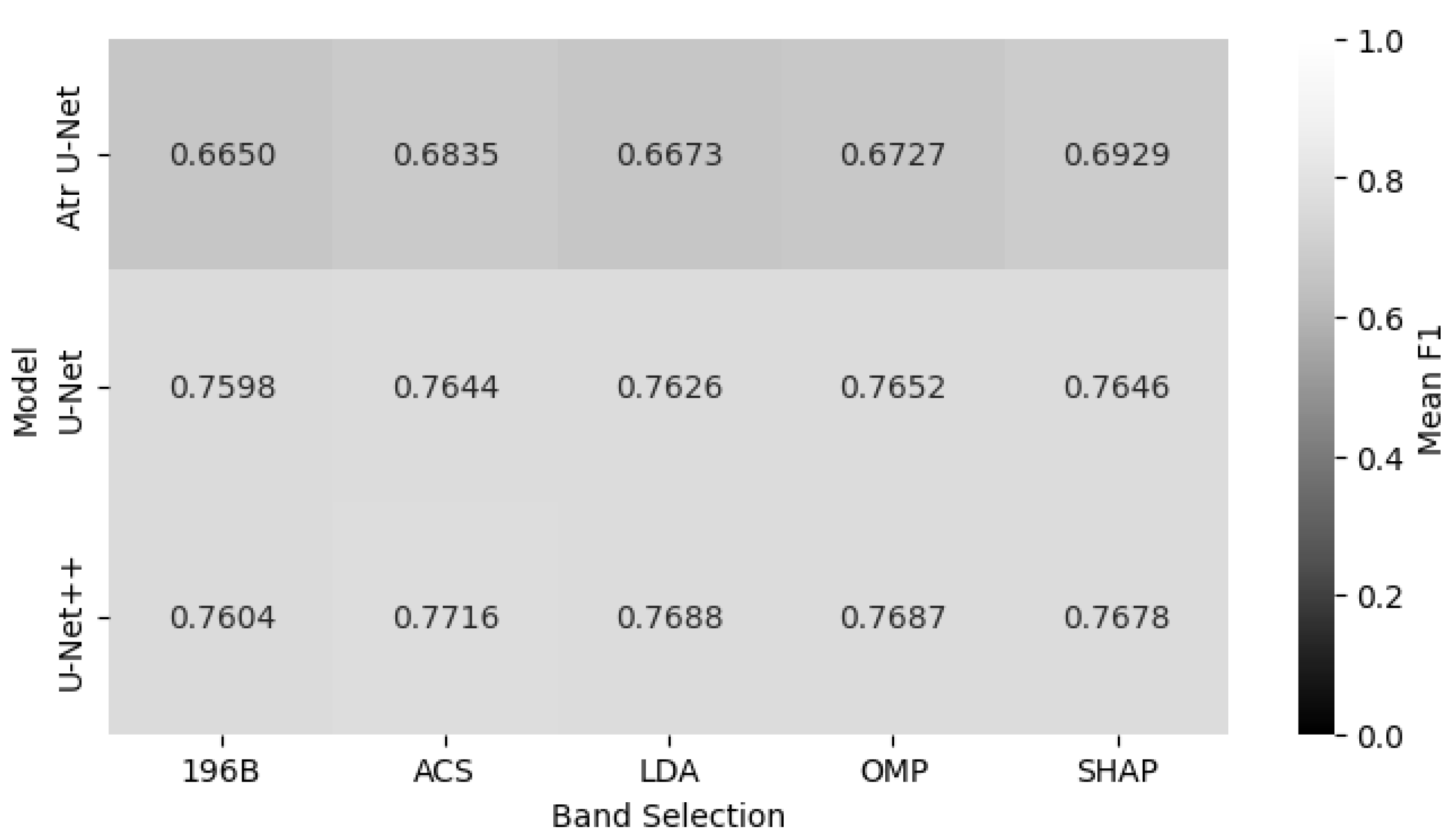

Figure 11 presents the classification performance of each model across the five spectral input configurations. The results, averaged across all classes, show that the U-Net++ architecture (labeled Unet_PP) delivers strong overall performance, achieving the highest mean F1-score of 0.77 when combined with ACS-derived subsets. This is particularly noteworthy because ACS reduces the spectral dimensionality from 196 to 60 bands (a reduction of nearly 70%), yet retains or even enhances predictive accuracy. Interestingly, SHAP achieves a much more compact representation (19 bands, i.e., a reduction of over 90%) and, when paired with any of the U-Net architectures, yields results competitive with full-spectrum models. These findings underscore the operational potential of compact band representations for large-scale agricultural monitoring.

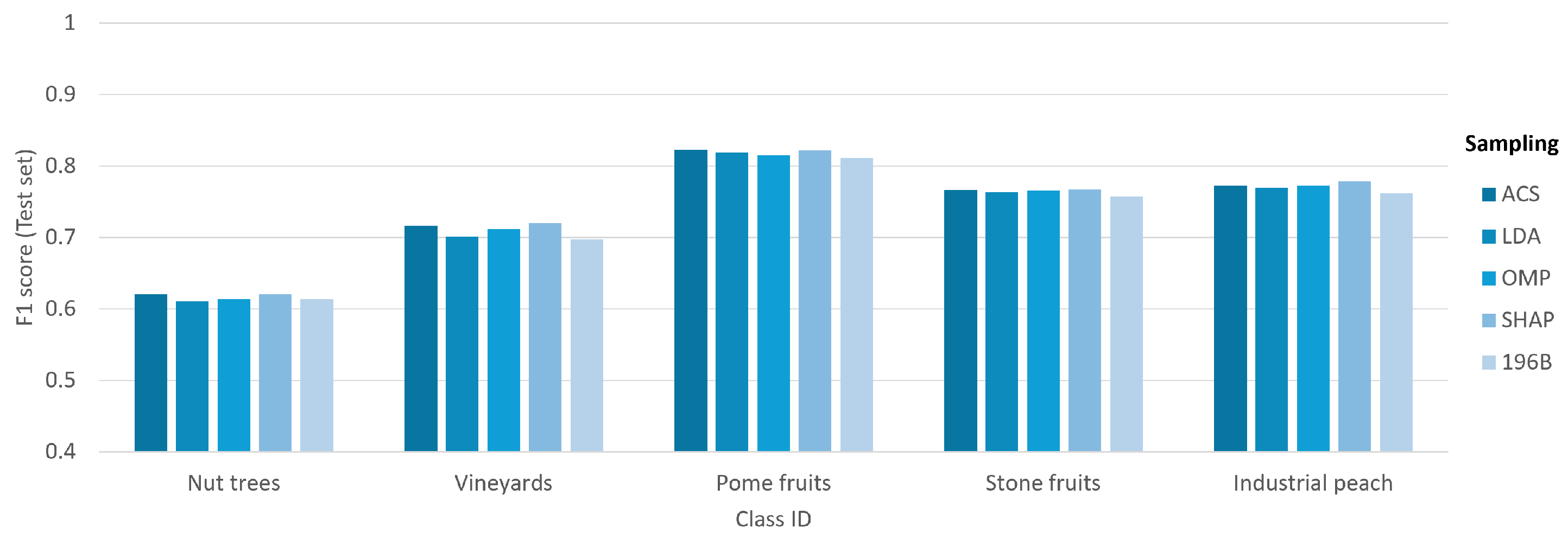

Figure 12 demonstrates the impact of band selection on the classification performance for each investigated class. The results reveal substantial variation in F1-scores across classes, with class separability being a more influential factor than the choice of band selection strategy. Notably, the Stone fruits and Industrial peach classes consistently yield high F1-scores, whereas the Nut trees and Vineyards classes prove more challenging and achieve lower scores. Furthermore, within each class, performance across the different band selection methods (ACS, LDA, OMP, SHAP, and 196B) is remarkably consistent. This finding underscores that the intrinsic nature and distinctiveness of the target classes are the primary determinants of classification success in this experimental setup.

Figure 13 provides a comprehensive overview of the average F1-scores for all investigated models and band selection scheme combinations. The results demonstrate that the U-Net++ architecture combined with the ACS band selection method constitutes the optimal overall scheme, achieving the highest mean F1-score of 0.7716. However, a more detailed analysis reveals the best band selection approach for each specific architecture. For the standard U-Net, the optimal performance is achieved with the OMP strategy, yielding an F1-score of 0.7652. The Atrous U-Net model performs best when paired with the SHAP method, although its F1-score of 0.6929 remains significantly lower than those of its counterparts, reinforcing its systematic underperformance. These findings underscore that while the choice of architecture is the primary determinant of overall performance, subtle gains can be achieved by optimizing the band selection strategy for each specific model.

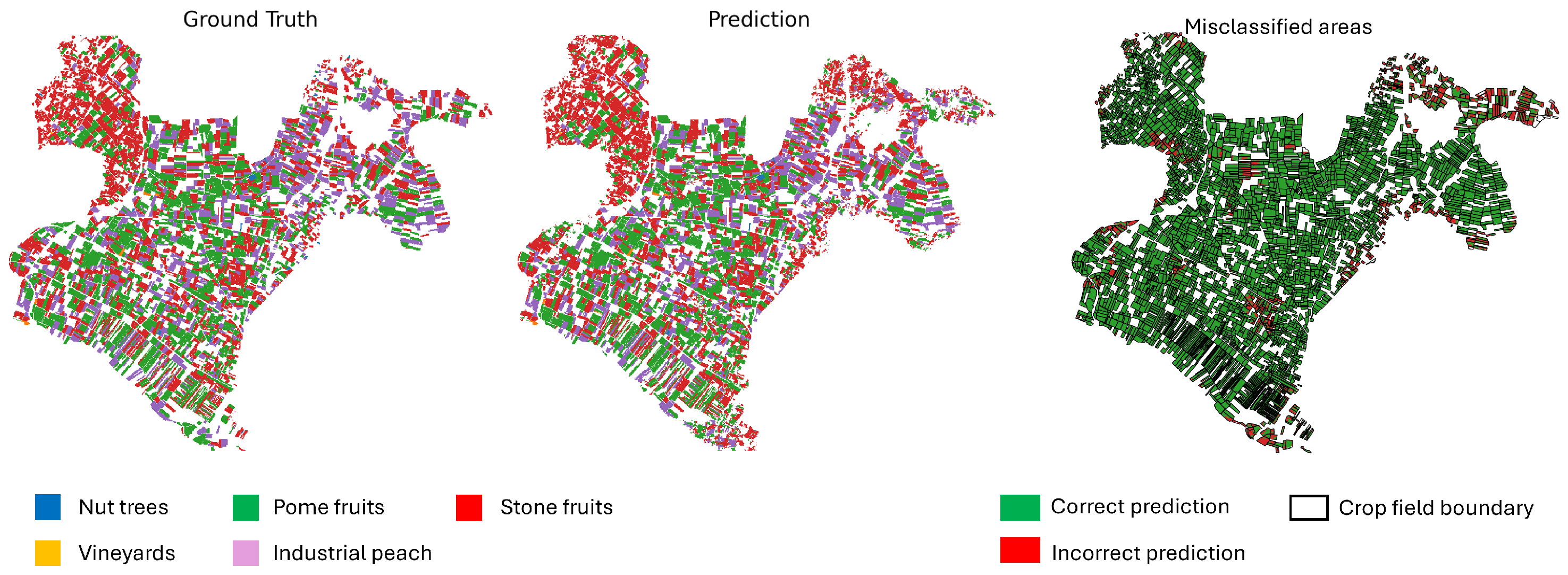

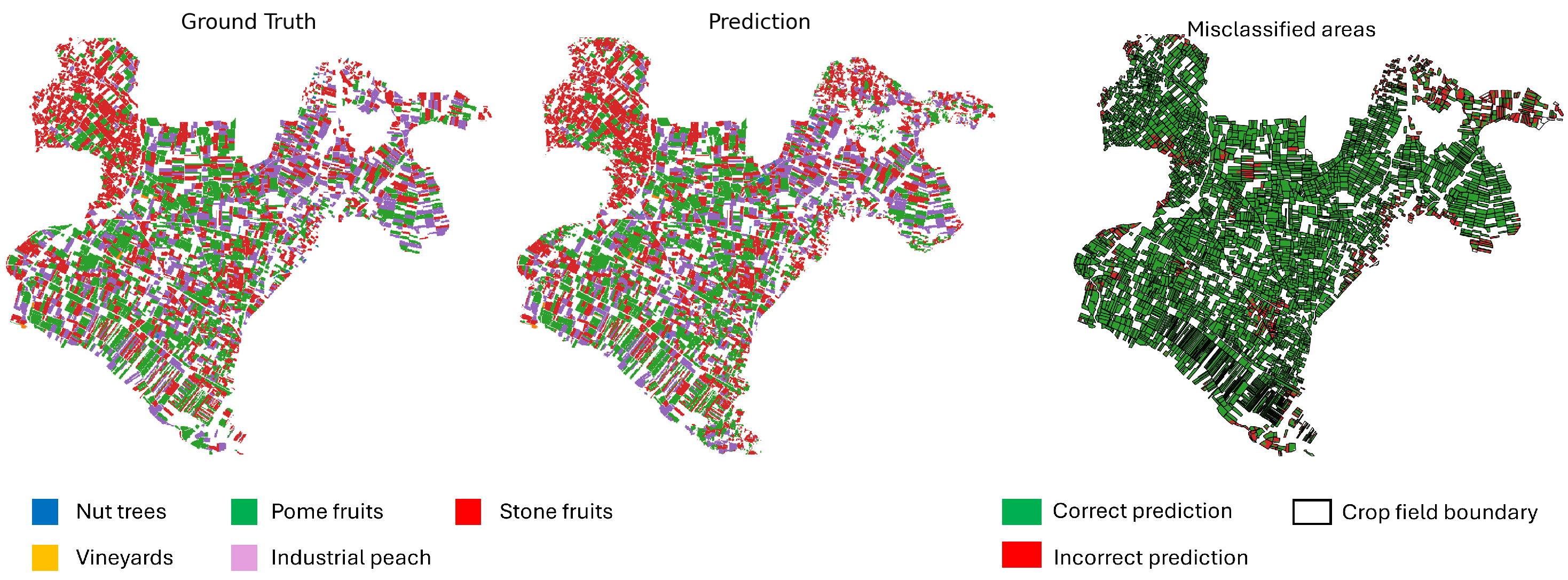

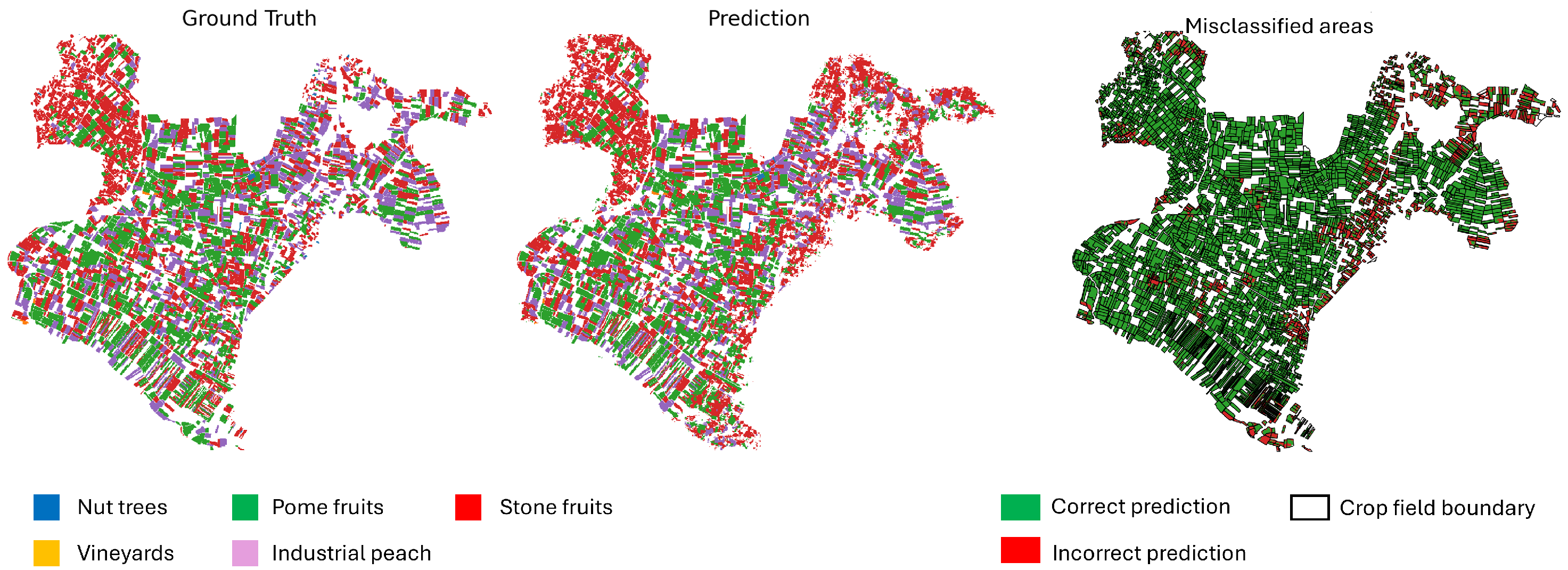

Figures 14, 15, and 16 provide a comparison between the ground truth and the model predictions. The left panel shows the annotated ground truth, while the center panel displays the model's predictions across the test set. The right panel highlights misclassified pixels, where the prediction does not match the ground truth. Dominant perennial crop categories (including nut trees, pome fruits, and stone fruits) are represented using distinct color codes. The error map aids in identifying spatial patterns of misclassification, offering insight into areas where the model underperforms.

Overall, the experimental results highlight three key insights: (i) dimensionality reduction substantially improves efficiency without sacrificing accuracy, (ii) U-Net++ is generally robust but not universally superior, and (iii) the optimal configuration emerges from jointly selecting the architecture and the feature space. These findings set the stage for the broader interpretation provided in the discussion section.

3.5. Statistical Tests

To assess the statistical significance of performance differences among the evaluated models, a comprehensive analysis was conducted based on the F1-scores computed on the test sets over the five holdout sets.

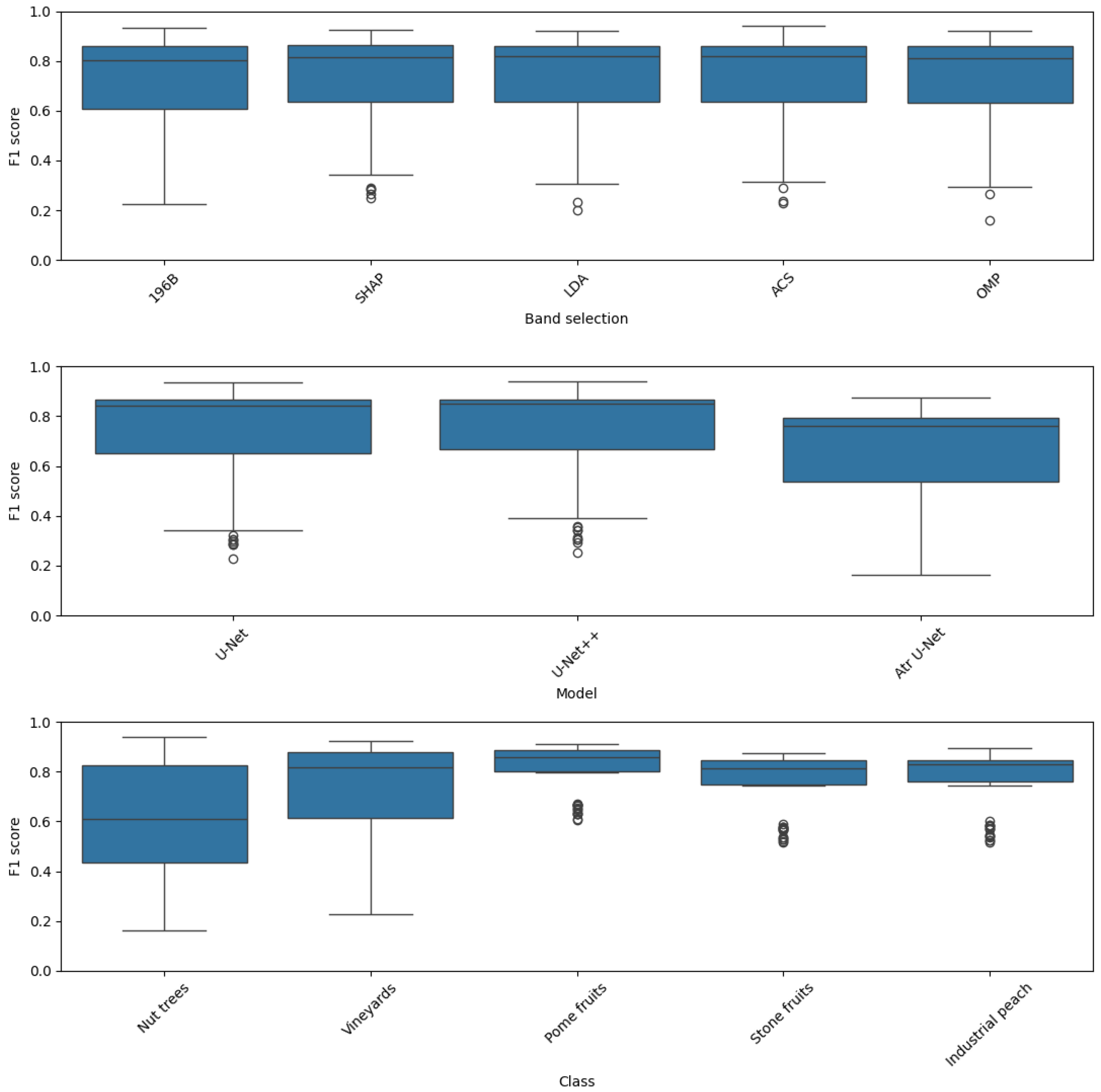

Figure 17 illustrates the distribution of these scores using boxplots.

The top subplot, which illustrates the distribution across different band selection methods, suggests that this factor has a limited impact on overall performance, as the median F1 scores and interquartile ranges for all methods (196B, SHAP, LDA, ACS, and OMP) are very similar. In contrast, the middle subplot, which visualizes performance across the model architectures, provides strong evidence for a clear architectural hierarchy. The U-Net++ box plot is positioned highest with a tight distribution, indicating consistently superior performance, followed by the U-Net model. The Atrous U-Net model exhibits a significantly lower median and a wider spread, signifying its systematic underperformance. This analysis underscores that the choice of architecture is a far more impactful factor than band selection.

The influence of the target class, shown in the bottom subplot, is the most pronounced. The inherent separability of the classes appears to be a dominant factor in shaping the F1-score distributions. The distributions for Pome fruits, Stone fruits, and Industrial peach are characterized by high median F1-scores and minimal spread, indicating that these classes are consistently easy to classify. In contrast, the boxplots for Nut trees and Vineyards show significantly lower medians and much broader distributions, highlighting their inherent difficulty and the high variability in classification performance. In summary, the F1-score is most sensitive to the intrinsic properties of the class being identified, followed by the model architecture, while band selection has a comparatively negligible effect on the overall outcome.

Despite the good overall performance of several deep learning models and/or band selection approaches, the proximity of their mean F1-scores makes it difficult to draw conclusions based on descriptive statistics alone. Therefore, a formal statistical testing framework was adopted to rigorously examine whether the observed differences are statistically significant.

Based on a preliminary statistical evaluation of the F1-score data, the assumptions of a parametric analysis of variance (ANOVA) were assessed. The Shapiro–Wilk test for normality of the residuals yielded p < 0.0001, well below the conventional threshold of α = 0.05. This result rejects the null hypothesis of normality, indicating that the residuals are not normally distributed. In contrast, Levene's test for homogeneity of variances produced p = 0.9811, well above the significance level, so this assumption is satisfied. Because the fundamental assumption of normality was violated, a parametric test such as ANOVA would be inappropriate; therefore, the non-parametric Kruskal–Wallis test was adopted instead.
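The assumption checks can be reproduced with SciPy, as sketched below. The residuals are assumed to be deviations of each F1-score from its group mean (a one-way model). The paper does not specify the exact model used to obtain them. `scipy.stats.levene` uses the median-centred (Brown–Forsythe) variant by default. The function name and α are hypothetical parameters.

```python
import numpy as np
from scipy import stats

def check_anova_assumptions(groups, alpha=0.05):
    """Hypothetical helper. groups: list of 1-D arrays of F1-scores, one per factor level."""
    # One-way residuals: deviation of each score from its own group mean
    residuals = np.concatenate([np.asarray(g, dtype=float) - np.mean(g) for g in groups])
    _, p_normal = stats.shapiro(residuals)   # H0: residuals are normally distributed
    _, p_levene = stats.levene(*groups)      # H0: equal variances across groups
    return {
        "shapiro_p": p_normal,
        "levene_p": p_levene,
        "use_kruskal": p_normal < alpha,  # fall back to a rank-based test if normality fails
    }
```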

The Kruskal–Wallis test was conducted to assess whether the band selection strategy had a significant effect on F1 performance. The results indicated no statistically significant differences among groups. Consistently, post-hoc pairwise comparisons using the Mann–Whitney U test with Holm correction confirmed the absence of significant differences across all pairings (Table 10). This suggests that the choice of band selection method did not substantially influence model performance.

This finding aligns with the preliminary boxplot analysis, which visually suggested a high degree of similarity in the performance distributions of the different band selection methods. Because the omnibus test showed no significant overall effect, post-hoc comparisons were not strictly required; the pairwise results in Table 10 are reported for completeness. The results confirm that, within the context of this study, the choice of band selection strategy does not exert a significant influence on the models' classification performance.

The Kruskal–Wallis test revealed statistically significant differences among the model architectures. Post-hoc pairwise Mann–Whitney U tests with Holm correction (Table 11) indicated that the Atrous U-Net significantly underperforms compared with both the U-Net++ and the standard U-Net. No significant differences were observed between the U-Net++ and the standard U-Net.

The Kruskal–Wallis test indicated statistically significant differences among crop classes. Post-hoc pairwise Mann–Whitney U tests with Holm correction (Table 12) revealed that Nut trees, Pome fruits, and Industrial peach were involved in the most pronounced pairwise performance differences. In particular, Nut trees vs. Pome fruits, Pome fruits vs. Stone fruits, and Pome fruits vs. Industrial peach all showed highly significant differences. Conversely, comparisons involving Vineyards, as well as Stone fruits vs. Industrial peach, did not reach significance.
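The omnibus test and post-hoc procedure applied to each factor (band selection, architecture, class) can be sketched with SciPy. Holm's step-down adjustment is implemented directly for transparency. Libraries such as statsmodels provide an equivalent routine, but it is not used here. Two-sided Mann–Whitney tests are an assumption, since the alternative hypothesis is not stated. Function names are hypothetical.

```python
import itertools
import numpy as np
from scipy import stats

def holm_adjust(pvalues):
    """Holm step-down adjusted p-values (monotone, capped at 1)."""
    p = np.asarray(pvalues, dtype=float)
    m = p.size
    adjusted = np.empty(m)
    running_max = 0.0
    for rank, idx in enumerate(np.argsort(p)):
        # Multiply the k-th smallest p-value by (m - k) and enforce monotonicity
        running_max = max(running_max, (m - rank) * p[idx])
        adjusted[idx] = min(1.0, running_max)
    return adjusted

def kruskal_with_posthoc(groups):
    """Hypothetical helper. groups: dict level name -> 1-D array of F1-scores."""
    h_stat, p_omnibus = stats.kruskal(*groups.values())
    pairs = list(itertools.combinations(groups, 2))
    raw = [stats.mannwhitneyu(groups[a], groups[b], alternative="two-sided")[1]
           for a, b in pairs]
    return h_stat, p_omnibus, dict(zip(pairs, holm_adjust(raw)))
```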

The statistical analysis, combined with the outcomes of Section 3.4, confirms that the choice of architecture significantly affects hyperspectral classification performance, whereas compact band subsets can be adopted without a statistically significant loss in accuracy. The statistical evidence further supports the robustness of the proposed methodology and the reliability of the performance comparisons made in this study.

4. Discussion

The comparative evaluation demonstrates that both network architecture and spectral representation decisively shape hyperspectral crop classification performance. U-Net++ frequently yields the most robust results, particularly when coupled with ACS-based subsets. Moreover, regardless of the band selection scheme, U-Net++ performs marginally better than its counterparts. These findings underscore the importance of considering architecture–feature space interactions rather than attributing performance differences to architecture alone.

An equally important outcome is the demonstration that judicious dimensionality reduction enhances parsimony without compromising predictive accuracy. In particular, ACS and SHAP reduce the spectral dimensionality by nearly 70% and over 90%, respectively, while preserving nearly all discriminative power. The advantages of dimensionality reduction [40] and band selection [45] have been reported in similar studies. Beyond computational savings, this compactness promotes interpretability and operational viability, particularly in large-scale monitoring pipelines.

The strict parcel-based spatial holdout with buffer enforcement addresses the pervasive risk of spatial autocorrelation, reducing the optimistic bias of the performance estimates. The appropriate procedure for sample selection remains an important research topic [46]. This methodological rigor strengthens the validity of our reported accuracies and enhances comparability with future work. The finding that class separability (e.g., industrial peach vs. nut trees) strongly influences classification accuracy further illustrates that the challenge lies not only in architectural design but also in the inherent spectral properties of the target crops.

At the same time, several limitations remain that define natural directions for future research. The reliance on a single EnMAP acquisition constrains temporal generalization, although the chosen site includes diverse perennial fruit crops with high intra-class variability. Extending this framework to multi-temporal EnMAP stacks will enable insights into seasonal crop dynamics and improve generalizability across growing seasons, as already demonstrated in [47].

Similarly, while our focus on U-Net variants allowed for controlled architectural comparisons, evaluating lightweight or edge-optimized segmentation models would further strengthen the operational applicability of the framework, particularly in resource-constrained environments such as UAVs or mobile ground platforms. Recent research supports such approaches [48].

Overall, this study clarifies the conditional strengths of U-Net variants and highlights the operational value of compact spectral inputs, while also identifying methodological extensions that can further enhance the scalability and transferability of hyperspectral crop classification.