Abstract

The main objective of the study is to present the latest trends and research directions in the field of optimization of logistics systems with Discrete-Event Simulation (DES) and Deep Learning (DL). This research area is highly relevant for several reasons. On the one hand, in the modern Industry 4.0 concept, simulation tools, especially Discrete-Event Simulations, are increasingly used to model material flow processes. On the other hand, using Artificial Intelligence (AI), especially Deep Neural Networks (DNNs), to evaluate simulation results significantly enhances the applicability and effectiveness of such simulations. At the same time, the results of Discrete-Event Simulations can also serve as synthetic datasets for training DNNs, which creates entirely new opportunities for both scientific research and practical applications. As a result, interest in combining Discrete-Event Simulation with Deep Learning in logistics has increased significantly in recent years, giving rise to multiple different approaches. The main contribution of this paper is that, through a review of the relevant literature, it provides an overview and systematization of the state-of-the-art methods and approaches in this developing field. Based on the results of the literature review, the study also presents the evolution of the research trends and identifies the most important research gaps in the field.

1. Introduction

In logistics, a wide range of simulation types is applied to model various material flow processes, both internal and external. Among these approaches, Discrete-Event Simulation has become one of the most widely used for modelling logistics systems in recent years, as it has become an integral part of Digital Twins (DTs) [1], which are themselves a fundamental component of the Industry 4.0 methodology. The defining characteristic of Discrete-Event Simulation is that it models a system as a sequence of events, and this event sequence represents the changes in the states of the model. This makes DES especially suitable for modelling logistics and material flow systems, as these are characterized by well-defined system states (good examples of this are the states of storage places, the states of material handling machines, the discrete positions of cargo in the material handling system at any given time, the states of production lines, etc.).
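The event-based view can be illustrated with a minimal sketch. The following hypothetical model (not taken from any of the tools or studies discussed in this paper) simulates a single material handling station with a FIFO buffer: a future-event list ordered by time drives the simulation clock, and the system state (buffer contents, machine busy or idle) changes only when an arrival or departure event is processed. The exponentially distributed interarrival and processing times, as well as the function name `simulate_station`, are illustrative assumptions.

```python
import heapq
import random


def simulate_station(n_parts, mean_interarrival, mean_service, seed=0):
    """Hypothetical single-machine station with a FIFO buffer.

    The state (buffer contents, machine busy/idle) changes only at events,
    so the clock jumps directly from one event to the next.
    Exponential interarrival and service times are an assumption.
    """
    rng = random.Random(seed)
    events = []  # future-event list: (time, sequence number, event type, part id)
    counter = 0

    def schedule(time, kind, part):
        nonlocal counter
        heapq.heappush(events, (time, counter, kind, part))
        counter += 1  # tie-breaker for events scheduled at the same time

    # Schedule all part arrivals up front
    t = 0.0
    for part in range(n_parts):
        t += rng.expovariate(1.0 / mean_interarrival)
        schedule(t, "arrival", part)

    buffer, busy = [], False
    arrival_time, waits, clock = {}, [], 0.0

    while events:
        clock, _, kind, part = heapq.heappop(events)
        if kind == "arrival":
            arrival_time[part] = clock
            if busy:
                buffer.append(part)  # state change: buffer grows
            else:
                busy = True          # state change: machine starts working
                waits.append(0.0)
                schedule(clock + rng.expovariate(1.0 / mean_service), "departure", part)
        elif buffer:                 # departure with parts waiting: start the next one
            nxt = buffer.pop(0)
            waits.append(clock - arrival_time[nxt])
            schedule(clock + rng.expovariate(1.0 / mean_service), "departure", nxt)
        else:                        # departure with an empty buffer: machine goes idle
            busy = False

    return {"makespan": clock, "mean_wait": sum(waits) / len(waits)}


result = simulate_station(n_parts=1000, mean_interarrival=1.0, mean_service=0.8)
print(result)
```

Between two consecutive events nothing is computed at all, which is what distinguishes this paradigm from time-stepped simulation and why well-defined discrete states keep such models compact.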

The widespread utilization of Discrete-Event Simulation for modelling material flow processes is reflected not only in academic research but also in industrial applications, as it has become standard practice for companies to use DES models for the preliminary evaluation of a planned material flow system. Such models can aid, for example, in optimizing factory layouts, in selecting the proper type and number of material handling machines, in optimizing the size of the warehouse and the storage areas, etc. Importantly, these models facilitate both the optimization of a logistics system before its actual implementation and, through their application as DTs during the operational phase, the short- and medium-term forecasting of potential problems.

With the high-profile advancements in Deep Learning over the past couple of years, combining DES with DNNs has become a natural next step in the optimization of logistics systems. Consequently, a wide variety of approaches that use some combination of Discrete-Event Simulation and Deep Learning have emerged in this research area in the last few years. This also justifies the need for the current study: the dynamically evolving methods and approaches have made this research field increasingly difficult to navigate, while the few existing reviews tend to have a broader and more general scope (for example, in [2], the authors examined the literature from the broader perspective of Machine Learning (ML)).

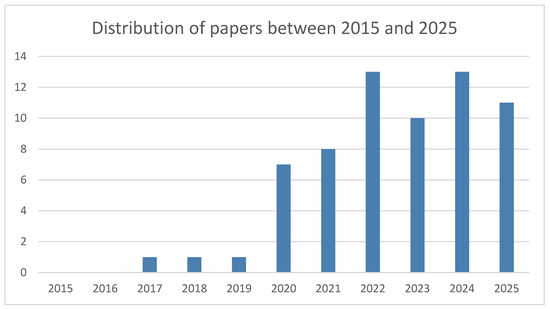

The main goal of the current paper was to provide an overview of the different approaches for combining DES with DNNs for the optimization of logistics systems, based on a comprehensive review of the relevant scientific literature. To focus on the latest research trends, the review covered the period from 2015 to the present, as the application of the Industry 4.0 concept and the widespread utilization of Deep Learning techniques both became defining trends in the second half of the 2010s (the DL paradigm itself is of course much older, but the spectacular rise in Deep Learning applications started in the early 2010s, with significant successes achieved through the application of Convolutional Neural Networks (CNNs)). As the yearly distribution of the included papers in Section 4 shows, this was a justified decision: no eligible papers were identified for 2015 and 2016, while from 2017 to 2019, only a single paper was found per year.

An additional aim of the current study was to provide a systematization of the different approaches identified within the conducted review. Based on this systematization, a further goal was to identify research gaps and promising future research directions in this rapidly changing field.

The paper is structured into six main sections. After this introduction, Section 2 describes the advantages resulting from the combined use of Discrete-Event Simulation and Deep Learning in logistics. Section 3 presents the methodology applied in the review. Section 4 then presents the actual literature review and its results, while Section 5 contains the discussion. Finally, the conclusions are drawn in Section 6, after which the paper closes with a list of abbreviations and a list of the cited literature.

2. Advantages of the Combination of Discrete-Event Simulation and Deep Learning in Logistics

2.1. The Significance of Discrete-Event Simulation in Logistics

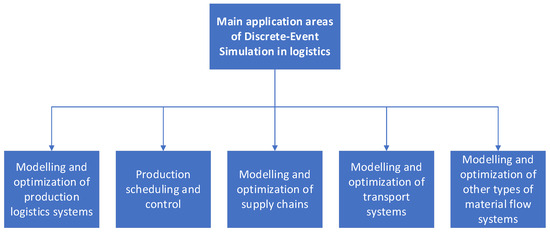

As previously mentioned, Discrete-Event Simulations have recently become an integral part of Digital Twins [1]. This development has significantly increased the utilization of DES, as there is a growing need to develop decision-making systems based on DTs [3]. For logistics and material flow systems, this trend translates into a wide application area for DES, as represented in Figure 1. In fact, DES had already been applied to these tasks before the emergence of the DT concept, but the latter significantly increased interest in the former for the reasons described above.

Figure 1.

The main application areas of Discrete-Event Simulation in logistics.

As the figure shows, production logistics represents a significant application area for DES, which is not surprising given the fact that DTs are most closely related to the Industry 4.0 concept. However, DES is also applied for supply chain optimization, for the modelling of transport systems, and for the optimization of other types of material flow systems as well. The latter includes application areas such as healthcare logistics, postal logistics, recycling logistics, etc.

There are multiple reasons behind this wide field of applications. As previously mentioned, one key reason is that the DES paradigm is especially well suited for modelling logistics and material flow systems, as these are usually characterized by well-defined system states. Another reason is the availability of a relatively large number of user-friendly Discrete-Event Simulation environments. These software tools usually provide an easily accessible graphical user interface with drag-and-drop features, which means that simulation models of even large-scale, complex material flow systems can be constructed with relative ease and in a comparatively short time. In addition, while the control methods and algorithms in complex models (for example, those for production control or AGV (Automated Guided Vehicle) routing) usually require additional programming, these can also be implemented inside such simulation environments. Examples of frequently used Discrete-Event Simulation environments include Siemens Tecnomatix Plant Simulation, Arena, AnyLogic, FlexSim, and many others. Multiple open-source DES environments are also available, such as Salabim, JaamSim, and CloudSim [4]. Besides the previous reasons, an additional factor behind the widespread utilization of the DES paradigm lies in its flexibility, as these software tools can usually be applied to model a wide variety of material flow systems.

2.2. The Advantages Resulting from the Combination of Discrete-Event Simulation and Deep Learning in Logistics

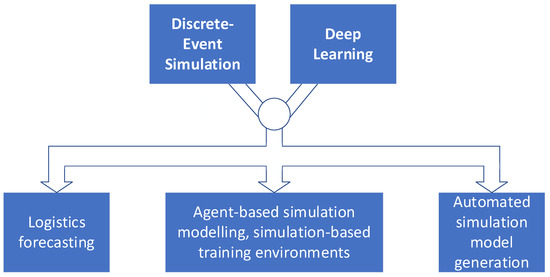

As previously described, the DES paradigm has many application areas in logistics. However, DES models also have some limitations. One of the most obvious is that, although most simulation environments provide a user-friendly graphical interface that makes building complex models relatively simple, designing the methods and algorithms that control the operation of the various objects in the model can require significant additional development work, depending on the complexity of the problem. In addition, while the model structure itself can often be created in a relatively short time, doing so usually still requires experience in the given application area. Furthermore, simulation models must always be customized for the given problem, and running the simulation itself can take a non-negligible amount of time if the model is large, especially when a series of simulation experiments is conducted. One of the main advantages of combining Discrete-Event Simulation with Deep Learning is that it can provide solutions to these problems while also opening entirely new possibilities. These are presented below in Figure 2 and elaborated afterwards.

Figure 2.

The main application possibilities of the combined use of Discrete-Event Simulation and Deep Learning.

Logistics forecasting: The most straightforward application of Deep Neural Networks in combination with DES is forecasting based on training data generated by one or more simulations. DES models alone can also be applied for this purpose; however, as mentioned, in the case of complex models, running the simulation can take a significant amount of time, especially if a series of simulation experiments with variable parameters is conducted. The advantage of training a DNN on the results of the simulation experiments is that the simulations have to be conducted only once; after the training phase is completed, the neural network can provide forecasts quickly on its own. The training of the DNN can of course also require significant time and resources, but once it is accomplished, no further training or simulation is required as long as the environment remains unchanged, which can make this a better solution in the long run. DNNs also have limitations: for example, if the operational environment changes, the simulation model has to be reconfigured accordingly, and both the simulation experiments and the training of the DNN have to be repeated. However, to a lesser extent, this is also a problem when the DES model is used alone. Another critical factor is the accuracy of the DNN, which has to reach a sufficiently high level at the end of training without overfitting. These limitations and factors can, however, be managed through proper planning and implementation.
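The surrogate idea can be sketched as follows, under deliberately simplified assumptions. The function `toy_simulation` is a hypothetical stand-in for an expensive DES run (it returns a noisy M/M/1 mean queueing time for a given utilization instead of executing a real model). A small feed-forward network with two hidden layers, in line with the Deep Learning criterion used in this review, is then fitted once to the simulated samples, after which each forecast only requires a forward pass. Plain NumPy with full-batch gradient descent is used purely to keep the example self-contained; a practical implementation would normally rely on an established DL framework.

```python
import numpy as np


def toy_simulation(rho, rng):
    """Hypothetical stand-in for one DES experiment: noisy mean queueing time
    of an M/M/1 station with service rate 1 and utilization rho."""
    return rho / (1.0 - rho) + rng.normal(0.0, 0.05)


def train_surrogate(X, y, hidden=(16, 16), lr=0.02, epochs=4000, seed=0):
    """Fit a network with two tanh hidden layers by full-batch gradient
    descent on the mean squared error; returns (weights, biases), losses."""
    rng = np.random.default_rng(seed)
    sizes = [X.shape[1], *hidden, y.shape[1]]
    W = [rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
    b = [np.zeros(n) for n in sizes[1:]]
    losses = []
    for _ in range(epochs):
        acts = [X]  # forward pass, keeping activations for backpropagation
        for i in range(len(W) - 1):
            acts.append(np.tanh(acts[-1] @ W[i] + b[i]))
        err = acts[-1] @ W[-1] + b[-1] - y
        losses.append(float(np.mean(err ** 2)))
        grad = 2.0 * err / len(X)  # dLoss/dOutput
        for i in reversed(range(len(W))):  # backward pass, output layer first
            gW, gb = acts[i].T @ grad, grad.sum(axis=0)
            if i > 0:  # propagate through layer i, then through the tanh
                grad = (grad @ W[i].T) * (1.0 - acts[i] ** 2)
            W[i] -= lr * gW
            b[i] -= lr * gb
    return (W, b), losses


def predict(params, X):
    """Forward pass only: no simulation run is needed at forecast time."""
    W, b = params
    h = X
    for i in range(len(W) - 1):
        h = np.tanh(h @ W[i] + b[i])
    return h @ W[-1] + b[-1]


# 1) Run the (toy) simulation once for a grid of input parameters
rng = np.random.default_rng(42)
X = np.linspace(0.1, 0.8, 50).reshape(-1, 1)
y = np.array([[toy_simulation(r, rng)] for r in X[:, 0]])
# 2) Train once, 3) then forecast without further simulation runs
params, losses = train_surrogate(X, y)
print(predict(params, np.array([[0.5]])))
```

If the modelled environment changes, both steps 1 and 2 have to be repeated, which mirrors the limitation discussed above.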

Agent-based simulation modelling, simulation-based training environments: A more advanced application of Deep Learning models is their use as agents in a Discrete-Event Simulation environment. In such applications, some form of Deep Reinforcement Learning is usually applied to train the agent or agents towards an optimal policy, and consequently the DES model itself usually also serves as the training environment. The general advantage of using DL models as agents is that they can learn complex strategies that would be hard to replicate with heuristics or even with other types of Machine Learning approaches. This is especially true for more complex DES models, where a large number of variables have to be considered to achieve the optimal result for a given parameter or set of parameters. In theory, under very specific circumstances, DL agents trained in a DES environment could also be deployed in real life, for example, in production scheduling, where the real system can also be adequately described with discrete system states. From the perspective of simulation modelling, another advantage of such agents is that, in certain cases, their training may generalize well enough to allow their application in multiple different DES models, which can significantly reduce the required development time.
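The role of a DES model as a training environment can be sketched with a hypothetical, deliberately tiny example. `RoutingEnv` below simulates parts arriving at two parallel machines of different speeds; at every arrival event the agent chooses a machine, the clock then jumps to the next decision event, and the reward is the negative waiting time of the routed part. The `reset()`/`step()` interface loosely mirrors the convention popularized by Gym-style RL libraries, but no such library is used, and all parameters are assumptions. In an actual DRL setup, a learning agent (e.g., a DQN) would replace the hand-written policies in the usage lines.

```python
import random


class RoutingEnv:
    """Hypothetical toy DES wrapped as an RL environment.

    Parts arrive at two parallel machines; at each arrival event the agent
    routes the part to machine 0 or 1. Reward = -(waiting time of that part).
    """

    def __init__(self, n_parts=200, mean_interarrival=1.0, service_means=(1.2, 2.0), seed=0):
        self.n_parts = n_parts
        self.mean_interarrival = mean_interarrival
        self.service_means = service_means
        self.seed = seed

    def reset(self):
        self.rng = random.Random(self.seed)
        self.clock = 0.0
        self.free_at = [0.0, 0.0]  # time at which each machine becomes idle
        self.arrived = 0
        self._advance_to_next_arrival()
        return self._observation()

    def _advance_to_next_arrival(self):
        # Event-driven time advance: jump straight to the next decision event
        self.clock += self.rng.expovariate(1.0 / self.mean_interarrival)
        self.arrived += 1

    def _observation(self):
        # State: remaining work queued on each machine at the current event time
        return tuple(max(0.0, t - self.clock) for t in self.free_at)

    def step(self, action):
        start = max(self.clock, self.free_at[action])
        wait = start - self.clock
        self.free_at[action] = start + self.rng.expovariate(1.0 / self.service_means[action])
        done = self.arrived >= self.n_parts
        if not done:
            self._advance_to_next_arrival()
        return self._observation(), -wait, done


def rollout(env, policy):
    """Run one episode with a given policy and return the total reward."""
    obs, total, done = env.reset(), 0.0, False
    while not done:
        obs, reward, done = env.step(policy(obs))
        total += reward
    return total


env = RoutingEnv(n_parts=500, seed=3)
print(rollout(env, lambda obs: 0))                             # always machine 0
print(rollout(env, lambda obs: 0 if obs[0] <= obs[1] else 1))  # least backlog
```

Because the environment only advances between decision events, every `step()` call corresponds to exactly one agent decision, which keeps the interaction loop simple regardless of how much simulated time passes in between.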

Automated simulation model generation: Probably the most advanced form of using Deep Learning in combination with Discrete-Event Simulation is automated simulation model generation. As the name implies, this is based on generative AI, usually either in the form of Generative Adversarial Networks (GANs) or Large Language Model (LLM)-based approaches. The greatest advantage of being able to generate simulation models automatically is that it would enable the application of DES on an even wider scale and with drastically reduced development times. This would be especially important for logistics, where the operational environment can change rapidly and requires fast reactions from decision makers. The wide-scale use of automated simulation model generation would also democratize the application of DES, as much less experience and background knowledge would be needed to generate simulation models this way. However, this is a relatively new research area, and much more work is needed before the technology can be deployed on a wide scale.

It must be noted that other forms of Machine Learning and even heuristics can also be applied to at least partially provide the previously described advantages in the context of DES. However, Deep Learning methods are especially well suited to solve complex multi-variable problems, which usually characterize the field of logistics simulations. It also has to be mentioned that while the possible joint application areas of DES and DL were described separately, in reality, these can also overlap with each other (for example, DNNs can be trained for logistics forecasting on DES models using DNN-based agents). Finally, it must also be noted that other less frequently applied approaches also exist for the combination of DES and DL. For example, one such approach is when a DNN is applied for the pre-processing of input data for the DES model. Another nascent approach is the automated evaluation of the simulation results with the use of generative AI.

3. Description of the Applied Methodology

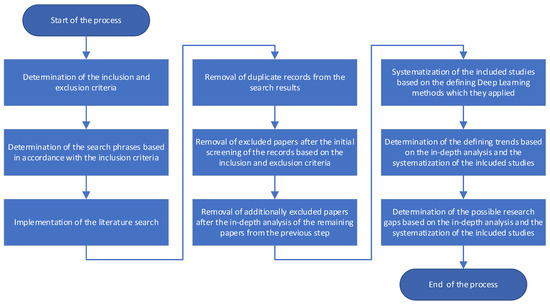

The literature review followed a methodical approach aimed at identifying scientific papers that specifically apply both Discrete-Event Simulation and Deep Learning to solve logistics problems, or that at least provide a conceptual framework facilitating the joint application of the two. The main challenge was to identify papers exactly matching these criteria, as the number of articles dealing with simulation and/or Machine Learning in general is very large, even if only logistics-related works are considered. Therefore, strict inclusion and exclusion criteria were applied during the search, as described in Section 3.1. The entire methodology of the review process, including the systematization of the papers and the identification of the research gaps, is presented in Figure 3 in the form of a process flowchart.

Figure 3.

The process flowchart of the methodology applied for the literature review and analysis.

3.1. Inclusion and Exclusion Criteria

In accordance with the previous, the inclusion criteria applied during the literature review were the following:

- Only original research papers were considered.

- The included studies had to provide a solution to a logistics or material flow problem through the combined application of Discrete-Event Simulation and Deep Learning. In the context of Deep Learning, this meant including only those works that either at least partially utilized Artificial Neural Networks (ANNs) with a minimum of two hidden layers (architectures such as Long Short-Term Memory (LSTM) are, by definition, considered Deep Neural Networks) or provided a framework that facilitates such applications. In relation to logistics and material flow, however, a wider scope of problems was considered, the only specific criterion being that the work had to deal with the optimization of some type of logistics problem (e.g., warehouse management, production scheduling, transport optimization, healthcare logistics, etc.).

- The included studies had to be published between 2015 and 2025 (in some cases, due to publication practice, this criterion also allowed the inclusion of conference papers from 2014).

During the initial screening of the search results, papers that did not meet all of the above inclusion criteria were excluded. During the in-depth analysis of the remaining works, a number of additional articles were also excluded, as closer examination revealed that they failed to meet at least one of the listed criteria. This usually occurred in relation to the second criterion, as it was not always immediately obvious whether a given research work actually applied Deep Learning. For example, if a study utilized ANNs with only a single hidden layer, it could not be considered a Deep Learning method, whereas if two or more hidden layers were applied, it fulfilled this criterion. Due to this and similar issues, the biggest challenge during the review proved to be separating Deep Learning-based approaches from other types of ML-centred methods.

3.2. Search Phrases and General Results

The literature search itself was carried out using the Scopus database (through Scopus Advanced Search), the Google Scholar search engine, and the Web of Science database. In each case, multiple search phrases were applied to improve coverage, including the following: “discrete event simulation” AND “logistics” AND “deep learning”, “discrete event simulation” AND “material flow” AND “deep learning”, “discrete event simulation” AND “logistics” AND “generative AI”, “discrete event simulation” AND “material flow” AND “generative AI”. The latter two search phrases were included because in the past few years, mainly due to advancements in Large Language Models (LLMs), generative AI has gained prominence in many research areas. Its role can be especially important in relation to Discrete-Event Simulation, as the automated generation of simulation models would have clear and significant advantages. For this reason, it was important to search specifically for generative AI applications in logistics-centred Discrete-Event Simulation as well.

Overall, the literature search yielded 9270 records. After the removal of duplicates, this number was reduced to 7809. Out of these, 7680 were excluded based on the exclusion criteria after title and abstract screening. The remaining 129 papers were subjected to in-depth analysis based on the inclusion and exclusion criteria, which resulted in the exclusion of another 64 papers. This meant that at the end of the process, 65 papers were included in the current study. The following section provides a detailed analysis of the included papers together with their systematization.

4. Analysis of the Identified Literature

4.1. Systematization of the Included Papers

When analyzed from a chronological perspective, the number of included papers shows a sharp increase from 2019 to 2020. This was largely expected: as previously mentioned, the application of Deep Learning techniques in general became a mainstream trend around the middle of the 2010s. A yearly breakdown of the number of papers is shown in the following diagram (Figure 4).

Figure 4.

The number of relevant papers on a yearly basis from 2015 to 2025.

As Figure 4 shows, no eligible papers were found before 2017. From 2017 to 2019, only one paper was identified per year, while 2020 represents a breakout year for the field, with seven eligible papers. After 2020, the yearly number of papers never fell below seven, and 2022 and 2024 were especially productive years, with 13 papers published in each. It must also be mentioned that while 11 papers were found for 2025 at the time of writing, this count might not include some papers from Q3 and completely excludes Q4, so the final number for 2025 will probably be higher. Overall, the yearly distribution clearly shows a significant uptake in research interest starting from 2020, and although there was some variation in the yearly numbers afterwards, research interest has remained consistently high for more than five years.

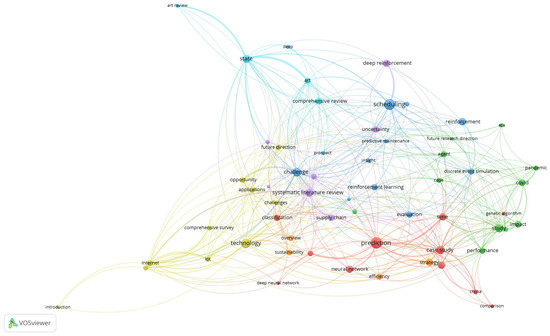

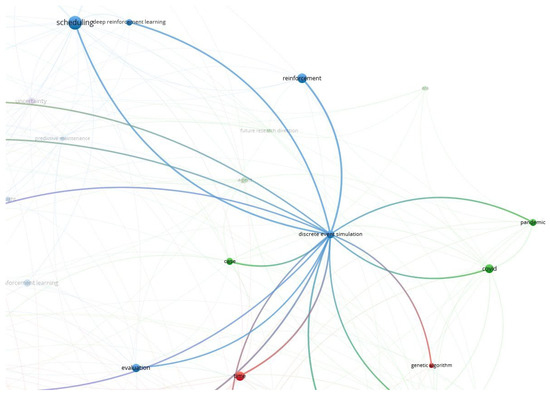

Additional conclusions can also be drawn from the co-occurrence map of typical words and word compositions in the titles of the included papers. Figure 5 shows the complete co-occurrence map, created with the VOSviewer software (version 1.6.20) from Scopus data for the search phrase “discrete event simulation” AND “logistics” AND “deep learning”. Figure 6 presents an enlarged section of this map, containing the word composition “discrete event simulation” and several of its most important connections. As the latter figure shows, “discrete event simulation” has the strongest connections with “scheduling”, “reinforcement”, and “deep reinforcement learning”. This suggests that the most significant application area of Discrete-Event Simulation in logistics is related to scheduling problems, while the most typical Deep Learning approach used in conjunction with DES is Deep Reinforcement Learning (DRL). The systematization of the included literature presented below confirms these conjectures.

Figure 5.

The co-occurrence map of the 59 most relevant words and word compositions from the paper titles for the search phrases “discrete event simulation” AND “logistics” AND “deep learning” based on Scopus (made with the VOSviewer software).

Figure 6.

An enlarged part of the co-occurrence map showing the strongest connections between the word composition “discrete event simulation” and some of the related concepts (made with the VOSviewer software).
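The counting behind a co-occurrence map can be illustrated with a minimal sketch: two terms co-occur whenever they appear in the same title, and the link strength between them is the number of such titles. The titles below are invented for illustration only (they are not records from the review), and the case-insensitive substring matching is a simplifying assumption; VOSviewer's own term extraction, normalization, and mapping are considerably more elaborate.

```python
from collections import Counter
from itertools import combinations


def cooccurrence(titles, terms):
    """Count, for every pair of terms, the number of titles containing both."""
    counts = Counter()
    for title in titles:
        text = title.lower()
        present = sorted(t for t in terms if t in text)  # simple substring match
        for pair in combinations(present, 2):
            counts[pair] += 1
    return counts


# Invented example titles (for illustration only)
titles = [
    "Deep reinforcement learning for production scheduling with discrete event simulation",
    "Discrete event simulation based scheduling of AGV fleets",
    "A deep learning surrogate for warehouse discrete event simulation",
]
terms = ["discrete event simulation", "scheduling", "deep reinforcement learning", "deep learning"]

for (a, b), n in cooccurrence(titles, terms).most_common():
    print(f"{a} -- {b}: {n}")
```

Sorting the terms found in each title ensures that a pair is always stored under the same key, regardless of the order in which the terms appear.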

Table 1 contains the systematization of the papers based on the applied Deep Learning methodology. This classification reaffirms the assumption, based on the co-occurrence map, that the most frequently applied Deep Learning methodology in conjunction with DES for solving logistics problems is indeed Deep Reinforcement Learning. More specifically, the most frequently applied method type is some form of Deep Q-Learning (DQL), often referred to as a Deep Q-Network (DQN), which is itself one of the most typical approaches within the broader concept of Deep Reinforcement Learning. This also highlights an unavoidable challenge in any systematization, namely that there will always be overlaps and borderline cases between different method classes. For example, some type of Deep Neural Network was obviously applied in all the included studies, but most of them used specific types of such networks; in the absence of a more specific description, the studies that used standard DNNs without further specification were placed in the “Deep Neural Network” category. Similarly, the concept of Deep Reinforcement Learning could also apply to some studies in other categories, but those were classified into other method classes based on their defining features. For example, studies centred on the use of Recurrent Neural Networks (RNNs) were grouped into the “Recurrent Neural Network” category. In other words, the categorization was mainly based on the defining features of the given studies.

Table 1.

Systematization of the papers in the literature review based on the applied Deep Learning methodology.

As the above systematization shows, 31 of the 65 papers can be strictly categorized into the Deep Reinforcement Learning main category, of which 21 utilized Deep Q-Learning. These 31 papers represent more than 47% of the included papers. Sixteen papers can be classified as using some kind of combined method, meaning that they either utilize a Deep Learning technique together with another type of optimization method or apply multiple Deep Learning approaches together. Seven studies fall into the “Generative AI” category. Unsurprisingly, these were all published in 2022 or later, which correlates with the recent uptake of research interest in generative AI. In addition, five papers utilized standard Deep Neural Networks, and four further studies were classified into the “Recurrent Neural Network” category. Finally, one paper each was assigned to the “Convolutional Neural Network” and the “Transformer” categories (while the latter study utilized a transformer-based architecture, this was not a Large Language Model; therefore, it was placed in a separate category from generative AI).

It is also worth mentioning that out of the 65 included studies, at least 15 focused on some form of scheduling problem, which translates to slightly more than 23% of the papers. This also confirms the other assumption based on the co-occurrence map analysis, namely that the most frequent application of DES in relation to logistics is solving some kind of scheduling problem, mostly production scheduling (the percentage would be even larger if application areas such as production control were taken into account, as these also naturally involve solving scheduling problems).
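The shares quoted in the two paragraphs above follow directly from the reported category counts; the short check below simply reproduces that arithmetic. The counts are copied from the text, and the dictionary labels are shorthand rather than the exact row names of Table 1.

```python
# Category counts as stated in the text (65 included papers in total)
counts = {
    "Deep Reinforcement Learning": 31,  # of which 21 used Deep Q-Learning
    "Combined methods": 16,
    "Generative AI": 7,
    "Deep Neural Network": 5,
    "Recurrent Neural Network": 4,
    "Convolutional Neural Network": 1,
    "Transformer": 1,
}
total = sum(counts.values())
shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
scheduling_share = round(100 * 15 / total, 1)  # at least 15 scheduling papers

print(total)
print(shares["Deep Reinforcement Learning"], scheduling_share)
```

The category counts add up to the 65 included papers, and the resulting shares (about 47.7% for DRL and about 23.1% for scheduling) match the percentages given in the text.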

4.2. Detailed Overview of the Included Papers

In the following section, the papers included in the literature review will be summarized following the systematization presented in Table 1.

4.2.1. Deep Reinforcement Learning: Deep Q-Learning

Müller et al. utilized a Deep Q-Network to achieve deadlock-free operation of an AGV system [5]. They applied the Siemens Tecnomatix Plant Simulation software (version 16.0) to implement the learning environment. The problem itself was formulated as a Markov Decision Process (MDP). The ANN had two hidden layers, with 64 and 32 neurons, respectively. While their initial simulation experiments produced many collisions, after reconfiguring the input and output layers and completing 400 training episodes, they achieved a state where no deadlocks occurred in the AGV system and only isolated collisions could be observed.

Rabe et al. applied a convolutional neural network with a Deep Q-Learning algorithm to train the developed agent on visual data acquired from the simulation [6]. The simulation model, which represented a logistics network, was implemented with the SimChain tool, which itself utilizes the Siemens Tecnomatix Plant Simulation software and a MySQL database. The goals of the simulation experiments were to use the DQN agent to reduce network costs and increase the service level, and to prove the feasibility of the general concept.

Feldkamp et al. used a Double Deep Q-Learning (DDQL) approach to optimize an AGV system in a modular production environment [7]. The modular production system, together with the AGVs, was modelled with the Siemens Tecnomatix Plant Simulation software. A unique aspect of the study was that the Deep Q-Learning algorithm, implemented in Python, communicated with the simulation software through a TCP/IP-based interface. The optimization focused on reducing lead times, and the trained agent outperformed simple heuristic decision rules.
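The kind of coupling described by Feldkamp et al. (a Python agent exchanging messages with a running simulation) can be sketched as a request-response loop. The newline-delimited JSON messages, the field names, and the toy policy below are hypothetical and are not the interface used in [7]. In a real setup, the simulation side would typically be implemented in the simulation tool's own scripting language and connect over TCP (for example via Python's `socket.create_server` and `socket.create_connection`); `socket.socketpair()` is used here only so that the example runs without opening a network port.

```python
import json
import socket
import threading


def send_msg(sock, obj):
    """Send one JSON message terminated by a newline (hypothetical framing)."""
    sock.sendall((json.dumps(obj) + "\n").encode("utf-8"))


def agent_server(sock, policy):
    """Agent side: answer every state message with an action until 'done'."""
    with sock, sock.makefile("r", encoding="utf-8") as reader:
        while True:
            line = reader.readline()
            if not line:
                break  # connection closed by the simulation side
            msg = json.loads(line)
            if msg.get("done"):
                break
            send_msg(sock, {"action": policy(msg["state"])})


def simulation_client(sock, states):
    """Simulation side: at each decision event send the state, wait for the action."""
    actions = []
    with sock, sock.makefile("r", encoding="utf-8") as reader:
        for state in states:
            send_msg(sock, {"state": state})
            actions.append(json.loads(reader.readline())["action"])
        send_msg(sock, {"done": True})
    return actions


def shorter_queue(state):
    """Hypothetical stand-in for a trained agent: pick the shorter of two queues."""
    return int(state["queue_a"] > state["queue_b"])


sim_end, agent_end = socket.socketpair()
agent = threading.Thread(target=agent_server, args=(agent_end, shorter_queue))
agent.start()
print(simulation_client(sim_end, [{"queue_a": 3, "queue_b": 1}, {"queue_a": 0, "queue_b": 2}]))
agent.join()
```

The simulation blocks at each decision event until the agent replies, so the simulated clock and the agent's decisions stay synchronized.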

Carvalho et al. also utilized the DDQL approach, combined with Discrete-Event Simulation, for truck dispatching in the mining sector [8]. The DES model provided the samples used to train the DDQN (Double Deep Q-Network) agent, and the agent in turn provided dispatching decisions to the simulation model through a feedback loop. The approach was tested under real conditions at a copper–gold mining complex.
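The difference between the standard DQN target and the Double DQN target can be shown in a few lines. In the common formulation sketched below, the standard target lets the target network both select and evaluate the next action, whereas Double DQN lets the online network select the action and the target network evaluate it, which reduces the overestimation of Q-values. The arrays stand for network outputs on a batch of next states; the networks themselves and the example numbers are hypothetical, and this is not the implementation used in the cited studies.

```python
import numpy as np


def dqn_targets(rewards, next_q_target, dones, gamma=0.99):
    """Standard DQN target: r + gamma * max_a' Q_target(s', a')."""
    return rewards + gamma * (1.0 - dones) * next_q_target.max(axis=1)


def double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.99):
    """Double DQN target: the online net selects a', the target net evaluates it."""
    best = next_q_online.argmax(axis=1)
    evaluated = next_q_target[np.arange(len(best)), best]
    return rewards + gamma * (1.0 - dones) * evaluated


# Hypothetical batch of two transitions (the second one ends the episode)
rewards = np.array([1.0, 0.0])
dones = np.array([0.0, 1.0])
next_q_online = np.array([[1.0, 2.0], [0.5, 0.1]])
next_q_target = np.array([[3.0, 1.5], [0.2, 0.9]])
print(dqn_targets(rewards, next_q_target, dones, gamma=0.9))
print(double_dqn_targets(rewards, next_q_online, next_q_target, dones, gamma=0.9))
```

For the first transition, the standard target uses the target network's maximum (3.0), while Double DQN evaluates the online network's preferred action with the target network (1.5), yielding a lower, less optimistic target.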

Altenmüller et al. utilized a DQN agent for the realization of autonomous production control of complex job shops under time constraints [9]. Their results were based on the modelling of a semiconductor wafer fab, which was implemented using Discrete-Event Simulation. The results demonstrated that Reinforcement Learning (RL) agents can be successfully applied to control order dispatching in a real-world-sized application.

Eriksson et al. used DES and DQN together for the modelling and production scheduling of a robot cell [10]. While the modelling was implemented with the Siemens Tecnomatix Plant Simulation software, the data was derived from a real robot production cell. Another aspect of the study was that the DQN agent was realized in MATLAB, which was connected to the DES model. One of the aims of the research was to minimize the energy use of the production cell while also meeting the production goals.

Tang and Salonitis used DQN and Duelling Double DQN (DDDQN) agents for optimizing the scheduling in reconfigurable manufacturing systems [11]. Discrete-Event Simulation was applied to model the system. A complex information exchange between the simulated manufacturing system and the agent was developed. For the DQN algorithm, the authors applied an ε-greedy policy for action selection, while a prioritized replay memory was also used in the learning process.
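The two mechanisms mentioned for this study, ε-greedy action selection and prioritized replay, can be sketched as follows. This is a generic, simplified illustration rather than the authors' implementation: sampling probabilities are proportional to the priority raised to the power alpha, priorities are derived from absolute TD errors, importance-sampling weights (N·P(i))^(−beta) are normalized by the batch maximum, and sampling is done in linear time with `random.choices` instead of the sum-tree usually used for large buffers. The values of alpha, beta, and the small constant added to priorities are common defaults, assumed here.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon, otherwise pick the action with the highest Q."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=q_values.__getitem__)


class PrioritizedReplay:
    """Simplified proportional prioritized replay memory (ring buffer)."""

    def __init__(self, capacity, alpha=0.6, eps=1e-5):
        self.capacity, self.alpha, self.eps = capacity, alpha, eps
        self.data, self.priorities = [], []
        self.pos = 0  # next slot to overwrite once the buffer is full

    def add(self, transition):
        # New transitions get the current maximum priority, so they are likely to be replayed soon
        p = max(self.priorities, default=1.0)
        if len(self.data) < self.capacity:
            self.data.append(transition)
            self.priorities.append(p)
        else:
            self.data[self.pos], self.priorities[self.pos] = transition, p
        self.pos = (self.pos + 1) % self.capacity

    def sample(self, batch_size, beta=0.4, rng=random):
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]
        idx = rng.choices(range(len(self.data)), weights=probs, k=batch_size)
        # Importance-sampling weights correct for the non-uniform sampling
        weights = [(len(self.data) * probs[i]) ** (-beta) for i in idx]
        max_w = max(weights)
        return idx, [self.data[i] for i in idx], [w / max_w for w in weights]

    def update(self, indices, td_errors):
        # Larger TD error -> higher priority -> replayed more often
        for i, err in zip(indices, td_errors):
            self.priorities[i] = abs(err) + self.eps


rng = random.Random(0)
memory = PrioritizedReplay(capacity=3)
for t in range(5):
    memory.add(("state", t, "action", "reward"))
memory.update([0], [10.0])
indices, batch, is_weights = memory.sample(4, rng=rng)
action = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.1, rng=rng)
```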

Dittrich and Fohlmeister used a Deep Q-Learning-based approach for the optimization of inventory control [12]. They used the SimPy framework for the modelling of the logistics system, which was a linear process chain. For the implementation of the Deep Q-Learning agent, the TensorFlow framework was applied with the use of the Keras-rl library. As a result of the study, they showed that the control of a complex process chain with up to ten elements was possible with the proposed method.

Silva and Azevedo used Deep Q-Learning for the optimization of production flow control [13]. With the aid of Discrete-Event Simulation, they simulated the CONWIP (Constant Work in Process) concept and a push protocol and compared the two based on multiple metrics. They applied the DQN algorithm for finding the optimal WIP (Work in Progress) cap and for determining how to maintain the throughput (TH) of the system.
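Why a WIP cap has to be tuned at all can be seen from a small, hypothetical CONWIP model. The sketch below assumes a closed serial line with unlimited raw material, exponential processing times, and FIFO buffers (assumptions, not the setting of [13]): a job may enter the first station only when the job that entered `wip_cap` positions earlier has left the last station. Raising the cap increases throughput, but with diminishing returns and at the cost of longer cycle times, consistent with Little's law (WIP = TH × CT); this trade-off is what a learning agent would have to navigate when choosing the cap.

```python
import random


def conwip_line(wip_cap, n_stations=3, mean_times=None, n_jobs=5000, seed=0):
    """Hypothetical closed serial line under CONWIP.

    Returns (throughput, mean cycle time) estimated over n_jobs jobs.
    """
    rng = random.Random(seed)
    mean_times = mean_times or [1.0] * n_stations
    finish = []                     # completion time at the last station, per job
    last_done = [0.0] * n_stations  # completion time of the previous job at each station
    total_cycle_time = 0.0
    for k in range(n_jobs):
        # A card is freed when job k - wip_cap leaves the line
        entry = finish[k - wip_cap] if k >= wip_cap else 0.0
        t = entry
        for j in range(n_stations):
            # Start when the job has arrived and station j has finished the previous job
            t = max(t, last_done[j]) + rng.expovariate(1.0 / mean_times[j])
            last_done[j] = t
        finish.append(t)
        total_cycle_time += t - entry
    return n_jobs / finish[-1], total_cycle_time / n_jobs


for cap in (1, 2, 4, 8, 16):
    th, ct = conwip_line(cap)
    print(f"WIP cap {cap:2d}: throughput {th:.3f}, cycle time {ct:.2f}, TH x CT {th * ct:.2f}")
```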

Hofmann et al. applied Deep Q-Learning to realize autonomous production control in a matrix production system [14]. They used Discrete-Event Simulation to model the production system. The scheduling problem was treated as a Markov Decision Process (MDP), which enabled the application of Reinforcement Learning. The neural network had four hidden layers, with 128 to 320 neurons each. The authors compared the performance of the network with a rule-based approach, and the former achieved a 4.4% improvement in throughput time over the latter.

Duhem et al. applied a Branching Duelling Q-Network (BDQ) to parameterize a Demand-Driven Material Requirements Planning (DDMRP) model [15]. The advantage of this approach was that the Q-function in the neural network is split into a “state-value” part (shared between all actions) and an “action-advantage” part (specific to each action). In addition, the approach could handle several products at the same time, treating each of them independently. Discrete-Event Simulation (implemented in Python) was used to model a DDMRP hybrid flowshop system, and the authors demonstrated the concept through a case study using this DES model.
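The split described above follows the dueling idea. Each action branch (for example, one branch per product) has its own advantage head, which is recombined with the shared state value. The sketch shows this aggregation, assuming the mean-subtracted form Q_d(s, a) = V(s) + A_d(s, a) − mean_a' A_d(s, a'). The function names and the one-branch-per-product mapping are illustrative assumptions.

```python
def branching_dueling_q(state_value, advantages):
    """Combine a shared state value with per-branch advantages.

    state_value: scalar V(s) shared by all branches
    advantages: one list of action advantages per branch (e.g. per product)
    Returns per-branch Q-values using mean-subtracted advantages.
    """
    q = []
    for branch in advantages:
        mean_adv = sum(branch) / len(branch)
        q.append([state_value + a - mean_adv for a in branch])
    return q


def greedy_joint_action(q_branches):
    # Each branch (sub-action dimension) is maximized independently
    return [max(range(len(b)), key=b.__getitem__) for b in q_branches]
```

Because each branch is maximized on its own, the number of network outputs grows linearly with the number of products rather than combinatorially with the joint action space.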

Lee et al. also used Deep Q-Learning to optimize scheduling in a matrix assembly system, focusing on the automotive industry [16]. They used Discrete-Event Simulation to assess the candidate actions suggested by the DQN agent and, like Hofmann et al., treated the problem as a Markov Decision Process. A distinctive feature of their matrix system, however, was its intensive use of Autonomous Mobile Robots (AMRs) and multipurpose workstations. The authors validated the proposed algorithm by comparing it with various dispatching rules.

Noriega et al. applied Double Deep Q-Learning to optimize real-time truck dispatching in an open-pit mine [17]. Discrete-Event Simulation was applied to model the open-pit truck and shovel environment. The AI agent was integrated into the simulation logic: it received the system’s current state as input and determined the truck–shovel assignment for the next cycle. Another important aspect of the study was that each truck was also considered an individual agent that collected an experience vector after completing an operating cycle. The proposed framework was evaluated in a case study based on real-world data from an iron ore deposit, with favourable results.
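What distinguishes Double DQN from plain DQN is how the bootstrap target is formed. The online network selects the best next action, and the target network evaluates it, which reduces the overestimation caused by taking a maximum over noisy estimates. A minimal sketch for a single transition follows. The function name and argument layout are hypothetical.

```python
def double_dqn_target(reward, next_q_online, next_q_target, gamma, done):
    """Double DQN bootstrap target for one transition.

    next_q_online: Q-values of the next state from the online network
    next_q_target: Q-values of the next state from the target network
    The online network selects the next action; the target network evaluates it.
    """
    if done:
        return reward  # no bootstrapping from a terminal state
    best = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best]
```

With `reward=1.0`, online values `[0.5, 2.0]`, target values `[10.0, 3.0]` and `gamma=0.9`, the target is 1 + 0.9 × 3 = 3.7, whereas plain DQN would take the target network's own maximum and return 10.0.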

Alexopoulos et al. applied a Deep Q-Network to short-term production scheduling [18]. The agent was trained, tested, and evaluated on a bicycle production model implemented with Discrete-Event Simulation in the LANNER WITNESS HORIZON software v23.1, and a custom Python 3 API was used to run the training. The task of the agent was to implement the optimal dispatching policy. When tested against multiple other algorithms, it provided the best results by a significant margin (in terms of makespan, its solution was 85% better than those found by all the other algorithms).

Waschneck et al. utilized Deep Q-Learning to optimize global production scheduling [19]. They used the DQN agent originally introduced by Google DeepMind, as provided by the keras-rl library. The factory simulation was implemented in MathWorks MATLAB, and the MATLAB API for Python was used to realize an OpenAI Gym interface for the simulation. The DQN agent was tested in multiple scenarios under different conditions and reached the level of expert knowledge within 2 days of training.

Lang et al. used Deep Q-Networks for the real-time scheduling of flexible job-shop production [20]. A distinctive aspect of their research was the use of the LSTM architecture for the two hidden layers of the DQN agent. The flexible job-shop problem was modelled with Discrete-Event Simulation using the Python library salabim. They compared the performance of the DQN agent with the GRASP (Greedy Randomized Adaptive Search Procedure) algorithm, and the results showed that the DQN agent found the better solution in every case.

Valet et al. used a standard DQN agent and two variations of it to solve the problem of opportunistic maintenance scheduling [21]. Both variations utilized a flexible opportunity window for scheduling the maintenance actions, and the second variant also applied a higher penalty for unnecessary waiting actions. Discrete-Event Simulation was used to model a real-world use case from semiconductor front-end wafer fabrication. The results showed that although the DQN agent could not outperform the corresponding benchmark heuristic in general, there may still be scenarios in complex manufacturing systems where it performs better than rigid rule-based heuristics.

Nikkerdar et al. used a Deep Q-Network to address the assembly line rebalancing problem [22]. The learning and operational environment was modelled with Discrete-Event Simulation using the FlexSim software version 23.1.2. The environment itself was a digital model of the IFactory, a modular learning factory located at the Intelligent Manufacturing Systems Centre of the University of Windsor. The Discrete-Event Simulation model communicated with the developed agent through a JavaScript Object Notation (JSON)-based protocol. The results of the study showed that the trained agent demonstrated scalability and generalization across diverse scenarios without requiring retraining, though some limitations were also identified.
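A JSON-based exchange of this kind can be pictured as two small messages per decision: a state snapshot sent by the simulation and an action returned by the agent. The sketch below assumes a hypothetical message schema (`type`, `time`, `station_loads`, `pending_tasks`, `task`, `station`) and a toy least-loaded-station rule standing in for the trained DQN. The actual fields and transport used with FlexSim in the cited study are not reproduced here.

```python
import json


def encode_state(sim_time, station_loads, pending_tasks):
    """Serialize a simulation snapshot for the agent (hypothetical schema)."""
    return json.dumps({
        "type": "state",
        "time": sim_time,
        "station_loads": station_loads,
        "pending_tasks": pending_tasks,
    })


def decode_action(message):
    """Parse the agent's reply and extract the (hypothetical) action fields."""
    msg = json.loads(message)
    if msg.get("type") != "action":
        raise ValueError(f"unexpected message type: {msg.get('type')!r}")
    return msg["task"], msg["station"]


def choose_action(state_message):
    """Toy agent: assign the first pending task to the least-loaded station."""
    state = json.loads(state_message)
    loads = state["station_loads"]
    station = min(range(len(loads)), key=loads.__getitem__)
    return json.dumps({"type": "action", "task": state["pending_tasks"][0], "station": station})
```

Keeping the protocol to plain text messages decouples the simulation software from the agent's language and framework, so either side can be replaced independently.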

Shi et al. used Deep Q-Learning for the intelligent scheduling of discrete automated production lines [23]. They tested the performance of their agent on a linear, a parallel, and a re-entrant production line. A Discrete-Event Simulation environment was built to serve as an efficient interaction environment for the agent. The results showed that the Deep Learning agent achieved scheduling performance competitive with heuristic scheduling methods on the linear, parallel, and re-entrant lines and showed good robustness to randomness in processing times.

Marchesano et al. applied a Deep Q-Network to dynamic scheduling in a flow shop [24]. The learning and operational environment was modelled with the AnyLogic Discrete-Event Simulation software version 8.9.6, while the agent was implemented with Reinforcement Learning for Java (rl4j), a framework that is part of the DeepLearning4J library. The authors carried out eight training experiments, varying the size of the network and two training hyperparameters. The experiments showed that, with a reward function focused on maximizing throughput, the system chose the shortest processing time (SPT) rule most of the time in every scenario.

Vespoli et al. presented a novel framework for WIP control that integrates Deep Reinforcement Learning and Discrete-Event Simulation [25]. In their solution, a DQN agent dynamically manages WIP levels in a CONWIP environment, learning optimal control policies through interaction with the Discrete-Event Simulation-based flow-shop environment. The agent was tested through extensive simulation experiments with varying parameters. The results showed robust performance in tracking the target throughput and in adapting to processing time variability, with acceptable Mean Absolute Percentage Error (MAPE) values.
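The MAPE figures mentioned above follow the standard definition: the mean of |actual − predicted| / |actual|, expressed in percent. In a throughput-tracking setting, one would plausibly take the target throughput as the "actual" value and the achieved throughput as the "predicted" one. That mapping is an assumption about how the metric was applied, and the function name is hypothetical.

```python
def mape(actual, predicted):
    """Mean Absolute Percentage Error, in percent.

    Undefined when an actual value is zero, so such inputs are rejected.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined for zero actual values")
    return 100.0 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)
```

For targets `[100, 200]` and achieved values `[90, 220]`, both periods deviate by 10%, so the MAPE is 10%.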

4.2.2. Deep Reinforcement Learning

Nahhas et al. used a Deep Reinforcement Learning-based approach to solve allocation problems in the supply chain [26]. Specifically, they used the Asynchronous Advantage Actor–Critic (A3C) algorithm and Proximal Policy Optimization (PPO). The supply chain was modelled with Discrete-Event Simulation using the open-source salabim library. A distinctive aspect of the study is that the authors used an image observation space based on Gantt charts. The results showed that both algorithms produced comparable solution quality in terms of the average time to sell and the makespan.

Lim and Jeong applied Deep Reinforcement Learning to optimize storage device utilization in a factory environment [27]. Specifically, they applied the PPO algorithm and compared it with the standard FIFO (first-in, first-out) approach. Discrete-Event Simulation was applied to model the factory environment using the FlexSim software. The results showed that the proposed Deep Reinforcement Learning approach can be used to optimize multiple simulation outcomes.
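PPO, which recurs throughout this subsection, updates the policy by maximizing a clipped surrogate objective. The probability ratio between the new and old policy is clipped to [1 − ε, 1 + ε], and the smaller of the clipped and unclipped terms is kept, so large policy jumps are not rewarded. The following minimal sketch computes this objective for a batch of samples. The function name and the default ε = 0.2 are illustrative assumptions.

```python
def ppo_clip_objective(ratios, advantages, clip_eps=0.2):
    """Average PPO clipped surrogate objective (to be maximized).

    ratios: pi_new(a|s) / pi_old(a|s) for each sampled action
    advantages: advantage estimates for the same samples
    """
    total = 0.0
    for r, adv in zip(ratios, advantages):
        clipped = min(max(r, 1.0 - clip_eps), 1.0 + clip_eps)
        # The minimum removes any gain from pushing the ratio outside the clip range
        total += min(r * adv, clipped * adv)
    return total / len(ratios)
```

For a ratio of 1.5 with advantage 2.0, the objective is capped at 1.2 × 2.0 = 2.4 instead of 3.0. For a ratio of 0.5 with advantage −1.0, the more pessimistic clipped value −0.8 is used.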

Jiang et al. also used a PPO-based Deep Reinforcement Learning approach for the adaptive control of resource flows to optimize construction work and cash flow [28]. To support the training of the agent, a Discrete-Event Simulation-based environment was implemented to model the dynamic features and external environment of a project. The interaction between the agent and the environment was formulated as a partially observable Markov Decision Process. The results of the numerical simulations showed that the DRL agent performed excellently across multiple scenarios. A hybrid approach, in which the DRL-based agent managed the material flows while an empirical policy managed the labour flows, was also tested and showed even better results.

Yang et al. used an Advantage Actor–Critic (A2C)-based Deep Reinforcement Learning agent for the intelligent scheduling of distributed workshops [29]. The manufacturing system was modelled with the Siemens Tecnomatix Plant Simulation software. The simulated system contained 130 jobs, 8 machines, and 3 factories and was used to verify the performance of the proposed agent. The simulation experiments showed that the scheduling results obtained by the agent programmed in Python matched those obtained with the Plant Simulation platform.
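In an Advantage Actor–Critic agent, the critic's value estimates are subtracted from bootstrapped returns to obtain the advantage signal that scales the policy gradient. The sketch below computes discounted returns and advantages for one rollout. The plain n-step estimator (rather than, for example, generalized advantage estimation), the discount factor, and the function name are assumptions for illustration.

```python
def nstep_advantages(rewards, values, bootstrap_value, gamma=0.99):
    """Discounted returns and advantages for one rollout (A2C-style).

    rewards: r_t for t = 0..T-1
    values: critic estimates V(s_t) for the same steps
    bootstrap_value: V(s_T), or 0.0 if the episode terminated
    """
    returns = []
    g = bootstrap_value
    for r in reversed(rewards):
        g = r + gamma * g  # G_t = r_t + gamma * G_{t+1}
        returns.append(g)
    returns.reverse()
    advantages = [ret - v for ret, v in zip(returns, values)]
    return returns, advantages
```

With rewards `[1, 1]`, critic values `[0.5, 0.5]`, a terminal bootstrap of 0 and `gamma=0.5`, the returns are `[1.5, 1.0]`, and the advantages are `[1.0, 0.5]`.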

Bitsch and Senjic applied a Deep Neural Network trained with Proximal Policy Optimization for intelligent scheduling within a manufacturing company [30]. They used Discrete-Time Event-Driven simulation to model the learning and operational environment. The model was fairly large, containing 215 machines and 7238 active orders, and it was based on a real industrial dataset. The results showed that AI-based adaptation of the planning parameters considerably improved scheduling.

Krenczyk used Deep Reinforcement Learning with PPO and A2C to allocate processes to production resources in the Digital Twin of a cyber-physical system [31]. The Digital Twin was created using Discrete-Event Simulation, specifically the FlexSim simulation software. The DRL agent was coded and executed in Python using the Stable-Baselines3 and Gym libraries. Communication between the agent and the DES model was realized with FlexSim's Reinforcement Learning tool. Two experiments were carried out with different distributions of the semiproducts entering the system, and in this particular environment, PPO performed better in both cases.

Bretas et al. used a Multi-Agent Deep Reinforcement Learning (MADRL) approach for the traffic management of a complex rail network [32]. The aim of the study was to address the formation of deadlocks in such systems. In their approach, intelligent agents represented system elements, such as trains, dump stations, and load points. The agents applied the PPO algorithm. The simulation model acting as the environment for the agents was implemented using Discrete-Event Simulation. The experiments showed that the proposed approach outperformed both the heuristic and the Genetic Algorithm previously applied for the same system (the Hunter Valley Coal Chain).

Liu et al. used Multi-Agent Deep Reinforcement Learning for the dynamic scheduling of re-entrant hybrid flow shops, taking worker fatigue and skill levels into account [33]. The manufacturing system was modelled with the AnyLogic Discrete-Event Simulation software, and the DRL experiments were implemented in the PyTorch Deep Learning framework. The agents applied the PPO algorithm. The manufacturing system used in the experiments was a pharmaceutical plant. Different DRL models were built for multiple sub-decisions of the problem, and the experiments showed that the proposed DRL models had significant advantages over rule-based methods.

Tremblay et al. applied Deep Reinforcement Learning to the control of a continuous wood-drying production line [34]. The DRL agent was trained through interaction with the DES model of the production line, which simulated the pieces of lumber, machines, conveyors, and decision heuristics of real lines. The performance of the DRL agent was tested with five different configurations of the simulation environment. The results showed that the agent significantly outperformed the heuristics currently used in the industry, while also providing robustness against some possible environmental disturbances.

Kumar et al. applied a DRL agent to improve the efficiency of overhead crane operation in a steel converter facility [35]. The authors developed a simulation model combining discrete-event and agent-based simulation and connected it with real-time data through the Digital Twin approach. The DRL agent was then trained for crane scheduling in a stochastic environment with multiple feedback loops and complex interactions. The specific task of the agent was to recommend sequences of crane movements that reduce the waiting time in bottleneck situations. The experimental results showed that, on average, the proposed method came within 3.6% of the optimal solution.

4.2.3. Combined Methods

Göppert et al. used Google DeepMind’s AlphaZero in combination with a greedy agent to predict performance indicators of online scheduling in dynamically interconnected assembly systems [36]. The greedy agent was used to convert the pretraining scenarios into training data for the supervised learning phase of the AlphaZero-based agent. A new Discrete-Event Simulation tool in Python, named DIAS-Sim, was also developed for the study; it played a crucial role in constructing the search tree used by the AlphaZero-based scheduling agent. The fully trained ANN predicted favourable actions with an accuracy of over 95% and estimated the makespan with an error below 3%.

Klar et al. applied a Rainbow DQN-based approach together with a Graph Neural Network (GNN) and a Convolutional Neural Network (CNN) for transferable multi-objective factory layout planning [37]. The authors also developed a Discrete-Event Simulation module that simulated the manufacturing and material flow processes simultaneously for any layout configuration generated by the proposed approach. The layout-related information was represented using multiple grids, and the CNN processed it with one channel per grid. The GNN processed the material flow-related information, which was provided in the form of a transportation matrix. The layout planning itself was performed by the Rainbow DQN agent. Based on this concept, two Reinforcement Learning architectures were developed, and the experiments showed that the linear scalarization architecture should be preferred. In addition, the proposed approach was compared with a Genetic Algorithm, Tabu Search, and the Non-Dominated Sorting Genetic Algorithm in multiple scenarios. One of the main takeaways was that the performance of the proposed approach relative to the meta-heuristics depends on the computation time considered.
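Linear scalarization collapses several objectives into a single reward by taking a weighted sum. The preferred layout therefore shifts as the weights, which encode the planner's prioritization, change. The minimal sketch below assumes two hypothetical objectives already normalized to [0, 1] (higher is better) and hypothetical candidate layouts. It does not reproduce the objectives or values of the cited study.

```python
def scalarize(objectives, weights):
    """Linear scalarization: weighted sum of normalized objective values."""
    if len(objectives) != len(weights):
        raise ValueError("one weight per objective is required")
    return sum(w * o for w, o in zip(weights, objectives))


def best_layout(candidates, weights):
    """Return the name of the candidate with the highest scalarized reward."""
    return max(candidates, key=lambda name: scalarize(candidates[name], weights))
```

With candidates `{"A": [0.9, 0.2], "B": [0.4, 0.8]}`, weights `[0.8, 0.2]` favour layout A (0.76 vs 0.48), while `[0.2, 0.8]` favour layout B (0.72 vs 0.34). A single scalar reward also lets a standard DQN agent be reused without modification.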

Klar et al. also used Rainbow DQN with a GNN and a CNN, specifically for brownfield factory planning [38]. A Discrete-Event Simulation model was applied to evaluate the final layout at the end of each run. This means that the model simulated the production process and returned the throughput time to the learning environment, which was followed by the calculation of the reward. The two main optimization criteria were the throughput time and the restructuring effort. The case study contained 16 functional units, the placement of which had to be optimized by the agent. The results showed that the proposed approach could solve the brownfield planning problem by generating various layouts according to the underlying prioritization.

Zuercher and Bohné integrated Discrete-Event Probabilistic Simulation (DEPS) into a Reinforcement Learning framework to maximize the value of production [39]. In this approach, the value of production covered production management, inventory management, quality management, and maintenance management. The authors implemented the RL agent with multiple Deep Learning algorithms and compared their performance, including DQN, DDQN, DDDQN, and SAC (Soft Actor–Critic). Among other conclusions, the results showed that novel approaches are needed to lower the burden of deploying Reinforcement Learning in production lines.

Panzer and Gronau applied Deep Reinforcement Learning together with heuristics in a proposed control framework for modular production systems [40]. Specifically, a DQN agent was applied to implement a hyper-heuristic, while the low-level rule set contained the highest priority first (HP), local and global first-in–first-out (FIFO), earliest due date (EDD), and lowest-distance-first (LDF) rules. Their approach also involved multiple agents interacting with the simulation environment, which was implemented with a Python-based Discrete-Event Simulation framework that enables the rapid creation of modular production layouts with corresponding system organizations and control rules. The application of the control framework was demonstrated through a case study that included the fabrication of two product groups. The results demonstrated the multi-objective optimization performance of the proposed control framework.
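In such a hyper-heuristic, the DQN's discrete action does not pick a job directly. Instead, it picks which low-level rule to apply at the current decision point, and that rule selects the job. The sketch below shows this mapping under simplifying assumptions. A single FIFO rule stands in for the local and global variants, and the `Job` attributes, rule keys, and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    release: float   # time the job entered the system (FIFO key)
    due: float       # due date (EDD key)
    priority: int    # larger value = more urgent (HP key)
    distance: float  # transport distance to the requesting station (LDF key)


# Low-level rules: each picks one job from the queue
RULES = {
    "HP": lambda q: max(q, key=lambda j: j.priority),
    "FIFO": lambda q: min(q, key=lambda j: j.release),
    "EDD": lambda q: min(q, key=lambda j: j.due),
    "LDF": lambda q: min(q, key=lambda j: j.distance),
}
RULE_NAMES = list(RULES)  # action index -> rule name


def dispatch(queue, action):
    """Hyper-heuristic step: the agent's action selects a rule, the rule selects a job."""
    rule = RULE_NAMES[action]
    return rule, RULES[rule](queue)
```

Keeping the action space to a handful of rules makes it small and independent of queue length, which is one reason hyper-heuristics pair well with DQN.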

Seah and Srigrarom applied a Deep Q-Network together with a Convolutional Neural Network for the traffic planning of multiple Unmanned Aircraft Systems (UASs) [41]. As the learning and operational environment of the agents was three-dimensional, a 3D CNN was applied for feature extraction before the information was passed to the DQN. Discrete-Event Simulation was applied to test the trained agents in point-to-point and hub-and-spoke network architectures. The authors also examined the cost and efficiency aspects of the proposed traffic planning approach.

Zhou et al. used various Deep Reinforcement Learning methods to maximize monetary gain in a meal delivery system [42]. More specifically, they applied six agent implementations based on DQN, DDQN, Duelling DQN, Duelling Double DQN, and A2C. They also used a hybrid Discrete-Time, Discrete-Event Simulation environment developed specifically to model the daily operation of the meal delivery system. The optimization problem, called the Courier Reward Maximization Problem, was formulated as a Markov Decision Process. Numerical experiments were carried out both for a single courier and for multiple couriers. One interesting takeaway was that when all couriers used the proposed approach, the advantage for each courier diminished, which motivates further research on incentives that encourage mutually beneficial courier behaviours.

Leon et al. used different Deep Reinforcement Learning algorithms to increase the efficiency of warehouse operations [43]. More specifically, they used the A2C, PPO, and DQN algorithms together with Discrete-Event Simulation, which implemented the learning and operational environment. The authors applied the FlexSim software for the DES model, while the DRL agents were created using the Python OpenAI Gym library and Stable-Baselines3. Communication between the agents and the simulation environment was realized through the sockets provided by FlexSim and its internal programming language, FlexScript. The authors first tested the DRL agents in a smaller model made for validation and then in an extended model for performance comparison. In the second case, although the agents maintained their throughput performance, they fell behind the benchmark greedy agent in terms of travelled distance; only the DQN agent was able to maintain almost the same efficiency in this respect.

Göppert et al. presented a modular software architecture that provided an interface between online scheduling agents and production planning and control systems [44]. Their approach used Discrete-Event Simulation not only for training the agents but also as a planning and optimization tool for evaluating alternative versions of the assembly system (functionalities that can also be used independently of online scheduling). The approach is compatible with a wide range of agent types, including Supervised Learning (SL), heuristics, and Deep Learning. The proposed architecture was implemented at the Laboratory for Machine Tools and Production Engineering (WZL) of RWTH Aachen University. The implemented components and interfaces were validated qualitatively, and the simulation model was run on a large number of test scenarios.

Lang et al. presented a method for integrating Discrete-Event Simulations with OpenAI Gym so that they can serve as Reinforcement Learning environments for modelling and optimizing production scheduling problems [45]. Although they did not specify the actual algorithms, environments that implement the Gym interface are compatible with the many pre-built DRL agents available in OpenAI Baselines and Stable Baselines. The authors presented both the theoretical framework and a concrete example of the proposed integration.
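The integration pattern rests on exposing the simulation through the Gym interface. `reset` starts a new replication, and `step` applies an action, advances the event-driven clock to the next decision point, and returns the new observation and reward. The sketch mimics the Gymnasium method signatures (`reset` returning `(obs, info)`, `step` returning `(obs, reward, terminated, truncated, info)`) without importing the library. It wraps a toy single-machine dispatching problem with random processing times. The environment, its reward (negative completion time, so the episode return equals minus the total flow time), and all names are illustrative assumptions, not the authors' framework. A real environment would subclass `gymnasium.Env` and declare `action_space` and `observation_space`.

```python
import random


class SingleMachineSchedulingEnv:
    """Toy scheduling environment with Gymnasium-style reset/step methods.

    At each step, the agent picks which waiting job the single machine processes
    next. The clock jumps to that job's completion event, and the reward is the
    negative completion time.
    """

    def __init__(self, n_jobs=4, seed=None):
        self.n_jobs = n_jobs
        self.rng = random.Random(seed)
        self.clock = 0.0
        self.waiting = []

    def reset(self, *, seed=None, options=None):
        if seed is not None:
            self.rng.seed(seed)
        self.clock = 0.0
        self.waiting = [self.rng.randint(1, 9) for _ in range(self.n_jobs)]
        return list(self.waiting), {}

    def step(self, action):
        proc_time = self.waiting.pop(action)
        self.clock += proc_time        # advance to the completion event
        reward = -self.clock
        terminated = not self.waiting  # all jobs processed
        return list(self.waiting), reward, terminated, False, {"clock": self.clock}
```

For waiting jobs `[3, 1, 2]`, a shortest-processing-time policy earns a return of −10, versus −14 for longest-first. This is the kind of difference a learning agent connected through such an interface would be expected to discover.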

Rumin et al. also presented an approach for integrating OpenAI Gym with a Discrete-Event Simulation environment, specifically the FlexSim software [46]. A distinctive aspect of their study is that they applied the approach to energy-efficient route planning for hyperloop low-pressure capsule transit. The proposed integration architecture was based on a custom Gym environment called FlexSimEnv, designed to facilitate seamless interaction with FlexSim. Although the authors used PPO as the agent in the initial implementation, they noted that the method allows the application of a wide variety of Gym-compatible DRL algorithms, including A2C, DQN, and SAC, which is the main reason the approach is classified here as a “Combined method”.

Cen and Haas used a combined approach based on Graph Neural Networks and Generative Neural Networks for enhanced simulation metamodeling [47]. They specifically focused on the metamodeling of Discrete-Event Simulations for operations management, while noting that their metamodels can also potentially serve as surrogate models in Digital Twin settings. They conducted several preliminary experiments with multiple frameworks from the proposed approach, which included the use of a Generative Graphical Metamodel (GGMM) for estimating the distribution of the task completion time in a Stochastic Activity Network (SAN) and the application of a dynamic GGMM (D-GGMM) for the surrogate simulation of queue departure times, among others.

Zhang et al. applied a combined method based on Multi-Task Reinforcement Learning (MTRL), LSTM, and A3C (Asynchronous Advantage Actor–Critic) for the dynamic scheduling of large-scale fleets in earthmoving operations [48]. They used Discrete-Event Simulation for the creation of the operational environment, which tracked the real-time impact of scheduling decisions on the transportation system. They also applied multiple agents for handling scheduling tasks and for the generation of optimized scheduling plans. On the global level, the A3C strategy was realized with two LSTM networks, which aided the agents in adaptively learning and optimizing the scheduling strategy. The capability of the proposed framework was validated with a case study based on a realistic large-scale earthmoving project located in the upper reaches of the Yalong River.

Ogunsoto et al. introduced a combined approach based on the use of LSTM, a Multi-Layer Perceptron Neural Network (MLPNN), and DES, applied as a DT to aid in supply chain recovery after natural disasters [49]. Their approach was based on multiple consecutive phases that applied the previously mentioned methods for different tasks. The first phase was the prediction of natural disasters, which was implemented with the use of an LSTM and logistic regression (it turned out that the former clearly outperformed the latter). This was followed by the modelling of supply chain disruption with the use of the FlexSim DES software. The third and final phase was the prediction of a recovery indicator for the supply chain, for which the MLPNN was used with the application of data generated by the FlexSim software. The application of the entire approach was demonstrated on a case study based on a scenario where flooding disrupts a manufacturing supply chain.

Kulmer et al. presented a planning assistant tool for medium-term capacity management in a factory environment [50]. The proposed approach was based on the application of multiple algorithms, including Deep Reinforcement Learning. As part of the approach, the authors created a Digital Twin of a learning factory, called the LEAD factory (located at the Graz University of Technology, the authors’ home institution), using Discrete-Event Simulation. They then tested various algorithms from the Stable-Baselines3 library in the created simulation environment: Trust Region Policy Optimization (TRPO), PPO, PPO with recurrent policy (RecurrentPPO), DQN, Quantile Regression DQN (QR-DQN), Augmented Random Search (ARS), and A2C. The results showed that RecurrentPPO, PPO, and TRPO provided particularly good overall performance and should be further evaluated. Furthermore, the authors showed that the proposed decision support system outperformed humans in the test setting.

Fischer et al. applied hybrid Deep Learning models for activity recognition in the construction industry [51]. Three data sources (activity data, equipment sensor data, and production logs) served as input for the study, and DNNs were used for activity recognition. Three DNN models with different architectures were tested: one was based only on Softmax layers, while the other two combined convolutional layers with LSTM or bidirectional LSTM layers together with Softmax layers. The processed data were then used as input for a data-driven DES model of the construction process created in Python. The results provided insights into the effect of production model granularity on activity recognition and on the application of DTs in the construction industry, and they helped identify future research directions.

4.2.4. Generative AI

Reider and Lang presented a framework for the integration of generative AI with Discrete-Event Simulation [52]. The aim of their proposed method was to facilitate Automated Simulation Model Generation (ASMG). They specifically applied an instruction-finetuned Large Language Model (LLM)-based approach, in which the LLM generated structured factory descriptions from natural language inputs and contextual descriptions. The role of Discrete-Event Simulation in the proposed approach was twofold: it aided in the training of the LLM through Reinforcement Learning but also provided feedback for the trained LLM, based on which the latter could evaluate the results and identify optimization opportunities.

Fei et al. presented a platform combining generative AI and Discrete-Event Simulation for the AI-assisted modelling of manufacturing systems [53]. Their approach used agents to automate various elements of the simulation process, including data collection, preprocessing, and simulation model construction. In their case study, they used the Simio Discrete-Event Simulation software and Anthropic’s Claude 3.5 Sonnet model as the AI agent. The case study demonstrated the capabilities of the proposed architecture, while also revealing areas that require further improvement.

Li et al. presented a Large Manufacturing Decision Model (LMDM) using Digital Twin technology and the reasoning capabilities of Large Language Models (LLMs) [54]. The main characteristic of the proposed LMDM concept was that these models prioritize the generation of multiple high-quality decision options as primary outputs, which are supplemented with explanatory texts. This facilitates the human-centric optimization and reconfiguration process of manufacturing systems. The role of the Digital Twin was to aid the training and self-guidance of the LMDM. For the application example, the authors developed a discrete-event shop-floor simulation in Python as the representation of the Digital Twin. The results clearly demonstrated the achievable performance and efficiency gains provided by the proposed approach.

Chen et al. applied a Generative Adversarial Network for the optimization of paratransit services [55]. Their goal was to optimize vehicle allocation at dispatch stations. They applied the Arena Discrete-Event Simulation software in multiple roles, which included the creation of the simulation environment, aiding in the training of the GAN, generating the number of possible service requests in the model in each sub-region on a daily basis, and implementing various modules of the proposed concept. The authors verified the approach through a case study of paratransit services in Yunlin, Taiwan.

Montevechi et al. applied GANs to the validation of DES models [56]. They developed a framework in which GANs were first used to generate synthetic data from the real data, after which the discriminator was used to distinguish the real outputs from the simulated ones. Five statistical distributions were also trained and used to verify the power of the test. The authors tested the approach by simulating an emergency department. The results demonstrated the efficiency of the approach and showed that GANs can aid in the validation of DES models.

Feldkamp et al. used GANs to optimize robustness in logistics systems [57]. More specifically, they applied two GANs to generate optimized experiment plans for the decision factors and the noise factors in a competitive, turn-based game. In their case study, they used the Siemens Tecnomatix Plant Simulation software to implement the operational environment, which was the model of an assembly line. The goal was to make the line robust against variations in the product mix. The proposed approach was tested against the Taguchi Method and the Response Surface Method with favourable results, although finding the global optimum was not guaranteed. Nevertheless, the results showed that the proposed concept has considerable potential and that GANs can be used to generate experiment plans that exhibit desirable design quality criteria.

Jackson et al. presented a framework based on a refined GPT-3 Codex model for generating functionally valid simulations of queuing and inventory management systems [58]. The goal was to automate the creation of simulation models from natural language descriptions, with the AI generating the models as executable Python code. Although the aim was not specifically to generate DES models, GPT-3 Codex was trained on code samples from GitHub that included simulations, some of which were implemented using the Discrete-Event Simulation paradigm. As a result, the model could also apply the Discrete-Event Simulation principle in the generated simulation models.

4.2.5. Deep Neural Network

Kumar et al. applied a Deep Neural Network for the production control of complex job shops [59]. They trained the DNN using Discrete-Event Simulation. Their use case represented a production process of five machine groups with ten sub-organized specialized machines. Each group effectively represented a job shop and had a buffer stock with 20 buffer slots. During the simulation experiments, the DNN was compared with a Reinforcement Learning algorithm and provided better results in terms of decision-making accuracy.

Molnár et al. examined the possibilities and advantages of using synthetic data provided by Discrete-Event Simulation to train neural networks [60]. As part of their analysis, they presented an application example centred on a flexible manufacturing line, based on a sample model supplied with the Siemens Tecnomatix Plant Simulation software. The authors applied a Deep Neural Network with seven hidden layers, trained on the synthetic data provided by the simulation model. The goal was to maximize the number of units produced per day for a given daily mix of six products, and the most important optimization parameter was the number of pallets in the system. The results showed that the ANN-based decision system could be used effectively as long as no fundamental changes occurred in the production system (new product launches, layout changes, etc.).
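
The idea of using a simulation model as a generator of labelled training data can be sketched as follows. This is a toy stand-in, not the Plant Simulation model from [60]: it assumes a single product, deterministic processing times, unlimited buffers, and a closed serial line whose completion times follow a max-plus recursion instead of a full DES package. Each run maps a pallet count to a long-run throughput, producing (input, label) pairs of the kind a network could be trained on.

```python
from typing import List, Sequence, Tuple


def closed_line_throughput(proc_times: Sequence[float], pallets: int, jobs: int = 2000) -> float:
    """Long-run throughput of a deterministic closed serial line with a fixed number of pallets.

    Completion times follow the max-plus recursion of a FIFO flow line with unlimited
    buffers: a job starts at a station once it has finished the upstream station and the
    previous job has left; a new job can enter only when a pallet is released.
    """
    m = len(proc_times)
    done = [[0.0] * m for _ in range(jobs)]  # done[j][k]: time job j completes station k
    for j in range(jobs):
        for k, p in enumerate(proc_times):
            if k == 0:
                # A free pallet is released when job j - pallets leaves the last station.
                ready = done[j - pallets][m - 1] if j >= pallets else 0.0
            else:
                ready = done[j][k - 1]
            station_free = done[j - 1][k] if j > 0 else 0.0
            done[j][k] = max(ready, station_free) + p
    warmup = jobs // 10  # discard the start-up transient
    return (jobs - 1 - warmup) / (done[jobs - 1][m - 1] - done[warmup][m - 1])


def synthetic_dataset(proc_times: Sequence[float], pallet_range: range) -> List[Tuple[int, float]]:
    """(pallet count, throughput) pairs that could serve as training data for a network."""
    return [(n, closed_line_throughput(proc_times, n)) for n in pallet_range]


if __name__ == "__main__":
    for n, th in synthetic_dataset([1.0, 2.0, 1.0], range(1, 6)):
        print(n, round(th, 3))
```

With the hypothetical processing times above, throughput rises with the pallet count until the bottleneck station (2 time units) saturates the line; this saturation behaviour is the kind of relationship a network trained on simulated data would need to learn, and it also hints at why such a model stops being valid once the line itself changes.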

Zhang et al. presented a complex framework for Digital Twin-enhanced dynamic job-shop scheduling [61]. A key feature of their approach was the fusion of real and synthetic data by the Digital Twin. Within the framework, Discrete-Event Simulation could be used to model the machining workflow in order to evaluate related parameters (for example, the utilization rate of each machine per time period, the tardiness of each job, and the makespan). In their case study, the authors also applied a Deep Neural Network within the framework to model the relationship between tool wear and the mechanical stress acting on the tool.

Reed et al. used a special type of Artificial Neural Network, the Mixture Density Network (MDN), to model stochastic behaviour in Digital Twins [62]. Instead of a single point estimate, an MDN outputs the parameters of a mixture of probability distributions, which makes it well suited to representing complex, possibly multimodal distributions; this was the main rationale for its use in the study. The authors provided an application example based on load–haul–dump vehicle operations in a sublevel caving mine. A DES model was created for the vehicle operations, and the MDN was trained on data generated by this model. A key question addressed with the MDN was the impact of waste rock on the operational processes. The results verified the advantages of using the MDN and the DES model together.
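
The core of a Mixture Density Network is its training loss and the sampling step a simulation would use. The sketch below assumes scalar targets (for example, a stochastic duration) and a Gaussian mixture head with K components whose raw outputs are mixing logits, means, and log standard deviations; the function names are illustrative and not taken from [62].

```python
import numpy as np


def _logsumexp(a: np.ndarray, axis: int) -> np.ndarray:
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))


def mdn_nll(logits: np.ndarray, mu: np.ndarray, log_sigma: np.ndarray, y: np.ndarray) -> float:
    """Mean negative log-likelihood of scalar targets under 1-D Gaussian mixtures.

    logits, mu, log_sigma: shape (batch, K), raw outputs of the network's mixture head.
    y: shape (batch,).
    """
    log_pi = logits - _logsumexp(logits, axis=1)[:, None]  # softmax of the mixing weights, in log space
    z = (y[:, None] - mu) / np.exp(log_sigma)
    log_normal = -0.5 * np.log(2.0 * np.pi) - log_sigma - 0.5 * z**2
    return float(-np.mean(_logsumexp(log_pi + log_normal, axis=1)))


def sample_mixture(logits: np.ndarray, mu: np.ndarray, log_sigma: np.ndarray,
                   rng: np.random.Generator) -> float:
    """Draw one value from a single predicted mixture, e.g. a duration used inside a DES model."""
    pi = np.exp(logits - _logsumexp(logits[None, :], axis=1)[0])
    k = rng.choice(len(pi), p=pi)  # pick a mixture component by its weight
    return float(rng.normal(mu[k], np.exp(log_sigma[k])))
```

Minimizing `mdn_nll` fits the whole conditional distribution rather than its mean, which is what allows a trained network to reproduce the variability observed in the simulation when `sample_mixture` is called at run time.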

Vespoli et al. applied Discrete-Event Simulation and agent-based simulation to train a Deep Neural Network for the performance estimation of a CONWIP (constant work-in-process) flow-shop system [63]. The simulation models were implemented in the AnyLogic software, and the DNN was built with Google Colaboratory and the TensorFlow Keras library. The authors assessed multiple ANN architectures before selecting the proposed solution. The selected network had an input layer receiving the WIP level of the production system, the squared coefficient of variation, and the critical WIP. This was followed by a normalization layer and then two dense layers with 12 neurons each (24 neurons in total), using the elu and the sigmoid activation functions. The output layer consisted of a single neuron with the tanh activation function. Although the study had limitations (for example, it focused primarily on balanced production lines), it demonstrated the high accuracy of the proposed model in predicting throughput.
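
The layer stack described above can be written out as a plain NumPy forward pass to make the shapes explicit. This is an untrained sketch under stated assumptions: it reads the hidden part as two dense layers of 12 units (elu, then sigmoid), uses per-feature standardization for the normalization layer, and assumes the throughput target is rescaled into (-1, 1) to match the tanh output. It is not the authors' trained model.

```python
import numpy as np


def elu(x: np.ndarray, alpha: float = 1.0) -> np.ndarray:
    """Exponential linear unit; np.minimum avoids overflow in the unused branch."""
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1.0))


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def init_params(rng: np.random.Generator, n_in: int = 3, hidden: int = 12) -> dict:
    """Random, untrained weights for 3 -> 12 (elu) -> 12 (sigmoid) -> 1 (tanh)."""
    shapes = [(n_in, hidden), (hidden, hidden), (hidden, 1)]
    params = {}
    for i, (fan_in, fan_out) in enumerate(shapes):
        params[f"W{i}"] = rng.normal(0.0, 1.0 / np.sqrt(fan_in), size=(fan_in, fan_out))
        params[f"b{i}"] = np.zeros(fan_out)
    return params


def forward(params: dict, x: np.ndarray, mean: np.ndarray, var: np.ndarray) -> np.ndarray:
    """x: (batch, 3) rows of [WIP, squared coefficient of variation, critical WIP]."""
    h = (x - mean) / np.sqrt(var + 1e-7)  # normalization layer: per-feature standardization
    h = elu(h @ params["W0"] + params["b0"])  # first dense layer, 12 units
    h = sigmoid(h @ params["W1"] + params["b1"])  # second dense layer, 12 units
    return np.tanh(h @ params["W2"] + params["b2"])  # single output unit in (-1, 1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.array([[8.0, 1.0, 4.0], [12.0, 0.5, 4.0], [20.0, 0.25, 6.0]])
    params = init_params(rng)
    print(forward(params, x, x.mean(axis=0), x.var(axis=0)).ravel())
```

In Keras, the same stack would typically be expressed with `tf.keras.layers.Normalization` followed by `Dense(12, activation="elu")`, `Dense(12, activation="sigmoid")` and `Dense(1, activation="tanh")`, with the weights then fitted on the simulated (input, throughput) pairs.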

4.2.6. Recurrent Neural Network

Yildirim et al. presented a method that used a Nonlinear Autoregressive with External Input (NARX) model, a special type of recurrent neural network, for process knowledge-driven change point detection in Discrete-Event Simulation models [64]. The aim was to provide a framework for process-driven multivariate change point detection to support the development and calibration of complex DES models. The authors tested the proposed method on a simulation of an emergency department. The results showed that the proposed model could complement a wide variety of data-driven change point detection models and facilitate the automated discovery of process knowledge in DES environments.
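
A NARX model predicts y(t) from lagged outputs y(t-1), ..., y(t-ny) and lagged external inputs u(t-1), ..., u(t-nu). The sketch below shows how those regressors are assembled and how one-step-ahead residuals can flag a change point. Two substitutions are made plainly: a linear least-squares fit stands in for the neural mapping of a NARX network, and the fixed residual threshold is an illustrative assumption rather than the detection criterion used in [64].

```python
import numpy as np


def narx_regressors(y: np.ndarray, u: np.ndarray, ny: int, nu: int):
    """Rows [y(t-1..t-ny), u(t-1..t-nu)], targets y(t), and the first usable index t."""
    start = max(ny, nu)
    rows = [np.concatenate([y[t - ny:t][::-1], u[t - nu:t][::-1]]) for t in range(start, len(y))]
    return np.array(rows), y[start:], start


def fit_linear_arx(X: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Least-squares coefficients; a linear stand-in for the network f(.) of a NARX model."""
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    return coef


def flag_change_points(X, target, coef, offset: int, threshold: float):
    """Time indices whose one-step-ahead prediction residual exceeds the threshold."""
    residuals = target - X @ coef
    return [int(i) + offset for i in np.flatnonzero(np.abs(residuals) > threshold)]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n = 200
    u = rng.normal(size=n)  # external input signal
    y = np.zeros(n)
    for t in range(2, n):
        # The process gains a constant offset from t = 100 onward (the "change").
        y[t] = 0.5 * y[t - 1] + 0.2 * y[t - 2] + 1.0 * u[t - 1] + (2.0 if t >= 100 else 0.0)
    X, target, start = narx_regressors(y, u, ny=2, nu=1)
    coef = fit_linear_arx(X[: 100 - start], target[: 100 - start])  # fit on pre-change data only
    flags = flag_change_points(X, target, coef, start, threshold=1e-6)
    print(coef.round(3), flags[:3])
```

Because the model is fitted on the regime before the change, its residuals stay near zero until the process behaviour shifts; with noisy simulation output the threshold would have to be calibrated rather than fixed.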

Terrada et al. presented a new framework for Urban Traffic Management and Control (UTMC) with a focus on green supply chains [65]. Their approach combined several methods. One was based on the fluid modelling of road network flows, with the specific aim of applying the developed equations to the Discrete-Event Simulation of a network of fluid reservoirs. Another was an LSTM-based Spatial-Temporal Dynamic Network architecture for urban traffic prediction. A further component was the extensive use of Internet of Things (IoT) technologies for data collection and processing. The authors also carried out tests with an implementation of the proposed UTMC concept.

Woerrlein and Strassburger applied a Recurrent Encoder–Decoder model (RNN-ED) to predict the power consumption of machining jobs [66]. They used a hybrid simulation approach in which the RNN-ED was called within a discrete-event-oriented simulation to characterize the power consumption of each job in the machining room of a machine tool. The complete simulation model covered the entire manufacturing cell, including a waiting area and a manufacturing area. In the case study, TensorFlow was used together with the Keras API and the rSimmer package (the latter for implementing the DES model). The results confirmed the functionality of the presented approach.
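
The hybrid pattern, where a learned model is queried from inside the simulation at each job's start event, can be sketched with a single FIFO machine tool. The `Job` fields, the kWh bookkeeping and the `predict_power_kw` callable are hypothetical; for simplicity the predictor returns one average power value per job, whereas the RNN-ED in [66] characterizes each job's consumption with a sequence model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Job:
    job_id: int
    arrival: float  # hours
    machining_time: float  # hours
    features: List[float] = field(default_factory=list)  # hypothetical descriptors fed to the model


def simulate_cell(jobs: List[Job],
                  predict_power_kw: Callable[[Job], float]) -> List[Tuple[int, float, float, float]]:
    """FIFO machine tool; the learned model is called at each job's start event.

    Returns (job id, start, end, energy in kWh) for every job.
    """
    machine_free_at = 0.0
    log = []
    for job in sorted(jobs, key=lambda j: j.arrival):
        start = max(machine_free_at, job.arrival)  # job waits in the waiting area while the machine is busy
        power = predict_power_kw(job)  # stand-in for the RNN-ED call inside the simulation
        end = start + job.machining_time
        log.append((job.job_id, start, end, power * job.machining_time))
        machine_free_at = end
    return log


if __name__ == "__main__":
    jobs = [Job(0, 0.0, 1.0, [0.2]), Job(1, 0.5, 2.0, [0.7])]
    # Hypothetical predictor: base load plus a term driven by the job's first feature.
    for row in simulate_cell(jobs, lambda j: 2.0 + 3.0 * j.features[0]):
        print(row)
```

Keeping the predictor behind a plain callable means the simulation logic does not depend on how the model was trained, so a trained Keras model could be wrapped behind the same interface.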

Ashraf et al. applied an LSTM network to Time-to-Recover (TTR) prediction in order to improve resilience within a cognitive digital supply chain twin framework [67]. The supply chain itself, a three-echelon supply chain with limited buffer capacity, was modelled with Discrete-Event Simulation, more specifically in the AnyLogic software. The LSTM was trained to predict the TTR from the signals that the DES model produced for different parameters and metrics. In the experiments, the performance of the LSTM was evaluated under different disruption scenarios. The prediction results confirmed that the LSTM could predict TTR from multivariate historical data covering different supply chain parameters and metrics.
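
Two data-preparation steps implied by this setup are computing a TTR label from a simulated metric and slicing multivariate simulation output into fixed-length windows for a recurrent network. The sketch below assumes a simple definition of TTR (time from the disruption until the metric re-enters a tolerance band around its baseline) and end-of-window labelling; both are illustrative assumptions, not the definitions used in [67], and the LSTM itself is omitted.

```python
import numpy as np


def time_to_recover(times, metric, disruption_time, baseline, tolerance):
    """Time from a disruption until the metric re-enters its tolerance band around the baseline.

    Returns 0.0 if the metric never leaves the band, and None if it has not recovered
    by the end of the observed horizon.
    """
    left_band = False
    for t, value in zip(times, metric):
        if t < disruption_time:
            continue
        if abs(value - baseline) > tolerance:
            left_band = True
        elif left_band:
            return t - disruption_time
    return None if left_band else 0.0


def sliding_windows(signals: np.ndarray, labels: np.ndarray, window: int):
    """(T, F) signals -> (T - window + 1, window, F) LSTM inputs, labelled at each window's end."""
    n = signals.shape[0] - window + 1
    X = np.stack([signals[i:i + window] for i in range(n)])
    return X, labels[window - 1:]


if __name__ == "__main__":
    service_level = [10, 10, 10, 5, 6, 8, 9.6, 10, 10, 10]  # hypothetical DES output per period
    print(time_to_recover(range(10), service_level, 3, 10.0, 0.5))
    X, y = sliding_windows(np.arange(20.0).reshape(10, 2), np.arange(10.0), window=3)
    print(X.shape, y.shape)
```

The resulting windows have the (samples, time steps, features) layout that recurrent layers such as LSTMs usually expect.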

4.2.7. Convolutional Neural Network

Sommer et al. proposed a method for the automated generation of Digital Twins of existing built environments, enabled by scanning and object detection, with an emphasis on using DES for simulation modelling [68]. The main goal of the concept was to provide input data for production planning. In their framework, they proposed applying Convolutional Neural Networks to detect and recognize objects in the built environment. The overall approach was tested with real-life data, which demonstrated the applicability of the entire framework.

4.2.8. Transformer

Innuphat and Toahchoodee applied Discrete-Event Simulation together with Temporal Fusion Transformers (TFTs) to validate a custom spare parts inventory policy in a petrochemical industry setting [69]. The main role of the TFT was to forecast spare parts demand, with historical data serving as the basis for its predictions. For the implementation, the authors used two data sources from a company in the Thai petrochemical industry: historical inventory data and historical purchasing data gathered from real-world information systems. They used SimPy to implement the DES model and a TFT model from the open-source PyTorch Forecasting package. In their experiments, they compared the TFT with Random Forest Regression; while both algorithms performed similarly well, the TFT offered additional advantages related to cross-learning and multi-horizon forecasting.
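
How a demand forecast feeds into the validation of an inventory policy can be sketched with a day-by-day simulation. The authors used SimPy and a TFT; this sketch instead uses a plain loop, a generic (s, S) reorder policy with lost sales, and a textbook reorder-point rule (forecast demand over the lead time plus safety stock). The policy, the lost-sales assumption and all function names are illustrative, not the custom policy from [69].

```python
import math
from typing import Dict, Sequence


def reorder_point(forecast: Sequence[float], lead_time: int, safety_stock: float) -> int:
    """Reorder level s: forecast demand over the lead time plus a safety stock, rounded up."""
    return math.ceil(sum(forecast[:lead_time]) + safety_stock)


def simulate_reorder_policy(demand: Sequence[int], reorder_level: int, order_up_to: int,
                            lead_time: int, initial_stock: int) -> Dict[str, float]:
    """Daily (s, S) review with lost sales; returns the fill rate and the number of orders."""
    if lead_time < 1:
        raise ValueError("lead_time must be at least one day in this sketch")
    on_hand = initial_stock
    arrivals: Dict[int, int] = {}  # day -> quantity due to arrive that day
    served = orders = 0
    for day, d in enumerate(demand):
        on_hand += arrivals.pop(day, 0)  # receive replenishments due today
        shipped = min(on_hand, d)  # unmet demand is lost (assumption)
        on_hand -= shipped
        served += shipped
        position = on_hand + sum(arrivals.values())  # inventory position incl. open orders
        if position <= reorder_level:
            due = day + lead_time
            arrivals[due] = arrivals.get(due, 0) + order_up_to - position
            orders += 1
    total = sum(demand)
    return {"fill_rate": served / total if total else 1.0, "orders": orders}


if __name__ == "__main__":
    s = reorder_point([1.5, 2.0, 3.0], lead_time=2, safety_stock=1.0)  # hypothetical forecast values
    print(s, simulate_reorder_policy([3, 3, 3, 3, 3], s, 10, 2, 10))
```

Replacing the hypothetical forecast list with model predictions and the fixed demand list with historical or sampled demand turns the same loop into a simple test bench for comparing forecasting methods by their effect on service level.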

5. Discussion

5.1. Evolution of the Identified Research Trends

Based on the conducted literature review, it can be concluded that the combination of Discrete-Event Simulation with Deep Learning has become a major research trend in the past few years, as the data in Figure 4 clearly show. The trend started in the second half of the 2010s: the earliest included papers date from 2017 to 2019, while 2020 marked a surge of research interest, with the number of articles jumping from 1 per year to 7. Furthermore, based on the number of included studies, the trend has remained consistently strong since 2020, often producing 10 or more papers per year, and 2025 has already become such a year (at the time of writing, 11 papers from 2025 had been included in the review).

The yearly distribution of papers also reveals an evolution of sub-trends within the main research trend. As mentioned, the earliest included papers date from the period between 2017 and 2019 [6,13,19]. All three applied some form of Deep Reinforcement Learning in combination with DES, which suggests that the DRL paradigm served as the starting point for the main research trend.

Then, in 2020, along with the sudden rise in the number of studies, experimentation with other types of DL methods began to appear, although the field was still dominated by DRL. These other approaches included the NARX model [64] and the Recurrent Encoder–Decoder model [66], both of which are Recurrent Neural Networks. Similarly, in 2021, most studies were still based on DRL, but two of the included papers presented research based on standard DNNs [59,61], while another study proposed a framework for integrating DES with the Gym interface from OpenAI [45], although this concept was also aimed at using RL algorithms.

The real diversification of the field started in 2022, when two of the included studies presented research based on various combined methods [44,47], and generative AI also appeared in connection with DES in the form of Generative Adversarial Networks [55,56,57]. In subsequent years, further studies presented a variety of other combined methods and approaches, although DRL has remained significant. Finally, the first application of an LLM combined with DES was identified in a study from 2024 [58], whose specific goal was automated simulation model generation.

5.2. Identification of the Research Gaps

The systematization of the papers confirmed the assumptions suggested by the co-occurrence analysis of the most relevant words and word compositions in the included literature. Namely, the most frequently applied methodology is Deep Reinforcement Learning, especially Deep Q-Learning, as the evolution of research trends presented above also implied. As mentioned before, even under the strictest classification, more than 47% of the papers fall into the joint DRL-DQL category. In addition, the DRL paradigm has been applied in several other studies as well, for example in the “Combined methods” category, where multiple papers applied DQL together with one or more other approaches. One reason for the widespread use of DRL with DES could be that it is relatively straightforward to define a discrete action space for a DRL agent in a Discrete-Event Simulation model, as such models are composed of discrete objects and operate based on a sequence of discrete events. Since DQNs are designed for discrete action spaces (the network outputs one Q-value per available action), this also explains the widespread application of Deep Q-Learning in relation to Discrete-Event Simulation models.
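
Why discrete DES decision points map so naturally onto Q-learning can be shown with a small sketch. Tabular Q-learning is swapped in here for a DQN to keep the example dependency-free; a DQN replaces the table with a network that outputs one Q-value per discrete action. The dispatching rules in `ACTIONS` and the string states are hypothetical; in a DES they would be derived from the model state (for example, coarse buffer levels) at each decision event.

```python
import random
from collections import defaultdict

ACTIONS = ("FIFO", "SPT", "EDD")  # hypothetical discrete dispatching rules offered to the agent


def choose_action(q, state, actions, epsilon: float, rng: random.Random):
    """Epsilon-greedy choice over a finite action set."""
    if rng.random() < epsilon:
        return rng.choice(actions)  # explore
    return max(actions, key=lambda a: q[(state, a)])  # exploit the current estimates


def q_update(q, state, action, reward: float, next_state, actions,
             alpha: float = 0.5, gamma: float = 0.9) -> float:
    """One Q-learning step: move Q(s, a) toward r + gamma * max_a' Q(s', a')."""
    target = reward + gamma * max(q[(next_state, a)] for a in actions)
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


if __name__ == "__main__":
    q = defaultdict(float)  # unseen (state, action) pairs start at 0
    rng = random.Random(0)
    # In a DES, each call would happen at a decision event, e.g. when a machine becomes idle.
    a = choose_action(q, "buffer_high", ACTIONS, epsilon=0.1, rng=rng)
    print(a, q_update(q, "buffer_high", a, reward=1.0, next_state="buffer_low", actions=ACTIONS))
```

Because the set of actions is fixed and finite, the maximization in the update is a simple loop over the actions, which is exactly the property that makes DQN-style agents a convenient fit for event-driven simulation models.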

However, while DRL is already frequently applied in the context of DES, the literature review revealed that the use of generative AI, especially LLMs, in the field is still in its early stages. More specifically, only four of the included studies applied LLMs or LLM-based approaches, which represents barely more than 6% of the total number of papers. On the one hand, this is understandable, given that the transformer architecture, the key component on which Large Language Models are built, was introduced only in 2017 [70], and LLMs themselves have received widespread attention only in the past few years. On the other hand, the scarcity of logistics-oriented papers using LLMs and DES together is conspicuous even when these factors are taken into account. This therefore represents a clear gap in the research area, as the application of LLMs is currently one of the most significant trends across almost all scientific fields. Furthermore, LLM-based generative AI has clear application potential in relation to Discrete-Event Simulation, both for the automated generation of simulation models and for the automated evaluation of simulation results, as the few existing examples show [52,53,54,58].