A Fusion-Based Approach with Bayes and DeBERTa for Efficient and Robust Spam Detection

Abstract

1. Introduction

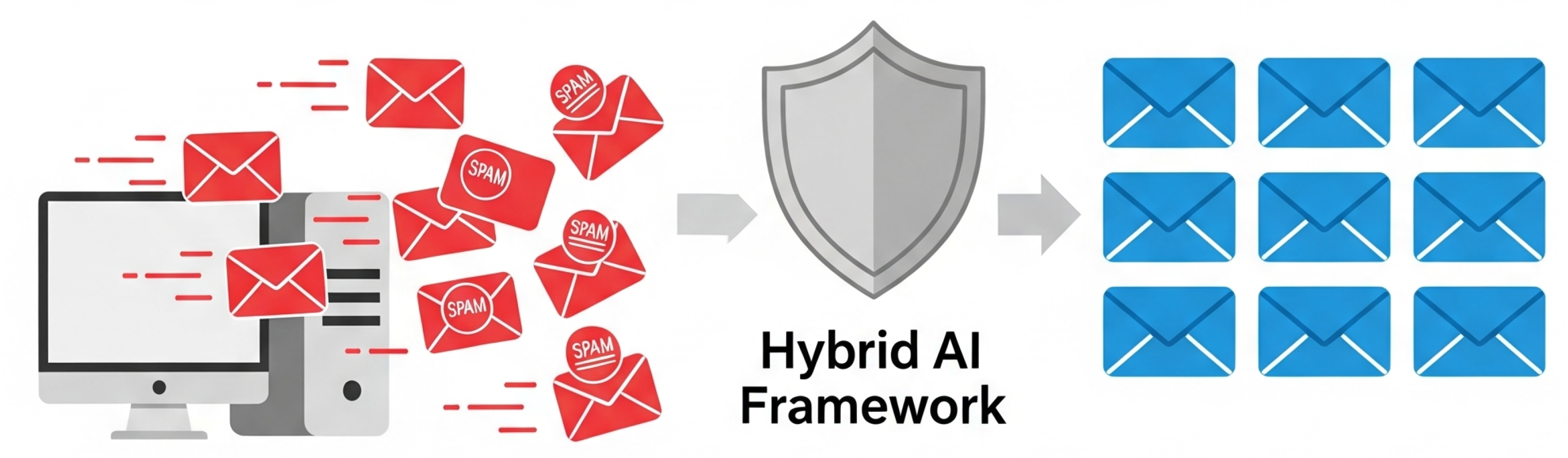

- We propose a hybrid spam detection framework that combines a classical multinomial naive Bayes model and DeBERTa through a weighted probability fusion strategy.

- This fusion strategy effectively balances computational efficiency and classification accuracy, enabling the system to perform well in both simple and complex cases.

- Extensive experiments on the SMS Spam Collection dataset show that our method outperforms both traditional machine learning baselines and standalone LLMs, reaching 97.5% accuracy, 96.8% precision, and 96.2% recall. Its average inference time (28.0 ms) stays close to that of DeBERTa-base alone (27.8 ms), which keeps it practical for real-world deployment.

2. Related Work

2.1. Traditional Machine Learning for Spam Detection

2.2. LLMs for Spam Detection and Text Classification

3. Methodology

Algorithm 1: Hybrid spam detection pipeline.

3.1. Hybrid Architecture Overview

- Naive Bayes Branch: This branch uses a lightweight multinomial naive Bayes (MNB) classifier trained on term frequency-inverse document frequency (TF-IDF) features extracted from the input text. It captures statistical patterns in the text, in particular the frequency of keywords and word combinations that are indicative of spam, and computes the probability that a message belongs to each class (spam or ham) from the occurrence of these features. The model is efficient and interpretable, which makes it suitable for fast classification when computational resources are limited.

- DeBERTa Branch: This branch uses a fine-tuned DeBERTa model, a transformer-based model designed to capture deep contextual representations of text. DeBERTa extends BERT-like architectures with a disentangled attention mechanism that represents the content and the position of each token separately, which helps the model capture relationships and long-range dependencies between words. Fine-tuning DeBERTa on spam data lets it learn the nuanced semantic patterns needed to distinguish spam from legitimate messages, especially when the text is obfuscated or uses sophisticated language. This branch therefore picks up subtle contextual cues that simpler models may miss.
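As a rough illustration of the Naive Bayes branch, the sketch below implements a tiny multinomial naive Bayes classifier with Laplace smoothing in plain Python. It is a simplification under stated assumptions: it works on raw token counts rather than the TF-IDF features described above, and the class name `TinyMultinomialNB`, the whitespace tokenizer, and the four training messages are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    # Hypothetical tokenizer: lowercase and split on whitespace.
    return text.lower().split()


class TinyMultinomialNB:
    """Minimal multinomial naive Bayes on raw token counts (not TF-IDF)."""

    def __init__(self, smoothing=1.0):
        self.smoothing = smoothing  # Laplace smoothing constant

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict_proba(self, text):
        n_docs = sum(self.doc_counts.values())
        log_scores = {}
        for c in self.classes:
            total = sum(self.word_counts[c].values())
            denom = total + self.smoothing * len(self.vocab)
            score = math.log(self.doc_counts[c] / n_docs)  # class prior
            for tok in tokenize(text):
                if tok in self.vocab:  # ignore out-of-vocabulary tokens
                    count = self.word_counts[c][tok]
                    score += math.log((count + self.smoothing) / denom)
            log_scores[c] = score
        # Normalize log scores into probabilities in a numerically stable way.
        top = max(log_scores.values())
        exp_scores = {c: math.exp(s - top) for c, s in log_scores.items()}
        z = sum(exp_scores.values())
        return {c: v / z for c, v in exp_scores.items()}


# Toy training data, invented for this example only.
texts = [
    "win a free prize now",
    "free entry winner",
    "are we meeting for lunch",
    "see you at home tonight",
]
labels = ["spam", "spam", "ham", "ham"]

model = TinyMultinomialNB().fit(texts, labels)
proba = model.predict_proba("free prize")
print(proba)
```

The spam probability returned by `predict_proba` is the quantity that this branch would pass on to the fusion step; a production system would more likely rely on an established library implementation than on this hand-written version.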

3.2. Naive Bayes Classifier

3.3. DeBERTa Classifier

3.4. Weighted Probability Fusion

3.5. Overall Inference Algorithm

4. Experiment

4.1. Dataset

4.2. Evaluation Metrics

- Accuracy: Accuracy measures the overall percentage of correctly classified messages. It is defined as the ratio of the number of correct predictions (both spam and ham) to the total number of messages: $\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$, where $TP$ is the number of true positives (correctly classified spam messages), $TN$ is the number of true negatives (correctly classified ham messages), $FP$ is the number of false positives (ham messages incorrectly classified as spam), and $FN$ is the number of false negatives (spam messages incorrectly classified as ham).

- Precision: Precision measures the proportion of true spam messages among all messages classified as spam. It is defined as follows: $\mathrm{Precision} = \frac{TP}{TP + FP}$. Precision focuses on the quality of spam predictions, and a high precision value indicates that the model does not misclassify ham messages as spam frequently.

- Recall: Recall (also known as sensitivity or true positive rate) measures the proportion of correctly identified spam messages among all actual spam messages. It is defined as follows: $\mathrm{Recall} = \frac{TP}{TP + FN}$. Recall highlights the model’s ability to identify all spam messages, and a high recall value indicates that the model minimizes false negatives, capturing as many spam messages as possible.

- F1-score: The F1-score is the harmonic mean of precision and recall, providing a balanced measure that considers both false positives and false negatives. It is defined as follows: $F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$. The F1-score is especially useful when there is an imbalance between precision and recall, as it combines both metrics into a single score that penalizes large discrepancies between them.
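The four metrics above can be computed directly from the confusion-matrix counts. The following is a minimal sketch; the function name `classification_metrics` and the example counts are hypothetical and do not come from the experiments reported here.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, and F1 from confusion counts.

    Spam is treated as the positive class. Each ratio falls back to 0.0
    when its denominator is zero.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=90, tn=880, fp=10, fn=20)
print(metrics)
```

With these example counts, accuracy is 970/1000, precision is 90/100, and recall is 90/110, which shows how a classifier can look strong on accuracy while still missing a noticeable share of spam.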

4.3. Baselines

- Multinomial Naive Bayes (MNB): This baseline model uses the traditional multinomial naive Bayes classifier, which is trained on TF-IDF features. As one of the most popular and widely used algorithms for text classification tasks, naive Bayes operates by calculating the likelihood of a message belonging to the spam class based on the frequency of words. Despite its simplicity, MNB is highly efficient and interpretable, making it a strong baseline for comparison. However, it may struggle with more complex linguistic patterns or semantic relationships within the text, as it relies heavily on word frequencies and assumes feature independence.

- DeBERTa-base: DeBERTa (decoding-enhanced BERT with disentangled attention) is a more advanced deep learning model for natural language processing, built upon the transformer architecture. In this baseline, we use the pre-trained DeBERTa-base model, which has been fine-tuned on the same SMS spam dataset. DeBERTa improves upon BERT by incorporating disentangled attention mechanisms, which separate content and positional information for more accurate context understanding. This allows DeBERTa to capture deeper semantic relationships in text, making it particularly effective at detecting nuanced spam messages. However, DeBERTa requires more computational resources and may not be as efficient as simpler models in terms of inference time, especially when deployed in real-world spam detection systems.

- Our Hybrid Model: This is the proposed model, which combines MNB and DeBERTa through weighted probability fusion. It pairs the fast, lightweight naive Bayes classifier with the context-aware DeBERTa model and merges the predictions of the two classifiers with a fusion weight to produce the final classification. The aim is to draw on MNB’s efficiency for simpler cases and on DeBERTa’s contextual understanding for more complex ones, so that the model remains usable in real-time applications with large message volumes while still handling messages that require deeper semantic analysis.
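A weighted probability fusion of this kind could look like the sketch below. It assumes a simple convex combination of the two spam probabilities, with the weight applied to the MNB output, which is consistent with the sensitivity results in this paper where a weight of 0.0 corresponds to DeBERTa only and 1.0 to MNB only. The defaults (weight 0.3, decision threshold 0.45) are the values those tables mark as proposed and F1-max; the function names and the exact form of the combination are assumptions, not the authors' published code.

```python
def fuse_probabilities(p_nb, p_deberta, alpha=0.3):
    """Convex combination of two spam probabilities.

    alpha is the weight given to the naive Bayes probability;
    (1 - alpha) goes to the DeBERTa probability. Assumed form.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return alpha * p_nb + (1.0 - alpha) * p_deberta


def classify(p_nb, p_deberta, alpha=0.3, threshold=0.45):
    """Return the predicted label and the fused spam probability."""
    p_spam = fuse_probabilities(p_nb, p_deberta, alpha)
    label = "spam" if p_spam >= threshold else "ham"
    return label, p_spam


# Hypothetical branch outputs for two messages.
print(classify(p_nb=0.9, p_deberta=0.2))  # keyword-heavy but benign in context
print(classify(p_nb=0.2, p_deberta=0.9))  # obfuscated spam missed by MNB
```

Because DeBERTa carries the larger weight at `alpha=0.3`, a confident DeBERTa prediction dominates the fused score, while the MNB term mainly shifts borderline cases.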

4.4. Implementation Details

4.5. Results and Discussion

4.6. Sensitivity Analysis of the Fusion Weight

4.7. Robustness Test on Different Types of Spam

1. Spelling Obfuscation: In this type of attack, common spam keywords such as “free” and “winner” are replaced with visually similar variants, like “fr3e” and “w1nner”. This tactic is designed to confuse models that rely on exact keyword matching, making it more difficult for them to identify spam based solely on word frequencies.

2. Zero-Width Character Insertion: This attack involves inserting invisible zero-width spaces between characters of spam keywords, such as “fr[ZWSP]ee” or “w[ZWSP]inner”. The goal is to deceive tokenizers, which may fail to recognize the original words due to the inserted invisible characters. This technique is commonly used to bypass simple text-based classifiers.

3. Synonym Replacement: In this attack, common spam keywords are replaced with less frequent but semantically similar words. For example, the word “free” might be replaced with “complimentary”, or “winner” could be replaced with “champion”. While this does not alter the meaning of the message, it challenges models that rely on exact keyword matching and may affect their ability to classify the message correctly.
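The three perturbations above can be sketched as simple text transformations. This is only an illustration of the attack types, not the procedure used to build the robustness test set: the keyword maps reuse the examples from the text, while the function names and the position at which the zero-width space is inserted are assumptions.

```python
import re

ZWSP = "\u200b"  # zero-width space

# Keyword maps built from the examples given in the text.
LEET_VARIANTS = {"free": "fr3e", "winner": "w1nner"}
SYNONYMS = {"free": "complimentary", "winner": "champion"}


def _replace_keywords(text, mapping):
    # Replace whole-word, case-insensitive matches of the mapping keys.
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(k) for k in mapping) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(lambda m: mapping[m.group(0).lower()], text)


def spelling_obfuscation(text):
    return _replace_keywords(text, LEET_VARIANTS)


def zero_width_insertion(text, keywords=("free", "winner")):
    # Assumed position: insert the zero-width space after the second character.
    mapping = {k: k[:2] + ZWSP + k[2:] for k in keywords}
    return _replace_keywords(text, mapping)


def synonym_replacement(text):
    return _replace_keywords(text, SYNONYMS)


message = "You are a winner of a free prize"
print(spelling_obfuscation(message))
print(repr(zero_width_insertion(message)))
print(synonym_replacement(message))
```

After zero-width insertion the message looks unchanged on screen, but the substring "free" no longer occurs in it, which is why keyword-based features fail to fire on such inputs.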

4.8. Error Analysis

5. Ethical Considerations

5.1. User Privacy

5.2. Bias and Fairness

5.3. Responsible Deployment

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | Accuracy | Precision | Recall | F1 | AUC |
|---|---|---|---|---|---|
| Logistic Regression (TF-IDF) | 95.8% | 94.2% | 92.5% | 93.3% | 97.9% |
| MNB (TF-IDF) | 96.1% | 93.7% | 95.6% | 94.6% | 98.3% |
| SVM (TF-IDF) | 96.5% | 95.5% | 94.1% | 94.8% | 98.5% |
| DistilBERT | 96.9% | 95.7% | 94.8% | 95.2% | 98.5% |
| BERT-base | 97.0% | 95.9% | 95.1% | 95.5% | 98.6% |
| RoBERTa-base | 96.9% | 96.0% | 95.3% | 95.6% | 98.7% |
| DeBERTa | 97.2% | 96.1% | 95.4% | 95.7% | 98.7% |
| Hybrid (Ours) | 97.5% | 96.8% | 96.2% | 96.5% | 99.1% |

| Fusion Weight | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| 0.0 (DeBERTa Only) | 97.2% | 96.1% | 95.4% | 95.7% |
| 0.1 | 97.3% | 96.4% | 95.8% | 96.1% |
| 0.2 | 97.4% | 96.6% | 96.0% | 96.3% |
| 0.3 (Proposed) | 97.5% | 96.8% | 96.2% | 96.5% |
| 0.4 | 97.3% | 96.5% | 95.9% | 96.2% |
| 0.5 | 97.1% | 96.0% | 95.7% | 95.8% |
| 0.7 | 96.6% | 94.8% | 95.6% | 95.2% |
| 1.0 (MNB Only) | 96.1% | 93.7% | 95.6% | 94.6% |

| Threshold | Precision | Recall | F1 | Note |
|---|---|---|---|---|
| 0.30 | 94.6% | 97.3% | 95.9% | High recall |
| 0.40 | 95.7% | 96.7% | 96.2% | |
| 0.45 | 96.8% | 96.2% | 96.5% | F1-max |
| 0.50 | 97.2% | 94.9% | 96.0% | Default |
| 0.60 | 98.1% | 92.3% | 95.1% | High precision |
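A threshold comparison like the one in the table above can be produced by sweeping candidate thresholds over fused spam probabilities and keeping the one with the highest F1. The sketch below shows the idea under stated assumptions: the function names and the six scores and labels are hypothetical toy data, and in practice the sweep would be run on a held-out validation set rather than on the test set.

```python
def f1_at_threshold(scores, labels, threshold):
    """F1 for the spam class (label 1) when predicting spam if score >= threshold."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_threshold(scores, labels, candidates=(0.30, 0.40, 0.45, 0.50, 0.60)):
    """Return the candidate threshold with the highest F1 (first one on ties)."""
    return max(candidates, key=lambda t: f1_at_threshold(scores, labels, t))


# Toy fused probabilities and ground-truth labels (1 = spam, 0 = ham).
scores = [0.95, 0.80, 0.47, 0.42, 0.35, 0.10]
labels = [1, 1, 1, 0, 0, 0]

for t in (0.30, 0.40, 0.45, 0.50, 0.60):
    print(t, round(f1_at_threshold(scores, labels, t), 3))
print("best:", best_threshold(scores, labels))
```

Lower thresholds trade precision for recall and higher ones do the opposite, so the choice depends on whether missed spam or blocked legitimate messages is the costlier error for a given deployment.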

| Model | Standard Spam | Spelling Obfuscation | Zero-Width Chars | Synonym Replacement |
|---|---|---|---|---|
| MNB (TF-IDF) | 94.6% | 65.2% | 71.5% | 78.4% |
| DeBERTa | 95.7% | 92.1% | 93.5% | 92.8% |
| Hybrid (Ours) | 96.5% | 92.5% | 94.0% | 93.1% |

| Model | Avg. Inference Time (ms) |
|---|---|
| Naive Bayes (TF-IDF) | 0.45 |
| DistilBERT | 13.2 |
| RoBERTa-base | 29.5 |
| DeBERTa-base | 27.8 |
| Hybrid (Ours) | 28.0 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, A.; Li, K.; Wang, H. A Fusion-Based Approach with Bayes and DeBERTa for Efficient and Robust Spam Detection. Algorithms 2025, 18, 515. https://doi.org/10.3390/a18080515