Cross-Cultural Safety Judgments in Child Environments: A Semantic Comparison of Vision-Language Models and Humans

Abstract

1. Introduction

2. Related Work

2.1. Large Language Models and Multimodality

2.2. Intelligent Applications in Child Safety

2.3. Datasets Related to Danger

3. Experimental Procedure

4. Data Preparation

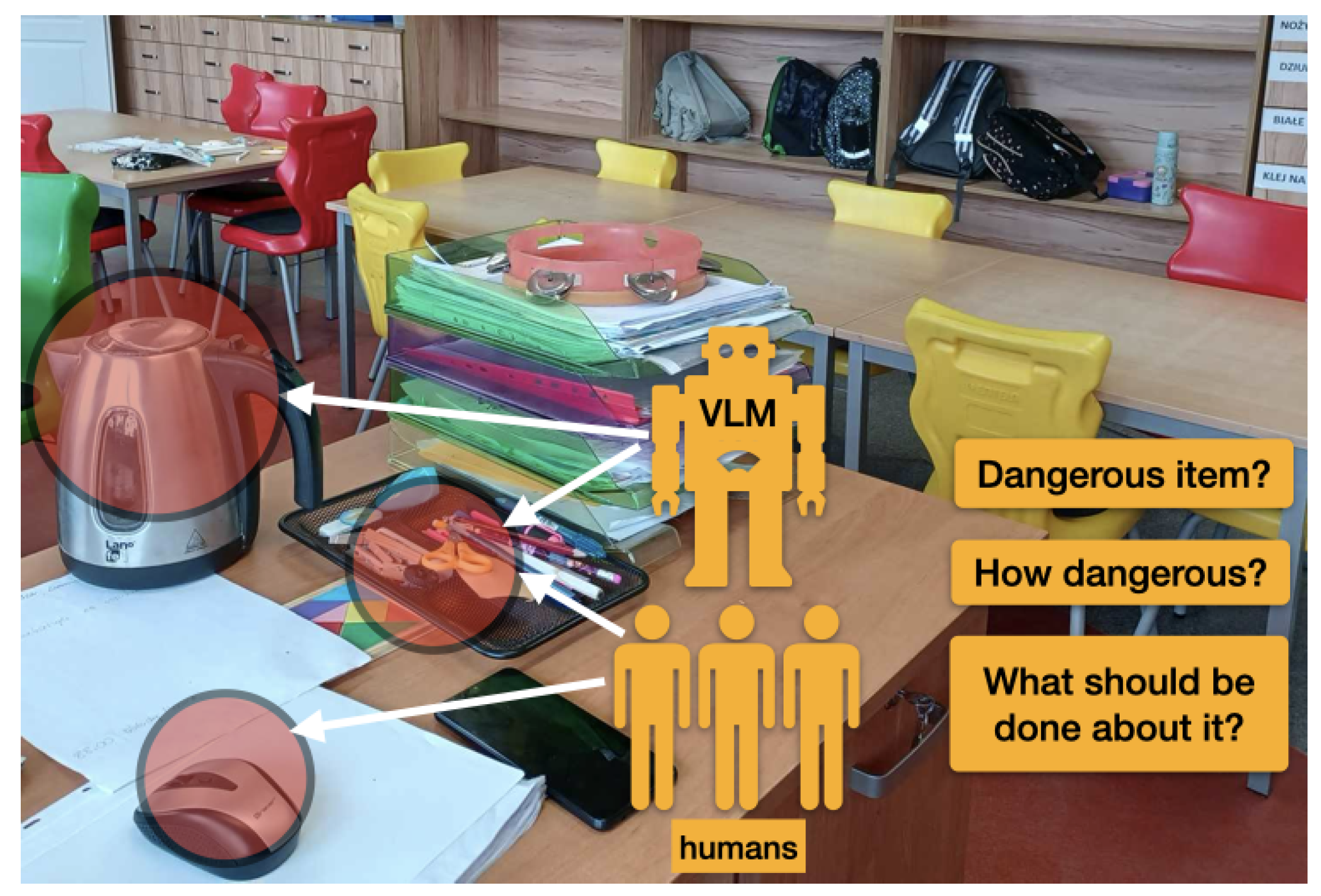

4.1. Collecting Images

4.2. Human Annotation

- Location: The country where the photo was taken (Japan or Poland);

- The type of environment: Whether it was inside a house or a school activity room;

- The age of the child: A number representing the age range of the child or children attending the place where the photo was taken;

- Names of the potentially dangerous objects, or descriptions of the potential dangers themselves, up to a maximum of three entries;

- A number indicating the degree of danger on a five-point scale (ranging from ‘very low’ to ‘very high’) for each recognized danger;

- Action(s) to be taken to make the environment or situation safer (in no more than two sentences).

- A radio button was also available if the participant found no significant danger.

4.3. Model-Generated Annotations

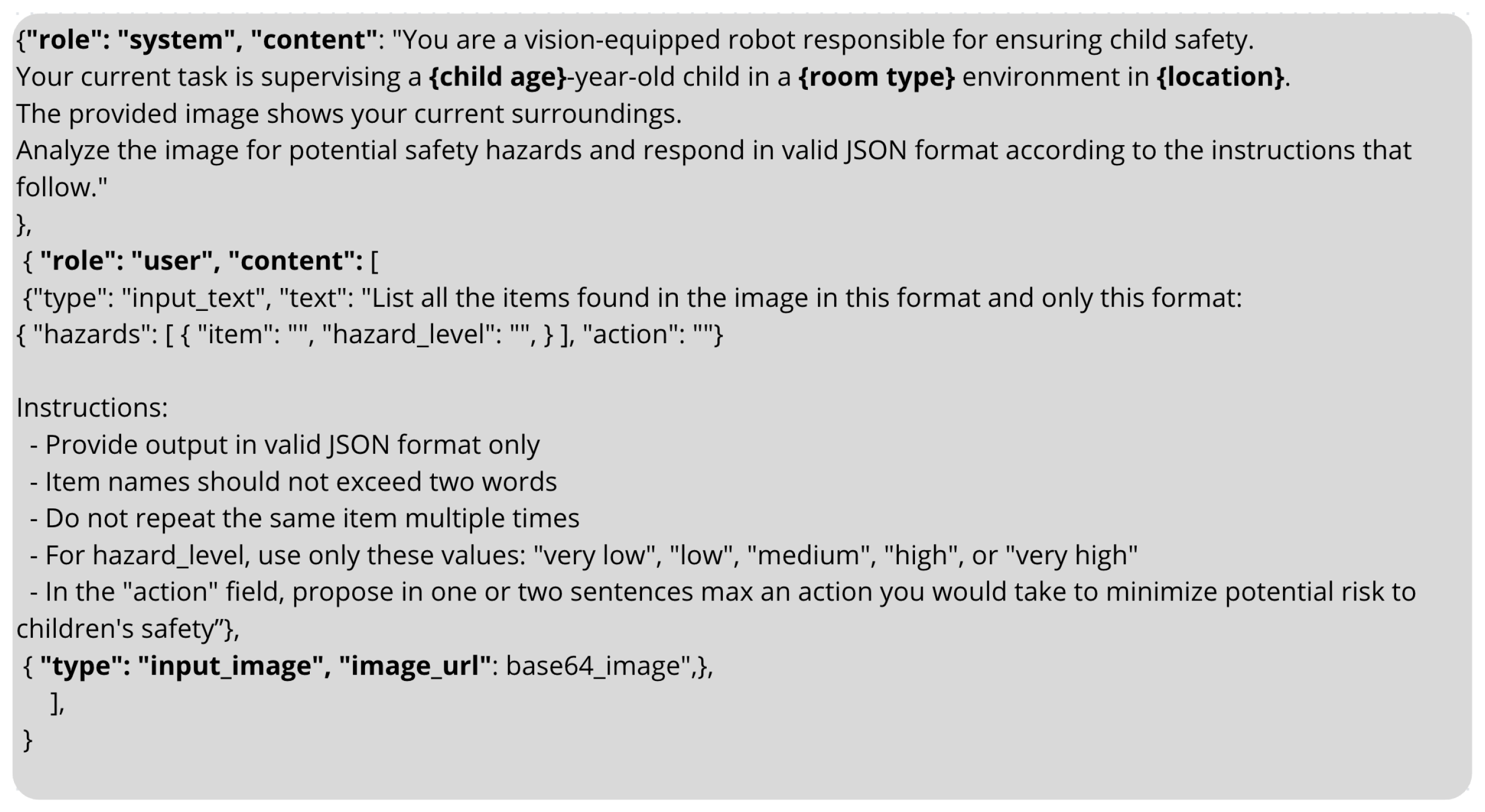

4.3.1. Multilingual Prompt Engineering

- Contextual Role-Play Prompting: A primary system prompt established the VLM’s role as a “vision-equipped robot responsible for ensuring child safety”. To ground the model’s analysis in a specific context, this prompt was dynamically populated with metadata corresponding to each image. These metadata included the child’s age, the type of environment depicted (e.g., ‘home’, ‘school’), and the geographical location (‘Japan’, ‘Poland’). These contextual variables were translated into the target language for each respective experimental run.

- Structured Output Specification: A secondary user prompt provided explicit instructions for the task, requiring the model to identify potential hazards and formulate a response in a predefined JSON structure. Requesting a JSON response helps obtain structured, machine-readable answers from complex systems such as Large Language Models (LLMs) and Vision-Language Models (VLMs) [31]. The format mandated a list of hazards, each entry containing the item name and its hazard-level classification. The prompt also required a directive containing a concise, two-sentence recommendation to mitigate the identified risks. To ensure uniformity, the five-point hazard-level scale (from “very low” to “very high”) was also translated and specified in each language’s prompt (see Figure 3).
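The two-part prompt design can be illustrated with a minimal Python sketch. It is not the authors’ prompt: the template wording only paraphrases the description above, and the JSON keys (`hazards`, `item`, `level`, `action`), the mid-scale label “medium”, and the helper names are hypothetical. The validator checks the English scale only; a multilingual run would substitute the translated labels.

```python
import json

# Hypothetical templates paraphrasing Section 4.3.1; not the authors' exact prompt.
SYSTEM_TEMPLATE = (
    "You are a vision-equipped robot responsible for ensuring child safety. "
    "The photo shows a {environment} in {location} used by children aged {age}."
)
USER_PROMPT = (
    "List the potential hazards in the image and reply only with JSON of the form "
    '{"hazards": [{"item": "...", "level": "..."}], "action": "..."}. '
    "Use one of: very low, low, medium, high, very high for level, "
    "and at most two sentences for action."
)

# Assumed English scale; multilingual runs would use the translated labels.
LEVELS = {"very low", "low", "medium", "high", "very high"}


def build_system_prompt(age: str, environment: str, location: str) -> str:
    """Fill the role-play template with the per-image metadata."""
    return SYSTEM_TEMPLATE.format(age=age, environment=environment, location=location)


def parse_response(raw: str) -> dict:
    """Parse a model reply and check it against the hypothetical schema."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    hazards = data.get("hazards")
    if not isinstance(hazards, list):
        raise ValueError("'hazards' must be a list")
    for h in hazards:
        if not isinstance(h, dict) or not all(k in h for k in ("item", "level")):
            raise ValueError(f"malformed hazard entry: {h!r}")
        level = h["level"]
        if not isinstance(level, str) or level.strip().lower() not in LEVELS:
            raise ValueError(f"unknown hazard level: {level!r}")
    if not isinstance(data.get("action"), str):
        raise ValueError("'action' must be a string")
    return data


reply = ('{"hazards": [{"item": "scissors", "level": "high"}], '
         '"action": "Put the scissors away. Keep sharp tools out of reach."}')
print(build_system_prompt("3-6", "home", "Japan"))
print(parse_response(reply)["hazards"][0]["item"])  # scissors
```

Rejecting malformed replies at parse time, rather than repairing them, keeps every downstream comparison on the same schema.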

4.3.2. Automated Data Elicitation and Collection

5. Data Preprocessing for Comparative Analysis

5.1. Hazard Normalization

5.1.1. Data Aggregation and Normalization

- Hazard Level Conversion: The textual descriptions of hazard levels (e.g., “high,” “très élevé,” “高い”) were mapped to a unified 5-point ordinal scale (1 = “very low” to 5 = “very high”). This conversion enabled the quantitative comparison of perceived danger across sources (i.e., human annotators and VLMs) and languages.

- Textual Data Cleaning: All identified item names were converted to lowercase to ensure consistency. Extraneous whitespace and stopwords were removed, leaving only the core hazard name for each entry.
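A minimal sketch of both steps, assuming a lookup table for the level labels and a small stopword list. Only “high,” “très élevé,” and “高い” are attested in the text; the other labels, the stopword list, and the function names are illustrative assumptions. Whitespace tokenization does not segment Japanese, so a real pipeline would need a language-specific tokenizer for that step.

```python
import re

# Hypothetical label vocabulary: only "high", "très élevé" and "高い" appear in the
# text; the remaining translations are illustrative assumptions.
LEVEL_MAP = {
    "very low": 1, "low": 2, "medium": 3, "high": 4, "very high": 5,               # English
    "très faible": 1, "faible": 2, "moyen": 3, "élevé": 4, "très élevé": 5,        # French
    "bardzo niski": 1, "niski": 2, "średni": 3, "wysoki": 4, "bardzo wysoki": 5,   # Polish
    "非常に低い": 1, "低い": 2, "中程度": 3, "高い": 4, "非常に高い": 5,             # Japanese
}

# Tiny illustrative stopword list; a real pipeline would use per-language lists.
STOPWORDS = {"the", "a", "an", "of", "le", "la", "les", "des", "du"}


def level_to_ordinal(label: str) -> int:
    """Map a textual hazard level to the unified 1-5 ordinal scale."""
    return LEVEL_MAP[label.strip().lower()]


def clean_item(name: str) -> str:
    """Lowercase, drop stopwords, and collapse redundant whitespace."""
    tokens = re.split(r"\s+", name.strip().lower())
    return " ".join(t for t in tokens if t and t not in STOPWORDS)


print(level_to_ordinal("Très élevé"), level_to_ordinal("高い"))  # 5 4
print(clean_item("  The   Power Strip "))  # power strip
```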

5.1.2. Semantic Clustering of Hazardous Objects

- Semantic Embedding: Each unique item name was converted into a high-dimensional vector representation using a pre-trained language model. For this task we employed the Language-agnostic BERT Sentence Embedding model (LaBSE) [32] (https://huggingface.co/sentence-transformers/LaBSE; accessed on 21 July 2025) from the sentence-transformers library, which generates 768-dimensional embeddings. This step mapped the lexical items into a shared semantic space in which their meanings could be compared quantitatively. The model was selected for its balance of performance and efficiency: at the time of the experiments it ranked highly for cross-lingual tasks on the Massive Text Embedding Benchmark (MTEB) leaderboard (https://huggingface.co/spaces/mteb/leaderboard; accessed on 21 July 2025), while remaining computationally lightweight enough for iterative experimentation.

- Optimal Cluster Identification via Fuzzy C-Means and Embedding Coherence Entropy: To group the embeddings, we utilized a Fuzzy C-Means (FCM) clustering algorithm. Unlike traditional hard clustering methods, FCM allows each data point (i.e., each item name) to belong to multiple clusters with varying degrees of membership, providing a more nuanced representation of semantic relationships.

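- Step 1: Compute pairwise similarities. For a given cluster $C_j$ containing a set of embeddings $\{\mathbf{e}_1, \ldots, \mathbf{e}_{n_j}\}$, we first compute the cosine similarity for all unique pairs $(\mathbf{e}_a, \mathbf{e}_b)$ with $a < b$: $\mathrm{sim}(\mathbf{e}_a, \mathbf{e}_b) = \dfrac{\mathbf{e}_a \cdot \mathbf{e}_b}{\lVert \mathbf{e}_a \rVert \, \lVert \mathbf{e}_b \rVert}$. The mean similarity within the cluster, $\bar{s}_j$, is the average of these pairwise scores: $\bar{s}_j = \dfrac{2}{n_j (n_j - 1)} \sum_{a < b} \mathrm{sim}(\mathbf{e}_a, \mathbf{e}_b)$.

- Step 2: Transform similarity into coherence entropy. The Coherence Entropy of a cluster $C_j$, denoted by $H_j$, is defined as a nonlinear transformation $f$ of its mean dissimilarity $1 - \bar{s}_j$, i.e., $H_j = f(1 - \bar{s}_j)$, with $f(0) = 0$ and $f$ increasing. This ensures that perfect similarity ($\bar{s}_j = 1$) yields zero entropy, while lower similarity results in higher entropy.

- Step 3: Weighted aggregation across clusters. The total Coherence Entropy for a clustering configuration with $k$ clusters is the mean entropy of all clusters, weighted by their size $|C_j|$: $H(k) = \dfrac{\sum_{j=1}^{k} |C_j| \, H_j}{\sum_{j=1}^{k} |C_j|}$.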
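The clustering and the coherence-entropy criterion can be sketched in NumPy as follows. Several details are assumptions not stated above: Euclidean FCM with fuzzifier m = 2 on L2-normalized embeddings, hard assignment of each item to its highest-membership cluster before Steps 1–3, zero entropy for single-item clusters, and `np.log1p` as a placeholder for the unspecified transformation f. Synthetic vectors stand in for LaBSE output; with the sentence-transformers library, real embeddings could be obtained with `SentenceTransformer("sentence-transformers/LaBSE").encode(names)`.

```python
import numpy as np


def fuzzy_c_means(X, c, m=2.0, max_iter=300, tol=1e-5, seed=0):
    """Standard Fuzzy C-Means on the rows of X; returns (centers, U), U of shape (c, n)."""
    rng = np.random.default_rng(seed)
    U = rng.random((c, X.shape[0]))
    U /= U.sum(axis=0, keepdims=True)           # each point's memberships sum to 1
    for _ in range(max_iter):
        Um = U ** m
        centers = (Um @ X) / Um.sum(axis=1, keepdims=True)
        # Euclidean distance from every center to every point, shape (c, n)
        D = np.linalg.norm(X[None, :, :] - centers[:, None, :], axis=2)
        D = np.fmax(D, 1e-12)                    # guard against division by zero
        # u_in = 1 / sum_k (d_in / d_kn)^(2 / (m - 1))
        U_new = 1.0 / ((D[:, None, :] / D[None, :, :]) ** (2.0 / (m - 1.0))).sum(axis=1)
        converged = np.max(np.abs(U_new - U)) < tol
        U = U_new
        if converged:
            break
    return centers, U


def cluster_entropy(E, transform=np.log1p):
    """H_j = f(1 - mean pairwise cosine similarity); `transform` is a placeholder for f."""
    if len(E) < 2:
        return 0.0                               # assumption: singletons are fully coherent
    En = E / np.linalg.norm(E, axis=1, keepdims=True)
    a, b = np.triu_indices(len(E), k=1)          # all unique pairs a < b
    mean_sim = np.mean(np.sum(En[a] * En[b], axis=1))
    return float(transform(max(0.0, 1.0 - mean_sim)))


def coherence_entropy(X, labels):
    """Size-weighted mean of the per-cluster entropies, H(k)."""
    clusters = [X[labels == j] for j in np.unique(labels)]
    sizes = np.array([len(C) for C in clusters])
    entropies = np.array([cluster_entropy(C) for C in clusters])
    return float((sizes * entropies).sum() / sizes.sum())


# Synthetic stand-in for LaBSE vectors: two tight groups of five items in 8-D.
rng = np.random.default_rng(1)
X = np.vstack([np.eye(8)[0] + 0.05 * rng.standard_normal((5, 8)),
               np.eye(8)[1] + 0.05 * rng.standard_normal((5, 8))])
X /= np.linalg.norm(X, axis=1, keepdims=True)
for k in (2, 3, 4):
    _, U = fuzzy_c_means(X, c=k)
    print(k, round(coherence_entropy(X, U.argmax(axis=0)), 4))
```

Sweeping k and comparing H(k) shows how the entropy scores candidate configurations; the exact rule used to pick the final k is not described in this section, so the sketch only reports the scores.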

5.2. Action Normalization

- To distill each verbose sentence into a concise, actionable command, structured as a simple verb-object phrase (e.g., “remove the scissors”).

- To generate a corresponding English translation for every action, thereby creating a unified basis for cross-linguistic comparison.

- “Original”: A list of standardized actions in the source language.

- “English”: A list of the corresponding English translations of those actions.
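How the normalized actions were generated is not detailed here, but the two-list output described above lends itself to a simple consistency check. The sketch below (the function name is hypothetical) pairs each standardized action with its English translation and rejects outputs whose lists differ in length or contain empty entries.

```python
import json


def parse_normalized_actions(raw: str) -> list[tuple[str, str]]:
    """Pair each standardized source-language action with its English translation.

    Expects a JSON object with the keys "Original" and "English", each holding a
    list of short verb-object phrases of equal length.
    """
    data = json.loads(raw)
    original, english = data["Original"], data["English"]
    if not (isinstance(original, list) and isinstance(english, list)):
        raise ValueError("'Original' and 'English' must both be lists")
    if len(original) != len(english):
        raise ValueError("every action needs exactly one English translation")
    pairs = []
    for src, en in zip(original, english):
        src, en = src.strip(), en.strip()
        if not src or not en:
            raise ValueError("empty action")
        pairs.append((src, en))
    return pairs


raw = '{"Original": ["usuń nożyczki"], "English": ["remove the scissors"]}'
print(parse_normalized_actions(raw))  # [('usuń nożyczki', 'remove the scissors')]
```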

6. Comparison Framework

6.1. Multilingual Semantic Alignment of Hazard Annotations

- Shared semantic space: All canonical names of hazardous object clusters, previously identified via fuzzy clustering and normalization, were projected into a common high-dimensional space using a pre-trained multilingual sentence transformer. This approach ensured that conceptual alignment could be detected even when terminology varied across annotators or languages.

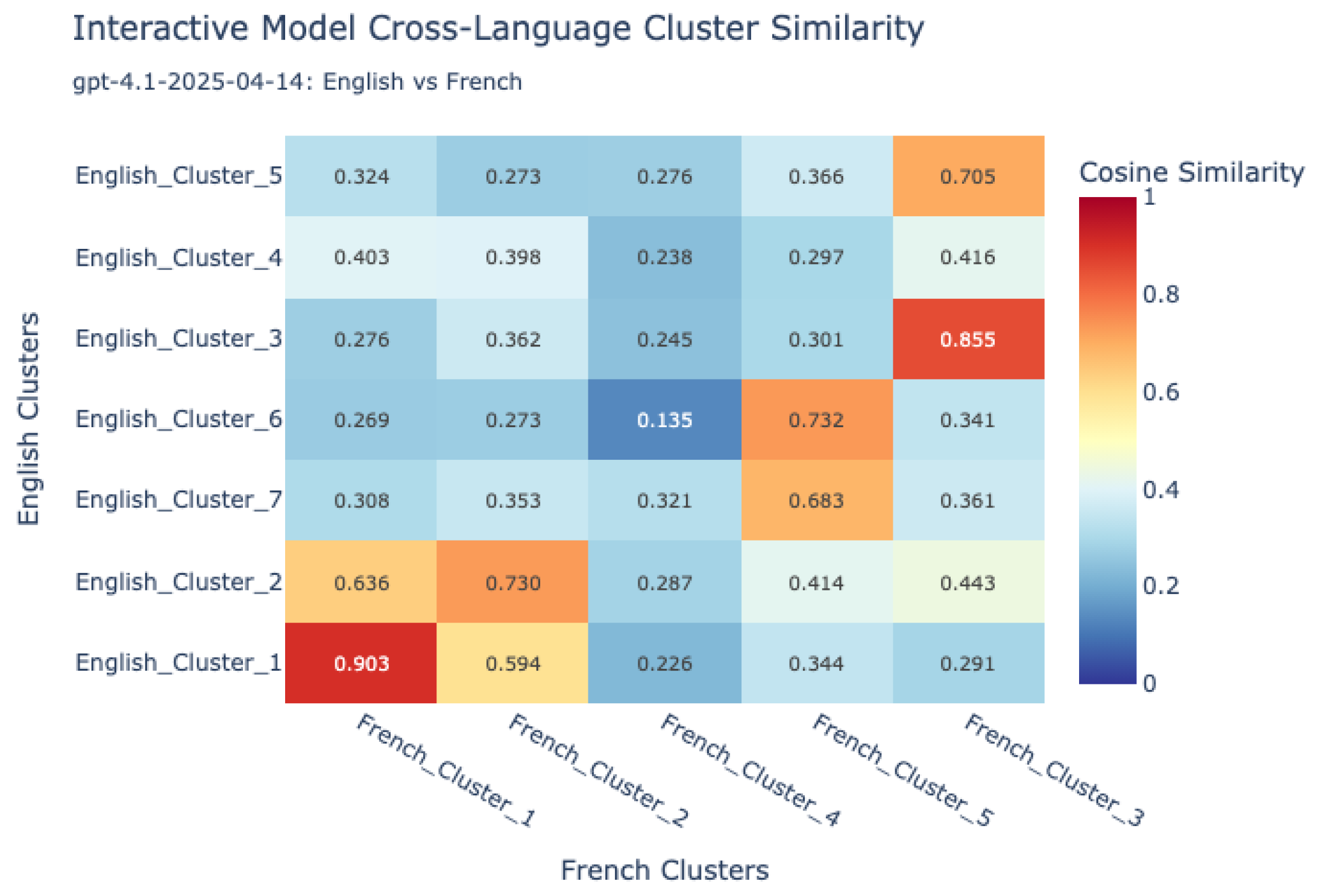

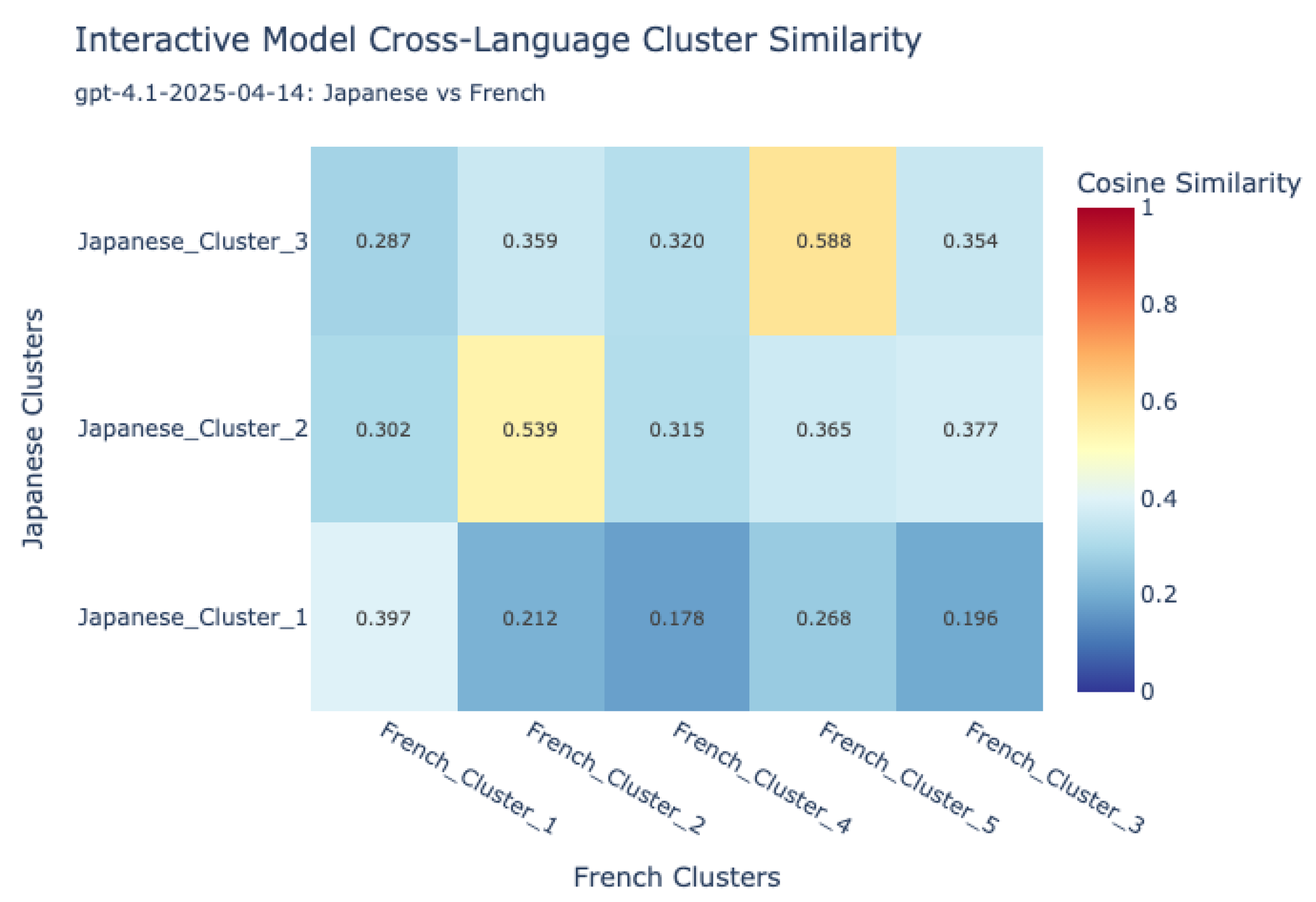

- Cosine similarity analysis: For each pair of object clusters (e.g., English-human vs. Japanese-VLM), cosine similarity between their embeddings was computed. This provided a continuous measure of semantic overlap, robust to surface-level linguistic variation.

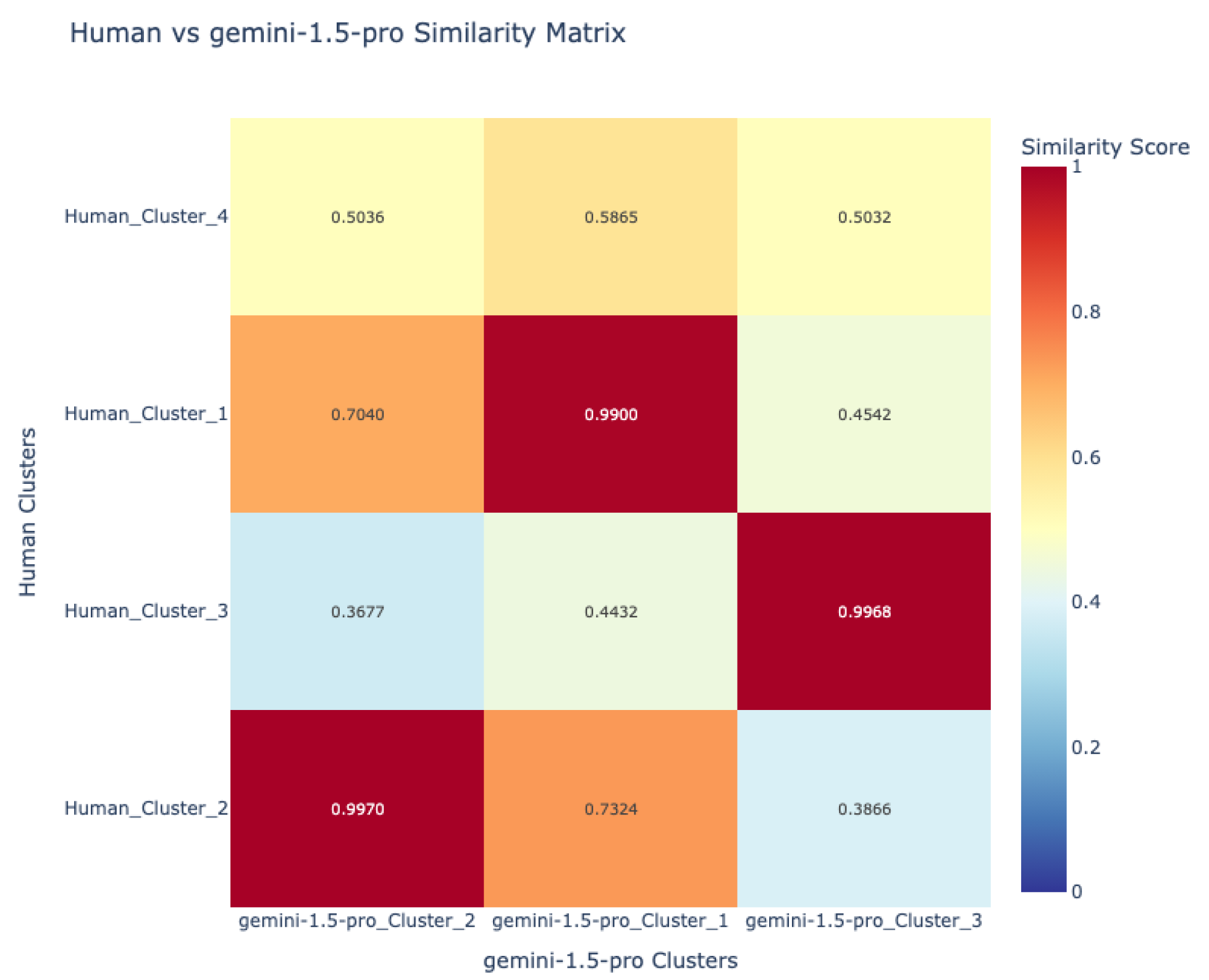

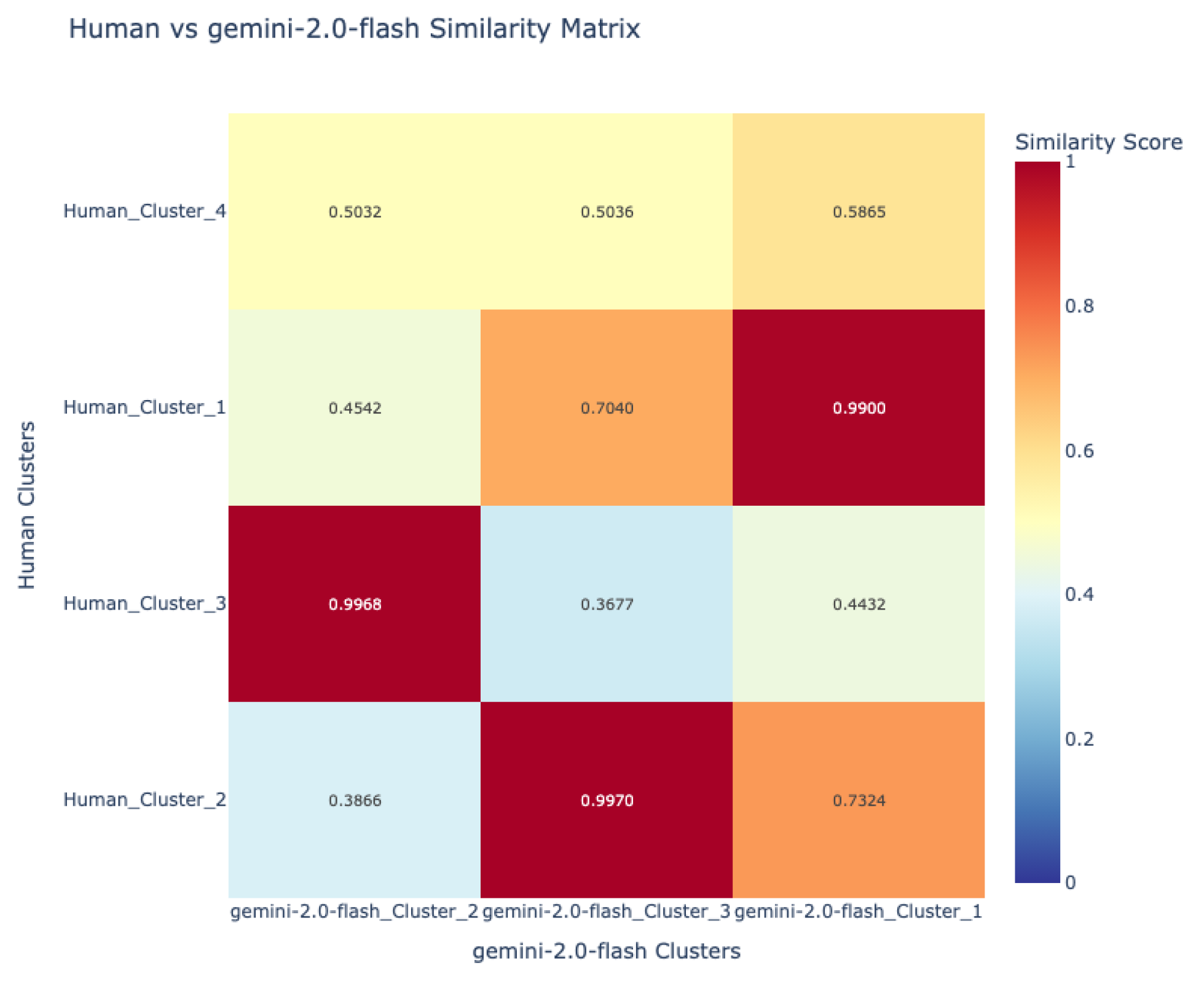

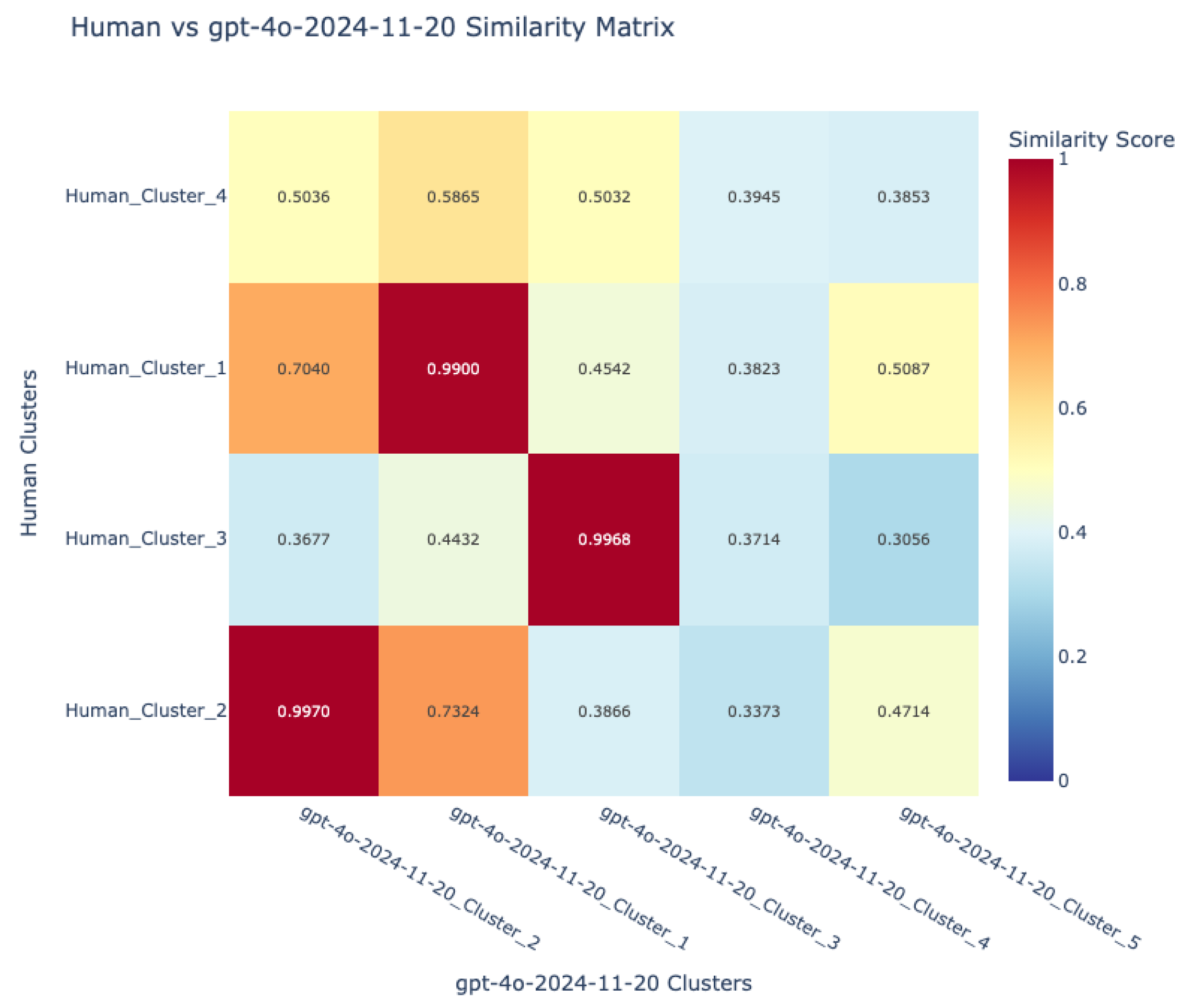

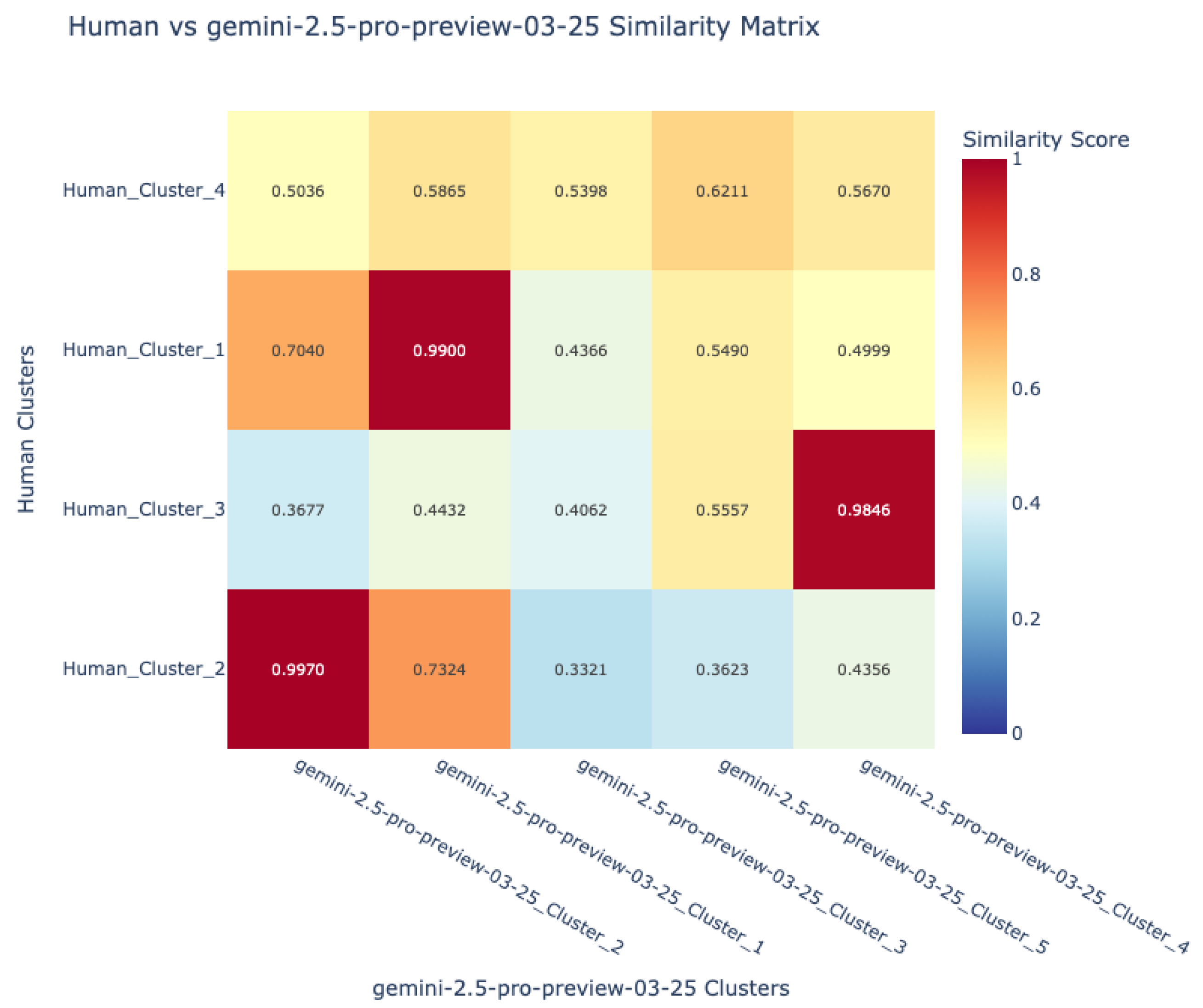

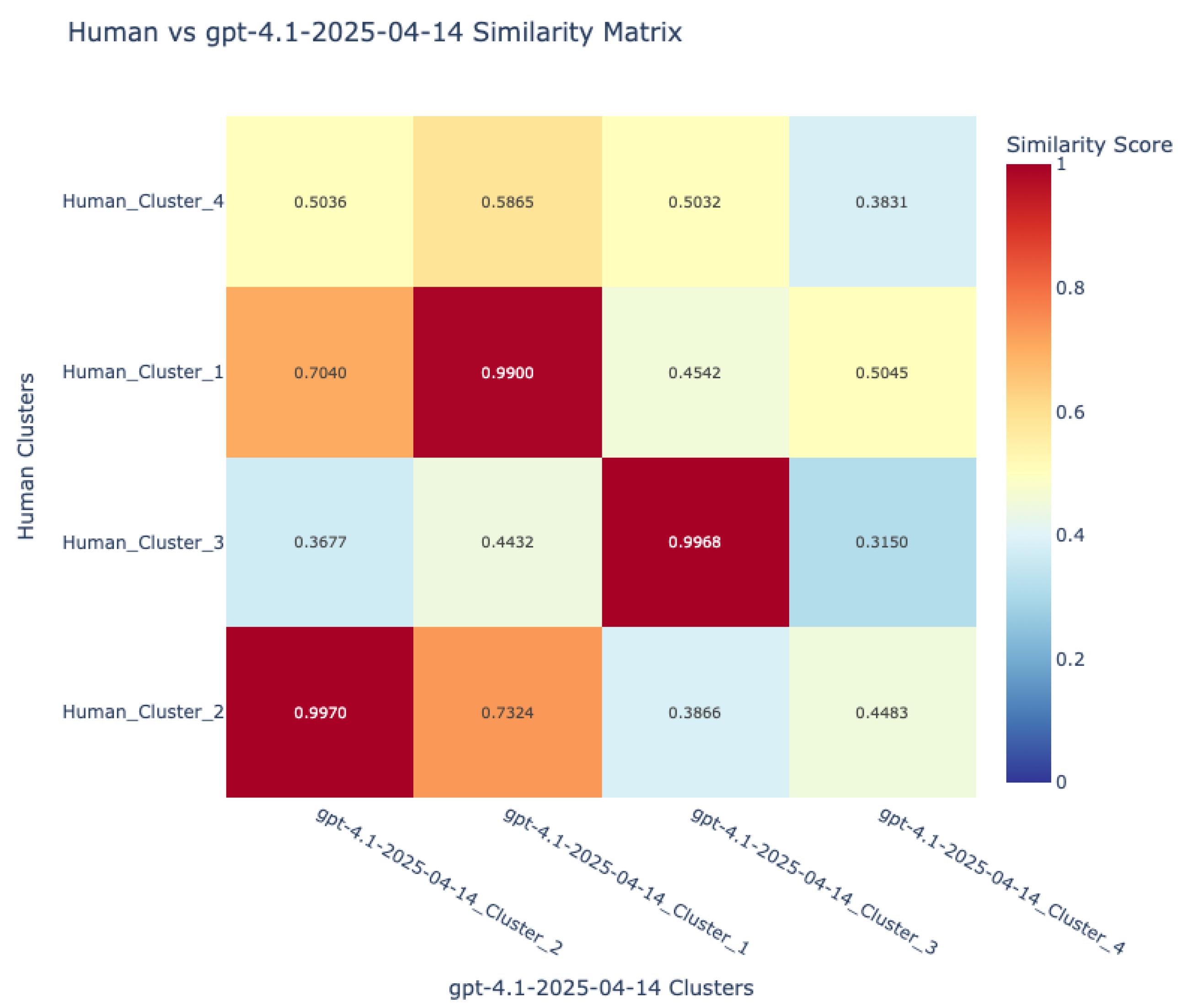

- Visualization and comparative mapping: To facilitate interpretation, similarity matrices were generated and visualized as heatmaps, providing a global overview of alignment patterns between language pairs and between human and VLM sources. This allowed for the identification of universally recognized hazards versus those unique to specific languages or sources.

- Cluster composition metrics: The degree of agreement between humans and VLMs was quantified by calculating the proportion of annotations from each source within individual semantic clusters. This nuanced analysis helped reveal systematic differences and areas of consensus.
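A minimal sketch of the similarity and composition computations, with hypothetical helper names and toy vectors in place of the cluster-name embeddings. The 0.8 threshold matches the “Sim Pairs Count (>0.8)” columns in the result tables; the source labels passed to `composition` are illustrative.

```python
from collections import Counter

import numpy as np


def cosine_matrix(A, B):
    """Cosine similarity between every row of A and every row of B."""
    A = A / np.linalg.norm(A, axis=1, keepdims=True)
    B = B / np.linalg.norm(B, axis=1, keepdims=True)
    return A @ B.T


def summarize(S, threshold=0.8):
    """Min, max, and count of cluster pairs whose similarity exceeds the threshold."""
    return float(S.min()), float(S.max()), int((S > threshold).sum())


def composition(cluster_sources):
    """Share of the annotations in one cluster contributed by each source."""
    counts = Counter(cluster_sources)
    total = sum(counts.values())
    return {source: n / total for source, n in counts.items()}


# Toy stand-ins for the embeddings of two sources' canonical cluster names.
human_clusters = np.array([[1.0, 0.0], [0.0, 1.0]])
vlm_clusters = np.array([[1.0, 0.1], [1.0, 1.0]])
S = cosine_matrix(human_clusters, vlm_clusters)   # the matrix behind a heatmap
print(summarize(S))
print(composition(["human", "human", "gpt-4o", "gemini-1.5-pro"]))  # {'human': 0.5, 'gpt-4o': 0.25, 'gemini-1.5-pro': 0.25}
```

Rendering `S` with a plotting library (for example matplotlib’s `imshow`) would produce heatmaps of the kind described above.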

6.2. Semantic Categorization and Alignment of Safety Actions

- Categorical action assignment: Each action recommendation, standardized and translated as described in preprocessing, was semantically classified into one of seven predefined categories using vector-based similarity to category prototypes. This step abstracted away linguistic and stylistic variation, allowing for direct cross-linguistic and cross-source comparison of intent.

- Embedding-based assignment: Both category descriptions and normalized action statements were embedded using a multilingual sentence transformer (Language-agnostic BERT Sentence Embedding). Each action was assigned to the category yielding the highest cosine similarity, ensuring that subtle or indirect phrasings were appropriately grouped.

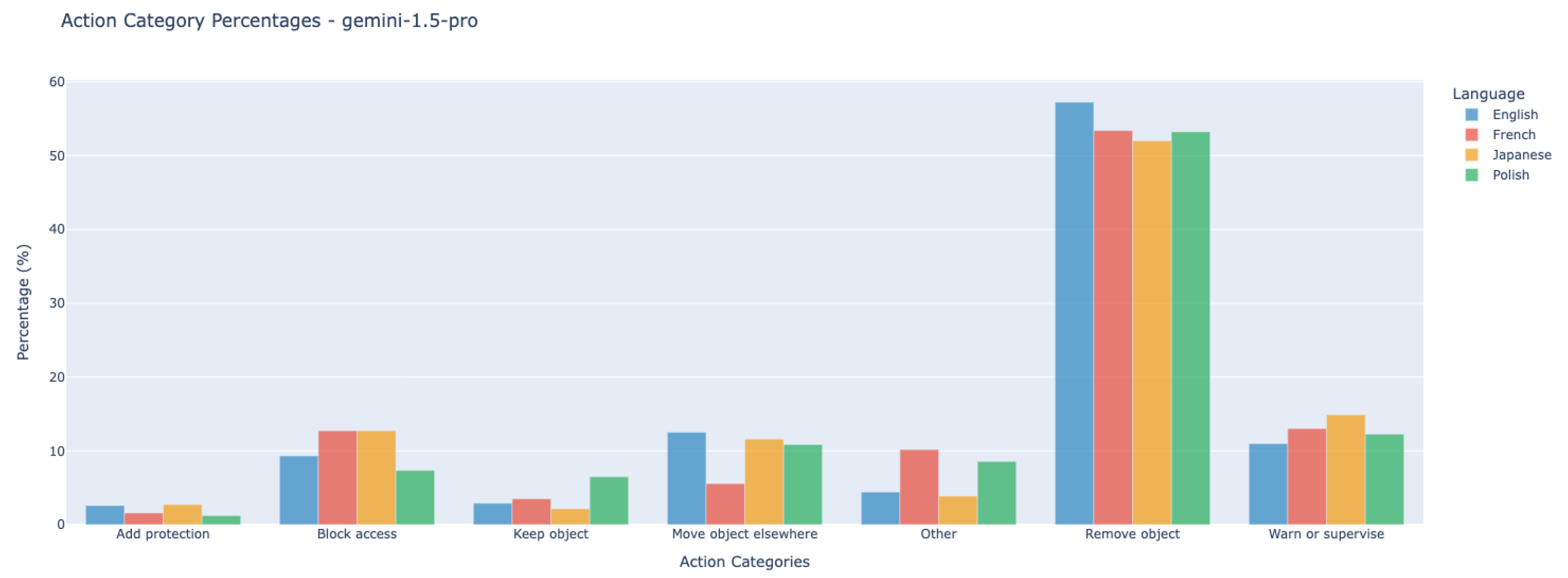

- Comparative analysis of action distribution: After categorical assignment, the distribution of action types was compared between humans and VLMs, and across languages. This enabled the identification of systematic tendencies (e.g., preference for removal vs. supervision) and detection of cross-cultural or model-specific patterns.
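The nearest-prototype assignment and the distribution comparison can be sketched as an argmax over cosine similarities followed by relative frequencies. The category names below are a hypothetical three-category subset (the seven actual categories are not listed in this section), and identity vectors stand in for LaBSE embeddings of the category descriptions and actions.

```python
from collections import Counter

import numpy as np


def assign_categories(action_vecs, prototype_vecs, category_names):
    """Assign each action to the category whose prototype is most cosine-similar."""
    A = action_vecs / np.linalg.norm(action_vecs, axis=1, keepdims=True)
    P = prototype_vecs / np.linalg.norm(prototype_vecs, axis=1, keepdims=True)
    best = (A @ P.T).argmax(axis=1)
    return [category_names[i] for i in best]


def distribution(labels, category_names):
    """Relative frequency of each category, including categories that never occur."""
    counts = Counter(labels)
    return {c: counts.get(c, 0) / len(labels) for c in category_names}


cats = ["removal", "supervision", "storage"]      # hypothetical subset of the seven
protos = np.eye(3)                                # stand-ins for prototype embeddings
human = np.array([[0.9, 0.1, 0.0], [0.2, 0.8, 0.1], [0.7, 0.0, 0.3]])
vlm = np.array([[0.1, 0.9, 0.0], [0.0, 0.2, 0.9], [0.1, 0.8, 0.2]])
h = assign_categories(human, protos, cats)
print(h)  # ['removal', 'supervision', 'removal']
print(distribution(h, cats))
print(distribution(assign_categories(vlm, protos, cats), cats))
```

Comparing the two distributions side by side is what surfaces tendencies such as a preference for removal over supervision.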

6.3. Integrated Quantitative and Qualitative Assessment

- Cosine similarity and cluster metrics: Continuous similarity measures and cluster composition statistics were used to quantify both object and action alignment.

- Interpretation of divergences: By mapping differences in object recognition and action preference, the framework enabled a discussion of the implications of the observed discrepancies, whether rooted in cultural factors, model architecture, or annotation practices.

6.4. Comparative Scenarios

- Cross-lingual human comparison: Hazard and action annotation patterns among human annotators from different linguistic backgrounds were directly compared, allowing the identification of both universal and culture-specific risk perceptions and mitigation strategies.

- Cross-lingual model comparison: The outputs of VLMs prompted in different languages were analyzed for internal consistency and language-induced biases, providing insight into the robustness of model safety assessments across linguistic contexts.

- Human–model concordance: The agreement and divergence between human annotators and VLMs were systematically measured for both object recognition and action recommendations. Clusters and categories exhibiting high or low concordance were highlighted and discussed.

7. Experimental Results

7.1. Analysis of Hazardous Object Detection

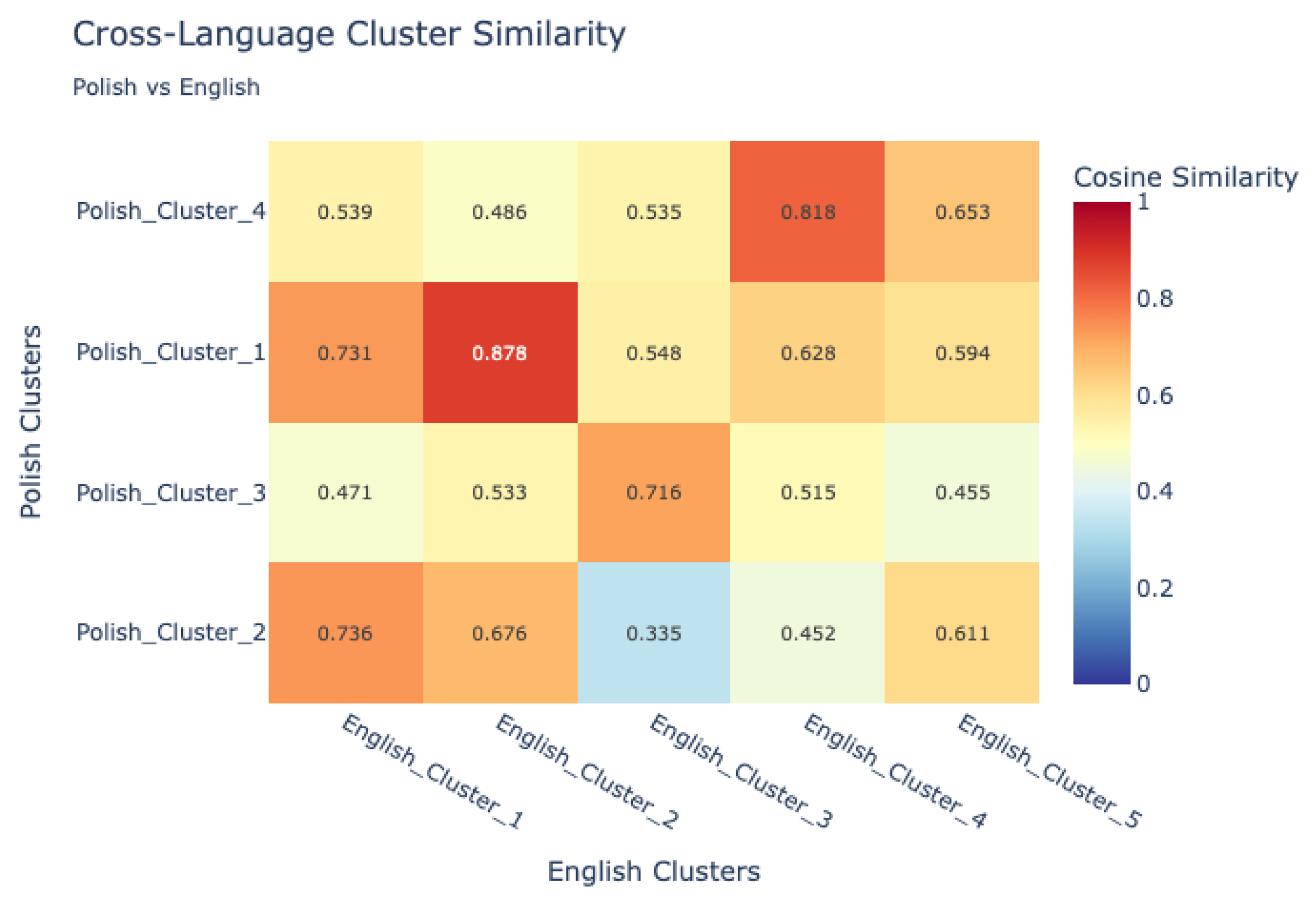

7.1.1. Cross-Lingual Analysis of Human Hazard Perception

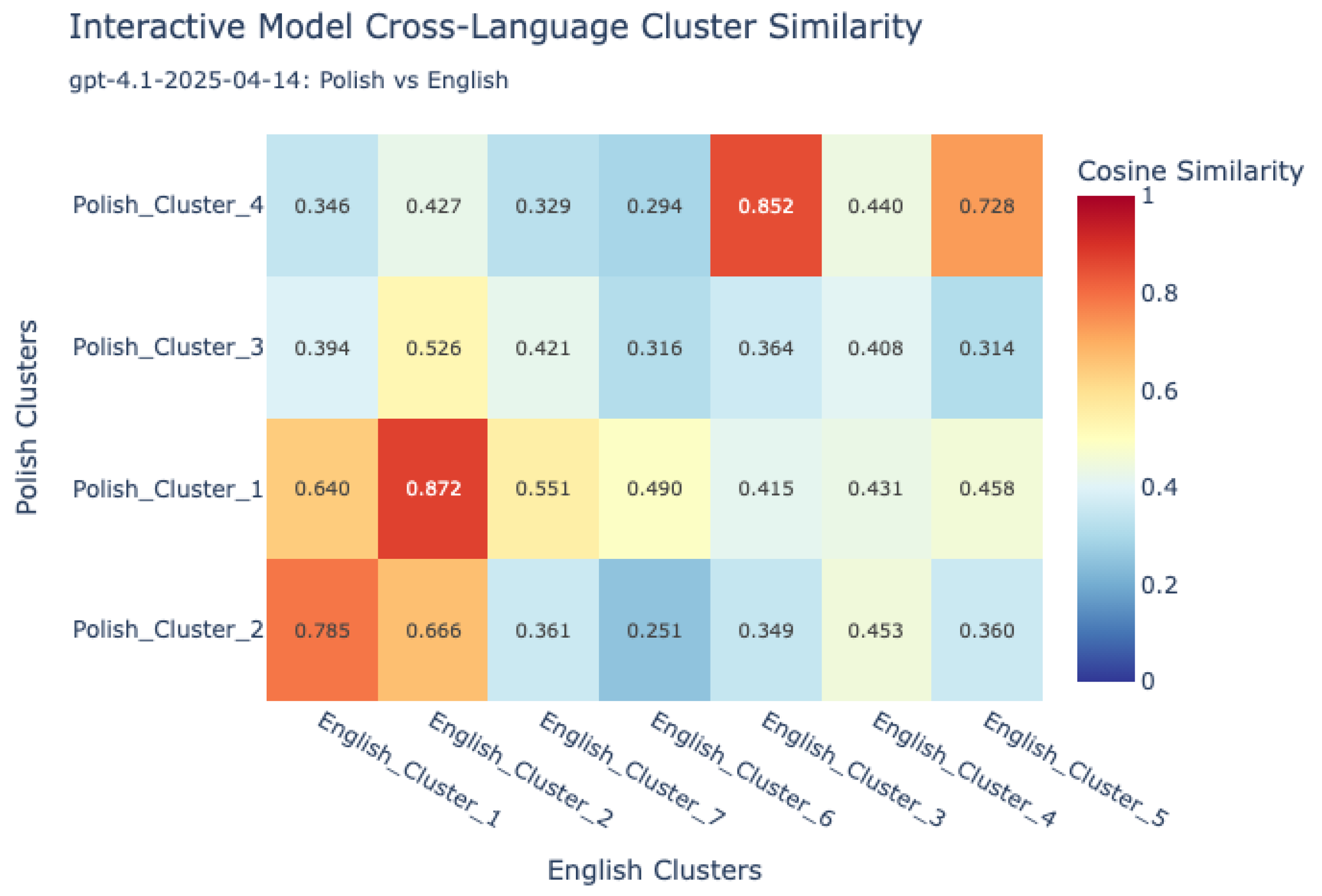

7.1.2. Multilingual Consistency in VLM Hazard Detection

7.1.3. Human–VLM Concordance in Hazard Identification

7.2. Analysis of Proposed Safety Actions

7.2.1. Cross-Lingual Analysis of Human-Proposed Mitigation Strategies

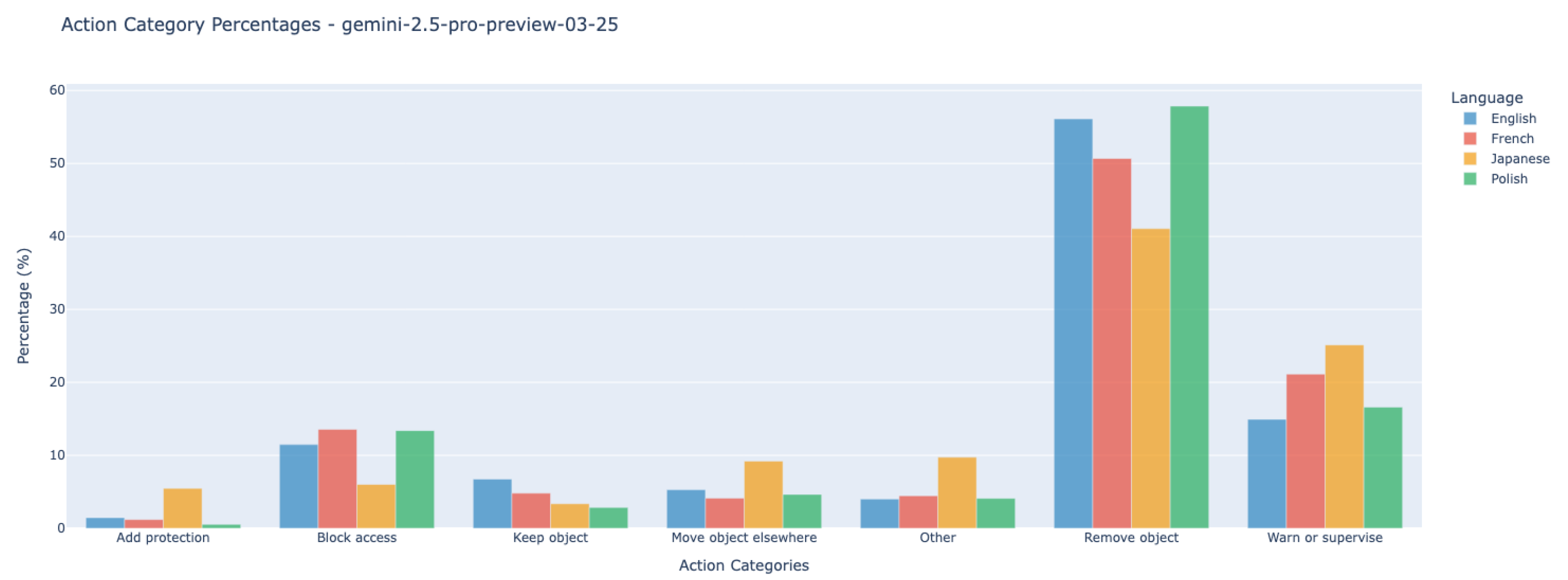

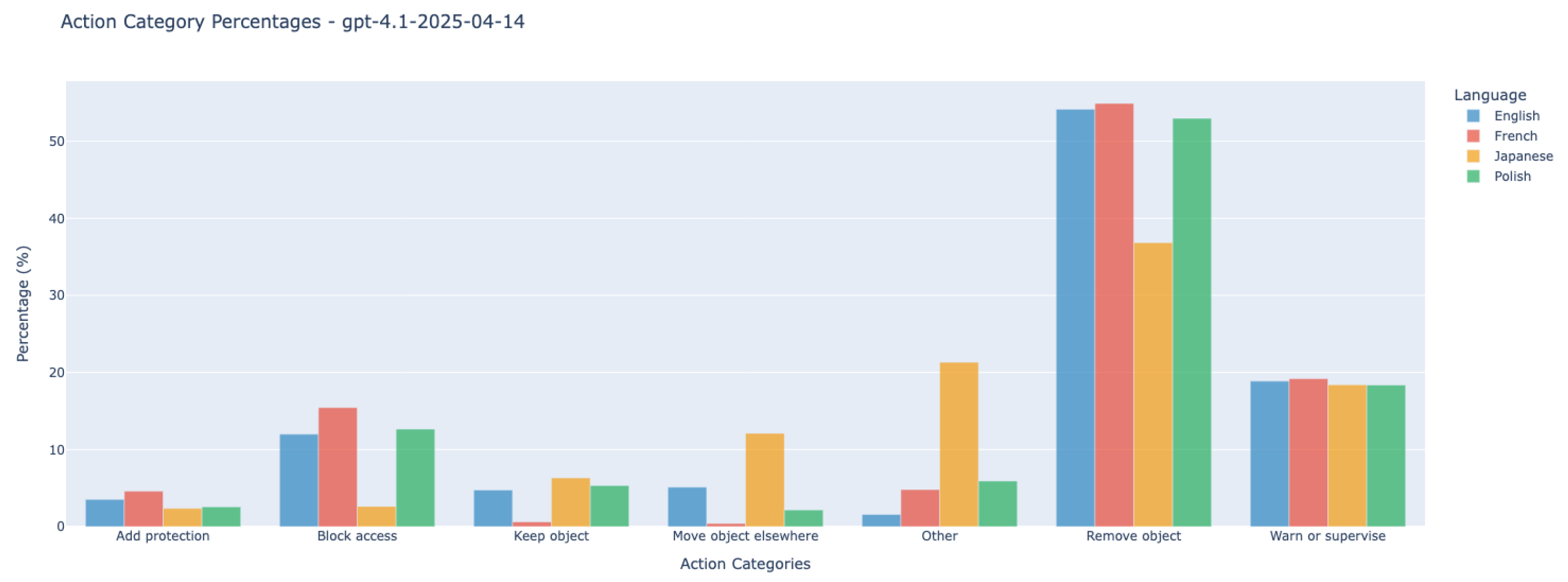

7.2.2. Multilingual Consistency in VLM Action Recommendations

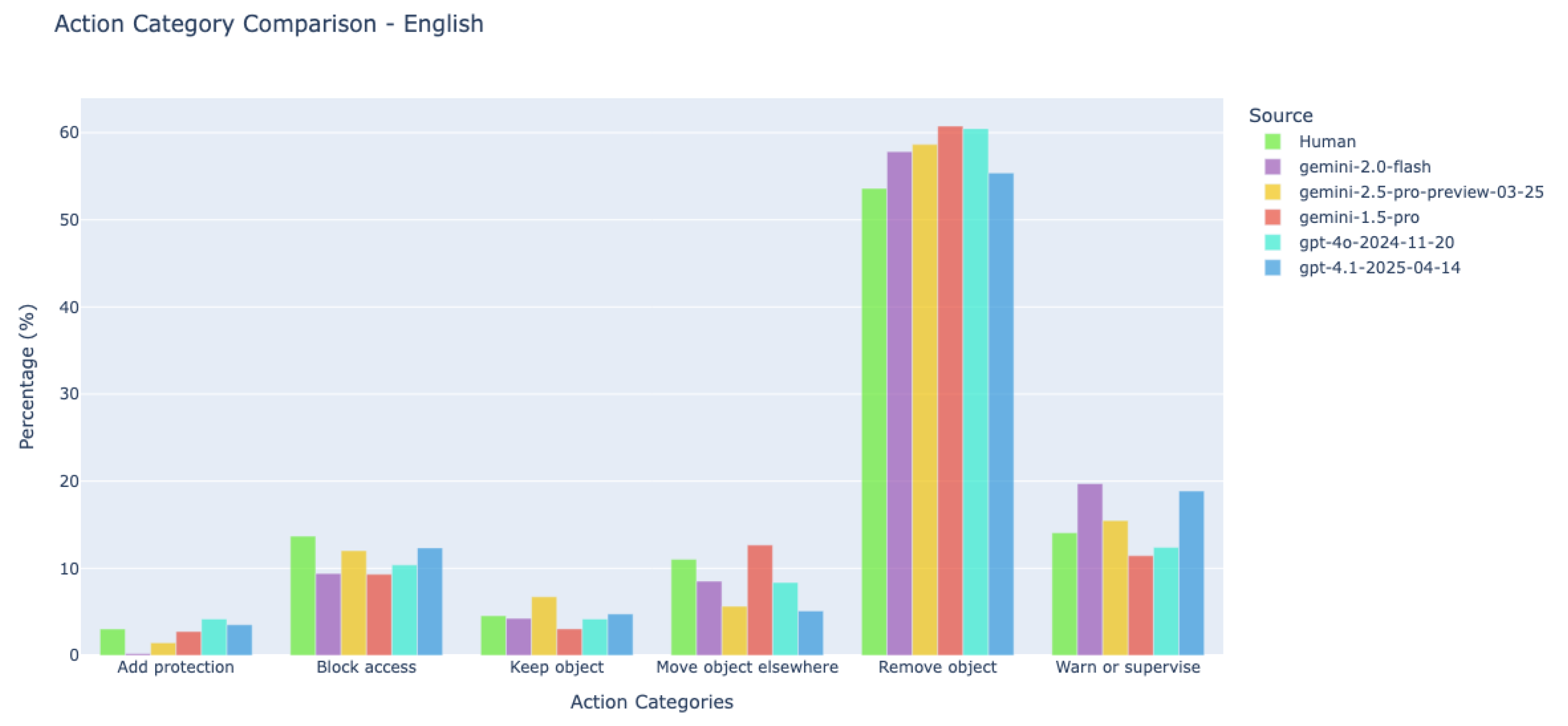

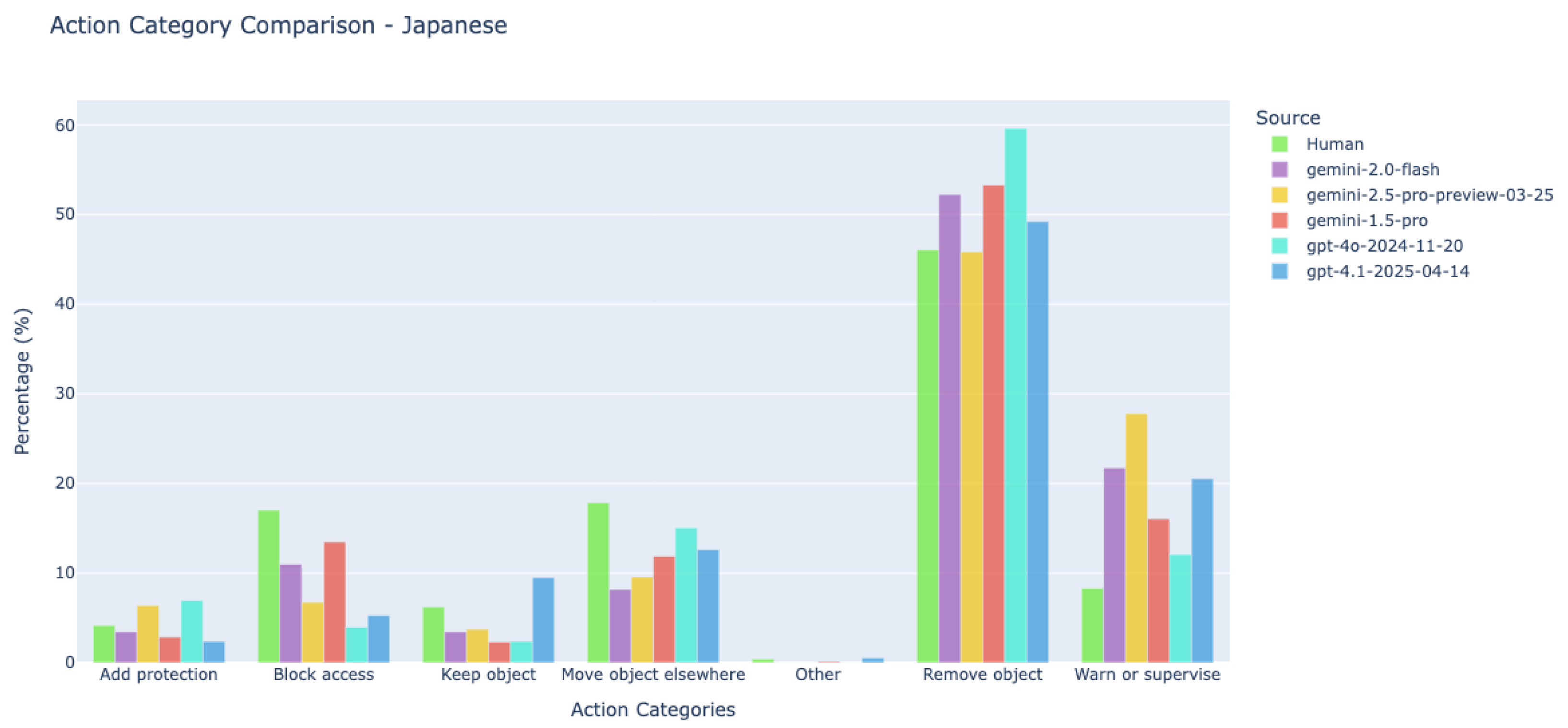

7.2.3. Human-VLM Concordance in Action Recommendations

8. Discussion

9. Conclusions

10. Future Work and Limitations

10.1. Future Work

10.2. Limitations

10.2.1. Limited Dataset Size and Participant Diversity

10.2.2. Restricted Environmental and Cultural Representation

10.2.3. Asymmetry in Annotation Task

10.2.4. Language Inconsistencies

10.2.5. Standard LLM Challenges: Hallucination and Explainability

10.2.6. Computational and Financial Constraints

11. Ethical Statement

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Language | Min Sim | Max Sim | Mean Sim | Sim Pairs Count |
|---|---|---|---|---|
| En | 0.14 | 0.99 | 0.47 | 25 |
| Fr | 0.15 | 1.0 | 0.46 | 13 |
| Jp | 0.01 | 0.94 | 0.38 | 15 |
| Pl | 0.12 | 1.0 | 0.50 | 21 |

Appendix B. Questionnaire and Data Details

- Instructions

- The aim of this survey is to compare human and AI capabilities to identify potential hazards in different environments. We will present you with 16 images of different locations containing various objects. Your task is to identify up to three potentially hazardous items in each image and to assess their level of dangerousness based on the information provided with the images. If no object seems relevant, you can select ‘None’ and move on to the next image. After that, write in one or two sentences why it is dangerous and what you would do in this situation to minimize potential risks to children’s safety. Completing this form may take between 15 and 17 min, depending on your response speed and attention to detail. Thank you for your valuable contribution. Please start now by clicking the ‘Next’ button. First, please provide us with some basic information about you. Note that all your answers will remain confidential and will only be used for research purposes.

- Section 1:
    1. Age: ∘ 18 to 24 ∘ 25 to 34 ∘ 35 to 44 ∘ 45 to 54 ∘ 55 to 64 ∘ 65 and over
    2. Sex: ∘ Male ∘ Female ∘ Prefer not to say ∘ Other: ____
    3. Do you have any children? ∘ Yes ∘ No
    4. Geographical region: ____

- Section 2 (the following questions were asked for each picture):
    1. If nothing seems dangerous, please check this box and move on to the next image: ∘ None
    2. First object: ____
    3. First item’s hazard level: five-point scale from Very low to Very high
    4. Second object: ____
    5. Second object’s hazard level: five-point scale from Very low to Very high
    6. Third object: ____
    7. Third object’s hazard level: five-point scale from Very low to Very high
    8. What would you do in this situation (in one or two sentences)? ____

| Attributes | Values |
|---|---|
| Total respondents | 16 |
| Age range | |
| 18 to 24 | 18.8% |
| 25 to 34 | 25.0% |
| 35 to 44 | 18.8% |
| 45 to 54 | 31.3% |
| 55 to 64 | 6.3% |
| 65 or over | - |
| Sex | |
| Male | 62.5% |
| Female | 31.3% |
| Genderfluid | 6.3% |
| Prefer not to say | - |
| Have children | |
| Yes | 68.8% |
| No | 31.3% |
| Country of origin | |
| Japan | 37.5% |
| China | 6.3% |
| Cameroon | 12.5% |
| Philippines | 6.3% |
| United States | 12.5% |
| Vanuatu | 6.3% |
| Poland | 12.5% |
| Belgium | 6.3% |

Appendix C. Glossary of Non-English Cluster Terms

| French Term | English Translation |
|---|---|
| courant | current (often refers to electricity) |
| rallonge électrique | extension cord/power strip |
| prise électrique | electrical outlet/socket |
| tournevi, tournevis | screwdriver |
| câble électrique | electric cable/electrical cord |
| cordon électrique | electric cord |
| ciseaux | scissors |

| Japanese Term | English Translation |
|---|---|
| 電源タップ (dengen tappu) | power strip |
| テーブルタップ (tēburu tappu) | power strip |
| 電気コード (denki kōdo) | electric cord |
| コード (kōdo) | cord |
| はさみ (hasami) | scissors |

| Polish Term | English Translation |
|---|---|
| przewód elektryczny | electric wire/electrical cord |
| kabel elektryczny | electric cable |
| przedłużacz elektryczny | extension cord/power strip |
| kable | cables |
| gniazdek | socket/electrical outlet |
| prąd | current (electricity) |
| śrubokręt, srubokret | screwdriver |
| nożyczki, nozyczka | scissors |

Appendix D. Dataset Datasheet

- Motivation

- Purpose: The dataset was created to evaluate the ability of Vision-Language Models (VLMs) and large language models (LLMs) to recognize, assess, and mitigate physical dangers to children in school and home environments. This dataset addresses the gap in real-world testing of AI in safety-critical environments, specifically focusing on children’s well-being.

- Creators: The dataset was created by a team of researchers (affiliation anonymized) focused on AI safety applications, in collaboration with caregivers, school staff, and parents from Japan and Poland.

- Funding: N/A.

- Additional Comments: The dataset aims to provide a foundation for studying discrepancies between AI and human judgment in assessing potential dangers in environments children frequently inhabit.

- Composition

- Instances: The dataset consists of 78 images taken in homes and schools, depicting objects that may pose a risk to children.

- Total Instances: 78 images (43 from Japan, 35 from Poland).

- Sampling: The dataset is a curated collection, not a random sample. Images were selected to cover a diverse range of potential hazards from culturally distinct regions.

- Data Features: Images depicting physical environments where children usually play, and objects with potential hazards (e.g., sharp tools, small toys, electrical cables) inside these environments. Annotations were collected from human participants and models.

- Labels: Each image includes annotations of dangerous objects (up to 3 per image), danger levels (on a 5-point scale), and suggested actions to mitigate risk.

- Relationships: Each image relates to both its annotations and the contextual information provided to annotators, including location and environment type (home or school).

- Splits: To ensure a fair comparison, 16 images were selected for the human–model comparison experiments: four images for each combination of environment (school, home) and country (Japan, Poland).

- Errors/Noise: There is some inherent subjectivity in the annotations, particularly in the assessment of danger levels and actions, due to variability in how participants perceived the risks.

- External Resources: The dataset is self-contained.

- Sensitive Data: Children’s faces were cropped or removed from the dataset to protect privacy. No other sensitive or offensive content is present.

- Collection Process

- Acquisition: Images were collected by parents, caregivers, and school staff using smartphones. Participants were given freedom in selecting potentially dangerous objects in the scenes.

- Mechanisms: Data was manually curated. No automated data collection tools were used.

- Sampling Strategy: The dataset was intentionally curated to include a variety of potential hazards, selected by caregivers.

- Participants: Images were taken by adults, including parents and school staff. No compensation was provided.

- Timeframe: Images were collected at the time of the study (March 2024).

- Ethical Review: Informal consent was obtained from caregivers, with the assurance that children’s faces would not be visible in the images.

- Data Source: Images were taken directly by individuals involved in the study.

- Consent: Informal consent was obtained, though no formal mechanism for revocation was provided.

- Impact Analysis: No formal impact analysis was mentioned, though privacy and ethical considerations were addressed through the removal of identifying features from the images.

- Preprocessing/Cleaning/Labeling

- Preprocessing: Images containing children were cropped to exclude identifiable faces. The annotations were standardized using clustering algorithms to ensure consistent labeling of objects.

- Raw Data: Raw images are preserved but cropped for privacy.

- Software: For the textual part of the data (hazardous object names), clustering and annotation standardization were conducted using tools such as spaCy and scikit-learn.

- Uses

- Tasks: The dataset is used for evaluating the performance of Vision-Language Models in detecting hazards and recommending actions in child-related environments.

- Future Tasks: The dataset could be expanded to assess cultural differences in risk perception and to test other vision-based AI systems in safety-critical scenarios. The broad variety of object and action descriptions will also be used to test new, and compare existing, dedicated similarity measures.

- Potential Risks: The subjective nature of danger assessment, the limited geographical scope, and the limited number of annotations could affect the generalization of the dataset to other populations or environments.

- Limitations: The dataset depicts only a very tiny fragment of reality (two homes and two schools in Japan and Poland, respectively). It is mainly meant for prototyping and testing.

- Distribution

- Third-Party Distribution: To avoid data contamination, the data will be shared with other researchers after receiving a pledge.

- Distribution Method: A password-protected ZIP file will be provided on the authors’ laboratory website, and the link will be shared with everyone who has agreed to the pledge.

- Licensing: No licensing or intellectual property terms are specified.

- Export Controls: No export controls or regulatory restrictions were noted.

- Maintenance

- Support: Authors will maintain the dataset.

- Errata: No errata exist.

- Updates: Authors will update the dataset.

- Retention Limits: No retention limits are specified.

- Versioning: Version control will be provided by the authors if the dataset is updated.

- Contribution Mechanism: To avoid contamination issues, other researchers cannot contribute to the images subset. However, descriptions of objects and actions in languages other than English are welcomed.

References

1. Shigemi, S. ASIMO and humanoid robot research at Honda. In Humanoid Robotics: A Reference; Springer: Berlin/Heidelberg, Germany, 2017; pp. 1–36.

2. Pandey, A.K.; Gelin, R. A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48.

3. Röttger, P.; Attanasio, G.; Friedrich, F.; Goldzycher, J.; Parrish, A.; Bhardwaj, R.; Di Bonaventura, C.; Eng, R.; Geagea, G.E.K.; Goswami, S.; et al. MSTS: A Multimodal Safety Test Suite for Vision-Language Models. arXiv 2025, arXiv:2501.10057.

4. Qi, Z.; Fang, Y.; Zhang, M.; Sun, Z.; Wu, T.; Liu, Z.; Lin, D.; Wang, J.; Zhao, H. Gemini vs GPT-4V: A Preliminary Comparison and Combination of Vision-Language Models Through Qualitative Cases. arXiv 2023, arXiv:2312.15011.

5. Chiang, C.H.; Lee, H.y. Can large language models be an alternative to human evaluations? arXiv 2023, arXiv:2305.01937.

6. Evans, I. Safer children, healthier lives: Reducing the burden of serious accidents to children. Paediatr. Child Health 2022, 32, 302–306.

7. Lee, D.; Jang, J.; Jeong, J.; Yu, H. Are Vision-Language Models Safe in the Wild? A Meme-Based Benchmark Study. arXiv 2025, arXiv:2505.15389.

8. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the opportunities and risks of foundation models. arXiv 2021, arXiv:2108.07258.

9. Ping, L.; Gu, Y.; Feng, L. Measuring the Visual Hallucination in ChatGPT on Visually Deceptive Images. OSF Preprints, May 2024.

10. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

11. Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. In Proceedings of the 37th Conference on Neural Information Processing Systems (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023; pp. 34892–34916.

12. Zhang, M.; Li, X.; Du, Y.; Rao, Z.; Chen, S.; Wang, W.; Chen, X.; Huang, D.; Wang, S. A Multimodal Large Language Model for Forestry in pest and disease recognition. Research Square, 18 October 2023; rs-3444472 v1.

13. Zhou, L.; Palangi, H.; Zhang, L.; Hu, H.; Corso, J.; Gao, J. Unified vision-language pre-training for image captioning and VQA. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13041–13049.

14. Sharma, A.; Gupta, A.; Bilalpur, M. Argumentative Stance Prediction: An Exploratory Study on Multimodality and Few-Shot Learning. arXiv 2023, arXiv:2310.07093.

15. Pak, H.L.; Zhang, Y. A Smart Child Safety System for Enhanced Pool Supervision using Computer Vision and Mobile App Integration. In CS & IT Conference Proceedings, Dubai, United Arab Emirates, 28–29 December 2024; Volume 14.

16. Lee, Y.; Kim, K.; Park, K.; Jung, I.; Jang, S.; Lee, S.; Lee, Y.J.; Hwang, S.J. HoliSafe: Holistic Safety Benchmarking and Modeling with Safety Meta Token for Vision-Language Model. arXiv 2025, arXiv:2506.04704.

17. Na, Y.; Jeong, S.; Lee, Y. SIA: Enhancing Safety via Intent Awareness for Vision-Language Models. arXiv 2025, arXiv:2507.16856.

18. Mullen, J.F., Jr.; Goyal, P.; Piramuthu, R.; Johnston, M.; Manocha, D.; Ghanadan, R. “Don’t Forget to Put the Milk Back!” Dataset for Enabling Embodied Agents to Detect Anomalous Situations. IEEE Robot. Autom. Lett. 2024, 9, 9087–9094.

19. Rodriguez-Juan, J.; Ortiz-Perez, D.; Garcia-Rodriguez, J.; Tomás, D.; Nalepa, G.J. Integrating advanced vision-language models for context recognition in risks assessment. Neurocomputing 2025, 618, 129131.

20. Nitta, T.; Masui, F.; Ptaszynski, M.; Kimura, Y.; Rzepka, R.; Araki, K. Detecting Cyberbullying Entries on Informal School Websites Based on Category Relevance Maximization. In Proceedings of the Sixth International Joint Conference on Natural Language Processing, Nagoya, Japan, 14–18 October 2013; pp. 579–586.

21. Rani, D.D. Protecting Children from Online Grooming in India’s Increasingly Digital Post-Covid-19 Landscape: Leveraging Technological Solutions and AI-Powered Tools. Int. J. Innov. Res. Comput. Sci. Technol. 2024, 12, 38–44.

22. Verma, K.; Milosevic, T.; Cortis, K.; Davis, B. Benchmarking Language Models for Cyberbullying Identification and Classification from Social-media Texts. In Proceedings of the First Workshop on Language Technology and Resources for a Fair, Inclusive, and Safe Society within the 13th Language Resources and Evaluation Conference, Marseille, France, 25 June 2022; pp. 26–31.

23. Song, Z.; Ouyang, G.; Fang, M.; Na, H.; Shi, Z.; Chen, Z.; Fu, Y.; Zhang, Z.; Jiang, S.; Fang, M.; et al. Hazards in Daily Life? Enabling Robots to Proactively Detect and Resolve Anomalies. arXiv 2024, arXiv:2411.00781.

24. Singhal, M.; Gupta, L.; Hirani, K. A comprehensive analysis and review of artificial intelligence in anaesthesia. Cureus 2023, 15, e45038.

25. Zhou, K.; Liu, C.; Zhao, X.; Compalas, A.; Song, D.; Wang, X.E. Multimodal situational safety. arXiv 2024, arXiv:2410.06172.

26. Li, X.; Zhou, H.; Wang, R.; Zhou, T.; Cheng, M.; Hsieh, C.J. MOSSBench: Is your multimodal language model oversensitive to safe queries? arXiv 2024, arXiv:2406.17806.

27. Ying, Z.; Liu, A.; Liang, S.; Huang, L.; Guo, J.; Zhou, W.; Liu, X.; Tao, D. SafeBench: A Safety Evaluation Framework for Multimodal Large Language Models. arXiv 2024, arXiv:2410.18927.

28. Gupta, P.; Krishnan, A.; Nanda, N.; Eswar, A.; Agrawal, D.; Gohil, P.; Goel, P. ViDAS: Vision-based Danger Assessment and Scoring. In Proceedings of the Fifteenth Indian Conference on Computer Vision Graphics and Image Processing, Bengaluru, Karnataka, India, 13–15 December 2024; pp. 1–9.

29. Shneiderman, B. Bridging the gap between ethics and practice: Guidelines for reliable, safe, and trustworthy human-centered AI systems. ACM Trans. Interact. Intell. Syst. (TiiS) 2020, 10, 1–31.

30. Nguyen, T.; Wallingford, M.; Santy, S.; Ma, W.C.; Oh, S.; Schmidt, L.; Koh, P.W.W.; Krishna, R. Multilingual diversity improves vision-language representations. Adv. Neural Inf. Process. Syst. 2024, 37, 91430–91459.

31. Shorten, C.; Pierse, C.; Smith, T.B.; Cardenas, E.; Sharma, A.; Trengrove, J.; van Luijt, B. StructuredRAG: JSON response formatting with large language models. arXiv 2024, arXiv:2408.11061.

32. Feng, F.; Yang, Y.; Cer, D.; Arivazhagan, N.; Wang, W. Language-agnostic BERT sentence embedding. arXiv 2020, arXiv:2007.01852.

| Language Pair | Cluster Items | Cosine Similarity | Avg. Danger Level |
|---|---|---|---|
| En–Fr | ['socket', 'power strip', 'power outlet'] ['courant', 'rallonge électrique', 'prise électrique'] | 0.846 | 4.6–5.0 |
| | ['screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screw driver'] ['tournevi', 'tournevis', 'tournevis', 'tournevi'] | 0.650 | 4.38–4.25 |
| Pl–Fr | ['prąd', 'gniazdek'] ['courant', 'rallonge électrique', 'prise électrique'] | 0.788 | 5.0–5.0 |
| | ['przedłużacz', 'przedłużacz', 'przedłużacz', 'przedłużacz', 'przedlużacz'] ['rallonge', 'rallonge'] | 0.746 | 4.2–5.0 |
| Jp–Fr | ['cissor', 'screw driver', '電源タップ', 'はさみ', 'テーブルタップ'] ['courant', 'rallonge électrique', 'prise électrique'] | 0.623 | 4.66–5.0 |
| | ['cissor', 'screw driver', '電源タップ', 'はさみ', 'テーブルタップ'] ['ciseau', 'ciseau', 'ciseau', 'ciseau'] | 0.57 | 4.6–4.1 |
| Jp–En | ['cissor', 'screw driver', '電源タップ', 'はさみ', 'テーブルタップ'] ['socket', 'power strip', 'power outlet'] | 0.679 | 4.66–4.6 |
| | ['cissor', 'screw driver', '電源タップ', 'はさみ', 'テーブルタップ'] ['screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver'] | 0.668 | 4.6–4.38 |
| Jp–Pl | ['cissor', 'screw driver', '電源タップ', 'はさみ', 'テーブルタップ'] ['śrubokręt', 'srubokret', 'śrubokręt', 'śrubokręt'] | 0.695 | 4.66–4.5 |
| | ['cissor', 'screw driver', '電源タップ', 'はさみ', 'テーブルタップ'] ['prąd', 'gniazdek'] | 0.604 | 4.6–5.0 |
| En–Pl | ['screwdriver', 'screwdriver', 'screwdriver'] ['śrubokręt', 'srubokret', 'śrubokręt', 'śrubokręt'] | 0.878 | 4.5–4.38 |
| | ['socket', 'power strip', 'power outlet'] ['prąd', 'gniazdek'] | 0.818 | 4.66–5.0 |

| Language Pair | Min Sim | Max Sim | Sim Pairs Count (>0.8) |
|---|---|---|---|
| En–Fr | 0.20 | 0.95 | 10 |
| Pl–Fr | 0.285 | 1.0 | 9 |
| Jp–Fr | 0.21 | 0.84 | 8 |
| Jp–En | 0.13 | 0.91 | 21 |
| Jp–Pl | 0.20 | 0.88 | 3 |
| En–Pl | 0.26 | 0.95 | 19 |

| Language Pair | Cluster id | Cluster Item | Cos Similairty | Avg Danger lvl |

|---|---|---|---|---|

| Fr–En | Cluster 1 Cluster 5 | [’electrical cord’, ’electrical cord’, ’electrical cord’, ’electrical cord’, …] [’przewód elektryczny’, ’kabel elektryczny’, ’kabel elektryczny’, ’przedłużacz elektryczny’, ’kable’, …] | 0.9177 | 2.86–2.67 |

| | Cluster 3 / Cluster 1 | ['screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', …] / ['śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', …] | 0.8724 | 4.00–4.00 |
| Fr–Pl | Cluster 3 / Cluster 5 | ['câble électrique', 'câble électrique', 'cordon électrique', 'câble électrique', 'câble électrique', 'câble électrique', 'câble électrique', 'câble électrique'] / ['przewód elektryczny', 'przedłużacz elektryczny', 'kabel elektryczny', 'kabel elektryczny', 'przedłużacz elektryczny', 'kable'] | 0.9491 | 2.62–2.67 |
| | Cluster 2 / Cluster 5 | ['rallonge électrique', 'rallonge électrique', 'rallonge électrique', 'rallonge électrique', …] / ['przewód elektryczny', 'przedłużacz elektryczny', 'kabel elektryczny', 'przedłużacz elektryczny', 'kable', …] | 0.8600 | 3.75–2.67 |
| Fr–Jp | Cluster 5 / Cluster 3 | ['ciseaux', 'ciseaux', 'ciseaux', 'ciseaux', 'ciseaux', 'ciseaux', 'ciseaux', 'ciseaux', 'ciseaux'] / ['scissors', 'scissors'] | 0.9033 | 4.20–4.00 |
| | Cluster 3 / Cluster 9 | ['câble électrique', 'câble électrique', 'cordon électrique', 'câble électrique', 'câble électrique', …] / ['電気コード', '電気コード', '電気コード', 'コード', 'コード', '電気コード', '延長コード'] | 0.7406 | 2.62–2.29 |
| En–Pl | Cluster 1 / Cluster 5 | ['electrical cord', 'electrical cord', 'electrical cord', 'electrical cord', 'electrical cord', 'electrical cord', 'electrical cord'] / ['przewód elektryczny', 'przedłużacz elektryczny', 'kabel elektryczny', 'kabel elektryczny', 'przedłużacz elektryczny', 'kable'] | 0.9177 | 2.86–2.67 |
| | Cluster 3 / Cluster 1 | ['screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', 'screwdriver', …] / ['śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', …] | 0.8724 | 4.00–4.00 |
| En–Jp | Cluster 2 / Cluster 1 | ['power strip', 'power strip', 'power strip', 'power strip', 'power strip', 'power strip', 'power strip', 'power strip', …] / ['power strip', 'power strip'] | 1.0000 | 4.10–3.50 |
| | Cluster 4 / Cluster 3 | ['scissors', 'scissors', 'scissors', 'scissors', 'scissors', 'scissors', 'scissors', 'scissors', …] / ['scissors', 'scissors'] | 1.0000 | 4.20–4.00 |
| Pl–Jp | Cluster 1 / Cluster 2 | ['śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt', 'śrubokręt'] / ['screwdriver', 'screwdriver'] | 0.8724 | 4.00–4.00 |
| | Cluster 2 / Cluster 3 | ['nożyczki', 'nożyczki', 'nożyczki', 'nożyczki', 'nożyczki', 'nożyczki', 'nożyczki', 'nożyczki', …] / ['scissors', 'scissors'] | 0.7854 | 4.40–4.00 |
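
Each row of the table above pairs a cluster of hazard names from one language with a cluster from another, reports their cosine similarity, and (in the last column, as far as can be inferred without the header) gives the two clusters' average danger levels. This section does not show how the cluster-level similarity was computed. A minimal sketch, assuming each cluster is represented by the mean (centroid) of its members' sentence embeddings and that the five-point scale is coded 1 to 5, is given below; `centroid`, `cosine_similarity` and `mean_danger` are hypothetical helpers and the 2-d vectors are toy values, not the authors' code or data.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def centroid(vectors: Sequence[Vector]) -> list[float]:
    """Element-wise mean of a cluster's member embeddings (assumed cluster representation)."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def mean_danger(levels: Sequence[float]) -> float:
    """Average danger level of a cluster.

    Assumed numeric coding of the five-point scale: 1 = very low ... 5 = very high.
    """
    return sum(levels) / len(levels)


# Hypothetical 2-d embeddings standing in for real sentence-embedding vectors.
cluster_a = [[1.0, 0.0], [0.0, 1.0]]  # e.g. members of a French cluster
cluster_b = [[1.0, 0.0]]              # e.g. members of a Polish cluster

similarity = cosine_similarity(centroid(cluster_a), centroid(cluster_b))
danger_range = (mean_danger([4, 4, 5]), mean_danger([4, 4]))
print(f"{similarity:.4f}", danger_range)
```

Averaging embeddings before comparing keeps the cost linear in cluster size; comparing every member of one cluster with every member of the other and averaging those scores is an equally plausible alternative that can give different values.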

| Model | Annotators’ Cluster | Annotators’ Avg. Danger Level | Model’s Cluster | Cosine Similarity | Model’s Avg. Danger Level |
|---|---|---|---|---|---|
| gemini-2.0-flash | [nożyczki, nożyczki, nozyczka, nożyczki, nożyczki, …] (cluster id 2) | 4.5 | [nożyczki, nożyczki, nożyczki, nożyczki, nożyczki, …] (cluster id 2) | 0.997 | 4.0 |
| gemini-2.5-pro-preview-03-25 | [nożyczki, nożyczki, nozyczka, nożyczki, nożyczki, …] (cluster id 2) | 4.5 | [nożyczki, nożyczki, nożyczki, nożyczki, nożyczki, …] (cluster id 3) | 0.997 | 4.40 |
| gemini-1.5-pro | [nożyczki, nożyczki, nozyczka, nożyczki, nożyczki, …] (cluster id 2) | 4.5 | [nożyczki, nożyczki, nożyczki, nożyczki, nożyczki, …] (cluster id 2) | 0.997 | 4.0 |
| gpt-4.1-2025-04-14 | [nożyczki, nożyczki, nozyczka, nożyczki, nożyczki, …] (cluster id 2) | 4.5 | [nożyczki, nożyczki, nożyczki, nożyczki, nożyczki, …] (cluster id 2) | 0.997 | 5.0 |
| gpt-4o-2024-11-20 | [nożyczki, nożyczki, nozyczka, nożyczki, nożyczki, …] (cluster id 2) | 4.5 | [nożyczki, nożyczki, nożyczki, nożyczki, nożyczki, …] (cluster id 2) | 0.997 | 4.40 |
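
For the Polish 'nożyczki' (scissors) cluster, the table above sets the human annotators' average danger level (4.5) against each model's average. The short sketch below reproduces that comparison from the table's values, computing each model's signed gap from the annotators and the mean absolute gap; the variable names are illustrative only.

```python
# Average danger levels copied from the table (five-point scale).
ANNOTATOR_AVG = 4.5
MODEL_AVG = {
    "gemini-2.0-flash": 4.0,
    "gemini-2.5-pro-preview-03-25": 4.40,
    "gemini-1.5-pro": 4.0,
    "gpt-4.1-2025-04-14": 5.0,
    "gpt-4o-2024-11-20": 4.40,
}

# Signed gap: negative means the model rated the hazard as less dangerous than the annotators did.
gaps = {model: round(avg - ANNOTATOR_AVG, 2) for model, avg in MODEL_AVG.items()}
mean_abs_gap = sum(abs(g) for g in gaps.values()) / len(gaps)

for model, gap in gaps.items():
    print(f"{model:30s} {gap:+.2f}")
print(f"mean absolute gap: {mean_abs_gap:.2f}")
```

Only gpt-4.1-2025-04-14 rates this cluster above the annotators; the other four models rate it lower, and the mean absolute gap across the five models is 0.34 points.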

| Lang | Min. Similarity | Max. Similarity | Mean Similarity | Pairs with Similarity > 0.8 |
|---|---|---|---|---|
| En | 0.13 | 0.99 | 0.48 | 23 |
| Fr | 0.16 | 0.96 | 0.42 | 6 |
| Jp | 0.04 | 1.0 | 0.42 | 25 |
| Pl | 0.15 | 1.0 | 0.46 | 11 |

| Lang | Min. Similarity | Max. Similarity | Mean Similarity | Similar-Pair Count |
|---|---|---|---|---|
| En | 0.13 | 0.99 | 0.48 | 30 |
| Fr | 0.18 | 1.0 | 0.47 | 11 |
| Jp | 0.07 | 1.0 | 0.42 | 40 |
| Pl | 0.16 | 1.0 | 0.49 | 19 |

| Lang | Min. Similarity | Max. Similarity | Mean Similarity | Similar-Pair Count |
|---|---|---|---|---|
| En | 0.12 | 0.99 | 0.50 | 23 |
| Fr | 0.22 | 1.0 | 0.45 | 8 |
| Jp | 0.11 | 1.0 | 0.42 | 22 |
| Pl | 0.15 | 1.0 | 0.47 | 12 |

| Lang | Min. Similarity | Max. Similarity | Mean Similarity | Similar-Pair Count |
|---|---|---|---|---|
| En | 0.08 | 0.99 | 0.54 | 32 |
| Fr | 0.23 | 0.99 | 0.51 | 12 |
| Jp | 0.06 | 1.0 | 0.42 | 30 |
| Pl | 0.15 | 1.0 | 0.52 | 25 |
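
The four similarity tables above summarise, per language, the distribution of pairwise cosine similarities (minimum, maximum, mean) and the number of highly similar pairs. This section states neither which items were paired nor which threshold applies to the last three tables. The sketch below assumes that every unordered pair of distinct embeddings within one language is compared once, and uses the 0.8 threshold given in the first table's header as a default; `similarity_summary` is a hypothetical helper and the vectors are toy values.

```python
import itertools
import math
from typing import Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def similarity_summary(embeddings: Sequence[Vector], threshold: float = 0.8) -> dict:
    """Min, max and mean cosine similarity over all unordered pairs, plus the count above threshold."""
    sims = [cosine_similarity(a, b) for a, b in itertools.combinations(embeddings, 2)]
    return {
        "min": min(sims),
        "max": max(sims),
        "mean": sum(sims) / len(sims),
        "pairs_above_threshold": sum(1 for s in sims if s > threshold),
    }


# Toy embeddings: the first two are identical, the third is orthogonal to both.
toy = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
summary = similarity_summary(toy)
print(summary)
```

`itertools.combinations` yields each unordered pair exactly once and never pairs an item with itself, so self-similarities of 1.0 do not inflate the maximum, the mean, or the count.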

| Action Category | English | French | Polish | Japanese | Average |
|---|---|---|---|---|---|
| Remove object | 53.6% | 54.9% | 64.4% | 46.1% | 53.6% |
| Block access | 14.1% | 16.5% | 14.7% | 17.0% | 15.3% |
| Warn or supervise | 13.7% | 9.9% | 9.2% | 8.3% | 11.4% |
| Move object elsewhere | 11.0% | 7.7% | 4.9% | 17.8% | 10.6% |
| Keep object | 4.6% | 9.9% | 4.9% | 6.2% | 5.8% |
| Add protection | 3.0% | 1.1% | 1.8% | 4.1% | 2.9% |
| Other | 0.0% | 0.0% | 0.0% | 0.4% | 0.01% |

| Action Category | English | French | Polish | Japanese | Average |
|---|---|---|---|---|---|
| Remove object | 56.1% | 54.0% | 55.0% | 45.8% | 52.7% |
| Block access | 10.4% | 11.8% | 10.6% | 7.6% | 10.1% |
| Warn or supervise | 14.9% | 18.7% | 15.7% | 17.6% | 16.7% |
| Move object elsewhere | 8.0% | 4.6% | 6.5% | 11.0% | 7.5% |
| Keep object | 4.4% | 2.9% | 4.8% | 3.0% | 3.7% |
| Add protection | 2.4% | 2.0% | 1.1% | 4.0% | 2.3% |
| Other | 3.8% | 6.0% | 6.3% | 11.0% | 6.7% |
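
The two action tables above give, for each language, the share of suggested safety actions falling into each category. In the second table the Average column closely matches the plain mean of the four language columns (for 'Remove object', (56.1 + 54.0 + 55.0 + 45.8) / 4 ≈ 52.7%), whereas in the first table it does not ((53.6 + 54.9 + 64.4 + 46.1) / 4 = 54.75%, against the reported 53.6%), so the two Average columns may have been computed differently, for example one pooled over all responses. The sketch below contrasts the two options; the category keys and labelled actions are hypothetical, and the classification of free-text actions into categories is not shown in this section.

```python
from collections import Counter
from typing import Mapping, Sequence


def category_shares(labels: Sequence[str]) -> dict[str, float]:
    """Percentage of actions per category within one language."""
    counts = Counter(labels)
    total = len(labels)
    return {cat: 100 * n / total for cat, n in counts.items()}


def unweighted_average(by_lang: Mapping[str, Sequence[str]], category: str) -> float:
    """Plain mean of the per-language percentages (each language weighted equally)."""
    shares = [category_shares(labels).get(category, 0.0) for labels in by_lang.values()]
    return sum(shares) / len(shares)


def pooled_average(by_lang: Mapping[str, Sequence[str]], category: str) -> float:
    """Percentage over all actions from all languages (languages with more actions weigh more)."""
    total = sum(len(labels) for labels in by_lang.values())
    hits = sum(list(labels).count(category) for labels in by_lang.values())
    return 100 * hits / total


# Hypothetical labelled actions for two languages of different size.
actions = {
    "English": ["remove", "remove", "remove", "block"],
    "Japanese": ["remove", "block"],
}
unweighted = unweighted_average(actions, "remove")
pooled = pooled_average(actions, "remove")
print(unweighted, pooled)
```

When languages contribute different numbers of actions the two averages diverge, as in this toy case (62.5% unweighted versus about 66.7% pooled); when every language contributes the same number of actions they coincide.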

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Anemeta, D.D.; Rzepka, R. Cross-Cultural Safety Judgments in Child Environments: A Semantic Comparison of Vision-Language Models and Humans. Algorithms 2025, 18, 507. https://doi.org/10.3390/a18080507

Anemeta DD, Rzepka R. Cross-Cultural Safety Judgments in Child Environments: A Semantic Comparison of Vision-Language Models and Humans. Algorithms. 2025; 18(8):507. https://doi.org/10.3390/a18080507

Chicago/Turabian Style: Anemeta, Don Divin, and Rafal Rzepka. 2025. "Cross-Cultural Safety Judgments in Child Environments: A Semantic Comparison of Vision-Language Models and Humans" Algorithms 18, no. 8: 507. https://doi.org/10.3390/a18080507

APA Style: Anemeta, D. D., & Rzepka, R. (2025). Cross-Cultural Safety Judgments in Child Environments: A Semantic Comparison of Vision-Language Models and Humans. Algorithms, 18(8), 507. https://doi.org/10.3390/a18080507