Abstract

The quantification of emotional value and the accurate prediction of purchase intention have emerged as a critical interdisciplinary challenge in the evolving emotional economy. Focusing on Generation Z (born 1995–2009), this study proposes a hybrid algorithmic framework integrating text-based sentiment computation, feature selection, and random forest modeling to forecast purchase intention for therapeutic toys and interpret its underlying drivers. First, 856 customer reviews were scraped from Jellycat’s official website and subjected to polarity classification using a fine-tuned RoBERTa-wwm-ext model (F1 = 0.92), with the generated sentiment scores and high-frequency keywords mapped as interpretable features. Next, Boruta–SHAP feature selection was applied to 35 structured variables from 336 survey records, retaining 17 significant predictors. The core module employed a random forest (RF) model to estimate continuous “purchase intention” scores, achieving R² = 0.83 and MSE = 0.14 under 10-fold cross-validation. To enhance interpretability, the RF model was also used to evaluate feature importance, quantifying each feature’s contribution to the model outputs and revealing Social Ostracism (β = 0.307) and Task Overload (β = 0.207) as dominant predictors. Finally, k-means clustering with gap statistics segmented consumers based on emotional relevance, value rationality, and interest level, with model performance compared across clusters. Experimental results demonstrate that the integrated predictive model balances forecasting accuracy and decision interpretability in emotional value computation, offering actionable insights for targeted product development and precision marketing in the therapeutic goods sector.

1. Introduction

The fast-paced and high-pressure lifestyle of contemporary society has led to an unprecedented surge in consumers’ demand for emotional value, particularly among younger demographics [1]. Unlike traditional consumption patterns focused solely on functionality and price, “emotional consumption” has emerged as a defining characteristic of Generation Z (born 1995–2010)—a digitally native cohort raised in relative material abundance, prioritizing subjective well-being and emotional resonance through consumption [2]. For instance, Generation Z actively purchases therapeutic products such as stress-relief toys and mystery blind boxes to alleviate anxiety and seek pleasure, reflecting the rise of the “emotional economy” that emphasizes products/services addressing consumers’ emotional needs.

The evolving socio-psychological landscape has positioned the emotional economy as a critical focus in academia and industry. Defined as value creation through fulfilling consumers’ emotional demands, this economic paradigm spans therapeutic foods, cultural products, and stress-relief toys [3]. Scholars characterize Generation Z’s emotional consumption as a coping mechanism against involution pressure and virtual social environments, where consumption serves as a pathway to emotional relief and psychological belonging [4]. Empirical studies confirm significant correlations between emotional consumption behaviors and psychological factors such as anxiety levels and loneliness. Market analyses further reveal distinct traits in Generation Z’s consumption patterns: virtual engagement, subjective prioritization, and social-driven purchasing [5,6,7]. Their preference for “self-indulgent” consumption, driven by immediate emotional gratification, has propelled therapeutic toys (e.g., squishy toys, Jellycat plushies) to prominence in the emotional economy [8]. However, the inherent subjectivity and impulsiveness of emotion-driven consumption complicate demand prediction.

Therapeutic toys—products offering psychological comfort—epitomize this trend. As primary consumers, Generation Z prioritize personalization, emotional resonance, and social sharing, with purchasing behaviors heavily influenced by emotional triggers. Social media amplification through KOL marketing (e.g., short videos, livestream sales) underscores the interplay between product design, psychological needs, and digital subcultures.

Drawing on Affective Events Theory (AET), therapeutic toy consumption is framed as an affective response to workplace/academic stressors (e.g., task overload, social ostracism) [9]. Further, Self-Determination Theory (SDT) explains how emotional consumption fulfills Gen Z’s psychological needs for autonomy (self-reward), competence (stress mastery), and relatedness (social belonging) [10,11]. While prior sociological studies have explored the dynamics of ‘healing culture’ [12,13], the emotional drivers underlying consumption behaviors remain largely unquantified in the literature. Our work addresses this underexamined dimension of the emotional economy by introducing a novel methodological framework. This approach integrates text sentiment analysis (RoBERTa), explainable feature selection (Boruta-SHAP), and ensemble learning (random forest) to decode emotion-driven consumption patterns.

In this work, we combine text-based sentiment computation, feature selection, and random forest modeling into an integrated approach that predicts consumer demand for therapeutic toys and parses the influencing factors. Our research questions are (1) how to extract features reflecting users’ emotions and preferences from questionnaires and text feedback; (2) which factors most influence Gen Z’s willingness to purchase therapeutic toys; and (3) whether users can be segmented accordingly to portray different types of consumer groups.

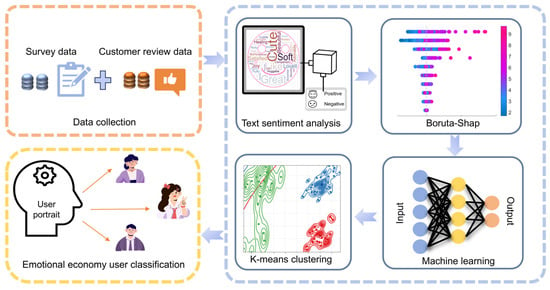

Effectively modeling Generation Z’s emotional consumption necessitates interdisciplinary innovation. Classical consumer behavior models, reliant on rational assumptions and structured data, inadequately capture emotional dynamics. Conversely, advances in social media analytics and text-based sentiment computation enable quantitative assessment of emotional tendencies, offering new avenues for predictive modeling. To this end, we designed the following research framework. As shown in Figure 1, we first perform text sentiment analysis on user-generated text data to quantify emotional features; we then perform feature construction and selection to extract key variables; next, we use the random forest model to predict consumption intention (a continuous variable) and explain the strength of the association between each feature and consumption intention through random forest feature importance analysis; finally, we use k-means clustering to build subgroup portraits of the users.

Figure 1. The quantitative prediction and analysis process for Generation Z’s therapeutic toy consumption behavior comprises six steps: data collection, text sentiment analysis, Boruta–SHAP, machine learning, k-means clustering, and emotional economy user classification.

The main contributions of this paper are as follows: (1) deep sentiment analysis methods (RoBERTa-wwm-ext, etc.) are introduced into consumer behavior research to quantify the influence of users’ emotional factors on consumption decisions; (2) a hybrid model framework integrating machine learning prediction and importance assessment is constructed to achieve effective prediction and interpretable analysis of consumption intention for therapeutic toys; (3) cluster analysis reveals a segmented portrait of Generation Z consumers, which provides a basis for enterprises to formulate differentiated marketing strategies. The rest of the article is structured as follows: Section 2 describes the methodology adopted in the study; Section 3 describes the experimental data, process, and results; Section 4 discusses the results, including model performance comparison, consistency of influencing factors, and user characterization; and Section 5 summarizes the article and outlines future research directions.

2. Materials and Methods

In order to achieve quantitative prediction and analysis of Generation Z’s therapeutic toy consumption behavior, this paper proposes a multi-stage research framework as previously described (see Figure 1). In this section, the methodology and technical solutions employed are described in detail according to each part of the process.

2.1. Text Sentiment Analysis

Developments in the field of affective computing have provided technical means to analyze consumer emotions [14]. Among them, text sentiment analysis is one of the most widely used methods [15]. Based on natural language processing, emotional tendencies (positive/negative, etc.) and intensities can be automatically identified from consumer comments and social media posts. Commonly used methods for Chinese sentiment analysis fall into two categories: sentiment dictionary-based computation and machine learning-based classification [16]. Lexicon methods use pre-constructed sentiment dictionaries to calculate sentiment scores from the frequency of positive and negative sentiment words in the text, while machine learning methods train classification models (e.g., SVM, deep learning) to predict text sentiment polarity directly. In recent years, pre-trained language models (e.g., BERT and its Chinese variants) have achieved significant improvements in sentiment analysis tasks [17]. For example, the Chinese RoBERTa-wwm-ext, pre-trained with whole-word masking to better model context, can capture subtle sentiment features of review texts, thus improving classification accuracy. Sentiment analysis has been applied to consumer behavior research [18], for example to analyze e-commerce reviews to assess user satisfaction or to predict product sales in conjunction with public opinion. In this study, we combine RoBERTa-wwm-ext with a sentiment lexicon to analyze the sentiment polarity and calculate the sentiment intensity of social media evaluative texts, and incorporate the results into the consumption prediction model as one of the influencing factors.

First, we fine-tune the pre-trained language model RoBERTa-wwm-ext for sentiment classification. RoBERTa-wwm-ext is a RoBERTa model trained with whole-word masking (WWM) on a Chinese corpus and has performed well on several Chinese NLP tasks [19]. We use it to obtain contextual semantic representations of the text and add a fully connected layer that outputs the sentiment polarity (positive, negative, or neutral). Compared with the traditional TF-IDF plus machine learning approach, the pre-trained model better captures implicit emotional meaning, e.g., the sentiment of slang and Internet buzzwords, which is especially important for the informal expressions commonly used by Generation Z. A total of 856 customer reviews were scraped from Jellycat’s official website; the texts were lower-cased, tokenized with Jieba, and padded or truncated to 128 tokens. The model’s final hidden state $h$ is passed through a fully connected layer (weights $W$, bias $b$) followed by a softmax, which outputs the class-probability vector:

$$ \mathbf{p} = (P_{neg}, P_{neu}, P_{pos}) = \mathrm{softmax}(W h + b) $$

where $P_{neg}$, $P_{neu}$, and $P_{pos}$ denote the model’s belief that the text is negative, neutral, or positive, respectively, and by construction $P_{neg} + P_{neu} + P_{pos} = 1$. Fine-tuning uses a learning rate of $2 \times 10^{-5}$, a batch size of 32, and five epochs to limit over-fitting.
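As a minimal illustration of the class-probability output described above, the following sketch applies a softmax to three raw scores. The logit values and the class ordering (negative, neutral, positive) are assumptions chosen for the example; they are not outputs of the fine-tuned model.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits from the fully connected layer,
# ordered (negative, neutral, positive).
logits = [-1.2, 0.3, 2.5]
labels = ["negative", "neutral", "positive"]

probs = softmax(logits)
p_neg, p_neu, p_pos = probs
predicted = labels[probs.index(max(probs))]
print(predicted, round(p_pos, 3))
```

The predicted label is simply the class with the largest probability, and `p_pos` is the quantity later used as a sentiment-intensity indicator.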

Owing to the particularities of Chinese text, we combine the deep learning approach based on pre-trained models with a traditional dictionary-based approach to improve accuracy and robustness [20]. If a text $T$ contains $c_i^{+}$ occurrences of the $i$-th positive token and $c_j^{-}$ occurrences of the $j$-th negative token, its raw lexical polarity is as follows:

$$ S_{lex}(T) = \sum_{i} w_i^{+} c_i^{+} - \sum_{j} w_j^{-} c_j^{-} $$

where the superscripts “+” and “−” indicate positive and negative polarity, respectively, and $w_t$ (written $w_i^{+}$ or $w_j^{-}$ according to polarity) is the lexicon weight of token $t$. Degree adverbs, such as very or slightly, multiply $w_t$ by an intensity factor, while an in-sentence negator (e.g., not) flips its sign. The scalar $S_{lex}$ is mapped to [0, 1] through the logistic squashing function:

$$ \sigma(S_{lex}) = \frac{1}{1 + e^{-S_{lex}}} $$

where $1 - \sigma(S_{lex})$ and $\sigma(S_{lex})$ serve as pseudo-probabilities of negative and positive sentiment, and the neutral slot is left empty because a dictionary alone cannot establish neutrality.
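The lexicon scoring just described (signed token weights, degree adverbs, negators, and logistic squashing) can be sketched as follows. The word lists, weights, and intensity factors are illustrative assumptions, not entries from HowNet or the custom dictionary, and the rule that a modifier applies to the next sentiment word is a simplification made for this example.

```python
import math

# Toy lexicons: hypothetical words and weights for illustration only.
POSITIVE = {"like": 1.0, "happy": 1.0, "healing": 1.0}
NEGATIVE = {"disappointed": 1.0, "boring": 1.0}
DEGREE = {"very": 1.5, "slightly": 0.5}
NEGATORS = {"not"}

def lexical_polarity(tokens):
    """Sum signed token weights; degree adverbs scale and negators flip
    the next sentiment word that follows them."""
    score = 0.0
    factor, flip = 1.0, 1.0
    for tok in tokens:
        if tok in DEGREE:
            factor *= DEGREE[tok]
        elif tok in NEGATORS:
            flip *= -1.0
        elif tok in POSITIVE or tok in NEGATIVE:
            weight = POSITIVE.get(tok, 0.0) - NEGATIVE.get(tok, 0.0)
            score += flip * factor * weight
            factor, flip = 1.0, 1.0  # modifiers apply to one sentiment word only
    return score

def sigmoid(x):
    """Logistic squashing of the raw score into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

s_lex = lexical_polarity("i very like it".split())
p_pos = sigmoid(s_lex)   # pseudo-probability of positive sentiment
p_neg = 1.0 - p_pos      # pseudo-probability of negative sentiment
print(s_lex, round(p_pos, 3), round(p_neg, 3))
```

Tokens outside the lexicons are ignored, which mirrors the limitation that a dictionary cannot score sentiment that is only implied.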

Specifically, we use the HowNet sentiment dictionary together with a customized lexicon of popular Internet words to calculate sentiment scores after word segmentation: the frequencies of positive and negative sentiment words are counted and combined with the weight correction of degree adverbs (e.g., “very”, “a little”) and the effect of negation words to obtain the sentiment score of each text. This score reflects the strength and direction of the text’s sentiment. For example, when a user comment contains words such as “like”, “healing”, or “happy” modified by an adverb such as “especially”, the sentiment is judged to be strongly positive; if negative words such as “disappointment” or “boredom” appear, the text is judged to be negative. The lexicon approach is relatively intuitive and explains the contribution of each word to the sentiment value, but it may miss sentences whose sentiment is only implied.

To integrate the advantages of the two approaches, we compare and combine the predictions of the RoBERTa model with the lexicon sentiment scores. If the two results agree (e.g., both are judged positive), the confidence level is increased; if they disagree, we manually inspect the text to find the reason, such as ironic statements misjudged by the lexicon method, and then adjust the model or the lexicon accordingly. Ultimately, each text record is assigned a sentiment polarity label (positive/neutral/negative) and a sentiment strength score (the normalized positive probability output by RoBERTa or the normalized lexicon sentiment value). These sentiment metrics serve as feature inputs for subsequent modeling.
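One possible way to express this agreement check in code is shown below. It is a sketch under stated assumptions: the function name `fuse`, the 0.5 cut-off for the lexicon label, and the choice not to flag neutral predictions (since the lexicon cannot express neutrality) are all hypothetical and not taken from the study.

```python
LABELS = ("negative", "neutral", "positive")

def fuse(roberta_probs, lex_pos_prob):
    """Return (label, intensity, needs_review) for one text.

    roberta_probs: (P_neg, P_neu, P_pos) from the classifier.
    lex_pos_prob:  logistic-squashed lexicon score in [0, 1].
    """
    # Final label is the classifier's most probable class.
    label = LABELS[max(range(3), key=lambda k: roberta_probs[k])]
    # Assumed cut-off: lexicon says "positive" at 0.5 or above.
    lex_label = "positive" if lex_pos_prob >= 0.5 else "negative"
    # Only polar labels are compared; disagreement triggers manual inspection.
    needs_review = label != "neutral" and label != lex_label
    intensity = roberta_probs[2]  # positive probability as the strength score
    return label, intensity, needs_review

print(fuse((0.05, 0.05, 0.90), 0.80))  # both methods agree
print(fuse((0.10, 0.10, 0.80), 0.20))  # disagreement, flagged for review
```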

2.2. Feature Construction and Selection

After obtaining the sentiment indicators, we integrate other information from the questionnaire to construct a complete feature set. Feature construction consists of two parts: one is the direct use or processing of questionnaire items to form features based on theoretical assumptions, and the other is the extraction of data-derived features.

First, following the questionnaire design, we obtained each respondent’s characteristics by combining or converting multiple items into scores. The questionnaire covers demographic characteristics such as gender, age, and income level; consumer behavior characteristics such as purchases and knowledge of therapeutic toys; and psychological attitude scales, namely the stress, mood, and product advantage measurement scales.

Each multi-item attitude scale is condensed into a single latent score by simple averaging:

$$ \bar{x}_k = \frac{1}{m_k} \sum_{i=1}^{m_k} x_{ik} $$

where $x_{ik}$ is a respondent’s response to the $i$-th item of scale $k$ and $m_k$ is the number of items in that scale. Internal consistency is checked with Cronbach’s α:

$$ \alpha_k = \frac{m_k}{m_k - 1} \left( 1 - \frac{\sum_{i=1}^{m_k} \mathrm{Var}(x_{ik})}{\mathrm{Var}\left( \sum_{i=1}^{m_k} x_{ik} \right)} \right) $$

where the variances are taken across respondents. This check helps ensure that each aggregated score behaves unidimensionally. Demographic fields (gender, age, income) and observed behavioral markers (prior purchases, product knowledge) are included unchanged.

The attitude scales passed reliability and validity tests (see the next section for details), and the mean score of each scale is used as the measure of the corresponding latent variable [21].
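The scale averaging and the Cronbach’s α check can be illustrated with a small, self-contained sketch. The response matrix is made-up toy data (five respondents, one three-item scale), and the function names are hypothetical; the α formula is the standard one based on item variances and the variance of the summed score.

```python
from statistics import mean, variance

def scale_score(items):
    """Mean of one respondent's item responses on a single scale."""
    return mean(items)

def cronbach_alpha(responses):
    """responses: list of respondents, each a list of m item scores."""
    m = len(responses[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([r[i] for r in responses]) for i in range(m)]
    # Sample variance of the summed scale score across respondents.
    total_var = variance([sum(r) for r in responses])
    return m / (m - 1) * (1 - sum(item_vars) / total_var)

# Toy Likert responses (1-5); not data from the survey.
responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 4, 5],
    [3, 3, 3],
    [1, 2, 1],
]

scores = [scale_score(r) for r in responses]
alpha = cronbach_alpha(responses)
print(scores, round(alpha, 3))
```

A scale would be retained when α exceeds the chosen reliability threshold (the study reports α > 0.8 for its scales).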

Second, we used the questionnaire information to construct derived variables. Drawing on each respondent’s ratings of individual toy attributes $r_{ia} \in [1, 5]$ (e.g., cute appearance, brand IP, social topics), we define a “healing attribute recognition index” that represents the respondent’s overall recognition of the therapeutic toy concept:

$$ H_i = \frac{1}{A} \sum_{a=1}^{A} r_{ia} $$

where $A$ is the number of rated attributes; larger values indicate broader endorsement of therapeutic toy concepts. In addition, the emotional intensity obtained from text sentiment analysis is added to the feature matrix, so that each respondent carries a sentiment-intensity score $s_i \in [0, 1]$ [22], a text-derived affective signal that complements the self-reported mood scales. Altogether, we obtain $p_0$ candidate features (several dozen). This number is large relative to the sample size $n$, and the features may be multicollinear, so screening is essential.

To avoid redundancy and overfitting, we use the Boruta–SHAP algorithm for feature selection. Boruta–SHAP first identifies potentially relevant features using Boruta [23], and then evaluates and refines their importance using SHAP values [24]. This framework goes beyond a traditional single feature importance measure by allowing an arbitrary tree model as the base model, providing more efficient, accurate, and interpretable feature selection results [25]. In market segmentation and consumer behavior research, Boruta–SHAP can effectively identify the key factors that drive consumer behavior and help optimize marketing strategies.

In the implementation, the target variable of the consumer behavior prediction serves as the supervisory signal, random forest is chosen as the base model, and all candidate features together with their “shadow features” are fed into the Boruta algorithm for iteration [26]. After several rounds, the algorithm rates each feature as “important”, “unimportant”, or “marginal”. Next, we calculate SHAP values for the potentially important features screened by Boruta to measure the magnitude and direction of their influence on the model output [27], further eliminating variables with minimal or unstable contributions. The final set of retained features contains traditional questionnaire variables, sentiment analysis variables, and derived variables. This process ensures that factors with a significant impact on the target are not omitted while keeping the feature dimension within reasonable limits [28]. After screening, 10 features are used for modeling in this study, about 30% of the initial candidate set. They include basic user attributes as well as psychological attitude and emotional tendency indicators, and thus cover the main influencing factors we hypothesized.
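The shadow-feature idea behind Boruta can be sketched as follows. This is a simplified illustration, not the Boruta–SHAP implementation used in the study: it uses scikit-learn’s impurity-based importances instead of SHAP values, counts raw “hits” instead of applying Boruta’s statistical test, and runs on synthetic data in which only the first feature carries signal.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

def boruta_round(X, y, rng):
    """One Boruta-style round: a real feature scores a 'hit' when its
    importance exceeds that of the best shadow feature."""
    # Shadow features: each column shuffled independently, which keeps its
    # distribution but breaks any relationship with the target.
    shadows = np.column_stack(
        [rng.permutation(X[:, j]) for j in range(X.shape[1])]
    )
    forest = RandomForestRegressor(
        n_estimators=100, random_state=int(rng.integers(1_000_000))
    )
    forest.fit(np.hstack([X, shadows]), y)
    importances = forest.feature_importances_
    real = importances[: X.shape[1]]
    shadow = importances[X.shape[1]:]
    return real > shadow.max()

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))                        # synthetic candidates
y = 3.0 * X[:, 0] + rng.normal(scale=0.1, size=200)  # only feature 0 matters

# Accumulate hits over several rounds; consistently hit features are kept.
hits = sum(boruta_round(X, y, rng).astype(int) for _ in range(10))
print(hits)
```

Features that beat the best shadow in nearly every round correspond to the “important” group, while those that rarely do would be rejected.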

2.3. Modeling and Feature Importance Analysis

Random forest (RF) is an ensemble learning algorithm based on decision trees that performs well in user behavior prediction owing to its robustness and nonlinear fitting ability. Compared with linear or logistic regression, random forest handles high-dimensional features and complex interactions and is more tolerant of the nonlinear relationships and noise common in consumption data [29]. In emotion-driven consumption contexts, where influencing factors are varied and their relationships nonlinear, RF models are well suited as prediction tools. Beyond predictive performance, RF provides a built-in feature importance assessment, which helps to identify important variables. However, RF importance tends to favor high-cardinality features (those offering many candidate split points), which may introduce bias. Therefore, we use RF prediction in conjunction with k-means clustering analysis for a more comprehensive assessment of feature impact.

After completing the feature engineering, we built a random forest model to predict the continuous dependent variable. In this paper, the dependent variable is consumers’ willingness to buy therapeutic toys, or the intensity of their demand, derived from the score of the questionnaire item “Buying therapeutic toys makes me feel happy” or similar items. This variable is continuously distributed and can be regarded as a scale of demand. The random forest regression model was chosen because, on the one hand, it can handle many input features and explore nonlinear relationships, and its built-in out-of-bag estimation helps to detect overfitting [30,31]; on the other hand, compared with neural networks, random forests offer a degree of interpretability and robustness, which makes them easy to combine with the subsequent correlation analysis [32].

We split the sample into training and test sets at a ratio of 8:2, applied k-fold cross-validation on the training set, and tuned the hyperparameters. The main hyperparameters tuned were the number of decision trees, the maximum depth, and the minimum number of samples per leaf node. Considering the limited sample size, grid search selected a relatively conservative number of trees (n = 100), and we limited the maximum depth to prevent individual trees from overfitting [33]. The average R² and mean squared error (MSE) from cross-validation were used to assess model performance during the training phase and to guide parameter selection. After the parameters were finalized, the model was retrained on the entire training set and its predictive performance (R² and MSE) was evaluated on the independent test set [34].
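The training procedure above maps naturally onto scikit-learn, as in the following sketch. The data are synthetic stand-ins with the same shape as the study’s modeling set (336 rows, 10 features), and the grid values and the choice of 10 folds are assumptions for illustration; the study’s actual grid is not reported here.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data: 336 respondents, 10 retained features.
rng = np.random.default_rng(42)
X = rng.normal(size=(336, 10))
y = 2.0 * X[:, 0] + X[:, 1] ** 2 + rng.normal(scale=0.3, size=336)

# 8:2 split into training and independent test sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Hypothetical grid over the three hyperparameters named in the text.
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [4, 8],
    "min_samples_leaf": [1, 5],
}
search = GridSearchCV(
    RandomForestRegressor(random_state=42),
    param_grid,
    cv=10,          # k-fold cross-validation on the training set only
    scoring="r2",
)
search.fit(X_train, y_train)

# GridSearchCV refits the best configuration on the whole training set.
best = search.best_estimator_
pred = best.predict(X_test)
test_r2 = r2_score(y_test, pred)
test_mse = mean_squared_error(y_test, pred)
print(search.best_params_, test_r2, test_mse)
```

Keeping the test set out of the grid search means the reported R² and MSE are not influenced by hyperparameter selection.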

In addition to the prediction performance, we also extracted the feature importances of the random forest. Based on the mean decrease in impurity (mean squared error for regression trees; Gini impurity in the classification case), we obtain a ranking of the contribution of each input feature to the model’s predictions. This provides a list of important factors and facilitates a more in-depth explanation of the influences captured by the model.
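A short sketch of extracting and ranking impurity-based importances from a fitted forest is given below. The feature names and the synthetic target are hypothetical and chosen only to make the ranking easy to read; they are not the study’s variables or results.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Hypothetical feature names and synthetic data for illustration.
names = ["task_overload", "social_ostracism", "income", "noise"]
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 4))
y = 1.0 * X[:, 0] + 2.0 * X[:, 1] + rng.normal(scale=0.2, size=300)

forest = RandomForestRegressor(n_estimators=100, random_state=0).fit(X, y)

# feature_importances_ holds the normalized mean decrease in impurity.
ranking = sorted(
    zip(names, forest.feature_importances_),
    key=lambda pair: pair[1],
    reverse=True,
)
for name, importance in ranking:
    print(f"{name:18s} {importance:.3f}")
```

Because these importances are normalized to sum to one, they describe relative contribution within the model rather than effect direction.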

2.4. K-Means Cluster Analysis

Finally, we apply k-means clustering to segment the surveyed Generation Z consumers and portray different types of user profiles, which helps to understand differences between groups and to implement precision marketing. K-means clustering is a classic unsupervised learning method widely used for consumer segmentation owing to its simplicity and efficiency [35]. Through iterative optimization, it maximizes the similarity of objects within each cluster and minimizes the similarity between clusters [36]. Given the standardized feature matrix $\{x_1, \ldots, x_n\}$ (where each $x_i$ stacks the ten retained predictors and the relevant demographic dummies), k-means partitions the sample into $K$ non-overlapping clusters $\{C_1, \ldots, C_K\}$ by minimizing the within-cluster sum of squared Euclidean distances:

$$ J = \sum_{k=1}^{K} \sum_{x_i \in C_k} \lVert x_i - \mu_k \rVert^2 $$

where $\mu_k$ denotes the centroid of cluster $C_k$. Iterative updates alternate between assigning each respondent to the nearest centroid and recalculating each centroid as the mean of its assigned respondents. Neither step increases $J$, so intra-cluster similarity improves until the assignments stabilize.
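The alternating assignment and centroid-update steps can be written compactly with NumPy. The sketch below is a plain Lloyd-style implementation on toy two-dimensional data; the deterministic farthest-point initialization is an assumption made to keep the example reproducible, since the initialization used in the study is not specified.

```python
import numpy as np

def init_farthest(X, k):
    """Deterministic initialization: start from the first point, then
    repeatedly add the point farthest from the chosen centroids."""
    centroids = [X[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(X - c, axis=1) for c in centroids], axis=0)
        centroids.append(X[d.argmax()])
    return np.array(centroids)

def assign(X, centroids):
    """Index of the nearest centroid for every row of X."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

def kmeans(X, k, n_iter=100):
    """Return (labels, centroids, within-cluster sum of squares)."""
    centroids = init_farthest(X, k)
    for _ in range(n_iter):
        labels = assign(X, centroids)
        updated = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(updated, centroids):
            break  # assignments have stabilized
        centroids = updated
    labels = assign(X, centroids)
    wcss = float(((X - centroids[labels]) ** 2).sum())
    return labels, centroids, wcss

# Two well-separated toy groups of 20 points each.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(0.0, 0.1, size=(20, 2)),
    rng.normal(5.0, 0.1, size=(20, 2)),
])
labels, centroids, wcss = kmeans(X, k=2)
print(labels, wcss)
```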

Based on questionnaire data, clustering groups individuals with similar consumer perceptions and behavioral characteristics according to their responses on multiple attributes. For example, users who emphasize emotional value and those who focus on cost-effectiveness can be assigned to different clusters, yielding clearly distinct consumption portraits.

In this study, we select the key variables identified by the model, as well as the demographic characteristics of the users, etc., and perform k-means clustering analysis, expecting to discover the segmentation of Generation Z therapeutic toy consumers. Through clustering, we can distill three types of user portraits and compare the differences between clusters in terms of emotional needs, purchase intention, etc.

The data used for clustering consisted of the key features screened as described earlier, as well as key demographic variables. Prior to clustering, we standardized continuous features (mean 0, variance 1) so that differences in magnitude would not affect distance calculations; categorical variables were converted to dummy variables. To determine the number of clusters K, we considered the elbow method, the silhouette coefficient, and other indicators. Several trials in the range K = 2–6 were conducted to find the K value that best balances intra-cluster tightness and inter-cluster separation.
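The standardization step and the scan over K = 2–6 can be sketched with scikit-learn as follows. The data are synthetic (three well-separated groups of continuous features), and selecting K by the highest silhouette coefficient is one simple rule assumed here for illustration; dummy coding of categorical variables is omitted.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler

# Synthetic continuous features forming three groups of 50 respondents.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
X = np.vstack([c + rng.normal(scale=0.5, size=(50, 2)) for c in centers])

# Standardize to mean 0 and variance 1 before computing distances.
X_std = StandardScaler().fit_transform(X)

results = {}
for k in range(2, 7):
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X_std)
    # inertia_ is the within-cluster sum of squares used by the elbow method.
    results[k] = (model.inertia_, silhouette_score(X_std, model.labels_))

best_k = max(results, key=lambda k: results[k][1])
for k, (inertia, sil) in results.items():
    print(k, round(inertia, 2), round(sil, 3))
print("selected K:", best_k)
```

Inertia always decreases as K grows, which is why it is read for an elbow rather than minimized, whereas the silhouette coefficient can be compared directly across K.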

3. Results

The data in this study mainly come from the questionnaire we designed and distributed based on the adaptation of existing research scales, and the public user review data on Jellycat’s official website. The following describes the data collection process, sample characteristics, and the results of the scale reliability test, respectively, followed by the specific experimental results of the model, including regression prediction effects, feature importance comparison, and user clustering analysis.

3.1. Data Collection and Sample Profile

We designed a questionnaire for Generation Z (18–30 years old) consumers in early 2025, focusing on the perception and preference of therapeutic toys. The questionnaire was distributed through an online platform and 336 valid samples were collected. The questionnaire content includes the following:

- Demographic information: gender, age, education, occupation, monthly disposable income, etc.;

- Consumer behavior and preference: including the purchase of therapeutic toys, and the knowledge of therapeutic toys;

- Stress, attitude, and product advantage: including stress measurement scale, emotion measurement scale, and product advantage measurement scale [37,38,39,40];

- Purchase intention: including purchase likelihood and product attitude [41,42,43].

For more information on the scales, see Appendix A.

We describe the overall sample in terms of the respondents’ gender, age, education, and income. Of the respondents, 69.9% are female and 30.1% are male, so females substantially outnumber males. The respondents are Generation Z youths, the majority of whom are 21–25 years old, reflecting this young group’s higher acceptance of and interest in therapeutic toys. Those with a bachelor’s degree or above (including master’s degrees) accounted for 77.6%. Since Generation Z youths are mostly still studying or have only recently entered the workforce, 65.5% of them have an income of less than 5000 RMB. To control for response bias, we performed the following:

- (a) Anonymized surveys to reduce social-desirability effects,

- (b) Included attention-check questions,

- (c) Validated scales for reliability (Cronbach’s α > 0.8).

For more information on the sample, see Appendix B.

3.2. Social Media Corpus Analysis and Model Performance Evaluation

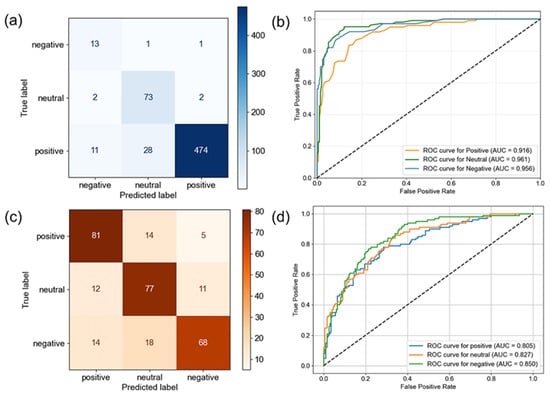

In addition to the questionnaire data, to improve the accuracy and contextual understanding of the sentiment analysis, we fine-tuned the RoBERTa-wwm-ext pre-trained language model and used it to predict the sentiment category of the collected texts. A total of 856 public user reviews from Jellycat’s official website were used as the input corpus, and a labeled sentiment dataset was constructed to fine-tune the model to the semantic style of consumer reviews of toy products. The model classifies the sentiment polarity (positive/neutral/negative) of each review and outputs a probability distribution over the classes (the positive probability after softmax is taken as the indicator of sentiment intensity). In addition, a lexicon-based method built on HowNet plus a custom Internet buzzword dictionary serves as an aid for assessing model consistency and analyzing differences in sentiment composition. To evaluate the RoBERTa-wwm-ext model, we split the labeled corpus into training and test sets at a ratio of 8:2.

To comprehensively evaluate the classification performance of the RoBERTa-wwm-ext model, we plotted its confusion matrix and ROC curve on the test set (Figure 2a,b). The model achieves 92.1% accuracy with an F1-score of 0.91 on the test set, with clear classification boundaries between sentiment classes and a low misclassification rate; its multi-class AUC reaches 0.936, indicating strong discriminative ability and robustness.

Figure 2. Analysis of model emotional accuracy: (a) RoBERTa-wwm-ext model confusion matrix; (b) RoBERTa-wwm-ext model ROC curve; (c) dictionary method confusion matrix; (d) dictionary method ROC curve.

In addition, we conducted a side-by-side comparison with the traditional dictionary method; the results are shown in Figure 2c,d. On the same test set, the dictionary method achieves only 78.3% accuracy in recognizing sentiment polarity, with an F1-score of 0.75 and an AUC of 0.828. The comparison shows that the RoBERTa-wwm-ext model significantly outperforms the traditional lexicon method in overall performance, in generalization to unstructured text, and in contextual comprehension of sentiment expression. Its advantage is most pronounced for neutral sentiment and for sarcastic or ironic texts, where it judges polarity more accurately.
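Accuracy and F1 figures of the kind reported above can be derived directly from a confusion matrix. The sketch below uses a made-up 3×3 matrix, not the study’s results, and computes a macro-averaged F1; whether the reported F1-scores are macro- or weighted averages is not stated, so the averaging scheme is an assumption.

```python
def metrics_from_confusion(cm):
    """cm[i][j] = number of texts with true class i predicted as class j.
    Returns (accuracy, macro-averaged F1)."""
    n_classes = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n_classes)) / total
    f1_scores = []
    for c in range(n_classes):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n_classes)) - tp  # predicted c, true other
        fn = sum(cm[c]) - tp                               # true c, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
    return accuracy, sum(f1_scores) / n_classes

# Toy matrix with rows/columns ordered (negative, neutral, positive).
toy_cm = [
    [8, 1, 1],
    [1, 8, 1],
    [0, 2, 8],
]
accuracy, macro_f1 = metrics_from_confusion(toy_cm)
print(accuracy, round(macro_f1, 3))
```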

After classifying all 856 user reviews with the fine-tuned RoBERTa model, we obtain the following overall sentiment polarity distribution: 88.7% positive, 7.5% neutral, and only 3.8% negative. This result shows that the majority of consumers have a clearly positive attitude towards therapeutic toy products.

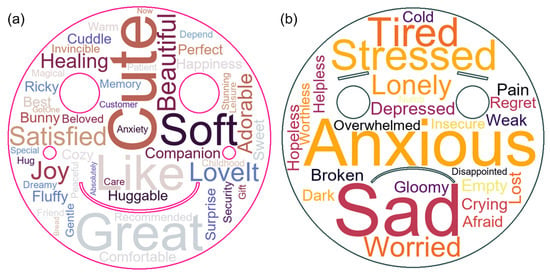

In order to further explore consumers’ emotional content and value perception characteristics, we perform text feature extraction and keyword analysis on positive and negative comments, respectively. Specifically, based on the attention weights output from the RoBERTa model as a benchmark for word weights, we combine word frequency statistics with the TF-IDF algorithm to weight and filter keywords, identify highly emotionally contributing words, and construct a word cloud map for visualization and analysis.
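The TF-IDF part of this keyword weighting can be illustrated with a small pure-Python sketch. It deliberately omits the attention-weight component, which would require the fine-tuned model, and the tokenized toy reviews and the `+ 1.0` smoothing of the inverse document frequency are assumptions made for the example.

```python
import math
from collections import Counter

def tfidf_keywords(docs, top_n=3):
    """Rank tokens by their summed TF-IDF weight over all documents."""
    n_docs = len(docs)
    # Document frequency: in how many documents each token appears.
    doc_freq = Counter(tok for doc in docs for tok in set(doc))
    scores = Counter()
    for doc in docs:
        counts = Counter(doc)
        for tok, count in counts.items():
            tf = count / len(doc)
            # The added 1.0 keeps a non-zero weight for tokens found in every document.
            idf = math.log(n_docs / doc_freq[tok]) + 1.0
            scores[tok] += tf * idf
    return [tok for tok, _ in scores.most_common(top_n)]

# Toy tokenized positive reviews; not taken from the scraped corpus.
docs = [
    ["cute", "soft", "toy"],
    ["cute", "great", "toy"],
    ["soft", "cute", "gift"],
]
print(tfidf_keywords(docs, top_n=3))
```

The resulting ranked tokens are the kind of input a word cloud would be drawn from, with font size proportional to the weight.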

As shown in Figure 3a, the high-frequency keywords in the word cloud of positive comments include “Cute”, “Soft”, “Great”, and “Like”. They concentrate on the product’s attractive appearance, tactile experience, and emotional comfort, showing a strong emotional response from consumers in terms of psychological comfort and sense of companionship. In contrast, as shown in Figure 3b, although negative comments are relatively few, high-frequency keywords such as “Sad”, “Anxious”, and “Stressed” indicate that the experience of some consumers fell short of their emotional expectations, or that the usage scenario did not match their actual experience. Such comments often concern the gap between product expectations and real feelings and are valuable for product improvement.

Figure 3.

Emotional word cloud chart: (a) positive sentiment; (b) negative sentiment.

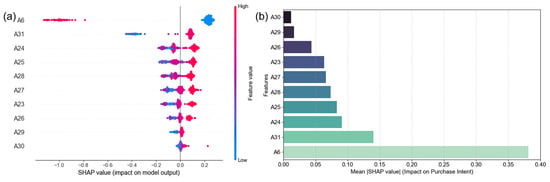

3.3. Boruta–SHAP Feature Selection

After obtaining the sentiment indicators, we integrated the questionnaire information on consumer attitudes, cognitions, and behaviors and constructed an original feature set of 31 candidate variables (A1–A31). These features come from two sources: first, questionnaire items derived from the theoretical hypotheses, such as basic user attributes, mental attitudes, and consumer perceptions; and second, structured variables from the sentiment analysis module, such as the distribution of emotional intensity and the emotional tendency of the comments. Because the number of features is large relative to the sample size and redundancy and multicollinearity may be present, we systematically screened the features to ensure the stability and interpretability of the model.

In this study, a combined Boruta–SHAP approach is used to automate the screening of candidate variables. Based on iterated random forests, Boruta compares the importance of each original feature with that of randomly perturbed “shadow features” in predicting the target variable (here, willingness to buy). In the first seven iterations, all variables remain in the “pending” state and their importance is not confirmed. From the 8th round onward, variables are gradually confirmed, and by the 12th iteration, 10 variables are stably confirmed as “important” while the remaining 21 are explicitly excluded. SHAP values are then used to compare the contributions of the retained features. The scatterplot and bar chart from the SHAP analysis are shown in Figure 4a,b.

Figure 4.

Results of Boruta–SHAP analysis, screening the top 10 most important features: (a) scatterplot; (b) bar graph.

The 10 key variables selected are gender, age, education, income, work/study load, career/study prospects, work/study environment, and the packaging, marketing, and service of therapeutic toys. These variables span socio-demographic characteristics, perceptions of the consumer environment, and the therapeutic toy product experience, constituting a compact but informative multidimensional subset of predictors. The retained features account for only about 32% of the initial feature set, reflecting effective information condensation. At the same time, these variables are consistent with our theoretical expectations of the factors influencing consumer behavior and provide data support for subsequent predictive modeling.
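The shadow-feature comparison at the heart of Boruta can be illustrated with a short sketch. It is a simplified stand-in, not the study's pipeline: it uses impurity-based random forest importances instead of SHAP values, counts "hits" without the statistical test that full Boruta applies to confirm or reject features, and runs on synthetic data. The function name `shadow_feature_hits` is hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor


def shadow_feature_hits(X, y, n_rounds=10, seed=0):
    """Count, per feature, how often its importance beats the best shadow feature."""
    rng = np.random.default_rng(seed)
    n_features = X.shape[1]
    hits = np.zeros(n_features, dtype=int)
    for r in range(n_rounds):
        # Shadow features: each column permuted independently, which breaks its link to y.
        shadows = np.column_stack(
            [rng.permutation(X[:, j]) for j in range(n_features)]
        )
        model = RandomForestRegressor(n_estimators=50, random_state=seed + r)
        model.fit(np.hstack([X, shadows]), y)
        importances = model.feature_importances_
        # A real feature scores a hit when it outranks every shadow feature.
        hits += importances[:n_features] > importances[n_features:].max()
    return hits


# Synthetic data: only the first two of five features carry signal.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 5))
y_demo = 3 * X_demo[:, 0] + 2 * X_demo[:, 1] + rng.normal(scale=0.5, size=200)
hits = shadow_feature_hits(X_demo, y_demo)
print(hits)
```

Features that beat the shadows in nearly every round correspond to the "confirmed" state described above, while features that rarely do are candidates for rejection.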

3.4. Reliability and Validity Analysis

In the present study, the quality of the scales was assessed through systematic psychometric tests. For reliability, the internal consistency of the construct scales was examined using Cronbach’s α coefficient. The results in Table 1 indicate that the stress scale has excellent reliability (α = 0.921). The positive dimension (α = 0.905) and negative dimension (α = 0.806) of the emotion scale reach excellent and good levels, respectively, and the willingness-to-buy scale likewise shows high reliability (α = 0.880). Notably, the composite reliability coefficient of the total scale is as high as 0.971, and all indicators exceed the recommended threshold of 0.8, indicating that the scale has stable measurement properties.

Table 1.

Cronbach’s reliability analysis.
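For reference, Cronbach’s α for a scale with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. The sketch below applies that standard formula to a small invented response matrix; the numbers are illustrative and are not the survey data.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) matrix of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1).sum()  # sum of per-item variances
    total_variance = items.sum(axis=1).var(ddof=1)    # variance of the scale total
    return k / (k - 1) * (1 - item_variances / total_variance)


# Invented 5-point Likert responses: five respondents, three items.
demo_scores = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 2, 3],
    [4, 4, 4],
]
alpha = cronbach_alpha(demo_scores)
print(round(alpha, 3))
```

Values close to 1 indicate that the items vary together, which is why coefficients above roughly 0.8 are commonly read as good internal consistency.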

For validity, the study used the Kaiser–Meyer–Olkin (KMO) measure and Bartlett’s test of sphericity to assess the structural validity of the scale. As shown in Table 2, the KMO value reached an excellent level of 0.918 (>0.9), and the approximate chi-square value of Bartlett’s test of sphericity was 2086.082 (df = 435, p < 0.001), which is statistically significant. These results indicate a significant correlation structure among the variables and confirm that the data are well suited for factor analysis, supporting the validity of the scale constructs at the methodological level.

Table 2.

KMO and Bartlett’s test.

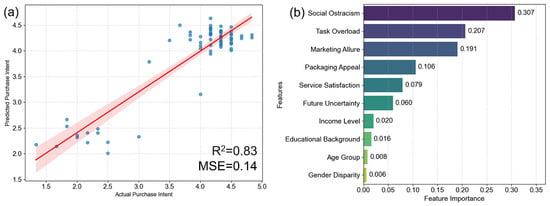
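Both statistics can be computed directly from the item correlation matrix, as the following sketch shows on synthetic one-factor data. The data, variable names, and sample dimensions are assumptions for illustration; the p-value is omitted because it would require a chi-square distribution function from an additional library. Note that the degrees of freedom follow p(p − 1)/2, which gives 435 for p = 30 variables.

```python
import numpy as np


def bartlett_sphericity(data):
    """Return the chi-square statistic and degrees of freedom of Bartlett's test."""
    data = np.asarray(data, dtype=float)
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)  # log-determinant of the correlation matrix
    chi2 = -(n - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return chi2, df


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(np.asarray(data, dtype=float), rowvar=False)
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(scale, scale)  # partial correlations given all other items
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = (corr[off_diag] ** 2).sum()
    a2 = (partial[off_diag] ** 2).sum()
    return r2 / (r2 + a2)


# Synthetic responses: 336 respondents, 30 items driven by one shared latent factor.
rng = np.random.default_rng(0)
latent = rng.normal(size=(336, 1))
responses = latent + rng.normal(size=(336, 30))
chi2, df = bartlett_sphericity(responses)
kmo_value = kmo(responses)
print(round(chi2, 1), df, round(kmo_value, 3))
```

A KMO value near 1 means the partial correlations are small relative to the raw correlations, which is the condition under which factor analysis is considered appropriate.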

3.5. Machine Learning Model Prediction

After feature selection and the reliability and validity tests, a random forest model was fitted to the 10 selected features to predict users’ purchase intention. The results are shown in Figure 5a. On the test set, the model’s goodness of fit (R2) is 0.83 and the mean squared error (MSE) is 0.14. The scatter plot shows a strong linear relationship between actual and predicted purchase intention, with most data points clustered near the red regression line, indicating that the model has good predictive ability.

Figure 5.

(a) Evaluation results of the RF model after feature selection; (b) SHAP feature importance analysis ranking.
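The evaluation procedure can be sketched with scikit-learn as below. This is an illustrative setup on synthetic data, assuming 10-fold cross-validation with out-of-fold predictions; the hyperparameters, random seeds, and data-generating formula are assumptions and do not reproduce the study's configuration or its reported scores.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import KFold, cross_val_predict

# Synthetic stand-in for 336 survey records with 10 selected features.
rng = np.random.default_rng(42)
X = rng.normal(size=(336, 10))
y = (
    3.0
    + 0.8 * X[:, 0]
    + 0.5 * X[:, 1]
    + 0.3 * X[:, 0] * X[:, 1]  # mild interaction, which a linear model would miss
    + rng.normal(scale=0.3, size=336)
)

model = RandomForestRegressor(n_estimators=200, random_state=42)
cv = KFold(n_splits=10, shuffle=True, random_state=42)

# Each record is predicted by a model that never saw it during training.
y_pred = cross_val_predict(model, X, y, cv=cv)
r2 = r2_score(y, y_pred)
mse = mean_squared_error(y, y_pred)
print(f"R2 = {r2:.2f}, MSE = {mse:.2f}")
```

Plotting `y` against `y_pred` would give the kind of actual-versus-predicted scatter plot shown in Figure 5a.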

Using the Gini importance index of the random forest, we obtain the feature influence ranking. The top five features in the RF model are, in order: (1) Social Ostracism, (2) Task Overload, (3) Marketing Allure, (4) Packaging Appeal, and (5) Service Satisfaction. These results align with our theoretical framework, which draws on Affective Events Theory (AET) and Self-Determination Theory (SDT), and support the primacy of emotional drivers in therapeutic consumption. Figure 5b visualizes the importance scores of these features; “Social Ostracism” is the most important, with an importance score of 0.307. This implies that the more a person in our sample rejects the emotional satisfaction of consumption, the more likely they are to resist purchasing therapeutic toys. Similarly, Task Overload affects willingness to buy, as overloaded respondents find it harder to develop favorable feelings towards the toys.

Social Ostracism and Task Overload represent critical affective events [9]. Their high predictive power (β = 0.307 and β = 0.207, respectively) confirms AET’s premise that workplace/academic stressors evoke negative affect (e.g., anxiety), triggering consumption as a coping mechanism. Specifically, Social Ostracism threatens belongingness, motivating compensatory purchases to restore emotional equilibrium; Task Overload induces perceived loss of control, driving consumers toward therapeutic toys as tools for psychological recovery.

The core features identified by the Boruta–SHAP algorithm are essentially the same as those highlighted by the random forest results. Among the features selected by Boruta, those that ranked low were gender, age (probably because the narrow age span of the sample weakens its influence), education, and income. These also ranked low in importance in the random forest, suggesting that such differences within the Generation Z group are not a major differentiating factor in therapeutic toy consumption. Overall, the model analysis highlights that emotional and psychological variables are the primary drivers, with economic and demographic variables playing a secondary role.
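A brief sketch of how such an importance ranking is obtained from a fitted forest is given below. The feature names `f0` to `f4` and the synthetic data are hypothetical. One property worth keeping in mind when reading the scores: impurity-based importances are non-negative and sum to one, so they express a feature's relative share of the model's splits and do not indicate the direction of its effect.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

feature_names = ["f0", "f1", "f2", "f3", "f4"]  # hypothetical placeholder names

# Synthetic data in which f0 matters most, f1 second, and the rest are noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(300, 5))
y = 1.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.2, size=300)

forest = RandomForestRegressor(n_estimators=200, random_state=1).fit(X, y)
importances = forest.feature_importances_  # impurity-based, normalized to sum to 1

ranking = sorted(zip(feature_names, importances), key=lambda pair: pair[1], reverse=True)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

To recover the sign or shape of an effect, the ranking would need to be paired with a directional tool such as SHAP dependence plots or partial dependence.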

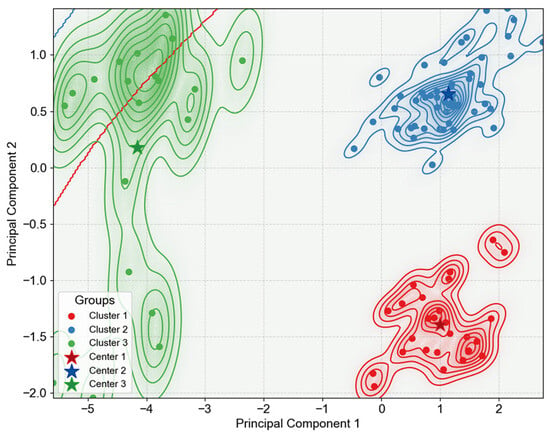

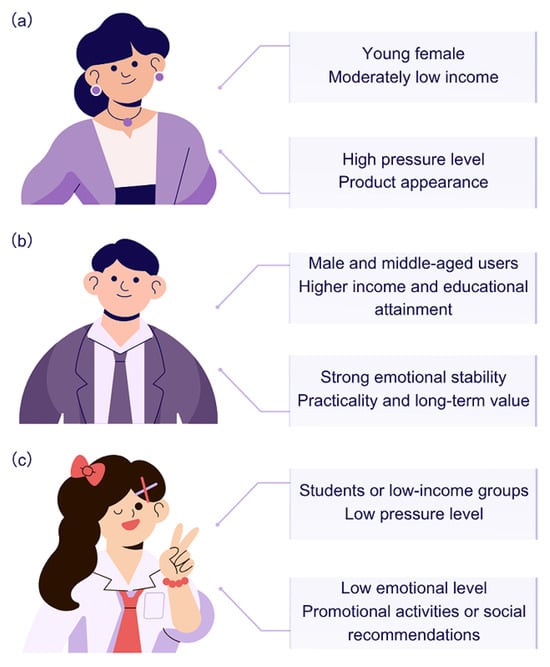

3.6. User Segmentation Profile

Building on the model in the previous section, k-means clustering was applied to the surveyed Generation Z consumers in order to portray different types of user profiles. Using the key variables identified by the model, we compared candidate solutions by drawing contour plots of the clustering results and determined the optimal number of clusters to be K = 3, as illustrated in Figure 6. The data points are clearly divided into three main clusters on the two-dimensional plane, shown in red, blue, and green. Each cluster represents a class of Generation Z consumers with similar characteristics. Based on the clustering results, therapeutic toy consumers can be divided into the three categories shown in Table 3.

Figure 6.

K-means clustering scatter density plot, including three types of clustering centers.

Table 3.

Consumer cluster characteristics of therapeutic toys.
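The choice of K can be illustrated with a short k-means sketch. The selection criterion here, the mean silhouette score, is an assumption made for illustration (the abstract mentions gap statistics), and the three well-separated synthetic blobs stand in for the survey variables.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler

# Synthetic two-dimensional data with three groups; not the survey data.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 5.0], [0.0, 5.0]])
X = np.vstack([c + rng.normal(scale=0.6, size=(100, 2)) for c in centers])
X_scaled = StandardScaler().fit_transform(X)  # put variables on a common scale

# Score each candidate K by the mean silhouette of the resulting partition.
scores = {}
for k in range(2, 7):
    labels = KMeans(n_clusters=k, n_init=10, random_state=0).fit_predict(X_scaled)
    scores[k] = silhouette_score(X_scaled, labels)

best_k = max(scores, key=scores.get)
final_labels = KMeans(n_clusters=best_k, n_init=10, random_state=0).fit_predict(X_scaled)
shares = np.bincount(final_labels) / len(final_labels)  # share of records per cluster
print(best_k, shares.round(2))
```

Cluster shares computed this way correspond to the segment proportions reported for each consumer type, and per-cluster means of the input variables give the profile characteristics.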

The first group is the highly emotionally dependent type (the main group, Figure A2a). It is dominated by young women (86%) with medium-low incomes and significantly higher stress levels than the other groups (mean 4.6). Their purchase decisions are emotionally driven and sensitive to product appearance (packaging, spokespersons) (mean 4.5), and their willingness to buy is extremely high (4.8). They are likely to relieve anxiety by purchasing toys when stressed at work or school, preferring cute shapes (e.g., Jellycat) or IP co-branded models. This group accounts for 48% of the sample, making it the main force in therapeutic toy purchases and the segment that store owners should focus on.

The second group is the rational value type (the backbone, Figure A2b), with a high proportion of male and middle-aged users, high income and education levels, and strong emotional stability (mean 3.5). They emphasize the practicality and long-term value of products (e.g., collection potential, material durability) and make rational purchase decisions (mean 4.1). They may buy the toys for family members or as a self-reward, preferring Disney classics or limited editions. This group accounts for 32% of the sample and constitutes the backbone of the therapeutic toy consumer market: it has both stable spending power and untapped consumption potential, and it is the core group that stores need to focus on retaining.

The third group is the mildly interested type (the promising group, Figure A2c), dominated by students or low-income respondents with low levels of stress and emotion (mean < 3) and weak willingness to buy (3.0). Their consumption behavior is random and easily influenced by promotions or social recommendations. They are likely to buy holiday gifts or make occasional purchases, preferring low-priced basics (e.g., small blind boxes). Accounting for 20% of the sample, this group consists of potential buyers and is a worthwhile target for therapeutic toy stores.

Significant demographic stratifications emerged within these segments. Females dominated the emotionally dependent group (86%), displaying stronger stress-driven purchasing, while males comprised 58% of the rational-value segment. Younger members (18–25 years) were overrepresented in both high-stress emotional (mean age = 2.1) and low-engagement clusters (mean age = 2.4), exhibiting greater impulse sensitivity than older peers (26–30 years, mean age = 3.3). Income critically shaped consumption motives: medium-low earners (Group 1) sought emotional relief, high-income consumers (Group 2) valued investment potential, and low-income participants (Group 3) responded primarily to promotions. These fault lines highlight that Gen Z’s therapeutic consumption is mediated by intersecting demographic realities.

Through these user profiles, we have not only given a concrete interpretation of the model predictions but also provided a clear direction for commercial applications: marketers can use story marketing and IP sentiment packaging to create emotional resonance for the highly emotionally dependent type, emphasize quality and cost-effectiveness for the rational value type, and use social communication to create trends for the mildly interested type. Furthermore, the characteristics behind these profiles correspond to the influencing factors we found: an emphasis on emotional value corresponds to the highly emotionally dependent type, an emphasis on price and practicality to the rational value type, and an emphasis on social influence to the mildly interested type. This correspondence further supports our earlier explanation of the mechanism of the influencing factors, i.e., different factors dominate for different groups of people.

4. Discussion

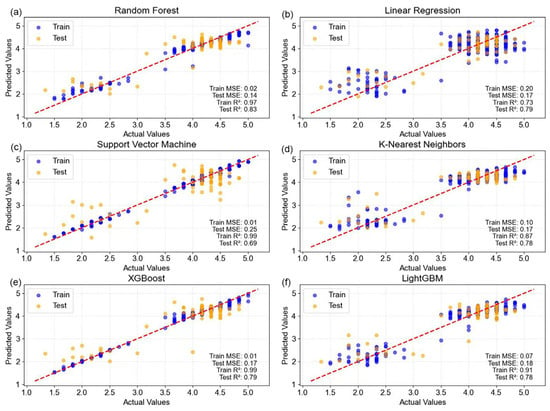

4.1. Model Performance and Methodological Validity

We compare random forest with other machine learning models to further highlight its advantages. Compared with linear regression, support vector regression, k-nearest neighbors, XGBoost, and LightGBM, the random forest model achieves higher prediction accuracy (R2 = 0.83 and MSE = 0.14 on the test set) and clearly outperforms the traditional models. The specific comparison is shown in Figure 7. This indicates that the decision mechanism behind Generation Z’s intention to consume therapeutic toys has nonlinear characteristics, which machine learning methods can capture more adequately. At the same time, our model incorporates features obtained from text sentiment analysis, which compensates for the difficulty of quantifying emotional expression with questionnaires alone.

Figure 7.

Comparison of the performance of six machine learning models: (a) random forest; (b) linear regression; (c) support vector machine; (d) k-nearest neighbors; (e) XGBoost; (f) LightGBM.
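A comparison of this kind can be sketched by scoring several regressors under the same cross-validation splits. The example below is illustrative only: it uses synthetic data with a deliberately nonlinear target, default or assumed hyperparameters, and only the four models available in scikit-learn; XGBoost and LightGBM are omitted because they require separate packages.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.neighbors import KNeighborsRegressor
from sklearn.svm import SVR

# Synthetic data with a quadratic effect that a straight line cannot fit.
rng = np.random.default_rng(7)
X = rng.uniform(-2, 2, size=(300, 4))
y = X[:, 0] ** 2 + rng.normal(scale=0.1, size=300)

models = {
    "random_forest": RandomForestRegressor(n_estimators=100, random_state=7),
    "linear_regression": LinearRegression(),
    "svr": SVR(),
    "knn": KNeighborsRegressor(n_neighbors=5),
}

# The same folds are reused for every model so the scores are comparable.
cv = KFold(n_splits=5, shuffle=True, random_state=7)
results = {
    name: cross_val_score(model, X, y, cv=cv, scoring="r2").mean()
    for name, model in models.items()
}
for name, score in sorted(results.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: mean R2 = {score:.2f}")
```

On data like this, the gap between the linear model and the tree ensemble is a simple diagnostic for nonlinearity, which is the argument made in the surrounding text.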

Compared with studies that use only structured questionnaire data, our approach is better able to explain consumers’ emotional psychology. Because of the limited sample size, the model still has room for improvement; a deep neural network might further improve accuracy but at some cost to interpretability. Given the emphasis on interpretation in this study, we chose random forest, which balances performance and interpretability. Overall, the model results support our research hypothesis that quantitative sentiment indicators help improve the accuracy of consumer behavior prediction in the context of the emotional economy.

4.2. Comparative Analysis with Prior Studies

Our findings demonstrate significant consistency with established theories of emotional consumption while revealing novel quantitative insights. First, the dominance of Social Ostracism (β = 0.307) and Task Overload (β = 0.207) aligns with Djafarova & Bowes [1] confirming that Gen Z utilizes therapeutic consumption as compensatory behavior against social isolation and chronic stress—a phenomenon exacerbated by digital-era anxieties.

Methodologically, our hybrid framework quantifies emotional drivers via NLP and SHAP. The integrated predictive model balances forecasting accuracy and decision interpretability in emotional value computation, revealing the impact of impulse consumption on the emotional economy. Prior studies described emotion-driven purchases qualitatively [2,13] but did not analyze them quantitatively.

Crucially, our k-means segmentation diverges from Xie et al.’s [5] binary classification. We identified a distinct mildly interested cluster (20%) driven primarily by social influence (FOMO) rather than intrinsic emotion—a trend amplified by post-pandemic digital acculturation [7]. This tripartite typology reflects Gen Z’s heterogeneous response to emotional marketing, challenging homogeneous segmentation models.

4.3. Consistency of Influencing Factors Under Different Analytical Methods

A notable finding is the high degree of overlap between the core influences obtained from the random forest importance analysis and from Boruta–SHAP feature selection. This consistency increases the robustness of the findings: the factors we identify are likely to be genuinely influential rather than artifacts of a particular analytical approach. Machine learning models and statistical analyses do not always agree, but in our study, data-driven methods (random forest, Boruta) and theory-driven methods (questionnaire scales) reached a consensus, which enhances the credibility of the findings. The different approaches also provide complementary perspectives. For example, RF emphasizes the importance of interactions, whereas Boruta–SHAP emphasizes the importance of synchronous trends. Combining the two gives a richer understanding of factors such as “social exclusion”: we know not only that it significantly affects purchase intention, but also that the effect is likely to be a negative linear correlation (the higher the social exclusion, the lower the purchase intention, with the two curves falling in tandem). Cross-method consistency is also reflected in the clustering results: the proportion of people in the emotionally driven group (48%) is similar to the proportion of high-willingness users predicted by our model, and high-willingness users are precisely those with high emotional values. Together, these results indirectly indicate that our multi-method analysis achieves mutual corroboration and cross-validation, making the conclusions more solid.

4.4. Deeper Interpretation of User Characteristics and Behavior

The three types of user profiles obtained through clustering provide an intuitive framework for understanding consumer behavior in the emotional economy. The existence of highly emotionally dependent users validates the type of young people described in the theory of emotional consumption as those who “pay for what pleases them”. Their consumer behavior can be partially explained by emotional dependence and psychological compensation: when facing life stress, buying a healing object becomes a means of self-healing. This is in line with the psychological theory of emotion regulation, which states that individuals will regulate their emotions by making situational choices (e.g., buying something). The rational value group reminds us that not all Gen Zers are passionate about emotional consumption. In an environment of material abundance, there is a significant proportion of young people who remain rational and seek value for money, which may be related to their personal values or upbringing. Their presence suggests that the emotional economy is not replacing the rational economy, but that the two drivers are coexisting. The mildly interested type reflects the role of social influence in consumption—these people may not have strong emotional needs themselves, but they are willing to give it a try when “everyone is playing”. This confirms the influence of herd mentality and social currency theory in new consumption. For example, trend marketing often talks about “creating FOMO (fear of missing out)”, which targets this type of consumer psychology.

Further, these findings can inform marketing strategy more broadly. For emotionally driven users, brands can build emotional bonds, for example by using IP stories and user communities to create healing topics that enhance loyalty. For rational users, brands need to emphasize functional value or develop products that are both healing and practical. Mildly interested users can be encouraged to try and buy through social communication and hunger marketing, and then converted into more loyal emotionally driven users. This suggests that consumers remain segmented in the emotional economy and that strategies need to be matched accurately to different groups. Our research methodology demonstrates that such insights can be mined from data.

4.5. Research Limitations and Future Improvements

Although this study achieved some meaningful results, there are limitations.

First, the geographical restriction to China limits cross-cultural generalizability. Future work should include cross-cultural comparative studies (e.g., in the U.S. and EU) and could explore the integration of Internet of Things or digital twin technologies [4,44].

Secondly, in addition to the questionnaire responses, we incorporated user comment data to capture more natural expressions from consumers. Nevertheless, questionnaire responses may suffer from social desirability bias (for example, respondents tend to answer “I will purchase”, but their actual behavior may not match). While anonymization and attention-check procedures helped reduce response bias, future research should integrate behavioral indicators, such as e-commerce purchase logs, to validate self-reported intentions against real purchasing behavior. To this end, we plan to collaborate with e-commerce platforms (e.g., accessing Jellycat’s sales records), which will enable cross-validation of self-reported intentions with real transactions. Neurophysiological methods (e.g., EEG or eye-tracking) could also be employed to assess subconscious emotional responses toward therapeutic toys; combining such experiments with behavioral data can uncover implicit emotional valence and provide a richer understanding of consumer engagement with therapeutic products [22].

Thirdly, our model is cross-sectional and static and does not examine changes over time. Both mood and consumption behavior may fluctuate, and a person’s propensity to consume is likely to differ between peaks and troughs of stress. In the future, time series data could be introduced to observe the dynamic relationship between sentiment indicators and consumption behavior; for example, app-based diary studies tracking mood fluctuations and micro-purchases would help capture causal dynamics. In addition, the text sentiment analysis we used still has room for improvement in contextual understanding, such as judging complex semantics like sarcasm and irony. With the advancement of pre-trained models and the enrichment of domain sentiment lexicons, the analysis accuracy can be further improved.

Despite the above limitations, the methodological framework of this study is generalizable to some extent and provides a reference for subsequent work. For example, the combination of sentiment analysis and SHAP analysis applied to the consumer domain is a useful exploration. In the future, we may integrate more physiological and behavioral data, such as EEG experiments that measure consumers’ emotional responses when they see therapeutic toys, to validate at the neural level the emotional states reflected in the questionnaires and texts. This will open up a macroconsumer perspective that complements our data-driven model. In addition, reinforcement learning or causal inference promises to better discern causality from observational data, such as whether purchasing therapeutic toys actually reduces consumers’ anxiety levels, a reverse effect that we have not yet addressed. These reflections point the way to future research.

5. Conclusions

In this work, we propose and validate an algorithmic modeling framework around the consumption behavior of Generation Z for therapeutic toys under the influence of the emotional economy. By integrating the methods of text sentiment analysis, feature selection, random forest modeling, and cluster analysis, we deeply reveal the mechanism of the role of emotional factors in consumption decisions. Unlike traditional S-O-R (stimulus–organism–response) models, our framework quantifies and evaluates the emotion factors through NLP, capturing unstructured emotional cues inaccessible to survey-based approaches. This bridges affective computing and consumer behavior prediction. The main conclusions are as follows:

- This study integrates affective computing into consumer behavior modeling, demonstrating that emotion-driven consumption dominates Gen Z’s therapeutic toy purchases.

- Our hybrid framework (sentiment analysis + RF + SHAP) achieves high accuracy (R2 = 0.83) while enabling interpretable segmentation. For practitioners, emotionally resonant marketing (e.g., IP storytelling) is critical for high-dependency clusters, whereas rational users respond to functional value.

- Generation Z therapeutic toy consumers can be divided into distinct segments that call for differentiated strategies. Cluster analysis revealed three typical user profiles: emotion-driven, rational value, and mildly interested. This provides a basis for enterprises to conduct precision marketing in the emotional economy.

Overall, the research in this paper demonstrates the feasibility and effectiveness of integrating the quantitative path of emotion into consumer behavior models under the new consumer trend. The framework we built digitizes consumers’ psychological and emotional factors and reveals their impact on consumption through algorithmic modeling, which greatly enriches the understanding of Generation Z’s consumer decision-making process. From a practical point of view, companies can learn from this idea, collect users’ emotional feedback data and combine it with sales forecasting models to improve the responsiveness of product development and marketing programs to users’ emotional needs. The era of the emotional economy brings both challenges and opportunities for market forecasting and analysis. As shown in this paper, with increasingly powerful AI and data analytics technologies, we are able to gain unprecedented insights into the emotional drivers within consumers. We believe that with the advancement of related research and refinement of methodologies, the integration of affective computing and consumer behavior will continue to deepen, bringing win–win value to both marketing science and consumer well-being.

Author Contributions

Conceptualization, X.M. and X.Q.; methodology, X.M.; software, X.Q.; validation, X.M., X.Q. and L.L.; formal analysis, X.M.; investigation, X.M., X.Q. and L.L.; resources, X.M.; data curation, X.M.; writing—original draft preparation, X.M.; writing—review and editing, X.M. and X.Q.; visualization, X.M. and X.Q.; supervision, L.L.; project administration, X.M.; funding acquisition, X.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Social Science Foundation of China, grant number 22FYB068, and the Postgraduate Research & Practice Innovation Program of Jiangsu Province, grant number KYCX24_3860.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

Thanks to the funding support from the National Social Science Foundation of China and the Postgraduate Research & Practice Innovation Program of Jiangsu Province.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| RoBERTa-wwm-ext | Robustly optimized BERT approach with whole word masking extended |
| F1 | F1 score |
| MSE | Mean-squared error |
| KOL | Key opinion leader |

Appendix A

Questionnaire Design

Based on the independent variables such as stress, emotion, product advantages, and the dependent variable of purchase intention set in this paper, the questionnaire of this study was made by drawing on the scales developed and designed by the following scholars.

Among the antecedent variables that affect the purchase intention, aspects such as stress, emotion, and product advantages were selected for research (see Table A1, Table A2 and Table A3).

Table A1.

Stress measurement scale.

| Dimension | Item | Source |
|---|---|---|
| Work/Study Itself | 1. My work/study tasks are heavy with a large workload. | Pristiawati et al. [37] |
| | 2. I feel like my work/study is never-ending. | |
| | 3. I often work overtime to complete my work/study tasks. | |
| Career Development | 4. I see no hope for my future career advancement/academic growth. | |
| | 5. I feel that the skills and knowledge I gain at work/school are limited. | |
| Interpersonal Relationships | 6. I experience conflicts or unpleasantness with colleagues/classmates. | |
| | 7. My supervisor/teacher is unwilling or unable to help me resolve work/study issues. | |
| | 8. I often feel excluded or isolated in my work/study life. | |

Table A2.

Emotion measurement scale.

| Dimension | Item | Source |
|---|---|---|
| Positive Emotions | 1. I am interested in therapeutic toys. | Jang and Namkung [38]; Park et al. [39] |
| | 2. Buying therapeutic toys makes me happy. | |
| | 3. Seeing therapeutic toys makes me feel calm. | |
| Negative Emotions | 4. I feel disgusted by therapeutic toys. | |
| | 5. Buying therapeutic toys makes me feel ashamed. | |
| | 6. When I see therapeutic toys, I often feel sad. | |

Table A3.

Product advantages measurement scale.

| Dimension | Item | Source |
|---|---|---|
| Packaging | 1. The packaging of therapeutic toys attracts me to purchase. | Weiss [40] |
| Marketing | 2. The advertising and marketing of therapeutic toys are appealing. | |
| | 3. The endorser of therapeutic toys is my idol. | |
| Service | 4. The service of therapeutic toys is satisfactory. | |

This study holds that purchase intention can be measured from two aspects: product attitude and purchase possibility (see Table A4).

Table A4.

Purchase intention measurement scale.

| Dimension | Item | Source |
|---|---|---|
| Purchase Likelihood | 1. I am likely to purchase therapeutic toys. | Dodds et al. [41]; Herr et al. [42]; Low and Lamb [43] |
| | 2. I plan to purchase therapeutic toys. | |
| | 3. I hope to purchase therapeutic toys. | |
| Product Attitude | 4. I think this product is good. | |
| | 5. I think this product is pleasant. | |
| | 6. I think this product is valuable. | |

Appendix B

Descriptive Statistical Analysis of the Sample

This survey describes the overall sample in terms of the respondents’ gender, age, educational background, and income (see Table A5). Women accounted for 69.9% of respondents and men for 30.1%, so the proportion of women was considerably higher. The interviewees are young people of Generation Z, the largest share of whom (44.6%) are between 21 and 25 years old, suggesting that the younger generation has a relatively high acceptance of and interest in therapeutic toys. Those with a bachelor’s, associate’s, or master’s degree or above account for 77.6%. As Gen Z youth are currently mostly in education or just entering society, 65.5% of them have an income level of less than CNY 5000.

Table A5.

Sample structure statistics.

| Demographics | Category | Frequency | Percentage (%) |
|---|---|---|---|
| Gender | Male | 101 | 30.1 |
| | Female | 235 | 69.9 |
| Age | 16–20 | 96 | 28.6 |
| | 21–25 | 150 | 44.6 |
| | 26–30 | 90 | 26.8 |
| Education | High school or below | 75 | 22.3 |
| | Undergraduate/Junior College | 235 | 69.9 |
| | Master’s or above | 26 | 7.7 |
| Income Range | Below 1.5 k | 97 | 28.9 |
| | 1.5 k–3 k | 46 | 13.7 |
| | 3 k–5 k | 77 | 22.9 |
| | 5 k–8 k | 71 | 21.1 |
| | Above 8 k | 45 | 13.4 |
| Total | | 336 | 100 |

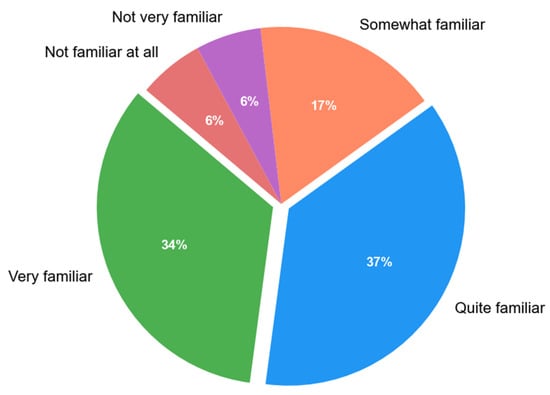

In addition, we also analyzed the degree of understanding of therapeutic toys among all the interviewees. It can be seen from Figure A1 that the vast majority of the group have a certain understanding of therapeutic toys. The proportions of those who have a very good understanding and those who have a relatively good understanding are 34% and 37%, respectively. Only 12% of people have little or no understanding of this type of consumer product.

Figure A1.

The respondents’ understanding of therapeutic toys.

We also conducted a survey on the purchase of therapeutic toys. A total of 271 respondents had purchased therapeutic toys (such as Jellycat, Pop Mart, Disney dolls, etc.), accounting for 80.7%, which is specifically shown in Table A6.

Table A6.

Purchase status of therapeutic toys.

| Purchased Therapeutic Toys | Frequency | Percentage (%) |
|---|---|---|
| Yes | 271 | 80.7 |
| No | 65 | 19.3 |
| Total | 336 | 100 |

Figure A2.

Three types of user profiles: (a) high-emotional-dependence type; (b) rational value type; (c) mild interest type.

References

- Djafarova, E.; Bowes, T. ‘Instagram made Me buy it’: Generation Z impulse purchases in fashion industry. J. Retail. Consum. Serv. 2021, 59, 102345. [Google Scholar] [CrossRef]

- Priporas, C.-V.; Stylos, N.; Fotiadis, A.K. Generation Z consumers’ expectations of interactions in smart retailing: A future agenda. Comput. Hum. Behav. 2017, 77, 374–381. [Google Scholar] [CrossRef]

- Kadokawa, K. Five steps to understand the mental state: A contribution from the economics of emotions to the theory of mind. Curr. Psychol. 2024, 43, 35234–35248. [Google Scholar] [CrossRef]

- Harantová, V.; Mazanec, J. Generation Z’s Shopping Behavior in Second-Hand Brick-and-Mortar Stores: Emotions, Gender Dynamics, and Environmental Awareness. Behav. Sci. 2025, 15, 413. [Google Scholar] [CrossRef]

- Xie, N.; Wang, J.; Fan, J.; Chen, D. A Product Design Strategy That Comprehensively Considers Consumer Behavior and Psychological Emotional Needs. In Design, User Experience, and Usability, Proceedings of the 13th International Conference, DUXU 2024, Washington, DC, USA, 4 June–4 July 2024; Marcus, A., Rosenzweig, E., Soares, M.M., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 314–329. [Google Scholar]

- Siqueira, J.R.; Losada, M.O.; Peña-García, N.; Dakduk, S.; Lourenço, C.E. Do peer-to-peer interaction and peace of mind matter to the generation Z customer experience? A moderation-mediation analysis of retail experiences. J. Mark. Anal. 2025, 13, 424–444. [Google Scholar] [CrossRef]

- Pan, W.; Xing, Y.; Ge, S.; He, L.; Sha, Z.; Chen, Z.; Wong, C.; Jiang, W.; Li, Y.; Ma, X.; et al. Navigating the Generation Z Wave: Transforming Digital Assistants into Dream Companions with a Touch of Luxury, Hedonism, and Excitement. In Design, User Experience, and Usability, Proceedings of the 13th International Conference, DUXU 2024, Washington, DC, USA, 4 June–4 July 2024; Marcus, A., Rosenzweig, E., Soares, M.M., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 181–192. [Google Scholar]

- Hirschman, E.C.; Holbrook, M.B. Hedonic Consumption: Emerging Concepts, Methods and Propositions. J. Mark. 1982, 46, 92–101. [Google Scholar] [CrossRef]

- Weiss, H.M.; Cropanzano, R. Affective Events Theory: A theoretical discussion of the structure, causes and consequences of affective experiences at work. Res. Organ. Behav. 1996, 18, 34–74. [Google Scholar]

- Deci, E.L.; Ryan, R.M. The “What” and “Why” of Goal Pursuits: Human Needs and the Self-Determination of Behavior. Psychol. Inq. 2000, 11, 227–268. [Google Scholar] [CrossRef]

- Bassiouni, D.H.; Hackley, C. ‘Generation Z’ children’s adaptation to digital consumer culture: A critical literature review. J. Cust. Behav. 2014, 13, 113–133. [Google Scholar] [CrossRef]

- Sun, X.; Jin, Y.; Huang, X. POP Mart: How to Maximize IP Value in the Field of Art Toy? In Innovation of Digital Economy: Cases from China; Zhang, J., Ying, K., Wang, K., Fan, Z., Zhao, Z., Eds.; Springer Nature: Singapore, 2023; pp. 223–236. [Google Scholar]

- Kamboj, S.; Sarmah, B.; Gupta, S.; Dwivedi, Y. Examining branding co-creation in brand communities on social media: Applying the paradigm of Stimulus-Organism-Response. Int. J. Inf. Manag. 2018, 39, 169–185. [Google Scholar] [CrossRef]

- Sneha; Raza, S. Affective Computing for Health Management via Recommender Systems: Exploring Challenges and Opportunities. In Affective Computing for Social Good: Enhancing Well-Being, Empathy, and Equity; Garg, M., Prasad, R.S., Eds.; Springer Nature: Cham, Switzerland, 2024; pp. 163–182. [Google Scholar]

- Shukla, D.; Dwivedi, S.K. Sentiment analysis versus aspect-based sentiment analysis versus emotion analysis from text: A comparative study. Int. J. Syst. Assur. Eng. Manag. 2025, 16, 512–531. [Google Scholar] [CrossRef]

- Hou, Z.; Kolonin, A. Interpretable Sentiment Analysis and Text Segmentation for Chinese Language. Opt. Mem. Neural Netw. 2024, 33, S483–S489. [Google Scholar]

- Bai, H.; Wang, D.-L.; Feng, S.; Zhang, Y.-F. EKBSA: A Chinese Sentiment Analysis Model by Enhancing K-BERT. J. Comput. Sci. Technol. 2025, 40, 60–72. [Google Scholar] [CrossRef]

- Ho, M.T.; Mantello, P.; Vuong, Q.H. Emotional AI in education and toys: Investigating moral risk awareness in the acceptance of AI technologies from a cross-sectional survey of the Japanese population. Heliyon 2024, 10, e36251. [Google Scholar] [CrossRef] [PubMed]

- Lin, C.-H.; Cheng, P.-J. Assisting Drafting of Chinese Legal Documents Using Fine-Tuned Pre-trained Large Language Models. Rev. Socionetwork Strateg. 2025, 19, 83–110. [Google Scholar] [CrossRef]

- Yosipof, A.; Drori, N.; Elroy, O.; Pierraki, Y. Textual sentiment analysis and description characteristics in crowdfunding success: The case of cybersecurity and IoT industries. Electron. Mark. 2024, 34, 30. [Google Scholar] [CrossRef]

- Oh, H.; Hunter, M.D.; Chow, S.-M. Measurement Model Misspecification in Dynamic Structural Equation Models: Power, Reliability, and Other Considerations. Struct. Equ. Model. Multidiscip. J. 2025, 32, 511–528. [Google Scholar] [CrossRef]

- Sukheja, S.; Chopra, S.; Vijayalakshmi, M. Sentiment Analysis using Deep Learning—A survey. In Proceedings of the 2020 International Conference on Computer Science, Engineering and Applications (ICCSEA), Gunupur, India, 13–14 March 2020; pp. 1–4. [Google Scholar]

- Noitz, M.; Mörtl, C.; Böck, C.; Mahringer, C.; Bodenhofer, U.; Dünser, M.W.; Meier, J. Detection of Subtle ECG Changes Despite Superimposed Artifacts by Different Machine Learning Algorithms. Algorithms 2024, 17, 360. [Google Scholar] [CrossRef]

- Li, M.; Sun, H.; Huang, Y.; Chen, H. Shapley value: From cooperative game to explainable artificial intelligence. Auton. Intell. Syst. 2024, 4, 2. [Google Scholar] [CrossRef]

- Jamei, M.; Ali, M.; Karbasi, M.; Karimi, B.; Jahannemaei, N.; Farooque, A.A.; Yaseen, Z.M. Monthly sodium adsorption ratio forecasting in rivers using a dual interpretable glass-box complementary intelligent system: Hybridization of ensemble TVF-EMD-VMD, Boruta-SHAP, and eXplainable GPR. Expert Syst. Appl. 2024, 237, 121512. [Google Scholar] [CrossRef]

- Kursa, M.B.; Rudnicki, W.R. Feature Selection with the Boruta Package. J. Stat. Softw. 2010, 36, 1–13. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Lee, S.-I. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Long Beach, CA, USA, 2017; pp. 4768–4777. [Google Scholar]

- Vollert, S.; Atzmueller, M.; Theissler, A. Interpretable Machine Learning: A brief survey from the predictive maintenance perspective. In Proceedings of the 2021 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vasteras, Sweden, 7–10 September 2021; pp. 1–8. [Google Scholar]

- Gao, W.; Ding, Z.; Lu, J.; Wan, Y. Low-carbon information quality dimensions and random forest algorithm evaluation model in digital marketing. Sci. Rep. 2024, 14, 22416. [Google Scholar] [CrossRef]

- Fernández-Delgado, M.; Cernadas, E.; Barro, S.; Amorim, D. Do we need hundreds of classifiers to solve real world classification problems? J. Mach. Learn. Res. 2014, 15, 3133–3181. [Google Scholar]

- Valecha, H.; Varma, A.; Khare, I.; Sachdeva, A.; Goyal, M. Prediction of Consumer Behaviour using Random Forest Algorithm. In Proceedings of the 2018 5th IEEE Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON), Gorakhpur, India, 2–4 November 2018; pp. 1–6. [Google Scholar]

- Alantari, H.J.; Currim, I.S.; Deng, Y.; Singh, S. An empirical comparison of machine learning methods for text-based sentiment analysis of online consumer reviews. Int. J. Res. Mark. 2022, 39, 1–19. [Google Scholar] [CrossRef]

- Probst, P.; Wright, M.N.; Boulesteix, A.-L. Hyperparameters and tuning strategies for random forest. WIREs Data Min. Knowl. Discov. 2019, 9, e1301. [Google Scholar] [CrossRef]

- Nakagawa, S.; Johnson, P.C.D.; Schielzeth, H. The coefficient of determination R2 and intra-class correlation coefficient from generalized linear mixed-effects models revisited and expanded. J. R. Soc. Interface 2017, 14, 20170213. [Google Scholar] [CrossRef]

- Wilbert, H.J.; Hoppe, A.F.; Sartori, A.; Stefenon, S.F.; Silva, L.A. Recency, Frequency, Monetary Value, Clustering, and Internal and External Indices for Customer Segmentation from Retail Data. Algorithms 2023, 16, 396. [Google Scholar] [CrossRef]

- Abdel-Hakim, A.E.; Ibrahim, A.-M.M.; Bouazza, K.E.; Deabes, W.; Hedar, A.-R. Ellipsoidal K-Means: An Automatic Clustering Approach for Non-Uniform Data Distributions. Algorithms 2024, 17, 551. [Google Scholar] [CrossRef]

- Pristiawati, A.; Sudarnoto, L.; Suryani, A. Item development and psychometric testing of Work Stress Scale. In Proceedings of the 2024 International Conference on Assessment and Learning, Bali, Indonesia, 13–15 October 2022; pp. 43–52. [Google Scholar]

- Jang, S.; Namkung, Y. Perceived quality, emotions, and behavioral intentions: Application of an extended Mehrabian–Russell model to restaurants. J. Bus. Res. 2009, 62, 451–460. [Google Scholar] [CrossRef]

- Park, I.; Lee, J.; Lee, D.; Lee, C.; Chung, W.Y. Changes in consumption patterns during the COVID-19 pandemic: Analyzing the revenge spending motivations of different emotional groups. J. Retail. Consum. Serv. 2022, 65, 102874. [Google Scholar] [CrossRef]

- Weiss, L. Egocentric Processing: The Advantages of Person-Related Features in Consumers’ Product Decisions. J. Consum. Res. 2021, 49, 288–311. [Google Scholar] [CrossRef]

- Dodds, W.; Monroe, K.; Grewal, D. Effects of Price, Brand, and Store Information on Buyers’ Product Evaluations. J. Mark. Res. 1991, 28, 307–319. [Google Scholar] [PubMed]

- Herr, P.; Kardes, F.; Kim, J. Effects of Word-of-Mouth and Product-Attribute Information on Persuasion: An Accessibility-Diagnosticity Perspective. J. Consum. Res. 1991, 17, 454–462. [Google Scholar] [CrossRef]

- Low, G.; Lamb, C. The Measurement and Dimensionality of Brand Associations. J. Prod. Brand Manag. 2000, 9, 350–370. [Google Scholar] [CrossRef]

- Chung, K.C.; Tan, P.J.B. IoT-powered personalization: Creating the optimal shopping experience in digital twin VFRs. Internet Things 2024, 26, 101216. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).