Tolerance Proportionality and Computational Stability in Adaptive Parallel-in-Time Runge–Kutta Methods

Abstract

1. Introduction

2. Preliminaries: Main Tools and Requirements

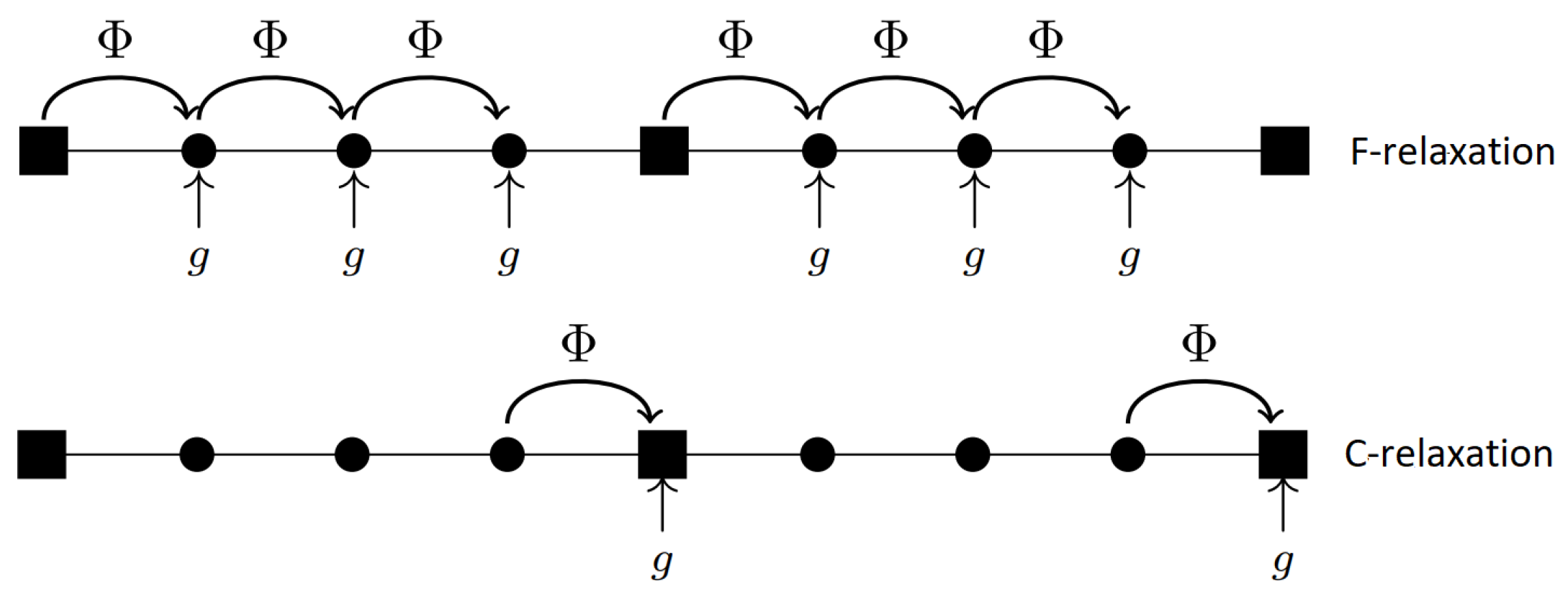

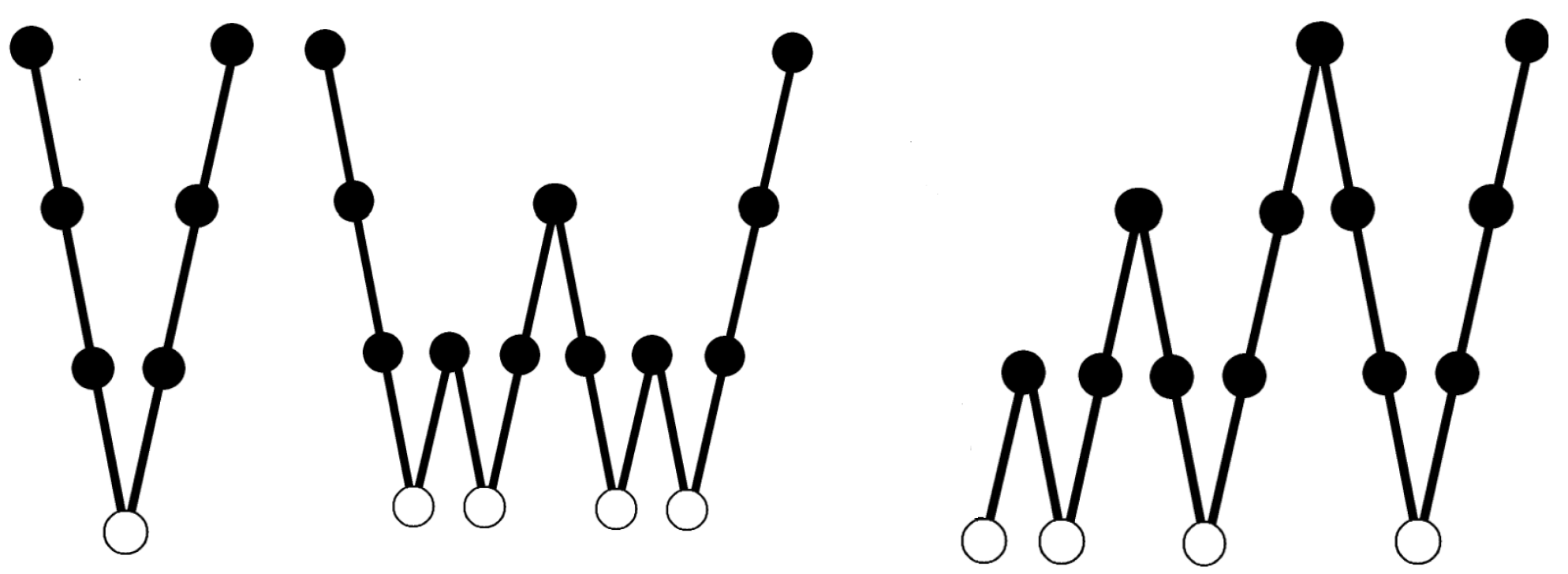

2.1. The MGRIT Algorithm

Algorithm 1. Two-grid method.

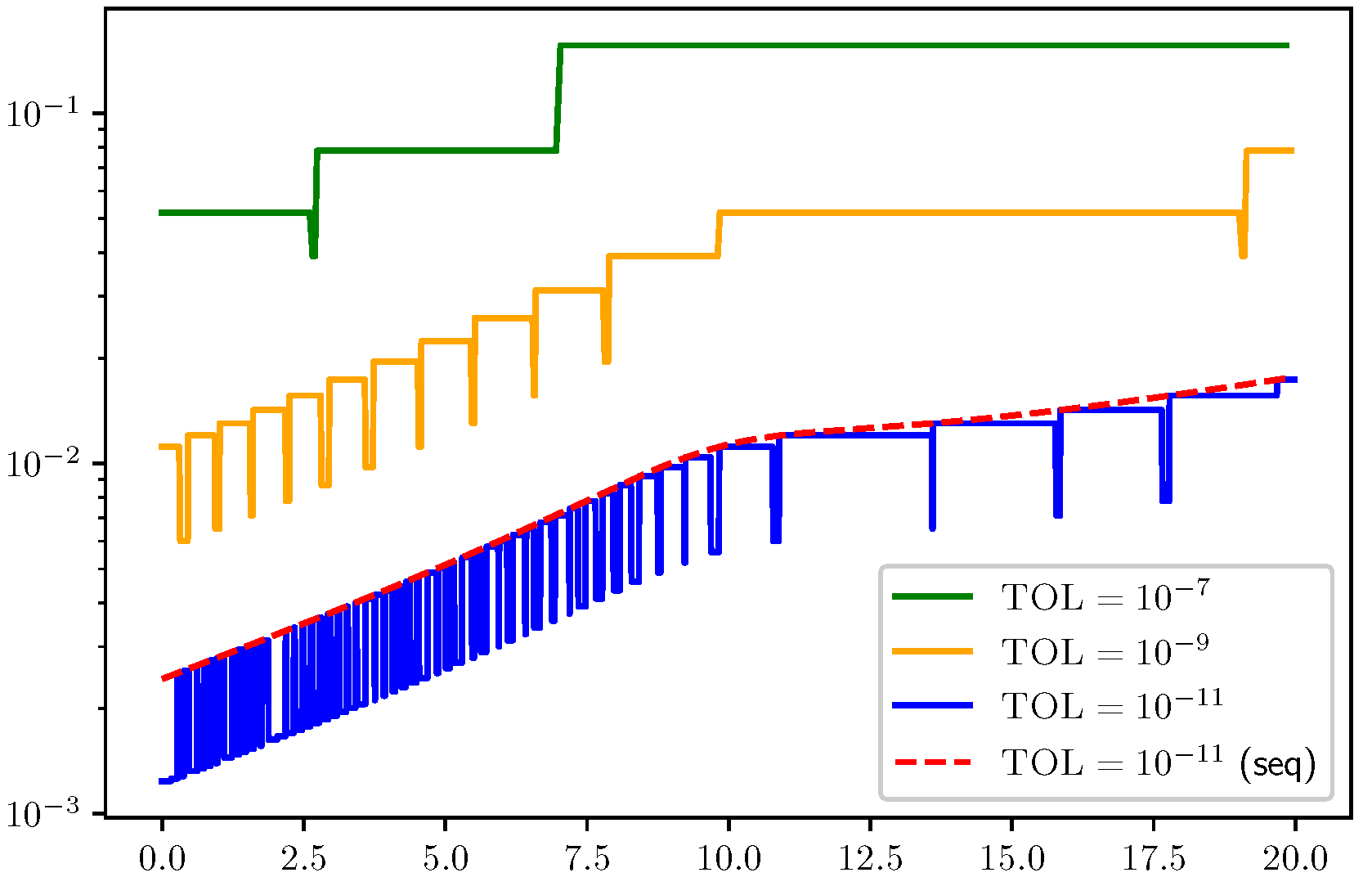
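A minimal Python sketch of the two-grid structure named in Algorithm 1 follows, using the scalar linear test equation y' = λy. It is an illustration under stated assumptions and not the authors' implementation. The function names `rk4_linear` and `two_grid` are hypothetical, classical RK4 serves as both the fine and the coarse propagator, and the cycle uses only F-relaxation followed by a sequential coarse-grid correction. In this setting, two-level MGRIT with F-relaxation is known to reduce to the Parareal update. The fine sweeps over the coarse intervals are independent of one another, which is where the time parallelism comes from; in this sketch they run serially.

```python
def rk4_linear(lam, y, h):
    """One classical RK4 step for the linear test equation y' = lam * y."""
    k1 = lam * y
    k2 = lam * (y + 0.5 * h * k1)
    k3 = lam * (y + 0.5 * h * k2)
    k4 = lam * (y + h * k3)
    return y + h / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)


def two_grid(lam, u0, T, n_coarse, m, iters):
    """Hypothetical two-level iteration; returns values at the C-points."""
    H = T / n_coarse  # coarse step between consecutive C-points
    h = H / m         # fine step: m fine steps per coarse interval

    def fine(u):      # m fine steps across one coarse interval
        for _ in range(m):
            u = rk4_linear(lam, u, h)
        return u

    def coarse(u):    # one RK4 step of size H
        return rk4_linear(lam, u, H)

    # Initial guess at the C-points from one sequential coarse sweep.
    U = [u0]
    for _ in range(n_coarse):
        U.append(coarse(U[-1]))

    for _ in range(iters):
        # F-relaxation: independent fine sweeps, one per coarse interval
        # (the parallel tasks in a real implementation).
        F = [fine(U[j]) for j in range(n_coarse)]
        G_old = [coarse(U[j]) for j in range(n_coarse)]
        # Coarse-grid correction: a cheap sequential sweep on the coarse grid.
        new = [u0]
        for j in range(n_coarse):
            new.append(coarse(new[-1]) + F[j] - G_old[j])
        U = new
    return U
```

After `n_coarse` iterations, the C-point values coincide with the sequential fine-grid solution up to rounding. Any speedup must therefore come from stopping after far fewer iterations than that.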

2.2. Tolerance Proportionality and Computational Stability

2.3. Classical Recursive Controllers

3. Results

3.1. ODE Test Problems

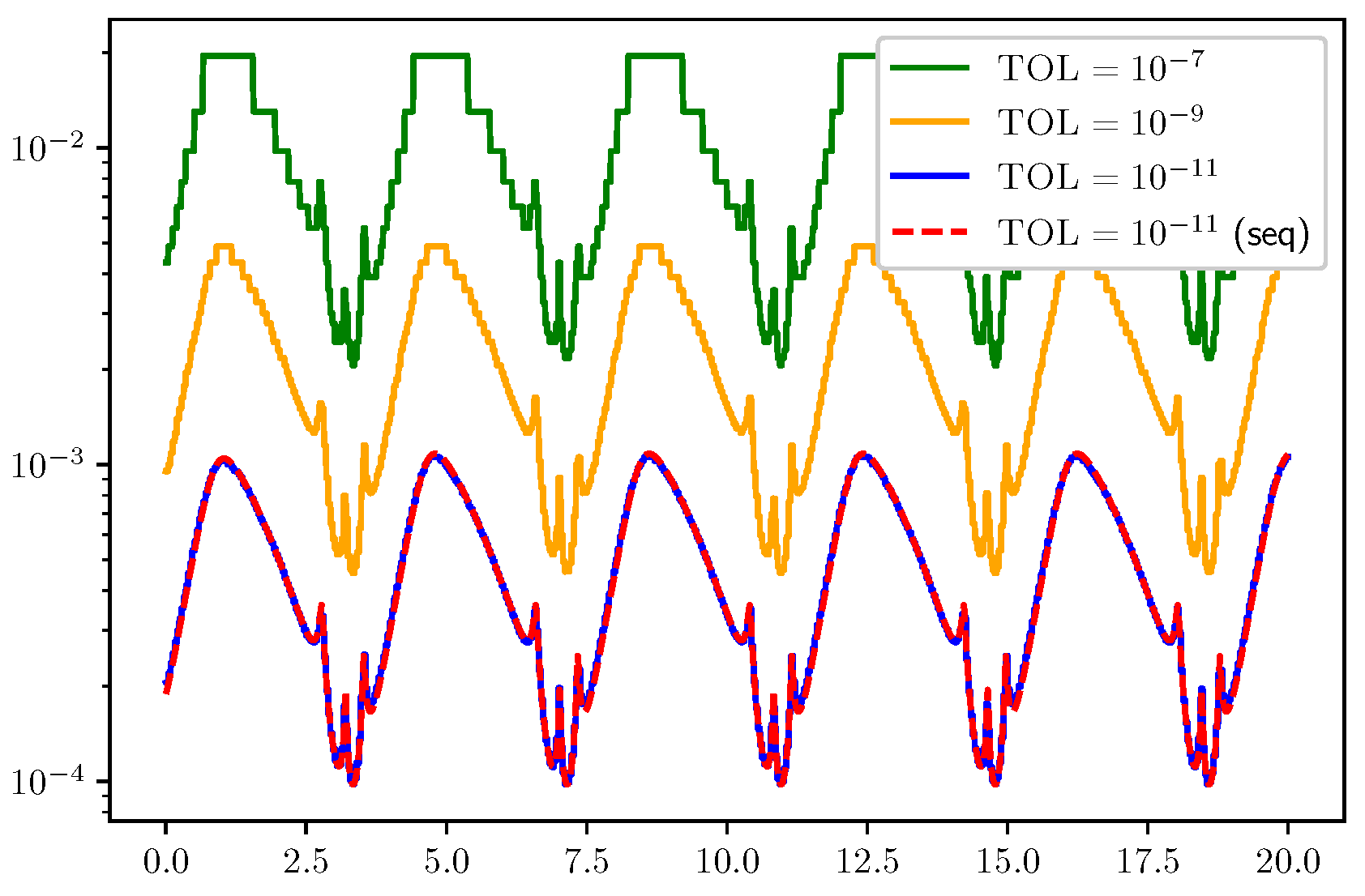

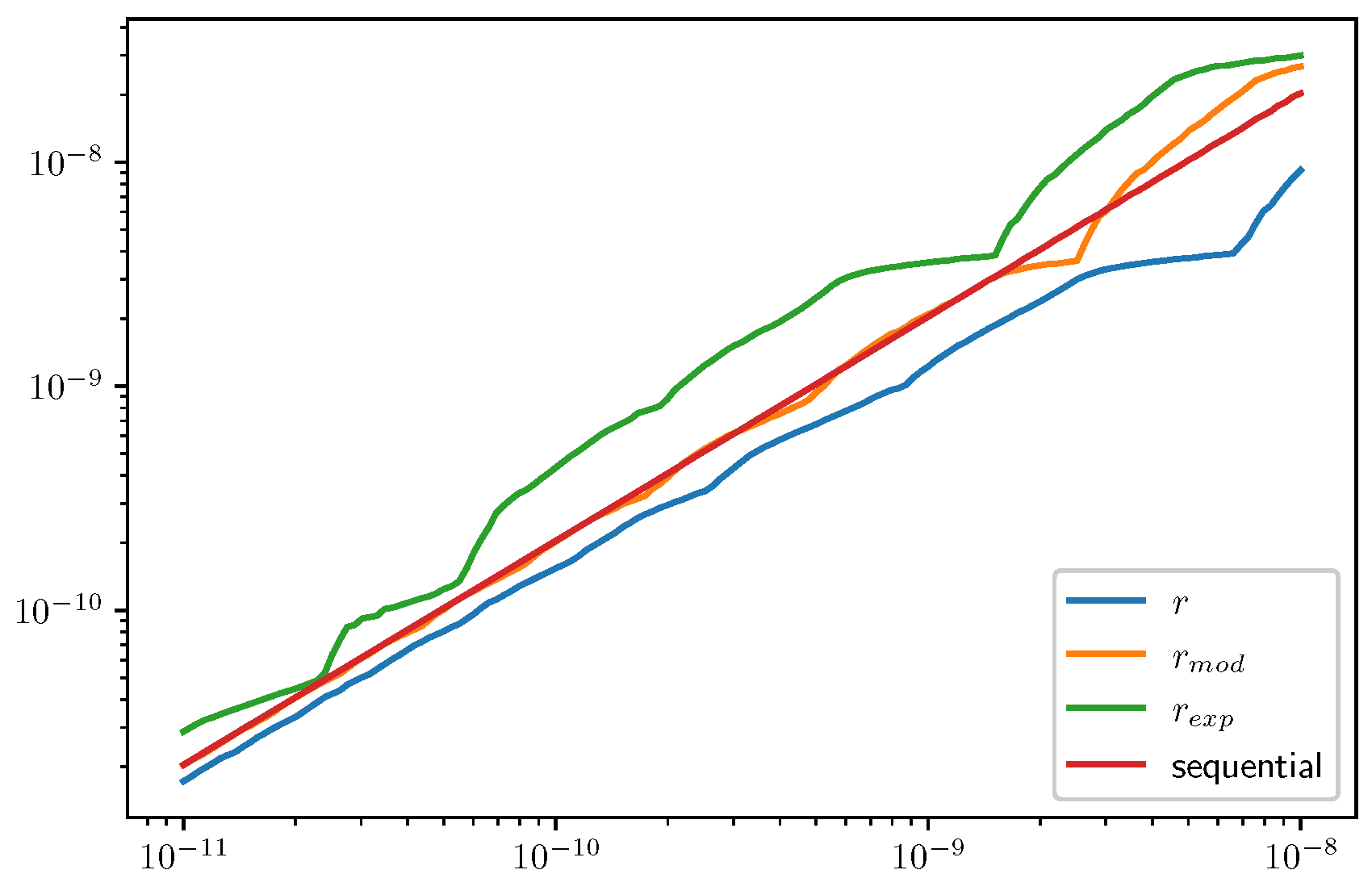

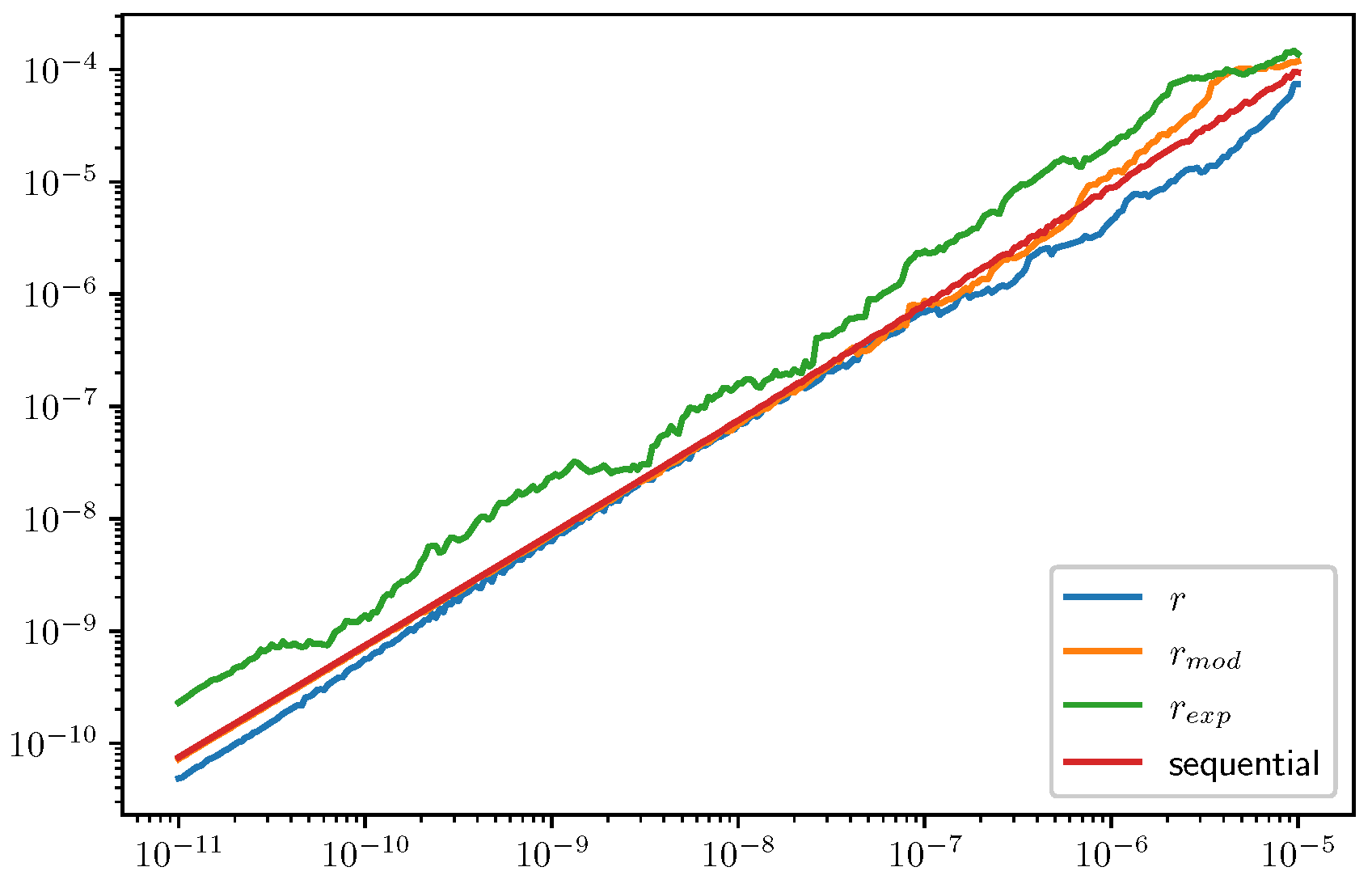

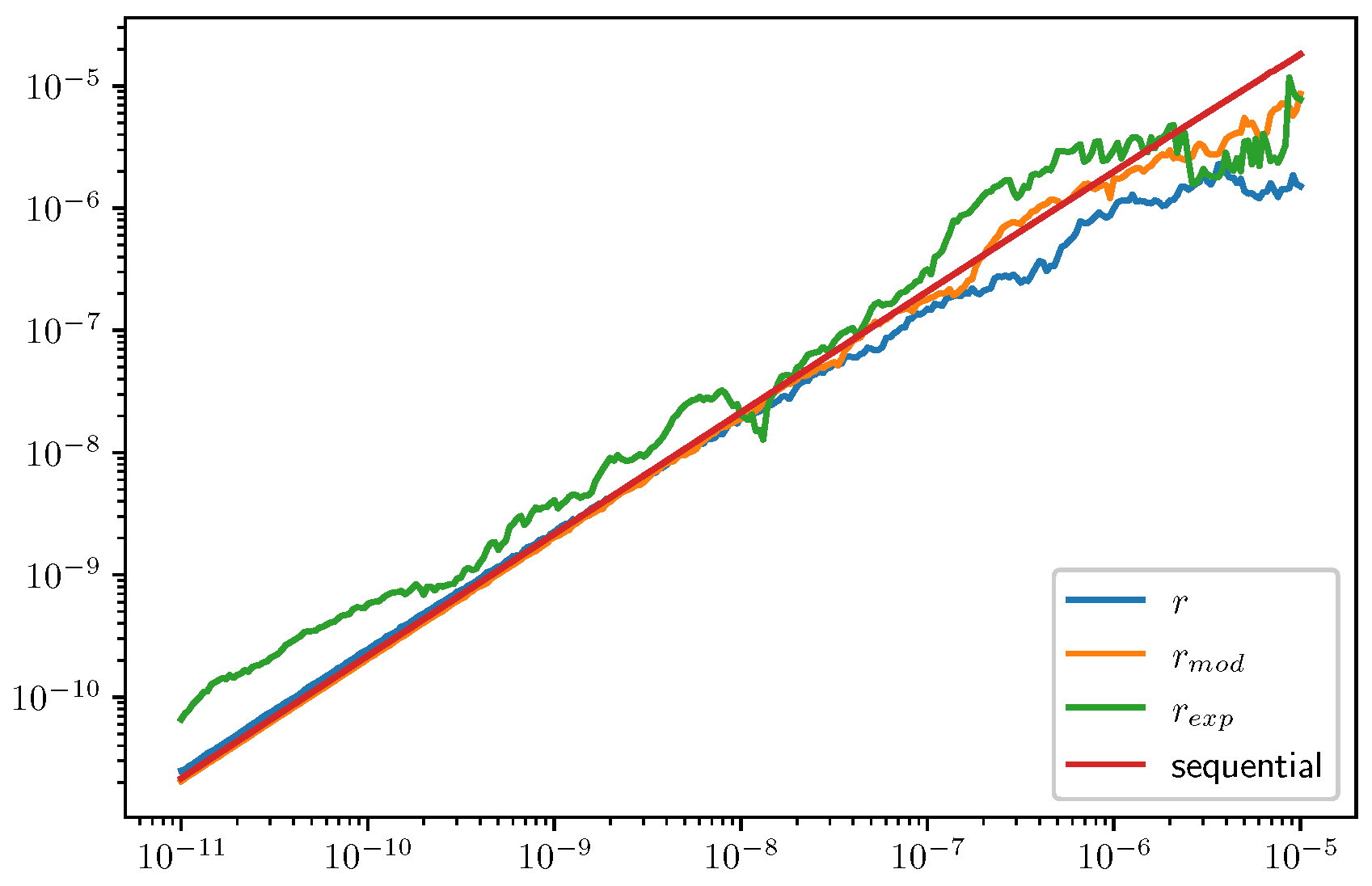

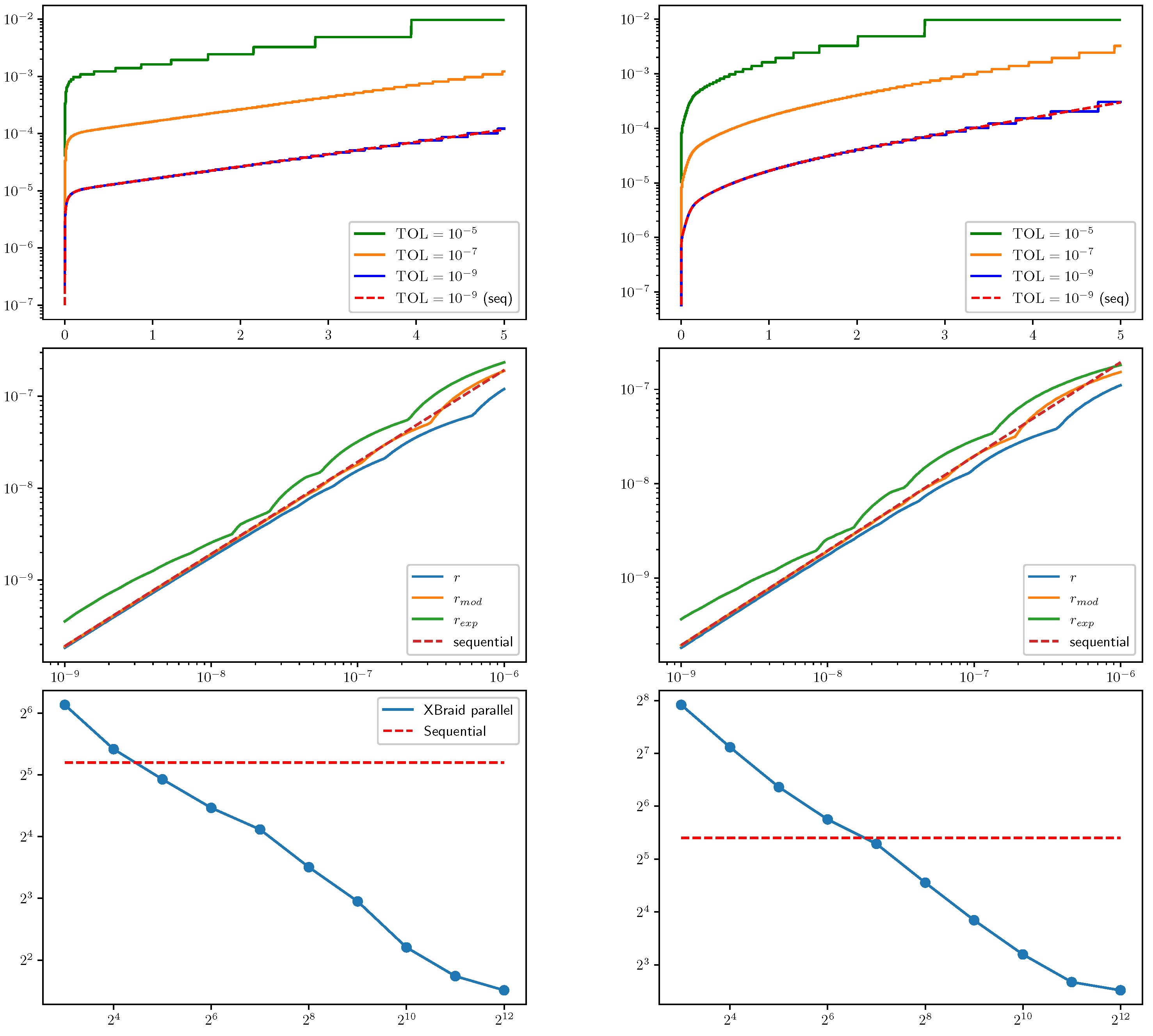

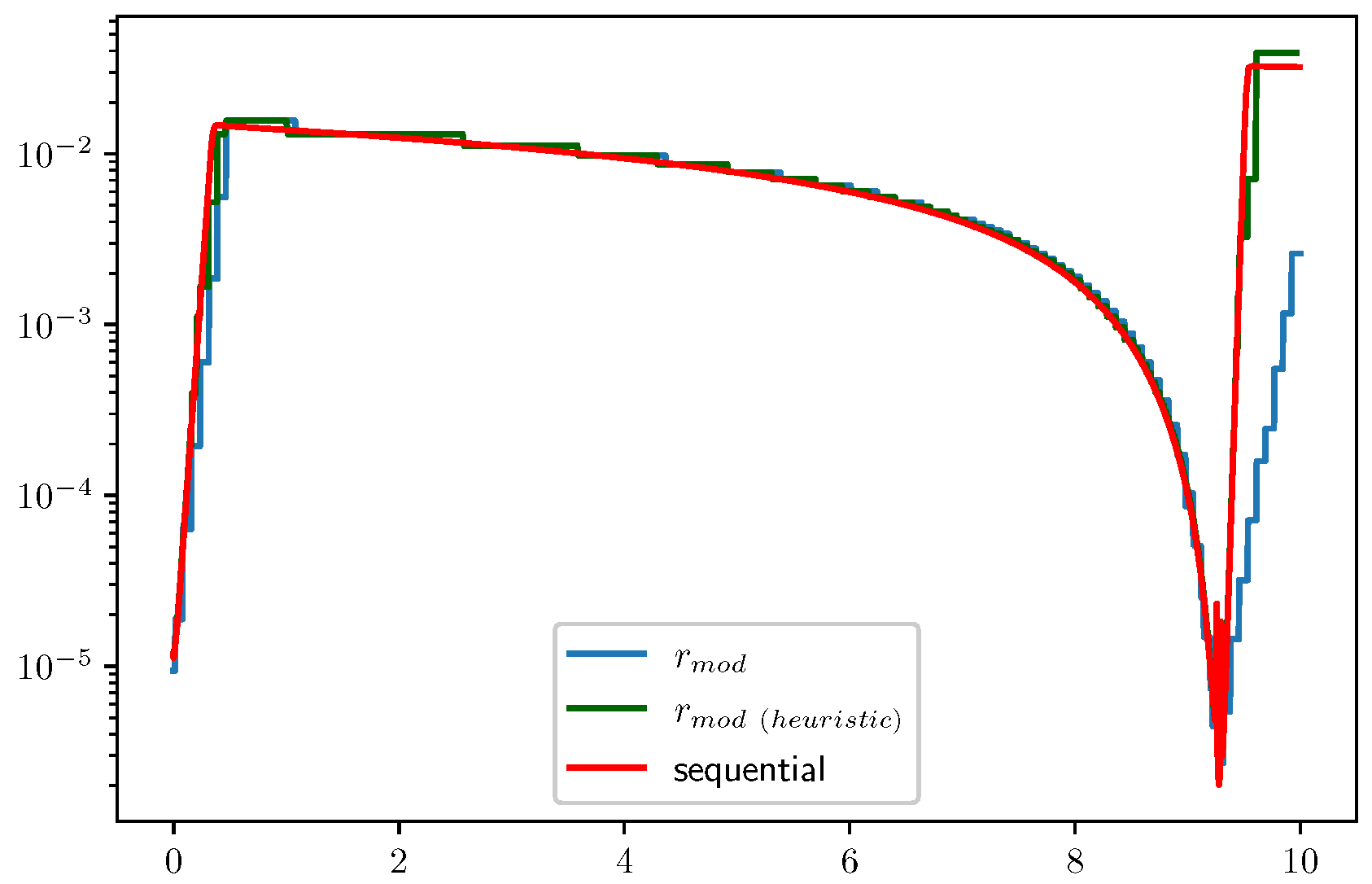

3.2. Results for Refinement Factors r, , and

3.2.1. The Final Algorithm with

- (A1)

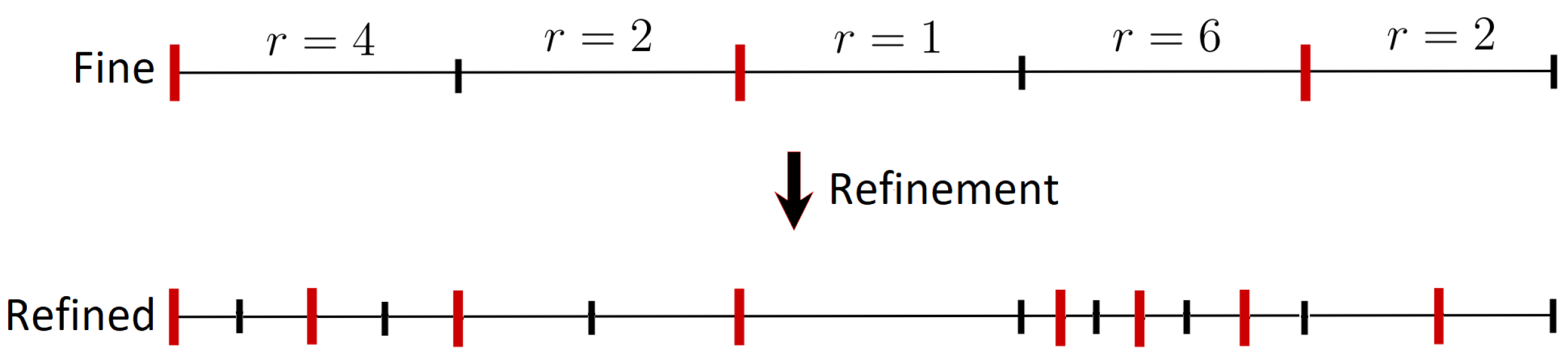

- Set an initial temporal grid and an initial guess of the solution (see Figure 3).

- (A2)

- Apply a given Runge–Kutta method in parallel from these initial values, and use multigrid cycles to improve the current approximation of the solution (see Figure 2).

- (A3)

- Determine the refinement factor for each subinterval on the finest grid based on (9).

- (A4)

- IF no refinement occurred and the accuracy criterion is satisfied then STOP.

- (A5)

- ELSE go to Step 2.
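The loop (A1)–(A5) can be sketched serially in Python. This sketch rests on several assumptions that do not come from the text. First, the parallel Runge–Kutta and multigrid solve of (A2) is replaced by a plain sequential RK4 sweep, which is the solution that MGRIT iterations converge to. Second, the local error is estimated by step doubling. Third, the refinement factor uses the hypothetical elementary rule r = ceil((err/tol)^(1/(p+1))) as a stand-in for (9), which is not reproduced here. The name `adaptive_refinement` and its parameters are illustrative only.

```python
import math


def rk4_step(f, t, y, h):
    """One classical RK4 step for y' = f(t, y)."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h / 2 * k1)
    k3 = f(t + h / 2, y + h / 2 * k2)
    k4 = f(t + h, y + h * k3)
    return y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def local_error(f, t, y, h):
    """Step-doubling estimate: one step of size h vs. two steps of size h/2."""
    one = rk4_step(f, t, y, h)
    half = rk4_step(f, t, y, h / 2)
    two = rk4_step(f, t + h / 2, half, h / 2)
    return abs(one - two)


def adaptive_refinement(f, y0, t0, t1, tol, n0=4, p=4, max_passes=20):
    # (A1) initial uniform temporal grid; the initial guess is just y0.
    grid = [t0 + (t1 - t0) * i / n0 for i in range(n0 + 1)]
    for _ in range(max_passes):
        # (A2) solve on the current grid. Serial stand-in for the parallel
        # RK + multigrid solve (MGRIT converges to this sequential solution).
        ys = [y0]
        for a, b in zip(grid[:-1], grid[1:]):
            ys.append(rk4_step(f, a, ys[-1], b - a))
        # (A3) refinement factor per subinterval (hypothetical rule, not (9)).
        new_grid, refined = [grid[0]], False
        for i, (a, b) in enumerate(zip(grid[:-1], grid[1:])):
            err = local_error(f, a, ys[i], b - a)
            r = max(1, math.ceil((err / tol) ** (1.0 / (p + 1))))
            refined = refined or r > 1
            new_grid.extend(a + (b - a) * k / r for k in range(1, r + 1))
        # (A4) here "no refinement" already means every estimated local
        # error is <= tol, so it doubles as the accuracy criterion.
        if not refined:
            return grid, ys
        # (A5) otherwise go back to (A2) on the refined grid.
        grid = new_grid
    raise RuntimeError("no converged grid within max_passes")
```

In this simplified version, the stopping test of (A4) and the accuracy criterion coincide because refinement is triggered exactly when an estimated local error exceeds the tolerance. With the actual rule (9) and the MGRIT solve of (A2), the two conditions may need to be checked separately.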

3.2.2. An Attempt with Exponential-Forgetting Filters

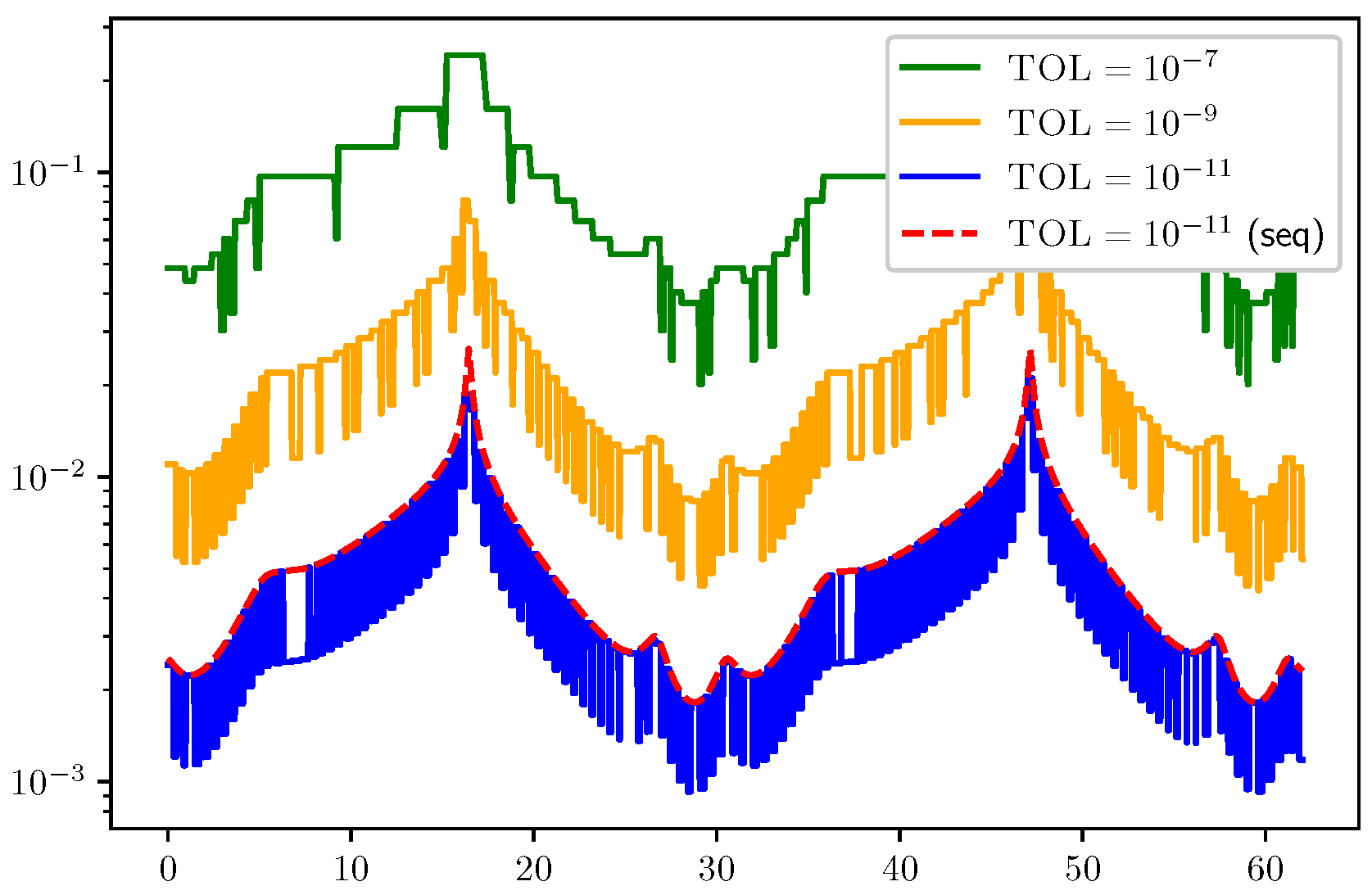

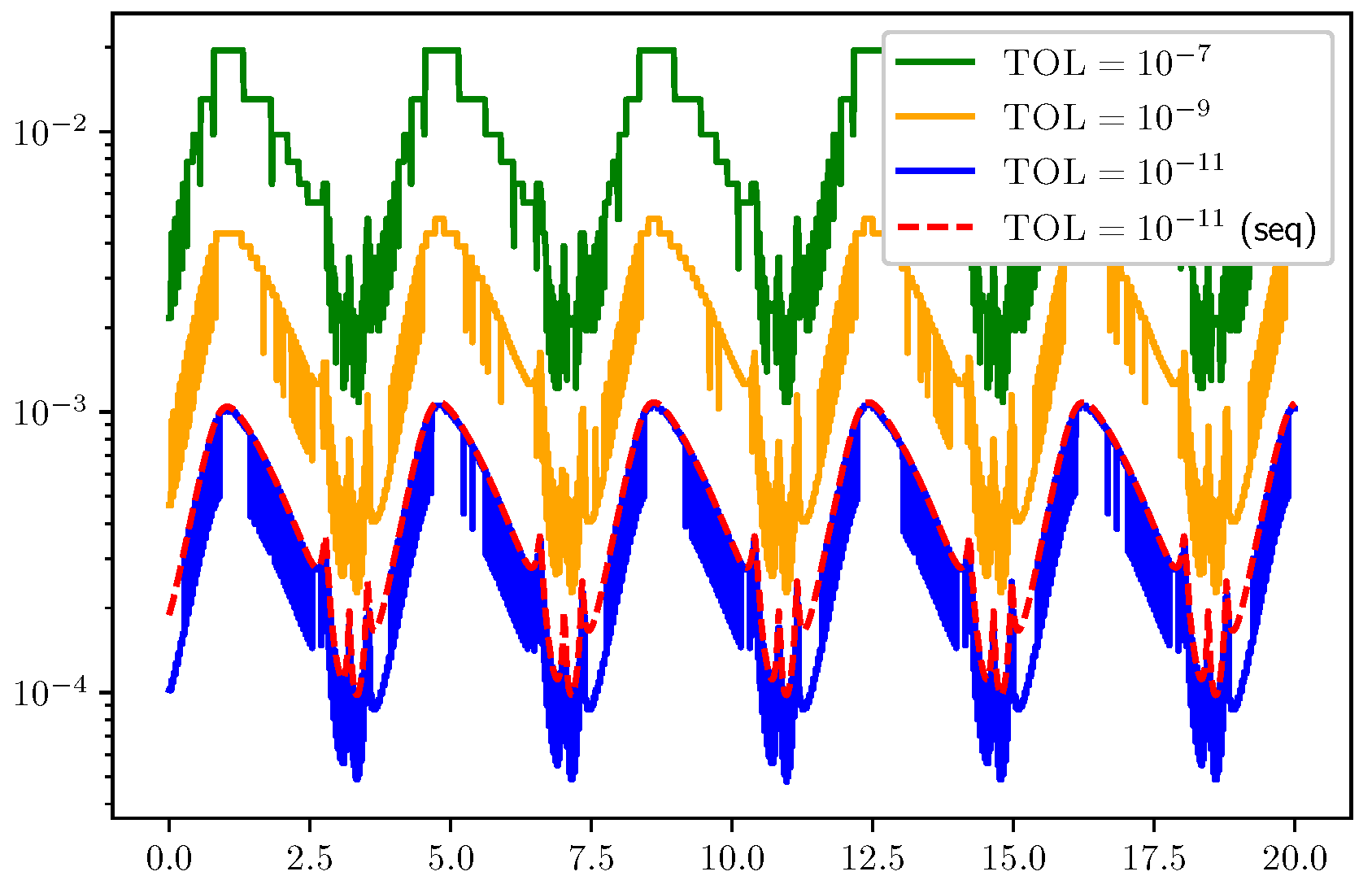

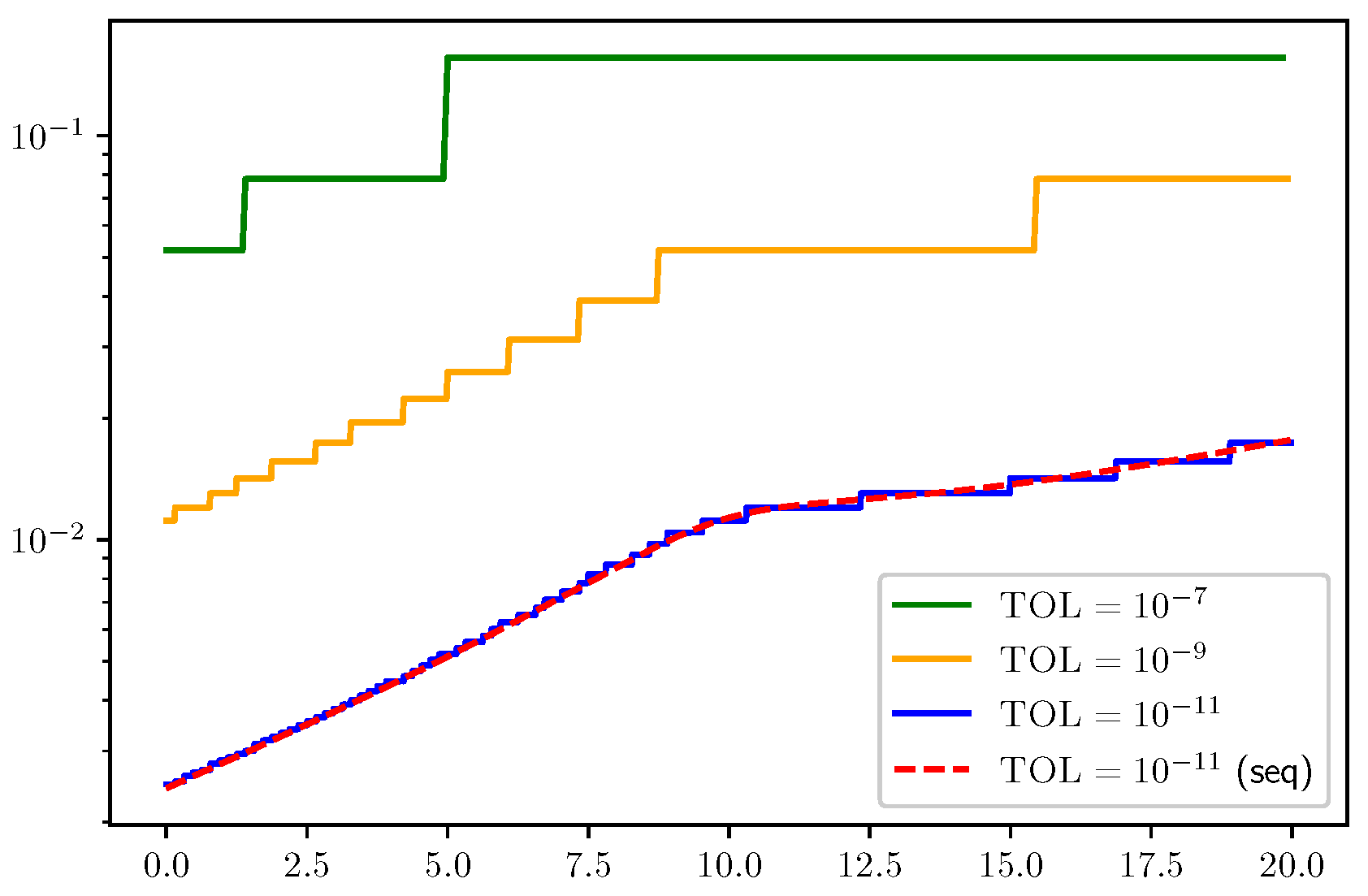

3.2.3. Tolerance Proportionality for the Algorithm (A1)–(A5)

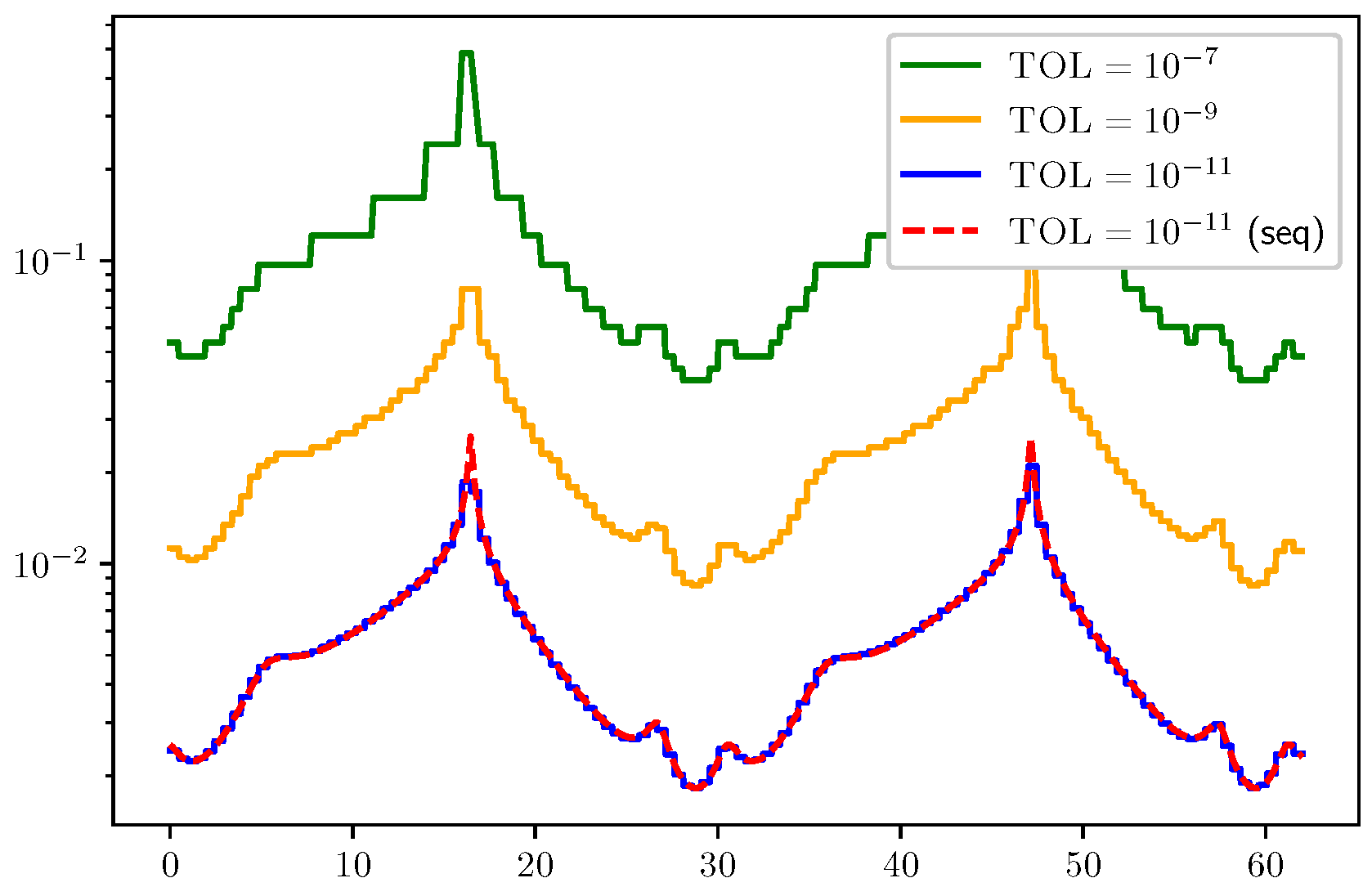

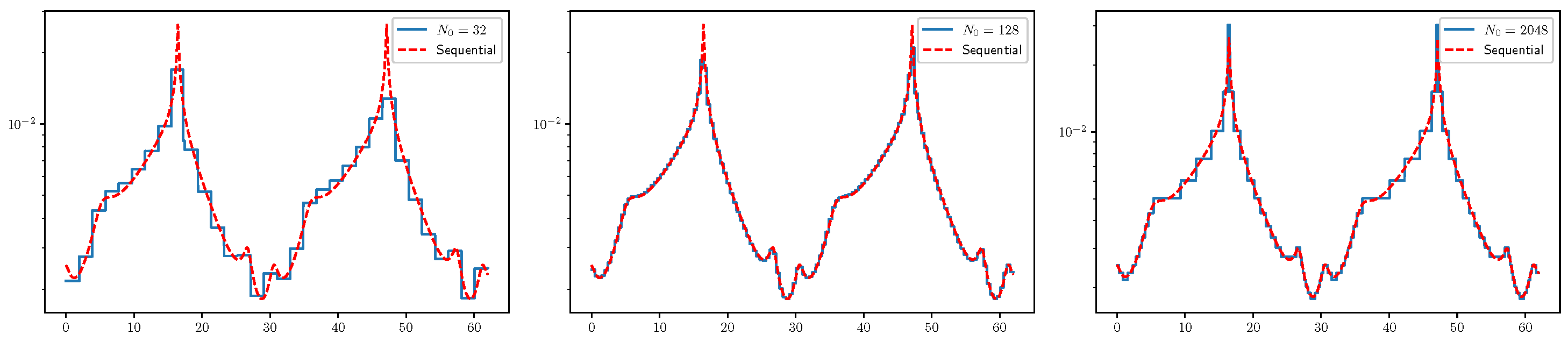

3.3. PDE Test Problems and Related Results

4. Discussion

4.1. Advantages

4.2. Limitations

4.3. Future Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| MGRIT | Multigrid reduction in time |
| ODE | Ordinary differential equation |
| PDE | Partial differential equation |
|  | Tolerance parameter |



© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fekete, I.; Izsák, F.; Kupás, V.P.; Söderlind, G. Tolerance Proportionality and Computational Stability in Adaptive Parallel-in-Time Runge–Kutta Methods. Algorithms 2025, 18, 484. https://doi.org/10.3390/a18080484
