A Hybrid CNN–GRU–LSTM Algorithm with SHAP-Based Interpretability for EEG-Based ADHD Diagnosis

Abstract

1. Introduction

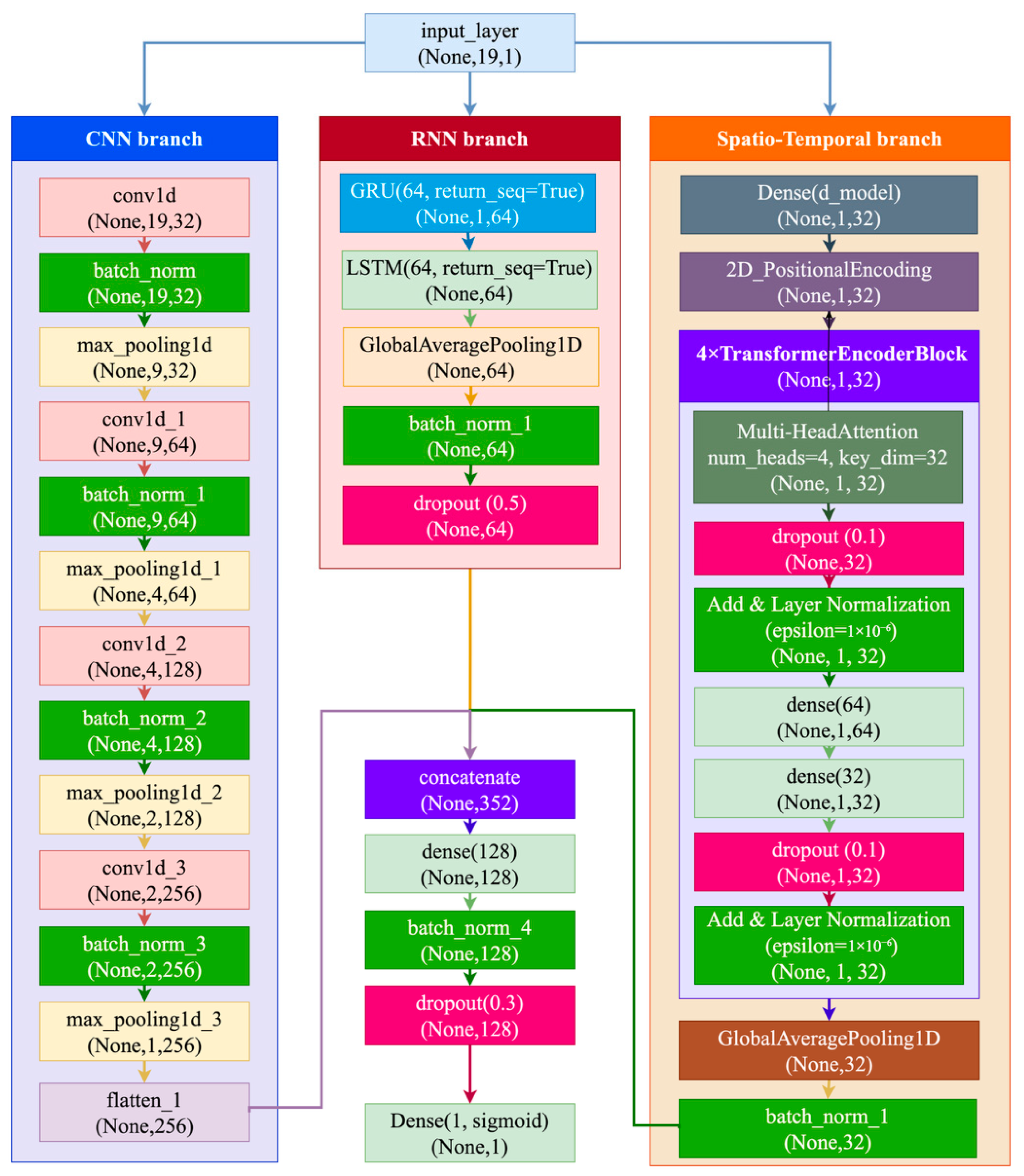

- Formulation of a hybrid deep learning architecture integrating CNN, GRU, and LSTM components for efficient extraction of spatial–temporal features from task-evoked EEG signals.

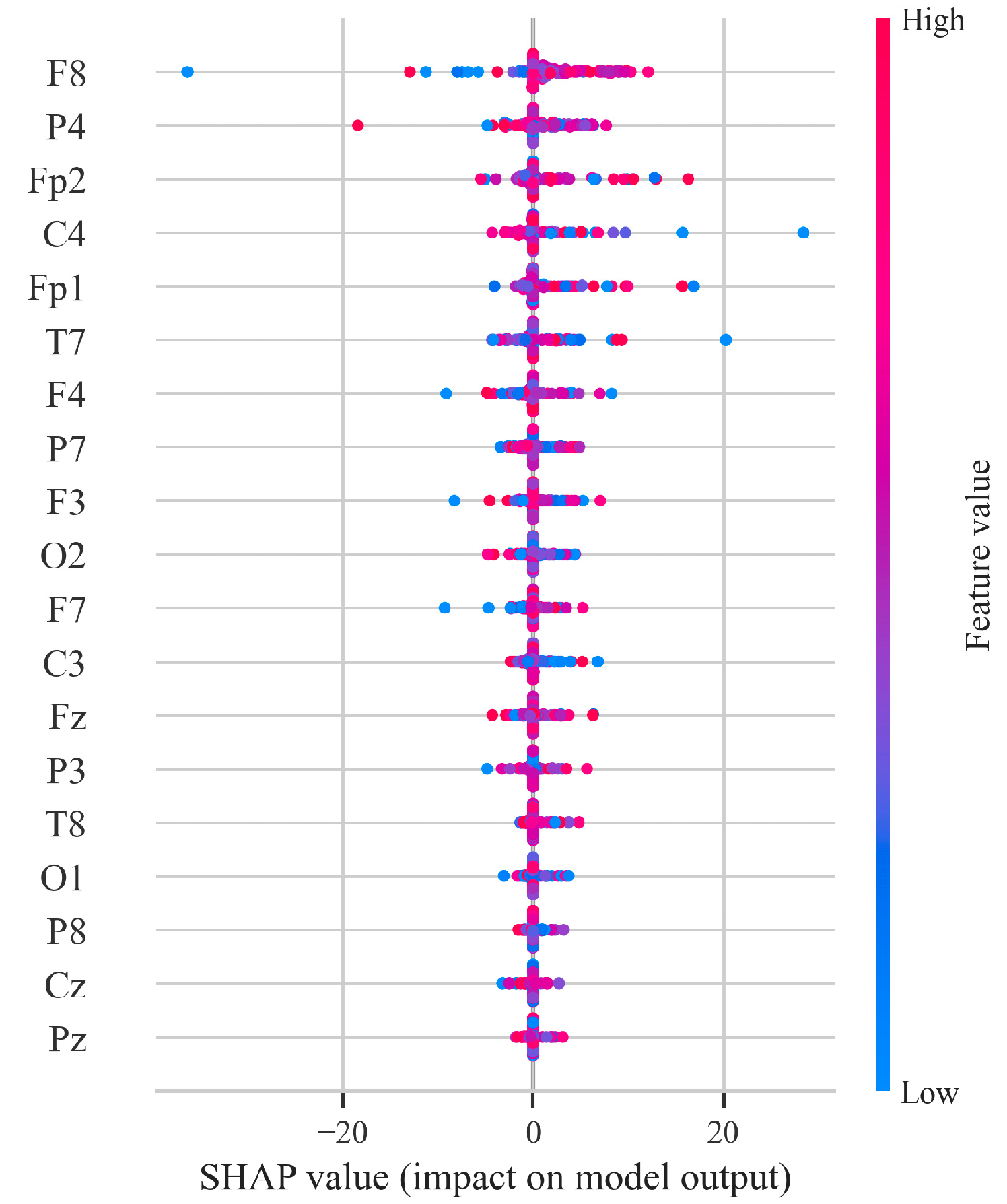

- Integration of SHAP-based interpretability to increase transparency and clinical trust by revealing the EEG channels with the greatest diagnostic importance.

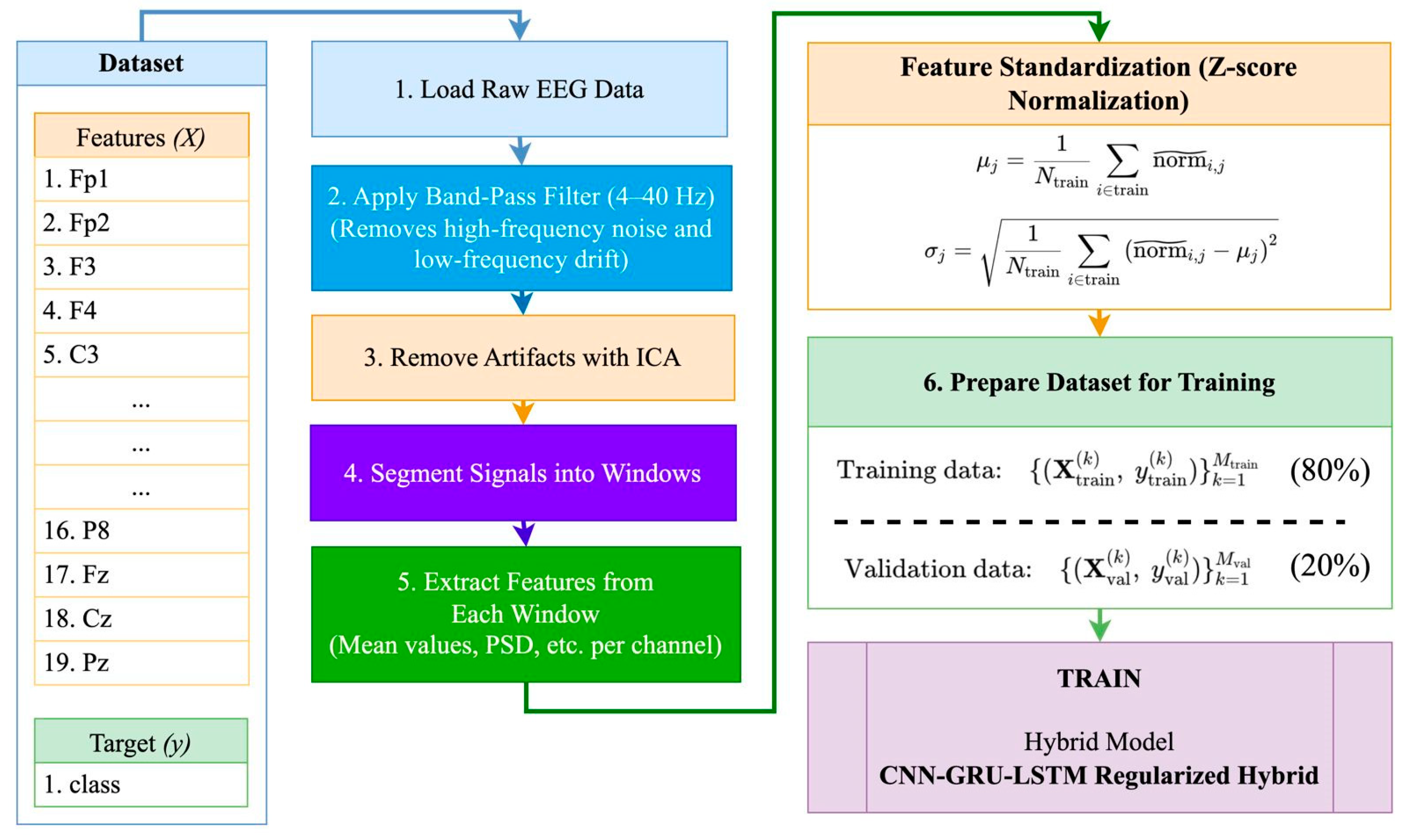

- Application of a stringent preprocessing pipeline (ICA-based artifact removal, z-score normalization, and event-related segmentation) designed specifically for pediatric EEG recordings obtained during cognitively engaging tasks.
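Two of the preprocessing steps named above, z-score normalization and event-related segmentation, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' pipeline: the function names `zscore` and `epoch`, the toy recording, the event positions, and the window lengths are all hypothetical, and ICA-based artifact removal is deliberately left out.

```python
from statistics import mean, pstdev


def zscore(channel):
    """Standardise one channel to zero mean and unit (population) variance."""
    mu = mean(channel)
    sigma = pstdev(channel)
    if sigma == 0:
        # A flat channel carries no variance; map it to zeros instead of dividing by 0.
        return [0.0 for _ in channel]
    return [(x - mu) / sigma for x in channel]


def epoch(recording, event_samples, pre, post):
    """Cut fixed-length windows around event onsets.

    recording: list of channels, each a list of samples of equal length.
    event_samples: sample indices of stimulus onsets (hypothetical markers).
    pre, post: number of samples kept before and after each onset.
    Events whose window would run past the recording edges are skipped.
    """
    n_samples = len(recording[0])
    epochs = []
    for onset in event_samples:
        start, stop = onset - pre, onset + post
        if start < 0 or stop > n_samples:
            continue
        epochs.append([ch[start:stop] for ch in recording])
    return epochs


# Toy recording: 2 channels x 10 samples (illustrative values only).
raw = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0],
    [2.0, 2.0, 4.0, 4.0, 6.0, 6.0, 8.0, 8.0, 10.0, 10.0],
]
normalised = [zscore(ch) for ch in raw]
segments = epoch(normalised, event_samples=[1, 4, 9], pre=2, post=3)
print(len(segments), len(segments[0]), len(segments[0][0]))
```

Only the event at sample 4 has a complete window in this toy recording, so a single epoch of 2 channels by 5 samples is returned. Normalising before or after segmentation is a design choice that the text above does not specify.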

2. Related Work

2.1. Deep Learning and EEG-Based ADHD Classification

2.2. Interpretability and Research Gaps

3. Materials and Methods

3.1. Dataset Collection

3.2. Rationale for Method Selection

3.3. Feature Importance and the Selection Process

4. Results

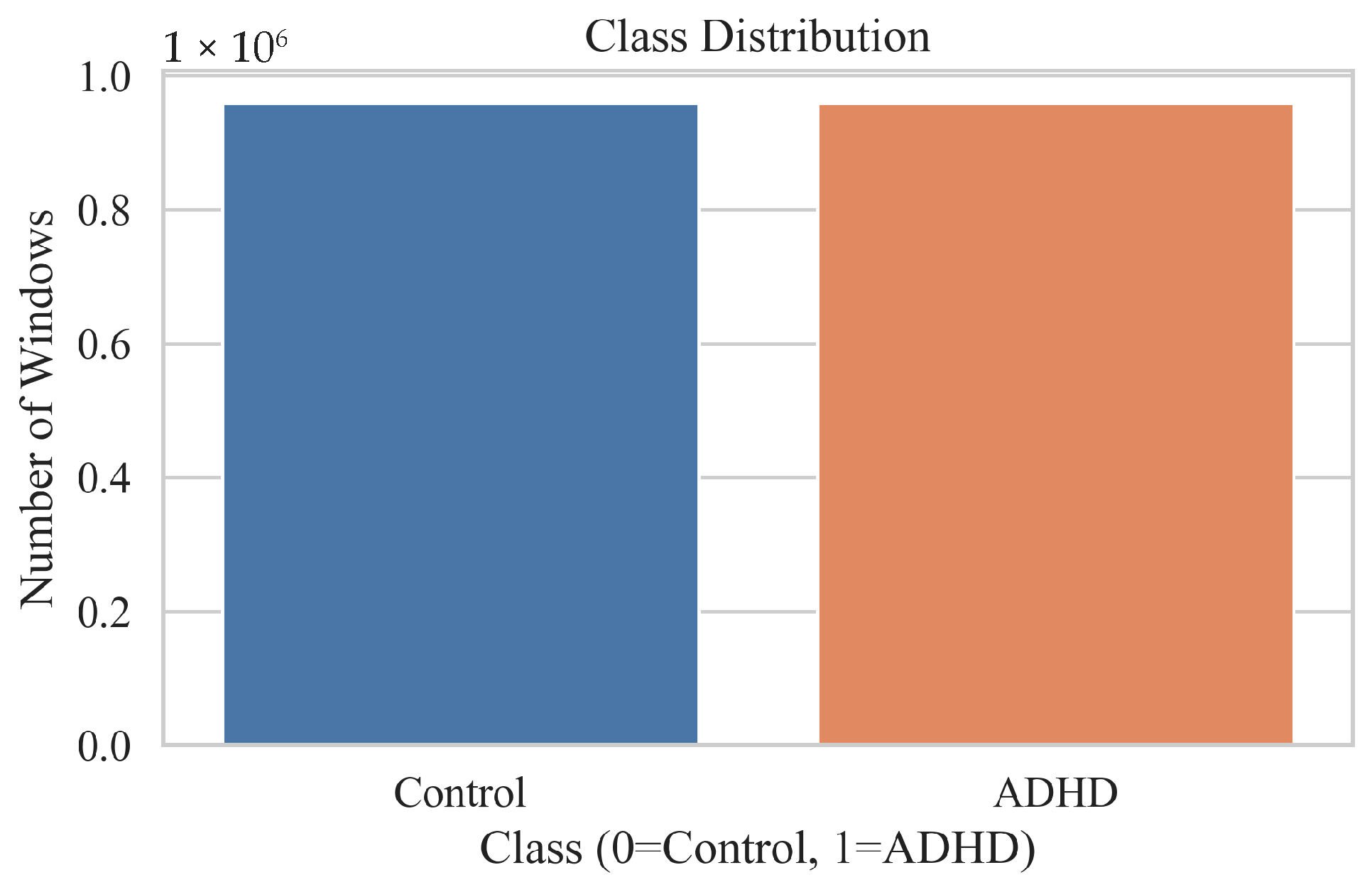

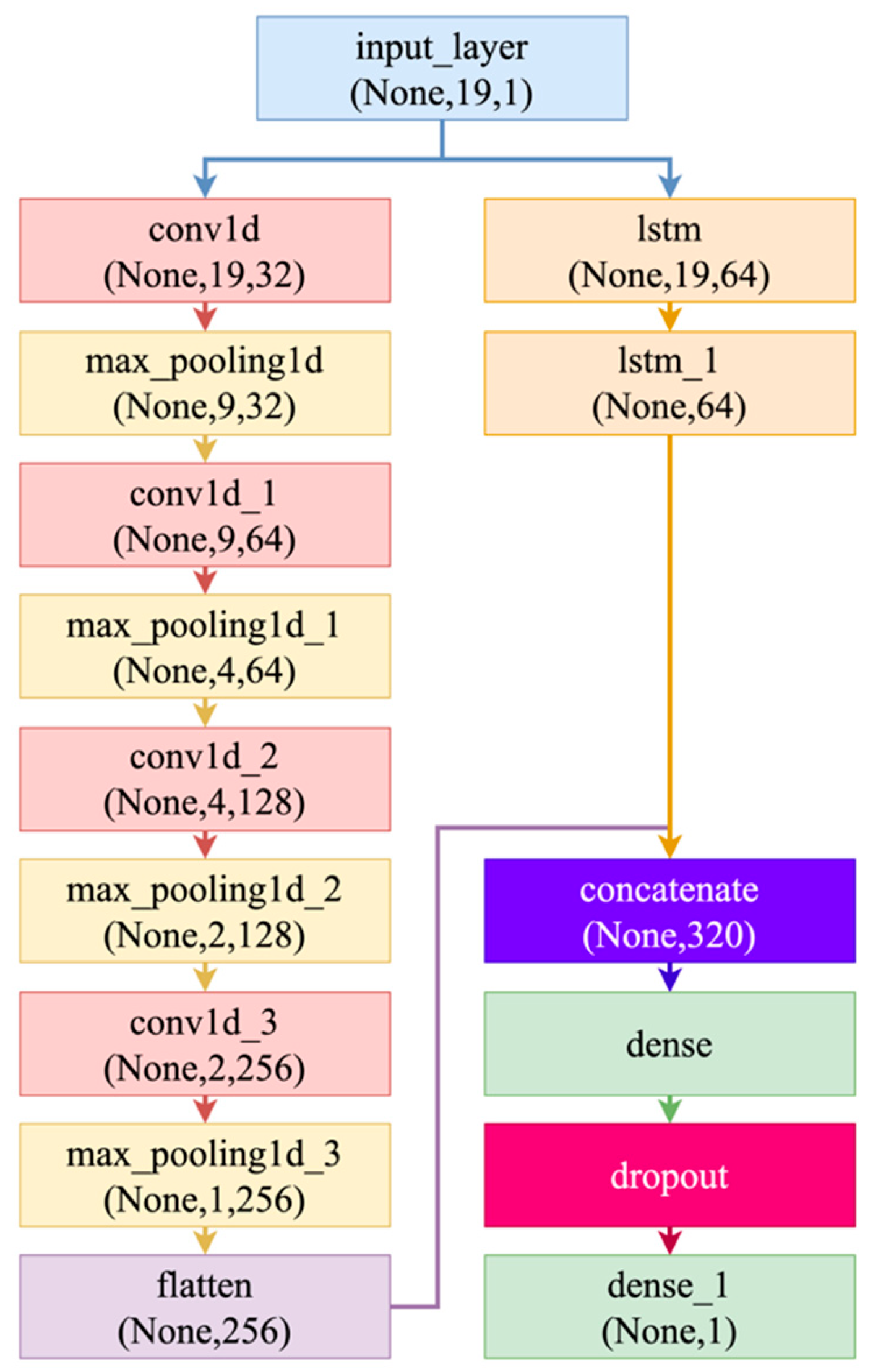

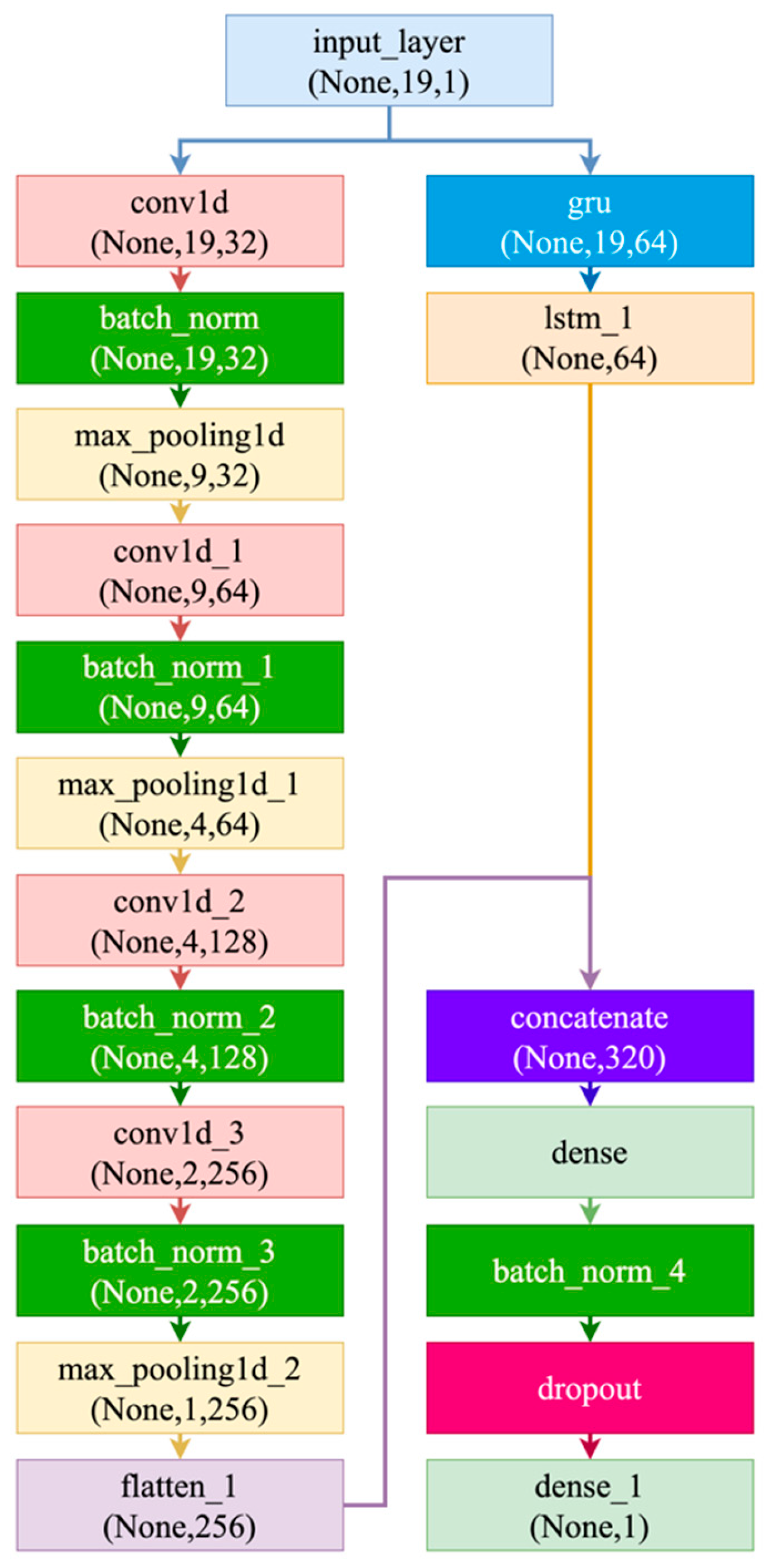

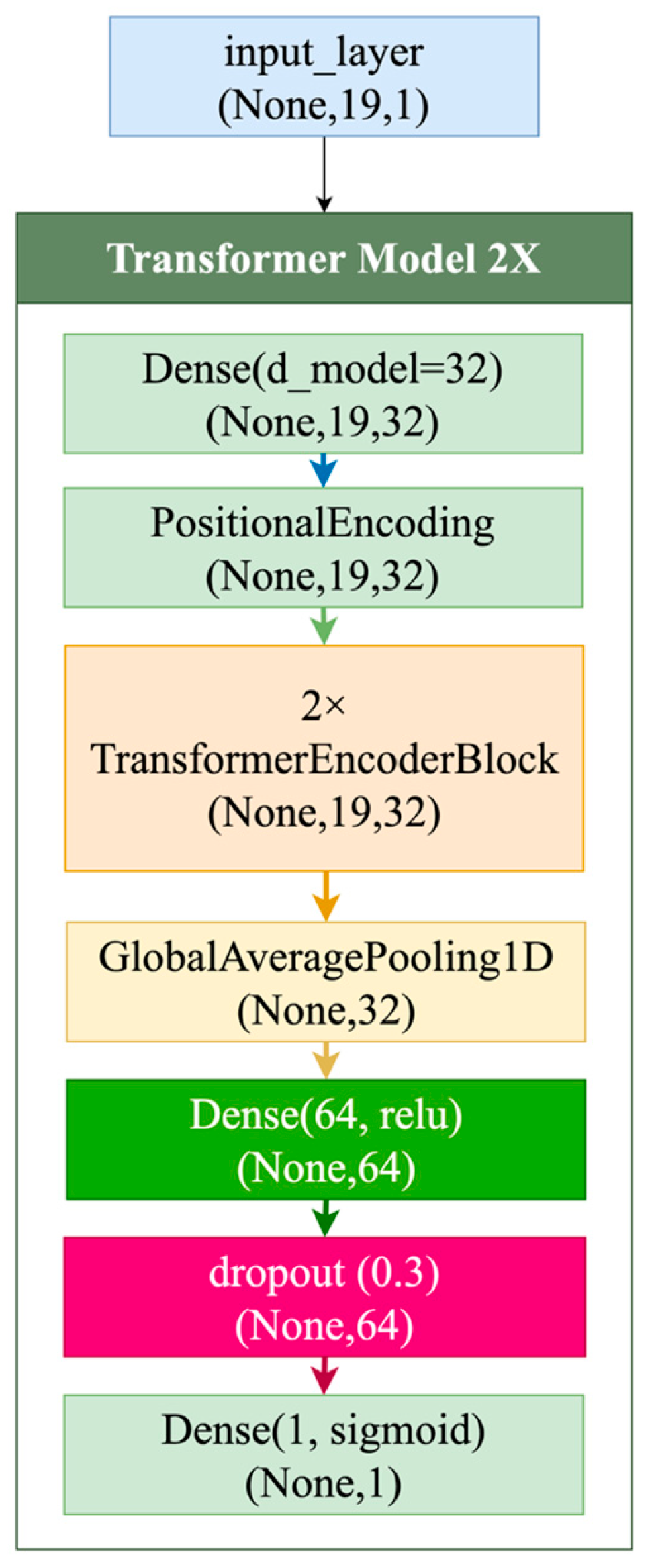

4.1. Exploratory Analysis and EEG Feature Visualization

4.2. Model Performance Metrics

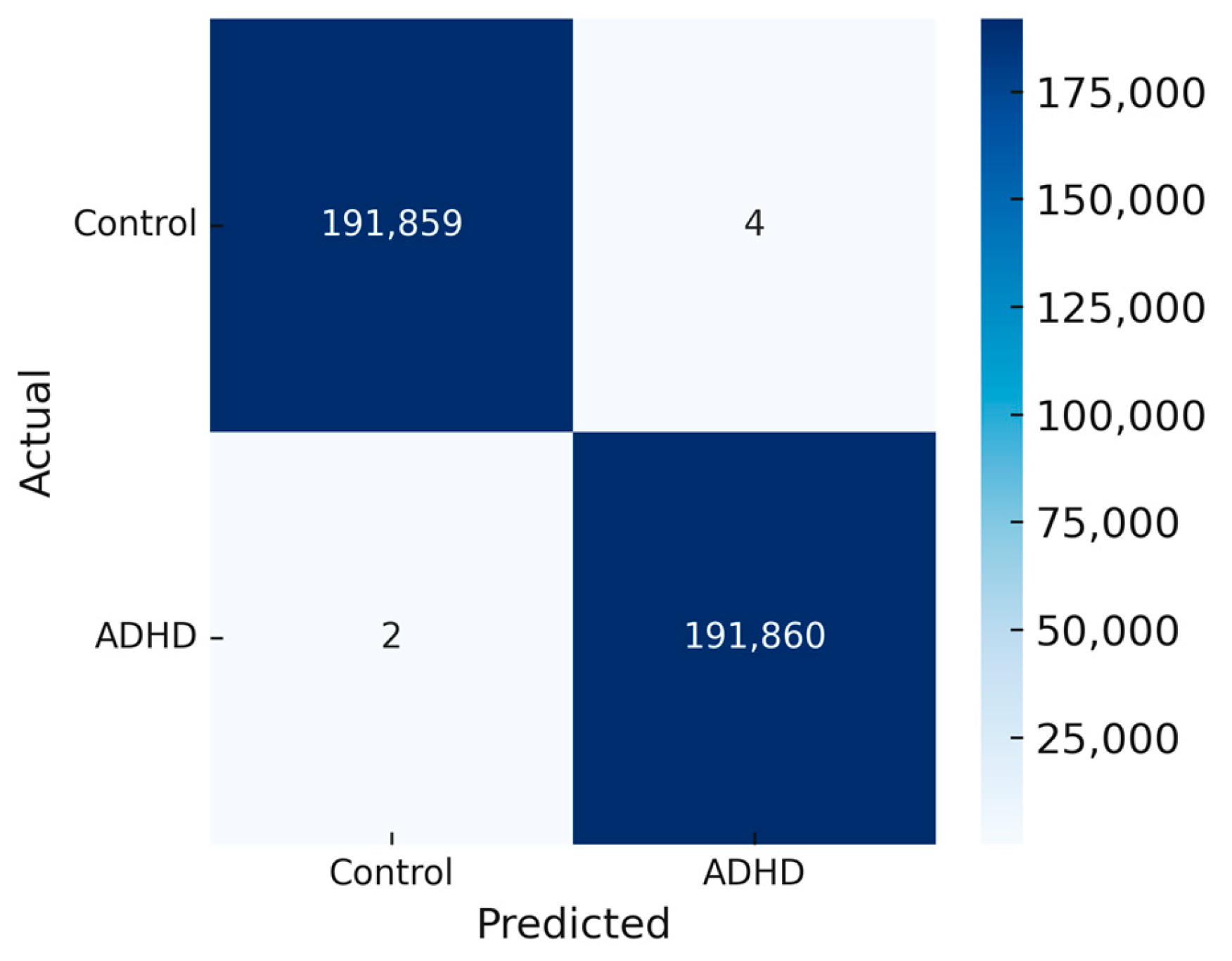

4.3. Confusion Matrix Analysis

4.4. SHAP-Based Feature Importance
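The idea behind SHAP attributions can be illustrated with an exact Shapley computation on a toy scoring function. The sketch below is an assumption-laden illustration, not the model or the `shap` library workflow used in the study: the three "channel" inputs, the `toy_score` function, and the all-zero baseline are hypothetical, and exhaustive enumeration of coalitions is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict(x) relative to predict(baseline).

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2**n, so this is for illustration only.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(len(others) + 1):
            # Shapley weight for coalitions of size k that exclude feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                present = set(subset)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


def toy_score(v):
    """Hypothetical score: two additive terms plus one interaction term."""
    return 0.5 * v[0] + 0.2 * v[1] + 0.3 * v[0] * v[2]


channels = ["ch_A", "ch_B", "ch_C"]  # placeholder names, not real electrode labels
x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_score, x, baseline)
for name, value in sorted(zip(channels, phi), key=lambda pair: -pair[1]):
    print(f"{name}: {value:.3f}")
```

The attributions sum to the difference between the score at `x` and the score at the baseline, and the interaction term is split equally between the two inputs that share it. Ranking inputs by attribution magnitude is how a channel-importance ordering would be read off.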

5. Discussion

5.1. Comparison with Previous Studies

5.2. Limitations and Challenges

5.3. Future Research Directions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADHD | Attention Deficit Hyperactivity Disorder |
| EEG | Electroencephalography |
| SHAP | SHapley Additive exPlanations |
| ICA | Independent Component Analysis |
| PCA | Principal Component Analysis |
| TD | Typically Developing |
| API | Application Programming Interface |
| PSD | Power Spectral Density |
| ROC | Receiver Operating Characteristic |
| ReLU | Rectified Linear Unit |
| DL | Deep Learning |
| LSTM | Long Short-Term Memory |
| GRU | Gated Recurrent Unit |

References

1. Polanczyk, G.V.; Salum, G.A.; Sugaya, L.S.; Caye, A.; Rohde, L.A. Annual Research Review: A Meta-Analysis of the Worldwide Prevalence of Mental Disorders in Children and Adolescents. J. Child Psychol. Psychiatry 2015, 56, 345–365.
2. Faraone, S.V.; Banaschewski, T.; Coghill, D.; Zheng, Y.; Biederman, J.; Bellgrove, M.A.; Rohde, L.A. The World Federation of ADHD International Consensus Statement: 208 Evidence-Based Conclusions about the Disorder. Neurosci. Biobehav. Rev. 2021, 128, 789–818.
3. French, B.; Sayal, K.; Daley, D. Barriers and Facilitators to Understanding of ADHD in Primary Care: A Mixed-Method Systematic Review. Eur. Child Adolesc. Psychiatry 2019, 28, 1037–1064.
4. Johnstone, S.J.; Jiang, H.; Sun, L.; Rogers, J.M.; Valderrama, J.; Zhang, D. Development of Frontal EEG Differences between Eyes-Closed and Eyes-Open Resting Conditions in Children: Data from a Single-Channel Dry-Sensor Portable Device. Clin. EEG Neurosci. 2021, 52, 235–245.
5. Liu, X.; Sun, L.; Zhang, D.; Wang, S.; Hu, S.; Fang, B.; Wang, S. Phase-Amplitude Coupling Brain Networks in Children with Attention-Deficit/Hyperactivity Disorder. Clin. EEG Neurosci. 2022, 53, 399–405.
6. Gartstein, M.A.; Hancock, G.R.; Potapova, N.V.; Calkins, S.D.; Bell, M.A. Modeling Development of Frontal Electroencephalogram (EEG) Asymmetry: Sex Differences and Links with Temperament. Dev. Sci. 2020, 23, e12891.
7. Neurofeedback Collaborative Group. Neurofeedback for Attention-Deficit/Hyperactivity Disorder: 25-Month Follow-Up of Double-Blind Randomized Controlled Trial. J. Am. Acad. Child Adolesc. Psychiatry 2023, 62, 435–446.
8. Roy, Y.; Banville, H.; Albuquerque, I.; Gramfort, A.; Falk, T.H.; Faubert, J. Deep Learning-Based Electroencephalography Analysis: A Systematic Review. J. Neural Eng. 2019, 16, 051001.
9. Craik, A.; He, Y.; Contreras-Vidal, J.L. Deep Learning for Electroencephalogram (EEG) Classification Tasks: A Review. J. Neural Eng. 2019, 16, 031001.
10. Chugh, N.; Aggarwal, S.; Balyan, A. The Hybrid Deep Learning Model for Identification of Attention-Deficit/Hyperactivity Disorder Using EEG. Clin. EEG Neurosci. 2024, 55, 22–33.
11. Maniruzzaman, M.; Hasan, M.A.M.; Asai, N.; Shin, J. Optimal channels and features selection based ADHD detection from EEG signal using statistical and machine learning techniques. IEEE Access 2023, 11, 33570–33583.
12. Altun, S.; Alkan, A.; Altun, H. Automatic diagnosis of attention deficit hyperactivity disorder with continuous wavelet transform and convolutional neural network. Clin. Psychopharmacol. Neurosci. 2022, 20, 715.
13. Jahani, H.; Safaei, A.A. Efficient deep learning approach for diagnosis of attention-deficit/hyperactivity disorder in children based on EEG Signals. Cogn. Comput. 2024, 16, 2315–2330.
14. Khare, S.K.; Acharya, U.R. An explainable and interpretable model for attention deficit hyperactivity disorder in children using EEG signals. Comput. Biol. Med. 2023, 155, 106676.
15. Manjunatha, H.; Esfahani, E.T. Extracting interpretable EEG features from a deep learning model to assess the quality of human-robot co-manipulation. In Proceedings of the 2021 10th International IEEE/EMBS Conference on Neural Engineering (NER), Online, 4–6 May 2021; pp. 339–342.
16. KR, A.B.; Srinivasan, S.; Mathivanan, S.K.; Venkatesan, M.; Malar, B.A.; Mallik, S.; Qin, H. A multi-dimensional hybrid CNN-BiLSTM framework for epileptic seizure detection using electroencephalogram signal scrutiny. Syst. Soft Comput. 2023, 5, 200062.
17. Yang, D.; Liu, Y.; Zhou, Z.; Yu, Y.; Liang, X. Decoding visual motions from EEG using attention-based RNN. Appl. Sci. 2020, 10, 5662.
18. Omar, S.M.; Kimwele, M.; Olowolayemo, A.; Kaburu, D.M. Enhancing EEG signals classification using LSTM-CNN architecture. Eng. Rep. 2024, 6, e12827.
19. Azami, H.; Mirjalili, M.; Rajji, T.K.; Wu, C.T.; Humeau-Heurtier, A.; Jung, T.P.; Liu, Y.H. Electroencephalogram and Event-Related Potential in Mild Cognitive Impairment: Recent Developments in Signal Processing, Machine Learning, and Deep Learning. IEEE J. Sel. Areas Sensors 2025, 2, 162–184.
20. Zhang, Z.; Li, X. Use transfer learning to promote identification ADHD children with EEG recordings. In Proceedings of the 2019 Chinese Automation Congress (CAC), Hangzhou, China, 22–24 November 2019; IEEE: New York, NY, USA, 2019; pp. 2809–2813.

| Ref | Study Focus | Methods | Key Findings | Identified Gaps |
|---|---|---|---|---|
| [11] | Entropy-based feature extraction | Random forest classifier | Achieved 97.53% accuracy | No interpretability; lacks temporal modeling |
| [12] | Time–frequency EEG representation for ADHD | Wavelet transform + deep CNN | Robust session-level accuracy | Spatial focus only; no temporal dynamics |
| [13] | Neuro-motor pattern analysis | Two-dimensional tracking + CNN | Identified motor planning deficits | EEG used indirectly; no EEG dynamics analyzed |
| [14] | Explainable AI in ADHD detection | SHAP, LIME, saliency maps | Enhanced clinician interpretability | Not combined with deep models |
| [15] | Mental state classification with explainable DL | CNN + attention + SHAP | Achieved interpretable 91% accuracy | General mental state decoding, not ADHD-specific |
| [16] | Spatiotemporal hybrid modeling in ADHD | CNN + BiLSTM | Outperformed conventional CNN and LSTM | No interpretability; no SHAP |
| [17] | Attention-based neural decoding | CNN + GRU + Attention | Improved detection of task-relevant EEG activity | Focused on general cognition, not ADHD |
| [18] | Importance of preprocessing in EEG classification | ICA + CNN-LSTM | Filtering and epoching improved model performance | No real-time classification |
| [19] | Task-based EEG learning for ADHD | Cognitive task EEG + CNN | Task-evoked EEG provided better discrimination | No interpretability features included |
| [20] | Transfer learning in EEG ADHD classification | Pretrained CNN + fine-tuning | Reduced training time with high accuracy (93%) | Requires pretraining on large datasets |

| Model | Fold | Accuracy (%) | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|---|
| CNN–LSTM | Fold 1 | 91.20 | 0.89 | 0.92 | 0.905 | 0.934 |
| CNN–LSTM | Fold 2 | 92.50 | 0.91 | 0.93 | 0.920 | 0.945 |
| CNN–LSTM | Fold 3 | 93.10 | 0.92 | 0.94 | 0.930 | 0.948 |
| CNN–LSTM | Fold 4 | 91.80 | 0.90 | 0.92 | 0.910 | 0.938 |
| CNN–LSTM | Fold 5 | 93.20 | 0.91 | 0.95 | 0.930 | 0.941 |
| CNN–GRU–LSTM | Fold 1 | 99.65 | 0.9980 | 0.9949 | 0.9963 | 0.9998 |
| CNN–GRU–LSTM | Fold 2 | 99.63 | 0.9987 | 0.9940 | 0.9962 | 0.9999 |
| CNN–GRU–LSTM | Fold 3 | 99.65 | 0.9976 | 0.9954 | 0.9965 | 0.9998 |
| CNN–GRU–LSTM | Fold 4 | 99.56 | 0.9987 | 0.9926 | 0.9956 | 0.9996 |
| CNN–GRU–LSTM | Fold 5 | 99.68 | 0.9991 | 0.9945 | 0.9968 | 0.9999 |
| Transformer Encoder (2×) | Fold 1 | 75.61 | 0.7702 | 0.7300 | 0.4239 | 0.8450 |
| Transformer Encoder (2×) | Fold 2 | 76.70 | 0.9228 | 0.5827 | 0.3692 | 0.8547 |
| Transformer Encoder (2×) | Fold 3 | 72.85 | 0.7898 | 0.6228 | 0.3859 | 0.8126 |
| Transformer Encoder (2×) | Fold 4 | 77.00 | 0.8004 | 0.7194 | 0.4202 | 0.8570 |
| Transformer Encoder (2×) | Fold 5 | 77.10 | 0.7830 | 0.7650 | 0.4465 | 0.8570 |
| Transformer Encoder (3×) | Fold 1 | 73.58 | 0.7527 | 0.7024 | 0.4138 | 0.8158 |
| Transformer Encoder (3×) | Fold 2 | 70.58 | 0.7030 | 0.7128 | 0.4182 | 0.7838 |
| Transformer Encoder (3×) | Fold 3 | 76.74 | 0.7771 | 0.7499 | 0.4305 | 0.8547 |
| Transformer Encoder (3×) | Fold 4 | 75.55 | 0.8361 | 0.6356 | 0.3902 | 0.8407 |
| Transformer Encoder (3×) | Fold 5 | 74.84 | 0.7723 | 0.7123 | 0.4210 | 0.8301 |
| Spatiotemporal Transformer | Fold 1 | 99.97 | 1.0000 | 0.9995 | 0.9997 | 0.9999 |
| Spatiotemporal Transformer | Fold 2 | 99.98 | 0.9998 | 0.9996 | 0.9997 | 0.9997 |
| Spatiotemporal Transformer | Fold 3 | 99.98 | 1.0000 | 0.9999 | 0.9999 | 1.0000 |
| Spatiotemporal Transformer | Fold 4 | 99.98 | 1.0000 | 0.9998 | 0.9999 | 0.9997 |
| Spatiotemporal Transformer | Fold 5 | 99.99 | 1.0000 | 0.9999 | 0.9999 | 1.0000 |
| Hybrid CNN–RNN–Transformer | Fold 1 | 99.98 | 1.0000 | 0.9998 | 0.9999 | 1.0000 |
| Hybrid CNN–RNN–Transformer | Fold 2 | 99.98 | 1.0000 | 0.9999 | 0.9999 | 1.0000 |
| Hybrid CNN–RNN–Transformer | Fold 3 | 99.99 | 1.0000 | 0.9999 | 0.9999 | 1.0000 |
| Hybrid CNN–RNN–Transformer | Fold 4 | 99.98 | 1.0000 | 0.9999 | 0.9999 | 1.0000 |
| Hybrid CNN–RNN–Transformer | Fold 5 | 99.99 | 1.0000 | 0.9999 | 0.9999 | 1.0000 |
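Per-fold results such as those above are usually condensed into a mean ± standard deviation per model. The sketch below does this for the CNN–GRU–LSTM recall column, with values copied from the table. The source does not state whether the population or the sample standard deviation was reported, so both are computed here; that choice, and the variable names, are assumptions of this sketch.

```python
from statistics import mean, pstdev, stdev

# CNN–GRU–LSTM recall for folds 1 to 5, copied from the per-fold table.
recall_by_fold = [0.9949, 0.9940, 0.9954, 0.9926, 0.9945]

fold_mean = mean(recall_by_fold)
spread_population = pstdev(recall_by_fold)  # divides by n
spread_sample = stdev(recall_by_fold)       # divides by n - 1

print(f"recall = {fold_mean:.3f} ± {spread_population:.4f} (population SD)")
print(f"recall = {fold_mean:.3f} ± {spread_sample:.4f} (sample SD)")
```

With only five folds the two estimators differ visibly in the last reported digit, so stating which one is used avoids ambiguity when tables are compared.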

| Model | Accuracy (%) | Precision | Recall | F1-Score | AUC |
|---|---|---|---|---|---|
| CNN–LSTM | 92.36 ± 0.79 | 0.906 ± 0.011 | 0.932 ± 0.011 | 0.919 ± 0.010 | 0.941 ± 0.005 |
| CNN–GRU–LSTM | 99.63 ± 0.04 | 0.998 ± 0.0006 | 0.994 ± 0.0011 | 0.996 ± 0.0004 | 0.9998 ± 0.0001 |
| Transformer Encoder (2×) | 75.85 ± 1.59 | 0.813 ± 0.0556 | 0.684 ± 0.0692 | 0.409 ± 0.0278 | 0.845 ± 0.0169 |
| Transformer Encoder (3×) | 74.26 ± 2.11 | 0.768 ± 0.0430 | 0.703 ± 0.0372 | 0.415 ± 0.0134 | 0.825 ± 0.0242 |
| Spatiotemporal Transformer | 99.88 ± 0.11 | 0.9996 ± 0.0001 | 0.9997 ± 0.0001 | 0.9998 ± 0.0001 | 0.9999 ± 0.00015 |
| Hybrid CNN + GRU + LSTM | 99.63 ± 0.04 | 0.998 ± 0.0005 | 0.994 ± 0.0010 | 0.996 ± 0.0004 | 0.9999 ± 0.0000 |
| Hybrid CNN–RNN–Transformer | 99.99 ± 0.005 | 1.0000 ± 0.0000 | 0.9999 ± 0.00004 | 0.9999 ± 0.00003 | 1.0000 ± 0.0000 |

| Model | Specificity | Balanced Accuracy | Cohen’s Kappa | MCC |
|---|---|---|---|---|
| CNN–LSTM | 0.9962 | 0.9905 | 0.9810 | 0.9811 |
| CNN–GRU–LSTM | 0.9991 | 0.9968 | 0.9936 | 0.9936 |
| Transformer Encoder (2×) | 0.8410 | 0.7625 | 0.519 | 0.531 |
| Transformer Encoder (3×) | 0.7880 | 0.7453 | 0.4906 | 0.4924 |
| Spatiotemporal Transformer | 0.9988 | 0.9999 | 0.9998 | 0.9998 |
| Hybrid CNN–RNN–Transformer | 0.999995 | 0.999943 | 0.999886 | 0.999886 |
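The four metrics in this table all follow from a binary confusion matrix. The sketch below uses the standard definitions of specificity, balanced accuracy, Cohen's kappa, and the Matthews correlation coefficient (MCC). The function name `binary_metrics` and the example counts are hypothetical and are not taken from the study's confusion matrices.

```python
from math import sqrt


def binary_metrics(tp, fn, fp, tn):
    """Derive agreement-style metrics from binary confusion-matrix counts."""
    n = tp + fn + fp + tn
    sensitivity = tp / (tp + fn)  # recall on the positive class
    specificity = tn / (tn + fp)  # recall on the negative class
    accuracy = (tp + tn) / n
    balanced_accuracy = (sensitivity + specificity) / 2
    # Agreement expected by chance, from the row and column marginals.
    p_chance = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / n**2
    kappa = (accuracy - p_chance) / (1 - p_chance)
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "specificity": specificity,
        "balanced_accuracy": balanced_accuracy,
        "kappa": kappa,
        "mcc": mcc,
    }


# Hypothetical counts for illustration only.
m = binary_metrics(tp=90, fn=10, fp=5, tn=95)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

Kappa and MCC both correct for chance agreement, which is why they sit well below raw accuracy when a classifier is only moderately better than guessing.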

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Baibulova, M.; Aitimov, M.; Burganova, R.; Abdykerimova, L.; Sabirova, U.; Seitakhmetova, Z.; Uvaliyeva, G.; Orynbassar, M.; Kassekeyeva, A.; Kassim, M. A Hybrid CNN–GRU–LSTM Algorithm with SHAP-Based Interpretability for EEG-Based ADHD Diagnosis. Algorithms 2025, 18, 453. https://doi.org/10.3390/a18080453
