Auto-Tuning Memory-Based Adaptive Local Search Gaining–Sharing Knowledge-Based Algorithm for Solving Optimization Problems

Abstract

1. Introduction

- Proposing the Auto-Tuning Memory-based Adaptive Local Search GSK (ATMALS-GSK) algorithm, which extends the original GSK algorithm with dynamic parameter adaptation.

- Integrating historical memory into parameter adaptation through a Gaussian-based weighted moving average mechanism that evaluates parameter values and adjusts them according to their impact on the fitness function over time.

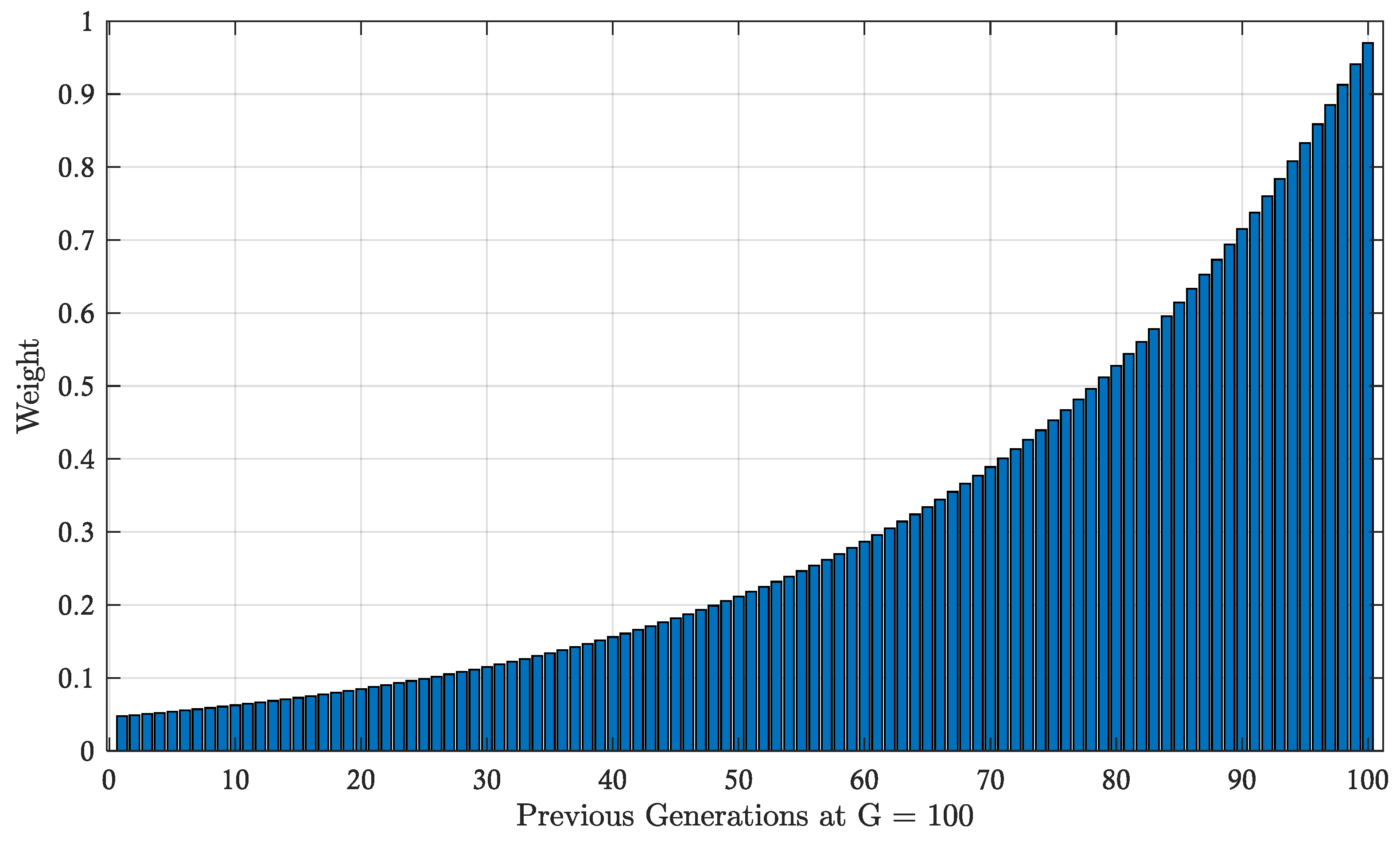

- Developing a novel selection mechanism based on a memory-driven roulette wheel selection (RWS) strategy that combines an exponentially weighted memory of past performance with weighted moving averages to prioritize the most effective parameter values. This adaptive tuning strategy improves the ability of the algorithm to escape local optima and to balance exploration and exploitation.

- Incorporating an adaptive local search strategy to strengthen the exploitation capability of the algorithm. This approach improves solution quality, preserves population diversity, and helps the algorithm avoid becoming trapped in local optima.

- Validating ATMALS-GSK through experiments on the CEC 2011 and CEC 2017 benchmarks. The results show that ATMALS-GSK outperforms the original GSK and other metaheuristic algorithms on most test problems in terms of convergence accuracy and robustness.

2. Literature Review

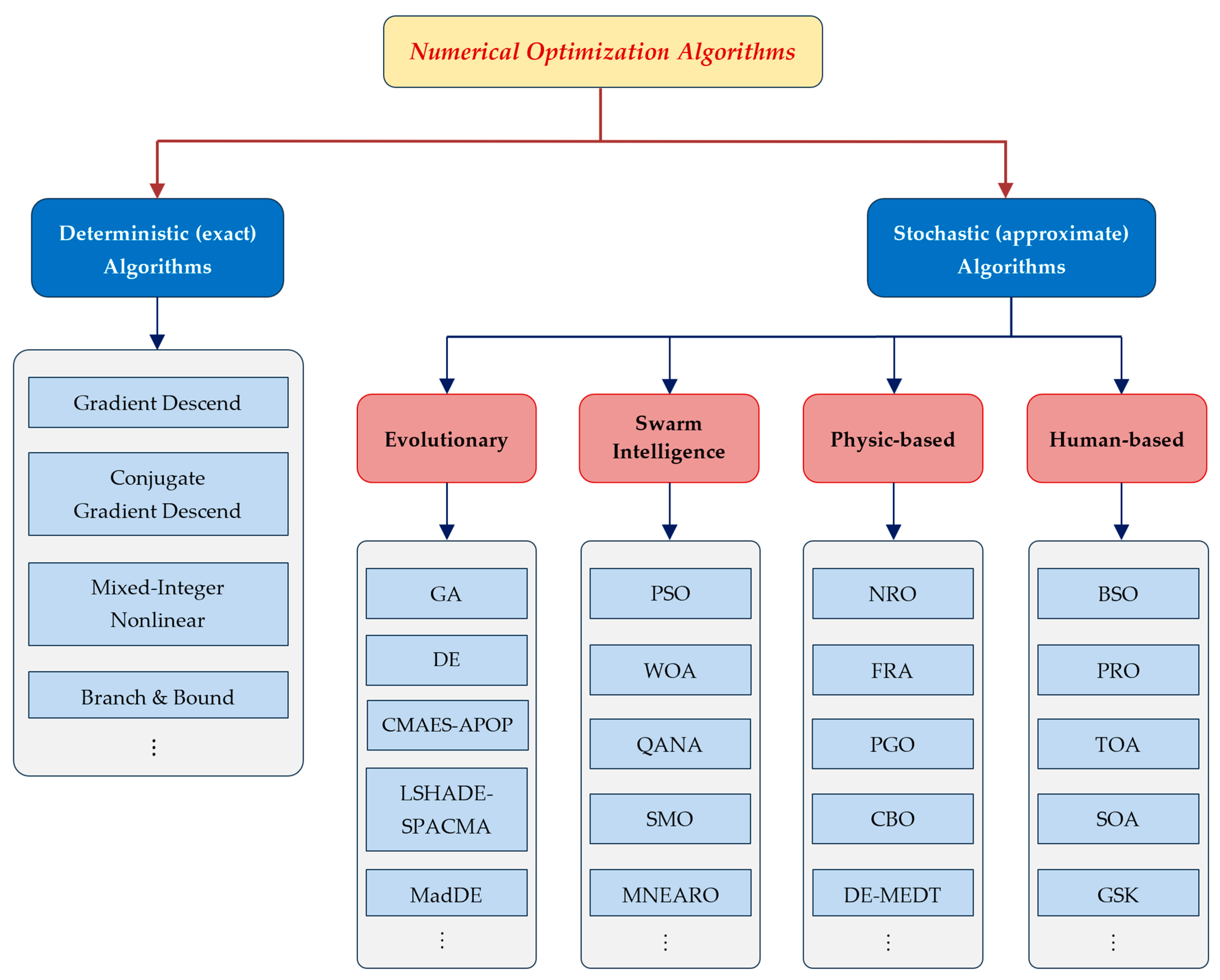

2.1. Metaheuristic Optimization Algorithms

2.2. GSK and Its Variants

2.2.1. Population Enhancement Techniques

2.2.2. Hybrid Algorithms

2.2.3. Strategy Enhancement Techniques

2.2.4. Composite Enhancement Techniques

2.3. Our Contributions in Relation to the Existing Literature

3. Proposed ATMALS-GSK Algorithm

3.1. GSK

| Algorithm 1. Overall operation of the original GSK algorithm | |
|---|---|
| Inputs | N: population size; D: number of dimensions (decision variables); K: knowledge rate (controls the volume of knowledge exchange); Kf: knowledge factor (controls the intensity of learning); Kr: knowledge ratio (probability of applying a knowledge update); MaxGen: maximum number of generations; P: proportion used for grouping in the senior phase |
| Output | Global best solution (X_best) |
| 1 | Initialize generation counter G ← 0 |
| 2 | Generate an initial population of N individuals: |
| 3 | for 1 each i = 1:N |
| 4 | Initialize individual x_i randomly in the D-dimensional search space |
| 5 | Evaluate fitness f(x_i) |
| 6 | end for 1 |
| 7 | for 2 each generation G = 1 to MaxGen |
| 8 | Compute the knowledge-sharing dimensions of both phases, D(Junior) and D(Senior), using Equations (1) and (2) |
| 9 | Apply the junior gaining–sharing knowledge phase: |
| 10 | For each individual i: |
| 11 | Select the nearest better (X_better) and nearest worse (X_worse) individuals |
| 12 | Choose a random peer (x_r) for exploration |
| 13 | Update the D(Junior) dimensions using the rules in Algorithm 2 |
| 14 | Apply the senior gaining–sharing knowledge phase: |
| 15 | Sort the population by fitness into the top 100P%, the middle N − 2 × 100P%, and the bottom 100P% of individuals |
| 16 | For each individual i: |
| 17 | Select one peer from each group: X_best, X_mid, and X_worst |
| 18 | Update the D(Senior) dimensions using the rules in Algorithm 3 |
| 19 | Evaluate the new fitness values of all individuals |
| 20 | Update the individuals based on the new fitness values (keep the better of the old and new solutions) |
| 21 | Update the global best solution if a better one is found |
| 22 | end for 2 |
| 23 | Return the global best solution found over all generations (X_best) |

3.1.1. Junior Phase

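| Algorithm 2. Junior phase of GSK | |
|---|---|
| 1 | for 1 each solution i = 1:PopSize |
| 2 | for 2 each dimension j = 1:D |
| 3 | Generate a random number RND ∈ [0, 1] |
| 4 | if 1 RND ≤ Kr (knowledge ratio) |
| 5 | if 2 $f(x_i) > f(x_r)$ |
| 6 | $x_{ij}^{new} = x_{ij} + K_f \times [(x_{better,j} - x_{worse,j}) + (x_{r,j} - x_{ij})]$; |
| 7 | else |
| 8 | $x_{ij}^{new} = x_{ij} + K_f \times [(x_{better,j} - x_{worse,j}) + (x_{ij} - x_{r,j})]$; |
| 9 | end if 2 |
| 10 | else |
| 11 | $x_{ij}^{new} = x_{ij}$; |
| 12 | end if 1 |
| 13 | end for 2 |
| 14 | end for 1 |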
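Algorithm 2 can be sketched in Python for a minimization problem as follows. The update rules follow the GSK junior phase; the remaining details are assumptions of this sketch rather than statements of the paper: which D(Junior) dimensions are updated (here a random subset per individual), the neighbour choice at the two ends of the ranking (the best individual uses the second and third ranked individuals, the worst uses the two just above it), and the hypothetical function name `junior_phase`. The function returns trial vectors only; selection against the parents happens afterwards, as in Algorithm 1.

```python
import random
from typing import List

Vector = List[float]

def junior_phase(pop: List[Vector], fit: List[float], kf: float, kr: float,
                 d_junior: int, rng: random.Random) -> List[Vector]:
    """Return trial vectors built with the GSK junior rule (minimization, N >= 4)."""
    n, dim = len(pop), len(pop[0])
    order = sorted(range(n), key=lambda k: fit[k])  # indices, best first
    rank = {idx: r for r, idx in enumerate(order)}
    trials = []
    for i in range(n):
        r = rank[i]
        if r == 0:            # best individual: use the next two in the ranking
            better, worse = order[1], order[2]
        elif r == n - 1:      # worst individual: use the two just above it
            better, worse = order[n - 3], order[n - 2]
        else:                 # nearest better and nearest worse neighbours
            better, worse = order[r - 1], order[r + 1]
        peer = rng.choice([k for k in range(n) if k not in (i, better, worse)])
        x = pop[i][:]
        for j in rng.sample(range(dim), d_junior):  # assumed choice of junior dimensions
            if rng.random() <= kr:                  # apply knowledge with probability Kr
                gain = pop[better][j] - pop[worse][j]
                if fit[i] > fit[peer]:              # peer is better: move toward it
                    x[j] = pop[i][j] + kf * (gain + (pop[peer][j] - pop[i][j]))
                else:                               # peer is worse: move away from it
                    x[j] = pop[i][j] + kf * (gain + (pop[i][j] - pop[peer][j]))
        trials.append(x)
    return trials
```

With four one-dimensional individuals at 0, 1, 2, 3 (fitness equal to position), Kf = 0.5 and Kr = 1, every peer choice is forced, so the call returns [[-2.0], [-1.0], [0.0], [1.0]].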

3.1.2. Senior Phase

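| Algorithm 3. Senior phase of GSK | |
|---|---|
| 1 | for 1 each individual i = 1:PopSize |
| 2 | for 2 each dimension j = 1:D |
| 3 | Generate a random number RND ∈ [0, 1] |
| 4 | if 1 RND ≤ Kr (knowledge ratio) |
| 5 | if 2 $f(x_i) > f(x_{mid})$ |
| 6 | $x_{ij}^{new} = x_{ij} + K_f \times [(x_{best,j} - x_{worst,j}) + (x_{mid,j} - x_{ij})]$; |
| 7 | else |
| 8 | $x_{ij}^{new} = x_{ij} + K_f \times [(x_{best,j} - x_{worst,j}) + (x_{ij} - x_{mid,j})]$; |
| 9 | end if 2 |
| 10 | else |
| 11 | $x_{ij}^{new} = x_{ij}$; |
| 12 | end if 1 |
| 13 | end for 2 |
| 14 | end for 1 |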
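Algorithm 3 can be sketched in the same way. Following the GSK senior phase, each individual draws one peer from the top 100P%, one from the middle group, and one from the bottom 100P% of the ranked population, and learns from the best–worst gap and from the middle peer. The group sizes (rounded, with at least one individual in the top and bottom groups), the random choice of the D(Senior) dimensions, and the name `senior_phase` are assumptions of this sketch.

```python
import random
from typing import List

Vector = List[float]

def senior_phase(pop: List[Vector], fit: List[float], kf: float, kr: float,
                 d_senior: int, p: float, rng: random.Random) -> List[Vector]:
    """Return trial vectors built with the GSK senior rule (minimization)."""
    n, dim = len(pop), len(pop[0])
    order = sorted(range(n), key=lambda k: fit[k])  # indices, best first
    n_edge = max(1, round(p * n))                   # size of the top and bottom groups
    top, bottom = order[:n_edge], order[n - n_edge:]
    middle = order[n_edge:n - n_edge]               # must be non-empty: n > 2 * n_edge
    trials = []
    for i in range(n):
        best, mid, worst = rng.choice(top), rng.choice(middle), rng.choice(bottom)
        x = pop[i][:]
        for j in rng.sample(range(dim), d_senior):  # assumed choice of senior dimensions
            if rng.random() <= kr:
                gain = pop[best][j] - pop[worst][j]
                if fit[i] > fit[mid]:               # middle peer is better: move toward it
                    x[j] = pop[i][j] + kf * (gain + (pop[mid][j] - pop[i][j]))
                else:                               # otherwise move away from it
                    x[j] = pop[i][j] + kf * (gain + (pop[i][j] - pop[mid][j]))
        trials.append(x)
    return trials
```

With three individuals at 0, 1, 2 each group holds exactly one member, so the result is deterministic: Kf = 0.5 and Kr = 1 give [[-1.5], [0.0], [0.5]].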

3.2. ATMALS-GSK

The original GSK keeps its control parameters fixed throughout the search, which leads to three main limitations:

- Lack of generalizability across problem domains: Fixed parameter values may yield strong results on a particular problem but often perform poorly in other problem settings or objective landscapes. What works for a scheduling problem, for instance, may underperform in a continuous optimization task.

- Inflexibility across search stages: The same parameter values are used throughout the entire execution of the algorithm, from the initial exploration phase to the final convergence phase. However, different phases of the search process typically benefit from different behaviors—strong exploration in early generations and refined exploitation in later ones. Static parameters fail to accommodate this shift, potentially leading to premature convergence or excessive wandering.

- No feedback from historical performance: The original GSK lacks a learning mechanism to leverage past search experience. Without any form of memory or adaptive control, the algorithm is unable to assess the effectiveness of different parameter configurations over time. This absence of self-tuning prevents the algorithm from evolving and improving its own strategy dynamically.
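To make the idea of historical feedback concrete, the sketch below keeps a credit value per candidate parameter value, fades it geometrically, and smooths it with a Gaussian kernel over neighbouring values before normalizing it into selection probabilities. It borrows α = 0.97 and δ = 0.05 × (max(VALUES) − min(VALUES)) from the initialization of Algorithm 4 and assumes they act as the memory decay factor and the kernel width; the update and smoothing formulas are illustrative assumptions, not Equations (3) to (5) of ATMALS-GSK, and all function names are hypothetical.

```python
import math
from typing import List, Sequence

def decay_and_credit(memory: List[float], chosen: int, improvement: float,
                     alpha: float = 0.97) -> None:
    """Hypothetical memory update: fade all past credit by alpha, then credit
    the value used this generation with its (non-negative) fitness improvement."""
    for k in range(len(memory)):
        memory[k] *= alpha
    memory[chosen] += max(improvement, 0.0)

def gaussian_rates(values: Sequence[float], memory: Sequence[float],
                   delta: float) -> List[float]:
    """Gaussian-weighted average of the memory around each candidate value."""
    rates = []
    for v in values:
        weights = [math.exp(-((v - u) ** 2) / (2.0 * delta ** 2)) for u in values]
        rates.append(sum(w * m for w, m in zip(weights, memory)) / sum(weights))
    return rates

def selection_probabilities(rates: Sequence[float]) -> List[float]:
    """Normalize the rates; fall back to uniform while no value has earned credit."""
    total = sum(rates)
    if total <= 0.0:
        return [1.0 / len(rates)] * len(rates)
    return [r / total for r in rates]

# Example with the Kf grid of Algorithm 4 (0.2, 0.3, ..., 0.8).
kf_values = [round(0.2 + 0.1 * k, 1) for k in range(7)]
delta = 0.05 * (max(kf_values) - min(kf_values))
memory = [0.0] * len(kf_values)
decay_and_credit(memory, chosen=3, improvement=1.0)  # Kf = 0.5 produced a gain
probs = selection_probabilities(gaussian_rates(kf_values, memory, delta))
```

In this sketch the credit earned by Kf = 0.5 spills over mainly to its immediate neighbours 0.4 and 0.6, so values close to a recently successful one also become more likely to be tried.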

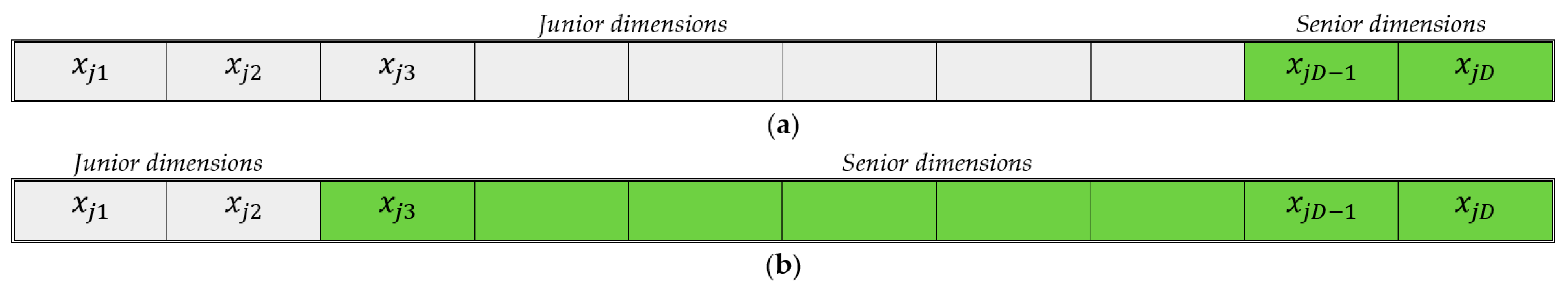

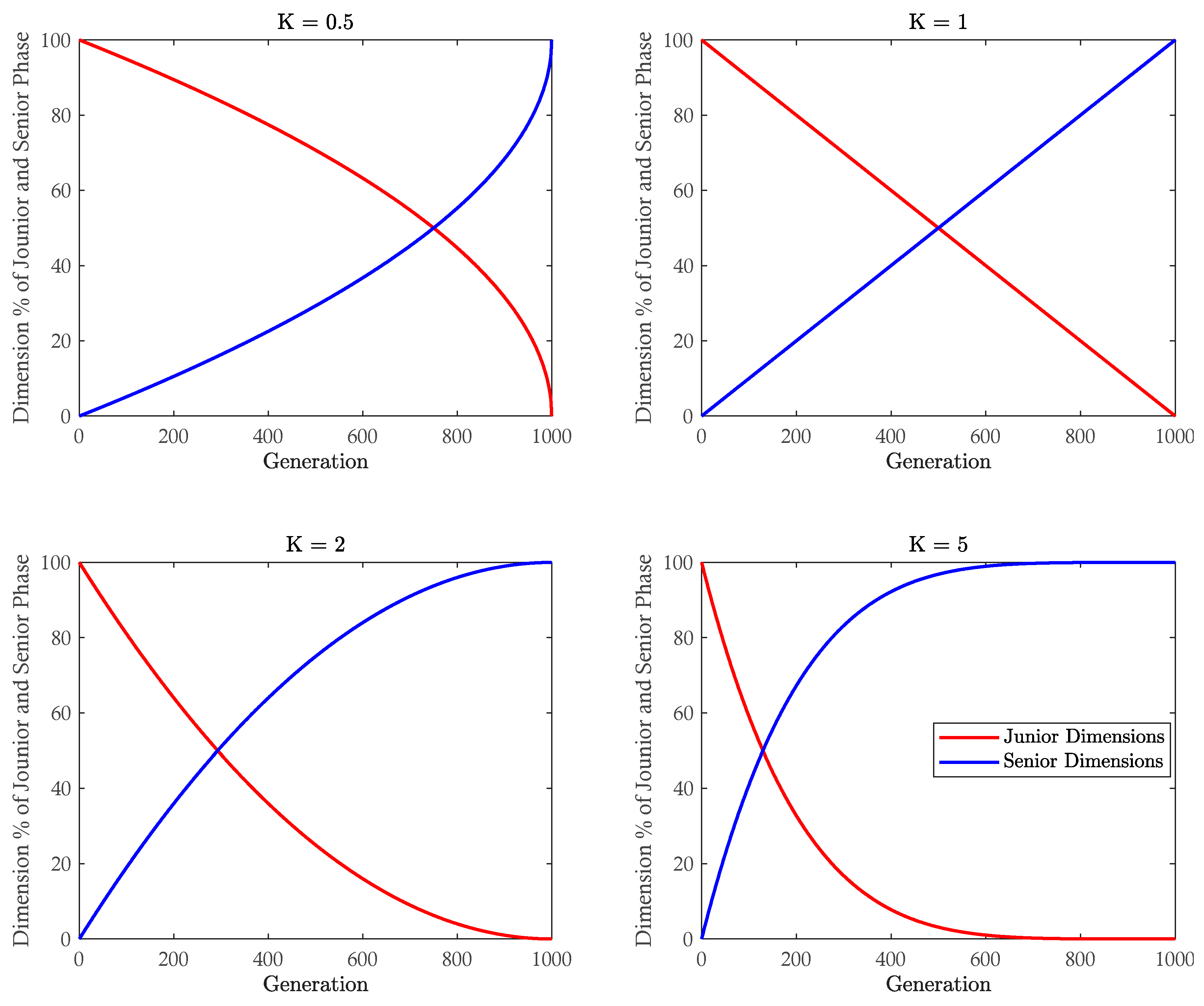

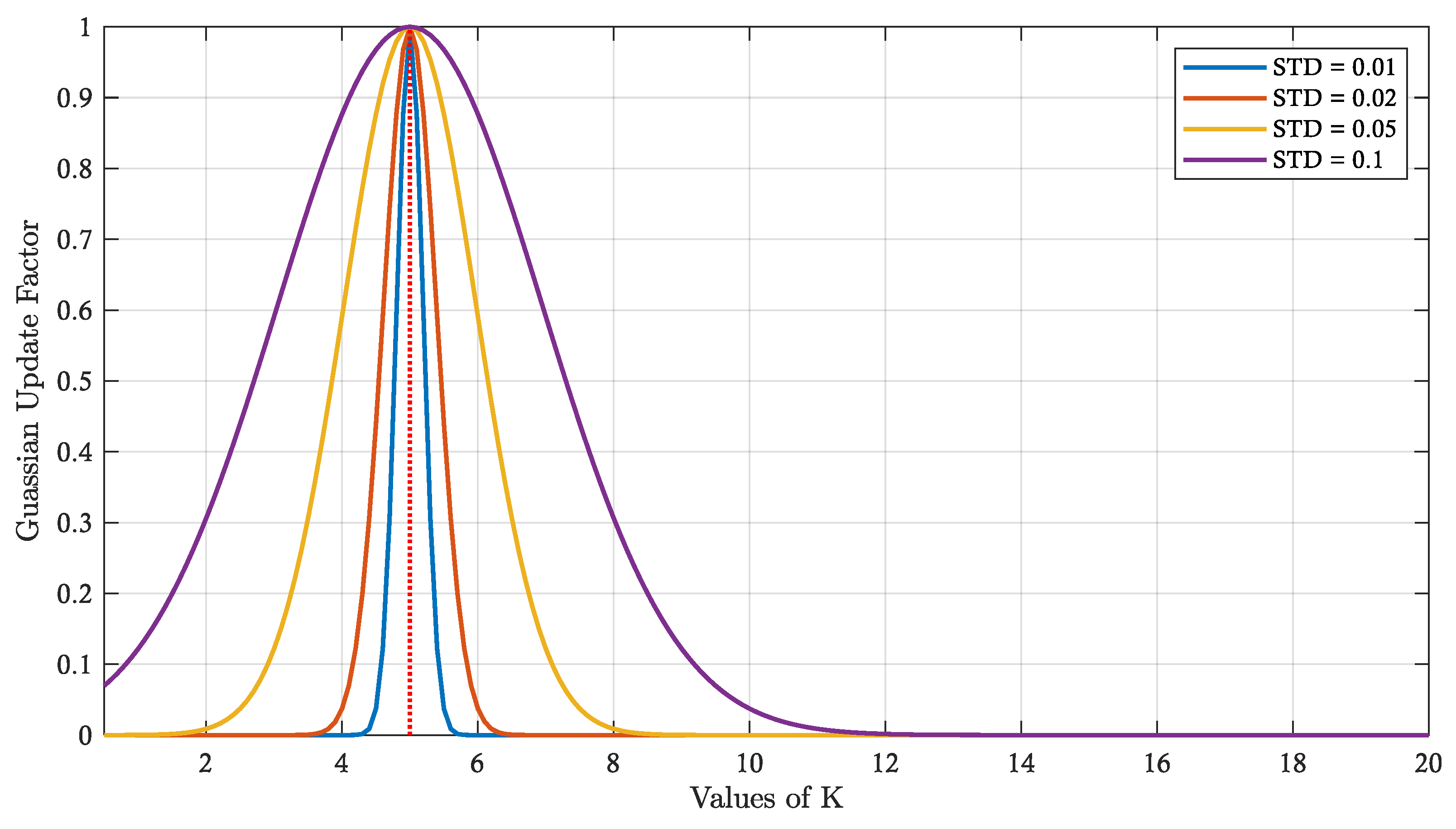

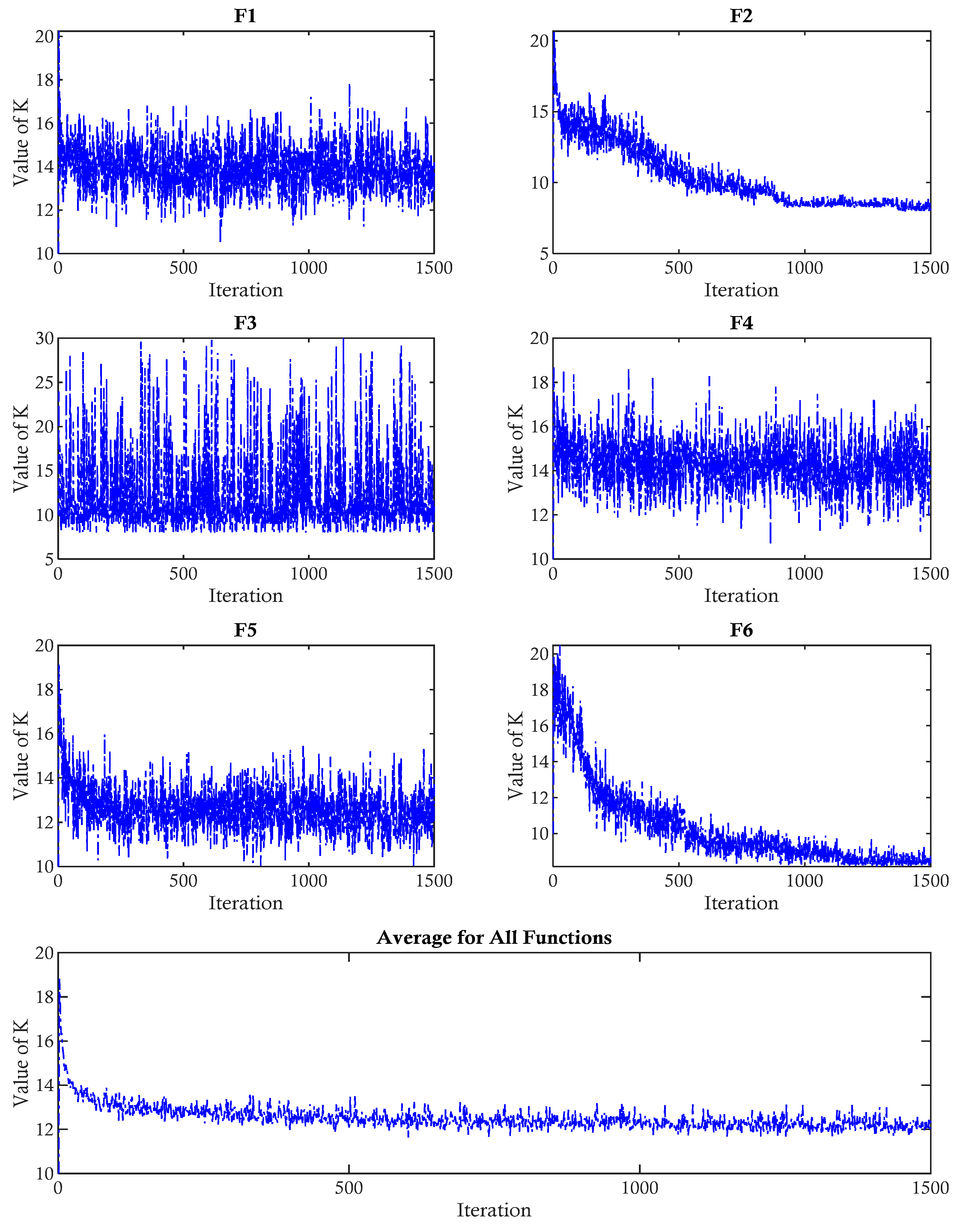

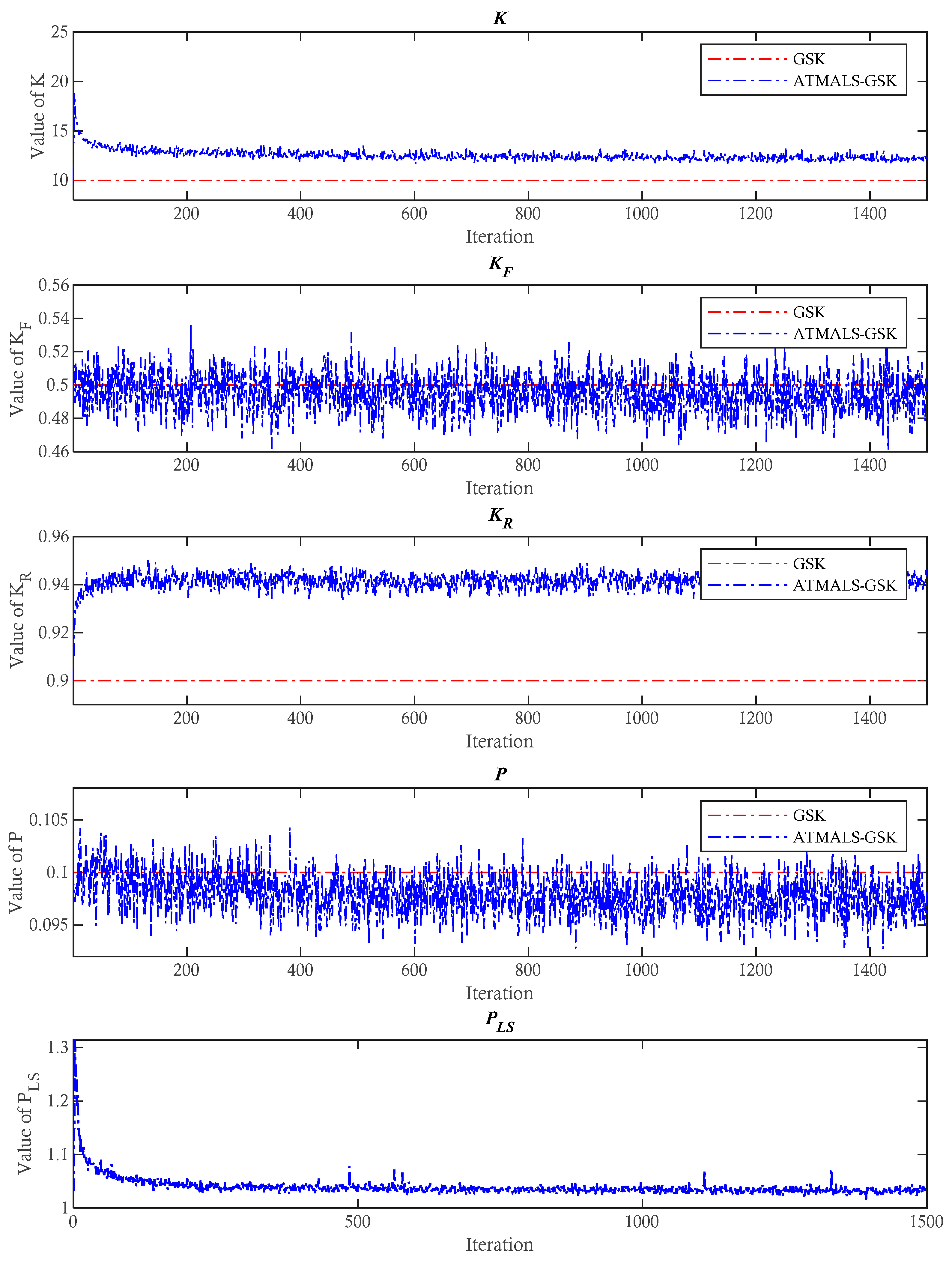

3.2.1. Adaptive K

3.2.2. Adaptive Kr

3.2.3. Adaptive Kf

3.2.4. Adaptive P

3.2.5. Adaptive PLS

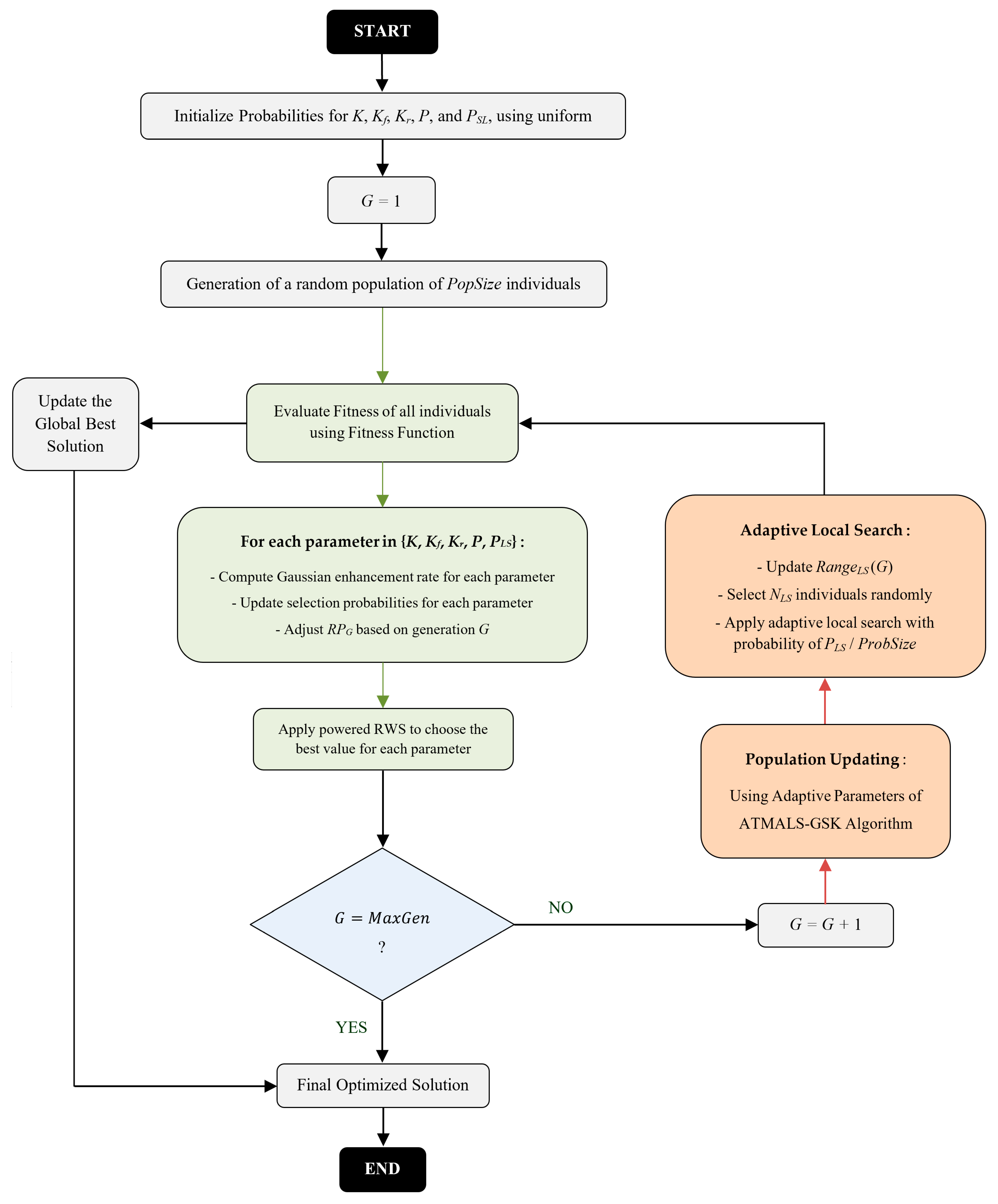
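Steps 18 to 24 of Algorithm 4 apply an adaptive local search: NLS randomly selected individuals have each dimension perturbed with probability PLS/ProbSize inside a range RangeLS(G) that is updated every generation by Equation (8). Equation (8) is not reproduced in this section, so the sketch below is only an illustration under stated assumptions: the range shrinks linearly from a hypothetical initial fraction `r0` of the search width, perturbations are uniform, out-of-bound values are clipped, and a perturbed individual replaces the original only if it is better. `local_search` and its arguments are hypothetical names.

```python
import random
from typing import Callable, List

Vector = List[float]

def local_search(pop: List[Vector], fit: List[float], f: Callable[[Vector], float],
                 g: int, max_gen: int, pls: float, n_ls: int,
                 lb: float, ub: float, rng: random.Random, r0: float = 0.1) -> None:
    """Perturb n_ls random individuals in place and keep improvements (minimization)."""
    dim = len(pop[0])
    range_ls = r0 * (1.0 - g / max_gen)  # assumed linear shrink, not Equation (8)
    for i in rng.sample(range(len(pop)), n_ls):
        cand = pop[i][:]
        for d in range(dim):
            if rng.random() < pls / dim:  # each dimension moves with prob. PLS/ProbSize
                step = rng.uniform(-range_ls, range_ls) * (ub - lb)
                cand[d] = min(ub, max(lb, cand[d] + step))  # stay inside the bounds
        f_cand = f(cand)
        if f_cand < fit[i]:  # greedy acceptance: never worsen an individual
            pop[i], fit[i] = cand, f_cand

def sphere(x: Vector) -> float:
    return sum(v * v for v in x)

# Usage on a 10-D sphere function with PopSize = 20, so NLS = 0.05 x 20 = 1.
rng = random.Random(1)
pop = [[rng.uniform(-5.0, 5.0) for _ in range(10)] for _ in range(20)]
fit = [sphere(x) for x in pop]
before = list(fit)
for g in range(50):
    local_search(pop, fit, sphere, g, 50, pls=1.0, n_ls=max(1, round(0.05 * 20)),
                 lb=-5.0, ub=5.0, rng=rng)
```

Because acceptance is greedy, the local search can only keep or improve each individual, while the shrinking range moves it from coarse to fine steps as the run progresses.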

3.2.6. Overall Operation of ATMALS-GSK

| Algorithm 4. Proposed ATMALS-GSK algorithm | |
|---|---|
| Inputs | MaxGen: maximum number of generations; PopSize: population size; ProbSize: problem dimension; Fitness(): objective function to minimize; KVALUES ← [1, 2, 3, …, 30]; KfVALUES ← [0.2, 0.3, …, 0.8]; KrVALUES ← [0.8, 0.81, …, 1.0]; PVALUES ← [0.05, 0.06, …, 0.2]; PLSVALUES ← [0.1, 0.11, …, 0.2] |
| Initialization | Initialize the selection probabilities of K, Kf, Kr, P, and PLS with a uniform distribution; initialize RPG ← 1, NLS ← 0.05 × PopSize, α ← 0.97, δ ← 0.05 × (max(VALUES) − min(VALUES)) |
| 1 | Initialize a population of PopSize individuals randomly |
| 2 | for 1 generation G = 1 to MaxGen do |
| 3 | Evaluate the fitness of all individuals |
| 4 | for 2 each parameter in {K, Kf, Kr, P, PLS} do |
| 5 | Compute the Gaussian enhancement rate using Equations (3) and (4) |
| 6 | Update the selection probabilities using Equation (5) |
| 7 | Apply powered roulette wheel selection (RWS) using Equations (6) and (7) to choose the parameter value |
| 8 | end for 2 |
| 9 | RPG ← adjust RPG based on generation G (gradual increase) |
| 10 | Partition individuals into Junior and Senior groups based on the adaptive Kr value |
| 11 | for 3 each individual i in the population do |
| 12 | if i is in the Junior group then |
| 13 | Update D(Junior) using the junior learning rule (based on K) |
| 14 | else if i is in the Senior group then |
| 15 | Update D(Senior) using the senior learning rule (based on K) |
| 16 | end if |
| 17 | end for 3 |
| 18 | Update RangeLS(G) using Equation (8) |
| 19 | Select NLS individuals randomly |
| 20 | for 4 each selected individual in NLS do |
| 21 | for 5 each dimension d do |
| 22 | Apply adaptive local search with probability PLS/ProbSize |
| 23 | end for 5 |
| 24 | end for 4 |
| 25 | Update the historical memory of the parameters' fitness improvements |
| 26 | Update the global best solution found so far |
| 27 | end for 1 |
| Output | Best solution found and its fitness value |
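Steps 7 and 9 of Algorithm 4 pick each parameter value with a powered roulette wheel whose exponent RPG grows during the run. Equations (6) and (7) are not reproduced in this section, so the sketch below assumes the common form in which every selection probability is raised to the power RPG and renormalized: RPG = 1 is ordinary roulette wheel selection, and larger RPG concentrates the choice on the best-rated values, shifting the tuning from exploration toward exploitation. The function names are hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")

def powered_probabilities(probs: Sequence[float], rpg: float) -> List[float]:
    """Raise each probability to the power RPG and renormalize (assumed form)."""
    powered = [p ** rpg for p in probs]
    total = sum(powered)
    return [w / total for w in powered]

def powered_roulette(values: Sequence[T], probs: Sequence[float], rpg: float,
                     rng: random.Random) -> T:
    """Spin a roulette wheel over the powered probabilities and return one value."""
    weights = powered_probabilities(probs, rpg)
    spin = rng.random()  # uniform in [0, 1)
    cumulative = 0.0
    for value, w in zip(values, weights):
        cumulative += w
        if spin < cumulative:
            return value
    return values[-1]  # guards against round-off when the weights sum to just under 1
```

For example, probabilities [0.5, 0.25, 0.25] become [2/3, 1/6, 1/6] at RPG = 2, so the leading value gains weight without the others being excluded.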

4. Performance Evaluation

4.1. Settings

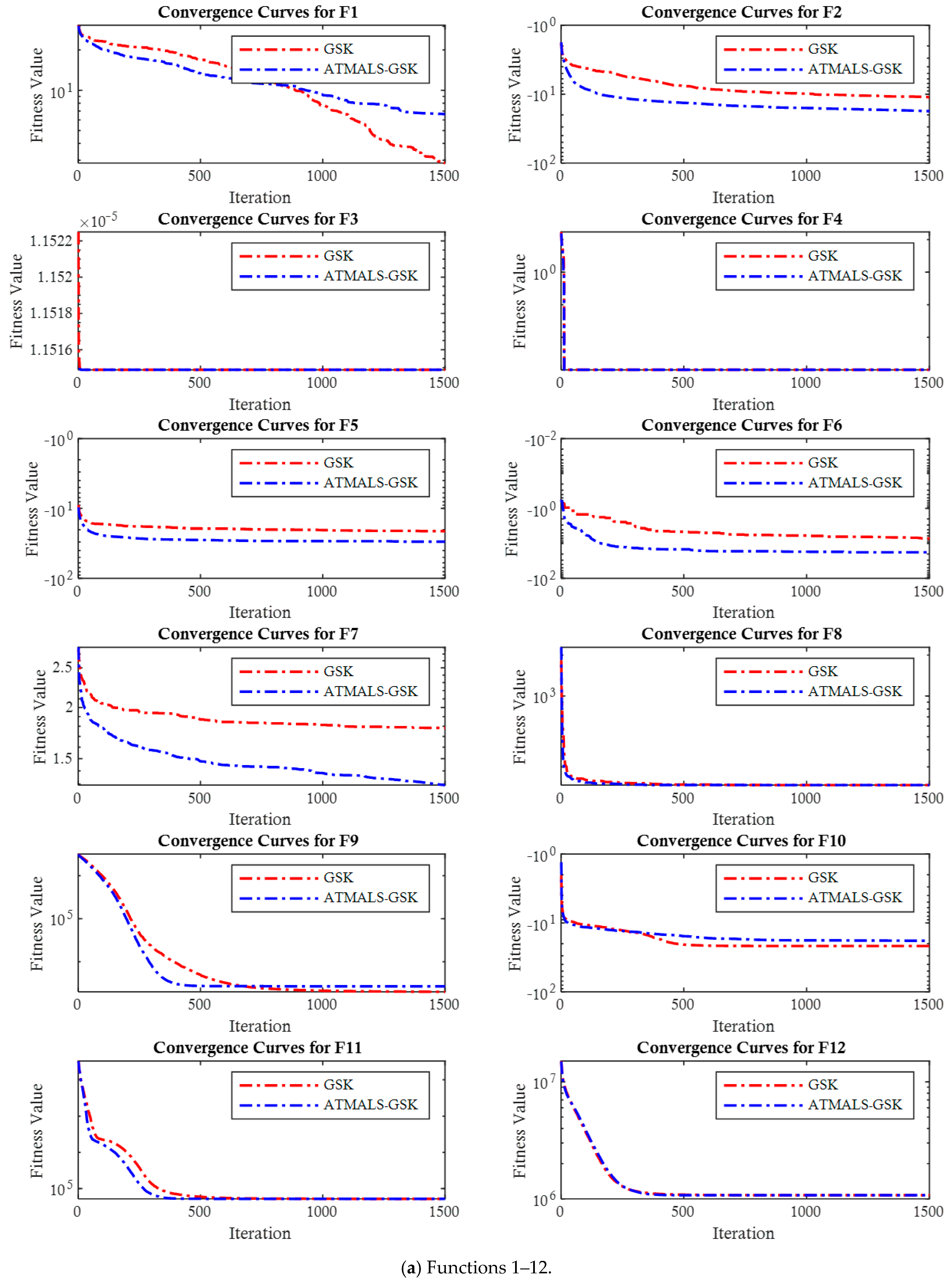

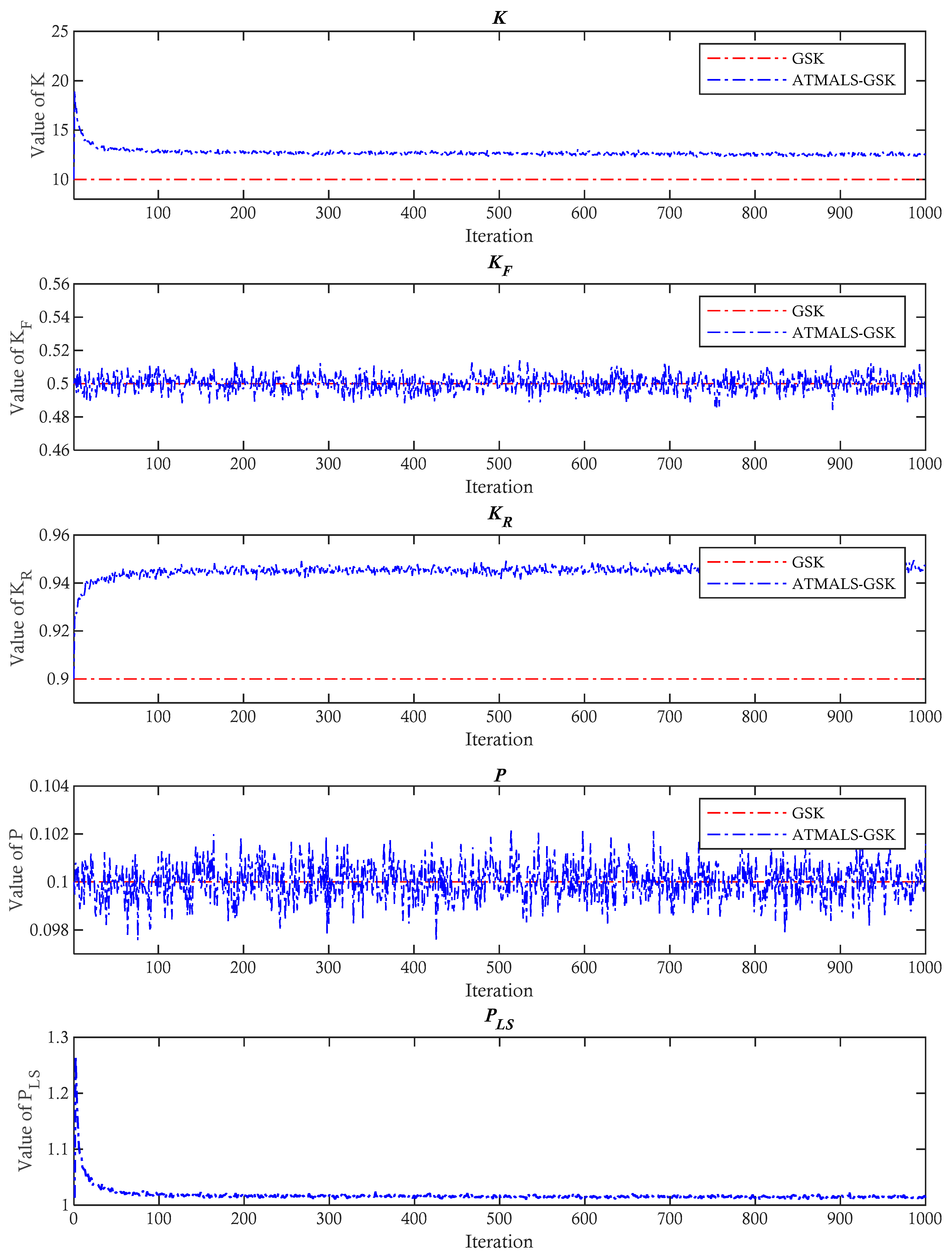

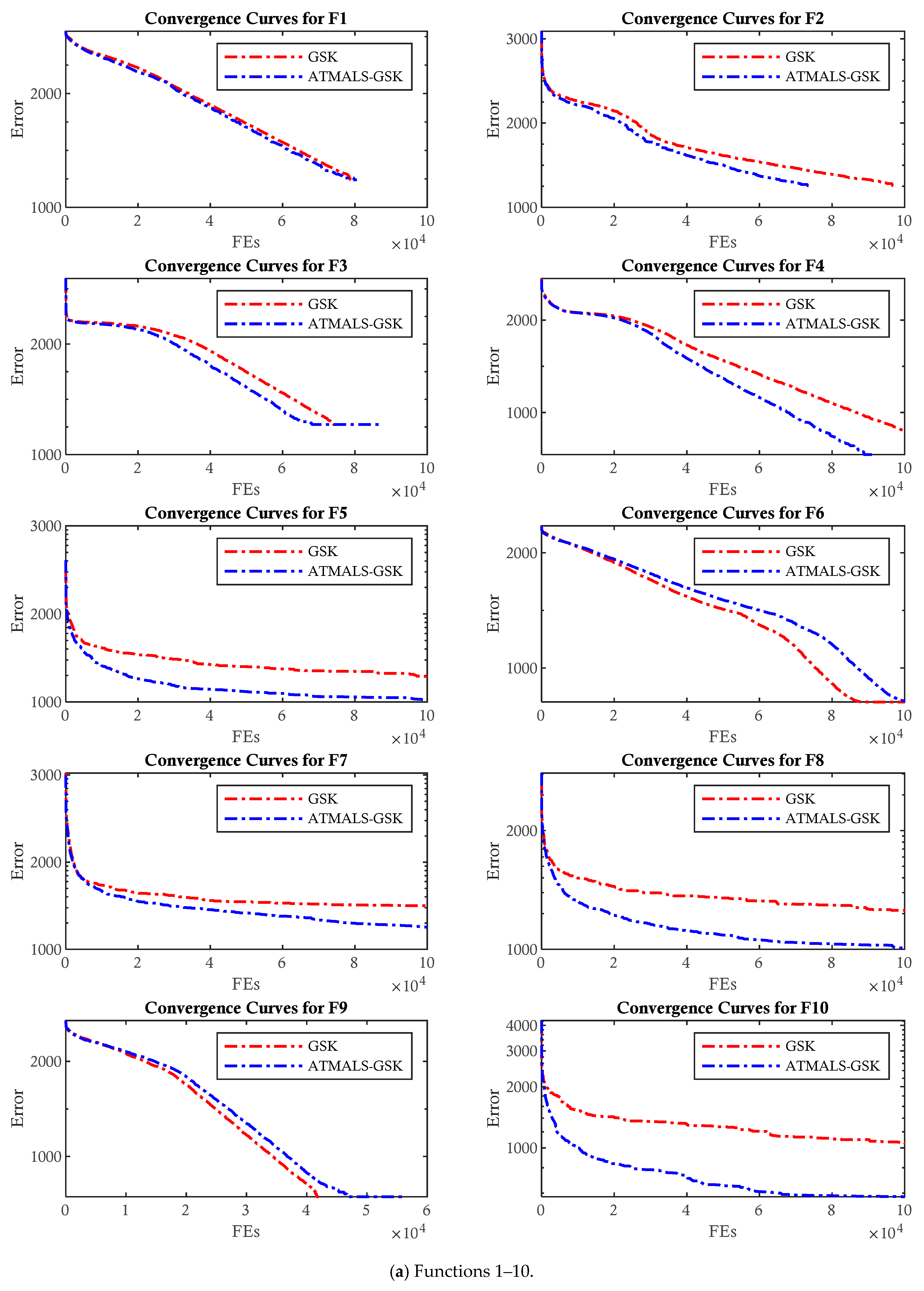

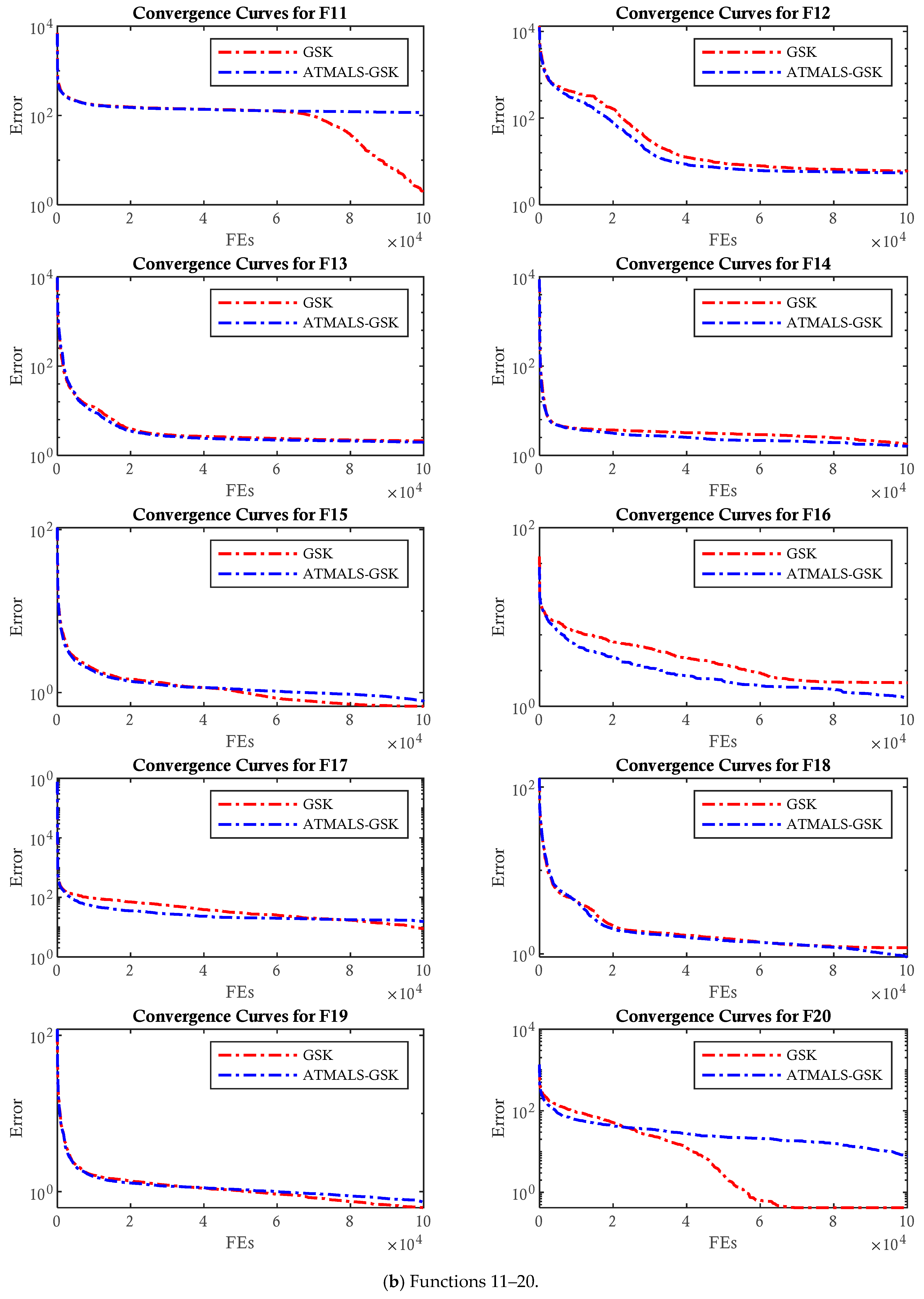

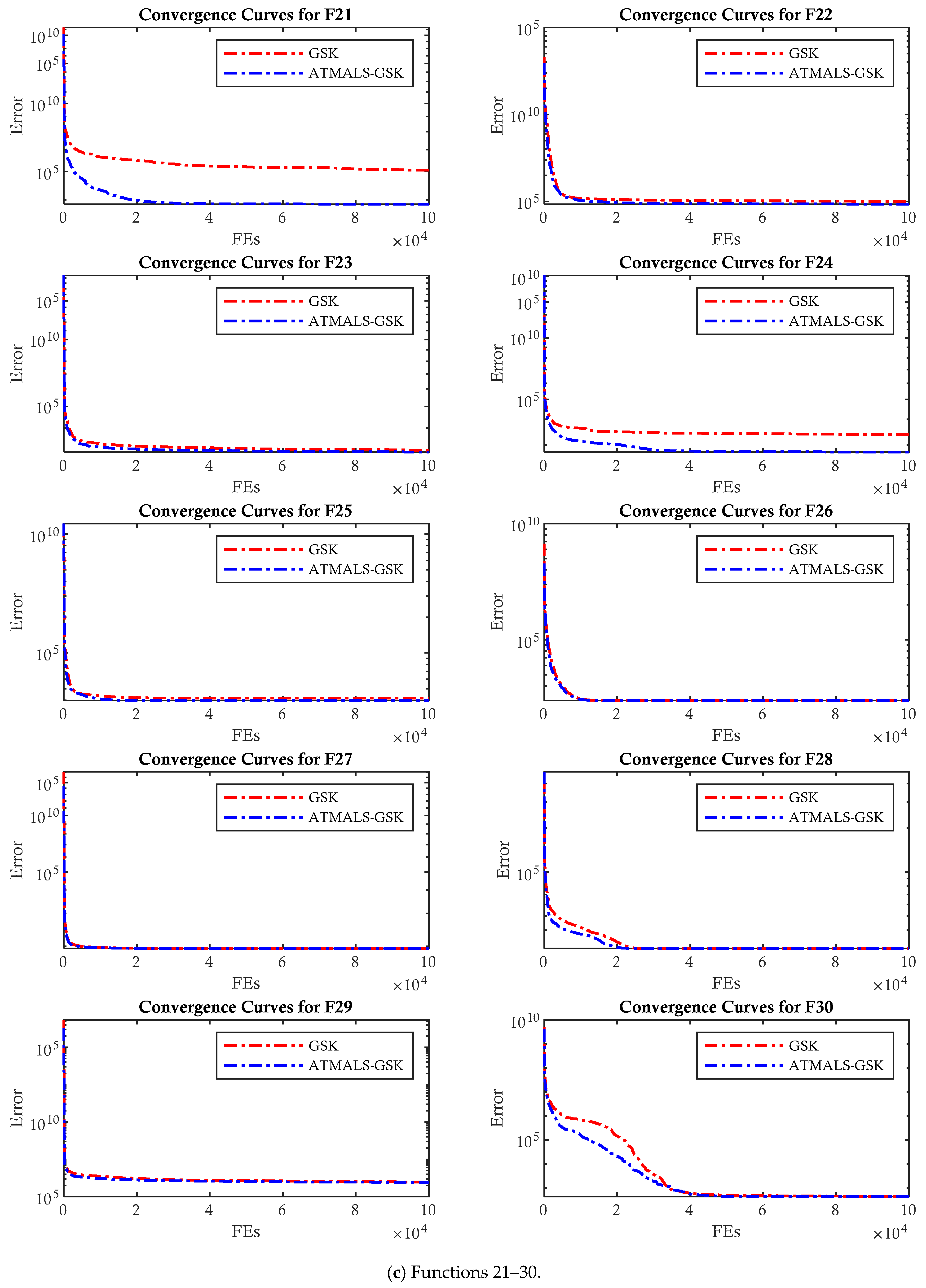

4.2. Results of ATMALS-GSK

4.2.1. CEC 2011

4.2.2. CEC 2017

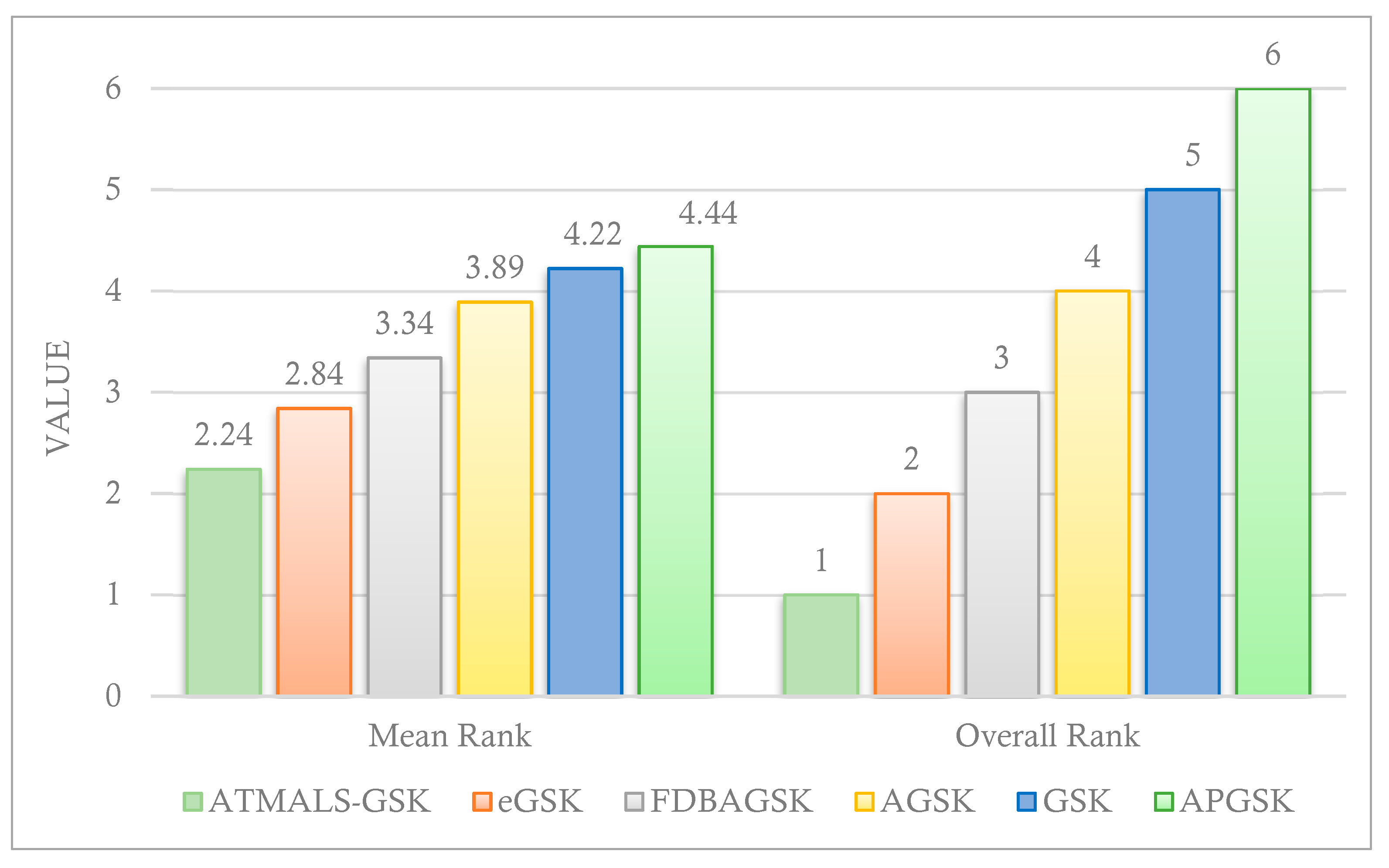

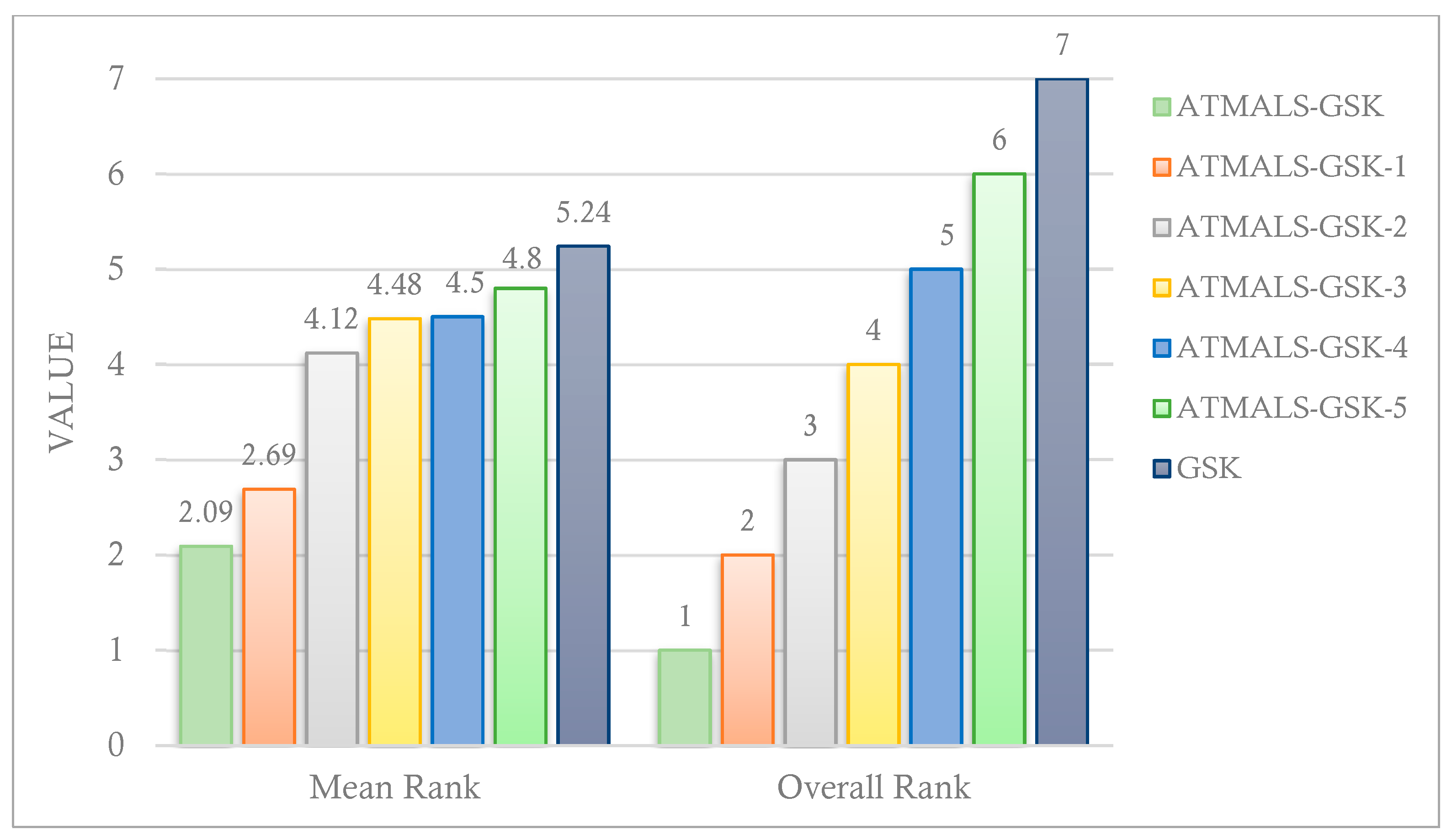

4.3. Comparison with GSK and Modified GSKs

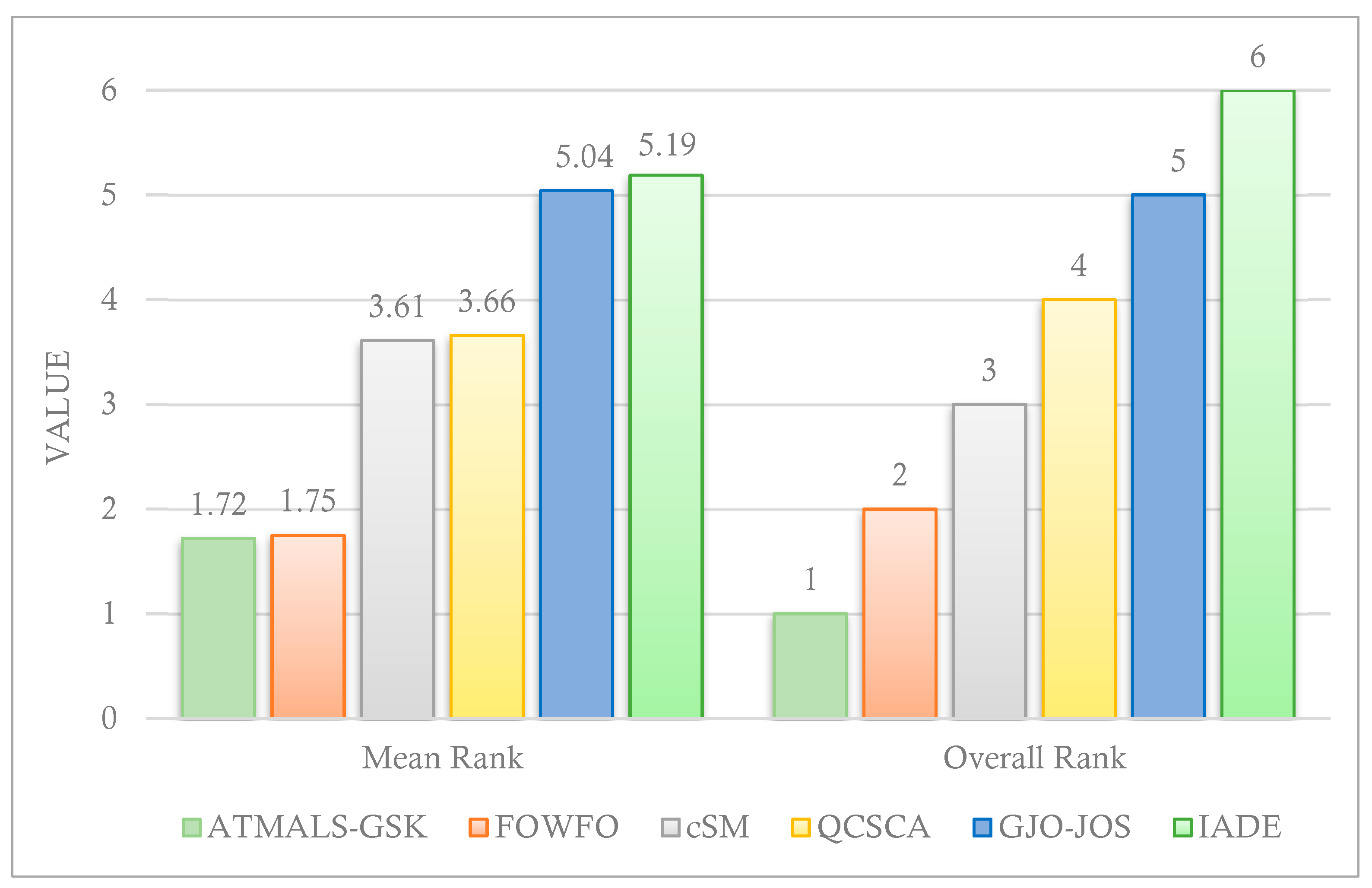

4.4. Comparison with Other Metaheuristic Algorithms

4.5. Statistical Analysis

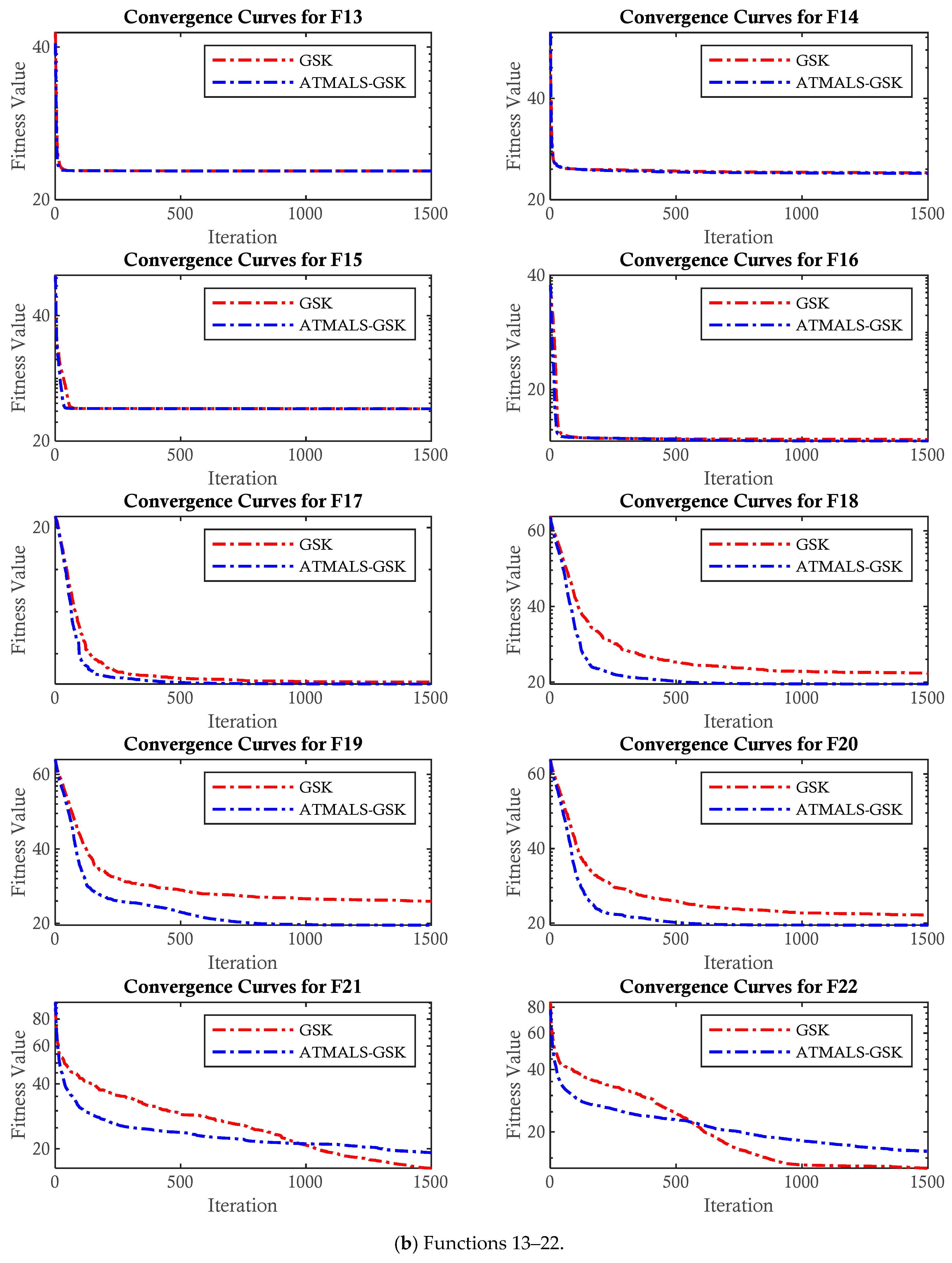

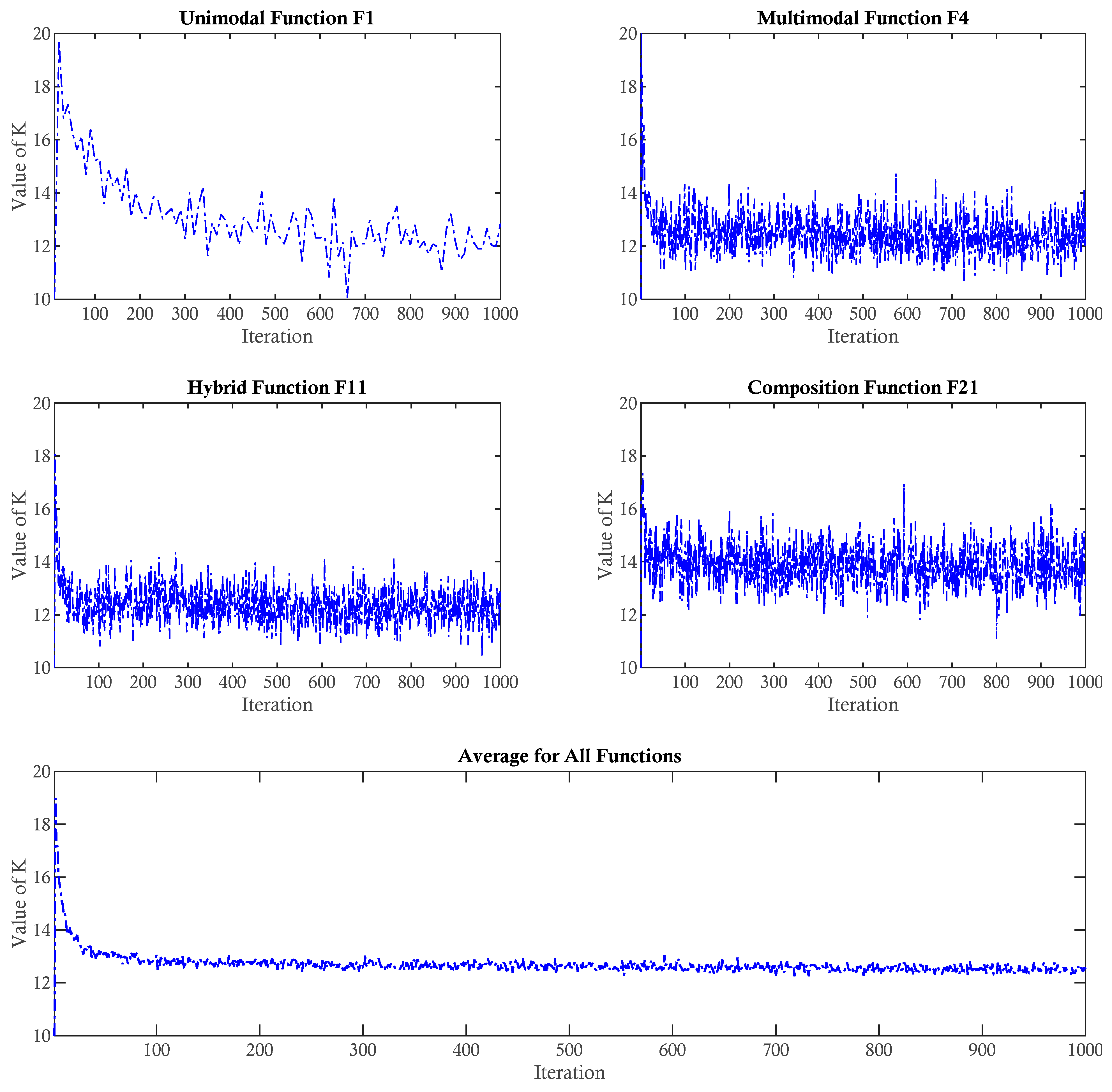

4.6. Parametric Study of ATMALS-GSK

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Selvarajan, S. A comprehensive study on modern optimization techniques for engineering applications. Artif. Intell. Rev. 2024, 57, 194.

2. Deng, J.; Zhou, C.; Wang, J. Approaches and application of heat and water network integration in chemical process system engineering: A review. Chem. Eng. Process.-Process Intensif. 2023, 183, 109263.

3. Fanian, F.; Rafsanjani, M.K.; Shokouhifar, M. Combined fuzzy-metaheuristic framework for bridge health monitoring using UAV-enabled rechargeable wireless sensor networks. Appl. Soft Comput. 2024, 167, 112429.

4. Shokouhifar, M.; Fanian, F.; Rafsanjani, M.K.; Hosseinzadeh, M.; Mirjalili, S. AI-driven cluster-based routing protocols in WSNs: A survey of fuzzy heuristics, metaheuristics, and machine learning models. Comput. Sci. Rev. 2024, 54, 100684.

5. Memarian, S.; Behmanesh-Fard, N.; Aryai, P.; Shokouhifar, M.; Mirjalili, S.; del Carmen Romero-Ternero, M. TSFIS-GWO: Metaheuristic-driven takagi-sugeno fuzzy system for adaptive real-time routing in WBANs. Appl. Soft Comput. 2024, 155, 111427.

6. Xie, L.; Wang, Y.; Tang, S.; Li, Y.; Zhang, Z.; Huang, C. Adaptive gaining-sharing knowledge-based variant algorithm with historical probability expansion and its application in escape maneuver decision making. Artif. Intell. Rev. 2025, 58, 1–43.

7. Janga Reddy, M.; Nagesh Kumar, D. Evolutionary algorithms, swarm intelligence methods, and their applications in water resources engineering: A state-of-the-art review. H2Open J. 2020, 3, 135–188.

8. Shokouhifar, M. FH-ACO: Fuzzy heuristic-based ant colony optimization for joint virtual network function placement and routing. Appl. Soft Comput. 2021, 107, 107401.

9. Siddique, N.; Adeli, H. Physics-based search and optimization: Inspirations from nature. Expert Syst. 2016, 33, 607–623.

10. Emami, H. Stock exchange trading optimization algorithm: A human-inspired method for global optimization. J. Supercomput. 2022, 78, 2125–2174.

11. Tanweer, M.R.; Sundaram, S. Human cognition inspired particle swarm optimization algorithm. In Proceedings of the 2014 IEEE Ninth International Conference on Intelligent Sensors, Sensor Networks and Information Processing, Singapore, 21–24 April 2014; pp. 1–6.

12. Debnath, S.; Arif, W.; Baishya, S. Buyer inspired meta-heuristic optimization algorithm. Open Comput. Sci. 2020, 10, 194–219.

13. Liu, C.; Ma, Y.; Yin, H.; Yu, L. Human resource allocation for multiple scientific research projects via improved pigeon-inspired optimization algorithm. Sci. China Technol. Sci. 2021, 64, 139–147.

14. Veysari, E.F. A new optimization algorithm inspired by the quest for the evolution of human society: Human felicity algorithm. Expert Syst. Appl. 2022, 193, 116468.

15. Liu, Z.; Jian, X.; Sadiq, T.; Shaikh, Z.A.; Alfarraj, O.; Alblehai, F.; Tolba, A. Efficient control of spider-like medical robots with capsule neural networks and modified spring search algorithm. Sci. Rep. 2025, 15, 13828.

16. Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K. Gaining-sharing knowledge based algorithm for solving optimization problems: A novel nature-inspired algorithm. Int. J. Mach. Learn. Cybern. 2020, 11, 1501–1529.

17. Nguyen, D.M. The effect of mirrored sampling with active CMA and sample reuse in the CMAES-APOP algorithm. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Boston, MA, USA, 9–13 July 2022; pp. 403–406.

18. Gao, Y.; Zhang, J.; Wang, Y.; Wang, J.; Qin, L. Love evolution algorithm: A stimulus–value–role theory-inspired evolutionary algorithm for global optimization. J. Supercomput. 2024, 80, 12346–12407.

19. Biswas, S.; Saha, D.; De, S.; Cobb, A.D.; Das, S.; Jalaian, B.A. Improving differential evolution through Bayesian hyperparameter optimization. In Proceedings of the 2021 IEEE Congress on Evolutionary Computation, Kraków, Poland, 28 June–1 July 2021; pp. 832–840.

20. Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

21. Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

22. Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. QANA: Quantum-based avian navigation optimizer algorithm. Eng. Appl. Artif. Intell. 2021, 104, 104314.

23. Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. Starling murmuration optimizer: A novel bio-inspired algorithm for global and engineering optimization. Comput. Methods Appl. Mech. Eng. 2022, 392, 114616.

24. Jangir, P.; Ezugwu, A.E.; Saleem, K.; Arpita Agrawal, S.P.; Pandya, S.B.; Parmar, A.; Gulothungan, G.; Abualigah, L. A hybrid mutational Northern Goshawk and elite opposition learning artificial rabbits optimizer for PEMFC parameter estimation. Sci. Rep. 2024, 14, 28657.

25. Wei, Z.; Huang, C.; Wang, X.; Han, T.; Li, Y. Nuclear reaction optimization: A novel and powerful physics-based algorithm for global optimization. IEEE Access 2019, 7, 66084–66109.

26. Tahani, M.; Babayan, N. Flow Regime Algorithm (FRA): A physics-based meta-heuristics algorithm. Knowl. Inf. Syst. 2019, 60, 1001–1038.

27. Kaveh, A.; Akbari, H.; Hosseini, S.M. Plasma generation optimization: A new physically-based metaheuristic algorithm for solving constrained optimization problems. Eng. Comput. 2021, 38, 1554–1606.

28. Chen, D.; Lu, R.; Li, S.; Zou, F.; Liu, Y. An enhanced colliding bodies optimization and its application. Artif. Intell. Rev. 2020, 53, 1127–1186.

29. Kaveh, A.; Hosseini, S.M.; Zaerreza, A. A physics-based metaheuristic algorithm based on doppler effect phenomenon and mean euclidian distance threshold. Period. Polytech. Civ. Eng. 2022, 66, 820–842.

30. Shi, Y. Brain storm optimization algorithm. In Advances in Swarm Intelligence: Second International Conference, ICSI 2011, Chongqing, China, June 12–15, 2011, Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2011; pp. 303–309.

31. Moosavi, S.H.S.; Bardsiri, V.K. Poor and rich optimization algorithm: A new human-based and multi populations algorithm. Eng. Appl. Artif. Intell. 2019, 86, 165–181.

32. Dehghani, M.; Trojovský, P. Teamwork optimization algorithm: A new optimization approach for function minimization/maximization. Sensors 2021, 21, 4567.

33. Givi, H.; Hubalovska, M. Skill Optimization Algorithm: A New Human-Based Metaheuristic Technique. Comput. Mater. Contin. 2023, 74, 179–202.

34. Jalali, S.M.J.; Ahmadian, M.; Ahmadian, S.; Khosravi, A.; Alazab, M.; Nahavandi, S. An oppositional-Cauchy based GSK evolutionary algorithm with a novel deep ensemble reinforcement learning strategy for COVID-19 diagnosis. Appl. Soft Comput. 2021, 111, 107675.

35. Ma, H.; Zhang, J.; Wei, W.; Cheng, W.; Liu, Q. A Modified Gaining-Sharing Knowledge Algorithm Based on Dual-Population and Multi-operators for Unconstrained Optimization. In Advances in Swarm Intelligence: Second International Conference, ICSI 2011, Chongqing, China, June 12–15, 2011, Proceedings, Part I; Springer Nature: Cham, Switzerland, 2023; pp. 309–319.

36. Bakır, H.; Duman, S.; Guvenc, U.; Kahraman, H.T. Improved adaptive gaining-sharing knowledge algorithm with FDB-based guiding mechanism for optimization of optimal reactive power flow problem. Electr. Eng. 2023, 105, 3121–3160.

37. Pan, J.S.; Liu, L.F.; Chu, S.C.; Song, P.C.; Liu, G.G. A new gaining-sharing knowledge based algorithm with parallel opposition-based learning for Internet of Vehicles. Mathematics 2023, 11, 2953.

38. Kamarposhti, M.A.; Shokouhandeh, H.; Lee, Y.; Kang, S.K.; Colak, I.; Barhoumi, E.M. Optimizing switch placement and status for sustainable grid operation and effective distributed generation integration using the APGSK-IMODE algorithm. Int. J. Low-Carbon Technol. 2024, 19, 2676–2686.

39. Liang, Z.; Wang, Z. Enhancing population diversity based gaining-sharing knowledge based algorithm for global optimization and engineering design problems. Expert Syst. Appl. 2024, 252, 123958.

40. Nabahat, M.; Khiyabani, F.M.; Navmipour, N.J. Hybrid Noise Reduction Filter Using the Gaining–Sharing Knowledge-Based Optimization and the Whale Optimization Algorithms. SN Comput. Sci. 2024, 5, 417.

41. Nunes, H.; Pombo, J.; Mariano, S.; do Rosário Calado, M. Parameter Estimation of Lithium-Ion Batteries Using GSKPSO Hybrid Method Based on Impedance Data. In Proceedings of the 2024 IEEE International Conference on Environment and Electrical Engineering and 2024 IEEE Industrial and Commercial Power Systems Europe (EEEIC/I&CPS Europe), Rome, Italy, 17–20 June 2024; pp. 1–6.

42. Zhong, X.; Duan, M.; Zhang, X.; Cheng, P. A hybrid differential evolution based on gaining-sharing knowledge algorithm and harris hawks optimization. PLoS ONE 2021, 16, e0250951.

43. Navaneetha Krishnan, M.; Thiyagarajan, R. Multi-objective task scheduling in fog computing using improved gaining sharing knowledge based algorithm. Concurr. Comput. Pract. Exp. 2022, 34, e7227.

44. Liang, Z.; Shu, T.; Ding, Z. A novel improved whale optimization algorithm for global optimization and engineering applications. Mathematics 2024, 12, 636.

45. Mohamed, A.W.; Abutarboush, H.F.; Hadi, A.A.; Mohamed, A.K. Gaining-sharing knowledge based algorithm with adaptive parameters for engineering optimization. IEEE Access 2021, 9, 65934–65946.

46. Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K.; Awad, N.H. Evaluating the performance of adaptive gaining-sharing knowledge based algorithm on CEC 2020 benchmark problems. In Proceedings of the 2020 IEEE Congress on Evolutionary Computation, Glasgow, UK, 19–24 July 2020; pp. 1–8.

47. Hodashinsky, I.A. Methods for improving the efficiency of swarm optimization algorithms. A survey. Autom. Remote Control 2020, 82, 935–967.

48. Sadeghi, A.; Daneshvar, A.; Zaj, M.M. Combined ensemble multi-class SVM and fuzzy NSGA-II for trend forecasting and trading in Forex markets. Expert Syst. Appl. 2021, 185, 115566.

49. Jawad, M.A.; Roshdy, H.S.M.; Mohamed, A.W. Enhanced Gaining-Sharing Knowledge-based algorithm. Results Control Optim. 2025, 19, 100542.

50. Zhou, Z.; Shojafar, M.; Abawajy, J.; Bashir, A.K. IADE: An improved differential evolution algorithm to preserve sustainability in a 6G network. IEEE Trans. Green Commun. Netw. 2021, 5, 1747–1760.

51. Arini, F.Y.; Sunat, K.; Soomlek, C. Golden jackal optimization with joint opposite selection: An enhanced nature-inspired optimization algorithm for solving optimization problems. IEEE Access 2022, 10, 128800–128823.

52. Hu, H.; Shan, W.; Tang, Y.; Heidari, A.A.; Chen, H.; Liu, H.; Wang, M.; Escorcia-Gutierrez, J.; Mansour, R.F.; Chen, J. Horizontal and vertical crossover of sine cosine algorithm with quick moves for optimization and feature selection. J. Comput. Des. Eng. 2022, 9, 2524–2555.

53. Khalfi, S.; Iacca, G.; Draa, A. A single-solution–compact hybrid algorithm for continuous optimization. Memetic Comput. 2023, 15, 155–204.

54. Tang, Z.; Wang, K.; Zang, Y.; Zhu, Q.; Todo, Y.; Gao, S. Fractional-order water flow optimizer. Int. J. Comput. Intell. Syst. 2024, 17, 84.

55. Das, S.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems; Technical Report; Jadavpur University: Kolkata, India; Nanyang Technological University: Singapore, 2010.

56. Awad, N.H.; Ali, M.Z.; Liang, J.J.; Qu, B.Y.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2017 Special Session and Competition on Single Objective Bound Constrained Real-Parameter Numerical Optimization; Technical Report; Nanyang Technological University: Singapore, 2016; pp. 1–34.

| Parameter | GSK (Fixed Value) | ATMALS-GSK (Initial Value) | Range of Variation | Discretization Step |
|---|---|---|---|---|
| K | 10 | 10 | [5, 30] | 1 |
| Kf | 0.5 | 0.5 | [0.2, 0.8] | 0.1 |
| Kr | 0.9 | 0.9 | [0.85, 1] | 0.01 |
| P | 0.1 | 0.1 | [0.05, 0.15] | 0.01 |
| PLS | N/A | 1 | [1, 2]/ProbSize | 0.1 |
| NLS | N/A | 0.05 × PopSize | N/A | N/A |
| RPG | N/A | 5 | [3, 5] | N/A |

| K | G | D (Junior) | D (Senior) |
|---|---|---|---|
| 0.5 | 0 | 100 | 0 |
| | 0.25 MaxGen | 87 | 13 |
| | 0.5 MaxGen | 71 | 29 |
| | 0.75 MaxGen | 50 | 50 |
| | MaxGen | 0 | 100 |
| 1 | 0 | 100 | 0 |
| | 0.25 MaxGen | 75 | 25 |
| | 0.5 MaxGen | 50 | 50 |
| | 0.75 MaxGen | 25 | 75 |
| | MaxGen | 0 | 100 |
| 2 | 0 | 100 | 0 |
| | 0.25 MaxGen | 56 | 44 |
| | 0.5 MaxGen | 25 | 75 |
| | 0.75 MaxGen | 6 | 94 |
| | MaxGen | 0 | 100 |
| 5 | 0 | 100 | 0 |
| | 0.25 MaxGen | 24 | 76 |
| | 0.5 MaxGen | 3 | 97 |
| | 0.75 MaxGen | 1 | 99 |
| | MaxGen | 0 | 100 |
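The junior/senior splits tabulated for D = 100 follow the GSK dimension schedule, D(Junior) = D × (1 − G/MaxGen)^K and D(Senior) = D − D(Junior). A minimal sketch, assuming rounding to the nearest integer, reproduces the tabulated values for K = 0.5, 1, and 2 and shows how a larger K hands dimensions to the senior phase sooner. The function name `dimension_split` is hypothetical.

```python
from typing import Tuple

def dimension_split(d: int, g: int, max_gen: int, k: float) -> Tuple[int, int]:
    """Dimensions handled by the junior and senior phases at generation g."""
    d_junior = round(d * (1.0 - g / max_gen) ** k)
    return d_junior, d - d_junior

# Junior dimensions at 0, 0.25, 0.5, 0.75 and 1.0 x MaxGen (MaxGen = 1000).
for k in (0.5, 1, 2):
    print(k, [dimension_split(100, g, 1000, k)[0] for g in (0, 250, 500, 750, 1000)])
```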

| Function | GSK [16] Best | GSK [16] Median | GSK [16] Worst | GSK [16] Mean | GSK [16] STD | ATMALS-GSK Best | ATMALS-GSK Median | ATMALS-GSK Worst | ATMALS-GSK Mean | ATMALS-GSK STD |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.00 | 1.85 × 10⁻²¹ | 1.49 × 10¹ | 3.28 | 5.21 | 0.14 × 10⁻³ | 1.01 × 10¹ | 1.54 × 10¹ | 6.63 | 6.10 |
| 2 | −1.35 × 10¹ | −1.14 × 10¹ | −9.25 | −1.13 × 10¹ | 1.03 | −2.40 × 10¹ | −1.69 × 10¹ | −1.19 × 10¹ | −1.76 × 10¹ | 3.13 |
| 3 | 1.15 × 10⁻⁵ | 1.15 × 10⁻⁵ | 1.15 × 10⁻⁵ | 1.15 × 10⁻⁵ | 9.12 × 10⁻¹³ | 1.15 × 10⁻⁵ | 1.15 × 10⁻⁵ | 1.15 × 10⁻⁵ | 1.15 × 10⁻⁵ | 1.13 × 10⁻²⁰ |
| 4 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 5 | −2.39 × 10¹ | −2.08 × 10¹ | −1.87 × 10¹ | −2.06 × 10¹ | 1.21 | −3.59 × 10¹ | −2.89 × 10¹ | −2.42 × 10¹ | −2.97 × 10¹ | 3.31 |
| 6 | −1.16 × 10¹ | −6.85 | 0.00 | −6.94 | 2.48 | −2.91 × 10¹ | −1.85 × 10¹ | −7.69 | −1.79 × 10¹ | 4.85 |
| 7 | 1.59 | 1.78 | 1.97 | 1.78 | 1.08 × 10⁻¹ | 9.99 × 10⁻¹ | 1.2 | 1.49 | 1.29 | 1.25 × 10⁻¹ |
| 8 | 2.20 × 10² | 2.20 × 10² | 2.20 × 10² | 2.20 × 10² | 0.00 | 2.20 × 10² | 2.20 × 10² | 2.20 × 10² | 2.20 × 10² | 0.00 |
| 9 | 1.29 × 10³ | 2.07 × 10³ | 3.09 × 10³ | 2.11 × 10³ | 5.02 × 10² | 1.52 × 10³ | 2.43 × 10³ | 5.31 × 10³ | 2.67 × 10³ | 9.43 × 10² |
| 10 | −2.18 × 10¹ | −2.16 × 10¹ | −2.14 × 10¹ | −2.16 × 10¹ | 1.19 × 10⁻¹ | −2.18 × 10¹ | −1.75 × 10¹ | −1.30 × 10¹ | −1.81 × 10¹ | 3.07 |
| 11 | 5.09 × 10⁴ | 5.24 × 10⁴ | 5.39 × 10⁴ | 5.24 × 10⁴ | 6.88 × 10² | 5.15 × 10⁴ | 5.23 × 10⁴ | 5.31 × 10⁴ | 5.23 × 10⁴ | 4.29 × 10² |
| 12 | 1.07 × 10⁶ | 1.07 × 10⁶ | 1.08 × 10⁶ | 1.07 × 10⁶ | 1.73 × 10³ | 1.06 × 10⁶ | 1.07 × 10⁶ | 1.07 × 10⁶ | 1.07 × 10⁶ | 2.10 × 10³ |
| 13 | 1.54 × 10⁴ | 1.54 × 10⁴ | 1.55 × 10⁴ | 1.54 × 10⁴ | 2.44 | 1.54 × 10⁴ | 1.54 × 10⁴ | 1.54 × 10⁴ | 1.54 × 10⁴ | 1.29 × 10⁻⁵ |
| 14 | 1.82 × 10⁴ | 1.84 × 10⁴ | 1.86 × 10⁴ | 1.84 × 10⁴ | 1.22 × 10² | 1.80 × 10⁴ | 1.81 × 10⁴ | 1.84 × 10⁴ | 1.82 × 10⁴ | 1.02 × 10² |
| 15 | 3.28 × 10⁴ | 3.28 × 10⁴ | 3.28 × 10⁴ | 3.28 × 10⁴ | 1.55 × 10¹ | 3.27 × 10⁴ | 3.27 × 10⁴ | 3.28 × 10⁴ | 3.27 × 10⁴ | 3.13 × 10¹ |
| 16 | 1.32 × 10⁵ | 1.35 × 10⁵ | 1.39 × 10⁵ | 1.35 × 10⁵ | 2.22 × 10³ | 1.25 × 10⁵ | 1.27 × 10⁵ | 1.29 × 10⁵ | 1.27 × 10⁵ | 9.35 × 10² |
| 17 | 1.97 × 10⁶ | 2.03 × 10⁶ | 2.36 × 10⁶ | 2.09 × 10⁶ | 1.20 × 10⁵ | 1.87 × 10⁶ | 1.89 × 10⁶ | 1.93 × 10⁶ | 1.90 × 10⁶ | 1.61 × 10⁴ |
| 18 | 1.17 × 10⁶ | 1.27 × 10⁶ | 1.57 × 10⁶ | 1.27 × 10⁶ | 7.56 × 10⁴ | 9.36 × 10⁵ | 9.42 × 10⁵ | 9.46 × 10⁵ | 9.42 × 10⁵ | 2.48 × 10³ |
| 19 | 1.75 × 10⁶ | 2.03 × 10⁶ | 2.25 × 10⁶ | 2.00 × 10⁶ | 1.36 × 10⁵ | 9.37 × 10⁵ | 9.47 × 10⁵ | 9.76 × 10⁵ | 9.47 × 10⁵ | 7.47 × 10³ |
| 20 | 1.12 × 10⁶ | 1.29 × 10⁶ | 1.46 × 10⁶ | 1.29 × 10⁶ | 9.20 × 10⁴ | 9.35 × 10⁵ | 9.41 × 10⁵ | 9.47 × 10⁵ | 9.41 × 10⁵ | 3.28 × 10³ |
| 21 | 1.34 × 10¹ | 1.61 × 10¹ | 2.49 × 10¹ | 1.70 × 10¹ | 3.11 | 1.52 × 10¹ | 1.95 × 10¹ | 2.40 × 10¹ | 1.92 × 10¹ | 2.11 |
| 22 | 8.61 | 1.28 × 10¹ | 2.09 × 10¹ | 1.29 × 10¹ | 2.93 | 8.61 | 1.63 × 10¹ | 2.39 × 10¹ | 1.61 × 10¹ | 3.97 |

| Algorithms | R+ | R− | p Value | + | = | − | Dec. |
|---|---|---|---|---|---|---|---|
| ATMALS-GSK vs. GSK | 127 | 26 | 0.0168 | 12 | 5 | 5 | + |
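The entries of this table are consistent with a Wilcoxon signed-rank test: discarding the 5 ties leaves n = 12 + 5 = 17 functions, and R+ + R− = 127 + 26 = 153 = 17 × 18 / 2. The sketch below computes the statistic assuming d = GSK result − ATMALS-GSK result (so, for minimization, R+ collects the ranks where ATMALS-GSK is better) and a two-sided normal approximation without continuity or tie correction; the exact procedure used in the paper is not stated here, and the function names are hypothetical. With R+ = 127, R− = 26, and n = 17 this approximation gives p ≈ 0.0168.

```python
import math
from typing import Sequence, Tuple

def normal_p_value(r_plus: float, r_minus: float, n: int) -> float:
    """Two-sided p value from the normal approximation of the signed-rank statistic."""
    if n == 0:
        return 1.0
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (min(r_plus, r_minus) - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))

def wilcoxon_signed_rank(baseline: Sequence[float],
                         proposed: Sequence[float]) -> Tuple[float, float, float]:
    """Return (R+, R-, p) with d = baseline - proposed; zero differences are dropped."""
    diffs = [b - p for b, p in zip(baseline, proposed) if b != p]
    n = len(diffs)
    ordered = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    pos = 0
    while pos < n:  # give equal |d| values their average rank
        end = pos
        while end + 1 < n and abs(diffs[ordered[end + 1]]) == abs(diffs[ordered[pos]]):
            end += 1
        for k in range(pos, end + 1):
            ranks[ordered[k]] = (pos + end) / 2.0 + 1.0
        pos = end + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return r_plus, r_minus, normal_p_value(r_plus, r_minus, n)
```

For 22 or so paired results an exact signed-rank distribution is also feasible; the normal approximation is used here only because it matches the reported p value.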

| Function | GSK [16] Best | GSK [16] Median | GSK [16] Worst | GSK [16] Mean | GSK [16] STD | ATMALS-GSK Best | ATMALS-GSK Median | ATMALS-GSK Worst | ATMALS-GSK Mean | ATMALS-GSK STD |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 3 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 4 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 5 | 1.53 × 10¹ | 2.01 × 10¹ | 2.58 × 10¹ | 2.03 × 10¹ | 2.79 | 2.99 | 8.47 | 1.00 × 10¹ | 7.57 | 2.71 |
| 6 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 7 | 2.46 × 10¹ | 3.10 × 10¹ | 3.74 × 10¹ | 3.07 × 10¹ | 3.08 | 1.27 × 10¹ | 1.69 × 10¹ | 1.85 × 10¹ | 1.63 × 10¹ | 2.21 |
| 8 | 1.45 × 10¹ | 1.99 × 10¹ | 2.58 × 10¹ | 2.02 × 10¹ | 2.92 | 3.98 | 7.97 | 1.09 × 10¹ | 7.57 | 2.11 |
| 9 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 10 | 7.54 × 10² | 1.10 × 10³ | 1.29 × 10³ | 1.06 × 10³ | 1.33 × 10² | 1.40 × 10² | 5.07 × 10² | 6.57 × 10² | 4.55 × 10² | 1.93 × 10² |
| 11 | 0.00 | 0.00 | 1.02 × 10⁻⁸ | 0.00 | 0.00 | 6.40 × 10⁻¹ | 1.77 | 2.67 | 1.67 | 7.10 × 10⁻¹ |
| 12 | 1.42 × 10¹ | 7.57 × 10¹ | 2.69 × 10² | 8.93 × 10¹ | 7.26 × 10¹ | 2.12 × 10⁻¹ | 1.05 × 10¹ | 3.45 × 10¹ | 1.12 × 10¹ | 9.45 |
| 13 | 9.19 × 10⁻¹ | 6.56 | 9.73 | 6.56 | 1.41 | 3.32 × 10⁻¹ | 1.80 | 5.70 | 2.65 | 2.18 |
| 14 | 5.19 × 10⁻³ | 5.42 | 1.19 × 10¹ | 5.88 | 3.06 | 3.57 × 10⁻¹ | 2.94 | 3.56 | 2.78 | 9.25 × 10⁻¹ |
| 15 | 2.96 × 10⁻³ | 1.75 × 10⁻¹ | 5.00 × 10⁻¹ | 2.22 × 10⁻¹ | 2.13 × 10⁻¹ | 1.45 × 10⁻² | 1.30 × 10⁻¹ | 2.63 × 10⁻¹ | 1.47 × 10⁻¹ | 8.60 × 10⁻² |
| 16 | 3.29 × 10⁻¹ | 9.48 × 10⁻¹ | 1.18 × 10¹ | 4.27 | 4.95 | 4.89 × 10⁻¹ | 1.11 | 1.80 | 1.12 | 4.70 × 10⁻¹ |
| 17 | 9.76 × 10⁻¹ | 9.94 | 2.52 × 10¹ | 1.17 × 10¹ | 7.09 | 2.26 | 1.26 × 10¹ | 2.27 × 10¹ | 1.25 × 10¹ | 9.02 × 10¹ |
| 18 | 2.05 × 10⁻² | 4.30 × 10⁻¹ | 5.01 × 10⁻¹ | 3.20 × 10⁻¹ | 1.92 × 10⁻¹ | 3.94 × 10⁻² | 3.32 × 10⁻¹ | 4.95 × 10⁻¹ | 3.04 × 10⁻¹ | 1.85 × 10⁻¹ |
| 19 | 2.81 × 10⁻² | 6.95 × 10⁻² | 1.80 | 1.55 × 10⁻¹ | 3.51 × 10⁻¹ | 5.36 × 10⁻² | 1.27 × 10⁻¹ | 2.20 × 10⁻¹ | 1.39 × 10⁻¹ | 5.66 × 10⁻² |
| 20 | 3.12 × 10⁻¹ | 3.12 × 10⁻¹ | 2.03 × 10¹ | 1.19 | 3.99 | 1.16 | 1.97 | 4.55 | 2.51 | 1.14 |
| 21 | 1.00 × 10² | 2.20 × 10² | 2.31 × 10² | 1.93 × 10² | 5.07 × 10¹ | 1.00 × 10² | 1.00 × 10² | 1.00 × 10² | 1.00 × 10² | 0.00 |
| 22 | 1.00 × 10² | 1.00 × 10² | 1.02 × 10² | 1.00 × 10² | 4.87 × 10⁻¹ | 0.00 | 1.00 × 10² | 1.00 × 10² | 6.38 × 10¹ | 4.73 × 10¹ |
| 23 | 3.11 × 10² | 3.18 × 10² | 3.29 × 10² | 3.18 × 10² | 3.99 | 2.24 × 10⁻¹ | 3.06 × 10² | 3.10 × 10² | 2.76 × 10² | 9.72 × 10¹ |
| 24 | 2.78 × 10² | 3.50 × 10² | 3.57 × 10² | 3.44 × 10² | 1.87 × 10¹ | 1.00 × 10² | 3.35 × 10² | 3.39 × 10² | 3.11 × 10² | 7.44 × 10¹ |
| 25 | 3.98 × 10² | 4.34 × 10² | 4.46 × 10² | 4.27 × 10² | 2.05 × 10¹ | 3.97 × 10² | 3.97 × 10² | 3.98 × 10² | 3.97 × 10² | 1.32 × 10⁻¹ |
| 26 | 3.00 × 10² | 3.00 × 10² | 3.00 × 10² | 3.00 × 10² | 0.00 | 3.00 × 10² | 3.00 × 10² | 3.00 × 10² | 3.00 × 10² | 0.00 |
| 27 | 3.89 × 10² | 3.90 × 10² | 3.90 × 10² | 3.89 × 10² | 2.17 × 10⁻¹ | 3.89 × 10² | 3.89 × 10² | 3.89 × 10² | 3.89 × 10² | 1.98 × 10⁻¹ |
| 28 | 3.00 × 10² | 3.00 × 10² | 3.97 × 10² | 3.12 × 10² | 3.20 × 10¹ | 3.00 × 10² | 3.00 × 10² | 3.00 × 10² | 3.00 × 10² | 0.00 |
| 29 | 2.39 × 10² | 2.48 × 10² | 2.57 × 10² | 2.48 × 10² | 4.76 | 2.32 × 10² | 2.39 × 10² | 2.43 × 10² | 2.38 × 10² | 3.33 |
| 30 | 3.96 × 10² | 4.65 × 10² | 5.02 × 10² | 4.56 × 10² | 3.44 × 10¹ | 3.94 × 10² | 4.42 × 10² | 4.42 × 10² | 4.29 × 10² | 2.09 × 10¹ |

| Function | GSK [16] Best | GSK [16] Median | GSK [16] Worst | GSK [16] Mean | GSK [16] STD | ATMALS-GSK Best | ATMALS-GSK Median | ATMALS-GSK Worst | ATMALS-GSK Mean | ATMALS-GSK STD |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 3 | 0.00 | 5.66 × 10⁻⁸ | 8.54 × 10⁻⁶ | 7.07 × 10⁻⁷ | 1.82 × 10⁻⁶ | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| 4 | 9.28 × 10⁻³ | 4.00 | 7.25 × 10¹ | 1.11 × 10¹ | 2.17 × 10¹ | 0.00 | 1.99 | 3.98 | 1.99 | 2.10 |
| 5 | 1.36 × 10² | 1.62 × 10² | 1.73 × 10² | 1.60 × 10² | 8.87 | 1.08 × 10¹ | 2.67 × 10¹ | 9.72 × 10¹ | 1.53 × 10¹ | 1.90 × 10¹ |
| 6 | 0.00 | 5.47 × 10⁻⁷ | 8.42 × 10⁻⁶ | 1.52 × 10⁻⁶ | 2.36 × 10⁻⁶ | 2.26 × 10⁻⁵ | 5.71 × 10⁻⁵ | 1.39 × 10⁻⁴ | 6.82 × 10⁻⁵ | 3.90 × 10⁻⁵ |
| 7 | 1.64 × 10² | 1.87 × 10² | 1.99 × 10² | 1.87 × 10² | 8.40 | 2.52 × 10¹ | 5.60 × 10¹ | 9.83 × 10¹ | 4.33 × 10¹ | 1.15 × 10¹ |
| 8 | 1.24 × 10² | 1.56 × 10² | 1.73 × 10² | 1.55 × 10² | 1.11 × 10¹ | 1.07 × 10¹ | 2.34 × 10¹ | 5.06 × 10¹ | 1.35 × 10¹ | 1.29 × 10¹ |
| 9 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 8.95 × 10⁻² | 1.79 × 10⁻² | 3.77 × 10⁻² |
| 10 | 5.84 × 10³ | 6.68 × 10³ | 7.16 × 10³ | 6.69 × 10³ | 3.54 × 10² | 1.63 × 10³ | 2.96 × 10³ | 3.49 × 10³ | 2.80 × 10³ | 5.90 × 10² |
| 11 | 6.00 × 10⁻¹ | 8.57 | 9.85 × 10¹ | 3.30 × 10¹ | 3.83 × 10¹ | 9.94 | 2.04 × 10¹ | 7.19 × 10¹ | 1.46 × 10¹ | 1.73 × 10¹ |
| 12 | 1.03 × 10³ | 5.32 × 10³ | 1.91 × 10⁴ | 6.62 × 10³ | 4.59 × 10³ | 9.02 × 10² | 3.12 × 10³ | 8.19 × 10³ | 3.84 × 10³ | 2.62 × 10³ |
| 13 | 4.61 × 10¹ | 9.11 × 10¹ | 1.82 × 10² | 9.83 × 10¹ | 3.42 × 10¹ | 2.06 × 10¹ | 3.91 × 10¹ | 6.34 × 10¹ | 3.01 × 10¹ | 1.12 × 10¹ |
| 14 | 4.72 × 10¹ | 5.73 × 10¹ | 6.65 × 10¹ | 5.69 × 10¹ | 5.49 | 1.04 × 10¹ | 1.79 × 10¹ | 4.57 × 10¹ | 1.78 × 10¹ | 4.26 |
| 15 | 2.13 | 9.17 | 7.48 × 10¹ | 1.45 × 10¹ | 1.49 × 10¹ | 5.88 | 1.75 × 10¹ | 1.92 × 10¹ | 1.43 × 10¹ | 5.56 |
| 16 | 4.35 × 10² | 7.76 × 10² | 1.15 × 10³ | 7.96 × 10² | 1.94 × 10² | 1.97 × 10¹ | 5.47 × 10² | 7.50 × 10² | 2.53 × 10² | 2.81 × 10² |
| 17 | 6.28 × 10¹ | 2.04 × 10² | 3.77 × 10² | 1.89 × 10² | 9.44 × 10¹ | 2.92 × 10¹ | 6.37 × 10¹ | 1.41 × 10² | 4.71 × 10¹ | 2.30 × 10¹ |
| 18 | 2.25 × 10¹ | 3.69 × 10¹ | 4.80 × 10¹ | 3.68 × 10¹ | 5.42 | 2.25 × 10¹ | 2.74 × 10¹ | 4.02 × 10¹ | 3.02 × 10¹ | 6.67 |
| 19 | 3.42 | 1.13 × 10¹ | 2.22 × 10¹ | 1.29 × 10¹ | 6.02 | 8.03 | 1.37 × 10¹ | 1.65 × 10¹ | 1.30 × 10¹ | 3.34 |
| 20 | 1.34 | 5.51 × 10¹ | 4.51 × 10² | 1.08 × 10² | 1.14 × 10² | 3.73 × 10¹ | 5.58 × 10¹ | 1.62 × 10² | 4.74 × 10¹ | 3.35 × 10¹ |
| 21 | 3.20 × 10² | 3.49 × 10² | 3.55 × 10² | 3.46 × 10² | 8.30 | 2.51 × 10² | 2.65 × 10² | 2.79 × 10² | 2.66 × 10² | 9.12 |
| 22 | 1.00 × 10² | 1.00 × 10² | 1.00 × 10² | 1.00 × 10² | 0.00 | 1.00 × 10² | 1.00 × 10² | 1.00 × 10² | 1.00 × 10² | 0.00 |
| 23 | 3.50 × 10² | 4.86 × 10² | 5.07 × 10² | 4.70 × 10² | 4.49 × 10¹ | 3.97 × 10² | 4.28 × 10² | 4.43 × 10² | 3.44 × 10² | 1.70 × 10¹ |
| 24 | 5.29 × 10² | 5.70 × 10² | 5.86 × 10² | 5.68 × 10² | 1.45 × 10¹ | 4.52 × 10² | 4.77 × 10² | 4.91 × 10² | 4.75 × 10² | 1.48 × 10¹ |
| 25 | 3.87 × 10² | 3.87 × 10² | 3.87 × 10² | 3.87 × 10² | 2.11 × 10⁻¹ | 3.83 × 10² | 3.86 × 10² | 3.87 × 10² | 3.86 × 10² | 1.07 |
| 26 | 6.93 × 10² | 9.56 × 10² | 2.12 × 10³ | 9.87 × 10² | 2.49 × 10² | 1.45 × 10³ | 1.87 × 10³ | 1.97 × 10³ | 1.79 × 10³ | 1.93 × 10² |
| 27 | 4.80 × 10² | 4.92 × 10² | 5.14 × 10² | 4.93 × 10² | 8.02 | 4.87 × 10² | 4.97 × 10² | 5.06 × 10² | 4.96 × 10² | 6.31 |
| 28 | 3.00 × 10² | 3.00 × 10² | 4.04 × 10² | 3.21 × 10² | 4.22 × 10¹ | 3.00 × 10² | 3.00 × 10² | 4.03 × 10² | 3.10 × 10² | 3.26 × 10¹ |
| 29 | 4.23 × 10² | 5.92 × 10² | 7.69 × 10² | 5.77 × 10² | 1.01 × 10² | 4.09 × 10² | 5.13 × 10² | 5.68 × 10² | 4.32 × 10² | 5.95 × 10¹ |
| 30 | 1.94 × 10³ | 2.09 × 10³ | 2.36 × 10³ | 2.08 × 10³ | 9.27 × 10¹ | 1.97 × 10³ | 2.06 × 10³ | 2.12 × 10³ | 2.06 × 10³ | 5.55 × 10¹ |

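| Function | GSK [16] Best | GSK [16] Median | GSK [16] Worst | GSK [16] Mean | GSK [16] STD | ATMALS-GSK (Proposed) Best | ATMALS-GSK (Proposed) Median | ATMALS-GSK (Proposed) Worst | ATMALS-GSK (Proposed) Mean | ATMALS-GSK (Proposed) STD |

|---|---|---|---|---|---|---|---|---|---|---|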

| 1 | 1.71 × 101 | 4.73 × 102 | 4.61 × 103 | 1.09 × 103 | 1.24 × 103 | 9.10 × 10−4 | 1.25 × 10−1 | 3.41 × 10−1 | 1.25 × 10−1 | 2.15 × 10−1 |

| 3 | 1.61 × 103 | 3.79 × 103 | 6.73 × 103 | 3.85 × 103 | 1.51 × 103 | 1.12 × 102 | 7.85 × 102 | 1.46 × 103 | 7.85 × 102 | 6.73 × 102 |

| 4 | 1.33 × 10−2 | 7.21 × 101 | 1.46 × 102 | 8.33 × 101 | 5.00 × 101 | 8.20 | 9.80 | 2.70 × 101 | 9.80 | 1.82 × 101 |

| 5 | 2.65 × 102 | 3.25 × 102 | 3.45 × 102 | 3.20 × 102 | 1.79 × 101 | 2.98 × 101 | 4.21 × 101 | 9.48 × 101 | 3.21 × 101 | 1.27 × 101 |

| 6 | 3.11 × 10−7 | 2.80 × 10−6 | 1.64 × 10−5 | 3.78 × 10−6 | 3.52 × 10−6 | 1.17 × 10−7 | 7.14 × 10−6 | 1.55 × 10−5 | 7.14 × 10−6 | 8.31 × 10−6 |

| 7 | 3.29 × 102 | 3.74 × 102 | 3.86 × 102 | 3.70 × 102 | 1.41 × 101 | 9.68 × 101 | 1.08 × 102 | 1.19 × 102 | 1.08 × 102 | 1.12 × 101 |

| 8 | 2.90 × 102 | 3.28 × 102 | 3.38 × 102 | 3.24 × 102 | 1.36 × 101 | 5.16 × 101 | 6.15 × 101 | 7.14 × 101 | 3.85 × 101 | 9.92 |

| 9 | 0.00 | 0.00 | 8.95 × 10−2 | 1.07 × 10−2 | 2.79 × 10−2 | 1.50 × 10−2 | 1.91 × 10−1 | 3.97 × 10−1 | 1.91 × 10−1 | 2.06 × 10−1 |

| 10 | 1.21 × 104 | 1.28 × 104 | 1.37 × 104 | 1.30 × 104 | 4.50 × 102 | 7.88 × 103 | 8.80 × 103 | 9.73 × 103 | 8.80 × 103 | 9.25 × 102 |

| 11 | 2.34 × 101 | 2.96 × 101 | 1.45 × 102 | 3.45 × 101 | 2.32 × 101 | 2.59 × 101 | 3.11 × 101 | 3.63 × 101 | 2.91 × 101 | 5.17 |

| 12 | 2.16 × 103 | 7.71 × 103 | 3.17 × 104 | 9.46 × 103 | 7.01 × 103 | 1.53 × 104 | 3.65 × 104 | 5.77 × 104 | 3.65 × 104 | 2.12 × 104 |

| 13 | 7.41 × 101 | 6.24 × 102 | 8.98 × 103 | 1.49 × 103 | 2.16 × 103 | 6.72 × 101 | 1.19 × 102 | 1.71 × 102 | 1.19 × 102 | 5.17 × 101 |

| 14 | 5.76 × 101 | 1.28 × 102 | 1.42 × 102 | 1.24 × 102 | 1.87 × 101 | 2.77 × 101 | 3.69 × 101 | 4.60 × 101 | 3.69 × 101 | 9.02 |

| 15 | 2.52 × 101 | 3.62 × 101 | 1.00 × 102 | 4.20 × 101 | 1.68 × 101 | 3.31 × 101 | 4.45 × 101 | 5.59 × 101 | 4.45 × 101 | 1.14 × 101 |

| 16 | 1.30 × 102 | 2.01 × 103 | 2.70 × 103 | 1.83 × 103 | 6.59 × 102 | 4.56 × 102 | 7.91 × 102 | 1.13 × 103 | 4.91 × 102 | 3.35 × 102 |

| 17 | 7.75 × 102 | 1.39 × 103 | 1.63 × 103 | 1.35 × 103 | 1.90 × 102 | 4.77 × 102 | 7.08 × 102 | 9.39 × 102 | 7.08 × 102 | 2.31 × 102 |

| 18 | 1.78 × 102 | 5.01 × 102 | 1.45 × 103 | 5.98 × 102 | 3.37 × 102 | 4.40 × 101 | 1.17 × 102 | 1.90 × 102 | 1.17 × 102 | 7.30 × 101 |

| 19 | 1.84 × 101 | 2.92 × 101 | 5.13 × 101 | 3.05 × 101 | 9.59 | 2.05 × 101 | 2.83 × 101 | 3.62 × 101 | 2.83 × 101 | 7.93 |

| 20 | 1.17 × 103 | 1.40 × 103 | 1.62 × 103 | 1.37 × 103 | 1.28 × 102 | 5.44 × 102 | 6.91 × 102 | 8.38 × 102 | 6.91 × 102 | 1.48 × 102 |

| 21 | 4.90 × 102 | 5.25 × 102 | 5.46 × 102 | 5.21 × 102 | 1.31 × 101 | 1.91 × 102 | 1.98 × 102 | 2.05 × 102 | 1.98 × 102 | 6.81 |

| 22 | 1.00 × 102 | 1.31 × 104 | 1.35 × 104 | 1.10 × 104 | 4.85 × 103 | 6.29 × 103 | 8.71 × 103 | 1.11 × 104 | 8.71 × 103 | 2.41 × 103 |

| 23 | 4.20 × 102 | 4.46 × 102 | 7.49 × 102 | 5.42 × 102 | 1.39 × 102 | 4.66 × 102 | 4.79 × 102 | 4.92 × 102 | 4.39 × 102 | 1.27 × 101 |

| 24 | 5.01 × 102 | 5.24 × 102 | 8.11 × 102 | 6.34 × 102 | 1.39 × 102 | 5.41 × 102 | 5.54 × 102 | 5.68 × 102 | 5.14 × 102 | 1.35 × 101 |

| 25 | 4.60 × 102 | 5.64 × 102 | 6.11 × 102 | 5.56 × 102 | 4.62 × 101 | 5.18 × 102 | 5.45 × 102 | 5.72 × 102 | 5.45 × 102 | 2.74 × 101 |

| 26 | 1.06 × 103 | 1.29 × 103 | 1.42 × 103 | 1.27 × 103 | 9.18 × 101 | 9.95 × 102 | 1.35 × 103 | 1.71 × 103 | 1.35 × 103 | 3.55 × 102 |

| 27 | 5.18 × 102 | 5.64 × 102 | 9.16 × 102 | 5.92 × 102 | 8.29 × 101 | 5.43 × 102 | 5.71 × 102 | 5.99 × 102 | 5.71 × 102 | 2.78 × 101 |

| 28 | 4.59 × 102 | 4.97 × 102 | 5.70 × 102 | 4.94 × 102 | 2.24 × 101 | 4.64 × 102 | 4.88 × 102 | 5.12 × 102 | 4.88 × 102 | 2.39 × 101 |

| 29 | 3.28 × 102 | 3.55 × 102 | 4.09 × 102 | 3.60 × 102 | 2.23 × 101 | 4.59 × 102 | 5.01 × 102 | 5.43 × 102 | 5.01 × 102 | 4.19 × 101 |

| 30 | 5.79 × 105 | 5.81 × 105 | 6.52 × 105 | 5.96 × 105 | 2.24 × 104 | 5.74 × 105 | 5.92 × 105 | 6.11 × 105 | 5.92 × 105 | 1.85 × 104 |

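| Function | GSK [16] Best | GSK [16] Median | GSK [16] Worst | GSK [16] Mean | GSK [16] STD | ATMALS-GSK (Proposed) Best | ATMALS-GSK (Proposed) Median | ATMALS-GSK (Proposed) Worst | ATMALS-GSK (Proposed) Mean | ATMALS-GSK (Proposed) STD |

|---|---|---|---|---|---|---|---|---|---|---|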

| 1 | 3.96 × 10−1 | 5.38 × 103 | 2.11 × 104 | 5.80 × 103 | 4.63 × 103 | 1.96 × 10−1 | 1.98 | 7.98 | 2.55 | 4.79 |

| 3 | 6.01 × 104 | 1.15 × 105 | 1.48 × 105 | 1.15 × 105 | 2.15 × 104 | 4.87 × 104 | 4.91 × 104 | 5.30 × 104 | 4.91 × 104 | 1.26 × 104 |

| 4 | 8.46 × 101 | 2.17 × 102 | 2.89 × 102 | 2.05 × 102 | 4.57 × 101 | 1.81 × 102 | 1.97 × 102 | 2.03 × 102 | 1.98 × 102 | 1.45 × 101 |

| 5 | 7.26 × 101 | 7.70 × 102 | 8.14 × 102 | 5.31 × 102 | 3.39 × 102 | 3.70 × 102 | 5.30 × 102 | 5.90 × 102 | 5.40 × 102 | 2.17 × 101 |

| 6 | 1.25 × 10−5 | 6.83 × 10−5 | 3.14 × 10−2 | 1.72 × 10−3 | 6.46 × 10−3 | 1.80 × 10−6 | 6.20 × 10−3 | 3.90 × 10−2 | 1.24 × 10−2 | 2.73 × 10−2 |

| 7 | 8.33 × 102 | 8.76 × 102 | 9.16 × 102 | 8.75 × 102 | 1.83 × 101 | 6.99 × 102 | 8.21 × 102 | 8.99 × 102 | 8.20 × 102 | 1.91 × 101 |

| 8 | 5.90 × 101 | 7.36 × 102 | 8.18 × 102 | 4.92 × 102 | 3.41 × 102 | 3.95 × 102 | 4.91 × 102 | 5.88 × 102 | 4.92 × 102 | 1.12 × 101 |

| 9 | 5.45 | 7.69 | 1.72 × 101 | 8.46 | 3.41 | 4.75 | 8.90 | 1.60 × 101 | 8.80 | 3.14 |

| 10 | 2.87 × 104 | 2.94 × 104 | 3.04 × 104 | 2.95 × 104 | 4.44 × 102 | 2.28 × 104 | 2.45 × 104 | 2.85 × 104 | 2.44 × 104 | 1.01 × 103 |

| 11 | 1.61 × 102 | 2.65 × 102 | 4.64 × 102 | 2.78 × 102 | 6.85 × 101 | 1.30 × 102 | 1.70 × 102 | 2.80 × 102 | 1.76 × 102 | 6.28 × 101 |

| 12 | 2.25 × 104 | 6.53 × 104 | 3.23 × 105 | 8.34 × 104 | 7.52 × 104 | 1.20 × 104 | 2.92 × 105 | 5.88 × 105 | 3.78 × 105 | 1.69 × 105 |

| 13 | 5.17 × 101 | 2.85 × 103 | 9.31 × 103 | 3.20 × 103 | 2.64 × 103 | 1.06 × 102 | 1.30 × 102 | 2.00 × 102 | 1.31 × 102 | 5.43 × 101 |

| 14 | 3.30 × 102 | 2.53 × 103 | 1.69 × 104 | 4.64 × 103 | 4.47 × 103 | 6.20 × 102 | 2.49 × 103 | 3.50 × 103 | 2.57 × 103 | 2.31 × 103 |

| 15 | 3.20 × 101 | 4.23 × 102 | 5.20 × 103 | 7.33 × 102 | 1.09 × 103 | 5.90 × 101 | 9.90 × 101 | 1.40 × 102 | 1.04 × 102 | 4.05 × 101 |

| 16 | 2.87 × 102 | 8.25 × 102 | 7.04 × 103 | 2.27 × 103 | 2.61 × 103 | 8.60 × 102 | 1.80 × 103 | 2.30 × 103 | 1.89 × 103 | 4.24 × 102 |

| 17 | 2.14 × 103 | 4.12 × 103 | 4.59 × 103 | 3.91 × 103 | 6.68 × 102 | 8.00 × 102 | 1.30 × 103 | 2.80 × 103 | 1.57 × 103 | 6.92 × 102 |

| 18 | 1.93 × 104 | 4.43 × 104 | 1.87 × 105 | 5.73 × 104 | 3.63 × 104 | 9.50 × 103 | 1.13 × 104 | 1.30 × 104 | 1.14 × 104 | 4.25 × 103 |

| 19 | 5.04 × 101 | 8.41 × 102 | 3.25 × 103 | 1.00 × 103 | 8.30 × 102 | 9.00 × 101 | 1.55 × 102 | 2.30 × 102 | 1.61 × 102 | 7.63 × 101 |

| 20 | 3.97 × 103 | 4.52 × 103 | 4.80 × 103 | 4.46 × 103 | 2.22 × 102 | 3.10 × 103 | 2.80 × 103 | 2.90 × 103 | 2.84 × 103 | 5.21 × 102 |

| 21 | 2.83 × 102 | 3.29 × 102 | 1.02 × 103 | 6.07 × 102 | 3.37 × 102 | 2.10 × 102 | 2.85 × 102 | 3.10 × 102 | 2.89 × 102 | 2.11 × 101 |

| 22 | 2.86 × 104 | 3.01 × 104 | 3.09 × 104 | 3.00 × 104 | 4.57 × 102 | 2.10 × 104 | 2.35 × 104 | 3.80 × 104 | 2.43 × 104 | 1.92 × 103 |

| 23 | 5.86 × 102 | 6.11 × 102 | 6.49 × 102 | 6.11 × 102 | 1.58 × 101 | 5.25 × 102 | 5.85 × 102 | 6.45 × 102 | 5.87 × 102 | 1.45 × 101 |

| 24 | 8.89 × 102 | 9.33 × 102 | 9.69 × 102 | 9.32 × 102 | 1.70 × 101 | 8.40 × 102 | 9.10 × 102 | 9.50 × 102 | 9.11 × 102 | 1.77 × 101 |

| 25 | 7.61 × 102 | 8.20 × 102 | 8.99 × 102 | 8.21 × 102 | 4.34 × 101 | 7.20 × 102 | 7.50 × 102 | 7.80 × 102 | 7.53 × 102 | 4.18 × 101 |

| 26 | 3.33 × 103 | 3.63 × 103 | 3.96 × 103 | 3.66 × 103 | 1.78 × 102 | 3.12 × 103 | 3.35 × 103 | 3.65 × 103 | 3.42 × 103 | 1.28 × 103 |

| 27 | 6.20 × 102 | 6.46 × 102 | 7.30 × 102 | 6.57 × 102 | 3.07 × 101 | 6.10 × 102 | 6.78 × 102 | 7.90 × 102 | 6.87 × 102 | 3.47 × 101 |

| 28 | 4.99 × 102 | 5.57 × 102 | 6.09 × 102 | 5.53 × 102 | 3.25 × 101 | 3.10 × 102 | 3.30 × 102 | 3.85 × 102 | 3.51 × 102 | 1.10 × 102 |

| 29 | 9.12 × 102 | 1.21 × 103 | 1.56 × 103 | 1.21 × 103 | 1.74 × 102 | 1.01 × 103 | 1.37 × 103 | 1.78 × 103 | 1.39 × 103 | 2.14 × 102 |

| 30 | 2.41 × 103 | 3.02 × 103 | 3.72 × 103 | 2.99 × 103 | 2.73 × 102 | 2.40 × 103 | 3.21 × 103 | 3.40 × 103 | 3.23 × 103 | 8.70 × 102 |
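
Each row of the Best/Median/Worst/Mean/STD tables above summarizes one benchmark function's final results over independent runs, and the Mean and STD columns appear to be the same quantities reported as Mean ± STD in the comparison tables that follow. The sketch below shows how such a row can be computed from per-run values. It is a minimal illustration, not the authors' evaluation code: the helper names are hypothetical, the per-run values are assumed to be final errors f(x) − f(x*) (customary for CEC 2017) under minimisation, and STD is assumed to be the sample standard deviation, which the tables do not specify. It also includes an ordering check, because any summary of a single set of runs must satisfy Best ≤ Median ≤ Worst and Best ≤ Mean ≤ Worst.

```python
import statistics


def summarize_runs(errors):
    """Return the Best/Median/Worst/Mean/STD summary of one function's runs.

    Assumes minimisation (Best = smallest error) and uses the sample
    standard deviation (n - 1 denominator); both are assumptions.
    """
    if len(errors) < 2:
        raise ValueError("need at least two runs to compute a sample STD")
    return {
        "Best": min(errors),
        "Median": statistics.median(errors),
        "Worst": max(errors),
        "Mean": statistics.fmean(errors),
        "STD": statistics.stdev(errors),
    }


def format_mean_std(summary, digits=2):
    """Render a 'Mean ± STD' cell in scientific notation."""
    return f"{summary['Mean']:.{digits}e} ± {summary['STD']:.{digits}e}"


def is_consistent(best, median, worst, mean):
    """The median and the mean of a set of runs must both lie in [Best, Worst]."""
    return best <= median <= worst and best <= mean <= worst


if __name__ == "__main__":
    runs = [1.0, 2.0, 3.0, 4.0, 5.0]  # toy per-run errors, for illustration only
    summary = summarize_runs(runs)
    print(format_mean_std(summary))  # 3.00e+00 ± 1.58e+00
```

Computing every column from the same list of runs is what makes the ordering check meaningful: a Mean below Best, for example, cannot arise from one set of runs.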

| Fun. | GSK [16] | AGSK [46] | APGSK [45] | FDBAGSK [36] | eGSK [49] | ATMALS-GSK |

|---|---|---|---|---|---|---|

| 1 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| 3 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| 4 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| 5 | 2.03 × 101 ± 2.79 | 6.33 ± 1.89 | 8.04 ± 2.41 | 5.83 ± 1.64 | 4.82 ± 1.90 | 7.57 ± 2.71 |

| 6 | 0.00 ± 0.00 | 9.52 × 10−6 ± 2.42 × 10−5 | 4.45 × 10−5 ± 1.33 × 10−4 | 5.59 × 10−6 ± 1.35 × 10−5 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| 7 | 3.07 × 101 ± 3.08 | 1.80 × 101 ± 2.39 | 1.90 × 101 ± 3.50 | 1.81 × 101 ± 3.57 | 1.54 × 101 ± 2.43 | 1.63 × 101 ± 2.21 |

| 8 | 2.02 × 101 ± 2.92 | 8.12 ± 2.29 | 9.16 ± 3.07 | 7.54 ± 2.30 | 5.29 ± 2.08 | 7.57 ± 2.11 |

| 9 | 0.00 ± 0.00 | 1.42 × 10−2 ± 6.64 × 10−2 | 1.76 × 10−3 ± 1.25 × 10−2 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| 10 | 1.06 × 103 ± 1.33 × 102 | 3.20 × 102 ± 8.64 × 101 | 3.05 × 102 ± 1.05 × 102 | 3.02 × 102 ± 1.15 × 102 | 7.59 × 102 ± 2.35 × 102 | 4.55 × 102 ± 1.93 × 102 |

| 11 | 0.00 ± 0.00 | 3.99 × 10−1 ± 8.06 × 10−1 | 1.16 ± 9.76 × 10−1 | 9.30 × 10−1 ± 9.07 × 10−1 | 0.00 ± 0.00 | 1.67 ± 7.10 × 10−1 |

| 12 | 8.93 × 101 ± 7.26 × 101 | 4.46 × 101 ± 5.34 × 101 | 9.50 × 101 ± 8.64 × 101 | 7.06 × 101 ± 5.51 × 101 | 5.58 × 101 ± 6.17 × 101 | 1.12 × 101 ± 9.45 |

| 13 | 6.56 ± 1.41 | 3.35 ± 2.16 | 4.29 ± 2.70 | 4.15 ± 1.96 | 5.50 ± 6.56 × 10−1 | 2.65 ± 2.18 |

| 14 | 5.88 ± 3.06 | 3.91 × 10−2 ± 1.95 × 10−1 | 5.73 × 10−1 ± 6.10 × 10−1 | 1.41 × 10−1 ± 3.70 × 10−1 | 2.20 × 10−1 ± 4.61 × 10−1 | 2.78 ± 9.25 × 10−1 |

| 15 | 2.22 × 10−1 ± 2.13 × 10−1 | 1.97 × 10−2 ± 2.88 × 10−2 | 1.48 × 10−1 ± 2.02 × 10−1 | 3.33 × 10−2 ± 4.30 × 10−2 | 3.06 × 10−1 ± 2.18 × 10−1 | 1.47 × 10−1 ± 8.60 × 10−2 |

| 16 | 4.27 ± 4.95 | 7.01 × 10−1 ± 3.37 × 10−1 | 7.44 × 10−1 ± 7.35 × 10−1 | 1.52 ± 1.01 | 4.37 ± 5.17 | 1.12 ± 4.70 × 10−1 |

| 17 | 1.17 × 101 ± 7.09 | 1.48 ± 9.38 × 10−1 | 1.08 ± 8.74 × 10−1 | 1.41 ± 1.11 | 6.01 ± 6.22 | 1.25 × 101 ± 9.02 × 101 |

| 18 | 3.20 × 10−1 ± 1.92 × 10−1 | 9.94 × 10−2 ± 1.56 × 10−1 | 3.97 × 10−1 ± 3.95 × 10−1 | 1.25 × 10−1 ± 1.09 × 10−1 | 6.95 × 10−1 ± 2.84 | 3.04 × 10−1 ± 1.85 × 10−1 |

| 19 | 1.55 × 10−1 ± 3.51 × 10−1 | 6.96 × 10−2 ± 4.46 × 10−2 | 8.32 × 10−2 ± 9.37 × 10−2 | 8.91 × 10−2 ± 4.13 × 10−2 | 3.75 × 10−2 ± 4.31 × 10−2 | 1.39 × 10−1 ± 5.66 × 10−2 |

| 20 | 1.19 ± 3.99 | 4.28 × 10−2 ± 1.08 × 10−1 | 9.79 × 10−2 ± 2.84 × 10−1 | 4.28 × 10−2 ± 1.08 × 10−1 | 8.16 × 10−1 ± 2.79 | 2.51 ± 1.14 |

| 21 | 1.93 × 102 ± 5.07 × 101 | 1.02 × 102 ± 1.53 × 101 | 1.03 × 102 ± 3.34 × 101 | 9.80 × 101 ± 1.40 × 101 | 1.77 × 102 ± 4.80 × 101 | 1.00 × 102 ± 0.00 |

| 22 | 1.00 × 102 ± 4.87 × 10−1 | 8.00 × 101 ± 3.74 × 101 | 5.24 × 101 ± 4.54 × 101 | 5.87 × 101 ± 4.55 × 101 | 9.82 × 101 ± 1.40 × 101 | 6.38 × 101 ± 4.73 × 101 |

| 23 | 3.18 × 102 ± 3.99 | 2.97 × 102 ± 5.75 × 101 | 3.00 × 102 ± 5.77 × 101 | 2.98 × 102 ± 4.42 × 101 | 3.04 × 102 ± 2.17 | 2.76 × 102 ± 9.72 × 101 |

| 24 | 3.44 × 102 ± 1.87 × 101 | 1.04 × 102 ± 1.99 × 101 | 9.33 × 101 ± 2.36 × 101 | 1.00 × 102 ± 3.45 × 101 | 3.05 × 102 ± 7.57 × 101 | 3.11 × 102 ± 7.44 × 101 |

| 25 | 4.27 × 102 ± 2.05 × 101 | 3.86 × 102 ± 5.84 × 101 | 3.22 × 102 ± 1.31 × 102 | 3.57 × 102 ± 1.03 × 102 | 4.17 × 102 ± 2.30 × 101 | 3.97 × 102 ± 1.32 × 10−1 |

| 26 | 3.00 × 102 ± 0.00 | 2.53 × 102 ± 1.10 × 102 | 1.17 × 102 ± 1.10 × 102 | 2.33 × 102 ± 1.09 × 102 | 3.00 × 102 ± 6.43 × 10−14 | 3.00 × 102 ± 0.00 |

| 27 | 3.89 × 102 ± 2.17 × 10−1 | 3.89 × 102 ± 5.59 × 10−1 | 3.89 × 102 ± 1.73 | 3.89 × 102 ± 6.64 × 10−1 | 3.89 × 102 ± 1.70 × 10−1 | 3.89 × 102 ± 1.98 × 10−1 |

| 28 | 3.12 × 102 ± 3.20 × 101 | 2.29 × 102 ± 1.29 × 102 | 2.05 × 102 ± 1.40 × 102 | 2.18 × 102 ± 1.35 × 102 | 3.00 × 102 ± 1.37 × 10−13 | 3.00 × 102 ± 0.00 |

| 29 | 2.48 × 102 ± 4.76 | 2.43 × 102 ± 5.66 | 2.44 × 102 ± 1.03 × 101 | 2.44 × 102 ± 6.14 | 2.32 × 102 ± 2.88 | 2.38 × 102 ± 3.33 |

| 30 | 4.56 × 102 ± 3.44 × 101 | 4.70 × 102 ± 4.92 × 101 | 5.15 × 102 ± 8.71 × 101 | 4.97 × 102 ± 8.37 × 101 | 4.45 × 102 ± 3.58 × 101 | 4.29 × 102 ± 2.09 × 101 |

| Fun. | GSK [16] | AGSK [46] | APGSK [45] | FDBAGSK [36] | eGSK [49] | ATMALS-GSK |

|---|---|---|---|---|---|---|

| 1 | 0.00 ± 0.00 | 0.00 ± 0.00 | 1.65 × 10−5 ± 8.63 × 10−5 | 0.00 ± 0.00 | 1.75 × 10−1 ± 1.24 | 0.00 ± 0.00 |

| 3 | 7.07 × 10−7 ± 1.82 × 10−6 | 8.62 × 10−3 ± 3.06 × 10−2 | 4.37 × 10−1 ± 8.49 × 10−1 | 1.75 × 10−1 ± 1.08 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| 4 | 1.11 × 101 ± 2.17 × 101 | 8.87 ± 1.81 × 101 | 6.98 ± 1.68 × 101 | 3.07 × 101 ± 3.18 × 101 | 2.58 ± 1.92 | 1.99 ± 2.10 |

| 5 | 1.60 × 102 ± 8.87 | 9.21 × 101 ± 1.27 × 101 | 1.15 × 102 ± 1.33 × 101 | 7.12 × 101 ± 1.07 × 101 | 2.31 × 101 ± 8.24 | 1.53 × 101 ± 1.90 × 101 |

| 6 | 1.52 × 10−6 ± 2.36 × 10−6 | 6.98 × 10−6 ± 1.80 × 10−5 | 1.11 × 10−4 ± 3.68 × 10−4 | 4.71 × 10−6 ± 8.00 × 10−6 | 3.78 × 10−6 ± 4.11 × 10−6 | 6.82 × 10−5 ± 3.90 × 10−5 |

| 7 | 1.87 × 102 ± 8.40 | 1.17 × 102 ± 9.96 | 1.53 × 102 ± 1.78 × 101 | 1.06 × 102 ± 9.57 | 5.31 × 101 ± 8.29 | 4.33 × 101 ± 1.15 × 101 |

| 8 | 1.55 × 102 ± 1.11 × 101 | 9.74 × 101 ± 1.35 × 101 | 1.13 × 102 ± 1.66 × 101 | 7.87 × 101 ± 1.19 × 101 | 2.45 × 101 ± 8.47 | 1.35 × 101 ± 1.29 × 101 |

| 9 | 0.00 ± 0.00 | 7.50 ± 7.08 | 3.65 × 101 ± 3.79 × 101 | 2.22 ± 1.84 | 8.91 × 10−3 ± 6.36 × 10−2 | 1.79 × 10−2 ± 3.77 × 10−2 |

| 10 | 6.69 × 103 ± 3.54 × 102 | 3.31 × 103 ± 1.86 × 102 | 2.80 × 103 ± 2.40 × 102 | 3.24 × 103 ± 2.22 × 102 | 4.92 × 103 ± 9.42 × 102 | 2.80 × 103 ± 5.90 × 102 |

| 11 | 3.30 × 101 ± 3.83 × 101 | 3.65 × 101 ± 1.47 × 101 | 3.19 × 101 ± 1.32 × 101 | 1.96 × 101 ± 5.74 | 1.33 × 101 ± 2.06 × 101 | 1.46 × 101 ± 1.73 × 101 |

| 12 | 6.62 × 103 ± 4.59 × 103 | 8.86 × 103 ± 3.97 × 103 | 1.31 × 104 ± 9.01 × 103 | 1.04 × 104 ± 5.90 × 103 | 4.24 × 103 ± 4.97 × 103 | 3.84 × 103 ± 2.62 × 103 |

| 13 | 9.83 × 101 ± 3.42 × 101 | 6.17 × 101 ± 4.51 × 101 | 5.00 × 101 ± 2.35 × 101 | 6.03 × 101 ± 2.78 × 101 | 3.17 × 101 ± 2.31 × 101 | 3.01 × 101 ± 1.12 × 101 |

| 14 | 5.69 × 101 ± 5.49 | 4.19 × 101 ± 5.98 | 4.38 × 101 ± 1.02 × 101 | 3.76 × 101 ± 4.71 | 2.32 × 101 ± 3.71 | 1.78 × 101 ± 4.26 |

| 15 | 1.45 × 101 ± 1.49 × 101 | 1.90 × 101 ± 9.21 | 2.37 × 101 ± 1.98 × 101 | 1.42 × 101 ± 4.35 | 5.28 ± 3.64 | 1.43 × 101 ± 5.56 |

| 16 | 7.96 × 102 ± 1.94 × 102 | 7.40 × 102 ± 1.32 × 102 | 5.45 × 102 ± 1.39 × 102 | 6.42 × 102 ± 1.30 × 102 | 2.96 × 102 ± 2.22 × 102 | 2.53 × 102 ± 2.81 × 102 |

| 17 | 1.89 × 102 ± 9.44 × 101 | 1.82 × 102 ± 8.02 × 101 | 1.92 × 102 ± 7.73 × 101 | 1.65 × 102 ± 7.82 × 101 | 5.92 × 101 ± 3.89 × 101 | 4.71 × 101 ± 2.30 × 101 |

| 18 | 3.68 × 101 ± 5.42 | 5.28 × 102 ± 6.26 × 102 | 1.31 × 103 ± 1.14 × 103 | 2.80 × 102 ± 2.52 × 102 | 3.27 × 101 ± 2.09 × 101 | 3.02 × 101 ± 6.67 |

| 19 | 1.29 × 101 ± 6.02 | 1.52 × 101 ± 2.51 | 1.48 × 101 ± 3.35 | 1.37 × 101 ± 1.57 | 6.52 ± 1.88 | 1.30 × 101 ± 3.34 |

| 20 | 1.08 × 102 ± 1.14 × 102 | 2.42 × 102 ± 8.46 × 101 | 2.65 × 102 ± 7.96 × 101 | 2.04 × 102 ± 7.54 × 101 | 5.24 × 101 ± 4.31 × 101 | 4.74 × 101 ± 3.35 × 101 |

| 21 | 3.46 × 102 ± 8.30 | 2.91 × 102 ± 2.46 × 101 | 2.75 × 102 ± 7.85 × 101 | 2.47 × 102 ± 6.00 × 101 | 2.22 × 102 ± 9.58 | 2.66 × 102 ± 9.12 |

| 22 | 1.00 × 102 ± 0.00 | 3.51 × 102 ± 8.70 × 102 | 1.65 × 102 ± 4.42 × 102 | 1.00 × 102 ± 1.06 | 1.00 × 102 ± 7.33 × 10−13 | 1.00 × 102 ± 0.00 |

| 23 | 4.70 × 102 ± 4.49 × 101 | 4.39 × 102 ± 1.38 × 101 | 4.69 × 102 ± 2.84 × 101 | 4.18 × 102 ± 9.60 | 3.59 × 102 ± 8.30 | 3.44 × 102 ± 1.70 × 101 |

| 24 | 5.68 × 102 ± 1.45 × 101 | 5.06 × 102 ± 1.57 × 101 | 5.65 × 102 ± 5.90 × 101 | 4.96 × 102 ± 1.32 × 101 | 4.33 × 102 ± 7.37 | 4.75 × 102 ± 1.48 × 101 |

| 25 | 3.87 × 102 ± 2.11 × 10−1 | 3.87 × 102 ± 1.92 | 3.87 × 102 ± 1.34 | 3.87 × 102 ± 6.73 × 10−1 | 3.87 × 102 ± 1.86 | 3.86 × 102 ± 1.07 |

| 26 | 9.87 × 102 ± 2.49 × 102 | 5.85 × 102 ± 6.62 × 102 | 3.74 × 102 ± 4.90 × 102 | 7.18 × 102 ± 6.73 × 102 | 8.55 × 102 ± 3.21 × 102 | 1.79 × 103 ± 1.93 × 102 |

| 27 | 4.93 × 102 ± 8.02 | 4.98 × 102 ± 1.04 × 101 | 5.10 × 102 ± 1.35 × 101 | 5.01 × 102 ± 1.05 × 101 | 4.97 × 102 ± 8.59 | 4.96 × 102 ± 6.31 |

| 28 | 3.21 × 102 ± 4.22 × 101 | 3.13 × 102 ± 4.15 × 101 | 3.16 × 102 ± 3.46 × 101 | 3.32 × 102 ± 5.33 × 101 | 3.10 × 102 ± 3.10 × 101 | 3.10 × 102 ± 3.26 × 101 |

| 29 | 5.77 × 102 ± 1.01 × 102 | 6.41 × 102 ± 8.28 × 101 | 6.30 × 102 ± 5.35 × 101 | 5.88 × 102 ± 5.92 × 101 | 4.35 × 102 ± 4.07 × 101 | 4.32 × 102 ± 5.95 × 101 |

| 30 | 2.08 × 103 ± 9.27 × 101 | 2.14 × 103 ± 8.88 × 101 | 2.25 × 103 ± 1.79 × 102 | 2.18 × 103 ± 1.95 × 102 | 2.08 × 103 ± 1.35 × 102 | 2.06 × 103 ± 5.55 × 101 |

| Fun. | GSK [16] | AGSK [46] | APGSK [45] | FDBAGSK [36] | eGSK [49] | ATMALS-GSK |

|---|---|---|---|---|---|---|

| 1 | 1.09 × 103 ± 1.24 × 103 | 1.59 ± 3.54 | 9.59 × 102 ± 1.25 × 103 | 1.86 × 10−1 ± 6.16 × 10−1 | 2.82 × 10−1 ± 8.75 × 10−1 | 1.25 × 10−1 ± 2.15 × 10−1 |

| 3 | 3.85 × 103 ± 1.51 × 103 | 1.87 × 103 ± 2.10 × 103 | 3.60 × 103 ± 1.66 × 103 | 6.83 × 102 ± 7.91 × 102 | 9.42 × 10−7 ± 5.65 × 10−8 | 7.85 × 102 ± 6.73 × 102 |

| 4 | 8.33 × 101 ± 5.00 × 101 | 5.80 × 101 ± 4.07 × 101 | 8.13 × 101 ± 3.96 × 101 | 5.73 × 101 ± 4.13 × 101 | 1.02 × 101 ± 2.78 × 101 | 9.80 ± 1.82 × 101 |

| 5 | 3.20 × 102 ± 1.79 × 101 | 2.57 × 102 ± 2.06 × 101 | 2.71 × 102 ± 2.56 × 101 | 1.98 × 102 ± 2.09 × 101 | 3.79 × 101 ± 9.72 | 3.21 × 101 ± 1.27 × 101 |

| 6 | 3.78 × 10−6 ± 3.52 × 10−6 | 2.48 × 10−4 ± 3.74 × 10−4 | 1.17 × 10−3 ± 2.24 × 10−3 | 1.73 × 10−4 ± 2.59 × 10−4 | 1.18 × 10−5 ± 9.13 × 10−6 | 7.14 × 10−6 ± 8.31 × 10−6 |

| 7 | 3.70 × 102 ± 1.41 × 101 | 2.99 × 102 ± 2.85 × 101 | 3.53 × 102 ± 3.40 × 101 | 2.54 × 102 ± 2.41 × 101 | 9.07 × 101 ± 9.86 | 1.08 × 102 ± 1.12 × 101 |

| 8 | 3.24 × 102 ± 1.36 × 101 | 2.56 × 102 ± 2.81 × 101 | 2.75 × 102 ± 2.44 × 101 | 1.95 × 102 ± 1.91 × 101 | 4.09 × 101 ± 1.01 × 101 | 3.85 × 101 ± 9.92 |

| 9 | 1.07 × 10−2 ± 2.79 × 10−2 | 5.13 × 101 ± 4.26 × 101 | 1.46 × 103 ± 1.16 × 103 | 2.34 × 101 ± 2.49 × 101 | 2.02 × 10−1 ± 4.51 × 10−1 | 1.91 × 10−1 ± 2.06 × 10−1 |

| 10 | 1.30 × 104 ± 4.50 × 102 | 7.60 × 103 ± 3.54 × 102 | 6.06 × 103 ± 2.61 × 102 | 7.57 × 103 ± 3.37 × 102 | 1.06 × 104 ± 1.22 × 103 | 8.80 × 103 ± 9.25 × 102 |

| 11 | 3.45 × 101 ± 2.32 × 101 | 1.25 × 102 ± 2.74 × 101 | 9.18 × 101 ± 2.22 × 101 | 7.83 × 101 ± 2.09 × 101 | 2.97 × 101 ± 6.59 | 2.91 × 101 ± 5.17 |

| 12 | 9.46 × 103 ± 7.01 × 103 | 9.71 × 104 ± 8.84 × 104 | 1.08 × 105 ± 5.96 × 104 | 1.22 × 105 ± 8.58 × 104 | 3.98 × 104 ± 2.56 × 104 | 3.65 × 104 ± 2.12 × 104 |

| 13 | 1.49 × 103 ± 2.16 × 103 | 3.50 × 102 ± 1.74 × 102 | 3.31 × 102 ± 1.81 × 102 | 3.17 × 102 ± 1.76 × 102 | 8.40 × 101 ± 7.01 × 101 | 1.19 × 102 ± 5.17 × 101 |

| 14 | 1.24 × 102 ± 1.87 × 101 | 1.11 × 102 ± 3.55 × 101 | 1.58 × 102 ± 1.09 × 102 | 8.35 × 101 ± 1.15 × 101 | 4.03 × 101 ± 8.34 | 3.69 × 101 ± 9.02 |

| 15 | 4.20 × 101 ± 1.68 × 101 | 8.43 × 101 ± 3.82 × 101 | 1.06 × 102 ± 3.85 × 101 | 6.12 × 101 ± 9.46 | 5.08 × 101 ± 2.03 × 101 | 4.45 × 101 ± 1.14 × 101 |

| 16 | 1.83 × 103 ± 6.59 × 102 | 1.47 × 103 ± 1.39 × 102 | 1.02 × 103 ± 1.83 × 102 | 1.19 × 103 ± 1.84 × 102 | 5.01 × 102 ± 4.01 × 102 | 4.91 × 102 ± 3.35 × 102 |

| 17 | 1.35 × 103 ± 1.90 × 102 | 1.10 × 103 ± 1.51 × 102 | 8.70 × 102 ± 1.14 × 102 | 8.45 × 102 ± 1.71 × 102 | 7.48 × 102 ± 2.95 × 102 | 7.08 × 102 ± 2.31 × 102 |

| 18 | 5.98 × 102 ± 3.37 × 102 | 1.07 × 104 ± 1.23 × 104 | 9.11 × 103 ± 5.51 × 103 | 3.72 × 103 ± 2.25 × 103 | 1.21 × 102 ± 3.42 × 102 | 1.17 × 102 ± 7.30 × 101 |

| 19 | 3.05 × 101 ± 9.59 | 4.23 × 101 ± 8.07 | 4.03 × 101 ± 6.80 | 3.86 × 101 ± 6.89 | 3.00 × 101 ± 9.42 | 2.83 × 101 ± 7.93 |

| 20 | 1.37 × 103 ± 1.28 × 102 | 8.68 × 102 ± 1.25 × 102 | 7.39 × 102 ± 1.35 × 102 | 6.04 × 102 ± 1.68 × 102 | 7.57 × 102 ± 2.08 × 102 | 6.91 × 102 ± 1.48 × 102 |

| 21 | 5.21 × 102 ± 1.31 × 101 | 4.47 × 102 ± 3.33 × 101 | 4.55 × 102 ± 2.34 × 101 | 3.89 × 102 ± 2.14 × 101 | 2.36 × 102 ± 8.86 | 1.98 × 102 ± 6.81 |

| 22 | 1.10 × 104 ± 4.85 × 103 | 7.90 × 103 ± 1.64 × 103 | 6.18 × 103 ± 1.76 × 103 | 6.79 × 103 ± 2.97 × 103 | 9.57 × 103 ± 3.65 × 103 | 8.71 × 103 ± 2.41 × 103 |

| 23 | 5.42 × 102 ± 1.39 × 102 | 6.65 × 102 ± 2.22 × 101 | 7.69 × 102 ± 3.06 × 101 | 6.19 × 102 ± 2.23 × 101 | 4.51 × 102 ± 1.14 × 101 | 4.39 × 102 ± 1.27 × 101 |

| 24 | 6.34 × 102 ± 1.39 × 102 | 7.26 × 102 ± 2.94 × 101 | 8.40 × 102 ± 4.04 × 101 | 6.90 × 102 ± 2.31 × 101 | 5.20 × 102 ± 8.56 | 5.14 × 102 ± 1.35 × 101 |

| 25 | 5.56 × 102 ± 4.62 × 101 | 5.52 × 102 ± 3.40 × 101 | 5.63 × 102 ± 3.10 × 101 | 5.22 × 102 ± 4.06 × 101 | 5.63 × 102 ± 3.89 × 101 | 5.45 × 102 ± 2.74 × 101 |

| 26 | 1.27 × 103 ± 9.18 × 101 | 3.59 × 103 ± 7.34 × 102 | 2.80 × 103 ± 1.80 × 103 | 3.00 × 103 ± 4.95 × 102 | 1.16 × 103 ± 4.43 × 102 | 1.35 × 103 ± 3.55 × 102 |

| 27 | 5.92 × 102 ± 8.29 × 101 | 5.28 × 102 ± 1.63 × 101 | 5.62 × 102 ± 3.51 × 101 | 5.24 × 102 ± 1.94 × 101 | 6.09 × 102 ± 1.26 × 102 | 5.71 × 102 ± 2.78 × 101 |

| 28 | 4.94 × 102 ± 2.24 × 101 | 4.99 × 102 ± 3.50 × 101 | 4.93 × 102 ± 1.91 × 101 | 4.83 × 102 ± 2.86 × 101 | 4.93 × 102 ± 3.43 × 101 | 4.88 × 102 ± 2.39 × 101 |

| 29 | 3.60 × 102 ± 2.23 × 101 | 1.00 × 103 ± 1.36 × 102 | 1.01 × 103 ± 1.16 × 102 | 8.32 × 102 ± 1.26 × 102 | 4.37 × 102 ± 5.82 × 101 | 5.01 × 102 ± 4.19 × 101 |

| 30 | 5.96 × 105 ± 2.24 × 104 | 5.83 × 105 ± 6.20 × 103 | 5.86 × 105 ± 5.35 × 103 | 5.86 × 105 ± 1.60 × 104 | 6.09 × 105 ± 3.27 × 104 | 5.92 × 105 ± 1.85 × 104 |

| Fun. | GSK [16] | AGSK [46] | APGSK [45] | FDBAGSK [36] | eGSK [49] | ATMALS-GSK |

|---|---|---|---|---|---|---|

| 1 | 5.80 × 103 ± 4.63 × 103 | 5.08 × 101 ± 1.19 × 102 | 4.38 × 103 ± 2.89 × 103 | 5.99 ± 9.79 | 9.58 × 103 ± 4.05 × 104 | 2.55 ± 4.79 |

| 3 | 1.15 × 105 ± 2.15 × 104 | 1.08 × 105 ± 2.11 × 104 | 7.83 × 104 ± 9.64 × 103 | 5.55 × 104 ± 1.42 × 104 | 2.00 × 10−3 ± 7.54 × 10−3 | 4.91 × 104 ± 1.26 × 104 |

| 4 | 2.05 × 102 ± 4.57 × 101 | 1.68 × 102 ± 4.47 × 101 | 2.10 × 102 ± 5.52 × 101 | 2.07 × 102 ± 4.53 × 101 | 6.30 ± 1.87 × 101 | 4.90 ± 1.45 × 101 |

| 5 | 5.31 × 102 ± 3.39 × 102 | 7.28 × 102 ± 5.28 × 101 | 7.72 × 102 ± 4.46 × 101 | 6.66 × 102 ± 5.37 × 101 | 1.12 × 102 ± 1.72 × 101 | 9.70 × 101 ± 2.17 × 101 |

| 6 | 1.72 × 10−3 ± 6.46 × 10−3 | 2.01 × 10−2 ± 1.20 × 10−2 | 3.30 × 10−1 ± 2.97 × 10−1 | 1.63 × 10−2 ± 1.27 × 10−2 | 4.01 × 10−2 ± 5.90 × 10−2 | 1.24 × 10−2 ± 2.73 × 10−2 |

| 7 | 8.75 × 102 ± 1.83 × 101 | 8.73 × 102 ± 5.20 × 101 | 1.17 × 103 ± 6.49 × 101 | 8.03 × 102 ± 5.29 × 101 | 2.13 × 102 ± 2.45 × 101 | 1.68 × 102 ± 1.91 × 101 |

| 8 | 4.92 × 102 ± 3.41 × 102 | 7.15 × 102 ± 4.81 × 101 | 7.82 × 102 ± 4.48 × 101 | 6.47 × 102 ± 5.60 × 101 | 1.12 × 102 ± 1.66 × 101 | 9.65 × 101 ± 1.12 × 101 |

| 9 | 8.46 ± 3.41 | 5.50 × 102 ± 2.39 × 102 | 1.34 × 104 ± 4.65 × 103 | 7.14 × 102 ± 3.16 × 102 | 1.98 × 101 ± 1.11 × 101 | 1.05 × 101 ± 3.14 |

| 10 | 2.95 × 104 ± 4.44 × 102 | 2.33 × 104 ± 6.30 × 102 | 1.82 × 104 ± 4.05 × 102 | 2.34 × 104 ± 6.43 × 102 | 2.52 × 104 ± 3.03 × 103 | 2.44 × 104 ± 1.01 × 103 |

| 11 | 2.78 × 102 ± 6.85 × 101 | 8.81 × 102 ± 1.30 × 102 | 9.17 × 102 ± 1.39 × 102 | 9.43 × 102 ± 1.41 × 102 | 1.86 × 102 ± 7.58 × 101 | 1.76 × 102 ± 6.28 × 101 |

| 12 | 8.34 × 104 ± 7.52 × 104 | 2.36 × 105 ± 9.14 × 104 | 3.86 × 105 ± 1.42 × 105 | 4.37 × 105 ± 1.86 × 105 | 4.57 × 105 ± 2.05 × 105 | 3.78 × 105 ± 1.69 × 105 |

| 13 | 3.20 × 103 ± 2.64 × 103 | 3.61 × 103 ± 2.30 × 103 | 3.66 × 103 ± 2.39 × 103 | 4.10 × 103 ± 3.05 × 103 | 9.96 × 101 ± 6.48 × 101 | 1.31 × 102 ± 5.43 × 101 |

| 14 | 4.64 × 103 ± 4.47 × 103 | 1.57 × 104 ± 1.14 × 104 | 2.47 × 104 ± 1.82 × 104 | 2.17 × 104 ± 1.30 × 104 | 3.12 × 103 ± 2.51 × 103 | 2.57 × 103 ± 2.31 × 103 |

| 15 | 7.33 × 102 ± 1.09 × 103 | 4.34 × 102 ± 1.65 × 102 | 5.57 × 102 ± 2.25 × 102 | 4.64 × 102 ± 9.10 × 101 | 8.68 × 101 ± 3.39 × 101 | 1.04 × 102 ± 4.05 × 101 |

| 16 | 2.27 × 103 ± 2.61 × 103 | 4.11 × 103 ± 1.62 × 102 | 2.89 × 103 ± 3.19 × 102 | 3.68 × 103 ± 2.09 × 102 | 1.34 × 103 ± 4.90 × 102 | 1.89 × 103 ± 4.24 × 102 |

| 17 | 3.91 × 103 ± 6.68 × 102 | 2.87 × 103 ± 1.17 × 102 | 2.32 × 103 ± 1.77 × 102 | 2.51 × 103 ± 2.85 × 102 | 1.68 × 103 ± 7.56 × 102 | 1.57 × 103 ± 6.92 × 102 |

| 18 | 5.73 × 104 ± 3.63 × 104 | 6.22 × 104 ± 2.83 × 104 | 1.12 × 105 ± 4.34 × 104 | 7.75 × 104 ± 3.64 × 104 | 9.77 × 103 ± 4.86 × 103 | 1.14 × 104 ± 4.25 × 103 |

| 19 | 1.00 × 103 ± 8.30 × 102 | 1.82 × 102 ± 4.94 × 101 | 2.39 × 102 ± 1.06 × 102 | 1.93 × 102 ± 5.25 × 101 | 1.96 × 102 ± 3.37 × 102 | 1.61 × 102 ± 7.63 × 101 |

| 20 | 4.46 × 103 ± 2.22 × 102 | 2.76 × 103 ± 1.53 × 102 | 2.45 × 103 ± 2.07 × 102 | 2.41 × 103 ± 2.63 × 102 | 3.02 × 103 ± 6.43 × 102 | 2.84 × 103 ± 5.21 × 102 |

| 21 | 6.07 × 102 ± 3.37 × 102 | 9.36 × 102 ± 3.92 × 101 | 9.75 × 102 ± 5.17 × 101 | 8.77 × 102 ± 5.08 × 101 | 3.27 × 102 ± 1.75 × 101 | 2.89 × 102 ± 2.11 × 101 |

| 22 | 3.00 × 104 ± 4.57 × 102 | 2.38 × 104 ± 3.51 × 103 | 1.99 × 104 ± 3.84 × 102 | 2.41 × 104 ± 7.56 × 102 | 2.64 × 104 ± 2.41 × 103 | 2.43 × 104 ± 1.92 × 103 |

| 23 | 6.11 × 102 ± 1.58 × 101 | 1.19 × 103 ± 2.41 × 101 | 1.37 × 103 ± 3.88 × 101 | 1.04 × 103 ± 5.64 × 101 | 6.26 × 102 ± 1.94 × 101 | 5.87 × 102 ± 1.45 × 101 |

| 24 | 9.32 × 102 ± 1.70 × 101 | 1.62 × 103 ± 5.79 × 101 | 1.65 × 103 ± 5.48 × 101 | 1.53 × 103 ± 5.13 × 101 | 9.57 × 102 ± 2.07 × 101 | 9.11 × 102 ± 1.77 × 101 |

| 25 | 8.21 × 102 ± 4.34 × 101 | 8.07 × 102 ± 5.07 × 101 | 8.19 × 102 ± 3.76 × 101 | 7.71 × 102 ± 5.78 × 101 | 7.85 × 102 ± 6.35 × 101 | 7.53 × 102 ± 4.18 × 101 |

| 26 | 3.66 × 103 ± 1.78 × 102 | 1.09 × 104 ± 1.11 × 103 | 1.22 × 104 ± 7.50 × 102 | 1.01 × 104 ± 7.08 × 102 | 3.06 × 103 ± 1.64 × 103 | 3.42 × 103 ± 1.28 × 103 |

| 27 | 6.57 × 102 ± 3.07 × 101 | 6.41 × 102 ± 5.25 × 101 | 9.63 × 102 ± 5.64 × 101 | 6.37 × 102 ± 3.41 × 101 | 7.08 × 102 ± 4.65 × 101 | 6.87 × 102 ± 3.47 × 101 |

| 28 | 5.53 × 102 ± 3.25 × 101 | 5.63 × 102 ± 3.15 × 101 | 5.74 × 102 ± 2.83 × 101 | 5.72 × 102 ± 2.75 × 101 | 3.84 × 102 ± 1.17 × 102 | 3.51 × 102 ± 1.10 × 102 |

| 29 | 1.21 × 103 ± 1.74 × 102 | 3.69 × 103 ± 1.61 × 102 | 3.71 × 103 ± 2.41 × 102 | 3.17 × 103 ± 2.80 × 102 | 1.55 × 103 ± 2.86 × 102 | 1.39 × 103 ± 2.14 × 102 |

| 30 | 2.99 × 103 ± 2.73 × 102 | 5.01 × 103 ± 2.41 × 103 | 7.42 × 103 ± 2.35 × 103 | 4.71 × 103 ± 2.90 × 103 | 3.68 × 103 ± 7.86 × 102 | 3.23 × 103 ± 8.70 × 102 |
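
The comparison tables report one Mean ± STD entry per algorithm and function. One common way to condense such a table is an average rank per algorithm, of the kind used by the Friedman test: on each function the algorithms are ranked by mean error (1 = lowest), tied means share the average of their positions, and the ranks are then averaged over all functions. The sketch below illustrates that idea under those assumptions; it is not necessarily the ranking procedure used in this paper, and `average_ranks` is a hypothetical helper. The usage example takes the means of GSK, eGSK, and ATMALS-GSK for functions 1, 4, 5, and 7 from the comparison table whose function 1 row starts with GSK at 0.00 ± 0.00 and eGSK at 1.75 × 10−1.

```python
def average_ranks(means_by_algorithm):
    """Average rank per algorithm (1 = lowest mean error), ties averaged.

    means_by_algorithm maps an algorithm name to its list of mean errors,
    one entry per benchmark function, in the same function order.
    """
    names = list(means_by_algorithm)
    lengths = {len(v) for v in means_by_algorithm.values()}
    if len(lengths) != 1:
        raise ValueError("every algorithm needs one mean per function")
    n_funcs = lengths.pop()
    totals = {name: 0.0 for name in names}
    for f in range(n_funcs):
        # Sort (mean, name) pairs so equal means sit next to each other.
        scores = sorted((means_by_algorithm[name][f], name) for name in names)
        i = 0
        while i < len(scores):
            j = i
            while j + 1 < len(scores) and scores[j + 1][0] == scores[i][0]:
                j += 1
            # Zero-based positions i..j hold ranks i+1..j+1; share their average.
            shared = (i + j) / 2 + 1
            for k in range(i, j + 1):
                totals[scores[k][1]] += shared
            i = j + 1
    return {name: totals[name] / n_funcs for name in names}


if __name__ == "__main__":
    means = {
        "GSK": [0.0, 11.1, 160.0, 187.0],
        "eGSK": [0.175, 2.58, 23.1, 53.1],
        "ATMALS-GSK": [0.0, 1.99, 15.3, 43.3],
    }
    print(average_ranks(means))
    # {'GSK': 2.625, 'eGSK': 2.25, 'ATMALS-GSK': 1.125}
```

Ranking on means alone ignores the STD column; a formal comparison would pair such ranks with a significance test over the per-run results.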

| Fun. | IADE [50] | GJO-JOS [51] | QCSCA [52] | cSM [53] | FOWFO [54] | ATMALS-GSK |

|---|---|---|---|---|---|---|

| 1 | 8.54 × 102 ± 1.51 × 103 | 2.30 × 104 ± 1.40 × 104 | 3.09 × 104 ± 1.37 × 105 | 2.19 × 103 ± 2.39 × 103 | 3.91 × 10−4 ± 3.18 × 10−4 | 0.00 ± 0.00 |

| 3 | 3.00 × 102 ± 8.90 × 10−10 | 3.00 × 102 ± 3.70 × 10−1 | 3.62 × 102 ± 1.59 × 102 | 1.38 × 10−12 ± 9.10 × 10−13 | 4.58 × 10−7 ± 4.09 × 10−7 | 0.00 ± 0.00 |

| 4 | 3.51 × 103 ± 4.55 × 10−13 | 4.10 × 102 ± 1.70 | 4.11 × 102 ± 1.79 × 101 | 6.32 × 10−1 ± 3.23 × 10−1 | 5.60 × 10−6 ± 1.71 × 10−5 | 0.00 ± 0.00 |

| 5 | 6.18 × 102 ± 3.88 | 5.20 × 102 ± 1.10 × 101 | 5.22 × 102 ± 7.13 | 1.46 × 101 ± 4.84 | 5.70 ± 1.69 | 7.57 ± 2.71 |

| 6 | 6.43 × 102 ± 6.73 | 6.00 × 102 ± 9.60 × 10−1 | 6.01 × 102 ± 2.69 × 10−1 | 5.44 × 10−4 ± 1.93 × 10−3 | 1.91 × 10−2 ± 1.04 × 10−2 | 0.00 ± 0.00 |

| 7 | 7.38 × 102 ± 1.53 × 101 | 7.30 × 102 ± 1.20 × 101 | 6.20 × 102 ± 3.72 | 2.35 × 101 ± 7.14 | 1.73 × 101 ± 3.03 | 1.63 × 101 ± 2.21 |

| 8 | 8.13 × 102 ± 4.02 | 8.20 × 102 ± 8.70 | 8.20 × 102 ± 5.90 | 1.57 × 101 ± 7.46 | 6.65 ± 2.21 | 7.57 ± 2.11 |

| 9 | 1.54 × 103 ± 5.51 × 101 | 9.00 × 102 ± 2.90 × 10−1 | 9.00 × 102 ± 1.31 × 10−1 | 3.56 × 10−2 ± 2.42 × 10−1 | 3.68 × 10−4 ± 8.83 × 10−4 | 0.00 ± 0.00 |

| 10 | 1.77 × 103 ± 2.69 × 102 | 1.70 × 103 ± 4.00 × 102 | 1.69 × 103 ± 2.11 × 102 | 5.92 × 102 ± 2.36 × 102 | 2.56 × 102 ± 1.24 × 102 | 4.55 × 102 ± 1.93 × 102 |

| 11 | 1.12 × 103 ± 6.18 | 1.10 × 103 ± 8.60 | 1.12 × 103 ± 6.32 | 1.32 × 101 ± 6.38 | 1.51 ± 9.92 × 10−1 | 1.67 ± 7.10 × 10−1 |

| 12 | 8.05 × 103 ± 1.29 × 104 | 5.60 × 105 ± 6.30 × 105 | 1.43 × 104 ± 1.39 × 104 | 5.79 × 104 ± 4.39 × 104 | 3.42 × 101 ± 4.12 × 101 | 1.12 × 101 ± 9.45 |

| 13 | 4.96 × 103 ± 7.30 × 103 | 1.10 × 104 ± 5.70 × 103 | 6.08 × 103 ± 6.93 × 103 | 4.92 × 103 ± 6.76 × 103 | 5.09 ± 2.97 | 2.65 ± 2.18 |

| 14 | 1.42 × 103 ± 2.02 × 101 | 1.50 × 103 ± 1.90 × 101 | 1.43 × 103 ± 2.49 × 101 | 8.09 × 101 ± 3.66 × 101 | 2.49 ± 1.78 | 2.78 ± 9.25 × 10−1 |

| 15 | 1.51 × 103 ± 6.90 | 1.60 × 103 ± 7.40 × 101 | 1.65 × 103 ± 4.16 × 102 | 4.40 × 102 ± 3.34 × 102 | 5.33 × 10−1 ± 3.54 × 10−1 | 1.47 × 10−1 ± 8.60 × 10−2 |

| 16 | 1.70 × 103 ± 1.17 × 102 | 1.80 × 103 ± 1.40 × 102 | 1.63 × 103 ± 4.81 × 101 | 5.18 × 101 ± 6.35 × 101 | 1.86 ± 6.26 × 10−1 | 1.12 ± 4.70 × 10−1 |

| 17 | 1.76 × 103 ± 6.04 × 101 | 1.70 × 103 ± 1.20 × 101 | 1.74 × 103 ± 2.17 × 101 | 3.76 × 101 ± 2.94 × 101 | 5.21 ± 2.30 | 1.25 × 101 ± 9.02 × 101 |

| 18 | 1.83 × 103 ± 9.11 | 3.00 × 104 ± 1.30 × 104 | 7.20 × 103 ± 7.51 × 103 | 1.02 × 104 ± 9.11 × 103 | 1.88 ± 1.10 | 3.04 × 10−1 ± 1.85 × 10−1 |

| 19 | 3.45 × 103 ± 8.35 | 2.00 × 103 ± 5.60 × 102 | 2.10 × 103 ± 7.50 × 102 | 4.68 × 102 ± 9.55 × 102 | 7.92 × 10−1 ± 3.88 × 10−1 | 1.39 × 10−1 ± 5.66 × 10−2 |

| 20 | 2.06 × 103 ± 5.02 × 101 | 2.10 × 103 ± 6.00 × 101 | 2.03 × 103 ± 3.05 × 101 | 1.00 × 101 ± 1.07 × 101 | 4.21 ± 2.44 | 2.51 ± 1.14 |

| 21 | 2.23 × 103 ± 6.78 × 101 | 2.20 × 103 ± 4.00 × 101 | 2.30 × 103 ± 4.95 × 101 | 1.67 × 102 ± 5.80 × 101 | 9.80 × 101 ± 1.40 × 101 | 1.00 × 102 ± 0.00 |

| 22 | 2.30 × 103 ± 1.00 | 2.30 × 103 ± 3.00 | 2.30 × 103 ± 2.02 × 101 | 8.66 × 101 ± 3.13 × 101 | 1.42 × 101 ± 3.23 × 101 | 6.38 × 101 ± 4.73 × 101 |

| 23 | 2.62 × 103 ± 1.43 × 101 | 2.60 × 103 ± 1.00 × 101 | 2.62 × 103 ± 5.07 | 3.15 × 102 ± 6.13 | 2.75 × 102 ± 9.27 × 101 | 2.76 × 102 ± 9.72 × 101 |

| 24 | 2.75 × 103 ± 1.04 × 101 | 2.60 × 103 ± 1.00 × 102 | 2.75 × 103 ± 3.58 × 101 | 3.22 × 102 ± 6.89 × 101 | 8.88 × 101 ± 3.13 × 101 | 3.11 × 102 ± 7.44 × 101 |

| 25 | 2.91 × 103 ± 2.35 × 101 | 2.90 × 103 ± 2.30 × 101 | 2.93 × 103 ± 5.10 × 101 | 4.03 × 102 ± 4.73 × 101 | 1.94 × 102 ± 1.12 × 102 | 3.97 × 102 ± 1.32 × 10−1 |

| 26 | 2.94 × 103 ± 4.97 × 101 | 2.90 × 103 ± 7.70 × 101 | 2.98 × 103 ± 2.48 × 102 | 3.20 × 102 ± 1.91 × 102 | 8.73 × 101 ± 1.01 × 102 | 3.00 × 102 ± 0.00 |

| 27 | 3.10 × 103 ± 5.62 | 3.10 × 103 ± 1.70 × 101 | 3.10 × 103 ± 7.53 | 3.92 × 102 ± 5.24 | 3.82 × 102 ± 4.52 × 101 | 3.89 × 102 ± 1.98 × 10−1 |

| 28 | 3.17 × 103 ± 6.95 × 10−13 | 3.30 × 103 ± 1.20 × 102 | 3.29 × 103 ± 1.20 × 102 | 3.70 × 102 ± 1.15 × 102 | 1.76 × 102 ± 1.42 × 102 | 3.00 × 102 ± 0.00 |

| 29 | 3.68 × 103 ± 3.40 × 101 | 3.20 × 103 ± 2.50 × 101 | 3.20 × 103 ± 2.53 × 101 | 2.68 × 102 ± 2.57 × 101 | 2.45 × 102 ± 1.27 × 101 | 2.38 × 102 ± 3.33 |

| 30 | 5.08 × 107 ± 6.10 × 10−8 | 5.10 × 105 ± 1.60 × 105 | 3.32 × 105 ± 3.68 × 105 | 1.26 × 105 ± 3.51 × 105 | 3.97 × 102 ± 6.67 | 4.29 × 102 ± 2.09 × 101 |

| Fun. | IADE [50] | GJO-JOS [51] | QCSCA [52] | cSM [53] | FOWFO [54] | ATMALS-GSK |

|---|---|---|---|---|---|---|

| 1 | 8.49 × 103 ± 6.38 × 103 | 2.10 × 105 ± 1.10 × 105 | 3.39 × 103 ± 3.66 × 103 | 2.30 × 103 ± 3.01 × 103 | 8.75 × 10−4 ± 3.89 × 10−4 | 0.00 ± 0.00 |

| 3 | 3.00 × 102 ± 7.95 × 10−1 | 6.40 × 102 ± 2.50 × 102 | 3.09 × 102 ± 2.95 × 101 | 9.89 × 10−10 ± 1.17 × 10−9 | 4.53 × 10−6 ± 3.42 × 10−6 | 0.00 ± 0.00 |

| 4 | 1.94 × 104 ± 0.00 | 5.00 × 102 ± 1.70 × 101 | 4.07 × 102 ± 9.72 | 8.07 × 101 ± 1.02 × 101 | 5.43 ± 1.52 × 101 | 1.99 ± 2.10 |

| 5 | 7.93 × 102 ± 1.47 × 101 | 6.00 × 102 ± 5.60 × 101 | 5.17 × 102 ± 4.78 | 8.61 × 101 ± 2.30 × 101 | 5.49 × 101 ± 1.39 × 101 | 1.53 × 101 ± 1.90 × 101 |

| 6 | 6.55 × 102 ± 4.63 | 6.10 × 102 ± 5.90 | 6.00 × 102 ± 3.52 × 10−2 | 4.13 × 10−4 ± 8.21 × 10−4 | 4.61 × 10−2 ± 3.07 × 10−2 | 6.82 × 10−5 ± 3.90 × 10−5 |

| 7 | 9.04 × 102 ± 7.38 × 101 | 8.30 × 102 ± 6.20 × 101 | 7.27 × 102 ± 6.06 | 1.33 × 102 ± 2.61 × 101 | 7.91 × 101 ± 1.13 × 101 | 4.33 × 101 ± 1.15 × 101 |

| 8 | 9.24 × 102 ± 3.24 × 101 | 8.90 × 102 ± 4.60 × 101 | 8.14 × 102 ± 4.32 | 1.01 × 102 ± 2.82 × 101 | 5.60 × 101 ± 1.19 × 101 | 1.35 × 101 ± 1.29 × 101 |

| 9 | 5.44 × 103 ± 4.41 × 102 | 1.20 × 103 ± 4.40 × 102 | 9.00 × 102 ± 4.28 × 10−3 | 9.32 × 102 ± 8.65 × 102 | 1.90 × 101 ± 2.15 × 101 | 1.79 × 10−2 ± 3.77 × 10−2 |

| 10 | 4.75 × 103 ± 1.12 × 103 | 5.00 × 103 ± 1.70 × 103 | 1.57 × 103 ± 2.19 × 102 | 2.85 × 103 ± 4.80 × 102 | 2.02 × 103 ± 3.11 × 102 | 2.80 × 103 ± 5.90 × 102 |

| 11 | 1.22 × 103 ± 4.95 × 101 | 1.20 × 103 ± 4.20 × 101 | 1.11 × 103 ± 1.86 | 1.61 × 102 ± 5.33 × 101 | 1.26 × 101 ± 4.65 | 1.46 × 101 ± 1.73 × 101 |

| 12 | 5.57 × 104 ± 4.87 × 104 | 1.20 × 107 ± 1.10 × 107 | 1.80 × 104 ± 1.44 × 104 | 8.87 × 105 ± 8.57 × 105 | 2.92 × 102 ± 1.78 × 102 | 3.84 × 103 ± 2.62 × 103 |

| 13 | 1.57 × 104 ± 1.84 × 104 | 1.00 × 105 ± 7.50 × 104 | 4.94 × 103 ± 6.23 × 103 | 2.17 × 104 ± 2.35 × 104 | 4.43 × 101 ± 2.89 × 101 | 3.01 × 101 ± 1.12 × 101 |

| 14 | 1.64 × 103 ± 8.18 × 101 | 3.80 × 104 ± 3.00 × 104 | 1.42 × 103 ± 2.20 × 101 | 1.01 × 104 ± 7.92 × 103 | 2.91 × 101 ± 7.91 | 1.78 × 101 ± 4.26 |

| 15 | 6.84 × 103 ± 1.01 × 104 | 3.40 × 104 ± 2.20 × 104 | 1.53 × 103 ± 3.46 × 101 | 1.79 × 104 ± 1.39 × 104 | 1.27 × 101 ± 4.03 | 1.43 × 101 ± 5.56 |

| 16 | 2.37 × 103 ± 2.31 × 102 | 2.50 × 103 ± 3.60 × 102 | 1.61 × 103 ± 3.89 | 6.87 × 102 ± 2.37 × 102 | 4.08 × 102 ± 1.68 × 102 | 2.53 × 102 ± 2.81 × 102 |

| 17 | 2.08 × 103 ± 1.11 × 102 | 2.00 × 103 ± 1.40 × 102 | 1.73 × 103 ± 1.99 × 101 | 2.83 × 102 ± 1.70 × 102 | 8.79 × 101 ± 4.18 × 101 | 4.71 × 101 ± 2.30 × 101 |

| 18 | 1.47 × 104 ± 1.93 × 104 | 3.20 × 105 ± 3.30 × 105 | 6.49 × 103 ± 9.86 × 103 | 2.02 × 105 ± 1.89 × 105 | 2.67 × 101 ± 4.82 | 3.02 × 101 ± 6.67 |

| 19 | 7.20 × 103 ± 4.67 × 103 | 3.40 × 105 ± 3.20 × 105 | 1.91 × 103 ± 9.97 | 2.30 × 104 ± 2.07 × 104 | 1.36 × 101 ± 3.53 | 1.30 × 101 ± 3.34 |

| 20 | 2.53 × 103 ± 2.27 × 102 | 2.30 × 103 ± 1.10 × 102 | 2.01 × 103 ± 9.03 | 3.75 × 102 ± 1.45 × 102 | 1.12 × 102 ± 6.64 × 101 | 4.74 × 101 ± 3.35 × 101 |

| 21 | 2.38 × 103 ± 2.88 × 101 | 2.40 × 103 ± 5.70 × 101 | 2.30 × 103 ± 4.74 × 101 | 2.86 × 102 ± 2.26 × 101 | 1.96 × 102 ± 7.84 × 101 | 2.66 × 102 ± 9.12 |

| 22 | 4.08 × 103 ± 2.49 × 103 | 2.30 × 103 ± 1.30 | 2.30 × 103 ± 1.89 × 101 | 2.61 × 103 ± 1.27 × 103 | 9.93 × 101 ± 5.19 | 1.00 × 102 ± 0.00 |

| 23 | 2.73 × 103 ± 2.00 × 101 | 2.80 × 103 ± 5.30 × 101 | 2.62 × 103 ± 2.93 | 4.35 × 102 ± 2.61 × 101 | 2.64 × 102 ± 1.47 × 102 | 3.44 × 102 ± 1.70 × 101 |

| 24 | 2.94 × 103 ± 5.02 × 101 | 2.90 × 103 ± 5.10 × 101 | 2.72 × 103 ± 8.70 × 101 | 4.96 × 102 ± 6.26 × 101 | 3.64 × 102 ± 1.77 × 102 | 4.75 × 102 ± 1.48 × 101 |

| 25 | 2.90 × 103 ± 9.48 | 2.90 × 103 ± 1.20 × 101 | 2.93 × 103 ± 2.24 × 101 | 3.86 × 102 ± 1.21 | 3.85 × 102 ± 1.64 | 3.86 × 102 ± 1.07 |

| 26 | 5.56 × 103 ± 6.13 × 102 | 3.40 × 103 ± 8.50 × 102 | 2.90 × 103 ± 1.53 × 102 | 1.95 × 103 ± 4.32 × 102 | 2.55 × 102 ± 4.88 × 101 | 1.79 × 103 ± 1.93 × 102 |

| 27 | 3.24 × 103 ± 1.34 × 101 | 3.20 × 103 ± 1.60 × 101 | 3.09 × 103 ± 7.50 | 5.17 × 102 ± 9.77 | 4.97 × 102 ± 1.22 × 101 | 4.96 × 102 ± 6.31 |

| 28 | 3.54 × 103 ± 1.51 × 10−12 | 3.20 × 103 ± 2.70 × 101 | 3.23 × 103 ± 1.26 × 102 | 3.63 × 102 ± 5.49 × 101 | 3.00 × 102 ± 2.34 × 10−2 | 3.10 × 102 ± 3.26 × 101 |

| 29 | 7.34 × 103 ± 2.95 × 102 | 3.70 × 103 ± 1.60 × 102 | 3.18 × 103 ± 1.95 × 101 | 7.81 × 102 ± 1.72 × 102 | 4.97 × 102 ± 5.92 × 101 | 4.32 × 102 ± 5.95 × 101 |

| 30 | 2.65 × 109 ± 4.42 × 10−6 | 2.00 × 106 ± 9.60 × 105 | 1.18 × 105 ± 2.66 × 105 | 2.26 × 104 ± 1.53 × 104 | 1.98 × 103 ± 5.31 × 101 | 2.06 × 103 ± 5.55 × 101 |

| Fun. | IADE [50] | GJO-JOS [51] | QCSCA [52] | cSM [53] | FOWFO [54] | ATMALS-GSK |
|---|---|---|---|---|---|---|
| 1 | 3.25 × 10³ ± 4.46 × 10³ | 7.80 × 10⁵ ± 4.50 × 10⁵ | 3.02 × 10³ ± 3.12 × 10³ | 5.99 × 10³ ± 6.65 × 10³ | 1.78 × 10⁻² ± 1.37 × 10⁻² | 1.25 × 10⁻¹ ± 2.15 × 10⁻¹ |
| 3 | 2.46 × 10³ ± 3.21 × 10³ | 3.90 × 10³ ± 1.90 × 10³ | 3.02 × 10² ± 3.33 | 1.76 × 10⁻⁷ ± 1.72 × 10⁻⁷ | 2.24 ± 3.72 | 7.85 × 10² ± 6.73 × 10² |
| 4 | 3.12 × 10⁴ ± 1.24 × 10⁻¹¹ | 5.80 × 10² ± 5.10 × 10¹ | 4.05 × 10² ± 2.28 | 1.13 × 10² ± 3.89 × 10¹ | 2.15 × 10¹ ± 1.73 × 10¹ | 9.80 ± 1.82 × 10¹ |
| 5 | 8.88 × 10² ± 3.44 × 10¹ | 6.90 × 10² ± 8.60 × 10¹ | 5.15 × 10² ± 3.68 | 1.89 × 10² ± 4.15 × 10¹ | 1.17 × 10² ± 1.94 × 10¹ | 3.21 × 10¹ ± 1.27 × 10¹ |
| 6 | 6.62 × 10² ± 3.92 | 6.20 × 10² ± 1.20 × 10¹ | 6.00 × 10² ± 1.40 × 10⁻² | 1.75 × 10⁻⁴ ± 4.74 × 10⁻⁴ | 1.01 × 10⁻¹ ± 4.49 × 10⁻² | 7.14 × 10⁻⁶ ± 8.31 × 10⁻⁶ |
| 7 | 1.23 × 10³ ± 7.64 × 10¹ | 9.80 × 10² ± 1.20 × 10² | 7.25 × 10² ± 4.68 | 2.48 × 10² ± 4.20 × 10¹ | 1.45 × 10² ± 1.80 × 10¹ | 1.08 × 10² ± 1.12 × 10¹ |
| 8 | 1.09 × 10³ ± 4.66 × 10¹ | 1.00 × 10³ ± 9.00 × 10¹ | 8.12 × 10² ± 3.46 | 1.85 × 10² ± 3.01 × 10¹ | 1.18 × 10² ± 2.37 × 10¹ | 3.85 × 10¹ ± 9.92 |
| 9 | 1.31 × 10⁴ ± 5.76 × 10² | 9.00 × 10³ ± 7.00 × 10³ | 9.00 × 10² ± 3.31 × 10⁻⁴ | 4.67 × 10³ ± 2.57 × 10³ | 3.95 × 10² ± 2.83 × 10² | 1.91 × 10⁻¹ ± 2.06 × 10⁻¹ |
| 10 | 7.98 × 10³ ± 1.36 × 10³ | 8.20 × 10³ ± 3.00 × 10³ | 1.46 × 10³ ± 1.49 × 10² | 5.05 × 10³ ± 6.73 × 10² | 3.84 × 10³ ± 4.36 × 10² | 8.80 × 10³ ± 9.25 × 10² |
| 11 | 1.32 × 10³ ± 6.22 × 10¹ | 1.40 × 10³ ± 6.80 × 10¹ | 1.11 × 10³ ± 1.31 | 2.26 × 10² ± 5.19 × 10¹ | 5.21 × 10¹ ± 1.41 × 10¹ | 2.91 × 10¹ ± 5.17 |
| 12 | 9.99 × 10⁵ ± 5.62 × 10⁵ | 4.80 × 10⁷ ± 2.50 × 10⁷ | 2.00 × 10⁴ ± 1.69 × 10⁴ | 3.89 × 10⁶ ± 2.38 × 10⁶ | 2.83 × 10³ ± 2.42 × 10³ | 3.65 × 10⁴ ± 2.12 × 10⁴ |
| 13 | 8.85 × 10³ ± 8.49 × 10³ | 1.30 × 10⁵ ± 6.50 × 10⁴ | 3.48 × 10³ ± 3.45 × 10³ | 1.62 × 10⁴ ± 1.37 × 10⁴ | 1.04 × 10² ± 4.79 × 10¹ | 1.19 × 10² ± 5.17 × 10¹ |
| 14 | 5.27 × 10³ ± 2.38 × 10³ | 1.90 × 10⁵ ± 1.00 × 10⁵ | 1.43 × 10³ ± 2.19 × 10¹ | 5.51 × 10⁴ ± 4.25 × 10⁴ | 5.83 × 10¹ ± 1.03 × 10¹ | 3.69 × 10¹ ± 9.02 |
| 15 | 1.00 × 10⁴ ± 9.72 × 10³ | 5.00 × 10⁴ ± 2.60 × 10⁴ | 1.52 × 10³ ± 2.87 × 10¹ | 1.44 × 10⁴ ± 9.47 × 10³ | 4.33 × 10¹ ± 9.08 | 4.45 × 10¹ ± 1.14 × 10¹ |
| 16 | 3.07 × 10³ ± 4.27 × 10² | 2.90 × 10³ ± 4.50 × 10² | 1.61 × 10³ ± 4.96 × 10¹ | 1.44 × 10⁴ ± 3.69 × 10² | 9.73 × 10² ± 2.25 × 10² | 4.91 × 10² ± 3.35 × 10² |
| 17 | 3.27 × 10³ ± 3.10 × 10² | 2.80 × 10³ ± 4.30 × 10² | 1.72 × 10³ ± 1.87 × 10¹ | 1.44 × 10⁴ ± 2.87 × 10² | 6.46 × 10² ± 1.76 × 10² | 7.08 × 10² ± 2.31 × 10² |
| 18 | 1.12 × 10⁵ ± 8.98 × 10⁴ | 1.50 × 10⁶ ± 7.80 × 10⁵ | 3.60 × 10³ ± 3.85 × 10³ | 1.44 × 10⁴ ± 1.11 × 10⁵ | 4.18 × 10¹ ± 5.98 | 1.17 × 10² ± 7.30 × 10¹ |
| 19 | 1.67 × 10⁸ ± 4.01 × 10¹ | 5.00 × 10⁵ ± 3.40 × 10⁵ | 1.90 × 10³ ± 6.17 | 1.44 × 10⁴ ± 1.71 × 10⁴ | 3.05 × 10¹ ± 7.38 | 2.83 × 10¹ ± 7.93 |
| 20 | 3.39 × 10³ ± 2.65 × 10² | 2.80 × 10³ ± 3.60 × 10² | 2.00 × 10³ ± 3.25 | 1.44 × 10⁴ ± 1.80 × 10² | 5.07 × 10² ± 1.35 × 10² | 6.91 × 10² ± 1.48 × 10² |
| 21 | 2.52 × 10³ ± 4.60 × 10¹ | 2.50 × 10³ ± 8.70 × 10¹ | 2.29 × 10³ ± 4.82 × 10¹ | 1.44 × 10⁴ ± 4.00 × 10¹ | 3.15 × 10² ± 3.84 × 10¹ | 1.98 × 10² ± 6.81 |
| 22 | 1.01 × 10⁴ ± 7.53 × 10² | 5.80 × 10³ ± 4.20 × 10³ | 2.30 × 10³ ± 1.97 × 10¹ | 1.44 × 10⁴ ± 7.06 × 10² | 3.82 × 10³ ± 1.67 × 10³ | 8.71 × 10³ ± 2.41 × 10³ |
| 23 | 2.97 × 10³ ± 3.90 × 10¹ | 2.90 × 10³ ± 5.40 × 10¹ | 2.61 × 10³ ± 2.88 | 1.44 × 10⁴ ± 3.65 × 10¹ | 5.40 × 10² ± 8.26 × 10¹ | 4.39 × 10² ± 1.27 × 10¹ |
| 24 | 3.13 × 10³ ± 3.48 × 10¹ | 3.10 × 10³ ± 6.90 × 10¹ | 2.72 × 10³ ± 8.02 × 10¹ | 1.44 × 10⁴ ± 4.28 × 10¹ | 7.02 × 10² ± 1.12 × 10² | 5.14 × 10² ± 1.35 × 10¹ |
| 25 | 3.09 × 10³ ± 1.91 × 10¹ | 3.10 × 10³ ± 3.60 × 10¹ | 2.92 × 10³ ± 2.34 × 10¹ | 1.44 × 10⁴ ± 3.34 × 10¹ | 4.89 × 10² ± 3.03 × 10¹ | 5.45 × 10² ± 2.74 × 10¹ |
| 26 | 8.18 × 10³ ± 1.53 × 10³ | 2.90 × 10³ ± 3.30 × 10² | 2.90 × 10³ ± 1.41 × 10² | 1.44 × 10⁴ ± 3.58 × 10² | 6.14 × 10² ± 8.00 × 10² | 1.35 × 10³ ± 3.55 × 10² |
| 27 | 3.62 × 10³ ± 1.34 × 10² | 3.40 × 10³ ± 6.30 × 10¹ | 3.09 × 10³ ± 7.06 | 1.44 × 10⁴ ± 4.77 × 10¹ | 5.50 × 10² ± 3.24 × 10¹ | 5.71 × 10² ± 2.78 × 10¹ |
| 28 | 3.88 × 10³ ± 2.03 × 10¹ | 3.30 × 10³ ± 4.10 × 10¹ | 3.23 × 10³ ± 1.48 × 10² | 1.44 × 10⁴ ± 1.70 × 10¹ | 4.60 × 10² ± 4.29 | 4.88 × 10² ± 2.39 × 10¹ |
| 29 | 1.55 × 10⁵ ± 6.22 × 10² | 4.20 × 10³ ± 2.90 × 10² | 3.17 × 10³ ± 1.66 × 10¹ | 1.44 × 10⁴ ± 2.58 × 10² | 6.76 × 10² ± 1.60 × 10² | 5.01 × 10² ± 4.19 × 10¹ |
| 30 | 6.21 × 10⁹ ± 7.78 × 10⁻⁵ | 2.00 × 10⁷ ± 5.20 × 10⁶ | 1.79 × 10⁵ ± 3.34 × 10⁵ | 1.44 × 10⁴ ± 3.35 × 10⁶ | 5.97 × 10⁵ ± 3.14 × 10⁴ | 5.92 × 10⁵ ± 1.85 × 10⁴ |

| Fun. | IADE [50] | GJO-JOS [51] | QCSCA [52] | cSM [53] | FOWFO [54] | ATMALS-GSK |
|---|---|---|---|---|---|---|
| 1 | 9.46 × 10⁴ ± 1.85 × 10⁵ | 3.90 × 10⁶ ± 1.10 × 10⁶ | 3.27 × 10³ ± 3.72 × 10³ | 8.14 × 10³ ± 1.28 × 10⁴ | 1.88 × 10³ ± 2.03 × 10³ | 2.55 ± 4.79 |
| 3 | 3.68 × 10⁴ ± 8.20 × 10³ | 5.20 × 10⁴ ± 1.10 × 10⁴ | 3.00 × 10² ± 3.85 × 10⁻¹ | 4.83 × 10³ ± 2.26 × 10³ | 4.23 × 10⁴ ± 9.62 × 10³ | 4.91 × 10⁴ ± 1.26 × 10⁴ |
| 4 | 7.98 × 10⁴ ± 1.23 × 10⁻¹⁰ | 7.70 × 10² ± 5.20 × 10¹ | 4.04 × 10² ± 2.27 | 2.31 × 10² ± 2.72 × 10¹ | 1.52 × 10² ± 4.63 × 10¹ | 4.90 ± 1.45 × 10¹ |
| 5 | 1.41 × 10³ ± 3.44 × 10¹ | 1.00 × 10³ ± 1.70 × 10² | 5.11 × 10² ± 2.32 | 5.00 × 10² ± 6.65 × 10¹ | 3.11 × 10² ± 5.11 × 10¹ | 9.70 × 10¹ ± 2.17 × 10¹ |
| 6 | 6.62 × 10² ± 1.18 | 6.40 × 10² ± 9.70 | 6.00 × 10² ± 3.58 × 10⁻³ | 6.53 × 10⁻⁵ ± 1.06 × 10⁻⁴ | 2.96 × 10⁻¹ ± 8.85 × 10⁻² | 1.24 × 10⁻² ± 2.73 × 10⁻² |
| 7 | 2.59 × 10³ ± 2.92 × 10² | 1.50 × 10³ ± 2.30 × 10² | 7.23 × 10² ± 3.62 | 7.07 × 10² ± 7.90 × 10¹ | 3.43 × 10² ± 3.11 × 10¹ | 1.68 × 10² ± 1.91 × 10¹ |
| 8 | 1.66 × 10³ ± 1.28 × 10² | 1.30 × 10³ ± 1.80 × 10² | 8.09 × 10² ± 2.70 | 5.19 × 10² ± 7.77 × 10¹ | 3.28 × 10² ± 4.38 × 10¹ | 9.65 × 10¹ ± 1.12 × 10¹ |
| 9 | 2.39 × 10⁴ ± 7.15 × 10² | 4.50 × 10⁴ ± 1.60 × 10⁴ | 9.00 × 10² ± 1.88 × 10⁻⁵ | 1.84 × 10⁴ ± 5.08 × 10³ | 7.71 × 10³ ± 2.00 × 10³ | 1.05 × 10¹ ± 3.14 |
| 10 | 1.57 × 10⁴ ± 1.19 × 10³ | 1.60 × 10⁴ ± 5.70 × 10³ | 1.28 × 10³ ± 1.05 × 10² | 1.18 × 10⁴ ± 1.00 × 10³ | 1.05 × 10⁴ ± 7.56 × 10² | 2.44 × 10⁴ ± 1.01 × 10³ |
| 11 | 3.40 × 10³ ± 1.16 × 10³ | 2.80 × 10³ ± 2.90 × 10² | 1.10 × 10³ ± 8.65 × 10⁻¹ | 1.29 × 10³ ± 2.17 × 10² | 2.70 × 10² ± 5.01 × 10¹ | 1.76 × 10² ± 6.28 × 10¹ |
| 12 | 1.07 × 10⁷ ± 2.86 × 10⁶ | 2.70 × 10⁸ ± 1.20 × 10⁸ | 1.55 × 10⁴ ± 1.43 × 10⁴ | 1.27 × 10⁷ ± 4.80 × 10⁶ | 1.36 × 10⁵ ± 6.66 × 10⁴ | 3.78 × 10⁵ ± 1.69 × 10⁵ |
| 13 | 1.02 × 10⁴ ± 3.42 × 10³ | 4.00 × 10⁵ ± 8.70 × 10⁵ | 2.96 × 10³ ± 2.86 × 10³ | 1.29 × 10⁴ ± 1.32 × 10⁴ | 3.49 × 10² ± 1.10 × 10² | 1.31 × 10² ± 5.43 × 10¹ |
| 14 | 2.31 × 10⁵ ± 1.40 × 10⁵ | 1.00 × 10⁶ ± 4.50 × 10⁵ | 1.42 × 10³ ± 2.04 × 10¹ | 1.67 × 10⁵ ± 7.05 × 10⁴ | 2.10 × 10² ± 3.31 × 10¹ | 2.57 × 10³ ± 2.31 × 10³ |
| 15 | 4.79 × 10³ ± 2.66 × 10³ | 6.40 × 10⁴ ± 2.90 × 10⁴ | 1.42 × 10³ ± 2.04 × 10¹ | 8.26 × 10³ ± 8.01 × 10³ | 1.92 × 10² ± 6.45 × 10¹ | 1.04 × 10² ± 4.05 × 10¹ |
| 16 | 5.59 × 10³ ± 6.96 × 10² | 5.40 × 10³ ± 5.50 × 10² | 1.61 × 10³ ± 4.81 | 3.39 × 10³ ± 6.43 × 10² | 2.87 × 10³ ± 3.97 × 10² | 1.89 × 10³ ± 4.24 × 10² |
| 17 | 4.86 × 10³ ± 7.32 × 10² | 4.60 × 10³ ± 5.00 × 10² | 1.72 × 10³ ± 2.08 × 10¹ | 2.81 × 10³ ± 4.78 × 10² | 2.07 × 10³ ± 3.26 × 10² | 1.57 × 10³ ± 6.92 × 10² |
| 18 | 5.51 × 10⁵ ± 2.28 × 10⁵ | 2.10 × 10⁶ ± 9.40 × 10⁵ | 2.02 × 10³ ± 8.45 × 10² | 3.66 × 10⁵ ± 1.29 × 10⁵ | 3.90 × 10² ± 2.46 × 10² | 1.14 × 10⁴ ± 4.25 × 10³ |
| 19 | 2.82 × 10⁹ ± 1.06 × 10² | 1.70 × 10⁶ ± 9.60 × 10⁵ | 1.90 × 10³ ± 9.14 | 1.17 × 10⁴ ± 1.16 × 10⁴ | 1.10 × 10² ± 2.40 × 10¹ | 1.61 × 10² ± 7.63 × 10¹ |
| 20 | 5.27 × 10³ ± 6.49 × 10² | 4.60 × 10³ ± 1.00 × 10³ | 2.00 × 10³ ± 2.19 × 10⁻¹ | 2.66 × 10³ ± 4.39 × 10² | 1.97 × 10³ ± 2.92 × 10² | 2.84 × 10³ ± 5.21 × 10² |
| 21 | 2.95 × 10³ ± 1.46 × 10² | 2.80 × 10³ ± 1.50 × 10² | 2.29 × 10³ ± 4.70 × 10¹ | 7.33 × 10² ± 7.54 × 10¹ | 5.46 × 10² ± 4.89 × 10¹ | 2.89 × 10² ± 2.11 × 10¹ |
| 22 | 1.83 × 10⁴ ± 1.13 × 10³ | 1.30 × 10⁴ ± 9.10 × 10³ | 2.30 × 10³ ± 8.75 × 10⁻³ | 1.32 × 10⁴ ± 1.24 × 10³ | 1.18 × 10⁴ ± 8.06 × 10² | 2.43 × 10⁴ ± 1.92 × 10³ |
| 23 | 3.47 × 10³ ± 9.11 × 10¹ | 3.30 × 10³ ± 1.40 × 10² | 2.61 × 10³ ± 2.65 | 9.18 × 10² ± 6.16 × 10¹ | 7.72 × 10² ± 3.13 × 10¹ | 5.87 × 10² ± 1.45 × 10¹ |
| 24 | 4.07 × 10³ ± 1.09 × 10² | 3.80 × 10³ ± 1.90 × 10² | 2.71 × 10³ ± 7.38 × 10¹ | 1.40 × 10³ ± 7.62 × 10¹ | 1.25 × 10³ ± 5.48 × 10¹ | 9.11 × 10² ± 1.77 × 10¹ |
| 25 | 3.38 × 10³ ± 6.40 × 10¹ | 3.50 × 10³ ± 4.90 × 10¹ | 2.92 × 10³ ± 2.34 × 10¹ | 7.09 × 10² ± 6.05 × 10¹ | 7.30 × 10² ± 5.31 × 10¹ | 7.53 × 10² ± 4.18 × 10¹ |
| 26 | 1.87 × 10⁴ ± 3.28 × 10³ | 6.20 × 10³ ± 4.20 × 10³ | 2.92 × 10³ ± 2.34 × 10¹ | 8.62 × 10³ ± 8.60 × 10² | 6.37 × 10³ ± 1.61 × 10³ | 3.42 × 10³ ± 1.28 × 10³ |
| 27 | 3.92 × 10³ ± 2.80 × 10² | 3.50 × 10³ ± 5.90 × 10¹ | 3.10 × 10³ ± 1.19 × 10¹ | 7.47 × 10² ± 4.96 × 10¹ | 6.85 × 10² ± 3.88 × 10¹ | 6.87 × 10² ± 3.47 × 10¹ |
| 28 | 5.46 × 10³ ± 1.54 × 10² | 3.50 × 10³ ± 5.10 × 10¹ | 3.20 × 10³ ± 1.26 × 10² | 5.53 × 10² ± 3.30 × 10¹ | 5.55 × 10² ± 2.73 × 10¹ | 3.51 × 10² ± 1.10 × 10² |
| 29 | 5.20 × 10⁴ ± 9.53 × 10² | 6.90 × 10³ ± 8.90 × 10² | 3.16 × 10³ ± 1.40 × 10¹ | 3.95 × 10³ ± 5.28 × 10² | 2.67 × 10³ ± 2.49 × 10² | 1.39 × 10³ ± 2.14 × 10² |