Abstract

Substring counting is a classical algorithmic problem with numerous solutions that achieve linear time complexity. In this paper, we address a variation of the problem where, given three strings p, t, and s, we are interested in the number of occurrences of p in all strings that would result from inserting t into s at every possible position. Essentially, we are solving several substring counting problems of the same substring p in related strings. We give a detailed description of several conceptually different approaches to solving this problem and conclude with an algorithm that has a linear time complexity. The solution is based on a recent result from the field of substring search in compressed sequences and exploits the periodicity of strings. We also provide a self-contained implementation of the algorithm in C++ and experimentally verify its behavior, chiefly to demonstrate that its running time is linear in the lengths of all three input strings.

1. Introduction

Substring search is one of the classical algorithmic problems and has a somewhat surprising linear-time solution. Nevertheless, there is an abundance of algorithms that address the same or similar problems [1]. In this paper, we focus on a new version of the problem, in which the string that we are searching for a substring changes.

We are given strings s, t, and p. We will denote the length of the string s as $|s|$. If we insert t into s at position k (where $0 \le k \le |s|$), we obtain a new string that is formed by the first k characters of string s, followed by the entire string t and concluded by the remaining characters of s. We want to count the number of occurrences of p as a substring in every string that can be obtained by inserting t into s at all possible positions k. We make no assumption regarding the lengths of the input strings. They may be millions of characters long; they may be comparable in length, or some may be much shorter than others. The only constraint is that s and t together should not be shorter than p, as otherwise the problem would be trivial.

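For example, if we insert $t = \mathtt{aba}$ into $s = \mathtt{ab}$ at position $k = 0$, we get abaab. For insertions at $k = 1$ and $k = 2$ we get aabab and ababa, respectively. If we are interested in occurrences of $p = \mathtt{aba}$, we will find two when inserting t into s at $k = 2$: one starting at position 0 and one starting at position 2 of ababa (occurrences can of course overlap).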

The problem can be solved trivially by employing any substring counting algorithm with a linear time complexity on all strings obtained by inserting t into s. However, we are interested in more efficient solutions that do not have a quadratic time complexity in terms of the length of string s, which we are modifying and searching the substring in.
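For reference, the trivial approach can be sketched in C++ as follows. This is only an illustrative baseline with our own, hypothetical helper names (`countOccurrences`, `naiveInsertionCounts`), not part of the implementation described in this paper; for brevity it compares p directly at every start position, so it is even slower than the variant with a linear-time matcher.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Number of (possibly overlapping) occurrences of p in text, found by
// comparing p against every start position directly.
long long countOccurrences(const std::string& text, const std::string& p) {
    assert(!p.empty());
    long long count = 0;
    for (std::size_t i = 0; i + p.size() <= text.size(); ++i)
        if (text.compare(i, p.size(), p) == 0) ++count;
    return count;
}

// Trivial solution: build s[:k] + t + s[k:] for every k = 0..|s| and count p in it.
std::vector<long long> naiveInsertionCounts(const std::string& s, const std::string& t,
                                            const std::string& p) {
    std::vector<long long> counts;
    for (std::size_t k = 0; k <= s.size(); ++k)
        counts.push_back(countOccurrences(s.substr(0, k) + t + s.substr(k), p));
    return counts;
}
```

On the example above (s = ab, t = aba, p = aba), it returns the counts 1, 1, 2 for k = 0, 1, 2. A baseline like this is mainly useful for testing the faster methods on small random inputs.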

The following sections present different approaches to solving the problem, ordered from less to more computationally efficient. Some approaches are improvements of the previous ones, while others exploit different properties. We first establish some preliminaries, which are used throughout the rest of the paper. Then we describe a solution with time complexity $O(s + t + p^2)$, which is quadratic in the length of the pattern string p but linear in the lengths of the strings s and t. We achieve this by exploiting the similarities between the problems of counting the same pattern string p in strings that result from inserting t into s at adjacent locations. The following section improves this solution with a precomputation that yields a solution with subquadratic time complexity. Next, we focus on a different, geometric interpretation of the problem, which reorders the computation of solutions to the subproblems and achieves a time complexity of $O(t + (s + p)\log p)$. It is based on reducing the problem to the point location problem, more precisely to rectangle stabbing or dynamic interval stabbing [2,3]. Finally, we present a solution with a linear time complexity, $O(s + t + p)$, which is based on a recent result [4] on finding substrings in a compressed text [5].

This paper presents a theoretical result in string processing: a worst-case linear-time algorithm that generalizes the classical string matching problem. Specifically, instead of computing pattern occurrence counts in a single, fixed text, our algorithm efficiently computes such counts across a linear number of systematically modified texts, all within the same asymptotic time bound.

We outline some potential areas of application for the developed algorithms. In bioinformatics, pattern counting is fundamental to genome sequence analysis, where the patterns of interest may correspond to known biological motifs or regulatory elements. The inserted sequences can represent indels or longer structural variants. They could also originate from viruses that are able to insert their genetic material into host chromosomes. In cybersecurity and plagiarism detection, obfuscation techniques often involve the insertion of irrelevant content to evade detection of known signatures. In natural language processing, the insertion of specific phrases or modifiers can be used to detect meaningful linguistic variants. Beyond these applications, the algorithmic techniques presented here may be relevant for developing related algorithms for efficient processing of sequential data.

2. Materials and Methods

2.1. Preliminaries

We will denote the length of the string x by $|x|$, except in the big O notation or under the root $\sqrt{\ }$, where we will omit the $|$ characters. We will use Python (https://docs.python.org/3/library/stdtypes.html#typesseq-common, accessed on 18 June 2025) notation for individual characters and substrings. The string x consists of characters $x[0], x[1], \ldots, x[|x|-1]$; the substring $x[i:j]$ comprises the characters $x[i], x[i+1], \ldots, x[j-1]$; if i is absent in this notation, we mean $i = 0$; if j is absent, we mean $j = |x|$; if i or j is negative, we should add $|x|$ to it. Position i in the string x refers to the boundary between characters $x[i-1]$ and $x[i]$ (position 0 is the left edge of the first character, and position $|x|$ is the right edge of the last character). The notation $x^R$ will represent the string that we get if we read the characters of the string x from right to left, so $x^R[i] = x[|x| - 1 - i]$; prefixes and suffixes of the strings x and $x^R$ are of course closely related: $x^R[:i] = (x[-i:])^R$ and $x^R[-i:] = (x[:i])^R$.

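We will frequently make use of prefix tables as we know them from the Knuth–Morris–Pratt algorithm [6]. For a given string x, let $P_x[i]$ be the largest integer $j < i$ such that $x[:j]$ is a suffix of $x[:i]$; the table $P_x$ can be computed in $O(|x|)$ time. For strings x and y, we will further define $P_{x,y}[i]$ as the largest integer $j < |x|$ such that $x[:j]$ is a suffix of $y[:i]$; the table $P_{x,y}$ can be computed in $O(|x| + |y|)$ time by an algorithm very similar to that for $P_x$. In other words, $P_x$ and $P_{x,y}$ tell us, for each prefix of x and of y, respectively, what is the longest prefix of x that it ends with (not counting the prefix $x[:i]$ itself in the case of $P_x$, and not counting the whole of x in the case of $P_{x,y}$).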

The table $P_{x,y}$ is useful, for example, in finding occurrences of the string x as a substring in the string y: these occurrences end at those positions i (recall that position i is the boundary between characters $y[i-1]$ and $y[i]$) where $P_{x,y}[i-1] = |x| - 1$ and $y[i-1] = x[|x|-1]$. This will also be useful to have stored in a table, so let $O_{x,y}[i] = 1$ if x appears in y starting at position i (that is, if $y[i:i+|x|] = x$); otherwise, let $O_{x,y}[i] = 0$. Let us further define a table of partial sums, $C_{x,y}[i] = \sum_{i'=0}^{i} O_{x,y}[i']$, which therefore counts all occurrences of x as a substring in y at positions 0 to i (for $i < 0$ we take $C_{x,y}[i] = 0$). All these tables can be calculated in linear time.
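The tables $P_x$, $P_{x,y}$, $O_{x,y}$, and $C_{x,y}$ can be computed with the usual Knuth–Morris–Pratt loop. The following C++ sketch assumes the convention that $P_{x,y}[i]$ only considers prefixes of x shorter than $|x|$, so a complete match is recorded inside the loop and then replaced by the longest proper border $P_x[|x|]$; the function and structure names are our own illustrative choices.

```cpp
#include <cassert>
#include <string>
#include <vector>

// P[i] for 1 <= i <= |x|: length of the longest proper prefix of x that is
// also a suffix of x[:i] (the KMP failure function); P[0] is set to 0.
std::vector<int> prefixTable(const std::string& x) {
    const int n = static_cast<int>(x.size());
    std::vector<int> P(n + 1, 0);
    for (int i = 1, j = 0; i < n; ++i) {
        while (j > 0 && x[i] != x[j]) j = P[j];  // fall back to shorter borders
        if (x[i] == x[j]) ++j;
        P[i + 1] = j;
    }
    return P;
}

struct CrossTables {
    std::vector<int> P;        // P[i]: largest j < |x| such that x[:j] is a suffix of y[:i]
    std::vector<int> O;        // O[i] = 1 iff y[i:i+|x|] == x
    std::vector<long long> C;  // C[i] = O[0] + ... + O[i]
};

CrossTables crossTables(const std::string& x, const std::string& y) {
    assert(!x.empty());
    const int m = static_cast<int>(x.size()), n = static_cast<int>(y.size());
    const std::vector<int> Px = prefixTable(x);
    CrossTables r{std::vector<int>(n + 1, 0), std::vector<int>(n + 1, 0),
                  std::vector<long long>(n + 1, 0)};
    for (int i = 0, j = 0; i < n; ++i) {
        while (j > 0 && y[i] != x[j]) j = Px[j];
        if (y[i] == x[j]) ++j;
        if (j == m) {       // x ends at position i + 1 of y
            r.O[i + 1 - m] = 1;
            j = Px[m];      // keep only proper prefixes of x
        }
        r.P[i + 1] = j;
    }
    for (int i = 0; i <= n; ++i) r.C[i] = (i > 0 ? r.C[i - 1] : 0) + r.O[i];
    return r;
}
```

For example, `crossTables("aba", "ababa")` marks occurrences at positions 0 and 2, so its last partial sum is 2.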

Similar to the above precomputation of prefixes, we can also preprocess suffixes; let us define tables $S_x$ and $S_{x,y}$, which tell us, for each suffix of x or y, what is the longest suffix of x that it begins with. More precisely, let $S_x[i]$ be the smallest value $j > i$ such that $x[j:]$ is a prefix of $x[i:]$; and let $S_{x,y}[i]$ be the smallest value $j > 0$ such that $x[j:]$ is a prefix of $y[i:]$. Both tables can be computed in linear time by a similar procedure as for $P_x$ and $P_{x,y}$, except that we process the strings from right to left. (We can also reverse the strings and use the previous procedures for prefix tables; namely, $S_x[i] = |x| - P_{x^R}[|x| - i]$ and $S_{x,y}[i] = |x| - P_{x^R, y^R}[|y| - i]$.)
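The reversal identities translate directly into code. Below is a minimal C++ sketch, assuming the conventions $S_x[|x|] = |x|$ (the empty suffix) and $S_{x,y}[i] > 0$ (only proper suffixes of x are considered); the helper names are hypothetical, and the prefix-table routines are repeated so that the block compiles on its own.

```cpp
#include <cassert>
#include <string>
#include <vector>

// KMP failure function: P[i] = longest proper border of x[:i].
static std::vector<int> prefixTable(const std::string& x) {
    const int n = static_cast<int>(x.size());
    std::vector<int> P(n + 1, 0);
    for (int i = 1, j = 0; i < n; ++i) {
        while (j > 0 && x[i] != x[j]) j = P[j];
        if (x[i] == x[j]) ++j;
        P[i + 1] = j;
    }
    return P;
}

// P[i] = largest j < |x| such that x[:j] is a suffix of y[:i].
static std::vector<int> crossPrefixTable(const std::string& x, const std::string& y) {
    assert(!x.empty());
    const int m = static_cast<int>(x.size()), n = static_cast<int>(y.size());
    std::vector<int> Px = prefixTable(x), P(n + 1, 0);
    for (int i = 0, j = 0; i < n; ++i) {
        while (j > 0 && y[i] != x[j]) j = Px[j];
        if (y[i] == x[j]) ++j;
        if (j == m) j = Px[m];  // a full match falls back to the longest proper border
        P[i + 1] = j;
    }
    return P;
}

static std::string reversed(const std::string& x) { return std::string(x.rbegin(), x.rend()); }

// S[i] = smallest j > i such that x[j:] is a prefix of x[i:]  (S[|x|] = |x|).
std::vector<int> suffixTable(const std::string& x) {
    const int n = static_cast<int>(x.size());
    std::vector<int> Pr = prefixTable(reversed(x)), S(n + 1);
    for (int i = 0; i <= n; ++i) S[i] = n - Pr[n - i];
    return S;
}

// S[i] = smallest j > 0 such that x[j:] is a prefix of y[i:].
std::vector<int> crossSuffixTable(const std::string& x, const std::string& y) {
    const int m = static_cast<int>(x.size()), n = static_cast<int>(y.size());
    std::vector<int> Pr = crossPrefixTable(reversed(x), reversed(y)), S(n + 1);
    for (int i = 0; i <= n; ++i) S[i] = m - Pr[n - i];
    return S;
}
```

For instance, `crossSuffixTable("aba", "ab")[0]` is 2, because a = aba[2:] is the longest proper suffix of aba that is a prefix of ab.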

2.2. General Approach

We will successfully solve the problem if we count, for each $k \in \{0, 1, \ldots, |s|\}$, the number of occurrences of p in the string $s[:k]\,t\,s[k:]$, which arises from inserting t into s at position k. These occurrences can be divided into those that

- (I) lie entirely within $s[:k]$ or entirely within $s[k:]$;
- (II) lie entirely within t;
- (III) start within $s[:k]$ and end within t;
- (IV) start within t and end within $s[k:]$; and
- (V) start within $s[:k]$ and end within $s[k:]$, and in between extend over the entire t.

Each of these five types of occurrences will be considered separately and the results added up at the end. Of course, type (II) comes into play only if $|t| \ge |p|$, and type (V) only if $|t| \le |p| - 2$.

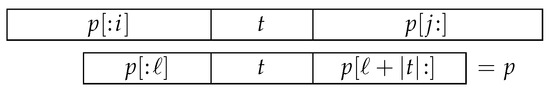

(I) Suppose that p appears at position i in s, that is, $s[i:i+|p|] = p$. This occurrence lies entirely within $s[:k]$ if $i + |p| \le k$, therefore $i \le k - |p|$; there are a total of $C_{p,s}[k - |p|]$ such occurrences.

On the other hand, if we want the occurrence to lie entirely within $s[k:]$, that means that $i \ge k$; such occurrences can be counted by taking the number of all occurrences of p in s and subtracting those that start at positions from 0 to $k - 1$. Thus, we get $C_{p,s}[|s| - |p|] - C_{p,s}[k - 1]$.

(II) The second type consists of all occurrences of p in t, so there are $C_{p,t}[|t| - |p|]$ of these. This count is the same for every k.
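Types (I) and (II) only need the partial sums. The C++ sketch below is illustrative and makes two simplifications of our own: to stay short, it finds occurrences by direct comparison (quadratic in the worst case) instead of using the linear-time tables from Section 2.1, and it stores the sums shifted by one (`Cs[i + 1]` holds $C_{p,s}[i]$) so that the value for negative indices is simply 0. The function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// shifted[i + 1] = number of occurrences of p in text starting at positions 0..i.
std::vector<long long> shiftedPrefixCounts(const std::string& text, const std::string& p) {
    std::vector<long long> shifted(text.size() + 1, 0);
    for (std::size_t i = 0; i < text.size(); ++i) {
        const bool occurs = i + p.size() <= text.size() && text.compare(i, p.size(), p) == 0;
        shifted[i + 1] = shifted[i] + (occurs ? 1 : 0);
    }
    return shifted;
}

// For every k = 0..|s|: number of type (I) plus type (II) occurrences in s[:k] t s[k:].
std::vector<long long> countTypesIandII(const std::string& s, const std::string& t,
                                        const std::string& p) {
    assert(!p.empty());
    const std::vector<long long> Cs = shiftedPrefixCounts(s, p);
    const long long n = static_cast<long long>(s.size());
    const long long m = static_cast<long long>(p.size());
    // C_{p,s}[i]: occurrences starting at positions 0..i (0 for i < 0).
    auto C = [&](long long i) { return i < 0 ? 0LL : Cs[std::min(i + 1, n)]; };
    const long long total = Cs[n];
    const long long typeII = shiftedPrefixCounts(t, p).back();
    std::vector<long long> result;
    for (long long k = 0; k <= n; ++k)
        result.push_back(C(k - m) + (total - C(k - 1)) + typeII);
    return result;
}
```

For s = abaaba, t = x, and p = aba, the result for k = 4 is 1: the occurrence at position 0 lies within s[:4], while the one at position 3 is destroyed by the insertion.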

(III) The third type consists of occurrences of p that begin within $s[:k]$ and end within t. Suppose that the left j characters of p lie in $s[:k]$ and the rest in t; thus $s[:k]$ ends with $p[:j]$, and t starts with $p[j:]$. We already know that the longest prefix of p appearing at the end of $s[:k]$ is $P_{p,s}[k]$ characters long; for the purposes of our discussion, we can therefore replace $s[:k]$ with $p[:P_{p,s}[k]]$.

Let $D[i]$ be the number of those occurrences of p in $p[:i]\,t$ that start within the first part, i.e., in $p[:i]$. One possibility is that such an occurrence starts at the beginning of the string (in this case t must start with $p[i:]$); the other option is that it starts a bit later, so that only the first $j < i$ characters of our occurrence lie in $p[:i]$. Therefore $p[:i]$ ends with $p[:j]$, and we already know that the largest j for which this condition is satisfied is $P_p[i]$; all these later occurrences thus also lie within $p[:P_p[i]]\,t$, so $D[i] = D[P_p[i]] + 1$ if t starts with $p[i:]$, and $D[i] = D[P_p[i]]$ otherwise. The table D is prepared in advance with Algorithm 1 (with a linear time complexity), and then at each k, we know that $D[P_{p,s}[k]]$ occurrences of p start within $s[:k]$ and end within t.

In the above procedure, we need to be able to quickly check whether t starts with $p[i:]$ for a given i. We will use the tables $S_p$ and $S_{p,t}$ that were prepared in advance. Now $S_{p,t}[0]$ tells us that $p[S_{p,t}[0]:]$ is the longest suffix of p that occurs at the beginning of t; the second longest such suffix is $p[S_p[S_{p,t}[0]]:]$, the third $p[S_p[S_p[S_{p,t}[0]]]:]$, and so on. We therefore prepare the table E (with Algorithm 2), which tells us, for each possible index j, whether $p[j:]$ occurs at the beginning of t. Hence, the condition "if t starts with $p[i:]$" in our procedure for computing table D can now be checked by looking at the value of $E[i]$.

| Algorithm 1 Computation of the table D: occurrences of p in $p[:i]\,t$ that start within the first part |

$D[0] := 0$; for $i := 1$ to $|p| - 1$: (*occurrences that have fewer than i characters in $p[:i]$*) $D[i] := D[P_p[i]]$; if t starts with $p[i:]$ then (*the occurrence of p at the start of $p[:i]\,t$*) $D[i] := D[i] + 1$; |

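| Algorithm 2 Computation of the table E: does $p[j:]$ occur at the start of t? |

for $j := 0$ to $|p|$ do $E[j] := \text{false}$; $j := S_{p,t}[0]$; while $j < |p|$: $E[j] := \text{true}$; $j := S_p[j]$; |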
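Algorithms 1 and 2 can be sketched together in C++ as follows, assuming the table conventions of Section 2.1 (in particular, that $S_{p,t}$ only considers proper suffixes of p). The helper names are our own; the tables $S_p$ and $S_{p,t}[0]$ are obtained through the reversal identities, and the prefix-table routine is a condensed copy so that the block is self-contained.

```cpp
#include <cassert>
#include <string>
#include <vector>

// P_x: longest proper border of each prefix of x (KMP failure function).
static std::vector<int> borders(const std::string& x) {
    const int n = static_cast<int>(x.size());
    std::vector<int> P(n + 1, 0);
    for (int i = 1, j = 0; i < n; ++i) {
        while (j > 0 && x[i] != x[j]) j = P[j];
        if (x[i] == x[j]) ++j;
        P[i + 1] = j;
    }
    return P;
}

// Largest j < |x| such that x[:j] is a suffix of the whole of y, i.e. P_{x,y}[|y|].
static int crossBorderAtEnd(const std::string& x, const std::string& y) {
    const std::vector<int> Px = borders(x);
    const int m = static_cast<int>(x.size());
    int j = 0;
    for (char c : y) {
        while (j > 0 && c != x[j]) j = Px[j];
        if (c == x[j]) ++j;
        if (j == m) j = Px[m];
    }
    return j;
}

// Algorithm 2: E[j] is true iff p[j:] (0 < j < |p|) occurs at the start of t.
std::vector<bool> tableE(const std::string& p, const std::string& t) {
    assert(!p.empty());
    const int n = static_cast<int>(p.size());
    const std::string pr(p.rbegin(), p.rend()), tr(t.rbegin(), t.rend());
    const std::vector<int> Pr = borders(pr);   // S_p[j] = n - Pr[n - j]
    std::vector<bool> E(n + 1, false);
    int j = n - crossBorderAtEnd(pr, tr);      // S_{p,t}[0]
    while (j < n) {
        E[j] = true;
        j = n - Pr[n - j];                     // next shorter suffix: S_p[j]
    }
    return E;
}

// Algorithm 1: D[i] = occurrences of p in p[:i] t that start within p[:i].
std::vector<long long> tableD(const std::string& p, const std::string& t) {
    const int n = static_cast<int>(p.size());
    const std::vector<int> P = borders(p);
    const std::vector<bool> E = tableE(p, t);
    std::vector<long long> D(n, 0);
    for (int i = 1; i < n; ++i) D[i] = D[P[i]] + (E[i] ? 1 : 0);
    return D;
}
```

For p = abab and t = ab, only $p[2:]$ = ab is a suffix of p at the start of t, so the sketch yields D = (0, 0, 1, 0): the single occurrence is the one in ab·ab.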

(IV) The fourth type consists of the occurrences of p that start within t and end within . These can be counted using the same procedure as for (III), except that we reverse all three strings.

(V) We are left with those occurrences of p that start within $s[:k]$, end within $s[k:]$, and contain the entire t in between. This means that $s[:k]$ must end with some prefix of p, and we already know that the longest such prefix is $i = P_{p,s}[k]$ characters long; similarly, $s[k:]$ must start with some suffix of p, and the longest such suffix is $|p| - j$ characters long, where $j = S_{p,s}[k]$. Instead of the strings $s[:k]$ and $s[k:]$ we can focus on $p[:i]$ and $p[j:]$ from here on. (These i and j can of course be different for different k, and where relevant we will refer to them as $i_k$ and $j_k$.) We will present several ways of counting the occurrences of p in strings of the form $p[:i]\,t\,p[j:]$, from simpler and less efficient to more efficient but more complicated ones. Everything we have presented so far regarding types (I) to (IV) had linear time and space complexity, $O(s + t + p)$, so the computational complexity of the solution as a whole depends mainly on how we solve the task for type (V).

2.3. Quadratic Solution

Let $f(i, j)$ be the number of those occurrences of p in the string $p[:i]\,t\,p[j:]$ that start within $p[:i]$ and end within $p[j:]$. This function is interesting for $0 \le i < |p|$ and $0 < j \le |p|$ (so that the first and third part, $p[:i]$ and $p[j:]$, are shorter than p). The edge cases are $f(0, j) = f(i, |p|) = 0$, because then the first or third part is empty and therefore p cannot overlap with it.

Let us now consider the general case. One occurrence of p in $p[:i]\,t\,p[j:]$ may already appear at the beginning of this string; the rest must begin later, such that within the first part of our string, $p[:i]$, they only have some shorter prefix, say $p[:i']$ with $i' < i$, which is therefore also a suffix of the string $p[:i]$. The longest such prefix is of length $P_p[i]$; all these later occurrences therefore lie not only within $p[:i]\,t\,p[j:]$, but also within $p[:P_p[i]]\,t\,p[j:]$, so there are $f(P_p[i], j)$ of them. We have thus obtained a recurrence that is the core of Algorithm 3 for calculating all possible values of the function f using dynamic programming.

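| Algorithm 3 A quadratic dynamic programming solution for counting occurrences of p in $p[:i]\,t\,p[j:]$ |

1 for $i := 1$ to $|p| - 1$ do for $j := 1$ to $|p| - 1$: 2 $c := 0$; (*Does p occur at the start of $p[:i]\,t\,p[j:]$?*) 3 if t occurs in p at position i (i.e., if $O_{t,p}[i] = 1$) then 4 if $i + |t| < |p|$ and $p[j:]$ starts with $p[i+|t|:]$ then 5 $c := 1$; (*Add later occurrences.*) 6 $c := c + f(P_p[i], j)$; 7 $f(i, j) := c$; |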

Before the procedure for calculating the function f is actually useful, we still need to consider some important details. We check the condition in line 3 by looking up the precomputed table $O_{t,p}$. In line 4, the condition $i + |t| < |p|$ checks whether an occurrence of p that started at the beginning of the string would even reach $p[j:]$ at the end, because we are only interested in such occurrences; those that lie entirely within $p[:i]\,t$ have already been considered under type (III) in Section 2.2. Next, we have to check, in line 4, whether some suffix of p begins with some shorter suffix of p. Recall that the longest $p[u:]$ with $u > j$ that occurs at the beginning of the string $p[j:]$ is the one with $u = S_p[j]$; the next is for $u = S_p[S_p[j]]$, and so on. In line 4 we are essentially checking whether this sequence of shorter suffixes eventually reaches $u = i + |t|$. For this purpose we can use a tree structure, which we will also need later in more efficient solutions. Let us construct a tree $T_S$ in which there is a node for each u from 1 to $|p|$; the root of the tree is the node $|p|$ (recall that the number in the suffix notation, such as $p[u:]$, represents the position or index at which this suffix begins; therefore, shorter suffixes have larger indices, and the largest among them is $|p|$, which represents the empty suffix), and for every $u < |p|$, let node u be a child of node $S_p[u]$. Line 4 now actually asks whether node $i + |t|$ is an ancestor of node j in the tree $T_S$ (where every node counts as its own ancestor, which covers the case $i + |t| = j$).

This can be checked efficiently if we do a preorder traversal of all nodes in the tree, that is, the order in which we list the root first and then recursively add each of its subtrees (Algorithm 4). For each node u, we remember its position in that order (say $\sigma[u]$) and the position of the last of its descendants (say $\tau[u]$). Then the descendants of u are exactly those nodes that are located in the order at indices from $\sigma[u]$ to $\tau[u]$. To check whether some $p[u:]$ is a prefix of $p[v:]$, we only have to check if $\sigma[u] \le \sigma[v] \le \tau[u]$. (Another way to check whether $p[u:]$ is a prefix of $p[v:]$, for $u > v$, is with the Z-algorithm ([7], pp. 7–10). For any string w, we can prepare a table $Z_w$ in $O(|w|)$ time, where the element $Z_w[i]$ (for $0 < i < |w|$) tells us the length of the longest common prefix of the strings w and $w[i:]$. The question we are interested in, i.e., whether $p[u:]$ is a prefix of $p[v:]$, is equivalent to asking whether $p[u:] = p[v:v+|p|-u]$, which is equivalent to $p^R[:|p|-u] = p^R[u-v:|p|-v]$, and hence to the question whether $p^R$ and $p^R[u-v:]$ match in the first $|p| - u$ characters, i.e., whether $Z_{p^R}[u-v] \ge |p| - u$. However, for our purposes the tree-based solution is more useful than the one using the Z-algorithm, as we will later use the tree in solutions with a lower time complexity than the quadratic one that we are describing now.)
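The Z-algorithm alternative from the parenthetical remark can be sketched in C++ as follows. This is a minimal illustration with our own function names; `zr` is assumed to be the Z-table of $p^R$, and $n = |p|$.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// z[i] = length of the longest common prefix of w and w[i:]; z[0] = |w| by convention.
std::vector<int> zFunction(const std::string& w) {
    const int n = static_cast<int>(w.size());
    std::vector<int> z(n, 0);
    if (n > 0) z[0] = n;
    for (int i = 1, l = 0, r = 0; i < n; ++i) {  // [l, r) is the rightmost match window
        if (i < r) z[i] = std::min(r - i, z[i - l]);
        while (i + z[i] < n && w[z[i]] == w[i + z[i]]) ++z[i];
        if (i + z[i] > r) { l = i; r = i + z[i]; }
    }
    return z;
}

// Is p[u:] a prefix of p[v:]?  zr is the Z-table of reverse(p), n = |p|, v <= u <= n.
bool suffixIsPrefixOf(const std::vector<int>& zr, int n, int u, int v) {
    assert(0 <= v && v <= u && u <= n);
    if (u == v || u == n) return true;  // identical suffixes, or the empty suffix
    return zr[u - v] >= n - u;          // p^R and p^R[u-v:] agree on n - u characters
}
```

For p = abab we have $p^R$ = baba with Z-table (4, 0, 2, 0); the sketch then confirms that ab = p[2:] is a prefix of p but not of p[1:] = bab.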

Our procedure so far (Algorithm 3) has computed $f(i, j)$ by checking whether p occurs at the beginning of $p[:i]\,t\,p[j:]$ and handling subsequent occurrences via $f(i', j)$ for $i' = P_p[i]$. Of course, we could also go the other way: we would first check whether p occurs at the end (instead of the beginning) of $p[:i]\,t\,p[j:]$ and then add earlier occurrences via $f(i, j')$ for $j' = S_p[j]$. To check if p occurs at the end of $p[:i]\,t\,p[j:]$, we should first check whether t occurs in p at indices from $j - |t|$ to j (with the same table $O_{t,p}$ as before in line 3), and then we would be interested in whether $p[:i]$ ends with $p[:j-|t|]$. Here, it would be helpful to construct a tree $T_P$, which would have one node for each u from 0 to $|p| - 1$; node 0 would be the root, and for every $u > 0$, node u would be a child of node $P_p[u]$. Using the procedure Preorder on this tree would give us tables $\sigma'$ and $\tau'$. We should then check whether node $j - |t|$ is an ancestor of node i in the tree $T_P$, i.e., whether $\sigma'[j-|t|] \le \sigma'[i] \le \tau'[j-|t|]$.

| Algorithm 4 Tree traversal for determining the ancestor–descendant relationships |

procedure Traverse(tree T, node u, tables σ and τ, index i): $i := i + 1$; $\sigma[u] := i$; for every child v of node u in tree T: $i :=$ Traverse(T, v, σ, τ, i); $\tau[u] := i$; return i; |

procedure Preorder(input: tree T; output: tables σ and τ): let σ and τ be tables indexed by the nodes of T; Traverse(T, root of T, σ, τ, 0); return (σ, τ); main call: Preorder($T_S$); |
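Algorithm 4 is recursive; since the trees $T_S$ and $T_P$ can be as deep as $|p|$ (e.g., when p consists of a single repeated character), an implementation for long patterns may prefer an explicit stack. The following C++ sketch is one such variant (our own illustration, not the paper's implementation); the tree is given as a parent array, and nodes that are not reachable from the root are ignored.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

struct PreorderTables {
    std::vector<int> sigma;  // sigma[u]: 1-based position of u in preorder
    std::vector<int> tau;    // tau[u]: position of the last descendant of u
    // Is a an ancestor of b? (Every node counts as its own ancestor.)
    bool isAncestor(int a, int b) const {
        return sigma[a] <= sigma[b] && sigma[b] <= tau[a];
    }
};

// parent[u] gives the parent of node u; parent[root] and negative entries are ignored.
PreorderTables preorder(const std::vector<int>& parent, int root) {
    const int n = static_cast<int>(parent.size());
    std::vector<std::vector<int>> children(n);
    for (int u = 0; u < n; ++u)
        if (u != root && parent[u] >= 0) children[parent[u]].push_back(u);

    PreorderTables result{std::vector<int>(n, 0), std::vector<int>(n, 0)};
    int counter = 0;
    // Explicit stack of (node, index of the next child to visit) replaces recursion.
    std::vector<std::pair<int, std::size_t>> stack;
    stack.push_back({root, 0});
    result.sigma[root] = ++counter;
    while (!stack.empty()) {
        const int u = stack.back().first;
        if (stack.back().second < children[u].size()) {
            const int v = children[u][stack.back().second++];
            result.sigma[v] = ++counter;  // first visit of v
            stack.push_back({v, 0});
        } else {
            result.tau[u] = counter;      // all descendants of u have been numbered
            stack.pop_back();
        }
    }
    return result;
}
```

For p = abab, the table $S_p$ is (2, 3, 4, 4, 4), so $T_S$ has root 4 with children 2 and 3, and node 1 is a child of 3; the sketch then reports that 3 is an ancestor of 1 (b is a prefix of bab) while 2 is not (ab is not).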

Thus, we see that we can compute $f(i, j)$ quickly, in $O(1)$ time, either from $f(P_p[i], j)$ or from $f(i, S_p[j])$; both ways will come in handy later.

Our current procedure for computing the function f would take $O(t + p)$ time to prepare the trees $T_S$ and $T_P$ and the tables $\sigma$, $\tau$, and $O_{t,p}$, followed by $O(p^2)$ time to calculate the value of $f(i, j)$ for all possible pairs $(i, j)$. This solution has a time complexity of $O(s + t + p^2)$.
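A compact C++ sketch of the quadratic solution follows. It is an illustration under simplifying assumptions of our own: the occurrence of t in p (line 3) is tested by direct comparison instead of the table $O_{t,p}$, and the prefix condition in line 4 uses the Z-algorithm check on $p^R$ rather than the tree $T_S$. The result `f[i][j]` holds $f(i, j)$ for $0 \le i < |p|$ and $1 \le j \le |p|$.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

std::vector<std::vector<long long>> quadraticF(const std::string& p, const std::string& t) {
    const int n = static_cast<int>(p.size()), m = static_cast<int>(t.size());
    // P_p: longest proper border of each prefix of p.
    std::vector<int> P(n + 1, 0);
    for (int i = 1, k = 0; i < n; ++i) {
        while (k > 0 && p[i] != p[k]) k = P[k];
        if (p[i] == p[k]) ++k;
        P[i + 1] = k;
    }
    // Z-table of the reversed pattern.
    const std::string w(p.rbegin(), p.rend());
    std::vector<int> z(n, 0);
    for (int i = 1, l = 0, r = 0; i < n; ++i) {
        if (i < r) z[i] = std::min(r - i, z[i - l]);
        while (i + z[i] < n && w[z[i]] == w[i + z[i]]) ++z[i];
        if (i + z[i] > r) { l = i; r = i + z[i]; }
    }
    // Does p[j:] start with p[u:]?  (Only possible when j <= u.)
    auto startsWith = [&](int j, int u) {
        if (j > u) return false;
        if (u == j || u == n) return true;
        return z[u - j] >= n - u;
    };
    // f[0][j] = f[i][n] = 0 are the edge cases; rows are filled in increasing i,
    // so f[P[i]][j] (with P[i] < i) is always available.
    std::vector<std::vector<long long>> f(n, std::vector<long long>(n + 1, 0));
    for (int i = 1; i < n; ++i)
        for (int j = 1; j < n; ++j) {
            long long c = 0;  // does p occur at the start of p[:i] t p[j:]?
            if (i + m < n && p.compare(i, m, t) == 0 && startsWith(j, i + m)) c = 1;
            f[i][j] = c + f[P[i]][j];  // add the later occurrences
        }
    return f;
}
```

For p = ababa and t = b, the string $p[:3]\,t\,p[2:]$ = abababa contains two occurrences of p that start within aba and end within the final aba, and the sketch gives f(3, 2) = 2.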

2.4. Distinguished Nodes

We do not really need the values of $f(i, j)$, which we calculated in the quadratic solution, for all pairs $(i, j)$, but only for one such pair, $(i_k, j_k)$, for each k (i.e., each possible position where t can be inserted into s), which is only $O(s)$ values and not all $O(p^2)$. We will show how to compute those values of the function f that we really need, without computing all the others.

Let us traverse the tree $T_P$ from the bottom up (from the leaves towards the root) and compute the sizes of the subtrees; whenever we encounter a node u with its subtree (let us call it $T_u$; it consists of u and all its descendants) of size at least  nodes, we mark u as a distinguished node and cut off the subtree $T_u$. Cutting it off means we will not count the nodes in it when we consider subtrees of u's ancestors higher up in the tree. When we finally get to the root, we mark it as well, regardless of how many nodes are still left in the tree at that time. Algorithm 5 calculates, for each node u, its nearest marked ancestor $M[u]$; if u itself is marked, then $M[u] = u$.

Now we have at most  marked nodes (because every time we marked a node, we also cut off at least  nodes from the tree, and the number of all nodes is $|p|$); and each node is less than  steps away from its nearest marked ancestor (if it were  or more steps away, some lower-lying ancestor of this node would have a subtree with at least  nodes, and would therefore get marked and its subtree cut off).

| Algorithm 5 Marking distinguished nodes |

procedure Mark(u): (*Variable N counts the number of nodes in $T_u$ reachable through unmarked descendants.*) $N := 1$; for every child v of node u: $N := N + \text{Mark}(v)$; if N has reached the subtree-size threshold described above or $u = 0$ then $M[u] := u$; return 0; (*Mark u.*) else $M[u] := -1$; return N; (*Unmarked u.*) |

main call: Mark(0); (*Start at the root, node 0.*) (*Pass the information about the nearest marked ancestor down the tree to all unmarked nodes (from parents to children).*) for $u := 1$ to $|p| - 1$ do if $M[u] < 0$ then $M[u] := M[P_p[u]]$; |
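Because $P_p[u] < u$ for every $u > 0$, the subtree sizes in $T_P$ can also be accumulated without recursion, simply by visiting the nodes in decreasing order. The following C++ sketch of Algorithm 5 is our own illustration; `threshold` is a hypothetical parameter standing for the subtree-size bound used above, whose value is left to the caller.

```cpp
#include <cassert>
#include <vector>

// parent[u] = P_p[u] for u >= 1 (parent[0] is ignored; node 0 is the root of T_P).
// threshold: hypothetical subtree-size bound at which a node gets marked.
// Returns M, where M[u] is the nearest marked ancestor of u (M[u] == u if u is marked).
std::vector<int> markDistinguished(const std::vector<int>& parent, int threshold) {
    const int n = static_cast<int>(parent.size());
    std::vector<int> size(n, 1), M(n, -1);
    // Children have larger indices than their parents, so a decreasing scan is bottom-up.
    for (int u = n - 1; u >= 0; --u) {
        assert(u == 0 || (0 <= parent[u] && parent[u] < u));
        if (u == 0 || size[u] >= threshold)
            M[u] = u;                    // mark u and cut off its remaining subtree
        else
            size[parent[u]] += size[u];  // unmarked: its nodes count towards the parent
    }
    // Pass the nearest marked ancestor down the tree (parents before children).
    for (int u = 1; u < n; ++u)
        if (M[u] < 0) M[u] = M[parent[u]];
    return M;
}
```

For p = aaaaaa, the tree $T_P$ is a path (0, 1, ..., 5); with a threshold of 2, the sketch marks nodes 4, 2, and 0, and every node ends up at most one step below its nearest marked ancestor.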

We know that $f(i, |p|) = 0$ for every i. For each marked i, we can compute all values of $f(i, j)$ for $j = |p| - 1, \ldots, 1$ from $f(i, S_p[j])$; since there are at most  marked nodes, this takes  time. Let us describe how we can answer each of the queries that we are interested in. The nearest marked ancestor of node $i_k$ is $M[i_k]$; since it is marked, we already know $f(M[i_k], j)$ for all j, i.e., also for $j = j_k$, and since $i_k$ lies in the tree at most  steps below node $M[i_k]$, we can calculate $f(i_k, j_k)$ from $f(M[i_k], j_k)$ in at most  steps. Since we have to do this for each query, it takes  overall time. To save space, we can solve queries in groups according to $M[i_k]$; after we process all queries that share the same node $M[i_k]$, we can forget the results $f(M[i_k], \cdot)$. Algorithm 6 presents the pseudocode of this approach to counting the occurrences of type (V).

| Algorithm 6 Rearranging queries |

(*Group queries based on the nearest marked ancestor.*) for $u := 0$ to $|p| - 1$ do if $M[u] = u$ then $Q[u] :=$ empty list; for $k := 0$ to $|s|$ do add k to $Q[M[i_k]]$; (*Process marked nodes u.*) for $u := 0$ to $|p| - 1$ do if $M[u] = u$: (*Compute $f(u, j)$ for all j as $g[j]$.*) $g[|p|] := 0$; for $j := |p| - 1$ downto 1 do in $g[j]$ store $f(u, j)$ that is computed from $f(u, S_p[j])$, which is stored in $g[S_p[j]]$; (*Answer queries k, where u is the nearest marked ancestor of $i_k$.*) for each k in $Q[u]$: (*Prepare the path in the tree from $i_k$ to its ancestor u.*) $S :=$ empty stack; $i := i_k$; while $i \ne u$: push i to S and assign $i := P_p[i]$; (*Compute $f(i, j_k)$ for all nodes i on the path from u to $i_k$.*) $r := g[j_k]$; (*i.e., $f(u, j_k)$*) while S not empty: $i :=$ node popped from S; from $f(P_p[i], j_k)$, currently stored in r, compute $f(i, j_k)$ and store it in r; (*r is now equal to $f(i_k, j_k)$, i.e., the answer to query k, which is the number of occurrences of p in $s[:k]\,t\,s[k:]$ that start within $s[:k]$ and end within $s[k:]$.*) |

Everything we did to count the occurrences of types (I) to (IV) had a linear time complexity in terms of the length of the input strings, so the overall time complexity of this solution is with an space complexity. (Note that this time complexity can be worse than the quadratic solution in cases where .)

2.5. Geometric Interpretation

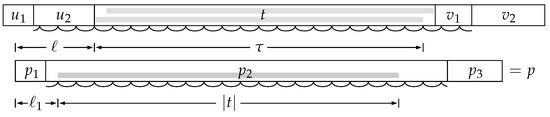

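We want to count those occurrences of p in $p[:i]\,t\,p[j:]$ that start within $p[:i]$ and end within $p[j:]$ (Figure 1). Such an occurrence is therefore of the form $p = p[:\ell]\,t\,p[\ell+|t|:]$, where $0 < \ell \le i$, $j \le \ell + |t| < |p|$, and the following three conditions apply: (a) $p[:\ell]$ must be a suffix of $p[:i]$, (b) $p[\ell+|t|:]$ must be a prefix of $p[j:]$, and (c) t must appear in p as a substring starting at index ℓ, i.e., $p[\ell:\ell+|t|] = t$.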

Figure 1. The alignment of string p as an occurrence in $p[:i]\,t\,p[j:]$.

To check (c), we prepare the table $O_{t,p}$ in advance and check whether $O_{t,p}[\ell] = 1$.

Let us now consider condition (a), i.e., that $p[:\ell]$ must be a suffix of $p[:i]$. We know that the longest prefix of p that is also a suffix of $p[:i]$ is i characters long (it is $p[:i]$ itself); the second longest is $P_p[i]$ characters long, the third $P_p[P_p[i]]$, and so on. The condition that $p[:\ell]$ must be a suffix of $p[:i]$ essentially asks whether ℓ occurs somewhere in the sequence $i, P_p[i], P_p[P_p[i]], \ldots$. This is equivalent to asking whether ℓ is an ancestor of i in the tree $T_P$, which, as we have already seen, can be checked with the condition $\sigma'[\ell] \le \sigma'[i] \le \tau'[\ell]$.

Similar reasoning holds for condition (b), i.e., that $p[\ell+|t|:]$ must be a prefix of $p[j:]$, except that now instead of the table $P_p$ and the tree $T_P$ we use the table $S_p$ and the tree $T_S$. Condition (b) asks whether $\ell + |t|$ is an ancestor of j in the tree $T_S$, which can be checked with $\sigma[\ell+|t|] \le \sigma[j] \le \tau[\ell+|t|]$.

The two inequalities we have thus obtained,

$$\sigma'[\ell] \le \sigma'[i] \le \tau'[\ell] \qquad\text{and}\qquad \sigma[\ell+|t|] \le \sigma[j] \le \tau[\ell+|t|],$$

have a geometric interpretation which will prove useful: if we think of $\sigma'[i]$ and $\sigma[j]$ as the x- and y-coordinates, respectively, of a point on the two-dimensional plane, then the two inequalities require the x-coordinate to lie within a certain range and the y-coordinate to lie within a certain other range; in other words, the point must lie within a certain rectangle.

Recall that i and j depend on the position k where the string t has been inserted into s; therefore, to avoid confusion, we will now denote them by $i_k$ and $j_k$. We will refer to the point $(\sigma'[i_k], \sigma[j_k])$ from the previous paragraph as $A_k$, and to the rectangle $[\sigma'[\ell], \tau'[\ell]] \times [\sigma[\ell+|t|], \tau[\ell+|t|]]$ as $R_\ell$. Thus, we now see that p occurs in $s[:k]\,t\,s[k:]$ such that the occurrence of t at position ℓ of p aligns with the occurrence of t at position k of $s[:k]\,t\,s[k:]$ if and only if $A_k$ lies in $R_\ell$. Actually, we are not interested in whether a match occurs for a particular k and ℓ, but in how many occurrences there are for a particular k; in other words, for each k (from 1 to $|s| - 1$) we want to know how many rectangles contain the point $A_k$. Here ℓ ranges over all positions with $0 < \ell < |p| - |t|$ where t appears as a substring in p (i.e., where $O_{t,p}[\ell] = 1$ or, equivalently, $p[\ell:\ell+|t|] = t$); by limiting ourselves to such ℓ, we also take care of condition (c). Thus, we have $|s| - 1$ points and at most $|p|$ rectangles, and for each point we are interested in how many of the rectangles contain it.

We have thus reduced our string-searching problem to a geometric problem, which turns out to be useful because the latter can be solved efficiently using a well-known computational geometry approach called a plane sweep [8]. Imagine moving a vertical line across the plane from left to right (the x-coordinates, i.e., the values from the table $\sigma'$, go from 1 to $|p|$), and let us maintain some data structure with information about which y-coordinate intervals are covered by those rectangles that are present at the current x-coordinate. When we reach the left edge of a rectangle during the plane sweep, we must insert it into this data structure, and when we reach its right edge, we remove it from the data structure. Because the y-coordinates (i.e., the values from the table $\sigma$) in our case also take values from 1 to $|p|$, a suitable data structure is, for example, the Fenwick tree [9]. Imagine a table h in which the element $h[y]$ stores the difference between the number of rectangles (from those present at the current x-coordinate) that have their lower edge at y and those that have their upper edge at $y - 1$. Then the sum $h[1] + h[2] + \cdots + h[y]$ is equal to the number of rectangles that (at the current x) cover the point $(x, y)$. The Fenwick tree allows us to compute such sums in $O(\log p)$ time and also to update the tree with the same time complexity when any of the values $h[y]$ changes. Algorithm 7 describes this plane sweep with pseudocode.

| Algorithm 7 Plane sweep |

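let F be a Fenwick tree with all values initialized to 0; for $x := 1$ to $|p|$: for every rectangle $[x_1, x_2] \times [y_1, y_2]$ with the left edge at $x_1 = x$: increase $h[y_1]$ and decrease $h[y_2 + 1]$ in F by 1; for every point $A_k = (x, y)$ with this x-coordinate: compute the sum $h[1] + \cdots + h[y]$ in F, i.e., the number of occurrences of p in $s[:k]\,t\,s[k:]$ that start within $s[:k]$ and end within $s[k:]$; for every rectangle $[x_1, x_2] \times [y_1, y_2]$ with the right edge at $x_2 = x$: decrease $h[y_1]$ and increase $h[y_2 + 1]$ in F by 1; |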

We have to prepare in advance, for every coordinate x, a list of rectangles that have their left edge at x, a list of rectangles that have their right edge at x, and a list of points with this x-coordinate. Preparing these lists takes $O(s + p)$ time; each operation on F takes $O(\log p)$ time, and these operations are $O(p)$ insertions of rectangles, $O(p)$ deletions, and $O(s)$ sum queries. If we add everything we have seen in dealing with types (I) to (IV) in Section 2.2, the total time complexity of our solution is $O(t + (s + p)\log p)$. Instead of using the Fenwick tree, we could use square root decomposition on the table h (where we divide the table h into approximately $\sqrt{p}$ blocks with $\sqrt{p}$ elements each and maintain the sum of each block), but the time complexity would increase to $O(t + p + s\sqrt{p})$.
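A C++ sketch of the plane sweep in Algorithm 7 follows. The rectangle and point types and all names are our own; coordinates are assumed to be integers from 1 to N (in our setting, $N = |p|$), and each rectangle is closed on all sides, so removals at $x_2$ happen after the queries at $x_2$.

```cpp
#include <cassert>
#include <vector>

struct Rect { int x1, x2, y1, y2; };  // closed rectangle [x1, x2] x [y1, y2]
struct Point { int x, y; };

// Fenwick (binary indexed) tree over positions 1..n supporting prefix sums.
class Fenwick {
public:
    explicit Fenwick(int n) : tree_(n + 1, 0) {}
    void add(int i, long long delta) {
        for (; i < static_cast<int>(tree_.size()); i += i & -i) tree_[i] += delta;
    }
    long long prefixSum(int i) const {  // h[1] + ... + h[i]
        long long sum = 0;
        for (; i > 0; i -= i & -i) sum += tree_[i];
        return sum;
    }
private:
    std::vector<long long> tree_;
};

// For each point, the number of rectangles containing it; coordinates in 1..N.
std::vector<long long> stabbingCounts(int N, const std::vector<Rect>& rects,
                                      const std::vector<Point>& points) {
    std::vector<std::vector<int>> opening(N + 1), closing(N + 1), atX(N + 1);
    for (int r = 0; r < static_cast<int>(rects.size()); ++r) {
        opening[rects[r].x1].push_back(r);
        closing[rects[r].x2].push_back(r);
    }
    for (int q = 0; q < static_cast<int>(points.size()); ++q) atX[points[q].x].push_back(q);

    Fenwick F(N + 1);  // h[y] for y = 1..N+1 (h[N+1] absorbs updates at y2 + 1)
    std::vector<long long> answer(points.size(), 0);
    for (int x = 1; x <= N; ++x) {
        for (int r : opening[x]) { F.add(rects[r].y1, +1); F.add(rects[r].y2 + 1, -1); }
        for (int q : atX[x]) answer[q] = F.prefixSum(points[q].y);
        for (int r : closing[x]) { F.add(rects[r].y1, -1); F.add(rects[r].y2 + 1, +1); }
    }
    return answer;
}
```

In our setting, the points would be $A_k$ and the rectangles $R_\ell$; the sketch itself is agnostic of where they come from.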

2.6. Linear-Time Solution

We will first review a recently published algorithm by Ganardi and Gawrychowski [10] (hereinafter: GG), which we can adapt for solving our problem with a linear time complexity. The algorithm we need is in ([10], Theorem 3.2); we will only need to adjust it a little so that it counts the occurrences of p instead of just checking whether any occurrence exists at all. Intuitively, this algorithm is based on the following observations: if a string of the form $u\,t\,v$ (where u is a prefix of p and v is a suffix of p) contains an occurrence of p which begins within u and ends within v, this occurrence of p must have a sufficiently long overlap with at least one of the strings u, t, and v. All three of these strings are substrings of p, and if p overlaps heavily with one of its substrings, it necessarily follows that the overlapping part of the string must be periodic, and any additional occurrences of p in $u\,t\,v$ can only be found by shifting p by an integer number of these periods. The number of occurrences can thus be calculated without having to deal with each occurrence individually. The details of this, however, are somewhat more complicated, and we will present them in the rest of this section.

In the following, we will come across some basic concepts related to the periodicity of strings ([11], Chapter 8). We will say that the string x is periodic with a period of length d if $0 < d \le |x|$ and if $x[i] = x[i + d]$ for each i in the range $0 \le i < |x| - d$; in other words, if $x[:|x|-d] = x[d:]$, i.e., if some string of length $|x| - d$ is both a prefix and a suffix of x. The prefix $x[:d]$ is called a period of x; if there is no risk of confusion, we will also use the term period for its length d.

A string x can have periods of different lengths; we will refer to the shortest period of x as its base period. If some period of x is at most $|x|/2$ characters long, then its length is a multiple of the base period. (This can be proven by contradiction. Suppose this were not always true; consider any such x that has a base period y and also some longer period z, where $|z| \le |x|/2$ and for which $|z|$ is not a multiple of $|y|$. Then $|z|$ is of the form $q|y| + r$ for some $q \ge 1$ and some r from the range $0 < r < |y|$. Define $y_1 = y[:r]$ and $y_2 = y[r:]$; thus $y = y_1 y_2$ and $z = y^q y_1$. Let us look at the first $|z| + |y|$ characters of the string x; since y is a period of x, this prefix has the form $y^{q+1} y_1 = y^q y_1 y_2 y_1$; and since z is a period of x, this prefix has the form $z\,y = y^q y_1 y_1 y_2$; but it is the same prefix both times, so the last $|y|$ characters of this prefix must be the same: $y_2 y_1 = y_1 y_2$. This means that if we concatenate several copies of the strings $y_1$ and $y_2$ together, it does not matter in what order we do it; so $y^{|x|} = (y_1 y_2)^{|x|} = y_1^{|x|} y_2^{|x|}$. Now consider the string $y^{|x|}$; since x has a period y and $|x| \le |y^{|x|}|$, x is a prefix of $y^{|x|}$, and since $|y_1^{|x|}| = r|x| \ge |x|$, x is also a prefix of $y_1^{|x|}$; therefore, x has a period $y_1$, which is shorter than y; this is in contradiction with the initial assumption that y is the base (i.e., shortest) period of x.)
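The claim can also be checked empirically on small inputs. The following C++ sketch (our own, exhaustive and therefore exponential, so only meant for tiny lengths) enumerates all binary strings up to a given length and verifies that every period of length at most $|x|/2$ is a multiple of the base period.

```cpp
#include <cassert>
#include <string>

// True iff x[i] == x[i + d] for all 0 <= i < |x| - d (d in 1..|x|).
bool hasPeriod(const std::string& x, int d) {
    for (int i = 0; i + d < static_cast<int>(x.size()); ++i)
        if (x[i] != x[i + d]) return false;
    return true;
}

// Exhaustively checks all binary strings of length 1..maxLen: every period of
// length at most |x|/2 must be a multiple of the base (shortest) period.
bool periodLemmaHoldsUpTo(int maxLen) {
    for (int n = 1; n <= maxLen; ++n)
        for (unsigned mask = 0; mask < (1u << n); ++mask) {
            std::string x(n, 'a');
            for (int i = 0; i < n; ++i)
                if ((mask >> i) & 1u) x[i] = 'b';
            int base = 1;
            while (!hasPeriod(x, base)) ++base;  // terminates: d = |x| is always a period
            for (int d = base; 2 * d <= n; ++d)
                if (hasPeriod(x, d) && d % base != 0) return false;
        }
    return true;
}
```

The bound $|x|/2$ cannot simply be dropped: aabaa has periods 3 and 4, and 4 is not a multiple of the base period 3.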

It follows from the definition of periodicity that if y is both a prefix and a suffix of x (and if $|y| < |x|$), then x is periodic with a period of length $|x| - |y|$. (If, in addition, $2|y| > |x|$ also holds, so that the occurrences of the string y at the beginning and end of the string x overlap, y is also periodic with a period of this length.) The longer y is, the shorter this period is, so we obtain the base period from the longest y that is both a proper prefix and a suffix of the string x. If instead of x we consider a prefix $p[:i]$ of p, we know that the longest y that is both a proper prefix and a suffix of $p[:i]$ is $P_p[i]$ characters long; therefore, $p[:i]$ has the base period $i - P_p[i]$ (if $P_p[i] = 0$, this formula gives the base period i, which of course means that $p[:i]$ is not periodic at all).
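In code, the base periods of all prefixes of p follow directly from the prefix table. A minimal C++ sketch (function names are our own), together with a direct check of the definition for comparison:

```cpp
#include <cassert>
#include <string>
#include <vector>

// base[i] = base period of p[:i] for 1 <= i <= |p|, computed as i - P_p[i].
std::vector<int> basePeriods(const std::string& p) {
    const int n = static_cast<int>(p.size());
    std::vector<int> P(n + 1, 0), base(n + 1, 0);
    for (int i = 1, j = 0; i < n; ++i) {
        while (j > 0 && p[i] != p[j]) j = P[j];
        if (p[i] == p[j]) ++j;
        P[i + 1] = j;
    }
    for (int i = 1; i <= n; ++i) base[i] = i - P[i];
    return base;
}

// Smallest d >= 1 with x[k] == x[k + d] for all valid k (direct check of the definition).
int basePeriodNaive(const std::string& x) {
    const int n = static_cast<int>(x.size());
    for (int d = 1; d < n; ++d) {
        bool ok = true;
        for (int k = 0; k + d < n && ok; ++k) ok = (x[k] == x[k + d]);
        if (ok) return d;
    }
    return n;
}
```

For p = abaabaab, the base periods of the prefixes of lengths 1 to 8 are 1, 2, 2, 3, 3, 3, 3, 3; the prefixes of length at least 4 are all prefixes of (aba)(aba)…, which is why the value stabilizes at 3.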

Let $\mathrm{lcp}(i, j)$ be the length of the longest common prefix of $p[i:]$ and $p[j:]$, i.e., of two suffixes of p. We can compute this in $O(1)$ time if we have preprocessed the string p to build its suffix tree and some auxiliary tables (in $O(p)$ time). Each suffix of p corresponds to a node in its suffix tree; the length of the longest common prefix of two suffixes is the string depth of the lowest common ancestor of the corresponding nodes, and finding this ancestor is a classic problem that can be answered in constant time with linear preprocessing time [12,13]. To build a suffix tree in linear time, one can use, for example, Ukkonen's algorithm [14], or first construct a suffix array (e.g., using the DC3 algorithm of Kärkkäinen et al. [15,16]) and its corresponding longest-common-prefix array [17], and then use these two arrays to construct the suffix tree (this latter approach is what we used in our implementation). We can similarly compute the longest common suffix of two prefixes of p, which will be useful later.

Suppose that x and y are two substrings of p, e.g., $x = p[i:i+|x|]$ and $y = p[j:j+|y|]$, and that we would like to check whether x appears as a substring in y starting at position k; then (provided that $k + |x| \le |y|$) we only need to check whether $\mathrm{lcp}(i, j + k) \ge |x|$; if that is true, x really appears there ($y[k:k+|x|] = x$); otherwise, the value of the function lcp tells us after how many characters the first mismatch occurs. We can further generalize this: if x, u, t, and v are substrings of p and we are interested in whether x appears as a substring in $u\,t\,v$ starting at position k, we need to perform at most three calls to the lcp function: first, for the part where x overlaps with u; if no mismatch is observed there, we check the area where x overlaps with t; if everything matches there too, we check the area where x overlaps with v.
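The three-call check can be sketched in C++ as follows. For brevity, this illustration computes lcp by direct comparison, which takes time proportional to the answer; the constant-time version requires the suffix-tree and lowest-common-ancestor preprocessing described above. Substrings of p are represented as (start, length) pairs, and all names are our own.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

struct Sub { int start, len; };  // the substring p[start : start + len]

// Longest common prefix of p[i:] and p[j:], by direct comparison (illustrative only).
int lcpNaive(const std::string& p, int i, int j) {
    const int n = static_cast<int>(p.size());
    int l = 0;
    while (i + l < n && j + l < n && p[i + l] == p[j + l]) ++l;
    return l;
}

// Does x occur in the concatenation of parts (e.g. u, t, v) starting at position k?
// Uses one lcp call per part that x overlaps, stopping at the first mismatch.
bool occursAt(const std::string& p, Sub x, const std::vector<Sub>& parts, int k) {
    int total = 0;
    for (const Sub& part : parts) total += part.len;
    if (k < 0 || k + x.len > total) return false;
    int matched = 0;  // characters of x verified so far
    int offset = 0;   // start of the current part within the concatenation
    for (const Sub& part : parts) {
        const int pos = k + matched;  // next position of the concatenation to verify
        const int end = std::min(offset + part.len, k + x.len);
        if (pos >= offset && pos < end) {
            const int overlap = end - pos;
            if (lcpNaive(p, x.start + matched, part.start + (pos - offset)) < overlap)
                return false;         // mismatch inside this part
            matched += overlap;
        }
        offset += part.len;
    }
    return matched == x.len;
}
```

For p = abaab split as u = ab, t = a, v = ab, the substring baa = p[1:4] is found at position 1 of $u\,t\,v$ after three lcp calls, one per part.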

Let us begin with a rough outline of the GG algorithm (Algorithm 8). Recall that we would like to solve problems of the form "how many occurrences of p are there in the string $u\,t\,v$ that start within u and end within v?", where u is some prefix of p, and v is some suffix of p. (To be more precise: for each position k from the range $1 \le k \le |s| - 1$ we are interested in occurrences of p in the string $s[:k]\,t\,s[k:]$, where, as we have seen, we can limit ourselves to the string $u\,t\,v$ with $u = p[:i_k]$ and $v = p[j_k:]$.) Assume, of course, that $|t| \le |p| - 2$ and that t appears at least once as a substring in p (which can be checked with the table $C_{t,p}$), because otherwise the occurrences we are looking for cannot exist at all. Since the strings p and t are the same in all our queries, we will list only u and v as the arguments of the GG function. Regarding the loop 1–3, we note that it will have to be executed at most twice, since u starts as a prefix of p, i.e., it is shorter than $|p|$; it is at least halved in each iteration of the loop, therefore it will be shorter than $|p|/4$ after at most two halvings. In step 2, we rely on the fact that the occurrences of p we are looking for overlap significantly with u (by at least one half of the latter); we will look at the details in Section 2.6.1. In step 3, we want to discover, for some substring of p (namely the right half of the current u, which was a prefix of p), how long the longest suffix of this substring that is also a prefix of p is; we do not know how to do this in $O(1)$ time per query, but we can solve such problems as a batch in $O(s + p)$ time, which is good enough for our purposes, since we know that we will have to execute the GG algorithm for $O(s)$ pairs $(u, v)$. (For details of this step, see Section 2.6.3.) Steps 4–6 are just a mirror image of steps 1–3 and the same considerations apply to them; that loop also makes at most two iterations. (Furthermore, note that steps 3 and 6 ensure that no occurrence of p is counted more than once: for example, those counted in step 2 all start within the left half of u, and this half is immediately cut off in step 3, so these occurrences do not appear again, e.g., in step 5.) In step 7, we then know that u and v are shorter than $|p|/4$; if $u\,t\,v$ is at least as long as p, then t must be at least $|p|/2$ characters long, and we can make use of this when counting occurrences of p in $u\,t\,v$; we will look at the details in Section 2.6.2. As we will see, steps 2 and 7 each take $O(1)$ time; the GG algorithm can therefore be executed $O(s)$ times in $O(s)$ time, and $O(s + t + p)$ time is spent on preprocessing the data, so we have a solution with a linear time complexity.

| Algorithm 8 Adjustment of the GG algorithm |

algorithm GG(u, v): input: two strings, u (a prefix of p) and v (a suffix of p); output: the number of occurrences of p in that start within u and end within v; 1 while : 2 count occurrences of p in that start within the left half of u (at positions ) and end within v; 3 the longest suffix of the current u that is shorter than and is also a prefix of p; 4 while : 5 count occurrences of p in that start within u and end within the right half of v (at most characters before the end of v); 6 the longest prefix of the current v that is shorter than and is also a suffix of p; 7 count all occurrences of p in the current string ; |
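When implementing the GG algorithm, it may help to have a slow but obviously correct reference to test against. The following sketch (our own; the name `gg_bruteforce` is hypothetical) counts, directly from the definition, the occurrences of p in utv that start within u and end within v, in O(|u|·|p|) time.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Quadratic reference for GG(u, v): the number of occurrences of p in
// w = u + t + v that start within u and end within v. Intended only as a
// test oracle for a faster implementation.
std::size_t gg_bruteforce(const std::string& p, const std::string& t,
                          const std::string& u, const std::string& v) {
    const std::string w = u + t + v;
    std::size_t count = 0;
    for (std::size_t i = 0; i < u.size(); ++i) {            // starts within u
        const std::size_t end = i + p.size();               // one past the last character
        if (end <= u.size() + t.size() || end > w.size())   // must end within v
            continue;
        if (w.compare(i, p.size(), p) == 0) ++count;
    }
    return count;
}
```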

2.6.1. Counting Early and Late Occurrences

Let us now consider step 2 of Algorithm 8 in more detail. The main idea will be as follows: we will find the base period of the string u; calculate how far this period extends from the beginning of strings and p; and discuss various cases of alignment with respect to the lengths of these periodic parts.

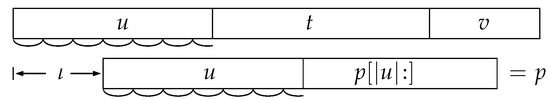

We are, then, interested in occurrences of p in that begin at some position . Since u is also a prefix of p, the occurrence of u at the beginning of p overlaps by at least one half with that at the beginning of (Figure 2). So u’s suffix of length is also its prefix; therefore, u has a period of length ; and since the length of this period is at most , it must be a multiple of the base period. Let us denote the base period by d (represented by the arcs under the strings in Figure 2); recall that it can be computed as . We need to consider only positions of the form for integer ; the lower bound for is obtained from the conditions and (so that the occurrence of p ends within v and not sooner), and the upper bound from the conditions (so that the occurrence of p begins within the first half of u) and (so that the occurrence of p does not extend beyond the end of ). Let us call these bounds and . If , we can immediately conclude that no appropriate occurrence of p exists.
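The base period d of u can be read off the KMP prefix function. The shortest period of a nonempty string equals its length minus the length of its longest proper border. The following is a minimal C++ sketch, assuming this standard identity is the one meant above; the function names are ours.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// KMP prefix function: pi[i] = length of the longest proper border of s[0..i].
std::vector<std::size_t> prefix_function(const std::string& s) {
    std::vector<std::size_t> pi(s.size(), 0);
    for (std::size_t i = 1; i < s.size(); ++i) {
        std::size_t k = pi[i - 1];
        while (k > 0 && s[i] != s[k]) k = pi[k - 1];
        if (s[i] == s[k]) ++k;
        pi[i] = k;
    }
    return pi;
}

// Base (shortest) period of a nonempty string: its length minus its longest proper border.
std::size_t base_period(const std::string& s) {
    assert(!s.empty());
    return s.size() - prefix_function(s).back();
}
```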

Figure 2.

The periodicity of u when p occurs in the first half.

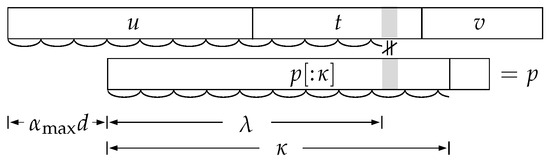

The string u is a prefix of p and has a base period d; perhaps there exists a longer prefix of p with this period; let be the longest such prefix. We obtain it by finding the longest common prefix of the strings p and , i.e., lcp(0,d). Let be the length of the longest common prefix of the strings and (in other words, we are interested in whether appears in starting at ; we already know that there is certainly no mismatch with u, so at most two calls of lcp are enough to check whether everything matches with t and v or find where the first mismatch occurs). Then we know that the string is periodic with the same period of length d as and u. An example is shown in Figure 3.
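Similarly, the length of the longest prefix of p with period d is d plus lcp(0,d). The sketch below computes that lcp naively; the function name is hypothetical. With constant-time lcp queries, this step becomes O(1).

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Length of the longest prefix of p that has period d (0 < d <= |p|),
// i.e. d + lcp(p, p[d..]), with the lcp computed by a naive scan.
std::size_t longest_periodic_prefix(const std::string& p, std::size_t d) {
    assert(d > 0 && d <= p.size());
    std::size_t n = 0;
    while (d + n < p.size() && p[n] == p[d + n]) ++n;
    return d + n;
}
```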

Figure 3.

The reach of the base period d at the beginnings of the sequences and p.

(1) If , we have found a suitable occurrence of p, and since has a period of d until the end of this occurrence, this means that if we were now to shift p in steps of d characters to the left and then compare it with the same part of , we would see exactly the same substring in after each such shift as before, i.e., we would also find occurrences of p in all these places. In this case, all from to are suitable, so we have found occurrences.

(2) If , we have found an occurrence of in starting at . This occurrence of may or may not continue into an occurrence of the entire p; this can be checked with at most two more calls of lcp. As for possible occurrences further to the left, i.e., starting at for some : in such an occurrence, should match with the character , which is still in the periodic part of (), i.e., it is equal to the character d places further to the left, which in turn must be equal to the character . So and should be the same, but they certainly are not, because then the periodic prefix of p (with period d) would be at least characters long, not just characters. Thus, we see that for there is definitely a mismatch and p does not occur there.

(3) If , we have a mismatch between and . We did not find an occurrence of p at , but what about for smaller values of ? If we now move p left in steps of d, the same character of the string will have to match , , and so on. As long as these indices are smaller than , all these characters are equal to the character , since this part of p is periodic with period d; therefore, a mismatch will occur for them as well. In general, if p starts at , the character will have to match ; a necessary condition to avoid the aforementioned mismatch is therefore or . Thus, we have obtained a new, tighter upper bound for , which we will call . For , the string lies entirely within the periodic part of the string , i.e., within .

(3.1) If , we know that there is no possible and we will not find an occurrence of p here.

(3.2) Otherwise, if , we see that for , the string p (if it starts at position in ) lies entirely within the periodic part of ; since means that p is itself entirely periodic with the same period of length d, we can conclude that, for each (from to ), p matches completely with the corresponding part of ; thus there are occurrences.

(3.3) However, if , we can reason very similarly as in case (2). At we have an occurrence of , which may or may not be extended to an occurrence of the whole p; we check this with at most two calls of lcp. At the string lies entirely within the periodic part of the string ; if we then decrease and thus shift p to the left by d or a multiple of d characters, then will certainly also fall within the periodic part of the string . In order for the entire p to occur at such a position, among other things, and would have to match with the corresponding characters of the string , but since these two characters are both in the periodic part of the string , they are equal, while the characters and are not equal (because is the longest prefix of p with period d); therefore, a mismatch will definitely occur for every and p does not appear there. Step 2 of the GG algorithm can therefore be summarized by Algorithm 9.

| Algorithm 9 Occurrences of p in that start within the left half of u and end within v. |

; ; if then return 0; length of the longest prefix of p with a period d; length of the longest common prefix of and ; if : if then return else if p is a prefix of then return 1 else return 0; else: ; if then return 0 else if then return else if p is a prefix of then return 1 else return 0; |
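Putting cases (1) to (3.3) together, Algorithm 9 might be sketched in C++ as follows. Several details are our own assumptions, because some of the bounds above are described only in words:

- "The left half of u" is taken to mean start positions i with 2i < |u|.
- d is computed with the KMP prefix function.
- The constant-time lcp queries are replaced by a naive scan, so this sketch is not linear-time.

All function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Shortest period of a nonempty string, via the KMP prefix function.
long long shortest_period(const std::string& s) {
    std::vector<std::size_t> pi(s.size(), 0);
    for (std::size_t i = 1; i < s.size(); ++i) {
        std::size_t k = pi[i - 1];
        while (k > 0 && s[i] != s[k]) k = pi[k - 1];
        if (s[i] == s[k]) ++k;
        pi[i] = k;
    }
    return static_cast<long long>(s.size() - pi.back());
}

// Naive lcp of a[ai..] and b[bi..], capped at maxlen (a stand-in for O(1) lcp).
long long lcp(const std::string& a, long long ai, const std::string& b, long long bi,
              long long maxlen) {
    const long long na = static_cast<long long>(a.size());
    const long long nb = static_cast<long long>(b.size());
    long long n = 0;
    while (n < maxlen && ai + n < na && bi + n < nb && a[ai + n] == b[bi + n]) ++n;
    return n;
}

// Sketch of Algorithm 9: occurrences of p in w = u + t + v that start at a
// position i with 2 * i < |u| and end within v. Requires u to be a nonempty prefix of p.
long long early_occurrences(const std::string& p, const std::string& t,
                            const std::string& u, const std::string& v) {
    assert(!u.empty() && u.size() <= p.size() && p.compare(0, u.size(), u) == 0);
    const std::string w = u + t + v;
    const long long P = p.size(), U = u.size(), T = t.size(), W = w.size();
    if (W < P) return 0;
    const long long d = shortest_period(u);  // every candidate start is a multiple of d
    // Lower bound: j >= 0 and j*d + |p| > |u| + |t| (the occurrence ends within v).
    const long long need = U + T - P + 1;
    const long long jmin = need <= 0 ? 0 : (need + d - 1) / d;
    // Upper bound: 2*j*d < |u| (starts in the left half) and j*d + |p| <= |w|.
    const long long jmax = std::min((U - 1) / (2 * d), (W - P) / d);
    if (jmin > jmax) return 0;
    auto occurs_at = [&](long long i) {
        return w.compare(static_cast<std::size_t>(i), p.size(), p) == 0;
    };
    const long long Pd = d + lcp(p, 0, p, d, P);     // length of the longest prefix of p with period d
    const long long m = lcp(w, jmax * d, p, 0, Pd);  // how far that prefix matches w at jmax*d
    if (m == Pd) {
        if (Pd == P) return jmax - jmin + 1;         // case (1): every j in range works
        return occurs_at(jmax * d) ? 1 : 0;          // case (2): only j = jmax can work
    }
    const long long j2 = jmax - (Pd - m + d - 1) / d;  // case (3): tighter upper bound
    if (j2 < jmin) return 0;                           // case (3.1)
    if (Pd == P) return j2 - jmin + 1;                 // case (3.2)
    return occurs_at(j2 * d) ? 1 : 0;                  // case (3.3)
}
```

Every case reduces to at most two lcp computations and one explicit comparison. Comparing this function against a brute-force count on all short binary strings is therefore a cheap way to catch off-by-one errors in the bounds on j.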

2.6.2. Counting the Remaining Occurrences

Now let us consider step 7 of Algorithm 8. At that point in the GG algorithm, we have already truncated u and v so that they are shorter than . If or or , we can immediately conclude that there are no relevant occurrences of p. We can assume that , as otherwise the string would certainly be shorter than .

We are interested in such occurrences of p in that start within u and end within v; such an occurrence also covers the entire t in the middle. Candidates for a suitable occurrence of p in are therefore present only where t appears in p (more precisely: the fact that t occurs in p starting at ℓ is a necessary condition for p to appear in starting at ). We compute in advance (using the table ) the index of the first and (if it exists) second occurrence of t as a substring in p; let us call them and . If there was just one occurrence of t in p, then there is also just one candidate for the occurrence of p in and with two more calls of lcp we can check whether p really occurs there (i.e., whether is a suffix of u and is a prefix of v).

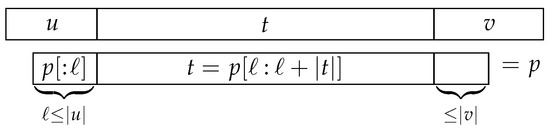

Now suppose that there are at least two occurrences of t in p. Let us consider some occurrence of p in (of course one that starts within u and ends within v); the string t in matches one of the occurrences of t in p; let ℓ be the position where this occurrence of t in p begins (Figure 4). There are at most characters to the left of this occurrence of t in p (otherwise p would already extend beyond the left edge of ), and there are at most characters to the right of it (otherwise p would extend beyond the right edge of ). Therefore, no other occurrence of t in p can begin more than characters to the left of ℓ or more than characters to the right of ℓ. If another occurrence of t in p begins at position , then .

Figure 4.

The alignment of string t with string p at an occurrence of p in .

Since no occurrence of t in p is very far from ℓ, it follows that the first two occurrences of t in p (i.e., those at and ) cannot be very far from each other. We can verify this as follows: (1) if the occurrence of t in p at ℓ was exactly the first occurrence of t in p (i.e., if ), it follows that is greater than by at most , so ; (2) if the occurrence of t in p at ℓ was not the first occurrence of t in p, but the second or some later one (that is, if ), it follows that and are both less than or equal to ℓ, but by at most , so again .

We see that if p occurs in (starting within u and ending within v), the first two occurrences of t in p are less than apart. So, we can start by computing and if , we can immediately conclude that we will not find, in , any occurrences of p that start within u and end within v.

Otherwise, we know henceforth that , so the first two occurrences of t in p overlap by more than half; the overlapping part is, therefore, both a prefix and a suffix of t, say for and ; so t is periodic with a period of length . Now suppose that t has some shorter period . If , the characters and both lie in the first occurrence of t in p, so they are equal since t has period ; if , then both of these characters lie in the second occurrence of t in p and are therefore equal. Since this is true for all , we have found another intermediate occurrence of t in p starting at , which contradicts the assumption that the second occurrence is the one at . Thus, d is the shortest period of t.
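The claim that the distance between the first two occurrences of t in p is the shortest period of t, whenever these occurrences overlap by more than half, is easy to check mechanically. In the sketch below, the occurrences are found with `std::string::find` rather than with the table used in the text. The helper names `first_two_occurrences` and `is_period` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Positions of the first two occurrences of t in p (std::string::npos if absent).
std::pair<std::size_t, std::size_t> first_two_occurrences(const std::string& p,
                                                          const std::string& t) {
    const std::size_t l1 = p.find(t);
    const std::size_t l2 = (l1 == std::string::npos) ? std::string::npos : p.find(t, l1 + 1);
    return {l1, l2};
}

// True if d is a period of t, i.e. t[i] == t[i + d] for every valid i.
bool is_period(const std::string& t, std::size_t d) {
    for (std::size_t i = 0; i + d < t.size(); ++i)
        if (t[i] != t[i + d]) return false;
    return true;
}
```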

Consider the first occurrence of t in p, the one starting at , and see how far left and right of it we could extend t’s period of length d; that is, we need to compute the longest common suffix of the strings and , and the longest common prefix of the strings and . Now we can imagine p partitioned into , where is the maximal substring that contains that occurrence of t and has a period d.
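One way to find the maximal substring of p with period d that contains the first occurrence of t is to extend that occurrence one character at a time in each direction. The following naive sketch is our own, and the name `periodic_extension` is hypothetical. Its running time is proportional to the length of the extension. The text obtains the same range with one longest-common-suffix query and one longest-common-prefix query.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>

// Given an occurrence p[l1 .. l1+tlen) of t, where t has period d (d <= tlen),
// return the half-open range [a, b) of the maximal substring of p that
// contains this occurrence and has period d.
std::pair<std::size_t, std::size_t> periodic_extension(const std::string& p, std::size_t l1,
                                                       std::size_t tlen, std::size_t d) {
    assert(d > 0 && d <= tlen && l1 + tlen <= p.size());
    std::size_t a = l1, b = l1 + tlen;
    while (a > 0 && p[a - 1] == p[a - 1 + d]) --a;  // extend the period to the left
    while (b < p.size() && p[b] == p[b - d]) ++b;   // extend the period to the right
    return {a, b};
}
```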

Similarly, in , let us see how far into u and v the middle part of t could be extended while maintaining periodicity with period d. This requires some caution: t starts with the beginning of the period, so when we extend the middle part from the right end of u leftwards, we must expect the end of the period at the right end of u. So we must compute the longest common suffix of u and something that ends with the end of t’s period, which is not necessarily t itself because its length is not necessarily a multiple of the period length. A similar consideration applies to extending the middle part from the left end of v rightwards. Let be the length of what remains of t if we restrict ourselves only to periods that occur in their entirety. Since , it follows that , so is longer than and . If we now find the longest common suffix of the strings u and and the longest common prefix of the strings v and , we will receive what we are looking for, and we do not have to worry about running out of t before u or v. From now on, let us imagine in the form , where is periodic with a period of length d. Figure 5 shows the resulting partition of the strings and p.

Figure 5.

Partition of the strings according to the range of the period of the string t. In t above, and are marked in gray, which are useful in determining and . In the string below, the first occurrence of t in p (starting at ), from which actually grew, is marked in darker gray.

We know that if p appears in , then the t in matches some occurrence of t in p, say the one at ℓ (see Figure 5); if this is not precisely the first occurrence (the one at ), then the first one can start at most characters further to the left (otherwise p would extend over the left edge of there); both occurrences of t in p (at and ℓ) overlap by more than half, so t is periodic with a period of length ; this is less than , so this period is a multiple of the base period, i.e., d. Thus, we see that only those occurrences of t in p that begin at positions of the form for integer are relevant.

Furthermore, since the occurrences of t in p at ℓ and at overlap by more than half, this overlapping part is longer than d, i.e., the base period of t. In p, the content of this overlapping part, since it lies within the first occurrence of t in p, continues with the period d to the left and right throughout , but since this overlapping part also lies within the occurrence of t at ℓ, which matches with t in , the content of this overlapping part also continues in (with the period d) to the left and right throughout . If we look at what happens in where we have the string in p, we see that if extended to the left of , we would have a contradiction, because we chose in such a way that a period of length d cannot extend further than the left edge of (if we start from t in ). For a similar reason, cannot extend to the right of ; it must therefore remain within .

We said that t starts at in p and matches with t in ; so p starts at in , and starts at in . From the condition that must not extend to the left of , we get , and since it must not extend to the right of , we get . In addition, we still expect that p starts within u, so , and that it ends within v, so . From these inequalities, we can now determine the smallest and largest acceptable value of ( and ).

If , we can immediately conclude that there are no relevant occurrences of p. Otherwise, if and are empty strings, then and for every (from to ) lies within , so there are no mismatches; then we have occurrences.

If is not empty, we can check the case separately (i.e., we check whether p appears in starting at ; we need at most two calls of lcp). What about larger ? If we go from to some larger , the string p moves by d or a multiple of d characters to the left relative to ; this moves , (i.e., the first character of the string ) so far to the left that its corresponding character of (with which the first character of the string will have to match if p is to occur at the current position in ) belongs to the periodic part (i.e., ); in addition, recall that (if the new is still valid at all, i.e., ) the string also overlaps with by more than one whole period, so the character is still in such a position that its corresponding character in is in the periodic part of ; so here we have two characters in that are exactly d places apart, hence they are equal. The first character of cannot be equal to the character d places to the left of it, because otherwise the periodic part of p, i.e., , could be expanded even further to the right and this character would not belong to but instead to . We see that it is impossible that, in the new position of p, the character and the one d places to the left of it would match with the corresponding characters of ; there will be a mismatch in at least one place, so p cannot occur there. Thus, we do not need to consider the case at all.

The remaining possibility is that is empty, but is not empty. An analogous analysis to the previous paragraph tells us that it is sufficient to check whether p occurs in at , and we do not need to consider smaller values of . Thus, step 7 of the GG algorithm can be described with the pseudocode of Algorithm 10.

| Algorithm 10 Occurrences of p in the shortened string . |

positions of the first two occurrences of t in p; if there is only one occurrence then return 1 or 0, depending on whether p occurs in at position or not; ; if then return 0; partition p into , where is the maximal substring that contains the first occurrence of t in p and has period d; partition into , where is the maximal substring containing t between u and v and has period d; compute and from the aforementioned inequalities; if then return 0 else if is nonempty then return 1 or 0, depending on whether p appears in at position or not else if is nonempty then return 1 or 0, depending on whether p appears in at position or not else return ; |

All of these things can be prepared in advance (, using the table ) or computed in time (with a small constant number of computations of the longest common prefix or suffix).

2.6.3. Finding the Next Candidate Prefix (or Suffix)

In this subsection we describe step 3 of Algorithm 8 in more detail. The purpose of that step is to answer, in time, up to queries (one for each position k where the string t may be inserted into s) of the form “given a prefix u of p, find the longest suffix of u that is shorter than and is also a prefix of p”. We may describe such a query with a pair , where is the length of the original prefix and is the maximum length of the new shorter prefix that we are looking for. Ganardi and Gawrychowski showed that this problem can be reduced to a weighted-ancestor problem in the suffix tree of ([10], Lemma 2.2), for which they then provided a linear-time solution ([10], Section 4). However, this solution is quite complex and in the present subsection we will present a simpler alternative which avoids the need for weighted-ancestor queries.

The longest suffix of that is also a prefix of p has the length , the second longest has the length , and so on. We could examine this sequence until we reach an element that is ; that would be the answer to the query . We can also imagine this procedure as climbing the tree (first introduced in Section 2.3), starting from the node and proceeding upwards towards the root until we reach a node with the value . However, we can save time by observing that several of these paths up the tree, for different queries, sometimes meet in the same node and thenceforth always move together.
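The naive procedure just described can be sketched as follows, before any merging of paths. We assume a failure table in which `fail[l]` is the length of the longest proper suffix of p[0..l) that is also a prefix of p, so that `fail[l]` is the parent of node l. The names are ours. Each query may take time proportional to the depth of the tree, which is the cost that Algorithm 11 avoids.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// fail[l] = length of the longest proper suffix of p[0..l) that is also a
// prefix of p (fail[0] = 0). In the tree view, fail[l] is the parent of node l.
std::vector<std::size_t> failure_table(const std::string& p) {
    std::vector<std::size_t> fail(p.size() + 1, 0);
    for (std::size_t l = 2; l <= p.size(); ++l) {
        std::size_t k = fail[l - 1];
        while (k > 0 && p[l - 1] != p[k]) k = fail[k];
        if (p[l - 1] == p[k]) ++k;
        fail[l] = k;
    }
    return fail;
}

// Query (l, b): the longest suffix of p[0..l) of length at most b that is also
// a prefix of p, found by climbing from node l towards the root 0.
std::size_t climb_query(const std::vector<std::size_t>& fail, std::size_t l, std::size_t b) {
    while (l > b) l = fail[l];
    return l;
}
```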

If several paths reach some node v and do not end there (because the bounds of those queries are ), all these paths will continue into v’s parent w and will never separate again, regardless of where in v’s subtree they started. Thus, in a certain sense, we no longer need to distinguish between w, v, and v’s descendants, as the result of any query with is the same regardless of which of these nodes its path begins in. We can imagine these nodes as having merged into one, namely into w.

To follow the paths up the tree, we will visit the nodes of the tree in decreasing order, from to 0. Upon reaching node v, we can answer all queries whose bound is exactly , and then we can merge v with its parent, thereby taking into account the fact that all paths that reach v and do not end there will proceed to v’s parent. For every node w that has not yet been merged with its parent, we maintain a set of all nodes that have already been merged into w; at the start of the procedure, each node forms a singleton set by itself. The pseudocode of this approach is shown in Algorithm 11.

| Algorithm 11 Answering a batch of queries in the KMP-tree . |

1 ; 2 for downto 0: 3 for each query having : 4 the answer to this query is the smallest element of that member of F which contains ; 5 merge, in F, the set containing v and the set containing ; |

The following invariant holds at the start of each iteration of the main loop: F contains one set for each node , and this set contains w as well as those children of w (in the tree ) that are greater than v, and all the descendants of those children. In each node has a smaller value than its children, therefore w is the smallest member of . If some query has and , this means that is a descendant of w and that all the nodes on the path from to w, except w itself, are greater than v; therefore the path for this query will rise from to w and then stop; thus step 4 of our algorithm is correct in reporting w as the answer to this query. Step 5 ensures that the loop invariant is maintained into the next iteration. Step 3 requires us to sort the queries by , which can be achieved in linear time using counting sort.

The time complexity of this algorithm depends on how we implement the union-find data structure F. The traditional implementation using a disjoint-set forest results in a time complexity of for Algorithm 11 [18], and hence for the solution of our problem as a whole. Here is the inverse Ackermann function, which grows very slowly, making this solution almost linear. However, to obtain a truly linear solution, we can use the slightly more complex static tree set union data structure due to Gabow and Tarjan [19,20], which assumes that the elements are arranged in a tree and that the union operation is always performed between a set containing some node of the tree and the set containing its parent; hence this structure is perfectly suited to our needs. (A minor note to anyone intending to reimplement their approach: ([20], p. 212) uses the macrofind operation in step 7 of find, whereas ([19], p. 248) uses microfind; the latter is correct while the former can lead to an infinite loop. Moreover, it may be useful to point out that must return, as the “name” of the macroset containing x, not an arbitrary member of that set, but the member that is closest to the root of the tree.) Algorithm 11 then runs in time and our solution as a whole in time.
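For illustration, here is a sketch of Algorithm 11 that uses the simpler disjoint-set forest (the almost-linear variant) rather than the static tree set union structure of Gabow and Tarjan. The names are hypothetical.

- Queries are bucketed by their bound b, which plays the role of the counting sort mentioned above.
- Each set root records its smallest member. By the loop invariant, that member is the answer to any query whose path currently ends in that set.
- We use path halving and union by size, a standard choice but not necessarily the one used in the authors' repository.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <string>
#include <utility>
#include <vector>

// Disjoint-set forest with path halving and union by size; each root also
// remembers the smallest node in its set (the member closest to the tree root).
struct DisjointSets {
    std::vector<std::size_t> parent, sz, smallest;
    explicit DisjointSets(std::size_t n) : parent(n), sz(n, 1), smallest(n) {
        std::iota(parent.begin(), parent.end(), 0);
        std::iota(smallest.begin(), smallest.end(), 0);
    }
    std::size_t find(std::size_t x) {
        while (parent[x] != x) { parent[x] = parent[parent[x]]; x = parent[x]; }
        return x;
    }
    void unite(std::size_t a, std::size_t b) {
        a = find(a); b = find(b);
        if (a == b) return;
        if (sz[a] < sz[b]) std::swap(a, b);
        parent[b] = a;
        sz[a] += sz[b];
        smallest[a] = std::min(smallest[a], smallest[b]);
    }
};

// Offline sketch of Algorithm 11: answers all queries (l, b) against the tree
// whose parent function is fail (node 0 is the root, fail[v] < v for v > 0).
std::vector<std::size_t> answer_queries(
        const std::vector<std::size_t>& fail,
        const std::vector<std::pair<std::size_t, std::size_t>>& queries) {
    const std::size_t n = fail.size() - 1;                  // nodes are 0..n
    std::vector<std::vector<std::size_t>> by_bound(n + 1);  // bucket queries by b
    for (std::size_t q = 0; q < queries.size(); ++q) {
        assert(queries[q].first <= n && queries[q].second <= n);
        by_bound[queries[q].second].push_back(q);
    }
    std::vector<std::size_t> answer(queries.size());
    DisjointSets sets(n + 1);
    for (std::size_t v = n + 1; v-- > 0;) {                 // v = n, n-1, ..., 0
        for (std::size_t q : by_bound[v])
            answer[q] = sets.smallest[sets.find(queries[q].first)];
        if (v > 0) sets.unite(v, fail[v]);  // paths that pass v continue to its parent
    }
    return answer;
}
```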

3. Experimental Results and Discussion

We implemented the described solution in C++ as a working example of a solution with a linear time complexity. It is publicly available in an online repository (https://github.com/janezb/insertions, accessed on 16 June 2025). It is a self-contained solution with no external dependencies. Note that the implementation was not optimized for speed, so there should be plenty of room for improvement if the actual running time is of importance. All the experiments presented in this section were carried out on a desktop computer with a 3.2 GHz i9-12900K CPU and 64 GB of main memory; the source code was compiled with clang 7.0.0 with all optimizations enabled (-O3).

In this section, we demonstrate the linearity of the algorithm's time complexity and describe its behavior on different inputs, which offers further insight into its design. Random data or actual text is unlikely to present an obstacle to any substring counting approach, as mismatches occur quickly. This is shown in Table 1, which gives running times in seconds for a reasonably large choice of string lengths , , and . The strings are either randomly generated, substrings (constructed in two ways, described in the next two paragraphs) of a longer English text, fragments of DNA sequences or of C++ source code, or substrings of a periodic string with a base period of length d (for various values of d). We can see that periodic strings present the largest obstacle, as they force the algorithm to execute all steps. (Defining the strings in this way means that there will be approx. positions k where t may be inserted into s without “disrupting” its period, and for every such k the string t in may align with any of the occurrences of t in p. Recall that Algorithm 8 starts with u being the longest prefix of p that is a suffix of ; because of the periodicity of p and s, this string u will be at most d characters shorter than p (except if k is close to the beginning of s, but this exception is a small one provided that s is long relative to p), and d (being the length of the period) is small relative to . Hence u is longer than , and steps 1–3 of Algorithm 8 get executed. In step 3, after u is cut down by half, it needs to be reduced by no more than d characters in order to become a prefix of p again; hence the loop in steps 1–3 needs to be executed twice (which is the maximum possible number of iterations). The same applies analogously to steps 4–6. Moreover, in step 7, since occurrences of t in p are so frequent (occurring every d characters), none of the checks in the first few lines of Algorithm 10 succeeds in terminating it early, and so it has to be executed in full.)

Table 1.

Running times on various types of strings, with , , and .

For “English text 1”, we extracted the letters (converted to lowercase) from an English-language document, resulting in a long string w; we then used the first characters of w to form the string s, the next characters to form t, and the next characters to form p. Since the strings are long, t is not a substring of p, and the running time is very short, similar to the case of purely random binary strings.

For “English text 2”, we selected p as a random substring (of length ) of , then selected t as a random substring (of length ) of p; those parts of which did not end up in t were then used to form s. This ensures that t is a substring of p and p does occur (once) in one string of the form , so that the GG algorithm does need to be executed; but that is the only value of k for which u and v are of nontrivial length; even there, however, they cannot both be longer than since p itself is only twice as long as t. Accordingly, only one of u and v is ever longer than , so that only one of the loops 1–3 and 4–6 in Algorithm 8 needs to be executed and some running time is saved by not having to initialize the union-find data structure for the loop that does not need to be executed.

For the “DNA sequences” and “C++ source code” rows in the table, we generated the strings s, t, and p in the same way as for “English text 2”, except that the initial string w was obtained differently. For the DNA sequences, we used as w a DNA sequence of the yeast Saccharomyces cerevisiae, obtained from the Saccharomyces Genome Database project (SGD, https://www.yeastgenome.org/, accessed on 18 June 2025), which hosts the data files of the S. cerevisiae strain S288C; more specifically, we used the sequence of chromosome 4 of the release R64-4-1 (http://sgd-archive.yeastgenome.org/sequence/S288C_reference/NCBI_genome_source/chr04.fsa, accessed on 18 June 2025). For the C++ source code, we obtained w by concatenating the files in the include directory of the C++ standard library as distributed with Microsoft Visual Studio. As expected, both of these kinds of strings behave similarly to the English text for the purposes of this experiment.

All reported time measurements in Table 1 are averages and standard deviations over 100 runs (the later experiments in the rest of this section will show averages over 10 runs). For the “same strings” column, the same triple of strings was used in all the runs, while for the “different strings” column a different triple was generated for each run, according to the rules of the corresponding row (for the “English text 1”, this means that the initial w from which s, t, and p are generated was obtained as a random substring of length from a longer sequence of English-language text). Comparing the results in the two columns shows that the measurements are fairly robust both with regard to repeating an experiment on the same test case as well as across different test cases (different triples of strings s, t, p) of the same type.

We will restrict the subsequent analysis to periodic strings with the length of period and further limit the lengths of the input to . The pattern p should be longer than the inserted string t so that its occurrences can also span the entire inserted string (otherwise Algorithm 8 never gets executed). The pattern p also should not exceed the total length of the string after insertion, i.e., .
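The generator for the periodic inputs is described above only as taking substrings of a periodic string with base period d. The following is one possible sketch, and every detail in it is our own assumption. It draws a random block of length d and rejects blocks that are themselves periodic: a string b is primitive iff its first occurrence in bb after position 0 is at position |b|. It then cuts strings from the infinite repetition of the block at random offsets. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <string>

// Hypothetical: a random primitive block of length d over the given alphabet,
// so that its repetition has base period exactly d.
std::string random_block(std::size_t d, const std::string& alphabet, std::mt19937& rng) {
    assert(d > 0 && !alphabet.empty() && (alphabet.size() >= 2 || d == 1));
    std::uniform_int_distribution<std::size_t> pick(0, alphabet.size() - 1);
    std::string block;
    do {
        block.clear();
        for (std::size_t i = 0; i < d; ++i) block += alphabet[pick(rng)];
    } while ((block + block).find(block, 1) != d);  // reject non-primitive blocks
    return block;
}

// Hypothetical: a substring of length len of block repeated infinitely,
// starting at a random offset within the block.
std::string periodic_substring(const std::string& block, std::size_t len, std::mt19937& rng) {
    std::uniform_int_distribution<std::size_t> offset(0, block.size() - 1);
    const std::size_t start = offset(rng);
    std::string out;
    out.reserve(len);
    for (std::size_t i = 0; i < len; ++i) out += block[(start + i) % block.size()];
    return out;
}
```

Strings s, t, and p for one test case would then be three calls to `periodic_substring` with the same block.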

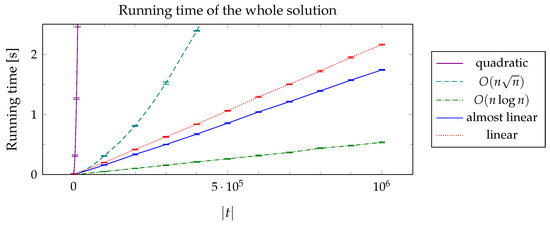

Figure 6 shows a comparison of the running times of the different solutions presented in this paper: the quadratic-time solution based on dynamic programming (Section 2.3); its improvement based on distinguished nodes (Section 2.4); the “geometric” solution based on a plane sweep (Section 2.5); and lastly two variants of the solution based on Algorithm 8: the almost-linear solution where a disjoint-set forest is used in the implementation of Algorithm 11, and the truly linear solution where the static tree set union data structure is used instead.

Figure 6.

Comparison of the running times of different solutions, for and .

As expected, the quadratic and the solutions are the slowest, and their running time grows in accordance with their asymptotic time complexity. For the other three solutions, however, it turns out that their running time in practice is just the opposite of what one might expect given their asymptotic complexity: the solution is a clear winner in practice, being about three times faster than the almost-linear solution, which in turn is approx. faster than the truly linear solution; moreover, the solution is much easier to implement. This illustrates the importance, in practice, of the constant factors hidden inside the notation. Extrapolating the curves from Figure 6 suggests that it would take strings of a quite intractable length, approximately characters, before the logarithmic factor in the running time of the plane-sweep solution would outweigh the advantage it currently enjoys due to its lower constant factor.

The plane-sweep solution also has the advantage of requiring substantially less memory than the linear solution; in our implementation, the plane-sweep solution required ≈170 bytes of memory per character of p, as compared to ≈390 bytes of the linear solution; and ≈45 bytes of memory per character of s, as compared to ≈176 of the linear solution (though admittedly this latter figure could be reduced to about 80 at the cost of a mild additional inconvenience in the implementation). On the whole, the linear solution consumed about 2.5 to 3 times as much memory as the plane-sweep solution; for example, solving a test case with , , and required ≈1.83 GB of memory with the plane-sweep solution vs. ≈4.95 GB with the linear solution. Thus, dealing with the strings whose lengths are in the tens of millions is just barely feasible on a typical desktop computer, while lengths in the hundreds of millions would not be feasible.

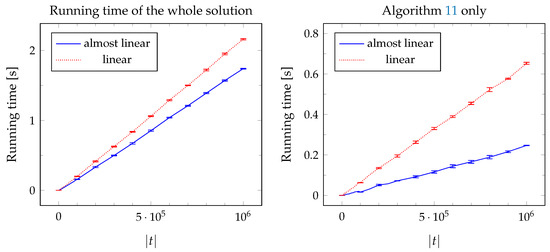

In Figure 7, we compare the performance of the almost-linear solution (using a disjoint-set forest in Algorithm 11) with the truly linear solution (which uses the static tree set union data structure instead). We show the results for different sizes of the inserted string t, with the other two strings being proportionally longer: , . Both solutions exhibit linear behavior (as expected, considering that the non-linear term in the complexity of the almost-linear solution is the very slowly growing inverse Ackermann function), but the almost-linear solution turns out to be faster in practice: with the almost-linear instead of the linear implementation of union-find, Algorithm 11 runs approximately 2.8 times faster and the solution as a whole (of which Algorithm 11 is only a small part) runs approx. 25 % faster. Note that we did not optimize the implementation for speed, as we are interested in its asymptotic properties; therefore, this comparison might not reflect the true potential of each method (for example, Gabow and Tarjan ([19], p. 249) reported that in some of their experiments, the running time of their static tree set union data structure was only 0.6–0.7 times as long as that of disjoint-set forests). In the remainder of this section, we will report the results of the linear solution.

Figure 7.

Comparison of the almost-linear union-find data structure with the truly linear one, for and . The left chart shows the running times of the solution as a whole, the right chart shows only the time spent on Algorithm 11 (including the initialization of the union-find data structure).
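For reference, the almost-linear variant relies on the classical disjoint-set forest. The following C++ sketch shows a textbook version with union by rank and path compression, the combination whose inverse-Ackermann bound was established by Tarjan [18]. It is an illustration only, not code from the published implementation, and the class name DisjointSetForest and its methods are hypothetical. The static tree set union structure of Gabow and Tarjan [19,20], which exploits the fact that the shape of the union tree is known in advance, is considerably more involved and is not sketched here.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <utility>
#include <vector>

// Disjoint-set forest with union by rank and path compression.
// A sequence of m operations on n elements runs in O(m * alpha(n)) time,
// where alpha is the inverse Ackermann function.
class DisjointSetForest {
public:
    explicit DisjointSetForest(std::size_t n) : parent_(n), rank_(n, 0) {
        // Initially every element is the root of its own singleton tree.
        std::iota(parent_.begin(), parent_.end(), std::size_t{0});
    }

    // Returns the root of x's tree and compresses the path from x to it.
    std::size_t find(std::size_t x) {
        assert(x < parent_.size());
        std::size_t root = x;
        while (parent_[root] != root) root = parent_[root];
        while (parent_[x] != root) {  // second pass: relink every visited node to the root
            std::size_t next = parent_[x];
            parent_[x] = root;
            x = next;
        }
        return root;
    }

    // Merges the sets containing a and b; returns false if they were already one set.
    bool unite(std::size_t a, std::size_t b) {
        a = find(a);
        b = find(b);
        if (a == b) return false;
        if (rank_[a] < rank_[b]) std::swap(a, b);  // hang the lower-rank tree below the other
        parent_[b] = a;
        if (rank_[a] == rank_[b]) ++rank_[a];
        return true;
    }

private:
    std::vector<std::size_t> parent_;
    std::vector<int> rank_;
};
```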

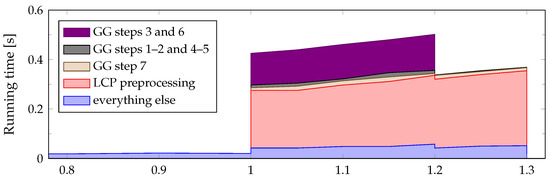

We have also analyzed the contribution of each part of the algorithm to its total running time. Here we provide a breakdown for the case of periodic strings () of lengths , , and , thus in the middle of the “interesting” range of (i.e., ). The solution as a whole spent 480 ms, as follows:

- It spent 263 ms (54.7 %) on preprocessing to support constant-time LCP queries (this includes building suffix trees of p and ).

- It spent 17 ms (3.6 %) on counting early and late occurrences of p (steps 2 and 5 of Algorithm 8; described in more detail as Algorithm 9 in Section 2.6.1).

- It spent 133 ms (27.7 %) on processing the ancestor queries (steps 3 and 6 of Algorithm 8; described in more detail as Algorithm 11 in Section 2.6.3). This includes the initialization of the static tree set union data structure.

- It spent 19 ms (3.9 %) on counting the remaining occurrences of p (step 7 of Algorithm 8; described in more detail as Algorithm 10 in Section 2.6.2).

- It spent 48 ms (10.1 %) on everything else: computing the various prefix and suffix tables from the KMP algorithm, counting those occurrences of p in that do not span the entire t (i.e., the first four types of occurrences from Section 2.2), and cleanup at the end of the algorithm.
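The prefix tables mentioned in the last item derive from the failure function of Knuth, Morris, and Pratt [6]. An occurrence of p that crosses the insertion point k must begin with a suffix of s[0..k) that is a proper prefix of p, which is why tables of this kind are useful. As a minimal sketch (the exact tables in the published implementation may differ), the C++ code below computes the failure function of p and then scans s with it to obtain, for every split position k, the length of the longest suffix of s[0..k) that is a proper prefix of p. The function names prefixFunction and longestPrefixEndingAt are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// KMP failure function: fail[i] is the length of the longest proper prefix
// of p[0..i] (inclusive) that is also a suffix of p[0..i].
std::vector<std::size_t> prefixFunction(const std::string& p) {
    std::vector<std::size_t> fail(p.size(), 0);
    for (std::size_t i = 1; i < p.size(); ++i) {
        std::size_t len = fail[i - 1];
        while (len > 0 && p[i] != p[len]) len = fail[len - 1];  // fall back to shorter borders
        if (p[i] == p[len]) ++len;
        fail[i] = len;
    }
    return fail;
}

// match[k] is the length of the longest suffix of s[0..k) that is a proper
// prefix of p, for k = 0..|s|. After a full match of p, the state falls back
// to the longest border of p, so every value is < |p|.
std::vector<std::size_t> longestPrefixEndingAt(const std::string& p, const std::string& s) {
    assert(!p.empty());
    const std::vector<std::size_t> fail = prefixFunction(p);
    std::vector<std::size_t> match(s.size() + 1, 0);
    std::size_t len = 0;
    for (std::size_t k = 0; k < s.size(); ++k) {
        while (len > 0 && s[k] != p[len]) len = fail[len - 1];
        if (s[k] == p[len]) ++len;
        if (len == p.size()) len = fail[len - 1];  // full occurrence: keep only its longest border
        match[k + 1] = len;
    }
    return match;
}
```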

The relative amount of time spent on different parts of the solution remains fairly stable as the length of the pattern p varies (while the strings s and t are kept fixed); this is illustrated by the stacked area chart in Figure 8. Major changes occur only when certain parts of the solution can be skipped altogether. For , no part of Algorithm 8 needs to be executed, and only the “everything else” category remains. For , steps 1–6 of Algorithm 8 do not need to be executed, which also means that the union-find data structure does not have to be initialized; however, p must still be preprocessed to support constant-time LCP queries, since these are needed in step 7.

Figure 8.

Running time of individual parts of the algorithm, for , , and various lengths .
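Step 7 relies on constant-time LCP queries, which the paper supports by preprocessing that includes building suffix trees. As a simpler stand-in with the same query interface, the C++ sketch below combines a suffix array, the LCP array of Kasai et al. [17], and a sparse table for range-minimum queries. This is a swapped-in technique, not the authors' method: the suffix array is obtained here by naively sorting suffixes, and the sparse table needs O(n log n) space, so the preprocessing is not linear, although each query still takes O(1) time. The class name LcpOracle is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Answers lcp(i, j), the length of the longest common prefix of the suffixes
// x[i..] and x[j..], in O(1) time per query.
class LcpOracle {
public:
    explicit LcpOracle(const std::string& x) : n_(x.size()), rank_(x.size()), log_(x.size() + 1, 0) {
        // Suffix array by sorting suffixes directly (simple, but not linear time).
        std::vector<std::size_t> sa(n_);
        for (std::size_t i = 0; i < n_; ++i) sa[i] = i;
        std::sort(sa.begin(), sa.end(), [&x](std::size_t a, std::size_t b) {
            return x.compare(a, std::string::npos, x, b, std::string::npos) < 0;
        });
        for (std::size_t r = 0; r < n_; ++r) rank_[sa[r]] = r;

        // Kasai et al.: lcp[r] is the LCP of the suffixes at ranks r - 1 and r (lcp[0] unused).
        std::vector<std::size_t> lcp(n_, 0);
        std::size_t h = 0;
        for (std::size_t i = 0; i < n_; ++i) {
            if (rank_[i] == 0) { h = 0; continue; }
            const std::size_t j = sa[rank_[i] - 1];
            while (i + h < n_ && j + h < n_ && x[i + h] == x[j + h]) ++h;
            lcp[rank_[i]] = h;
            if (h > 0) --h;  // suffix i + 1 shares at least h - 1 characters with its predecessor
        }

        // Sparse table: table_[k][r] = min(lcp[r], ..., lcp[r + 2^k - 1]).
        for (std::size_t len = 2; len <= n_; ++len) log_[len] = log_[len / 2] + 1;
        table_.push_back(lcp);
        for (std::size_t k = 1; (std::size_t{1} << k) <= n_; ++k) {
            const std::size_t half = std::size_t{1} << (k - 1);
            std::vector<std::size_t> row(n_ - 2 * half + 1);
            for (std::size_t r = 0; r < row.size(); ++r)
                row[r] = std::min(table_[k - 1][r], table_[k - 1][r + half]);
            table_.push_back(std::move(row));
        }
    }

    std::size_t lcp(std::size_t i, std::size_t j) const {
        assert(i < n_ && j < n_);
        if (i == j) return n_ - i;  // a suffix shares its entire length with itself
        const std::size_t lo = std::min(rank_[i], rank_[j]) + 1;
        const std::size_t hi = std::max(rank_[i], rank_[j]);
        const std::size_t k = log_[hi - lo + 1];  // two windows of length 2^k cover [lo, hi]
        return std::min(table_[k][lo], table_[k][hi + 1 - (std::size_t{1} << k)]);
    }

private:
    std::size_t n_;
    std::vector<std::size_t> rank_;
    std::vector<std::size_t> log_;
    std::vector<std::vector<std::size_t>> table_;
};
```

Queries between a suffix of one string and a suffix of another can be reduced to this case by building the structure on the two strings joined by a separator character that occurs in neither of them.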

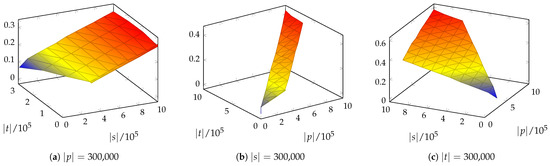

The size of the input to our algorithm is determined by three variables: the lengths of the strings p, s, and t. To show the behavior of the algorithm, we fix one of these variables at a reasonable value and plot the running time as a 3D surface over the other two. In Figure 9, these surfaces are approximately planar, indicating that the running time is linear in the lengths of the input strings.

Figure 9.

Plots of running times (shown in seconds on the z-axis) when the length of one of the strings s, t, p is fixed at while the length of the other two strings varies. Results are shown only for those combinations of , , where and where all three lengths are ≤.

4. Conclusions

We investigated the problem of computing the number of occurrences of a substring p in all strings obtained by inserting a string t into a string s at every possible position. We have presented four solutions that have different time complexities and are based on different techniques. Somewhat surprisingly, the problem can be solved by an algorithm with linear time complexity, based on an adaptation of a recent result from the field of string searching in compressed texts. We have explained this algorithm in detail, and a C++ implementation of it is publicly available.
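To pin down exactly what is being computed, the following C++ sketch is a deliberately naive reference solution, not one of the four solutions presented in this paper. For every insertion position k it builds s[0..k) + t + s[k..) explicitly and counts the occurrences of p with std::string::find, assuming, as usual, that overlapping occurrences are counted separately. It is far from linear and is only meant for cross-checking a fast implementation on small inputs; the function name countAllInsertions is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Naive reference: result[k] = number of (possibly overlapping) occurrences of p
// in the string obtained by inserting t into s at position k, for k = 0..|s|.
std::vector<std::size_t> countAllInsertions(const std::string& s,
                                            const std::string& t,
                                            const std::string& p) {
    assert(!p.empty());
    std::vector<std::size_t> result;
    result.reserve(s.size() + 1);
    for (std::size_t k = 0; k <= s.size(); ++k) {
        const std::string u = s.substr(0, k) + t + s.substr(k);
        std::size_t count = 0;
        // Restart the search one character after each match so overlaps are counted.
        for (std::size_t pos = u.find(p); pos != std::string::npos; pos = u.find(p, pos + 1))
            ++count;
        result.push_back(count);
    }
    return result;
}
```

For instance, with s = "ab", t = "a", and p = "aa", the three possible insertions yield "aab", "aab", and "aba", so the sketch returns {1, 1, 0}.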

We encountered some interesting problems that we were unable to solve. In the geometric solution using a plane sweep, the rectangles are neatly nested in each individual dimension: the projections of the rectangles onto either coordinate axis form a set of segments in which, for every two segments, either one is completely contained in the other or the two do not intersect. This is an obvious consequence of the fact that each rectangle corresponds to a node or subtree. We have not been able to exploit this property in our solution.
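The nesting property described above means that, in each dimension, the projected segments form what is usually called a laminar family. The C++ sketch below merely checks this property for a given set of segments; it does not attempt to exploit it, which remains open. It assumes closed integer intervals, and the Interval type and the isLaminar function are hypothetical. After sorting by left endpoint (longer segments first on ties), the segments that are still open form a chain of nested segments, so each new segment only needs to be compared with the innermost one that has not yet ended.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical type: a closed integer interval [lo, hi].
struct Interval {
    long lo, hi;
};

// Returns true if every two intervals are either nested or disjoint.
bool isLaminar(std::vector<Interval> intervals) {
    // Left endpoints ascending; on ties the longer interval comes first, so it can contain the others.
    std::sort(intervals.begin(), intervals.end(), [](const Interval& a, const Interval& b) {
        return a.lo != b.lo ? a.lo < b.lo : a.hi > b.hi;
    });
    std::vector<Interval> open;  // chain of nested intervals, innermost on top
    for (const Interval& cur : intervals) {
        assert(cur.lo <= cur.hi);
        while (!open.empty() && open.back().hi < cur.lo) open.pop_back();  // ended before cur starts
        if (!open.empty() && open.back().hi < cur.hi) return false;  // overlaps without nesting
        open.push_back(cur);
    }
    return true;
}
```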

The second open problem is whether the GG algorithm can be simplified for our setting. The result from which our solution is derived was developed for string searching in various concatenations of three substrings of the same string. In our problem, however, we insert one string into every possible position of another. As we have shown, the problem can be reduced to searching for p in concatenations of a prefix of p, the entire string t, and a suffix of p, and further simplifications may be possible for this specific case.
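To make the reduced subproblem concrete, the following naive C++ sketch builds the concatenation of the prefix p[0..a), the entire string t, and the suffix p[|p|−b..) of p, and counts the occurrences of p in it that start within the prefix and end within the suffix, i.e., that contain all of t. Here a and b are hypothetical parameters denoting the lengths of the chosen prefix and suffix, and treating an occurrence as spanning t only if it extends strictly into both neighbors of t is an assumption about the boundary cases. The sketch makes no attempt at efficiency; it only states what is being counted, and the function name countSpanningOccurrences is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Counts occurrences of p in u = p[0..a) + t + p[|p|-b..) that begin inside the
// prefix part and end inside the suffix part, i.e. that contain all of t.
std::size_t countSpanningOccurrences(const std::string& p, const std::string& t,
                                     std::size_t a, std::size_t b) {
    assert(a <= p.size() && b <= p.size());
    const std::string u = p.substr(0, a) + t + p.substr(p.size() - b);
    std::size_t count = 0;
    // An occurrence starting at i covers u[i .. i+|p|); it spans t iff i < a and i+|p| > a+|t|.
    for (std::size_t i = 0; i < a && i + p.size() <= u.size(); ++i)
        if (i + p.size() > a + t.size() && u.compare(i, p.size(), p) == 0) ++count;
    return count;
}
```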

Author Contributions

Conceptualization, methodology, investigation, formal analysis, validation, resources, writing—review and editing, J.B. and T.H.; software, writing—original draft preparation, visualization, J.B.; supervision, project administration, T.H. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the research programmes P2-0103 and P2-0209 of the Slovenian Research and Innovation Agency.

Data Availability Statement

The software implementation of the presented algorithm is openly available at https://github.com/janezb/insertions, accessed on 16 June 2025.

Acknowledgments

The authors would like to thank Paweł Gawrychowski for sharing their results in the field of string searching in compressed texts, which led to a linear solution to the problem.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Hakak, S.I.; Kamsin, A.; Shivakumara, P.; Gilkar, G.A.; Khan, W.Z.; Imran, M. Exact string matching algorithms: Survey, issues, and future research directions. IEEE Access 2019, 7, 69614–69637.

- Chazelle, B. Filtering search: A new approach to query-answering. SIAM J. Comput. 1986, 15, 703–724.

- Schmidt, J.M. Interval stabbing problems in small integer ranges. In Algorithms and Computation: 20th International Symposium, ISAAC 2009, Honolulu, Hawaii, USA, 16–18 December 2009; Proceedings 20; Number 5878 in Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2009; pp. 163–172.

- Gawrychowski, P. Pattern matching in Lempel-Ziv compressed strings: Fast, simple, and deterministic. In European Symposium on Algorithms, Proceedings of the 19th Annual European Symposium on Algorithms (ESA 2011), Saarbrücken, Germany, 5–9 September 2011; Number 6942 in Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; pp. 421–432.

- Amir, A.; Benson, G. Two-dimensional periodicity and its applications. In Proceedings of the Third Annual ACM-SIAM Symposium on Discrete Algorithms (SODA ’92), Orlando, FL, USA, 27–29 January 1992; SIAM: Philadelphia, PA, USA, 1992; pp. 440–452.

- Knuth, D.E.; Morris, J.H., Jr.; Pratt, V.R. Fast pattern matching in strings. SIAM J. Comput. 1977, 6, 323–350.

- Gusfield, D. Algorithms on Strings, Trees, and Sequences: Computer Science and Computational Biology; Cambridge University Press: Cambridge, UK, 1997.

- de Berg, M.; Cheong, O.; van Kreveld, M.; Overmars, M. Computational Geometry: Algorithms and Applications; Springer: Berlin/Heidelberg, Germany, 2008.

- Fenwick, P.M. A new data structure for cumulative frequency tables. Softw. Pract. Exp. 1994, 24, 327–336.

- Ganardi, M.; Gawrychowski, P. Pattern Matching on Grammar-Compressed Strings in Linear Time. In Proceedings of the 2022 Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), Alexandria, VA, USA, 9–12 January 2022; SIAM: Philadelphia, PA, USA, 2022; pp. 2833–2846.

- Lothaire, M. Algebraic Combinatorics on Words; Encyclopedia of Mathematics and Its Applications; Cambridge University Press: Cambridge, UK, 2002.

- Schieber, B.; Vishkin, U. On finding lowest common ancestors: Simplification and parallelization. SIAM J. Comput. 1988, 17, 1253–1262.

- Bender, M.A.; Farach-Colton, M. The LCA Problem Revisited. In LATIN 2000: Theoretical Informatics: 4th Latin American Symposium, Punta del Este, Uruguay, 10–14 April 2000; Proceedings 4; Number 1776 in Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2000; pp. 88–94.

- Ukkonen, E. On-line construction of suffix trees. Algorithmica 1995, 14, 249–260.

- Kärkkäinen, J.; Sanders, P. Simple linear work suffix array construction. In Automata, Languages and Programming: 30th International Colloquium, ICALP 2003 Eindhoven, The Netherlands, 30 June–4 July 2003; Proceedings 30; Number 2719 in Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2003; pp. 943–955.

- Kärkkäinen, J.; Sanders, P.; Burkhardt, S. Linear work suffix array construction. J. ACM 2006, 53, 918–936.

- Kasai, T.; Lee, G.; Arimura, H.; Arikawa, S.; Park, K. Linear-time longest-common-prefix computation in suffix arrays and its applications. In Combinatorial Pattern Matching: 12th Annual Symposium, CPM 2001 Jerusalem, Israel, 1–4 July 2001; Proceedings 12; Number 2089 in Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2001; pp. 181–192.

- Tarjan, R.E. Efficiency of a good but not linear set union algorithm. J. ACM 1975, 22, 215–225.

- Gabow, H.N.; Tarjan, R.E. A linear-time algorithm for a special case of disjoint set union. In Proceedings of the 15th Annual ACM Symposium on Theory of Computing (STOC ’83), Boston, MA, USA, 25–27 April 1983; ACM: New York, NY, USA, 1983; pp. 246–251.

- Gabow, H.N.; Tarjan, R.E. A linear-time algorithm for a special case of disjoint set union. J. Comput. Syst. Sci. 1985, 30, 209–221.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).