Abstract

In structural health monitoring (SHM), ensuring data completeness is critical for enhancing the accuracy and reliability of structural condition assessments. SHM data are prone to random missing values due to signal interference or connectivity issues, making precise data imputation essential. A latent factorization of tensor (LFT)-based method has proven effective for such problems, with optimization typically achieved via stochastic gradient descent (SGD). However, SGD-based LFT models and other imputation methods exhibit significant sensitivity to learning rates and slow tail-end convergence. To address these limitations, this study proposes an RMSprop-incorporated latent factorization of tensor (RLFT) model, which integrates an adaptive learning rate mechanism to dynamically adjust step sizes based on gradient magnitudes. Experimental validation on a scaled bridge accelerometer dataset demonstrates that RLFT achieves faster convergence and higher imputation accuracy compared to state-of-the-art models including SGD-based LFT and the long short-term memory (LSTM) network, with improvements of at least 10% in both imputation accuracy and convergence rate, offering a more efficient and reliable solution for missing data handling in SHM.

1. Introduction

In recent decades, the continuous development of civil infrastructure has led to the widespread deployment of structural health monitoring (SHM) systems for real-time performance assessments. These systems continuously collect multi-modal data (e.g., vibrations, strains, and displacements) through sensors, forming the basis for damage detection and condition evaluation. However, factors such as improper installation, environmental noise, and extreme weather introduce anomalous data, significantly compromising monitoring reliability. Among these anomalies, data loss is the most prevalent. Specifically, in field applications, random missing data caused by signal interference, poor sensor connectivity, or transmission failures pose critical challenges [1,2]. Such data incompleteness not only adversely impacts downstream tasks like damage identification and state assessment but also degrades overall SHM system efficacy, making SHM data imputation a fundamentally challenging problem.

To address these challenges, multiple imputation methods have been proposed [3,4,5]. Early-stage matrix imputation approaches, such as alternating least squares (ALS), demonstrate certain advantages in handling high-dimensional incomplete (HDI) data by leveraging low-rank matrix assumptions for missing value reconstruction [6]. However, ALS exhibits limited imputation accuracy for non-low-rank structured data and high sensitivity to initialization, failing to deliver stable high-precision results. These limitations become particularly pronounced when processing temporally complex datasets.

To overcome ALS limitations, convolutional neural networks (CNNs) have been introduced for data imputation tasks. CNNs automatically learn spatial features through convolutional layers, demonstrating strong nonlinear modeling capabilities to capture complex patterns and improve imputation accuracy [7,8]. However, CNNs require highly structured spatial data and fail to fully exploit temporal dependencies when processing time-series data.

To address the limitations of CNNs in temporal data processing, long short-term memory (LSTM)-based imputation algorithms were proposed. As a specialized recurrent neural network, LSTM effectively captures long-term dependencies in time-series data through memory cells and gating mechanisms, making it particularly suitable for time-dependent SHM data. This enables more accurate missing data reconstruction by leveraging temporal patterns [9,10]. Although LSTMs excel at temporal modeling, their training complexity and susceptibility to overfitting remain challenges. Moreover, deep learning methods often struggle to fully characterize the inherent spatiotemporal coupling features in bridge SHM data [11].

Building upon these developments, the stochastic gradient descent (SGD)-based latent factorization of tensor (LFT) model was proposed [12,13,14,15]. This approach integrates tensor decomposition with SGD optimization to effectively handle multi-dimensional complex data relationships while maintaining scalability for large-scale dataset training. Compared to ALS, CNN, and LSTM, the LFT model demonstrates superior performance when processing high-dimensional, sparse, and structurally complex data. Chen et al. [16] present a momentum-incorporated biased non-negative and adaptive LFT model, which shows higher computational efficiency and missing link prediction accuracy in efficient representation learning of dynamic weighted directed networks than existing advanced models. However, the SGD-based LFT model suffers from learning rate sensitivity and slow tail-end convergence, which impair training stability and convergence speed. Additionally, its demanding hyperparameter tuning requirements limit practical usability and operational robustness.

To address these challenges, this paper proposes an RMSprop-incorporated latent factorization of tensor (RLFT) model, with the primary research focus on incorporating an adaptive learning rate mechanism into the SGD training process. Specifically, the RLFT model enhances the baseline LFT framework by integrating the RMSprop algorithm, which employs exponential moving averages of squared gradients to dynamically adjust learning rates. This adaptive mechanism effectively suppresses gradient fluctuations and accommodates sparse gradients, thereby optimizing the convergence path for smoother and more efficient training. The proposed approach not only maintains data imputation accuracy but also significantly improves the convergence rate. The key contributions include the following:

- An RLFT model for SHM data imputation that incorporates RMSprop into LFT training, enabling rapid convergence with properly tuned hyperparameters;

- A systematic analysis of missing rate impacts on model performance, with comprehensive RLFT algorithm design and analysis to guide engineering applications.

2. Preliminaries

This section presents the fundamental notations, problem formulation, and methodology concerning the HDI tensor in our study.

2.1. Notation

SHM data typically constitute HDI tensors with inherent spatiotemporal characteristics [17,18]. When utilizing such tensors as primary inputs for structural state analysis and data imputation, the target tensor contains numerous unknown elements representing missing measurements. This study specifically examines model performance under varying missing rates.

Table 1 summarizes the mathematical notations and their definitions for tensor-based latent factorization.

Table 1.

Symbols used and their descriptions.

2.2. Problem Formulation

A multi-dimensional representation of the structural state is constructed from SHM data by modeling the relationships among time points, sensor locations, and working conditions as a third-order tensor, which captures high-dimensional features across all three modes. For an incomplete tensor Y, each element $y_{ijk}$ represents the acceleration measurement recorded at the jth sensor location and the ith time point under the kth working condition.

Let Λ and Γ denote the known and unknown datasets of Y, respectively. The canonical polyadic (CP) tensor-based latent factor (LF) decomposition method [19,20,21,22,23,24,25,26,27] constructs an approximation of the original tensor Y, as shown in Equation (1) [19]:

where each component tensor $X_r$ is defined as follows:

The tensor Y is decomposed into R rank-one tensors $X_1, X_2, \ldots, X_R$. Each rank-one tensor $X_r \in \mathbb{R}^{|I|\times|J|\times|K|}$ can be expressed as the outer product of three LF vectors $\mathbf{t}_r$, $\mathbf{l}_r$, and $\mathbf{w}_r$ with lengths |I|, |J|, and |K|, respectively, as shown in Equation (2) [19]:

Each element of $X_r$ is given by Equation (3) [20]:

The rth LF vectors of matrices T, L, and W collectively form the rth rank-one tensor $X_r$, and summing these R tensors yields the approximation $\hat{Y}$. Each element of $\hat{Y}$ is computed as in Equation (4) [20]:
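For reference, the CP relations behind Equations (1)–(4) can be written in conventional notation as follows. This is a sketch of the standard form, assuming $\mathbf{t}_r$, $\mathbf{l}_r$, and $\mathbf{w}_r$ are the rth columns of $T \in \mathbb{R}^{|I|\times R}$, $L \in \mathbb{R}^{|J|\times R}$, and $W \in \mathbb{R}^{|K|\times R}$, and that $\circ$ denotes the vector outer product:

```latex
\begin{aligned}
Y &\approx \hat{Y} = \sum_{r=1}^{R} X_r, \\
X_r &= \mathbf{t}_r \circ \mathbf{l}_r \circ \mathbf{w}_r \in \mathbb{R}^{|I|\times|J|\times|K|}, \\
\left(X_r\right)_{ijk} &= t_{ir}\, l_{jr}\, w_{kr}, \\
\hat{y}_{ijk} &= \sum_{r=1}^{R} t_{ir}\, l_{jr}\, w_{kr}.
\end{aligned}
```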

Considering only the entries in Λ, the discrepancy between corresponding elements of Y and $\hat{Y}$ is measured with the Euclidean distance. To prevent overfitting, regularization terms are incorporated, yielding the objective loss function in Equation (5) [21]:

where $\lambda_t$, $\lambda_l$, and $\lambda_w$ denote the regularization coefficients for the latent feature matrices T, L, and W, respectively.
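A minimal NumPy sketch of the CP approximation and the regularized objective over Λ is given below. It assumes the common LFT convention in which the regularization terms are attached to each observed instance, i.e. $\varepsilon = \tfrac{1}{2}\sum_{y_{ijk}\in\Lambda}\big[(y_{ijk}-\hat{y}_{ijk})^2 + \lambda_t\sum_{r} t_{ir}^2 + \lambda_l\sum_{r} l_{jr}^2 + \lambda_w\sum_{r} w_{kr}^2\big]$. The function names and the (i, j, k, y) tuple layout of the known set are hypothetical.

```python
import numpy as np


def cp_reconstruct(T, L, W):
    """Full approximation Y_hat with shape (|I|, |J|, |K|): sum_r t_r o l_r o w_r."""
    return np.einsum("ir,jr,kr->ijk", T, L, W)


def cp_predict(T, L, W, i, j, k):
    """Single entry y_hat_ijk = sum_r t_ir * l_jr * w_kr."""
    return float(np.sum(T[i] * L[j] * W[k]))


def regularized_loss(known, T, L, W, lam_t, lam_l, lam_w):
    """Objective over the known set Lambda.

    known: iterable of (i, j, k, y_ijk) tuples (hypothetical layout).
    Regularization is applied per observed instance (assumed convention).
    """
    total = 0.0
    for i, j, k, y in known:
        err = y - cp_predict(T, L, W, i, j, k)
        total += err ** 2
        total += lam_t * np.sum(T[i] ** 2)
        total += lam_l * np.sum(L[j] ** 2)
        total += lam_w * np.sum(W[k] ** 2)
    return 0.5 * total


# Usage: rank-2 factors of ones give y_hat_ijk = 2 for every entry.
T, L, W = np.ones((2, 2)), np.ones((3, 2)), np.ones((4, 2))
print(cp_reconstruct(T, L, W).shape)  # (2, 3, 4)
print(regularized_loss([(0, 1, 2, 2.0)], T, L, W, 0.1, 0.0, 0.0))
```

Working instance by instance mirrors the fact that only entries in Λ contribute to the loss; the dense `cp_reconstruct` is only practical for small tensors.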

2.3. RMSprop Method

The RMSprop method accelerates model training by adapting the learning rate of each parameter to the magnitude of its recent gradients. Specifically, it maintains an exponentially weighted moving average of squared gradients, so that historical gradient magnitudes inform each parameter update. Dividing the gradient by the square root of this average damps oscillations along steep directions and enlarges steps along flat ones, which is particularly helpful for sparse gradients or non-stationary objectives. The result is a parameter-specific adaptive learning rate that accelerates convergence and improves optimization stability. For a given objective function J(θ), the parameter θ is updated with adaptive learning rates, as shown in Equation (6) [12]:

where $A_0$ denotes the initial value of the accumulated squared gradients, while $A_{s-1}$ and $A_s$ represent the accumulated squared gradients at time steps s − 1 and s, respectively. The decay rate γ controls the weighting of historical information. The variables $\theta_{s-1}$ and $\theta_s$ correspond to the parameter states at iterations s − 1 and s, and $o_s$ indicates the training instance encountered during the sth update. The base learning rate η and a small constant δ (to prevent division by zero) complete the parameter set.
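The update in Equation (6) can be sketched in a few lines of NumPy. This assumes the common RMSprop form $A_s = \gamma A_{s-1} + (1-\gamma)g_s^2$ and $\theta_s = \theta_{s-1} - \eta\, g_s/\sqrt{A_s + \delta}$ with $A_0 = 0$ and $g_s = \nabla_\theta J(\theta_{s-1}; o_s)$. Implementations differ on whether δ sits inside or outside the square root; `rmsprop_step` and its default values (γ = 0.9 here, rather than the 0.99 used in Section 4) are illustrative.

```python
import numpy as np


def rmsprop_step(theta, grad, acc, lr=0.01, gamma=0.9, delta=1e-8):
    """One RMSprop update.

    theta : parameters theta_{s-1};  grad : gradient on the current instance o_s
    acc   : accumulated squared gradients A_{s-1} (start from zeros, i.e. A_0 = 0)
    Returns (theta_s, A_s).
    """
    acc = gamma * acc + (1.0 - gamma) * grad ** 2     # exponential moving average
    theta = theta - lr * grad / np.sqrt(acc + delta)  # per-parameter step size
    return theta, acc


# Usage on an elongated valley f(x, y) = 0.5 * (100 x^2 + y^2), gradient (100x, y).
theta0 = np.array([1.0, 1.0])
grad0 = np.array([100.0, 1.0])
theta1, acc1 = rmsprop_step(theta0, grad0, np.zeros(2))
print(theta0 - theta1)  # both coordinates move by about lr / sqrt(1 - gamma)
```

Although the two gradient components differ by a factor of 100, the first RMSprop step moves both coordinates by roughly η/√(1 − γ) ≈ 0.0316, whereas plain SGD with the same η would move x one hundred times farther than y. This normalization is what lets RMSprop traverse elongated valleys with fewer oscillations.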

3. RMSprop SGD-Based LFT Model

This section presents our proposed RLFT model that enhances traditional SGD-based LFT through adaptive learning rate optimization. The following subsections detail the baseline implementation (Section 3.1), our improved RLFT model (Section 3.2), and algorithmic analysis (Section 3.3).

3.1. Standard SGD-Based LFT Model

In LF analysis, the SGD-based LFT stands out for its straightforward operation and computationally efficient performance. This algorithm calculates the gradient for each training sample and adjusts the parameters in the opposite direction of this gradient, thereby continuously optimizing and progressively reducing the value of the target loss function (5). With SGD, the objective loss function is minimized, as shown in Equation (7) [22]:

where the loss term denotes the instantaneous loss on an individual training instance $y_{ijk} \in \Lambda$, s denotes the sth update point, and $\eta_{ir}$, $\eta_{jr}$, and $\eta_{kr}$ represent the learning rates for $t_{ir}$, $l_{jr}$, and $w_{kr}$, respectively. The gradients are given by Equation (8) [22]:

Substituting the gradients of Equation (8) into the update rules of Equation (7) yields Equation (9) [22]:
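As a sketch of the standard SGD-based LFT derivation, assume the instantaneous loss on $y_{ijk} \in \Lambda$ is the per-instance part of the regularized objective, denote it $\varepsilon_{ijk}$ (a symbol introduced here for illustration), and write $e_{ijk} = y_{ijk} - \hat{y}_{ijk}$. The gradients and resulting updates then take the following form, with all right-hand quantities evaluated at update point s − 1:

```latex
\begin{aligned}
\varepsilon_{ijk} &= \tfrac{1}{2}\Big[\big(y_{ijk} - \hat{y}_{ijk}\big)^2
  + \lambda_t \sum_{r=1}^{R} t_{ir}^2 + \lambda_l \sum_{r=1}^{R} l_{jr}^2 + \lambda_w \sum_{r=1}^{R} w_{kr}^2\Big], \\
\frac{\partial \varepsilon_{ijk}}{\partial t_{ir}} &= -e_{ijk}\, l_{jr} w_{kr} + \lambda_t t_{ir}, \qquad
\frac{\partial \varepsilon_{ijk}}{\partial l_{jr}} = -e_{ijk}\, t_{ir} w_{kr} + \lambda_l l_{jr}, \qquad
\frac{\partial \varepsilon_{ijk}}{\partial w_{kr}} = -e_{ijk}\, t_{ir} l_{jr} + \lambda_w w_{kr}, \\
t_{ir}^{s} &= t_{ir}^{s-1} + \eta_{ir}\big(e_{ijk}\, l_{jr} w_{kr} - \lambda_t t_{ir}^{s-1}\big), \\
l_{jr}^{s} &= l_{jr}^{s-1} + \eta_{jr}\big(e_{ijk}\, t_{ir} w_{kr} - \lambda_l l_{jr}^{s-1}\big), \\
w_{kr}^{s} &= w_{kr}^{s-1} + \eta_{kr}\big(e_{ijk}\, t_{ir} l_{jr} - \lambda_w w_{kr}^{s-1}\big).
\end{aligned}
```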

3.2. RLFT Model

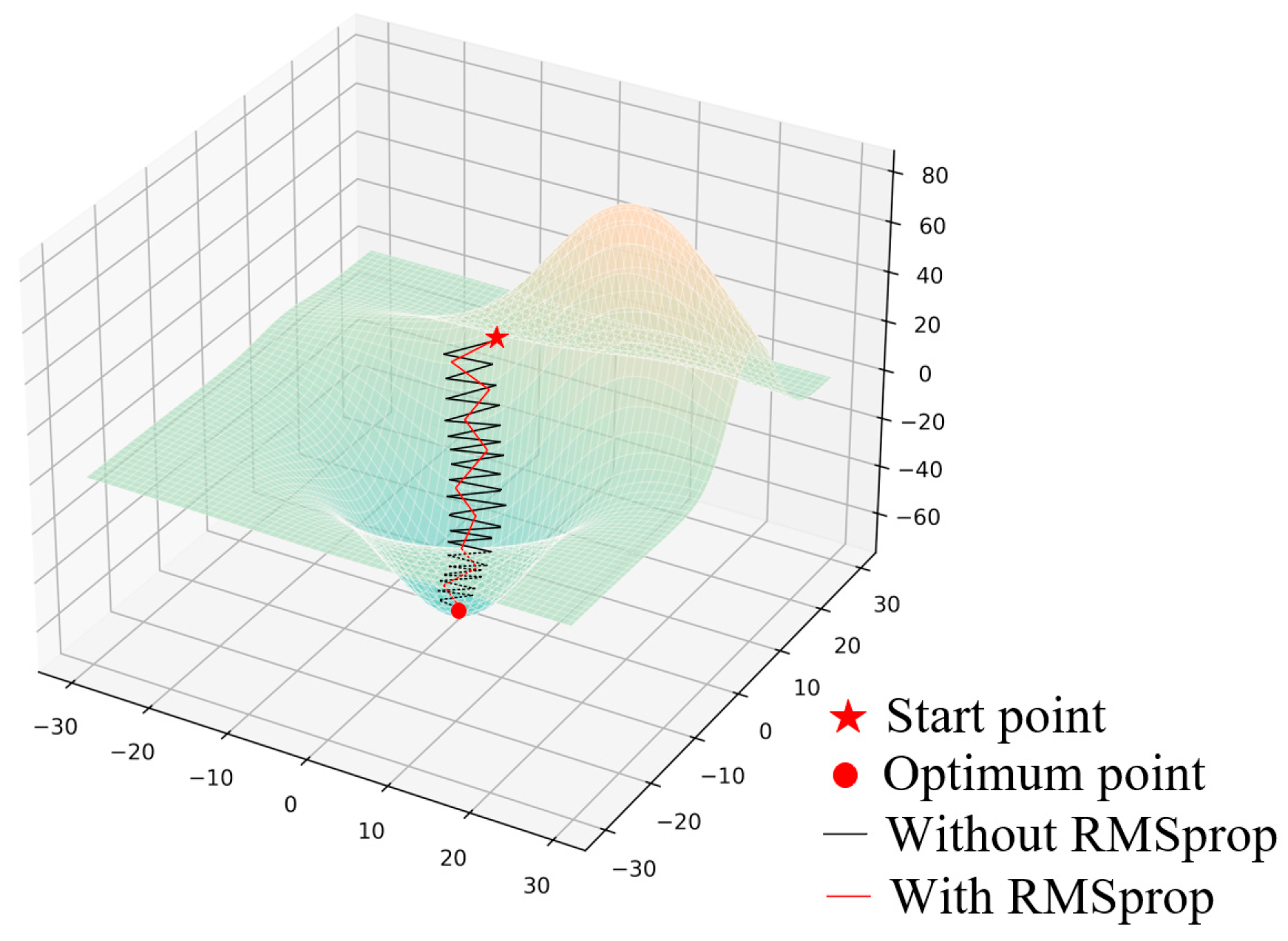

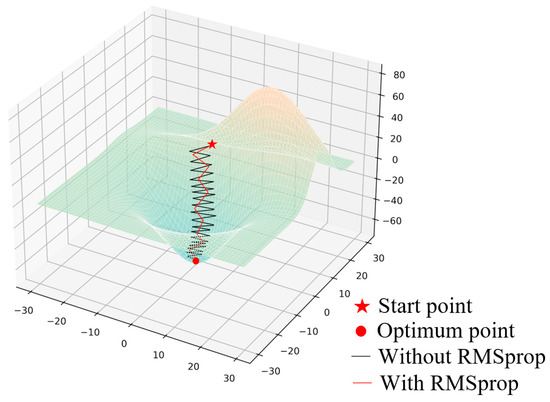

In the SGD-based LFT model, if the objective function’s surface exhibits complex topography (such as elongated valleys), the gradient direction may deviate from the optimal path, leading to slow convergence or even oscillations. The RMSprop algorithm addresses this by using exponentially weighted moving averages of squared gradients to adapt the learning rate dynamically. This mitigates gradient fluctuations and accommodates sparse gradients, yielding a more stable and efficient path toward convergence. As illustrated in Figure 1, RMSprop follows a more direct trajectory toward the optimal solution, converging faster with fewer oscillations. Adopting RMSprop is therefore a natural way to improve training efficiency in such complex optimization problems.

Figure 1.

Training process by LFT with/without RMSprop.

When the adaptive learning rate method (i.e., the RMSprop algorithm) is integrated into the SGD-based LFT model, the decision parameters are still updated for each training instance in every iteration. Following Equation (6), the update rules for $t_{ir}$, $l_{jr}$, and $w_{kr}$ are given by Equations (10)–(12) [23]:

The update of $t_{ir}$ is as follows:

The update of $l_{jr}$ is as follows:

The update of $w_{kr}$ is as follows:

Combining Equations (10)–(12) with the gradients in Equation (8) gives the RLFT update rules used to minimize the objective loss function, as shown in Equation (13) [23]:
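Assuming an instance-wise regularized squared-error loss with $e_{ijk} = y_{ijk} - \hat{y}_{ijk}$, and the common RMSprop form with δ inside the square root and zero-initialized accumulators ($A^{t}_{ir,0} = A^{l}_{jr,0} = A^{w}_{kr,0} = 0$), Equations (10)–(13) can be sketched as follows. Here $A^{t}$, $A^{l}$, and $A^{w}$ hold one accumulated squared gradient per latent factor, and all right-hand quantities are taken at update point s − 1:

```latex
\begin{aligned}
A^{t}_{ir,s} &= \gamma A^{t}_{ir,s-1} + (1-\gamma)\big(e_{ijk}\, l_{jr} w_{kr} - \lambda_t t_{ir}^{s-1}\big)^2, \\
t_{ir}^{s} &= t_{ir}^{s-1} + \frac{\eta_{ir}}{\sqrt{A^{t}_{ir,s} + \delta}}\big(e_{ijk}\, l_{jr} w_{kr} - \lambda_t t_{ir}^{s-1}\big), \\
A^{l}_{jr,s} &= \gamma A^{l}_{jr,s-1} + (1-\gamma)\big(e_{ijk}\, t_{ir} w_{kr} - \lambda_l l_{jr}^{s-1}\big)^2, \\
l_{jr}^{s} &= l_{jr}^{s-1} + \frac{\eta_{jr}}{\sqrt{A^{l}_{jr,s} + \delta}}\big(e_{ijk}\, t_{ir} w_{kr} - \lambda_l l_{jr}^{s-1}\big), \\
A^{w}_{kr,s} &= \gamma A^{w}_{kr,s-1} + (1-\gamma)\big(e_{ijk}\, t_{ir} l_{jr} - \lambda_w w_{kr}^{s-1}\big)^2, \\
w_{kr}^{s} &= w_{kr}^{s-1} + \frac{\eta_{kr}}{\sqrt{A^{w}_{kr,s} + \delta}}\big(e_{ijk}\, t_{ir} l_{jr} - \lambda_w w_{kr}^{s-1}\big).
\end{aligned}
```

Compared with plain SGD, each factor's fixed learning rate is replaced by $\eta/\sqrt{A + \delta}$, so factors with consistently large gradients take smaller steps and factors with small gradients take larger ones.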

3.3. Algorithm Design and Analysis

To clarify how the RLFT model operates, the workflow of the RLFT algorithm is summarized in Algorithm 1.

According to Algorithm 1, the computational complexity of the RLFT algorithm is characterized, as shown in Equation (14) [24]:

The storage complexity is determined primarily by (a) the known dataset Λ, (b) the three LF matrices, and (c) the three RMSprop accumulator matrices ($A^{t}$, $A^{l}$, and $A^{w}$). The storage complexity of the RLFT algorithm is given by Equation (15) [24]:

All the above complexity calculations disregard constant factors and lower-order terms. Because both the computational and the storage costs grow linearly with the number of known entries |Λ|, the RLFT model is efficient in both computation and storage.
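Tallying the cost column of Algorithm 1 gives the following bounds. The simplification assumes that every index in I, J, and K occurs in at least one known entry, so that |I| + |J| + |K| ≤ 3|Λ| and the initialization cost is absorbed into the training loop:

```latex
\begin{aligned}
C &= \underbrace{2\,\Theta\big((|I|+|J|+|K|)R\big)}_{\text{initialize } T,\,L,\,W \text{ and } A^{t},\,A^{l},\,A^{w}}
   + N\,|\Lambda|\,\big(\Theta(R) + R \cdot 6\,\Theta(1)\big)
   = \Theta\big(N\,|\Lambda|\,R\big), \\
S &= \underbrace{\Theta(|\Lambda|)}_{\text{known entries}}
   + \underbrace{\Theta\big((|I|+|J|+|K|)R\big)}_{T,\,L,\,W}
   + \underbrace{\Theta\big((|I|+|J|+|K|)R\big)}_{A^{t},\,A^{l},\,A^{w}}
   = \Theta\big(|\Lambda| + (|I|+|J|+|K|)R\big).
\end{aligned}
```

In words, training time grows linearly in the number of iterations N, the number of known entries |Λ|, and the rank R, while memory grows linearly in |Λ| and in the total size of the factor and accumulator matrices.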

Algorithm 1. RLFT Algorithm

| Operation | Cost |
| --- | --- |
| Input: Λ, R, $\lambda_t$, $\lambda_l$, $\lambda_w$, $\eta_{ir}$, $\eta_{jr}$, $\eta_{kr}$, γ, δ, and the maximum number of iterations N | - |
| Initialize the latent factor matrices T, L, and W | Θ((\|I\| + \|J\| + \|K\|) × R) |
| Initialize the RMSprop accumulator matrices $A^{t}$, $A^{l}$, and $A^{w}$ to 0 | Θ((\|I\| + \|J\| + \|K\|) × R) |
| for iteration = 1 to N do | × N |
| for each $y_{ijk}$ ∈ Λ do | × \|Λ\| |
| Compute $\hat{y}_{ijk}$ | Θ(R) |
| for r = 1 to R do | × R |
| Update $A^{t}_{ir}$, $A^{l}_{jr}$, $A^{w}_{kr}$, then $t_{ir}$, $l_{jr}$, $w_{kr}$ | Θ(1) × 6 |
| end for | - |
| end for | - |
| end for | - |
| Output: latent factor matrices T, L, and W | - |
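To make Algorithm 1 concrete, the following NumPy sketch runs it on a small synthetic tensor. It is an illustration under stated assumptions rather than the authors' implementation: a single learning rate `lr` stands in for $\eta_{ir}$, $\eta_{jr}$, and $\eta_{kr}$; the loss is the instance-wise regularized squared error; δ sits inside the square root; factors are initialized uniformly on [0, 0.5); and the function names, defaults (including γ = 0.9 rather than the 0.99 used in Section 4), and synthetic data are hypothetical.

```python
import numpy as np


def train_rlft(known, shape, rank, lr=0.01, lam=(1e-3, 1e-3, 1e-3),
               gamma=0.9, delta=1e-8, epochs=100, seed=0):
    """Sketch of Algorithm 1: per-instance updates with RMSprop step sizes.

    known : list of (i, j, k, y_ijk) tuples, i.e. the known set Lambda
    shape : (|I|, |J|, |K|);  rank : R;  epochs : maximum iterations N
    """
    rng = np.random.default_rng(seed)
    n_i, n_j, n_k = shape
    lam_t, lam_l, lam_w = lam
    # Latent factor matrices T, L, W and zero-initialized RMSprop accumulators.
    T = rng.uniform(0.0, 0.5, (n_i, rank))
    L = rng.uniform(0.0, 0.5, (n_j, rank))
    W = rng.uniform(0.0, 0.5, (n_k, rank))
    A_t, A_l, A_w = np.zeros_like(T), np.zeros_like(L), np.zeros_like(W)

    for _ in range(epochs):                      # x N
        for i, j, k, y in known:                 # x |Lambda|
            t, l, w = T[i].copy(), L[j].copy(), W[k].copy()
            err = y - np.dot(t, l * w)           # e_ijk = y_ijk - y_hat_ijk, Theta(R)
            # Gradients of the instantaneous loss for all r = 1..R at once.
            g_t = -err * l * w + lam_t * t
            g_l = -err * t * w + lam_l * l
            g_w = -err * t * l + lam_w * w
            # Six Theta(1) operations per r: three accumulators, three factors.
            A_t[i] = gamma * A_t[i] + (1.0 - gamma) * g_t ** 2
            A_l[j] = gamma * A_l[j] + (1.0 - gamma) * g_l ** 2
            A_w[k] = gamma * A_w[k] + (1.0 - gamma) * g_w ** 2
            T[i] = t - lr * g_t / np.sqrt(A_t[i] + delta)
            L[j] = l - lr * g_l / np.sqrt(A_l[j] + delta)
            W[k] = w - lr * g_w / np.sqrt(A_w[k] + delta)
    return T, L, W


def rmse_on(entries, T, L, W):
    """RMSE of the CP approximation over (i, j, k, y) tuples."""
    errs = [y - np.dot(T[i], L[j] * W[k]) for i, j, k, y in entries]
    return float(np.sqrt(np.mean(np.square(errs))))


# Usage on a synthetic rank-2 tensor (hypothetical data, all entries known).
rng = np.random.default_rng(1)
Y = np.einsum("ir,jr,kr->ijk", rng.uniform(size=(8, 2)),
              rng.uniform(size=(6, 2)), rng.uniform(size=(4, 2)))
known = [(i, j, k, Y[i, j, k]) for i in range(8) for j in range(6) for k in range(4)]
before = rmse_on(known, *train_rlft(known, Y.shape, rank=2, epochs=0))
after = rmse_on(known, *train_rlft(known, Y.shape, rank=2, epochs=100))
print(f"RMSE before: {before:.4f}, after: {after:.4f}")
```

The old values of $t_{ir}$, $l_{jr}$, and $w_{kr}$ are copied before updating so that all three factor rows are updated from the same state, matching the per-instance derivation. Calling the function with `epochs=0` returns the initial factors, which gives a convenient untrained baseline for comparison.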

4. Experimental Results and Analysis

This section systematically evaluates the proposed RLFT model through comprehensive experiments. We first describe the experimental setup, including datasets, evaluation metrics, and implementation details (Section 4.1), then present comparative results against state-of-the-art methods (Section 4.2), and finally examine the effect of the rank R (Section 4.3). The analysis validates RLFT’s advantages in both accuracy and computational efficiency.

4.1. General Settings

The experimental configuration is organized into three components: (1) benchmark datasets for performance validation, (2) quantitative metrics for objective assessments, and (3) implementation parameters ensuring reproducible results. This standardized setup guarantees a fair comparison across all evaluated methods.

4.1.1. Datasets

To comprehensively evaluate the performance of the RLFT model, we conducted experiments on the Yunnan Hechonggou scaled bridge model dataset. This dataset records acceleration vibration signals from a 1:20 scale model of the Heichonggou Bridge, with high fidelity and comprehensive annotations. It therefore serves as a suitable benchmark for SHM data imputation, allowing an assessment of how well a model characterizes structural dynamic responses and reconstructs missing data. Detailed dataset specifications are provided in Table 2.

Table 2.

Experimental dataset specifications.

The undamaged structural state of the scaled bridge model was selected, with synchronized timestamps identified to construct a third-order tensor from SHM data across varying time points, locations, and working conditions. Given the dataset’s measurement completeness, random tensor entries are artificially removed at missing ratios of 0.1, 0.3, 0.5, 0.7, and 0.9 to simulate data loss scenarios. The dataset is partitioned into training and test sets following an 8:2 ratio.
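The random-removal step can be sketched as follows. The sketch assumes entries are removed uniformly at random without replacement, with exactly round(ratio × number of entries) positions dropped; the function name, the tensor size, and the seed handling are hypothetical, and the subsequent 8:2 split is not modeled here.

```python
import numpy as np


def random_missing_mask(shape, missing_ratio, seed=0):
    """Boolean mask over the tensor: True = kept (Lambda), False = removed (Gamma)."""
    rng = np.random.default_rng(seed)
    n = int(np.prod(shape))
    n_missing = int(round(missing_ratio * n))
    mask = np.ones(n, dtype=bool)
    # Draw distinct flat positions to remove, uniformly at random.
    mask[rng.choice(n, size=n_missing, replace=False)] = False
    return mask.reshape(shape)


# Usage: the five missing ratios studied here, on a hypothetical 10 x 10 x 10 tensor.
for ratio in (0.1, 0.3, 0.5, 0.7, 0.9):
    mask = random_missing_mask((10, 10, 10), ratio)
    known_idx = np.argwhere(mask)  # (i, j, k) triples in Lambda
    print(ratio, known_idx.shape[0], int((~mask).sum()))
```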

4.1.2. Evaluation Metrics

In this experiment, imputation accuracy is evaluated primarily on the test set. To quantify model performance and generalization capability, we selected two evaluation metrics: root mean square error (RMSE) and mean absolute error (MAE). Both metrics measure the discrepancy between imputed values and actual measurements: RMSE is more sensitive to large errors, whereas MAE is less sensitive to outliers and better represents the average error magnitude. Together, these metrics indirectly reflect the model’s ability to capture latent features and directly demonstrate its effectiveness in missing data imputation. The corresponding formulas are given in Equation (16) [25]:

where V denotes the test set. The experiment also tracked the number of iterations required to reach the minimum error, which reflects the model’s optimization efficiency and convergence rate during training.
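Assuming the usual definitions over the test set V, $\mathrm{RMSE} = \sqrt{\frac{1}{|V|}\sum_{y_{ijk}\in V}(y_{ijk}-\hat{y}_{ijk})^2}$ and $\mathrm{MAE} = \frac{1}{|V|}\sum_{y_{ijk}\in V}|y_{ijk}-\hat{y}_{ijk}|$, both metrics can be computed as in the sketch below. The helper name and toy values are hypothetical; they show why RMSE reacts more strongly than MAE to a single large error.

```python
import numpy as np


def rmse_mae(y_true, y_pred):
    """Return (RMSE, MAE) between measured and imputed values on the test set V."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    rmse = float(np.sqrt(np.mean(diff ** 2)))
    mae = float(np.mean(np.abs(diff)))
    return rmse, mae


# One imputed value is off by 2: RMSE = sqrt(4/3) ~ 1.155, MAE = 2/3 ~ 0.667.
print(rmse_mae([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```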

4.1.3. Model Settings

This experiment involved five models, with detailed specifications provided in Table 3.

Table 3.

Comparison of models in the experiment.

- RLFT: The proposed model in this study.

- LFT: It shares the same underlying principle with RLFT but employs fixed learning rates to update parameters sample by sample.

- ALS: It employs CP decomposition, alternately fixing two factor matrices while solving for the third latent feature matrix in each iteration, and repeats this process until convergence is achieved.

- MF: It consolidates the raw data into a matrix and decomposes this matrix into the product of two low-rank matrices. Missing values are then inferred from patterns and structures in the observed data.

- LSTM: It captures long-range dependencies in time-series data through memory cells and gating mechanisms, then uses these learned temporal features to predict and impute missing data points. The LSTM baseline used here is a missing data imputation model built on a single-layer unidirectional LSTM network for processing time-series data. Training samples are constructed with a sliding window, giving inputs of shape (1729, 50, 90) and outputs of completed acceleration data with shape (1729, 90). The network comprises an LSTM layer with 64 hidden units, regularized by a dropout rate of 0.2 and L2 regularization of 1 × 10⁻³ to prevent overfitting, followed by a 32-dimensional fully connected layer with ReLU activation and a linear output layer for feature transformation. Training uses the Adam optimizer for up to 1000 iterations with a batch size of 32 and early stopping, minimizing the loss to impute the multivariate time series.
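The sliding-window construction for the LSTM baseline can be sketched as follows. The stride and target alignment are not specified; reading (1729, 50, 90) as (samples, window length, channels), the sketch assumes a stride of 1 and uses the time step immediately after each window as the target, in which case 1729 samples would correspond to a 1779-step record. The function name and the dummy data are hypothetical.

```python
import numpy as np


def sliding_windows(series, window=50):
    """Split a (n_steps, n_channels) record into next-step training pairs.

    inputs  : (n_steps - window, window, n_channels)
    targets : (n_steps - window, n_channels), the step right after each window
    Assumes a stride of 1.
    """
    n_steps = series.shape[0]
    inputs = np.stack([series[s:s + window] for s in range(n_steps - window)])
    targets = series[window:]
    return inputs, targets


# Dummy record: 1779 time steps x 90 channels (hypothetical length).
x, y = sliding_windows(np.zeros((1779, 90)), window=50)
print(x.shape, y.shape)  # (1729, 50, 90) (1729, 90)
```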

The experimental parameter settings are as follows:

- All five models use a maximum iteration count of 1000, and the factorization-based models adopt a rank of five.

- As detailed in Section 3, the performance of the RLFT model depends primarily on the learning rate, the regularization coefficient, and the decay rate. Through parameter tuning, we set the learning rate to 1 × 10⁻⁶, the regularization coefficient to 1 × 10⁻⁵, and the decay rate to 0.99 for optimal performance.

- A comparative summary of model parameter configurations is presented in Table 4.

Table 4. Comparative model parameter settings.

- The training process incorporated an early stopping mechanism. Training terminated if either of the following held:

  - The change in the error between consecutive iterations fell below 1 × 10⁻¹⁰;

  - More than 50 iterations had elapsed since the iteration achieving the minimum error.

This strategy prevented overfitting while improving computational efficiency.
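The two stopping conditions can be expressed as a small predicate evaluated after each iteration. The sketch assumes the monitored quantity is an error metric such as the test RMSE, and the function name is hypothetical.

```python
def should_stop(errors, tol=1e-10, patience=50):
    """Early-stopping test applied after each iteration.

    errors : per-iteration error values (e.g. RMSE), most recent last.
    Stops when (a) the change between the last two iterations is below `tol`, or
    (b) more than `patience` iterations have passed since the minimum error.
    """
    if not errors:
        return False
    if len(errors) >= 2 and abs(errors[-1] - errors[-2]) < tol:
        return True
    best_iter = min(range(len(errors)), key=errors.__getitem__)
    return (len(errors) - 1) - best_iter > patience


# Error plateaus exactly: stop on condition (a).
print(should_stop([0.5, 0.3, 0.3]))  # True
# Minimum at iteration 0, then 51 steadily worse iterations: stop on condition (b).
print(should_stop([0.1] + [0.2 + 0.01 * n for n in range(51)]))  # True
```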

4.2. Comparison Results

This section evaluates all five models under missing data ratios of 0.1, 0.3, 0.5, 0.7, and 0.9 to comprehensively assess their performance across varying levels of data incompleteness. The experiment includes the following comparisons:

- We compare the RLFT model with its LFT baseline to validate the improvement from RMSprop optimization.

- The evaluation contrasts LF decomposition with CP decomposition in data imputation tasks to assess their respective technical merits.

- We examine the performance differences between LF decomposition and MF to demonstrate the proposed method’s advantages in handling complex data structures.

- The analysis compares RLFT with LSTM models to quantify their relative capabilities in temporal feature extraction.

- All models undergo parallel runtime measurements to evaluate their computational efficiency differences.

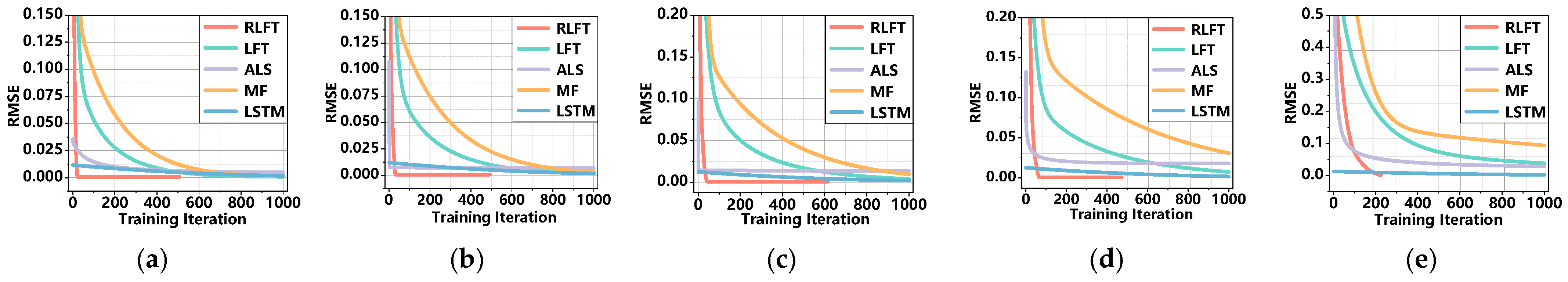

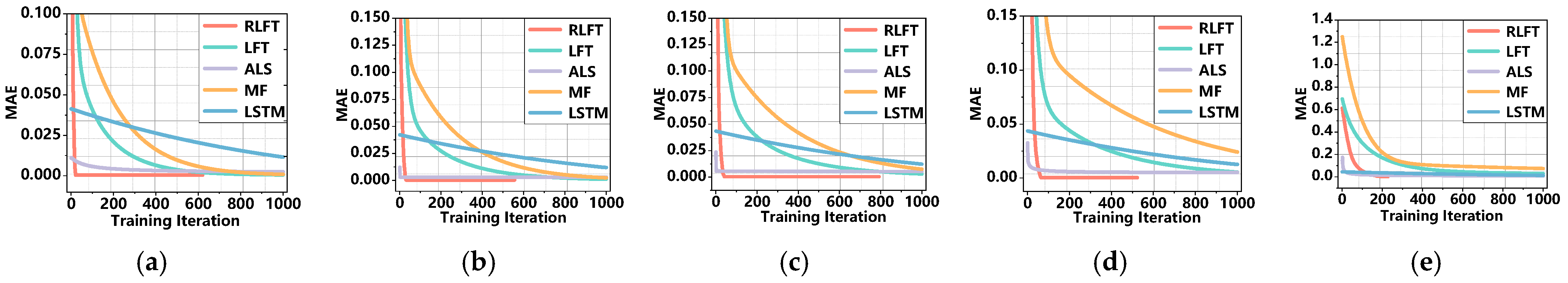

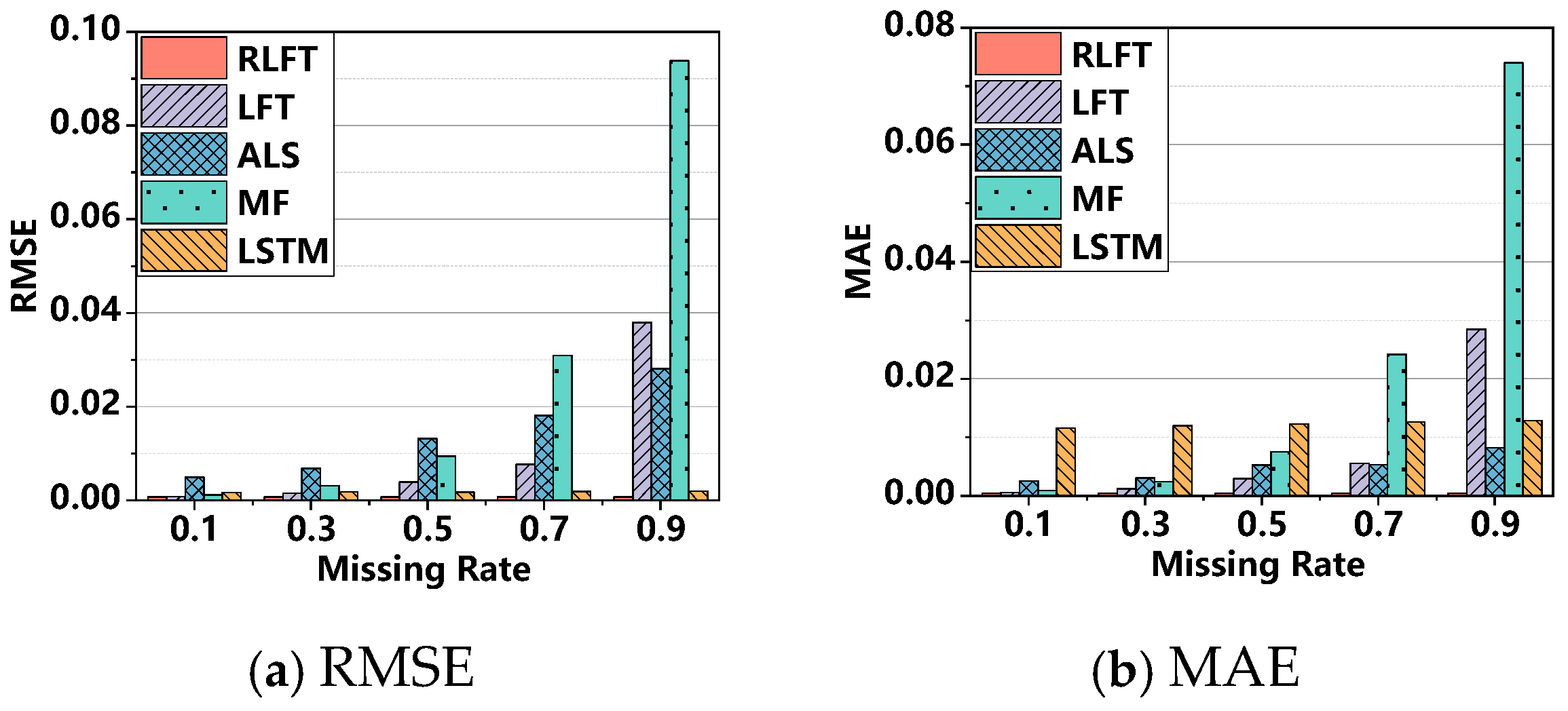

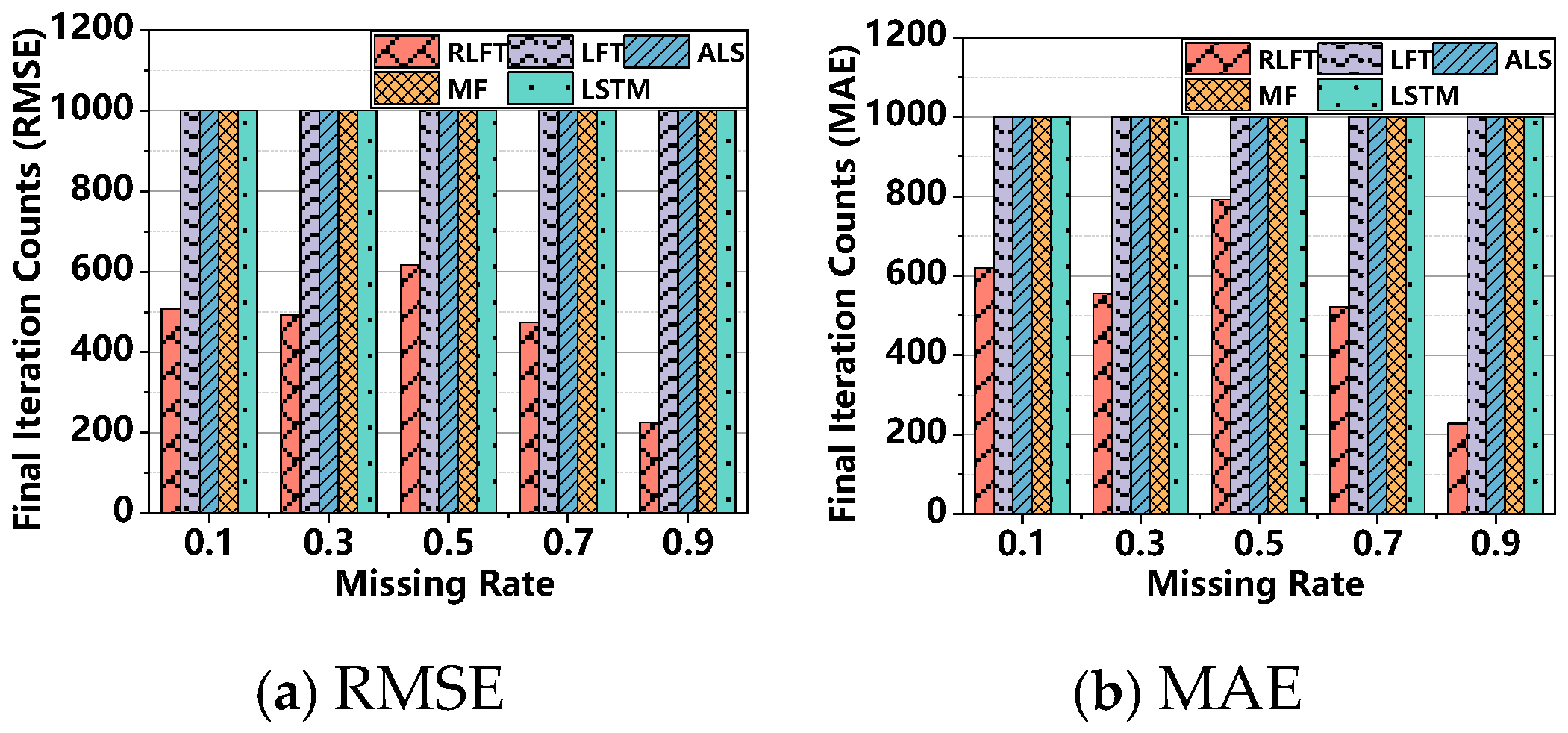

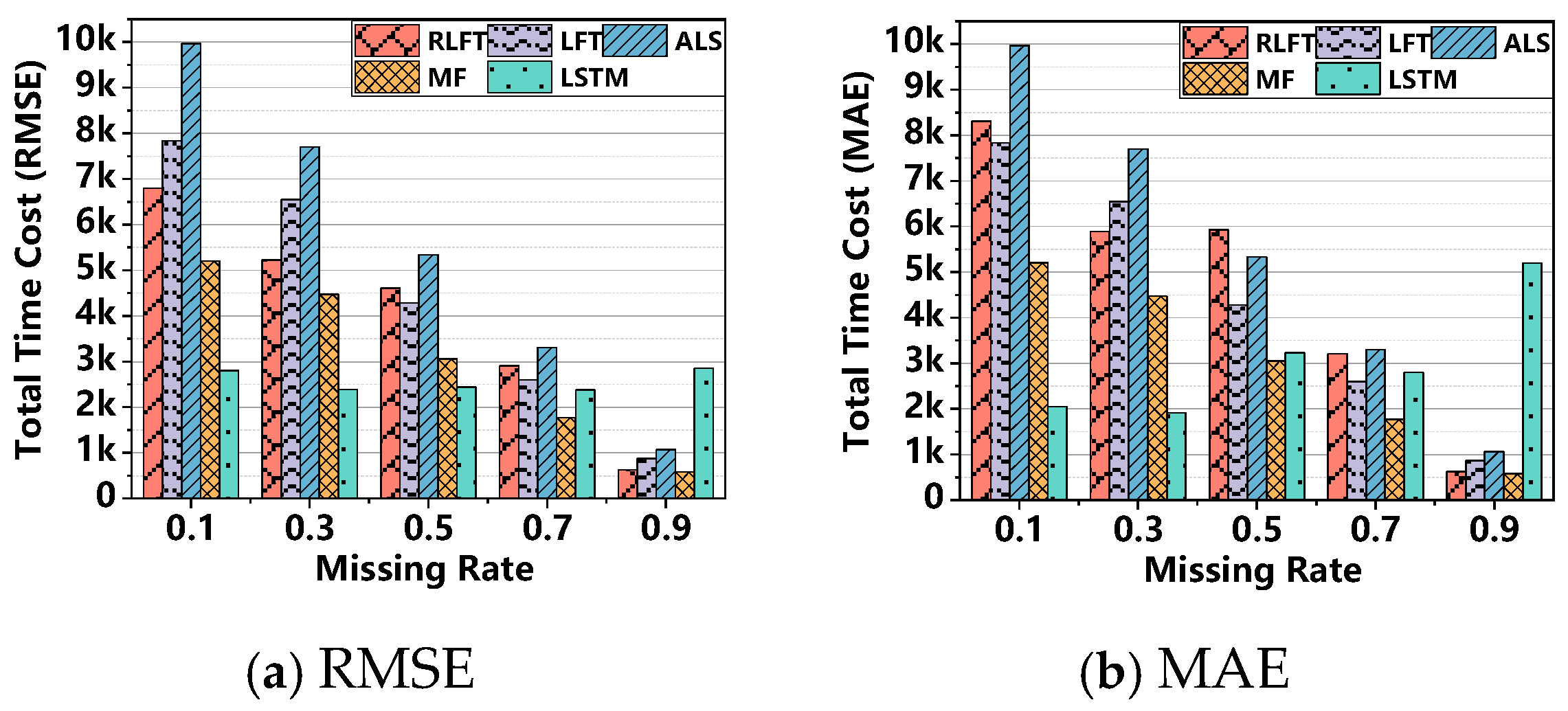

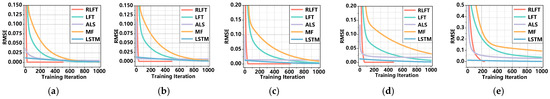

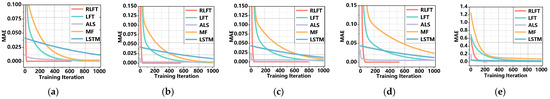

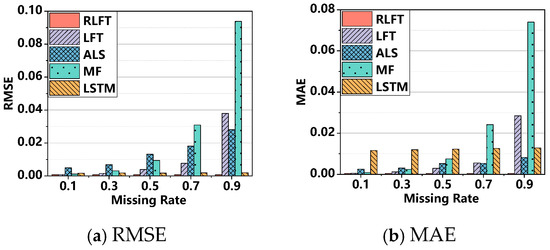

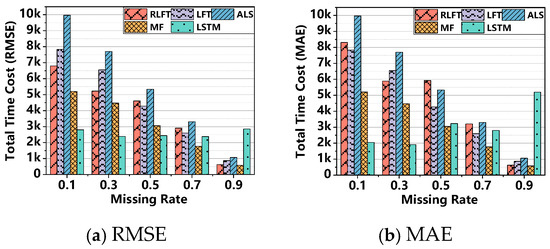

Figure 2 and Figure 3 present the training curves for all models, while Figure 4, Figure 5 and Figure 6 display the comparative results of RMSE, MAE, final iteration counts, and computational time costs across all models. Table 5 summarizes the RMSE and MAE performance metrics along with the corresponding final iteration numbers for each model. Table 6 provides detailed records of the computational time costs for all evaluated models.

Figure 2.

Training curves of RMSE for all models with varying missing rates. (a) Missing rate = 0.1; (b) missing rate = 0.3; (c) missing rate = 0.5; (d) missing rate = 0.7; (e) missing rate = 0.9.

Figure 3.

Training curves of MAE for all models with varying missing rates. (a) Missing rate = 0.1; (b) missing rate = 0.3; (c) missing rate = 0.5; (d) missing rate = 0.7; (e) missing rate = 0.9.

Figure 4.

RMSE and MAE performance of all models across different missing rates.

Figure 5.

Final iteration counts required by all models to achieve minimum RMSE and MAE values under different missing rates.

Figure 6.

Time cost of all models across different missing rates.

Table 5.

Minimum RMSE/MAE values and convergence iteration counts for all models.

Table 6.

Time cost values and convergence iteration counts for all models.

Based on these results, we observe that, within acceptable experimental error margins, the imputation accuracy of each model decreases as the missing rate increases, indicating that sparser data make imputation more challenging. The key findings are as follows:

- The RLFT model achieves higher imputation accuracy than its baseline LFT model. As shown in Figure 4 and Table 5, RLFT attains minimum RMSE values of 7.220 × 10⁻⁴, 7.222 × 10⁻⁴, 7.268 × 10⁻⁴, 7.276 × 10⁻⁴, and 7.303 × 10⁻⁴ at missing rates of 0.1, 0.3, 0.5, 0.7, and 0.9, respectively, representing reductions of 13.29%, 54.69%, 81.57%, 90.55%, and 98.08% compared to LFT’s minimum RMSE. Similarly, RLFT’s minimum MAE values are 4.636 × 10⁻⁴, 4.647 × 10⁻⁴, 4.665 × 10⁻⁴, 4.675 × 10⁻⁴, and 4.709 × 10⁻⁴, showing improvements of 21.12%, 60.40%, 84.31%, 91.58%, and 98.35% over LFT.

- RLFT converges faster than the baseline LFT. Figure 5 and Table 5 show that RLFT requires only 507, 493, 616, 473, and 226 iterations to reach its minimum RMSE and 620, 556, 793, 523, and 228 iterations to reach its minimum MAE at the respective missing rates. In contrast, LFT runs to the 1000-iteration maximum before reaching its minimum RMSE and MAE in all five missing rate scenarios.

- Compared with the other models:

  - RLFT achieves higher imputation accuracy than the CP decomposition-based ALS model. For RMSE, RLFT reduces values by 85.52%, 89.44%, 94.48%, 95.98%, and 97.40% at the respective missing rates compared to ALS. For MAE, the reductions are 81.71%, 84.69%, 91.11%, 91.18%, and 94.27%, respectively. Moreover, RLFT converges faster than ALS, which runs to the 1000-iteration maximum before reaching its minimum RMSE and MAE at every missing rate. These results indicate the advantage of latent feature decomposition over traditional CP decomposition for missing data imputation;

  - RLFT shows better imputation accuracy than the MF model. The RMSE improvements are 39.38%, 76.85%, 92.34%, 97.65%, and 99.22%, while MAE shows reductions of 49.15%, 80.96%, 93.79%, 98.07%, and 99.36% at the respective missing rates. RLFT also converges faster than MF, which needs 1000 iterations to achieve its minimum RMSE and MAE in all cases. These results indicate that latent feature decomposition outperforms matrix factorization, particularly on complex data structures;

  - RLFT exhibits superior accuracy compared to the LSTM model. It reduces RMSE by 58.11%, 59.84%, 59.33%, 61.95%, and 62.58% and MAE by 96.00%, 96.12%, 96.21%, 96.30%, and 96.34% across the missing rates. Furthermore, RLFT converges more quickly than LSTM, which requires 1000 iterations to reach its optimal RMSE and MAE values. This evidence suggests that RLFT captures spatiotemporal features more effectively than the LSTM model.

- The RLFT model offers a favorable balance between computational cost and accuracy. As shown in Figure 6 and Table 6, RLFT has significantly lower time costs than (1) the ALS model at all missing rates; (2) the LFT model at missing rates of 0.1, 0.3, and 0.9; and (3) the LSTM model at a missing rate of 0.9. However, RLFT requires more computation time than (1) the MF model, (2) the LFT model at missing rates of 0.5 and 0.7, and (3) the LSTM model at missing rates of 0.1, 0.3, 0.5, and 0.7. Notably, RLFT converges in only 507, 493, 616, 473, and 226 iterations across the missing rates, whereas all other models reach the preset maximum of 1000 iterations, reflecting RLFT’s faster convergence in terms of iterations. Furthermore, RLFT outperforms all comparative models in both RMSE and MAE. Therefore, when convergence speed and prediction accuracy are considered together, RLFT delivers more reliable predictions in fewer iterations, which may translate into substantial savings in training time on large-scale or complex datasets.

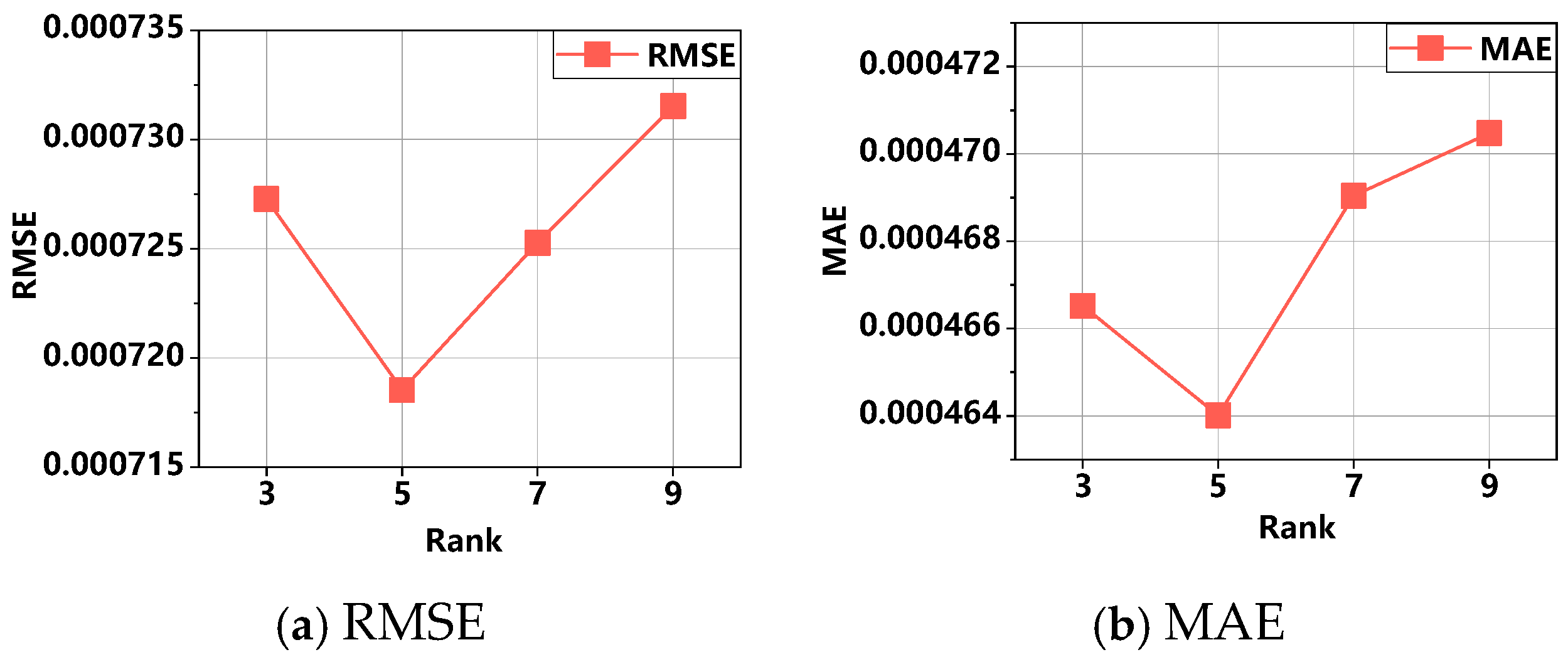

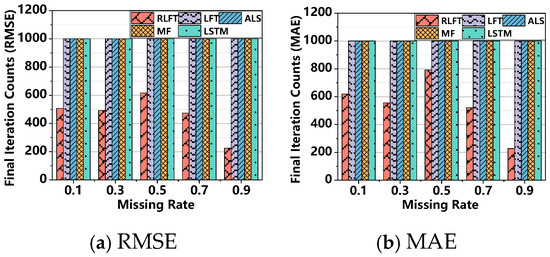

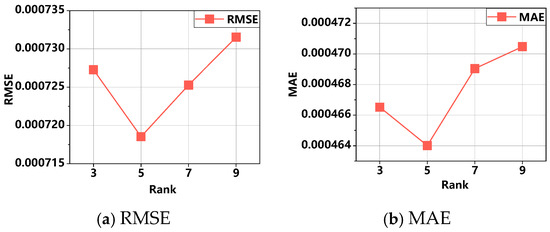

4.3. Effect of R

For missing data imputation on HDI tensors, the LF dimension R affects the performance of LFT-based models. This section examines the impact of R on the predictive accuracy of the RLFT model. Figure 7 shows how the RMSE and MAE of the RLFT model vary as R increases from 3 to 9.

Figure 7.

The effect of R on the predictive accuracy of the RLFT model.

The key findings are as follows:

The predictive accuracy of the RLFT model exhibits a distinct trend as the rank R varies. As depicted in Figure 7, when the rank increases from 3 to 5, both the RMSE and MAE of the model decrease. Specifically, at rank 3, the RMSE is 7.273 × 10⁻⁴ and the MAE is 4.665 × 10⁻⁴, whereas at rank 5, the RMSE falls to 7.185 × 10⁻⁴ and the MAE to 4.640 × 10⁻⁴, corresponding to accuracy improvements of 1.21% and 0.54%, respectively. However, as the rank increases from 7 to 9, both RMSE and MAE trend upward. This suggests that the model achieves its best fit at rank 5 and that ranks that are either too high or too low may degrade performance. Consequently, selecting an appropriate rank is crucial for optimizing the RLFT model in practical applications.
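The improvements quoted for rank 5 versus rank 3 follow the usual relative-reduction formula, 100 × (baseline − proposed) / baseline, and the same formula is assumed for the percentage comparisons in Section 4.2. The helper name below is hypothetical; the inputs are the values reported above.

```python
def relative_reduction(baseline, proposed):
    """Percentage reduction of an error metric relative to a baseline value."""
    return 100.0 * (baseline - proposed) / baseline


# Rank 3 (baseline) vs. rank 5, values from Figure 7.
print(round(relative_reduction(7.273e-4, 7.185e-4), 2))  # RMSE: 1.21
print(round(relative_reduction(4.665e-4, 4.640e-4), 2))  # MAE: 0.54
```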

5. Conclusions

This paper proposes an RLFT model that incorporates the RMSprop mechanism to achieve rapid convergence and adaptive learning rate adjustment during training. Using structural state data from a scaled bridge model as test cases, the experimental results show that the RLFT model outperforms the comparison models (LFT, ALS, MF, and LSTM) in both imputation accuracy and convergence speed, demonstrating its effectiveness in handling complex data structures and capturing spatiotemporal features.

However, several aspects of our work require further improvement:

- Computational efficiency optimization: Although the RLFT model shows certain advantages in time consumption, there remains room for enhancement.

- Manual hyperparameter adjustment: The model currently requires manual tuning for different missing rates or datasets, indicating the need for more efficient parameter optimization methods.

To address these limitations, future work will focus on further model optimization to achieve more efficient training and more accurate predictions. The imputation of continuously missing data is another research direction worth investigating.

In future work, we will investigate the effectiveness of RLFT in handling consecutively missing data. Although RLFT performs well on randomly missing data, data loss in SHM is not always random, so its performance in such cases needs to be tested. We will design experiments that mimic non-random missing data scenarios to evaluate RLFT’s imputation accuracy and convergence speed and to assess how these affect the final monitoring results. We also plan to incorporate bias terms into the RLFT model to account for measurement errors and uncertainties, thereby enhancing its robustness.

In addition, we will evaluate the applicability of RLFT to online or real-time SHM applications. Because SHM systems typically operate in real time, the runtime performance of the method is crucial. We will explore how RLFT performs in real-time data processing, including its adaptability to data streams, processing speed, and stability and accuracy in real-time monitoring environments.

We plan to conduct integrated tests with existing online monitoring systems to verify the practicality and efficiency of RLFT. This will involve making necessary algorithmic adjustments to ensure that RLFT can operate efficiently in resource-constrained real-time systems and provide timely monitoring feedback. Through this work, we hope to further promote the application of RLFT in the field of SHM and offer more guidance and references for future research and practice.

Funding

This research was supported by the National Natural Science Foundation of China (62205039).

Data Availability Statement

Data will be made available by the authors upon request.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

- Du, J.; Hu, M.; Zhang, W. Missing data problem in the monitoring system: A review. IEEE Sens. J. 2020, 20, 13984–13998.

- Zhang, L.; Qiu, G.; Chen, Z. Structural health monitoring methods of cables in cable-stayed bridge: A review. Measurement 2021, 168, 108343.

- Zhang, J.; Huang, M.; Wan, N.; Deng, Z.; He, Z.; Luo, J. Missing measurement data recovery methods in structural health monitoring: The state, challenges and case study. Measurement 2024, 231, 114528.

- Salehi, H.; Gorodetsky, A.; Solhmirzaei, R.; Jiao, P. High-dimensional data analytics in civil engineering: A review on matrix and tensor decomposition. Eng. Appl. Artif. Intell. 2023, 125, 106659.

- Momeni, H.; Ebrahimkhanlou, A. High-dimensional data analytics in structural health monitoring and non-destructive evaluation: A review paper. Smart Mater. Struct. 2022, 31, 043001. Available online: https://iopscience.iop.org/article/10.1088/1361-665X/ac50f4/meta (accessed on 3 June 2025).

- Gómez-Sánchez, A.; Vitale, R.; Ruckebusch, C.; de Juan, A. Solving the missing value problem in PCA by Orthogonalized-Alternating Least Squares (O-ALS). Chemom. Intell. Lab. Syst. 2024, 250, 105153.

- Tzoumpas, K.; Estrada, A.; Miraglio, P.; Zambelli, P. A data filling methodology for time series based on CNN and (Bi) LSTM neural networks. IEEE Access 2024, 12, 31443–31460.

- Yu, Y.; Li, V.O.K.; Lam, J.C.K.; Chan, K.; Zhang, Q. CTDI: CNN-Transformer-Based Spatial-Temporal Missing Air Pollution Data Imputation. IEEE Trans. Big Data 2025.

- Sun, Z.; Wang, X.; Han, T.; Huang, H.; Huang, X.; Wang, L.; Wu, Z. Pipeline deformation monitoring based on long-gauge FBG sensing system: Missing data recovery and deformation calculation. J. Civ. Struct. Health Monit. 2025, 1–21.

- Jiang, H.; Sun, M.; Cai, J.; Wu, H.; Zhao, R.; Xing, Y.; Wang, F.; Yu, W. Nonlinear time-dependent mapping model for SHM data utilizing GAN-based imputation and LSTM for uncertainty quantification. Structures 2025, 72, 108290.

- Khanahmadi, M. An effective vibration-based feature extraction method for single and multiple damage localization in thin-walled plates using one-dimensional wavelet transform: A numerical and experimental study. Thin-Walled Struct. 2024, 204, 112288.

- Luo, X.; Wang, D.; Zhou, M.C.; Yuan, H. Latent factor-based recommenders relying on extended stochastic gradient descent algorithms. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 916–926.

- Chen, M.; Wang, R.; Qiao, Y.; Luo, X. A generalized Nesterov’s accelerated gradient-incorporated non-negative latent-factorization-of-tensors model for efficient representation to dynamic QoS data. IEEE Trans. Emerg. Top. Comput. Intell. 2024.

- Chen, M.; Tao, L.; Lou, J.; Luo, X. Latent-factorization-of-tensors-incorporated battery cycle life prediction. IEEE/CAA J. Autom. Sin. 2024, 12, 633–635.

- Wu, H.; Luo, X.; Zhou, M.C. Advancing non-negative latent factorization of tensors with diversified regularization schemes. IEEE Trans. Serv. Comput. 2020, 15, 1334–1344.

- Chen, M.; He, C.; Luo, X. MNL: A highly-efficient model for large-scale dynamic weighted directed network representation. IEEE Trans. Big Data 2022, 9, 889–903.

- Ren, P.; Chen, X.; Sun, L.; Sun, H. Incremental Bayesian matrix/tensor learning for structural monitoring data imputation and response forecasting. Mech. Syst. Signal Process. 2021, 158, 107734.

- Yi, L.; Ding, Y.; Hou, J.; Yue, Z.; Zhao, H. Structural health monitoring data cleaning based on Bayesian robust tensor learning. Struct. Health Monit. 2023, 22, 2169–2192.

- Anaissi, A.; Makki Alamdari, M.; Rakotoarivelo, T.; Khoa, N.L.D. A tensor-based structural damage identification and severity assessment. Sensors 2018, 18, 111.

- Anaissi, A.; Suleiman, B.; Zandavi, S.M. Online tensor-based learning model for structural damage detection. ACM Trans. Knowl. Discov. Data (TKDD) 2021, 15, 1–18.

- Chen, M.; Wu, H. Efficient representation to dynamic QoS data via momentum-incorporated biased nonnegative and adaptive latent factorization of tensors. In Proceedings of the 2021 International Conference on Cyber-Physical Social Intelligence (ICCSI), Beijing, China, 18–20 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- Chen, M.; Wu, H.; He, C.; Chen, S. Momentum-incorporated latent factorization of tensors for extracting temporal patterns from QoS data. In Proceedings of the 2019 IEEE International Conference on Systems, Man and Cybernetics (SMC), Bari, Italy, 6–9 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1757–1762.

- Wang, Q.; Chen, M.; Shang, M.; Luo, X. A momentum-incorporated latent factorization of tensors model for temporal-aware QoS missing data prediction. Neurocomputing 2019, 367, 299–307.

- Luo, X.; Chen, M.; Wu, H.; Liu, Z.; Yuan, H.; Zhou, M. Adjusting learning depth in nonnegative latent factorization of tensors for accurately modeling temporal patterns in dynamic QoS data. IEEE Trans. Autom. Sci. Eng. 2021, 18, 2142–2155.

- Chen, M.; Luo, X. Efficient representation to dynamic QoS data via generalized Nesterov’s accelerated gradient-incorporated biased non-negative latent factorization of tensors. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 576–581.

- Chen, M.; Wu, H. A brief review on data-driven battery health estimation methods for energy storage systems. In Proceedings of the 2022 IEEE International Conference on Networking, Sensing and Control (ICNSC), Shanghai, China, 15–18 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Chen, M.; Ma, G.; Liu, W.; Zeng, N.; Luo, X. An overview of data-driven battery health estimation technology for battery management system. Neurocomputing 2023, 532, 152–169.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).