Abstract

For large-scale multi-objective optimization, it is particularly challenging for evolutionary algorithms to converge to the Pareto front. Most existing multi-objective evolutionary algorithms (MOEAs) handle convergence and diversity in a mutually dependent manner during evolution, so a degradation in one may cause the other to deteriorate as well. This paper proposes a two-stage multi-objective optimization algorithm based on decision variable clustering (LSMOEA-VT) to solve large-scale optimization problems. In LSMOEA-VT, decision variables are divided into two categories, and dimensionality reduction is used to optimize the variables that affect convergence. An interdependence analysis then breaks the convergence variables down into multiple, more tractable subcomponents. Furthermore, a non-dominated dynamic weight aggregation method is used to enhance the diversity of the population. To evaluate the proposed algorithm, we performed extensive comparative experiments against four optimization algorithms on a diverse set of benchmark problems, including eight multi-objective optimization problems and nine large-scale optimization problems. The experimental results show that the proposed algorithm is competitive with the compared algorithms and performs well on a number of the test functions.

1. Introduction

In the field of evolutionary computation, early research focused mainly on single-objective optimization problems; however, as real-world problems grow in complexity, they frequently involve multiple conflicting objectives and are therefore classified as multi-objective optimization problems (MOPs). To tackle these problems, researchers have developed many evolutionary algorithms, such as NSGA-II [1], MOEA/D [2], and NSGA-III [3].

Empirical evidence demonstrates that these algorithms yield good results in both benchmark scenarios and practical applications [4,5,6]. However, real-world problems in areas such as engineering design [7], traffic control [8], and groundwater monitoring [9] are becoming increasingly complex, and many of them involve a very large number of decision variables. Because of the curse of dimensionality, the efficacy and efficiency of prevailing optimization methods decline significantly as the dimensionality grows. Generally speaking, there are two reasons for this phenomenon: convergence pressure and diversity management. The former arises because, as the number of decision variables grows, the volume of the search space increases exponentially, leading to a dramatic increase in computational cost. The latter stems from the sparse distribution of candidate solutions within the objective space, which undermines conventional diversity maintenance strategies.

To address this category of problems, a “divide and conquer” strategy, first proposed in [10], is commonly employed. The core idea is to partition the decision variables of a large-scale multi-objective problem (LSMOP) into multiple lower-dimensional groups and then optimize each sub-problem independently.

In cooperative coevolution, the choice of decomposition strategy is of paramount importance. Ideally, variables that interact are grouped together, while independent variables are placed in separate groups. Currently, there are two predominant grouping strategies: fixed grouping [11,12,13] and dynamic grouping [14,15]. In a fixed grouping strategy, the composition of the groups remains unchanged throughout the optimization process, as exemplified by LMEA [16]. Conversely, in dynamic grouping strategies, the grouping changes as the optimization proceeds, as in CCGDE3. The quality of the grouping significantly influences the performance of the algorithm. For single-objective optimization problems [17,18], the dependency relationships among variables are comparatively straightforward, so grouping is more tractable. In contrast, multi-objective problems involve intricate dependencies [19,20,21] not only among variables but also across multiple objectives, which compounds the difficulty. Consequently, grouping decision variables well remains a formidable challenge.

When addressing multi-objective optimization problems, it is imperative to account for the interrelationships among variables. Researchers have undertaken extensive work in this domain, with two of the most seminal algorithms being the multi-objective evolutionary algorithm based on decision variable analysis (MOEA/DVA) [22] and the evolutionary algorithm based on decision variable clustering (LMEA) [16]. Both algorithms categorize decision variables into convergence variables and diversity variables. Convergence variables are associated with the proximity of the population to the true Pareto front, whereas diversity variables govern the distribution of the population within the objective space.

This paper proposes a two-stage large-scale optimization algorithm based on clustering for large-scale multi-objective optimization problems. The main contributions are summarized as follows.

- (1) The decision variable clustering strategy categorizes all variables into two distinct groups: those related to diversity and those related to convergence, thereby facilitating the evolutionary process. This approach employs the angle between the convergence direction and the sampled solutions as a distinguishing feature and clusters these features using the k-means method. Variables associated with smaller angles contribute more to convergence, whereas those with larger angles play a more substantial role in enhancing diversity.

- (2) By employing the non-dominated dynamic weight aggregation method, Pareto optimal solutions are identified in multi-objective optimization problems (MOPs) with varying Pareto front shapes. Subsequently, the optimization problem involving reference lines is addressed by leveraging these solutions within the Pareto optimal subspace, thereby resolving the population diversity issue.

- (3) To assess the efficacy of the proposed algorithm, comprehensive empirical evaluations were performed on a range of benchmark problems. These evaluations involved comparisons with several advanced MOEAs designed for multi-objective optimization problems and for large-scale optimization problems. The experimental results indicate that the proposed algorithm can effectively solve large-scale optimization problems with numerous decision variables.

2. Background

2.1. Multi-Objective Optimization

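Optimization problems with multiple mutually conflicting objectives are called MOPs. A MOP can be described by Equation (1):

$$\min_{\mathbf{x}} \; F(\mathbf{x}) = \left(f_1(\mathbf{x}), f_2(\mathbf{x}), \ldots, f_M(\mathbf{x})\right), \quad \mathbf{x} = (x_1, x_2, \ldots, x_D) \in \Omega \tag{1}$$

where $\mathbf{x}$ represents a candidate solution in the decision space $\Omega$, D is the dimension of the decision vector, and M is the dimension of the objective space, i.e., the number of objectives in the MOP. When D ≥ 100, the MOP is considered a large-scale multi-objective optimization problem.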

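In multi-objective optimization problems, there are a large number of optimal solutions that do not dominate one another. Assume there are two candidate solutions $\mathbf{x}_1$ and $\mathbf{x}_2$ in the decision space $\Omega$. When they satisfy Equation (2), $\mathbf{x}_1$ Pareto dominates $\mathbf{x}_2$, denoted as $\mathbf{x}_1 \prec \mathbf{x}_2$. For a minimization MOP, the dominance relationship between the two solutions is defined as follows:

$$\forall i \in \{1, \ldots, M\}: f_i(\mathbf{x}_1) \le f_i(\mathbf{x}_2) \quad \text{and} \quad \exists j \in \{1, \ldots, M\}: f_j(\mathbf{x}_1) < f_j(\mathbf{x}_2) \tag{2}$$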
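The dominance relation for a minimization problem can be illustrated with a short Python sketch. The function names `dominates` and `non_dominated` are illustrative only and are not taken from the paper; the filter is a naive quadratic scan, chosen for clarity rather than speed.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Return True if objective vector a Pareto-dominates b (minimization)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def non_dominated(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the points that no other point in the list dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    candidates = [[1.0, 3.0], [2.0, 2.0], [3.0, 3.0]]
    print(non_dominated(candidates))
```

In this example the third vector is dominated by the second, while the first two are mutually non-dominated and would both belong to the approximated Pareto front.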

Generally speaking, it is difficult to obtain all Pareto optimal solutions. Therefore, we pursue three goals: (1) enhance the convergence ability of the algorithm so that all final solutions are close to the true Pareto front (PF); (2) improve the diversity of the algorithm so that the objective vectors are well distributed over the PF without sacrificing convergence speed; (3) minimize the waste of computing resources while ensuring convergence and diversity. In the past few years, multi-objective optimization has attracted considerable attention, and many researchers are dedicated to this area.

2.2. Large-Scale Multi-Objective Optimization

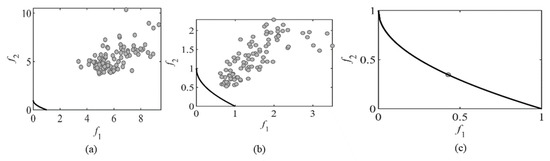

In practical applications, many multi-objective optimization problems contain thousands of decision variables, and existing multi-objective evolutionary algorithms cannot solve them satisfactorily. Experience has shown that as the number of variables in the search space increases, the effectiveness of most MOEAs decreases sharply. In LSMOEAs based on decision variable grouping, the grouping result directly affects the final solution quality. Although existing algorithms achieve satisfactory results in most cases, they lose solution diversity on some problems. In Figure 1, the black curve represents the ideal Pareto front of the bi-objective optimization problem BT6, and the gray points represent the feasible solutions obtained by the algorithm at the current optimization stage. As the algorithm evolves, the solutions gradually concentrate on a local region of the Pareto front, which shows that the diversity of the population is significantly lost during evolution.

Paper [15] first applied the cooperative coevolution (CC) framework to evolutionary algorithms for solving large-scale multi-objective optimization problems. More recently, Ma et al. proposed a multi-objective evolutionary algorithm for large-scale multi-objective problems, called MOEA/DVA [22]. The algorithm uses a method called decision variable analysis to divide all decision variables into three categories by perturbing the value of each variable. If the solutions generated by the perturbation are all mutually non-dominated, the variable is called a position variable. If the generated solutions all dominate or are dominated by one another, the variable is called a distance variable. Otherwise, the variable is called a mixed variable. Zhang et al. [16] proposed another evolutionary algorithm based on decision variable clustering. It first clusters all decision variables into two categories, one affecting convergence and the other related to diversity. Then, during optimization, a different strategy is adopted for each category.

Figure 1.

Examples of the loss of population diversity in the LSMOEA solution. (a) Early stage of evolution; (b) Middle stage of evolution; (c) Late stage of evolution.

In addition, there are dynamic grouping strategies. In [23], Wolfe first designed a decomposition pool containing different group sizes. One group size is then selected from the pool, and the decision variables are divided into groups according to the selected size. This method can save a lot of computing resources. Another strategy is to transform the original LSMOP into a simpler MOP with relatively few decision variables. The weighted optimization framework (WOF) [24] is representative of this category. In WOF, decision variables are divided into several groups, each of which is assigned a weight variable. Optimizing the weight variables can therefore be regarded as optimizing a subproblem in a subspace of the original decision space. Another typical algorithm is the large-scale multi-objective optimization framework based on problem reformulation (LSMOF) [25]. The core idea of LSMOF is to transform the original LSMOP into a low-dimensional single-objective optimization problem through problem reformulation. In the reformulated problem, the decision space is reduced to the dimension of the weight variables and the objective space is reduced as well, so a set of good solutions can be obtained efficiently.

There are also other strategies. The recently proposed multi-objective particle swarm optimization algorithm based on a competition mechanism (CMOPSO) [26] uses neither a grouping strategy nor decision variable analysis; instead, it uses a particle swarm optimizer (PSO) to enhance the diversity of the population and a pairwise competition strategy [27] to solve LSMOPs. Zhang et al. [28] established an information feedback model (IFM) to generate promising offspring. IM-MOEA [29] builds an inverse model based on a Gaussian process that maps solutions from the objective space to the decision space, so that offspring can be generated by sampling the objective space.

3. Proposed Algorithm

In this section, we propose a two-stage large-scale optimization algorithm based on decision variable clustering, named LSMOEA-VT, to solve large-scale multi-objective optimization problems. The main framework of LSMOEA-VT is shown in Algorithm 1.

| Algorithm 1 Main Framework of LSMOEA-VT |
| --- |
| 1. P ← Initialize(N); |
| 2. [DV, CV] ← VariableClustering(P, nSel, nPer); |
| 3. subCVs ← InteractionAnalysis(P, CV, nCor); |
| 4. while termination criterion not fulfilled do |
| 5. P′ ← ConvergenceOptimization(P, subCVs); |
| 6. Q ← Find some Pareto-optimal solutions of the given problem; |
| 7. Ω ← Learn the subspace based on Q; |
| 8. Update the given reference lines; |
| 9. P ← Diversity maintaining with the updated reference lines in Ω; |
| 10. Return P. |

In the first stage of the algorithm, we use clustering to divide all decision variables into two categories, one related to convergence and the other related to diversity, so that each group can be treated specifically. Because the number of convergence-related variables after clustering may still be relatively large, we conduct an interdependence analysis on these variables to identify the interactions between them. In the second stage, we search for a set of Pareto optimal solutions of the given large-scale problem, learn the Pareto-optimal subspace (PoS) from the solutions found, and finally obtain a solution set with both convergence and diversity by updating the given reference lines. Several crucial components of LSMOEA-VT are described in the following subsections.

3.1. Clustering of Decision Variables

Algorithm 2 delineates the methodology for clustering decision variables. Consider a bi-objective optimization problem in which each individual comprises five decision variables, denoted as x1, x2, x3, x4, and x5. First, two candidate solutions are randomly selected from the population. Each variable of these selected solutions is then perturbed ten times. The objective vectors of the perturbed solutions are normalized, and a line L is fitted to them. The normal of the hyperplane f1 + ⋯ + fm = 1 represents the direction of convergence. Following this, the angle between the fitting line L and the hyperplane normal is calculated. Variables exhibiting larger angles contribute more to diversity, whereas those with smaller angles contribute more to convergence. The more candidate solutions are selected, the more angles are generated for each variable; therefore, to achieve more precise results, it is advisable to select as many solutions as feasible. Finally, the k-means clustering method is employed to partition all variables in the decision space into two groups. Variables with smaller average angles are categorized as convergence-related variables, while the remaining variables are classified as diversity-related variables.

The clustering is performed once, offline, before the optimization loop. In high-dimensional decision spaces, dynamic clustering during optimization would introduce significant computational overhead, especially when the objective functions are expensive to evaluate. Offline clustering preprocesses the decision space, reduces the computational cost during optimization, and provides a stable and consistent framework for the interaction analysis. Dynamic clustering, while potentially more adaptive, could introduce variability in the sensitivity analysis because cluster boundaries change during optimization. By performing clustering offline, we establish a fixed reference framework that facilitates clearer interpretation of the relationships between decision variables and objective functions.

| | Algorithm 2 Clustering of Decision Variables |
| --- | --- |
| Input: | pop, number of candidate solutions SelNum, number of perturbations PerNum |
| Output: | [Diver, Conver] |
| 1. | for i = 1:n do |
| 2. | C ← Choose SelNum solutions from pop randomly; |
| 3. | for j = 1:SelNum do |
| 4. | Perturb the i-th variable of C[j] PerNum times to generate a sample set SP, and then normalize it; |
| 5. | Generate a line L for SP in objective space; |
| 6. | Angle[i][j] ← the angle between L and the normal line of the hyperplane; |
| 7. | MSE[i][j] ← the mean square error of the fitting; |
| 8. | end for |
| 9. | end for |
| 10. | CV ← {mean(MSE[i]) < 1 × 10⁻²}; |
| 11. | [C1, C2] ← apply k-means to group all variables into two categories adopting Angle as feature; |
| 12. | if CV ∩ C1 ≠ ∅ and CV ∩ C2 ≠ ∅ then |
| 13. | CV ← CV ∩ C, where C is either C1 or C2, whichever has the smaller average Angle; |
| 14. | end if |
| 15. | DV ← {j ∉ CV} |
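The angle-based clustering above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the authors' MATLAB implementation: the toy bi-objective function `evaluate`, the min-max normalization, the SVD line fit, and the small deterministic 1-D two-means routine are all choices made here for brevity, and the MSE filter of line 10 is omitted.

```python
import numpy as np


def evaluate(x):
    """Toy bi-objective problem (an assumption for this sketch):
    x[0] moves a solution along the front, x[1:] control convergence."""
    g = np.sum((x[1:] - 0.5) ** 2)
    return np.array([x[0] + g, 1.0 - x[0] + g])


def variable_angles(pop, n_sel, n_per, rng):
    """Angle (degrees) between each fitted line and the hyperplane normal."""
    n_var = pop.shape[1]
    m = evaluate(pop[0]).size
    normal = np.ones(m) / np.sqrt(m)  # normal of f1 + ... + fm = 1
    angles = np.zeros((n_var, n_sel))
    for i in range(n_var):
        chosen = pop[rng.choice(len(pop), size=n_sel, replace=False)]
        for j, base in enumerate(chosen):
            samples = np.tile(base, (n_per, 1))
            samples[:, i] = rng.random(n_per)  # perturb only variable i
            objs = np.array([evaluate(s) for s in samples])
            span = objs.max(axis=0) - objs.min(axis=0)
            objs = (objs - objs.min(axis=0)) / np.where(span > 0, span, 1.0)
            centered = objs - objs.mean(axis=0)
            # First right singular vector = direction of the fitted line L.
            _, _, vt = np.linalg.svd(centered, full_matrices=False)
            cos = abs(vt[0] @ normal)
            angles[i, j] = np.degrees(np.arccos(np.clip(cos, 0.0, 1.0)))
    return angles


def two_means(values, iters=50):
    """Deterministic 1-D k-means with k = 2, seeded at the min and max."""
    centers = np.array([values.min(), values.max()], dtype=float)
    labels = np.zeros(len(values), dtype=int)
    for _ in range(iters):
        labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
        for k in range(2):
            if np.any(labels == k):
                centers[k] = values[labels == k].mean()
    return labels, centers


rng = np.random.default_rng(0)
pop = rng.random((20, 5))
angles = variable_angles(pop, n_sel=2, n_per=10, rng=rng)
labels, centers = two_means(angles.mean(axis=1))
conv_label = int(np.argmin(centers))  # the smaller mean angle marks convergence
cv = [i for i in range(pop.shape[1]) if labels[i] == conv_label]
dv = [i for i in range(pop.shape[1]) if labels[i] != conv_label]
print("convergence variables:", cv, "diversity variables:", dv)
```

On this toy problem, perturbing x[0] moves solutions along the front (a line roughly perpendicular to the normal), while perturbing any other variable moves them along the normal, so the two groups separate cleanly.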

3.2. Interaction Analysis

The number of decision variables in large-scale optimization is very large, which slows convergence toward the true PF. Therefore, the interactions between convergence variables are tested in Algorithm 3, and according to these interactions the variables are further divided into subgroups. Interacting variables are placed in the same subgroup, and there is no interaction between variables of different subgroups. In brief, a pair of decision variables xi and xj is considered dependent (interacting) when changing xi produces opposite changes in the comparison of solutions for different values of xj. In Algorithm 3, we first initialize an empty set of interacting-variable subgroups and then assign the convergence-related variables to subgroups according to the pairwise interactions between variables. There are two extreme cases. In one, there is only one subgroup, which means that all convergence variables interact and are fully nonseparable. In the other, the convergence variables are completely separable, and the number of subgroups is |CV|.

| | Algorithm 3 Interaction Analysis |
| --- | --- |
| Input: | pop, Conver, number of chosen solutions CorNum |
| Output: | subCVs |
| 1. | set subCVs as ∅; |
| 2. | for all the ν ∈ CV do |
| 3. | set CorSet as ∅; |
| 4. | for all the Group ∈ subCVs do |
| 5. | for all the u ∈ Group do |
| 6. | flag ← False; |
| 7. | for i = 1:CorNum do |
| 8. | Choose an individual p from pop randomly; |
| 9. | if ν has interaction with u in individual p then |
| 10. | flag ← True; |
| 11. | CorSet = {Group} ∪ CorSet; |
| 12. | Break; |
| 13. | end if |
| 14. | end for |
| 15. | if flag is True then |
| 16. | Break; |
| 17. | end if |
| 18. | end for |
| 19. | end for |
| 20. | if CorSet = ∅ then |
| 21. | subCVs = subCVs ∪ {{ν}}; |
| 22. | else |
| 23. | subCVs = subCVs \ CorSet; |
| 24. | Group ← all variables in CorSet and ν; |
| 25. | subCVs = subCVs ∪ {Group}; |
| 26. | end if |
| 27. | end for |
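The grouping loop of Algorithm 3 can be sketched in Python as follows. The pairwise test is an assumption, since the formal interaction criterion is not reproduced above: it uses a common sampling-based check in which v and u are flagged as interacting if changing v lowers some objective for one value of u and raises it for another. The toy objective `evaluate`, the tolerance `eps`, and all function names are hypothetical.

```python
import numpy as np


def evaluate(x):
    """Toy problem (assumption): x[0] and x[1] are coupled, x[2] is separable."""
    coupled = (x[0] - x[1]) ** 2
    return np.array([coupled + x[2] ** 2, coupled + (x[2] - 1.0) ** 2])


def interacts(i, j, p, rng, eps=1e-9):
    """Sampling-based check: does changing x_i have opposite effects on some
    objective for two different values of x_j?"""
    a2, b2 = rng.random(2)  # alternative values for x_i and x_j

    def f(xi, xj):
        x = p.copy()
        x[i], x[j] = xi, xj
        return evaluate(x)

    d1 = f(a2, p[j]) - f(p[i], p[j])  # effect of changing x_i at x_j = p[j]
    d2 = f(a2, b2) - f(p[i], b2)      # effect of changing x_i at x_j = b2
    opposite = ((d1 < -eps) & (d2 > eps)) | ((d1 > eps) & (d2 < -eps))
    return bool(np.any(opposite))


def group_variables(cv, pop, n_cor, rng):
    """Merge each variable into every existing subgroup it interacts with."""
    sub_cvs = []
    for v in cv:
        linked = []
        for group in sub_cvs:
            found = any(
                interacts(v, u, pop[rng.integers(len(pop))], rng)
                for u in group
                for _ in range(n_cor)
            )
            if found:
                linked.append(group)
        if linked:
            sub_cvs = [g for g in sub_cvs if g not in linked]
            sub_cvs.append([u for g in linked for u in g] + [v])
        else:
            sub_cvs.append([v])
    return sub_cvs


rng = np.random.default_rng(1)
pop = rng.random((10, 3))
print(group_variables([0, 1, 2], pop, n_cor=50, rng=rng))
```

Because the check is sampling-based, a true interaction can be missed when `n_cor` is small; a larger `n_cor` reduces that risk at the cost of more function evaluations.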

3.3. Pareto Optimal Subspace Learning

Algorithm 4 describes the process of Pareto optimal subspace learning. First, the Pareto optimal solutions in Q are represented by the matrix $M \in \mathbb{R}^{\lvert P \rvert \times n}$, and M is then updated to M′ by calculating the mean of M and subtracting it (lines 2 and 3). Next, the eigenvalues of the covariance matrix of M′ are calculated (line 4). Then, the median of each column in M is calculated (line 10), and the corresponding elements of the lower and upper bounds of x are set to this median. Finally, the PoS is generated by taking all points within the updated lower and upper bounds (line 15).

| | Algorithm 4 Pareto-Optimal Subspace Learning |
| --- | --- |
| Input: | The Pareto-optimal solutions Q, a threshold value ε, the lower bound, the upper bound |
| Output: | The learned Pareto-optimal subspace Ω |
| 1. | Let a matrix $M \in \mathbb{R}^{\lvert P \rvert \times n}$ denote the solutions in Q; |
| 2. | mean ← Calculate the mean values of M; |
| 3. | M′ ← Update M by subtracting mean; |
| 4. | Calculate the eigenvalues of the covariance matrix of M′; |
| 5. | Record the positions of the eigenvalues in descending order; |
| 6. | … |
| 7. | while … < ε do |
| 8. | … |
| 9. | end |
| 10. | median ← Calculate the median value of each column in M; |
| 11. | for i ← j + 1 to n do |
| 12. | Corresponding component of the lower bound ← Copy the component of median; |
| 13. | Corresponding component of the upper bound ← Copy the component of median; |
| 14. | end |
| 15. | Ω ← All points within the updated lower and upper bounds; |
| 16. | Return Ω. |

3.4. Maintaining Population Diversity

Algorithm 5 shows the detailed steps for maintaining population diversity. First, the reference lines are drawn by finding the extreme points, the nadir point, and the ideal point (lines 1–6, 7–12, and 13–15 of the algorithm, respectively). Each extreme point is obtained by solving a single-objective problem in Ω, defined in Equation (3):

In short, for each objective axis we find the solution whose objective vector forms the smallest angle with that axis. The nadir point and the ideal point are then obtained by taking the maximum and minimum values, respectively, in each dimension of the extreme points, and the mapping of each reference line is realized by line 14 of the algorithm. The PF of a multi-objective optimization problem may not span the entire objective space, so that only some of the N reference lines pass through the PF; in that case, the final solution set loses diversity. The purpose of remapping the reference lines is therefore to keep as many of the N preset reference lines as possible within the PF so as to maintain the diversity of solutions. Finally, each solution is obtained by solving SOP (4) with a set of reference lines r. Because the reference lines in r are evenly distributed over the PF and each solution is the one closest in angle to its reference line, the obtained solutions have good diversity.

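| | Algorithm 5 Diversity Maintaining |
| --- | --- |
| Input: | The Pareto-optimal subspace Ω, the updated reference lines |
| Output: | P |
| 1. | Set of extreme points ← ∅ |
| 2. | for i ← 1 to m do |
| 3. | Weight vector ← Initialize a vector of m elements with zeros except for the i-th element with one; |
| 4. | Add to the set of extreme points the solution of the single-objective optimization problem |
| 5. | defined in Equation (3); |
| 6. | end |
| 7. | Nadir point ← ∅ |
| 8. | Ideal point ← ∅ |
| 9. | for i ← 1 to m do |
| 10. | i-th component of the nadir point ← The maximal element at the i-th component of the extreme points; |
| 11. | i-th component of the ideal point ← The minimal element at the i-th component of the extreme points; |
| 12. | end |
| 13. | for each reference line r do |
| 14. | r ← Map the reference line; |
| 15. | end |
| 16. | P ← ∅ |
| 17. | for i ← 1 to N do |
| 18. | Solve SOP (4) for the i-th reference line; |
| 19. | P ← P ∪ {the obtained solution}; |
| 20. | end |
| 21. | Return P. |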
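The geometric part of the diversity step (extreme points, ideal and nadir points, and angle-based association with reference lines) can be sketched in Python on a finite set of objective vectors. This is an illustration under assumptions, not the procedure of Algorithm 5 itself: the paper solves single-objective problems inside Ω, whereas this sketch only selects among already evaluated points, and rescaling the objectives by the ideal and nadir points stands in for the reference-line mapping, whose exact formula is not given above. All function names are hypothetical.

```python
import numpy as np


def _unit(a):
    """Normalize each row to unit length (zero rows stay zero)."""
    norms = np.linalg.norm(a, axis=1, keepdims=True)
    return a / np.maximum(norms, 1e-12)


def extreme_points(objs):
    """For each objective axis, pick the point with the smallest angle to it."""
    cosines = _unit(objs)  # column i holds cos(angle to axis i)
    return objs[cosines.argmax(axis=0)]


def ideal_and_nadir(extremes):
    """Ideal = per-dimension minimum, nadir = per-dimension maximum."""
    return extremes.min(axis=0), extremes.max(axis=0)


def pick_along_lines(objs, refs, ideal, nadir):
    """Index of the point closest in angle to each reference direction,
    after rescaling objectives to the [ideal, nadir] box (assumption)."""
    span = np.where(nadir > ideal, nadir - ideal, 1.0)
    scaled = (objs - ideal) / span
    cosines = _unit(scaled) @ _unit(refs).T  # shape: (points, lines)
    return cosines.argmax(axis=0)


objs = np.array([[0.0, 1.0], [0.25, 0.75], [0.5, 0.5], [0.75, 0.25], [1.0, 0.0]])
refs = np.array([[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]])
extremes = extreme_points(objs)
ideal, nadir = ideal_and_nadir(extremes)
chosen = pick_along_lines(objs, refs, ideal, nadir)
print(extremes, ideal, nadir, chosen)
```

Selecting one solution per reference direction is what spreads the final population; if the front covers only part of the objective space, rescaling by the ideal and nadir points keeps the directions pointed at the region where solutions actually exist.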

4. Experiments and Results

In order to verify the effectiveness of the proposed algorithm, we compare it with four other advanced algorithms, namely NSGA-III [3], MOEA/D [2], MOEA/DVA [22], and LMEA [16], on several standard test suites, namely DTLZ [30], WFG [31], UF [32], and LSMOP [33]. All experiments in this paper were run in MATLAB R2020b on a personal computer running 64-bit Windows 10 with an Intel(R) Core(TM) i7-4720HQ CPU and 12 GB of RAM. The computer is manufactured by ASUS in Suzhou, China.

4.1. Experimental Setting

For a fair comparison, all compared algorithms use their recommended parameter values. The parameters of the experiment are set as follows. All compared algorithms use simulated binary crossover (SBX) and polynomial mutation to generate offspring. For each test problem, the crossover probability is pc = 1.0; the crossover probability, a value between 0 and 1, is the probability that two parent individuals undergo crossover. The mutation rate is pm = 1/D, where D is the number of decision variables; it governs the probability that an individual gene is randomly altered. For all algorithms, the population size is set to 100. For test problems with 100, 500, and 1000 decision variables, the maximum numbers of function evaluations are set to 1,000,000, 5,000,000, and 15,000,000, respectively. Two widely used performance indicators, namely inverted generational distance (IGD) [34] and hypervolume (HV) [35], are used to evaluate the performance of the compared algorithms. IGD evaluates convergence and uniformity at the same time. It is calculated as follows:

$$\mathrm{IGD}(PS, PS^*) = \frac{\sum_{i=1}^{|PS^*|} d_i}{|PS^*|} \tag{5}$$

PS represents the Pareto optimal solution set in the population, and PS* represents the real Pareto optimal solution set. di represents the minimum Euclidean distance, after mapping to the objective space, between the i-th real solution in PS* and the candidate solutions in PS. The smaller the IGD value, the better the performance of the algorithm. HV evaluates the convergence and diversity of the algorithm quantitatively by calculating the volume of the union of the hypercubes spanned by the non-dominated solutions in the population and a reference point:

$$\mathrm{HV}(PS) = \mathrm{volume}\left(\bigcup_{i=1}^{|PS|} c_i\right) \tag{6}$$

ci represents the hypercube whose diagonal connects a non-dominated solution in PS and the reference point xref. The calculation method is shown in Equation (6). The larger the HV value, the closer the solutions of the algorithm are to the true PF, and the better the performance of the algorithm. All algorithms are independently run 20 times on each test problem to obtain statistical results.
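As a concrete illustration of the two indicators, the following Python sketch computes IGD for arbitrary dimensions and HV for the bi-objective case only. It is a minimal example, not the evaluation code used in the experiments: the function names are hypothetical, and the 2-D sweep is an assumption made to keep the hypervolume computation short (general M-objective HV requires a dedicated algorithm).

```python
import numpy as np


def igd(obtained, reference):
    """Mean distance from each reference-front point to its nearest
    obtained point (smaller is better)."""
    diff = reference[:, None, :] - obtained[None, :, :]
    dist = np.linalg.norm(diff, axis=2)  # shape: (reference, obtained)
    return dist.min(axis=1).mean()


def hv_2d(points, ref):
    """Area dominated by the points and bounded by ref (minimization, 2-D)."""
    pts = points[np.all(points <= ref, axis=1)]  # ignore points beyond ref
    pts = pts[np.argsort(pts[:, 0])]             # sweep in increasing f1
    area, best_f2 = 0.0, ref[1]
    for f1, f2 in pts:
        if f2 < best_f2:                         # point adds a new strip
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


true_front = np.array([[0.0, 1.0], [0.5, 0.5], [1.0, 0.0]])
found = np.array([[0.0, 1.0], [1.0, 0.0]])
print("IGD:", igd(found, true_front))
print("HV :", hv_2d(true_front, np.array([2.0, 2.0])))
```

In the example, the obtained set misses the middle of the front, so its IGD is positive even though both of its points lie exactly on the true front; this is why IGD reflects diversity as well as convergence.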

4.2. Experiments and Analysis

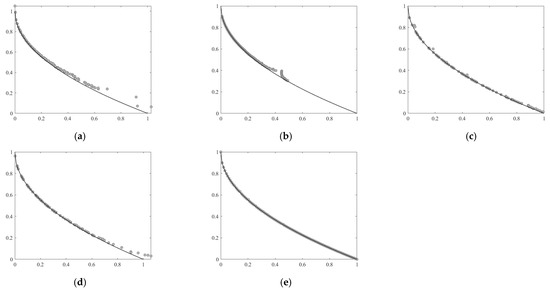

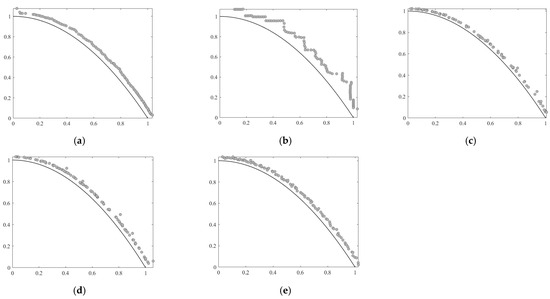

4.2.1. Results of Problems with 30 Variables

Figure 2 and Figure 3 show the PFs obtained by the five algorithms on the UF2 and UF4 test functions. From the figures, we can see that NSGA-III and MOEA/D obtain satisfactory convergence on the UF2 problem, but their final populations do not cover the entire Pareto front. LMEA converges very well, but the diversity of its population is not as good as that of LSMOEA-VT. On the UF4 problem, NSGA-III and MOEA/D perform poorly, and their populations do not fully converge to the real PF. LSMOEA-VT obtains good convergence and diversity on both UF2 and UF4.

Figure 2.

The PF obtained by five algorithms on UF2 function with 30 decision variables. (a) NSGA-III; (b) MOEA/D; (c) MOEA/DVA; (d) LMEA; (e) LSMOEA-VT.

Figure 3.

The PF obtained by five algorithms on UF4 function with 30 decision variables. (a) NSGA-III; (b) MOEA/D; (c) MOEA/DVA; (d) LMEA; (e) LSMOEA-VT.

4.2.2. Performance Comparison Between LSMOEA-VT and Existing MOEAs on Large-Scale MOPs

Table 1 shows the statistical results of the IGD values obtained by the five compared algorithms over 20 independent runs on eight test problems with 100, 500, and 1000 decision variables. The best result for each test instance in Table 1 and Table 2 is shown in bold. The Wilcoxon rank sum test is also used: the symbols “+”, “−”, and “≈” indicate that a result is significantly better than, significantly worse than, and statistically similar to that of LSMOEA-VT, respectively. From the table, we can see that LSMOEA-VT achieves the best IGD results on most test problems. NSGA-III and MOEA/D perform well on some test functions, but their overall performance is not as good as that of MOEA/DVA, LMEA, and LSMOEA-VT, which are designed for large-scale multi-objective optimization problems. Moreover, when the number of decision variables increases from 100 to 1000, the IGD values obtained by LMEA and LSMOEA-VT on each test function remain good, which shows that both algorithms scale well. On DTLZ1–DTLZ4, LSMOEA-VT and the other compared algorithms each achieve good results on different instances, but on DTLZ7, LSMOEA-VT consistently outperforms the other algorithms. This is because DTLZ7 has an irregular Pareto front, while MOEA/D and MOEA/DVA both handle diversity through decomposition, which is not well suited to DTLZ7. In contrast, the diversity maintenance strategy in Algorithm 5 can deal with this irregular Pareto front well. Finally, MOEA/DVA performs better than LSMOEA-VT on UF9 and UF10. This is because, on these problems, MOEA/DVA treats all variables related to both convergence and diversity as diversity-related variables and initializes the diversity-related variables with a uniform sampling method, which yields a good distribution of candidate solutions on the UF problems.

Table 1.

IGD metric values of the five algorithms on DTLZ, WFG, and UF.

Table 2.

IGD metric values of the five algorithms on LSMOP1-LSMOP9.

In order to further study the performance of LSMOEA-VT on large-scale optimization problems, we performed more tests on a recently developed large-scale optimization test suite, the LSMOP test suite. It consists of nine test problems, LSMOP1–LSMOP9. These test problems have complex variable relations on the Pareto set, and their decision variables have mixed separability. Therefore, the LSMOP test problems better reflect the challenges of large-scale optimization. From Table 2, we can see that LSMOEA-VT achieves the best performance on LSMOP1, LSMOP2, LSMOP3, LSMOP5, and LSMOP9. For LSMOP8, the IGD obtained by LSMOEA-VT is slightly worse than that of LMEA. This is because LSMOP8 is a multimodal problem with a mixture of partially separable and completely separable decision variables. Because LSMOEA-VT relies on decision variable grouping to solve LSMOPs efficiently, the grouping may be less effective on multimodal problems with partially separable decision variables, which degrades the performance of the algorithm. Overall, however, LSMOEA-VT achieves good performance on the LSMOP test problems.

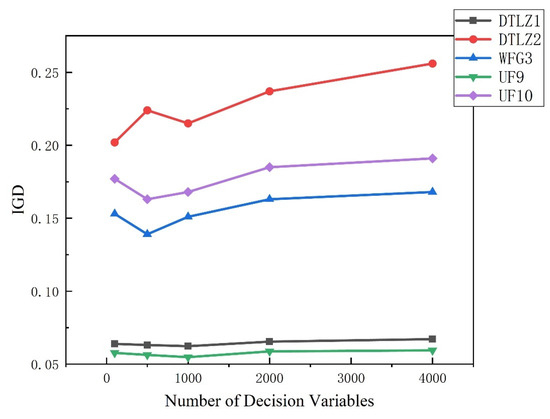

4.2.3. Performance of LSMOEA-VT on Large-Scale MOPs with 2000 and 4000 Decision Variables

In the previous part, we demonstrated the performance of the proposed LSMOEA-VT when the number of decision variables ranges from 100 to 1000. In this part, we further study its performance with more decision variables. Table 3 shows the performance of LSMOEA-VT on DTLZ1, DTLZ7, WFG3, UF9, and UF10 with 2000 and 4000 decision variables. From the table, we can see that LSMOEA-VT obtains similar HV values on each test problem for both numbers of decision variables.

Table 3.

HV metric values of LSMOEA-VT on DTLZ1–DTLZ7, WFG3, UF9, and UF10, with 2000 and 4000 decision variables.

For further observation, Figure 4 shows the IGD values of LSMOEA-VT on DTLZ1, DTLZ2, WFG3, UF9, and UF10 over 20 independent runs when the number of decision variables is 100, 500, 1000, 2000, and 4000. From the figure, we can see that as the number of decision variables increases, the IGD values obtained by LSMOEA-VT on the test problems hardly deteriorate, which shows that the proposed LSMOEA-VT has good scalability.

Figure 4.

IGD metric values of LSMOEA-VT on DTLZ1, DTLZ2, WFG3, UF9, and UF10 with different numbers of decision variables.
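As a reminder of what the IGD values measure, the sketch below computes IGD as the mean Euclidean distance from each point of a reference set sampled on the true Pareto front to its nearest obtained solution, so smaller values are better. The function name `igd` and the toy fronts are assumptions made for illustration; the reference sets and sampling used in the experiments are not reproduced here.

```python
import math


def igd(reference_front, obtained_front):
    """Inverted generational distance (smaller is better).

    Mean distance from each reference point to its nearest obtained point.
    """
    if not reference_front or not obtained_front:
        raise ValueError("both fronts must be non-empty")
    total = 0.0
    for ref in reference_front:
        total += min(math.dist(ref, sol) for sol in obtained_front)
    return total / len(reference_front)


# Hypothetical bi-objective data, not taken from the paper.
reference = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
on_front = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
shifted = [(0.2, 1.2), (0.7, 0.7), (1.2, 0.2)]

print(igd(reference, on_front))  # 0.0
print(igd(reference, shifted) > igd(reference, on_front))  # True
```

A scalability study such as the one in Figure 4 then amounts to repeating this computation for each number of decision variables and comparing the resulting values across runs.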

4.2.4. Efficiency Comparison Between LSMOEA-VT and Other Algorithms

To further study the efficiency of LSMOEA-VT, we compare the running times of the five algorithms on some test problems, where DTLZ1, DTLZ2, WFG3, UF9, and UF10 have 100 decision variables and the LSMOP problems have 300. The results are shown in Table 4. The table shows that LSMOEA-VT takes the least time on DTLZ1, DTLZ2, WFG3, UF9, and UF10. LMEA, which is also based on a grouping strategy, likewise takes much less time than MOEA/DVA, because MOEA/DVA spends a lot of time on decision variable analysis, which lowers its efficiency. On the LSMOP test suite, NSGA-III takes the least time among all algorithms because its procedure is relatively simple, solution quality aside. There, LSMOEA-VT requires more time than LMEA, because maintaining population diversity is computationally more expensive in LSMOEA-VT than in LMEA. Therefore, although LSMOEA-VT increases population diversity, it consumes more computational resources.

Table 4.

Average runtimes (s) of five algorithms on some test problems.
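Average runtimes of this kind can be collected with a simple timing harness such as the sketch below. It is only an assumed setup: the function name `average_runtime`, the default of 20 repetitions, and the placeholder workload are hypothetical, and the paper does not state how its timings were measured.

```python
import time


def average_runtime(algorithm, runs=20):
    """Mean wall-clock time in seconds of calling `algorithm()` `runs` times.

    `runs=20` is an illustrative default, not a setting from the paper.
    """
    if runs <= 0:
        raise ValueError("runs must be positive")
    elapsed = []
    for _ in range(runs):
        start = time.perf_counter()
        algorithm()
        elapsed.append(time.perf_counter() - start)
    return sum(elapsed) / runs


def placeholder_workload():
    # Stand-in for one complete optimization run on one test problem.
    return sum(i * i for i in range(10_000))


mean_seconds = average_runtime(placeholder_workload, runs=5)
print(f"average runtime: {mean_seconds:.6f} s")
```

For a fair comparison, every algorithm would be timed on the same machine, with the same problem instance and the same evaluation budget.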

5. Conclusions and Future Work

In this paper, a two-stage large-scale optimization algorithm based on decision variable clustering (LSMOEA-VT) is proposed to solve large-scale optimization problems. LSMOEA-VT classifies the decision variables and uses a dimensionality reduction method to optimize the variables that affect evolutionary convergence. Then, a non-dominated dynamic weight aggregation method is used to solve a set of single-objective optimization problems defined by reference lines through the Pareto-optimal subspace, which enhances the diversity of the population. In the experiments, we first carried out comparisons on some low-dimensional benchmark functions, namely the UF test suite, and the results show that the algorithm obtains satisfactory results at a lower computational cost. We also compared it with other algorithms on the DTLZ, WFG, and UF test suites with 100, 500, and 1000 decision variables and obtained satisfactory results in both convergence and diversity, which verifies the effectiveness and efficiency of our algorithm. Finally, we tested problems with up to 4000 decision variables to verify the scalability of our algorithm.

Although our algorithm performs well on large-scale optimization problems, some problems remain to be solved. First, in the first stage, we simply divide the decision variables into convergence-related and diversity-related variables; however, even the grouped decision variables may correlate differently with the objective functions, and whether a more accurate classification method exists remains an open question. Second, the decision variable grouping stage consumes a lot of computing resources, so handling these variables efficiently is a valuable research problem. In addition, given the complexity of decision variables in real-world problems, applying our algorithm to such practical problems may be an interesting direction.

Author Contributions

Conceptualization, J.L.; methodology, T.L.; software, J.L.; validation, J.L.; formal analysis, J.L.; investigation, T.L.; resources, T.L.; data curation, T.L.; writing—original draft preparation, J.L.; writing—review and editing, T.L.; visualization, T.L.; supervision, J.L.; project administration, T.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable, using a publicly available dataset.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Zhang, Q.; Li, H. MOEA/D: A multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731.

- Deb, K.; Jain, H. An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part i: Solving problems with box constraints. IEEE Trans. Evol. Comput. 2014, 18, 577–601.

- Liu, C.; Liu, J.; Jiang, Z. A multiobjective evolutionary algorithm based on similarity for community detection from signed social networks. IEEE Trans. Cybern. 2014, 44, 2274–2287.

- Zhu, H.; Shi, Y. Brain storm optimization algorithm for full area coverage of wireless sensor networks. In Proceedings of the 2016 Eighth International Conference on Advanced Computational Intelligence (ICACI), Chiang Mai, Thailand, 14–16 February 2016; pp. 14–20.

- Guo, Y.; Liu, D.; Chen, M.; Liu, Y. An energy-efficient coverage optimization method for the wireless sensor networks based on multi-objective quantum-inspired cultural algorithm. In Proceedings of the Advances in Neural Networks ISNN 2013, Dalian, China, 4–6 July 2013; pp. 343–349.

- Fleming, P.J.; Purshouse, R.C.; Lygoe, R.J. Many-objective optimization: An engineering design perspective. In Proceedings of the 3rd International Conference on Evolutionary Multi-Criterion Optimization, Guanajuato, Mexico, 9–11 March 2005; pp. 14–32.

- Garcia, J.; Berlanga, A.; López, J.M.M. Effective evolutionary algorithms for many-specifications attainment: Application to air traffic control tracking filters. IEEE Trans. Evol. Comput. 2009, 13, 151–168.

- Kollat, J.B.; Reed, P.M.; Maxwell, R.M. Many-objective groundwater monitoring network design using bias-aware ensemble Kalman filtering, evolutionary optimization, and visual analytics. Water Resour. Res. 2011, 47, 1–18.

- Potter, M.A.; Jong, K.A.D. A cooperative coevolutionary approach to function optimization. Third Parallel Prob. Solving From Nat. 1994, 866, 249–257.

- Li, X.; Mei, Y.; Yao, X.; Omidvar, M.N. Cooperative co-evolution with differential grouping for large scale optimization. IEEE Trans. Evol. Comput. 2014, 18, 378–393.

- Chen, W.; Weise, T.; Yang, Z.; Tang, K. Large-scale global optimization using cooperative coevolution with variable interaction learning. In Proceedings of the International Conference on Parallel Problem Solving from Nature, Krakow, Poland, 11–15 September 2010; pp. 300–309.

- Sun, Y.; Kirley, M.; Halgamuge, S.K. Extended differential grouping for large scale global optimization with direct and indirect variable interactions. In Proceedings of the 2015 Annual Conference on Genetic and Evolutionary Computation, Madrid, Spain, 11–15 July 2015; pp. 313–320.

- Omidvar, M.N.; Li, X.; Yao, X. Cooperative co-evolution with delta grouping for large scale non-separable function optimization. In Proceedings of the IEEE Congress on Evolutionary Computation, New Orleans, LA, USA, 5–8 June 2011; pp. 1–8.

- Yang, Z.; Tang, K.; Yao, X. Multilevel cooperative coevolution for large scale optimization. In Proceedings of the IEEE Congress on Evolutionary Computation, Hong Kong, China, 1–6 June 2008; pp. 1663–1670.

- Zhang, X.; Tian, Y.; Cheng, R.; Jin, Y. A decision variable clustering-based evolutionary algorithm for large-scale many-objective optimization. IEEE Trans. Evol. Comput. 2016, 22, 97–112.

- Hajikolaei, K.H.; Pirmoradi, Z.; Cheng, G.H.; Wang, G.G. Decomposition for large-scale global optimization based on quantified variable correlations uncovered by metamodeling. Eng. Optim. 2015, 47, 429–452.

- Ghorpadeaher, J.; Metre, V.A. Clustering multidimensional data with PSO based algorithm. arXiv 2014, arXiv:1402.6428.

- Chen, W.N.; Zhang, J.; Chung, H.S.H.; Zhong, W.L.; Wu, W.G.; Shi, Y.H. A novel set-based particle swarm optimization method for discrete optimization problems. IEEE Trans. Evol. Comput. 2010, 14, 278–300.

- Chen, W.N.; Zhang, J.; Lin, Y.; Chen, N.; Zhan, Z.H.; Chung, S.H.; Li, Y.; Shi, Y.H. Particle swarm optimization with an aging leader and challengers. IEEE Trans. Evol. Comput. 2013, 17, 241–258.

- Yu, W.J.; Shen, M.; Chen, W.N.; Zhan, Z.H.; Gong, Y.J.; Lin, Y.; Liu, O.; Zhang, J. Differential evolution with two-level parameter adaptation. IEEE Trans. Cybern. 2014, 44, 1080–1099.

- Ma, X.; Liu, F.; Qi, Y.; Wang, X.; Li, L.; Jiao, L. A multiobjective evolutionary algorithm based on decision variable analyses for multiobjective optimization problems with large-scale variables. IEEE Trans. Evol. Comput. 2015, 20, 275–298.

- Wolfe, D.A.; Hollander, M. Nonparametric statistical methods. In Biostatistics and Microbiology: A Survival Manual; Springer: New York, NY, USA, 2009.

- Zille, H.; Ishibuchi, H.; Mostaghim, S.; Nojima, Y. A framework for large-scale multiobjective optimization based on problem transformation. IEEE Trans. Evol. Comput. 2018, 22, 260–275.

- He, C.; Li, L.; Tian, Y.; Zhang, X.; Cheng, R.; Jin, Y.; Yao, X. Accelerating Large-Scale Multiobjective Optimization via Problem Reformulation. IEEE Trans. Evol. Comput. 2019, 23, 949–961.

- Zhang, X.; Zheng, X.; Cheng, R.; Qiu, J.; Jin, Y. A competitive mechanism based multi-objective particle swarm optimizer with fast convergence. Inf. Sci. 2018, 427, 63–76.

- Cheng, R.; Jin, Y. A competitive swarm optimizer for large scale optimization. IEEE Trans. Cybern. 2015, 45, 191–204.

- Zhang, Y.; Wang, G.-G.; Li, K.; Yeh, W.-C.; Jian, M.; Dong, J. Enhancing MOEA/D with information feedback models for large-scale many-objective optimization. Inf. Sci. 2020, 522, 1–16.

- Cheng, R.; Jin, Y.; Narukawa, K.; Sendhoff, B. A multiobjective evolutionary algorithm using gaussian process-based inverse modeling. IEEE Trans. Evol. Comput. 2015, 19, 838–856.

- Deb, K.; Thiele, L.; Laumanns, M.; Zitzler, E. Scalable Test Problems for Evolutionary Multiobjective Optimization; Springer: London, UK, 2005.

- Huband, S.; Hingston, P.; Barone, L.; While, L. A review of multiobjective test problems and a scalable test problem toolkit. IEEE Trans. Evol. Comput. 2006, 10, 477–506.

- Zhang, Q.; Zhou, A.; Zhao, S.; Suganthan, P.N.; Liu, W.; Tiwari, S. Multiobjective Optimization Test Instances for the CEC 2009 Special Session and Competition; Nanyang Technological University: Singapore, 2008; Volume 264, pp. 1–30.

- Cheng, R.; Jin, Y.; Olhofer, M.; Sendhoff, B. Test problems for largescale multi- and many-objective optimization. IEEE Trans. Cybern. 2017, 47, 4108–4121.

- Zhou, A.; Jin, Y.; Zhang, Q.; Sendhoff, B.; Tsang, E. Combining model-based and genetics-based offspring generation for multi-objective optimization using a convergence criterion. In Proceedings of the IEEE International Conference on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006; pp. 892–899.

- While, L.; Hingston, P.; Barone, L.; Huband, S. A faster algorithm for calculating hypervolume. IEEE Trans. Evol. Comput. 2006, 10, 29–38.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).