An Active Learning and Deep Attention Framework for Robust Driver Emotion Recognition

Abstract

1. Introduction

2. Related Works

3. Background Theory

3.1. Convolutional Neural Network (CNN)

3.2. Residual Networks (ResNets)

3.3. Active Learning Framework

3.4. Deep Attention Mechanism (DAM)

3.5. Active Learning and Attention Mechanism

3.5.1. Labeling Effort Reduction

3.5.2. Weighted-Cluster Loss for Class Imbalance

3.6. Attention Mechanism

3.6.1. Spatial Attention

3.6.2. Temporal Attention

3.6.3. Cross-Modal Fusion

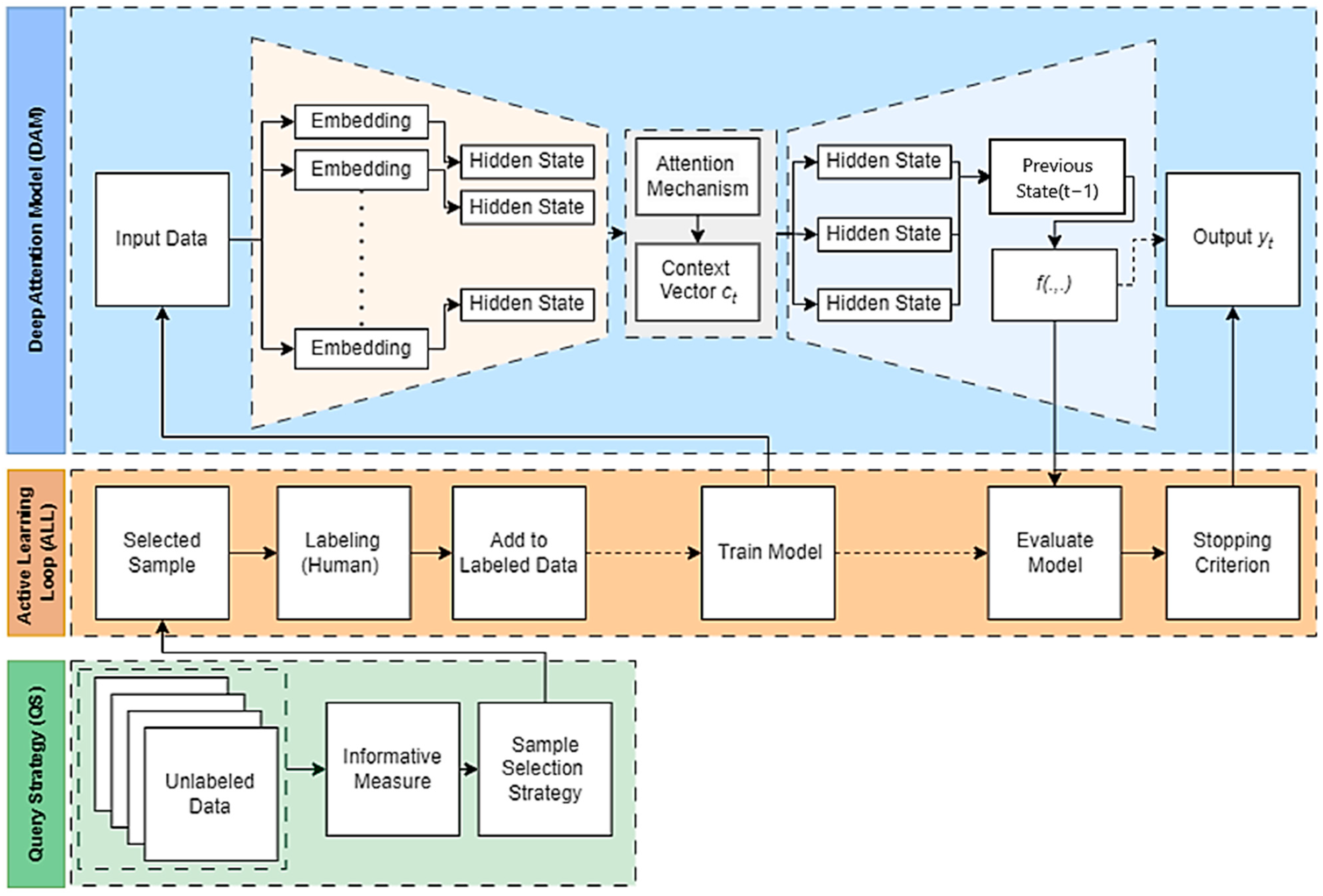

4. Proposed Framework (ALDAM)

4.1. Overview of the Architecture

4.2. Active Learning Loop

4.3. Deep Attention Mechanism

- Spatial Attention: Highlights critical facial regions (e.g., eyes, mouth), suppressing background noise.

- Temporal Attention: Prioritizes emotionally salient frames in sequential video data.

- Cross-Modal Fusion: Combines facial features with contextual cues (e.g., posture, environment), improving resilience to occlusion and illumination shifts.
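The three mechanisms listed above can be illustrated with a minimal NumPy sketch. It assumes dot-product scoring with hypothetical learned vectors (`w_spatial`, `v_temporal`), softmax normalization over spatial positions and over frames, and plain concatenation for cross-modal fusion. The document does not specify these choices, so this is an illustration under those assumptions, not the ALDAM implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def spatial_attention(feature_map, w):
    """Attention-pool a (C, H, W) feature map over its H*W positions.

    `w` is a hypothetical learned (C,) scoring vector: each position is scored
    by the dot product of its C-dim feature with `w`, then softmax-normalized.
    """
    c, h, wd = feature_map.shape
    flat = feature_map.reshape(c, h * wd)   # (C, H*W)
    alpha = softmax(w @ flat)               # (H*W,) weights over positions
    pooled = flat @ alpha                   # (C,) attention-weighted descriptor
    return pooled, alpha.reshape(h, wd)


def temporal_attention(frame_feats, v):
    """Weight T per-frame descriptors (T, D) by a softmax over frame scores."""
    beta = softmax(frame_feats @ v)         # (T,) weights over frames
    return beta @ frame_feats, beta         # (D,) clip descriptor, (T,) weights


def cross_modal_fusion(face_vec, context_vec):
    """Simplest possible fusion: concatenate facial and contextual descriptors."""
    return np.concatenate([face_vec, context_vec])


# Toy example: 5 frames, 8 channels, 4x4 spatial grid, 3-dim context cue.
rng = np.random.default_rng(0)
frames = rng.normal(size=(5, 8, 4, 4))
w_spatial = rng.normal(size=8)
v_temporal = rng.normal(size=8)

pooled, maps = zip(*(spatial_attention(f, w_spatial) for f in frames))
frame_feats = np.stack(pooled)                          # (5, 8)
clip_vec, beta = temporal_attention(frame_feats, v_temporal)
fused = cross_modal_fusion(clip_vec, rng.normal(size=3))
print(fused.shape)  # (11,)
```

Each spatial map in `maps` sums to one over the 4x4 grid, so it can be visualized directly as a saliency heatmap over facial regions; a learned gating or attention-based fusion would replace the concatenation in a fuller model.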

4.4. Weighted-Cluster Loss

4.5. System Architecture

- Input data layer: The model takes an input sequence $X = (x_1, x_2, \ldots, x_T)$, where each $x_t$ is an element of the input.

- Preprocessing layer: The preprocessing stage ensures high-quality inputs for emotion recognition by combining image enhancement, noise removal, and face detection. A pretrained DnCNN model [70] was employed to suppress noise, Gaussian filtering was applied while preserving facial detail, and Z-score normalization standardized luminance ranges across images. Finally, Faster R-CNN [71] was used to detect, extract, and crop faces under varying lighting and pose conditions, providing clean and consistent inputs for downstream model training.

- Embedding layer: The input sequence is passed through an embedding layer that converts each element $x_t$ into an embedding vector $e_t$.

- Encoder layer: The embeddings are fed into the encoder, which produces hidden states $h_1, \ldots, h_T$. The encoder can be an RNN, CNN, or Transformer encoder.

- Attention mechanism layer: The attention mechanism computes a context vector $c_t$ for each time step $t$. This context vector is a weighted sum of the encoder hidden states, $c_t = \sum_{i=1}^{T} \alpha_{t,i} h_i$, where the weights $\alpha_{t,i}$ are obtained from alignment scores between the decoder state $s_{t-1}$ and each encoder state $h_i$ (typically normalized with a softmax).

- Decoder layer: The context vector $c_t$ and the previous decoder state $s_{t-1}$ are combined to produce the current decoder state $s_t$, which is then used to generate the output $y_t$ for the current time step.
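The attention and decoder layers described above can be sketched as a single decoding step. This sketch assumes an additive (Bahdanau-style) scoring function $e_{t,i} = v^\top \tanh(W_s s_{t-1} + W_h h_i)$ and a plain tanh recurrent cell for the decoder update. The parameter names (`W_s`, `W_h`, `v`, `U`, `W_out`) and the random encoder states are hypothetical stand-ins, since the section does not fix the score function or the decoder type.

```python
import numpy as np


def additive_attention(s_prev, H, W_s, W_h, v):
    """Context vector c_t from decoder state s_{t-1} and encoder states H (T, d_h)."""
    scores = np.tanh(H @ W_h.T + s_prev @ W_s.T) @ v   # (T,) alignment scores e_{t,i}
    scores = scores - scores.max()                      # numerical stability
    alpha = np.exp(scores) / np.exp(scores).sum()       # (T,) attention weights
    return alpha @ H, alpha                             # c_t: (d_h,), alpha: (T,)


def decoder_step(s_prev, H, p):
    """One decoding step: attend, update the decoder state, emit output logits."""
    c_t, alpha = additive_attention(s_prev, H, p["W_s"], p["W_h"], p["v"])
    s_t = np.tanh(p["U"] @ np.concatenate([c_t, s_prev]))  # combine c_t and s_{t-1}
    y_t = p["W_out"] @ s_t                                  # output for step t
    return s_t, y_t, alpha


# Toy sizes: T=6 encoder states, 7 output classes (hypothetical).
rng = np.random.default_rng(1)
T, d_h, d_s, d_a, n_out = 6, 16, 12, 10, 7
H = rng.normal(size=(T, d_h))          # stand-in for encoder hidden states h_1..h_T
params = {
    "W_s": rng.normal(size=(d_a, d_s)),
    "W_h": rng.normal(size=(d_a, d_h)),
    "v": rng.normal(size=d_a),
    "U": 0.1 * rng.normal(size=(d_s, d_h + d_s)),
    "W_out": rng.normal(size=(n_out, d_s)),
}
s = np.zeros(d_s)                       # initial decoder state
for _ in range(3):
    s, y, alpha = decoder_step(s, H, params)
```

Using $s_{t-1}$ in the score keeps the step order consistent with the decoder layer: attention is computed first, and only then is the new state $s_t$ formed from $c_t$ and $s_{t-1}$.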

| Algorithm 1: Active Learning Iteration with DAM, QS, and ALL |
|---|
| Input: U: unlabeled data pool; L: initial labeled dataset; DAM: Deep Attention Model |
| Output: The DAM trained with refined attention weights |
| Begin |
| 1. Train the DAM on the labeled dataset L. |
| 2. For each sample u in U: |
| 2.1 Compute the uncertainty score using the DAM → uncertainty(u). |
| 3. Use the Query Strategy (QS) to select the most informative samples S ⊆ U. |
| 4. Query the oracle to obtain the true labels for the selected samples S → labels(S). |
| 5. Update the datasets: |
| 5.1 Add (S, labels(S)) to the labeled dataset L. |
| 5.2 Remove S from the unlabeled data pool U. |
| 6. Retrain the DAM on the updated labeled dataset L. |
| End |
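One pass of Algorithm 1, repeated for a few rounds, can be sketched as follows. Assumptions: predictive entropy is used as uncertainty(u), the query strategy QS simply takes the `batch_size` most uncertain samples, the oracle is simulated by looking up held-back ground-truth labels, and a nearest-centroid classifier (`CentroidModel`, hypothetical) stands in for the DAM so the example runs without a deep-learning framework.

```python
import numpy as np


def entropy_uncertainty(probs):
    """Predictive entropy per row; higher means the model is less certain."""
    return -(probs * np.log(probs + 1e-12)).sum(axis=1)


class CentroidModel:
    """Hypothetical stand-in for the DAM: softmax over negative squared
    distances to per-class feature centroids."""

    def fit(self, X, y, n_classes):
        self.centroids = np.stack([
            X[y == k].mean(axis=0) if np.any(y == k) else np.zeros(X.shape[1])
            for k in range(n_classes)
        ])
        return self

    def predict_proba(self, X):
        d = ((X[:, None, :] - self.centroids[None, :, :]) ** 2).sum(axis=2)
        logits = -d
        logits = logits - logits.max(axis=1, keepdims=True)
        p = np.exp(logits)
        return p / p.sum(axis=1, keepdims=True)


def active_learning(X, oracle_labels, labeled_idx, n_classes, batch_size, rounds):
    labeled = list(labeled_idx)
    labeled_set = set(labeled)
    unlabeled = [i for i in range(len(X)) if i not in labeled_set]
    # Step 1: train on the initial labeled set L.
    model = CentroidModel().fit(X[labeled], oracle_labels[labeled], n_classes)
    for _ in range(rounds):
        if not unlabeled:
            break
        # Step 2: uncertainty score for every u in U.
        scores = entropy_uncertainty(model.predict_proba(X[unlabeled]))
        # Step 3: query strategy = top-`batch_size` most uncertain samples S.
        top = np.argsort(-scores)[:batch_size]
        selected = [unlabeled[j] for j in top]
        # Step 4: the oracle is simulated by the held-back labels used in fit().
        # Step 5: add S to L and remove S from U.
        labeled.extend(selected)
        chosen = set(selected)
        unlabeled = [i for i in unlabeled if i not in chosen]
        # Step 6: retrain on the updated L.
        model = CentroidModel().fit(X[labeled], oracle_labels[labeled], n_classes)
    return model, labeled, unlabeled


# Three Gaussian blobs, one labeled seed per class.
rng = np.random.default_rng(0)
X = np.concatenate([rng.normal(loc=c, size=(50, 2)) for c in (-3.0, 0.0, 3.0)])
y = np.repeat(np.arange(3), 50)
model, L, U = active_learning(X, y, [0, 50, 100], n_classes=3, batch_size=10, rounds=4)
print(len(L), len(U))  # 43 107
```

Entropy is only one choice of uncertainty score; margin- or Bayesian-disagreement-based scores plug into the same loop by replacing `entropy_uncertainty`.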

| Algorithm 2: Deep active-attention training algorithm based on a joint loss function |
|---|
| Input: training data, mini-batch size, number of epochs, number of iterations in each epoch, learning rates, and hyperparameters. |
| 1: Initialization: initialize the deep neural network parameters, the weighted_softmax loss parameters, and the weighted_clustering loss parameters. |
| 2: Train the initial DAM model using Equation (20). |
| (20) |
| 3: For each iteration do |
| 4: Minimize the loss function using Equation (21). |
| 5: (21) |
| 6: Calculate the joint loss function using Equation (22). |
| 7: (22) |
| 8: Compute the backpropagation error for each sample using Equation (23). |
| 9: (23) |
| 10: Update the weighted_softmax loss parameters using Equation (24). |
| 11: (24) |
| 12: Update the weighted_clustering loss parameters for each j using Equation (25). |
| 13: (25) |
| 14: Update the model parameters using Equation (26). |
| 15: (26) |
| 16: End for |
| 17: Retrain the DAM with the updated labeled dataset and refined attention weights. |
| 18: Repeat the active learning query and model update steps until a stopping criterion is met (e.g., a fixed number of iterations, convergence in model performance, or a predefined labeling budget). |
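Equations (20) to (26) did not survive extraction, so the joint loss in Algorithm 2 cannot be reproduced exactly. The sketch below shows one common way such a loss is built, under stated assumptions: a class-weighted softmax cross-entropy plus a class-weighted, center-loss-style cluster term scaled by a hypothetical `lam`, inverse-frequency class weights, and a center update that nudges each class center toward the mean of its mini-batch features. It is not the authors' exact formulation.

```python
import numpy as np


def class_weights(y, n_classes):
    """Inverse-frequency class weights, rescaled to mean 1 (assumed scheme).
    Classes absent from `y` are treated as if seen once."""
    counts = np.bincount(y, minlength=n_classes).astype(float)
    inv = 1.0 / np.maximum(counts, 1.0)
    return inv * n_classes / inv.sum()


def weighted_softmax_loss(logits, y, w):
    """Class-weighted softmax cross-entropy over a mini-batch."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    nll = -log_p[np.arange(len(y)), y]
    return (w[y] * nll).sum() / w[y].sum()


def weighted_cluster_loss(feats, y, centers, w):
    """Class-weighted squared distance of each feature to its class center."""
    d2 = ((feats - centers[y]) ** 2).sum(axis=1)
    return 0.5 * (w[y] * d2).sum() / w[y].sum()


def joint_loss(logits, feats, y, centers, w, lam=0.01):
    """Softmax term plus `lam` times the cluster term (lam is hypothetical)."""
    return weighted_softmax_loss(logits, y, w) + lam * weighted_cluster_loss(feats, y, centers, w)


def update_centers(feats, y, centers, lr=0.5):
    """Center-loss-style update: nudge each present class center toward its batch mean."""
    new = centers.copy()
    for j in np.unique(y):
        mask = y == j
        delta = (centers[j] - feats[mask]).sum(axis=0) / (1.0 + mask.sum())
        new[j] = centers[j] - lr * delta
    return new


# Imbalanced toy mini-batch: 4 samples of class 0, 2 of class 1, 1 of class 2.
rng = np.random.default_rng(0)
y = np.array([0, 0, 0, 0, 1, 1, 2])
w = class_weights(y, n_classes=3)
feats = rng.normal(size=(7, 4))
logits = rng.normal(size=(7, 3))
centers = np.zeros((3, 4))
loss = joint_loss(logits, feats, y, centers, w)
new_centers = update_centers(feats, y, centers)
```

The class weights give the rarest class the largest weight, which is the usual motivation for weighting both terms when emotion classes such as disgust or fear are under-represented.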

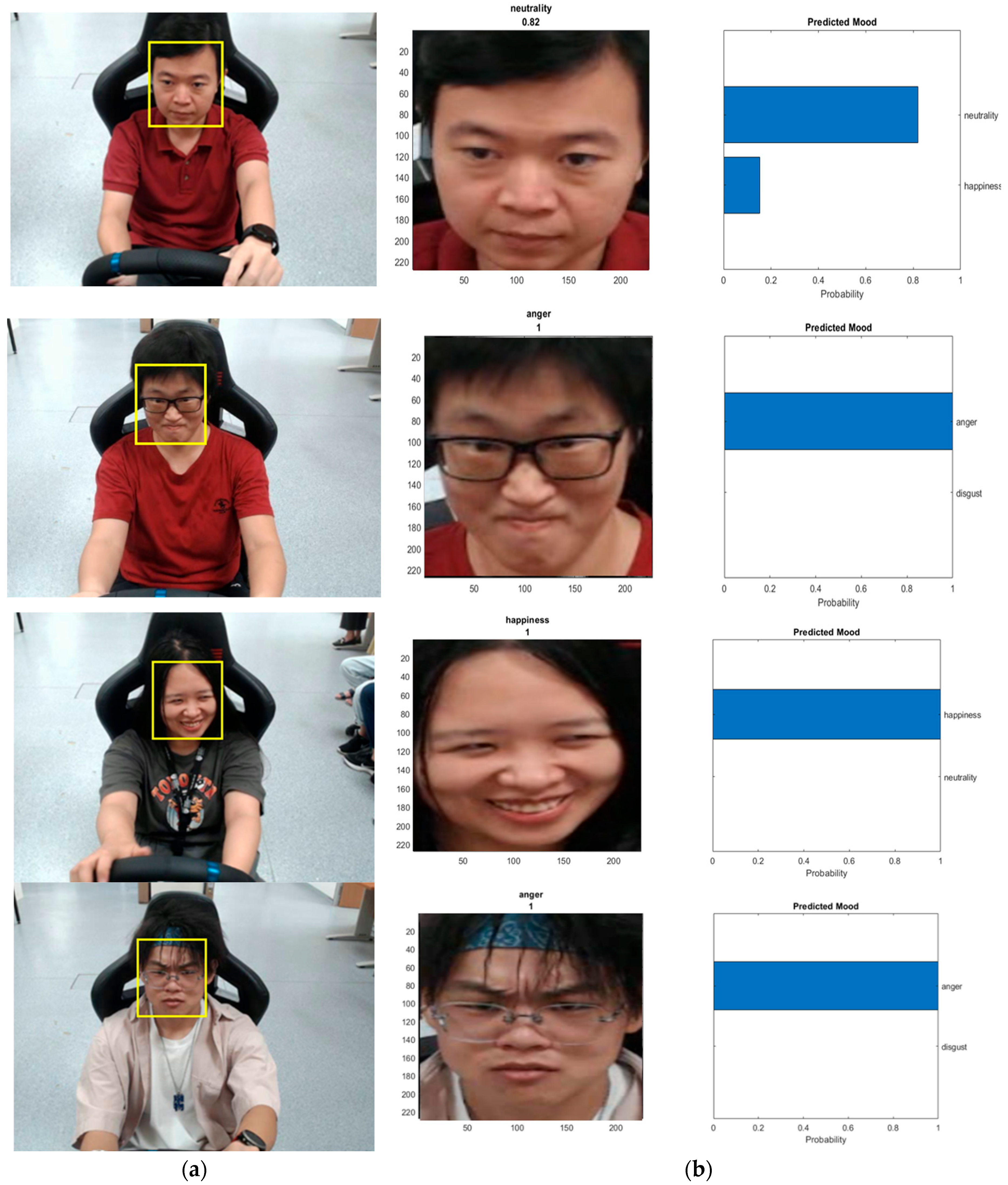

5. Experimental Results

5.1. Experimental Setup

5.2. Dataset Variability and Multimodal Extension

5.3. Training Datasets

5.4. Unseen Driver’s Emotion Recognition Testing Dataset

5.5. Evaluation Metrics

5.6. Experimental Setups and Implementation Details

5.6.1. Preprocessing Experimental Results and Overall Performance

5.6.2. Class-Wise Recognition Performance

5.6.3. Validation Convergence and Robustness

5.6.4. Experimental Results Using the AffectNet Dataset

5.7. Extended Comparative Analysis and State-of-the-Art Comparison

6. Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AL | Active Learning |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| IoU | Intersection over Union |
| ML | Machine Learning |
| R-CNN | Region-based Convolutional Neural Network |
| SIFT | Scale-Invariant Feature Transform |
| ORB | Oriented FAST and Rotated BRIEF |
| LSTM | Long Short-Term Memory |
| FPS | Frames Per Second |
| GT | Ground Truth |
| TP | True Positive |
| FP | False Positive |
| FN | False Negative |
| TN | True Negative |
| F1 | F1 Score (harmonic mean of precision and recall) |
| ALDAM | Active Learning Scheme-Based Deep Attention Mechanism |

References

- Hickson, S.; Dufour, N.; Sud, A.; Kwatra, V.; Essa, I. Eyemotion: Classifying facial expressions in VR using eye-tracking cameras. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; IEEE: New York, NY, USA, 2019; pp. 1626–1635.

- Chen, C.H.; Lee, I.J.; Lin, L.Y. Augmented reality-based self-facial modeling to promote the emotional expression and social skills of adolescents with autism spectrum disorders. Res. Dev. Disabil. 2015, 36, 396–403.

- Ngo, Q.T.; Yoon, S. Facial expression recognition based on weighted-cluster loss and deep transfer learning using a highly imbalanced dataset. Sensors 2020, 20, 2639.

- Zhan, C.; Li, W.; Ogunbona, P.; Safaei, F. A real-time facial expression recognition system for online games. Int. J. Comput. Games Technol. 2008, 2008, 542918.

- Wang, J.; Gong, Y. Recognition of multiple drivers’ emotional state. In Proceedings of the 19th International Conference on Pattern Recognition (ICPR), Tampa, FL, USA, 8–11 December 2008; IEEE: New York, NY, USA, 2008; pp. 1–4.

- Jafarpour, S.; Rahimi-Movaghar, V. Determinants of risky driving behavior: A narrative review. Med. J. Islam. Repub. Iran 2014, 28, 142.

- Scott-Parker, B. Emotions, behaviour, and the adolescent driver: A literature review. Transp. Res. Part F Traffic Psychol. Behav. 2017, 50, 1–37.

- Sharma, S.; Guleria, K.; Tiwari, S.; Kumar, S. A deep learning-based convolutional neural network model with VGG16 feature extractor for the detection of Alzheimer disease using MRI scans. Meas. Sens. 2022, 24, 100506.

- Mathew, A.; Amudha, P.; Sivakumari, S. Deep learning techniques: An overview. In Advanced Machine Learning Technologies and Applications: Proceedings of AMLTA 2020; Springer: Singapore, 2020; pp. 599–608.

- Garcia-Garcia, A.; Orts-Escolano, S.; Oprea, S.; Villena-Martinez, V.; Garcia-Rodriguez, J. A review on deep learning techniques applied to semantic segmentation. arXiv 2017, arXiv:1704.06857.

- Verma, M.; Mandal, M.; Reddy, S.K.; Meedimale, Y.R.; Vipparthi, S.K. Efficient neural architecture search for emotion recognition. Expert Syst. Appl. 2023, 224, 119957.

- El Boudouri, Y.; Bohi, A. Emonext: An adapted ConvNeXt for facial emotion recognition. In Proceedings of the 2023 IEEE 25th International Workshop on Multimedia Signal Processing (MMSP), Poitiers, France, 27–29 September 2023; IEEE: New York, NY, USA, 2023; pp. 1–6.

- Kopalidis, T.; Solachidis, V.; Vretos, N.; Daras, P. Advances in facial expression recognition: A survey of methods, benchmarks, models, and datasets. Information 2024, 15, 135.

- Sajjad, M.; Ullah, F.U.M.; Ullah, M.; Christodoulou, G.; Cheikh, F.A.; Hijji, M.; Muhammad, K.; Rodrigues, J.J. A comprehensive survey on deep facial expression recognition: Challenges, applications, and future guidelines. Alex. Eng. J. 2023, 68, 817–840.

- Kim, C.L.; Kim, B.G. Few-shot learning for facial expression recognition: A comprehensive survey. J. Real-Time Image Process. 2023, 20, 52.

- Zhang, F.; Zhang, T.; Mao, Q.; Duan, L.; Xu, C. Facial expression recognition in the wild: A cycle-consistent adversarial attention transfer approach. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Republic of Korea, 22–26 October 2018; pp. 126–135.

- Li, Y.; Liu, H.; Liang, J.; Jiang, D. Occlusion-robust facial expression recognition based on multi-angle feature extraction. Appl. Sci. 2025, 15, 5139.

- Tian, Y.L.; Kanade, T.; Cohn, J.F. Evaluation of Gabor-wavelet-based facial action unit recognition in image sequences of increasing complexity. In Proceedings of the Fifth IEEE International Conference on Automatic Face and Gesture Recognition (FGR), Washington, DC, USA, 20–21 May 2002; IEEE: New York, NY, USA, 2002; pp. 229–234.

- Shan, C.; Gong, S.; McOwan, P.W. Facial expression recognition based on local binary patterns: A comprehensive study. Image Vis. Comput. 2009, 27, 803–816.

- Dahmane, M.; Meunier, J. Emotion recognition using dynamic grid-based HoG features. In Proceedings of the 2011 IEEE International Conference on Automatic Face & Gesture Recognition (FG), Santa Barbara, CA, USA, 21–23 March 2011; IEEE: New York, NY, USA, 2011; pp. 884–888.

- Balaban, S. Deep learning and face recognition: The state of the art. Proc. SPIE Biometric Surveill. Technol. Human Act. Identif. XII 2015, 9457, 68–75.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: New York, NY, USA, 2005; Volume 1, pp. 886–893.

- Lee, T.S. Image representation using 2D Gabor wavelets. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 959–971.

- Whitehill, J.; Omlin, C.W. Haar features for FACS AU recognition. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), Southampton, UK, 10–12 April 2006; IEEE: New York, NY, USA, 2006.

- Ojala, T.; Pietikäinen, M.; Mäenpää, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Shafiq, M.; Gu, Z. Deep residual learning for image recognition: A survey. Appl. Sci. 2022, 12, 8972.

- Gong, H.; Chen, L.; Pan, H.; Li, S.; Guo, Y.; Fu, L.; Hu, T.; Mu, Y.; Tyasi, T.L. Sika deer facial recognition model based on SE-ResNet. Comput. Mater. Contin. 2022, 72, 6015–6027.

- Li, H.; Hu, H.; Jin, Z.; Xu, Y.; Liu, X. The image recognition and classification model based on ConvNeXt for intelligent arms. In Proceedings of the 2025 IEEE 5th International Conference on Electronic Technology, Communication and Information (ICETCI), Changchun, China, 23–25 May 2025; IEEE: New York, NY, USA, 2025; pp. 1436–1441.

- Yuen, K.; Martin, S.; Trivedi, M.M. On looking at faces in an automobile: Issues, algorithms and evaluation on a naturalistic driving dataset. In Proceedings of the 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; IEEE: New York, NY, USA, 2016; pp. 2777–2782.

- Huang, C.; Li, Y.; Loy, C.C.; Tang, X. Deep imbalanced learning for face recognition and attribute prediction. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2781–2794.

- LeCun, Y.; Denker, J.; Solla, S. Optimal brain damage. Adv. Neural Inf. Process. Syst. 1989, 2. Available online: https://proceedings.neurips.cc/paper/1989/hash/6c9882bbac1c7093bd25041881277658-Abstract.html (accessed on 27 September 2025).

- Shukla, A.K.; Shukla, A.; Singh, R. Automatic attendance system based on CNN–LSTM and face recognition. Int. J. Inf. Technol. 2024, 16, 1293–1301.

- Gao, M.; Zhang, Z.; Yu, G.; Arık, S.Ö.; Davis, L.S.; Pfister, T. Consistency-based semi-supervised active learning: Towards minimizing labeling cost. In European Conference on Computer Vision (ECCV); Springer: Cham, Switzerland, 2020; pp. 510–526.

- Revina, I.M.; Emmanuel, W.S. A survey on human face expression recognition techniques. J. King Saud Univ.-Comput. Inf. Sci. 2021, 33, 619–628.

- Guo, M.H.; Xu, T.X.; Liu, J.J.; Liu, Z.N.; Jiang, P.T.; Mu, T.J.; Zhang, S.H.; Martin, R.R.; Cheng, M.M.; Hu, S.M. Attention mechanisms in computer vision: A survey. Comput. Vis. Media 2022, 8, 331–368.

- Ullah, K.; Ahsan, M.; Hasanat, S.M.; Haris, M.; Yousaf, H.; Raza, S.F.; Tandon, R.; Abid, S.; Ullah, Z. Short-term load forecasting: A comprehensive review and simulation study with CNN-LSTM hybrids approach. IEEE Access 2024, 12, 11523–11547.

- Settles, B. Active learning literature survey. Univ. Wisconsin–Madison Tech. Rep. 2009, 1648, 1–67.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Zhong, Z.; Li, J.; Ma, L.; Jiang, H.; Zhao, H. Deep residual networks for hyperspectral image classification. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; IEEE: New York, NY, USA, 2017; pp. 1824–1827.

- Iftene, M.; Liu, Q.; Wang, Y. Very high resolution images classification by fine tuning deep convolutional neural networks. In Proceedings of the Eighth International Conference on Digital Image Processing (ICDIP 2016), Chengdu, China, 20–22 May 2016; SPIE: Bellingham, WA, USA, 2016; Volume 10033, pp. 464–468.

- Thorpe, M.; van Gennip, Y. Deep limits of residual neural networks. Res. Math. Sci. 2023, 10, 6.

- Targ, S.; Almeida, D.; Lyman, K. ResNet in ResNet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

- Durga, B.K.; Rajesh, V. A ResNet deep learning based facial recognition design for future multimedia applications. Comput. Electr. Eng. 2022, 104, 108384.

- Koonce, B. ResNet 50. In Convolutional Neural Networks with Swift for TensorFlow: Image Recognition and Dataset Categorization; Apress: Berkeley, CA, USA, 2021; pp. 63–72.

- Panda, M.K.; Subudhi, B.N.; Veerakumar, T.; Jakhetiya, V. Modified ResNet-152 network with hybrid pyramidal pooling for local change detection. IEEE Trans. Artif. Intell. 2023, 5, 1599–1612.

- Demir, A.; Yilmaz, F.; Kose, O. Early detection of skin cancer using deep learning architectures: ResNet-101 and Inception-V3. In 2019 Medical Technologies Congress (TIPTEKNO), Izmir, Turkey, 3–5 October 2019; IEEE: New York, NY, USA, 2019; pp. 1–4.

- Sainath, T.N.; Kingsbury, B.; Soltau, H.; Ramabhadran, B. Optimization techniques to improve training speed of deep neural networks for large speech tasks. IEEE Trans. Audio Speech Lang. Process. 2013, 21, 2267–2276.

- Ren, P.; Xiao, Y.; Chang, X.; Huang, P.Y.; Li, Z.; Gupta, B.B.; Chen, X.; Wang, X. A survey of deep active learning. ACM Comput. Surv. 2021, 54, 1–40.

- Gal, Y.; Islam, R.; Ghahramani, Z. Deep Bayesian active learning with image data. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, Australia, 6–11 August 2017; pp. 1183–1192.

- Kirsch, A.; van Amersfoort, J.; Gal, Y. BatchBALD: Efficient and diverse batch acquisition for deep Bayesian active learning. Adv. Neural Inf. Process. Syst. 2019, 32. Available online: https://proceedings.neurips.cc/paper_files/paper/2019/hash/95323660ed2124450caaac2c46b5ed90-Abstract.html (accessed on 27 September 2025).

- Sener, O.; Savarese, S. Active learning for convolutional neural networks: A core-set approach. arXiv 2017, arXiv:1708.00489.

- Ash, J.T.; Zhang, C.; Krishnamurthy, A.; Langford, J.; Agarwal, A. Deep batch active learning by diverse, uncertain gradient lower bounds. arXiv 2019, arXiv:1906.03671.

- Zhao, Z.; Zeng, Z.; Xu, K.; Chen, C.; Guan, C. DSAL: Deeply supervised active learning from strong and weak labelers for biomedical image segmentation. IEEE J. Biomed. Health Inform. 2021, 25, 3744–3751.

- Zhang, C.; Chaudhuri, K. Active learning from weak and strong labelers. Adv. Neural Inf. Process. Syst. 2015, 28. Available online: https://proceedings.neurips.cc/paper_files/paper/2015/hash/eba0dc302bcd9a273f8bbb72be3a687b-Abstract.html (accessed on 27 September 2025).

- Hanneke, S. Theory of disagreement-based active learning. Found. Trends Mach. Learn. 2014, 7, 131–309.

- Tosh, C.J.; Hsu, D. Simple and near-optimal algorithms for hidden stratification and multi-group learning. In Proceedings of the 39th International Conference on Machine Learning (ICML), Baltimore, MD, USA, 17–23 July 2022; pp. 21633–21657.

- Du, P.; Chen, H.; Zhao, S.; Chai, S.; Chen, H.; Li, C. Contrastive active learning under class distribution mismatch. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 4260–4273.

- Raghavan, H.; Madani, O.; Jones, R. Active learning with feedback on features and instances. J. Mach. Learn. Res. 2006, 7, 1655–1686.

- Shah, N.A.; Safaei, B.; Sikder, S.; Vedula, S.S.; Patel, V.M. StepAL: Step-aware active learning for cataract surgical videos. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Marrakesh, Morocco, 6–10 October 2025; Springer Nature: Cham, Switzerland, 2025; pp. 552–562.

- Ildiz, M.E.; Huang, Y.; Li, Y.; Rawat, A.S.; Oymak, S. From self-attention to Markov models: Unveiling the dynamics of generative transformers. arXiv 2024, arXiv:2402.13512.

- Makkuva, A.V.; Bondaschi, M.; Girish, A.; Nagle, A.; Jaggi, M.; Kim, H.; Gastpar, M. Attention with Markov: A framework for principled analysis of transformers via Markov chains. arXiv 2024, arXiv:2402.04161.

- Al-Azzawi, A.; Ouadou, A.; Max, H.; Duan, Y.; Tanner, J.J.; Cheng, J. DeepCryoPicker: Fully automated deep neural network for single protein particle picking in cryo-EM. BMC Bioinform. 2020, 21, 509.

- Al-Azzawi, A.; Ouadou, A.; Tanner, J.J.; Cheng, J. AutoCryoPicker: An unsupervised learning approach for fully automated single particle picking in cryo-EM images. BMC Bioinform. 2019, 20, 326.

- Al-Azzawi, A.; Ouadou, A.; Tanner, J.J.; Cheng, J. A super-clustering approach for fully automated single particle picking in cryo-EM. Genes 2019, 10, 666.

- Alani, A.A.; Al-Azzawi, A. Optimizing web page retrieval performance with advanced query expansion: Leveraging ChatGPT and metadata-driven analysis. J. Supercomput. 2025, 81, 569.

- Al-Azzawi, A. Deep semantic segmentation-based unlabeled positive CNN’s loss function for fully automated human finger vein identification. In AIP Conference Proceedings; AIP Publishing: Melville, NY, USA, 2023; Volume 2872.

- Chowdhary, K. Natural language processing. In Fundamentals of Artificial Intelligence; Springer: Cham, Switzerland, 2020; pp. 603–649.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; IEEE: New York, NY, USA, 2015; pp. 1440–1448.

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2019, 10, 18–31.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended Cohn–Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition—Workshops (CVPRW), San Francisco, CA, USA, 13–18 June 2010; IEEE: New York, NY, USA, 2010; pp. 94–101.

- Goodfellow, I.J.; Erhan, D.; Carrier, P.-L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.-H.; et al. Challenges in representation learning: A report on three machine learning contests. In Proceedings of the International Conference on Neural Information Processing (ICONIP), Daegu, Republic of Korea, 3–7 November 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124.

- Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. Emotic: Emotions in context dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 61–69.

- Azzawi, A.A.; Al-Saedi, M.A. Face recognition based on mixed between selected feature by multiwavelet and particle swarm optimization. In Proceedings of the 2010 Developments in e-Systems Engineering (DeSE), London, UK, 6–8 September 2010; IEEE: New York, NY, USA, 2010; pp. 199–204.

- Al-Azzawi, A.; Hind, J.; Cheng, J. Localized deep-CNN structure for face recognition. In Proceedings of the 2018 11th International Conference on Developments in eSystems Engineering (DeSE), Cambridge, UK, 2–5 September 2018; IEEE: New York, NY, USA, 2018; pp. 52–57.

- Al-Azzawi, A.; Al-Sadr, H.; Cheng, J.; Han, T.X. Localized Deep Norm-CNN structure for face verification. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; IEEE: New York, NY, USA, 2018; pp. 8–15.

- Al-Azzawi, A. Deep learning approach for secondary structure protein prediction based on first level features extraction using a latent CNN structure. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 4.

- Al-Azzawi, A.; Alsaedi, M. Secondary structure protein prediction-based first level features extraction using U-Net and sparse auto-encoder. JOIV Int. J. Inform. Vis. 2025, 9, 1476–1484.

- Al-Azzawi, A.; Hussein, M.K. Fully automated unsupervised learning approach for thermal camera calibration and an accurate COVID-19 human temperature tracking. Multidiscip. Sci. J. 2025, 7, 2025058.

- Al-Azzawi, A.; Hussein, M.K. Fully automated real-time approach for human temperature prediction and COVID-19 detection-based thermal skin face extraction using deep semantic segmentation. Multidiscip. Sci. J. 2025, 7, 2025065.

- Al Kafaf, D.; Thamir, N.; Al-Azzawi, A. Breast cancer prediction: A CNN approach. Multidiscip. Sci. J. 2024, 6, 2024156.

- Alhashmi, S.A.; Al-Azawi, A. A review of the single-stage vs. two-stage detectors algorithm: Comprehensive insights into object detection. Int. J. Environ. Sci. 2025, 11, 775–787.

- Alani, A.A.; Al-Azzawia, A. Design a secure customize search engine based on link’s metadata analysis. In Proceedings of the 2025 5th International Conference on Innovative Research in Applied Science, Engineering and Technology (IRASET), Tangier, Morocco, 15–16 May 2025; IEEE: New York, NY, USA, 2025; pp. 1–7.

- Gao, R.; Lu, H.; Al-Azzawi, A.; Li, Y.; Zhao, C. DRL-FVRestore: An adaptive selection and restoration method for finger vein images based on deep reinforcement. Appl. Sci. 2023, 13, 699.

- Zhang, Z.; Lu, H.; He, Z.; Al-Azzawi, A.; Ma, S.; Lin, C. Spore: Spatio-temporal collaborative perception and representation space disentanglement for remote heart rate measurement. Neurocomputing 2025, 630, 129717.

- Al-Azzawi, A. An efficient spatially invariant model for fingerprint authentication based on particle swarm optimization. Unpubl. Manuscr. Available online: https://www.researchgate.net/publication/322499068_An_Efficient_Spatially_Invariant_Model_for_Fingerprint_Authentication_based_on_Particle_Swarm_Optimization (accessed on 27 September 2025).

- Al-Ghalibi, M.; Al-Azzawi, A.; Lawonn, K. NLP-based sentiment analysis for Twitter’s opinion mining and visualization. In Proceedings of the Eleventh International Conference on Machine Vision (ICMV 2018), Munich, Germany, 1–3 November 2018; SPIE: Bellingham, WA, USA, 2019; Volume 11041, pp. 618–626.

- Al-Azzawi, A.; Mora, F.T.; Lim, C.; Shang, Y. An artificial intelligent methodology-based Bayesian belief networks constructing for big data economic indicators prediction. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 5.

- Janarthan, S.; Thuseethan, S.; Joseph, C.; Palanisamy, V.; Rajasegarar, S.; Yearwood, J. Efficient attention–lightweight deep learning architecture integration for plant pest recognition. IEEE Trans. AgriFood Electron. 2025, 3, 548–560.

- Wang, H.; Sun, H.M.; Zhang, W.L.; Chen, Y.X.; Jia, R.S. FANN: A novel frame attention neural network for student engagement recognition in facial video. Vis. Comput. 2025, 41, 6011–6025.

| Dataset | Number of Classes | Total Images | Train/Val/Test Split | Notes |
|---|---|---|---|---|
| AffectNet | 8 | ~1,000,000 | 450k/500/500 | Large-scale, in-the-wild facial expressions with high variability |
| CK+ | 7 | ~600 | 327/100/170 | Controlled lab environment, posed expressions |
| FER-2013 | 7 | 35,887 | 28,709/3589/3589 | Crowdsourced, noisy labels, widely used benchmark |
| EMOTIC | 26 | ~23,000 | 17,000/3000/3000 | Context-rich dataset, emotions in natural driving-like environments |

| Scenario Type | Samples | Lighting Conditions | Occlusion Rate | Use Case |
|---|---|---|---|---|
| Highway Daylight | 28,742 | Consistent | 12% | Baseline Performance |
| Urban Night Driving | 19,885 | Low/Artificial | 18% | Low-Light Robustness |
| Tunnel Transitions | 8932 | Rapid Changes | 22% | Adaptive Illumination |
| Laboratory-Collected | 35,887 | Controlled | 0% | Initial Warm-up |

| Dataset | Original PSNR (dB) | Original MSE | Original SNR | Preprocessed PSNR (dB) | Preprocessed MSE | Preprocessed SNR |
|---|---|---|---|---|---|---|
| 1 | 50.18117 | 0.185031 | 32.683075 | 56.17213 | 0.005417 | 35.383724 |
| 2 | 60.11579 | 0.059399 | 30.955073 | 66.10306 | 0.019574 | 31.917117 |
| 3 | 60.25558 | 0.057518 | 31.015714 | 62.24455 | 0.007664 | 32.038335 |
| 4 | 60.12888 | 0.059221 | 31.021141 | 64.11828 | 0.009365 | 32.048946 |
| 5 | 51.28006 | 0.090647 | 31.432153 | 59.26693 | 0.010922 | 32.875347 |

All values are dataset averages, for the original and the preprocessed images respectively.
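The PSNR, MSE, and SNR columns above follow standard image-quality definitions. A minimal sketch, assuming 8-bit images (peak value 255) and SNR defined as reference-signal power over error power in dB; the document does not state which reference image or peak value was used for these averages, so the numbers produced here are not meant to reproduce the table.

```python
import numpy as np


def mse(ref, test):
    """Mean squared error between two images of the same shape."""
    diff = ref.astype(np.float64) - test.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(ref, test, max_val=255.0):
    """Peak signal-to-noise ratio in dB; max_val=255 assumes 8-bit images."""
    m = mse(ref, test)
    return float("inf") if m == 0 else 10.0 * np.log10(max_val ** 2 / m)


def snr(ref, test):
    """Signal-to-noise ratio in dB: reference power over error power."""
    ref = ref.astype(np.float64)
    noise = np.sum((ref - test.astype(np.float64)) ** 2)
    return float("inf") if noise == 0 else 10.0 * np.log10(np.sum(ref ** 2) / noise)


# One pixel of a flat 4x4 8-bit image perturbed by +10 grey levels.
ref = np.full((4, 4), 100, dtype=np.uint8)
test = ref.copy()
test[0, 0] = 110
print(mse(ref, test), psnr(ref, test), snr(ref, test))  # 6.25, about 40.17 dB, about 32.04 dB
```

Casting to `float64` before subtracting matters: subtracting two `uint8` arrays directly would wrap around instead of producing negative differences.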

| Model | Dataset | Accuracy (%) | F1 Score (%) | AUC (%) |
|---|---|---|---|---|
| CNN (baseline) | AffectNet | 82.14 | 83.76 | 84.12 |
| ResNet-50 | AffectNet | 89.25 | 90.42 | 91.08 |
| SE-ResNet-50 | AffectNet | 92.38 | 93.25 | 94.12 |
| ALDAM (ours) | AffectNet | 97.58 | 98.64 | 98.76 |
| CNN (baseline) | FER-2013 | 78.62 | 79.25 | 80.12 |
| SE-ResNet-50 | FER-2013 | 86.71 | 87.94 | 88.02 |
| ALDAM (ours) | FER-2013 | 94.35 | 95.80 | 96.22 |
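Accuracy and F1 score can be computed from a confusion matrix built from the TP, FP, and FN counts listed in the Abbreviations. This sketch assumes macro-averaged F1 (the unweighted mean of per-class F1), which the document does not specify; AUC is omitted because it requires per-class scores rather than hard predictions.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows: true class, columns: predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def accuracy_and_macro_f1(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp          # predicted as class k but actually another class
    fn = cm.sum(axis=1) - tp          # true class k but predicted as another class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, tp + fp, out=zeros.copy(), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=zeros.copy(), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return tp.sum() / cm.sum(), f1.mean(), recall


# Toy 3-class example.
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])
cm = confusion_matrix(y_true, y_pred, n_classes=3)
acc, macro_f1, per_class_recall = accuracy_and_macro_f1(cm)
```

Macro averaging gives every emotion class equal influence, so it penalizes weak performance on rare classes more than accuracy does; a support-weighted average would sit closer to accuracy on imbalanced data.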

| Emotion Class | SE-ResNet-50 (%) | ALDAM (%) |
|---|---|---|
| Anger | 85.1 | 92.8 |
| Disgust | 74.5 | 90.2 |
| Fear | 72.3 | 89.7 |
| Happiness | 96.4 | 98.9 |
| Sadness | 84.8 | 93.6 |
| Surprise | 91.5 | 97.4 |
| Neutral | 88.6 | 95.2 |

| Dataset | Accuracy (%) | F1 Score (%) | AUC (%) | Labeling Effort Reduction | Improvement over Baselines |
|---|---|---|---|---|---|
| AffectNet | 97.21 | 98.35 | 98.42 | ~40% | +32.8% |
| CK+ | 98.14 | 99.02 | 99.18 | ~40% | +34.44% |
| FER-2013 | 96.85 | 98.12 | 98.56 | ~40% | +30.1% |
| EMOTIC | 98.12 | 98.78 | 99.03 | ~40% | +33.2% |
| Average | 97.58 | 98.64 | 98.76 | ~40% | +34.44% |

| Model | Year | Dataset | Accuracy (%) | Reference |
|---|---|---|---|---|
| Deep-Emotion | 2015 | FER2013 | 70.02 | [85] |
| VGG-16 | 2016 | FER2013 | 60.66 | [86] |
| VGG-19 | 2016 | FER2013 | 60.92 | [86] |
| ResNet-50 | 2016 | FER2013 | 58.61 | [87] |
| ResNet-50 + CBAM | 2018 | FER2013 | 59.9 | [88] |
| ResNet-50 + CBAM (Enhanced) | 2023 | FER2013 | 71.24 | [89] |
| Swin-FER | 2024 | FER2013 | 71.11 | [89] |
| Our Model | 2025 | FER2013 | 98.75 | - |
| ResNet-50 | 2016 | AffectNet | 58 | [89] |
| VGG-16 | 2016 | AffectNet | 57 | [85] |
| Swin-FER | 2024 | AffectNet | 66 | [89] |
| Our Model | 2025 | AffectNet | 98.71 | - |

| Method Category | Accuracy Gain (%) | Computational Cost Increase | Memory Requirement Increase |
|---|---|---|---|
| Conventional → CNN | 28.9 | 12× | 8× |
| CNN → SE-ResNet-50 | 3.7 | 1.8× | 1.5× |
| SE-ResNet-50 → Ours | 12.18 | 0.9× | 1.1× |

| Driving Scenario | Accuracy (%) | F1 Score (%) | Notes |
|---|---|---|---|
| Highway daylight | 98.7 | 98.6 | Consistent lighting |
| Urban night driving | 97.9 | 98.4 | Low-light, artificial illumination |
| Tunnel transitions | 97.2 | 98.2 | Rapid light changes, high glare |
| Laboratory-controlled | 98.9 | 98.6 | Ideal conditions |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-dabbagh, B.S.N.; Ledezma Espino, A.; Miguel, A.S.d. An Active Learning and Deep Attention Framework for Robust Driver Emotion Recognition. Algorithms 2025, 18, 669. https://doi.org/10.3390/a18100669

Al-dabbagh BSN, Ledezma Espino A, Miguel ASd. An Active Learning and Deep Attention Framework for Robust Driver Emotion Recognition. Algorithms. 2025; 18(10):669. https://doi.org/10.3390/a18100669

Chicago/Turabian Style: Al-dabbagh, Bashar Sami Nayyef, Agapito Ledezma Espino, and Araceli Sanchis de Miguel. 2025. "An Active Learning and Deep Attention Framework for Robust Driver Emotion Recognition" Algorithms 18, no. 10: 669. https://doi.org/10.3390/a18100669

APA Style: Al-dabbagh, B. S. N., Ledezma Espino, A., & Miguel, A. S. d. (2025). An Active Learning and Deep Attention Framework for Robust Driver Emotion Recognition. Algorithms, 18(10), 669. https://doi.org/10.3390/a18100669