Hybrid Artificial Bee Colony Algorithm for Test Case Generation and Optimization

Abstract

1. Introduction

2. Related Work

2.1. Classical Test Design Techniques

2.1.1. Equivalence Partitioning

2.1.2. Boundary Value Analysis

2.1.3. Pairwise Testing

2.1.4. Bug Masking

2.2. Metaheuristic Algorithms for Test Optimization

2.3. Artificial Bee Colony Algorithm

2.4. Hybrid Metaheuristic Approaches in Test Generation

3. Proposed Method

3.1. Phases and Types of Bees

3.2. Evaluation of Solutions and Selection

- S(v_i) represents the base score of value v_i;
- B(v_i) is the uniqueness bonus;
- P(t) is the global penalty for defects.
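The three terms defined above suggest an additive fitness for a test case t with values v_1, ..., v_n. The expression below is a sketch under that assumption, not the authors' stated equation: the symbol F(t), the summation bounds, and the sign convention for P(t) are assumptions chosen to agree with the scoring logic of Algorithm 2.

```latex
F(t) \;=\; \sum_{i=1}^{n} \bigl( S(v_i) + B(v_i) \bigr) \;-\; P(t)
```

Under this reading, S and B accumulate per parameter value, while P is applied once per test case.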

3.3. Integrated Metaheuristics

3.3.1. Influence of Parameters on the Final Outcome

3.3.2. Adaptive Scout Phase

3.3.3. Directed Onlooker Selection via Restricted Candidate List

3.3.4. Scout Bee Mechanism for Escaping Local Optima

3.3.5. Simulated Annealing (SA) and Hill Climbing (HC)

3.3.6. Avoiding Duplicate Solutions (Tabu Search)

3.3.7. Fast Local Search (FLS)

3.3.8. Combined Strategy with Genetic Algorithm Components

3.4. Summary: When and Why to Use ABC

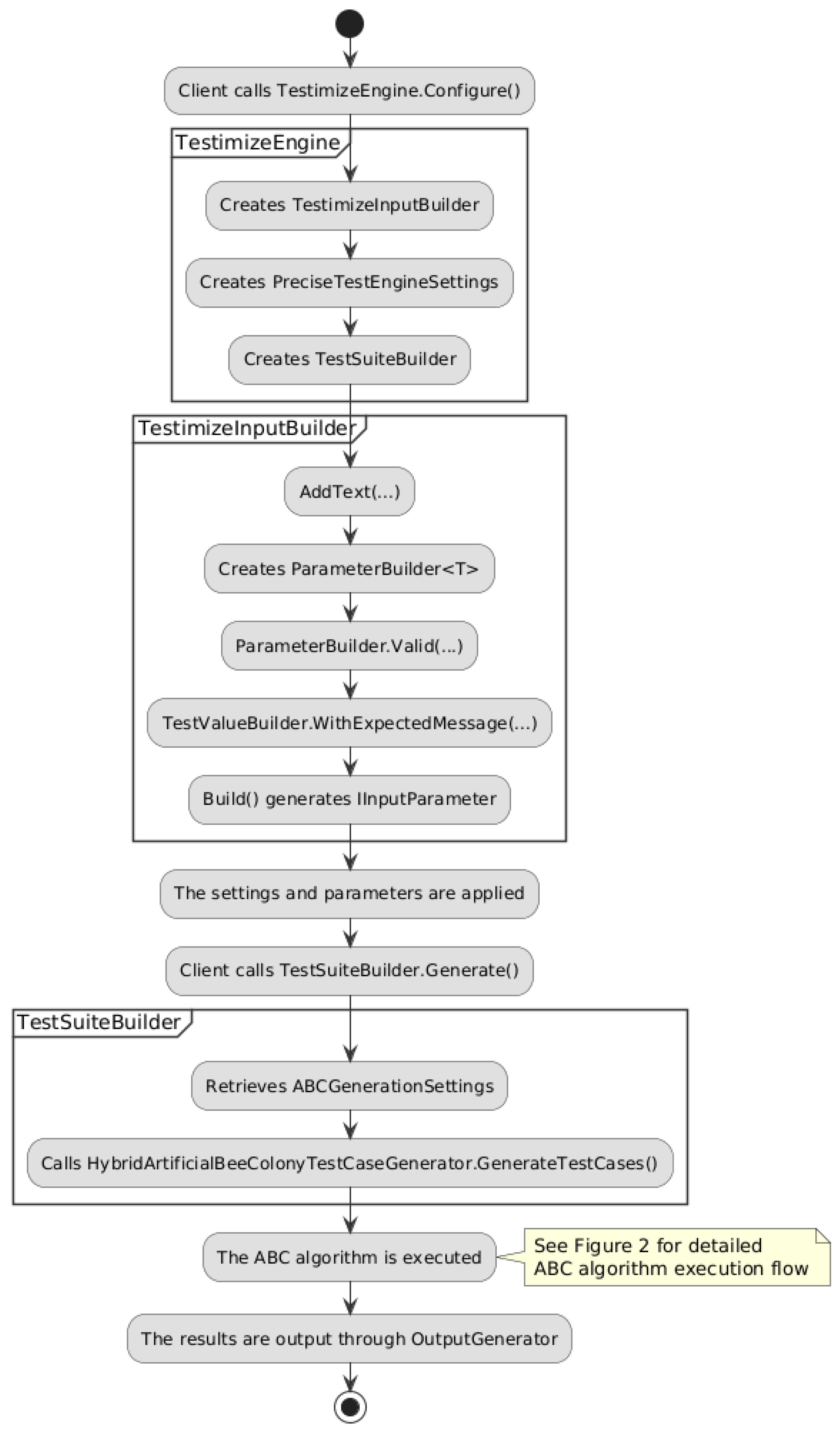

4. Program Implementation and Algorithm Logic

4.1. Implementation of the Hybrid Algorithm

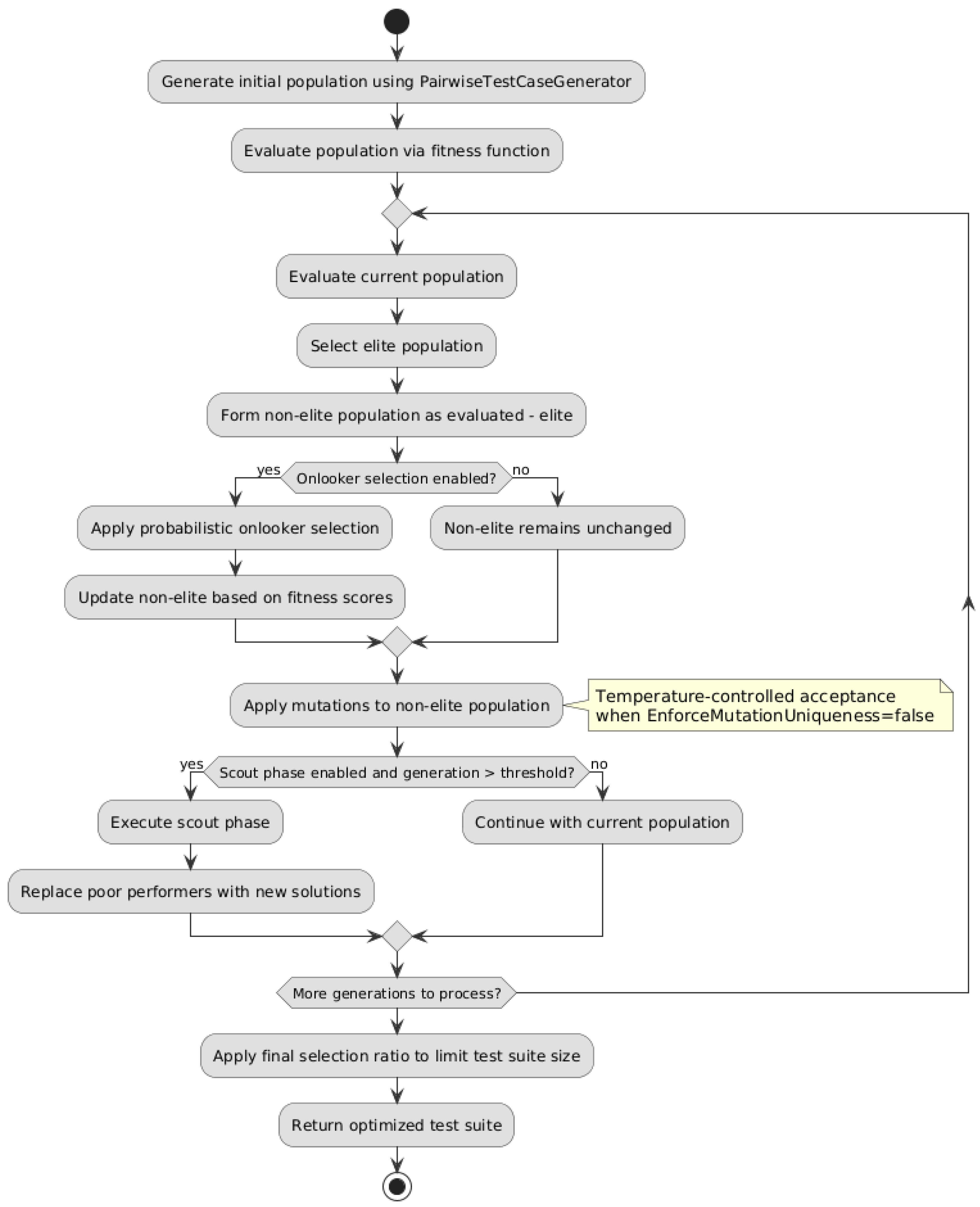

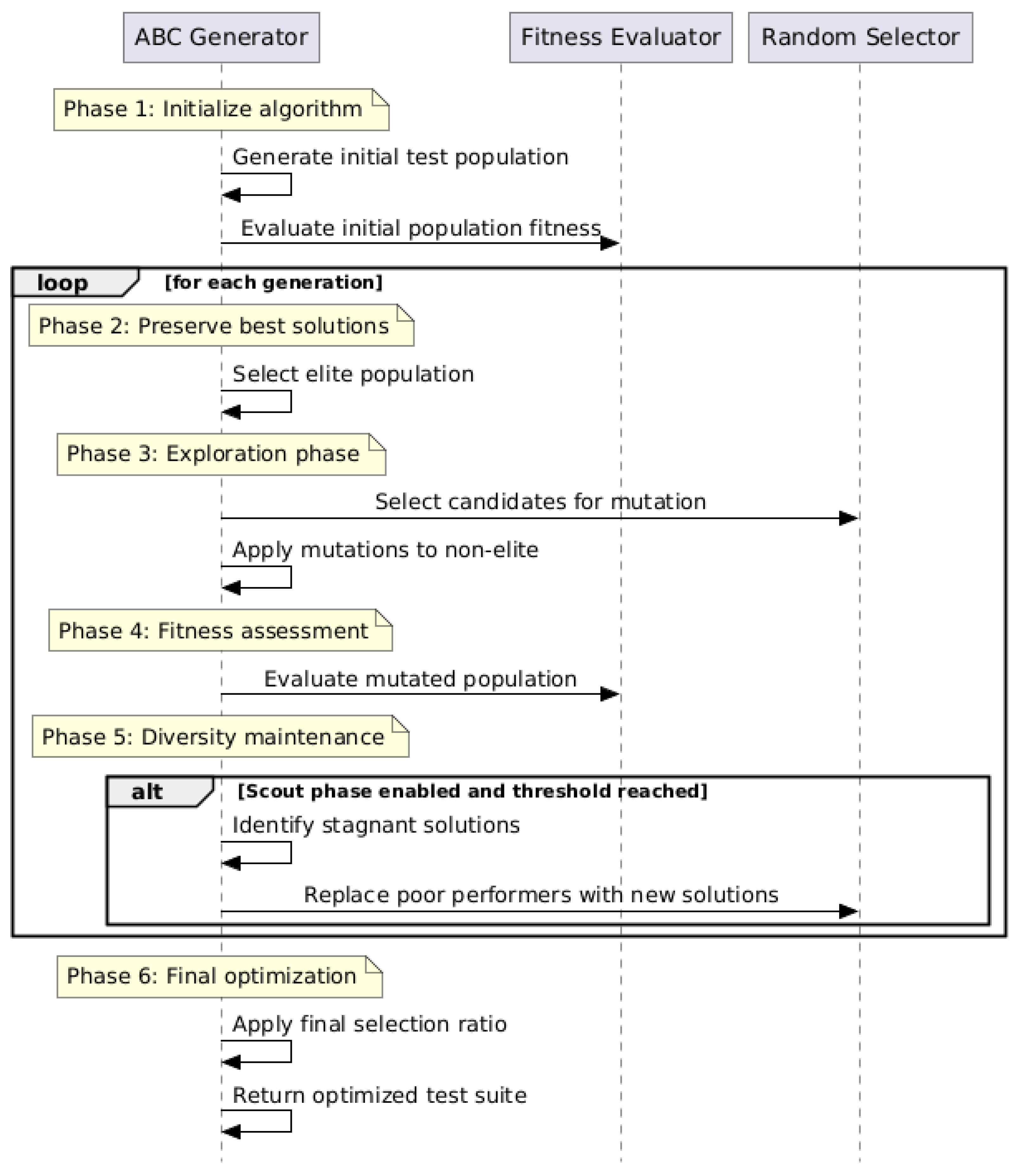

4.2. Main Phases of the Hybrid ABC Algorithm

Algorithm 1. Hybrid ABC Test Case Generation

Input: Parameters P, TestValues V, Settings S
Output: Optimized test suite T

4.3. Configuration Parameters and Adaptive Behavior

4.4. Evaluation of Solutions: Fitness Function

Algorithm 2. Evaluate Test Case Fitness

Input: TestCase tc, Population P, GlobalHistory H
Output: Fitness score

score ← 0
// Initialize count for population-unique values
firstTimeCount ← 0
invalidCount ← Count(tc.Values where Category ∈ {Invalid, BoundaryInvalid})
if NOT AllowMultipleInvalid AND invalidCount > 1 then
    return −50 × invalidCount
end if
coveredValues ← GetCoveredValues(P)
for i = 0 to |tc.Values| − 1 do
    value ← tc.Values[i]
    // Base score by category
    switch value.Category do
        BoundaryValid: score ← score + 20
        Valid: score ← score + 2
        BoundaryInvalid: score ← score − 1
        Invalid: score ← score − 2
    end switch
    // Initialize global history for this parameter position if needed
    if i ∉ H.SeenValues then
        H.SeenValues[i] ← ∅
    end if
    // Global first-time bonus (across all generations)
    if value ∉ H.SeenValues[i] then
        H.SeenValues[i].Add(value)
        score ← score + 25
    end if
    // Check if value is new to current population
    if i ∉ coveredValues OR value ∉ coveredValues[i] then
        firstTimeCount ← firstTimeCount + 1
        coveredValues[i].Add(value) // creates the set for position i if it is absent
    end if
end for
// Diversity amplification
if firstTimeCount > 0 then
    multiplier ← 1 + firstTimeCount × 0.25
    score ← score + (25 × multiplier)
end if
return score
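Algorithm 2 can be sketched in Python as follows. This is an illustrative translation rather than the framework's own code: the names `ParamValue`, `GlobalHistory`, `covered_values`, and `evaluate` are hypothetical, a test case is assumed to be a list of values indexed by parameter position, and the numeric constants are taken directly from the pseudocode.

```python
from dataclasses import dataclass, field
from enum import Enum


class Category(Enum):
    BOUNDARY_VALID = "BoundaryValid"
    VALID = "Valid"
    BOUNDARY_INVALID = "BoundaryInvalid"
    INVALID = "Invalid"


# Base score per category, as listed in Algorithm 2.
BASE_SCORE = {
    Category.BOUNDARY_VALID: 20,
    Category.VALID: 2,
    Category.BOUNDARY_INVALID: -1,
    Category.INVALID: -2,
}


@dataclass(frozen=True)
class ParamValue:  # hypothetical container for one parameter value
    value: object
    category: Category


@dataclass
class GlobalHistory:
    # parameter position -> set of raw values seen in any generation
    seen_values: dict = field(default_factory=dict)


def covered_values(population):
    """Collect, per parameter position, the raw values present in the population."""
    covered = {}
    for tc in population:
        for i, v in enumerate(tc):
            covered.setdefault(i, set()).add(v.value)
    return covered


def evaluate(tc, population, history, allow_multiple_invalid=True):
    invalid = sum(
        v.category in (Category.INVALID, Category.BOUNDARY_INVALID) for v in tc
    )
    if not allow_multiple_invalid and invalid > 1:
        return -50 * invalid  # early penalty, no further scoring

    covered = covered_values(population)
    score = 0
    first_time = 0
    for i, v in enumerate(tc):
        score += BASE_SCORE[v.category]
        seen = history.seen_values.setdefault(i, set())
        if v.value not in seen:  # global first-time bonus
            seen.add(v.value)
            score += 25
        position = covered.setdefault(i, set())
        if v.value not in position:  # new to the current population
            first_time += 1
            position.add(v.value)
    if first_time > 0:  # diversity amplification
        score += 25 * (1 + first_time * 0.25)
    return score
```

Because `history` is mutated on every call, the same test case scores lower the second time it is evaluated: the global first-time bonus is paid only once per value and position.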

4.5. Stagnation Handling and Cooling Mechanisms

Algorithm 3. Mutate Population

Input: nonElitePopulation, parameters, generation
Output: updatedPopulation

temperature ← max(0.1, 1.0 × CoolingRate^generation)
for each original in nonElitePopulation do
    mutated ← ApplyMutation(original, parameters) // MutationRate check inside
    if mutated ≠ original and mutated ∉ evaluatedPopulation then
        originalScore ← Evaluate(original)
        mutatedScore ← Evaluate(mutated)
        if EnforceMutationUniqueness then
            accept ← mutatedScore > originalScore
        else
            Δ ← mutatedScore − originalScore
            acceptance ← exp(Δ / temperature)
            accept ← Δ > 0 or random() < acceptance
        end if
        if accept then
            Replace original with mutated in evaluatedPopulation
        end if
    end if
end for
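The acceptance rule of Algorithm 3 can be sketched in Python as below. This is a minimal sketch, not the framework's implementation: `mutate_population`, the callable parameters `evaluate` and `apply_mutation`, and the injectable `rng` are hypothetical names, and the working population is assumed to be the non-elite list itself.

```python
import math
import random


def mutate_population(non_elite, evaluate, apply_mutation, generation,
                      cooling_rate=0.95, enforce_uniqueness=False, rng=random):
    """Return a copy of non_elite with accepted mutations swapped in."""
    # Temperature decays geometrically and is floored at 0.1.
    temperature = max(0.1, 1.0 * cooling_rate ** generation)
    population = list(non_elite)
    for idx, original in enumerate(list(population)):
        mutated = apply_mutation(original)
        # Skip no-op mutations and candidates already in the population.
        if mutated == original or mutated in population:
            continue
        delta = evaluate(mutated) - evaluate(original)
        if enforce_uniqueness:
            accept = delta > 0  # greedy: improving moves only
        else:
            # Simulated annealing: a worse move is accepted with
            # probability exp(delta / temperature). The exponential is
            # evaluated only when delta <= 0, so it cannot overflow.
            accept = delta > 0 or rng.random() < math.exp(delta / temperature)
        if accept:
            population[idx] = mutated
    return population
```

With `enforce_uniqueness=True` the routine behaves like hill climbing. With it set to `False`, worsening moves are accepted fairly often in early generations and rarely once the temperature approaches its floor.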

5. Analysis of Experimental Results

5.1. Configuration and Evaluation Criteria

5.2. Experimental Scenario and Results

5.3. Comparative Analysis of Results

5.3.1. Behavior with Activation/Deactivation of Onlooker and Scout Phases

5.3.2. Influence of Parameters Such as MutationRate, EliteRatio, CoolingRate, and Others

5.4. Results and Observations

5.5. Advantages and Limitations of Each Approach

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ABC | Artificial Bee Colony |
| BVA | Boundary Value Analysis |
| EP | Equivalence Partitioning |
| GA | Genetic Algorithms |
| SA | Simulated Annealing |
| GLS | Guided Local Search |
| RCL | Restricted Candidate List |
| HC | Hill Climbing |
| TS | Tabu Search |
| FLS | Fast Local Search |


| Category | Examples | Contribution |
|---|---|---|
| Boundary Valid | 14, 99 | +20 points |
| Valid | 25, “test@example.com” | +2 points |
| Boundary Invalid | −1, 1000 | −1 point |
| Invalid | null, “!” | −2 points |

| Parameter | Valid Range | Default | Optimal | Description |
|---|---|---|---|---|
| FinalPopulationSelectionRatio | 0.1–0.9 | 0.5 | 0.6–0.8 | Proportion of the population retained in the final test suite |
| EliteSelectionRatio | 0.0–0.8 | 0.5 | 0.3–0.6 | Share of the best test cases preserved between generations |
| TotalGenerations | 10–150+ | 50 | 100–150 | Number of ABC algorithm iterations |
| MutationRate | 0.0–1.0 | 0.3 | 0.5–0.8 | Probability of changing a value in a test case |
| CoolingRate | 0.5–0.99 | 0.95 | 0.9–0.95 | Rate at which mutation activity decreases (SA component) |
| OnlookerSelectionRatio | 0.0–0.95 | 0.1 | 0.3–0.4 | Proportion of solutions selected during the onlooker phase |
| ScoutSelectionRatio | 0.0–0.95 | 0.3 | 0.1–0.3 | Proportion of stagnant solutions replaced by scouts |
| StagnationThresholdPercentage | 0.3–0.9 | 0.75 | 0.3–0.75 | Fraction of generations after which the scout phase activates |
| EnableOnlookerSelection | true/false | false | true | Enables or disables the onlooker phase |
| EnableScoutPhase | true/false | false | true | Enables or disables the scout phase |
| EnforceMutationUniqueness | true/false | true | false | When true, accepts only improving mutations; when false, applies SA acceptance (see Algorithm 3) |
| AllowMultipleInvalidValues | true/false | true | false | Determines whether multiple invalid values are allowed per test case |
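The parameter table above can be captured as a small configuration object. The sketch below is illustrative only: the class name `HybridAbcSettings`, the snake_case field names, and the `validate` helper are assumptions, while the defaults and bounds are copied from the "Default" and "Valid Range" columns.

```python
from dataclasses import dataclass

# (low, high) bounds copied from the "Valid Range" column of the table.
VALID_RANGES = {
    "final_population_selection_ratio": (0.1, 0.9),
    "elite_selection_ratio": (0.0, 0.8),
    "mutation_rate": (0.0, 1.0),
    "cooling_rate": (0.5, 0.99),
    "onlooker_selection_ratio": (0.0, 0.95),
    "scout_selection_ratio": (0.0, 0.95),
    "stagnation_threshold_percentage": (0.3, 0.9),
}
MIN_GENERATIONS = 10  # the table gives "10–150+", so only a lower bound is checked


@dataclass
class HybridAbcSettings:  # hypothetical name; defaults follow the "Default" column
    final_population_selection_ratio: float = 0.5
    elite_selection_ratio: float = 0.5
    total_generations: int = 50
    mutation_rate: float = 0.3
    cooling_rate: float = 0.95
    onlooker_selection_ratio: float = 0.1
    scout_selection_ratio: float = 0.3
    stagnation_threshold_percentage: float = 0.75
    enable_onlooker_selection: bool = False
    enable_scout_phase: bool = False
    enforce_mutation_uniqueness: bool = True
    allow_multiple_invalid_values: bool = True

    def validate(self):
        """Return the names of settings outside the tabulated ranges."""
        bad = [
            name
            for name, (low, high) in VALID_RANGES.items()
            if not low <= getattr(self, name) <= high
        ]
        if self.total_generations < MIN_GENERATIONS:
            bad.append("total_generations")
        return bad


# Values matching configuration 2 of the results tables
# (100 generations, both optional phases enabled).
tuned = HybridAbcSettings(
    total_generations=100,
    mutation_rate=0.5,
    final_population_selection_ratio=0.6,
    elite_selection_ratio=0.3,
    enable_onlooker_selection=True,
    enable_scout_phase=True,
)
```

Returning the offending names instead of raising lets a caller report every out-of-range setting in one pass.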

| ID | Generations | MutationRate | FinalPopulationSelectionRatio | EliteSelectionRatio | Onlooker | Scout | ABC | Pairwise | 95% CI | Δ% | t | p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| *1 | 150 * | 0.80 | 0.60 | 0.60 | Y | Y | 976.0 ± 10.5 | 580 ± 0 | [968–984] | 68.3 | 118.8 | <0.001 |
| *2 | 100 * | 0.50 | 0.60 | 0.30 | Y | Y | 975.9 ± 10.6 | 580 ± 0 | [968–983] | 68.3 | 118.1 | <0.001 |
| 3 | 100 | 0.50 | 0.60 | 0.30 | Y | N | 973.5 ± 7.9 | 580 ± 0 | [968–979] | 67.8 | 157.4 | <0.001 |
| 4 | 100 | 0.50 | 0.60 | 0.30 | N | Y | 971.6 ± 2.3 | 580 ± 0 | [970–973] | 67.5 | 545.4 | <0.001 |
| 5 | 100 | 0.50 | 0.60 | 0.30 | N | N | 970.9 ± 0.3 | 580 ± 0 | [971–971] | 67.4 | 390.9 | <0.001 |
| *6 | 100 * | 0.50 | 0.60 | 0.60 | Y | Y | 976.0 ± 10.5 | 580 ± 0 | [968–984] | 68.3 | 118.8 | <0.001 |
| 7 | 50 | 0.45 | 0.50 | 0.50 | Y | Y | 896.3 ± 11.0 | 775 ± 0 | [888–904] | 15.7 | 34.9 | <0.001 |
| 8 | 50 | 0.35 | 0.55 | 0.45 | Y | Y | 922.2 ± 7.3 | 780 ± 0 | [917–927] | 18.2 | 61.7 | <0.001 |
| 9 | 50 | 0.40 | 0.50 | 0.50 | Y | Y | 895.5 ± 12.1 | 775 ± 0 | [887–904] | 15.5 | 31.6 | <0.001 |
| 10 | 60 | 0.50 | 0.50 | 0.70 | Y | Y | 887.1 ± 11.4 | 775 ± 0 | [879–895] | 14.5 | 31.0 | <0.001 |
| 11 | 70 | 0.60 | 0.50 | 0.60 | Y | Y | 895.5 ± 12.1 | 775 ± 0 | [887–904] | 15.5 | 31.6 | <0.001 |
| 12 | 100 | 0.70 | 0.50 | 0.60 | N | N | 898.0 ± 10.5 | 775 ± 0 | [890–906] | 15.9 | 36.9 | <0.001 |
| 13 | 100 | 0.80 | 0.40 | 0.60 | N | N | 743.5 ± 12.1 | 678 ± 0 | [735–752] | 9.7 | 17.2 | <0.001 |
| 14 | 100 | 0.40 | 0.50 | 0.50 | N | N | 898.0 ± 10.5 | 775 ± 0 | [890–906] | 15.9 | 36.9 | <0.001 |
| 15 | 100 | 0.40 | 0.40 | 0.50 | N | N | 746.0 ± 12.9 | 678 ± 0 | [737–755] | 10.0 | 16.7 | <0.001 |
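The derived columns of the table above (Δ%, t, and the 95% CI) can be approximately reproduced from the ABC mean, its spread, and the pairwise baseline. The sketch below rests on assumptions that are not stated in the table itself: that the "±" value is a sample standard deviation, that each configuration was run n = 10 times (a value inferred because it makes the reported numbers line up), and that t is a one-sample statistic against the constant pairwise score. The function name `summarize` is hypothetical.

```python
import math

# Two-sided 95% critical value of Student's t for 9 degrees of freedom,
# matching the assumed n = 10 runs.
T_CRIT_DF9 = 2.262


def summarize(mean, sd, baseline, n=10, t_crit=T_CRIT_DF9):
    """Return (delta_pct, t_stat, (ci_low, ci_high)) for one table row."""
    se = sd / math.sqrt(n)                       # standard error of the mean
    delta_pct = (mean - baseline) / baseline * 100
    t_stat = (mean - baseline) / se              # one-sample t against baseline
    ci = (mean - t_crit * se, mean + t_crit * se)
    return delta_pct, t_stat, ci


# Row 1 of the table: ABC 976.0 ± 10.5 against a pairwise score of 580.
delta_pct, t_stat, ci = summarize(976.0, 10.5, 580)
```

For row 1 this gives Δ% ≈ 68.3 and an interval that rounds to [968–984], as reported. The computed t comes out slightly above the reported 118.8, which is consistent with the standard deviation having been rounded to one decimal before publication.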

| ID | Generations | MutationRate | FinalPopulationSelectionRatio | EliteSelectionRatio | Onlooker | Scout | ABC | Pairwise | 95% CI | Δ% | t | p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 150 | 0.80 | 0.60 | 0.60 | Y | Y | 1040.0 ± 16.7 | 885 ± 0 | [1028–1052] | 17.5 | 29.4 | <0.001 |
| 2 | 100 | 0.50 | 0.60 | 0.30 | Y | Y | 1050.0 ± 21.1 | 885 ± 0 | [1035–1065] | 18.6 | 24.8 | <0.001 |
| *3 | 100 | 0.50 | 0.60 | 0.30 | Y | N | 1052.5 ± 17.7 | 885 ± 0 | [1040–1065] | 18.9 | 30.0 | <0.001 |
| 4 | 100 | 0.50 | 0.60 | 0.30 | N | Y | 1042.5 ± 18.5 | 885 ± 0 | [1029–1056] | 17.8 | 27.0 | <0.001 |
| 5 | 100 | 0.50 | 0.60 | 0.30 | N | N | 1040.0 ± 20.4 | 885 ± 0 | [1025–1055] | 17.5 | 24.0 | <0.001 |
| 6 | 100 | 0.50 | 0.60 | 0.60 | Y | Y | 1050.0 ± 12.9 | 885 ± 0 | [1041–1059] | 18.6 | 40.4 | <0.001 |
| 7 | 50 | 0.45 | 0.50 | 0.50 | Y | Y | 914.5 ± 24.9 | 807 ± 0 | [897–932] | 13.3 | 13.7 | <0.001 |
| 8 | 50 | 0.35 | 0.55 | 0.45 | Y | Y | 952.6 ± 15.1 | 833 ± 0 | [942–963] | 14.4 | 25.1 | <0.001 |
| 9 | 50 | 0.40 | 0.50 | 0.50 | Y | Y | 912.0 ± 11.8 | 807 ± 0 | [904–920] | 13.0 | 28.2 | <0.001 |
| 10 | 60 | 0.50 | 0.50 | 0.70 | Y | Y | 922.0 ± 12.9 | 807 ± 0 | [913–931] | 14.3 | 28.2 | <0.001 |
| 11 | 70 | 0.60 | 0.50 | 0.60 | Y | Y | 912.0 ± 11.8 | 807 ± 0 | [904–920] | 13.0 | 28.2 | <0.001 |
| 12 | 100 | 0.70 | 0.50 | 0.60 | N | N | 927.0 ± 12.9 | 807 ± 0 | [918–936] | 14.9 | 29.4 | <0.001 |
| 13 | 100 | 0.80 | 0.40 | 0.60 | N | N | 763.0 ± 10.5 | 696 ± 0 | [755–771] | 9.6 | 20.1 | <0.001 |
| 14 | 100 | 0.40 | 0.50 | 0.50 | N | N | 914.5 ± 18.5 | 807 ± 0 | [901–928] | 13.3 | 18.4 | <0.001 |
| 15 | 100 | 0.40 | 0.40 | 0.50 | N | N | 773.0 ± 12.9 | 696 ± 0 | [764–782] | 11.1 | 18.9 | <0.001 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Angelov, A.; Lazarova, M. Hybrid Artificial Bee Colony Algorithm for Test Case Generation and Optimization. Algorithms 2025, 18, 668. https://doi.org/10.3390/a18100668
