Quantifying Statistical Heterogeneity and Reproducibility in Cooperative Multi-Agent Reinforcement Learning: A Meta-Analysis of the SMAC Benchmark

Abstract

1. Introduction

- Compile a robust database of SMAC performance results (win rates) from 2018 to 2024, covering 25 unique algorithm-map pairs across dozens of publications;

- Systematically quantify reproducibility, statistical heterogeneity, and effect sizes in published results;

- Identify evaluation practices and incorporate moderator analysis to contextualize variability and guide improvements in transparency and reproducibility across studies.

2. Study Selection and Data Extraction

2.1. Algorithm Selection

2.2. Map Selection

2.3. Eligibility Criteria

- IC1. The study reports empirical results on the SMAC benchmark and specifies the map sets evaluated.

- IC2. A full win-rate-versus-environment-step curve is provided that extends to ≥2 million environment steps (training timesteps).

- IC3. The map curves include results for ≥4 of the five selected algorithms: IQL, VDN, QMIX, COMA, and QTRAN.

- IC4. The paper presents independent experimental runs (seeds) and reports dispersion statistics (e.g., mean ± SD, 95% CI) for each algorithm.

- IC5. The experiments are conducted with unmodified SMAC environment code for the five selected algorithms.

- EC1. The study does not evaluate any SMAC scenario.

- EC2. Win-rate curves terminate before 2 million steps.

- EC3. Fewer than four of the target algorithms are evaluated in the curves.

- EC4. Win-rates are reported only as scalar end-point metrics.

- EC5. The paper aggregates results from multiple algorithms without per-algorithm statistics, preventing extraction of algorithm-specific effect sizes.
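The inclusion and exclusion criteria above can be read as a screening function. The following is a minimal sketch, not the authors' screening tool: the `StudyRecord` fields and the `screen` helper are hypothetical names introduced here for illustration, and the mapping of each check to a criterion label is an approximation of the list above.

```python
from dataclasses import dataclass

# The five target algorithms named in IC3.
TARGET_ALGORITHMS = {"IQL", "VDN", "QMIX", "COMA", "QTRAN"}
MIN_ENV_STEPS = 2_000_000  # threshold from IC2 / EC2


@dataclass
class StudyRecord:
    """Hypothetical screening record; field names are illustrative only."""
    smac_maps: set            # SMAC maps the study reports (empty if none)
    has_full_curve: bool      # win-rate-vs-step curve, not only end-point scalars
    max_env_steps: int        # last environment step shown on the curve
    algorithms: set           # algorithms that have their own curves
    reports_dispersion: bool  # seeds plus SD / CI / quantile band per algorithm
    unmodified_env: bool      # stock SMAC environment code for target algorithms


def screen(study: StudyRecord) -> list:
    """Return the failed criteria; an empty list means the study is included."""
    failed = []
    if not study.smac_maps:
        failed.append("IC1/EC1")
    if not study.has_full_curve:
        failed.append("IC2/EC4")
    elif study.max_env_steps < MIN_ENV_STEPS:
        failed.append("IC2/EC2")
    if len(study.algorithms & TARGET_ALGORITHMS) < 4:
        failed.append("IC3/EC3")
    if not study.reports_dispersion:
        failed.append("IC4")
    if not study.unmodified_env:
        failed.append("IC5")
    return failed
```

Returning the list of failed criteria, rather than a single boolean, keeps a per-study audit trail of why a paper was excluded.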

2.4. Search Strategy

2.5. Selection Process

2.6. Data Extraction

3. Meta-Analysis

3.1. Overview

3.2. Standard Training Budget

3.3. Primary Meta-Regression Model

3.4. Moderator Analysis

3.5. Prediction Intervals

4. Results

4.1. Overview of Included Evidence

4.2. Original SMAC Means vs. Pooled Means

4.3. Heterogeneity

4.4. Moderator Analysis

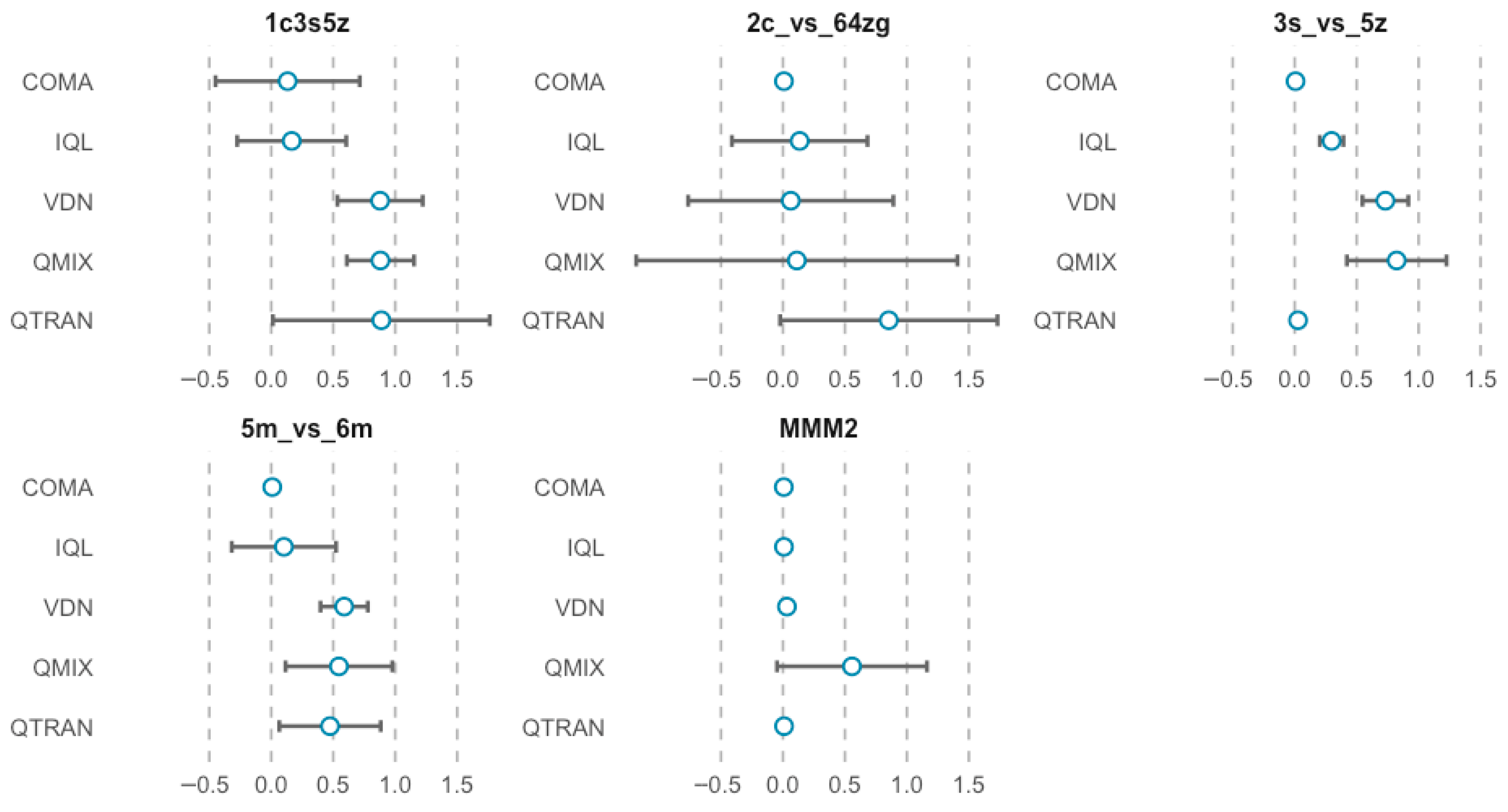

4.5. Prediction Intervals

5. Discussions

5.1. Finding Highlights in Context

5.2. Why RCI and SC2 Version Did Not Explain Variance

5.3. Methodological Limitations

5.4. Recommendations for Benchmarking Practices

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Converting Non-Variance Dispersion to Variance

Appendix A.1. From a 95% Confidence Interval on p

Appendix A.2. From 75% and 50% Confidence Intervals on p

- 75% CI (α = 0.25; z ≈ 1.150):

- 50% CI (α = 0.50; z ≈ 0.674):
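The conversions named in Appendices A.1 and A.2 can be sketched as follows. This assumes a symmetric, normal-based confidence interval on the win rate p, so that the half-width equals the two-sided critical value times the standard error. The function names are hypothetical, and the exact formulas used in the paper are not recoverable from the text above.

```python
from statistics import NormalDist


def critical_value(level: float) -> float:
    """Two-sided standard-normal critical value for a CI of the given level."""
    if not 0.0 < level < 1.0:
        raise ValueError("level must lie strictly between 0 and 1")
    return NormalDist().inv_cdf(0.5 + level / 2.0)


def ci_to_variance(lower: float, upper: float, level: float = 0.95) -> float:
    """Variance implied by a symmetric normal CI (assumption: CI = mean +/- z * SE)."""
    if upper < lower:
        raise ValueError("upper bound must not be below lower bound")
    se = (upper - lower) / (2.0 * critical_value(level))
    return se ** 2


# The same band width implies a larger variance when it is a narrower-level CI.
for level in (0.95, 0.75, 0.50):
    print(level, round(critical_value(level), 3), ci_to_variance(0.40, 0.60, level))
```

Whether the resulting quantity is treated as the sampling variance of the mean win rate depends on how each source paper constructed its interval, so that reading is also an assumption.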

Appendix A.3. From Central Quantile Ranges (IQR Only)

Appendix A.4. Estimating the Mean from Median and IQR (Wan & Luo)

- For :

- For :
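For readers who want to reproduce the quantile-based conversions of Appendices A.3 and A.4, here is a minimal sketch. It uses the three-quantile estimators from the cited Wan et al. (2014) and Luo et al. (2018) papers as best understood here; the case conditions in the list above did not survive extraction, so this sketch does not claim to match the paper's exact variants. The function names and the use of the number of seeds as n are assumptions. Check the formulas against the cited papers before reuse.

```python
from statistics import NormalDist


def sd_from_iqr(q1: float, q3: float, n: int) -> float:
    """SD estimate from the interquartile range (Wan et al. 2014 style).

    Assumes roughly normal data; n is the sample size (here, number of seeds).
    """
    if n < 2:
        raise ValueError("n must be at least 2")
    eta = NormalDist().inv_cdf((0.75 * n - 0.125) / (n + 0.25))
    return (q3 - q1) / (2.0 * eta)


def mean_from_median_iqr(q1: float, median: float, q3: float, n: int) -> float:
    """Mean estimate from median and quartiles (Luo et al. 2018 style weights)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    w = 0.7 + 0.39 / n  # weight on the mid-quartile range
    return w * (q1 + q3) / 2.0 + (1.0 - w) * median


# Example: a skewed win-rate band read off a plot with five seeds.
print(mean_from_median_iqr(0.2, 0.3, 0.6, n=5), sd_from_iqr(0.2, 0.6, n=5))
```

For large n the SD estimate approaches the familiar IQR / 1.349 rule, and for symmetric quartiles the mean estimate reduces to the median.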

Appendix B. Pooled Mean and Robust Variance-Estimated Standard Error

| Map | Algorithm | Pooled Mean | RVE SE |
|---|---|---|---|
| 1c3s5z | IQL | 0.165 | 0.054 |
| 1c3s5z | VDN | 0.878 | 0.050 |
| 1c3s5z | QMIX | 0.881 | 0.044 |
| 1c3s5z | COMA | 0.132 | 0.105 |
| 1c3s5z | QTRAN | 0.888 | 0.011 |
| 2c_vs_64zg | IQL | 0.135 | 0.102 |
| 2c_vs_64zg | VDN | 0.063 | 0.144 |
| 2c_vs_64zg | QMIX | 0.112 | 0.260 |
| 2c_vs_64zg | COMA | 0.008 | 0.008 |
| 2c_vs_64zg | QTRAN | 0.854 | 0.040 |
| 3s_vs_5z | IQL | 0.298 | 0.028 |
| 3s_vs_5z | VDN | 0.732 | 0.030 |
| 3s_vs_5z | QMIX | 0.823 | 0.086 |
| 3s_vs_5z | COMA | 0.007 | 0.008 |
| 3s_vs_5z | QTRAN | 0.027 | 0.013 |
| 5m_vs_6m | IQL | 0.102 | 0.088 |
| 5m_vs_6m | VDN | 0.588 | 0.037 |
| 5m_vs_6m | QMIX | 0.546 | 0.051 |
| 5m_vs_6m | COMA | 0.008 | 0.008 |
| 5m_vs_6m | QTRAN | 0.474 | 0.030 |
| MMM2 | IQL | 0.008 | 0.008 |
| MMM2 | VDN | 0.032 | 0.011 |
| MMM2 | QMIX | 0.557 | 0.018 |
| MMM2 | COMA | 0.007 | 0.008 |
| MMM2 | QTRAN | 0.009 | 0.008 |
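As an illustration of how a pooled mean and its RVE standard error translate into a prediction interval (Sections 3.5 and 4.5), the sketch below combines the between-study variance with the squared standard error. Several assumptions are made. It uses a normal quantile, whereas the Higgins et al. formulation uses a t quantile whose degrees of freedom depend on the number of studies, which is not given in this text. It applies the null-model τ² of 0.00689 from the heterogeneity table to a single cell. It clips the interval to [0, 1] because win rates are proportions. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def prediction_interval(pooled_mean: float, se: float, tau2: float,
                        level: float = 0.95) -> tuple:
    """Approximate prediction interval for the win rate in a new study.

    Normal approximation: mean +/- z * sqrt(tau^2 + SE^2), clipped to [0, 1].
    """
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    half_width = z * math.sqrt(tau2 + se ** 2)
    lower = max(0.0, pooled_mean - half_width)
    upper = min(1.0, pooled_mean + half_width)
    return lower, upper


# MMM2 / QMIX row of Appendix B, with the null-model tau^2 (assumed to apply).
low, high = prediction_interval(pooled_mean=0.557, se=0.018, tau2=0.00689)
print(f"approx. 95% PI: [{low:.3f}, {high:.3f}]")
```

Because τ² dominates the squared standard error in this example, the interval is much wider than a confidence interval on the pooled mean alone would be.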

References

- Ning, Z.; Xie, L. A survey on multi-agent reinforcement learning and its application. J. Autom. Intell. 2024, 3, 73–91.

- Yuan, L.; Zhang, Z.; Li, L.; Guan, C.; Yu, Y. A Survey of Progress on Cooperative Multi-agent Reinforcement Learning in Open Environment. arXiv 2023, arXiv:2312.01058.

- Huh, D.; Mohapatra, P. Multi-agent Reinforcement Learning: A Comprehensive Survey. arXiv 2023, arXiv:2312.10256.

- Samvelyan, M.; Rashid, T.; Schroeder de Witt, C.; Farquhar, G.; Nardelli, N.; Rudner, T.G.J.; Hung, C.-M.; Torr, P.H.S.; Foerster, J.; Whiteson, S. The StarCraft Multi-Agent Challenge. In Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, Montreal, QC, Canada, 13–17 May 2019; pp. 2186–2188.

- Gorsane, R.; Mahjoub, O.; de Kock, R.; Dubb, R.; Singh, S.; Pretorius, A. Towards a standardised performance evaluation protocol for cooperative MARL. In Proceedings of the 36th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; p. 398.

- Wang, J.; Ren, Z.; Liu, T.; Yu, Y.; Zhang, C. QPLEX: Duplex Dueling Multi-Agent Q-Learning. In Proceedings of the 9th International Conference on Learning Representations (ICLR), Virtual Event, Austria, 3–7 May 2021.

- Li, C.; Liu, J.; Zhang, Y.; Wei, Y.; Niu, Y.; Yang, Y.; Liu, Y.; Ouyang, W. ACE: Cooperative multi-agent Q-learning with bidirectional action-dependency. In Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence and Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence and Thirteenth Symposium on Educational Advances in Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; p. 959.

- Ball, P. Is AI leading to a reproducibility crisis in science? Nature 2023, 624, 22–25.

- DerSimonian, R.; Laird, N. Meta-analysis in clinical trials. Control Clin. Trials 1986, 7, 177–188.

- Cobbe, K.; Hesse, C.; Hilton, J.; Schulman, J. Leveraging procedural generation to benchmark reinforcement learning. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; p. 191.

- Gulcehre, C.; Wang, Z.; Novikov, A.; Paine, T.; Gomez, S.; Zolna, K.; Agarwal, R.; Merel, J.S.; Mankowitz, D.J.; Paduraru, C.; et al. RL Unplugged: A Suite of Benchmarks for Offline Reinforcement Learning. In Proceedings of the Advances in Neural Information Processing Systems 33 (NeurIPS 2020), Virtual, 6–12 December 2020; pp. 7248–7259.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Tan, M. Multi-Agent Reinforcement Learning: Independent vs. Cooperative Agents; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1997; pp. 487–494.

- Sunehag, P.; Lever, G.; Gruslys, A.; Czarnecki, W.M.; Zambaldi, V.; Jaderberg, M.; Lanctot, M.; Sonnerat, N.; Leibo, J.Z.; Tuyls, K.; et al. Value-Decomposition Networks For Cooperative Multi-Agent Learning Based On Team Reward. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 2085–2087.

- Rashid, T.; Samvelyan, M.; De Witt, C.S.; Farquhar, G.; Foerster, J.; Whiteson, S. Monotonic value function factorisation for deep multi-agent reinforcement learning. J. Mach. Learn. Res. 2020, 21, 1–51.

- Foerster, J.N.; Farquhar, G.; Afouras, T.; Nardelli, N.; Whiteson, S. Counterfactual multi-agent policy gradients. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; p. 363.

- Son, K.; Kim, D.; Kang, W.J.; Hostallero, D.E.; Yi, Y. QTRAN: Learning to Factorize with Transformation for Cooperative Multi-Agent Reinforcement Learning. arXiv 2019, arXiv:1905.05408.

- Amato, C. An Initial Introduction to Cooperative Multi-Agent Reinforcement Learning. arXiv 2024, arXiv:2405.06161.

- Avalos, R.; Reymond, M.; Nowé, A.; Roijers, D.M. Local Advantage Networks for Cooperative Multi-Agent Reinforcement Learning. In Proceedings of the 21st International Conference on Autonomous Agents and Multiagent Systems, Virtual, New Zealand, 9–13 May 2022; pp. 1524–1526.

- Rohatgi, A. WebPlotDigitizer; 5.2; 2023. Available online: https://automeris.io/docs/#cite-webplotdigitizer (accessed on 1 March 2025).

- Sera, F.; Armstrong, B.; Blangiardo, M.; Gasparrini, A. An extended mixed-effects framework for meta-analysis. Stat. Med. 2019, 38, 5429–5444.

- Hedges, L.V.; Tipton, E.; Johnson, M.C. Robust variance estimation in meta-regression with dependent effect size estimates. Res. Synth. Methods 2010, 1, 39–65.

- Higgins, J.P.; Thompson, S.G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 2002, 21, 1539–1558.

- R Core Team. R: A Language and Environment for Statistical Computing; 4.5.1; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Viechtbauer, W. Conducting Meta-Analyses in R with the metafor Package. J. Stat. Softw. 2010, 36, 1–48.

- Pustejovsky, J.E.; Tipton, E. Small-Sample Methods for Cluster-Robust Variance Estimation and Hypothesis Testing in Fixed Effects Models. J. Bus. Econ. Stat. 2018, 36, 672–683.

- Rashid, T.; Farquhar, G.; Peng, B.; Whiteson, S. Weighted QMIX: Expanding monotonic value function factorisation for deep multi-agent reinforcement learning. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Online, 6–12 December 2020; p. 855.

- Higgins, J.P.; Thompson, S.G.; Spiegelhalter, D.J. A re-evaluation of random-effects meta-analysis. J. R. Stat. Soc. Ser. A Stat. Soc. 2009, 172, 137–159.

- IntHout, J.; Ioannidis, J.P.A.; Rovers, M.M.; Goeman, J.J. Plea for routinely presenting prediction intervals in meta-analysis. BMJ Open 2016, 6, e010247.

- Papoudakis, G.; Christianos, F.; Schäfer, L.; Albrecht, S.V. Benchmarking Multi-Agent Deep Reinforcement Learning Algorithms in Cooperative Tasks. arXiv 2020, arXiv:2006.07869.

- Schroeder de Witt, C. Coordination and Communication in Deep Multi-Agent Reinforcement Learning. Ph.D. Thesis, University of Oxford, Oxford, UK, 2021.

- Zhang, Q.; Wang, K.; Ruan, J.; Yang, Y.; Xing, D.; Xu, B. Enhancing Multi-agent Coordination via Dual-channel Consensus. Mach. Intell. Res. 2024, 21, 349–368.

- Shen, S.; Fu, Y.; Su, H.; Pan, H.; Qiao, P.; Dou, Y.; Wang, C. Graphcomm: A Graph Neural Network Based Method for Multi-Agent Reinforcement Learning. In Proceedings of the ICASSP 2021—2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 3510–3514.

- Zeng, X.; Peng, H.; Li, A.; Liu, C.; He, L.; Yu, P.S. Hierarchical state abstraction based on structural information principles. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence, Macao, China, 19–25 August 2023; p. 506.

- Liu, S.; Song, J.; Zhou, Y.; Yu, N.; Chen, K.; Feng, Z.; Song, M. Interaction Pattern Disentangling for Multi-Agent Reinforcement Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 8157–8172.

- Yu, W.; Wang, R.; Hu, X. Learning Attentional Communication with a Common Network for Multiagent Reinforcement Learning. Comput. Intell. Neurosci. 2023, 2023, 5814420.

- Wang, Z.; Meger, D. Leveraging World Model Disentanglement in Value-Based Multi-Agent Reinforcement Learning. arXiv 2023, arXiv:2309.04615.

- Zhang, T.; Xu, H.; Wang, X.; Wu, Y.; Keutzer, K.; Gonzalez, J.E.; Tian, Y. Multi-Agent Collaboration via Reward Attribution Decomposition. arXiv 2020, arXiv:2010.08531.

- Wan, L.; Song, X.; Lan, X.; Zheng, N. Multi-agent Policy Optimization with Approximatively Synchronous Advantage Estimation. arXiv 2020, arXiv:2012.03488.

- Wang, Y.; Han, B.; Wang, T.; Dong, H.; Zhang, C. Off-Policy Multi-Agent Decomposed Policy Gradients. arXiv 2020, arXiv:2007.12322.

- Yang, Y.; Hao, J.; Liao, B.; Shao, K.; Chen, G.; Liu, W.; Tang, H. Qatten: A General Framework for Cooperative Multiagent Reinforcement Learning. arXiv 2020, arXiv:2002.03939.

- Wang, R.; Li, H.; Cui, D.; Xu, D. QFree: A Universal Value Function Factorization for Multi-Agent Reinforcement Learning. arXiv 2023, arXiv:2311.00356.

- Qiu, W.; Wang, X.; Yu, R.; He, X.; Wang, R.; An, B.; Obraztsova, S.; Rabinovich, Z. RMIX: Learning risk-sensitive policies for cooperative reinforcement learning agents. In Proceedings of the 35th International Conference on Neural Information Processing Systems, Online, 6–14 December 2021; p. 1765.

- Qiu, W.; Wang, X.; Yu, R.; He, X.; Wang, R.; An, B.; Obraztsova, S.; Rabinovich, Z. RMIX: Risk-Sensitive Multi-Agent Reinforcement Learning. arXiv 2021, arXiv:2102.08159.

- Wang, T.; Dong, H.; Lesser, V.; Zhang, C. ROMA: Multi-agent reinforcement learning with emergent roles. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020; p. 916.

- Luo, S.; Li, Y.; Li, J.; Kuang, K.; Liu, F.; Shao, Y.; Wu, C. S2RL: Do We Really Need to Perceive All States in Deep Multi-Agent Reinforcement Learning? In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, 14–18 August 2022; pp. 1183–1191.

- Wang, J.; Zhang, Y.; Gu, Y.; Kim, T.-K. SHAQ: Incorporating shapley value theory into multi-agent Q-Learning. In Proceedings of the 36th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; p. 430.

- Yao, X.; Wen, C.; Wang, Y.; Tan, X. SMIX(λ): Enhancing Centralized Value Functions for Cooperative Multiagent Reinforcement Learning. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 52–63.

- Hu, X.; Guo, P.; Li, Y.; Li, G.; Cui, Z.; Yang, J. TVDO: Tchebycheff Value-Decomposition Optimization for Multiagent Reinforcement Learning. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 12521–12534.

- Yang, G.; Chen, H.; Zhang, M.; Yin, Q.; Huang, K. Uncertainty-based credit assignment for cooperative multi-agent reinforcement learning. J. Univ. Chin. Acad. Sci. 2024, 41, 231–240.

- Wan, X.; Wang, W.; Liu, J.; Tong, T. Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Med. Res. Methodol. 2014, 14, 135.

- Luo, D.; Wan, X.; Liu, J.; Tong, T. Optimally estimating the sample mean from the sample size, median, mid-range, and/or mid-quartile range. Stat. Methods Med. Res. 2018, 27, 1785–1805.

| Map | Algorithm | Original | Pooled | Δ |
|---|---|---|---|---|
| 1c3s5z | IQL | 0.124 | 0.165 | +0.041 |
| 1c3s5z | VDN | 0.646 | 0.878 | +0.232 |
| 1c3s5z | QMIX | 0.719 | 0.881 | +0.162 |
| 1c3s5z | COMA | 0.174 | 0.132 | −0.042 |
| 1c3s5z | QTRAN | — | 0.888 | n/a |
| 2c_vs_64zg | IQL | 0.026 | 0.135 | +0.109 |
| 2c_vs_64zg | VDN | 0.159 | 0.063 | −0.096 |
| 2c_vs_64zg | QMIX | 0.455 | 0.112 | −0.343 |
| 2c_vs_64zg | COMA | 0.000 | 0.008 | +0.008 |
| 2c_vs_64zg | QTRAN | — | 0.854 | n/a |
| 3s_vs_5z | IQL | 0.321 | 0.298 | −0.023 |
| 3s_vs_5z | VDN | 0.664 | 0.732 | +0.068 |
| 3s_vs_5z | QMIX | 0.634 | 0.823 | +0.189 |
| 3s_vs_5z | COMA | 0.000 | 0.007 | +0.007 |
| 3s_vs_5z | QTRAN | — | 0.027 | n/a |
| 5m_vs_6m | IQL | 0.357 | 0.102 | −0.255 |
| 5m_vs_6m | VDN | 0.507 | 0.588 | +0.081 |
| 5m_vs_6m | QMIX | 0.494 | 0.546 | +0.052 |
| 5m_vs_6m | COMA | 0.000 | 0.008 | +0.008 |
| 5m_vs_6m | QTRAN | — | 0.474 | n/a |
| MMM2 | IQL | 0.003 | 0.008 | +0.005 |
| MMM2 | VDN | 0.009 | 0.032 | +0.023 |
| MMM2 | QMIX | 0.434 | 0.557 | +0.123 |
| MMM2 | COMA | 0.000 | 0.007 | +0.007 |
| MMM2 | QTRAN | — | 0.009 | n/a |

| Map | IQL | VDN | QMIX | QTRAN | COMA |
|---|---|---|---|---|---|
| 1c3s5z | 78.4 | 95.4 | 96.2 | 85.5 | 89.6 |
| 2c_vs_64zg | 97.1 | 96.9 | 97.6 | 90.0 | 65.2 |
| 3s_vs_5z | 0.0 | 91.5 | 81.2 | 23.3 | 0.0 |
| 5m_vs_6m | 83.5 | 87.0 | 86.4 | 77.4 | 80 |
| MMM2 | 92.6 | 24.6 | 87.6 | 94.3 | 72.2 |

| Model | τ² | I² (%) | ΔI² vs. Null | LRT vs. Null | p-Value vs. Null |
|---|---|---|---|---|---|
| Null (cells only) | 0.00689 | 39.3 | — | — | — |
| +RCI | 0.00713 | 40.1 | −0.83 | 0.32 | 0.572 |
| +SC2 Version | 0.00723 | 40.4 | −1.16 | 1.12 | 0.570 |
| +Publication Year | 0.00572 | 34.9 | 4.34 | 5.06 | 0.024 |
| +All | 0.00636 | 37.4 | 1.88 | 6.33 | 0.176 |
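The p-values in the moderator table can be checked by treating each likelihood-ratio statistic as chi-square distributed. The sketch below is a sanity check, not the authors' analysis code. The degrees of freedom (1, 2, 1, and 4) are not stated in the text; they are assumed here because they reproduce the reported p-values to within rounding. The `chi2_sf` helper is a hypothetical name and only covers the closed-form cases needed.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability, closed forms for df in {1, 2, 4}."""
    if x < 0:
        raise ValueError("statistic must be non-negative")
    if df == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if df == 2:
        return math.exp(-x / 2.0)
    if df == 4:
        return math.exp(-x / 2.0) * (1.0 + x / 2.0)
    raise ValueError("only df = 1, 2, 4 are implemented in this sketch")


# (LRT statistic, assumed df, reported p-value) for each moderator model.
models = {
    "+RCI": (0.32, 1, 0.572),
    "+SC2 Version": (1.12, 2, 0.570),
    "+Publication Year": (5.06, 1, 0.024),
    "+All": (6.33, 4, 0.176),
}

for name, (lrt, df, reported) in models.items():
    print(f"{name}: computed p = {chi2_sf(lrt, df):.3f}, reported p = {reported}")
```

Under these assumed degrees of freedom, only the publication-year model improves on the null model at the 0.05 level, which matches the reported p-value of 0.024.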


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, R.; Liu, C. Quantifying Statistical Heterogeneity and Reproducibility in Cooperative Multi-Agent Reinforcement Learning: A Meta-Analysis of the SMAC Benchmark. Algorithms 2025, 18, 653. https://doi.org/10.3390/a18100653
